Abstract

To achieve the energy transition, energy and energy efficiency are becoming more and more important in society. New methods, such as Artificial Intelligence (AI) and Machine Learning (ML) models, are needed to coordinate supply and demand and address the challenges of the energy transition. AI and ML are already being applied to a growing number of energy infrastructure applications, ranging from energy generation to energy forecasting and human activity recognition services. Given the rapid development of AI and ML, the importance of Trustworthy AI is growing as it takes on increasingly responsible tasks. Particularly in the energy domain, Trustworthy AI plays a decisive role in designing and implementing efficient and reliable solutions. Trustworthy AI can be considered from two perspectives, the Model-Centric AI (MCAI) and the Data-Centric AI (DCAI) approach. We focus on the DCAI approach, which relies on large amounts of data of sufficient quality. These data are becoming more and more synthetically generated. To address this trend, we introduce the concept of Synthetic Data-Centric AI (SDCAI). In this survey, we examine Trustworthy AI within a Synthetic Data-Centric AI context, focusing specifically on the role of simulation and synthetic data in enhancing the level of Trustworthy AI in the energy domain.

1. Introduction

Awareness of energy and energy efficiency is growing rapidly in the face of global climate change, making the energy transition a major societal issue. To achieve the energy transition, efficient, reliable and sustainable energy technologies are needed [1,2,3].

A basic measure of energy efficiency is first of all the ratio between the input and output of a particular energy generation or conversion system. On the basis of this ratio, further efficiency measures and performance indices can be defined that allow two systems or different versions of a particular system to be compared. The aim is usually to increase the efficiency of a given system. Sustainability plays an important role, as the energy source should be based on renewable and environmentally friendly resources. Finally, there is a strong, reciprocal relationship between reliability and efficiency, particularly in the case of renewable energies. This is illustrated by the fact that performance measures for photovoltaic systems include temporal and geographic factors (e.g., actual insolation) that influence the practical efficiency of a system [4].

As demand and supply are becoming increasingly interlinked with the use of renewable energies, new methods are required to adjust supply and demand. Artificial Intelligence (AI) and Machine Learning (ML) models are considered to be a key factor in accomplishing the energy transition [5,6,7] and are already widely used in various areas of the energy sector. These areas include cybersecurity analysis and simulation for power system protection [8,9], simulation-based studies on grid stability, reliability, and resilience [10,11,12,13], simulation and analysis of smart grid technologies and architectures [14,15], as well as advanced simulation techniques for power system modeling and analysis [16,17]. Offering new and promising opportunities, AI technology continues to expand not only in the energy sector [18,19,20] but also in many other domains such as healthcare [21,22] and finance [23,24,25].

With the ongoing development and popularization of AI and ML models, these models are more and more used to perform highly responsible tasks. Since the functionality of ML models is not always fully explainable and reliable—known as the black-box problem [26]—the topic of Trustworthy AI [27,28,29] is becoming increasingly important. When AI approaches are used to improve the efficiency of applications in the energy sector, the question of the reliability and trustworthiness of the AI approaches themselves becomes a crucial issue.

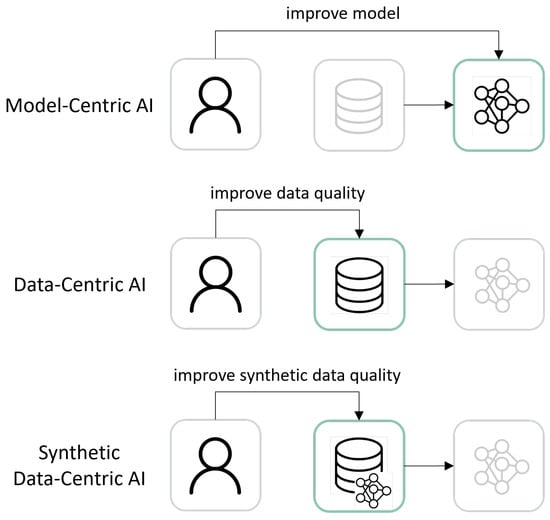

In general, two primary approaches exist for establishing a certain level of trust in AI (see Figure 1). On the one hand, we can focus on the ML algorithms themselves and work towards achieving “fairness” for the models, also referred to as a model-centric approach or Model-Centric AI (MCAI) [30]. On the other hand, we can analyze the data on which these ML algorithms are trained, commonly known as the data-centric approach or Data-Centric AI (DCAI) [31]. The DCAI approach is often disregarded in current AI research [32]. However, ML models require large amounts of data to become robust and functional [33,34] and high-quality datasets are essential. Even the best ML models are unable to perform well if trained on insufficient data [35]. As the interest in AI and ML models continues to grow, the availability of large amounts of data is becoming increasingly important.

Figure 1.

High-level comparison between the Model-Centric AI, Data-Centric AI and Synthetic Data-Centric AI approach based on the graphic presented in [31].

Because data are essential, the DCAI approach is a very promising method for ensuring Trustworthy AI. Data are typically collected in real-time in the physical world. However, collecting a sufficient amount of sensor data this way to be able to meaningfully train ML algorithms is a time-consuming and cumbersome task. In addition, collected data can contain gaps [36] or incorrect samples due to sensor measurement errors, the data themselves or even the ground-truth data are not always annotated or there can be inaccuracies in labeling [37]; data are biased in some way [38], or they cannot be collected at all due to privacy regulations. Since data collection is cumbersome, alternative methods of data collection are already being explored, such as the participatory collection of data from people [39,40,41].

Simulations and synthetic data provide the potential to address and solve real-world data collection problems. For instance, synthetic data allow for reduced data collection costs or to compensate for biases in datasets [42]. Furthermore, synthetic data can be generated fully labeled and annotated with ground truth, without any data gaps or sampling rate variations [17]. As a result, simulations and the generation of synthetic data have grown in popularity and are now utilized in various AI applications across various domains [43], such as energy [44,45,46], healthcare [47,48,49], finance [50,51] or manufacturing [52,53].

However, developing simulations and generating synthetic data poses challenges. Synthetic data are highly domain-dependent, as each domain has its own set of characteristics that must be addressed in order for synthetic data to be sufficiently representative and meaningful. The generation of unbiased synthetic data is a developing discipline and requires further research to take full advantage of this technology [54].

Consequently, we examine the potential and impact of synthetic data in the energy domain from the perspective of Trustworthy AI and investigate the following research question:

Given that Trustworthy AI encompasses the aspects of efficiency, reliability and sustainability, what benefits do synthetic energy data contribute to the development of these aspects of Trustworthy AI in the energy domain?

Because synthetic data offer such high potential to improve Trustworthy AI, we introduce the term Synthetic Data-Centric AI (SDCAI). As illustrated in Figure 1, the SDCAI approach expands upon the Data-Centric AI approach. Using an SDCAI approach, a developer or researcher attempts to enhance the performance of a ML model by generating and refining the synthetic data used to train the model.

This survey is structured as follows: in Section 2, we first examine the different definitions and aspects of Trustworthy AI and determine what we understand by this term. We then provide a more detailed analysis of the individual aspects of Trustworthy AI, including technical robustness and generalization in Section 4.1, transparency and explainability in Section 4.2, reproducibility in Section 4.3, fairness in Section 4.4, privacy in Section 4.5 and sustainability in Section 4.6. For each of these aspects, we will elaborate on how synthetic data are able to contribute to increasing the level of trust in the energy domain. In Section 5, we finally examine the key features of Trustworthy AI that contribute to improving the quality of synthetic data and identify the areas in which synthetic data have the greatest potential to enhance trust.

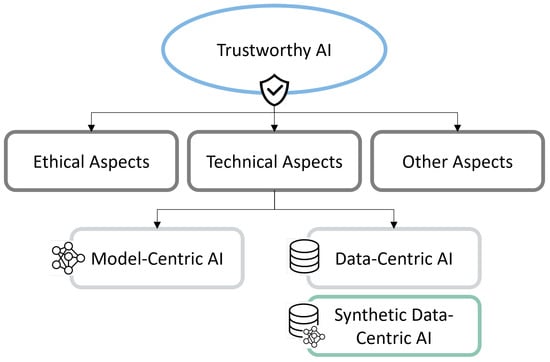

2. Trustworthy AI

While a final definition for Trustworthy AI has not yet been established, the proposals converge in several aspects that are crucial for fostering user acceptance of reliable AI systems. The European Commission’s High-Level Expert Group on AI (HLEG-AI) with the “Assessment List for Trustworthy Artificial Intelligence (ALTAI)” [55] as well as other experts and institutions defined several factors [56,57,58,59,60,61,62]. As mentioned, these definitions for Trustworthy AI overlap in various factors and can generally be divided into technical and non-technical (ethical and other) factors (see Figure 2).

Figure 2.

Schematic view on Trustworthy AI and the involvement of the Synthetic Data-Centric AI approach.

This survey approaches the topic of ensuring Trustworthy AI using synthetic data from a technical perspective, as we are convinced that this is where synthetic data have the most potential to improve the level of trust. In our understanding, enhancing efficiency, reliability, and sustainability requires technical considerations as well. Therefore, these aspects are the most suitable for improvement by using synthetic data.

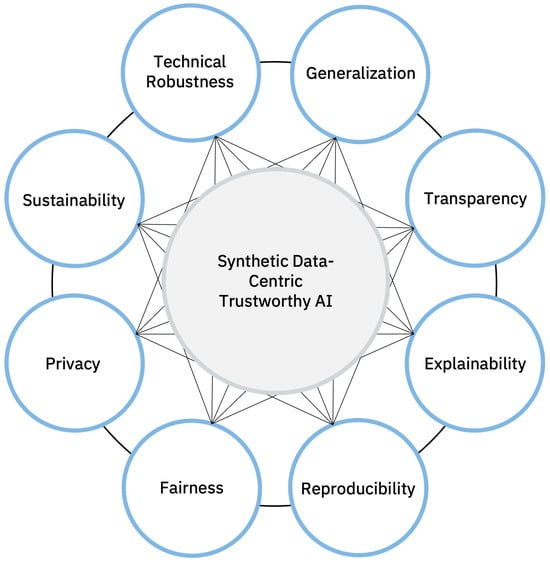

Figure 3 illustrates the technical facets of Trustworthy AI from an SDCAI perspective. In total, we extracted eight key aspects from the previously mentioned definitions: technical robustness, generalization, transparency, explainability, reproducibility, fairness, privacy and sustainability.

Figure 3.

Eight key aspects of the Synthetic Data-Centric AI approach for enhancing Trustworthy AI.

Technical robustness refers to the ability of ML models to provide accurate results even when faced with data that differ from the training data. To accomplish this, ML models require high-quality training data, which involves several factors, such as the availability of ground truth data, the absence of data gaps, and appropriate data labeling (see Section 4.1).

Generalization is closely linked to the aspect of technical robustness and refers to the ability of ML models to perform effectively on unseen data given a limited amount of training data (see Section 4.1).

The principles of transparency and explainability are closely interrelated and emphasize the need for comprehensive visibility and the ability to understand the behavior of an AI system. Transparency, as well as explainability, are also highly dependent on data quality since these factors can only be guaranteed if the training data sufficiently represent the underlying real-world data (see Section 4.2).

If the data quality is inadequate, issues may arise with the reproducibility of experiments and studies (see Section 4.3). Reproducibility requires that ML models developed in scientific work, but also commercial ML models, can be replicated by other researchers at any point in time. Ideally, the experiments described should yield comparable or identical results.

The aspect of fairness is also an essential part of data quality, as imbalanced and biased data may hinder the creation of robust ML models that require balanced datasets (see Section 4.4).

Further, it is important to ensure privacy and security as components of data quality to prevent personal data from being traced back from synthetic data (see Section 4.5).

Sustainability in the context of Trustworthy AI focuses on specific aspects of the overall concept of sustainability, in the sense of renewable and environmentally friendly resources. In this survey, sustainability refers to the fact that AI systems and ML models should be trained and refined on data that are collected/generated under the most environmentally friendly conditions possible, e.g., by using renewable energy resources.

Naturally, the quality of the training data is a critical factor for the optimal functioning and robustness of ML models (see Section 1). As part of the DCAI approach, it is crucial to comprehend the requisite data quality for effective and efficient ML training. Providing high-quality datasets for training ML models is at least as crucial as improving the algorithms themselves. If the data contain biases, it is practically impossible for the trained algorithms to be unbiased.

Therefore, if ML models are to be improved through understanding and optimizing data using the DCAI approach, it is crucial to ensure the data meet certain quality standards. This involves labeling the data, eliminating data gaps, preventing and minimizing bias, and ensuring an adequate quantity is available. Improving dataset quality is a crucial aspect addressed by Trustworthy AI.

Almost all of the technical cornerstones that we have defined to ensure Trustworthy AI are closely related to the quality of the data.

The problems associated with the DCAI approach for ML using real-world data prompted an investigation into whether synthetic data have the potential to reasonably extend this approach in the context of Trustworthy AI. Synthetic data have the ability to provide answers to various issues in several areas related to Trustworthy AI, such as data augmentation for robustness, private data release, data de-biasing and fairness [54].

We are convinced that synthetic data have the potential to be a key enabler in the development of Trustworthy AI. Therefore, this survey focuses on understanding how synthetic energy data can contribute to ensuring Trustworthy AI. To this end, we introduce the new term Synthetic Data-Centric AI (SDCAI) approach, which is an extension of the DCAI approach (see Figure 1). The SDCAI approach addresses the question of how to train ML algorithms on synthetic datasets in a meaningful and reliable way.

In this contribution, we focus specifically on the energy domain. As argued before, there exist serious technological challenges that can be addressed by using ML and AI systems. Among these challenges are the storage and distribution of energy through grids, which play a crucial role in attaining a reliable energy supply in the future. The energy domain is a suitable application example for Trustworthy AI because it shares many characteristics with other domains, which allows the findings of this survey to be widely applicable beyond this particular field into other domains.

To the best of our knowledge, no study has specifically addressed the advances, challenges, and opportunities of synthetic data for the development of Trustworthy AI in the energy domain. Ref. [63] provide a review of advances, challenges, and opportunities in generating data for some aspects of Trustworthy AI [63], but the authors do not address key aspects that we consider in this survey, such as explainability, reproducibility, and sustainability. Furthermore, we are not aware of any research exploring a Data-Centric AI approach for Trustworthy AI, specifically in the energy sector, nor referencing a Synthetic Data-Centric AI approach.

3. Synthetic Data

To explore synthetic data and the SDCAI approach, it is essential to have a clear understanding of the concept of synthetic data. The idea of synthetic data reaches back at least as far as to the Monte Carlo Simulation [64] and can be defined as follows [54]:

Definition 1.

“Synthetic data are data that have been generated using a purpose-built mathematical model or algorithm, with the aim of solving a (set of) data science task(s).” [54].

In the energy domain, the amount of image data processed is not as large as in other domains, such as the healthcare domain [65,66]. The majority of the data that are processed in the context of the energy domain are time-series data, specifically consumption data for different types of energy, including electricity, wind, water, and solar. Additionally, there are time-series data collected from sensors that monitor variables such as temperature, humidity, or motion.

There are many approaches to synthetically generate time series data in the energy domain, e.g., based on using ML models and different neural architectures [44,67,68,69,70,71]. This includes AI applications that make decisions, such as an energy forecast for distribution grids that controls energy supply and demand. This enables the determination of the amount of energy allocated to households and buildings.

The methods that can be used to generate synthetic data, especially in the energy domain, are discussed further in Section 3.2. However, it is necessary to collect and prepare real and synthetic data before generating data.

3.1. Data Preparation

Data preparation is an important component for all types and uses of synthetic data, but especially for ensuring Trustworthy AI principles, and thus for the Synthetic Data-Centric AI approach as we define it.

Data Preparation can be divided into two general sub-areas: data collection (Section 3.1.1) and data preprocessing (Section 3.1.2) [72].

3.1.1. Real-World Data Collection

ML models frequently lack a sufficient amount of labeled data for training [33]. Consequently, collecting real-world data is an essential task in the development of such models. As previously mentioned in Section 1, collecting data in the real world causes many problems in principle, but especially in the energy domain due to its cumbersome and time-consuming nature.

The majority of the energy data collected are about electricity in private households, but other sensor data are also collected in a household, such as gas, temperature, or humidity.

Freely available real-world datasets are often published without appropriate documentation, making them difficult to use [73]. These datasets often suffer from the fact that the data are not fully labeled and there is no guarantee that the labels are correct. Specifically, the ground-truth data can lack annotations or labels [37] or even be non-existent. However, ground-truth data are indispensable for numerous supervised learning problems, such as Non-Intrusive Load Monitoring (NILM) algorithms [74]. In particular, freely available real-world energy data suffer from the fact that never all the ground truth data that make up the smart meter data are available. Various datasets exemplify this problem, such as REFIT [75], GeLaP [76], ENERTALK [77], GREEND [78], IEDL [79], UK-DALE [80] or [81].

The IDEAL dataset contains electrical and gas data for private households, including individual room temperature and humidity readings and temperature readings from the boiler [82]. The available sensor data are augmented by anonymized survey data and metadata including occupant demographics, self-reported energy awareness and attitudes, and building, room and appliance characteristics. Energy data were collected for both consumption and PV generation [83]. Indoor climate variables such as temperature, airflow, relative humidity, CO2 level and illuminance were also collected.

The majority of the available datasets listed contain metadata, but the metadata are incomplete. In some cases, for example, device types are available, but there is no information about a manufacturer or year of construction. All of the datasets listed are systemically biased in some way (see Section 4.4) since they were all collected locally in a single country, or at least on a single continent. Each country has people with their own country-specific habits, population groups and consumer behavior, which ultimately results in their own energy consumption. Furthermore, publicly available datasets pose the issue of representing only a small subset of a population. Due to the large amount of effort involved in collecting the data, such a dataset typically contains only a few households over a short period of time, which leads to statistical bias. To substantially reduce both systemic and statistical biases, it is necessary to obtain data from a larger subsample of a population that is more representative. For example, the dataset should include information from a wider range of countries, population groups, and ethnicities. However, this is challenging to achieve in practice and would require a considerable amount of time.

Synthetic data could help in this case. Simulation tools such as [84,85,86] for electricity or heating [87,88,89,90,91], allow the simulation of all sorts of human behavior and habits and thus also the generation of synthetic energy consumption data. If generated using a simulation, synthetic data have the advantage over collected real-world data of being fully labeled and ensuring “ground truth” for all appliances used without the existence of data holes [17]. However, this human behavior is very complex to simulate, making human behavior one of the most critical parameters in energy models. Nevertheless, there are a number of works that propose and develop concepts for the simulation of human behavior within the energy domain, such as [92,93,94].

Irrespective of the methods used to generate synthetic data, a key challenge in using synthetic data is evaluating the quality of the data and how accurately the synthetic data represent the real data (see Section 4.1). The quality of the synthetic energy data must be guaranteed because it is pointless to generate synthetic energy data that do not adequately reflect the domain (see Section 3.2).

3.1.2. Data Preprocessing

The amount of effort needed for data preprocessing is growing and is already a very large part of the ML model development process, consuming over 80 percent of the time and resources before the actual model can finally be developed [95].

The data preprocessing steps include all steps that are necessary before data can be fed into an AI system and thus used for training or testing ML models. These steps include a number of aspects such as data cleaning, anomaly detection, data anonymization, and data privacy [72].

When discussing the individual aspects of Trustworthy AI throughout this survey (see Section 4), we will occasionally encounter data preprocessing steps, which will be addressed in more detail in that specific aspect.

3.2. Data Generation

There are basically three ways to generate synthetic data: based on real data, without real data, or as a hybrid combination of these two types [96]. These approaches can be applied to the energy domain as well, resulting in synthetic data derived from any or all of the three methods.

For example, ML models can be trained on pure real-world temperature or electricity time series data, which in turn, generate synthetic data [97,98,99,100].

With different simulations, it is also possible to generate synthetic data using both real and synthetic data. For instance, in an electricity simulation of a household, the human behavior of the residents is not generated based on real data, but randomly [84,85,86]. This means that residents randomly turn on and off appliances in an apartment and whenever an appliance is turned on, the simulation uses the real power consumption of the appliance to calculate the total power consumption of the apartment.

It is also possible to generate synthetic energy data without the direct use of real-world data. For example, a simulation can be used to customize the human behavior of residents when turning on appliances, as well as to synthetically generate the energy consumption of individual appliances [101,102,103].

The utility, i.e., the extent to which a synthetic dataset is an exact substitute for real data, depends on the fidelity of the underlying generation model [96]. There is no universal method for measuring the utility of synthetic data [104], instead, there are two different concepts, referred to as general and specific utility measures [105]. A general utility measure concept for synthetic data that is most frequently described is the propensity score [105,106]. This involves developing a classification model that distinguishes between real and synthetic data. If the model cannot distinguish between the two datasets, the synthetic data have a high degree of utility. Since synthetic data are ultimately intended to be used to train and test ML models, it should be ensured that this type of model can be trained and tested on these data. [17] describes a methodology that ensures the quality of the synthetic data by using ML models. The authors use exemplary NILM models trained on both synthetic and real data and then compare their results. They demonstrate that ML models trained on synthetic data can even outperform models trained on comparable real data. Specific measures for the utility of synthetic data are confidence interval overlap [107] and standardized bias [108], which work with statistical methods.

However, there are also risks in using synthetic data to train AI systems such as data quality (including data pollution or data contamination), bias propagation, security risks and misuse [42]. This survey is well aware of the risks of synthetic data, and therefore, addresses these risks for the conditions in the energy domain and develops and presents solutions for them.

In the following sections, the opportunities for using synthetic data to develop Trustworthy AI in the energy domain are discussed in more detail for each of the aspects of Trustworthy AI considered in this survey.

4. Aspects of Trustworthy Synthetic Data-Centric AI

In the following, we consider the individual aspects that are required for an AI application to be considered trustworthy. For each aspect, we will discuss in more detail how synthetic data can be used to improve these aspects of Trustworthy AI (see Section 2) and thereby develop trust in AI (SDCAI approach).

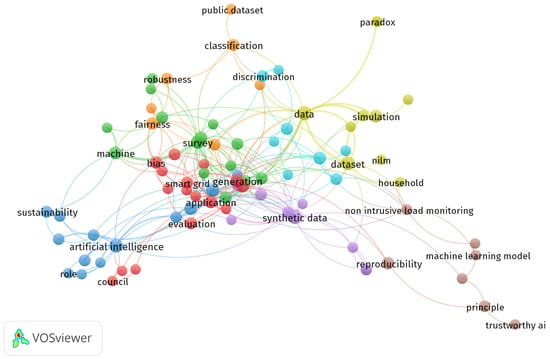

The content of the different aspects under consideration often overlaps, as they are interdependent and partially cover related topics. Figure 4 illustrates a graphical representation of the literature fields discussed in this review, visualized by VOSviewer, a tool for constructing and visualizing bibliometric networks (VOSviewer: https://www.vosviewer.com/, accessed on 15 April 2024).

Figure 4.

Graphical representation of the literature fields discussed in this review, visualized by VOSviewer.

4.1. Technical Robustness and Generalization

To ensure trustworthiness and to prevent and minimize unintended malfunctions, AI systems should have an appropriate level of technical robustness. The term ’technical robustness’ of AI systems is broad and covers many aspects. Among other things, it refers to the ability of ML models to perform on unseen data or robustness against samples that are not very similar to the data on which a model was trained [109]. The concept of technical robustness is an important cornerstone for ensuring Trustworthy AI. The improvement of balanced and robust training techniques and datasets can enhance not only fairness (see Section 4.4) but also explainability (see Section 4.2) [110].

The aspect of generalization is closely related to the aspect of technical robustness and represents the ability of an AI system to make accurate predictions about unknown data based on limited training data [72]. It is preferable for ML models to maximize generalizability while minimizing the amount of training data required, as data collection is both time-consuming and resource-intensive (see Section 4.6).

Although both concepts of technical robustness and generalization are closely related, there is still no general consensus on whether greater robustness is beneficial or disadvantageous for the ability of ML models to generalize. In the literature, there are arguments both in favor and against such an argumentation [111,112,113].

Data design is able to ensure the technical robustness, reliability and generalizability of an AI model trained on those data [114]. It is well understood that variant data with different distributions and different scenarios are important for robustness since training an AI model without such data can seriously affect its performance [72].

Synthetic data offer the powerful advantage of allowing the generation of hypothetical scenarios, such as critical scenarios. AI systems and data-driven models should perform effectively in situations that do not occur sufficiently often in real-world data, such as critical situations, to be considered trustworthy [54]. Including situations that may occur in the real world but are extremely rare and should not be intentionally induced is extremely important, because ML models should be trained and tested with every possible situation that could occur in the real world in order to achieve maximum robustness and reliability. For instance, an AI assistance system designed to assist elderly individuals in private households using various sensor data cannot learn how to behave appropriately in a critical situation if such a scenario is not included in the training data. Moreover, it is not possible to test and verify the performance of the system in such a situation without adequate testing data. Synthetic data have already been applied across multiple domains to increase the robustness of AI systems, including visual machine learning [115] and churn prediction [116]. Synthetic data are also used in other domains, such as covering nuclear power plant accidents [117], ensuring safe drone landings [118], and generating critical autonomous driving situations to improve AI-based systems. A number of techniques have already been used to generate safety-critical driving scenarios, such as those based on accident sketches [119], based on a search algorithm that iteratively optimizes behavior action sequences of the surrounding traffic participants [120], based on influential behavior patterns [121] or based on reinforcement learning [122]. The approaches mentioned here for representing critical situations are domain-specific. The exact definition of what constitutes a critical situation is typically dependent on a particular domain. It remains an open question whether the creation of these critical situations with the methods mentioned can be transferred to other domains. Furthermore, it has not yet been clarified how many and what kind of critical situations must be present in a dataset for ML models to be considered trustworthy.

Especially in the energy domain, it is essential for ML models to be both technically robust and generalizable in order to achieve a high level of transferability. This characteristic is particularly important for ML models, like assistance systems or NILM models, that are trained and developed based on a restricted sample of households but required to be functional and robust in other households [123,124]. In this context, transferability refers to the ability of ML models to be both robust and highly generalizable. This means that the model is able to produce accurate results on households that were not included in the training dataset of the model [125].

However, to be effective in training ML algorithms, synthetic data must be of consistently high quality without bias. Previous research has demonstrated that biased data can negatively impact the generalization properties of ML algorithms [126,127,128]. For instance, if an energy consumption prediction model is only trained using energy data collected from private households in Europe, the model will have difficulties accurately predicting the energy consumption of households in South America or Asia. The energy consumption patterns in these regions are different, as people there have other relevant characteristics, such as different consumer behaviors, different climatic conditions, and different appliances in their households, which result in other forms of energy consumption.

Also, in the energy domain, synthetic data can enhance the robustness and generalizability of ML models by producing a multitude of diverse training datasets. Simulations are capable of generating substantial amounts of high-quality energy data from a variety of demographic groups such as denomination, nationality, gender, age, occupation, income, and different life circumstances (see Section 3.2). This allows ML models to be better prepared for real-world scenarios and substantially enhances the accuracy of predictions and outcomes of AI systems.

In an ideal world, the data for all the described scenarios would be freely available as benchmark datasets. Once available, these datasets can be used to train and test ML models, increasing their robustness and generalizability. The objective of benchmark datasets, which are already used in the ML domain, is to represent the real world as reliably as possible [127] and can also be generated synthetically. The idea of synthetic benchmark datasets is closely related to the concept of transparency and explainability and is, therefore, described in more detail in the following Section.

4.2. Transparency and Explainability

Another key factor in achieving Trustworthy AI is ensuring the transparency, explainability and accountability of ML models and the data used to train and test them. In academic literature, the term transparency is typically understood in the sense that all components of an AI system should be visible and explainable, including the training data [55].

Definition 2.

Transparency can also be defined “as any information provided about an AI system beyond its model outputs.” [129].

The concept of transparency is one of the key topics addressed by Explainable AI (XAI) [130]. The purpose of XAI is to enable individuals to understand precisely and in detail why and on what basis an AI system has or has not made a decision [131]. Ensuring the transparency of the data utilized for training and testing ML models is an important aspect of XAI.

ML models and the data on which they are trained often suffer from a lack of transparency and explainability. For instance, achieving transparency and explainability in black-box models, which are generative ML algorithms where the input and output are known but the functionality is unknown [132], poses significant challenges [133]. Understandably, the unknown nature of the functionality does not encourage trust in the algorithms.

The use of synthetic data can be beneficial since it allows black-box models to be trained on specifically designed data. Training black-box models on high-quality, unbiased data can increase the level of trust in the results of the models [134].

As mentioned previously, a key challenge in generating synthetic data is to evaluate the quality of the data and the accuracy with which it represents the real data. In essence, it is necessary to measure the utility of the data [96] (see Section 3.2). There are a number of XAI methods that allow to measure the extent to which the synthetic data represent real-world scenarios [135]. Auditing methods need to be developed to determine the reliability and representativeness of synthetic data in the energy domain. To achieve this, techniques such as dimensionality reduction [136] or correlation analysis [137] can be used.

To ensure maximum transparency and explainability, it is necessary to carefully consider the methods used for generating synthetic data. The use of generative black-box models to create synthetic datasets can lead to a lack of trust in the generated data, as it is not possible to fully understand and reconstruct how exactly the synthetic data were generated. There are methods and metrics that can be used to evaluate synthetic data generated by black-box models [138].

Another attempt to ensure transparent synthetic energy data is to utilize a human-in-the-loop approach from a data perspective [139]. Involving humans at various stages of data generation can be useful in many different processes, such as data extraction, data integration, data cleaning, data annotation, and iterative labeling [95]. Thus, this approach can also contribute to the development of synthetic data. When using simulations to generate synthetic data, a human-in-the-loop approach is often necessary anyway. This is because the data must be generated by an expert, or at least consulted by one, to achieve high data quality since simulations often require knowledge that a non-expert user does not necessarily possess. However, involving humans in the development process can also introduce risks, such as multiple errors during annotation or data extraction, which can lead to serious consequences for synthetic datasets. These types of risks caused by humans are known as human bias [61] (see Section 4.4).

Another essential component to ensure transparency in data science, in general, and synthetic data, in particular, is the provision of metadata [140]. Synthetic data should always be made publicly available in order to achieve maximum transparency. This includes the synthetic data themselves and all information related to the generation of the data, which is also essential for the reproducibility of the generation process (see Section 4.3). The metadata for a synthetic dataset should describe, among other things, what data were used to generate the dataset, where the original data used came from, how and when the data were collected, what methods and techniques (or ML models) were used to generate the synthetic data and other relevant specifics. It is important to be able to reconstruct where synthetic data originated from and what methods and concepts were used to generate it [141]. Therefore, it is essential to describe exactly how the data are constructed and what data were utilized to generate it. Furthermore, it is critical to explain and publish the data that served as the source for the synthetic data.

Ensuring the quality of this metadata is crucial since this meta information is not useful if it can be misinterpreted or if it is incorrect. Inaccurate or missing meta information can be harmful, but even correct metadata have the potential to cause harm. For example, metadata for synthetic data generation that include information about the ethnicity of the original data can lead to discriminatory behavior [142]. Therefore, the content of metadata should be carefully considered. Since sensitive meta information requires adequate protection, there are techniques available to protect its confidentiality [143].

Besides their beneficial qualities for technical robustness and generalizability (see Section 4.1), synthetic benchmark datasets are a promising approach to ensure both transparency and XAI aspects. Such benchmark datasets allow independent developers of ML algorithms to effectively evaluate and compare the performance of their models on a transparent database [144]. In addition to testing, benchmark datasets allow for the training of ML models. It is particularly important that benchmark datasets maintain a high quality. Any data gaps (see Section 4.1), biases (see Section 4.4), or privacy violations (see Section 4.5) in such datasets would compromise the quality of any ML models trained on them. However, creating high-quality benchmark datasets is typically complex and time-consuming. Thus, it is crucial to make such datasets freely available for public use in order to maximize accessibility to a broad audience [144].

Synthetic benchmark datasets are a relatively new idea with a growing presence across diverse domains, such as geoscience [145], face recognition [146], visual domain adaptation [147], and nighttime dehazing [148]. Nevertheless, benchmark datasets with available ground truth data, referred to as attribution benchmark datasets, are still rare [145]. This is also problematic for the development of Trustworthy AI systems in the energy domain since ground truth data are necessary for the evaluation of different XAI methods as well as for the development of diverse ML models such as NILM [74].

As a result, it would be highly desirable to have synthetic benchmark datasets available for the energy data domain as well. For instance, potential datasets could include energy consumption data alongside reliable ground truth data from households or industry, or energy consumption data stemming from solar, wind, or hydropower plants. To avoid the risk of data bias, these synthetic benchmark datasets should be as diverse as possible. This means that different ethnic groups should be represented, as well as different demographic groups (denomination, nationality, gender, age, occupation, income, etc.) and different standards of living. Further research would be needed to provide high-quality synthetic benchmark datasets for different areas of the energy domain, as to our knowledge no work has been conducted in this domain yet. We are only aware of datasets that consist of real data (see Section 3.1).

4.3. Reproducibility

A further key aspect of Trustworthy AI is the reproducibility of AI systems, which is closely related to the concepts of transparency and Explainable AI (see Section 4.2).

The purpose of reproducibility is to ensure that scientific work and publications can be replicated by other scientists at any given time. Furthermore, it is desirable that the experiments described are capable of producing the same or comparable outcomes.

Reproducibility can be defined as follows:

Definition 3.

“Reproducibility is the ability of independent investigators to draw the same conclusions from an experiment by following the documentation shared by the original investigators” [149].

Although reproducibility is an essential issue in the scientific community, it is unfortunately becoming increasingly difficult to replicate experiments in science in general [150]. In ML research, in particular, it is becoming increasingly difficult to replicate experiments presented and conducted in scientific publications without discrepancies [151,152]. When presenting the results of a trained ML model, there can be a number of reasons for a lack of reproducibility, such as not having access to the training data, not having the code to run the experiments publicly available, or not having conducted a sufficient number of experiments to be able to draw a robust conclusion [151].

The concept of reproducibility can be divided into two categories: the reproducibility of methods and the reproducibility of results [153]. From the perspective of the DCAI approach, the category of methods can include, for example, the reproducibility of data collection and data preprocessing. Reproducibility of results is more likely to be considered on the model side, including, for example, the reproducibility of model settings such as parameters and weights.

Synthetic data are capable of ensuring the reproducibility of data and have already been used for this purpose, for example, by replacing missing or sensitive data with simulated data and then analyzing these data alongside the original data [154]. A variety of techniques are available to reconstruct missing data in time series data. For example, artificial intelligence and multi-source reanalysis data were used to fill gaps in climate data [155], ML methods were used to fill gaps in streamflow data [156] and a multidimensional context autoencoder was used to fill gaps in building energy data [157]. Synthetic data have also been used in other domains to increase reproducibility, such as in biobehavioral sciences [158], in the health data [159], and in synthetic biology [160].

According to [161], an important reproducibility standard is that datasets are transparent and should be published (see Section 4.2). When synthetic datasets are publicly available, researchers are able to ensure that their results are reproducible [158]. This highlights the importance of developing and providing synthetic benchmark datasets, as outlined in Section 4.2. In some cases, however, publication is not possible due to privacy constraints [151]. The use of synthetic data could help in the anonymization of datasets, which will be discussed in more detail later (see Section 4.5).

As already mentioned, also in the energy domain, synthetic data are mainly developed and generated to train and test ML models (see Section 3). Nonetheless, reproducing the outcomes of these ML models can be challenging as they may produce distinct results despite using identical parameters and data for training. This phenomenon is observed for non-intrusive load monitoring models, for example, [17]). Therefore, when measuring the quality of synthetic data using ML models, these inconsistent results of ML models should be explicitly taken into account. One way to address the issue of unstable results of ML models is by running them multiple times and calculating the average of all the results. This approach provides a reasonable level of certainty that the mean value of the results is stable [17]. The number of times experiments must be repeated to achieve a certain level of confidence can be calculated by using Cochran’s sample size [162].

4.4. Fairness

The aspect of fairness is also a fundamental part of Trustworthy AI and is closely linked to the concepts of transparency and explainable AI.

However, the term ’fairness’ of a dataset is not clearly defined. Numerous definitions of fairness exist, including those presented in [163,164,165]. Nonetheless, it is nearly impossible to simultaneously satisfy all constraints mentioned in the literature [166]. For this survey, we understand fair data to be data that are not biased in any way.

Due to their functionality and characteristics, in general, ML models inherit biases from their training data [167,168,169,170,171]. This means that without fair data, it is very difficult to develop fair ML models. Therefore, research was conducted on the creation of fair data [172,173,174].

Bias in data can be understood as an unfairness that results from data collection, sampling, and measurement, whereas discrimination can be understood as an unfairness arising from human prejudice and stereotyping based on sensitive attributes, which can occur intentionally or unintentionally [114]. This Section focuses on discussing data fairness. However, other works consider discrimination theory in much greater detail [175,176,177,178].

There are numerous types of biases in data that, when used for training ML algorithms, may result in biased algorithmic outcomes. These biases include measurement bias, omitted variable bias, representation bias, aggregation bias, sampling bias, longitudinal data fallacy, and linking bias [114]. According to [61], AI bias can be divided into three main categories: human bias, systemic bias and statistical/computational bias. Human bias is a phenomenon that occurs when individuals exhibit systematic errors in their thinking, often stemming from a limited set of heuristic principles. Systemic bias arises from the procedures of certain organizations that have the effect of favoring certain social groups and disfavoring others. Statistical and computational biases are biases that occur when a data sample is not a reasonable representation of the population as a whole.

Many instances exist wherein biased systems have been evaluated for their ability to discriminate against specific populations and subgroups, such as facial recognition [179] and recommender systems [180]. There are numerous instances of data biases, including datasets like ImageNet [181] and Open Images [182], which are used in the majority of studies in this field and consequently exhibit representation bias [183]. Additionally, there exist facial datasets like IJB-A [184] and Adience [185], which lack balance in terms of race, resulting in systemic bias [171].

When creating synthetic data based on real data, there is of course the risk that biases of the real data are unintentionally transferred to the synthetic data [47,169]. For example, if a real-world energy dataset consists only of European energy data, the synthetic households will also reflect the characteristics of European households. This is referred to as the out-of-distribution (OOD) generalization problem [186], a well-known challenge when working with synthetic data [187]. The OOD problem describes a situation where the data distribution of the test dataset is not identical to the data distribution of the training dataset when developing ML models. Synthetic data allow augmenting data, thus, reducing the OOD problem [187].

The problem of unfair datasets is known in the literature, and there are already existing approaches for generating high-quality, fair synthetic data from ‘unfair’ source data [169,188,189]. Methods like the one described in [169] achieve fairness in synthetic data by removing edges between features. This approach can be applied to time series data, but adapting it to image data is challenging.

When generating synthetic data, it is crucial to prevent data bias caused by the absence of underprivileged groups in simulator development. It is also essential to avoid performance degradation when an AI model trained on synthetic data is applied to real-world data. Synthetic datasets were already used in different domains to reduce bias in datasets, including face recognition [190], robotics [191] and healthcare [43].

The design of the data, in general, and thus synthetic data, is crucial for minimizing bias and increasing trustworthiness. There are several ways to avoid data bias and ensure the generation of a fair dataset. These methods involve providing comprehensive documentation of metadata for dataset creation, including the techniques used to produce the dataset, its motivations, and its characteristics [141,192]. This metadata should also include the information about where the data originate and how they were collected [193]. There is also the idea of using labels to better categorize datasets [193] or using methods to detect statistical biases such as Simpson’s paradox [194,195]. To eliminate bias in a dataset, also various preprocessing techniques can be used, including suppression, massaging the dataset, reweighing, and sampling [196].

In general, it is challenging to identify biases in synthetic data during post-processing. Therefore, efforts should be made during pre-processing to ensure that synthetic data are not generated with bias. This can be achieved, for example, by ensuring that the underlying data are unbiased and that the methods for data generation are also unbiased. Nevertheless, biases in synthetic data can also be addressed, for instance, through human checkers who oversee the data generation process [197], or with the assistance of data augmentation techniques [54,198]. There are also approaches that measure the fairness of synthetic data, such as using a two covariate-level disparity metric [199].

4.5. Privacy

Strictly speaking, privacy is not a purely technical aspect of Trustworthy AI. However, technical methods and concepts, particularly on the data side, can substantially contribute to privacy. Hence, we also included this aspect in this survey.

Developing robust and effective AI requires large amounts of data [33], which is not always straightforward to obtain due to data protection regulations. The majority of datasets contain people’s personal information, which is justifiably strictly protected in many countries. Furthermore, the collection of real-world data is generally under strict protection. As exemplified by the collection of facial datasets, privacy concerns can be incredibly complex [200]. Privacy regulations pose a challenge due to differing implementations across countries, necessitating specialized legal expertise for any privacy assessment. In academic research, it is recognized that there exists a known issue regarding the possible disclosure of attributes in datasets. To address this concern, concepts and approaches have already been proposed, such as described in [201,202].

Synthetic data have the potential to provide a solution to the privacy problem described. Nonetheless, it is a widespread misconception to assume that synthetic data always satisfy privacy regulations [54]. If synthetic data are derived from real-world data, they may disclose information about the original data that underlie it, potentially due to comparable distributions, outliers, and low-probability incidents. As a result, producing synthetic data that ensures privacy requires considerable effort.

In general, two objectives can be distinguished for synthetic data and privacy: generating synthetic data to enhance privacy and ensuring privacy in synthetic data.

Privacy in synthetic data can be achieved by using certain techniques, such as data anonymization [203] or data concealment [201]. By synthesizing data, data anonymization can be achieved by removing or anonymizing personal information from the original real-world data to protect privacy [204]. When generating synthetic data, they can often be useful to hide certain information in the data to protect sensitive or confidential data.

According to [205] there are privacy-enhancing technologies exist that allow for legally compliant processing and analysis of personal data, such as federated learning (FL) [206] and differential privacy (DP) [207].

The concept of FL was originally designed and developed to enable the training of ML algorithms while adhering to privacy regulations [208]. FL attempts to protect the security and privacy of local raw training data by maintaining it at its source or storage location, without ever transferring it to a central server [209].

In the energy domain, this means that one way to comply with privacy regulations would be to create synthetic energy data at the edge, such as in a private household or in an industrial building itself. In order to be able to create synthetic data on the edge, the framework used to create it must run on the edge as well. Moreover, the framework should have the capability to store and save the synthetic data within the edge environment. However, not only would the synthetic data need to remain in this environment, but also the ML models that are trained or refined on this synthetic data. ML models such as Non-Intrusive Load Monitoring disaggregation [74], energy forecasting [210] or human activity recognition models [211] would then be trained and fine-tuned by using FL concepts [212,213,214]. As a result, the original real-world data, the synthetic data themselves, as well as the ML models used can remain within the edge environment.

However, there are potential risks associated with utilizing FL systems, as the trained ML models may become vulnerable to attacks if exported from the edge environment [215]. Although this does not allow access to the actual model data, it is still possible to obtain the parameters and weights of the trained ML models [216]. To improve security, FL can be combined with other privacy-enhancing technologies such as differential privacy [217].

Differential Privacy (DP) is a mathematical concept of ensuring privacy by adding noise to data in order to protect personally identifiable information [202]. Increasing the amount of noise added makes it increasingly difficult to recognize the original data, resulting in a greater protection of privacy. The concept of DP has been well-established and applied to the concept of FL [218,219,220]. DP has also been utilized for various use cases involving synthetic data [221,222,223,224].

A major challenge in ensuring privacy is the reliable evaluation of whether the synthesized data are sufficiently anonymous after implementing concepts such as DP, i.e., whether personal data can be derived. Controversial opinions exist in the literature regarding this matter.

According to [225], there are no robust and objective methods to determine whether a synthetic dataset appears sufficiently different from its real-world counterpart to be classified as an anonymous dataset.

Despite this opinion, there are also studies that propose criteria to determine the quality of synthetic data in terms of privacy. According to [226], there are different criteria to measure the quality of the synthetic data in terms of privacy, including the exact match score, the neighbors’ privacy score, and the membership inference score.

The exact match score indicates whether the synthetic data contain any copies of the real-world data [227]. A score of zero implies that there are no duplicates of the authentic data in the synthetic data. However, this score is problematic when synthetic data are generated based on real-world data. For example, if real energy data from freely available datasets are used to create synthetic data, the exact match score will be very high due to the (intended) copies of the real-world data within the synthetic data. However, if the real-world data are anonymized, the synthetic data will also be anonymous, even if the exact match score is high.

Related to the previous score is the neighbors’ privacy score, which measures whether there are similarities between the synthetic data and the real data. Although these are not direct copies, they are potential indicators of information disclosure. For generating synthetic energy data based on real-world data, the neighbors’ privacy score potentially encounters similar issues as those previously addressed in relation to the exact match score.

A membership inference attack aims to uncover the data used for generating synthetic data, even without the attackers having access to the original data [228,229,230]. The membership inference score represents the probability that such an attack will be successful. A high score indicates that it is unlikely that a particular dataset was used to generate synthetic data. Conversely, a low score indicates that it is likely that a particular dataset was used to generate synthetic data. If a dataset is identified by such an attack, private information could be exposed. Despite this, freely available energy datasets have been usually protected by omitting any direct personal information [231,232,233,234].

Before synthetic data can be widely utilized in the energy domain, it is necessary to carefully consider all relevant privacy concerns that have been previously discussed. Moreover, it is important to understand the relative positioning of the produced synthetic data with respect to the original data in relation to the information it potentially reveals.

When generating synthetic energy data using real-world data, the energy consumption data of machines or devices can be included. Depending on the extent to which synthetic data permits inferences to be drawn from real data, there are privacy regulations that must be followed. If the energy consumption patterns of machines cannot be linked to humans, it is likely that data protection regulations are met. On the other hand, if it is possible to identify and reconstruct when the machines were activated, it is possible to draw conclusions about human behavior from the real data. For instance, it is feasible to ascertain how an individual behaves at their home based on their usage of electronic devices in their personal space, or even to determine if an individual is at home at all.

However, human behavior is protected by a much higher level of data privacy, which is very strict and well-protected by data protection regulations in most countries. For instance, as stated in Article 4 (1) of the EU’s General Data Protection Regulation (GDPR) [235], personal data is understood as “any information relating to an identified or identifiable natural person”.

When generating synthetic energy data, it is crucial to ensure that no data protection regulations are violated. This involves preventing the derivation of human behavior that could be traced back to any specific individual based on the synthetic data. If synthetic energy data are generated independently of the behavior of a real individual, and cannot be traced back to a specific person, then they generally do not violate any privacy rights. For instance, to prevent the disclosure of human behavior in synthetic data, it can be anonymized or randomized in a way that maximizes the difficulty of tracing it back to the original real data. Furthermore, the behavior of simulated individuals designed to generate data for critical situations that do not occur in the real world can be entirely custom-built and not based on real behavior. Therefore, the behavior of a real individual cannot be disclosed.

The use of synthetic data in combination with the strong privacy protection of the underlying original data allows a balance between transparency (see Section 4.2), data protection and research objectives [236].

4.6. Sustainability

The concept of sustainability can be considered as a technology part of AI, including the methods to train AI and the actual processing of data by AI [237].

The field of AI sustainability can be divided into two categories: on the one hand, AI methods and concepts that aim to reduce energy consumption and emissions, and on the other hand, the development of environmentally friendly AI itself [237]. This survey focuses on the second category of sustainability, hereafter referred to as the concept of sustainability of AI.

Definition 4.

“Sustainability of AI is focused on sustainable data sources, power supplies, and infrastructures as a way of measuring and reducing the carbon footprint from training and/or tuning an algorithm.” [237].

AI systems generally require a lot of computing resources over a long period of time to achieve robustness and provide valuable outcomes (see Section 4.1). Large models, such as natural language processing models, are dependent on large-scale data centers, which consume vast amounts of energy and resources and thus emit considerable amounts of CO2 [238]. For instance, training the complex architecture of the ChatGPT model requires a considerable amount of computing resources like GPUs over a period of months [239].

The purpose of the concept of sustainability of AI is to highlight these previously mentioned issues and to ensure sustainable and environmentally friendly AI development. With the transition towards sustainable energy and the growing scarcity of resources, it has become crucial to focus on reducing energy and computational resource usage. Therefore, there is a necessity to develop model architectures and training techniques that are more energy-efficient [239]. The carbon footprint of developing and training ML models should be in a healthy proportion to its benefits.

In order to develop more environmentally friendly ML models, understanding the amount of energy, resources, and CO2 emissions consumed in the model development process is crucial. This also covers the emissions of the server during the model training, including the energy consumption of the hardware and the energy grid of the server [240]. There are methods and frameworks available for tracking emissions [240,241]. To achieve sustainability of AI, researchers and developers should publish the energy consumption and carbon footprint data of their ML models [242]. This would enable other researchers and developers to compare the energy usage of their models, which would encourage healthy competition and also be an important contribution to the transparency aspect of Trustworthy AI (see Section 4.2).

There are already some concepts that address how AI systems, and computer systems, in general, can become more environmentally friendly and thus ultimately consume fewer resources. These include green AI [243], cloud computing [244], and power-aware computing [245].

Synthetic data can contribute substantially to the advancement of sustainable development and sustainability itself by reducing the need for data collection in the real world, which can cause numerous problems. Especially in the energy domain, data collection is not only time-consuming but also resource-intensive (see Section 3.1). This is due to the fact that acquiring data in this domain primarily involves the utilization of hardware such as sensors, which consume energy themselves [246] as well as requiring considerable amounts of cost and resources for their production [247,248].

If synthetic data are sufficiently well designed and generated, many of the time- and resource-consuming steps involved in preparing and preprocessing real-world data can be eliminated. These include various steps such as filling gaps in data, annotating data with human assistance, or debiasing data (see Section 4.4).

Following the arguments presented throughout this survey, synthetic data, if generated appropriately, offer the potential to develop more robust and effective ML models. Consequently, this could potentially lead to process improvements, ultimately resulting in energy and resource efficiencies in the long term. This includes ML models focused on improving energy efficiency and sustainability. For instance, there are models designed to enhance sustainability in the food [249] and smart cities domains [250], as well as energy prediction models for buildings [251].

However, the development of simulations and thus the generation of synthetic data is initially associated with development efforts and costs energy and resources [252,253,254]. The greater the amount of data generated via an established simulation framework, the greater the benefit compared to real data. However, this is only valid if the synthetic data prove to be sufficiently useful.

Therefore, it is crucial to ensure that generating synthetic data consumes less energy than collecting data in the real world. This applies to the entire process, including both the development of the simulation framework used to generate the data and the generation of the synthetic data themselves. If it can be ensured that generating synthetic data consumes less energy than collecting real-world data, then synthetic data will be a powerful cornerstone to improving the sustainability and environmental friendliness of AI systems.

5. Discussion: Open Issues and Further Directions

This survey focused on analyzing how synthetic data can contribute to accelerating the development of efficient, reliable, and sustainable aspects of Trustworthy AI in the energy domain. To address the trend of using synthetic data for training ML models, we introduced the term Synthetic Data-Centric AI (SDCAI) as an extension of the DCAI approach. Further, we analyzed different aspects of Trustworthy AI, selected technical factors, and considered them from the perspective of the SDCAI approach. We examined the potential(s), opportunities and risks of synthetic data in the energy domain for each of the technical aspects of Trustworthy AI mentioned in more detail. Although this work focuses on the energy domain, we are convinced that many of the results are transferable to other domains due to their characteristics.

Altogether, we identified a total of eight technical factors in the areas of efficiency, reliability and sustainability that should be satisfied in order for data and the resulting ML models trained on those data to be classified as trustworthy: technical robustness and generalization (see Section 4.1), transparency and explainability (see Section 4.2), reproducibility (see Section 4.3), fairness (see Section 4.4), privacy (see Section 4.5) and sustainability (see Section 4.6). Table 1 summarizes the literature and the main potentials of synthetic data identified in this review to improve the considered technical aspects of Trustworthy AI.

Table 1.

Overview of the literature and the main potentials of synthetic data identified in this review to improve the considered technical aspects of Trustworthy AI.

Considering the results of our analysis, a general and essential feature of synthetic data is their configurable nature and the control afforded by the design-to-generation process, which distinguishes them from real-world data that are only collectible. The circumstances under which real-world data are recorded are often not transparent and reproducible in retrospect. In contrast, due to the controllability of the design-to-generation process, the production of synthetic data is reproducible in structure, repeatable, and widely accessible. This is crucial as synthetic data that are generated properly are not only technically accurate but also transparent and reliable, ensuring key attributes such as correct annotation and labeling of data.

Generating synthetic data allows ML models to be trained and tested on theoretically any amount of data with high variability, resulting in increased technical robustness. In particular, carefully designed synthetic data enable the generation of critical situations that do not occur in real-world data but are essential for achieving a high generalization capability. If generated properly, synthetic data can be used to improve the performance of ML models in the energy domain, thus increasing the technical robustness as well as the generalizability [17].

Additionally, synthetic data enable the development of transparent and more explainable black-box models. The reason for this is that properly designed synthetic datasets have a high level of transparency because it is possible to understand exactly what methods were used to generate them. In general, more transparent and explainable data can increase trust in the ML models trained on these data [134], thus making them more reliable. Synthetic benchmark datasets offer improved transparency by providing completely labeled and annotated data without issues such as data gaps or differing frequencies. Specifically, synthetic benchmark datasets allow for improved ML model reproducibility as transparent and publishable data are a necessary prerequisite for reproducibility [161]. As a consequence, it would be highly desirable to have synthetic benchmark datasets available for the energy data domain as well.

Fairness can be improved through the use of synthetic data and thoughtful data design, which allows avoiding the biases that are often present in real-world datasets. One approach to conducting this is to design data that are representative of a wide range of ethnic and demographic groups. Moreover, synthetic data can also be used to protect privacy by removing or anonymizing personal information from real datasets [204].

Synthetic data allow for minimizing data collection in the physical world, as fewer sensors are required to collect data. Moreover, synthetic data can foster the development of more resilient ML models that are designed to optimize processes to advance sustainability.

Due to the objective of this survey, the aspects of Trustworthy AI considered have a strong technical perspective and are not exhaustive. There are additional non-technical aspects of Trustworthy AI that were not addressed in this survey due to their diminished relevance to synthetic data. Moreover, synthetic data may only be partially effective in addressing non-technical aspects of Trustworthy AI, if effective at all.

The development of methods and concepts for generating trustworthy synthetic data is an ongoing process, and further research is needed to fully exploit the potential of this emerging technology. This survey has identified several open questions that need to be addressed in the future in order to realize the full potential of synthetic data for accelerating the development of Trustworthy AI. These questions involve determining a reliable method of measuring the utility of synthetic data, as well as how to provide a reliable measure that synthetic data are not biased in any way. An additional question that requires further investigation is the optimal balance between real and synthetic data when augmenting data in order to achieve the best possible results. Furthermore, it is necessary to determine which real data should be available in order to generate useful synthetic data. This applies both to the energy domain and across domains. Another question that requires clarification is the extent to which the training and testing of ML models benefit from the inclusion of critical situations in a dataset and whether it is possible to quantify this benefit. Furthermore, it is essential to define the minimum number of critical situations that should be present in a dataset and to determine their composition. With additional research, it may be possible to establish generalizable and cross-domain principles for generating critical situations.

Irrespective of the domain, the decision to use synthetic data for training ML models should be made with caution, as it takes a considerable amount of development time to ensure that the data are useful and can adequately represent a domain. It is essential that synthetic data are of a certain quality and properly designed to achieve Trustworthy AI.

However, once this initial development time has been invested, synthetic data, if generated and used correctly, has great potential to substantially increase the level of trust in AI in any of the considered technical factors. Trustworthy AI principles and methods are capable of improving the quality of synthetic data, so they should be a key consideration when generating synthetic data. As this survey showed when of sufficient quality, synthetic data allow an increase in the level of trust in the technical robustness, generalizability, transparency, explainability, reproducibility, fairness, privacy, and sustainability of AI applications in the energy domain.

Author Contributions

Conceptualization, M.M. and I.Z.; investigation, M.M. and I.Z.; writing—original draft preparation, M.M. and I.Z.; writing—review and editing, M.M and I.Z.; visualization, M.M. and I.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the German Federal Ministry for Economic Affairs and Climate Action (BMWK) as part of the ForeSightNEXT project and by the German Federal Ministry of Education and Research (BMBF) as part of the ENGAGE project.

Data Availability Statement

No new data were created in this review. Data sharing is not applicable to this review.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chu, S.; Majumdar, A. Opportunities and challenges for a sustainable energy future. Nature 2012, 488, 294–303. [Google Scholar] [CrossRef] [PubMed]

- Steg, L.; Perlaviciute, G.; Van der Werff, E. Understanding the human dimensions of a sustainable energy transition. Front. Psychol. 2015, 6, 805. [Google Scholar] [CrossRef]

- Dominković, D.F.; Bačeković, I.; Pedersen, A.S.; Krajačić, G. The future of transportation in sustainable energy systems: Opportunities and barriers in a clean energy transition. Renew. Sustain. Energy Rev. 2018, 82, 1823–1838. [Google Scholar] [CrossRef]

- Khalid, A.M.; Mitra, I.; Warmuth, W.; Schacht, V. Performance ratio–Crucial parameter for grid connected PV plants. Renew. Sustain. Energy Rev. 2016, 65, 1139–1158. [Google Scholar] [CrossRef]

- Višković, A.; Franki, V.; Jevtić, D. Artificial intelligence as a facilitator of the energy transition. In Proceedings of the 2022 45th Jubilee International Convention on Information, Communication and Electronic Technology (MIPRO), Opatija, Croatia, 23–27 May 2022; pp. 494–499. [Google Scholar]

- Griffiths, S. Energy diplomacy in a time of energy transition. Energy Strategy Rev. 2019, 26, 100386. [Google Scholar] [CrossRef]

- Jimenez, V.M.M.; Gonzalez, E.P. The Role of Artificial Intelligence in Latin Americas Energy Transition. IEEE Lat. Am. Trans. 2022, 20, 2404–2412. [Google Scholar] [CrossRef]

- Sulaiman, A.; Nagu, B.; Kaur, G.; Karuppaiah, P.; Alshahrani, H.; Reshan, M.S.A.; AlYami, S.; Shaikh, A. Artificial Intelligence-Based Secured Power Grid Protocol for Smart City. Sensors 2023, 23, 8016. [Google Scholar] [CrossRef]

- Chehri, A.; Fofana, I.; Yang, X. Security risk modeling in smart grid critical infrastructures in the era of big data and artificial intelligence. Sustainability 2021, 13, 3196. [Google Scholar] [CrossRef]

- Xie, J.; Alvarez-Fernandez, I.; Sun, W. A review of machine learning applications in power system resilience. In Proceedings of the 2020 IEEE Power & Energy Society General Meeting (PESGM), Montreal, QC, Canada, 2–6 August 2020; pp. 1–5. [Google Scholar]

- Shi, Z.; Yao, W.; Li, Z.; Zeng, L.; Zhao, Y.; Zhang, R.; Tang, Y.; Wen, J. Artificial intelligence techniques for stability analysis and control in smart grids: Methodologies, applications, challenges and future directions. Appl. Energy 2020, 278, 115733. [Google Scholar] [CrossRef]

- Omitaomu, O.A.; Niu, H. Artificial intelligence techniques in smart grid: A survey. Smart Cities 2021, 4, 548–568. [Google Scholar] [CrossRef]

- Song, Y.; Wan, C.; Hu, X.; Qin, H.; Lao, K. Resilient power grid for smart city. iEnergy 2022, 1, 325–340. [Google Scholar] [CrossRef]

- Massaoudi, M.; Abu-Rub, H.; Refaat, S.S.; Chihi, I.; Oueslati, F.S. Deep learning in smart grid technology: A review of recent advancements and future prospects. IEEE Access 2021, 9, 54558–54578. [Google Scholar] [CrossRef]

- Bose, B.K. Artificial intelligence techniques in smart grid and renewable energy systems—Some example applications. Proc. IEEE 2017, 105, 2262–2273. [Google Scholar] [CrossRef]

- Tang, Y.; Huang, Y.; Wang, H.; Wang, C.; Guo, Q.; Yao, W. Framework for artificial intelligence analysis in large-scale power grids based on digital simulation. CSEE J. Power Energy Syst. 2018, 4, 459–468. [Google Scholar] [CrossRef]

- Meiser, M.; Duppe, B.; Zinnikus, I. Generation of meaningful synthetic sensor data—Evaluated with a reliable transferability methodology. Energy AI 2024, 15, 100308. [Google Scholar] [CrossRef]

- Jin, D.; Ocone, R.; Jiao, K.; Xuan, J. Energy and AI. Energy AI 2020, 1, 100002. [Google Scholar] [CrossRef]

- Tomazzoli, C.; Scannapieco, S.; Cristani, M. Internet of things and artificial intelligence enable energy efficiency. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 4933–4954. [Google Scholar] [CrossRef]

- Aguilar, J.; Garces-Jimenez, A.; R-moreno, M.; García, R. A systematic literature review on the use of artificial intelligence in energy self-management in smart buildings. Renew. Sustain. Energy Rev. 2021, 151, 111530. [Google Scholar] [CrossRef]

- Yu, K.H.; Beam, A.L.; Kohane, I.S. Artificial intelligence in healthcare. Nat. Biomed. Eng. 2018, 2, 719–731. [Google Scholar] [CrossRef]

- Panch, T.; Mattie, H.; Celi, L.A. The “inconvenient truth” about AI in healthcare. NPJ Digit. Med. 2019, 2, 77. [Google Scholar] [CrossRef]