Abstract

We discuss several algorithms, based on the augmented Lagrangian, for solving in a decomposed way a network optimization problem of simultaneous routing and bandwidth allocation in green networks. The problem is difficult because of the nonconvexity caused by binary routing variables. The chosen algorithms are several versions of the Multiplier Method, including the Alternating Direction Method of Multipliers (ADMM). They have been implemented in Python and tested on data from several networks. The Bertsekas, Tatjewski and SALA methods were originally formulated for problems with equality constraints, and we derive their formulations for inequality constraints. We also introduce some modifications to the Bertsekas and Tatjewski methods, without which they do not work for an MINLP problem. The final comparison of the performance of these algorithms shows a significant advantage of the augmented Lagrangian algorithms that use decomposition for large problems. In our particular case of the simultaneous routing and bandwidth allocation problem, these algorithms seem to be the best choice.

1. Introduction

In 2022, data transmission networks consumed 260–360 TWh, or 1–1.5% of global electricity use [1]. Strong growth in demand for data center and network services is expected to continue at least until the end of this decade [2], especially because of video streaming and gaming. At the same time, the global energy crisis has caused an increase in the prices of electricity and energy commodities and the threat of their shortages. All this makes it more important than ever to use the network infrastructure efficiently, so as to guarantee the highest possible quality of service with the lowest possible energy consumption.

In the standard approach, network congestion control and active queue management algorithms try to minimize an aggregated cost with respect to source rates, assuming that routing is given and constant over the time scale of interest. However, it would seem more effective to treat the transport and network layers together and to minimize cross-layer costs on the time scale of routing changes, taking into account the energy component.

In paper [3], an approach to solving such a simultaneous routing and flow rate optimization problem in energy-aware computer networks was presented. The formulation considered two criteria: the first was the cost of poor quality of service, and the second was the energy consumption. It was shown earlier [4] that such problems with binary routing variables, even in the simplified version without the energy component, are NP-hard, which means that for bigger networks it is practically impossible to obtain the optimal solution in a reasonable time. Ordinary Lagrangian relaxation does not yield a good assessment of the solution: as in most integer linear programming (ILP) and mixed-integer nonlinear programming (MINLP) problems [5,6], a duality gap can be observed [7] (that is, a nonzero difference between the minimum of the original problem and the maximum of the dual function), together with the violation of many constraints.

In the case of nonconvex problems, the most popular remedy for obtaining strong duality (that is, a zero gap) and for ensuring that the constraints are met is to use the augmented Lagrangian [8,9,10,11,12,13,14] and the method of multipliers based on it, also called the shifted penalty function method [15,16,17]. Since we solve a network MINLP problem, the only formulations of interest to us are those that involve nonconvex constraints or discrete sets. As stated in [13], continuous optimization over concave constraints can be used to represent the integrality condition. The simplest way of converting a discrete set into an equality constraint with a continuous function for any , where and X is compact, is to use a distance function and the constraint [13]. Other continuous reformulations of mixed-integer optimization problems that may be effective for some problems are presented in [18].
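As a minimal illustration of the distance-function idea, assume that the discrete set is the binary cube {0,1}^n, as for the routing variables of Section 2 (this choice of set and of the Euclidean norm is our assumption, not necessarily the construction used in [13]). The distance to this set is continuous and is zero exactly at binary points, so the integrality condition can be written as the equality d(x) = 0. The function name `dist_to_binary` is hypothetical.

```python
import math

def dist_to_binary(x):
    """Euclidean distance from a real vector x to the binary set {0,1}^n.

    The nearest binary point is found componentwise by rounding each
    coordinate to 0 or 1 (ties at 0.5 go to 1). A distance to a closed set
    is continuous, and here it vanishes exactly when x is binary.
    """
    nearest = [1.0 if xi >= 0.5 else 0.0 for xi in x]
    return math.sqrt(sum((xi - zi) ** 2 for xi, zi in zip(x, nearest)))

# The integrality condition x in {0,1}^n becomes the equality d(x) = 0.
print(dist_to_binary([0.0, 1.0, 1.0]))  # 0.0
print(dist_to_binary([0.2, 0.9]))       # positive: the point is not binary
```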

Unfortunately, although MILP and MINLP optimization problems have been present from the very beginning in the most important works on the augmented Lagrangian approach [15] and they are very important in practical problems [19,20,21,22], most of the works dealing with nonconvex problems consider only nonconvexity of the objective functions [23,24]. There are relatively few proposals of methods and algorithms also considering nonconvexity of constraints [21,25,26,27,28,29] and even in a recent paper the following statement can be found: “Despite the recent development of distributed algorithms for nonconvex programs, highly complicated constraints still pose a significant challenge in theory and practice” [30]. The proposed algorithms are rather complicated, involve many levels and loops, corrections, linearizations, etc. [21,30,31,32].

In our paper, we try to solve the two-layer network optimization problem using classical methods proposed in the literature for problems with nonconvex constraints, specially adapted by us, which also have some potential for parallelization. Therefore, the methods of the ADMM family (Alternating Direction Method of Multipliers), which have been developed very intensively in recent years and, being of the Gauss–Seidel type, are inherently sequential, are treated on an equal footing with the others. If necessary, for example, when an algorithm has been formulated for a different type of constraints than those in our problem, we adapt it.

The paper is organized as follows. In Section 2, we present the studied network problem, which is one of those presented in [7]. Next, in Section 3 and Section 4, we review, respectively, the basic augmented Lagrangian algorithms proposed for problems with nonconvex constraints and their decomposition methods suitable for parallel computing, with some modifications proposed by us. Section 5 describes the transformations of our problem needed to apply the algorithms presented in Section 4. The results of numerical tests on network problems are shown in Section 6, and the conclusions follow in Section 7.

2. Network Optimization Problem of Simultaneous Routing and Bandwidth Allocation in Energy-Aware Networks

The problem of optimizing routing and flow rates simultaneously can be described as finding scalar flow rates and routes (single paths) for given source–destination pairs , where w is a demand (flow) from the set W, that satisfy the network constraints and deliver the minimal total cost. Routes are vectors built of binary indicator variables , which indicate whether a link l from the set L is used by the connection w.

The problem can be formulated in the following way [7]:

subject to (s.t.)

where N is the set of all nodes and A is the set of all arcs of the network.

This is the third formulation from paper [7], denoted there by . The objective Function (1) is quadratic and convex. Its first component expresses the cost of not delivering the full possible bandwidth for a connection . The second component expresses the total cost of the energy used in the network by the connection . The flow conservation law is formulated with auxiliary real variables (the function numbers arcs) and binary variables through the equality (2) and the three inequalities (5)–(7). Constraints (7) force single-path routing, and constraints (6) keep the relation between the auxiliary variables and the binary variables.
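To make the roles of the constraints concrete, the following sketch checks a candidate solution of a tiny instance: for every demand, the used arcs must form one simple path from source to destination, the rate must lie within its bounds, and the total rate on every arc must not exceed its capacity (the constraint that couples the flows). It is a simplification under our own assumptions: capacities are attached to directed arcs, and the auxiliary variables and the exact constraints (2)–(8) of [7] are not reproduced. All names and data are hypothetical.

```python
from collections import defaultdict

def is_single_path(used_arcs, source, dest):
    """True if the set of used directed arcs forms exactly one simple source->dest path."""
    succ = {}
    for u, v in used_arcs:
        if u in succ:               # two used arcs leave the same node: the route splits
            return False
        succ[u] = v
    node, visited, steps = source, {source}, 0
    while node != dest:
        if node not in succ:        # the route stops before reaching the destination
            return False
        node = succ[node]
        if node in visited:         # the route runs into a cycle
            return False
        visited.add(node)
        steps += 1
    return steps == len(used_arcs)  # no used arc lies outside the path

def link_loads(rates, routes):
    """Total rate carried by each arc: sum of x_w over the demands w routed through it."""
    load = defaultdict(float)
    for w, arcs in routes.items():
        for arc in arcs:
            load[arc] += rates[w]
    return load

def is_feasible(rates, routes, demands, capacity, bounds):
    """Check rate bounds, single-path routing and arc capacities for all demands."""
    for w, (s, d) in demands.items():
        lo, hi = bounds[w]
        if not lo <= rates[w] <= hi or not is_single_path(routes[w], s, d):
            return False
    return all(load <= capacity[arc] for arc, load in link_loads(rates, routes).items())

# Hypothetical instance: two demands from node 1 to node 4 on a 4-node graph.
demands = {"a": (1, 4), "b": (1, 4)}
capacity = {(1, 2): 10.0, (2, 4): 10.0, (1, 3): 10.0, (3, 4): 10.0}
bounds = {"a": (1.0, 8.0), "b": (1.0, 8.0)}
rates = {"a": 6.0, "b": 7.0}
print(is_feasible(rates, {"a": {(1, 2), (2, 4)}, "b": {(1, 3), (3, 4)}},
                  demands, capacity, bounds))  # True: disjoint paths
print(is_feasible(rates, {"a": {(1, 2), (2, 4)}, "b": {(1, 2), (2, 4)}},
                  demands, capacity, bounds))  # False: load 13 > 10 on a shared path
```

Without the coupling capacity check, each demand could be validated on its own, which is what makes decomposition with respect to flows attractive.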

3. Augmented Lagrangian Algorithms

We consider the constrained optimization problem of the form

s.t.

where the function is continuous and differentiable, is linear, and the set is compact. For simplicity, we do not consider inequality constraints, because they can be either transformed to equality constraints (10) with the help of (additional) slack variables or be a part of the definition of the set X.

In the 1950s, the Lagrangian relaxation method was proposed [33,34,35] to solve the minimization problem (9)–(10) in the convex case, using the Lagrangian function defined as

where is the Lagrange multipliers vector. This method uses the associated dual function q for (11), given as

According to duality theory, the optimal Lagrange multipliers vector maximizes the dual function, that is, we should calculate

Usually, we iterate alternately on x and in the following way:

where is a scalar stepsize coefficient.
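The alternating iterations can be illustrated on a convex toy problem of our own choosing, min x1² + x2² s.t. x1 + x2 - 1 = 0, with the Lagrangian written as f(x) + λh(x) (sign convention assumed). The x-step then has the closed form x1 = x2 = -λ/2, and the multiplier step moves λ along the constraint residual with a fixed stepsize, called `alpha` here.

```python
def dual_ascent(alpha=0.5, iters=100):
    """Lagrangian relaxation for the toy problem min x1^2 + x2^2 s.t. x1 + x2 - 1 = 0."""
    lam = 0.0
    for _ in range(iters):
        # x-step: minimize L(x, lam) = x1^2 + x2^2 + lam*(x1 + x2 - 1);
        # the gradient vanishes at x1 = x2 = -lam/2.
        x1 = x2 = -lam / 2.0
        # lambda-step: ascent along the dual gradient, i.e. the residual h(x)
        lam += alpha * (x1 + x2 - 1.0)
    return (x1, x2), lam

def dual_function(lam):
    """q(lam) = min_x L(x, lam) = -lam^2/2 - lam for this toy problem."""
    return -lam ** 2 / 2.0 - lam

(x1, x2), lam = dual_ascent()
print(round(x1, 6), round(x2, 6), round(lam, 6))  # 0.5 0.5 -1.0
```

The iterates approach x = (0.5, 0.5) and λ = -1, and q(-1) = 0.5 equals the primal minimum, so in this convex case there is no duality gap.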

The major drawback of the Lagrangian relaxation method is the requirement that the problem should possess a convex structure, which reduces its applicability. Applying it to nonconvex problems, including problems with discrete variables, can result in a duality gap, whereby the maximum of the dual function does not equal the minimum of the original problem.

Powell [36] and Hestenes [37] convexified the problem (9)–(10) by adding the squared norm of the equality constraints to the Lagrangian, which gives the augmented Lagrangian

where is a scalar penalty parameter. This function is convex in a neighborhood of when is taken sufficiently large and if, at , the second-order sufficient optimality condition

is satisfied.
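The duality gap, and how the quadratic penalty removes it, can be seen on a deliberately tiny nonconvex example (ours, not from the paper): X = {0, 1, 2}, f(x) = -(x - 1)², h(x) = x - 1. The only feasible point is x = 1 with f = 0, but the ordinary dual function never exceeds -1. With the penalty (c/2)h(x)² and c = 3, the sum f + (c/2)h² = ½(x - 1)² becomes convex on this instance and the best dual value rises to 0. The maximization over λ is approximated by a grid search, which suffices here.

```python
X = [0, 1, 2]                          # small discrete (hence nonconvex) feasible set

def f(x):
    return -(x - 1) ** 2               # objective

def h(x):
    return x - 1                       # equality constraint h(x) = 0

def dual_value(lam, c=0.0):
    """min over X of f + lam*h + (c/2)*h^2; c = 0 gives the ordinary dual function."""
    return min(f(x) + lam * h(x) + 0.5 * c * h(x) ** 2 for x in X)

primal = min(f(x) for x in X if h(x) == 0)          # only x = 1 is feasible
lam_grid = [k / 100.0 for k in range(-500, 501)]    # search lambda in [-5, 5]
ordinary_dual = max(dual_value(lam) for lam in lam_grid)
augmented_dual = max(dual_value(lam, c=3.0) for lam in lam_grid)
print(primal, ordinary_dual, augmented_dual)        # 0 -1.0 0.0
```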

The method of multipliers algorithm for solving problem (9)–(10), based on the augmented Lagrangian, consists of the following iterations:
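A minimal sketch of these iterations follows, assuming the standard form x^{k+1} ∈ argmin over x ∈ X of L_c(x, λ^k), then λ^{k+1} = λ^k + c·h(x^{k+1}), with a fixed penalty parameter c. The inner minimization is done by enumerating a small finite set X, standing in for the MINLP solver used on the real problem; the toy data (X = {0, 1, 2}, f(x) = -x, h(x) = x - 1) are ours.

```python
def method_of_multipliers(f, h, X, c=1.0, lam=0.0, iters=20, tol=1e-9):
    """Method of multipliers for min f(x) s.t. h(x) = 0 over a finite set X (scalar h)."""
    def aug_lagrangian(x):
        # reads the current value of lam from the enclosing scope
        return f(x) + lam * h(x) + 0.5 * c * h(x) ** 2

    for _ in range(iters):
        # primal step: minimize the augmented Lagrangian for the current multiplier
        x = min(X, key=aug_lagrangian)
        # dual step: shift the multiplier by c times the constraint residual
        lam += c * h(x)
        if abs(h(x)) <= tol:     # feasible point: the multiplier stops changing
            break
    return x, lam

x_opt, lam_opt = method_of_multipliers(lambda x: -x, lambda x: x - 1, X=[0, 1, 2])
print(x_opt, lam_opt)  # 1 1.0
```

In the first iteration, the penalty is too weak to enforce feasibility (x = 2), but after one multiplier update the minimizer is the feasible point x = 1, and λ = 1 satisfies the stationarity condition f'(x) + λh'(x) = -1 + λ = 0.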

When , a multiplier estimate function derived from the first-order Karush–Kuhn–Tucker optimality condition [33] can be used [38,39] to reduce the number of steps of algorithm (18)–(19):

This formula is particularly useful when can be calculated analytically, e.g., when the functions are linear. Then, having , one can replace the two-level algorithm (18)–(19) with a single-level one:
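For linear equality constraints h(x) = Ax - b, a common multiplier estimate of this kind (assumed here; the exact form of (20) may differ in details) is the least-squares solution of the stationarity condition ∇f(x) + Aᵀλ = 0, that is, λ(x) = -(AAᵀ)⁻¹A∇f(x), which requires A to have full row rank. The sketch below evaluates it in plain Python, with a small Gaussian elimination instead of a linear algebra library; the function names are hypothetical.

```python
def solve(M, r):
    """Solve the square system M y = r by Gaussian elimination with partial pivoting."""
    n = len(M)
    a = [list(row) + [ri] for row, ri in zip(M, r)]       # augmented matrix [M | r]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(a[i][k]))  # pivot row
        a[k], a[p] = a[p], a[k]
        for i in range(k + 1, n):
            m = a[i][k] / a[k][k]
            for j in range(k, n + 1):
                a[i][j] -= m * a[k][j]
    y = [0.0] * n
    for i in reversed(range(n)):                           # back substitution
        y[i] = (a[i][n] - sum(a[i][j] * y[j] for j in range(i + 1, n))) / a[i][i]
    return y

def multiplier_estimate(A, grad_f):
    """Least-squares multipliers lam = -(A A^T)^{-1} A grad_f for h(x) = A x - b."""
    AAt = [[sum(p * q for p, q in zip(ri, rj)) for rj in A] for ri in A]
    A_g = [sum(p * q for p, q in zip(row, grad_f)) for row in A]
    return [-v for v in solve(AAt, A_g)]

# f(x) = x1^2 + x2^2 at x = (0.5, 0.5), so grad f = (1, 1); h(x) = x1 + x2 - 1
print(multiplier_estimate([[1.0, 1.0]], [1.0, 1.0]))  # [-1.0]
```

With such an estimate, the multiplier no longer needs its own outer iteration, which is what allows a single-level algorithm when the estimate is cheap to evaluate.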

4. Separable Problems and Their Decomposition Algorithms

4.1. Classical Separable Problem Formulation

The problem (9)–(10) is said to be separable if it can be written as

where are subvectors of decision variables, , , are given vector functions that describe constraints, and , . The set X is a Cartesian product of the sets in the corresponding subspaces.

The augmented Lagrangian for (22)–(24) can be written as

The separable problem (22)–(24) yields a nonseparable augmented Lagrangian because of the last, quadratic penalty term; hence, decomposition algorithms cannot be applied directly to the dual of problem (22)–(24). To avoid this nonseparability, some tricks are necessary, which we describe in the following subsections. The most important conditions for the convergence of the presented algorithms to the optimal point for sufficiently large are that all functions in the problem statement are of class in a neighborhood of the optimal point , that the gradients of all active constraints are linearly independent at , and that the second-order sufficient optimality condition (17) is satisfied (for the ordinary Lagrangian of the problem).
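The source of the nonseparability can be made explicit by expanding the penalty term. Writing, for illustration only, the coupling constraints as the sum of block functions h_i(x_i) over N blocks, with penalty coefficient c (notation assumed here, not taken from (22)–(25)):

```latex
\frac{c}{2}\Big\|\sum_{i=1}^{N} h_i(x_i)\Big\|^2
  = \frac{c}{2}\sum_{i=1}^{N}\big\|h_i(x_i)\big\|^2
  + c\sum_{i=1}^{N}\sum_{j=i+1}^{N} h_i(x_i)^{\top} h_j(x_j)
```

The first sum splits into block terms, but each cross product h_i(x_i)ᵀh_j(x_j) couples two subvectors; these are the terms that the methods of the following subsections must work around.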

4.2. Bertsekas Decomposition Method

Bertsekas [25] proposed a convexification method that preserves the separability of a modified Lagrangian. It belongs to the family of proximal point algorithms and may be interpreted as a method of multipliers in disguise [40]. The penalty term now expresses not the squared norm of the constraint functions but the squared distance between the vector x and a parameter vector s from its vicinity. In subsequent iterations, this vector approaches x, which leads to convergence while preserving separability. The resulting Lagrangian is

This function is separable and locally convex for from some interval provided that the above mentioned conditions are satisfied at point [25]. In a decomposed form, the augmented Lagrangian (26) can be written as

where

We adapted the Bertsekas [25] approach to an MINLP problem. The most efficient variant proved to be a two-level version with an additional scaling coefficient in the formula for updating the Lagrange multipliers. This algorithm may be described by the following steps:

where is a relaxation parameter.
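The following is a simplified sketch of the proximal idea on scalar blocks with finite local sets, not a transcription of steps (29)–(31). The augmented term is (c/2)‖x - s‖², so for fixed λ and s the minimization splits into independent block problems; then λ is updated with a scaling coefficient `alpha`, and s is moved toward x with a relaxation parameter `beta`. The placement of `alpha` and `beta`, the single loop (instead of two levels) and the toy instance are our assumptions.

```python
def block_argmin(f_i, h_i, X_i, lam, s_i, c):
    """Minimize f_i(z) + lam*h_i(z) + (c/2)*(z - s_i)^2 over the finite set X_i."""
    return min(X_i, key=lambda z: f_i(z) + lam * h_i(z) + 0.5 * c * (z - s_i) ** 2)

def proximal_multiplier_method(fs, hs, b, Xs, s, c=1.0, alpha=1.0, beta=1.0, iters=20):
    """Sketch for min sum_i f_i(x_i) s.t. sum_i h_i(x_i) = b, x_i in X_i (scalar blocks).

    The proximal term (c/2)*||x - s||^2 replaces the penalty on the constraints,
    so for fixed (lam, s) the minimization splits into independent block problems.
    """
    lam = 0.0
    for _ in range(iters):
        # independent block minimizations (these calls could run in parallel)
        x = [block_argmin(f, h, X, lam, s_i, c) for f, h, X, s_i in zip(fs, hs, Xs, s)]
        residual = sum(h(x_i) for h, x_i in zip(hs, x)) - b
        lam += alpha * residual                                   # scaled multiplier step
        s = [s_i + beta * (x_i - s_i) for s_i, x_i in zip(s, x)]  # move s toward x
    return x, lam

# Hypothetical instance: min (x1-2)^2 + (x2-2)^2 s.t. x1 + x2 = 2, x_i in {0, 1, 2}
fs = [lambda z: (z - 2) ** 2] * 2
hs = [lambda z: z] * 2
x, lam = proximal_multiplier_method(fs, hs, b=2, Xs=[[0, 1, 2]] * 2, s=[2.0, 2.0])
print(x, lam)  # [1, 1] 2.0
```

On this instance, the first iteration returns the infeasible point (2, 2); after one multiplier update, the blocks settle at the optimum (1, 1) with λ = 2, which satisfies 2(x_i - 2) + λ = 0.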

4.3. Tanikawa–Mukai Decomposition Method

When in the Bertsekas algorithm, we may apply the approximation of the multipliers vector given by the direct formula (20). Unfortunately, when substitutes in (26), the resulting Lagrangian is no longer separable because of the term , which contains products of functions of different components, . Hence, separability would be preserved only if did not depend on x. To address this issue, Tanikawa and Mukai [26] replaced with an approximation and added a penalty term so as to ensure that the minimum point is closer than s to . These improvements resulted in the formula

where is a scalar, and is a symmetric, positive-definite matrix whose elements are of class. The Lagrangian (32) is locally strictly convex in x in a neighborhood of if is taken large enough, and it has a unique local minimum point as a function of s.

In a decomposed form, (32) can be written as

where

The problem (22)–(24) for can now be solved by a two-level algorithm,

Tatjewski and Engelmann [28] extended this approach to a more general case of the problem (22)–(24), involving local sets , given by inequality constraints

In this case, the Lagrange multipliers vector was calculated from the following formula:

where is the multipliers vector for local inequality constraints

4.4. Tatjewski Decomposition Method

A different approach to handling the nonseparable term in Function (25) with local sets given by (37), proposed by Tatjewski [27], consists in replacing Function (25) with

where is an approximation point. The Function (40) is separable

where

The algorithm, which we adapted to an MINLP problem, has, as in the case of the Bertsekas algorithm, two levels and an additional scaling factor in the formula for updating the Lagrange multipliers:

where is a relaxation parameter.
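One simple way to obtain a separable substitute for the coupling penalty, shown only to illustrate the idea of freezing the other blocks at an approximation point (the exact form of Function (40) may differ), is to replace every cross product h_i·h_j by its linearization around that point. The sketch uses scalar block constraint values; `penalty`, `separable_penalty_terms` and `hhat` are hypothetical names.

```python
def penalty(hvals, c):
    """Exact coupling penalty (c/2)*(sum_i h_i)^2 for scalar block constraint values."""
    return 0.5 * c * sum(hvals) ** 2

def separable_penalty_terms(hvals, hhat, c):
    """Per-block terms of the penalty linearized around the approximation point.

    Every cross product h_i*h_j is replaced by h_i*hhat_j + hhat_i*h_j - hhat_i*hhat_j,
    so block i needs only its own value h_i and the frozen values hhat_j, j != i.
    """
    total_hat = sum(hhat)
    terms = []
    for h_i, hhat_i in zip(hvals, hhat):
        others = total_hat - hhat_i                    # sum of hhat_j over j != i
        terms.append(0.5 * c * h_i ** 2                # own quadratic term
                     + c * h_i * others                # coupling with the frozen blocks
                     - 0.5 * c * hhat_i * others)      # this block's share of the constant
    return terms

hhat = [1.0, -2.0, 0.5]   # constraint values at the approximation point
print(penalty(hhat, 2.0), sum(separable_penalty_terms(hhat, hhat, 2.0)))  # 0.25 0.25
```

The difference between the exact and the approximate penalty equals c times the sum over i < j of (h_i - ĥ_i)(h_j - ĥ_j), so the approximation is exact at the approximation point and also whenever only one block deviates from it. In an iteration of this type, each subproblem would minimize its own objective, multiplier term and penalty term over its local set, after which the approximation point is updated.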

4.5. SALA Decomposition Algorithm in ADMM Version

The Alternating Direction Method of Multipliers (ADMM) was proposed by Glowinski and Marrocco [41] and by Gabay and Mercier [42]. In its basic version, ADMM solves problems that depend on two subvectors of the decision variables vector, x and s, calculating the new estimates of the solution in the Gauss–Seidel fashion. For our particular nonconvex problem with local constraints, the best choice seems to be the Separable Augmented Lagrangian Algorithm (SALA) proposed by Hamdi et al. [29,43,44].

In SALA, first, the problem (22)–(24) is reformulated into

where are additional, artificial variables. The multiplier method with partial elimination of constraints [15] is then applied to this formulation: constraints (47) are eliminated by means of dualization and a penalty, while constraints (48) are retained explicitly.

The augmented Lagrangian for problem (46)–(49) with elimination of constraints (47) only has the form (it can easily be proved that ):

where

The SALA method of multipliers can be summed up in the following stages:

Using the ADMM approach for problem (52), we can separate optimizations with respect to x and s. Moreover, the optimization with respect to x can be split among N subproblems, that is, we finally obtain the ADMM algorithm with a Jacobi (i.e., parallelizable) part with respect to the x vector:
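The structure of such an iteration can be sketched on a convex toy problem chosen by us so that every step has a closed form: min Σ_i ½(x_i - a_i)² s.t. Σ_i (x_i - b/N) = 0. We assume the splitting h_i(x_i) + y_i = 0 (eliminated by the augmented Lagrangian), with Σ_i y_i = 0 retained explicitly. The x-step is a Jacobi step over independent blocks, the y-step is a projection onto {Σ_i y_i = 0}, and the multipliers are updated last. In the network problem, each x-step would instead be a small MINLP for one flow.

```python
def sala_admm(a, b, c=1.0, iters=200):
    """ADMM/SALA sketch for min sum 0.5*(x_i - a_i)^2 s.t. sum_i h_i(x_i) = 0,
    with h_i(x_i) = x_i - b/N, split as h_i(x_i) + y_i = 0 and sum_i y_i = 0."""
    n = len(a)
    t = b / n
    y = [0.0] * n          # allocation variables, always summing to zero
    lam = [0.0] * n        # one multiplier per split constraint h_i + y_i = 0
    for _ in range(iters):
        # Jacobi step: each block independently minimizes
        # 0.5*(x - a_i)^2 + lam_i*(x - t + y_i) + (c/2)*(x - t + y_i)^2
        x = [(ai - li + c * (t - yi)) / (1.0 + c) for ai, li, yi in zip(a, lam, y)]
        h = [xi - t for xi in x]
        # y-step: minimize sum lam_i*y_i + (c/2)*(h_i + y_i)^2 s.t. sum y_i = 0,
        # i.e. project the unconstrained minimizers -h_i - lam_i/c onto sum y = 0
        y_free = [-hi - li / c for hi, li in zip(h, lam)]
        mean = sum(y_free) / n
        y = [v - mean for v in y_free]
        # multiplier step on the eliminated constraints h_i + y_i = 0
        lam = [li + c * (hi + yi) for li, hi, yi in zip(lam, h, y)]
    return x, lam

x, lam = sala_admm([1.0, 2.0, 3.0], b=3.0)
print(x, lam)
```

For a = (1, 2, 3) and b = 3, the iterates approach x = (0, 1, 2), the solution of the toy problem, and all multipliers approach 1. Substituting the y-step into the multiplier step shows that here the update reduces to λ_i ← mean_j λ_j + c·mean_j h_j(x_j), so all λ_i coincide after the first update even if they are initialized differently.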

5. Decomposition of the Network Problem

Unfortunately, not all the methods presented in Section 4 can be applied to solve our network problem (1)–(8). The Tanikawa–Mukai and Tatjewski–Engelmann methods from Section 4.3 are not suitable because this is a mixed-integer problem, for which the Karush–Kuhn–Tucker optimality conditions, the basis of the explicit formulas for calculating the Lagrange multipliers, are not well defined. Fortunately, all the remaining methods can be applied.

We solve problem (1)–(8) by decomposing it with respect to flows . Hence, it is convenient to introduce, for every flow w, the admissible set resulting from Equations (2), (3) and (5)–(8). This set for the flow w is defined as

5.1. The Standard Multiplier Method without Decomposition

The augmented Lagrangian for (1)–(8) is

where are slack variables. To find, for every , the value of that minimizes the augmented Lagrangian (61) under the current conditions (that is, for given ), we write

The unconstrained minimum of the expression in square brackets in (62) is the scalar at which the derivative is 0

that is

Hence, the solution of problem (62) is

and

We know that at iteration ,

Substituting

into (67), we have

Taking into account (66), we can solve (1)–(8) using the algorithm (18)–(19), which will take the form

with .
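For a single inequality constraint g(x) ≤ 0 written as g(x) + z = 0 with a slack z ≥ 0, the minimization over the slack has a well-known closed form (see, e.g., [15]): z* = max(0, -g - λ/c), the minimized terms equal (max(0, λ + cg)² - λ²)/(2c), and the multiplier update λ + c(g + z*) becomes max(0, λ + cg). We assume that this is the structure behind the derivation above, with g standing, e.g., for the total rate on a link minus its capacity; the sketch below uses hypothetical names and a hypothetical link.

```python
def slack_minimizer(g, lam, c):
    """Slack z >= 0 minimizing lam*(g + z) + (c/2)*(g + z)^2 for fixed g, lam and c > 0."""
    return max(0.0, -g - lam / c)

def minimized_terms(g, lam, c):
    """Value of that minimum: (max(0, lam + c*g)^2 - lam^2) / (2*c)."""
    return (max(0.0, lam + c * g) ** 2 - lam ** 2) / (2.0 * c)

def multiplier_update(g, lam, c):
    """lam + c*(g + z*) simplifies to max(0, lam + c*g), so lam stays nonnegative."""
    return max(0.0, lam + c * g)

# Hypothetical link: load 9.0, capacity 10.0, so g = -1.0 (constraint not active)
g, lam, c = 9.0 - 10.0, 1.0, 4.0
print(slack_minimizer(g, lam, c), multiplier_update(g, lam, c))  # 0.75 0.0
```

Eliminating the slacks in this way leaves an unconstrained expression in the original variables, which is why no explicit slack optimization is needed in the iterations.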

5.2. The Bertsekas Method

The augmented Lagrangian in the Bertsekas method for the problem (1)–(8) will have the form

where are slack variables and for . Formula (72) is equivalent to

where

and

To find, for every , the value of that minimizes the augmented Lagrangian (72), we solve the following problems independently:

The unconstrained minimum of the expression in square brackets in (76) is the scalar at which the derivative is 0, that is

Hence,

and the solution of problem (76) is

Now, we can perform the subsequent iterations of the algorithm (29)–(31).
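In the decomposed methods, each iteration requires one local problem per flow w over its admissible set. The sketch below illustrates the structure of such a local problem on a tiny directed graph. For given nonnegative link prices `mu` (playing the role of multipliers of the capacity constraints), it enumerates the simple source–destination paths and, for each path, chooses the rate in [lo, hi] minimizing a hypothetical cost q·(hi - x)² + e·x·(number of links) + x·(sum of link prices on the path). The cost, the depth-first enumeration (in place of a solver such as Gurobi) and all names are assumptions, and the proximal or penalty terms of the actual methods are omitted.

```python
def simple_paths(succ, node, dest, visited=None):
    """Yield all simple paths (as lists of arcs) from node to dest by depth-first search."""
    visited = visited or {node}
    if node == dest:
        yield []
        return
    for nxt in succ.get(node, []):
        if nxt not in visited:
            for rest in simple_paths(succ, nxt, dest, visited | {nxt}):
                yield [(node, nxt)] + rest

def solve_flow_subproblem(succ, source, dest, mu, lo, hi, q=1.0, e=0.1):
    """Best (path, rate, cost) for one flow, given link prices mu (hypothetical cost)."""
    best = None
    for path in simple_paths(succ, source, dest):
        k = e * len(path) + sum(mu.get(arc, 0.0) for arc in path)  # cost per unit of rate
        # minimize q*(hi - x)^2 + k*x over [lo, hi]: stationary point, then clip
        x = min(max(hi - k / (2.0 * q), lo), hi)
        cost = q * (hi - x) ** 2 + k * x
        if best is None or cost < best[2]:
            best = (path, x, cost)
    return best

succ = {1: [2, 3], 2: [4], 3: [4]}   # hypothetical graph with two paths from 1 to 4
path, x, cost = solve_flow_subproblem(succ, 1, 4, {(1, 2): 5.0}, lo=1.0, hi=8.0)
print(path, x)
```

A high price on link (1, 2) moves the flow to the path through node 3, and very high prices on all paths push the rate to its lower bound; since the local problems share only the prices, they can be solved in parallel.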

5.3. The Tatjewski Method

The Tatjewski Lagrangian of (1)–(8) in a decomposed form can be written as

where are slack variables. Formula (80) may be rewritten in the following way:

where

and

To find, for every , the value of that minimizes the augmented Lagrangian (80), we solve the following problems independently:

The unconstrained minimum of the expression in square brackets in (84) is attained at the scalar at which the derivative is 0, that is

Hence,

The solution of problem (84) is

Now, we can execute the iterations of the Tatjewski algorithm (43)–(45).

5.4. SALA ADMM Algorithm

In the network problem (1)–(8), the constraints binding flows (4) are of the inequality type; hence, in our version of the problem formulation to be solved by the SALA algorithm (46)–(49), we introduce slack variables , such that

where

Then, (88) may be written as

The equality constraint for the flow in link will be as follows:

The augmented Lagrangian is

where

The algorithm (55)–(59) adapted to this problem will take the form

6. Numerical Tests

The algorithms described above were implemented and tested on ten network problems of different sizes. The tested networks consisted of loosely connected clusters of strongly connected nodes. The problems were first solved without decomposition and later with decomposition, which allows for future parallelization (however, our tests were performed sequentially).

Python 3.7.7 was used for all implementations and numerical experiments. The amplpy package [45,46] was employed to construct and evaluate the optimization problems, the Gurobi solver was used for the optimization, and the NetworkX package [47] was applied to create and display the networks related to the optimization problems.

The tests were performed on a machine with an AMD Ryzen 5 4600H with Radeon Graphics 3.00 GHz processor (x64-based), 32 GB of RAM and 512 GB of SSD storage, running the 64-bit Windows 10 Pro operating system.

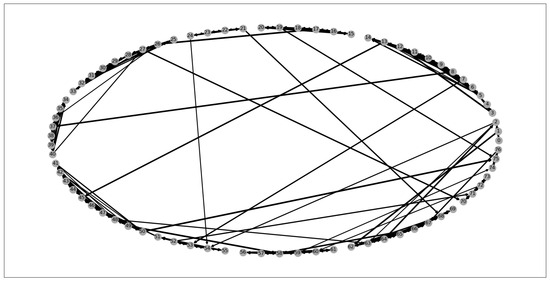

Five distinct network topologies were created for the study. The first two topologies, the medium networks, had fewer variables; they are described in Table 1 and shown in Figure 1. The next two topologies, the large networks, had more variables; they are described in Table 1 and shown in Figure 2. The last topology, the extra-large network, had many more variables; it is described in Table 1 and shown in Figure 3. The source and destination nodes for each problem instance were generated randomly, along with the link capacities. Problem instances were created for all network topologies.

Table 1.

Problem instances.

Figure 1.

Medium network topology.

Figure 2.

Large network topology.

Figure 3.

Extra-large network topology for .

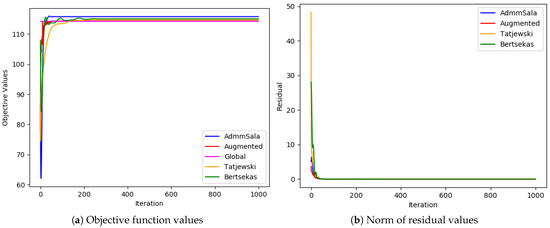

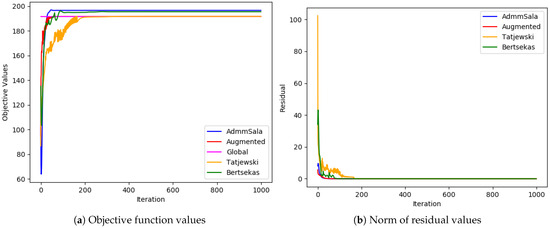

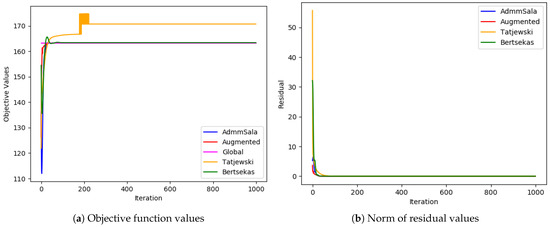

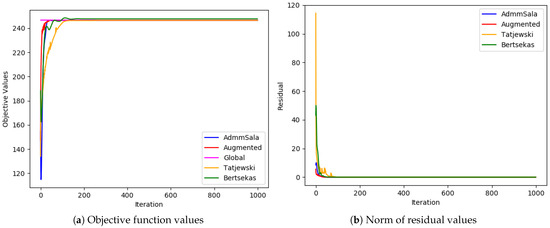

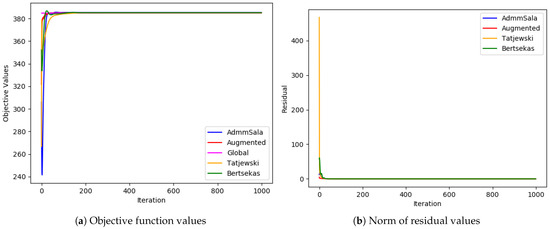

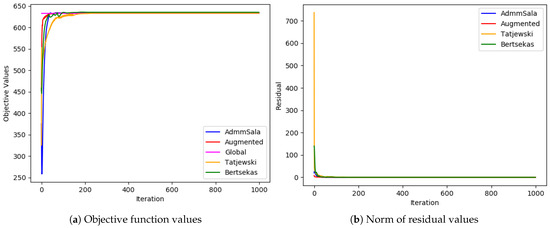

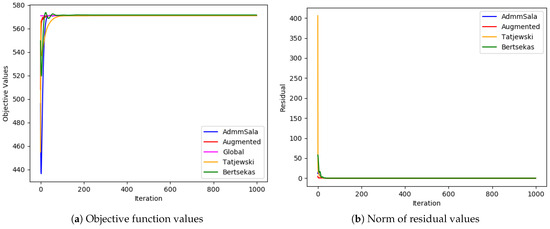

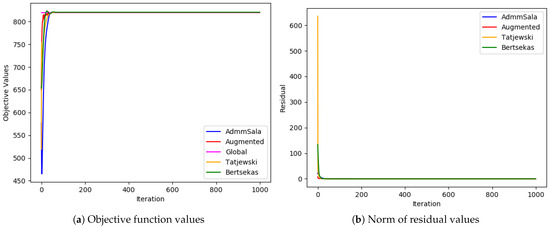

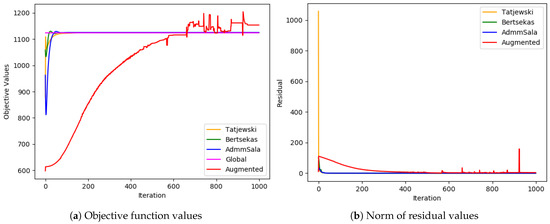

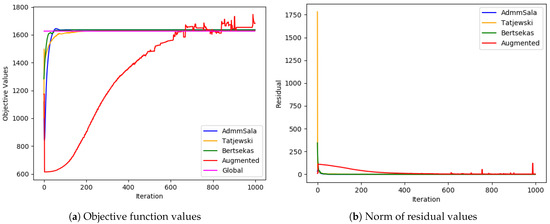

Figure 4, Figure 5, Figure 6, Figure 7, Figure 8, Figure 9, Figure 10, Figure 11, Figure 12 and Figure 13 show the values of the objective Function (1) and the norm of the constraint residuals in subsequent iterations of all the optimization algorithms considered in Section 5.

Figure 4.

Medium problem ( and ).

Figure 5.

Medium problem ( and ).

Figure 6.

Medium problem ( and ).

Figure 7.

Medium problem ( and ).

Figure 8.

Large problem ( and ).

Figure 9.

Large problem ( and ).

Figure 10.

Large problem ( and ).

Figure 11.

Large problem ( and ).

Figure 12.

Extra-large problem ( and ).

Figure 13.

Extra-large problem ( and ).

In most cases, all algorithms based on the augmented Lagrangian method dealt very well with the duality gap (the largest value of the gap was less than ; in most cases, it was below ), which is a serious issue when Lagrangian relaxation is applied to MINLP problems such as the simultaneous optimization of routing and bandwidth allocation [3,4,7]. Among the algorithms tested, the standard augmented Lagrangian method achieved the objective value with the smallest relative error for medium and large problems. In most tests, the Tatjewski and ADMM SALA algorithms also produced competitive results, though with slightly higher errors, and the Bertsekas algorithm was usually, but not always, a little worse. For the extra-large problems, the Tatjewski algorithm proved to be the most precise; the ADMM SALA and Bertsekas algorithms were a little worse, but they all delivered approximate solutions with a very small relative error, less than . The ordinary augmented Lagrangian algorithm did not converge within 8 h.

Regarding constraint violation, the algorithms are comparable: they all deliver (provided that they converge) an admissible solution with the assumed accuracy . As expected, none of the algorithms incurred any routing violations, indicating their ability to generate feasible routing solutions that satisfy the network flow conservation equations.

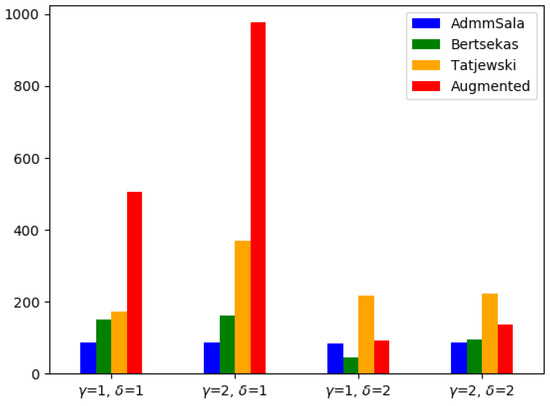

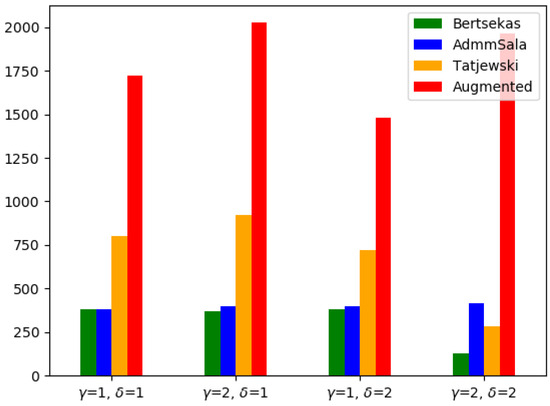

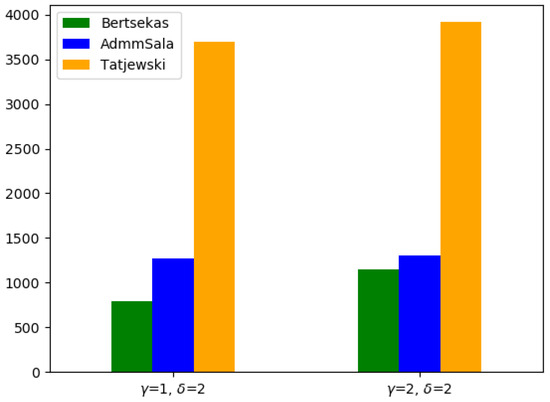

The analysis of run times presented in Table 2, Table 3 and Table 4 and Figure 14, Figure 15 and Figure 16 reveals that, while the medium problems are solved very fast by one of the best commercial solvers (Gurobi), for large problems the AL-based decomposed algorithms are competitive. The run times needed to deliver a good approximation of the optimal solution were 2–3 times shorter than those of Gurobi for the standard AL algorithm and 4–14 times shorter (for one test example, even 31.5 times) for the algorithms with decomposition. For the extra-large problems, the decomposed algorithms were 1.6–26 times faster than the Gurobi solver. The standard augmented Lagrangian method required significantly more time than the decomposed algorithms to solve both medium and large problems and failed for the extra-large problems.

Table 2.

Medium problems: Objective values and relative errors (in %) with respect to the centralized (“Global”) Gurobi solution (in brackets) for the tested AL algorithms with, respectively, and , and , and , and .

Table 3.

Large problems: Objective values and relative errors (in %) with respect to the centralized (“Global”) Gurobi solution (in brackets) for the tested AL algorithms with, respectively, and , and , and , and .

Table 4.

Extra-large problems: Objective values and relative errors (in %) with respect to the centralized (“Global”) Gurobi solution (in brackets) for the tested AL algorithms with, respectively, and , and .

Figure 14.

Run times for the Medium problems.

Figure 15.

Run times for the Large problems.

Figure 16.

Run times for the Extra-large problems.

The results highlight the trade-offs between the various Lagrangian-based optimization algorithms. The standard augmented Lagrangian method excels in finding optimal routing solutions for moderately sized problems, but at the cost of increased computational time. The Bertsekas algorithm offers a balanced approach, achieving competitive results with shorter run times. Tatjewski's algorithm maintains high solution accuracy for big problems, making it suitable for scenarios with stringent demands. For very big problems, ADMM SALA is slightly worse in terms of the objective function, but it is quite fast.

It should be mentioned that the above AL-based methods will work also for more complicated two-layer network problems, e.g., with logarithmic objective functions [3].

7. Conclusions

We have discussed several algorithms for solving separable mixed-integer problems in a decomposed way and implemented them using Python and amplpy. We have tested these implementations on a network optimization problem of simultaneous routing and bandwidth allocation.

Since this network optimization problem has inequality constraints that bind the subproblems, whereas in the original presentations of these methods the binding constraints were equalities, we reformulated the methods accordingly.

Based on the results of the numerical experiments, we can conclude that all the augmented Lagrangian-based algorithms (standard, Bertsekas, Tatjewski and SALA) overcome the duality gap, which is a serious disadvantage of using the Lagrangian relaxation method to solve the two-layer routing and bandwidth allocation problem with single paths [3,4,7]. They deliver a very good approximation of the exact optimal solution in a relatively short time, usually 4–10 times shorter than that of the exact commercial solvers.

Solving the local optimization problems for particular flows independently, in parallel, should further speed up the decomposed algorithms.

The choice of a particular AL-based optimization algorithm should be tailored to the specific requirements of the network problem. The standard augmented Lagrangian method stands out as the natural choice for small problems. The Bertsekas method offers a strong compromise between solution quality and run-time efficiency and is hence suitable for large-scale problems where decomposition is needed, while the Tatjewski method excels in solution quality. Ultimately, the selection of the algorithm should align with the priorities of the network optimization problem at hand.

Our formal analysis sheds light on the mathematical difficulties of Lagrangian-based optimization algorithms in the context of two-layer network problems, providing some insights for practitioners and researchers in the field of information technology and network optimization.

Our future work on the simultaneous routing and bandwidth allocation problem in energy-aware networks will concern QoS components of the objective function other than quadratic ones (e.g., logarithmic [3]), nonseparable problems, and more complicated models of the cost of energy, including unidirectional links.

Author Contributions

Conceptualization, A.K.; methodology, A.C.N. and A.K.; software, A.C.N.; validation, A.C.N. and A.K.; formal analysis, A.C.N. and A.K.; investigation, A.C.N. and A.K.; resources, A.C.N. and A.K.; numerical tests, A.C.N.; writing—original draft preparation, A.C.N. (except for Section 1, Section 2, Section 4.5 and Section 5.4—A.K.); writing—final editing, A.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The most important variables and parameters used in the network problem formulation are as follows:

| set of all network nodes and a single node, respectively; | |

| set of all network arcs and a single arc, respectively; | |

| set of all links labeled by subsequent natural numbers and a single labeled link, respectively; | |

| one-to-one mapping from arcs to links labeled by a single natural number; | |

| set of all demands (flows) and a single demand, respectively; | |

| source and destination node for the specific demand (flow) w, respectively; | |

| flow rate for the specific demand ; | |

| lower and upper bound on the flow rate for the demand w; we assume that ; | |

| capacity of the link l, ; | |

| binary routing decision variable, whether the link l is used by the demand w; | |

| vector of routing variables defining a path for the demand | |

| positive parameter—the weight of the QoS part of the objective function; | |

| positive parameter—the weight of the energy usage part of the objective function. |

References

- Data Centres and Data Transmission Networks. Available online: https://www.iea.org/reports/data-centres-and-data-transmission-networks (accessed on 12 February 2024).

- Koot, M.; Wijnhoven, F. Usage impact on data center electricity needs: A system dynamic forecasting model. Appl. Energy 2021, 291, 116798. [Google Scholar] [CrossRef]

- Jaskóła, P.; Arabas, P.; Karbowski, A. Simultaneous routing and flow rate optimization in energy-aware computer networks. Int. J. Appl. Math. Comput. Sci. 2016, 26, 231–243. [Google Scholar] [CrossRef]

- Wang, J.; Li, L.; Low, S.H.; Doyle, J.C. Cross-layer optimization in TCP/IP networks. IEEE/ACM Trans. Netw. 2005, 13, 582–595. [Google Scholar] [CrossRef]

- Jünger, M.; Liebling, T.; Naddef, D.; Nemhauser, G.; Pulleyblank, W.; Reinelt, G.; Rinaldi, G.; Wolsey, L.A. (Eds.) 50 Years of Integer Programming 1958–2008: From the Early Years to the State-of-the-Art; Springer: Berlin/Heidelberg, Germany, 2010. [Google Scholar]

- Li, D.; Sun, X. Nonlinear Integer Programming; Springer: New York, NY, USA, 2006. [Google Scholar]

- Ruksha, I.; Karbowski, A. Decomposition Methods for the Network Optimization Problem of Simultaneous Routing and Bandwidth Allocation Based on Lagrangian Relaxation. Energies 2022, 15, 7634. [Google Scholar] [CrossRef]

- Rockafellar, R. Augmented Lagrange multiplier functions and duality in nonconvex programming. SIAM J. Control. 1974, 12, 268–285. [Google Scholar] [CrossRef]

- Huang, X.; Yang, X. Duality and Exact Penalization via a Generalized Augmented Lagrangian Function. In Optimization and Control with Applications; Qi, L., Teo, K., Yang, X., Eds.; Springer: Boston, MA, USA, 2005; pp. 101–114. [Google Scholar]

- Huang, X.; Yang, X. Further study on augmented Lagrangian duality theory. J. Glob. Optim. 2005, 31, 193–210. [Google Scholar] [CrossRef]

- Burachik, R.S.; Rubinov, A. On the absence of duality gap for Lagrange-type functions. J. Ind. Manag. Optim. 2005, 1, 33–38. [Google Scholar] [CrossRef]

- Nedich, A.; Ozdaglar, A. A geometric framework for nonconvex optimization duality using augmented lagrangian functions. J. Glob. Optim. 2008, 40, 545–573. [Google Scholar] [CrossRef]

- Boland, N.; Eberhard, A. On the augmented Lagrangian dual for integer programming. Math. Program. 2015, 150, 491–509. [Google Scholar] [CrossRef]

- Gu, X.; Ahmed, S.; Dey, S.S. Exact augmented lagrangian duality for mixed integer quadratic programming. SIAM J. Optim. 2020, 30, 781–797. [Google Scholar] [CrossRef]

- Bertsekas, D.P. Constrained Optimization and Lagrange Multiplier Methods; Academic Press: New York, NY, USA, 1982. [Google Scholar]

- Bertsekas, D.P. Multiplier methods: A survey. Automatica 1976, 12, 133–145. [Google Scholar] [CrossRef]

- Wierzbicki, A.P. A penalty function shifting method in constrained static optimization and its convergence properties. Arch. Autom. I Telemech. 1971, 16, 395–416. [Google Scholar]

- Stein, O.; Oldenburg, J.; Marquardt, W. Continuous reformulations of discrete-continuous optimization problems. Comput. Chem. Eng. 2004, 28, 1951–1966. [Google Scholar] [CrossRef]

- Bragin, M.A.; Luh, P.B.; Yan, B.; Sun, X. A scalable solution methodology for mixed-integer linear programming problems arising in automation. IEEE Trans. Autom. Sci. Eng. 2019, 16, 531–541. [Google Scholar] [CrossRef]

- Chen, Y.; Guo, Q.; Sun, H. Decentralized unit commitment in integrated heat and electricity systems using sdm-gs-alm. IEEE Trans. Power Syst. 2019, 34, 2322–2333. [Google Scholar] [CrossRef]

- Cordova, M.; Oliveira, W.d.; Sagastizábal, C. Revisiting augmented Lagrangian duals. Math. Program. 2022, 196, 235–277.

- Liu, Z.; Stursberg, O. Distributed Solution of Mixed-Integer Programs by ADMM with Closed Duality Gap. In Proceedings of the IEEE Conference on Decision and Control, Cancun, Mexico, 6–9 December 2022; pp. 279–286.

- Hong, M. A distributed, asynchronous, and incremental algorithm for nonconvex optimization: An ADMM approach. IEEE Trans. Control Netw. Syst. 2018, 5, 935–945.

- Lin, Z.; Li, H.; Fang, C. Alternating Direction Method of Multipliers for Machine Learning; Springer: Singapore, 2022.

- Bertsekas, D.P. Convexification procedures and decomposition methods for nonconvex optimization problems. J. Optim. Theory Appl. 1979, 29, 169–197.

- Tanikawa, A.; Mukai, H. A new technique for nonconvex primal-dual decomposition of a large-scale separable optimization problem. IEEE Trans. Autom. Control 1985, 30, 133–143.

- Tatjewski, P. New dual-type decomposition algorithm for nonconvex separable optimization problems. Automatica 1989, 25, 233–242.

- Tatjewski, P.; Engelmann, B. Two-level primal-dual decomposition technique for large-scale nonconvex optimization problems with constraints. J. Optim. Theory Appl. 1990, 64, 183–205.

- Hamdi, A.; Mahey, P.; Dussault, J.P. A New Decomposition Method in Nonconvex Programming Via a Separable Augmented Lagrangian. In Recent Advances in Optimization; Gritzmann, P., Horst, R., Sachs, E., Tichatschke, R., Eds.; Springer: Berlin/Heidelberg, Germany, 1997; pp. 90–104.

- Sun, K.; Sun, X.A. A two-level distributed algorithm for nonconvex constrained optimization. Comput. Optim. Appl. 2023, 84, 609–649.

- Houska, B.; Frasch, J.; Diehl, M. An Augmented Lagrangian Based Algorithm for Distributed Nonconvex Optimization. SIAM J. Optim. 2016, 26, 1101–1127.

- Boland, N.; Christiansen, J.; Dandurand, B.; Eberhard, A.; Oliveira, F. A parallelizable augmented Lagrangian method applied to large-scale non-convex-constrained optimization problems. Math. Program. 2019, 175, 503–536.

- Kuhn, H.W.; Tucker, A.W. Nonlinear programming. In Proceedings of the Second Berkeley Symposium on Mathematical Statistics and Probability; Neyman, J., Ed.; University of California Press: Berkeley, CA, USA, 1951; Volume 2, pp. 481–492.

- Arrow, K.J.; Hurwicz, L. Reduction of constrained maxima to saddle-point problems. In Proceedings of the Third Berkeley Symposium on Mathematical Statistics and Probability; Neyman, J., Ed.; University of California Press: Berkeley, CA, USA, 1956; Volume 5, pp. 1–20.

- Arrow, K.; Hurwicz, L.; Uzawa, H. Studies in Linear and Nonlinear Programming; Stanford Mathematical Studies in the Social Sciences; Stanford University Press: Redwood City, CA, USA, 1958.

- Powell, M.J.D. A Method for Nonlinear Constraints in Minimization Problems. In Optimization; Fletcher, R., Ed.; Academic Press: New York, NY, USA, 1969; pp. 283–298.

- Hestenes, M.R. Multiplier and gradient methods. J. Optim. Theory Appl. 1969, 4, 303–320.

- Fletcher, R. A class of methods for nonlinear programming with termination and convergence properties. In Integer and Nonlinear Programming; Abadie, J., Ed.; North-Holland: Amsterdam, The Netherlands, 1970; pp. 157–173.

- Mukai, H.; Polak, E. A quadratically convergent primal-dual algorithm with global convergence properties for solving optimization problems with equality constraints. Math. Program. 1975, 9, 336–349.

- Bertsekas, D.P.; Tsitsiklis, J.N. Parallel and Distributed Computation: Numerical Methods; Prentice Hall Inc.: Englewood Cliffs, NJ, USA, 1989.

- Glowinski, R.; Marroco, A. On the approximation, by finite elements of order one, and the resolution, by penalization-duality, of a class of nonlinear Dirichlet problems. Math. Model. Numer. Anal. 1975, 9, 41–76.

- Gabay, D.; Mercier, B. A dual algorithm for the solution of nonlinear variational problems via finite element approximation. Comput. Math. Appl. 1976, 2, 17–40.

- Hamdi, A. Two-level primal-dual proximal decomposition technique to solve large scale optimization problems. Appl. Math. Comput. 2005, 160, 921–938.

- Hamdi, A.; Mishra, S.K. Decomposition methods based on augmented Lagrangians: A survey. In Topics in Nonconvex Optimization: Theory and Applications; Springer: New York, NY, USA, 2011; Volume 50, pp. 175–203.

- AMPL Optimization Inc. Amplpy: Python API for AMPL. Available online: https://github.com/ampl/amplpy/ (accessed on 21 February 2024).

- Fourer, R.; Gay, D.M.; Kernighan, B.W. AMPL: A Modeling Language for Mathematical Programming, 2nd ed.; Duxbury Press: Belmont, CA, USA, 2003. Available online: https://ampl.com/resources/the-ampl-book/ (accessed on 21 February 2024).

- NetworkX: Network Analysis in Python. Available online: https://networkx.org/ (accessed on 12 February 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).