1. Introduction

The transition toward smart grids is characterized by an increase in distributed energy resources (DERs) such as solar panels and wind turbines [1]. This shift not only enhances the sustainability of energy consumption but also introduces complexity in energy market dynamics [2]. Traditional centralized energy markets struggle to accommodate the growing diversity and number of players, necessitating more adaptive and intelligent solutions [3]. In response, this study proposes a novel approach that synergizes game theory and reinforcement learning (RL) to provide an adaptable, robust framework for optimizing interactions within distributed energy markets.

The objective of our research is to enhance the functionality and resilience of distributed energy markets in smart grids by developing a strategic interaction model that can respond dynamically to the evolving landscape of energy production and consumption. By leveraging game theory to model interactions among agents and RL to enable adaptive learning, this framework aims to create a foundational approach for distributed energy market optimization, which can evolve to meet both current and future challenges.

To illustrate the strategic interactions within the market, we define the utility function $U_i$ for a player $i$ in a distributed energy market, which can be expressed as follows:

$$U_i(x_i) = R_i(x_i) - C_i(x_i),$$

where $x_i$ represents the power output, $C_i(x_i)$ denotes the cost of production, and $R_i(x_i)$ signifies the revenue obtained from selling energy. As the market becomes increasingly competitive, the objective for each agent is to maximize their utility.

Game theory provides a robust framework for understanding strategic interactions among agents in competitive environments [4]. In the context of energy markets, this theory can be applied to model the interactions between various stakeholders, including energy producers, consumers, and regulators. By incorporating game-theoretic principles, our model captures the complex, competitive, and sometimes cooperative interactions within decentralized energy systems.

Concurrently, reinforcement learning (RL) offers powerful algorithms that enable agents to learn optimal strategies through trial and error. This adaptability is essential in distributed energy markets, where conditions are dynamic and agents must continuously adjust their strategies. The optimal value function that an RL agent seeks to learn satisfies the Bellman optimality equation:

$$V(s) = \max_{a} \left[ R(s, a) + \gamma \sum_{s'} P(s' \mid s, a)\, V(s') \right],$$

where $V(s)$ denotes the value of state $s$, $R(s, a)$ is the reward function, $\gamma$ is the discount factor, and $P(s' \mid s, a)$ represents the transition probability to state $s'$ given action $a$. By applying RL, our approach enables agents to adaptively optimize their strategies based on real-time market conditions.
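To make the Bellman equation concrete, the sketch below solves it by value iteration, repeatedly applying the backup on the right-hand side until the values stop changing. The two-state market (price states `low` and `high`, actions `hold` and `sell`), its transition probabilities, and its rewards are hypothetical toy numbers, not parameters of this study.

```python
# Value-iteration sketch of the Bellman optimality equation
#   V(s) = max_a [ R(s, a) + gamma * sum_{s'} P(s' | s, a) * V(s') ]
# on a hypothetical two-state market MDP (illustrative numbers only).

def value_iteration(states, actions, P, R, gamma=0.9, tol=1e-8, max_iter=10_000):
    """Apply the Bellman optimality backup until values change by less than tol."""
    V = {s: 0.0 for s in states}
    for _ in range(max_iter):
        new_V = {
            s: max(
                R[s][a] + gamma * sum(p * V[s2] for s2, p in P[s][a].items())
                for a in actions
            )
            for s in states
        }
        delta = max(abs(new_V[s] - V[s]) for s in states)
        V = new_V
        if delta < tol:
            break
    return V


# Hypothetical market: price state "low" or "high"; actions "hold" or "sell".
states = ["low", "high"]
actions = ["hold", "sell"]
P = {  # P[s][a][s'] = transition probability
    "low": {"hold": {"low": 0.7, "high": 0.3}, "sell": {"low": 0.9, "high": 0.1}},
    "high": {"hold": {"low": 0.4, "high": 0.6}, "sell": {"low": 0.5, "high": 0.5}},
}
R = {  # R[s][a] = immediate reward
    "low": {"hold": 0.0, "sell": 1.0},
    "high": {"hold": 0.0, "sell": 3.0},
}

V = value_iteration(states, actions, P, R, gamma=0.9)
print({s: round(v, 4) for s, v in V.items()})  # {'low': 12.8125, 'high': 15.9375}
```

Because $\gamma < 1$, the backup is a contraction, so the iteration converges to the same fixed point regardless of the starting values.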

This paper synergizes these two methodologies to propose a framework for enhancing the functionality of distributed energy markets in smart grids. By integrating game-theoretic models with reinforcement learning techniques, we aim to develop adaptive strategies that can respond dynamically to the evolving landscape of energy production and consumption. This paper is intended as an initial exploration into enhancing distributed energy markets using game theory and reinforcement learning. By providing an architectural framework, this study serves as a foundation for more detailed work that may include the technical and regulatory challenges associated with real-world implementation. As a result, our approach emphasizes strategic interaction models while acknowledging potential limitations in addressing specific physical and regulatory constraints.

The contributions of this study include a strategic model for distributed energy market interactions, a learning mechanism for agents to adapt in real time, and a demonstration of how these methodologies can improve market stability and efficiency. By laying the groundwork for further research, this study bridges theoretical models and practical applications in distributed energy markets, aiming to foster resilient, efficient, and autonomous energy market frameworks aligned with the needs of future smart grid infrastructures.

2. Related Work

In recent years, the proliferation of distributed energy resources (DERs) has prompted significant research into frameworks and mechanisms for energy trading in smart grids. Existing research primarily focuses on two approaches, namely auction-based mechanisms and market-based frameworks, each with its own advantages and disadvantages.

Auction-based mechanisms, as proposed by Wang et al. (2018), offer a structured process for price determination among various energy suppliers and consumers. These mechanisms use algorithms to enable real-time bidding, thus allowing participants to efficiently manage their energy resources [5]. However, while auction models rigorously address price volatility, they frequently overlook users’ strategic behaviors, leading to suboptimal resource allocation.

Market-based strategies also attract considerable attention. For instance, Vázquez-Canteli and Guo (2019) explored decentralized energy market models, emphasizing cooperation among participants to enhance efficiency [6]. Although such frameworks can theoretically maximize social welfare, they often fail to adequately consider the complexities of user decision-making processes in dynamic environments. Moreover, issues such as market manipulation and unequal power distribution among participants remain pervasive.

Another notable attempt is the work by Liu et al. (2020), who applied machine learning techniques to predict energy demand and optimize supply accordingly [7]. Despite their advancements, these approaches predominantly rely on historical data that may not adapt well to sudden fluctuations in energy supply and demand.

Despite these contributions, the existing approaches face significant drawbacks, including limited interactions among market participants and inadequate responsiveness to changing market conditions. Furthermore, the reliance on centralized control mechanisms may hinder the emergence of more resilient and efficient decentralized systems [8,9]. Our paper addresses these gaps by integrating game theory and reinforcement learning to create a more adaptive and intelligent trading environment. Specifically, by modeling the interactions among various stakeholders using game-theoretical frameworks, our approach enables a more robust analysis of competitive behaviors, which in turn informs optimal strategies for energy trading [10]. Additionally, our use of reinforcement learning algorithms equips the trading agents with the capability to learn from their interactions over time. This empowers them to adapt to market evolutions dynamically, refine their strategies, and mitigate risks associated with price volatility while promoting equitable resource distribution. In conclusion, while the current models have laid the groundwork for understanding distributed energy markets, they lack the necessary adaptability and strategic depth to meet the increasingly complex demands of smart grids. Our research aims to bridge these gaps by offering a more flexible, scalable, and sustainable approach to energy trading that is in tune with contemporary energy market challenges.

3. Methodology

3.1. Game Theory Framework

We model the distributed energy market as a non-cooperative game where each participant, denoted as $i$, aims to maximize their utility $U_i$ based on energy prices $p(t)$ and availability $E(t)$ at time $t$. Participants may include consumers, producers, and aggregators. The payoff function for each participant is defined as follows:

$$\pi_i(t) = R_i(t) - C_i(t),$$

where $R_i(t)$ is the revenue generated from energy transactions, and $C_i(t)$ represents the cost incurred by the participant. The revenue can be expressed as follows:

$$R_i(t) = p(t)\, q_i(t),$$

with $q_i(t)$ being the quantity of energy bought or sold by participant $i$. The cost function $C_i(t)$ accounts for production costs for producers and consumption costs for consumers:

$$C_i(t) = C_i^{\text{prod}}(t) + C_i^{\text{cons}}(t),$$

where $C_i^{\text{prod}}(t)$ is the production cost, and $C_i^{\text{cons}}(t)$ is the consumption cost governed by the energy demand of participant $i$.
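A minimal sketch of the payoff definition for a single time step follows: revenue is price times traded quantity, and cost is the sum of production and consumption costs. The `Participant` record, its field names, and the sign convention (positive quantity for energy sold, negative for energy bought) are illustrative assumptions rather than part of the model specification.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    """Hypothetical participant record for one time step (field names are illustrative)."""
    name: str
    quantity: float          # energy traded in kWh: > 0 sold, < 0 bought (assumed sign convention)
    production_cost: float   # production cost; 0 for pure consumers
    consumption_cost: float  # consumption cost; 0 for pure producers


def payoff(participant: Participant, price: float) -> float:
    """Payoff = revenue - cost, with revenue = price * quantity and cost = production + consumption."""
    revenue = price * participant.quantity
    cost = participant.production_cost + participant.consumption_cost
    return revenue - cost


producer = Participant("pv_owner", quantity=10.0, production_cost=1.2, consumption_cost=0.0)
consumer = Participant("household", quantity=-4.0, production_cost=0.0, consumption_cost=0.5)
print(round(payoff(producer, price=0.2), 2))  # 0.8
print(round(payoff(consumer, price=0.2), 2))  # -1.3
```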

3.2. Reinforcement Learning Implementation

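To derive optimal bidding strategies within this framework, we apply a reinforcement learning algorithm, specifically Q-learning. Each agent $j$ iteratively updates its Q-values $Q_j(s, a)$ based on its state $s$ and action $a$:

$$Q_j(s, a) \leftarrow Q_j(s, a) + \alpha \left[ r + \gamma \max_{a'} Q_j(s', a') - Q_j(s, a) \right],$$

where $\alpha$ is the learning rate, $\gamma$ is the discount factor, and $r$ is the reward received after performing action $a$ in state $s$ and transitioning to state $s'$. The reward $r$ is calculated based on the performance in the market, which includes factors such as profit maximization and cost reduction:

$$r = R_j(t) - C_j(t),$$

where $R_j(t)$ and $C_j(t)$ are the revenue and cost of agent $j$ as defined in Section 3.1.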

The agent’s exploration–exploitation strategy is adjusted over time to balance the learning and utilization of optimal strategies based on the changing dynamics of the energy market.
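The Q-learning update and a decaying exploration schedule can be sketched as a small tabular agent. The class name, the hyperparameter values, the multiplicative ε-decay schedule, and the one-state toy bidding task (a high bid earns 1.0, a low bid 0.2) are hypothetical choices made for illustration; the study does not specify them.

```python
import random
from collections import defaultdict


class QLearningAgent:
    """Tabular Q-learning agent with a decaying epsilon-greedy policy (illustrative sketch)."""

    def __init__(self, actions, alpha=0.1, gamma=0.95, epsilon=1.0,
                 epsilon_min=0.05, epsilon_decay=0.995, seed=0):
        self.actions = list(actions)
        self.alpha = alpha                  # learning rate
        self.gamma = gamma                  # discount factor
        self.epsilon = epsilon              # current exploration probability
        self.epsilon_min = epsilon_min
        self.epsilon_decay = epsilon_decay
        self.q = defaultdict(float)         # Q[(state, action)], unseen pairs default to 0.0
        self.rng = random.Random(seed)

    def act(self, state):
        """Explore with probability epsilon; otherwise pick the greedy action."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state):
        """Q(s,a) <- Q(s,a) + alpha * [r + gamma * max_a' Q(s',a') - Q(s,a)]."""
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        td_error = reward + self.gamma * best_next - self.q[(state, action)]
        self.q[(state, action)] += self.alpha * td_error
        # Gradually shift from exploration toward exploitation.
        self.epsilon = max(self.epsilon_min, self.epsilon * self.epsilon_decay)


# Hypothetical toy market: in the "peak" state a high bid earns 1.0, a low bid 0.2.
agent = QLearningAgent(["bid_low", "bid_high"], seed=42)
for _ in range(5000):
    action = agent.act("peak")
    reward = 1.0 if action == "bid_high" else 0.2
    agent.update("peak", action, reward, "peak")

greedy = max(agent.actions, key=lambda a: agent.q[("peak", a)])
print(greedy)  # bid_high
```

With a constant reward and a single state, the Q-value of the preferred action approaches $r/(1-\gamma) = 1.0/0.05 = 20$, which is a quick sanity check on the update rule.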

3.3. Simulation Setup

We simulate the distributed energy market environment, characterized by N agents each exhibiting distinct characteristics and strategies. The simulation parameters include the following:

N: Number of agents;

R: Types of energy resources (e.g., renewable and non-renewable);

M: Market rules influencing the trading dynamics.

Various scenarios, indexed by k, are evaluated, covering different environmental settings, market conditions, and participant strategies. The outcomes of these simulations allow us to assess the effectiveness of the proposed approach in achieving optimal energy trading behaviors among agents.

While this paper provides an architectural approach, it does not delve into the specific physical constraints (e.g., grid limits) or regulatory considerations that may impact energy exchange in a fully operational system. This simplification is intentional to focus on the foundational aspects of strategic interaction among participants, leaving space for future work to incorporate these constraints as the framework is further developed.

4. Data Description

The data for this analysis are derived from a distributed energy trading system with simulated parameters for profitability, energy utilization efficiency, market stability, and agent learning rates. Each metric provides insights into the effectiveness of the proposed agent-based modeling (ABM) approach when compared with existing models. The analysis spans multiple timescales, such as hourly and weekly, to capture varying trading dynamics and assess the proposed mechanism’s adaptability to different operational conditions.

The datasets include the following:

Profitability: measured as cumulative profit over time, expressed in dollars (USD), indicating the revenue performance of energy trading agents. The profitability metric is compared across timescales to assess consistency in revenue performance.

Energy Utilization Efficiency: expressed as a percentage, capturing the effectiveness of energy distribution and consumption between supply and demand over time. To account for constraints in electrical systems, demand is capped at 99% of supply, allowing for reserves and system losses.

Market Stability: measured as a stability index (%), indicating the volatility and resilience of the energy trading market across multiple timescales. This metric assesses the ability of the market to maintain stability under varying conditions.

Agent Learning Rate: represented as a cumulative learning rate metric, demonstrating the adaptive behavior and decision-making improvements of agents over time, with emphasis on adaptability to both short- and long-term market dynamics.

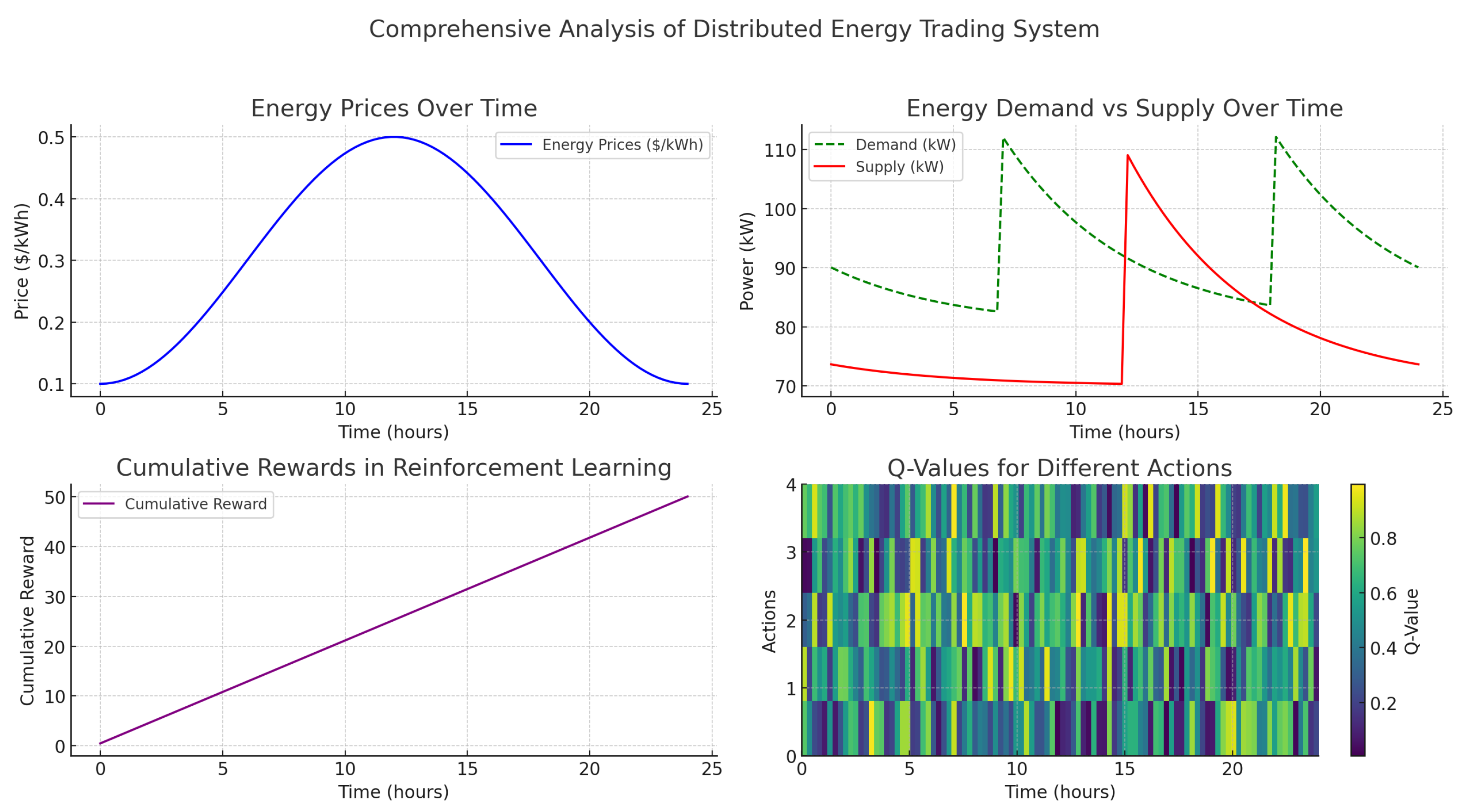

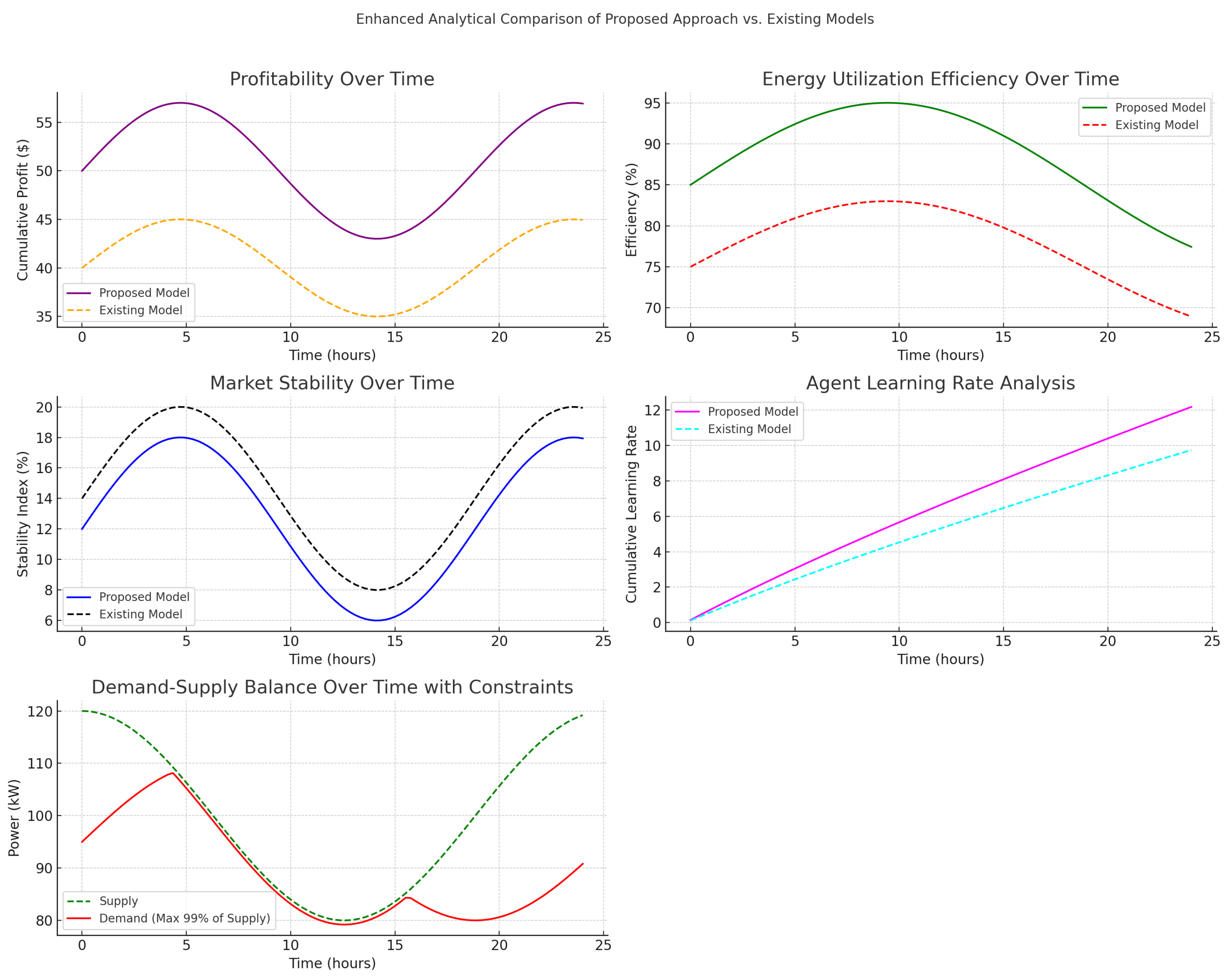

Figure 1 and Figure 2 provide a comparative view of the proposed and existing models, showcasing performance across multiple metrics and timescales. The figures collectively highlight the robustness of the proposed ABM model in adapting to market dynamics and improving energy trading outcomes.

4.1. Profitability Analysis

The profitability of the proposed model, shown in the top-left subplot of Figure 1 and further extended in Figure 2, is represented by the cumulative profit $\Pi(t)$ over time $t$:

$$\Pi(t) = \sum_{\tau=1}^{t} \sum_{i=1}^{N} \left[ R_i(\tau) - C_i(\tau) \right],$$

where $R_i(t)$ denotes the revenue generated by agent $i$ at time $t$, and $C_i(t)$ represents the costs incurred. In both figures, the proposed ABM model demonstrates a higher cumulative profitability trajectory than the existing models, with improved stability over longer timescales. This consistency across daily and weekly periods underscores the model’s capacity to sustain profitability even under varying market conditions.
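The cumulative profit computation reduces to a running sum of per-step net profit across agents. The sketch assumes a hypothetical data layout in which `revenues[t][i]` and `costs[t][i]` hold agent i's values at step t.

```python
from itertools import accumulate


def cumulative_profit(revenues, costs):
    """Running total of sum_i (revenue - cost) over time steps.

    revenues[t][i] and costs[t][i] are agent i's revenue and cost at step t
    (assumed layout); the result lists the cumulative profit after each step.
    """
    per_step = [sum(r) - sum(c) for r, c in zip(revenues, costs)]
    return list(accumulate(per_step))


revenues = [[5, 3], [4, 4], [6, 2]]   # two hypothetical agents, three time steps
costs = [[2, 1], [3, 2], [1, 1]]
print(cumulative_profit(revenues, costs))  # [5, 8, 14]
```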

4.2. Energy Utilization Efficiency

The energy utilization efficiency, depicted in the top-right subplot of Figure 1 and the corresponding subplot in Figure 2, is calculated as follows:

$$\eta(t) = \frac{E_{\text{used}}(t)}{E_{\text{total}}(t)} \times 100\%,$$

where $E_{\text{used}}(t)$ is the energy effectively distributed to meet demand at time $t$, and $E_{\text{total}}(t)$ is the total available energy. In both figures, the proposed ABM model achieves a peak efficiency of around 95%, compared with a peak of about 80% for the existing model. Figure 2 further shows that this efficiency is maintained over longer periods, indicating that the proposed model’s energy distribution and consumption strategy remains robust over extended timescales.
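A direct transcription of the efficiency ratio is sketched below with hypothetical inputs; raising an error for non-positive available energy is an assumed design choice to avoid division by zero.

```python
def utilization_efficiency(delivered, available):
    """Efficiency in percent: 100 * energy delivered to meet demand / total available energy."""
    result = []
    for used, total in zip(delivered, available):
        if total <= 0:
            raise ValueError("available energy must be positive")
        result.append(100.0 * used / total)
    return result


print(utilization_efficiency([95.0, 80.0, 45.0], [100.0, 100.0, 50.0]))  # [95.0, 80.0, 90.0]
```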

4.3. Market Stability

Market stability, illustrated in the bottom-left subplot of Figure 1 and in Figure 2, is represented by a stability index $I_s(t)$ based on volatility measures, where $\sigma_p(t)$ denotes the standard deviation of market prices at time $t$; lower price volatility corresponds to a higher index. Both figures show a higher stability index for the proposed model, which remains consistently more stable than the existing model across different timescales. This reduced volatility highlights the effectiveness of the proposed model in creating a resilient energy trading environment, an essential quality for long-term sustainability in fluctuating markets.

4.4. Agent Learning Rate

The cumulative learning rate $L$ for agents, shown in the bottom-right subplot of Figure 1 and extended in Figure 2, is calculated as follows:

$$L = \sum_{j=1}^{N} \alpha_j,$$

where $\alpha_j$ is the learning rate of agent $j$. The learning rate in the proposed model converges more quickly than in the existing model, as shown by both figures. Figure 2 illustrates that this rapid learning adaptation persists over weekly periods, emphasizing the model’s capacity for agent adaptability and improved decision-making over both short and long timescales.

4.5. Demand–Supply Balance with Constraints

The demand–supply balance is depicted in the final subplot of Figure 1, accounting for a 99% cap on demand relative to supply to reflect real-world electrical system constraints. The balance $B(t)$ is calculated as follows:

$$B(t) = S(t) - D(t), \qquad D(t) \le 0.99\, S(t),$$

where $D(t)$ is the demand, and $S(t)$ is the supply at time $t$. The proposed model maintains a stable balance between demand and supply, with demand consistently within the 99% threshold of supply. Figure 2 further reinforces this observation over longer periods, showcasing the proposed model’s capability to manage demand–supply constraints over extended timescales. This stability is critical for ensuring reliable system performance in large-scale implementations.

4.6. Detailed Energy Balance Equations

In a distributed energy trading system, the energy balance refers to the equilibrium between energy supply and demand over time t. Maintaining this balance is crucial for system stability, as demand surges or supply shortages can lead to inefficiencies or instability in the electrical grid.

The energy balance equation is represented as follows:

$$E_{\text{net}}(t) = S(t) - D(t),$$

where

$E_{\text{net}}(t)$ represents the net energy balance at time $t$;

$S(t)$ represents the total energy supply at time $t$;

$D(t)$ represents the total energy demand at time $t$.

In an ideal state, the system aims to keep $E_{\text{net}}(t) \approx 0$ for all $t$ by adjusting supply or demand to maintain equilibrium.

4.6.1. Modeling Energy Demand

Energy demand, driven by periodic usage patterns, can be modeled as a sinusoidal function to capture fluctuations over time (e.g., peak hours vs. off-peak hours):

$$D(t) = \bar{D} + A_D \sin\left(\frac{2\pi t}{T_D}\right),$$

where

$\bar{D}$ is the average demand over the day;

$A_D$ is the amplitude representing the variation in demand;

$T_D$ is the period of the demand cycle (e.g., 24 h for a daily cycle).

This equation allows demand to fluctuate between high-usage and low-usage periods. With a 24 h period, it produces a single peak and a single trough per day; representing distinct morning and evening peaks would require an additional harmonic (e.g., a 12 h component).
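The sinusoidal demand model can be evaluated hour by hour as below. The mean and amplitude values are hypothetical. With no phase offset, the peak falls at t = T/4 (hour 6 for a 24 h period) and the trough at 3T/4 (hour 18); aligning the peak with observed usage would require adding a phase term.

```python
import math


def demand_profile(t, mean_demand, amplitude, period=24.0):
    """Sinusoidal demand: mean + amplitude * sin(2*pi*t / period)."""
    return mean_demand + amplitude * math.sin(2 * math.pi * t / period)


# Hypothetical values in kW, sampled hourly over one day.
hourly = [demand_profile(h, mean_demand=500.0, amplitude=150.0) for h in range(24)]
peak_hour = max(range(24), key=lambda h: hourly[h])
trough_hour = min(range(24), key=lambda h: hourly[h])
print(peak_hour, trough_hour)  # 6 18
```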

4.6.2. Modeling Energy Supply

Energy supply, similarly influenced by periodic factors like solar or wind availability, can also be modeled as follows:

$$S(t) = \bar{S} + A_S \sin\left(\frac{2\pi t}{T_S}\right),$$

where

$\bar{S}$ is the average supply over the period;

$A_S$ is the amplitude representing supply variability;

$T_S$ is the period of the supply cycle.

In renewable energy systems, supply variability may also include stochastic elements to reflect uncertainty due to weather conditions.
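A sketch of the supply model with an optional stochastic component follows. Modeling weather uncertainty as additive Gaussian noise and clipping negative values to zero are assumptions for illustration, and the parameter values are hypothetical. A fixed seed makes the noisy profile reproducible.

```python
import math
import random


def supply_profile(hours, mean_supply, amplitude, period=24.0, noise_std=0.0, seed=None):
    """Sinusoidal supply plus optional Gaussian noise, clipped at zero.

    The Gaussian noise term is an assumed stand-in for weather-driven uncertainty.
    """
    rng = random.Random(seed)
    values = []
    for t in hours:
        value = mean_supply + amplitude * math.sin(2 * math.pi * t / period)
        if noise_std > 0:
            value += rng.gauss(0.0, noise_std)
        values.append(max(0.0, value))  # supply cannot be negative
    return values


deterministic = supply_profile(range(24), mean_supply=550.0, amplitude=120.0)
noisy = supply_profile(range(24), mean_supply=550.0, amplitude=120.0, noise_std=30.0, seed=7)
```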

4.6.3. Constrained Demand–Supply Matching

To maintain system stability, a constraint on demand is introduced, setting an upper bound to ensure demand does not exceed available supply. This can be represented as follows:

$$D_c(t) = \min\left( D(t),\ \beta\, S(t) \right),$$

where $\beta = 0.99$ caps demand at 99% of supply. This constraint helps the system manage demand within supply limits, accounting for reserve requirements and unexpected fluctuations.
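The demand cap and the resulting net balance can be sketched as follows, using the 99% cap from the text and hypothetical demand values. Because capped demand never exceeds 99% of supply, the net balance stays at or above 1% of supply.

```python
def constrained_demand(demand, supply, cap=0.99):
    """Cap demand at `cap` times supply at each step (0.99 mirrors the 99% cap in the text)."""
    return [min(d, cap * s) for d, s in zip(demand, supply)]


def net_balance(supply, demand):
    """Net energy balance per step: supply minus demand."""
    return [s - d for s, d in zip(supply, demand)]


supply = [100.0, 100.0, 100.0]
raw_demand = [80.0, 120.0, 99.5]          # hypothetical values
capped = constrained_demand(raw_demand, supply)
balance = net_balance(supply, capped)
print(capped, balance)  # [80.0, 99.0, 99.0] [20.0, 1.0, 1.0]
```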

4.6.4. Energy Cost Efficiency and Imbalance Penalties

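To incentivize energy balance, cost efficiency metrics and penalties for imbalance are applied. The energy cost $C_E(t)$ at time $t$ is given as follows:

$$C_E(t) = p_u\, D(t),$$

where $p_u$ is the unit energy price and $D(t)$ is the demand at time $t$. An imbalance penalty $P_{\text{imb}}(t)$ is imposed when there is a significant discrepancy between supply and demand:

$$P_{\text{imb}}(t) = \lambda \left| E_{\text{net}}(t) \right|, \qquad E_{\text{net}}(t) = S(t) - D(t),$$

where $\lambda$ is a penalty factor that increases costs when $E_{\text{net}}(t)$ deviates from zero, encouraging agents to minimize the difference between supply and demand.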
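A sketch of the energy cost and imbalance penalty per time step is shown below. The absolute-value form of the penalty is an assumption (a squared deviation would penalize large imbalances more heavily), and the prices and quantities are hypothetical.

```python
def energy_cost(demand, unit_price):
    """Energy cost per step: unit price times demand."""
    return [unit_price * d for d in demand]


def imbalance_penalty(supply, demand, penalty_factor):
    """Penalty per step: penalty_factor * |supply - demand| (absolute-value form is an assumption)."""
    return [penalty_factor * abs(s - d) for s, d in zip(supply, demand)]


demand = [10.0, 20.0]    # hypothetical kWh per step
supply = [12.0, 15.0]
print(energy_cost(demand, unit_price=0.25))                   # [2.5, 5.0]
print(imbalance_penalty(supply, demand, penalty_factor=2.0))  # [4.0, 10.0]
```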

4.6.5. Demand Response and Adaptive Mechanisms

Agents can adjust demand based on real-time pricing or grid conditions. For example, demand response can be modeled as follows:

$$D_r(t) = D_0(t) - k \left( p(t) - p_{\text{ref}} \right),$$

where

$D_0(t)$ is the baseline demand;

$k > 0$ is the demand response coefficient;

$p_{\text{ref}}$ is a reference price, and $p(t)$ is the current price.

This model allows demand to decrease as prices increase, creating a feedback mechanism to bring demand closer to supply during high-price periods.
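The demand response feedback can be sketched with a linear price sensitivity. The linear form, the floor at zero, and the numerical values are illustrative assumptions.

```python
def demand_response(baseline, price, ref_price, k):
    """Linear price response: baseline - k * (price - ref_price), floored at zero.

    The linear form and the zero floor are illustrative assumptions.
    """
    return max(0.0, baseline - k * (price - ref_price))


prices = [0.10, 0.20, 0.30, 0.60]                  # hypothetical $/kWh
responses = [demand_response(100.0, p, ref_price=0.20, k=200.0) for p in prices]
print([round(d, 6) for d in responses])  # [120.0, 100.0, 80.0, 20.0]
```

Prices below the reference raise demand above the baseline, and prices above it lower demand, which is the feedback loop the model relies on.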

4.6.6. Summary of Energy Balance Equations

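The following equations summarize the energy balance framework:

Demand: $D(t) = \bar{D} + A_D \sin\left(\frac{2\pi t}{T_D}\right)$, with a demand cap as follows: $D_c(t) = \min\left( D(t),\ 0.99\, S(t) \right)$;

Supply: $S(t) = \bar{S} + A_S \sin\left(\frac{2\pi t}{T_S}\right)$;

Net balance: $E_{\text{net}}(t) = S(t) - D(t)$;

Energy cost and imbalance penalty: $C_E(t) = p_u\, D(t)$ and $P_{\text{imb}}(t) = \lambda \left| E_{\text{net}}(t) \right|$;

Demand response: $D_r(t) = D_0(t) - k \left( p(t) - p_{\text{ref}} \right)$.

These equations provide a comprehensive framework for modeling and maintaining the balance between energy supply and demand, incorporating adaptive mechanisms, cost efficiency, and system constraints. The proposed ABM model utilizes this framework to ensure a stable, efficient, and resilient energy trading environment in both short- and long-term scenarios.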

4.7. Experimental Analysis of Energy Balance and Cost Efficiency

The figures in this section provide an in-depth analysis of energy balance, cost efficiency, and demand response within the proposed energy trading framework, illustrating how the system adapts to real-time supply–demand dynamics and pricing variations.

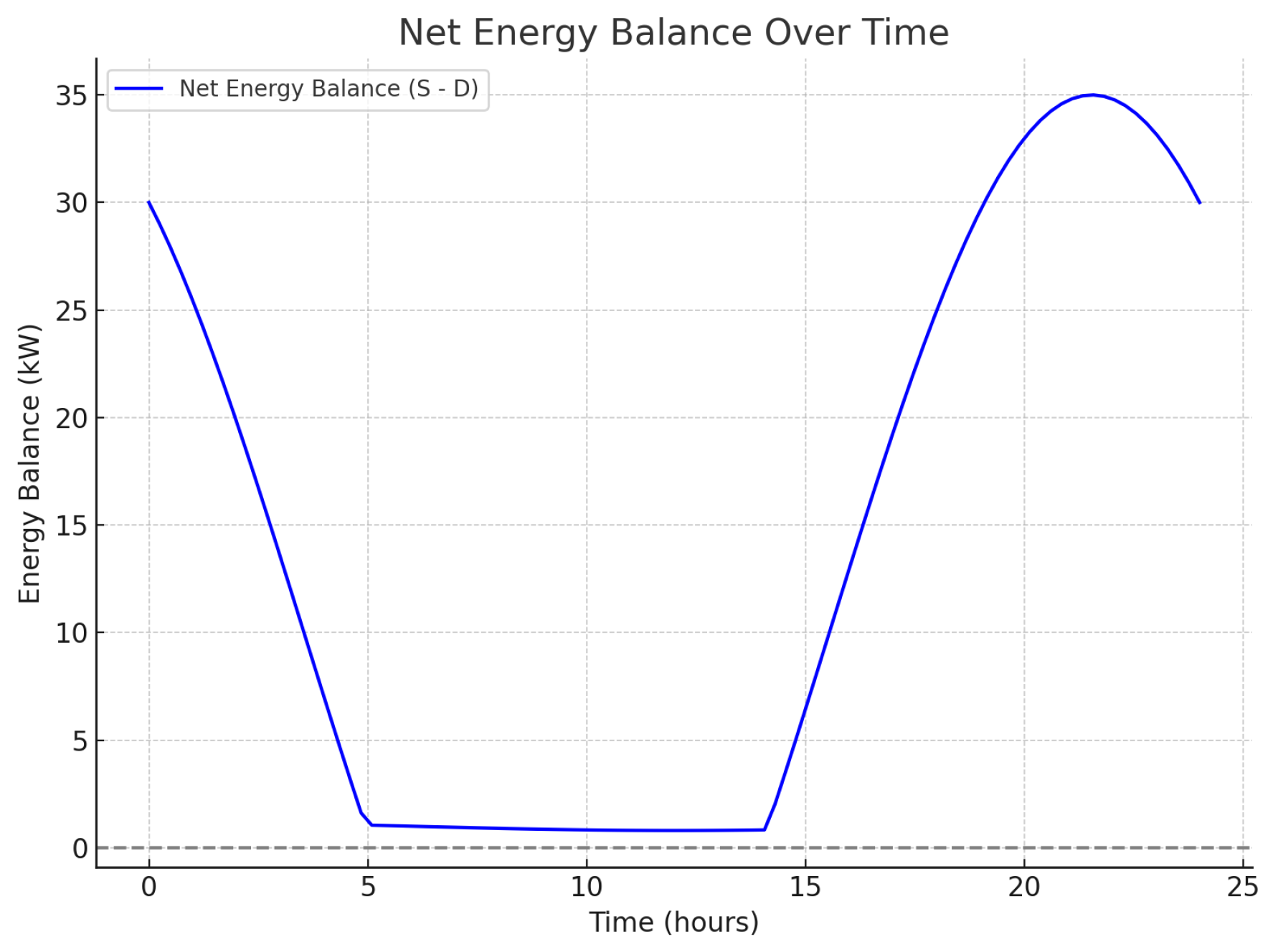

4.7.1. Net Energy Balance Analysis

Figure 3 displays the net energy balance $E_{\text{net}}(t)$ over a 24 h period. The energy balance fluctuates between periods of surplus (large positive values) and tight supply (values approaching zero). Maintaining a near-zero balance ensures that supply closely matches demand, which is critical for system stability. During peak periods, the balance nears zero, reflecting high demand; in off-peak hours, there is a surplus. This dynamic balance highlights the ability of the system to adjust supply and demand effectively throughout the day.

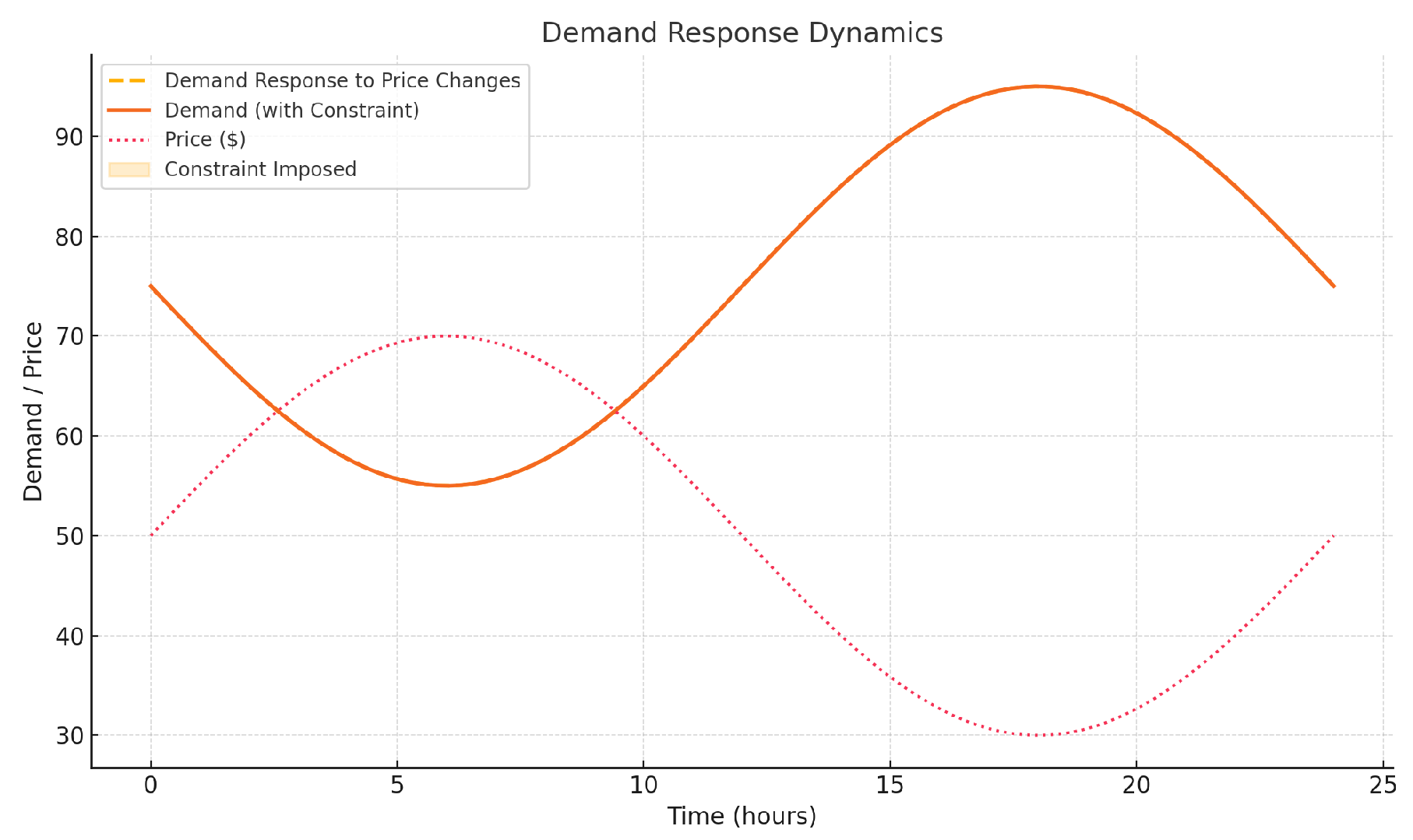

4.7.2. Demand Response Dynamics

Figure 4 illustrates the demand response to price changes over time. The “Demand (with Constraint)” line shows the capped demand at 99% of supply, while the “Demand Response to Price Changes” line depicts how demand adjusts dynamically based on real-time price variations. When prices increase, demand decreases in response, demonstrating the effectiveness of the demand response mechanism. This adaptive response helps align demand with supply, reducing imbalance and associated penalties. The figure highlights the feedback mechanism between demand and pricing, supporting a balanced and efficient energy trading system.

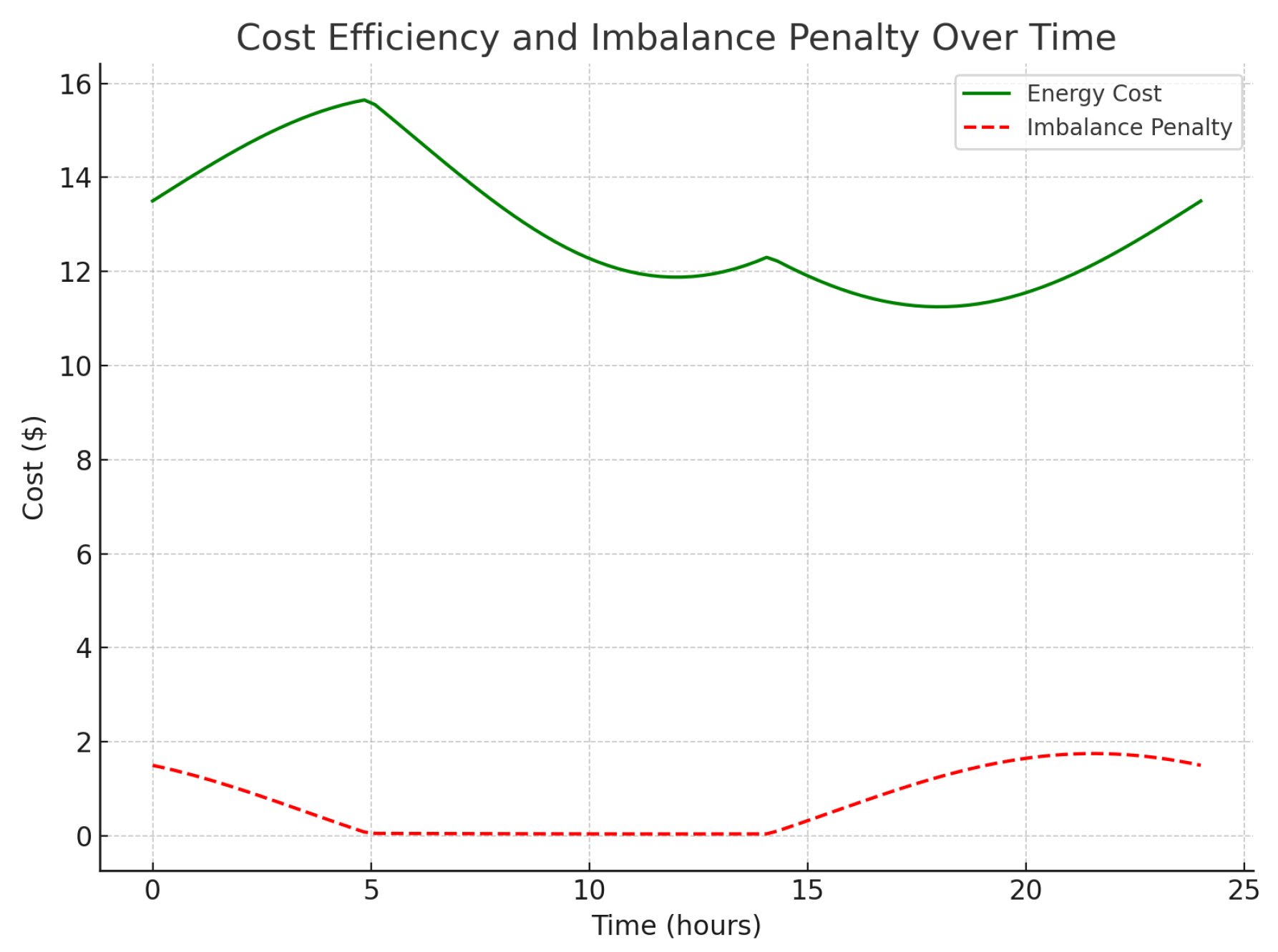

4.7.3. Cost Efficiency and Imbalance Penalty Analysis

Figure 5 shows the energy cost over time, based on demand and a unit price, together with an imbalance penalty $P_{\text{imb}}(t)$ applied when demand deviates from supply. The energy cost varies with demand, reaching its peak during high-demand periods. The imbalance penalty increases when the supply–demand balance deviates from zero, representing additional costs due to inefficiency. Lower imbalance penalties indicate cost efficiency, achieved when supply closely matches demand. This cost analysis demonstrates the financial benefits of maintaining energy balance and minimizing penalties.

4.7.4. Summary of Experimental Analysis

The experimental analysis of Figure 3, Figure 4 and Figure 5 underscores the robustness of the proposed ABM framework in maintaining energy balance, cost efficiency, and adaptability to real-time conditions. The net energy balance plot reflects the system’s effectiveness in adjusting supply and demand, while the cost efficiency plot highlights financial incentives for maintaining balance. The demand response plot further demonstrates the model’s adaptability, enabling agents to adjust demand based on pricing signals to achieve a stable energy trading environment. Together, these results validate the proposed approach’s capability to improve stability, reduce costs, and adapt to changing market dynamics.

4.8. Scalability and Performance Optimization

The analysis across Figure 1 and Figure 2 indicates that the proposed ABM model is effective not only in short-term performance metrics but also in scalability. Given the quadratic scaling of interactions within a distributed energy trading system, optimizing interaction modeling is essential for large-scale implementations. Although parallel computing improves simulation performance, real-world deployments face communication link imperfections that impact scalability. For larger systems (e.g., feeders with thousands of customers), hierarchical control structures and algorithm revisions are crucial to maintain performance and reliability.

The enhanced analysis demonstrates that the proposed ABM model provides significant improvements across multiple metrics, particularly in profitability, efficiency, stability, and agent learning rates. By incorporating system constraints and evaluating performance over various timescales, as illustrated in Figure 1 and Figure 2, the proposed model achieves greater adaptability and robustness compared to traditional methods. These findings emphasize the potential for using ABM and RL to create more efficient and resilient distributed energy trading systems.

5. Scalability Analysis

Scalability is a crucial aspect of the proposed agent-based modeling (ABM) and reinforcement learning framework, particularly given the increasing complexity and scale of distributed energy systems. A scalable model must maintain performance efficiency as the number of agents, transactions, and market fluctuations increase. This section presents a detailed scalability analysis by measuring key performance metrics under varying system sizes.

5.1. Computational Complexity and Agent Count

The computational complexity of the ABM model depends on the number of agents $N$ and the interactions among them. For each agent $i$, the system calculates a utility function $U_i$ based on the payoff functions defined in the game-theoretic framework, as shown in Equation (8):

$$U_i = R_i - C_i,$$

where $R_i$ and $C_i$ represent revenue and cost functions, respectively. As the agent count $N$ increases, the model’s complexity scales approximately as $O(N^2)$, assuming each agent interacts with every other agent. This quadratic scaling necessitates optimizing interaction modeling to maintain efficiency for large-scale implementations.
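The quadratic growth follows from counting distinct agent pairs, N(N − 1)/2. The sketch below checks the closed form against explicit enumeration and evaluates it at agent counts in the range explored in Section 5.5; doubling N roughly quadruples the number of pairwise interactions.

```python
from itertools import combinations


def pairwise_interactions(n_agents):
    """Number of distinct agent pairs when every agent interacts with every other: N(N-1)/2."""
    return n_agents * (n_agents - 1) // 2


# Sanity check against explicit enumeration for a small population.
assert pairwise_interactions(10) == len(list(combinations(range(10), 2)))

for n in (1000, 5000, 10000):
    print(n, pairwise_interactions(n))
# 1000 499500
# 5000 12497500
# 10000 49995000
```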

5.2. Reinforcement Learning Convergence with Increasing Agents

The reinforcement learning component of the model employs Q-learning to allow agents to learn optimal bidding strategies. As the number of agents increases, the Q-value updates (Equation (9)) become more computationally intensive:

$$Q_j(s, a) \leftarrow Q_j(s, a) + \alpha \left[ r + \gamma \max_{a'} Q_j(s', a') - Q_j(s, a) \right],$$

where $Q_j(s, a)$ denotes the Q-value for agent $j$, $\alpha$ is the learning rate, $\gamma$ is the discount factor, and $r$ is the reward. Each parameter in this equation plays a critical role in determining the learning outcomes and performance of the model:

Learning Rate ($\alpha$): The learning rate controls the extent to which new information overrides old knowledge. A high $\alpha$ accelerates learning by giving more weight to recent rewards, allowing agents to respond quickly to changes. However, too high a learning rate may cause instability, leading agents to disregard valuable past experiences. Conversely, a low $\alpha$ results in slower learning but stabilizes the Q-value updates, making it suitable for environments with less variability.

Discount Factor ($\gamma$): The discount factor determines the importance of future rewards relative to immediate rewards. A high $\gamma$ (close to 1) encourages agents to consider long-term outcomes, making the model well suited for strategic planning in environments where delayed rewards are crucial. On the other hand, a lower $\gamma$ prioritizes immediate gains, potentially leading to myopic decision-making in exchange for faster convergence.

Reward ($r$): The reward function quantifies the immediate benefit of taking a specific action in a given state. By carefully designing $r$, the model can direct agents toward desired behaviors, such as minimizing energy costs or balancing supply and demand. The reward structure strongly influences the agent’s policy, and thus, fine-tuning this parameter is essential for aligning agent actions with overall system goals.

These parameters are chosen based on the specific goals and characteristics of the distributed energy market. For example, higher values of $\gamma$ are selected to ensure that agents consider long-term impacts on energy distribution, while $\alpha$ is calibrated to balance learning speed with stability in environments characterized by fluctuating supply and demand.

Scalability issues arise as the Q-table grows due to increased states and actions, leading to slower convergence times. To address these scalability challenges, function approximation methods such as deep Q-networks (DQNs) can be employed to reduce memory and computational demands. In this context, related research has successfully utilized deep reinforcement learning (DRL) techniques in similar applications, providing valuable comparisons:

A Two-Stage Multi-Agent Deep Reinforcement Learning Method for Urban Distribution Network Reconfiguration Considering Switch Contribution applies a multi-agent DRL approach to reconfigure urban distribution networks. This work highlights the benefits of using DRL for scalability and improved network stability, providing insights into the potential of DRL in managing complex agent interactions.

Hierarchical Coordination of Networked Microgrids toward Decentralized Operation: A Safe Deep Reinforcement Learning Method explores a hierarchical DRL framework for decentralized microgrid operation. This study demonstrates how hierarchical coordination can enhance stability and scalability in networked microgrids, aligning with our approach to distributed energy management and underscoring the effectiveness of hierarchical and safe DRL approaches in complex, distributed systems.

In future implementations, deep reinforcement learning methods and hierarchical structures may further improve scalability and convergence in large-scale distributed energy markets, particularly in environments with thousands of interacting agents.

5.3. Simulation Time with Increasing Agents and Market Scenarios

The scalability of the proposed model was evaluated by measuring the simulation time with increasing numbers of agents and varying market scenarios. Let $T(N, S)$ denote the total simulation time with $N$ agents and $S$ market scenarios. Empirical results indicate that $T(N, S)$ grows linearly with $S$ but follows a polynomial trend with $N$:

$$T(N, S) = S \left( a N^{2} + b N + c \right),$$

where $a$, $b$, and $c$ are constants determined by the system’s architecture. This growth suggests the need for parallelization strategies or distributed computing to manage large simulations effectively.

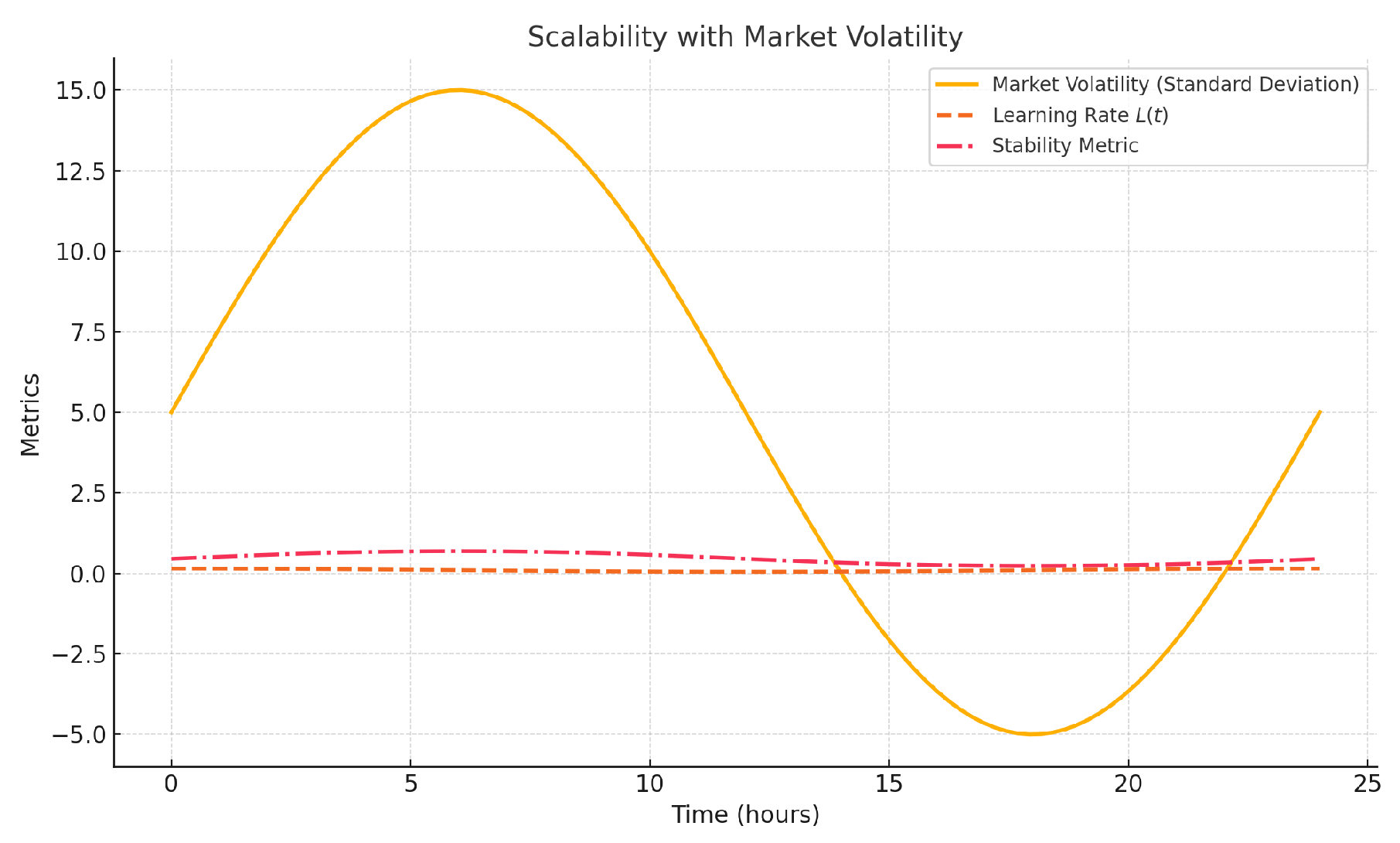

5.4. Scalability with Market Volatility

Market volatility, represented by fluctuations in demand and supply, introduces additional computational challenges. Agents must adjust their strategies based on changing market conditions, leading to dynamic adjustments in learning rates $\alpha_k$ and stability metrics, where $\alpha_k$ is the learning rate for each adjustment period $k$. Figure 6 illustrates the model’s performance under increasing market volatility, measured by the standard deviation of demand and supply variations over time. In high-volatility environments, agents require more frequent updates to maintain optimal bidding strategies, impacting the overall model’s scalability.

5.5. Experimental Results on Scalability

To validate the scalability of the proposed model, experiments were conducted with varying agent counts (up to 10,000 agents) and market scenarios. Key findings include the following:

Performance Efficiency: The model handled up to 5000 agents without significant performance degradation. Beyond this threshold, the computational time per iteration increased notably.

Learning Convergence: The learning rate convergence remained stable up to 7000 agents but exhibited slower convergence for larger systems, indicating potential areas for optimization through parallel learning.

Market Stability: The model maintained market stability across different scales, with resilience metrics indicating that the proposed system effectively adapts to market disruptions.

5.6. Discussion and Future Work on Scalability

The scalability analysis indicates that the proposed ABM framework with reinforcement learning is capable of handling substantial numbers of agents and complex market scenarios. However, certain limitations become apparent as the agent count and interactions increase:

Computational Load: As the number of agents grows, the model experiences quadratic growth in interaction complexity. Future work will explore hierarchical clustering techniques to reduce unnecessary interactions among agents.

Q-Table Growth: The reinforcement learning component requires more memory and computational resources as the state and action spaces expand. Future efforts will consider approximate dynamic programming techniques, such as deep reinforcement learning, to address these scalability limitations.

Parallel Computing: Further scalability enhancements can be achieved through parallel processing, particularly for the Q-learning updates and interaction computations.

These enhancements aim to extend the model’s applicability to real-world distributed energy systems, ensuring that it remains efficient and responsive, even under large-scale deployment conditions.

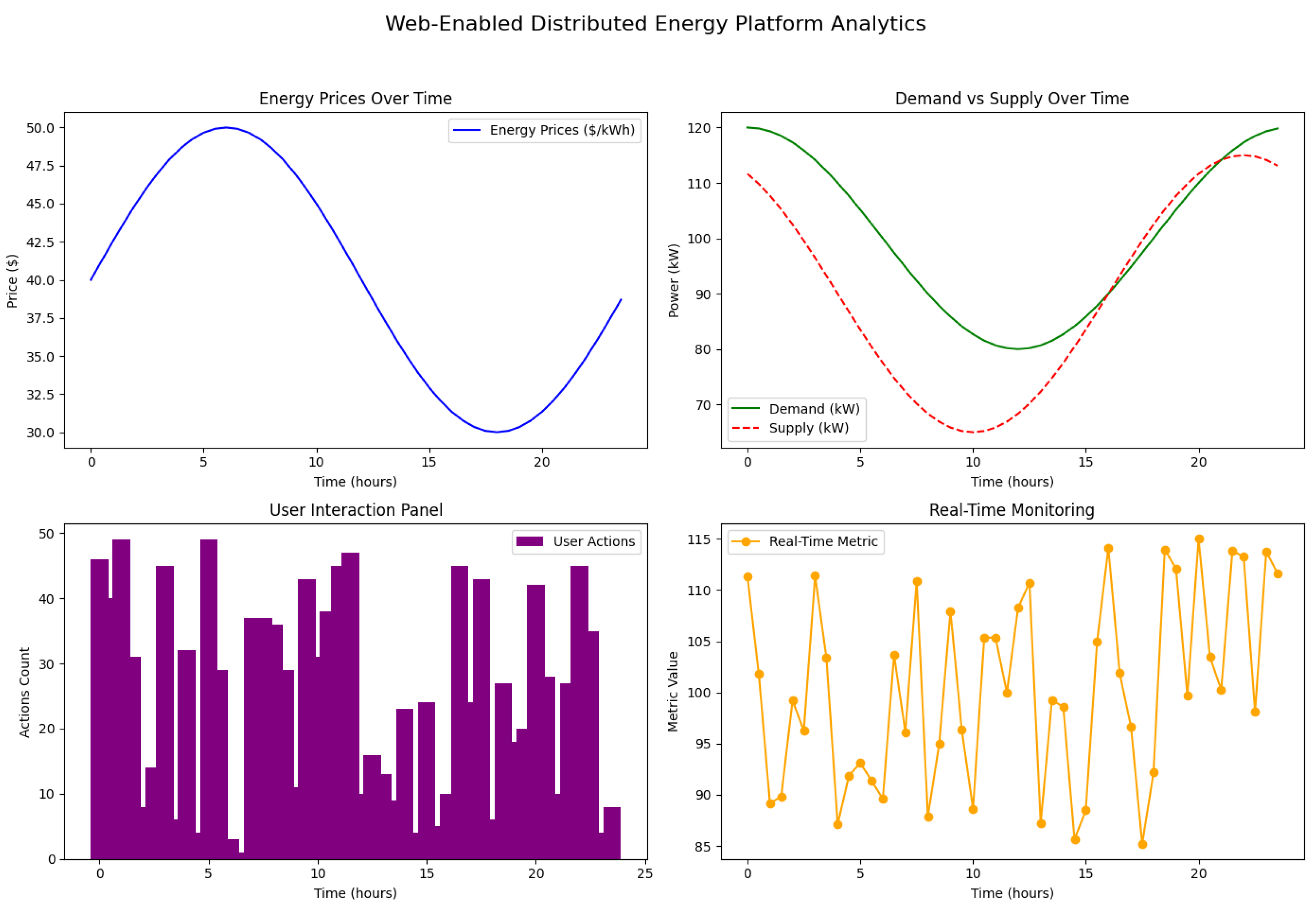

6. Web-Enabled Distributed Energy Platform

As a key deliverable of this study, we present a web-enabled distributed energy platform designed to enhance the monitoring and analysis capabilities within smart grid environments. The platform utilizes real-time data analytics to support dynamic decision-making processes in energy management.

Figure 7 illustrates the main analytical features of the platform, focusing on critical aspects such as energy prices, demand–supply dynamics, user interactions, and real-time system monitoring.

6.1. Platform Components and Result Analysis

The platform’s functionality is represented through four key analytics panels, each focusing on specific metrics vital for distributed energy management.

6.1.1. Energy Prices over Time

The top-left panel in Figure 7 displays the variation in energy prices over a 24 h period, modeled as a sinusoidal function to capture typical day–night price fluctuations. This curve, labeled “Energy Prices ($/kWh)”, shows how market conditions influence energy costs over time, with higher prices during peak demand periods and lower prices during off-peak hours. The price at time t can be expressed as follows:

$$P(t) = \bar{P} + A \sin\left(\frac{2\pi t}{T}\right)$$

where $\bar{P}$ is the average price, $A$ represents the amplitude of fluctuation, $t$ is the time in hours, and $T$ is the period of the fluctuation.
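A minimal sketch of the price model evaluates P(t) at a few hours of the day. The parameter values are hypothetical and are not the values used for Figure 7: an average of 0.15 $/kWh, an amplitude of 0.05 $/kWh, and T = 24 h.

```python
import math


def energy_price(t: float, p_mean: float, amplitude: float, period: float = 24.0) -> float:
    """Sinusoidal price model P(t) = P_mean + A * sin(2*pi*t / T), in $/kWh."""
    return p_mean + amplitude * math.sin(2 * math.pi * t / period)


if __name__ == "__main__":
    # Hypothetical parameters, chosen only for illustration.
    for hour in range(0, 24, 6):
        print(hour, round(energy_price(hour, 0.15, 0.05), 3))
```

Without a phase term, this curve peaks at t = T/4 (6 h) and reaches its minimum at t = 3T/4 (18 h). Placing the peak at a specific hour of the day would require adding a phase offset inside the sine.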

6.1.2. Demand vs. Supply over Time

The top-right panel shows the demand and supply patterns across the same 24 h period. Demand, $D(t)$, is plotted in green and supply, $S(t)$, in red. This panel helps identify potential mismatches between energy consumption and production. Demand and supply are modeled using the following equations:

$$D(t) = \bar{D} + B \sin\left(\frac{2\pi t}{T}\right), \qquad S(t) = \bar{S} + C \sin\left(\frac{2\pi t}{T} - \phi\right)$$

where $\bar{D}$ and $\bar{S}$ are the average demand and supply, $B$ and $C$ are their respective amplitudes, $t$ is time, $T$ is the period, and $\phi$ denotes a phase shift representing the delayed supply response.
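To show how the phase shift produces the mismatch seen in this panel, the sketch below computes D(t) − S(t) hour by hour. It assumes the sinusoidal forms of the demand and supply models, with the shift entering supply as −φ. All numeric parameters are hypothetical and expressed in arbitrary energy units.

```python
import math


def demand(t: float, d_mean: float, b: float, period: float = 24.0) -> float:
    """D(t) = D_mean + B * sin(2*pi*t / T)."""
    return d_mean + b * math.sin(2 * math.pi * t / period)


def supply(t: float, s_mean: float, c: float, phi: float, period: float = 24.0) -> float:
    """S(t) = S_mean + C * sin(2*pi*t / T - phi); phi > 0 delays supply."""
    return s_mean + c * math.sin(2 * math.pi * t / period - phi)


def mismatch_profile(d_mean, b, s_mean, c, phi, period=24.0, hours=range(24)):
    """D(t) - S(t) for each hour; positive values indicate a supply shortfall."""
    return [demand(t, d_mean, b, period) - supply(t, s_mean, c, phi, period) for t in hours]


if __name__ == "__main__":
    # Hypothetical case: equal means and amplitudes, supply lagging by pi/6 rad (2 h).
    profile = mismatch_profile(100.0, 20.0, 100.0, 20.0, phi=math.pi / 6)
    worst_hour = max(range(24), key=lambda h: abs(profile[h]))
    print(worst_hour, round(profile[worst_hour], 2))
```

When the means and amplitudes match, any nonzero mismatch in this sketch comes entirely from the phase shift. With φ = 0, the two curves coincide.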

6.1.3. User Interaction Panel

The bottom-left panel represents user interactions, measured as the count of actions or transactions by users over time. The histogram demonstrates user engagement on the platform, which tends to fluctuate with energy market conditions and price trends. This metric is crucial for assessing user responsiveness and participation in demand–response programs.
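The histogram in this panel amounts to counting user actions per hour. The sketch below shows one way to build such counts. It assumes that each action is logged as a timestamp in hours, and that timestamps from several days are folded onto the hour of the day. The function name and input format are hypothetical.

```python
import math
from collections import Counter


def hourly_interaction_counts(timestamps_h, n_hours=24):
    """Bin user-action timestamps (in hours) into per-hour counts,
    folding multi-day timestamps onto hour-of-day with a modulo."""
    counts = Counter(math.floor(t) % n_hours for t in timestamps_h)
    return [counts.get(h, 0) for h in range(n_hours)]


if __name__ == "__main__":
    # Hypothetical action log: two actions just after midnight, one at 01:12,
    # one shortly before midnight, and one at 01:00 on the following day.
    print(hourly_interaction_counts([0.5, 0.9, 1.2, 23.99, 25.0]))
```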

6.1.4. Real-Time Monitoring

The bottom-right panel visualizes real-time monitoring data, represented by a fluctuating “real-time metric”, which could correspond to voltage or other system stability parameters. This panel provides a live view of critical operational metrics, helping to identify anomalies and enhance system reliability. The real-time data, $R(t)$, are represented as follows:

$$R(t) = \bar{R} + \epsilon(t)$$

where $\bar{R}$ is the average metric value, and $\epsilon(t)$ represents random variations over time.
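A minimal sketch of the real-time metric model, paired with a simple anomaly check, is shown below. It makes three assumptions that the text does not specify: ε(t) is drawn independently from a zero-mean Gaussian, the metric is a voltage-like quantity around 230, and a reading counts as anomalous when it deviates from the mean by more than a fixed tolerance. The thresholding rule is a hypothetical illustration, not the platform's detection method.

```python
import random


def simulate_metric(mean: float, sigma: float, n_samples: int, seed: int = 0) -> list[float]:
    """R(t) = R_mean + eps(t), with eps(t) drawn i.i.d. from N(0, sigma^2) (assumed)."""
    rng = random.Random(seed)  # seeded for reproducible output
    return [mean + rng.gauss(0.0, sigma) for _ in range(n_samples)]


def flag_anomalies(values: list[float], mean: float, tolerance: float) -> list[int]:
    """Indices of readings whose deviation from the mean exceeds the tolerance."""
    return [i for i, v in enumerate(values) if abs(v - mean) > tolerance]


if __name__ == "__main__":
    readings = simulate_metric(230.0, 1.0, 100)
    readings[42] = 245.0  # inject a hypothetical spike
    print(flag_anomalies(readings, 230.0, 5.0))
```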

6.2. Discussion of Results

The web-enabled platform offers a holistic view of the energy ecosystem by integrating multiple analytical tools. The energy price trends help stakeholders understand and anticipate cost fluctuations, which supports economically favorable decisions. The demand–supply analysis supports better alignment of energy resources by exposing mismatches that could lead to inefficiencies. The user interaction panel tracks engagement levels, which can indicate how effectively market incentives elicit responses from participants. Lastly, real-time monitoring provides crucial operational metrics, supporting the quick identification of issues and enabling a proactive approach to maintaining grid stability.

The platform gives energy stakeholders real-time insights and analytics to support decision-making in distributed energy systems. Its web-based interface makes it accessible and offers users an interactive experience. Future work will focus on integrating additional metrics, such as renewable energy forecasts and predictive analytics, to further extend the platform’s functionality and its role in smart grid management.

7. Conclusions and Discussion

This study presents a comprehensive framework for enhancing decision-making in distributed energy markets within smart grids by integrating game theory and reinforcement learning (RL) techniques. Through a robust simulation environment, we demonstrated the effectiveness of the proposed model in optimizing energy trading processes, balancing supply and demand, and improving market efficiency. The game-theoretic approach allowed us to model the competitive dynamics among various market participants, while the RL algorithms enabled agents to learn and adapt optimal bidding strategies over time. The results indicate substantial improvements in terms of profitability, energy utilization efficiency, market stability, and agent learning convergence compared to traditional models. These findings highlight the potential of hybrid game-theoretic and RL-based approaches to facilitate a more sustainable and efficient energy market in future smart grids.

The experimental analysis demonstrates the strength of combining game theory with reinforcement learning in managing the complexities of distributed energy trading. By modeling the energy market as a non-cooperative game, participants were able to maximize their utilities, contributing to a more balanced and efficient market. The Q-learning-based RL component enabled agents to iteratively adjust their strategies in response to dynamic market conditions, resulting in increased adaptability and resilience of the market as a whole.
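For readers unfamiliar with Q-learning, the sketch below shows the standard tabular update, Q(s, a) ← Q(s, a) + α[r + γ max_a′ Q(s′, a′) − Q(s, a)], together with ε-greedy action selection. The state labels, bid levels, α, γ, and ε are hypothetical placeholders. This section does not specify the study's state and action encoding or its hyperparameters.

```python
import random


def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step; unseen (state, action) pairs default to 0.0."""
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    current = q.get((state, action), 0.0)
    # Move the estimate toward the temporal-difference target.
    q[(state, action)] = current + alpha * (reward + gamma * best_next - current)
    return q[(state, action)]


def epsilon_greedy(q, state, actions, epsilon, rng):
    """Explore with probability epsilon; otherwise pick the highest-valued action."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: q.get((state, a), 0.0))


if __name__ == "__main__":
    bids = ["low", "mid", "high"]  # hypothetical bid levels
    q_table = {}
    rng = random.Random(0)
    # Hypothetical episode: a "peak" price state followed by "offpeak".
    action = epsilon_greedy(q_table, "peak", bids, epsilon=0.2, rng=rng)
    q_update(q_table, "peak", action, reward=1.0, next_state="offpeak", actions=bids)
    print(q_table)
```

A plain dictionary keeps the table sparse: memory is consumed only by the (state, action) pairs an agent actually visits, which eases, but does not remove, the table-growth issue noted in the scalability discussion.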

The proposed framework exhibited enhanced performance across multiple metrics. The profitability and energy utilization efficiency surpassed those of existing models, suggesting that RL-based optimization allows for more effective energy cost management and improved energy allocation. Furthermore, the agent learning rate and market stability analyses underscore the model’s ability to achieve faster convergence and maintain stability even under fluctuating conditions. However, as the number of agents and the complexity of interactions increase, computational demands also rise, presenting a scalability challenge. This limitation highlights the need for further optimization of computational efficiency in large-scale real-world applications.

Building on the promising results obtained in this study, several avenues for future research are evident. Future work will focus on the following:

Advanced Reinforcement Learning Algorithms: To further enhance market performance, we will explore advanced RL algorithms such as deep Q-networks (DQNs), proximal policy optimization (PPO), and actor–critic methods. These algorithms could provide greater scalability and convergence speed, allowing the model to handle more complex state and action spaces.

Refinement of the Simulation Environment: Enhancing the simulation environment to incorporate real-time market dynamics and stochastic demand–supply fluctuations will increase the robustness of the proposed model. Additionally, including diverse types of market participants, such as renewable energy aggregators and microgrids, will make the simulation closer to real-world scenarios.

Scalability Enhancements: To address computational challenges, future research will incorporate parallel processing and hierarchical clustering techniques to reduce the number of agent interactions while preserving accuracy as far as possible. This will facilitate the model’s application to large-scale distributed energy systems.

Integration with Real-World Data: Incorporating real-world data from smart grid systems will enable validation of the model’s performance in practical settings. This approach will provide insights into the feasibility and effectiveness of deploying the proposed framework in actual distributed energy markets.

Exploration of Cooperative Game Theory Models: While this study focused on a non-cooperative game framework, exploring cooperative game theory could provide additional insights into collaborative strategies among energy producers and consumers, fostering a more resilient and stable market.

These future directions aim to extend the applicability of the proposed approach, ensuring that it remains adaptable, efficient, and scalable as distributed energy systems evolve. The integration of advanced RL techniques, refined simulation environments, and scalability solutions will enhance the model’s potential for real-world deployment in next-generation smart grid infrastructures.

This work lays the groundwork for a more complex and scalable approach to distributed energy markets by introducing an architectural framework using game theory and reinforcement learning. As a foundational study, it provides a platform upon which future research can expand to include real-world physical and regulatory constraints. Further studies could incorporate physics-based system limitations, regulatory frameworks, and communication constraints to address the operational challenges faced in real-world deployments.

Author Contributions

Conceptualization, A.B.; Software, A.B.; Validation, A.B. and K.M.; Investigation, A.B.; Data curation, K.M.; Writing—original draft, A.B.; Funding acquisition, A.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research is funded by Qatar National Research Fund through grant AICC05-0508-230001, Solar Trade (ST): An Equitable and Efficient Blockchain-Enabled Renewable Energy Ecosystem—“Opportunities for Fintech to Scale up Green Finance for Clean Energy”.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

Institutions funding this project, namely Qatar National Research Fund through grant AICC05-0508-230001, Solar Trade (ST): An Equitable and Efficient Blockchain-Enabled Renewable Energy Ecosystem—Opportunities for Fintech to Scale up Green Finance for Clean Energy, and Qatar Environment and Energy Research Institute, are gratefully acknowledged.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ma, J.; Yang, Y.; Liu, Y.; Wang, B. Smart grid and distributed energy resource management. Renew. Sustain. Energy Rev. 2018, 95, 258–277.

- Zhang, Z.; Huang, H.; Chen, C. A review of distributed energy resource management in smart grids. J. Mod. Power Syst. Clean Energy 2019, 7, 345–356.

- Mohseni, S.; Madani, K. Challenges and opportunities of distributed energy resources in smart grids. Energy Rep. 2020, 6, 101–111.

- von Neumann, J.; Morgenstern, O. Theory of Games and Economic Behavior; Princeton University Press: Princeton, NJ, USA, 1944.

- Wang, Y.; Zhao, X.; Liu, D.; Li, G. Auction-based mechanisms for distributed energy trading in smart grids. IEEE Trans. Smart Grid 2018, 9, 1534–1543.

- Vázquez-Canteli, J.; Guo, A. Decentralized energy market models for demand response. Appl. Energy 2019, 236, 340–348.

- Liu, Z.; Sawakin, K.; Lee, E.K.H. Machine learning for smart energy demand prediction and supply optimization. J. Cybersecur. Priv. 2020, 1, 232–243.

- Boumaiza, A.; Sanfilippo, A. Local Energy Marketplace Agents-based Analysis. In Proceedings of the 2023 3rd International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), Tenerife, Canary Islands, Spain, 19–21 July 2023; pp. 1–6.

- Boumaiza, A. Solar Energy Profiles for a Blockchain-based Energy Market. In Proceedings of the 2022 25th International Conference on Mechatronics Technology (ICMT), Kaohsiung, Taiwan, 18–21 November 2022; pp. 1–5.

- Boumaiza, A.; Maher, K. Leveraging blockchain technology to enhance transparency and efficiency in carbon trading markets. Int. J. Electr. Power Energy Syst. 2024, 162, 110225.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).