Abstract

This paper investigates a Local Strategy-Driven Multi-Agent Deep Deterministic Policy Gradient (LSD-MADDPG) method for demand-side energy management systems (EMS) in smart communities. LSD-MADDPG modifies the conventional MADDPG framework by limiting data sharing during centralized training to only discretized strategic information. During execution, it relies solely on local information, eliminating post-training data exchange. This approach addresses critical challenges commonly faced by EMS solutions serving dynamic communities of increasing scale, such as communication delays, single-point failures, scalability, and nonstationary environments. By sharing only strategic information among agents, LSD-MADDPG optimizes decision-making while enhancing training efficiency and safeguarding data privacy, a critical concern in community EMS. The proposed LSD-MADDPG is shown to reduce energy costs and flatten the community demand curve by coordinating indoor temperature control and electric vehicle charging schedules across multiple buildings. Comparative case studies reveal that LSD-MADDPG excels in both cooperative and competitive settings by ensuring fair alignment between individual buildings’ energy management actions and community-wide goals, highlighting its potential for advancing future smart community energy management.

1. Introduction

The global push for adopting distributed and renewable energy resources alongside increased electrification and the gradual retirement of dispatchable fossil-fueled power plants is introducing new operational challenges to existing electric power grids [1]. As a result, there is a growing need for a new paradigm of energy management that shifts from supply-side to demand-side control [2]. Under this new paradigm, it becomes critical to comprehensively understand the evolving energy consumption patterns and controllability of diverse electric grid loads and their associated energy assets, particularly heating and cooling systems, which account for the largest portion of residential electricity consumption [3]. Failing to leverage energy consumption flexibility via strategically adapting consumer behaviors can significantly strain the power grid, potentially leading to unexpected generation capacity shortages during this energy transition. A notable example occurred in the summer of 2022, when a heatwave in California brought the state’s power grid to the brink of rolling blackouts, impacting millions of residents. Authorities responded by urging residents to refrain from charging their electric vehicles (EVs) to conserve energy, challenging the feasibility of California’s ambitious goal to eliminate all gas-powered vehicles by 2035 [4,5]. On the other hand, implementing effective demand-side energy management enabled by smart grid technologies can optimize energy consumption, assisting in a stable and reliable power supply while improving consumer economic and comfort outcomes [6,7].

Conventional mathematical methods have been thoroughly studied for demand-side energy management systems (EMSs). Rule-based methods, often derived from expert knowledge of well-studied systems, provide a static, definite, and easy-to-implement solution but lack the flexibility and adaptability required for more complex dynamic environments [8]. Optimization-based techniques, such as linear programming [9,10], mixed-integer linear programming [11,12,13,14,15,16], and quadratic programming [17,18,19], utilize mathematical formulations to model and manage objective systems over a fixed time horizon, but can be computationally intensive, hard to scale, and may struggle to adapt to changing conditions. Heuristic algorithms like Particle Swarm Optimization [20,21,22] and Genetic Algorithms [23,24,25] offer alternative approaches for non-linear optimization, but despite handling intricate systems, they often demand significant computational resources and are complex to implement and generalize. Model Predictive Control (MPC) complements these methods by incorporating system dynamics into the optimization process, predicting future states, and making real-time decisions over a moving horizon [26,27]. However, implementing MPC at the community level, which requires coordination among stakeholders, poses challenges due to the evolving conditions and the high costs of modeling large-scale energy assets [28]. Additionally, the performance of all the above-mentioned model-based methods relies heavily on accurate system modeling, meaning that model inaccuracies and constrained data sharing can lead to suboptimal decisions. This highlights the limitations of these methods in demand-side EMSs that require coordination among numerous independent energy consumers.

Unlike model-based methods, Reinforcement Learning (RL) can adapt and generalize to dynamic environments and reduces reliance on precise system models, making it a well-suited candidate for demand-side EMS [29]. By leveraging machine learning (ML) capabilities, agents equipped with RL algorithms (representing heterogeneous energy consumers) incrementally learn and understand complex systems through interactions with the environment, which reflects the energy system’s dynamics and external conditions, allowing them to adapt their learned policies to evolving system conditions. RL agents can be implemented via either centralized methods, where a single entity coordinates the learning and decision-making for all agents, or decentralized methods, where multiple agents learn and make decisions independently.

Several centralized methods, including Q-learning [30,31,32], Deep Q-Network (DQN) [33,34], Double Deep Q-Network [35], Deep Deterministic Policy Gradient (DDPG) [36,37,38,39,40], and Proximal Policy Optimization [41], have been explored for both supply-side and demand-side EMSs. A centralized EMS provides a shared global perspective, enabling coordinated decision-making and comprehensive system understanding for efficient energy management. However, a centralized controller managing an entire community’s energy consumption has widely recognized drawbacks: (a) It imposes significant communication overhead due to large datasets shared among many energy consumers and the controller [42]. (b) It faces scalability issues due to limited processing power and increasing computational complexity as the system grows. (c) It represents a single point of failure, making the system vulnerable to outages and reliability issues. (d) Centralized data storage raises concerns about data privacy and security, making the system a target for cyber-attacks.

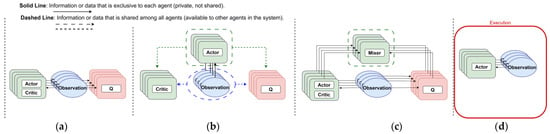

In contrast, decentralized methods rely primarily on local information for decision-making, with limited or no communication between multiple RL agents. This setting can improve problem-solving efficiency through parallel computations and enhance reliability by employing redundant agents [43,44]. Multi-agent reinforcement learning (MARL) approaches are typically categorized into three frameworks: Independent Learning, Centralized Critic, and Value Decomposition. Figure 1 illustrates the three categories and the information shared or exchanged in each, for both Q-function-based agents (where a Q-value function evaluates actions) and actor–critic agents (where the actor makes decisions and the critic evaluates them). In Independent Learning (Figure 1a), each agent’s Q-function or actor–critic has access only to its own observations and updates its policy without any data exchange, which simplifies the learning process and may improve energy management performance, but only if agents’ actions are non-conflicting [45]. However, this approach does not address two significant technical challenges in MARL: (i) interactions between agents give rise to a non-stationary environment, which inherently complicates learning convergence; and (ii) accurately attributing credit for an action to the responsible agent is difficult under the simultaneous actions of other agents [46]. As a result, it often struggles to achieve objectives that require coordination and typically underperforms compared to centralized strategies. Therefore, the main objective of many decentralized methods is to help each agent build a belief system that aligns its decision-making with its teammates and thus achieves a consensus on the overall system management goals [47].

Figure 1. Training in Multi-Agent Reinforcement Learning (MARL) frameworks: (a) Independent Learning, (b) Centralized Critic, (c) Value Decomposition, and (d) the execution phase.

Centralized Training with Decentralized Execution (CTDE) addresses these challenges by allowing agents to use shared information during training while relying solely on local observations during execution, thus mitigating issues of non-stationarity and credit assignment. Centralized Critic, a CTDE framework shown in Figure 1b, requires access to all agents’ observations and actions for optimization after selecting actions, so the critic can accurately assess the impact of each agent’s actions. The decentralized actors (policies) only access local observations. This approach is effective in both competitive and cooperative environments because it can model adversarial interactions where agents compete, as well as collaborative ones. Another CTDE, Value Decomposition (VD), as shown in Figure 1c, differs from Centralized Critic in that agents are required to share only their predicted Q-values or critic values. This framework decomposes the global value function into individual agent-specific value components, enhancing coordination and aligning individual agents’ objectives with overall system goals. This approach is particularly effective in cooperative multi-agent systems. All three approaches only rely on their actors’ learned policies and local observations to make decisions in the execution phase, while the critic and Q-function are removed, as depicted in Figure 1d.

Various MARL algorithms have demonstrated improvements in energy trading, microgrid operation, building energy management, and other applications. Notable algorithms such as MA-DQN [48], MADDPG [49,50], MAAC [51,52], MAA2C [53], MAPPO [54,55,56], MATD3 [57], and MA-Q-learning [45,58,59,60] have been implemented in MARL, enabling RL agents to independently model, monitor, and control their respective environments. Such approaches could naturally solve a complex system’s decentralized control problems, such as the community EMSs we would like to study.

This paper explores a new decentralized MARL-based smart community EMS solution, aiming to address key challenges related to data overhead during execution, single-point failure risks, a nonstationary environment, solution scalability, and the interplay between cooperation and competition among individual community energy consumers. Specifically, a Local Strategy-Driven MADDPG (LSD-MADDPG) is proposed to manage the energy consumption of individual smart buildings equipped with different energy assets in a community. The EMS objective is to flatten the smart community electricity demand curve in a coordinated fashion while reducing the energy costs of individual buildings, all within their respective levels of user satisfaction.

In the MADDPG framework, each RL agent is equipped with an actor responsible for executing actions and a critic that evaluates those actions to optimize the policy learning. When multiple decentralized RL agents are introduced for community energy management, individual agents’ access to the information of the entire community’s energy system could be limited due to physical and/or privacy constraints. Unlike conventional Centralized Critic methods, in the proposed LSD-MADDPG, the critic of an agent does not have access to other agents’ observations; instead, RL agents only share their “strategies”, which are specifically designed quantitative indicators representing each agent’s prioritized intent for energy consumption—i.e., the scheduling of HVAC usage and EV charging—based on the current state of its resources or demands. The critic of each agent leverages these shared “strategies” from others to improve its policy evaluation while ensuring data privacy, whereas its actor’s execution remains locally decentralized. For benchmarking purposes, the proposed demand-side community EMS, i.e., LSD-MADDPG, is tested and compared against the common practice of a naïve controller (NC), a centralized single-agent controller, a fully decentralized MADDPG controller with access to only local observations, and a conventional CTDE MADDPG with a centralized critic.

The major contributions of this work are summarized as follows:

1. A new LSD-MADDPG is proposed for a smart community EMS, which modifies the conventional MADDPG framework to only share “strategies”, instead of the global state for RL agent critic training, demonstrating enhanced control performance. Via an innovatively designed Markov game, LSD-MADDPG achieves superior solution scalability while offering more equitable reward distribution, emphasizing fairness in competition and coordination. Additionally, it maintains data privacy while achieving competitive results against conventional MADDPG in terms of reward, energy cost, EV charging, and building comfort satisfaction, as well as community peak demand reduction.

2. A simulation environment for RL implementations is rigorously modeled, incorporating dedicated HVAC systems and EV charging stations to facilitate the development and testing of advanced control strategies in different community EMS scenarios.

This paper is organized as follows: Section 2 presents a detailed modeling of the smart community EMS problem. Section 3 discusses the Markov game formulation, encompassing the state-action space and reward design for the smart community EMS. Section 4 introduces the proposed MARL framework. Section 5 presents the case studies on different EMS controllers, as well as a comparison of their training and evaluation results, highlighting the performance of the proposed LSD-MADDPG. Finally, the paper concludes with Section 6, summarizing the key findings and outlining future work.

2. Smart Community EMS Modeling

The objective of the smart community EMS is to minimize a total cost comprising the community’s building energy costs, penalties for EV charging deviations from the target state of charge (SoC), and the community’s peak demand costs, thereby encouraging energy demand shifting. This optimization problem can be expressed as (1):

where and denote the average energy consumption of EV charging and the HVAC system, respectively, for building n during time period t. represents the electricity price during time t and the peak demand price is . The term λ1 is a weighting factor that emphasizes the importance of minimizing energy cost, while λ2 is a weighting factor penalizing deviations from the EVs’ charging targets . is defined as the final SoC of the EV in building n by the end of its charging period. Lastly, λ3 is a weighting factor for reducing community daily peak demand charge.
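As a rough illustration of how the three cost terms described above could be combined, the following sketch evaluates a weighted sum of energy cost, SoC deviation penalty, and peak demand cost. The function name, argument names, and the exact form of each term (for example, the absolute SoC deviation) are assumptions made for illustration; they are not the paper's Equation (1).

```python
from typing import Sequence


def community_objective(
    ev_energy: Sequence[Sequence[float]],    # ev_energy[n][t]: EV charging energy of building n in period t
    hvac_energy: Sequence[Sequence[float]],  # hvac_energy[n][t]: HVAC energy of building n in period t
    price: Sequence[float],                  # price[t]: electricity price in period t
    peak_price: float,                       # price applied to the community peak demand
    soc_final: Sequence[float],              # final SoC of each building's EV
    soc_target: Sequence[float],             # charging target of each building's EV
    lam1: float = 1.0,
    lam2: float = 1.0,
    lam3: float = 1.0,
) -> float:
    """Hypothetical weighted community cost (assumed form, not Equation (1))."""
    n_buildings = len(ev_energy)
    horizon = len(price)

    # Aggregate community demand per time period.
    demand = [
        sum(ev_energy[n][t] + hvac_energy[n][t] for n in range(n_buildings))
        for t in range(horizon)
    ]

    energy_cost = sum(price[t] * demand[t] for t in range(horizon))
    # Assumption: deviations are penalized by their absolute value.
    soc_penalty = sum(abs(soc_target[n] - soc_final[n]) for n in range(n_buildings))
    peak_cost = peak_price * max(demand)

    return lam1 * energy_cost + lam2 * soc_penalty + lam3 * peak_cost
```

Raising `lam3` relative to `lam1` in this sketch shifts the emphasis from cheap energy toward a flatter community demand curve, which mirrors the role the weighting factors play in the text.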

2.1. Building Electric Vehicle Charging Modeling

The building EV charging model focuses on optimally scheduling energy usage to ensure EVs are adequately charged before departure. Charging occurs only during EV charging periods , defined as the time intervals when the EV is available for charging (2), and within its charging capacity limits (3). represents the constrained energy available for charging, while and are the minimum and maximum charging capacities of the EV charger.

The average amount of energy consumed by the charging station is further constrained by the SoC of the plugged-in EV, as modeled in (4). This limit is derived from the Tesla Model 3’s charging behavior: it takes roughly 2 h to charge from 0% to 80% and an additional 2 h to charge from 80% to 100% using a 220 V charger [61].
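The two-stage charging behavior described above can be approximated by a piecewise limit. The sketch below assumes a constant rate of 40% of battery capacity per hour below 80% SoC and 10% per hour above it, which follows from the quoted charging times; the function name and the piecewise-constant form are assumptions and may differ from constraint (4).

```python
def max_charge_energy(soc: float, capacity_kwh: float, hours: float = 1.0) -> float:
    """Upper bound on energy (kWh) the battery can accept over `hours`.

    Assumed taper: 0% -> 80% in about 2 h, 80% -> 100% in about 2 h.
    Simplification: the rate is chosen from the SoC at the start of the period.
    """
    fast_rate = 0.80 / 2.0  # fraction of capacity per hour below 80% SoC
    slow_rate = 0.20 / 2.0  # fraction of capacity per hour at or above 80% SoC
    rate = fast_rate if soc < 0.80 else slow_rate

    # Never charge beyond a full battery.
    headroom = max(0.0, 1.0 - soc)
    return capacity_kwh * min(rate * hours, headroom)
```

For a hypothetical 60 kWh battery, this bound allows about 24 kWh in an hour at 50% SoC but only about 6 kWh at 90% SoC.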

The energy losses during EV charging, primarily due to the onboard inverter’s efficiency, and the active cooling of the EV battery when charging at higher amps, for a longer time, or in hot climates, are modeled to fit the charging data published by Tesla Motors Club Forum [61]. The total energy charged to the EV battery, accounting for such charging inefficiency, is calculated by (5), with SoC updated by (6) and constrained by (7), where is the EV battery capacity.

2.2. Building HVAC System Modeling

The building HVAC model maintains occupant comfort by simulating heating and cooling at a one-minute resolution (indexed by minute m), while the temperature settings can only be adjusted every hour. This modeling approach keeps the hourly scheduling of building EV charging and HVAC settings consistent while accurately reflecting real-world thermodynamic conditions, in which the HVAC system neither runs continuously for an entire hour nor ramps up or down every second. Building resistance is used to quantify the building’s ability to resist the flow of heat between the inside and outside environments, considering windows, insulation, and building size [62]. A higher value indicates better insulation and lower heat transfer.

The building temperature Tn,m is updated via (8), accounting for thermal changes from the outdoor temperature and the heating gain Hg,n,m or cooling loss Cg,n,m from the building’s HVAC. In (8), is the mass of air and is the specific heat capacity of air. Building indoor temperature is strictly constrained by the occupants’ comfort levels (9).

The control logic for heating and cooling is enabled by setting the thermostat temperature between the minimum and maximum thermostat setpoints (10)–(11). In this context, and are binary values indicating cooling and heating.

The heating and cooling thermal energy inputs are calculated using (12) and (13), respectively. The HVAC’s heating output is a constant temperature Th,n and its cooling output is Tc,n, both supplied at air flow rate Mn.

The efficiency of the HVAC system, i.e., the coefficient of performance (COP) , exhibits a reciprocal relationship with outdoor temperature, with 273.15 added to convert Celsius to Kelvin. The HVAC system’s COP and efficiency factor determine the amount of thermal energy that can be supplied from electrical energy (14).

The constraints on heating (15) and cooling (16) are further restricted by the maximum HVAC power consumption and . The COP is constrained by the maximum COP, , as shown in (17).

The per minute (18) and per hour (19) electrical energy consumptions of a building HVAC system are then calculated as follows:

3. Markov Game Formulation of the Community EMS

A Markov game is designed to reformulate the community EMS problem elaborated in Section 2 into a model-free RL control framework comprising N agents (i.e., EMS controllers), a joint action set {A1:N}, a joint observation set {O1:N} representing the local information that each agent can perceive from the global state S, a joint reward set {R1:N}, and a global state transition function.

State Space Design: Each agent n receives a private observation at time t, which is influenced by the current state of the system environment S → , where state S is affected by the joint actions of all agents. The initial state S0 is sampled from a probability distribution p(S) over the range [Smin, Smax]. The observation for agent n at t is defined as

where represents the current date and time, including month, day, and hour. Since the charging period of an EV at the building charging station is assumed to be unknown to the agent, is a binary value indicating the presence of an EV. is the average temperature of Tn,m within the hour. represents the total demand or “peak” for current time period t (hour in our study) of the community. It is not possible to predict the future precisely, so the daily peak cannot be optimized for a full 24-h period in advance. Instead, provides an hourly “peak” that can be reduced and shifted through learning from historical measurements from the community electricity distribution system.

Action Space Design: The action taken by each agent n is defined as an hourly decision on EV charging and HVAC setting at time t to influence the system environment, i.e., = [, ]. Each agent n employs a deterministic policy πn: → , which maps its local observations to actions . Actions are normalized to the interval [−1, 1] to ensure consistent scaling and facilitate the neural network learning process. The designed agent actions and are transformed into EMS control variables and , as defined in (21)–(23), representing the energy supplied to EV charging and the HVAC thermostat setting, respectively.

The EV charging action is clipped and mapped to a value between the minimum and maximum charging capacities, naturally constraining power as per (3). Similarly, the HVAC action is mapped within the thermostat’s operational range, ensuring compliance with (10).
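The clipping and mapping step can be illustrated with a simple linear rescaling from the normalized interval [−1, 1] to a physical range. This is a minimal sketch assuming a linear map; the function name and the example bounds are hypothetical and are not taken from (21)–(23).

```python
def scale_action(action: float, low: float, high: float) -> float:
    """Clip a normalized action to [-1, 1] and map it linearly onto [low, high]."""
    clipped = max(-1.0, min(1.0, action))
    return low + 0.5 * (clipped + 1.0) * (high - low)


# Hypothetical bounds, for illustration only.
ev_energy = scale_action(0.0, low=0.0, high=10.0)   # midpoint of the charger range
setpoint = scale_action(2.5, low=64.0, high=80.0)   # out-of-range action is clipped to the maximum
```

Because the clip happens before the rescaling, any actor output, including one perturbed by exploration noise, stays inside the charger and thermostat limits.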

Reward Design: The reward function is used to guide an RL agent’s learning process by mapping the immediate reward for each action to the state-action-next state tuple (S, A, S’). In the community EMS, each agent’s objective is to find the optimal control policy (π) that maximizes its cumulative reward, i.e., contribution to the EMS objective (1). Thus, the agent-level reward, , is designed to include the HVAC reward component , the EV charging reward component , the electricity cost reward component , and peak demand cost reward component , as shown in (30).

EV charging and SoC constraints (2) and (7) are soft-constrained, with penalties applied to the reward components when constraints are violated (24).

The reward for EV charging is based on the energy supplied to the vehicle, as shown in (25). If the EV is not present and the agent chooses not to charge, a small positive reward is given. This design provides feedback even during non-charging periods, reducing reward sparsity and ensuring appropriate rewards for inaction. Additionally, the agent is rewarded for charging during the designated charging period, independent of the time or EV SoC, encouraging completing charging within allowed time periods while penalizing charging outside, as described in (24). Decoupling the reward from specific time periods and SoC levels allows for flexible time-shifting, as each reward is independent of the charging status at any given time, with the electricity costs and peak demand cost being addressed in a later reward.

The HVAC reward component provides positive feedback when the temperature is maintained within the comfort range and uses a soft-constraint to penalize the agent for deviations from this range, encouraging efficient HVAC management.

The electricity cost reward (27) is designed to penalize the agent based on the cost of electric energy used for EV charging and HVAC operation, scaling them separately to prevent imbalances in different system capacities:

The peak demand calculated in (28) is rewarded or penalized relative to a predefined threshold D, which could be derived from historical data or from distribution system operational experience. While D is set as a constant in our daily EMS model, it can be adjusted periodically to account for seasonal variations or changes in system operating conditions. Each building agent is rewarded or penalized according to its contribution to reducing or increasing the peak demand, as expressed by (29). Agents receive positive rewards when the total community energy consumption is below D. If the total exceeds D, agents are penalized in proportion to their contribution to the total demand, encouraging them to reduce energy usage during high-demand periods.
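One way to realize the threshold-based logic described above is sketched below. The specific formulas (a shared bonus proportional to the margin below D, and a penalty equal to the relative excess multiplied by each agent's demand share) are assumptions for illustration and are not the paper's Equations (28) and (29).

```python
from typing import List, Sequence


def peak_rewards(demands: Sequence[float], threshold: float) -> List[float]:
    """Hypothetical per-agent peak-demand rewards for one time period.

    demands[n] is the energy drawn by building n; threshold is D (> 0).
    """
    total = sum(demands)
    if total <= threshold:
        # Below the threshold: every agent receives the same positive margin.
        bonus = (threshold - total) / threshold
        return [bonus for _ in demands]

    # Above the threshold: split the relative excess by demand share,
    # bounded below at -1 to stay in the [-1, 1] reward range.
    excess = (total - threshold) / threshold
    return [max(-1.0, -excess * d / total) for d in demands]
```

With a threshold of 10, demands of 9 and 3 give penalties of about −0.15 and −0.05, so the building drawing three times the energy carries three times the penalty.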

Each reward component as designed above is then scaled between [−1, 1], with weight parameters λ applied in (30) to distribute the importance of the reward components within each agent based on the building’s operation prioritizations.

State Transition Functions: After agent n executes an action at observation , the next observation is obtained according to the state transition function. The transition functions of the HVAC and EV are discussed in Section 2 through Equations (8) and (6), but the transition function of EV presence and peak demand Kt are not fully observable by the agents, making this a Partially Observable Markov Decision Process (POMDP). Additionally, individual agents’ lack of comprehensive knowledge of the system’s global state due to physical limitations realistically reflects real-world community EMS settings, with agents mainly relying on their own sensors and local information for operation.

Multi-Agent Decision Problem: Each agent n obtains rewards as a function of the system state and its own action, represented as : S × → ℝ. These rewards are influenced by other agents’ actions. The agent n aims to maximize its total expected reward Rn= over the time horizon T, where γ is a discount factor in time period t.
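The discounted cumulative reward that each agent maximizes can be computed with a short backward recursion. This sketch assumes the standard form in which the reward at step t is weighted by γ raised to the power t; the function name is illustrative.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Sum of gamma**t * rewards[t] over the horizon, computed backwards."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total
```

For example, three unit rewards with γ = 0.5 give 1 + 0.5 + 0.25 = 1.75.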

4. Proposed LSD-MADDPG Algorithm

This section presents an enhanced MADDPG-based framework, resulting in the LSD-MADDPG algorithm tailored for the smart community EMS, offering a practical, scalable, and cost-effective approach. Since buildings in a community cannot be assumed to be able to observe the operation parameters of other buildings, the community EMS problem as modeled in (1)–(19) cannot be solved directly without a central operator maintaining a global view. To address the major limitations of centralized solutions, we explore a model-free MARL algorithm that enables individual building agents to learn optimal control policies (π) from experience data, guiding their actions to maximize the cumulative reward (Rn), which is designed to align with community EMS objectives. Each agent’s reward, defined in (30), incentivizes both cooperation to maximize the collective reward and the pursuit of individual objectives, forming a mixed cooperative–competitive dynamic.

In a conventional MADDPG framework, each agent is modeled using the DDPG configuration, including an actor network and a critic network. The critic network is configured to have full access to the observations and actions of other agents in the environment. During the training process, sharing these observations and actions allows each agent to evaluate its own actions in relation to the overall state of the system and the actions of others, building an understanding of the transition probabilities of the system environment and facilitating more informed decision-making based on the collective behaviors observed.

The proposed LSD-MADDPG differs in that each agent’s critic network is restricted to only have access to the “strategy” of other agents in addition to its own local observations and actions, reflecting the physical observability limitations in the community setting. The “strategy” of each building agent is defined as , which is further discretized to improve privacy protection as shown in (31)–(32). These agents’ operation strategies are proposed to introduce a simplified, quantifiable indication of each agent’s energy demand priority at a given time, which could be derived from historical operation experiences. The HVAC operation strategy reflects the urgency for a building to bring temperature into the comfort range, while the EV charging strategy indicates the urgency of scheduling charging.
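To make the idea of a discretized strategy concrete, the sketch below maps a continuous urgency value to a small number of integer levels, so that other agents see only a coarse indicator instead of the underlying temperature or SoC. All function names, the urgency definitions, the 5-degree span, and the number of levels are hypothetical; the paper's actual definitions are those in (31)–(32).

```python
def discretize(urgency: float, levels: int = 3) -> int:
    """Map a continuous urgency in [0, 1] to an integer level 0..levels-1."""
    bounded = max(0.0, min(1.0, urgency))
    return min(levels - 1, int(bounded * levels))


def hvac_urgency(temp: float, t_min: float, t_max: float, span: float = 5.0) -> float:
    """Assumed HVAC urgency: distance outside the comfort range, scaled by `span`."""
    if t_min <= temp <= t_max:
        return 0.0
    gap = t_min - temp if temp < t_min else temp - t_max
    return min(1.0, gap / span)


def ev_urgency(ev_present: bool, soc: float, soc_target: float) -> float:
    """Assumed EV urgency: remaining SoC gap while an EV is plugged in."""
    if not ev_present:
        return 0.0
    return max(0.0, min(1.0, soc_target - soc))


# Strategy pair shared with other agents' critics during training (illustrative).
strategy = (
    discretize(hvac_urgency(77.5, t_min=68.0, t_max=75.0)),
    discretize(ev_urgency(True, soc=0.2, soc_target=1.0)),
)
```

Coarser levels reveal less about a building's internal state, at the cost of giving the other critics less information to work with.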

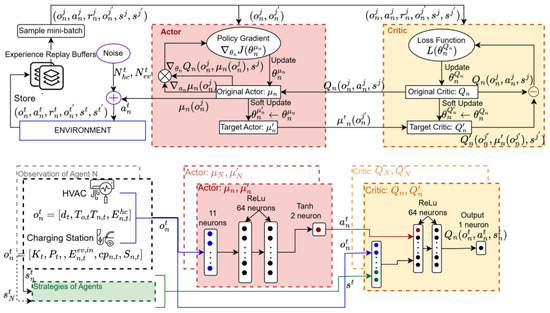

The lower half of the LSD-MADDPG architecture shown in Figure 2 depicts where strategies, observations, and actions are processed in the actor and critic neural networks. Each agent’s actor and critic networks are constructed as deep neural networks with dense layers, with ReLU activations in the hidden layers. The actor network maps RL agent’s local observation to its optimal actions, employing a Tanh activation function in its output layer. Meanwhile, the output layer of the critic network produces a Q-value to evaluate the effectiveness of the actor’s actions by using the agent’s action, local observation, and the shared strategies from all other agents. This expanded perspective allows the critic to more accurately calculate the Q-value for evaluating the actor’s performance. As a result, the critic can guide the actor toward more effective decision-making, improving policy optimization and coordination without oversharing private data.

Figure 2. Algorithm architecture of LSD-MADDPG.

The upper half of Figure 2 illustrates the LSD-MADDPG architecture for a single agent, including key components such as the replay buffer, which stores past experiences, noise to facilitate exploration, and the detailed actor and critic network structures, including their respective target networks. The designed actor and critic network of agent n is denoted as µn and Qn, and their associated weights are and . The target networks, and , are used to stabilize the training process by being periodically updated with a soft update mechanism, where the weights of the target networks are adjusted as a weighted sum of the current network and the target network, using a small learning rate to ensure gradual changes and prevent large oscillations in weight updates. The critic’s loss function, , is minimized to improve the accuracy of the Q-value estimates by calculating the temporal difference (TD) error between the target Q-value and the predicted Q-value. This loss is used to adjust the critic’s weights to better estimate future rewards. The policy gradient, , is computed to update the actor’s weights and ensure the actor learns to select optimal actions over time by maximizing the expected return, achieved by adjusting the actor’s policy to choose actions that yield higher Q-values.
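The three update ingredients described above (soft target update, TD target, and critic loss) can be sketched in framework-free Python. The sketch assumes the standard DDPG forms: a target weight that is a τ-weighted blend of the online and target weights, a one-step TD target, and a mean-squared TD error. The names `tau`, `gamma`, and `done` are assumptions, and weights are represented as flat lists purely for illustration.

```python
from typing import List, Sequence


def soft_update(target: Sequence[float], online: Sequence[float], tau: float) -> List[float]:
    """Blend online weights into target weights: tau * online + (1 - tau) * target."""
    return [tau * w + (1.0 - tau) * w_t for w, w_t in zip(online, target)]


def td_target(reward: float, next_q: float, gamma: float, done: bool) -> float:
    """One-step TD target; the bootstrap term is dropped at terminal states."""
    return reward + (0.0 if done else gamma * next_q)


def critic_loss(targets: Sequence[float], predictions: Sequence[float]) -> float:
    """Mean squared TD error over a minibatch."""
    return sum((y - q) ** 2 for y, q in zip(targets, predictions)) / len(targets)
```

A small `tau` (for instance 0.01) makes the target networks trail the online networks slowly, which is what keeps the TD targets from oscillating during training.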

Because MADDPG is a deterministic algorithm, exploration is not inherently introduced through probabilistic actions, so noise must be added to the action space to encourage exploration and prevent premature convergence to suboptimal solutions. Two types of noise are used, each tailored to a specific action: Ornstein-Uhlenbeck noise [63], which is temporally correlated and therefore mitigates large deviations in action exploration by selecting temperatures close to one another, and Arcsine noise [64], whose probability density function is heavily weighted towards the minimum and maximum values, making it suitable for boundary decisions such as charging or not charging. Furthermore, to balance exploration and exploitation, an ε-greedy scheme is used, which gradually decreases exploration as training progresses so that agents rely more on the learned policy. When not exploring, each agent takes the output action of its actor network. During exploration, the corresponding noise term is added to that output action.
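The exploration scheme described above can be sketched with the standard library alone. The Ornstein-Uhlenbeck process uses its usual discretized form, the arcsine sample is drawn by taking the sine of a uniformly distributed angle, and exploration is gated by a decaying ε. All parameter values, class and function names, and the exponential decay schedule are assumptions for illustration.

```python
import math
import random
from typing import Optional


class OUNoise:
    """Temporally correlated noise: dx = theta * (mu - x) * dt + sigma * sqrt(dt) * N(0, 1)."""

    def __init__(self, theta: float = 0.15, sigma: float = 0.2, mu: float = 0.0,
                 dt: float = 1.0, seed: Optional[int] = None) -> None:
        self.theta, self.sigma, self.mu, self.dt = theta, sigma, mu, dt
        self.rng = random.Random(seed)
        self.state = mu

    def sample(self) -> float:
        drift = self.theta * (self.mu - self.state) * self.dt
        diffusion = self.sigma * math.sqrt(self.dt) * self.rng.gauss(0.0, 1.0)
        self.state += drift + diffusion
        return self.state


def arcsine_noise(rng: random.Random, scale: float = 1.0) -> float:
    """Arcsine-distributed sample on [-scale, scale]; mass concentrates near the ends."""
    return scale * math.sin(math.pi * (rng.random() - 0.5))


def decayed_epsilon(episode: int, start: float = 1.0, end: float = 0.05,
                    decay: float = 0.99) -> float:
    """Assumed exponential decay of the exploration probability."""
    return max(end, start * decay ** episode)


def explore(action: float, noise: float, epsilon: float, rng: random.Random) -> float:
    """With probability epsilon add noise (then clip to [-1, 1]); otherwise keep the action."""
    if rng.random() < epsilon:
        return max(-1.0, min(1.0, action + noise))
    return action
```

In this sketch the OU samples would perturb the HVAC action, where consecutive setpoints should stay close, and the arcsine samples would perturb the EV charging action, where the useful choices sit near the bounds.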

Each agent’s actions, along with their observations at t, are applied to the simulated smart community to calculate building-level energy consumptions, resulting in an aggregated community demand on the distribution system. At the end of t, each agent calculates its reward given by (30), reads a new observation, and shares its strategy values with the community. These shared strategies are then accessed by all agents, and the experience tuple is stored in the buffer Ɗ. After every δ episodes, all agents’ actor and critic networks are trained on ρ transitions sampled at random from the buffer, which are used to update the neural network weights of both networks. For a detailed, sequential breakdown of this process, refer to Algorithm 1, which was coded in Python 3.8 using PyTorch 2.4 [65]. During training, both actor and critic networks are actively updated, but upon completion, the critic network and replay buffer are discarded. The final policy is then used for online execution, where the agent selects actions based only on local observations.
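The buffer handling and training schedule described above can be sketched as follows. The tuple layout (observation, strategies, action, reward, next observation, next strategies) is an assumption inferred from the steps of Algorithm 1, and the class name, capacity, and helper function are hypothetical.

```python
import random
from collections import deque
from typing import Any, List, Optional, Tuple

Transition = Tuple[Any, Any, Any, float, Any, Any]


class ReplayBuffer:
    """Fixed-size experience buffer; the oldest transitions are evicted first."""

    def __init__(self, capacity: int, seed: Optional[int] = None) -> None:
        self.buffer: deque = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def store(self, obs: Any, strategies: Any, action: Any, reward: float,
              next_obs: Any, next_strategies: Any) -> None:
        self.buffer.append((obs, strategies, action, reward, next_obs, next_strategies))

    def sample(self, batch_size: int) -> List[Transition]:
        # Sample without replacement; shrink the batch if the buffer is still small.
        k = min(batch_size, len(self.buffer))
        return self.rng.sample(list(self.buffer), k)

    def __len__(self) -> int:
        return len(self.buffer)


def should_train(episode: int, delta: int) -> bool:
    """Train only on every delta-th episode (skipping episode 0)."""
    return episode > 0 and episode % delta == 0
```

Sampling uniformly from a buffer of past transitions breaks the temporal correlation between consecutive hours, which is the usual motivation for experience replay in DDPG-style methods.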

Algorithm 1. Offline training phase of LSD-MADDPG

1. Initialize networks and replay buffer:
   - Initialize actor network µn and critic network Qn for each agent n.
   - Initialize the target actor and target critic networks with the weights of µn and Qn.
   - Initialize experience replay buffer Ɗ.
2. Training loop:
   - For each episode e:
     - Initialize the environment and get the initial state.
     - For each timestep t until terminal state:
       - A. Observe current state and strategies.
       - B. Select action using actor network.
       - C. Execute action; observe reward, next state, and next strategies.
       - D. Store transition in Ɗ.
       - E. If episode e is divisible by δ:
         - i. Sample minibatch of ρ transitions from Ɗ.
         - ii. Update critic network:
           - Compute target action using target actor network.
           - Compute target Q-value.
           - Compute current Q-value.
           - Compute critic loss.
           - Perform gradient descent on the critic loss to update the critic network.
         - iii. Update actor network:
           - Compute policy gradient.
           - Perform gradient ascent on the policy gradient to update the actor network.
         - iv. Update target networks for each agent n.
3. End training.
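The numerical core of the critic and target-network updates in Algorithm 1 can be illustrated with plain Python. Because the update formulas are not legible in the algorithm listing, this sketch assumes the standard DDPG/MADDPG forms: a bootstrapped target with a discount factor `gamma`, a mean squared error critic loss, and a soft (Polyak) target update with rate `tau`. Both `gamma` and `tau`, and the function names, are assumptions rather than values or identifiers from the paper.

```python
def td_targets(rewards, next_q_values, dones, gamma=0.99):
    """y_j = r_j + gamma * Q'(next state, target action); no bootstrap at terminal steps."""
    return [
        r + gamma * q_next * (0.0 if done else 1.0)
        for r, q_next, done in zip(rewards, next_q_values, dones)
    ]


def critic_loss(q_values, targets):
    """Mean squared TD error over the sampled minibatch."""
    return sum((q - y) ** 2 for q, y in zip(q_values, targets)) / len(targets)


def soft_update(target_weights, online_weights, tau=0.01):
    """Assumed Polyak averaging: theta_target <- tau*theta + (1 - tau)*theta_target."""
    return [
        tau * w + (1.0 - tau) * w_t
        for w_t, w in zip(target_weights, online_weights)
    ]
```

In a PyTorch implementation the same quantities would be tensors and the loss would be minimized by an optimizer step; the list-based version here only shows the arithmetic.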

5. Case Study

A smart community is simulated to evaluate the effectiveness of the proposed LSD-MADDPG demand-side EMS. Each building within the community is simulated to be equipped with an HVAC system and an EV charging station for ease of implementation, which could be expanded to multi-zone HVAC systems and multiple charging stations. The community EMS’s objectives are designed to minimize energy costs, ensure occupant comfort, and meet EV charging demands at the building level while reducing the peak energy demand at the community level.

The performance of the proposed LSD-MADDPG controller is further evaluated via a comprehensive comparison with four other controllers:

- (1) Naïve controller (NC): Represents the common practice in building energy management, involving setting the thermostat at a constant 72 °F and using a simple plug-in and charge-at-full-capacity charger. This approach does not optimize the community objective or energy consumption, hence the term ‘naïve’.

- (2) DDPG controller: A centralized single-agent RL controller for the entire community EMS operating within the environment and state-action space described in Section 3.

- (3) I-MADDPG controller: Independent Learning MADDPG with decentralized training and decentralized execution.

- (4) CTDE-MADDPG controller: MADDPG with centralized critic training and decentralized execution, with the agent’s critic network accessing the entire global state.
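For reference, the rule-based behavior of the naïve controller can be written in a few lines. This is a sketch under assumptions: the function name, the argument names, and the choice to stop charging once the target state of charge (SoC) is reached are illustrative and not taken from the paper's code.

```python
def naive_controller(ev_present, soc, target_soc, max_charge_kw, setpoint_f=72.0):
    """Return (thermostat setpoint in deg F, EV charging power in kW) for one timestep."""
    # Charge at full capacity whenever the EV is plugged in and below its target SoC.
    charging = ev_present and soc < target_soc
    return setpoint_f, (max_charge_kw if charging else 0.0)
```

For example, `naive_controller(True, 0.4, 0.9, 7.2)` returns a 72 °F setpoint and the full 7.2 kW charging power, regardless of price or community demand.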

5.1. Simulation Parameters

Each episode was simulated for 48 h, from 12 p.m. to 12 p.m. two days later, to capture extended peak demand periods, including both morning and evening peaks, and to accommodate realistic EV charging scenarios, where vehicles may arrive, depart, and require multiple charging cycles. Episodes were randomly sampled from yearly data to account for temperature variations and introduce variability. Additionally, in our cases, all weights in (30) were set equally for each reward component; all other simulation parameters are summarized in Table 1.
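The episode sampling described above can be sketched as follows, assuming hourly data indexed from hour 0 of a 365-day year. The function and constant names are hypothetical; the only facts taken from the text are the 48-h length, the 12 p.m. start, and the random draw from yearly data.

```python
import random

HOURS_PER_DAY = 24
EPISODE_HOURS = 48
START_HOUR = 12  # episodes begin at 12 p.m.


def sample_episode_hours(rng, days_in_year=365):
    """Pick a random start day and return the 48 hourly indices of the episode."""
    # The last valid start day still leaves room for 48 h beginning at noon.
    last_start_day = days_in_year - 3
    start_day = rng.randint(0, last_start_day)
    start = start_day * HOURS_PER_DAY + START_HOUR
    return list(range(start, start + EPISODE_HOURS))
```

Each returned index can then be used to look up the outdoor temperature and electricity price for that hour.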

Table 1.

Training hyperparameters.

5.2. Performance Analysis with Three Identical Buildings

To demonstrate the effectiveness of the proposed LSD-MADDPG in energy management via consumption shifting, all the EV schedules and building attributes, including HVAC system settings, were purposely set as identical across the three buildings in the simulated community. This setting ensured that the observed charging patterns resulted from deliberate load-shifting rather than from differences in EV availability. Hourly weather data and electricity prices, which featured two price points for peak ($0.54/kWh) and off-peak ($0.22/kWh) hours, were sourced from the CityLearn Challenge 2022 Phase 1 dataset [66]. EVs were present from 18:00 to 8:00 every day. Table 2 details the building, charging station, EV, and HVAC system settings in the smart community EMS simulation.
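As a small worked example of the EV schedule above, the sketch below counts how many hours are available for charging in one 48-h episode that starts at 12 p.m. The helper name `ev_present` is hypothetical, and treating the window as inclusive of 18:00 and exclusive of 8:00 is an assumption.

```python
def ev_present(hour_of_day, arrive=18, depart=8):
    """True when the EV is plugged in; the window wraps past midnight."""
    return hour_of_day >= arrive or hour_of_day < depart


# Hours of day covered by a 48-h episode that starts at 12 p.m.
episode_hours = [(12 + t) % 24 for t in range(48)]
available = sum(ev_present(h) for h in episode_hours)
print(available)  # 28 charging hours across the two overnight windows
```

Because all three buildings share this schedule, any difference in when they charge within those 28 hours reflects the controllers' decisions rather than availability.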

Table 2.

Specifications for buildings and energy systems.

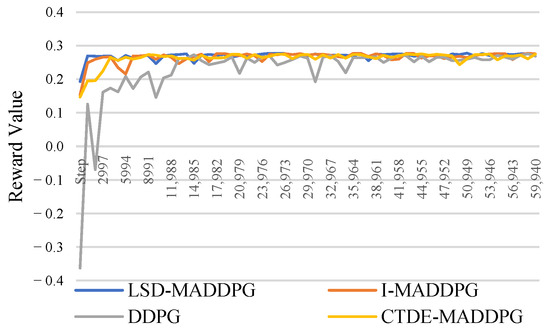

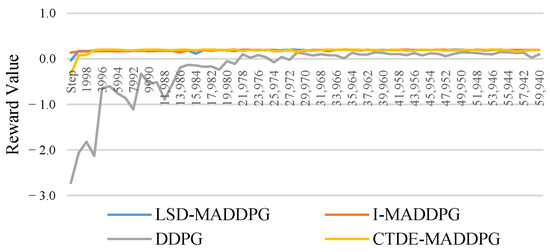

Figure 3 illustrates the training reward values of the four RL-based EMS controllers, providing a comprehensive performance comparison. The results demonstrate that LSD-MADDPG performs robustly in managing variability, consistently achieving higher cumulative rewards. Specifically, LSD-MADDPG outperformed the other three RL controllers, with the highest mean reward of 0.271 and the lowest solution variability, indicated by a standard deviation of 0.0071. The I-MADDPG and CTDE-MADDPG controllers also performed robustly, with means of 0.270 and 0.269 and standard deviations of 0.0101 and 0.0122, respectively, while the DDPG controller lagged with the lowest mean of 0.251 and the highest standard deviation of 0.0477.

Figure 3.

Average training rewards for RL algorithms.

The evaluation was conducted on the CityLearn Challenge 2022 Phase 2 dataset [66], spanning a 48-h period from 12 p.m. on 1 January to 12 p.m. on 3 January. Table 3 presents the 2-day evaluation results of the EMS controllers, detailing the community average daily peak demand, total energy consumption cost, community charging satisfaction, buildings’ peak contribution, total reward, and Gini coefficient [67]. Average peak is the highest community energy demand recorded within a day, averaged over the two days of evaluation; a lower peak demand indicates better community load management and less strain on the distribution system. Total cost is the total community electricity cost, where lower costs indicate more energy usage during low-price periods. Charge is the percentage of the entire community’s required EV charging demand that is met, where a higher value indicates better fulfillment of EV charging demand. Peak contribution sums each building’s contribution to reducing community peak demand, as described in (29), quantifying the reduction in the community’s hourly peak electricity demand. Total reward represents the controller’s ability to optimize multiple objectives, including cost savings, temperature comfort, peak demand reduction, and efficient EV charging; higher rewards indicate better overall system performance. Finally, the Gini coefficient quantifies the equity of reward distribution among agents: a lower value represents a more equitable allocation of rewards, indicating a fairer distribution of energy cost savings, temperature comfort, peak demand reduction contributions, and EV charging performance across all building agents. Throughout the simulation, building comfort was consistently maintained within the predefined temperature bounds; as a result, it is not tabulated.
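Two of the metrics above lend themselves to a short worked example. The sketch below uses the common rank-weighted formula for the Gini coefficient, which may differ in detail from the authors' computation, and it assumes non-negative rewards. It also assumes that "a day" means a consecutive 24-h block of the evaluation window; the paper does not state how days are delimited.

```python
def gini(values):
    """Gini coefficient of non-negative values: 0 means perfectly equal shares."""
    xs = sorted(values)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # Rank-weighted form: G = 2*sum(i*x_i) / (n*sum(x)) - (n + 1)/n, with i = 1..n
    weighted = sum(i * x for i, x in enumerate(xs, start=1))
    return 2.0 * weighted / (n * total) - (n + 1.0) / n


def average_daily_peak(hourly_demand, hours_per_day=24):
    """Mean of the per-day maximum community demand (days = consecutive 24-h blocks)."""
    days = [
        hourly_demand[i:i + hours_per_day]
        for i in range(0, len(hourly_demand), hours_per_day)
    ]
    return sum(max(day) for day in days) / len(days)
```

With three agents earning identical rewards, `gini` returns 0; if one agent receives everything, it returns 2/3, the maximum for three agents under this formula.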

Table 3.

Evaluation results for three buildings.

The multi-objective problem in the demand-side EMS requires balancing the various objectives included in (30). When full community information is shared among agents during training, CTDE-MADDPG demonstrates strong cooperation between building agents, achieving the highest community reward of 38.72. This is reflected in its superior ability to balance all objectives, especially peak contribution and community costs. However, LSD-MADDPG follows closely with a reward of 38.69, highlighting its comparably strong overall performance without compromising data privacy. Both I-MADDPG and LSD-MADDPG, which promote more competitive environments for individual rewards, lead to a higher total charge within the community and exhibit lower Gini coefficients, resulting in a more equitable distribution of rewards. LSD-MADDPG achieves the lowest Gini coefficient of 0.0125 and the best peak contribution. I-MADDPG excels in charge management but lags in peak contribution and overall rewards, due to its lack of information sharing and thus of coordination among RL agents. In contrast, DDPG lacks competition and exploits individual buildings, resulting in the highest Gini coefficient and lowest community charging satisfaction, as it prioritizes reducing costs at the expense of other objectives.

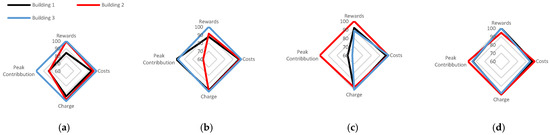

Figure 4 further elaborates on individual building agents’ performance across four metrics (building rewards, peak contribution, energy cost, and charging satisfaction), with LSD-MADDPG showing the most balanced contribution, particularly in peak demand reduction, compared to the other RL controllers. The closer proximity of the performance lines for each building agent indicates a more equitable load distribution among buildings, highlighting fairer participation across agents. The values are normalized such that the maximum value for each metric is set to 100%, with other values scaled proportionally. Higher values in building rewards, peak contribution, and charging satisfaction indicate better performance, while lower values in energy cost reflect greater energy efficiency. LSD-MADDPG’s more balanced energy shifting (i.e., peak demand reduction) among buildings demonstrates that it secures fair participation and coordination among agents, reducing the burden on any single building to manage the community energy demand. This more equitable cost/benefit distribution enhances system reliability by lowering the risk of over-reliance on specific buildings and improves scalability, allowing the system to remain effective as more buildings are included in the community EMS.

Figure 4.

Performance comparison of controllers for individual buildings across rewards, costs, charge, and peak contribution, including (a) DDPG, (b) CTDE-MADDPG, (c) I-MADDPG, and (d) LSD-MADDPG.
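The per-metric normalization used in Figure 4 amounts to dividing by the largest value and expressing the result as a percentage. A minimal sketch, assuming a positive maximum and a hypothetical function name, is:

```python
def normalize_to_max(values):
    """Scale values so that the largest becomes 100 (percent)."""
    peak = max(values)
    return [100.0 * v / peak for v in values]
```

For instance, hypothetical building values of 2.0, 4.0, and 1.0 for one metric would be plotted as 50%, 100%, and 25%.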

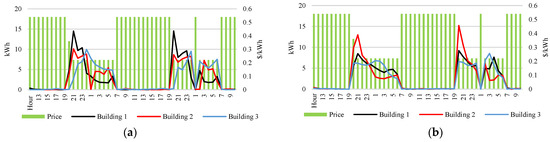

Figure 5 compares the individual buildings’ 48-h energy consumption achieved by CTDE-MADDPG and LSD-MADDPG to illustrate how the two algorithms manage the building HVAC and EV charging in response to varying electricity prices. On the first price drop, coinciding with EV presence, buildings initiate charging, leading to the highest energy peaks observed throughout the day. This is a rational behavior, as charging typically benefits from improved charging efficiencies when the SoC is low. While both algorithms reduce energy consumption during high-price hours, CTDE-MADDPG exhibits steeper fluctuations, with only a few buildings responsible for most of the energy reduction. In contrast, LSD-MADDPG smooths out consumption over a broader range of time, suggesting it prioritizes a more balanced distribution of energy shifting across all buildings, which explains the higher costs due to increased consumption during hour 19 of day 1. By involving more buildings in energy management, LSD-MADDPG achieves a steadier and more evenly distributed electric load among the buildings, while CTDE-MADDPG tends to concentrate energy adjustments to fewer buildings. This feature of LSD-MADDPG is better suited for achieving collective community energy management goals, such as peak reduction and load balancing across the entire community. Furthermore, LSD-MADDPG illustrates its advantages in achieving such collaboration while keeping data private. This proposed approach enables agents to both compete and cooperate effectively, striking a balance between equitable reward distribution and community collaboration.

Figure 5.

Comparison of building energy consumption under (a) CTDE-MADDPG and (b) LSD-MADDPG.

5.3. Scalability Performance Analysis on the Proposed LSD-MADDPG

To evaluate the scalability of the proposed LSD-MADDPG, a smart community including nine buildings with various EV charging and building HVAC configurations was simulated. Table 4 provides a detailed breakdown of building types and their respective thermal and EV charging station characteristics, capturing the diversity in energy requirements and capacities across various community buildings. These various buildings simulated in the community increase problem complexity and showcase the adaptability of the proposed EMS solution, from smaller residential homes to expansive commercial complexes and their interactions with one another.

Table 4.

Building type and attributes.

Table 5 tabulates electricity prices based on the time of day and specific month, aligning with typical winter and summer peak energy consumption patterns. For instance, winter features morning and evening peaks due to high heating demands, while summer exhibits midday peaks driven by air conditioning usage. Transitional months typically experience more moderate weather conditions. Table 6 provides a detailed breakdown of the hourly presence patterns of EVs across various building types, segmented by day type—weekday, weekend, and holiday, based upon [68]. For each building, the table lists the probability of EV presence during specific hours, reflecting tailored energy management needs.

Table 5.

Peak and off-peak monthly pricing.

Table 6.

Hourly EV presence probability and day classification.
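Hourly presence probabilities of the kind listed in Table 6 can be turned into a simulated EV schedule by drawing one Bernoulli sample per hour. The probabilities, building types, and names below are placeholders invented for illustration; they are not the values from Table 6.

```python
import random

# Hypothetical presence probabilities per hour of day; NOT the paper's Table 6 values.
PRESENCE_PROB = {
    ("residential", "weekday"): [0.9] * 8 + [0.2] * 10 + [0.9] * 6,
    ("office", "weekday"): [0.05] * 8 + [0.8] * 10 + [0.05] * 6,
}


def sample_presence(building, day_type, rng):
    """Draw one Bernoulli presence flag for each of the 24 hours of the day."""
    probs = PRESENCE_PROB[(building, day_type)]
    return [rng.random() < p for p in probs]
```

Sampling a fresh schedule per simulated day gives each building a different, stochastic charging window, which is what makes coordination harder in the nine-building case.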

The four RL controllers were trained using outdoor temperature data from [69] and the same number of timesteps, with average training rewards graphed in Figure 6. Table 7 shows that LSD-MADDPG outperformed all other strategies, achieving the highest mean reward and the lowest variability during training. I-MADDPG and CTDE-MADDPG followed with comparable performance, while DDPG showed significantly lower rewards and higher variability, indicating challenges with scalability and in competitive environments where individual objectives must be balanced.

Figure 6.

Average RL rewards during training.

Table 7.

Controller performance comparison.

The main challenge in algorithm scalability lies in scheduling EV charging to balance peak demand reduction without overly sacrificing individual building needs or community objectives. The advantage of MADDPG, i.e., using separate actors and critics for each controller, is that each building can learn its own objectives while still considering the community goal of reducing peak demand. Peak contribution is primarily influenced by EV schedules because buildings with more charging hours (i.e., EVs are present) can spread out their energy use, while those with fewer hours may have to concentrate their charging demands. As the system scales, managing such flexibilities becomes more complex, and varying schedules across buildings create fluctuations in community peak demand, making efficient coordination more challenging. This is reflected in Table 8’s evaluation results, which present the reward and total community charge, i.e., the total kWh provided to the EVs in the community, calculated as the difference between their initial SoCs and the target SoCs over the 10-day period. The naïve approach assumes all EVs are charged towards their full target SoCs without interruptions when present, establishing the maximum charge demand for the community at the cost of all other objectives.
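The charge metric described above can be illustrated with a short calculation. The sketch assumes SoC is expressed as a fraction of battery capacity and that each charging session is described by a hypothetical tuple of initial SoC, target SoC, and capacity in kWh; none of these names come from the paper.

```python
def charge_demand_kwh(initial_soc, target_soc, capacity_kwh):
    """Energy needed to lift one EV from its initial SoC to its target SoC."""
    return max(0.0, target_soc - initial_soc) * capacity_kwh


def charging_satisfaction(delivered_kwh, sessions):
    """Share of the community's total charging demand that was delivered."""
    demand = sum(charge_demand_kwh(*s) for s in sessions)
    return delivered_kwh / demand if demand > 0 else 1.0
```

For two hypothetical sessions `(0.25, 0.75, 60.0)` and `(0.5, 1.0, 40.0)`, the demand is 30 kWh plus 20 kWh, so delivering 40 kWh corresponds to a satisfaction of 0.8.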

Table 8.

Controller evaluation case II.

A 10-day evaluation period was chosen to capture the building energy consumption characteristics of different days and schedules, ensuring robustness in various scenarios. LSD-MADDPG outperforms all other controllers, which struggle to identify optimal EV charging schedules, demonstrating its adaptability and scalability in larger-scale systems. It achieves the highest reward while meeting 83% of the total community’s EV charging demand. This indicates that LSD-MADDPG scales best, as its trained policy achieved superior results within the same number of timesteps as the other algorithms. It adapts more efficiently to a complex environment, e.g., competitive settings with diverse buildings, varied equipment capacities, and schedules.

Ultimately, LSD-MADDPG’s crucial strategic insights allow agents to better plan and coordinate their energy usage to optimize community objectives, while satisfying their individual charging needs and maintaining thermal comfort. This proposed approach not only enhances scalability, privacy, and decision-making efficiency, but also supports competition, a critical aspect as the complexity and variability of the community energy system increases.

6. Conclusions

This paper presents an enhanced MARL controller, i.e., LSD-MADDPG, for the demand-side energy management of smart communities. The proposed LSD-MADDPG emphasizes the equivalent priorities of pursuing community-level energy management objectives as well as satisfying individual buildings’ operational requirements in maintaining indoor comfort and optimizing EV charging, two critical elements of energy consumption management in buildings of future smart communities. Through competitive and cooperative interactions among building agents, the proposed LSD-MADDPG EMS controller performs comparably to CTDE-MADDPG in small scale cases while preserving data privacy, but demonstrates better scalability in more complex system environments. This proposed approach, considering the physical limitations of practical EMS systems in data access and privacy preservation, leverages the sharing of energy conservation strategies among agents, avoids the pitfalls of centralized systems—such as single points of failure—and reduces the potential data leakage and communication delays of conventional MADDPG. Future work will aim to incorporate renewable energy sources into the building system, adding complexity but potentially increasing the sustainability and efficacy of demand-side energy management in communities dominated by distributed energy resources (DERs).

Author Contributions

Conceptualization, P.W., N.W. and J.L.; Methodology, P.W., N.W. and J.L.; Validation, P.W.; Writing—original draft, P.W.; Writing—review & editing, P.W. and J.L.; Supervision, J.L.; Funding acquisition, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the U.S. National Science Foundation IUSE-2121242 and New Jersey Economic Development Authority (NJEDA) Wind Institute Grant.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rathor, S.K.; Saxena, D. Energy management system for smart grid: An overview and key issues. Int. J. Energy Res. 2020, 44, 4067–4109.

- Fernandez, E.; Hossain, M.; Nizami, M. Game-theoretic approach to demand-side energy management for a smart neighbourhood in Sydney incorporating renewable resources. Appl. Energy 2018, 232, 245–257.

- Benítez, I.; Díez, J.-L. Automated Detection of Electric Energy Consumption Load Profile Patterns. Energies 2022, 15, 2176.

- “California Moves toward Phasing Out Sale of Gas-Powered Vehicles by 2035” in NewsHour: Nation. 25 August 2022. Available online: https://www.pbs.org/newshour/nation/california-moves-toward-phasing-out-sale-of-gas-powered-vehicles-by-2035 (accessed on 26 May 2024).

- Albeck-Ripka, L. “Amid Heat Wave, California Asks Electric Vehicle Owners to Limit Charging”. The New York Times. Available online: https://www.nytimes.com/2022/09/01/us/california-heat-wave-flex-alert-ac-ev-charging.html (accessed on 2 July 2023).

- Cecati, C.; Citro, C.; Siano, P. Combined Operations of Renewable Energy Systems and Responsive Demand in a Smart Grid. IEEE Trans. Sustain. Energy 2011, 2, 468–476.

- Khan, H.W.; Usman, M.; Hafeez, G.; Albogamy, F.R.; Khan, I.; Shafiq, Z.; Khan, M.U.A.; Alkhammash, H.I. Intelligent Optimization Framework for Efficient Demand-Side Management in Renewable Energy Integrated Smart Grid. IEEE Access 2021, 9, 124235–124252.

- Liu, H.; Gegov, A.; Cocea, M. Rule Based Networks: An Efficient and Interpretable Representation of Computational Models. J. Artif. Intell. Soft Comput. Res. 2017, 7, 111–123.

- Babonneau, F.; Caramanis, M.; Haurie, A. A linear programming model for power distribution with demand response and variable renewable energy. Appl. Energy 2016, 181, 83–95.

- Loganathan, N.; Lakshmi, K. Demand Side Energy Management for Linear Programming Method. Indones. J. Electr. Eng. Comput. Sci. 2015, 14, 72–79.

- Nejad, B.M.; Vahedi, M.; Hoseina, M.; Moghaddam, M.S. Economic Mixed-Integer Model for Coordinating Large-Scale Energy Storage Power Plant with Demand Response Management Options in Smart Grid Energy Management. IEEE Access 2022, 11, 16483–16492.

- Omu, A.; Choudhary, R.; Boies, A. Distributed energy resource system optimisation using mixed integer linear programming. Energy Policy 2013, 61, 249–266.

- Shakouri, G.H.; Kazemi, A. Multi-objective cost-load optimization for demand side management of a residential area in smart grids. Sustain. Cities Soc. 2017, 32, 171–180.

- Wouters, C.; Fraga, E.S.; James, A.M. An energy integrated, multi-microgrid, MILP (mixed-integer linear programming) approach for residential distributed energy system planning—A South Australian case-study. Energy 2015, 85, 30–44.

- Foroozandeh, Z.; Ramos, S.; Soares, J.; Lezama, F.; Vale, Z.; Gomes, A.; Joench, R.L. A Mixed Binary Linear Programming Model for Optimal Energy Management of Smart Buildings. Energies 2020, 13, 1719.

- Li, Z.; Wu, L.; Xu, Y.; Zheng, X. Stochastic-Weighted Robust Optimization Based Bilayer Operation of a Multi-Energy Building Microgrid Considering Practical Thermal Loads and Battery Degradation. IEEE Trans. Sustain. Energy 2021, 13, 668–682.

- Saghezchi, F.; Saghezchi, F.; Nascimento, A.; Rodriguez, J. Quadratic Programming for Demand-Side Management in the Smart Grid. In Proceedings of the 8th International Conference, WICON 2014, Lisbon, Portugal, 13–14 November 2014; pp. 97–104.

- Batista, A.C.; Batista, L.S. Demand Side Management using a multi-criteria ϵ-constraint based exact approach. Expert Syst. Appl. 2018, 99, 180–192.

- Hosseini, S.M.; Carli, R.; Dotoli, M. Robust Optimal Energy Management of a Residential Microgrid Under Uncertainties on Demand and Renewable Power Generation. IEEE Trans. Autom. Sci. Eng. 2021, 18, 618–637.

- Aghajani, G.R.; Shayanfar, H.A.; Shayeghi, H. Presenting a multi-objective generation scheduling model for pricing demand response rate in micro-grid energy management. Energy Convers. Manag. 2015, 106, 308–321.

- Viani, F.; Salucci, M. A User Perspective Optimization Scheme for Demand-Side Energy Management. IEEE Syst. J. 2018, 12, 3857–3860.

- Kumar, R.; Raghav, L.; Raju, D.; Singh, A. Intelligent demand side management for optimal energy scheduling of grid connected microgrids. Appl. Energy 2021, 285, 116435.

- Rahim, S.; Javaid, N.; Ahmad, A.; Khan, S.A.; Khan, Z.A.; Alrajeh, N.; Qasim, U. Exploiting heuristic algorithms to efficiently utilize energy management controllers with renewable energy sources. Energy Build. 2016, 129, 452–470.

- Jiang, X.; Xiao, C. Household Energy Demand Management Strategy Based on Operating Power by Genetic Algorithm. IEEE Access 2019, 7, 96414–96423.

- Eisenmann, A.; Streubel, T.; Rudion, K. Power Quality Mitigation via Smart Demand-Side Management Based on a Genetic Algorithm. Energies 2022, 15, 1492.

- Ouammi, A. Optimal Power Scheduling for a Cooperative Network of Smart Residential Buildings. IEEE Trans. Sustain. Energy 2016, 7, 1317–1326.

- Gbadega, P.A.; Saha, A.K. Predictive Control of Adaptive Micro-Grid Energy Management System Considering Electric Vehicles Integration. Int. J. Eng. Res. Afr. 2022, 59, 175–204.

- Arroyo, J.; Manna, C.; Spiessens, F.; Helsen, L. Reinforced model predictive control (RL-MPC) for building energy management. Appl. Energy 2022, 309, 118346.

- Vamvakas, D.; Michailidis, P.; Korkas, C.; Kosmatopoulos, E. Review and Evaluation of Reinforcement Learning Frameworks on Smart Grid Applications. Energies 2023, 16, 5326.

- Chen, S.-J.; Chiu, W.-Y.; Liu, W.-J. User Preference-Based Demand Response for Smart Home Energy Management Using Multiobjective Reinforcement Learning. IEEE Access 2021, 9, 161627–161637.

- Zhou, S.; Hu, Z.; Gu, W.; Jiang, M.; Zhang, X.-P. Artificial intelligence based smart energy community management: A reinforcement learning approach. CSEE J. Power Energy Syst. 2019, 5, 1–10.

- Alfaverh, F.; Denai, M.; Sun, Y. Demand Response Strategy Based on Reinforcement Learning and Fuzzy Reasoning for Home Energy Management. IEEE Access 2020, 8, 39310–39321.

- Mathew, A.; Roy, A.; Mathew, J. Intelligent Residential Energy Management System Using Deep Reinforcement Learning. IEEE Syst. J. 2020, 14, 5362–5372.

- Forootani, A.; Rastegar, M.; Jooshaki, M. An Advanced Satisfaction-Based Home Energy Management System Using Deep Reinforcement Learning. IEEE Access 2022, 10, 47896–47905.

- Liu, Y.; Zhang, D.; Gooi, H.B. Optimization strategy based on deep reinforcement learning for home energy management. CSEE J. Power Energy Syst. 2020, 6, 572–582.

- Yu, L.; Xie, W.; Xie, D.; Zou, Y.; Zhang, D.; Sun, Z.; Zhang, L.; Zhang, Y.; Jiang, T. Deep Reinforcement Learning for Smart Home Energy Management. IEEE Internet Things J. 2019, 7, 2751–2762.

- Zenginis, I.; Vardakas, J.; Koltsaklis, N.E.; Verikoukis, C. Smart Home’s Energy Management Through a Clustering-Based Reinforcement Learning Approach. IEEE Internet Things J. 2022, 9, 16363–16371.

- Kodama, N.; Harada, T.; Miyazaki, K. Home Energy Management Algorithm Based on Deep Reinforcement Learning Using Multistep Prediction. IEEE Access 2021, 9, 153108–153115.

- Ye, Y.; Qiu, D.; Wu, X.; Strbac, G.; Ward, J. Model-Free Real-Time Autonomous Control for a Residential Multi-Energy System Using Deep Reinforcement Learning. IEEE Trans. Smart Grid 2020, 11, 3068–3082.

- Huang, C.; Zhang, H.; Wang, L.; Luo, X.; Song, Y. Mixed Deep Reinforcement Learning Considering Discrete-continuous Hybrid Action Space for Smart Home Energy Management. J. Mod. Power Syst. Clean Energy 2022, 10, 743–754.

- Härtel, F.; Bocklisch, T. Minimizing Energy Cost in PV Battery Storage Systems Using Reinforcement Learning. IEEE Access 2023, 11, 39855–39865.

- Parvini, M.; Javan, M.; Mokari, N.; Arand, B.; Jorswieck, E. AoI Aware Radio Resource Management of Autonomous Platoons via Multi Agent Reinforcement Learning. In Proceedings of the 2021 17th International Symposium on Wireless Communication Systems (ISWCS), Berlin, Germany, 6–9 September 2021; pp. 1–6.

- Jendoubi, I.; Bouffard, F. Multi-agent hierarchical reinforcement learning for energy management. Appl. Energy 2022, 332, 120500.

- Arora, A.; Jain, A.; Yadav, D.; Hassija, V.; Chamola, V.; Sikdar, B. Next Generation of Multi-Agent Driven Smart City Applications and Research Paradigms. IEEE Open J. Commun. Soc. 2023, 4, 2104–2121.

- Xu, X.; Jia, Y.; Xu, Y.; Xu, Z.; Chai, S.; Lai, C.S. A Multi-Agent Reinforcement Learning-Based Data-Driven Method for Home Energy Management. IEEE Trans. Smart Grid 2020, 11, 3201–3211.

- Kim, H.; Kim, S.; Lee, D.; Jang, I. Avoiding collaborative paradox in multi-agent reinforcement learning. ETRI J. 2021, 43, 1004–1012.

- Ahrarinouri, M.; Rastegar, M.; Seifi, A.R. Multiagent Reinforcement Learning for Energy Management in Residential Buildings. IEEE Trans. Ind. Inform. 2020, 17, 659–666.

- Lu, R.; Bai, R.; Luo, Z.; Jiang, J.; Sun, M.; Zhang, H.-T. Deep Reinforcement Learning-Based Demand Response for Smart Facilities Energy Management. IEEE Trans. Ind. Electron. 2021, 69, 8554–8565.

- Lu, R.; Li, Y.-C.; Li, Y.; Jiang, J.; Ding, Y. Multi-agent deep reinforcement learning based demand response for discrete manufacturing systems energy management. Appl. Energy 2020, 276, 115473.

- Guo, G.; Gong, Y. Multi-Microgrid Energy Management Strategy Based on Multi-Agent Deep Reinforcement Learning with Prioritized Experience Replay. Appl. Sci. 2023, 13, 2865.

- Ye, Y.; Tang, Y.; Wang, H.; Zhang, X.-P.; Strbac, G. A Scalable Privacy-Preserving Multi-Agent Deep Reinforcement Learning Approach for Large-Scale Peer-to-Peer Transactive Energy Trading. IEEE Trans. Smart Grid 2021, 12, 5185–5200.

- Xia, Y.; Xu, Y.; Feng, X. Hierarchical Coordination of Networked-Microgrids Toward Decentralized Operation: A Safe Deep Reinforcement Learning Method. IEEE Trans. Sustain. Energy 2024, 15, 1981–1993.

- Lee, S.; Choi, D.-H. Federated Reinforcement Learning for Energy Management of Multiple Smart Homes With Distributed Energy Resources. IEEE Trans. Ind. Inform. 2022, 18, 488–497.

- Deshpande, K.; Möhl, P.; Hämmerle, A.; Weichhart, G.; Zörrer, H.; Pichler, A. Energy Management Simulation with Multi-Agent Reinforcement Learning: An Approach to Achieve Reliability and Resilience. Energies 2022, 15, 7381.

- Hossain, M.; Enyioha, C. Multi-Agent Energy Management Strategy for Multi-Microgrids Using Reinforcement Learning. In Proceedings of the 2023 IEEE Texas Power and Energy Conference (TPEC), College Station, TX, USA, 13–14 February 2023; pp. 1–6.

- Pigott, A.; Crozier, C.; Baker, K.; Nagy, Z. GridLearn: Multiagent Reinforcement Learning for Grid-Aware Building Energy Management. Electr. Power Syst. Res. 2021, 213, 108521.

- Chen, T.; Bu, S.; Liu, X.; Kang, J.; Yu, F.R.; Han, Z. Peer-to-Peer Energy Trading and Energy Conversion in Interconnected Multi-Energy Microgrids Using Multi-Agent Deep Reinforcement Learning. IEEE Trans. Smart Grid 2021, 13, 715–727.

- Samadi, E.; Badri, A.; Ebrahimpour, R. Decentralized multi-agent based energy management of microgrid using reinforcement learning. Int. J. Electr. Power Energy Syst. 2020, 122, 106211.

- Fang, X.; Zhao, Q.; Wang, J.; Han, Y.; Li, Y. Multi-agent Deep Reinforcement Learning for Distributed Energy Management and Strategy Optimization of Microgrid Market. Sustain. Cities Soc. 2021, 74, 103163.

- Lai, B.-C.; Chiu, W.-Y.; Tsai, Y.-P. Multiagent Reinforcement Learning for Community Energy Management to Mitigate Peak Rebounds Under Renewable Energy Uncertainty. IEEE Trans. Emerg. Top. Comput. Intell. 2022, 6, 568–579.

- Tesla Motors Club. ‘Charging Efficiency,’ Tesla Motors Club Forum. 2018. Available online: https://teslamotorsclub.com/tmc/threads/charging-efficiency.122072/ (accessed on 4 January 2024).

- MathWorks. “Model a House Heating System” MathWorks. 2024. Available online: https://www.mathworks.com/help/simulink/ug/model-a-house-heating-system.html#responsive_offcanvas (accessed on 3 June 2022).

- Gillespie, D.T. Continuous Markov processes. In Markov Processes; Gillespie, D.T., Ed.; Academic Press: Cambridge, MA, USA, 1992; pp. 111–219. [Google Scholar] [CrossRef]

- Jiang, J.-J.; He, P.; Fang, K.-T. An Interesting Property of the Arcsine Distribution and Its Applications. Stat. Probab. Lett. 2015, 105, 88–95.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. arXiv 2019, arXiv:1912.01703.

- Nweye, K.; Sankaranarayanan, S.; Nagy, G.Z. The CityLearn Challenge 2022. Texas Data Repository, V1. 2023. Available online: https://dataverse.tdl.org/dataset.xhtml?persistentId=doi:10.18738/T8/0YLJ6Q (accessed on 11 September 2023).

- Hasell, J. Measuring Inequality: What Is the Gini Coefficient? 2023. Published online at OurWorldInData.org. Available online: https://ourworldindata.org/what-is-the-gini-coefficient (accessed on 6 July 2024).

- Pritchard, E.; Borlaug, B.; Yang, F.; Gonder, J. Evaluating Electric Vehicle Public Charging Utilization in the United States using the EV WATTS Dataset. In Proceedings of the 36th Electric Vehicle Symposium and Exposition (EVS36), Sacramento, CA, USA, 11–14 June 2023; Preprint, NREL, National Renewable Energy Laboratory. Available online: https://www.nrel.gov/docs/fy24osti/85902.pdf (accessed on 14 October 2023).

- Nagy, G.Z. The CityLearn Challenge 2021. Texas Data Repository, V1. 2021. Available online: https://dataverse.tdl.org/dataset.xhtml?persistentId=doi:10.18738/T8/Q2EIQC (accessed on 11 September 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).