A Survey of Computer Vision Detection, Visual SLAM Algorithms, and Their Applications in Energy-Efficient Autonomous Systems

Abstract

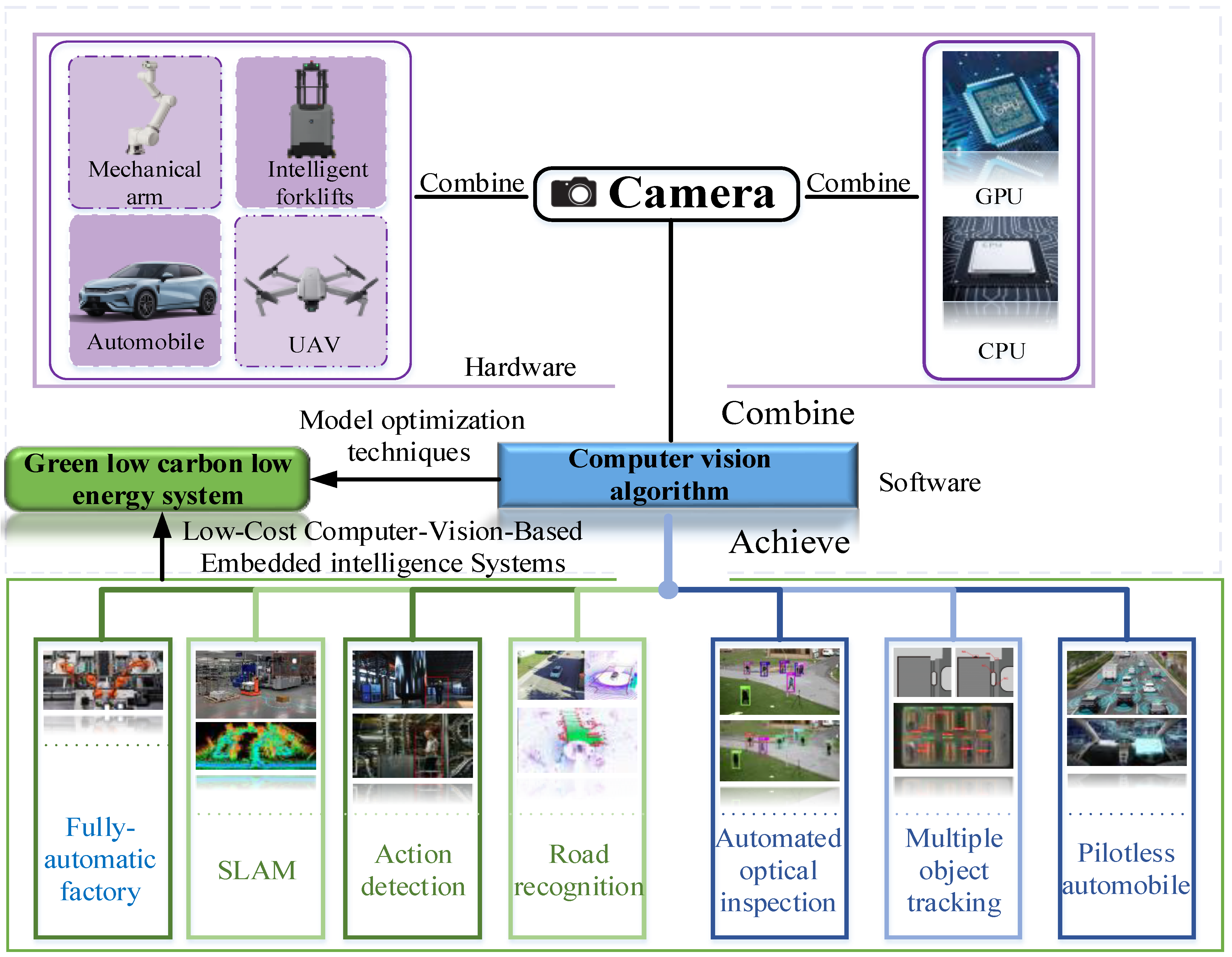

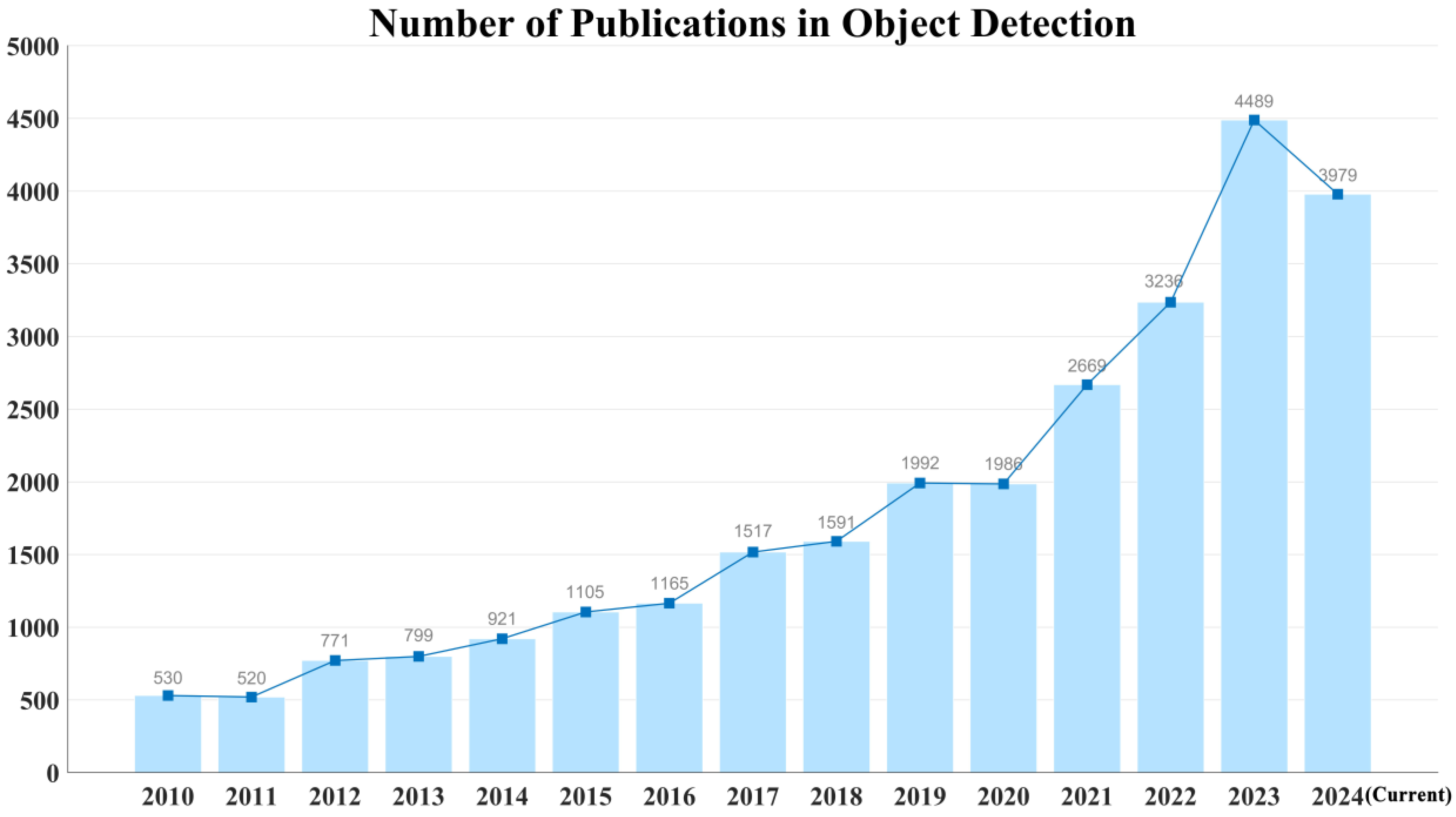

1. Introduction

1.1. Innovative Contributions and Gaps Compared to Related Reviews

1. A comprehensive and dimensional review of the evolution of computer vision algorithms.

2. A comprehensive discussion has been conducted on the classification of datasets that are crucial to computer vision.

3. The relationship between computer vision algorithms and energy consumption has been explored.

4. Examples of industrial applications in this research area.

1. Integration of energy metrics in algorithm comparison.

2. Cross-domain application analysis.

3. Specific emphasis on autonomous navigation and low-carbon impact.

1.2. Selection Criteria for Reviewed Articles

1. Relevance to energy efficiency.

2. Algorithmic advances.

3. Application-specific case studies.

4. Citation frequency and impact.

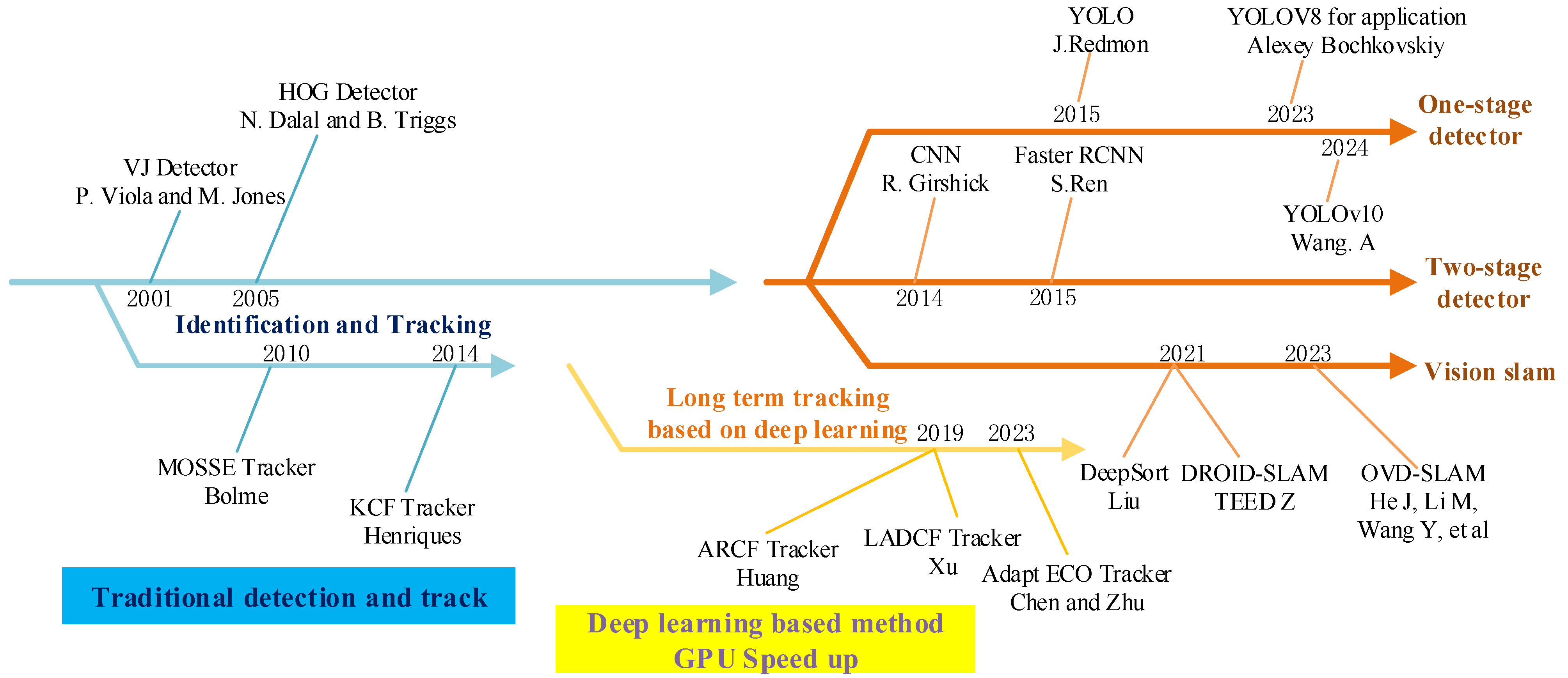

1.3. An Evolutionary Review of Algorithms Related to Computer Vision

- Traditional Computer Vision Algorithms.

- Deep Learning-based Computer Vision Algorithms.

- Visual SLAM Algorithms.

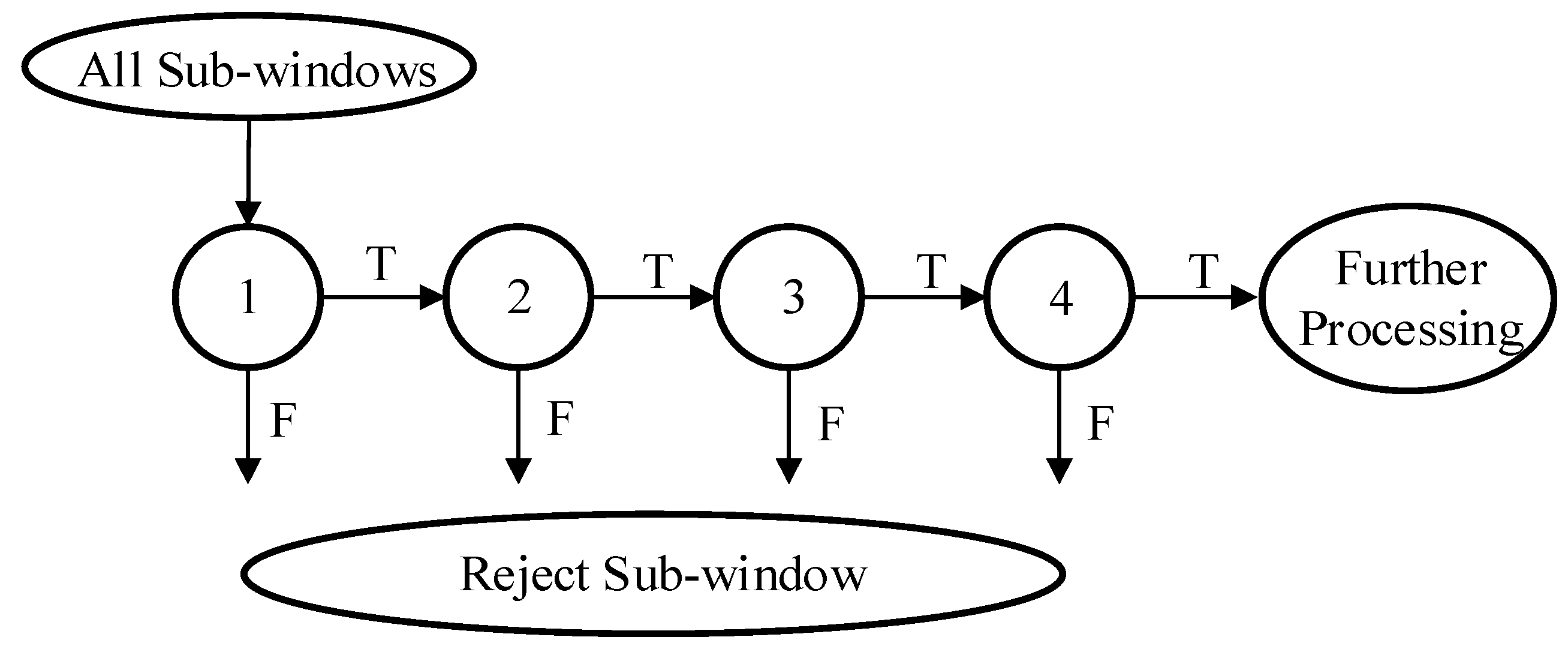

2. Review of Traditional Computer Vision Algorithms

2.1. The Basic Theory of Computer Vision Detection

2.2. The Basic Theory of Computer Vision Tracking

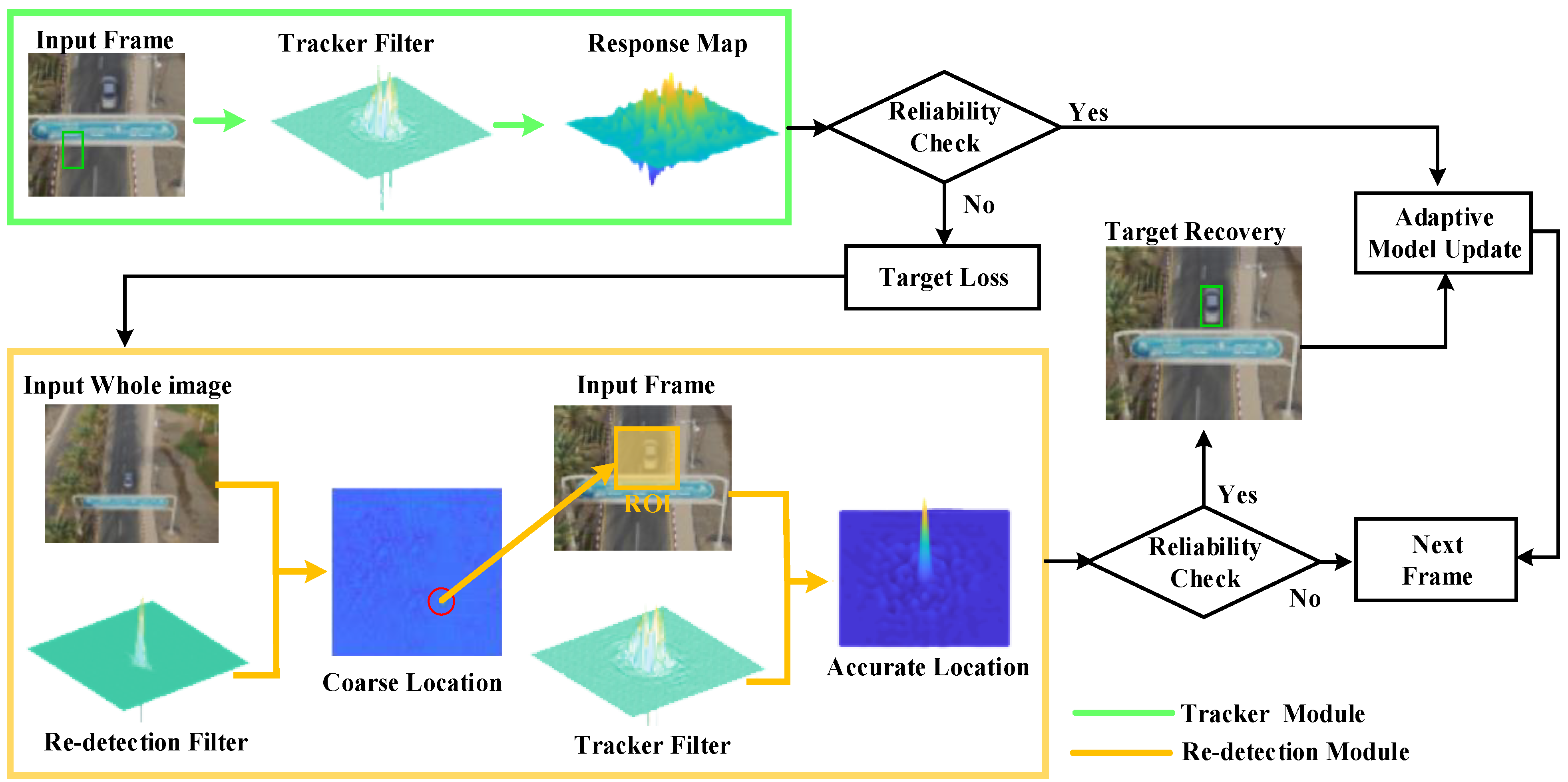

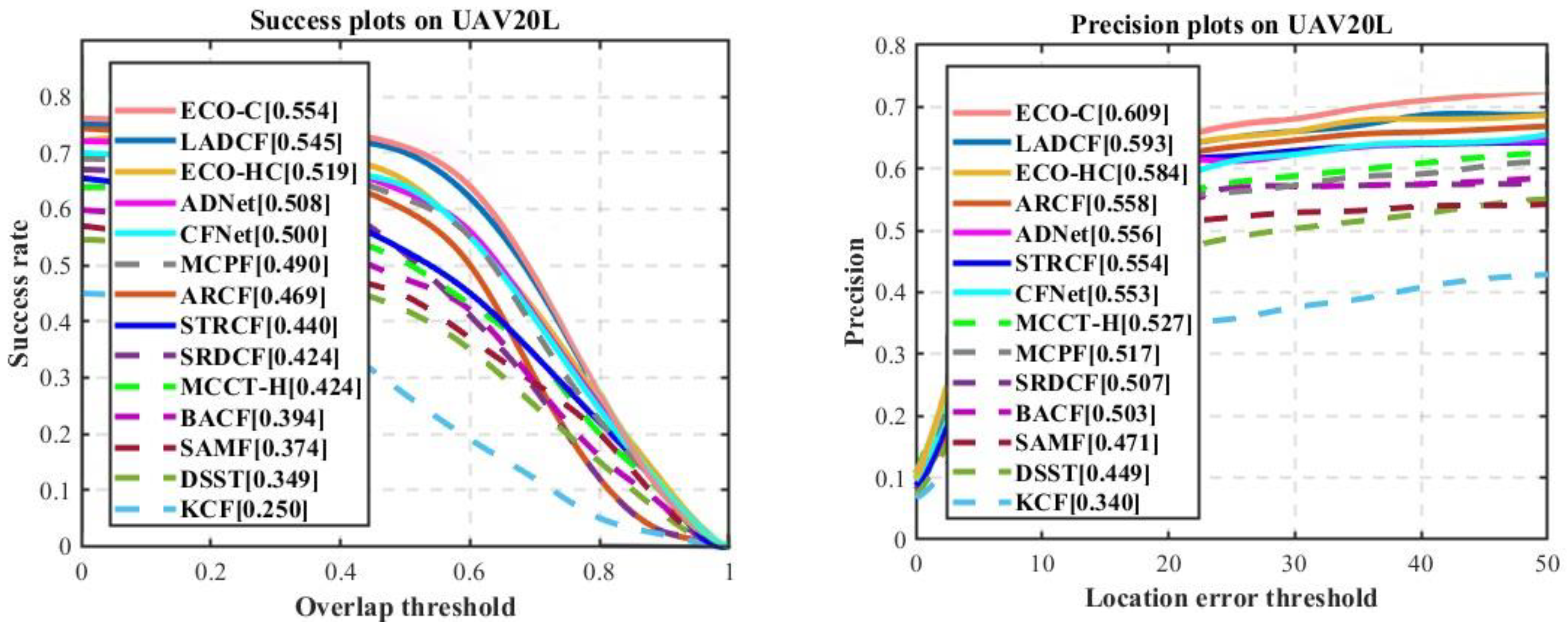

2.3. Adaptive Long-Term Tracking Framework Based on ECO-C

2.4. Discussion of the Application of Traditional Vision Detection and Tracking Algorithms in Autonomous Driving and Contribution to Promoting Green Energy Sustainability

3. Review of Deep Learning-Based Computer Vision

3.1. Computer Vision Datasets

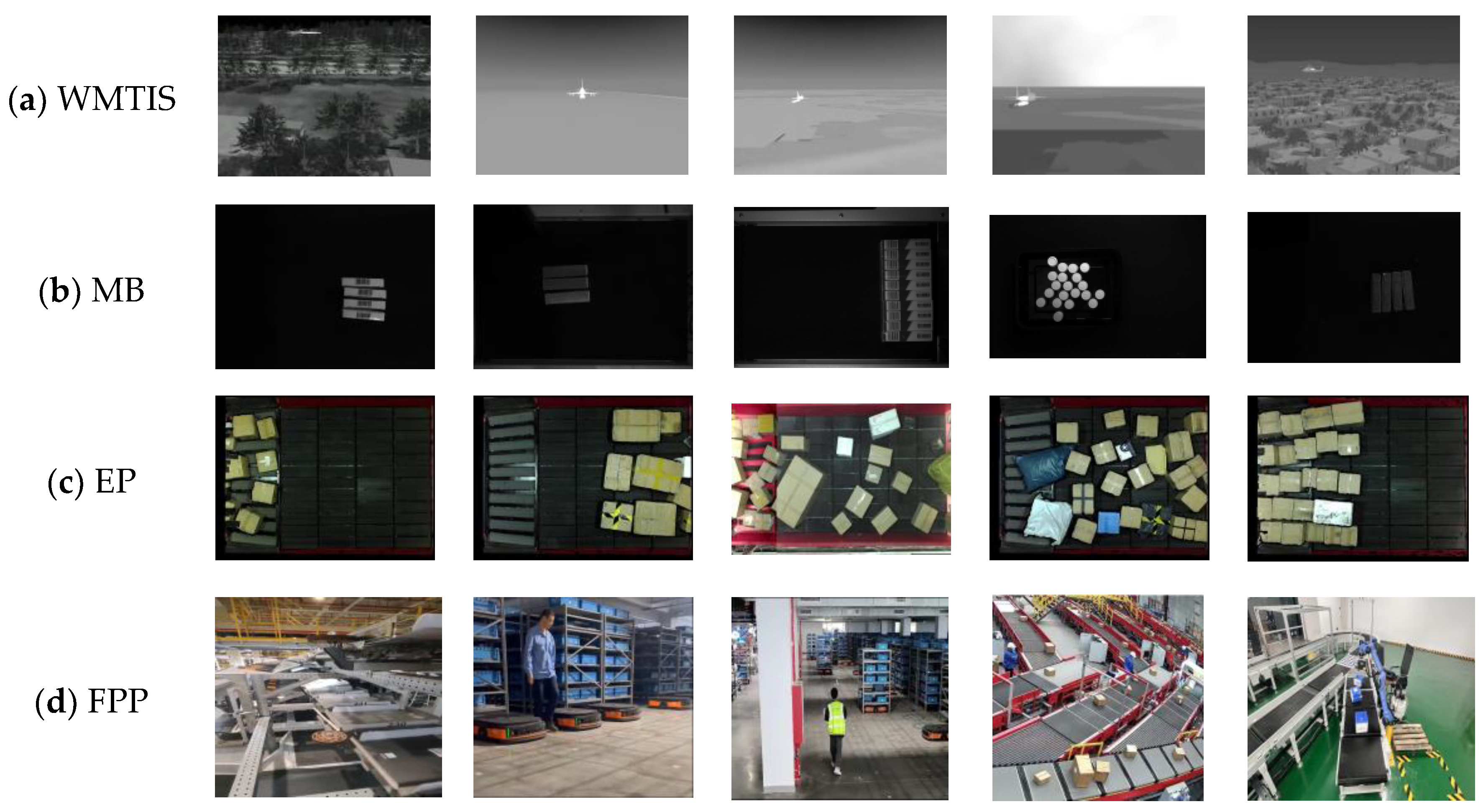

3.1.1. A Thorough Review of Multidimensional Typed Datasets

1. One-Dimensional Datasets.

2. Two-Dimensional Datasets.

3. Three-Dimensional Datasets.

4. Three-Dimensional+ Vision Datasets.

5. Multimodal Sensing Datasets.

3.1.2. Examples of Popular and Challenging Computer Vision Datasets and Their Value and Meaning

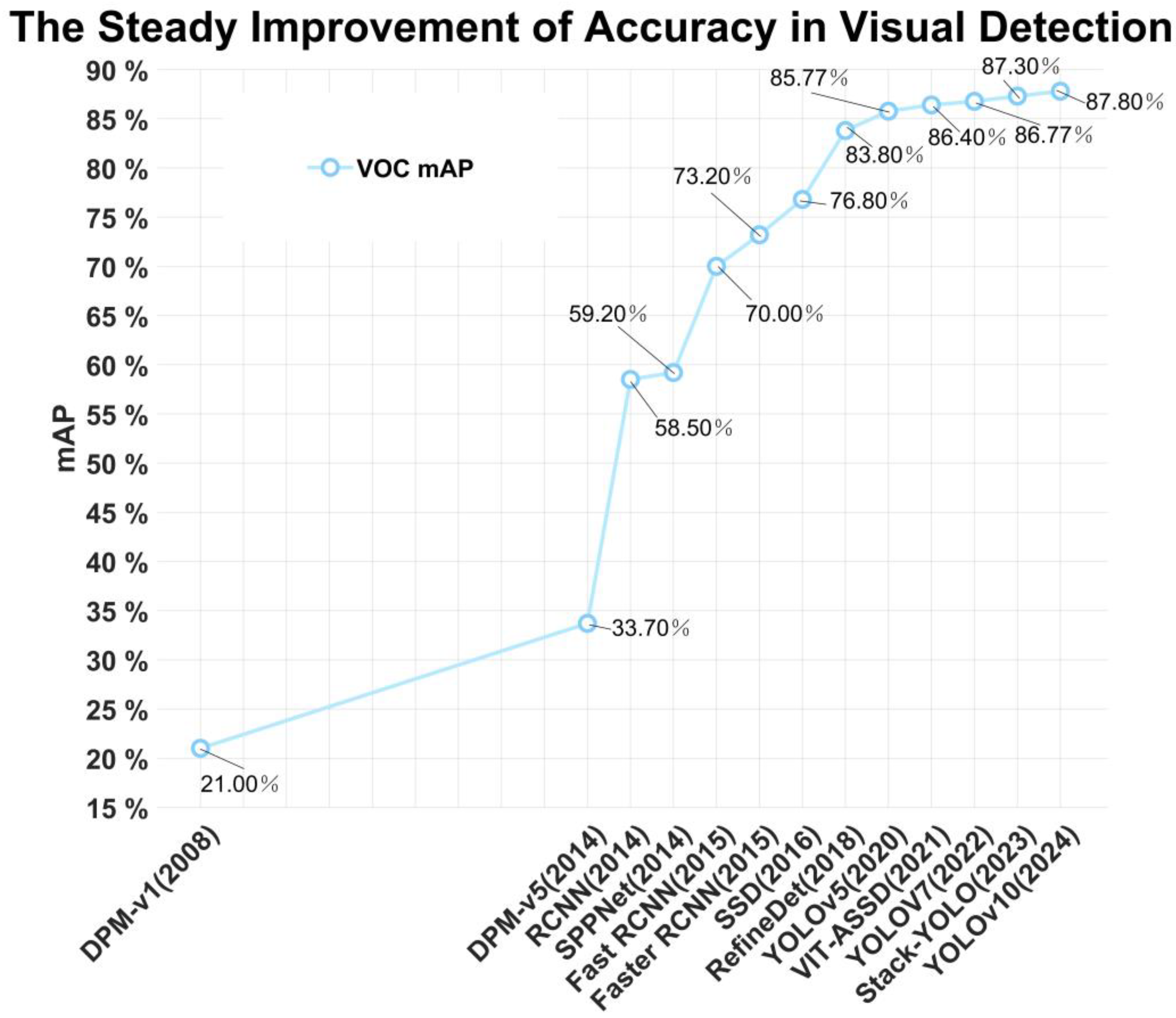

3.2. Review of Deep Learning Computer Vision Based on Convolutional Neural Networks

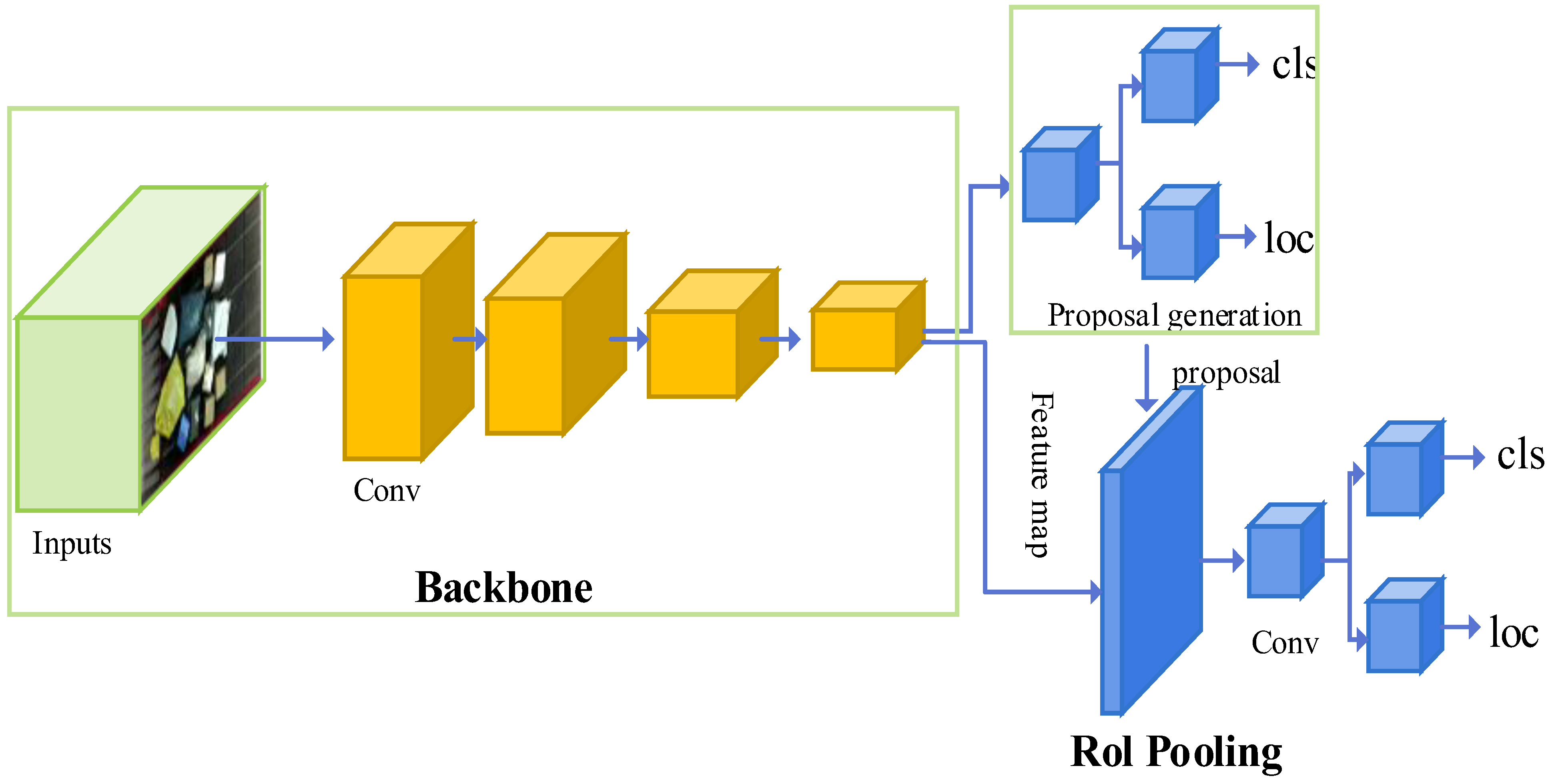

3.3. Computer Vision Detection Applications Based on Convolutional Neural Networks

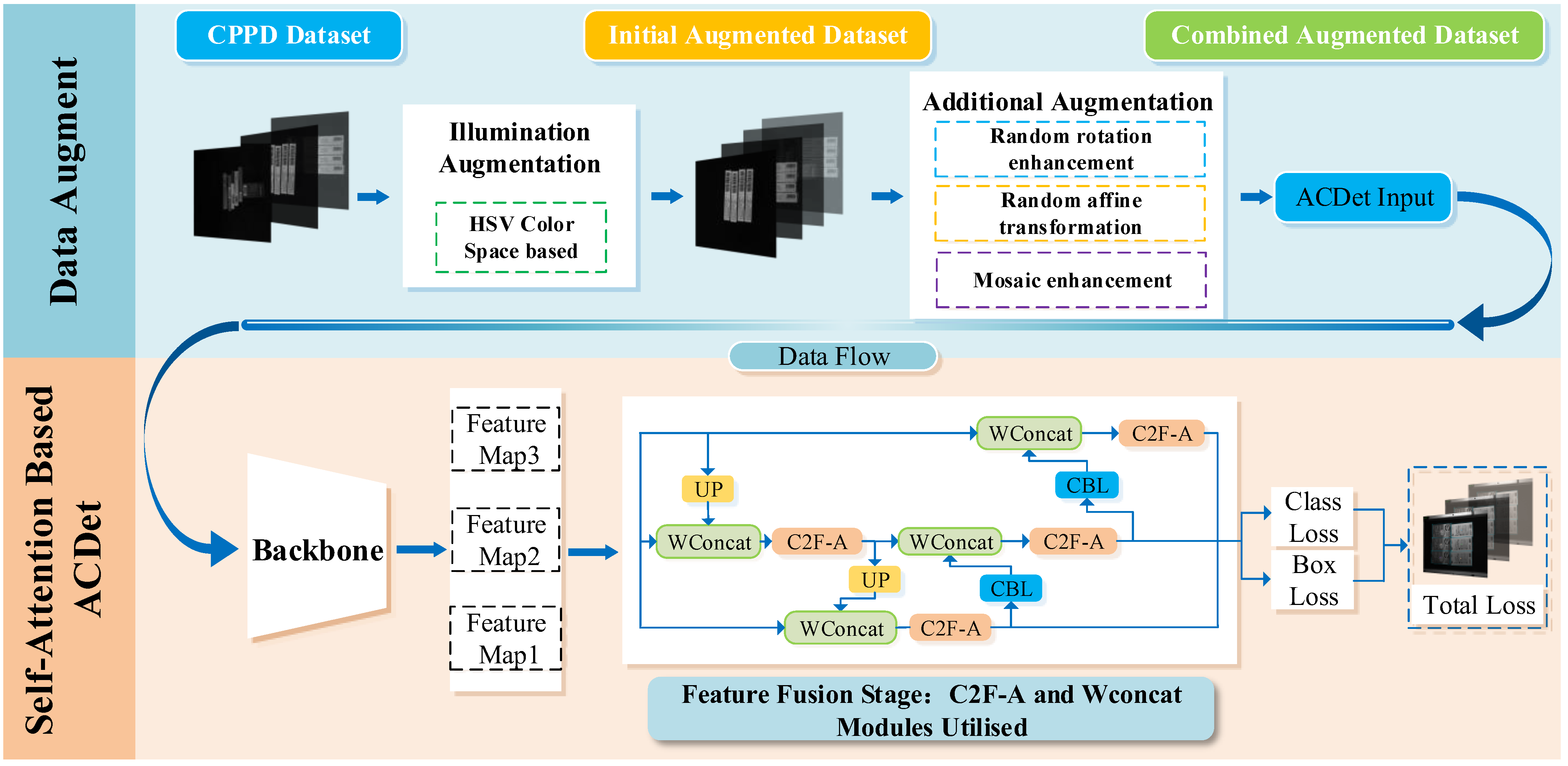

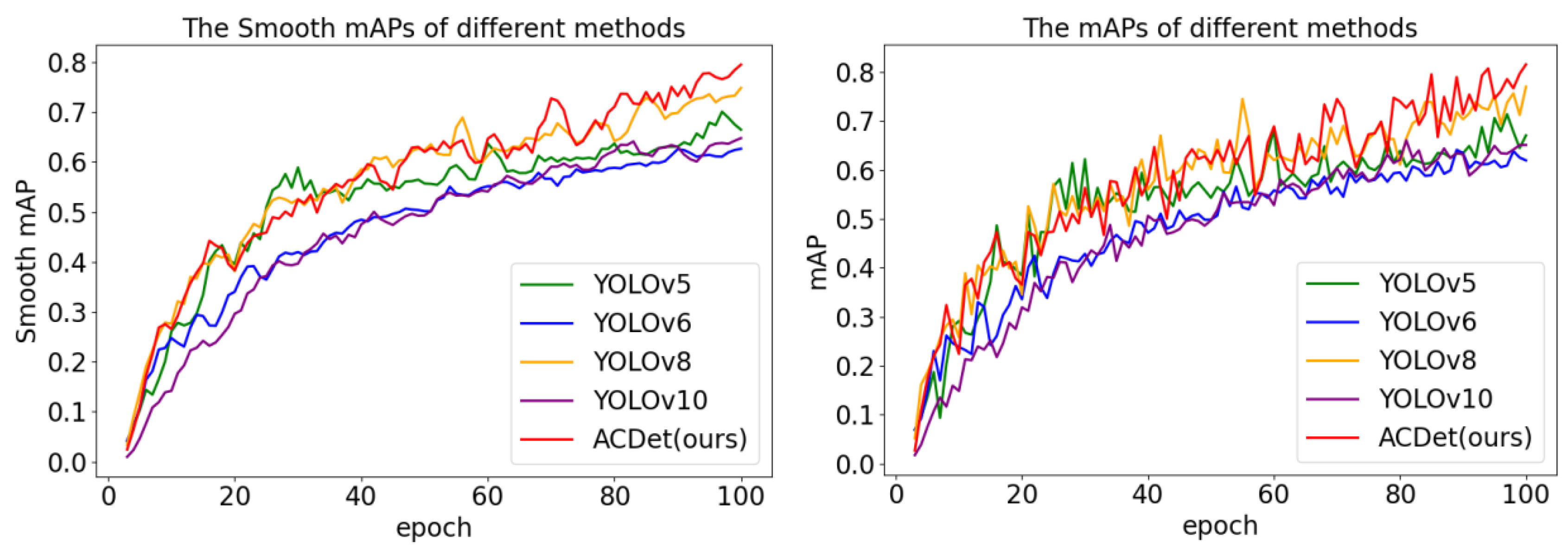

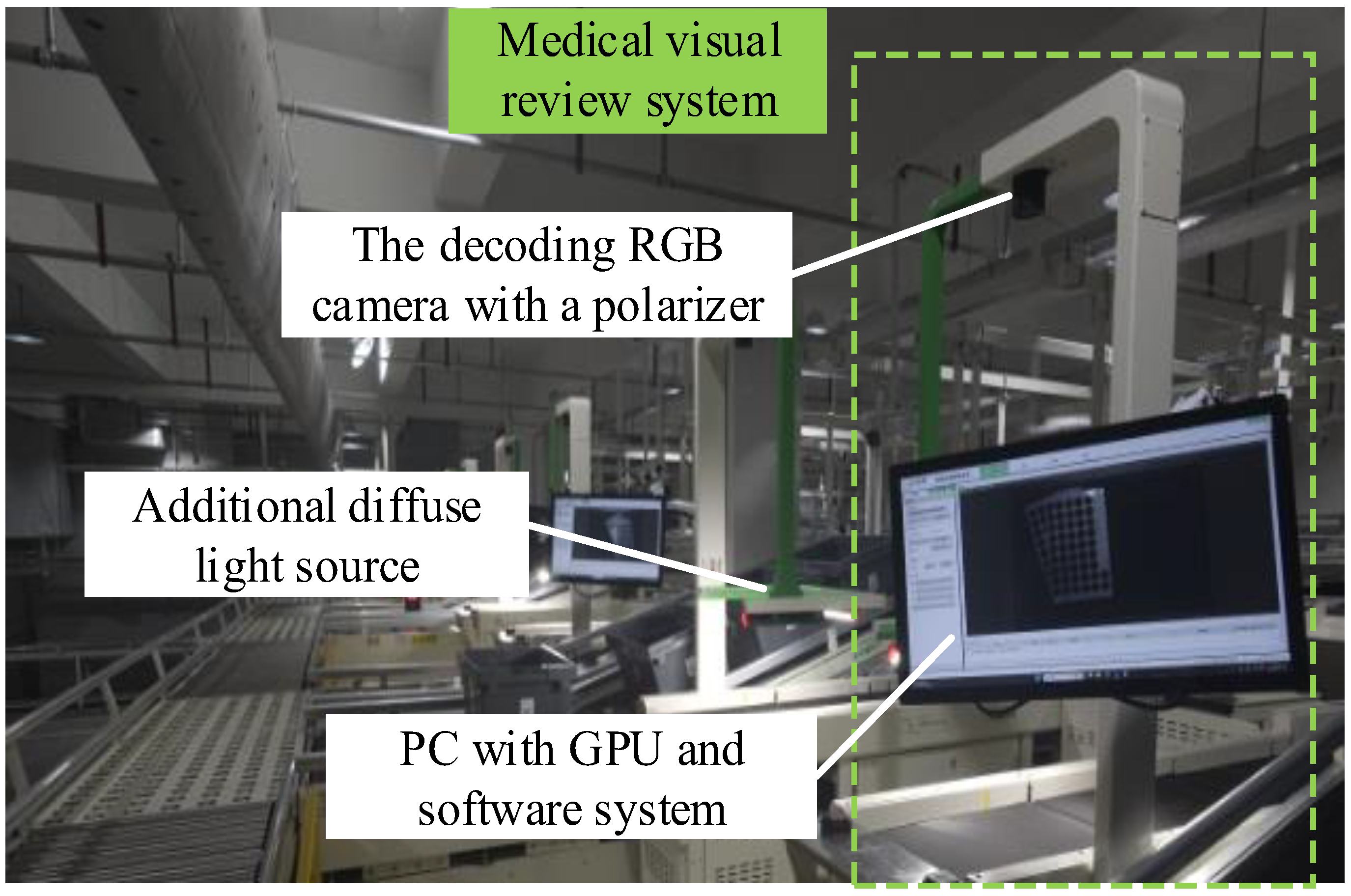

3.3.1. ACDet: A Vector Detection Model for Drug Packaging Based on Convolutional Neural Network

3.4. Exploration and Future Trends in Deep Learning-Based Computer Vision Algorithms

1. Enhanced Performance and Real-time Processing

2. Energy Efficiency and Sustainability

3. Democratization of AI Research

3.5. Discussion on the Application of Deep Learning-Based Vision Detection Algorithms in Autonomous Driving and Contribution to Promoting Green Energy Sustainability

4. Review of Visual Simultaneous Localization and Mapping (SLAM) Algorithms

4.1. Visual SLAM Datasets

4.2. Review of the SLAM Algorithms

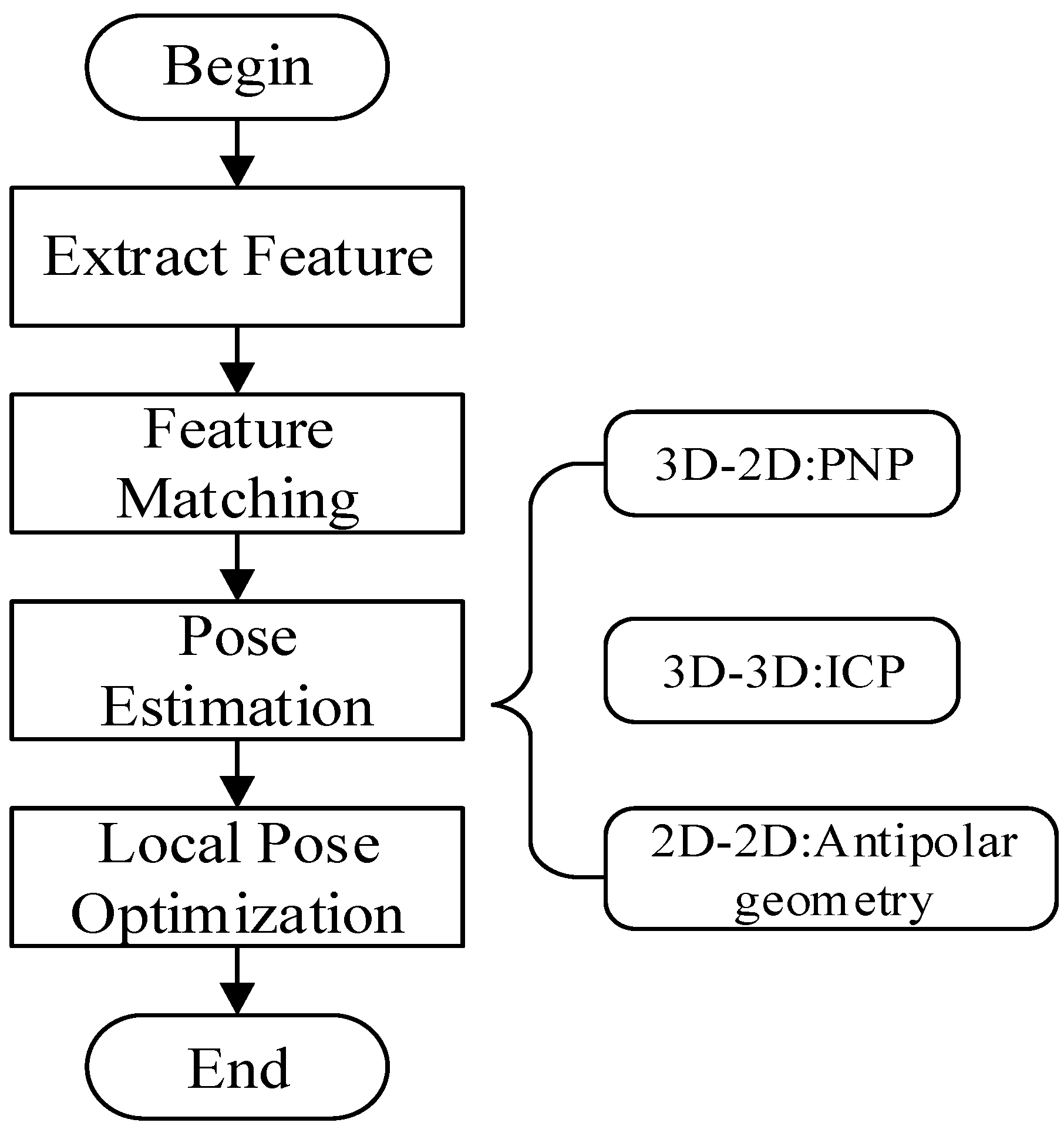

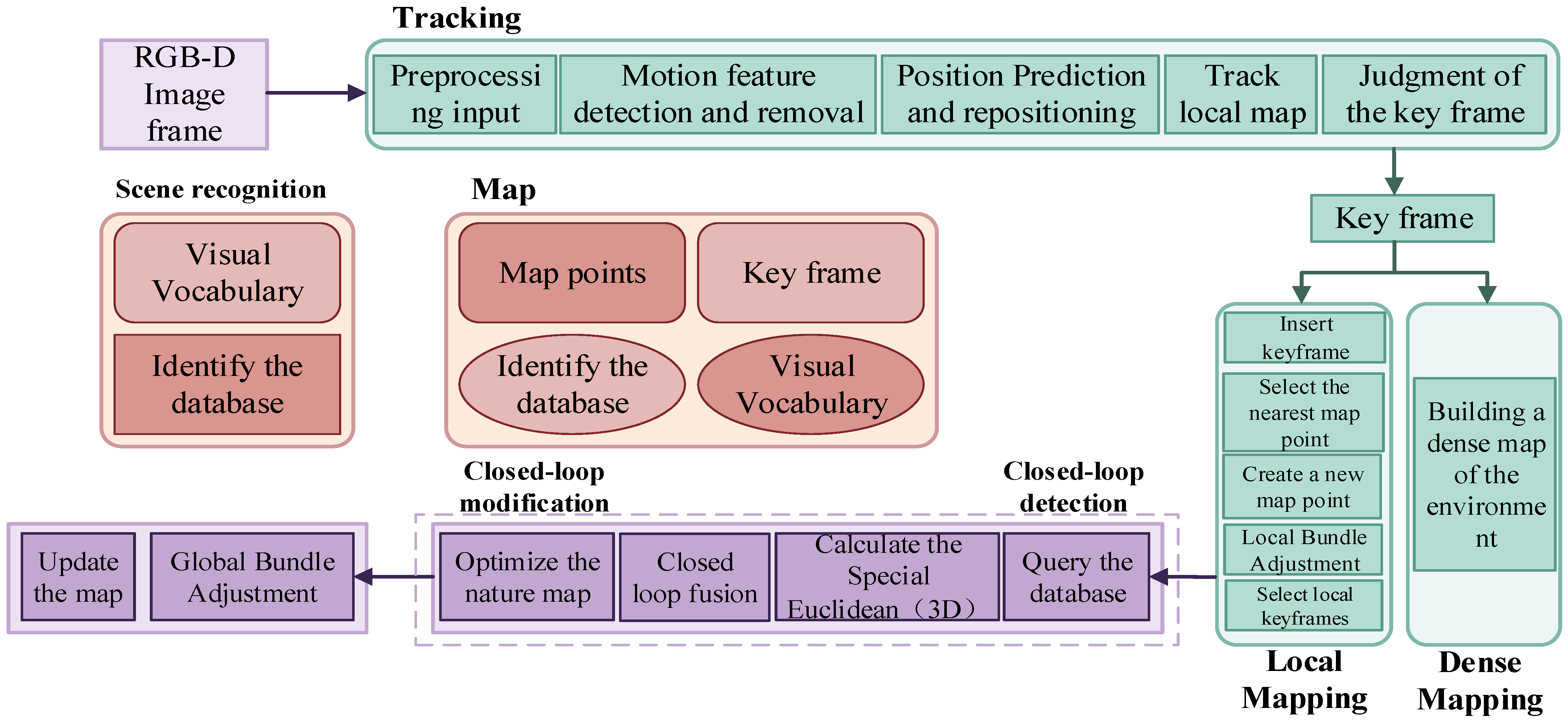

4.2.1. The Basic Principles of SLAM Algorithms

4.2.2. Performance Comparison and Energy Balance Discussion of Mainstream SLAM Algorithms

4.3. Exploration and Future Trends in SLAM Algorithms

- Data volume and labeling: Deep learning requires large-scale data with accurate labels, yet acquiring large-scale SLAM datasets remains a significant challenge;

- Low real-time performance: Visual SLAM typically runs under real-time constraints, and even low-frame-rate, low-resolution cameras generate a substantial amount of data, which demands efficient processing and inference algorithms;

- Generalization ability: A critical consideration is whether the model can accurately localize and build maps in new environments or unseen scenes.

Future advancements in deep SLAM methods are expected to increasingly emulate human perception and cognition, with progress in high-level map construction, human-like perception and localization, active SLAM methods, integration with task requirements, and memory storage and retrieval. These developments will help robots accomplish diverse tasks and navigate autonomously. End-to-end training and information processing, which align with the human cognitive process, hold significant potential.
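To make the real-time constraint noted in the list above more concrete, the following minimal sketch estimates the uncompressed data rate and the per-frame processing budget of a camera. The function names and the camera settings (640×480, grayscale, 15 FPS) are illustrative assumptions, not values taken from this review.

```python
def raw_data_rate_mb_per_s(width, height, channels, fps, bytes_per_sample=1):
    """Uncompressed camera data rate in MB/s (1 MB = 10**6 bytes)."""
    bytes_per_frame = width * height * channels * bytes_per_sample
    return bytes_per_frame * fps / 1e6


def per_frame_budget_ms(fps):
    """Time available to process one frame without falling behind the camera."""
    return 1000.0 / fps


# Hypothetical low-end camera: 640x480 pixels, 1 channel (grayscale), 15 FPS.
low_end_rate = raw_data_rate_mb_per_s(640, 480, 1, 15)
low_end_budget = per_frame_budget_ms(15)

print(f"data rate: {low_end_rate:.3f} MB/s")
print(f"per-frame budget: {low_end_budget:.1f} ms")
```

Under these assumed settings, tracking, mapping, and any network inference must together finish within the per-frame budget, which is why even a modest sensor places pressure on the efficiency of the processing pipeline.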

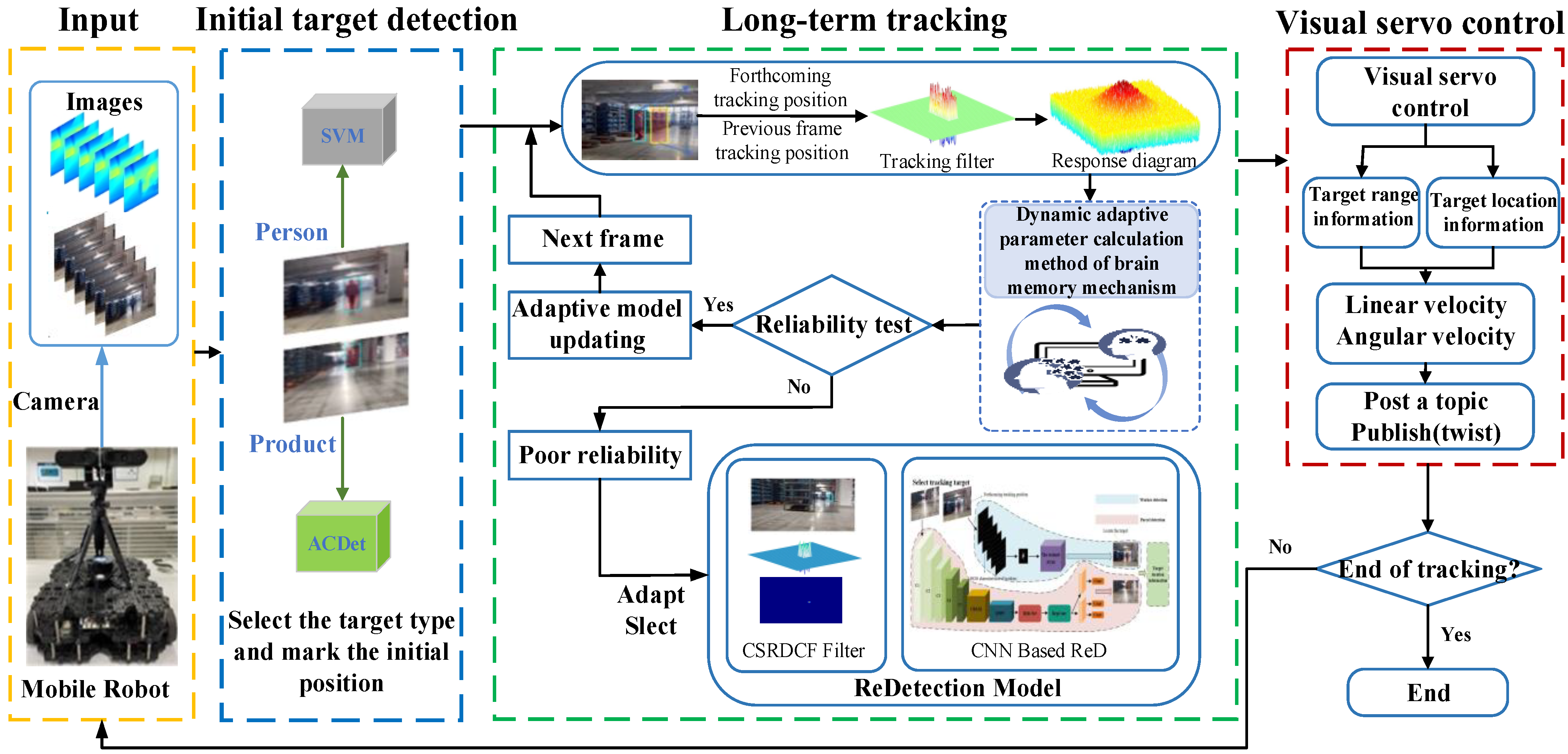

4.4. Visual Framework for Unmanned Factory Applications with Multi-Driverless Robotic Vehicles and UAVs

4.5. Discussion of the Application of SLAM Algorithms in Autonomous Driving and Contribution to Promoting Green Energy Sustainability

4.6. Discussion on the Role of Algorithm Optimization in Improving Energy Efficiency

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| SLAM | Simultaneous Localization and Mapping |
| HOG | Histogram of Oriented Gradients |
| DPM | Deformable Part-based Model |
| KITTI | Karlsruhe Institute of Technology and Toyota Technological Institute |
| MOSSE | Minimum Output Sum of Squared Error |
| KCF | Kernelized Correlation Filters |
| LADCF | Learning Adaptive Discriminative Correlation Filters |
| ARCF | Aberrance Repressed Correlation Filters |
| BACF | Background-Aware Correlation Filters |
| CNN | Convolutional Neural Network |
| RPN | Region Proposal Network |
| NMS | Non-Maximum Suppression |
| BA | Bundle Adjustment |
| VJ | Viola–Jones |
| ECO | Efficient Convolution Operators |
| FPS | Frames Per Second |
| VOT | The Visual Object Tracking |
| VOC | Visual Object Classes |
| COCO | Common Objects in Context |
| OTB | Object Tracking Benchmark |
| WMTIS | Weak Military Targets in Infrared Scenes |
| MB | Medicine Boxes |
| XEEEP | Express Packages |
| FPP | Fully Automated Unmanned Factories and Product Detection and Tracking |
| CNNs | Convolutional Neural Networks |
| RoI | Region of Interest |
| AP | Average Precision |
| GPUs | Graphics Processing Units |
| ADAS | Advanced Driver-Assistance Systems |
| VO | Visual Odometry |
| PnP | Perspective-n-Point |
| LSD | Large-scale Direct |
| ORB | Oriented FAST and Rotated BRIEF |
| DSO | Direct Sparse Odometry |
| FPGA | Field Programmable Gate Arrays |
| FM | Fast Movement |
| SV | Scale Variation |
| FO | Full Occlusion |
| PO | Partial Occlusion |
| OV | Out-of-View |
| IV | Illuminance Variation |
| LR | Low Resolution |
| RMSE ATE | Root Mean Square Error of Absolute Trajectory Error |
| SE3 | Special Euclidean Group in Three Dimensions |
| ACDet | Self-Attention and Concatenation-Based Detector |
| SAR | Synthetic Aperture Radar |

References

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, Kauai, HI, USA, 8–14 December 2001; IEEE: Piscataway, NJ, USA, 2001; Volume 1, p. I. [Google Scholar]

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 1, pp. 886–893. [Google Scholar]

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 2544–2550. [Google Scholar]

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 583–596. [Google Scholar] [CrossRef] [PubMed]

- Xu, T.; Feng, Z.H.; Wu, X.J.; Kittler, J. Learning Adaptive Discriminative Correlation Filters via Temporal Consistency Preserving Spatial Feature Selection for Robust Visual Object Tracking. IEEE Trans. Image Process. 2019, 28, 5596–5609. [Google Scholar] [CrossRef] [PubMed]

- Huang, Z.; Fu, C.; Li, Y.; Lin, F.; Lu, P. Learning Aberrance Repressed Correlation Filters for Real-Time UAV Tracking. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 2891–2900. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 580–587. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 779–788. [Google Scholar]

- Aboah, A.; Wang, B.; Bagci, U.; Adu-Gyamfi, Y. Real-time multi-class helmet violation detection using few-shot data sampling technique and yolov8. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 5350–5358. [Google Scholar]

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. Yolov10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458. [Google Scholar]

- Liu, X.; Zhang, Z. A Vision-Based Target Detection, Tracking, and Positioning Algorithm for Unmanned Aerial Vehicle. Wirel. Commun. Mob. Comput. 2021, 2021, 5565589. [Google Scholar] [CrossRef]

- Teed, Z.; Deng, J. Droid-slam: Deep visual slam for monocular, stereo, and rgb-d cameras. Adv. Neural Inf. Process. Syst. 2021, 34, 16558–16569. [Google Scholar]

- Chen, L.; Li, G.; Zhao, K.; Zhang, G.; Zhu, X. A Perceptually Adaptive Long-Term Tracking Method for the Complete Occlusion and Disappearance of a Target. Cogn. Comput. 2023, 15, 2120–2131. [Google Scholar] [CrossRef]

- He, J.; Li, M.; Wang, Y.; Wang, H. OVD-SLAM: An online visual SLAM for dynamic environments. IEEE Sens. J. 2023, 23, 13210–13219. [Google Scholar] [CrossRef]

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep learning for generic object detection: A survey. Int. J. Comput. Vis. 2020, 128, 261–318. [Google Scholar] [CrossRef]

- Agarwal, S.; Terrail, J.O.D.; Jurie, F. Recent advances in object detection in the age of deep convolutional neural networks. arXiv 2018, arXiv:1809.03193. [Google Scholar]

- Andreopoulos, A.; Tsotsos, J.K. 50 years of object recognition: Directions forward. Comput. Vis. Image Underst. 2013, 117, 827–891. [Google Scholar] [CrossRef]

- Huang, J.; Rathod, V.; Sun, C.; Zhu, M.; Korattikara, A.; Fathi, A. Speed/accuracy trade-offs for modern convolutional object detectors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 7310–7311. [Google Scholar]

- Grauman, K.; Leibe, B. Visual Object Recognition (Synthesis Lectures on Artificial Intelligence and Machine Learning); Morgan & Claypool Publishers: San Rafael, CA, USA, 2011; p. 3. [Google Scholar]

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276. [Google Scholar] [CrossRef]

- Jiao, L.; Zhang, F.; Liu, F.; Yang, S.; Li, L.; Feng, Z.; Qu, R. A survey of deep learning-based object detection. IEEE Access 2019, 7, 128837–128868. [Google Scholar] [CrossRef]

- Liu, Y.; Dai, Q. A survey of computer vision applied in aerial robotic vehicles. In Proceedings of the 2010 International Conference on Optics, Photonics and Energy Engineering (OPEE), Wuhan, China, 10–11 May 2010; IEEE: Piscataway, NJ, USA, 2010; Volume 1, pp. 277–280. [Google Scholar]

- Felzenszwalb, P.; McAllester, D.; Ramanan, D. A discriminatively trained, multiscale, deformable part model. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 1–8. [Google Scholar]

- Ju, Z.; Gun, L.; Hussain, A.; Mahmud, M.; Ieracitano, C. A novel approach to shadow boundary detection based on an adaptive direction-tracking filter for brain-machine interface applications. Appl. Sci. 2020, 10, 6761. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 7263–7271. [Google Scholar]

- Gao, F.; Huang, T.; Sun, J.; Wang, J.; Hussain, A.; Yang, E. A new algorithm for SAR image target recognition based on an improved deep convolutional neural network. Cogn. Comput. 2019, 11, 809–824. [Google Scholar] [CrossRef]

- Chen, B.X.; Sahdev, R.; Tsotsos, J.K. Person following robot using selected online ada-boosting with stereo camera. In Proceedings of the 2017 14th Conference on Computer and Robot Vision (CRV), Edmonton, AB, Canada, 16–19 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 48–55. [Google Scholar]

- Evjemo, L.D.; Gjerstad, T.; Grøtli, E.I.; Sziebig, G. Trends in smart manufacturing: Role of humans and industrial robots in smart factories. Curr. Robot. Rep. 2020, 1, 35–41. [Google Scholar] [CrossRef]

- Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ECO: Efficient Convolution Operators for Tracking. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 6931–6939. [Google Scholar]

- Danelljan, M.; Hager, G.; Shahbaz Khan, F.; Felsberg, M. Learning spatially regularized correlation filters for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 4310–4318. [Google Scholar]

- Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H. Staple: Complementary learners for real-time tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1401–1409. [Google Scholar]

- Li, Y.; Zhu, J. A scale adaptive kernel correlation filter tracker with feature integration. In Proceedings of the Computer Vision-ECCV 2014 Workshops, Zurich, Switzerland, 6–7 and 12 September 2014; Part II 13. Springer International Publishing: Cham, Switzerland, 2015; pp. 254–265. [Google Scholar]

- Kiani Galoogahi, H.; Fagg, A.; Lucey, S. Learning background-aware correlation filters for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1135–1143. [Google Scholar]

- Wang, N.; Zhou, W.; Tian, Q.; Hong, R.; Wang, M.; Li, H. Multi-cue correlation filters for robust visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Guangzhou, China, 2–4 December 2016; IEEE: Piscataway, NJ, USA, 2018; pp. 4844–4853. [Google Scholar]

- Li, F.; Tian, C.; Zuo, W.; Zhang, L.; Yang, M.H. Learning spatial-temporal regularized correlation filters for visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 4904–4913. [Google Scholar]

- Yun, S.; Choi, J.; Yoo, Y.; Yun, K.; Young Choi, J. Action-decision networks for visual tracking with deep reinforcement learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2711–2720. [Google Scholar]

- Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H. End-to-end representation learning for correlation filter based tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2805–2813. [Google Scholar]

- Zhang, T.; Xu, C.; Yang, M.H. Multi-task correlation particle filter for robust object tracking. In Proceedings of the IEEE Conference on Computer Vision and pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4335–4343. [Google Scholar]

- Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian detection: An evaluation of the state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 743–761. [Google Scholar] [CrossRef]

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? the kitti vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 3354–3361. [Google Scholar]

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Fei-Fei, L. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252. [Google Scholar] [CrossRef]

- Mottaghi, R.; Savarese, S. Beyond pascal: A benchmark for 3d object detection in the wild. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Steamboat Springs, CO, USA, 24–26 March 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 75–82. [Google Scholar]

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338. [Google Scholar] [CrossRef]

- Everingham, M.; Eslami, S.M.A.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes challenge: A retrospective. Int. J. Comput. Vis. 2015, 111, 98–136. [Google Scholar] [CrossRef]

- Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for UAV tracking. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Part I 14. Springer International Publishing: Cham, Switzerland, 2016; pp. 445–461. [Google Scholar]

- Wu, Y.; Lim, J.; Yang, M.H. Online object tracking: A benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 2411–2418. [Google Scholar]

- Lin, T.Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Part V 13. Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755. [Google Scholar]

- Kuznetsova, A.; Rom, H.; Alldrin, N.; Uijlings, J.R.R.; Krasin, I.; Pont-Tuset, J.; Kamali, S.; Popov, S.; Malloci, M.; Kolesnikov, A.; et al. The open images dataset v4: Unified image classification, object detection, and visual relationship detection at scale. Int. J. Comput. Vis. 2020, 128, 1956–1981. [Google Scholar] [CrossRef]

- Zhu, P.; Wen, L.; Bian, X.; Ling, H.; Hu, Q. Vision meets drones: A challenge. arXiv 2018, arXiv:1804.07437. [Google Scholar]

- Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.; Nießner, M. Scannet: Richly-annotated 3d reconstructions of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 5828–5839. [Google Scholar]

- Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3d shapenets: A deep representation for volumetric shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1912–1920. [Google Scholar]

- Song, S.; Lichtenberg, S.P.; Xiao, J. Sun rgb-d: A rgb-d scene understanding benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 567–576. [Google Scholar]

- Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor segmentation and support inference from rgbd images. In Proceedings of the Computer Vision–ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Part V 12. Springer: Berlin/Heidelberg, Germany, 2012; pp. 746–760. [Google Scholar]

- Huang, X.; Cheng, X.; Geng, Q.; Cao, D.; Zhou, H.; Wang, B.; Lin, Y.; Yang, R. The apolloscape dataset for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 954–960. [Google Scholar]

- Hwang, S.; Park, J.; Kim, N.; Choi, Y.; Kweon, I.S. Multispectral pedestrian detection: Benchmark dataset and baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1037–1045. [Google Scholar]

- Dau, H.A.; Bagnall, A.; Kamgar, K.; Yeh, C.-C.M.; Zhu, Y.; Gharghabi, S.; Ratanamahatana, C.A.; Keogh, E. The UCR time series archive. IEEE/CAA J. Autom. Sin. 2019, 6, 1293–1305. [Google Scholar] [CrossRef]

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 3213–3223. [Google Scholar]

- Chang, A.X.; Funkhouser, T.; Guibas, L.; Hanrahan, P.; Huang, Q.; Li, Z.; Savarese, S.; Savva, M.; Song, S.; Su, H.; et al. Shapenet: An information-rich 3d model repository. arXiv 2015, arXiv:1512.03012. [Google Scholar]

- Chang, A.; Dai, A.; Funkhouser, T.; Halber, M.; Nießner, M.; Savva, M.; Song, S.; Zeng, A.; Zhang, Y. Matterport3d: Learning from rgb-d data in indoor environments. arXiv 2017, arXiv:1709.06158. [Google Scholar]

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuscenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 11621–11631. [Google Scholar]

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in perception for autonomous driving: Waymo open dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2446–2454. [Google Scholar]

- De Charette, R.; Nashashibi, F. Real time visual traffic lights recognition based on spot light detection and adaptive traffic lights templates. In Proceedings of the Intelligent Vehicles Symposium, Xi’an, China, 3–5 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 358–363. [Google Scholar]

- Timofte, R.; Zimmermann, K.; Van Gool, L. Multi-view traffic sign detection, recognition, and 3D localisation. Mach. Vis. Appl. 2014, 25, 633–647. [Google Scholar] [CrossRef]

- Houben, S.; Stallkamp, J.; Salmen, J.; Schlipsing, M.; Igel, C. Detection of traffic signs in real-world images: The German Traffic Sign Detection Benchmark. In Proceedings of the 2013 International Joint Conference on Neural Networks (IJCNN), Dallas, TX, USA, 4–9 August 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1–8. [Google Scholar]

- Klare, B.F.; Klein, B.; Taborsky, E.; Blanton, A.; Cheney, J.; Allen, K.; Grother, P.; Mah, A.; Burge, M.J.; Jain, A.K. Pushing the frontiers of unconstrained face detection and recognition: Iarpa janus benchmark a. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1931–1939. [Google Scholar]

- Yang, S.; Luo, P.; Loy, C.C.; Tang, X. Wider face: A face detection benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 5525–5533. [Google Scholar]

- Cheng, G.; Han, J. A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28. [Google Scholar] [CrossRef]

- Wang, J.; Zhang, P.; Chu, T.; Cao, Y.; Zhou, Y.; Wu, T.; Wang, B.; He, C.; Lin, D. V3det: Vast vocabulary visual detection dataset. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 19844–19854. [Google Scholar]

- Wang, X.; Wang, S.; Tang, C.; Zhu, L.; Jiang, B.; Tian, Y.; Tang, J. Event stream-based visual object tracking: A high-resolution benchmark dataset and a novel baseline. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 19248–19257. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A. Ssd: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Part I 14. Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37. [Google Scholar]

- Papageorgiou, C.P.; Oren, M.; Poggio, T. A general framework for object detection. In Proceedings of the Sixth international conference on computer vision (IEEE Cat. No. 98CH36271), Bombay, India, 4–7 January 1998; IEEE: Piscataway, NJ, USA, 1998; pp. 555–562. [Google Scholar]

- Kang, K.; Li, H.; Yan, J.; Zeng, X.; Yang, B.; Xiao, T.; Zhang, C.; Wang, Z.; Wang, R.; Ouyang, W.; et al. T-cnn: Tubelets with convolutional neural networks for object detection from videos. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 2896–2907. [Google Scholar] [CrossRef]

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Freund, Y.; Schapire, R.; Abe, N. A short introduction to boosting. J.-Jpn. Soc. Artif. Intell. 1999, 14, 1612. [Google Scholar]

- Malisiewicz, T.; Gupta, A.; Efros, A.A. Ensemble of exemplar-svms for object detection and beyond. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 89–96. [Google Scholar]

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1440–1448. [Google Scholar]

- Kvietkauskas, T.; Pavlov, E.; Stefanovič, P.; Birutė, P. The Efficiency of YOLOv5 Models in the Detection of Similar Construction Details. Appl. Sci. 2024, 14, 3946. [Google Scholar] [CrossRef]

- Kumar, D.; Muhammad, N. Object detection in adverse weather for autonomous driving through data merging and YOLOv8. Sensors 2023, 23, 8471. [Google Scholar] [CrossRef] [PubMed]

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. Yolov9: Learning what you want to learn using programmable gradient information. arXiv 2024, arXiv:2402.13616. [Google Scholar]

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1627–1645. [Google Scholar] [CrossRef] [PubMed]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916. [Google Scholar] [CrossRef]

- Yu, H.; Luo, X. Cvt-assd: Convolutional vision-transformer based attentive single shot multibox detector. In Proceedings of the 2021 IEEE 33rd International Conference on Tools with Artificial Intelligence (ICTAI), Washington, DC, USA, 1–3 November 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 736–744. [Google Scholar]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 7464–7475. [Google Scholar]

- Zheng, C. Stack-YOLO: A friendly-hardware real-time object detection algorithm. IEEE Access 2023, 11, 62522–62534. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Chen, X.; Ma, H.; Wan, J. Multi-Scale Attention Mechanism for Remote Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15. [Google Scholar]

- Chhabra, M.; Ravulakollu, K.K.; Kumar, M.; Sharma, A.; Nayyar, A. Improving automated latent fingerprint detection and segmentation using deep convolutional neural network. Neural Comput. Appl. 2023, 35, 6471–6497. [Google Scholar] [CrossRef]

- Chhabra, M.; Sharan, B.; Elbarachi, M.; Kumar, M. Intelligent waste classification approach based on improved multi-layered convolutional neural network. Multimed. Tools Appl. 2024, 81, 1–26. [Google Scholar] [CrossRef]

- Vora, P.; Shrestha, S. Detecting diabetic retinopathy using embedded computer vision. Appl. Sci. 2020, 10, 7274. [Google Scholar] [CrossRef]

- Morar, A.; Moldoveanu, A.; Mocanu, I.; Moldoveanu, F.; Radoi, I.E.; Asavei, V.; Gradinaru, A.; Butean, A. A comprehensive survey of indoor localization methods based on computer vision. Sensors 2020, 20, 2641. [Google Scholar] [CrossRef] [PubMed]

- Sturm, J.; Burgard, W.; Cremers, D. Evaluating egomotion and structure-from-motion approaches using the TUM RGB-D benchmark. In Proceedings of the Workshop on Color-Depth Camera Fusion in Robotics at the IEEE/RJS International Conference on Intelligent Robot Systems (IROS), Vilamoura, Algarve, Portugal, 7–12 October 2012; IEEE: Piscataway, NJ, USA, 2012; Volume 13, p. 6. [Google Scholar]

- Burri, M.; Nikolic, J.; Gohl, P.; Schneider, T.; Rehder, J.; Omari, S.; Achtelik, M.W.; Siegwart, R. The EuRoC micro aerial vehicle datasets. Int. J. Robot. Res. 2016, 35, 1157–1163. [Google Scholar] [CrossRef]

- Schubert, D.; Goll, T.; Demmel, N.; Usenko, V.; Stueckler, J.; Cremers, D. The TUM VI benchmark for evaluating visual-inertial odometry. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1680–1687. [Google Scholar]

- She, Q.; Feng, F.; Hao, X.; Yang, Q.; Lan, C.; Lomonaco, V.; Shi, X.; Wang, Z.; Guo, Y.; Zhang, Y.; et al. Openloris-object: A dataset and benchmark towards lifelong object recognition. arXiv 2019, arXiv:1911.06487. [Google Scholar]

- Ligocki, A.; Jelinek, A.; Zalud, L. Brno urban dataset-the new data for self-driving agents and mapping tasks. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 3284–3290. [Google Scholar]

- Klenk, S.; Chui, J.; Demmel, N.; Cremers, D. Tum-vie: The tum stereo visual-inertial event dataset. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 8601–8608. [Google Scholar]

- Wang, W.; Zhu, D.; Wang, X.; Hu, Y.; Qiu, Y.; Wang, C.; Hu, Y.; Kapoor, A.; Scherer, S. Tartanair: A dataset to push the limits of visual slam. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; IEEE: Piscataway, NJ, USA, 2020; pp. 4909–4916. [Google Scholar]

- Zhao, S.; Gao, Y.; Wu, T.; Singh, D.; Jiang, R.; Sun, H.; Sarawata, M.; Whittaker, W.C.; Higgins, I.; Su, S.; et al. SubT-MRS Dataset: Pushing SLAM Towards All-weather Environments. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 22647–22657.

- Fei, B.; Yang, W.; Chen, W.M.; Li, Z.; Li, Y.; Ma, T.; Hu, X.; Ma, L. Comprehensive review of deep learning-based 3D point cloud completion processing and analysis. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22862–22883.

- Chen, H.; Wang, P.; Wang, F.; Tian, W.; Xiong, L.; Li, H. EPro-PnP: Generalized end-to-end probabilistic perspective-n-points for monocular object pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 2781–2790.

- Byravan, A.; Fox, D. SE3-Nets: Learning rigid body motion using deep neural networks. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 173–180.

- Wang, S.; Clark, R.; Wen, H.; Trigoni, N. DeepVO: Towards end-to-end visual odometry with deep recurrent convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2043–2050.

- Xiao, L.; Wang, J.; Qiu, X.; Rong, Z.; Zou, X. Dynamic-SLAM: Semantic monocular visual localization and mapping based on deep learning in dynamic environment. Robot. Auton. Syst. 2019, 117, 1–16.

- Duan, R.; Feng, Y.; Wen, C.Y. Deep pose graph-matching-based loop closure detection for semantic visual SLAM. Sustainability 2022, 14, 11864.

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Lin, J.; Zhang, F. R3LIVE++: A Robust, Real-time, Radiance reconstruction package with a tightly-coupled LiDAR-Inertial-Visual state Estimator. arXiv 2022, arXiv:2209.03666.

- Wang, R.; Schwörer, M.; Cremers, D. Stereo DSO: Large-scale direct sparse visual odometry with stereo cameras. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 3903–3911.

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer International Publishing: Cham, Switzerland, 2014; pp. 834–849.

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM3: An accurate open-source library for visual, visual–inertial, and multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

- Fabre, W.; Haroun, K.; Lorrain, V.; Lepecq, M.; Sicard, G. From Near-Sensor to In-Sensor: A State-of-the-Art Review of Embedded AI Vision Systems. Sensors 2024, 24, 5446.

- Lygouras, E.; Santavas, N.; Taitzoglou, A.; Tarchanidis, K.; Mitropoulos, A.; Gasteratos, A. Unsupervised human detection with an embedded vision system on a fully autonomous UAV for search and rescue operations. Sensors 2019, 19, 3542.

- Douklias, A.; Karagiannidis, L.; Misichroni, F.; Amditis, A. Design and implementation of a UAV-based airborne computing platform for computer vision and machine learning applications. Sensors 2022, 22, 2049.

- Ortega, L.D.; Loyaga, E.S.; Cruz, P.J.; Lema, H.P.; Abad, J.; Valencia, E.A. Low-Cost Computer-Vision-Based Embedded Systems for UAVs. Robotics 2023, 12, 145.

- Marroquín, A.; Garcia, G.; Fabregas, E.; Aranda-Escolástico, E.; Farias, G. Mobile robot navigation based on embedded computer vision. Mathematics 2023, 11, 2561.

- Nuño-Maganda, M.A.; Dávila-Rodríguez, I.A.; Hernández-Mier, Y.; Barrón-Zambrano, J.H.; Elizondo-Leal, J.C.; Díaz-Manriquez, A.; Polanco-Martagón, S. Real-Time Embedded Vision System for Online Monitoring and Sorting of Citrus Fruits. Electronics 2023, 12, 3891.

| Chapter of this Paper | Category | Algorithm/Datasets | Domain | Key Features | Related Applications |
|---|---|---|---|---|---|
| Chapter 1 | Development Overview | Caltech; KITTI; MOSSE; R-CNN; OVD-SLAM, etc. | Related datasets; visual detection; visual tracking; visual SLAM | History and trend of development and application | Action detection; simultaneous localization and mapping; multiple object tracking; pilotless automobile, etc. |
| Chapter 2 | Traditional Target Detection and Tracking Based on Correlation Filtering | Viola–Jones Detector | Face detection | Cascade classifier; integral image processing | Anti-occlusion long-term tracking framework |
| | | ECO-Tracker | General object tracking | Tracking confidence; peak-to-sidelobe ratio | |
| | | Comparison of multiple tracking algorithms | General object tracking; UAV20 dataset | Comprehensive comparison of performance and energy consumption | |
| Chapter 3 | Deep Learning Object Detection | Review of classification of 1D, 2D, 3D, 3D+ vision, and multimodal sensing datasets | Multi-class datasets | Multi-class datasets | Automated latent fingerprint detection and segmentation using deep convolutional neural network; intelligent waste classification approach based on improved multi-layered convolutional neural network; ACDet: computer vision detection in the medical industry |
| | | PASCAL VOC; UAV, etc. | Well-known public datasets for object detection | Verify and improve algorithm performance | |
| | | YOLO series | General object detection | Single-stage detection; real-time processing | |
| | | CNN series | General object detection | Region Proposal Network; two-stage detection | |
| | | Comparison of multiple deep learning-based detection algorithms | General object detection; MS COCO and EP datasets | Comprehensive comparison of performance and energy consumption | |
| Chapter 4 | Visual SLAM Algorithms | TUM series datasets; SubT-MRS; TartanAir, etc. | Well-known public datasets for visual SLAM | Verify and improve algorithm performance | Visual Framework for Unmanned Factory Applications with Multi-Driverless Robotic Vehicles and UAVs |
| | | LIO-SAM | LiDAR and IMU integration | High accuracy in large-scale and dynamic environments | |
| | | DROID-SLAM | Localization and mapping | Deep learning-based, end-to-end feature extraction and optimization; robust to varying environments | |
| | | Comparison of multiple SLAM algorithms | Localization and mapping; TUM-VI dataset | Comprehensive comparison of performance and energy consumption | |
| | | Discussion of improving model energy efficiency | Model optimization | Model pruning; quantization | |

| Challenge/FPS | ECO-C | LADCF | ECO-HC | ARCF | ADNet | STRCF | STRCF | CFNet | MCCT-H |
|---|---|---|---|---|---|---|---|---|---|
| AC | 0.602① | 0.592② | 0.588③ | 0.573 | 0.569 | 0.562 | 0.557 | 0.559 | 0.544 |
| BC | 0.639① | 0.619③ | 0.617 | 0.621② | 0.567 | 0.558 | 0.556 | 0.542 | 0.553 |
| CM | 0.610① | 0.602② | 0.584 | 0.599③ | 0.571 | 0.569 | 0.555 | 0.540 | 0.528 |
| FM | 0.593② | 0.597① | 0.581③ | 0.578 | 0.559 | 0.544 | 0.544 | 0.549 | 0.533 |
| FO | 0.643① | 0.598③ | 0.607② | 0.583 | 0.581 | 0.574 | 0.565 | 0.555 | 0.548 |
| IV | 0.583① | 0.579② | 0.525 | 0.552 | 0.566③ | 0.524 | 0.523 | 0.527 | 0.495 |
| LR | 0.643① | 0.616② | 0.580 | 0.584③ | 0.579 | 0.574 | 0.568 | 0.555 | 0.551 |
| OV | 0.609① | 0.591② | 0.565 | 0.579③ | 0.557 | 0.541 | 0.551 | 0.545 | 0.523 |
| PO | 0.634① | 0.604② | 0.574 | 0.594③ | 0.565 | 0.559 | 0.549 | 0.541 | 0.527 |
| SO | 0.618① | 0.584③ | 0.595② | 0.579 | 0.561 | 0.563 | 0.544 | 0.534 | 0.503 |
| SV | 0.613② | 0.617① | 0.569 | 0.589③ | 0.582 | 0.567 | 0.552 | 0.529 | 0.529 |
| VC | 0.576① | 0.565③ | 0.567② | 0.553 | 0.563 | 0.556 | 0.538 | 0.529 | 0.509 |
| Ave FPS | 36.4 | 16.1 | 41.2① | 32.8 | 11.7 | 22.8 | 31.2 | 38.1 | 26.8 |

| Dataset Type | Representative Datasets | Main Applications | Advantages |
|---|---|---|---|
| 1D | UCR Time Series Classification Archive [57] | Time series classification | Working with time series data |
| 2D | COCO, Pascal VOC, ImageNet, Cityscapes [58] | Object detection, classification, segmentation | Rich image data |
| 3D | KITTI, ScanNet, ModelNet, ShapeNet [59] | Three-dimensional reconstruction, object detection, scene understanding | Providing spatial information |
| 3D+ Vision | SUN RGB-D, NYU Depth V2, Matterport3D [60] | Indoor scene understanding | Combining RGB images and depth information |
| Multimodal Sensing | ApolloScape, KAIST, nuScenes [61], Waymo Open Dataset [62] | Autonomous driving, pedestrian detection | Integrating multiple sensor data |

| Dataset/Year | Structure | Diversity | Description | Scale | URL (access date) |
|---|---|---|---|---|---|
| TLR [63] 2009 | Focuses on traffic light detection in urban environments with labeled bounding boxes for traffic lights. | Urban traffic scenes from Paris, mainly focused on traffic lights. | Traffic scenes in Paris. | 20,200 frames | https://github.com/DeepeshDongre/Traffic-Light-Detection-LaRA (accessed on 30 December 2017) |
| KITTI [41] 2012 | Comprises annotated images, 3D laser scans, and GPS data; includes multiple sensors such as stereo cameras, laser, and IMU. | Urban and rural driving environments in Germany, diverse in weather, time, and lighting conditions. | Traffic scene analysis in Germany. | 16,000 images | https://www.cvlibs.net/datasets/kitti/raw_data.php (accessed on 1 June 2012) |
| BelgianTSD [64] 2014 | Contains images of 269 traffic sign categories with labeled bounding boxes. | Diverse traffic signs captured in different weather and lighting conditions. | Traffic sign annotations of 269 types, with 3D locations. | 138,300 images | https://btsd.ethz.ch/shareddata/ (accessed on 18 February 2014) |
| GTSDB [65] 2013 | Traffic sign detection dataset with annotations for different traffic sign types. | Diverse road environments, including various climates and weather conditions. | Traffic scenes in different climates. | 2100 images | http://benchmark.ini.rub.de/?section=gtsdb&subsection=news (accessed on 15 July 2013) |
| IJB [66] 2015 | A dataset with various face images and videos for facial recognition and verification tasks. | High diversity in pose, lighting, and background variations. | IJB scenes for recognition and detection tasks. | 50,000 images and video clips | https://www.nist.gov/programs-projects/face-challenges (accessed on 10 June 2015) |
| WiderFace [67] 2016 | A large-scale face detection dataset containing images with a wide range of scales, occlusions, and poses. | High variability in face scale, pose, occlusion, and lighting. | Face detection scenes. | 32,000 images | http://shuoyang1213.me/WIDERFACE/ (accessed on 17 April 2016) |
| NWPU-VHR10 [68] 2016 | A remote sensing dataset containing images with 10 different object classes from high-resolution satellite imagery. | High diversity in urban and rural environments; multiple object types such as aircraft, ships, and vehicles. | Remote sensing detection scenarios. | 4600 images | http://github.com/chaozhong2010/VHR-10_dataset_coco (accessed on 17 July 2019) |
| V3Det [69] 2023 | A dataset designed for large-vocabulary visual detection tasks with a wide range of object categories. | Diverse object categories with detailed annotations, covering many daily and industrial objects. | Vast-vocabulary visual detection dataset with precise annotations. | 245,500 images | https://v3det.openxlab.org.cn/ (accessed on 10 August 2023) |
| EventVOT [70] 2024 | Focuses on high-resolution event-based object tracking, including various categories such as pedestrians, vehicles, and drones. | Event-based video data under different weather conditions and environments. | Contains multiple categories of videos, such as pedestrians, vehicles, drones, and table tennis. | 1141 video clips | https://github.com/Event-AHU/EventVOT_Benchmark (accessed on 5 July 2023) |

| Our Own Datasets (Year) | Scale (Images) | Scale (Objects) | Description and Application |
|---|---|---|---|
| WMTIS (2019) (Weak Military Targets in Infrared Scenes) | 1632 | 1808 | The infrared simulation weak target dataset, constructed based on the infrared characteristics of military targets, includes a series of challenging samples featuring scale variations. These samples represent fighter jets, tanks, and warships across diverse environments, such as desert, coastal, inland, and urban settings. |
| MB (2023) (Medicine Boxes) | 3345 | 9612 | Various types of medicine boxes made of various materials, covering all mainstream types of pharmacies, including challenges such as reflection caused by waterproof plastic film. |
| EP (2022) (Express Packages) | 25,127 | 60,393 | A comprehensive sample covering all types of packages in the logistics and express delivery industry, with sizes ranging from 5 cm to 3 m and heights ranging from 0.5 mm to 1.2 m in various shapes. |
| FPP (2022~2024) (Fully Automated Unmanned Factories and Product Detection and Tracking) | 9716 | 17,435 | Multi-target samples in complex industrial scenes face many challenges, such as easy occlusion, uneven illumination, inconsistent imaging quality, and open scenes. This includes production personnel and samples of various types of products collected through various methods, such as ground robotic vehicles and UAVs. |

| Algorithmic Network | Backbone | AP | FPS | Processing Time (ms/Frame) | GPU Utilization (%) | Memory Usage (MB) | Energy Consumption (Watts) | Energy Efficiency |
|---|---|---|---|---|---|---|---|---|
| Fast R-CNN [72] | VGG-16 | 19.7 | 15 | 66.7 | 75% | 1150 | 120 | 0.00044 |
| Faster R-CNN [73] | VGG-16 | 21.9 | 25 | 40 | 65% | 1110 | 100 | 0.00191 |
| SSD321 [74] | ResNet-101 | 28.0 | 30 | 33.3 | 62% | 1020 | 90 | 0.00467 |
| YOLOv3 [75] | DarkNet-53 | 33.0 | 45 | 22.2 | 58% | 850 | 85 | 0.01685 |
| RefineDet512+ [76] | ResNet-101 | 41.8 | 40 | 25 | 66% | 950 | 88 | 0.01333 |
| NAS-FPN [77] | AmoebaNet | 48.0 | 20 | 50 | 81% | 1400 | 150 | 0.00114 |
| YOLOv5 [78] | CSP-Darknet53 | 48.9 | 60 | 16.7 | 54% | 750 | 75 | 0.06248 |
| YOLOv8 [79] | CSPNet | 51.8② | 65② | 15.4② | 45% | 6600 | 70② | 0.11910 |
| YOLOv9 [80] | CSPNet | 51.4 | 70① | 14.3① | 42%② | 650① | 68① | 0.13550② |
| YOLOv10 [11] | CSPNet | 52.4① | 70① | 14.3① | 40%① | 660② | 70② | 0.13900① |
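
The FPS and Processing Time columns above are related by time per frame = 1000/FPS (in ms). A per-frame energy figure can be derived in the same way. The following is a minimal sketch, not the authors' metric: it assumes that the "Energy Consumption (Watts)" column reports average power draw, so that joules per frame = watts / FPS. The function names are hypothetical, and the sketch does not attempt to reproduce the "Energy Efficiency" column, whose definition is not given in the table.

```python
# Power (W) and throughput (FPS) copied from four rows of the table above.
# Assumption: the "Energy Consumption (Watts)" column is average power draw.
DETECTORS = {
    "Fast R-CNN": (120.0, 15.0),
    "Faster R-CNN": (100.0, 25.0),
    "YOLOv3": (85.0, 45.0),
    "YOLOv10": (70.0, 70.0),
}


def latency_ms(fps: float) -> float:
    """Per-frame processing time in milliseconds for a given throughput."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def joules_per_frame(power_watts: float, fps: float) -> float:
    """Energy per processed frame: 1 W = 1 J/s, so J/frame = W / FPS."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return power_watts / fps


if __name__ == "__main__":
    for name, (power, fps) in DETECTORS.items():
        print(f"{name}: {latency_ms(fps):.1f} ms/frame, "
              f"{joules_per_frame(power, fps):.2f} J/frame")
```

Under this assumption, a detector that is both faster and lower-powered improves twice over, since the frame rate appears in the denominator of the per-frame energy.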

| Model | Smooth mAP | mAP | FLOPS/M | Average Processing Time/ms |
|---|---|---|---|---|
| YOLOv5 | 66.49 | 66.95 | 2.58① | 1.78② |
| YOLOv6 | 62.67 | 62.15 | 4.26 | 1.83 |
| YOLOv8 | 74.86② | 75.25② | 3.08 | 1.79 |
| YOLOv10 | 64.69 | 65.10 | 2.71② | 1.77① |
| ACDet | 79.52① | 81.56① | 3.69 | 1.79 |

| Dataset | Release Date | Scale | Collection Situation | Application Areas |
|---|---|---|---|---|
| TUM RGB-D [92] | 2012 | Over 100 indoor video sequences | RGB Camera/Depth camera | SLAM, 3D Reconstruction, Robotics |
| EuRoC [93] | 2016 | 11 sequences, approx. 50 min | Binocular Camera/RGB Camera/UAV | Visual-Inertial Odometry, SLAM |
| TUM VI [94] | 2018 | Approx. 40 h of indoor and outdoor sequences | RGB Camera/IMU | Visual-Inertial Odometry, SLAM |
| OpenLORIS [95] | 2019 | Over 10 indoor environments | RGB Camera/IMU/Radar | Lifelong Learning, Object Recognition |
| Brno Urban [96] | 2020 | 16 sequences, approx. 100 min | RGB Camera/IMU/Radar/Infrared | Autonomous Driving, Urban Navigation |
| TUM-VIE [97] | 2021 | Approx. 10 h of sequences | Binocular Camera/IMU | SLAM, Robotics, Augmented Reality |
| TartanAir [98] | 2023 | Over 300 km in virtual environments | RGB and RGB-D Camera/Optical flow/Semantic segmentation | SLAM, Navigation, Robotics |
| SubT-MRS [99] | 2024 | Over 100 h of underground exploration videos | RGB Camera/IMU/Radar/Thermal imagery | SLAM, Navigation, Robotics |

| Seq. and Index | ORB-SLAM [106] | DROID-SLAM | R3LIVE++ [107] | DSO [108] | LSD-SLAM [109] | ORB-SLAM3 [110] |
|---|---|---|---|---|---|---|
| Room1 | 0.057 | 0.040 | 0.028① | 0.032② | 0.037 | 0.033 |
| Room2 | 0.051 | 0.027① | 0.037 | 0.066 | 0.029② | 0.033 |
| Room3 | 0.027 | 0.017① | 0.021② | 0.023 | 0.038 | 0.026 |
| Room4 | 0.052 | 0.058 | 0.043 | 0.033② | 0.021① | 0.033② |
| Room5 | 0.030 | 0.026① | 0.051 | 0.027② | 0.055 | 0.037 |
| Room6 | 0.031① | 0.035 | 0.032② | 0.036 | 0.037 | 0.040 |
| Avg RMSE ATE | 0.041 | 0.033① | 0.035 | 0.036 | 0.036 | 0.034② |
| FPS | 35② | 25 | 30 | 20 | 45① | 15 |
| Processing Time (ms/frame) | 28② | 40 | 33 | 50 | 22① | 66 |
| GPU Utilization (%) | 85% | 90% | 88% | 80% | 70%① | 75%② |
| Memory Usage (MB) | 1850② | 2200 | 2100 | 1900 | 1800① | 2250 |
| Energy Consumption (Watts) | 180① | 240 | 200 | 190 | 375 | 185② |
| Energy Efficiency | 0.424① | 0.127 | 0.2375 | 0.1336 | 0.417② | 0.0704 |
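
The "Avg RMSE ATE" row above can be recomputed from the six per-sequence rows as a plain arithmetic mean. The following is a minimal sketch under that assumption; the dictionary and function names are hypothetical, and because the per-sequence values are themselves rounded to three decimals, a recomputed mean can differ from the printed row in the last digit.

```python
from statistics import mean

# Per-sequence RMSE ATE values (Room1..Room6), copied from the table above.
ATE_BY_SYSTEM = {
    "ORB-SLAM":   [0.057, 0.051, 0.027, 0.052, 0.030, 0.031],
    "DROID-SLAM": [0.040, 0.027, 0.017, 0.058, 0.026, 0.035],
    "R3LIVE++":   [0.028, 0.037, 0.021, 0.043, 0.051, 0.032],
    "DSO":        [0.032, 0.066, 0.023, 0.033, 0.027, 0.036],
    "LSD-SLAM":   [0.037, 0.029, 0.038, 0.021, 0.055, 0.037],
    "ORB-SLAM3":  [0.033, 0.033, 0.026, 0.033, 0.037, 0.040],
}


def average_ate(per_sequence):
    """Arithmetic mean of the per-sequence RMSE ATE values."""
    if not per_sequence:
        raise ValueError("need at least one sequence")
    return mean(per_sequence)


def rank_by_average(table):
    """System names sorted from lowest (best) to highest average ATE."""
    return sorted(table, key=lambda name: average_ate(table[name]))


if __name__ == "__main__":
    for name in rank_by_average(ATE_BY_SYSTEM):
        print(f"{name}: {average_ate(ATE_BY_SYSTEM[name]):.4f}")
```

Printing four decimals rather than three makes near-ties between systems visible, which matters when the averages are separated by less than the rounding step of the table.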

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, L.; Li, G.; Xie, W.; Tan, J.; Li, Y.; Pu, J.; Chen, L.; Gan, D.; Shi, W. A Survey of Computer Vision Detection, Visual SLAM Algorithms, and Their Applications in Energy-Efficient Autonomous Systems. Energies 2024, 17, 5177. https://doi.org/10.3390/en17205177
