1. Introduction

The boundary element method (BEM) is an efficient approach to solving elliptic partial differential equations (PDEs) since it only requires the discretization of the boundary of the studied domain. However, the method can still be costly, since its computational cost grows at least quadratically with the number of panels in the mesh of the boundary. This cost comes from the computation of a dense matrix of size N × N (where N is the number of panels in the mesh) and from the solution of the corresponding linear system.

Linear potential flow problems solved with the BEM form the basis of many marine engineering applications, such as the design of wave energy converters (WECs) [1,2]. To increase the economic viability of energy extraction, many wave energy projects are considering the development of “wave farms” composed of many WECs [3,4]. However, the simulation of the linear potential flow of a complete farm with the BEM is often too computationally costly to be solved with the currently available tools. To work around this limitation, some simpler approximate models, such as those of [5,6,7], have been proposed.

Instead, in the present paper, we investigate a more general technique for limiting the computational cost of the simulation of large linear potential flow problems without any particular hypotheses. The main idea is that the interactions between distant panels in the mesh do not need to be computed with the same high accuracy as those between close panels.

One such technique is the fast multipole method (FMM), which is based on a multipole expansion of the considered Green function and a hierarchical clustering of the physical domain. Then, it approximates far-away interactions by grouping panels’ interactions and transferring their influence along the cluster tree. Thus, it provides a fast matrix–vector product in linear complexity without the need to compute far-away interactions. This method has been applied to hydrodynamics in several works in the past, such as those of [8,9,10,11].

An alternative is to use low-rank approximations with hierarchical matrices (ℋ-matrices). Reference works on the topic of ℋ-matrices include [12,13]. Panels are also grouped in clusters, and the interactions between distant clusters are stored in the influence matrix as low-rank blocks. This approach does not directly rely on the explicit expression of the Green function, unlike in the FMM. It only assumes some properties of the Green function, and low-rank approximations are computed by generating a fraction of the coefficients of the approximated blocks. More general matrix structures involving off-diagonal low-rank blocks have been shown to be an efficient representation for many physical applications.
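To make the idea of a low-rank block concrete, the following minimal Python sketch compresses the interaction block between two distant clusters and applies it to a vector. It is an illustration only: the 1/r Rankine kernel, the random point clouds, the rank of 4, and all function and variable names are assumptions made for this example and are not taken from Capytaine. The truncated SVD is used for simplicity, although it needs the full block, which a practical method would avoid generating.

```python
import numpy as np

def rankine_block(targets, sources):
    """Dense block of the 1/r kernel between two point clouds."""
    diff = targets[:, None, :] - sources[None, :, :]
    return 1.0 / np.linalg.norm(diff, axis=2)

rng = np.random.default_rng(0)
# Two well-separated clusters of 200 points each (hypothetical geometry).
cluster_a = rng.random((200, 3))
cluster_b = rng.random((200, 3)) + np.array([5.0, 0.0, 0.0])

block = rankine_block(cluster_a, cluster_b)

# Rank-r factorization block ~ U @ V obtained from a truncated SVD.
rank = 4
u, s, vt = np.linalg.svd(block, full_matrices=False)
U = u[:, :rank] * s[:rank]   # shape (200, rank)
V = vt[:rank, :]             # shape (rank, 200)

# Matrix-vector product using only the two thin factors.
x = rng.random(200)
y_full = block @ x
y_lowrank = U @ (V @ x)

stored_full = block.size
stored_lowrank = U.size + V.size
rel_err = np.linalg.norm(y_full - y_lowrank) / np.linalg.norm(y_full)
print(stored_lowrank, "coefficients instead of", stored_full)
print("relative error of the product: %.1e" % rel_err)
```

The product with the factors costs a number of operations proportional to the stored coefficients, which is why the storage count is a reasonable proxy for the cost of an iterative solver.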

Unlike most other BEM problems, the Green function of the linear potential flow problem is complex to express and to compute (see [14] for a discussion of the infinite-depth case; the finite-depth case is even more difficult). Unlike the multipole expansion required for the FMM (as implemented in, e.g., [10]), the low-rank approximation does not require any particular explicit expression of the Green function. It is, thus, easier to adapt low-rank approximation strategies to the variety of implementations of the Green functions found in the literature.

Let us also briefly mention the precorrected Fast Fourier Transform (pFFT) method, which can also be used to improve the performance of BEM problems. Applications to linear potential flow include [15,16].

Despite their popularity, low-rank compression techniques have never (to our knowledge) been applied to linear potential flow problems. The goal of the present study is to test their performance using a prototype implemented in the open-source linear potential solver Capytaine [17], which is derived from Nemoh (version 2) [18,19]. The implementation of the prototype of low-rank compression takes inspiration from the open-source codes of [20,21].

In the next section, the theory of the BEM resolution of a linear potential flow problem is briefly recalled. An application of low-rank compression is then presented. In Section 3, some numerical results are presented in order to test the efficiency of the method.

3. Numerical Experiments

Given the complexity of the Green function for linear potential flow, the best way to assess the validity of low-rank compression is to experimentally test it in real conditions in an existing BEM solver. For this purpose, the open-source BEM solver Capytaine [17] was used.

Unless stated otherwise, all experiments were performed by computing the full expression of the Green function (4) and not by using the tabulation and the asymptotic expression (6).

3.1. Two Floating Hemispheres at Varying Distance

Let us consider two identical floating spheres with a diameter of 1 m, with their centers at the free surface, separated by a distance d, and discretized with N panels each. (Since only the immersed part of the body is discretized, the mesh is actually two half-spheres at distance d.) Unless stated otherwise, we consider a mesh resolution of panels, a wavelength of m, and a water depth of .

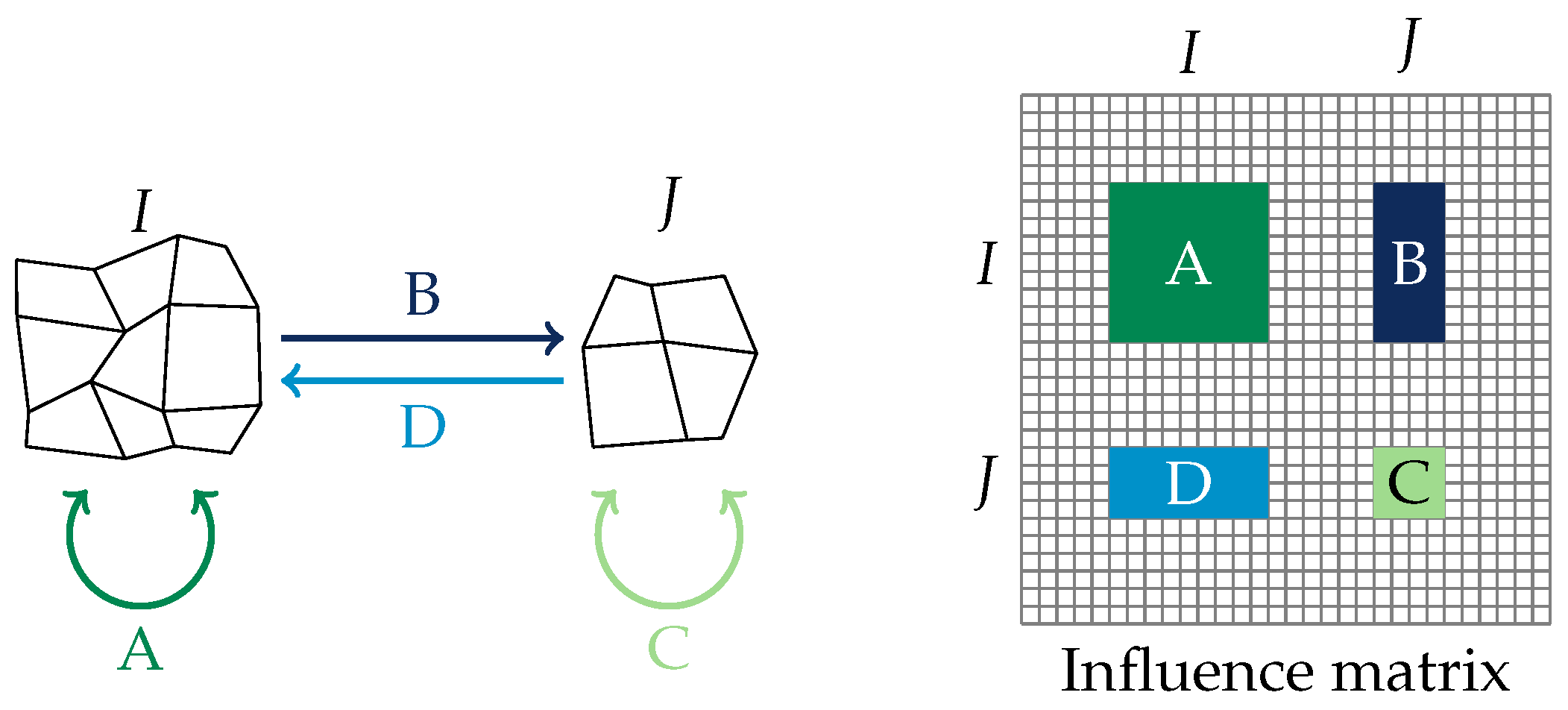

All interaction matrices S and K are of size $2N \times 2N$ and can be decomposed into four blocks: two diagonal blocks A encoding the interactions of a sphere with itself and two non-diagonal blocks B encoding the interactions of a sphere with the other. Since the spheres are identical, the blocks are actually identical, and the matrices have the following form:

$$\begin{pmatrix} A & B \\ B & A \end{pmatrix}.$$

For this numerical experiment, the non-diagonal blocks B are approximated with low-rank blocks of given rank r, $\tilde{B}_r$, using either the singular value decomposition (SVD) or the adaptive cross-approximation (ACA). The resulting approximated matrix $\tilde{M}_r$ reads

$$\tilde{M}_r = \begin{pmatrix} A & \tilde{B}_r \\ \tilde{B}_r & A \end{pmatrix}.$$

The relative error of this approximation is evaluated as follows:

$$\frac{\| M - \tilde{M}_r \|_F}{\| M \|_F},$$

where $M$ stands for either S or K and $\| \cdot \|_F$ denotes the Frobenius norm.

The same procedure is repeated for several distances d between the spheres. Each time, the relative error for each rank r is computed for both matrices S and K using both the SVD and the ACA.
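The procedure can be mimicked outside of a BEM solver with the following minimal sketch. It is not the Capytaine experiment: the kernel is a softened Rankine kernel 1/sqrt(r² + ε²) standing in for the actual Green function, panels are replaced by random points on a half-sphere, only the SVD is used, and all names and parameter values are hypothetical. Because the second point cloud is a plain translation of the first, the lower-left block of this toy matrix is the transpose of the upper-right one.

```python
import numpy as np

def hemisphere_points(n, radius=0.5, center_x=0.0, seed=0):
    """Random points on the immersed half (z <= 0) of a sphere."""
    rng = np.random.default_rng(seed)
    v = rng.normal(size=(n, 3))
    v /= np.linalg.norm(v, axis=1, keepdims=True)
    v[:, 2] = -np.abs(v[:, 2])
    return radius * v + np.array([center_x, 0.0, 0.0])

def softened_rankine(targets, sources, eps=0.05):
    """Stand-in kernel 1/sqrt(r^2 + eps^2), finite for coincident points."""
    diff = targets[:, None, :] - sources[None, :, :]
    return 1.0 / np.sqrt(np.sum(diff**2, axis=2) + eps**2)

def truncated_svd(block, rank):
    """Best approximation of `block` of the given rank (Frobenius norm)."""
    u, s, vt = np.linalg.svd(block, full_matrices=False)
    return (u[:, :rank] * s[:rank]) @ vt[:rank, :]

def relative_error(n_panels, distance, rank):
    """Relative Frobenius error of the 2x2 block matrix after compression."""
    first = hemisphere_points(n_panels, center_x=0.0)
    second = hemisphere_points(n_panels, center_x=distance)
    A = softened_rankine(first, first)    # self-interaction block
    B = softened_rankine(first, second)   # interaction with the other body
    full = np.block([[A, B], [B.T, A]])
    B_r = truncated_svd(B, rank)
    approx = np.block([[A, B_r], [B_r.T, A]])
    return np.linalg.norm(full - approx) / np.linalg.norm(full)

for distance in (2.0, 4.0, 8.0):
    errors = [relative_error(100, distance, r) for r in range(5)]
    print(distance, ["%.1e" % e for e in errors])
```

Only the off-diagonal blocks are compressed, so the error is driven entirely by how well the interaction between the two bodies is captured.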

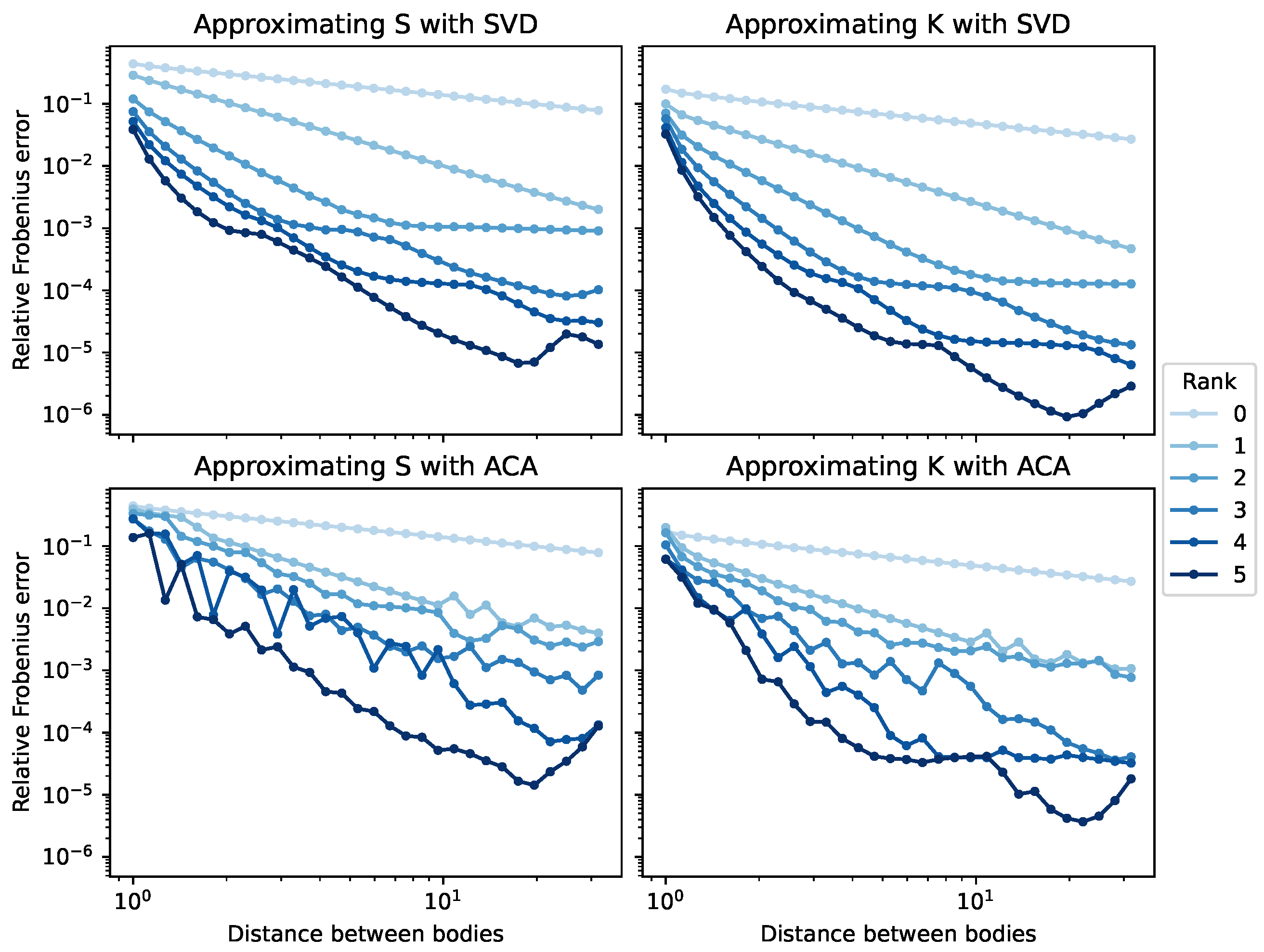

3.1.1. Influence of the Distance and the Rank on the Error

For the first experiment, we want to study the influence of the rank on the relative Frobenius error as a function of the distance. The results are presented in Figure 3.

Firstly, as expected, the error of the low-rank compression decreases when the distance between the two spheres increases. The error also decreases when the rank of the low-rank compression matrix increases. A matrix of rank 0 (that is, a zero matrix that completely neglects the interaction between the two spheres) is a poor approximation. However, a matrix of rank 2 or 3 is sufficient to have an error of less than 1%. For a block, a compression of rank 2 stores 4% as many coefficients as the full block, meaning that we can reach 99% accuracy with less than 10% of the computation.
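The storage figure quoted above can be checked with a short count, sketched here under two assumptions that are not stated explicitly in the text: the block is square with $n$ rows and columns, and a rank-$r$ approximation is stored as two factors $U$ and $V$ of size $n \times r$ (the symbols $n$, $U$, and $V$ are introduced for this illustration only).

$$\underbrace{n r}_{U} + \underbrace{n r}_{V} = 2 r n \quad\Longrightarrow\quad \frac{2 r n}{n^{2}} = \frac{2 r}{n}, \qquad r = 2:\ \frac{4}{n} = 4\% \iff n = 100.$$

Under these assumptions, the 4% ratio is consistent with a block of 100 rows and 100 columns. The count also shows that, for a fixed rank, the stored fraction shrinks as the block grows.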

The errors in the compression of S and K are fairly similar. Both matrices are equally difficult to approximate with such a method.

Finally, we can check the efficiency of the ACA with respect to the optimal low-rank compression obtained with the SVD. The compression obtained from the ACA is noisier and slightly less accurate than that obtained with the SVD, but its error still decreases with the rank and the distance in the same way as that of the SVD.
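For readers unfamiliar with the ACA, a minimal sketch of one common variant (partial pivoting) is given below. It is a simplified, real-valued illustration and not the implementation used in Capytaine: the 1/r kernel, the point clouds, the stopping rule based on an estimate of the Frobenius norm, and all names are assumptions made for this example. The key point is that only a few rows and columns of the block are ever evaluated.

```python
import numpy as np

def aca(get_row, get_col, n_rows, n_cols, tol=1e-4, max_rank=50):
    """Adaptive cross-approximation with partial pivoting (real-valued sketch).

    Returns U (n_rows x k) and V (k x n_cols) such that block ~ U @ V,
    evaluating only k rows and k columns of the block.
    """
    us, vs = [], []
    used_rows = []
    norm2 = 0.0   # running estimate of ||U @ V||_F^2
    i = 0         # first pivot row (arbitrary choice)
    for _ in range(min(max_rank, n_rows, n_cols)):
        used_rows.append(i)
        # Row i of the residual: block row minus the current approximation.
        row = np.array(get_row(i), dtype=float)
        for u, v in zip(us, vs):
            row -= u[i] * v
        j = int(np.argmax(np.abs(row)))
        pivot = row[j]
        if abs(pivot) < 1e-14:
            break  # simplification: a complete version would try another row
        # Column j of the residual.
        col = np.array(get_col(j), dtype=float)
        for u, v in zip(us, vs):
            col -= v[j] * u
        u_new, v_new = col, row / pivot
        # Update the norm estimate with the new rank-one term.
        term = (u_new @ u_new) * (v_new @ v_new)
        norm2 += term + 2.0 * sum((u @ u_new) * (v @ v_new)
                                  for u, v in zip(us, vs))
        us.append(u_new)
        vs.append(v_new)
        if np.sqrt(term) <= tol * np.sqrt(max(norm2, 0.0)):
            break
        # Next pivot row: largest entry of the new column among unused rows.
        candidates = np.abs(u_new)
        candidates[used_rows] = -1.0
        i = int(np.argmax(candidates))
    if not us:
        return np.zeros((n_rows, 0)), np.zeros((0, n_cols))
    return np.column_stack(us), np.vstack(vs)

rng = np.random.default_rng(1)
targets = rng.random((150, 3))
sources = rng.random((150, 3)) + np.array([5.0, 0.0, 0.0])

def kernel_row(i):
    return 1.0 / np.linalg.norm(targets[i] - sources, axis=1)

def kernel_col(j):
    return 1.0 / np.linalg.norm(targets - sources[j], axis=1)

U, V = aca(kernel_row, kernel_col, 150, 150, tol=1e-4)

# Dense block, built here only to check the approximation.
dense = 1.0 / np.linalg.norm(targets[:, None, :] - sources[None, :, :], axis=2)
aca_error = np.linalg.norm(dense - U @ V) / np.linalg.norm(dense)
print("rank:", U.shape[1], "relative error: %.1e" % aca_error)
```

Since the stopping rule relies on an estimate rather than on the true residual, the error actually reached can differ somewhat from the requested tolerance, which is one plausible source of the noisier behaviour of the ACA.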

3.1.2. Influence of the Distance and the Wavelength on the Error

In this experiment, we study the influence of the wavelength. The rank of the low-rank compression is now fixed to 2, while several wavelengths between m and m are now tested. (Smaller wavelengths would require a finer mesh to be accurately captured.) The Rankine term of the Green function alone (that is, without the frequency-dependent wave term) is also tested. The results are presented in Figure 4.

The most efficient compression is obtained when the Green function is only the Rankine kernel. For the full Green functions with a wave term, the error always decreases more slowly with the distance than for the Rankine term.

As expected from the discussion in Section 2.2.2, the compression is more efficient for long wavelengths, that is, low frequencies.

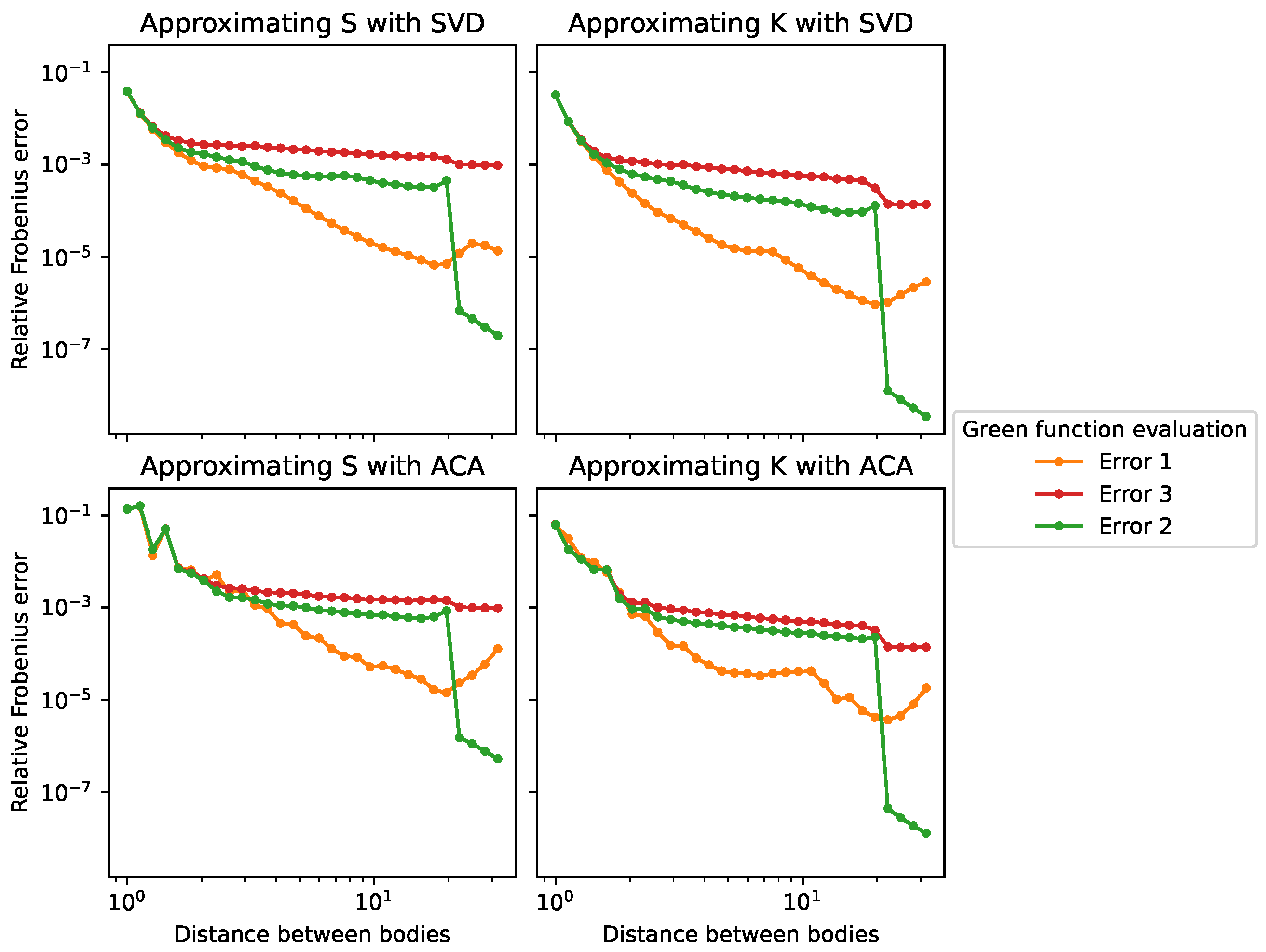

3.1.3. Influence of the Computation Method for the Green Function on the Error

Now, we study the role of the method used to compute the Green function. In the previous experiments, the exact expression of the Green function (4) was used. However, the default method in Capytaine is not to compute this costly exact expression, but instead to use approximations. For close panels, values are interpolated from a pre-computed table of exact expressions (4). For distant panels, the asymptotic expression (6) is used.

When introducing two independent ways to approximate the matrices, there are more ways to compute the error. In Figure 5, the three errors that we consider are summarized in a diagram. Error 1 is the error due to the low-rank compression of the matrix computed with the exact Green function (4), as presented in the previous graphs. Since the goal of this study is to evaluate the performance of the low-rank compression, we are interested in error 2, which is the error due to the low-rank compression when working with the approximate Green function. However, since the end goal would be to use both the low-rank compression and the Green function approximation, we also plot error 3, which incorporates the error due to the approximation of the Green function.

The results of the comparison of the exact and the approximate expressions are presented in Figure 6. Here, the rank of the compression is fixed to 5 (as the difference is less visible for lower ranks), and the wavelength is fixed to 1 m.

A jump is clearly visible in the error of the full approximate matrix with respect to the low-rank approximate matrix (error 2). This corresponds to the switch from the tabulated approximation to the asymptotic approximation, which occurs near . For distances below , the approximation of the tabulated Green function shows worse results than those of the exact Green function. One can suppose that the interpolation in the tabulation introduces spurious “information” in the matrix that is not accurately captured with the low-rank compression. On the contrary, for distances above , the approximation of the asymptotic Green function shows better results than those of the exact Green function. Similarly, one can suppose that the asymptotic expression contains less “information” than the exact Green function and is, thus, easier to compress with a low-rank block.

The errors due to both the compression and the approximation (error 3) are always higher. The use of an approximate formula for the Green function leads to relative errors of the order of . This means that it is useless to try to reach a low-rank compression error of less than when working with the approximate Green function, as the approximation will be the bottleneck. The tuning of Delhommeau’s approximation of the Green function as used in Capytaine is outside the scope of this study.

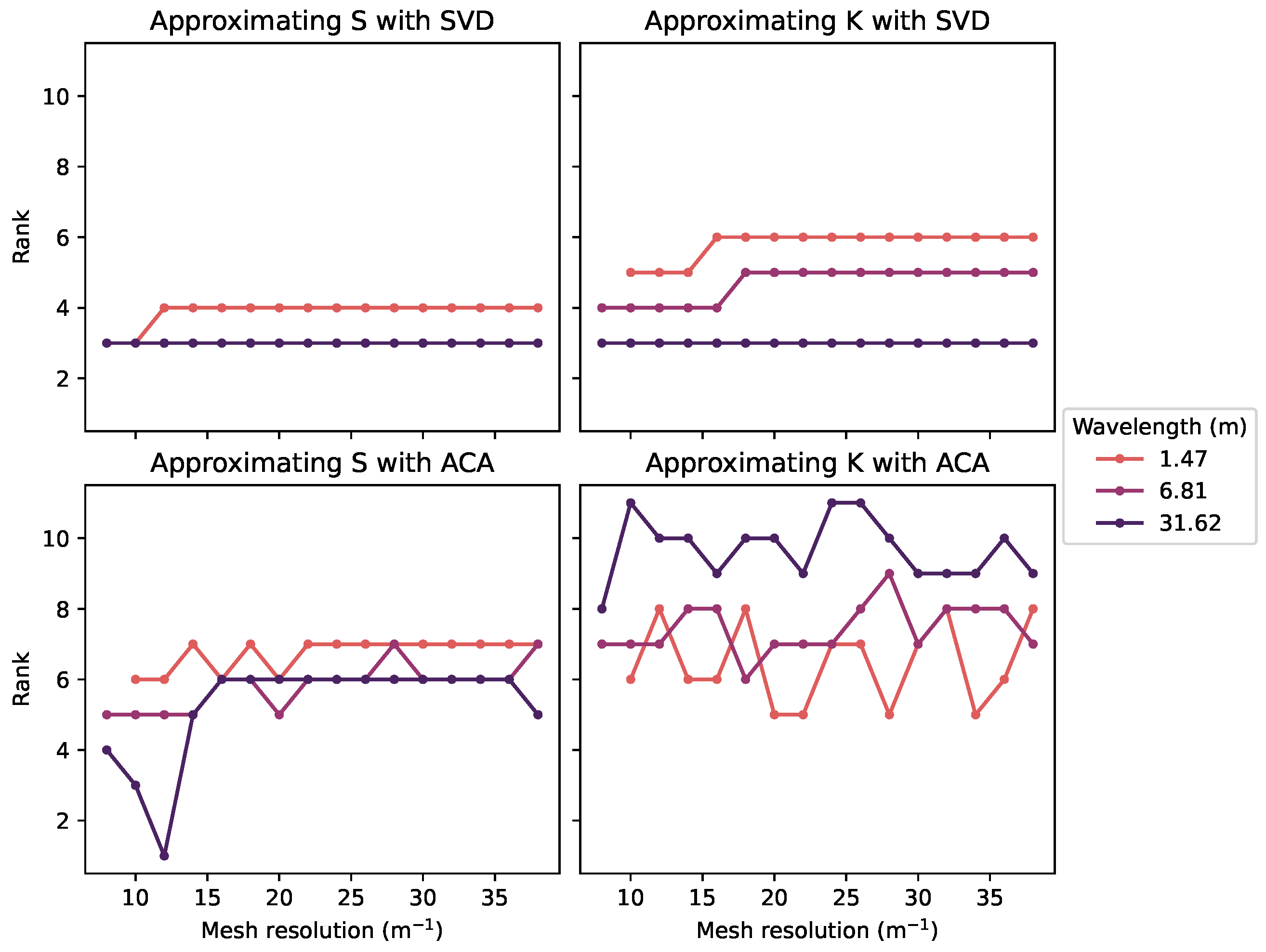

3.1.4. Influence of the Resolution on the Rank

In all of the previous experiments, the rank of the compression was fixed, and then the error was computed. In practice, the opposite is done: A tolerance is set on the error, and then the SVD or ACA searches for the lowest rank that allows the compression of the matrix block with such an error. In all test cases from now on, the tolerance will be fixed instead of the rank.
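When the SVD is available, the search for the lowest admissible rank reduces to inspecting the tail of the singular values. The sketch below assumes that the tolerance is understood as a relative error in the Frobenius norm of the block; this choice of norm, like the function name `rank_for_tolerance`, is an assumption made for the illustration and may differ from the criterion actually used in the solver.

```python
import numpy as np

def rank_for_tolerance(singular_values, tol):
    """Smallest rank r whose truncated SVD has a relative Frobenius
    error of at most `tol`."""
    s2 = np.asarray(singular_values, dtype=float) ** 2
    total = s2.sum()
    # tail[r] = sum of s_k^2 for k >= r, i.e. the squared error of the
    # rank-r truncation; the last entry (full rank) is exactly zero.
    tail = np.concatenate([np.cumsum(s2[::-1])[::-1], [0.0]])
    acceptable = np.sqrt(tail / total) <= tol
    return int(np.argmax(acceptable))  # index of the first acceptable rank

singular_values = np.array([1.0, 0.1, 0.01, 0.001])
rank_loose = rank_for_tolerance(singular_values, tol=0.5)
rank_tight = rank_for_tolerance(singular_values, tol=0.05)
print(rank_loose, rank_tight)
```

With the ACA, the singular values are not known, and the same role is played by the stopping criterion of the iterative process.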

In this new test case, we experimentally test if the oscillatory kernel has the same behavior as that theoretically predicted by [28] for the Helmholtz equation. In particular, the influence of the resolution of the mesh on the rank of the approximation is studied. The distance is fixed to 4 m, and the tolerance is fixed to . For several wavelengths and mesh resolutions (such that the mesh resolution is sufficient to capture waves of that wavelength), a low-rank approximation is built using the given tolerance.

The results are presented in Figure 7. As already noticed in Section 3.1.2, the lower-wavelength cases are harder to compress with low-rank blocks. As predicted in [28], the rank is basically constant as the mesh resolution increases when the approximation is computed with the SVD. The results of the ACA are noisier, but the same tendency can be assumed.

For a given geometry and a given wavelength, the rank of the approximation for a given error can be assumed to be constant with the resolution of the mesh. Consequently, the cost of the low-rank blocks only grows linearly with the number of panels N. In other words, providing a resolution that is too high for the desired accuracy will not be as much of a burden on the performance as it would be for a full matrix.

3.2. Array of Random Floating Spheres

In this section, we introduce a new experiment that is closer to a real application. Forty hemispheres with random radii (between and 1 ) are positioned randomly on a free surface in a square with a size of . (When a collision between two spheres is detected, the random positioning algorithm is restarted until no collision is found.) Depending on the size of the spheres, the full problem is composed of around 5000 panels, which is large enough to be worth approximating with low-rank blocks while being small enough to also be solvable with a full matrix with a reasonable amount of RAM (circa 750 with Capytaine’s default settings). See Figure 8 for a view of the mesh, as well as an example of a result.

The accuracy of the method is not computed on the matrix itself anymore, but rather on the physical output of the solver. For this purpose, the diffraction force $F_i$ on body i with respect to the heave degree of freedom (that is, the vertical component of the diffraction force on the body) is chosen. The reference solution is computed using full matrices, and the relative error in the diffraction force is computed as

$$\frac{\| \tilde{F} - F \|_\infty}{\| F \|_\infty},$$

where $\tilde{F}$ is the diffraction force vector computed with the low-rank block compression, $F$ is the reference diffraction force vector, and $\| \cdot \|_\infty$ is the infinity norm.

The range of wavelengths of interest is chosen to be between 6 m and 30 m. Shorter wavelengths are not studied in order to avoid the irregular frequencies appearing around wavelengths of 2 m.

The interaction matrices can be seen as 40 block × 40 block matrices, where each block is the interaction between two of the hemispheres in the domain. A block in this matrix is computed with a low-rank approximation if the admissibility criterion (

15) is satisfied, where

is set here to

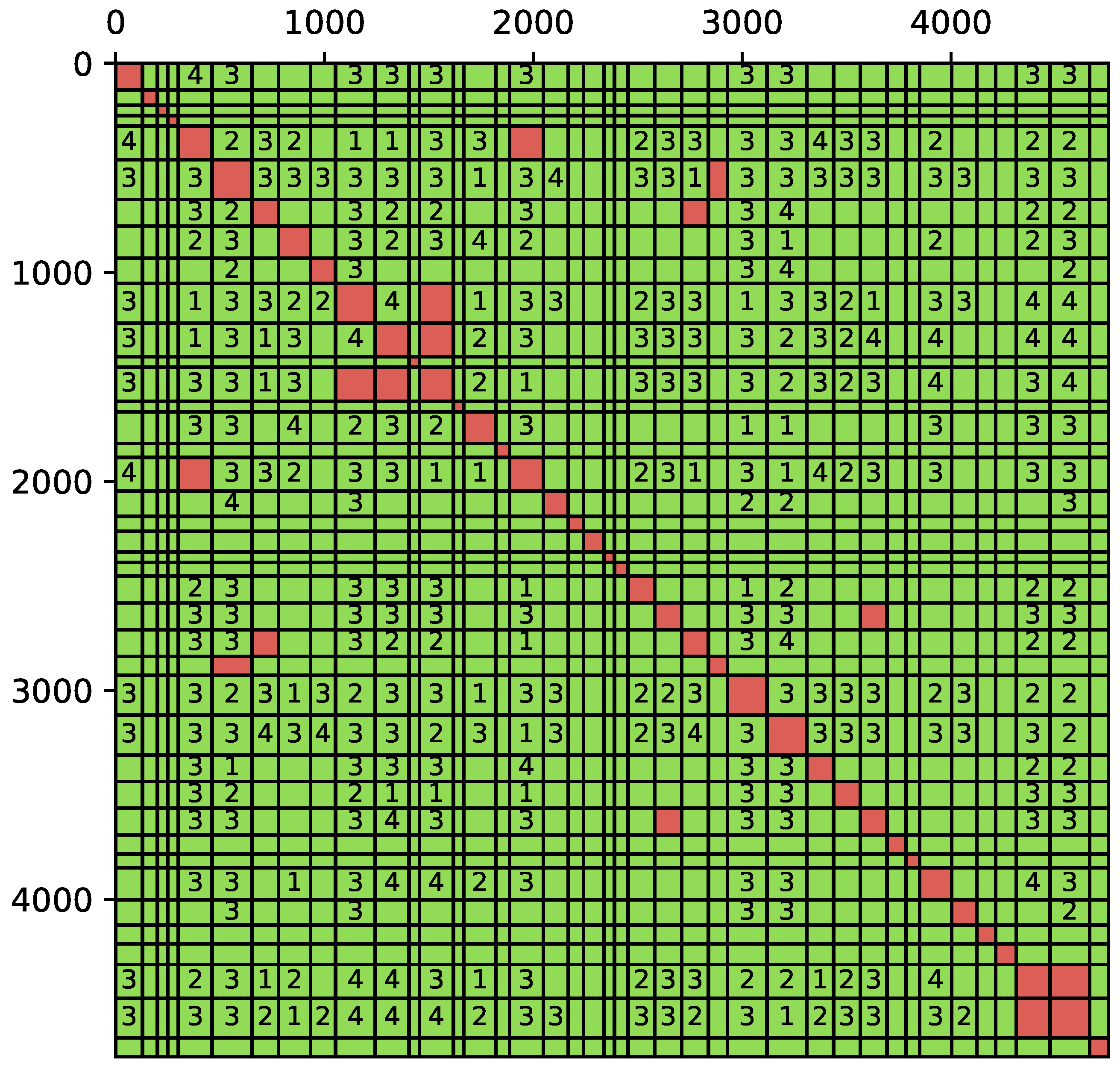

. See

Figure 9 for a graphical representation of such a matrix. When computing the low-rank approximation with the ACA, a tolerance on the error has to be set as a stopping criterion for the iterative process. A higher tolerance leads to sparser matrices with higher errors, and a lower tolerance leads to denser matrices with lower errors. Three different relative error tolerances are tested in this experiment:

,

, and

.

Aside from the relative error in the diffraction force, the main output of interest is the density of the matrix, that is, the ratio of the number of coefficients stored in the matrix to the size of the matrix. The density of the reference method with a full matrix is 1. The density is strongly correlated with the gain in speed and memory of using the compressed matrix.
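The density can be computed from the block structure alone, as in the following minimal sketch. Two points are assumptions and not taken from the paper: the admissibility test used here (largest body diameter no greater than η times the gap between bounding spheres) is one form commonly found in the hierarchical-matrix literature and may differ from criterion (15), and the function names, the value of η, and the ranks are hypothetical.

```python
import numpy as np

def is_admissible(c1, r1, c2, r2, eta):
    """Assumed criterion: max diameter <= eta * gap between bounding spheres."""
    gap = np.linalg.norm(np.asarray(c1) - np.asarray(c2)) - r1 - r2
    return bool(gap > 0 and 2.0 * max(r1, r2) <= eta * gap)

def block_density(panel_counts, centers, radii, ranks, eta):
    """Ratio of stored coefficients to the size of the full matrix.

    ranks[i][j] is the rank used for block (i, j) when it is compressed.
    """
    stored = 0
    for i, ni in enumerate(panel_counts):
        for j, nj in enumerate(panel_counts):
            if i != j and is_admissible(centers[i], radii[i],
                                        centers[j], radii[j], eta):
                stored += ranks[i][j] * (ni + nj)  # two thin factors
            else:
                stored += ni * nj                  # full block
    return stored / sum(panel_counts) ** 2

# Two bodies of 100 panels each, 10 m apart, off-diagonal blocks of rank 2.
counts = [100, 100]
centers = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
radii = [0.5, 0.5]
ranks = [[0, 2], [2, 0]]
density_compressed = block_density(counts, centers, radii, ranks, eta=1.0)
density_full = block_density(counts, centers, radii, ranks, eta=0.01)
print(density_compressed, density_full)
```

In this two-body example the diagonal blocks are always stored in full, so the density cannot drop below 0.5; with many bodies, the diagonal blocks represent a much smaller share of the matrix.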

For each wavelength and each ACA tolerance, the simulation is repeated five times with different random generations of the array of floating spheres. The main results are presented in Figure 10. As expected, a lower tolerance in the ACA leads to a lower error and a denser matrix. By choosing a tolerance of , we observe that the error is below 1%, while the density of the matrix is around 10%. In other words, the solver reaches 99% accuracy with only 10% of the coefficients.

The effect of the wavelength is not as clear. With a lower tolerance , the higher frequencies (lower wavelength) seem to lead to denser matrices, which is consistent with the discussion in Section 3.1.2. With a higher tolerance, the wavelength does not have a clear effect on the result.

Note that with the block low-rank matrix structure (as illustrated in Figure 9), the complexity of the method is still , where is the number of bodies. The complexity could be reduced to by using a more complex block structure, such as the ℋ-matrix structure described in Section 2.2.4. The optimization of the matrix block structure is outside the scope of this study.

4. Discussion and Conclusions

This paper is about the suitability of low-rank approximation for linear potential flow. Due to the complexity of the expression of the Green function, the theoretical sufficient condition for compressibility with low-rank blocks could not be proved. Thus, we relied on numerical experiments to test the efficiency of the low-rank approximation.

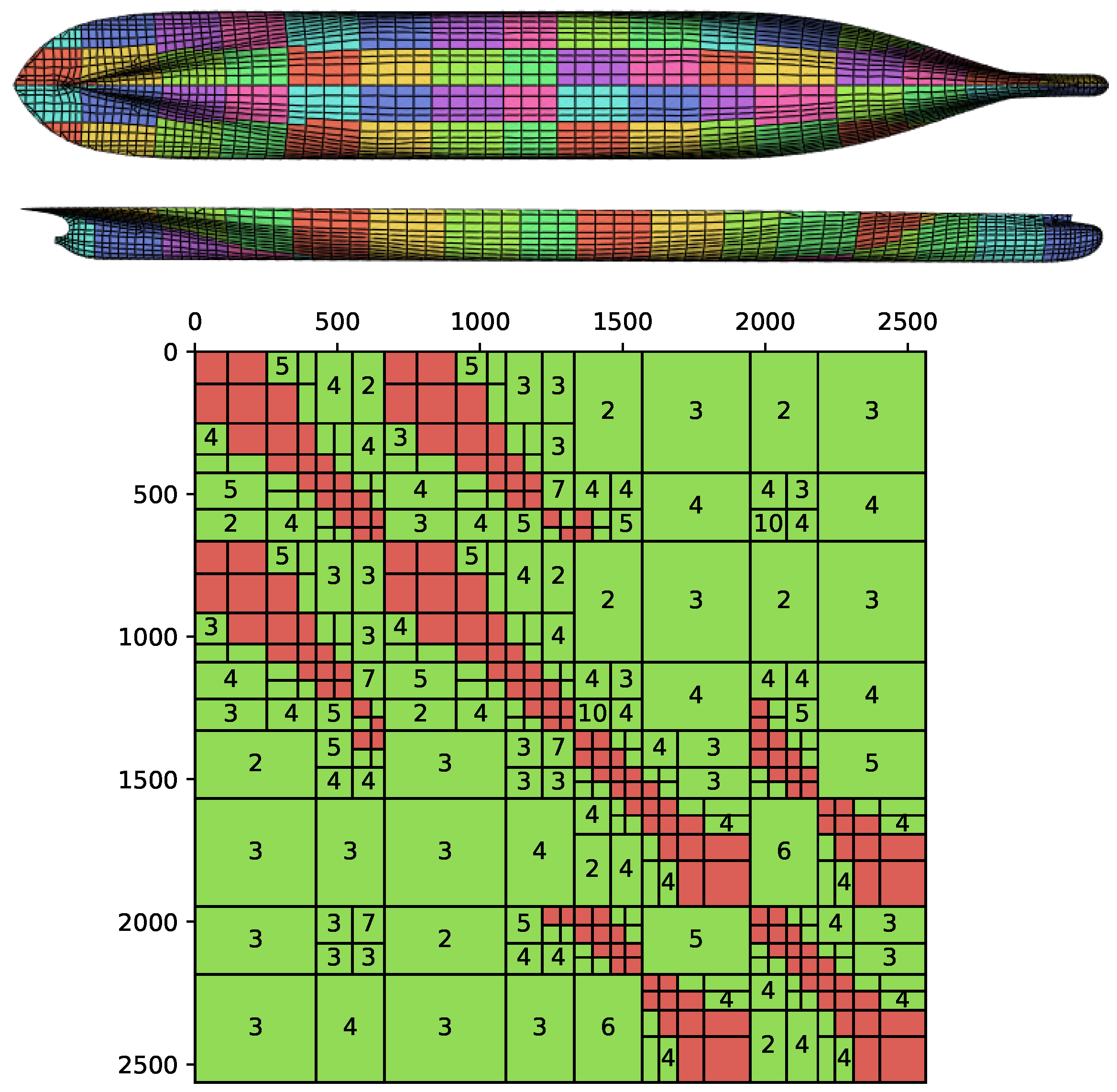

In the numerical examples presented in Section 3, the clusters of panels used to define the blocks of the matrix corresponded to disjoint bodies. This is the most natural way to split the mesh when studying problems such as those of farms of wave energy converters. However, having each cluster matching one and only one disjoint body is not a requirement of the method. For instance, a mesh with a single large floating body can be split into clusters of panels, and the interaction between these distant clusters can be approximated with low-rank blocks. An illustration is given in Figure 11, in which the mesh of a realistic ship (provided by the authors of [33]) is split into hierarchical clusters of panels, which are used to build an ℋ-matrix. In this illustrative example of possible further applications, the matrices are approximated with a density of ∼21%, while the relative error on the heave component of the diffraction force is around 2%.

Only infinite-depth results have been presented in this paper. Early tests with the finite-depth Green function showed similar efficiency for the low-rank approximation. However, the influence of the computation of the Green function, as presented in Section 3.1.3, would need to be reconsidered with the additional approximation that was performed to compute the finite-depth Green function with Delhommeau’s method [18].

The prototype implementation in Capytaine is available to all users of the code and can be used, e.g., to solve very large problems that would not otherwise fit in RAM. However, for most small- to medium-sized problems, its usage is not recommended, as it is often less performant than the default full-matrix method. This lower performance of the more advanced method can be explained by two main factors.

Firstly, the handling of matrices with low-rank blocks requires more bookkeeping than that required by plain full matrices. This is critical in the current implementation of Capytaine, as this bookkeeping is implemented in Python, which is a relatively slow programming language. The block low-rank structure was considered in Section 3 instead of ℋ-matrices with the hope of reducing this bookkeeping cost. Due to our naive implementation, this cost is still disproportionate, and for this reason, no time benchmark is presented in this paper. A solution would be to use another library with a low-level language as a dependency for dealing with the compression and to additionally use ℋ-matrices, which will also offer better performance in terms of complexity due to their hierarchical nature. Examples of such libraries are Hlib (http://hlib.org (accessed on 3 January 2024)), HiCMA (https://ecrc.github.io/hicma/ (accessed on 3 January 2024)), hmat-oss (https://github.com/jeromerobert/hmat-oss (accessed on 3 January 2024)), and Htool (https://htool-documentation.readthedocs.io (accessed on 3 January 2024)). The latter is developed by one of the authors. Due to the modular structure of Capytaine, it is possible to integrate this kind of library with it, with the main technical difficulty being the interface between the different programming languages involved.

Secondly, the low-rank block compression interferes with other optimizations used by Capytaine. As an example, the evaluation of the Green function for S and the evaluation of its derivative for K share much common code, and the two matrices are built together by Capytaine. Using matrix compressions would require coupling the compression of both matrices, which, to our knowledge, has never been done. Preliminary tests showed that the rows and columns chosen by the ACA for one of the two matrices gave satisfying results when approximating the second matrix.

Another example is the choice of the linear solver; the low-rank block prototype presented here used a GMRES solver. The default method in Capytaine is to compute the LU decomposition once and apply it for all of the right-hand sides associated with the matrix. When GMRES is used with the block low-rank approximation, it currently restarts the resolution of the linear system from scratch for each right-hand side. One possibility to overcome this issue is to factorize the block low-rank matrix, which can be done efficiently depending on the maximal rank. This approach was recently studied in the context of finite-element matrices [31,32]. Another possibility is to use the more involved ℋ-matrix format, which, unlike other data-sparse formats, supports all matrix operations, in particular factorizations, while having even better theoretical complexities. Thus, to solve problems with multiple right-hand sides, a solution to avoid iterative solvers would be to use the approximate LU factorization associated with ℋ-matrices; see [13] (Section 7.6) or [12] (Section 2.7). An alternative solution would be to use a more efficient iterative solver, and, in the case of multiple right-hand sides, block iterative methods have been investigated (see [34] and [35] (Section 6.12)). Instead of solving each right-hand side separately, these methods solve them all at the same time using matrix–matrix operations, which is more efficient than several matrix–vector operations, and this allows a larger search space to be built. These methods are also usually coupled with recycling techniques to reuse some previous information built before a restart or in a previous solve; see [36,37,38]. Finally, the performance of the GMRES could be improved by the use of a good preconditioner [39].
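The difference between the two solver strategies contrasted above can be illustrated with SciPy on a small dense stand-in problem. This sketch does not use Capytaine: the random well-conditioned matrix, the problem sizes, and the variable names are hypothetical, and GMRES is called with SciPy's default settings. In a compressed setting, the `matvec` function is where the product with the low-rank blocks would be plugged in.

```python
import numpy as np
from scipy.linalg import lu_factor, lu_solve
from scipy.sparse.linalg import LinearOperator, gmres

rng = np.random.default_rng(0)
n, n_rhs = 200, 5
# Well-conditioned stand-in for an influence matrix (hypothetical data).
matrix = np.eye(n) + 0.1 * rng.standard_normal((n, n)) / np.sqrt(n)
rhs = rng.standard_normal((n, n_rhs))

# Direct approach: factorize once, reuse the factors for every right-hand side.
lu, piv = lu_factor(matrix)
x_direct = lu_solve((lu, piv), rhs)

# Iterative approach: only matrix-vector products are required, but each
# right-hand side is solved from scratch.
counter = {"matvec": 0}

def matvec(v):
    counter["matvec"] += 1
    return matrix @ v

operator = LinearOperator((n, n), matvec=matvec, dtype=matrix.dtype)
x_iter = np.empty_like(rhs)
for k in range(n_rhs):
    solution, info = gmres(operator, rhs[:, k])
    assert info == 0  # 0 means that GMRES reports convergence
    x_iter[:, k] = solution

print("matrix-vector products used by GMRES:", counter["matvec"])
print("max difference with LU:", np.abs(x_direct - x_iter).max())
```

The number of matrix-vector products grows with the number of right-hand sides, whereas the factorization cost of the direct approach is paid only once.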

Beyond low-rank compression, other data-sparse matrices can be used to reduce the computational cost of BEM solvers. For instance, the local symmetries of a mesh lead to Toeplitz-type matrix structures [40]. One can imagine coupling these optimizations to reduce the computational cost of BEM solvers for large problems even further in the future.