Abstract

With the global objectives of achieving a “carbon peak” and “carbon neutrality” along with the implementation of carbon reduction policies, China’s industrial structure has undergone significant adjustments, resulting in constraints on high-energy consumption and high-emission industries while promoting the rapid growth of green industries. Consequently, these changes have led to an increasingly complex power system structure and presented new challenges for electricity demand forecasting. To address this issue, this study proposes a 24-step multivariate time series short-term load forecasting algorithm model based on KNN data imputation and BiTCN bidirectional temporal convolutional networks combined with BiGRU bidirectional gated recurrent units and attention mechanism. The Kepler adaptive optimization algorithm (KOA) is employed for hyperparameter optimization to effectively enhance prediction accuracy. Furthermore, using real load data from a wind farm in Xinjiang as an example, this paper predicts the electricity load from 1 January to 30 December in 2019. Experimental results demonstrate that our comprehensive short-term load forecasting model exhibits lower prediction errors and superior performance compared to traditional methods, thus holding great value for practical applications.

1. Introduction

Load forecasting is a crucial prerequisite for maintaining a power system’s dynamic balance between supply and demand. Its accuracy significantly impacts the power system’s planning, operation, and economic dispatching. As a core technology in the power system, load forecasting plays a vital role in ensuring power supply stability, optimizing resource allocation, and assisting decision-making. With the robust development of the global power market, the complexity and dynamics of the power system are escalating. The integration of various distributed new energy sources, the emergence of the new interaction mode of “source-network-charge-storage”, and the pursuit of the power industry to enhance quality and efficiency have imposed higher demands for load forecasting accuracy. Against this backdrop, the continuous improvement and optimization of load forecasting technology have become vital to auxiliary power supply decision-making and maintaining power system stability.

In traditional power systems, the composition of the load is relatively simple, and forecasting focuses primarily on the system level or the bus-bar level. As a result, conventional load forecasting relies on relatively simple statistical approaches, such as the linear regression model and the Holt-Winters model [1,2]. While these models are easy to derive and interpret, their generalization capabilities are limited. With the rapid growth of the power system, particularly the development of new power systems, numerous new elements have been introduced, such as distributed renewable energy and electric vehicles, significantly increasing the uncertainty on the load side. Concurrently, with the increasing popularity of demand-side management, new roles such as prosumers (users who both produce and consume electricity) and load aggregators have emerged, leading to more active interaction between users and the grid. Given these complex load factors, traditional load forecasting methods struggle to model the load accurately in new power systems.

To address this issue, data-driven artificial intelligence has progressively emerged as the primary approach and research direction for power system load forecasting. At present, AI-based methods can be broadly divided into traditional machine learning techniques and deep learning methods. Approaches based on conventional machine learning include the random forest algorithm [3], decision tree regression [4], gradient boosting decision tree (GBDT) regression [5], CatBoost regression [6], support vector regression (SVR) [7], extreme gradient boosting (XGBoost) [8], and the extreme learning machine (ELM) [9], among others. These methods have significant advantages for nonlinear problems. Nonetheless, challenges such as complex data correlations, high feature dimensionality, large data volumes, and slow processing speeds [10] persist. Deep learning methods, on the other hand, extract hidden abstract features layer by layer from large amounts of data through multilayer nonlinear mappings, thereby improving prediction performance. Among neural networks, the recurrent neural network (RNN) has proven effective for wind power prediction problems. However, traditional networks trained by backpropagation are prone to falling into local optima and suffer from vanishing and exploding gradients [10]. To overcome this issue, long short-term memory (LSTM) and gated recurrent unit (GRU) networks add dedicated unit structures to the basic RNN [11,12], making them increasingly applicable to wind speed and wind power prediction. A combination of random forest and an LSTM neural network was proposed in [13] for power load prediction, yielding satisfactory results.
Ref. [14] introduced an algorithm based on a multivariate long short-term memory network (MLSTM) that exploits historical wind power and wind speed data for more accurate wind power forecasting, demonstrating stable performance across different datasets. Temporal convolutional networks (TCNs), built upon convolutional neural networks (CNNs), have also been explored: the convolutional architecture has been shown to outperform typical recurrent networks across various tasks and data sets, while its flexible receptive field gives it a longer effective memory. Ref. [15] enhanced the CNN-LSTM model to predict ultra-short-term offshore wind power, implementing an optimal combination of attention-strengthening and spatial characteristic weak modules to boost wind power output reliability. The GRU, an LSTM derivative, simplifies the neural unit structure and converges faster. Ref. [16] proposed predicting low- and high-frequency data using multiple linear regression and GRU neural networks, respectively, and combined the two outcomes to generate the final prediction. Lastly, Ref. [17] combined a CNN with a GRU: the CNN extracts multidimensional load-influencing factors, which are then assembled into a time-series feature vector used as the input to the GRU network in order to capture the dynamic patterns within the data.

Through an in-depth exploration of machine learning algorithms in load prediction, researchers can choose among various network models and select the one that most improves the accuracy of load prediction. However, setting the hyperparameters of a network model by manual experience is both inconvenient and difficult. Consequently, numerous intelligent optimization algorithms have been employed in load forecasting to help network models determine appropriate parameters, thereby improving forecasting accuracy. In recent years, scholars have mathematically modeled the survival strategies, evolutionary processes, and competitive mechanisms of various creatures in nature as well as the formation, development, and termination of natural phenomena. Additionally, they have examined the relationship between matter and the method of inquiry in natural and human sciences, resulting in the proposal of numerous ROAs. In 1988, Professor Holland, inspired by biological evolution in nature, introduced the genetic algorithm (GA) [18], laying the groundwork for the development of intelligent optimization algorithms. Building on the collective behavior of biological groups in nature, Eberhart and his colleagues proposed the particle swarm optimization algorithm (PSO) in 1995 [19], followed by Dorigo et al., who proposed the ant colony optimization algorithm (ACO) [20]. Additionally, algorithms based on physical principles, such as the gravitational search algorithm (GSA) proposed by Rashedi et al. in 2009 [21], and those inspired by human social behavior, such as the teaching-learning-based optimization algorithm (TLBO) proposed by Rao et al. in 2011 [22], were also developed. In the evolution of stochastic optimization algorithms, researchers have continued to put forward new methods, motivated by the “No Free Lunch Theorem” (NFL) [23].
The NFL asserts that no learning algorithm is universally superior to all others without prior assumptions regarding the problem domain. This implies that the average performance of all algorithms is identical across all possible problems. The theorem emphasizes the importance of considering specific problem characteristics when selecting an algorithm, and it has also motivated further improvements to existing methods. Notable examples include the marine predators algorithm (MPA) [24], chameleon swarm algorithm (CSA) [25], Archimedes optimization algorithm (AOA) [26], golden eagle optimizer (GEO) [27], Stochastic Frank-Wolfe (SFO) [28], chimp optimization algorithm (ChOA) [29], slime mould algorithm (SMA) [30], dandelion optimization algorithm (DO) [31], African vulture optimization algorithm (AVOA) [32], aphid–ant mutualism algorithm (AAM) [33], beluga whale optimization algorithm (BWO) [34], elephant clan optimization algorithm (ECO) [35], human happiness algorithm (HFA) [36], and gander optimization algorithm (GOA) [37]. These methods are widely used to improve the precision of load forecasting techniques. For instance, in reference [38], the CSA optimization algorithm is used to improve the load prediction accuracy of DBN-SARIMA. Similarly, reference [39] uses the GOA algorithm to optimize the accuracy of LSSVR load prediction. The Kepler optimization algorithm (KOA) [40] is a novel physics-based optimization method proposed by Abdel-Basset et al. in 2023. It exhibits a significant improvement in performance compared to previous optimization algorithms.

When load data are collected and aggregated, missing values inevitably occur. To ensure the continuity of the data and the completeness of the features, these gaps must be filled. Traditional filling methods can be divided into statistical approaches and machine-learning-based approaches. The statistical techniques primarily include mean filling, conditional mean filling, and global constant filling. At present, machine-learning-based methods mainly comprise decision-tree-based filling [41] and neural network regression-based filling [42]. Many statistical methods fail to capture the correlation between the data effectively, which limits the accuracy of the filled values. Machine-learning-based methods take this correlation into account to some extent and therefore perform better than their statistical counterparts. However, these methods are generally effective only for linear, noise-free data sets. With the progress of data acquisition technology, a multitude of noisy, nonlinear data sets must now be handled. Consequently, the development of high-performance gap-filling algorithms for such data sets holds significant research value. Among these algorithms, the KNN (K-nearest neighbors) approach, being a relatively novel method, demonstrates excellent nonlinear data fitting performance and is robust to noise in tests.
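As an illustration of the KNN filling idea, the following minimal sketch fills each missing entry with the mean of that feature over the k nearest complete records. The function name `knn_impute`, the Euclidean distance over observed columns, the unweighted mean, and the toy records are assumptions made for illustration; they are not taken from the paper, and at least one complete record is assumed to exist.

```python
import math

def knn_impute(rows, k=2):
    """Fill None entries with the column mean over the k nearest complete rows.

    Distance is Euclidean, computed only on the columns observed in the
    incomplete row. Assumes at least one row has no missing values.
    """
    complete = [r for r in rows if None not in r]
    filled = []
    for row in rows:
        if None not in row:
            filled.append(list(row))
            continue
        observed = [j for j, v in enumerate(row) if v is not None]
        # Rank complete rows by their distance to this row on the observed columns.
        neighbours = sorted(
            complete,
            key=lambda c: math.sqrt(sum((c[j] - row[j]) ** 2 for j in observed)),
        )[:k]
        filled.append([
            v if v is not None else sum(n[j] for n in neighbours) / len(neighbours)
            for j, v in enumerate(row)
        ])
    return filled

# Hypothetical hourly records: [temperature, load]; the last load is missing.
data = [[20.0, 100.0], [21.0, 110.0], [30.0, 200.0], [20.5, None]]
result = knn_impute(data, k=2)
```

In this toy example the two nearest records by temperature are the first two, so the missing load is filled with the mean of their loads. In practice the features would usually be scaled first so that no single column dominates the distance.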

The attention mechanism is a model proposed by Treisman and Gelade to simulate the attention mechanism of the human brain [43]. The emergence of this model has addressed the deficiencies of recurrent neural networks in processing long sequences, such as gradient vanishing and computational inefficiency. Its innovation and application effects have sparked great interest, leading to the proposal of many related models in the past few years. These models have achieved significant success in various artificial intelligence fields such as large language models, computer vision, and audio processing. The attention mechanism exhibits strong modeling capabilities for long-range dependencies and interactions in sequential data, making it highly attractive for time series modeling. Ref. [44] employs a convolutional self-attention mechanism, which incorporates local information into the attention mechanism through causal convolutions and uses a sparse attention strategy to reduce computational complexity. Model [45] adopts a sparse transformer as a generator to learn sparse attention maps for time series prediction and uses a discriminator at the sequence level to improve prediction performance. Ref. [46] achieves logarithmic time complexity and memory overhead through methods such as the prob-sparse self-attention mechanism and a generative decoder, enabling one-time prediction of long time series. Ref. [47] sets time series decomposition as an internal module of deep neural networks and designs an autocorrelation mechanism based on periodicity to discover and represent time series dependencies. Ref. [48] introduces a pyramidal attention module, where the tree structure between scales summarizes features of different resolutions, while adjacent connections within the same scale model time dependencies of different ranges. Ref. 
[49] proposes a frequency-enhanced transformer method that combines the sparse representation of time series Fourier transforms to capture detailed structures of time series data while using decomposition methods to capture global information. Ref. [50] employs a data segmentation approach to embed the input multivariate time series into a 2D vector to preserve both time and dimension information and then uses a two-stage attention layer to capture cross-time and cross-dimension dependencies.

In summary, this paper proposes a novel method founded on KNN data filling. The BiTCN (bidirectional temporal convolutional) network is optimized using the Kepler adaptive optimization algorithm (KOA). This study presents a 24-step multivariable time series short-term load regression prediction algorithm, which is based on a bidirectional temporal convolutional network integrated with a bidirectional gated recurrent unit (BiGRU) and an attention mechanism. By incorporating factors that influence power load, such as electricity price, load, weather, and temperature, the model is trained on a large amount of historical data, learns the mapping between input and output, and ultimately achieves accurate predictions. During the experiment, the KNN model was used to fill in missing values. Subsequently, the feature data were fed into the BiTCN and BiGRU models, which combine time series modeling with bidirectional context capture. This approach draws on the strengths of each model, extracting features at different levels and dimensions and merging them. Finally, the attention mechanism was employed to weight the features according to their importance at different time steps, calculating an attention weight for each time step and producing the final prediction. Experimental results indicate that, in comparison to traditional methods, the proposed approach achieves a lower prediction error and superior model performance, and is therefore of value for a wider range of applications.

2. Related Methodologies

2.1. Load Type Classification

Load forecasting can be categorized by forecast horizon into long-term, medium-term, and short-term load forecasting. Long- and medium-term forecasting is primarily used to support power supply and grid infrastructure planning or to develop long-term operation and maintenance strategies. Short-term load forecasting offers valuable insights for daily production planning, frequency modulation unit adjustments, and more, thereby contributing significantly to ensuring the safe and stable operation of the power grid in real time.

2.2. KOA Optimization Algorithm

The Kepler optimization algorithm is a heuristic optimization algorithm proposed by Abdel-Basset et al. [40]. In the KOA, the sun and the planets rotating around the sun in elliptical orbits can be used to represent the search space. Since the positions of the planets (the candidate solutions) relative to the sun (optimal solution) change over time, the search space can be explored and utilized more efficiently. During the optimization process, the KOA will apply the following rules:

- (1) The orbital periods of the planets (the candidate solutions) are randomly selected from a normal distribution.

- (2) The eccentricity of each planet (candidate solution) is generated randomly within the range [0,1].

- (3) The fitness of each solution is calculated based on the objective function.

- (4) In the iterative process, the best solution serves as the central star (sun).

- (5) Planets move around the sun in elliptical orbits, resulting in changes in their distance over time.

Based on the above rules, after evaluating the fitness of the initial set, the KOA runs iteratively until a termination criterion is met. Theoretically, the KOA can be regarded as a global optimization algorithm due to its exploration and exploitation phases. The following is a detailed description of the KOA’s iterative process from a mathematical point of view.

Step 1. Initial process:

A population of $N$ planets (candidate solutions) is randomly distributed over the $d$ dimensions that represent the decision variables of the optimization problem, according to Formula (1):

$$X_i^j = X_{i,low}^j + rand_{[0,1]} \times \left(X_{i,up}^j - X_{i,low}^j\right), \quad i = 1, 2, \ldots, N; \; j = 1, 2, \ldots, d \tag{1}$$

where $X_i$ denotes the $i$-th planet (candidate solution) in the search space; $N$ represents the number of candidate solutions in the search space; $d$ represents the dimension of the problem to be optimized; $X_{i,up}^j$ and $X_{i,low}^j$ denote the upper and lower bounds of the $j$-th decision variable, respectively; and $rand_{[0,1]}$ denotes a randomly generated number in [0,1].

The orbital eccentricity $e_i$ of each object is initialized as in Formula (2):

$$e_i = rand_{[0,1]}, \quad i = 1, 2, \ldots, N \tag{2}$$

where $rand_{[0,1]}$ is a randomly generated number in the range [0,1].

Finally, the orbital period $T_i$ of each planet (candidate solution) is initialized as in Formula (3):

$$T_i = |r|, \quad i = 1, 2, \ldots, N \tag{3}$$

where $r$ is a randomly generated number drawn from a normal distribution.
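The initialization step can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `init_planets`, taking the absolute value of a standard normal draw for the period, and the two example search ranges (a hypothetical layer width and learning rate) are assumptions.

```python
import random

def init_planets(n, lower, upper, rng):
    """Return positions, eccentricities and orbital periods for n planets."""
    d = len(lower)
    # Each coordinate is drawn uniformly between its lower and upper bound.
    positions = [
        [lower[j] + rng.random() * (upper[j] - lower[j]) for j in range(d)]
        for _ in range(n)
    ]
    # Eccentricity: uniform random number in [0, 1).
    eccentricity = [rng.random() for _ in range(n)]
    # Orbital period: magnitude of a standard normal draw (assumed form).
    period = [abs(rng.gauss(0.0, 1.0)) for _ in range(n)]
    return positions, eccentricity, period

rng = random.Random(0)
# Hypothetical hyperparameter ranges: hidden units in [16, 128], learning rate in [1e-4, 1e-2].
pos, ecc, per = init_planets(5, lower=[16, 0.0001], upper=[128, 0.01], rng=rng)
```

Each planet is then one candidate hyperparameter vector whose fitness would be obtained by training and validating the forecasting model with those values.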

Step 2. Define the force of gravity (F):

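The law of universal gravitation gives the gravitational pull between the sun $X_S$ and any planet $X_i$. Following [40], gravity is defined as Formula (4):

$$F_{g_i}(t) = e_i \times \mu(t) \times \frac{\bar{M}_S \times \bar{m}_i}{\bar{R}_i^2 + \varepsilon} + r_1 \tag{4}$$

In Formula (4), $\varepsilon$ is a small number; $\mu(t)$ is the universal gravitational constant; $e_i$ is the eccentricity of the planetary orbit, whose value lies in [0,1] and gives the KOA model more random behavior; $r_1$ is a randomly generated value in [0,1], which provides more variation in the optimization process; $\bar{M}_S$ and $\bar{m}_i$ are the normalized values of $M_S$ and $m_i$, the masses of the sun and of the planet; and $\bar{R}_i$ is the normalized value of $R_i$, the Euclidean distance between the sun $X_S$ and the planet $X_i$, which is defined as Formula (5):

$$R_i(t) = \lVert X_S(t) - X_i(t) \rVert_2 = \sqrt{\sum_{j=1}^{d} \left(X_S^j(t) - X_i^j(t)\right)^2} \tag{5}$$

where the sum runs over the $d$ dimensions of the sun $X_S$ and the planet $X_i$. The masses of the sun and the planets at time $t$ can be calculated using Formulas (6) and (7):

$$M_S = r_2 \times \frac{fit_S(t) - worst(t)}{\sum_{k=1}^{N} \left(fit_k(t) - worst(t)\right)} \tag{6}$$

$$m_i = \frac{fit_i(t) - worst(t)}{\sum_{k=1}^{N} \left(fit_k(t) - worst(t)\right)} \tag{7}$$

where the functions $fit_S(t)$ and $worst(t)$ are defined as Formulas (8) and (9):

$$fit_S(t) = best(t) = \min_{k \in \{1, \ldots, N\}} fit_k(t) \tag{8}$$

$$worst(t) = \max_{k \in \{1, \ldots, N\}} fit_k(t) \tag{9}$$

In the definition of the solar mass $M_S$, $r_2$ is a random number between 0 and 1 that spreads the mass values across the search space. The function $\mu(t)$, which decreases exponentially with time to control the search accuracy, is defined as Formula (10):

$$\mu(t) = \mu_0 \times \exp\left(-\gamma \frac{t}{T_{max}}\right) \tag{10}$$

where $\gamma$ is a constant; $\mu_0$ is an initial value; and $t$ and $T_{max}$ are the current iteration number and the maximum iteration number, respectively.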
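To make the exponentially decreasing gravitational term concrete, the short sketch below evaluates a decay of the form mu(t) = mu0 * exp(-gamma * t / t_max), which matches the description above (an initial value, a constant, and the current and maximum iteration numbers). The function name and the default values `mu0=0.1` and `gamma=15.0` are illustrative assumptions, not settings reported in this paper.

```python
import math

def gravitational_constant(t, t_max, mu0=0.1, gamma=15.0):
    """Exponentially decaying gravitational term.

    t      : current iteration number
    t_max  : maximum iteration number
    mu0    : initial value (assumed default)
    gamma  : decay constant (assumed default)
    """
    return mu0 * math.exp(-gamma * t / t_max)

# Evaluate the schedule at five evenly spaced iterations of a 100-iteration run.
schedule = [gravitational_constant(t, t_max=100) for t in range(0, 101, 25)]
```

A large value early in the run keeps the pull toward the sun strong and varied, while the rapid decay narrows the search as the iteration count approaches its maximum.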

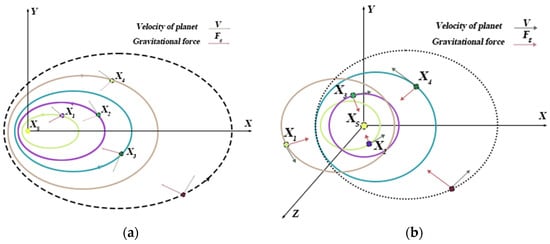

The specific motion is shown in Figure 1.

Figure 1.

Possible positions in (a) 2-dimension and (b) 3-dimension.

Step 3. Calculate the planet’s velocity:

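The velocity of a planet depends on its position relative to the sun $X_S$: the closer the planet is to the sun, the greater its velocity, and the farther away it is, the slower it moves. In other words, as the gravity of the sun tries to capture the planet $X_i$, the planet increases its speed to avoid being pulled in, and it slows down as it escapes. In optimization terms, this corresponds to the KOA escaping from a local optimum. Following [40], it is described by Formulas (11)–(20):

$$V_i(t) = \begin{cases} \ell \times (2 r_4 X_i - X_b) + \ddot{\ell} \times (X_a - X_b) + \left(1 - R_{i\text{-}norm}(t)\right) \times F \times U_1 \times r_5 \times (X_{i,up} - X_{i,low}), & R_{i\text{-}norm}(t) \le 0.5 \\ r_4 \times L \times (X_a - X_i) + \left(1 - R_{i\text{-}norm}(t)\right) \times F \times U_2 \times r_5 \times (r_3 X_{i,up} - X_{i,low}), & \text{otherwise} \end{cases} \tag{11}$$

$$\ell = U \times M \times L \tag{12}$$

$$L = \left[\mu(t) \times (M_S + m_i) \times \left|\frac{2}{R_i(t) + \varepsilon} - \frac{1}{a_i(t) + \varepsilon}\right|\right]^{1/2} \tag{13}$$

$$M = r_3 \times (1 - r_4) + r_4 \tag{14}$$

$$U = \begin{cases} 0, & r_5 \le r_6 \\ 1, & \text{otherwise} \end{cases} \tag{15}$$

$$F = \begin{cases} 1, & r_4 \le 0.5 \\ -1, & \text{otherwise} \end{cases} \tag{16}$$

$$\ddot{\ell} = (1 - U) \times \bar{M} \times L \tag{17}$$

$$\bar{M} = r_3 \times (1 - r_5) + r_5 \tag{18}$$

$$U_1 = \begin{cases} 0, & r_5 \le r_4 \\ 1, & \text{otherwise} \end{cases} \tag{19}$$

$$U_2 = \begin{cases} 0, & r_3 \le r_4 \\ 1, & \text{otherwise} \end{cases} \tag{20}$$

where the velocity of the planet at time $t$ is represented by $V_i(t)$, while $X_i$ represents the planet $i$. $r_3$ and $r_4$ are randomly generated numbers within the range [0,1], while $r_5$ and $r_6$ are random vectors with entries in [0,1]. $X_a$ and $X_b$ represent solutions selected at random from the population. $M_S$ and $m_i$ denote the masses of the sun and the planet, respectively; $\mu(t)$ refers to the universal gravitational constant, while $\varepsilon$ is a small value used to prevent division by zero. $R_i(t)$ denotes the distance between the optimal solution $X_S$ and the object $X_i$ at time $t$. $a_i(t)$ represents the semimajor axis of the elliptical orbit of planet $i$ at that time, which is defined by Kepler’s third law as shown in Formula (21):

$$a_i(t) = r_3 \times \left[T_i^2 \times \frac{\mu(t) \times (M_S + m_i)}{4 \pi^2}\right]^{1/3} \tag{21}$$

where $T_i$ denotes the orbital period of the planet $i$. In the KOA, it is assumed that the semimajor axis of the elliptical orbit of the planet gradually decreases with time. That is, the solution moves toward the region where the global optimal solution is expected to be found. $R_{i\text{-}norm}(t)$ denotes the normalized Euclidean distance between $X_S$ and $X_i$, which is defined as Formula (22):

$$R_{i\text{-}norm}(t) = \frac{R_i(t) - \min\left(R(t)\right)}{\max\left(R(t)\right) - \min\left(R(t)\right)} \tag{22}$$

The purpose of Formula (11) is to calculate the percentage of time steps each object will change. If $R_{i\text{-}norm}(t) \le 0.5$, the object is close to the sun and will increase its speed to prevent drifting toward the sun due to its huge gravitational pull. Otherwise, the planet will slow down.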

Step 4. Escape the local optimum solution:

In the solar system, most objects rotate on their own axis while revolving counterclockwise around the sun; however, some objects do the opposite and revolve clockwise around the sun. The KOA mimics this behavior to escape locally optimal regions, which is mainly embodied by a flag F that changes the search direction, thus giving each agent a good chance to scan the search space accurately.

Step 5. Update the object position:

As described above, each planet revolves around the sun in an elliptical orbit. During this process, the object moves closer to the sun for some time and then moves away from it. The KOA simulates this behavior using two phases: exploration and exploitation. Objects far from the sun are used to explore for new solutions, while objects close to the sun are used to refine the search around the best solution found so far. In this way, the exploration and exploitation area around the sun is expanded. In the exploration phase, the object is far away from the sun (the best solution found so far), so the whole search area can be explored more effectively.

Following [40], the position update is given by Formula (23):

$$X_i(t+1) = X_i(t) + F \times V_i(t) + \left(F_{g_i}(t) + |r|\right) \times U \times \left(X_S(t) - X_i(t)\right) \tag{23}$$

where $X_i(t+1)$ is the new position of the object at time $t+1$, $V_i(t)$ is the velocity needed for the planet to reach the new position, $X_S(t)$ is the best position of the sun found so far, $F_{g_i}(t)$ is the gravitational force, $r$ is a random number drawn from a normal distribution, $U$ is a random binary vector, and $F$ is used as a flag to change the direction of the search. The position update formula simulates the gravitational force exerted by the sun on the planets. In this formula, a step is introduced based on the distance between the current planet and the sun, multiplied by the gravity of the sun. This modification helps the KOA explore its surroundings after initialization, enabling more efficient searches for optimal solutions with fewer function evaluations. In general, when the planet is moving away from the sun, the velocity of the planet acts as the KOA’s exploration operator. However, this velocity is affected by the sun’s gravitational pull, which keeps the planet searching the region around the current best solution. At the same time, as a planet approaches the sun, its speed increases dramatically, allowing it to escape the sun’s gravitational pull. In this case, if the sun (the best solution so far) is a local minimum, the velocity provides local optimum avoidance, while the sun’s gravitational term helps the KOA exploit the best solution so far to find a better one.

Step 6. Update the position with the sun:

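If the distance between the sun and the planet is infinitesimally small, then either the planet or the sun emerges as the optimal solution during iterative processes.

This update is applied alternately, at random, with the position update of Step 5, which further improves the KOA’s exploration operator. Following [40], the mathematical model of the principle is described as Formula (24):

$$X_i(t+1) = X_i(t) \times U_1 + (1 - U_1) \times \left(\frac{X_i(t) + X_S + X_a(t)}{3} + h \times \left(\frac{X_i(t) + X_S + X_a(t)}{3} - X_b(t)\right)\right) \tag{24}$$

where $U_1$ is a random binary flag, $X_a$ and $X_b$ are solutions selected at random from the population, and $h$ is an adaptive factor that controls the distance between the sun and the current planet at time $t$, which is defined as Formula (25):

$$h = \frac{1}{e^{\eta r}} \tag{25}$$

where $r$ is a randomly generated number drawn from a normal distribution and $\eta$ is a factor that decreases linearly from 1 to −2, defined as Formula (26):

$$\eta = (a_2 - 1) \times r_4 + 1 \tag{26}$$

where $r_4$ is a random number in [0,1] and $a_2$ is a cyclic control parameter that is gradually reduced from −1 to −2 over $\bar{T}$ cycles throughout the optimization process, as in Formula (27):

$$a_2 = -1 - 1 \times \frac{t \bmod \left(T_{max} / \bar{T}\right)}{T_{max} / \bar{T}} \tag{27}$$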

Step 7. Adjust the parameters:

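This step implements an elite strategy to ensure the best placement of the planets and sun: a planet moves to its new position only if the new position does not worsen its fitness $f$. Formula (28) summarizes the process:

$$X_{i,new}(t+1) = \begin{cases} X_i(t+1), & f\left(X_i(t+1)\right) \le f\left(X_i(t)\right) \\ X_i(t), & \text{otherwise} \end{cases} \tag{28}$$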
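The elite strategy amounts to a greedy, per-planet comparison between the old and the newly proposed position. The sketch below assumes a minimization problem; the function names and the sphere test objective are illustrative and not part of the paper.

```python
def sphere(x):
    """Toy objective to minimize: sum of squares."""
    return sum(v * v for v in x)

def elitist_selection(old_positions, new_positions, objective):
    """Keep each planet's new position only if it is no worse than the old one."""
    return [
        new if objective(new) <= objective(old) else old
        for old, new in zip(old_positions, new_positions)
    ]

old = [[1.0, 1.0], [0.5, 0.0]]
new = [[0.5, 0.5], [2.0, 2.0]]
kept = elitist_selection(old, new, sphere)
# The first planet accepts its improved position; the second keeps its old one.
```

Because no planet can get worse from one iteration to the next, the best fitness in the population is non-increasing, which is what allows the best planet to serve as the sun.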

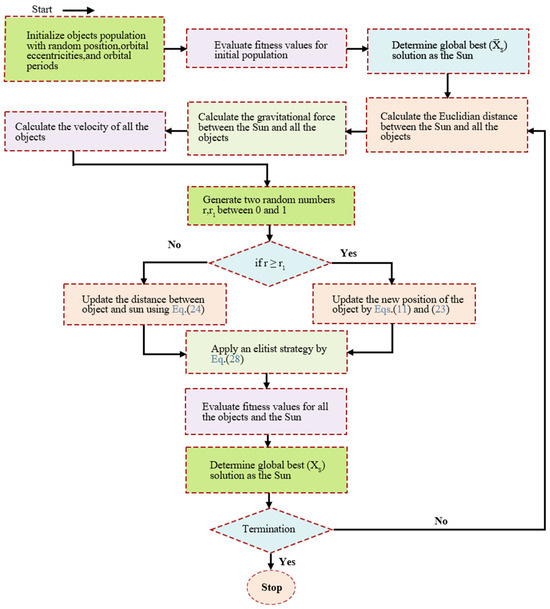

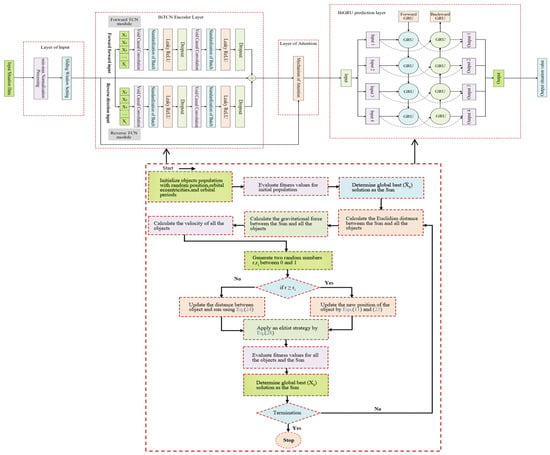

Thus, the specific flow chart of this method is as in Figure 2:

Figure 2.

Flow chart KOA optimization algorithm.

Summary of KOA

The Kepler Optimization Algorithm (KOA) is a heuristic optimization method inspired by the motion of planets around the sun, as proposed by Abdel-Basset et al. In the KOA, candidate solutions, represented as planets, orbit an optimal solution, the sun, in elliptical paths, allowing efficient exploration and exploitation of the search space. The process begins with the random distribution of candidate solutions, followed by the selection of orbital periods and eccentricities from normal and uniform distributions. The fitness of each solution is then calculated to evaluate its quality. During iterative optimization, the best solution serves as the sun, guiding the movement of planets. The velocity of each planet changes based on its distance from the sun, simulating gravitational effects to balance exploration and exploitation. The KOA employs mechanisms to escape local optima by randomly adjusting the search direction. An elite strategy ensures the best solutions are retained and refined, making the KOA a robust global optimization algorithm well suited for complex optimization problems and enhancing prediction accuracy in load forecasting models.

2.3. The BiGRU Model

LSTM extends the RNN by gating the neurons in the hidden layer and introducing a self-loop mechanism, which mitigates the vanishing gradient problem. As a variant of LSTM, the GRU neural network has a simplified structure that reduces the training time while preserving high prediction accuracy. Compared to LSTM, GRU is more concise in design and its structure contains only two gates: the reset gate ($r_t$) and the update gate ($z_t$). The former mainly controls how the new input is combined with the previous memory, and the latter controls how much of the previous memory is preserved. The simplified structure of GRU enables it to model the temporal characteristics of sequence data effectively while maintaining high computational efficiency. Formulas (29) and (30) show the governing formulas for the GRU gates:

$$r_t = \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right) \tag{29}$$

$$z_t = \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) \tag{30}$$

where, in Formulas (29) and (30), $W_r$, $U_r$, $W_z$, and $U_z$ are the weight matrices, $\sigma$ is the corresponding activation function, and $b_r$ and $b_z$ are the biases from which the reset gate $r_t$ and the update gate $z_t$ are calculated. In addition, $x_t$ is the input to the neuron at time step $t$, and $h_{t-1}$ is the cell state vector at time step $t-1$. After this, the reset gate is used to form the new memory content. The Hadamard (element-wise) product is calculated as $r_t \odot h_{t-1}$, so the reset gate determines what information from the previous step is discarded. After that, the activation function is applied to produce a new candidate cell state vector $\tilde{h}_t$.

Finally, the current cell state vector $h_t$ is obtained and the retained information is passed on to the next cell. To this end, the update gate is applied as in Formulas (31) and (32):

$$\tilde{h}_t = \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + b_h\right) \tag{31}$$

$$h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t \tag{32}$$
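A single GRU update can be sketched with scalar input and state as follows. This is a minimal illustration under stated assumptions: it uses the common convention in which the update gate z interpolates between the previous state and the candidate state (some references swap the roles of z and 1 - z), and the parameter names in the dictionary are hypothetical.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def gru_step(x, h_prev, p):
    """One GRU update for a scalar input x and scalar previous state h_prev."""
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev + p["b_r"])   # reset gate
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev + p["b_z"])   # update gate
    # Candidate state: the reset gate scales how much of h_prev is used.
    h_cand = math.tanh(p["w_h"] * x + p["u_h"] * (r * h_prev) + p["b_h"])
    # New state: blend of the previous state and the candidate state.
    return (1.0 - z) * h_prev + z * h_cand

# With all-zero parameters both gates equal 0.5 and the candidate is 0,
# so the new state is half of the previous state.
zero_params = {k: 0.0 for k in
               ("w_r", "u_r", "b_r", "w_z", "u_z", "b_z", "w_h", "u_h", "b_h")}
h_next = gru_step(x=1.0, h_prev=0.8, p=zero_params)
```

In a real network the scalars become vectors and matrices and the products with the gates become element-wise, but the data flow is the same.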

For information retrieval and storage, the GRU neural network adopts a recurrent structure. However, this structure considers only the states preceding the prediction point and ignores the states that follow it. In actual forecasting, the load at a given time is closely related to the load at both the previous and the next time steps, so a unidirectional GRU limits further improvement in prediction accuracy. For deeper time series feature extraction, the BiGRU is introduced here.

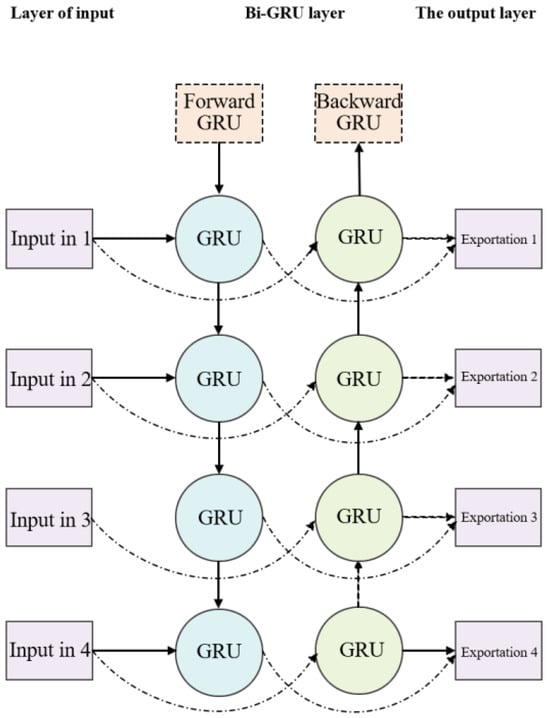

The BiGRU (bidirectional gated recurrent unit) consists of a forward GRU layer and a backward GRU layer, the latter processing the time series in the opposite direction. The output is determined by the states of both the forward and backward layers. Because there is no connection between the forward and backward layers, BiGRU can consider data change patterns in both directions simultaneously, unlike a one-way GRU, thereby enhancing flexibility, comprehensiveness, and relevance in analysis. This promotes a stronger integration between the model and the information it processes while significantly improving prediction accuracy compared to GRU. The structure of BiGRU is shown in Figure 3 and the network is expressed as in Formulas (33)–(35):

$$\overrightarrow{h}_t = \mathrm{GRU}\left(x_t, \overrightarrow{h}_{t-1}\right) \tag{33}$$

$$\overleftarrow{h}_t = \mathrm{GRU}\left(x_t, \overleftarrow{h}_{t+1}\right) \tag{34}$$

$$h_t = w_t \overrightarrow{h}_t + v_t \overleftarrow{h}_t + b_t \tag{35}$$

where GRU is the traditional GRU network operation process, $\overrightarrow{h}_t$ and $w_t$ are the state and weight of the forward hidden layer at time $t$, $\overleftarrow{h}_t$ and $v_t$ are the state and weight of the backward hidden layer at time $t$, and $b_t$ is the bias of the hidden layer at time $t$.
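The bidirectional arrangement can be illustrated by running one GRU over the sequence as given and a second GRU over the reversed sequence, then aligning the two state sequences by time step. The sketch below uses scalar states and omits biases for brevity; the parameter values, the helper names, and returning the two states as a pair (instead of a learned weighted sum) are simplifying assumptions.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def gru_step(x, h_prev, p):
    """Scalar GRU update without biases (simplified for illustration)."""
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev)
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev)
    h_cand = math.tanh(p["w_h"] * x + p["u_h"] * r * h_prev)
    return (1.0 - z) * h_prev + z * h_cand

def run_gru(seq, p):
    """Return the state after each element of seq, starting from h = 0."""
    h, states = 0.0, []
    for x in seq:
        h = gru_step(x, h, p)
        states.append(h)
    return states

def bigru(seq, p_fwd, p_bwd):
    """Pair the forward state and the backward state at every time step."""
    forward = run_gru(seq, p_fwd)
    backward = run_gru(seq[::-1], p_bwd)[::-1]   # re-align to original order
    return list(zip(forward, backward))

params = {"w_r": 0.5, "u_r": 0.5, "w_z": 0.5, "u_z": 0.5, "w_h": 0.5, "u_h": 0.5}
states = bigru([1.0, 2.0, 3.0], params, params)
```

At the first time step the forward state has seen only the first input, whereas the backward state already summarizes the whole remaining sequence; this is the additional context that the bidirectional layer contributes.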

Figure 3.

Diagram of the BiGRU structure.

The specific structure of the BiGRU is shown in Figure 3.

2.4. BiTCN Model

Temporal convolutional network (TCN) models have gained significant attention and application because they can process time series data efficiently in parallel. Similarly, BiLSTM networks have been shown to capture important fluctuation characteristics associated with future time parameters. However, when predicting the current power load, a TCN is limited to capturing past fluctuations of parameters such as wind speed, temperature, and their rates of change. Therefore, this paper proposes to improve the power load forecasting model using the BiTCN algorithm.

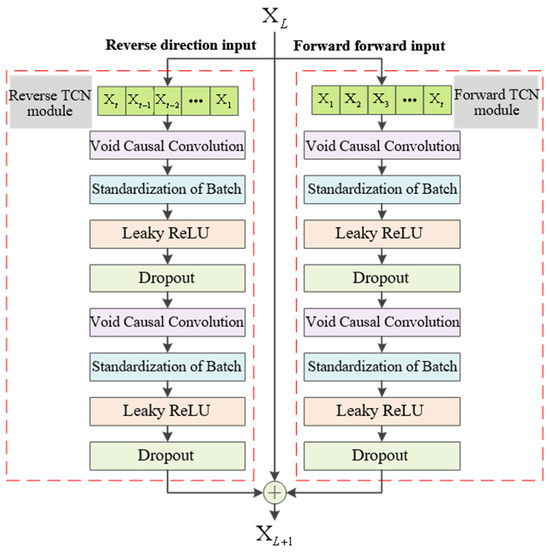

The BiTCN model facilitates bidirectional information integration by simultaneously capturing the fluctuation characteristics of parameter changes in both past and future time dimensions. It achieves this by connecting forward TCN modules, backward TCN modules, and a temporal attention module within each BiTCN module. Multiple BiTCN modules are sequentially connected to construct the overall BiTCN model.

The forward TCN model receives past time parameters as inputs from the module, while the backward TCN model takes future time parameters as inputs. Both models share consistent input parameters and employ temporal attention to merge and process the outputs from both modules, resulting in a novel set of output features. Element-wise input fusion significantly enhances the performance of the model.

Assume that a module in the BiTCN model receives an input sequence. First, the sequence is fed into the forward TCN module, which extracts historical temporal information from the input data and yields the forward time features. At the same time, the sequence is reversed to obtain the backward time data, which serve as input to the backward TCN module; this module extracts future temporal information and yields the backward time features. The two types of features are calculated by Formulas (36) and (37):

Formulas (36) and (37) are parameterized by four quantities: the dimensions of the temporal convolutional kernel for capturing time gaps, the parameter of the leaky ReLU activation function, the dilation rate of the atrous convolution, and the parameter of dropout regularization. Each formula applies the feature extraction operator of the corresponding module, forward or backward. The structure of BiTCN is shown in Figure 4.

Figure 4.

BiTCN module structure.
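The forward and backward feature extraction described above can be sketched as follows. This is a simplified illustration, assuming a single dilated causal convolution with a leaky ReLU per direction; dropout, residual connections, and the temporal attention fusion are omitted, and the kernel values are hypothetical.

```python
def causal_dilated_conv(xs, kernel, dilation):
    """y[t] = sum_k kernel[k] * x[t - k * dilation], with zero padding on the left."""
    out = []
    for t in range(len(xs)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = t - k * dilation
            if idx >= 0:
                acc += w * xs[idx]
        out.append(acc)
    return out

def leaky_relu(x, alpha=0.01):
    return x if x > 0 else alpha * x

def bitcn_features(xs, kernel, dilation, alpha=0.01):
    """Return forward features (past context) and backward features (future context)."""
    fwd = [leaky_relu(v, alpha) for v in causal_dilated_conv(xs, kernel, dilation)]
    rev = causal_dilated_conv(xs[::-1], kernel, dilation)  # convolve the reversed input
    bwd = [leaky_relu(v, alpha) for v in rev][::-1]        # re-align with time
    return fwd, bwd

fwd, bwd = bitcn_features([1.0, 2.0, 3.0, 4.0, 5.0], kernel=[0.5, 0.5], dilation=2)
# fwd[2] mixes x[2] with x[0] (the past); bwd[2] mixes x[2] with x[4] (the future).
```

Reversing the input before a causal convolution is what lets the backward branch see values that lie in the future of each time step.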

2.5. The Attention Mechanism

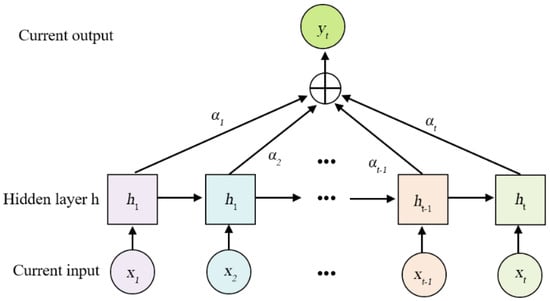

The attention mechanism is a computational approach that emulates how the human brain processes information, enhancing the information processing capability of neural networks. It assigns weights to input features so that important features are emphasized and the influence of irrelevant features is reduced or disregarded, and it applies this weight distribution to the intermediate results of the network. The result is effective information filtering, more efficient analysis, and higher prediction accuracy. Short-term load forecasting models often need to process large volumes of load data within limited time frames, and the load at a specific moment is more closely related to nearby load values than to distant ones. The model should therefore focus primarily on recent load values during prediction. The attention mechanism supports this by assigning a weight to the load value at each time point, with higher weights for values closer in time and lower weights for values further from the current time point. Consequently, the model can capture valuable information quickly and improve prediction accuracy. Figure 5 illustrates the structure of the attention mechanism, including the attention distribution vector.

Figure 5.

Attention mechanism.

The relevant formulas for this mechanism are Formulas (38)–(40):

In Formulas (38)–(40), the attention-scoring function is evaluated on the hidden-layer state, two parameters represent the attention weights, one term denotes the bias, and one symbol signifies the dimensionality of the input vectors.
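As a minimal sketch of how attention weights are formed and applied, the following example scores each hidden state, normalizes the scores with a softmax, and returns the weighted sum. The additive `tanh` scoring function and the scalar parameters `w` and `b` are assumptions made for illustration; the paper's own scoring function may differ.

```python
import math

def softmax(scores):
    """Numerically stable softmax: the outputs are positive and sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(hidden, w, b):
    """Score each hidden state, normalize the scores, and return weights and context."""
    scores = [math.tanh(w * h + b) for h in hidden]  # assumed scoring function
    weights = softmax(scores)
    context = sum(a * h for a, h in zip(weights, hidden))
    return weights, context

# Hypothetical hidden states and parameters.
weights, context = attention_pool([0.2, 0.5, 0.9], w=1.5, b=0.0)
```

States with higher scores receive larger weights, so they contribute more to the context value passed to the next layer.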

2.6. Construction of KOA-BiGRU-BiTCN-Attention Model

In summary, the attention mechanism and the BiGRU-BiTCN algorithm are integrated and optimized by the KOA, as shown in Figure 6:

Figure 6.

Structure of KOA-BiGRU-BiTCN-attention model.

The model begins by receiving time series data and conducting preprocessing, which includes data normalization and sliding window processing. The preprocessed time series data is then fed into subsequent network layers for processing. Initially, the data enters the BiTCN module, where each BiTCN module comprises a forward TCN and a backward TCN to capture the forward and backward information of the time series, respectively. Following this, the attention layer takes the output from the BiTCN layer and identifies key information by computing the correlation between elements within the input sequence. The application of the attention mechanism enables the model to focus on the parts of the data that have the greatest impact on the prediction outcomes, thereby enhancing the prediction accuracy. The output of the attention layer is then fed into the BiGRU prediction layer. The BiGRU layer simultaneously handles forward and backward time series information, leveraging its powerful sequence modeling capability to generate more accurate prediction sequences. Finally, the output of the BiGRU prediction layer is processed by the output layer, generating corresponding prediction results based on the specific task requirements. These results can be regression values, classification probabilities, or other forms of output, depending on the model’s training objectives. Throughout the entire training process, the KOA optimization algorithm is employed to fine-tune the model’s hyperparameters, minimizing the loss function on the validation set to improve the model’s generalization ability.
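The sliding window step of the preprocessing can be sketched as follows. The horizon of 24 follows the 24-step forecasting task; the lookback length of 96 (one day at a 15 min interval) and the function name `make_windows` are assumptions for illustration.

```python
def make_windows(series, lookback, horizon=24):
    """Split a sequence into (input window, next `horizon` targets) pairs."""
    samples = []
    last_start = len(series) - lookback - horizon
    for start in range(last_start + 1):
        x = series[start:start + lookback]
        y = series[start + lookback:start + lookback + horizon]
        samples.append((x, y))
    return samples

load = list(range(200))  # placeholder values standing in for normalized load data
samples = make_windows(load, lookback=96, horizon=24)
```

Each sample pairs 96 consecutive inputs with the 24 values that follow them, so the network learns to predict all 24 steps at once.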

3. Results and Discussion of KOA-BiGRU-BiTCN-Attention Model

To verify the accuracy and generality of the model, this paper selects the wind power dataset from Xinjiang, China, for a verification experiment and a comparison experiment.

3.1. Example of Xinjiang Wind Power Data

This paper utilizes a total of 35,040 load data points from Xinjiang wind power, spanning from 1 January 2019 to 30 December 2019, along with related meteorological and temporal data to construct and verify a power load prediction model. The power load data have a sampling interval of 15 min, resulting in 96 data points sampled each day. For short-term prediction, we selected the first 34,960 data points as the training dataset and the last 100 data points as the validation dataset. For long-term prediction, the first 28,040 data points were chosen as the training dataset and the last 7000 data points served as the validation dataset.
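A chronological split of this kind can be sketched as below, using the long-term prediction sizes as the example. The helper name `chronological_split` and the placeholder series are assumptions for illustration.

```python
POINTS_PER_DAY = 24 * 60 // 15  # 96 samples per day at a 15 min interval

def chronological_split(series, n_validation):
    """Keep time order: the last n_validation points form the validation set."""
    if not 0 < n_validation < len(series):
        raise ValueError("n_validation must be between 1 and len(series) - 1")
    return series[:-n_validation], series[-n_validation:]

series = list(range(35040))  # placeholder values standing in for the load data
train, validation = chronological_split(series, n_validation=7000)
```

Splitting by position rather than at random keeps every validation point later in time than every training point, which matches how a forecast is used in practice.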

Data Preprocessing

An inspection of the dataset shows that it contains missing values. The number and type of missing values are listed in Table 1:

Table 1.

Number and type of missing values.

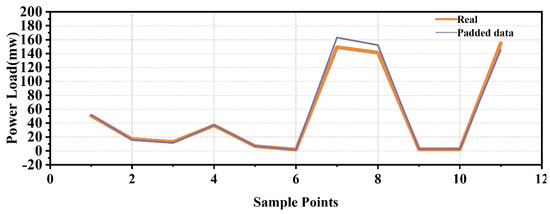

Accordingly, we use the KNN algorithm to fill the missing values:

First, we randomly delete part of the data in a complete time series to simulate missing values, then use the KNN algorithm to fill them in and test its performance, as shown in Figure 7 and Table 2:

Figure 7.

Imputation of missing values for the KNN model.

Table 2.

KNN test performance.

Then, we apply the KNN model to fill the actual missing values and proceed to variable screening and prediction.
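The simulate-then-impute check described above can be sketched as follows. This is a simplified illustration, assuming Euclidean distance over the observed columns and the mean of the `k` nearest complete rows as the fill value. The synthetic data, the value of `k`, and the fixed (rather than random) hidden indices are chosen only to keep the example reproducible.

```python
import math

def knn_impute(rows, k=3):
    """Fill None entries with the column mean over the k nearest complete rows."""
    complete = [r for r in rows if None not in r]
    filled = []
    for row in rows:
        if None not in row:
            filled.append(list(row))
            continue
        known = [j for j, v in enumerate(row) if v is not None]
        # Distance uses only the columns observed in the incomplete row.
        nearest = sorted(
            complete,
            key=lambda c: math.sqrt(sum((c[j] - row[j]) ** 2 for j in known)),
        )[:k]
        filled.append([
            v if v is not None else sum(c[j] for c in nearest) / len(nearest)
            for j, v in enumerate(row)
        ])
    return filled

# Simulated check: hide known values, impute them, and measure the error.
truth = [[float(i), 2.0 * i + 1.0] for i in range(50)]
masked = [list(r) for r in truth]
hidden = [5, 17, 23, 31, 44]  # fixed indices instead of random ones
for i in hidden:
    masked[i][1] = None
imputed = knn_impute(masked, k=2)
mae = sum(abs(imputed[i][1] - truth[i][1]) for i in hidden) / len(hidden)
```

Because the hidden values are known, the imputation error can be measured directly before the method is applied to the real gaps.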

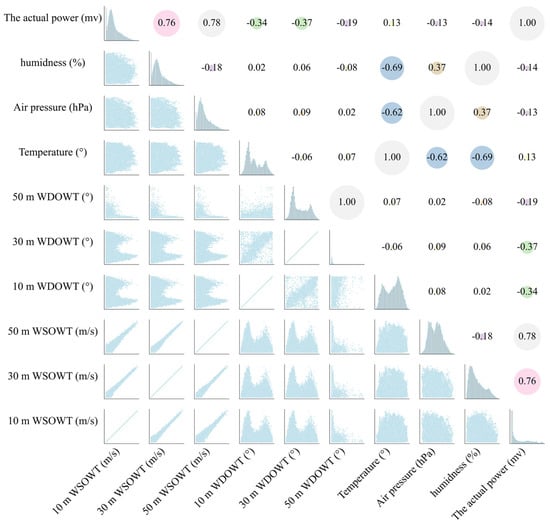

3.2. Selection of Input Variables

After analyzing the correlation of wind speed, wind direction, and other variables with the load data, this paper selects the nine variables with the highest correlation as input variables for training the model and forecasting future load. The correlation matrix and scatter plots are shown in Figure 8:

Figure 8.

Correlation matrix and scatter plots.
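Correlation-based screening of input variables can be sketched as follows. The example assumes the Pearson coefficient and ranking by absolute value; the variable names and numbers are hypothetical and are not taken from the dataset.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def top_k_features(features, target, k):
    """Rank candidate variables by |r| against the target and keep the k strongest."""
    ranked = sorted(
        features,
        key=lambda name: abs(pearson(features[name], target)),
        reverse=True,
    )
    return ranked[:k]

# Hypothetical values, for illustration only.
load = [10.0, 12.0, 15.0, 19.0, 24.0]
candidates = {
    "wind_speed": [3.0, 3.5, 4.2, 5.1, 6.0],
    "wind_direction": [180.0, 90.0, 270.0, 45.0, 200.0],
    "temperature": [5.0, 4.0, 3.5, 2.0, 1.0],
}
selected = top_k_features(candidates, load, k=2)
```

Ranking by the absolute value keeps strongly negative relationships as well as strongly positive ones; in this paper's setting, `k` would be nine.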

Previous studies have shown that deep learning models are more sensitive to numbers between 0 and 1 [20]. Therefore, prior to inputting the raw load sequence data into the KOA-BiTCN-BiGRU model, we normalized the data. After training, the data were denormalized to obtain the actual load forecast values.
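Normalization to the range from 0 to 1 and the corresponding denormalization can be sketched as below. Min-max scaling is assumed here, since the text does not name the scaling method, and the values are hypothetical.

```python
def fit_minmax(values):
    """Return the minimum and maximum used for scaling."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot scale a constant series")
    return lo, hi

def normalize(values, lo, hi):
    return [(v - lo) / (hi - lo) for v in values]

def denormalize(values, lo, hi):
    return [v * (hi - lo) + lo for v in values]

train = [120.0, 80.0, 200.0, 160.0]  # hypothetical load values
lo, hi = fit_minmax(train)
scaled = normalize(train, lo, hi)
restored = denormalize(scaled, lo, hi)
```

The same `lo` and `hi` must be reused when converting model outputs back to load values; otherwise the forecasts end up on a different scale from the measurements.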

3.3. Selection of Evaluation Indicators

To quantitatively evaluate the accuracy of the prediction model, we use three evaluation indicators in this paper: the mean absolute error (MAE), which measures the mean absolute difference between the predicted value and the actual value; the mean absolute percentage error (MAPE), which expresses the error as a percentage of the true value and so shows its relative size; and the root-mean-square error (RMSE), which is the square root of the mean of the squared prediction errors and indicates the magnitude of the prediction error. The calculation formulas are as follows:

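$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|\hat{y}_i - y_i\right|$$

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{\hat{y}_i - y_i}{y_i}\right|$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^2}$$

where $\hat{y}_i$ and $y_i$ are the predicted value and the measured value, respectively, and $n$ is the number of samples.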
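The three indicators can be computed as in the following sketch, which uses their standard definitions. The sample values are hypothetical.

```python
import math

def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(p - a) for a, p in zip(actual, predicted)) / len(actual)

def mape(actual, predicted):
    """Mean absolute percentage error, in percent; actual values must be nonzero."""
    return 100.0 * sum(abs((p - a) / a) for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Root-mean-square error."""
    return math.sqrt(sum((p - a) ** 2 for a, p in zip(actual, predicted)) / len(actual))

actual = [100.0, 200.0, 400.0]     # hypothetical measured loads
predicted = [110.0, 190.0, 400.0]  # hypothetical forecasts
scores = (mae(actual, predicted), mape(actual, predicted), rmse(actual, predicted))
```

MAPE is undefined when a measured value is zero, which matters for wind power series that can contain zero output; such points need separate handling.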

3.4. Setting of Model Parameters

Before the experiment, we set the parameters of the model and its optimization algorithm. The parameters of the KOA are listed in Table 3:

Table 3.

KOA model parameters.

The parameters of the BiGRU-BiTCN-attention model are listed in Table 4:

Table 4.

BiTCN-BiGRU model parameters.

3.5. Load Forecasting Experiment Platform and Result Analysis

3.5.1. Experimental Platform

The experiments described in this paper were run on a GPU (NVIDIA GeForce RTX 3060) in a MATLAB R2023a environment.

3.5.2. Analysis of Ablation Experiment Results

In this experiment, the prediction results of TCN, GRU, TCN-GRU, and BiTCN-BiGRU prediction methods were taken as the comparison reference, recorded as the experimental control group, and compared with the prediction results of the proposed model to confirm the following two points:

- (1) The feature capture efficiency and prediction accuracy of BiTCN-BiGRU are superior to those of the basic BiTCN, BiGRU, TCN, and GRU prediction methods.

- (2) The KOA, as a population-based optimization algorithm, can effectively improve the efficiency of hyperparameter tuning and the prediction accuracy.

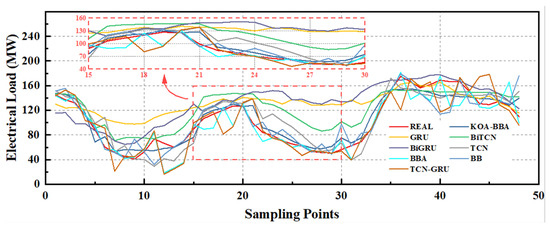

Therefore, on the experimental platform, we conducted relevant experiments and obtained the following results in Table 5 and Figure 9:

Table 5.

Performance comparison of ablation experiments.

Figure 9.

Visualization of the performance of ablation experiments.

The experimental results show that:

The initial model is a network based on a gated recurrent unit (GRU), which performed worst across all evaluation metrics, including RMSE (31.71%), MAPE (39.53%), and MAE (31.99%). After introducing the temporal convolutional network (TCN), the model’s RMSE, MAPE, and MAE improved by 11.38%, 19.48%, and 24.04%, respectively, indicating TCN’s significant advantage in capturing long-term dependencies of time series. The bidirectional GRU (BiGRU) and bidirectional TCN (BiTCN) structures were further utilized to enhance model performance. Compared to unidirectional TCN, the BiTCN model improved by 8.08%, 3.31%, and 6.23% in RMSE, MAPE, and MAE, respectively. The TCN-GRU model, which combines TCN and GRU, also showed enhanced performance compared to the single BiTCN model, with RMSE improving by 6.81%. Furthermore, the BiTCN-BiGRU-attention model, which combines BiTCN and BiGRU with an attention mechanism, achieved significant performance improvements across all evaluation metrics, with RMSE, MAPE, and MAE improving by 8.92%, 28.04%, and 24.49%, respectively. This indicates that the attention mechanism effectively enhances the model’s ability to recognize essential features in time series, thus improving prediction accuracy. The KOA-BiTCN-BiGRU-attention model, with the introduction of the KOA mechanism, achieved the most significant improvements across all indicators, with RMSE, MAPE, and MAE improving by 22.87%, 43.48%, and 30.61%, respectively.

In summary, our experiments demonstrate that the incorporation of TCN, BiGRU, and the attention mechanism significantly enhances the model’s performance in short-term load forecasting. The KOA mechanism further amplifies these improvements, achieving the highest accuracy among all tested models. These results validate the proposed model’s effectiveness and highlight its potential for practical applications in load forecasting.
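The text does not state how the improvement percentages are computed. A common convention, assumed here, is the relative reduction in error with respect to the baseline model. The numbers in the sketch are hypothetical and are not taken from Table 5.

```python
def improvement_pct(baseline_error, new_error):
    """Relative error reduction in percent; positive means the new model is better."""
    return (baseline_error - new_error) / baseline_error * 100.0

# Hypothetical errors: the baseline scores 40.0 and the new model scores 30.0.
gain = improvement_pct(40.0, 30.0)
```

Under this convention the same absolute error reduction yields a larger percentage when the baseline error is small, so percentages measured against different baselines are not directly comparable.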

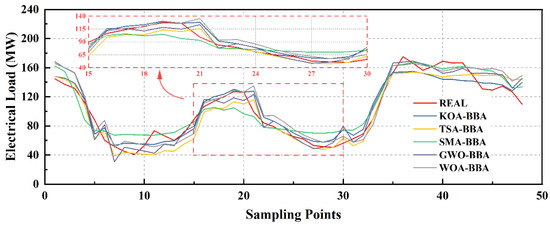

At the same time, in order to verify the optimization efficiency of the KOA, this paper selected the BiTCN-BiGRU-attention model optimized by TSA, SMA, GWO, and WOA optimization algorithms for performance comparison. The relevant algorithms are introduced as follows:

The tunicate swarm algorithm (TSA) is an optimization algorithm proposed by Kaur et al. It is inspired by the swarm behavior that allows tunicates to survive in the deep sea, and it simulates their jet propulsion and swarm behavior during navigation and foraging. The algorithm can solve problems with an unknown search space [51].

The slime mold algorithm (SMA) is an intelligent optimization algorithm proposed in 2020 that simulates the foraging behavior and state changes of Physarum polycephalum under different food concentrations. The slime mold primarily secretes enzymes to digest food. Its front end extends into a fan shape, and its back end is surrounded by a network of interconnected veins. Different food concentrations in the environment affect the flow of cytoplasm in the vein network, producing different foraging states [30].

The grey wolf optimizer (GWO) is a swarm intelligence optimization algorithm proposed in 2014 by Mirjalili et al. of Griffith University in Australia. It is an optimization search method inspired by the prey-hunting activities of grey wolves [52].

The whale optimization algorithm (WOA) is a swarm intelligence optimization algorithm proposed in 2016 by Mirjalili and Lewis of Griffith University in Australia. It is inspired by the bubble-net hunting behavior of humpback whales [53].

Table 6.

Performance comparison of different optimization algorithms.

Figure 10.

Visualization of prediction results of different optimization algorithms.

According to the experimental results, the KOA-BiTCN-BiGRU-attention model outperforms the other variants on all evaluation indexes. Compared to the TSA-BiTCN-BiGRU-attention model, the KOA variant improved RMSE, MAPE, and MAE by 10.53%, 15.95%, and 11.92%, respectively. Compared to the SMA-BiTCN-BiGRU-attention model, the improvements were 13.15%, 35.32%, and 20.51%. Compared to the GWO-BiTCN-BiGRU-attention model, they were 12.32%, 14.47%, and 12.21%, and compared to the WOA-BiTCN-BiGRU-attention model, 16.19%, 25.58%, and 17.06%. These results indicate that the KOA has a clear advantage over the other optimization algorithms tested.

Then, this paper selects several classical models and sets their relevant parameters consistently with the proposed model for comparative experiments. The models include the decision tree (DT), which builds a tree-like model of decision rules by recursively splitting the dataset into smaller subsets. Each internal node represents a test on an attribute, each branch represents the outcome of the test, and each leaf node represents a prediction result. In time series forecasting, DT predicts a target value at a future point based on feature values from past points in time.

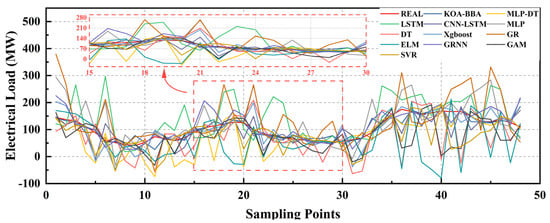

To validate the effectiveness of our proposed model, we compared it against several commonly used time series prediction algorithms, including XGBoost, Gaussian regression, extreme learning machine (ELM), generalized regression neural network (GRNN), generalized additive models regression (GAM), support vector regression (SVR), back propagation neural network (BP), long short-term memory (LSTM), LSTM-CNN, multilayer perceptron (MLP), and MLP-ensemble learning. The specific properties are shown in Table 7 and Figure 11.

Table 7.

Comparative prediction performance of classical algorithms.

Figure 11.

Visualization of comparison of classical algorithm predictions.

On all of the above evaluation indicators, the KOA-BiTCN-BiGRU-attention model showed significant improvement over the other models. Compared to the ELM model, it improved RMSE, MAPE, and MAE by 76.80%, 73.46%, and 79.67%, respectively, showing excellent performance on very complex time series data. Compared to the classical LSTM model, the improvements on the three indicators were 71.12%, 74.02%, and 76.01%, respectively. The model also improved on the remaining models to varying degrees. This further supports the effectiveness and efficiency of the model proposed in this paper.

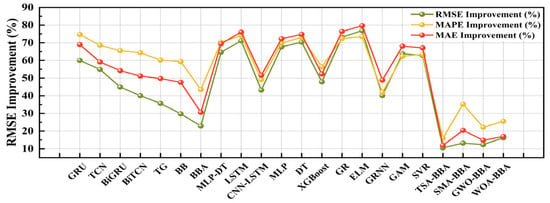

The performance improvements are visualized in Figure 12:

Figure 12.

Visualization of performance improvement.

In order to evaluate the computational cost of the proposed model, several experiments were carried out under the same parameter setting and experimental environment and the training time and predicted execution time of the model were recorded. The details are shown in Table 8.

Table 8.

Execution time analyses.

The KOA-BiTCN-BiGRU-attention model, with an execution time of 1764 s, outperforms other deep learning models optimized with TSA, SMA, GWO, and WOA by offering shorter run times while maintaining high predictive accuracy. Although it has a slightly higher computation time compared to classical models like XGBoost and Gaussian regression, KOA-BiTCN-BiGRU-attention excels in capturing complex time series patterns, especially in tasks involving nonlinearity and long-term dependencies. Its advanced feature extraction and sequence modeling capabilities make it superior in predictive accuracy, showcasing the significant value of deep learning for complex time series forecasting.

In summary, this section verifies the performance of the proposed 24-step multivariable time series short-term load forecasting model. In future work, we will study the model further to improve its performance and calculation speed.

4. Conclusions

Electricity demand forecasting, a field of long-standing research, has developed various mature prediction models and theories. With the advancement of global “carbon peak and carbon neutrality” goals and the implementation of numerous carbon reduction policies, certain high-energy-consumption and high-emission industries face restrictions or even transformation, while new green industries are emerging rapidly. These changes have led to significant adjustments in China’s industrial structure, rendering traditional statistical methods obsolete. Furthermore, in the context of the construction of new electricity markets, the structure of power systems has become increasingly complex, enabling bidirectional interactions between electricity and information flow and allowing demand-side resources to participate in grid peak shaving and frequency modulation, directly affecting grid loads. Therefore, considering the impacts of “dual carbon” goals, the construction of new power systems is particularly important in mid-to-long-term electricity demand forecasting. Given the differences in principles, advantages, and applicable scenarios of various prediction models, the selection and optimization of models are crucial to ensure adaptability to the complex power system environment under the influence of “dual carbon” goals. Against this backdrop, this study has arrived at the following main conclusions and achievements:

- (1) A novel 24-step multivariate time series model for short-term load forecasting has been proposed. The model combines KNN data imputation with a BiTCN bidirectional temporal convolutional network, a BiGRU bidirectional gated recurrent unit, and an attention mechanism. The model's hyperparameters have been optimized using the Kepler adaptive optimization algorithm (KOA), resulting in higher prediction accuracy than the comparison models at the same number of time steps.

- (2) An integrated approach has been adopted by combining different models to construct a comprehensive short-term electricity load forecasting model. This holistic framework not only provides valuable insights for production-related decisions but also serves as a reference point for formulating and implementing relevant policies.

Author Contributions

Conceptualization, M.X. and W.L.; methodology, M.X.; software, M.X.; validation, S.W., J.T. and P.W.; formal analysis, M.X.; investigation, S.W.; resources, P.W.; data curation, J.T.; writing—original draft preparation, M.X.; writing—review and editing, J.T. and W.L.; visualization, J.T. and C.X.; supervision, P.W.; project administration, J.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data cannot be disclosed because of a confidentiality agreement signed with the relevant enterprises.

Acknowledgments

Thanks to Peng Wu for the experimental guidance of this paper.

Conflicts of Interest

Author Congjiu Xie was employed by the Pioneer Navigation Control Technology Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Nomenclature

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial intelligence |
| WDOWT | Wind direction of wind tower |
| WSOWT | Wind speed of wind tower |
| BiGRU | Bidirectional gated recurrent unit |
| BiTCN | Bidirectional temporal convolutional network |
| CNN | Convolutional neural network |
| DL | Deep learning |
| ELM | Extreme learning machine |
| GAM | Generalized additive models regression |
| GRNN | Generalized regression neural network |
| GRU | Gated recurrent unit |
| KOA | Kepler optimization algorithm |
| KNN | K-nearest neighbors |
| LR | Linear regression |
| LSTM | Long short-term memory |
| MLP | Multilayer perceptron |
| PSO | Particle swarm optimization |
| RNN | Recurrent neural network |
| RMSE | Root mean square error |
| SMA | Slime mold algorithm |
| SVR | Support vector regression |
| TCN | Temporal convolutional network |
| WOA | Whale optimization algorithm |
| XGBoost | Extreme gradient boosting |

References

- Petrušić, A.; Janjić, A. Fuzzy Multiple Linear Regression for the Short Term Load Forecast. In Proceedings of the 2020 55th International Scientific Conference on Information, Communication and Energy Systems and Technologies, Niš, Serbia, 10–12 September 2020. [Google Scholar] [CrossRef]

- Yang, G.; Zheng, H.; Zhang, H.; Jia, R. Short-term load forecasting based on holt-winters exponential smoothing and temporal convolutional network. Dianli Xitong Zidonghua/Autom. Electr. Power Syst. 2022, 46, 73–82. [Google Scholar] [CrossRef]

- Yakhot, V.; Orszag, S.A. Renormalization group analysis of turbulence. I. Basic theory. J. Sci. Comput. 1986, 1, 3–51. [Google Scholar] [CrossRef]

- Vaish, J.; Siddiqui, K.M.; Maheshwari, Z.; Kumar, A.; Shrivastava, S. Day Ahead Load Forecasting using Random Forest Method with Meteorological Variables. In Proceedings of the 2023 IEEE Conference on Technologies for Sustainability (SusTech), Portland, OR, USA, 19–22 April 2023. [Google Scholar] [CrossRef]

- Ding, Q. Long-Term Load Forecast using Decision Tree Method. In Proceedings of the 2006 IEEE PES Power Systems Conference and Exposition, Atlanta, GA, USA, 29 October–1 November 2006. [Google Scholar] [CrossRef]

- Zhang, T.; Huang, Y.; Liao, H.; Liang, Y. A hybrid electric vehicle load classification and forecasting approach based on GBDT algorithm and temporal convolutional network. Appl. Energy 2023, 351, 121768. [Google Scholar] [CrossRef]

- Lu, K.; Sun, W.; Ma, C.; Yang, S.; Zhu, Z.; Zhao, P.; Zhao, X.; Xu, N. Load forecast method of electric vehicle charging station using SVR based on GA-PSO. IOP Conf. Ser. Earth Environ. Sci. 2017, 69, 012196. [Google Scholar] [CrossRef]

- Huang, A.; Zhou, J.; Cheng, T.; He, X.; Lv, J.; Ding, M. Short-term Load Forecasting for Holidays based on Similar Days Selecting and XGBoost Model. In Proceedings of the 2023 IEEE 6th International Conference on Industrial Cyber-Physical Systems, Wuhan, China, 8–11 May 2023. [Google Scholar] [CrossRef]

- Zhang, W.; Hua, H.; Cao, J. Short Term Load Forecasting Based on IGSA-ELM Algorithm. In Proceedings of the 2017 IEEE International Conference on Energy Internet, Beijing, China, 17–21 April 2017. [Google Scholar] [CrossRef]

- Hu, Y.; Qu, B.; Wang, J.; Liang, J.; Wang, Y.; Yu, K.; Li, Y.; Qiao, K. Short-term load forecasting using multimodal evolutionary algorithm and random vector functional link network based ensemble learning. Appl. Energy 2021, 285, 116415. [Google Scholar] [CrossRef]

- Aseeri, A.O. Effective RNN-based forecasting methodology design for improving short-term power load forecasts: Application to large-scale power-grid time series. J. Comput. Sci. 2023, 68, 101984. [Google Scholar] [CrossRef]

- Ajitha, A.; Goel, M.; Assudani, M.; Radhika, S.; Goel, S. Design and development of residential sector load prediction model during COVID-19 pandemic using LSTM based RNN. Electr. Power Syst. Res. 2022, 212, 108635. [Google Scholar] [CrossRef]

- Fang, P.; Gao, Y.; Pan, G.; Ma, D.; Sun, H. Research on forecasting method of mid- and long-term photovoltaic power generation based on LSTM neural network. Renew. Energy Resour. 2022, 40, 48–54. (In Chinese) [Google Scholar] [CrossRef]

- Zhang, Q.; Tang, Z.; Wang, G.; Yang, Y.; Tong, Y. Ultra-short-term wind power prediction model based on long and short term memory network. Acta Energiae Solaris Sin. 2021, 42, 275–281. (In Chinese) [Google Scholar] [CrossRef]

- Rick, R.; Berton, L. Energy forecasting model Based on CNN-LSTM-AE for many time series with unequal lengths. Eng. Appl. Artif. Intell. 2022, 113, 104998. [Google Scholar] [CrossRef]

- Fu, Y.; Ren, Z.; Wei, S.; Wang, Y.; Huang, L.; Jia, F. Ultra-short-term power prediction of offshore wind power based on improved LSTM-TCN model. Proc. CSEE 2022, 42, 4292–4303. (In Chinese) [Google Scholar] [CrossRef]

- Al-Ja’afreh, M.A.A.; Mokryani, G.; Amjad, B. An enhanced CNN-LSTM based multi-stage framework for PV and load short-term forecasting: DSO scenarios. Energy Rep. 2023, 10, 1387–1408. [Google Scholar] [CrossRef]

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73. [Google Scholar] [CrossRef]

- Kenndy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995. [Google Scholar] [CrossRef]

- Dorigo, M.; Di Caro, G.; Gambardella, L.M. Ant algorithms for discrete optimization. Artif. Life 1999, 5, 137–172. [Google Scholar] [CrossRef]

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248. [Google Scholar] [CrossRef]

- Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching-learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput.-Aided Des. 2011, 43, 303–315. [Google Scholar] [CrossRef]

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82. [Google Scholar] [CrossRef]

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine predators algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377. [Google Scholar] [CrossRef]

- Braik, M.S. Chameleon Swarm Algorithm: A bio-inspired optimizer for solving engineering design problems. Expert Syst. Appl. 2021, 174, 114685. [Google Scholar] [CrossRef]

- Hashim, F.A.; Hussain, K.; Houssein, E.H.; Mabrouk, M.S.; Al-Atabany, W. Archimedes optimization algorithm: A new metaheuristic algorithm for solving optimization problems. Appl. Intell. 2021, 51, 1531–1551. [Google Scholar] [CrossRef]

- Mohammadi-Balani, A.; Nayeri, M.D.; Azar, A.; Taghizadeh-Yazdi, M. Golden eagle optimizer: A nature-inspired metaheuristic algorithm. Comput. Ind. Eng. 2021, 152, 107050. [Google Scholar] [CrossRef]

- Shadravan, S.; Naji, H.R.; Bardsiri, V.K. The Sailfish Optimizer: A novel nature-inspired metaheuristic algorithm for solving constrained engineering optimization problems. Eng. Appl. Artif. Intell. 2019, 80, 20–34. [Google Scholar] [CrossRef]

- Khishe, M.; Mosavi, M.R. Chimp optimization algorithm. Expert Syst. Appl. 2020, 149, 113338. [Google Scholar] [CrossRef]

- Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Future Gener. Comput. Syst. 2020, 111, 300–323. [Google Scholar] [CrossRef]

- Zhao, S.; Zhang, T.; Ma, S.; Chen, M. Dandelion optimizer: A nature-inspired metaheuristic algorithm for engineering applications. Eng. Appl. Artif. Intell. 2022, 114, 105075. [Google Scholar] [CrossRef]

- Abdollahzadeh, B.; Gharehchopogh, F.S.; Mirjalili, S. African vultures optimization algorithm: A new nature-inspired metaheuristic algorithm for global optimization problems. Comput. Ind. Eng. 2021, 158, 107408. [Google Scholar] [CrossRef]

- Eslami, N.; Yazdani, S.; Mirzaei, M.; Hadavandi, E. Aphid-Ant Mutualism: A novel nature-inspired metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 2022, 201, 362–395. [Google Scholar] [CrossRef]

- Zhong, C.; Li, G.; Meng, Z. Beluga whale optimization: A novel nature-inspired metaheuristic algorithm. Knowl.-Based Syst. 2022, 251, 109215. [Google Scholar] [CrossRef]

- Jafari, M.; Salajegheh, E.; Salajegheh, J. Elephant clan optimization: A nature-inspired metaheuristic algorithm for the optimal design of structures. Appl. Soft Comput. 2021, 113, 107892. [Google Scholar] [CrossRef]

- Kazemi, M.V.; Veysari, E.F. A new optimization algorithm inspired by the quest for the evolution of human society: Human felicity algorithm. Expert Syst. Appl. 2022, 193, 116468. [Google Scholar] [CrossRef]

- Pan, J.S.; Zhang, L.; Wang, R.; Snášel, V.; Chu, S. Gannet optimization algorithm: A new metaheuristic algorithm for solving engineering optimization problems. Math. Comput. Simul. 2022, 202, 343–373. [Google Scholar] [CrossRef]

- Zhang, J.; Wang, S.; Tan, Z.; Sun, A. An improved hybrid model for short term power load prediction. Energy 2023, 268, 126561. [Google Scholar] [CrossRef]

- Li, D.; Ji, X.; Tian, X. Short-term Power Load Forecasting Based on VMD-GOA-LSSVR Model. In Proceedings of the 2021 China Automation Congress, Beijing, China, 22–24 October 2021. [Google Scholar] [CrossRef]

- Abdel-Basset, M.; Mohamed, R.; Abdel Azeem, S.A.; Jameel, M.; Abouhawwash, M. Kepler optimization algorithm: A new metaheuristic algorithm inspired by Kepler’s laws of planetary motion. Knowl.-Based Syst. 2023, 268, 110454. [Google Scholar] [CrossRef]

- Wang, Z.; Qiu, H.; Sun, Y.; Deng, Q. Collaborative Filtering Recommendation Algorithm Based on Random Forest Filling. In Proceedings of the 2019 2nd International Conference on Information Systems and Computer Aided Education, Dalian, China, 28–30 September 2019. [Google Scholar] [CrossRef]

- Liu, X.; Du, S.; Li, T.; Teng, F.; Yang, Y. A missing value filling model based on feature fusion enhanced autoencoder. Appl. Intell. 2023, 53, 24931–24946. [Google Scholar] [CrossRef]

- Mnih, V.; Heess, N.; Graves, A. Recurrent models of visual attention. arXiv 2014, arXiv:1406.6247. [Google Scholar]

- Li, S.; Jin, X.; Xuan, Y.; Zhou, X.; Chen, W.; Wang, Y.X.; Yan, X. Enhancing the locality and breaking the memory bottleneck of Transformer on time series forecasting. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Curran Associates Inc.: Red Hook, NY, USA, 2019; pp. 5243–5253. [Google Scholar]

- Wu, S.; Xiao, X.; Ding, Q.; Zhao, P.; Wei, Y.; Huang, J. Adversarial sparse transformer for time series forecasting. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; Curran Associates Inc.: Red Hook, NY, USA, 2020; pp. 17105–17115. [Google Scholar]

- Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond efficient transformer for long sequence time-series forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 11106–11115. [Google Scholar]

- Wu, H.; Xu, J.; Wang, J.; Long, M. Autoformer: Decomposition transformers with auto-correlation for long-term series forecasting. In Proceedings of the 35th International Conference on Neural Information Processing Systems, Online, 6–14 December 2021; Curran Associates Inc.: Red Hook, NY, USA, 2021; pp. 22419–22430.

- Liu, S.; Yu, H.; Liao, C.; Li, J.; Lin, W.; Liu, A.X.; Dustdar, S. Pyraformer: Low-complexity pyramidal attention for long-range time series modeling and forecasting. In Proceedings of the International Conference on Learning Representations (ICLR 2022), Virtual Event, 25–29 April 2022.

- Zhou, T.; Ma, Z.; Wen, Q.; Wang, X.; Sun, L.; Jin, R. FEDformer: Frequency enhanced decomposed transformer for long-term series forecasting. In Proceedings of the 39th International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 27268–27286.

- Zhang, Y.H.; Yan, J.C. Crossformer: Transformer utilizing cross-dimension dependency for multivariate time series forecasting. In Proceedings of the 11th International Conference on Learning Representations (ICLR 2023 Conference), Kigali, Rwanda, 1–5 May 2023.

- Kaur, S.; Awasthi, L.K.; Sangal, A.L.; Dhiman, G. Tunicate Swarm Algorithm: A new bio-inspired based metaheuristic paradigm for global optimization. Eng. Appl. Artif. Intell. 2020, 90, 103541.

- Mirjalili, S.; Saremi, S.; Mirjalili, S.M.; Coelho, L.D.S. Multi-objective grey wolf optimizer: A novel algorithm for multi-criterion optimization. Expert Syst. Appl. 2016, 47, 106–119.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).