Infrared Image Object Detection Algorithm for Substation Equipment Based on Improved YOLOv8

Abstract

1. Introduction

- (1) Different types of substation equipment often share a similar main structure, so the similarity between classes is high, which can lead to false detections.

- (2) The scale of substation equipment in infrared images varies greatly because of differences in equipment size and shooting distance. Insufficient extraction of multi-scale information results in missed detections.

- (1)

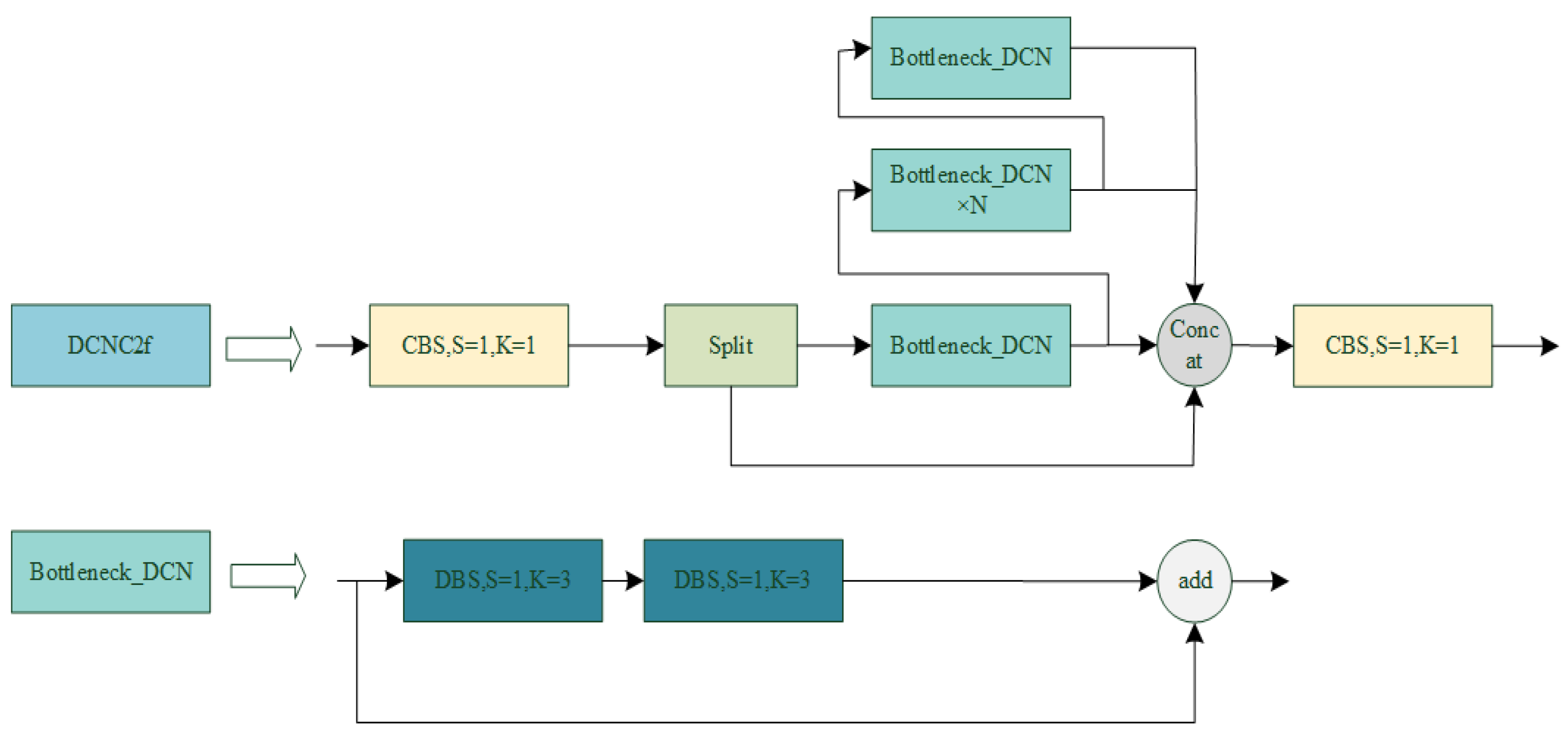

- To solve the problem of false detection caused by high similarity between different classes of substation equipment, a DCNC2f module was established with deformable convolution to improve the feature extraction capability of the model, and to enhance the integrity and effectiveness of the extracted features. The degree of differentiation between the features of different devices is increased, alleviating the problem of false detection.

- (2)

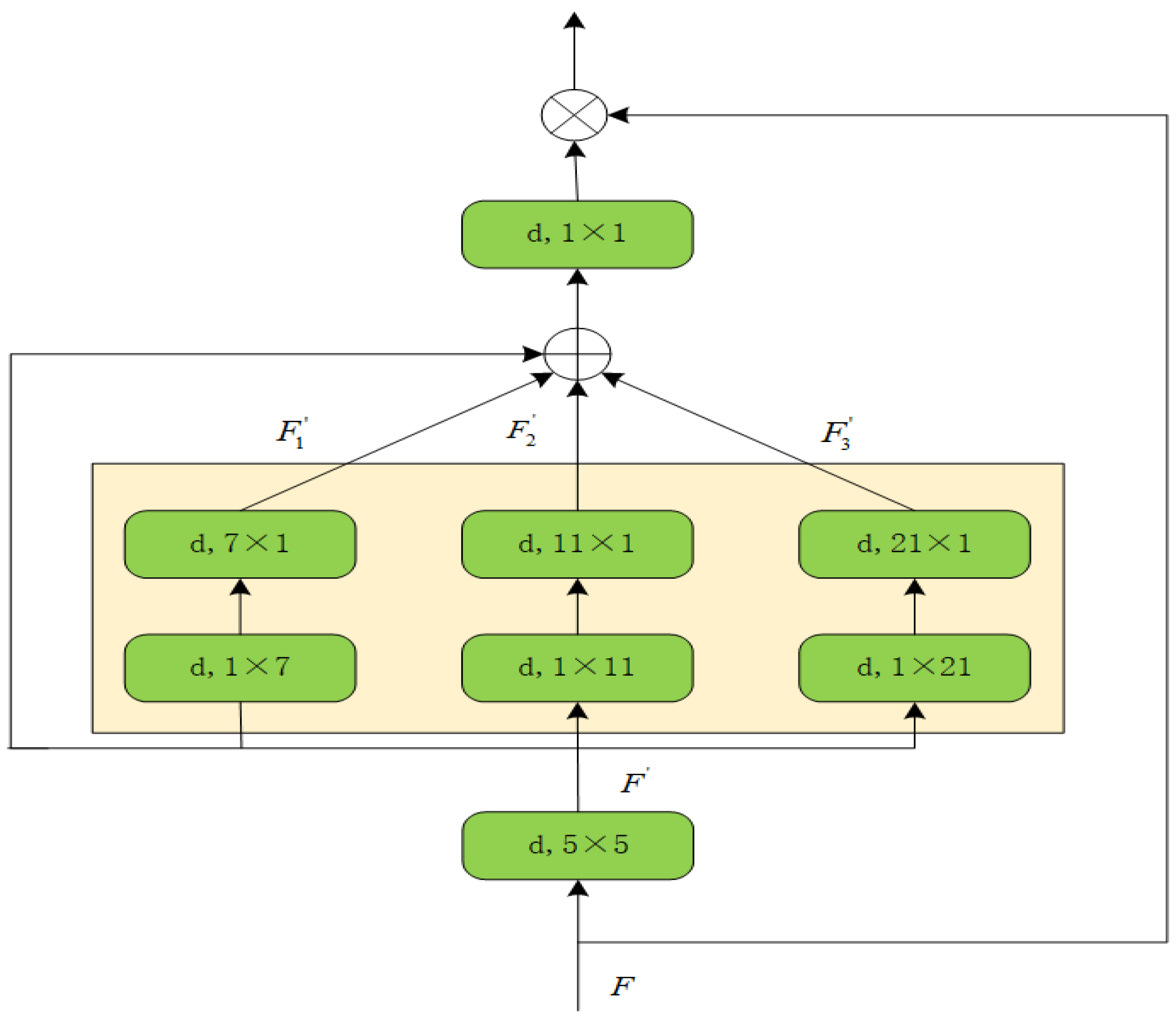

- Aiming at the missing detection caused by the scale change in the substation equipment in infrared images, a multi-scale attention mechanism is introduced to improve the detection ability of the model for multi-scale equipment and reduce the occurrence of missing detection.

- (3)

- The proposed algorithm is compared with other advanced object detection algorithms, demonstrating superior performance in detecting substation equipment in infrared images.

2. Materials and Methods

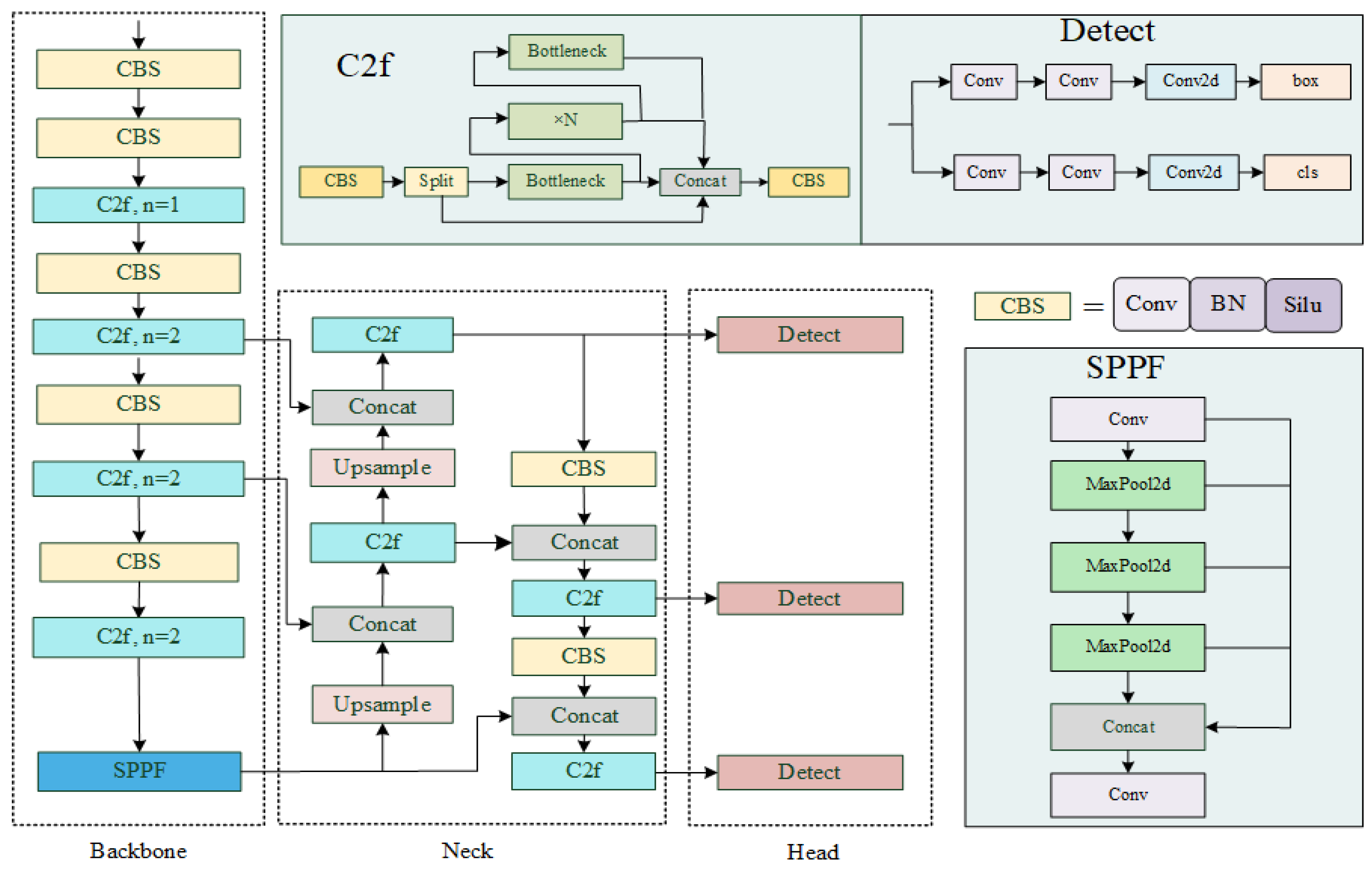

2.1. The Principle of YOLOv8

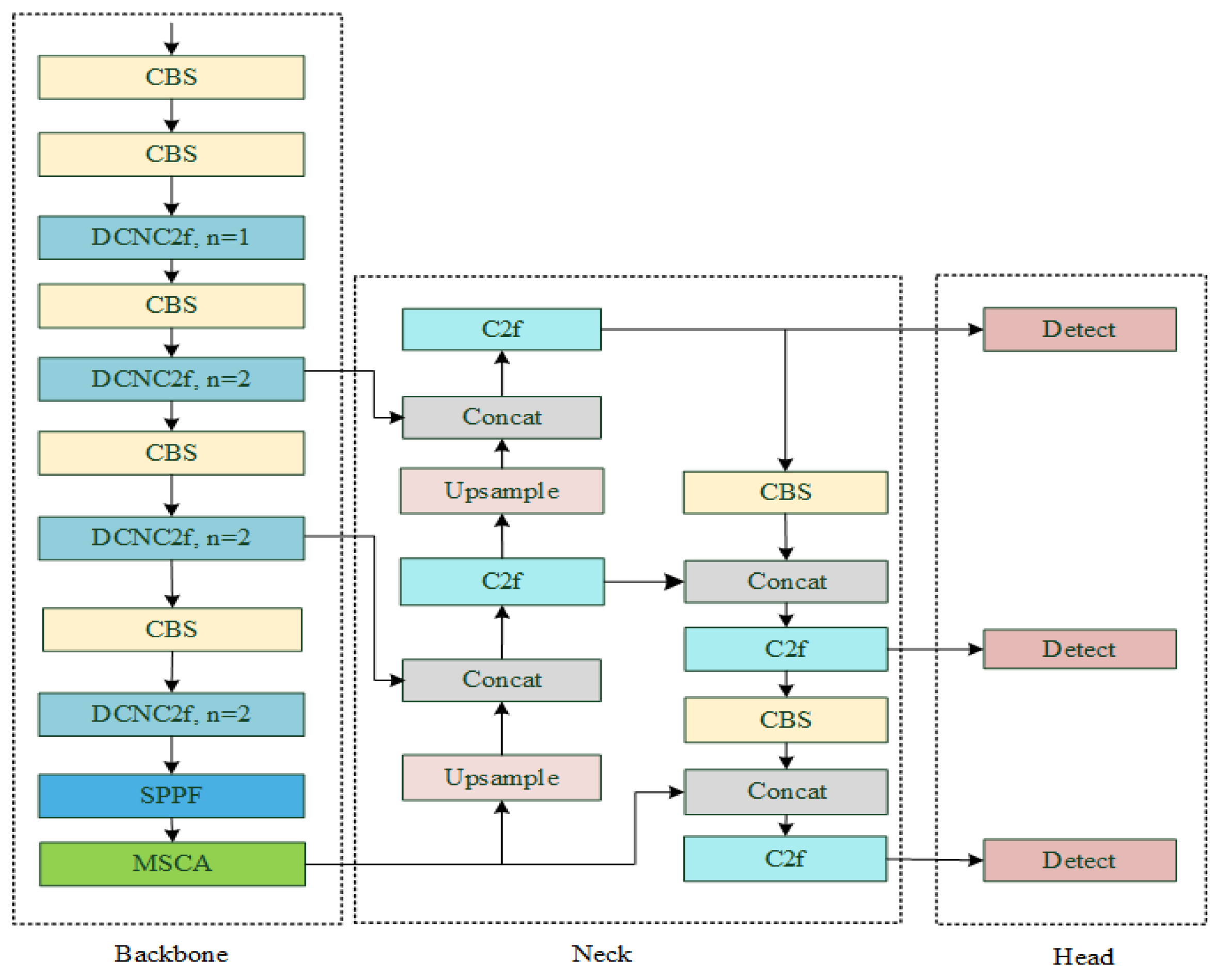

2.2. Improved YOLOv8 Algorithm

2.2.1. Improvement of the C2f Module
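
The DCNC2f module is described as building deformable convolution into C2f. As a minimal sketch of the underlying operation only, and not the authors' module, the pure-Python code below evaluates one output location of a 3×3 deformable convolution: each kernel tap is shifted by an offset and read with bilinear interpolation. The names `bilinear_sample` and `deform_conv_at`, the hand-supplied offsets, and the optional DCNv2-style modulation mask are illustrative assumptions; in a trained layer the offsets are predicted by an additional convolution.

```python
import math


def bilinear_sample(img, y, x):
    """Sample a 2D list `img` at fractional (y, x); pixels outside the image count as 0."""
    h, w = len(img), len(img[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    # Visit the four integer neighbours and weight each by its distance to (y, x).
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(y - yy)) * (1 - abs(x - xx))
                value += weight * img[yy][xx]
    return value


def deform_conv_at(img, weights, offsets, cy, cx, mask=None):
    """Output of a 3x3 deformable convolution at one location (cy, cx).

    weights: 9 kernel weights, row-major over the regular 3x3 grid.
    offsets: 9 (dy, dx) pairs, passed in by hand here (assumption); a real
             layer predicts them from the input feature map.
    mask:    optional 9 modulation scalars (DCNv2-style); defaults to all 1.
    """
    grid = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    if mask is None:
        mask = [1.0] * 9
    out = 0.0
    for w_k, (gy, gx), (oy, ox), m_k in zip(weights, grid, offsets, mask):
        # Regular grid position plus the learned offset for this tap.
        out += w_k * m_k * bilinear_sample(img, cy + gy + oy, cx + gx + ox)
    return out


# Toy 5x5 image with img[r][c] = 5*r + c and a kernel of all ones (hypothetical data).
img = [[5 * r + c for c in range(5)] for r in range(5)]
ones = [1.0] * 9
regular = deform_conv_at(img, ones, [(0.0, 0.0)] * 9, 2, 2)  # zero offsets: plain 3x3 sum
shifted = deform_conv_at(img, ones, [(0.0, 0.5)] * 9, 2, 2)  # every tap moved half a pixel right
print(regular, shifted)
```

With all offsets at zero the operation reduces to an ordinary 3×3 convolution, which makes a convenient sanity check before plugging in predicted offsets.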

2.2.2. Multi-Scale Convolutional Attention
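
Assuming the multi-scale attention used here follows the MSCA design from SegNeXt, which the section title suggests but this text does not confirm, the attention map and output can be written as below. The symbols are assumptions taken from that design: $F$ is the input feature map, $\mathrm{DWConv}$ is a depthwise convolution, $\mathrm{Scale}_0$ is the identity branch, $\mathrm{Scale}_1$ to $\mathrm{Scale}_3$ are depthwise strip-convolution branches with different kernel lengths, and $\otimes$ is element-wise multiplication.

$$
\mathrm{Att} = \mathrm{Conv}_{1\times 1}\!\left(\sum_{i=0}^{3} \mathrm{Scale}_i\big(\mathrm{DWConv}(F)\big)\right), \qquad \mathrm{Out} = \mathrm{Att} \otimes F
$$

In SegNeXt each strip branch pairs a $1\times k$ and a $k\times 1$ depthwise convolution with $k \in \{7, 11, 21\}$; whether the same kernel lengths are kept for substation equipment is not stated here.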

3. Experimental Results and Analysis

3.1. Evaluation Indicators

3.2. Ablation Experiments

3.3. Comparative Experiments

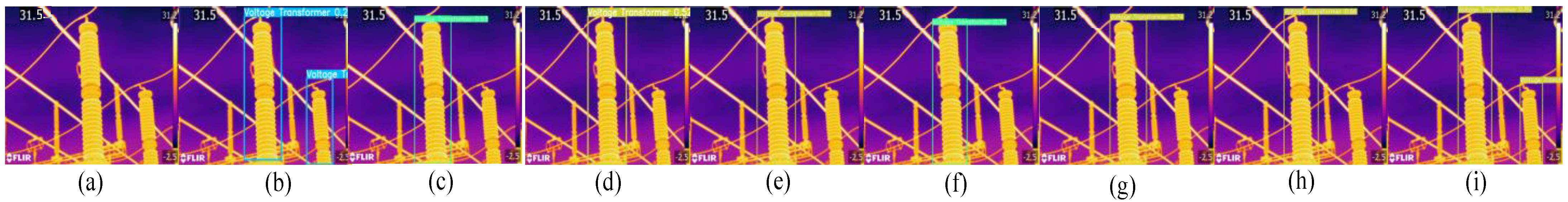

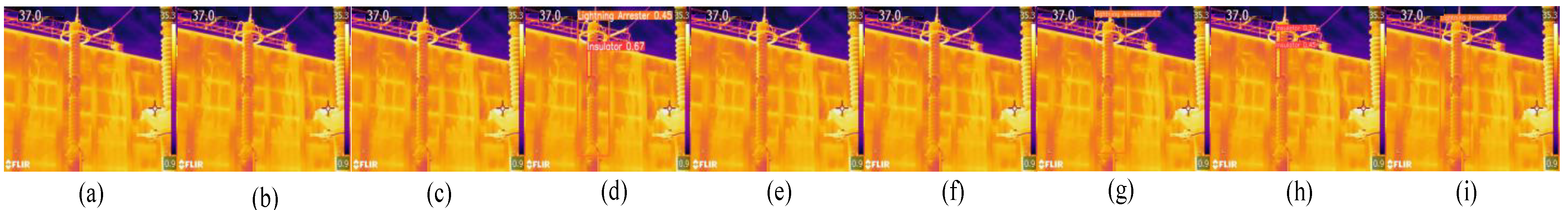

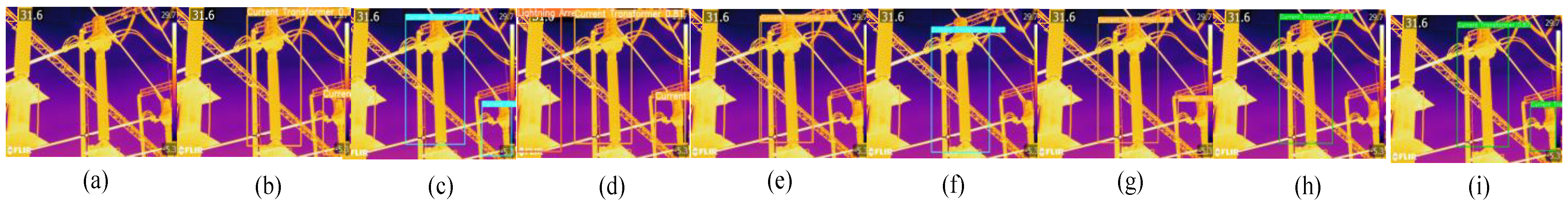

3.4. Visualisation of the Results of Different Methods

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement


| Parameter | Configuration |
|---|---|
| Operating System | Ubuntu 18.04 |
| Deep Learning Framework | PyTorch 1.11.3 |
| CPU | Intel(R) Xeon(R) Gold 6148 CPU |
| GPU | NVIDIA GeForce RTX 3090, 24 GB |
| Graphics Card Memory | 24 GB |
| Programming Language | Python 3.8 |

| YOLOv8n | DCNC2f | MSCA | mAP@0.5/% | mAP@0.5:0.95/% | Params/M | FLOPs/G |
|---|---|---|---|---|---|---|
| √ | | | 90.1 | 64.6 | 3.00 | 8.2 |
| √ | √ | | 91.6 | 68.1 | 3.17 | 7.8 |
| √ | | √ | 91.5 | 67.5 | 3.10 | 8.2 |
| √ | √ | √ | 92.7 | 68.5 | 3.26 | 7.8 |
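
The ablation table reports mAP@0.5 and mAP@0.5:0.95. As a hedged illustration of how the two differ, the sketch below computes average precision for a single class at one IoU threshold and then averages it over thresholds 0.50 to 0.95 in steps of 0.05. It is not the evaluation code behind the table: the function names, the all-point interpolation of the precision-recall curve, and the toy boxes are assumptions made for this example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets, gts, iou_thr):
    """AP for one class. dets: list of (score, box); gts: list of boxes."""
    if not gts:
        return 0.0
    dets = sorted(dets, key=lambda d: d[0], reverse=True)
    matched = [False] * len(gts)
    hits = []
    for _, box in dets:
        # Greedily match each detection to the best still-unmatched ground truth.
        best, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if not matched[j] and overlap > best:
                best, best_j = overlap, j
        is_tp = best_j >= 0 and best >= iou_thr
        if is_tp:
            matched[best_j] = True
        hits.append(1 if is_tp else 0)
    # Precision and recall after each detection, in descending score order.
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, 1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # Make precision non-increasing, then integrate it over recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def ap_50_95(dets, gts):
    """Mean of AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)


# Hypothetical toy data: one perfect box, one box with IoU 0.82, one false alarm.
gts = [(0, 0, 100, 100), (200, 200, 300, 300)]
dets = [(0.9, (0, 0, 100, 100)), (0.8, (200, 200, 300, 282)), (0.3, (500, 500, 600, 600))]
print(average_precision(dets, gts, 0.5), ap_50_95(dets, gts))
```

The second detection counts as correct at an IoU threshold of 0.5 but not at 0.85 and above, so the stricter averaged metric is lower. mAP is then the mean of these per-class values over all equipment classes.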

| Confidence | Model | mAP@0.50/% | mAP@0.50:0.95/% | Params/M | FLOPs/G |
|---|---|---|---|---|---|
| 0.001 | YOLOv8n | 89.8 | 63.1 | 3.00 | 8.2 |
| 0.001 | Ours | 92.4 | 66.7 | 3.26 | 7.8 |
| 0.01 | YOLOv8n | 90.1 | 64.6 | 3.00 | 8.2 |
| 0.01 | Ours | 92.7 | 68.5 | 3.26 | 7.8 |
| 0.1 | YOLOv8n | 90.0 | 66.6 | 3.00 | 8.2 |
| 0.1 | Ours | 92.5 | 69.6 | 3.26 | 7.8 |
| 0.5 | YOLOv8n | 85.3 | 65.6 | 3.00 | 8.2 |
| 0.5 | Ours | 89.7 | 69.6 | 3.26 | 7.8 |
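
The table above varies the confidence threshold applied to detections before evaluation. A small sketch, assuming detections have already been labelled as true or false positives, shows why a high threshold can lower recall: low-scoring detections are discarded even when they are correct. The function name `precision_recall` and the scores below are hypothetical and are not taken from the experiments.

```python
def precision_recall(scored_hits, num_gt, conf_thr):
    """Precision and recall after dropping detections below `conf_thr`.

    scored_hits: list of (confidence, is_true_positive) pairs.
    num_gt:      number of ground-truth objects.
    """
    kept = [hit for score, hit in scored_hits if score >= conf_thr]
    tp = sum(kept)
    precision = tp / len(kept) if kept else 0.0
    recall = tp / num_gt if num_gt else 0.0
    return precision, recall


# Hypothetical detections for 4 ground-truth objects.
hits = [(0.95, True), (0.80, True), (0.40, True), (0.30, False), (0.05, False)]
for thr in (0.001, 0.01, 0.1, 0.5):
    print(thr, precision_recall(hits, 4, thr))
```

In this toy case raising the threshold from 0.001 to 0.5 lifts precision but removes a correct detection scored 0.40, so recall falls. This is one plausible reading of the drop in mAP@0.50 at a threshold of 0.5 in the table, for example from 90.1% to 85.3% for YOLOv8n.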


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Xiang, S.; Chang, Z.; Liu, X.; Luo, L.; Mao, Y.; Du, X.; Li, B.; Zhao, Z. Infrared Image Object Detection Algorithm for Substation Equipment Based on Improved YOLOv8. Energies 2024, 17, 4359. https://doi.org/10.3390/en17174359
