1. Introduction

Edge computing is a transformative approach in the field of distributed computing, designed to bring data processing closer to the data source, such as sensors, Internet of Things (IoT) devices, or local servers. It emerged from the increasing demand for real-time data processing capabilities, as it minimizes the need for data transmission over long distances. To fully understand the role of edge computing, it is essential to address its relationship with cloud computing.

Cloud computing has been one of the most popular topics in the information technology area over the past decades. In October 2007, IBM and Google announced a collaboration in cloud computing [1,2], which marked a significant milestone. Since then, cloud computing has been widely discussed and used. Typically, the cloud computing paradigm is based on transmission control protocol (TCP)/internet protocol (IP) and involves the high integration of configurable computing resources [3]. It encompasses data storage, management software, data transmission network, cloud center, and related applications. Cloud computing operates as a computational paradigm that collects data from the end devices and sends it to a centralized cloud server for further processing and computation. It boasts advanced characteristics such as being service-oriented, loosely coupled, and strongly fault-tolerant [1]. Cloud servers usually possess supercomputing power capable of handling various large-scale computing tasks.

Conventionally, cloud computing is delivered through three service models: Software-as-a-service (SaaS), in which the provider runs, manages, and maintains the applications and the infrastructure on which they run; Platform-as-a-service (PaaS), which provides customers with cloud resources such as hardware to develop, run, and manage consumer-created applications without building and maintaining these resources; and Infrastructure-as-a-service (IaaS), which delivers computing, storage, virtual machines, and other fundamental resources to consumers.

With the development of network-connected intelligent devices and the growing needs of end users, intelligence has become integrated into various aspects of the industry and people’s daily lives [4]. The significantly increasing number of smart edge devices has led to a larger data scale. According to Cisco’s statistics, there were 17.1 billion devices on the Internet in 2016 [5]. The Cisco Annual Internet Report (2018–2023) White Paper published in 2020 [6] indicated that there were 18.4 billion devices connected to the Internet, projected to rise to 29.3 billion by the end of 2023. Dell Technologies also predicts that there will be 41.6 billion IoT devices in 2025, producing 79.4 zettabytes (ZB) of data [7]. These statistics highlight the growing data scales and increasing complexity of data processing tasks. The limitations of cloud-based architectures are becoming evident. Firstly, intelligent devices are often used for real-time data processing. For example, an IoT-enabled intelligent automobile [8] generates a significant amount of data [9], necessitating servers to receive and handle the data. The response speed of these servers is critical. Secondly, end devices are often placed across a wide range of geographical locations. The failure of some end devices and the long transmission distance increase resource consumption. Thirdly, ensuring the security of end devices’ data is another significant concern. Typically, cloud centers collect and store vast amounts of user personal data, including private information. Information leakage can lead to unacceptable consequences.

To address these challenges, an alternative solution that can process the massive data instantly, efficiently, and safely is necessary. Consequently, edge computing has emerged and gained popularity in recent years. It represents a variant of distributed computing architecture designed to process data at the grid edge with the end devices [10]. Instead of transmitting data from each device at the edge of the network to the cloud center, the edge computing architecture enables the edge servers to collect, process, and store the data locally, which improves both the response speed and the safety of sensitive data. Furthermore, the management of the end devices across various geographical locations can rely on these local edge servers, helping eliminate the visibility gaps between cloud servers and local edge devices.

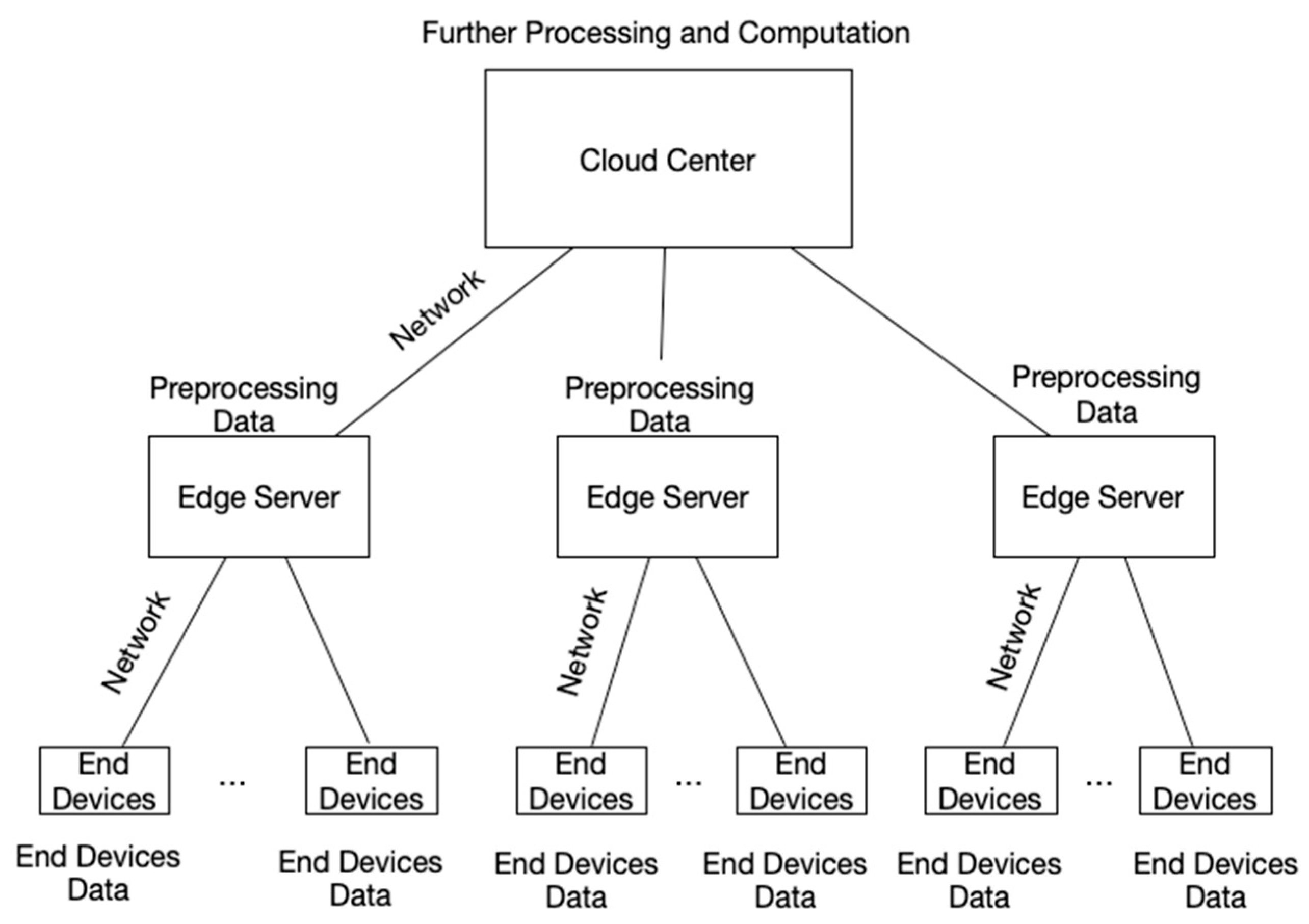

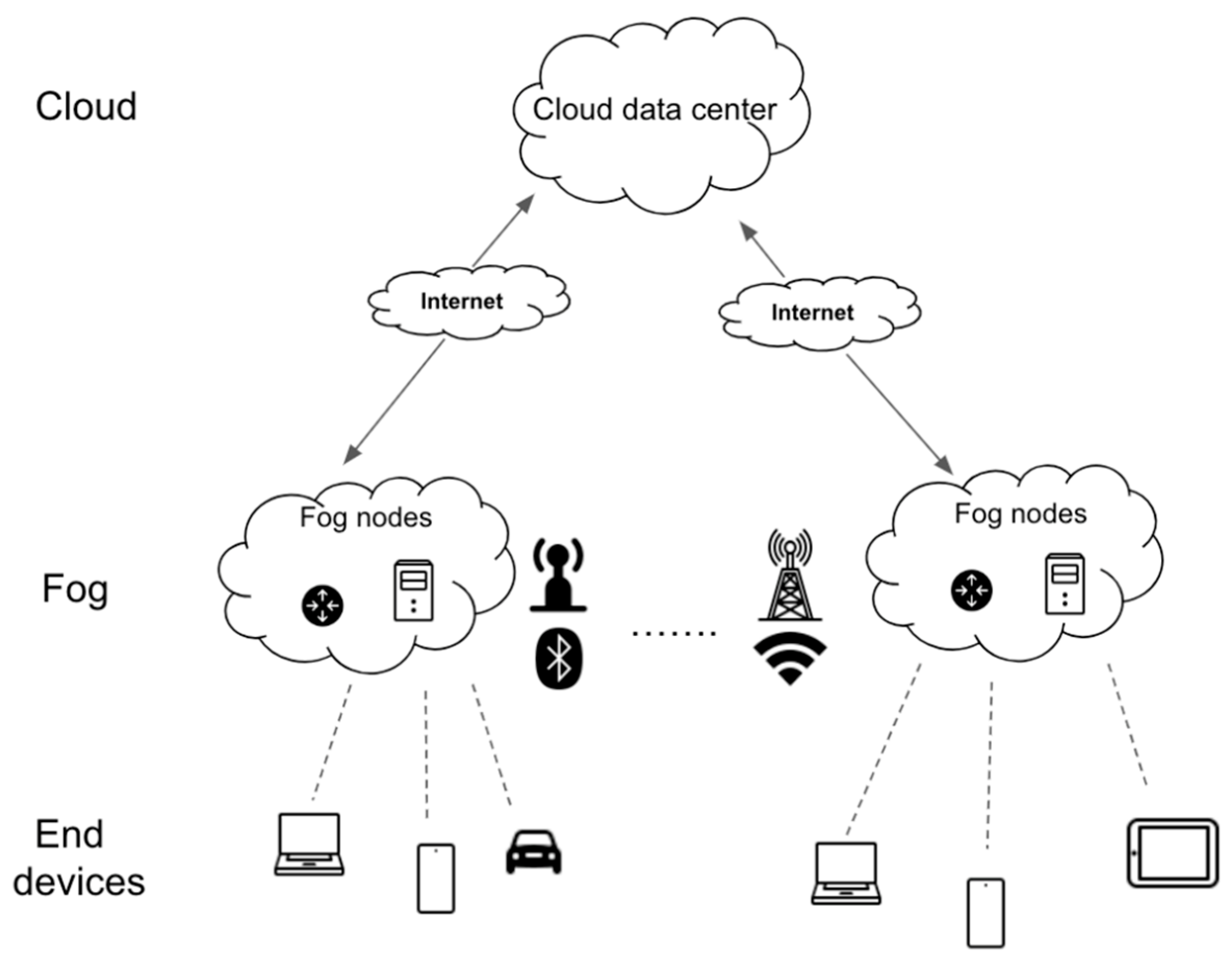

As shown in Figure 1, the edge computing architecture comprises three tiers: central tier, edge tier, and device tier [11]. The central tier aims to provide wide connectivity coverage using cloud computing services, where vast computational resources and storage capabilities are employed to process and manage large-scale data tasks. The edge tier serves as an intermediary layer that extends cloud capabilities closer to the data source by deploying edge servers between the cloud and end devices to handle intermediate data processing, reducing latency and bandwidth usage while still leveraging cloud resources for more intensive tasks. Edge computing further pushes data processing to the very edge of the network, right at or near the data sources. The device tier consists of various end devices with limited computing and storage resources, such as sensors and actuators, responsible for collecting raw data. The edge server layer receives raw data from the local end devices nearby and pre-processes the data. Subsequently, the pre-processed data is sent to the edge computing layer or cloud server for further processing and computing. As interconnected layers, cloud computing and edge computing collectively enhance the efficiency and effectiveness of data processing in today’s digital infrastructures. Processing data in proximity to its source reduces latency, conserves bandwidth [12], enhances security, reduces energy consumption of cloud centers, and improves the responsiveness of applications.

Edge computing communication can be categorized into two layers: (i) access networks, which connect the device tier with the edge tier, and (ii) transport networks, which connect the edge tier with the central tier.
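The tier and network split described above can be illustrated with a minimal sketch. The class name, the summary fields, and the averaging step are illustrative assumptions rather than part of any cited architecture; the sketch only shows the edge tier pre-processing raw readings that arrive over the access network and forwarding a compact summary over the transport network.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    """Raw sample produced in the device tier (hypothetical format)."""
    device_id: str
    value: float


def edge_preprocess(readings: list[Reading]) -> dict:
    """Edge tier: reduce raw readings from the access network to one summary."""
    values = [r.value for r in readings]
    return {
        "devices": len({r.device_id for r in readings}),
        "samples": len(values),
        "mean": mean(values),
        "max": max(values),
    }


def central_ingest(summaries: list[dict]) -> float:
    """Central tier: sample-weighted mean over summaries from the transport network."""
    total = sum(s["samples"] for s in summaries)
    return sum(s["mean"] * s["samples"] for s in summaries) / total


raw = [Reading("s1", 20.0), Reading("s1", 22.0), Reading("s2", 24.0)]
summary = edge_preprocess(raw)          # stays local to the edge server
global_mean = central_ingest([summary])  # only the summary travels upstream
```

In this sketch three raw readings are reduced to a single summary record before anything crosses the transport network, which is the bandwidth-saving behavior attributed to the edge tier.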

In edge computing systems, communications are frequently between access points and end devices. The edge servers typically perform as small data centers that are co-located with the wireless access points. For example, the edge servers can be placed in the local base stations to eliminate the additional expenditure. Access points are also responsible for accessing large-scale cloud data centers. Different access technologies, including cellular wireless networks and fiber-wireless access networks, can be used to connect edge devices and edge servers. When edge devices are positioned at the wireless access point, they can directly access edge servers through the radio channels. In such scenarios, cooperative communication abilities of wireless channels can optimize task offloading through relaying via nearer mobile devices.

In recent years, the 5th generation mobile network (5G) has emerged as a cellular network technology capable of providing higher-quality communication and improving the quality of service. This feature enables 5G to support highly interactive applications with high throughput and low latency [13]. Edge computing can benefit from 5G by bringing computing resources from the cloud to the end users. Compared with the traditional cloud computing model, edge computing in 5G is more suitable for highly computational and interactive applications.

Edge computing has been successfully applied in various areas. Recently, the increasing need for renewable energy integration and decarbonization has led to a rapid expansion in the deployment of distributed energy resources (DERs) and sensors. Power systems have become one of the areas that benefit significantly from edge computing.

Power systems are complex, hierarchical physical networks that generate, transmit, and distribute electricity to consumers, carrying massive amounts of information. Wide-area coordinated management and control among generation, transmission, load, and distribution is crucial to ensure reliable power system operations [14]. Cloud computing is a paradigm that can provide end users with various services by integrating various virtualization technologies on the cloud side. This mechanism enables the operators and end users to access abundant computing resources and data storage capacities via the network without purchasing and maintaining hardware. In the past few decades, cloud computing has been increasingly used in power system analysis and control. For example, in 2014, Mercury Energy used cloud computing to provide services to help customers manage their power consumption [15]. Measurements collected from multiple sources at substations are sent to the cloud centers for further processing and analysis. With the development of smart grids, an increasing number of network-connected devices are integrated into power systems, leading to an exponential increase in data loads. Effectively managing and processing the massive data generated by these widely distributed devices is a critical challenge, especially when traditional centralized approaches must deliver real-time processing. Compared with cloud computing, edge computing can offer a decentralized paradigm that allows the smart devices to process the data at the edge of the network by themselves. Furthermore, the raw data collected by end devices can be stored and pre-processed in the small data centers at the network edge instead of being sent to a centralized center. These features enable real-time monitoring and control of these devices, support advanced metering infrastructure (AMI), and aid in predictive maintenance. Edge computing can also benefit enterprises by increasing the reliability of the power supply systems.

This paper aims to provide a comprehensive review of the state-of-the-art edge computing technology for power system engineers who may not be very familiar with the fundamental science of edge computing yet but are interested in understanding its capabilities, architectures, and existing and potential applications in a variety of domain areas, particularly power systems. The main contributions of this paper include the following:

A review of generalized edge computing architectures and their applications;

A review of popular edge computing technologies;

A review of edge computing applications in power systems that are oriented from the architectures;

A discussion of future opportunities of edge computing in power systems.

The rest of the paper is organized as follows:

Section 2 presents the state-of-the-art of edge computing architecture;

Section 3 reviews the main technologies and related work on edge computing in general areas;

Section 4 reviews the existing applications of edge computing in power systems;

Section 5 discusses the works in this paper and future opportunities for edge computing applications in power systems;

Section 6 concludes the paper.

3. Review of Edge Computing Technologies

The emergence of edge computing has accelerated the development of the IoT and plays a pivotal role in an intelligent society. In addition to edge computing architecture, the technologies used in designing edge computing applications have become popular research topics within the intelligent community. This section mainly includes reviews of computational offloading, resource placement, traffic offloading, caching, energy efficiency, and storage.

3.1. Computational Offloading

In edge computing, computational offloading refers to the technique that transfers heavy computation tasks to nearby edge servers [57]. As resource-demanding applications multiply, hardware improvements in mobile devices and networks alone cannot keep up with demand. Computational offloading has therefore gained attention in edge computing research as the key technology that enables MAEC to run complex applications on edge equipment by delivering compute-intensive tasks to separate, resource-rich edge servers. Much of the related research discusses offloading strategies.
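The basic trade-off behind an offloading decision can be sketched with a simple latency model. This is a minimal illustration, not a strategy from any of the works reviewed below: the `Task` fields, the function names, and all numeric values are assumptions, and the time to return the result to the device is ignored.

```python
from dataclasses import dataclass


@dataclass
class Task:
    cycles: float      # CPU cycles the task requires
    input_bits: float  # data that must be uploaded if the task is offloaded


def local_latency(task: Task, f_local_hz: float) -> float:
    """Execution time on the end device."""
    return task.cycles / f_local_hz


def offload_latency(task: Task, uplink_bps: float, f_edge_hz: float) -> float:
    """Upload time plus execution time on the edge server."""
    return task.input_bits / uplink_bps + task.cycles / f_edge_hz


def should_offload(task: Task, f_local_hz: float,
                   uplink_bps: float, f_edge_hz: float) -> bool:
    """Offload only when the edge path finishes sooner than local execution."""
    return offload_latency(task, uplink_bps, f_edge_hz) < local_latency(task, f_local_hz)


heavy = Task(cycles=4e9, input_bits=8e6)  # compute-heavy, small input
light = Task(cycles=1e8, input_bits=8e7)  # light compute, large input

offload_heavy = should_offload(heavy, f_local_hz=1e9, uplink_bps=2e7, f_edge_hz=1e10)
offload_light = should_offload(light, f_local_hz=1e9, uplink_bps=2e7, f_edge_hz=1e10)
```

Under these assumed numbers the compute-heavy task is worth offloading, while the task with a large input and little computation is not, because its upload time dominates. Practical schemes add further terms such as energy, queueing at the edge server, and deadlines.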

In 2016, Zhang et al. [58] proposed a mobile edge computing-based offloading framework in a cloud-enabled vehicular network. In this framework, an efficient offloading strategy is designed. Meanwhile, considering the limitation of the edge servers and latency tolerance of the computing tasks, a contract-based computing resource scheduling scheme is designed. The experiments show that the proposed scheme improves the performance of the MAEC utility.

In 2020, Zhang et al. [59] also focused on task offloading in vehicular edge computing networks. Various delay-sensitive vehicular applications have emerged due to the rapid development of vehicular networks. MAEC is becoming a promising paradigm, benefiting vehicular edge computing networks by offloading the vehicles’ compute-intensive tasks to nearby edge nodes. The authors introduced fiber-wireless (FiWi) technology to address load-balancing issues and proposed a software-defined networking (SDN)-based load-balancing task offloading scheme in FiWi-enhanced vehicular edge computing networks. This scheme uses SDN to support centralized network and vehicle data management. The experiment results demonstrate that this scheme can achieve superior performance on delay reduction.

Real-time analytics of video content are widely recognized as a prime application for edge computing. They often involve heavy computing tasks and a large amount of data. In 2018, Hung et al. [60] proposed VideoEdge, a hierarchical architecture of cameras, clusters, and the cloud for video analytics that targets query optimization. VideoEdge determines the best trade-off between multiple resources and accuracy, which narrows the search space. For each video query, it chooses the most suitable combination of algorithmic components and places them across the different clusters. VideoEdge assumes that no computing takes place on the cameras and shifts all computer vision processing to the clusters.

Xu et al. [61] proposed a trust-aware task offloading method (TOM) for video surveillance in edge computing-enabled Internet of Vehicles. This method aims to minimize the response time of the services and achieve the load balance among the edge nodes. By analyzing the process of video tasks, the authors formulated task offloading, the time cost of services, and privacy entropy as a multi-objective optimization problem, which they solved with SPEA2 (the improved Strength Pareto Evolutionary Algorithm) [62]. The experiment results show that TOM achieves better performance on edge resource utilization and load balance among the nodes.
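Multi-objective formulations such as the one above rest on Pareto dominance, the relation SPEA2 uses to compare candidate solutions. The sketch below shows only the dominance test and a non-dominated filter. It is not SPEA2 itself (there is no fitness assignment, archive truncation, or variation operator), and the candidate plans, each scored by a hypothetical (response time, load imbalance) pair to be minimized, are made up for illustration.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and strictly better in one.

    All objectives are assumed to be minimized.
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points):
    """Keep the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical offloading plans: (response time in s, load imbalance in [0, 1]).
plans = [(1.0, 0.9), (2.0, 0.4), (3.0, 0.2), (2.5, 0.5), (3.5, 0.3)]
front = pareto_front(plans)
```

Here the plans `(2.5, 0.5)` and `(3.5, 0.3)` are dropped because another plan is at least as good in both objectives; the remaining three represent different trade-offs between response time and load balance.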

3.2. Resource Placement

The geographical distribution of resources is one of the most important components in edge computing as it supports the mobility of edge computing applications. Mobile ends collect data and transfer it to the nearest edge computing nodes. The cloud center supports application mobility by anchoring the server’s position and transferring data to the server through the network.

In the area of resource placement, some works focus on the placement of edge nodes. To calculate the cost and average latency of the edge computing network, factors such as the location and the number of edge nodes must be considered. The study [63] proposed the Cost Aware Cloudlet Placement in Mobile Edge Computing (CAPABLE) strategy, considering both the Cloudlet cost and average end-to-end delay. This strategy also includes a Lagrange-based heuristic algorithm and a workload allocation scheme to minimize the mobility delay. The results show that CAPABLE minimizes the average end-to-end delay between users and their Cloudlets while also reducing the cost of the Cloudlets. In another study [64], researchers presented a framework to address the edge server placement problem. The goal of this framework is to optimize server placement strategies and reduce the costs of deploying edge computing networks.

Nowadays, data-intensive tasks that need large storage space and rich calculation resources have become common in edge computing. Current research usually focuses on transmitting data from end devices to edge servers but ignores the data storage on lightweight edge servers with limited resources. Jin et al. [65] presented an efficient graph-based iterative algorithm for edge-side data placement problems. Compared to the traditional storage strategy, the proposed algorithm increases the cache hit rate significantly.

Geographical mobility leads to another challenge in effectively managing hardware resources. Virtualization is one of the fundamental edge computing technologies to address this issue. Virtualization technology, usually in the form of virtual machines (VMs) or containers, provides flexible and reliable management for edge computing. In [66], the authors designed a complementary algorithm for dynamic VM placement. The proposed algorithm decides whether there is a more suitable place for the VM allocation before the offloaded task is processed at the VM. The algorithm is based on the predicted mobility of users and the load of the base stations’ communication and computation resources.

3.3. Traffic Offloading

Traffic offloading refers to offloading traffic that meets specific offloading rules to mobile edge networks. It is vital in mobile edge computing as it focuses on providing high bandwidth and low latency.

Abdelwahab et al. [67] presented an edge cloud architecture called REPLISOM. REPLISOM is a long-term evolution (LTE)-aware edge cloud architecture with an optimized LTE memory replication protocol. This protocol enables end devices to transmit their memory replicas to neighboring devices by using device-to-device technology. The receiving devices compress the received replicas, and the edge servers pull the completed compressed data from the receiving devices. This architecture alleviates LTE bottlenecks and effectively schedules memory replication to avoid resource allocation conflicts. Evaluation results demonstrate that this architecture can reduce delay and cost for traffic offloading of IoT applications.

To alleviate network bottlenecks and reduce data latency, Kumar et al. [68] investigated vehicular delay-tolerant networks (VDTNs). This architecture enables effective data transmission to various devices using a store-and-carry-forward mechanism. Massive computations, such as decisions about charging and discharging, are performed by mobile devices at the edge of the network. Experimental results demonstrate an increase in throughput and a decrease in response time and latency.

Wen et al. [69] proposed a further improvement of communication in mobile edge computing by introducing an energy-efficient device-to-device (D2D) offloading network scheme. The D2D technique is used for traffic offloading as well as task balancing. Experimental results show that the proposed MEC-D2D model and MEC-D2D-Relay model reduce energy consumption and interference. Additionally, both models can avoid traffic congestion and provide a rapid response to end users.

3.4. Caching

Edge computing caching includes base station caching, distribution networks, and transparent caching, all of which significantly impact content distribution efficiency and service quality. Unlike traditional methods, edge computing caches can store content in edge nodes, enabling users to access data nearby instead of transmitting it from the cloud center. Additionally, edge computing caches reduce network delay and enhance service quality.

Li et al. [70] proposed a cache-aware task scheduling strategy in edge computing to address issues related to improper placement and utilization of caching. They obtained an integrated utility function considering data transmission cost, caching value, and cache replacement penalty. Furthermore, they presented a cache locality-based task scheduling method, treating the task scheduling problem as a weighted graph affected by the positions of required data. Experimental results demonstrate that the proposed task scheduling algorithm performs better than other baseline algorithms in cache hit ratio, data transmission time, response time, and energy consumption.

Zhang et al. [71] illustrated that edge caching can reduce the burden on the backhaul network, but existing works often treat storage and computing resources separately, ignoring the mobility characteristic of edge caching and portable end devices. They proposed a cooperative edge caching framework for 5G networks, utilizing edge computing resources to enhance edge caching capability. In the proposed framework, smart vehicles are employed as collaborative caching nodes for bringing contents to end equipment and sharing content cache tasks with base stations. The experiments show that the proposed framework alleviates content access latency and improves the utilization of cache resources.

Xia et al. [72] investigated the collaborative caching problem in the edge computing environment, aiming to reduce the data caching and migration cost. This collaborative edge data caching problem (CEDC) is formulated as a constrained optimization problem. An online algorithm called CEDC-Online (CEDC-O) based on Lyapunov optimization is proposed.

3.5. Energy Consumption

Edge computing reduces latency, enhances security, and offloads heavy computation from the cloud to the network edge. While energy consumption in cloud centers has been extensively studied, research on the energy efficiency of edge computing is still needed. Communication among edge devices, servers, and cloud centers is complex, making energy efficiency a hot topic in edge computing.

Zhang et al. [73] presented an energy-aware offloading scheme for optimizing resource allocation. The core technology of this scheme is an iterative search algorithm combining interior penalty functions. This algorithm takes the residual energy of smart devices’ batteries as the weighting factor of power consumption and latency. Results demonstrate that the proposed algorithm can find the optimal solution for energy consumption.
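The idea of weighting energy against latency by residual battery level can be sketched as follows. The linear weighting, the normalization constants `e_ref` and `t_ref`, the function names, and the option values are all assumptions made for illustration; they are not the cost function or the iterative search algorithm of [73].

```python
def weighted_cost(energy_j, latency_s, residual_ratio, e_ref=1.0, t_ref=1.0):
    """Blend normalized energy and latency; a low battery puts more weight on energy.

    residual_ratio is the remaining battery fraction in [0, 1] (assumed input).
    """
    w_energy = 1.0 - residual_ratio
    return w_energy * (energy_j / e_ref) + (1.0 - w_energy) * (latency_s / t_ref)


def choose(options, residual_ratio):
    """Pick the option name with the lowest weighted cost.

    options maps a name to a (device energy in J, latency in s) pair.
    """
    return min(options, key=lambda name: weighted_cost(*options[name], residual_ratio))


# Hypothetical figures: local execution is fast but drains the device,
# offloading is slower but costs the device little energy.
options = {"local": (0.9, 0.2), "offload": (0.3, 0.6)}

with_full_battery = choose(options, residual_ratio=0.9)
with_low_battery = choose(options, residual_ratio=0.1)
```

With these made-up numbers a nearly full battery favors the faster local execution, while a nearly empty battery shifts the decision toward offloading to save device energy.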

In [74], Li et al. studied the energy consumption of energy-aware edge server placement. This problem is formulated as a multi-objective optimization problem. An energy-aware edge server placement algorithm involving particle swarm optimization is investigated to find the optimal solution. Experiments indicate that this algorithm can improve the computing resource utilization and minimize the energy consumption.

Hao et al. [75] introduced green energy sources such as wind energy into edge devices. Nodes are divided into clusters, each containing several edge nodes to ensure a minimum distance between nodes in the cluster. A scheduling heuristic algorithm is implemented to schedule tasks and transfer energy based on the clustering method. Experiment results show that the proposed method reduces total energy consumption both in and out of the system.

3.6. Efficient and Safe Storage

The limited storage resources of end devices tend to affect user experience. Therefore, efficient and secure storage solutions are worth investigating in the field of edge computing.

Xing et al. [76] proposed a distributed multi-level storage (DMLS) model with a multiple-factors least frequently used (mLFU) algorithm to address storage limitations. In this model, storage levels are composed of terminal devices at the edge. The mLFU is utilized to upload data from the current device to higher storage levels and subsequently remove this data from the device.
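The upload-then-remove behavior described above can be sketched with a plain least-frequently-used policy. This is not the mLFU algorithm of [76], whose additional factors are not detailed here: the `TieredStore` class, its single access-count criterion, and the dictionary standing in for the higher storage level are all illustrative assumptions.

```python
class TieredStore:
    """Device-level store that pushes its least frequently used item one level up."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.local = {}  # key -> value held on the device
        self.hits = {}   # key -> access count for items held locally
        self.upper = {}  # stand-in for the higher storage level

    def get(self, key):
        """Read from the device if present, otherwise from the higher level."""
        if key in self.local:
            self.hits[key] += 1
            return self.local[key]
        return self.upper.get(key)

    def put(self, key, value):
        """Store locally; when full, upload the least used item and remove it."""
        if key not in self.local and len(self.local) >= self.capacity:
            victim = min(self.local, key=lambda k: self.hits[k])
            self.upper[victim] = self.local.pop(victim)
            del self.hits[victim]
        self.local[key] = value
        self.hits.setdefault(key, 0)


store = TieredStore(capacity=2)
store.put("a", 1)
store.put("b", 2)
store.get("a")     # "a" is now used more often than "b"
store.put("c", 3)  # device is full, so "b" moves to the higher level
```

After the last call the device holds `a` and `c`, while `b` remains readable through the higher level. A multi-factor policy would replace the single access count with a score that combines several criteria.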

In summary, this section outlines popular edge computing technologies and state-of-the-art research. Computational offloading and traffic offloading are two topics that leverage edge computing architecture to extend computing resources and bandwidth. The resource placement subsection discusses how to strategically deploy edge nodes. Caching, storage, and energy consumption pertain to hardware in edge computing.

Table 2 lists the references in this section.

4. Review of Edge Computing in Power Systems

Power systems are large-scale and comprehensive electrical networks that support critical electricity services [77]. In recent years, intelligent monitoring, control, and computational equipment have emerged, transforming traditional power systems characterized by unidirectional communication and centralized energy production into smart grids. These smart grids incorporate bidirectional communication and decentralized energy sources, including renewable energy, and utilize intelligent monitoring and control technologies to enhance grid efficiency, reliability, and sustainability. This technological shift is pivotal in addressing the growing energy demands and decarbonization challenges. This section reviews the applications of edge computing within smart grids to illustrate how it offers new opportunities to solve the issues brought by these modern systems.

4.1. Introduction of Smart Grids and Grid Edge

Smart grids are advanced supply-to-demand networks that automate and decentralize power flow [77], constituting a cyber–physical system that integrates computation, communication, and control (3C) technologies [78]. The IEEE [79] defines smart grids as “self-sufficient systems which allow integration of any type and any scale generation sources to the grid that reduces the workforce targeting sustainable, reliable, safe and quality electricity to all consumers”, while the U.S. Department of Energy [80] defines the grid edge as “The grid edge is where buildings, industry, transportation, renewables, storage, and the electric grid come together. More specifically, it’s the area where electricity distribution transitions between the energy utility and the end user”.

The transition to smart grids provides numerous benefits, including more efficient electricity transmission, quicker and more accurate detection of and restoration from power disturbances, reduced cost for power utility management, lower electricity rates, and better integration of large-scale renewable energy sources [81].

The general structure of the smart grid can be classified into three layers: the user layer, the information system layer, and the physical system layer [78]. The user layer serves as an interface for system operators to interact with the system. The information system layer handles transmission, receives data from the physical system layer, and processes it. The physical system layer consists of multiple sensors, executors, and transmission lines.

Smart grids encompass a vast array of components, including power generation, transmission, distribution, and consumer interface components. They can be further partitioned into functional components. The smart device interface component is responsible for managing smart devices connected to the electronic devices. The storage component provides energy storage capability, improving reliability when renewable energy sources are inconsistent and when supply does not match peak consumption. The transmission component is the backbone of the power system connecting main substations and load centers. The monitoring and controlling components are used for surveillance, prediction, and handling of reliability issues, instability, and congestion. Finally, the demand-side management component aims to modify consumer demand to reduce costs by reducing the use of expensive generators and delaying capacity addition.

While the emergence of the smart grid brings numerous benefits, it also presents challenges. The increasing number of smart meters and other smart equipment results in growing communication demands, potentially leading to high latency issues as end devices communicate with the central system. Edge computing can help mitigate these challenges by leveraging its advanced features. As redundancy of the elements increases in a smart grid, a comprehensive Smart Grid Architecture Model (SGAM) can help determine how to design a smart grid architecture that deploys edge computing. A well-designed architecture is the base of edge computing-enabled smart grids [82].

In recent years, a more complex and comprehensive concept known as the grid edge has emerged. The grid edge encompasses various technologies implemented near the customer side or at the ends of power grids, involving building electrification, distributed power generation and storage [83], data storage and transmission, demand-side management [84], power transmission, and distribution, among others. Typical research topics include connected utilities and infrastructure, energy storage, virtual power plants, distributed energy resources, cybersecurity and data privacy, and more. These emerging topics indicate that the grid edge will be studied more extensively, with both academia and business energy companies updating their strategies to adapt to disruptive solutions such as smart meters and smart grids becoming increasingly prevalent in the market. A study reports that 75% of enterprise data will be produced at the grid edge by 2025 [84]. Meanwhile, experts from Red Hat predict that within the next three years, IT budget costs will account for 30% of the total [84]. Research on the grid edge is necessary and well worth the investment.

The primary goals of grid edge technology programs are to create new energy architectures that address decentralization and handle the increasing volume of data. Historically, electricity was generated by centralized utilities and transmitted to end users. As modern power systems evolve, traditional energy models no longer suffice. Decentralized power centers at the grid edge offer similar benefits with fewer risks and lower costs, motivating both academia and industry to focus on decentralization and grid edge research. By shifting to smaller hubs near the consumer side, energy loss and risks can be reduced, and distribution centers can be more flexible and easily maintained.

Additionally, the emergence of IoT, smart meters, and other technologies has led to a data explosion, overwhelming centralized systems with data collection, transmission, and analysis. Consequently, the power industry prioritizes grid-edge innovations. Significant projects such as the Brooklyn Queens Demand Management (BQDM) program [85] and California’s Demand Response Auction Mechanism (DRAM) [86] exemplify successful grid edge initiatives [84]. Well-designed energy models based on the grid edge can also decrease the costs of energy transportation and storage and improve the stability of the power supply.

As enterprises and countries race to realize digital transformation in the energy sector and net-zero emissions, AI deployed at the edge (edge AI) has become a popular technology. Edge AI can effectively manage distributed energy resources [87]. Noteworthy AI [88] uses AI-enabled vehicle-mounted cameras to help monitor pole-mounted assets. Small boxes containing computation resources and communications gear are attached to trucks and linked to a processing unit in the cab to execute machine learning tasks [89]. Siemens Energy uses AI deployed at the edge of power plants to realize autonomous management [90].

Many researchers are exploring the use of edge computing technology to enhance power system applications. The following applications have been reviewed in this paper:

Power Grid Distribution Monitoring;

Smart Meters Management;

Data Collection and Analysis;

Anomaly Detection;

Measurement Placement;

State Estimation;

Energy Storage;

Resource Management;

Security of Grid Edge Management System;

Renewable Energy Monitoring and Forecasting.

4.2. Power Grid Distribution Monitoring

Power grid monitoring is one of the most important research topics in smart grids today. With advancements in power grid technology, power sources have diversified. Devices for renewable energy, such as solar energy and wind energy, have a varied geographical distribution. Furthermore, the emerging smart end devices used in smart grids have made traditional manual inspection inadequate for ensuring safety and efficiency. Smart grid monitoring and control have become increasingly complex, necessitating real-time responses. Many researchers have focused on this issue and proposed various applications to address these challenges.

In 2018, Huang et al. [91] emphasized the importance of power grid monitoring in preventing severe safety accidents. Recognizing that traditional manual detection cannot meet the needs of modern power grids, the authors proposed an edge computing framework for real-time monitoring. They developed this framework by moving part of the computation from the central cloud to the edge servers. Their experiments showed that the detection delay was reduced by up to 85% compared to cloud-based solutions.

Real-time monitoring of smart meters is another popular topic. In 2020, Tom et al. [92] focused on AMI in smart grids. Given that smart grid infrastructures are geographically distributed, there is a demand for effective monitoring and control systems. A good routing protocol is crucial for the metering infrastructure to communicate effectively with the fog router. A standard routing protocol for IPv6 over Low-Power Wireless Personal Area Networks (6LoWPAN) is the Routing Protocol for Low-Power and Lossy Networks (RPL). When smart meters within the 6LoWPAN network communicate directly with fog routers, nodes far from the fog routers suffer from low packet delivery ratios and high delays. The authors proposed an advanced RPL scheme to address this issue: instead of having edge nodes communicate with the fog router directly, aggregator-based RPL nodes were placed between the edge nodes and the fog router. Performance evaluations showed that this scheme effectively increased packet delivery ratios and reduced end-to-end delays.

Sun et al. [93] proposed an edge computing node planning model for real-time transmission line monitoring. Monitoring transmission lines is crucial to ensure the stable operation of the power grid. The emergence of real-time online monitoring services enhances the safety of the transmission lines, but it also introduces a strict latency requirement. Edge computing can bring the edge nodes closer to the terminals, thereby reducing latency. In their paper, an edge computing node placement model was established, and a genetic algorithm based on a predator search strategy was proposed to overcome the shortcomings of the original genetic algorithm. Simulation experiments demonstrated that this improved genetic algorithm performed better in planning the placement of edge nodes.
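To make the node-planning idea concrete, the following is a minimal sketch of a plain genetic algorithm that chooses edge node sites along a line. It is not the predator-search variant of [93]. The one-dimensional terminal positions, the distance-based latency proxy, and all function names and parameters are assumptions made for illustration only.

```python
import random

def total_distance(sites, terminals):
    """Latency proxy (assumption): each terminal is served by its nearest edge site."""
    return sum(min(abs(t - s) for s in sites) for t in terminals)

def plan_edge_nodes(terminals, candidates, k, pop_size=30, generations=60, seed=0):
    """Pick k sites from `candidates` with a basic genetic algorithm."""
    rng = random.Random(seed)

    def cost(individual):
        return total_distance(individual, terminals)

    # Each individual is a sorted tuple of k distinct candidate sites.
    population = [tuple(sorted(rng.sample(candidates, k))) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=cost)
        parents = population[: pop_size // 2]      # truncation selection (keeps the elite)
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            pool = sorted(set(a) | set(b))         # crossover: draw k sites from both parents
            child = rng.sample(pool, k)
            if rng.random() < 0.2:                 # mutation: swap one site for an unused one
                unused = [c for c in candidates if c not in child]
                if unused:
                    child[rng.randrange(k)] = rng.choice(unused)
            children.append(tuple(sorted(child)))
        population = parents + children
    best = min(population, key=cost)
    return best, cost(best)

# Hypothetical example: terminal positions (km) along a transmission corridor.
terminals = [2, 3, 5, 11, 12, 14, 30, 31, 33, 35]
candidates = list(range(0, 40, 4))
sites, cost = plan_edge_nodes(terminals, candidates, k=3)
print(sites, cost)
```

Because the better half of each generation is carried over unchanged, the best placement found never gets worse from one generation to the next. A predator-search strategy, as used in [93], would additionally adapt the search neighborhood, which this sketch does not attempt.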

The work presented in [94] focuses on the communication system models used for voltage profile monitoring and power loss estimation in smart grids. The author implemented and compared two different architectures: cloud-based and cloud–fog-based. Experimental results showed that the cloud–fog-based approach significantly reduced the total simulation time and the data size transferred to the cloud.

4.3. Smart Meters Management

Energy resources play a crucial role in the development of a country and its economy. A stable energy source can provide significant benefits. To achieve this goal, governments have been making efforts to build and deploy smart grid infrastructures in recent years. As essential devices for measuring and recording energy consumption on the customer side, smart meters need to be optimized for more efficient and stable power systems. This subsection presents state-of-the-art edge computing-based applications for smart meters in smart grids.

In smart grids, smart meters can help to detect anomalies quickly and effectively in real time. However, sending data at short time intervals is often impractical because of bottlenecks in the communication network and storage media. Utomo et al. [95] proposed a prediction technique that combines a deep neural network (DNN), support vector regression (SVR), and k-nearest neighbors (KNN). To predict anomalies in the next few weeks, the timestep is set to one day. This can help users check their usage and decide whether to prepare sufficient resources. The proposed technique also involves an Edge Meter Data Management System (MDMS) and a Cloud-MDMS in model training.

Smart meters are typical end devices that can collect and send energy data. The usage of smart meters can establish a close relationship between smart grids and cyber-physical systems. With the development of the smart grid, an increasing number of smart meters have been installed, and detecting malfunctions across such large numbers of meters has become an issue. Liu et al. [96] proposed a meter error estimation method and studied a low-voltage energy system model. The proposed method includes a decision tree to sort data with different loss levels. It also generates data clusters with different energy usage behavior. Then, a data matrix can be established, and a recursive algorithm is used to solve the resulting equations and estimate the meter error. By comparing the meter error with the regulation threshold, the malfunctioning meter can be detected. Furthermore, this approach can be applied in an edge computing environment.
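The estimate-then-threshold idea can be illustrated with a small sketch. It uses batch least squares instead of the recursive algorithm of [96], ignores line loss, and omits the decision-tree and clustering steps. The energy-balance model, the 2% threshold, and all names are assumptions for illustration.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def estimate_meter_errors(readings, feeder_totals):
    """readings[t][j]: energy recorded by user meter j in interval t.
    feeder_totals[t]: energy recorded by a trusted feeder meter in interval t.
    Assumed model: feeder_totals[t] = sum_j readings[t][j] / (1 + e_j)."""
    n = len(readings[0])
    # Normal equations (R^T R) c = R^T y, with c_j = 1 / (1 + e_j).
    rtr = [[sum(row[i] * row[j] for row in readings) for j in range(n)] for i in range(n)]
    rty = [sum(row[i] * y for row, y in zip(readings, feeder_totals)) for i in range(n)]
    c = solve_linear(rtr, rty)
    return [1.0 / cj - 1.0 for cj in c]

def flag_faulty(errors, threshold=0.02):
    """Indices of meters whose estimated relative error exceeds the threshold."""
    return [j for j, e in enumerate(errors) if abs(e) > threshold]

# Synthetic example: meter 1 over-reads by 5%, meter 2 under-reads by 1%.
true_use = [[1.0, 2.0, 0.5], [2.0, 1.0, 1.5], [0.5, 3.0, 1.0],
            [1.5, 0.5, 2.0], [3.0, 1.5, 0.5]]
true_err = [0.0, 0.05, -0.01]
readings = [[u * (1 + e) for u, e in zip(row, true_err)] for row in true_use]
totals = [sum(row) for row in true_use]
errors = estimate_meter_errors(readings, totals)
print(errors, flag_faulty(errors))
```

In practice the number of intervals must be at least the number of meters and the load profiles must differ enough for the equations to be well conditioned. A recursive formulation would update the estimate as each new interval arrives rather than re-solving the whole system.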

Kumari et al. [97] focused on transferring smart meter data from consumers to the operator within a given time with minimum energy consumption. The authors proposed a smart metering system using edge computing over Long Range (LoRa) communication. All devices in a single house are connected to a smart meter. The edge device and the LoRa node are responsible for processing and transferring the data collected by smart meters. The energy consumption of the various electric devices is treated as a multivariate energy time series. First, the system uses a deep learning-based compression model to reduce the size of the time series. Then, it finds the optimal energy time series that can decrease energy consumption. Finally, an algorithm is adopted to obtain the appropriate spreading factors to transfer the time series from consumers to the operator within the given time. The experiments show that this system can transfer the time series within the given time with higher energy efficiency.
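The link between spreading factor and transfer time can be sketched with the standard LoRa time-on-air formula from the Semtech SX127x datasheets. This is not the selection algorithm of [97]. The deadline check, the default radio settings (125 kHz bandwidth, coding rate 4/5, 8-symbol preamble), and the function names are assumptions for illustration.

```python
import math

def lora_time_on_air(payload_bytes, sf, bw_hz=125_000, cr=1, preamble=8,
                     crc=True, explicit_header=True):
    """Time on air in seconds for one LoRa packet (Semtech SX127x formula).
    cr=1..4 corresponds to coding rates 4/5..4/8."""
    t_sym = (2 ** sf) / bw_hz
    # Low data rate optimization is commonly enabled when a symbol exceeds 16 ms.
    de = 1 if t_sym > 0.016 else 0
    ih = 0 if explicit_header else 1
    numerator = 8 * payload_bytes - 4 * sf + 28 + 16 * (1 if crc else 0) - 20 * ih
    n_payload = 8 + max(math.ceil(numerator / (4 * (sf - 2 * de))) * (cr + 4), 0)
    return (preamble + 4.25) * t_sym + n_payload * t_sym

def feasible_spreading_factors(payload_bytes, deadline_s):
    """Spreading factors (7..12) whose single-packet airtime meets the deadline."""
    return [sf for sf in range(7, 13)
            if lora_time_on_air(payload_bytes, sf) <= deadline_s]

# A 10-byte compressed payload that must be delivered within 100 ms.
print(feasible_spreading_factors(10, 0.1))
```

Each step up in spreading factor roughly doubles the airtime, and with it the transmit energy, in exchange for longer range. This is why compressing the time series first widens the set of spreading factors that still meet the deadline.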

In recent years, the computing capabilities of IoT devices have grown significantly, and processing data on the end devices has become possible. The work in [98] presents a smart metering system that can perform data analysis on the smart meter where the data are produced. This system is based on a multi-core edge computing architecture. The experimental results show that this architecture reduces the processing time.

As the penetration of smart meters and the diversity of participants increase, allowing users to obtain and use the information behind the meter will be highly beneficial. To achieve this goal, the concept of an unbundled smart meter (USM) was introduced in the European Nobel Grid project [99]. The USM has two components: a smart metrology meter and a smart meter extension. The smart metrology meter, a sensor module, includes interfaces that enable users to read real-time information. The smart meter extension is a framework embedded in the USM that supports various new functionalities. In [100], Qin et al. proposed an artificial intelligence agent for the USM based on deep learning. This agent combines deep learning models and real-time data from the USM to help customers and utilities manage their energy usage more effectively. In addition, a spatiotemporal decomposition technique involving different deep learning models for time series is deployed in the agent for load and photovoltaic power forecasting. The experimental results show that this spatiotemporal decomposition agent performs well in controlling operating costs and battery degradation costs.

4.4. Data Collection and Analysis

An advanced smart metering system is essential for the success of the smart grid. This system is one of the important components for data collection and analysis in smart grids. Hence, it plays an important role in ensuring the safe, stable, and efficient operation of smart grids. In this subsection, emerging applications for data collection and analysis using an advanced smart metering system are discussed.

Connectivity verification of the distribution system is an urgent problem. To solve this problem, Si et al. [101] introduced a cloud–edge collaboration mechanism to determine outlier users and valid connections. In this paper, the authors proposed an affinity propagation clustering-based local outlier factor (AP-LOF) algorithm that can effectively identify and verify voltage outliers. Moreover, they set up a mechanism in the cloud center to correct the identified outliers. The experiments are based on actual smart meter data. The results show that this algorithm outperforms traditional methods. Additionally, the calculation efficiency is improved significantly because of the cloud–edge collaboration scheme.
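The local outlier factor at the core of AP-LOF can be sketched in a few lines. The example below is plain LOF on one-dimensional voltage readings and leaves out the affinity propagation clustering step of [101]. The sample voltages, the neighborhood size `k`, and the function name are assumptions for illustration.

```python
def local_outlier_factor(points, k=3):
    """Plain LOF scores for 1-D data; scores well above 1 suggest outliers."""
    n = len(points)
    dist = [[abs(points[i] - points[j]) for j in range(n)] for i in range(n)]
    # k nearest neighbours of each point, excluding the point itself.
    neighbors = [sorted((j for j in range(n) if j != i), key=lambda j: dist[i][j])[:k]
                 for i in range(n)]
    k_dist = [dist[i][neighbors[i][-1]] for i in range(n)]

    def local_reachability_density(i):
        reach = [max(k_dist[j], dist[i][j]) for j in neighbors[i]]
        return len(reach) / max(sum(reach), 1e-12)   # guard against duplicate points

    lrd = [local_reachability_density(i) for i in range(n)]
    return [sum(lrd[j] for j in neighbors[i]) / (k * lrd[i]) for i in range(n)]

# Hypothetical voltage readings (V) from meters assumed to share one transformer.
voltages = [230.1, 229.8, 230.3, 230.0, 229.9, 218.5]
scores = local_outlier_factor(voltages)
suspect = max(range(len(voltages)), key=lambda i: scores[i])
print(suspect, scores)
```

A meter whose voltage profile sits far from its neighbors receives a score much larger than 1, which marks it as a candidate for a wrong connectivity record. Clustering the meters first, as AP-LOF does, would restrict the comparison to meters with similar behavior.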

Improving the analysis capability of the smart meter is another notable research topic. Sirojan et al. [102] pointed out that traditional smart meters can only measure and display energy usage figures. To enable the smart meter to function in modern advanced metering systems, the authors proposed an embedded edge computing paradigm in the smart meter. This paradigm improves accuracy, latency, and bandwidth significantly by bringing data analytics to the smart meters.

4.5. Anomaly Detection

Anomaly detection, also known as outlier detection, is a process that collects and observes data to find behaviors that deviate from the standard pattern. Due to the wide usage of smart meters, anomaly detection of smart meter data has attracted much attention from academia. Abnormal energy consumption, such as electricity leakage and theft, occurs frequently in daily life. Therefore, anomaly detection of meter data can benefit society and the economy significantly.

One of the detection methods is centralized detection. This method, which combines multiple techniques such as big data processing, analyzes the smart grid data uniformly. Infrastructure such as smart meters measures consumers’ energy consumption and sends the measurements to the data center for analysis. For further processing, the centralized facilities execute detection algorithms on the large volume of data gathered from electricity smart meters, and these algorithms perform well in detecting anomalous power consumption. Cui et al. [103] focused on centralized anomaly detection in school electricity facilities. The authors investigated hybrid anomaly detection models and collected electricity consumption data for analysis. According to the evaluation, the system can effectively detect anomalous electricity consumption in the school. Silva et al. [104] proposed a centralized anomaly detection method based on Long Short-Term Memory (LSTM) and Negative Selection. This method can be applied to anticipate anomalies in electricity consumption and to monitor power consumption in real time.

Another commonly used detection method is decentralized detection. The traditional centralized anomaly detection methods rely on installing many electricity meter devices in households. The power consumption data are measured and collected by these meters and transmitted to the centralized data center for further processing and analysis. In recent smart grid architectures, a large number of smart devices are distributed across various facilities over a large geographical area for monitoring power consumption. It is difficult for traditional centralized detection methods to handle such a large amount of data generated by smart meters. In this case, edge computing has become one of the solutions to reduce latency and provide better performance in data processing. Liang et al. [105] proposed an anomaly detection method with an edge computing architecture. Instead of applying the anomaly detection model in the data center, the authors distributed the model on the edge node devices. Moreover, to reduce the delay, two-way communication is adopted in this method. The experimental results show that this method greatly reduces the communication delay and improves detection accuracy. Zheng et al. [106] presented an edge computing-based electricity theft detection method. Electricity theft by low voltage (LV) users could cause power loss and electric shock. It is essential to monitor and identify users who commit electricity theft. However, smart meters of LV users occasionally fail to upload data to the centralized advanced metering infrastructure. In the proposed approach, the Granger causality test is applied in the distribution transformer unit with edge computing. Since the distribution transformer unit collects the data without distortion, the proposed method can prevent the distortion caused by upload failures. Furthermore, fog computing can be introduced as another decentralized paradigm for anomaly detection. Jaiswal et al. [107] proposed a hierarchical fog computing architecture to develop anomaly detection machine learning models. The proposed anomaly detection consists of two parts: model training and anomaly detection. The evaluation results confirm the efficacy of the proposed architecture.

4.6. Measurement Placement

MEC extends a cloud computing scheme and leverages servers near end-users at the network edge to provide a cloud-like environment. An appropriate measurement placement strategy plays a crucial role in the performance of such service-based applications. The features of modern smart grids, such as large-scale geographical distribution, bring challenging issues to measurement placement. In this subsection, several research topics on measurement placement are summarized and discussed.

Zhao et al. [108] focused on low-delay resource scheduling in power systems. The paper notes that as an increasing number of smart devices are used in smart grids, the massive data and calculations become heavy burdens for the power system. To improve processing efficiency, the authors proposed two heuristic algorithms combined with an edge computing architecture. In the first stage, which addresses the virtual machine sequencing issue, the virtual machines are sorted with the critical path algorithm to reduce the computing time. In the second stage, an enhanced best-fit algorithm is used to improve the placement strategy, avoiding an increase in computing time. The experiments show that this two-stage algorithm ensures the optimal placement of virtual machines and effectively shortens the computing time of the power system.
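The placement stage can be illustrated with the classic best-fit heuristic. The sketch below is plain best fit, not the enhanced variant of [108]. The caller is assumed to supply the virtual machines already ordered (for example, by a critical-path ranking), and the single resource dimension, identical servers, and function name are assumptions for illustration.

```python
def best_fit_placement(vm_demands, server_capacity):
    """Place VMs in the given order; each VM goes to the open edge server
    that would be left with the least spare capacity after hosting it."""
    free = []          # remaining capacity of each opened server
    placement = []     # placement[i] = index of the server hosting VM i
    for demand in vm_demands:
        if demand > server_capacity:
            raise ValueError("VM demand exceeds server capacity")
        fitting = [i for i, spare in enumerate(free) if spare >= demand]
        if fitting:
            target = min(fitting, key=lambda i: free[i] - demand)
        else:
            free.append(server_capacity)   # open a new server
            target = len(free) - 1
        free[target] -= demand
        placement.append(target)
    return placement, free

# Five VMs (CPU units) on servers with 6 units each.
placement, free = best_fit_placement([4, 3, 2, 5, 1], server_capacity=6)
print(placement, free)   # [0, 1, 0, 2, 2] [0, 3, 0]
```

Packing each virtual machine into the tightest feasible server keeps larger gaps available for later, larger requests, which is the property a placement stage relies on to avoid opening extra servers.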

Fault detection plays a primary role in modern smart grids. In [109], a framework based on the phasor measurement unit (PMU) and edge computing is proposed for fault detection and localization. In this framework, PMUs with embedded personal computers are placed at a primary substation and at a secondary substation at the end of a feeder. Fault detection and localization are thus performed at the edge of the local network, which eliminates the communication delay and the data loss.

4.7. State Estimation

In modern power systems, state estimation (SE) is a necessary approach to monitor power grids. SE is a method to process the imperfect measurements and find the actual values of the unknown variables. It is a digital scheme that can estimate the true state of the power system. Modern power systems perform many functions, such as generating and transmitting electricity across multiple geographic areas. Computer-based management systems have emerged for controlling and managing such complex systems. Power systems typically are divided into several areas. Different areas are interconnected with each other. All areas need to keep the frequency the same under steady-state conditions [110]. However, power systems constantly suffer from disturbances covering a wide range of conditions. Therefore, real-time monitoring and control are essential functions in power systems. SE can provide real-time data for many of the central control and dispatch functions in a power system [111].

In power systems, there are always some imperfect measurements that are considered unknown variables. SE can estimate the true values of these unknown variables by using statistical criteria. With the development of the smart grid, the volume of the measuring data is increasing. In this situation, real-time control requires a wider bandwidth and more computing resources. To solve this issue, the state estimation can be executed at the edge nodes in the local area. Then, the estimated results can be updated in the control center. Edge computing is an ideal architecture to achieve this goal.
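A minimal sketch of this edge-then-center flow is given below, using weighted least squares (WLS), a standard statistical criterion for SE. It covers only the simplest case, in which several sensors directly measure one shared state variable, so the WLS solution reduces to an inverse-variance weighted mean. The measurement values, variances, and function names are assumptions for illustration.

```python
def wls_estimate(measurements, variances):
    """WLS estimate of a single directly measured state.
    With H a column of ones and W = diag(1 / variance), the solution
    (H^T W H)^-1 H^T W z is the inverse-variance weighted mean."""
    weights = [1.0 / v for v in variances]
    estimate = sum(w * z for w, z in zip(weights, measurements)) / sum(weights)
    return estimate, 1.0 / sum(weights)   # estimate and its variance

def fuse_at_control_center(local_results):
    """Combine (estimate, variance) pairs reported by the edge nodes."""
    estimates = [e for e, _ in local_results]
    variances = [v for _, v in local_results]
    return wls_estimate(estimates, variances)

# Two edge nodes observe the same bus voltage magnitude (per unit).
node_a = wls_estimate([1.03, 0.99], [1e-4, 1e-4])
node_b = wls_estimate([1.00], [4e-4])
fused, fused_var = fuse_at_control_center([node_a, node_b])

# For comparison: a centralized estimate using all raw measurements.
central, central_var = wls_estimate([1.03, 0.99, 1.00], [1e-4, 1e-4, 4e-4])
print(fused, central)
```

In this simplified setting, fusing the local estimates gives the same result as processing every raw measurement centrally, while each edge node sends only two numbers upstream. Real SE involves many coupled state variables and nonlinear measurement functions, which this sketch does not model.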

Meloni et al. [112] proposed a cloud–edge-based architecture for SE in modern smart grids. This solution combines cloud resources and edge computing advantages. Meanwhile, virtualization techniques are used to decouple the processing of measurement data from the physical devices. The traditional evaluation of the state of the power grid is performed at a slow rate and is suitable for steady-state operating conditions. The increasing scale of the power grid challenges power management. Therefore, the proposed system includes sensing devices such as PMUs that can dynamically monitor and control the system and properly handle anomalous states. This effective wide-area measurement system is based on PMUs and is bandwidth-consuming. To improve accuracy and reduce delay, edge computing is introduced. The edge devices in the network are considered micro-cloud servers that host the advanced processing functions and application modules. The results show that this cloud–edge-based architecture can meet the accuracy and latency requirements of the estimation. The bandwidth usage is greatly reduced even when the PMUs execute full operating estimation. In another work, Kuraganti et al. [113] proposed a distributed hierarchy-based framework to ensure that the SE data are not attacked or manipulated. Typically, the data for SE are collected from the PMUs and transmitted to a fixed center for further processing. The proposed framework guarantees that both data aggregation and SE are executed on random devices. The simulations on the Institute of Electrical and Electronics Engineers (IEEE) 5-bus system and IEEE 118-bus system show that the framework can effectively protect the SE data.

4.8. Energy Storage

Wide-area power outages have become more frequent in recent years due to drastic climate change. Extreme weather now occurs more often, and these conditions challenge the stability and recovery capability of the power system. The impact of power outages is more significant than before. To enhance the resilience of the power system, Chuangpishit et al. [114] presented an advanced mobile energy storage system (MESS). MESS can be considered a primary technology to offer a clean alternative to diesel or gas generators and provide emergency outage management. Typically, the MESS is small in size and cannot supply all the critical loads during the outage recovery time. There are two further challenges: reconfiguring the customer connections and dedicating the backup energy storage system, and the increasing size and number of devices that are considered critical loads. As the technology of MESS evolves, the system size and the grid scale are growing rapidly. The article introduces several MESS use cases with planned or unplanned power outages. It also shows the business benefits the MESS can bring. The results indicate that MESS is an ideal power supply during recovery time. However, MESS is dependent on geography and some other distribution system characteristics. Utilities should use MESS based on their conditions and requirements.

4.9. Resource Management

Since the grid edge is a comprehensive concept covering a series of resources at the customer side, behind-the-meter (BTM) resources at the grid edge have become a popular topic in recent years. In [115], Y. Y. C. Zhang illustrated that BTM resources are treated as a primary component of the power grid. These resources, such as DERs and flexible loads, are important during the traditional power systems’ transition to net-zero emissions and 100% renewable generation. Therefore, monitoring and forecasting these resources are critical goals in power system management. This paper focuses on discussing the gaps and issues of grid edge visibility. A gap is defined in this paper as “the deficiency in the performance of a grid entity’s responsibilities (e.g., efficiency or reliability) as a result of limitations in either the existing sensing and measurement technologies or the surrounding institutional frameworks or standards”. The gaps in grid edge visibility fall into four categories: weather forecasting, grid edge monitoring, data standards, and stakeholder obstacles.

The first gap is weather sensing and forecasting. Accurate real-time forecasts and weather monitoring play an important role in forecasting grid edge DERs and the net load. The traditional method relies on third-party weather forecasting data, which are collected on a very large spatial and temporal scale. To improve the accuracy and reduce the latency of the weather data, one solution is to develop local weather monitoring sensors. The local weather monitoring sensors at the grid edge can provide real-time weather data and benefit resource management. The second gap is the lack of real-time visibility of grid edge components. One avenue to address this issue is to sort the grid edge devices by type and by load, since different devices are suited to different monitors for collecting data. The third gap is the lack of standard data formats among different applications. With the development of grid edge resources, standard data formats can increase communication effectiveness. Finally, limited stakeholder capability and interest also hinder grid edge visibility.

As distributed energy resources become the secondary side of the grid edge, distributed grid edge control has become a new challenge for utilities. For distributed utility management, volt and var are two primary parameters. However, objectives such as minimizing distribution loss, saving energy, and reducing peak demand are far from easy for existing control strategies to achieve. Therefore, Moghe [116] presented a distributed grid edge control scheme using autonomous fast-acting hybrid power electronics devices.

The first contribution is that the presented distributed control strategy is an “edge-up” architecture instead of the traditional “top-down” approach. Autonomous devices called Edge of Network Grid Optimization (ENGO) devices are introduced to realize the “edge-up” strategy. ENGO devices are managed by a software layer called the Grid Edge Management System (GEMS) [Wärtsilä Energy Storage, GEMS Digital Energy Platform. Available online: https://storage.wartsila.com/technology/gems/ (accessed on 27 June 2024)]. The ENGO-GEMS system provides an effective solution for utilities to manage the distributed grid and achieve the objectives mentioned above. Furthermore, a control principle, implemented through the coordination of set points between all ENGO devices and the major assets, was presented in the ENGO-GEMS system.

Due to the diversity of energy resources and increasing demand for energy, the way the power system produces and transmits energy is becoming increasingly complex. The adoption of these new energy resources presents new grid management challenges. In recent years, artificial intelligence (AI) and machine learning have been introduced at the grid edge to ensure the reliability and resiliency of energy systems. In [117], Y.C. Zhang et al. proposed edge AI, an advanced AI technology that can handle the complex grid edge more efficiently. The connected physical devices near the customer are embedded with AI applications and are in charge of computation. Rather than completing the computation in the central data center, handling the data on the devices close to where the data are produced can make the operation of edge devices more stable and reliable.

4.10. Security of Grid Edge Management System

Network-constrained energy management systems at the grid edge depend on the interconnection of a circuit segment to deliver and receive load measurements. However, this raises the risk of invisible false data injection attacks (FDIAs). To mitigate this risk, the work in [118] proposes a framework to detect FDIAs on loads and replace the anomalous data with forecasted values. The detection algorithm in this framework comprises seven steps. The first step is to implement state estimation with the received measurements. Then, a bad data detection algorithm is applied to check for anomalies in the load. If anomalous data are detected, the system replaces the bad data with the forecasted values. Otherwise, a data-driven autoencoder (AE) approach in another layer is responsible for identifying potential attacks in the measurements. If there is no indication that the measurements are attacked, the measured data can be used in a grid-edge energy management system. This security enhancement framework is tested by using an IEEE 13-bus system with three integrated DERs. The evaluation results show that the proposed framework can effectively mitigate the risk of FDIAs and enhance the resilient operation of grid-edge energy management systems.

The emergence of inverter-based DERs prompts diverse renewable energy sources to integrate into the primary grid. Inverter-based DERs bring many considerable benefits, such as improving power quality by minimizing harmonics and responding rapidly to fault isolation. Although inverter-based DERs can provide improvements, there are still challenges that need to be resolved. The first challenge, as presented by Gao et al. [119], is that the inherent instability of renewable energy sources threatens the reliability of the system. The existing forecasting method still cannot predict the variance between estimated and real values, which can result in serious reliability issues. The second challenge is the stability of the system. Low inertia can reduce the system’s stability margin. To address the limitations mentioned above, the authors proposed a cyber–physical security assessment framework. The framework consists of a cyber–physical system model involving dynamic attributes of inverter-based DERs. The proposed framework was evaluated with IEEE 13-node and 123-node test feeders, and the results show that this framework demonstrates significant improvement in resilience and stabilization.

4.11. Renewable Energy Monitoring and Forecasting

In recent years, the shortage of energy resources has become a popular topic. It is urgent to solve this issue by improving the efficiency of energy usage and developing renewable energy resources. This subsection focuses on monitoring and forecasting the generation and consumption of power produced by renewable energy resources.

In recent years, renewable energy power facilities, such as photovoltaic systems, have been involved in a digital revolution. With the increasing scale of distributed renewable energy systems, it is essential to develop a new computing paradigm for monitoring. In [120], Abdelmoula et al. proposed a framework for distributed photovoltaic systems monitoring and fault detection in a smart city. This edge computing framework enables the data to be processed at the edge of the network, reducing time and bandwidth consumption. Four lightweight machine learning models are tested, and the best performers are implemented in the edge nodes. The experimental results show that this framework effectively improves the accuracy of fault detection in photovoltaic systems.

In [121], Lv et al. focused on forecasting short-term electricity generation and consumption. In this paper, smart grid 2.0 is introduced as background; it refers to the next generation of power grid, including distributed renewable energy resources, bi-directional energy, and modern digital energy management systems. The smart microgrid is one of the techniques that can help realize smart grid 2.0. It can provide automated management of distributed renewable energy resources. To realize smart microgrids, integrating edge computing and AI (edge–AI) plays a key role. An edge–AI-based forecasting method is proposed in this article. This method includes a wind generation forecasting algorithm implemented in edge devices based on convolutional neural network (CNN) and gated recurrent unit (GRU) machine learning models, and an electric load prediction algorithm in end users’ devices. Experimental results on real-world datasets from China and Belgium show that the proposed algorithms improve the prediction accuracy of wind power generation and consumption forecasting.

In [122], Nammouchi et al. discussed the energy management of microgrids. This paper aims to address the microgrid monitoring issue. The proposed edge–AI framework integrates machine learning models for forecasting power supply and demand at the edge of the smart microgrids. Experiments on real-time data under different scenarios demonstrate that the proposed framework can fit different types of microgrids and improve the flexibility and performance of microgrids.

In recent years, hydrogen has become a popular renewable energy carrier and has gained great attention from enterprises and governments. Saatloo et al. [123] proposed a sustainable energy platform integrating plug-in electric vehicles and fuel cell electric vehicles with hydrogen as the energy carrier. Furthermore, a decentralized model deployed in a mobile edge computing system is implemented for this integrated hydrogen microgrid to interact in a local energy market. According to the experimental results, the proposed platform can reduce the total cost.

In summary, this section focuses on nine primary topics in edge computing-enabled smart grids, as shown in Table 3. Power grid distribution monitoring is one of the most important research topics aimed at handling the geographical diversity in renewable energy areas. With the development of the smart grid, smart meters play a crucial role in smart power systems. Research on smart grid detection can provide a safer and more stable power supply. The advanced metering system is another significant component of the smart grid, with several applications discussed in its subsection. Furthermore, anomaly and fault detection have been receiving increasing attention from the smart grid community recently. Therefore, its subsection includes three popular methods of anomaly detection. The definition and benefits of SE are discussed, and two applications focused on different topics are described in its subsection. Finally, energy storage, resource management, and cyber security are emerging topics associated with grid edge. Exploring the use of edge computing technology in these areas helps enhance the resilience and reliability of the power grid. References in this section are summarized in Table 3 by research topics and listed in Table 4 by detailed settings in edge computing architecture.

5. Discussion and Future Opportunities

The major objective of this review is to comprehensively summarize edge computing and the advantages it offers to various industries, especially the energy industry. In Section 2 and Section 3, edge computing architectures and related technologies are explored. The practical examples in Section 2 and Section 3 demonstrate that edge computing can solve a variety of problems by adapting the basic edge computing architectures into task-specific frameworks. These examples also show that edge computing can be used in a wide range of fields, such as smart cities and smart health. In the future, edge computing can contribute to more traditional fields and support their digital transformation. In Section 4, various edge computing technologies at the grid edge are discussed. They illustrate that, with the development of smart grids and the grid edge, the usage of distributed smart devices and energy resources is increasing. Edge computing can be an ideal solution for meeting different requirements in the energy industry, such as forecasting electricity generation from renewable energy resources.

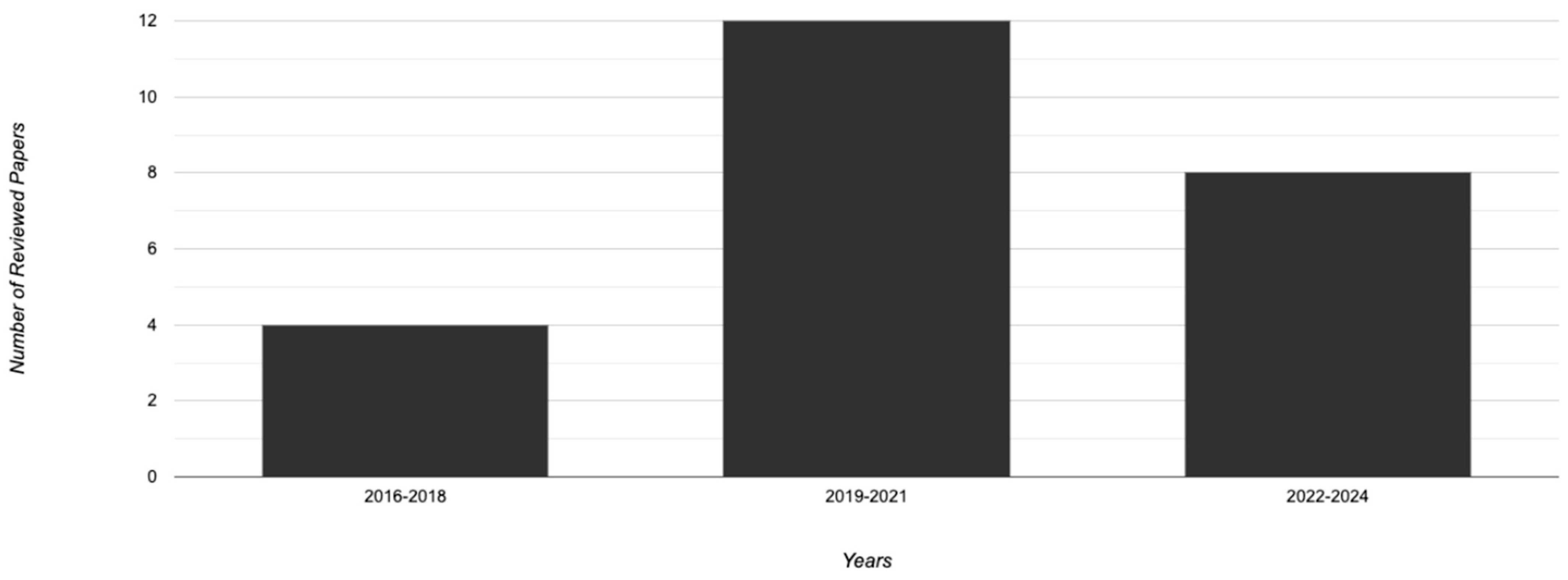

Figure 6 also illustrates that edge computing has become more and more important for energy systems and has been widely discussed in recent years.

The emergence of new technologies also shows that numerous opportunities and topics remain to be explored. In this section, we discuss several future opportunities for edge computing at the grid edge, including grid edge intelligence, 5G-based grid edge applications, and grid edge workflow management.

5.1. Grid Edge Intelligence

Machine learning and AI are currently among the most active research topics. Machine learning is a subset of AI and is a highly efficient approach for forecasting and decision-making. For power system management, it is important to keep the system operating stably and to ensure grid resiliency. Machine learning therefore has great potential to satisfy these requirements.

However, pursuing higher accuracy with machine learning models requires a large amount of training and verification, which conflicts with the limited computing resources of edge devices. Three general methods to resolve this conflict are improving the hardware of edge devices, optimizing machine learning models, and partitioning the models deployed on the end devices at the grid edge. For hardware improvement, the basic method is to add chips that accelerate training to the edge devices. Multi-core processing systems, multiprocessors, or even graphics processing units (GPUs) can be used in edge devices for this purpose. For model partitioning, one option is to deploy an independent model on every edge device. Another architecture is one in which edge devices cooperate with local edge servers to handle data processing and model training. High-performance computing (HPC) can also be introduced into edge intelligence and offers further opportunities [124]. HPC is an ideal tool for supporting real-time processing of vast datasets. Furthermore, this computational strength enables edge devices and edge servers to run scalable and complex machine learning algorithms. Although most systems and compilers running on smart devices or edge servers support parallel processing, machine learning algorithms that can be executed in parallel still need to be developed with HPC technologies. With the integration of HPC and machine learning, devices at the grid edge can complete more complex tasks, such as data preprocessing and model training.
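The cooperative architecture described above, in which an edge device and a local edge server share the work, can be illustrated with split inference: the device evaluates the first layer of a small network and forwards only the intermediate activations, and the server evaluates the remaining layer. The following is a minimal sketch in pure Python. The layer sizes, the weights, and the names `device_forward` and `server_forward` are hypothetical choices for illustration and are not taken from any of the reviewed works.

```python
import math

# Hypothetical weights for a tiny 3-input, 2-hidden, 1-output network.
W1 = [[0.5, -0.2, 0.1],
      [0.3, 0.8, -0.5]]
B1 = [0.0, 0.1]
W2 = [[1.0, -1.0]]
B2 = [0.2]


def dense(weights, biases, inputs):
    """Affine layer: one output value per weight row."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]


def device_forward(measurements):
    """Partition that runs on the edge device: first layer only."""
    return relu(dense(W1, B1, measurements))


def server_forward(activations):
    """Partition that runs on the edge server: output layer plus sigmoid."""
    (logit,) = dense(W2, B2, activations)
    return 1.0 / (1.0 + math.exp(-logit))


def full_forward(measurements):
    """Reference path: the same model evaluated without partitioning."""
    return server_forward(device_forward(measurements))


if __name__ == "__main__":
    # Assumed normalized readings, e.g. voltage, current, frequency deviation.
    reading = [1.0, 0.5, -0.3]
    hidden = device_forward(reading)  # only these values cross the network
    print(len(hidden), server_forward(hidden))
```

In this sketch the device transmits two activations instead of three raw measurements. Where to place the split in a real model depends on the device's compute budget and the available link bandwidth.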

With the development of modern power systems and energy resources, the power grid infrastructure encounters more and more uncertainties, such as erratic load [125]. Algorithms used in power systems must therefore cope with a massive number of uncertain real-time variables. The power system is a huge and complex system with a large number of nonlinear variables and uncertainties. Machine learning techniques can be used to build models for uncertainty quantification. For example, machine learning regression is one effective approach to developing such models.
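As a concrete illustration of regression-based uncertainty quantification, the sketch below fits an ordinary least-squares line and attaches an approximate 95% band derived from the residual spread. This is one simple technique chosen for illustration, not the method of any cited work. It assumes roughly Gaussian residuals and ignores the uncertainty in the fitted coefficients, and the temperature and load values are made up.

```python
import math


def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def residual_std(xs, ys, intercept, slope):
    """Residual standard deviation with n - 2 degrees of freedom."""
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    return math.sqrt(sse / (len(xs) - 2))


def predict_with_band(x_new, xs, ys, z=1.96):
    """Return (lower, point, upper); z=1.96 assumes Gaussian residuals."""
    intercept, slope = fit_line(xs, ys)
    sigma = residual_std(xs, ys, intercept, slope)
    point = intercept + slope * x_new
    return point - z * sigma, point, point + z * sigma


if __name__ == "__main__":
    # Made-up data: ambient temperature (deg C) against feeder load (kW).
    temperature = [18.0, 21.0, 24.0, 27.0, 30.0, 33.0]
    load = [41.0, 45.5, 52.0, 55.0, 61.5, 64.0]
    lower, point, upper = predict_with_band(29.0, temperature, load)
    print(f"forecast {point:.1f} kW, band [{lower:.1f}, {upper:.1f}]")
```

A wider band signals that the forecast should be trusted less. A grid-edge controller could use that signal, for example, to keep more reserve capacity when the band is wide.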

There are also many other opportunities for integrating machine learning into the grid edge. Implementing machine learning or deep learning-based fault detection in embedded edge devices can significantly improve the reliability of power systems. Cybersecurity concerns at the grid edge have arisen because sensitive data are stored and transmitted at the edge. Machine learning and AI can help identify and respond to potential threats.
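To give a sense of what on-device fault detection can look like under tight memory limits, the sketch below uses a rolling z-score test over a fixed-size window. It is a simple statistical stand-in, not a machine learning or deep learning model, and the class name, window size, threshold, and voltage values are all assumptions made for illustration.

```python
from collections import deque
import math


class RollingZScoreDetector:
    """Flags a sample whose z-score against a sliding window exceeds a threshold."""

    def __init__(self, window=20, threshold=3.0):
        self.samples = deque(maxlen=window)  # bounded memory for embedded use
        self.threshold = threshold

    def update(self, value):
        """Return True if `value` looks anomalous; otherwise add it to the window."""
        anomalous = False
        n = len(self.samples)
        if n >= 2:
            mean = sum(self.samples) / n
            variance = sum((s - mean) ** 2 for s in self.samples) / (n - 1)
            std = math.sqrt(variance)
            if std > 0 and abs(value - mean) / std > self.threshold:
                anomalous = True
        if not anomalous:
            # Outliers are kept out of the baseline so they do not mask later faults.
            self.samples.append(value)
        return anomalous


if __name__ == "__main__":
    detector = RollingZScoreDetector(window=20, threshold=3.0)
    # Made-up voltage readings with one sag.
    readings = [230.0, 230.5, 229.5, 230.2, 229.8, 230.1, 229.9, 230.3, 180.0, 230.0]
    for volts in readings:
        if detector.update(volts):
            print(f"possible fault: {volts} V")
```

Because the window is bounded, memory use stays constant regardless of how long the device runs. A learned model could replace the z-score test behind the same `update` interface.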

5.2. 5G-Based Grid Edge Application

5G technologies are evolving rapidly and provide great opportunities for the development of edge computing. They allow for low-latency, high-bandwidth, and high-capacity communication. These advantages can benefit the power industry by efficiently connecting a vast number of smart edge devices. Additionally, 5G helps to establish reliable and accurate real-time control in smart grids. 5G is therefore vital for solving problems in traditional power grids and extending the scale of DERs.

Although 5G brings opportunities to the traditional power industry, it also raises challenges. One of the most urgent problems to be solved is the rapid growth of data volume. The combination of 5G and edge computing is an ideal approach to balancing the computational and data transmission burden, and 5G-based edge computing can also support faster fault detection, recovery, and real-time operations. However, this combination brings a series of new challenges, such as data storage and security problems in edge devices.

5.3. Grid Edge Workflow Management

Managing and optimizing operations at the edge of the power grid becomes more challenging with the increasing data volume and complexity. An efficient grid edge workflow is required to ensure the functional operation of data collection, monitoring, processing, and analysis, as well as reliable and secure communication and interoperability among diverse devices. Implementing automated control mechanisms, utilizing advanced algorithms, and deploying best-fit edge computing architectures to facilitate the operation of edge devices at the grid edge will certainly be a focus of research to enhance edge computing applicability and efficiency in power system applications.

6. Conclusions

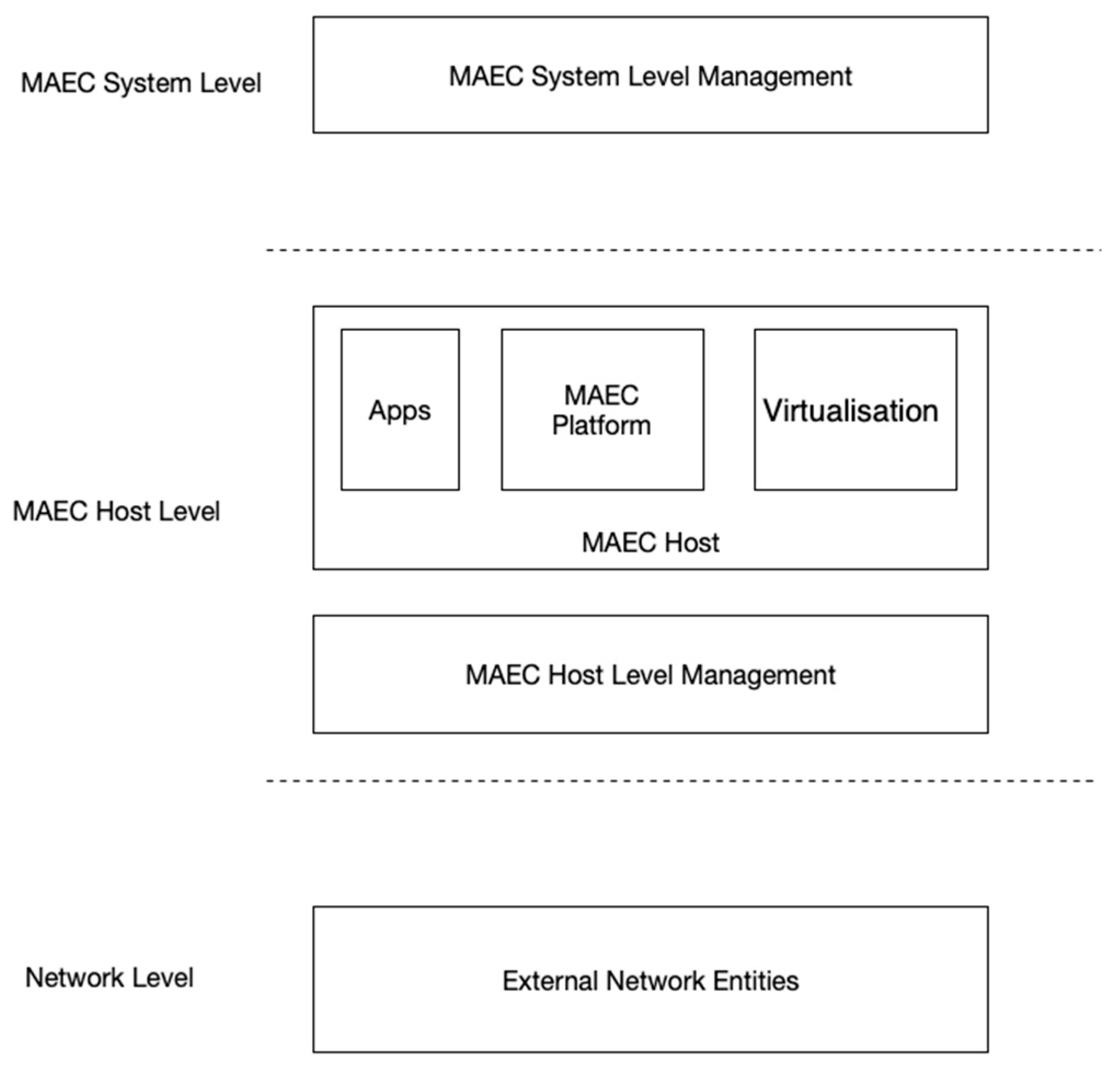

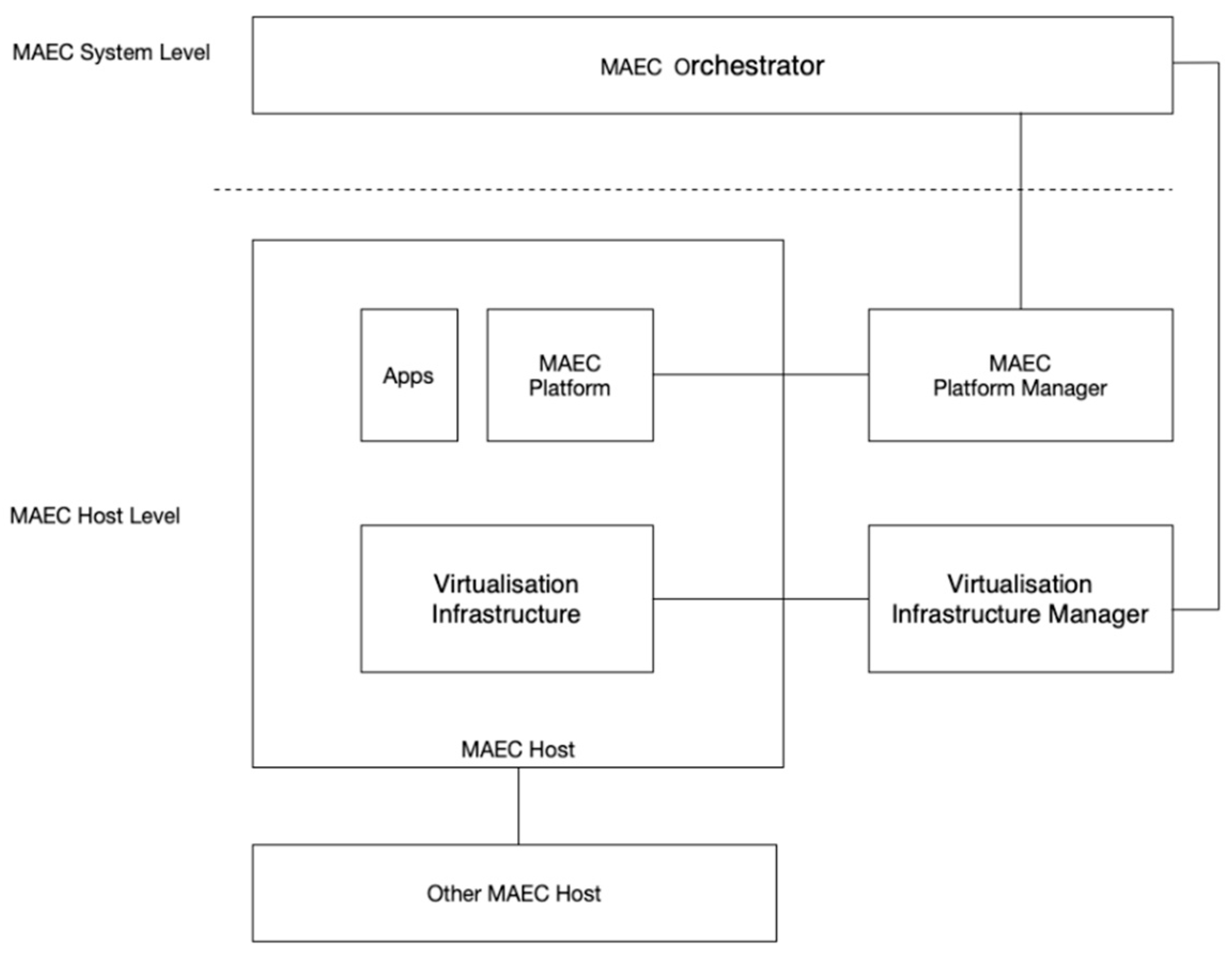

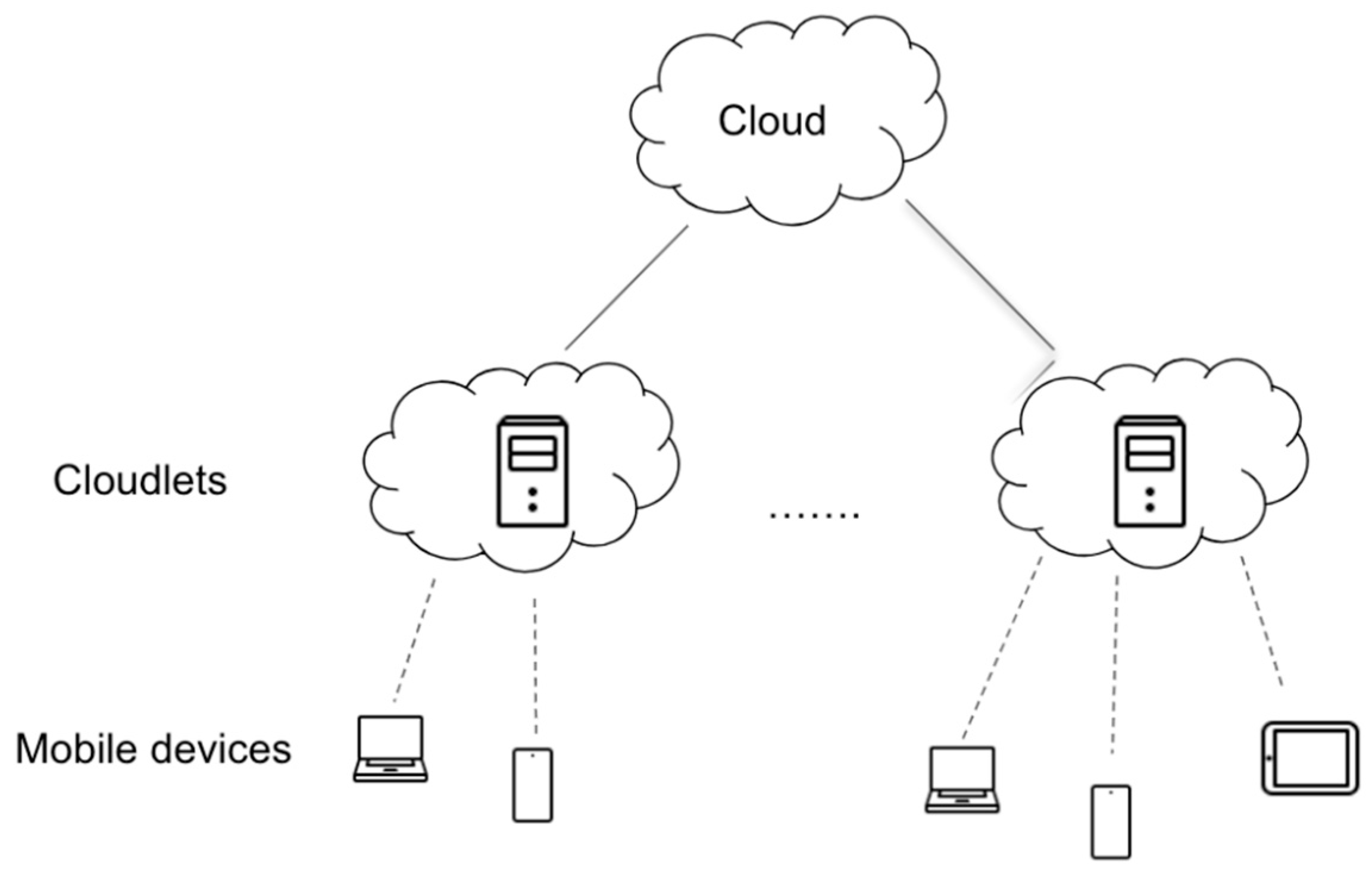

This paper provides a comprehensive review of edge computing from the aspects of basic concepts, architecture, communication, and popular technologies. Edge computing, as an emerging distributed computing paradigm, enables data processing and computing at the edge of the network, close to the customer side. Three popular edge computing architectures are introduced: MAEC, which operates distributed computing tasks on the edge devices at the grid edge; Cloudlet computing, which features a Cloudlet layer between the cloud center and the edge devices, where Cloudlets act as small edge servers that handle lightweight computing tasks; and fog computing, which includes a fog layer composed of numerous fog nodes. The state-of-the-art technologies used in edge computing are comprehensively introduced. The applications discussed demonstrate that edge computing has become a crucial technology across many fields.

This paper also explores a wide range of power applications that utilize edge computing technologies, effectively demonstrating how these technologies are enhancing power system management. As the number of smart devices in power grids grows, along with the increasing demands for speed and security, edge computing is expected to be deployed in increasingly diverse scenarios, providing substantial benefits.

Finally, the paper discusses several future opportunities related to emerging techniques as an extension of the review.

The articles mentioned in this review paper were all retrieved from Google Scholar. The selection criteria are as follows: the search terms are closely related to the topics discussed in each subsection; the summarized works were published in recent years; and the papers clearly describe the usage of edge computing.

This review paper serves as a reference for researchers interested in edge computing who wish to understand the fundamentals, learn about popular applications, and advance research in power systems.