Predicting the Health Status of a Pulp Press Based on Deep Neural Networks and Hidden Markov Models

Abstract

1. Introduction

1.1. Maintenance

1.2. Condition-Based Maintenance—CBM

1.3. Diagnosis and Prognosis

1.4. Predictive Maintenance (PdM)

1.5. HMM-GRU to Perform Maintenance

1.6. Related Work

1.7. Contributions of the Paper

1.8. Paper Structure

2. Background

2.1. Principal Component Analysis (PCA)

2.2. K-Means Algorithm

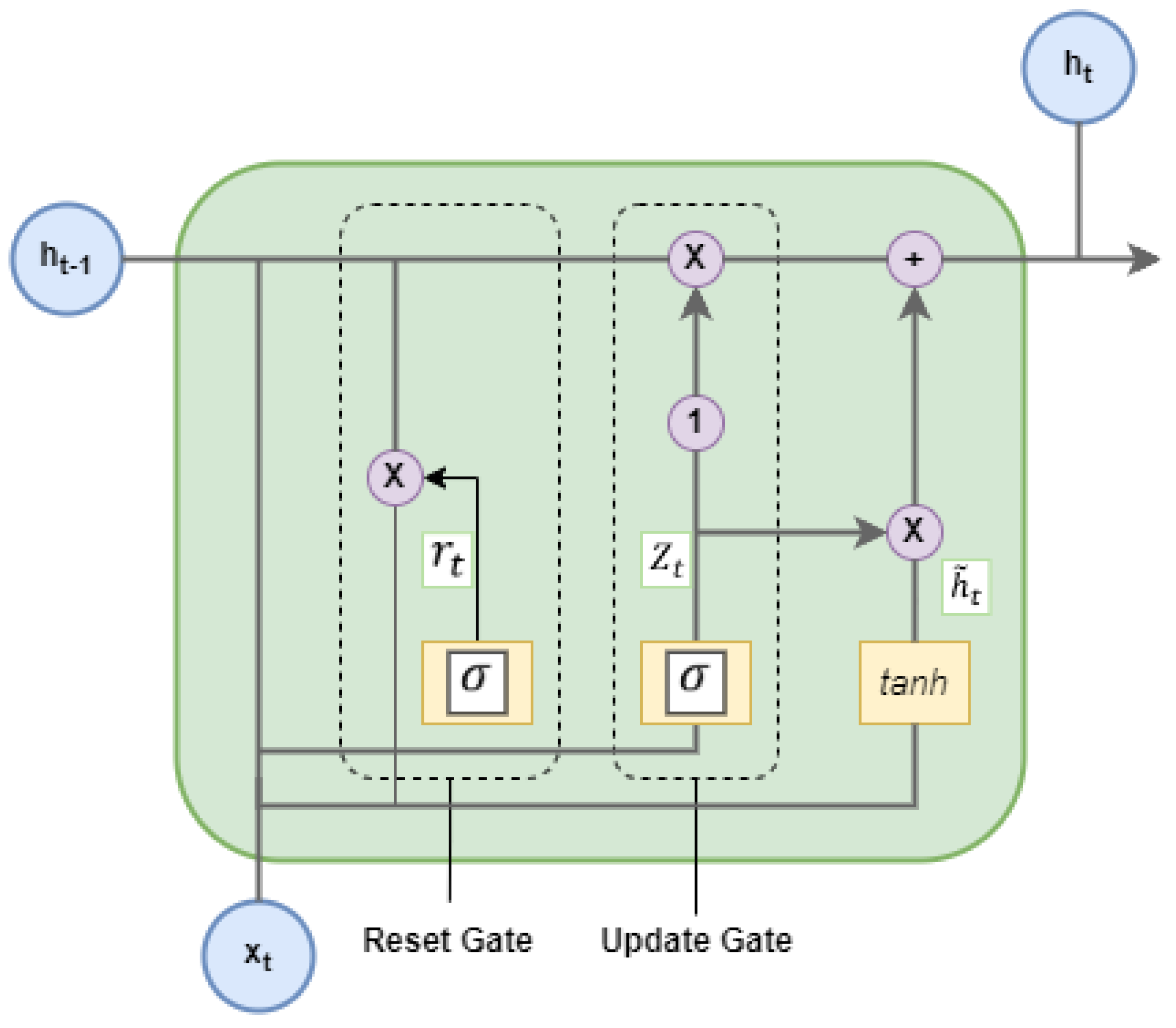

2.3. Gated Recurrent Unit (GRU)

- The algorithm starts with the calculation of the update gate $z_t$ for time step $t$ (Equation (3)). When the input $x_t$ enters the network unit, it is multiplied by its respective weight $W_z$; likewise, the value $h_{t-1}$, which contains the information of the previous $t-1$ units, is multiplied by its own weight $U_z$. The results of these multiplications are then summed, and a sigmoid activation function is applied to normalise the result between 0 and 1. In this way, the relevant information is kept and irrelevant information is filtered out.

- The reset gate $r_t$ allows the model to determine how much past information should be forgotten, thus controlling how much information is retained (Equation (4)).

- In this step, new memory content is introduced that uses the reset gate to store the most important information from the past. The reset gate $r_t$ is applied to the previous hidden state $h_{t-1}$, and the result is combined with the input $x_t$ and passed through the $\tanh$ activation function, resulting in the candidate state $\tilde{h}_t$. The $\tanh$ function keeps the range of output values between −1 and 1. In this way, the input data are incorporated and the hidden information is regulated by the activation function.

- Finally, the vector $h_t$ is calculated to contain the relevant information of the current unit and transmit it to the next stage of the network; the update gate $z_t$ determines what should be kept from the previous state $h_{t-1}$ and what is taken from the candidate $\tilde{h}_t$. The end result, $h_t$, contains the current unit and previous step information that is relevant to the final output (Equation (6)).
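The four steps above can be sketched as a single GRU time step in NumPy. This is a minimal illustration, not the authors' implementation: it assumes the formulation of Cho et al. (2014), in which the new state is $z_t \odot h_{t-1} + (1 - z_t) \odot \tilde{h}_t$, omits bias terms, and uses hypothetical names (`gru_step`, `init_params`, the `W_*`/`U_*` keys) and arbitrary layer sizes.

```python
import numpy as np

def sigmoid(a):
    """Logistic function, squashes values into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(x_t, h_prev, p):
    """One GRU time step (biases omitted for brevity; assumed convention)."""
    # Update gate: how much of the previous state to keep.
    z_t = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev)
    # Reset gate: how much of the previous state feeds the candidate.
    r_t = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev)
    # Candidate state: tanh keeps values in (-1, 1).
    h_cand = np.tanh(p["W_h"] @ x_t + p["U_h"] @ (r_t * h_prev))
    # New hidden state: blend of previous state and candidate.
    return z_t * h_prev + (1.0 - z_t) * h_cand

def init_params(n_in, n_hidden, seed=0):
    """Small random weights; keys are W_z, W_r, W_h, U_z, U_r, U_h."""
    rng = np.random.default_rng(seed)
    shapes = {"W": (n_hidden, n_in), "U": (n_hidden, n_hidden)}
    return {f"{m}_{g}": rng.normal(scale=0.1, size=shapes[m])
            for m in "WU" for g in "zrh"}

# Run a toy sequence of 5 time steps with 3 input features each.
params = init_params(n_in=3, n_hidden=4)
h = np.zeros(4)
for x_t in np.ones((5, 3)):
    h = gru_step(x_t, h, params)
```

Because the new state is a convex combination of the previous state and a $\tanh$ output, its components stay inside (−1, 1) whenever the initial state does.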

2.4. Hidden Markov Models (HMMs)

- $N$ represents the number of hidden states, where the hidden state at time $t$ is $q_t \in \{s_1, \ldots, s_N\}$;

- $M$ is the number of observable states, where the observation at time $t$ corresponds to the hidden state $q_t$ and is represented by $o_t \in \{v_1, \ldots, v_M\}$;

- $\pi$ is the initial distribution of the hidden states, where $\pi_i = P(q_1 = s_i)$;

- $A$ is the matrix of transition probabilities between hidden states, where $A = \{a_{ij}\}$ and where $a_{ij}$ equals $P(q_{t+1} = s_j \mid q_t = s_i)$;

- $B$ is the emission matrix, which gives the probability that the $j$th hidden state generates the $i$th observable state, where $B = \{b_j(i)\}$ and $b_j(i)$ is represented by $P(o_t = v_i \mid q_t = s_j)$.

- Evaluation

- Training

- Decoding
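Two of the three problems listed above, evaluation and decoding, can be illustrated for a discrete HMM with the forward and Viterbi algorithms. This is a minimal sketch, not the authors' implementation: the function names and the two-state, three-symbol toy model are assumptions made for the example. Training (typically Baum-Welch) is not shown.

```python
import numpy as np

def forward_likelihood(pi, A, B, obs):
    """Evaluation: P(obs | model) via the forward algorithm."""
    alpha = pi * B[:, obs[0]]              # alpha_1(i) = pi_i * b_i(o_1)
    for o in obs[1:]:
        alpha = (alpha @ A) * B[:, o]      # propagate, then weight by emission
    return float(alpha.sum())

def viterbi(pi, A, B, obs):
    """Decoding: most likely hidden-state path, computed in the log domain."""
    with np.errstate(divide="ignore"):     # log(0) = -inf is acceptable here
        log_pi, log_A, log_B = np.log(pi), np.log(A), np.log(B)
    T, N = len(obs), len(pi)
    delta = log_pi + log_B[:, obs[0]]
    back = np.zeros((T, N), dtype=int)
    for t in range(1, T):
        scores = delta[:, None] + log_A    # scores[i, j]: best path into i, then i -> j
        back[t] = scores.argmax(axis=0)
        delta = scores.max(axis=0) + log_B[:, obs[t]]
    path = [int(delta.argmax())]
    for t in range(T - 1, 0, -1):          # follow back-pointers
        path.append(int(back[t, path[-1]]))
    return path[::-1]

# Hypothetical toy model: 2 hidden states, 3 observable symbols.
pi = np.array([0.6, 0.4])
A = np.array([[0.7, 0.3],
              [0.4, 0.6]])
B = np.array([[0.5, 0.4, 0.1],             # B[j, i] = P(symbol i | state j)
              [0.1, 0.3, 0.6]])
obs = [0, 1, 2]

likelihood = forward_likelihood(pi, A, B, obs)
best_path = viterbi(pi, A, B, obs)
```

The log domain is used in `viterbi` to avoid numerical underflow on long sequences; for long sequences the forward pass would need scaling or a log-sum-exp formulation for the same reason.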

3. Methodology

4. Case Study

4.1. Data Preparation

4.2. Feature Generation

4.3. PCA

4.4. K-Means Clustering

4.5. HMM

4.6. Prognostic with GRU Model

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial neural network |
| CBM | Condition-based maintenance |
| CM | Corrective maintenance |
| CNN | Convolutional neural network |
| CPS | Cyber-physical systems |
| DBSCAN | Density-based spatial clustering of applications with noise |
| DNN | Deep neural network |
| GMM | Gaussian mixture model |
| GRU | Gated recurrent unit |
| HMM | Hidden Markov model |
| ICA | Independent component analysis |
| IoT | Internet of things |
| LDA | Linear discriminant analysis |
| LSTM | Long short-term memory |
| MAE | Mean absolute error |
| MAPE | Mean absolute percentage error |
| ML | Machine learning |
| PCA | Principal component analysis |
| PCs | Principal components |
| PdM | Predictive maintenance |
| RMSE | Root-mean-square error |
| RUL | Remaining useful life |
| TBM | Time-based maintenance |
| WT | Wavelet transform |

| Parameter | Mathematical Equation | Parameter | Mathematical Equation |
|---|---|---|---|
| Mean | | A factor | |
| Standard deviation | | B factor | |
| Variance | | SRM | |
| RMS | | SRM shape factor | |
| Absolute maximum | | Latitude factor | |
| Coefficient of skewness | | Fifth moment | |
| Kurtosis | | Sixth moment | |
| Crest factor | | Median | |
| Margin factor | | Mode | |
| RMS shape factor | | Minimum | |
| Impulse factor | | | |
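Several of the parameters in the table have widely used textbook definitions, which can be sketched as follows. This is a hedged illustration only: the formulas are common conventions (population standard deviation, non-excess kurtosis) and may differ from the ones the authors used. The function name `time_domain_features` is hypothetical. Parameters whose definitions vary between sources (A factor, B factor, SRM, latitude factor, margin factor, higher moments, mode) are left out.

```python
import numpy as np

def time_domain_features(x):
    """Common time-domain statistics of a 1-D signal (assumed definitions)."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    std = x.std()                          # population standard deviation (ddof=0)
    rms = np.sqrt(np.mean(x ** 2))
    abs_max = np.abs(x).max()
    abs_mean = np.mean(np.abs(x))
    centered = x - mean
    return {
        "mean": mean,
        "std": std,
        "variance": x.var(),
        "rms": rms,
        "abs_max": abs_max,
        "skewness": np.mean(centered ** 3) / std ** 3,
        "kurtosis": np.mean(centered ** 4) / std ** 4,   # non-excess kurtosis
        "crest_factor": abs_max / rms,
        "rms_shape_factor": rms / abs_mean,
        "impulse_factor": abs_max / abs_mean,
        "median": float(np.median(x)),
        "minimum": x.min(),
    }

# Toy square-wave-like signal.
features = time_domain_features([1.0, -1.0, 1.0, -1.0])
```

A constant signal has zero standard deviation, so the skewness and kurtosis ratios are undefined for it; a production version would need to guard against that case.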

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Martins, A.; Mateus, B.; Fonseca, I.; Farinha, J.T.; Rodrigues, J.; Mendes, M.; Cardoso, A.M. Predicting the Health Status of a Pulp Press Based on Deep Neural Networks and Hidden Markov Models. Energies 2023, 16, 2651. https://doi.org/10.3390/en16062651