Abstract

In a fully mechanized mining face, the hydraulic support face guard and the shearer drum can interfere with each other, and collisions between the two seriously affect coal mine production and personnel safety. Identification of the shearer drum is impaired by the fog generated when the drum cuts forward. It is also impaired when the hydraulic support face guard is not retracted in time and blocks the view of the drum. To address these problems, a shearer drum identification method based on an improved YOLOv5s with dark channel-guided filtering defogging is proposed. To counter fog interference, a dark channel guided filtering defogging method is proposed, in which the optimal value of the scene transmittance function is calculated using guided filtering to achieve a reasonable defogging effect. The Coordinate Attention (CA) mechanism is adopted to improve the backbone network of the YOLOv5s algorithm: the attention mechanism reweights the shearer drum features extracted by the C3 module across spatial positions and channels. This enhances the propagation of shearer drum feature information and thus improves the detection of shearer drum targets in complex backgrounds. S Intersection over Union (SIoU) is used as the loss function to improve the speed and accuracy of shearer drum detection. To verify its effectiveness, the improved algorithm is compared with multiple target detection algorithms, including improved variants, and is deployed at the Huangling II mine. The experimental results show that the improved algorithm is superior to most target detection algorithms. In the absence of object obstruction, the improved algorithm achieved 89.3% recognition accuracy and a detection speed of 48.8 frames/s for the shearer drum in the Huangling II mine. The improved YOLOv5s algorithm provides a basis for identifying interference states between the hydraulic support face guard and the shearer drum.

1. Introduction

In recent years, the “Guidance on Accelerating the Development of Intelligent Coal Mine” and the “Guide to Intelligent Coal Mine Construction 2022 Edition” were published in China. They call for vigorous promotion of the intelligent development of coal mines and for intelligent identification of abnormal states in fully mechanized coal mining working faces. The traditional coal mining industry is gradually transforming into intelligent mining with few people underground. Interference between the hydraulic support face guard and the shearer drum, shown in Figure 1, may cause serious safety accidents. Traditionally, workers follow the machine and manually check for interference between the shearer drum and the hydraulic support face guard. However, this approach cannot meet the requirements of intelligent mining. Machine vision is a promising alternative for underground identification. It is therefore important to study target detection algorithms for the shearer drum in the foggy environment of a fully mechanized mining face.

Figure 1. Interference status between hydraulic support face guard and shearer drum.

The identification of a shearer drum can be affected by the fog generated when the shearer drum cuts forward. Defogging methods based on physical models have been widely used. Inspired by the Radon transform, the method proposed by Sebastián Salazar Colores et al. [1] is customized for image defogging by calculating dark channels, using statistical calculations and heuristic methods to avoid saturated areas. Yang et al. [2] developed a single-image dehazing method based on Gaussian adaptive transmission (GAT). GAT effectively enhances the visibility of dust haze images, but it is not very effective in dense fog and is therefore not applicable to fully mechanized mining face environments. Zhang et al. [3] proposed a Gaussian distribution-based algorithm for atmospheric light estimation, which successfully removed fog from images; however, the colors of the recovered image are oversaturated. He et al. [4] proposed the Dark Channel Prior (DCP) method, but it has the disadvantages of long processing time and color distortion. Ehsan et al. [5] proposed a strategy that calculates dual transmission maps from the dark channel and atmospheric light and refines them with a gradient domain-guided filter to remove residual adverse effects. Salazar et al. [6] proposed a computationally efficient defogging method based on morphological operations and Gaussian filtering, which defogs well under uniform fog but performs poorly on dense fog. Zhang et al. [7] proposed an image-defogging algorithm combining a multiscale convolution network model and multiscale Retinex, which can effectively restore the fog-free image. Mao et al. [8] proposed a method based on boundary constraints and nonlinear background regularization, which is of great importance for the monitoring of fully mechanized mining faces. Mao et al. [9] used a dark channel prior defogging algorithm to reduce the impact of fog on the clarity of surveillance videos and used a custom convolution method to sharpen the image. These methods are mature in ordinary atmospheric environments. However, a fully mechanized mining face has low illumination, and its fog is more severe and complex than in ordinary atmospheric environments. Existing defogging methods therefore cannot be applied well to the actual working environment of a fully mechanized mining face.

With the development of intelligent mining technology in coal mines, interference identification between the hydraulic support face guard and the shearer drum has become a research hot spot. Zhang et al. [10,11] used Kalman filtering to fuse the data obtained from inclination sensors and gyroscopes to improve the measurement accuracy of the hydraulic support face guard position. Combining virtual reality technology, multi-sensor information fusion technology and envelope box collision detection technology, Wang et al. [12] proposed a method that combines virtual rays and envelope boxes on a virtual environment development platform; however, this method is costly. Zhang et al. [13] designed a 5G communication-based attitude detection system for overrunning a hydraulic support face guard with a distributed edge detection system. Ren et al. [14] designed a hydraulic support face guard attitude solution method that obtains face guard height and attitude angle information by fusing the feature information of feature points captured with a depth camera. However, shearer drum position and attitude information are not extracted for analysis. Most of the above methods for detecting interference between hydraulic support face guards and shearer drums focus on identifying the hydraulic support face guard. They primarily study face guard attitudes to assist workers who follow the machine in identifying abnormal states, which is not sufficient for intelligent coal mining.

In recent years, machine vision and deep learning have been applied to fully mechanized mining faces. Zhang et al. [15] used machine vision methods to solve the target image recognition problem and achieved higher accuracy in hydraulic support face guard position measurements. Wang et al. [16] combined fog image clarification algorithms with machine vision measurement for non-contact monitoring of the retraction status of a hydraulic support face guard; this image recognition measurement method is real-time and highly accurate. Man et al. [17] designed a hydraulic support face guard image feature extraction algorithm to measure and calculate the positional model of the hydraulic support face guard and shearer drum, thereby identifying interference between the two.

In recent years, CNN-based feature extraction techniques have developed rapidly and have become the main means of target detection and recognition [18,19]. Faster R-CNN, YOLO and SSD are the three most widely used methods [20,21,22]. Redmon et al. [23] proposed the extremely fast YOLO detection method, which first resizes the input image to a specified size and then performs convolution operations, treating detection as a regression problem, to extract the features of the target. YOLO is nearly 7 times faster than Faster R-CNN, but its accuracy is lower. Deep learning has not been applied well in mines because of the harsh environment of fully mechanized mining operations. Occlusion by the hydraulic support face guard also leads to poor recognition of the shearer drum. Lost detection targets, poor real-time performance and low detection accuracy are urgent problems that must be solved. In summary, current deep learning methods mainly analyze hydraulic support face guard position and attitude information to identify whether interference occurs. There is less research on the identification of the shearer drum.

In this study, our main contribution is an intelligent identification method for the shearer drum based on an improved YOLOv5s with dark channel-guided filtering defogging. To counter fog interference, a dark channel guided filtering defogging method is proposed. To counter the poor recognition of the shearer drum caused by occlusion from the hydraulic support face guard, the Coordinate Attention (CA) mechanism is adopted to improve the backbone network of the YOLOv5s algorithm. The S Intersection over Union (SIoU) is used as the loss function of the YOLOv5s algorithm to improve the speed and accuracy of shearer drum detection. With the proposed method, the shearer drum can be identified accurately and efficiently in foggy environments and under obscured conditions. It provides a basis for identifying interference states between the hydraulic support face guard and the shearer drum, which is of great significance for the safe and efficient operation of a fully mechanized mining face.

2. The Defogging Method for Dark Channel Guided Filtering in Fully Mechanized Mining Face

The harsh atmospheric conditions of a fully mechanized mining face, together with the fog and dust produced during coal mining, make it difficult to capture the exact position of the shearer drum on video. In such an environment, common target detection algorithms tend to lose the shearer drum target. Therefore, a clarification method for foggy images of a fully mechanized mining face is applied to improve the robustness of the target detection algorithm.

The fully mechanized mining face is connected to the surface atmosphere through a ventilation system; therefore, its atmospheric scattering behaves like that of an open atmospheric environment rather than a closed indoor environment. The atmospheric scattering model is the primary physical model for describing fog images, and it is presented in Equation (1).

$$I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr) \tag{1}$$

where $I(x)$ is the foggy image, $J(x)$ is the fog-free image, $A$ is the global atmospheric light, which is generally considered to be constant, and $t(x)$ is the scene transmittance function.

Assuming that the fog is homogeneous, the scene transmittance is presented in Equation (2).

$$t(x) = e^{-\beta d(x)} \tag{2}$$

where $\beta$ is the extinction coefficient of the medium and $d(x)$ is the depth of field.
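To make the roles of the two quantities concrete, the following minimal sketch synthesizes a foggy image from a clear one using the standard atmospheric scattering model, I = J·t + A·(1 − t) with t = exp(−β·d). The function name `add_fog`, the value of `beta`, the `airlight` value and the toy depth map are illustrative assumptions, not values taken from the paper.

```python
import numpy as np

def add_fog(clear, depth, beta=1.0, airlight=0.9):
    """Synthesize a foggy image from a clear image and a depth map.

    clear: float array (H, W, 3) with values in [0, 1]
    depth: float array (H, W), non-negative scene depth
    beta (extinction coefficient) and airlight (A) are illustrative values.
    """
    t = np.exp(-beta * depth)                      # transmittance, Equation (2)
    t3 = t[..., None]                              # broadcast over colour channels
    foggy = clear * t3 + airlight * (1.0 - t3)     # scattering model, Equation (1)
    return foggy, t

rng = np.random.default_rng(0)
clear = rng.random((4, 6, 3))
depth = np.tile(np.linspace(0.0, 3.0, 6), (4, 1))  # depth grows from left to right
foggy, t = add_fog(clear, depth)
```

At zero depth the transmittance is 1 and the pixel is unchanged; as depth grows, every pixel drifts toward the atmospheric light, which is why distant parts of the face lose contrast first.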

The method approximates atmospheric transmittance of a fully mechanical underground mining face by optimizing scenario transmittance function.

where is the output grey scale value, is the conversion multiplier, and is the original grey scale value. According to the atmospheric scattering model proposed in Equation (1), recovers fog-free image from known observed fog-dust images. Once is obtained, the fog-free image can be obtained.

where, is usually taken as 0.0001 to prevent the denominator from appearing as 0, and is equivalent to the medium extinction coefficient in Equation (4), which is used to fine-tune the defogging effect.

Guided filtering is used to find the transmittance $t(x)$. Guided image filtering is an edge-preserving smoothing filter that is widely used for detail enhancement, image fusion and denoising. It filters the input image under the guidance of a guide image, so that the output image follows the detail changes of the guide image while retaining the overall features of the input image.

The input image is denoted as $p$, the guide image is denoted as $I$, and the filtered output image is denoted as $q$. Let $\omega_k$ be a square window of radius $r$ centered at pixel $k$, and let the following linear relationship hold in that window, where $a_k$ and $b_k$ are linear factors that are fixed within the window. It can be seen from Equation (5) that the linear model guarantees that $q$ has an edge only where $I$ has an edge in the window. Guided filtering searches for the linear factors ($a_k$, $b_k$) that minimize the difference between the input image $p$ and the output image $q$. The cost function in the window is presented in Equation (6).

In Equation (6), $\varepsilon$ is a regularization parameter that prevents $a_k$ from taking too large a value. The optimal solution for ($a_k$, $b_k$) can be obtained by linear regression analysis, which is presented in Equation (7).

In Equation (8), $|\omega|$ is the number of pixels in window $\omega_k$, $\sigma_k^2$ and $\mu_k$ are the variance and mean value of $I$ in the window $\omega_k$, respectively, and $\bar{p}_k$ is the mean value of $p$ in the window. Since a pixel $i$ may be contained in more than one window and the values of $a_k$ and $b_k$ differ between windows, it is necessary to calculate the mean values of $a_k$ and $b_k$ within the window centered on pixel $i$ and then find the value of $q_i$.
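The procedure described above can be sketched in a few lines of NumPy. This is a minimal illustration that assumes the standard guided filter of He et al. (a_k = cov(I, p) / (var(I) + ε), b_k = mean(p) − a_k·mean(I), q = mean(a)·I + mean(b)) combined with a dark channel estimate of the transmittance. It is not the authors' implementation: the function names, window radii, `omega`, the lower bound `t0` and the brute-force window helper are all assumptions chosen for readability rather than speed.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _windows(img, r):
    """All (2r+1) x (2r+1) windows of a 2D array, using edge padding."""
    padded = np.pad(img, r, mode="edge")
    return sliding_window_view(padded, (2 * r + 1, 2 * r + 1))

def box_mean(img, r):
    return _windows(img, r).mean(axis=(-2, -1))

def local_min(img, r):
    return _windows(img, r).min(axis=(-2, -1))

def guided_filter(I, p, r=8, eps=1e-3):
    """Filter input p under guidance of I (both 2D float arrays)."""
    mean_I, mean_p = box_mean(I, r), box_mean(p, r)
    cov_Ip = box_mean(I * p, r) - mean_I * mean_p
    var_I = box_mean(I * I, r) - mean_I ** 2
    a = cov_Ip / (var_I + eps)          # per-window linear factor a_k
    b = mean_p - a * mean_I             # per-window linear factor b_k
    # average the factors of all windows covering each pixel, then apply them
    return box_mean(a, r) * I + box_mean(b, r)

def dehaze(img, r=7, gf_r=20, omega=0.95, t0=0.1, eps=1e-3):
    """img: float RGB array (H, W, 3) in [0, 1]. Returns (dehazed, transmittance)."""
    dark = local_min(img.min(axis=2), r)                 # dark channel
    n = max(1, dark.size // 1000)                        # brightest 0.1% of dark channel
    idx = np.argsort(dark.ravel())[-n:]
    A = np.maximum(img.reshape(-1, 3)[idx].mean(axis=0), 1e-6)  # atmospheric light
    t_raw = 1.0 - omega * local_min((img / A).min(axis=2), r)   # coarse transmittance
    t = guided_filter(img.mean(axis=2), t_raw, r=gf_r, eps=eps) # edge-aware refinement
    t = np.clip(t, t0, 1.0)                              # keep the denominator away from 0
    J = (img - A) / t[..., None] + A                     # invert the scattering model
    return np.clip(J, 0.0, 1.0), t
```

The window helper materializes every neighbourhood and is only practical for small images; a production version would use an integral-image box filter. The guide image here is the grey-level version of the foggy frame, which is a common choice but an assumption in this sketch.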

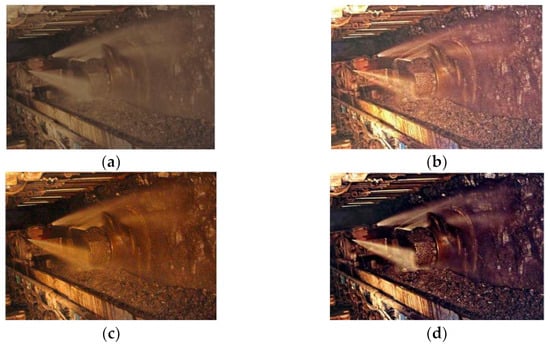

In this study, we use this method to defog the dataset, which makes the fog and dust images clear and alleviates the shortage of usable data. The results of the defogging are presented in Figure 2. The original image is presented in Figure 2a. The image processed with the proposed algorithm is presented in Figure 2b. The image processed with the dark channel defogging algorithm is presented in Figure 2c. The image processed with Berman’s algorithm is presented in Figure 2d.

Figure 2. Clear processing of the fog and dust image in a fully mechanized mining face. (a) Original image. (b) Proposed. (c) He. (d) Berman.

To measure the effectiveness of the proposed image clarification method, the Entropy function and the Brenner function are selected to measure the change in clarity before and after processing. Larger values of these functions indicate a sharper image. The Entropy and Brenner values before and after image clarification are presented in Table 1. The results show that the Entropy and Brenner values of the image clarified by the proposed method are greater than those of the original image and of the images produced by the other algorithms. Figure 2 and Table 1 therefore indicate that the image is clearer after processing with the proposed clarification method. The defogging algorithm is added to the frame-by-frame detection part of YOLOv5s, which makes the shearer drum easier to detect.
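As a rough illustration of the two sharpness measures, the sketch below uses their common textbook definitions: the Shannon entropy of the grey-level histogram, and the Brenner gradient as the sum of squared differences between pixels two columns apart. The paper does not state which variants or normalizations were used, so the exact formulas and the function names here are assumptions.

```python
import numpy as np

def image_entropy(gray):
    """Shannon entropy (in bits) of the grey-level histogram of a uint8 image."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    p = hist / hist.sum()
    p = p[p > 0]                       # empty bins contribute nothing
    return float(-(p * np.log2(p)).sum())

def brenner(gray):
    """Brenner gradient: sum of squared differences between pixels two columns apart."""
    g = gray.astype(float)
    diff = g[:, 2:] - g[:, :-2]
    return float((diff ** 2).sum())

# Toy comparison: a high-contrast stripe pattern versus a washed-out copy of it.
stripes = np.tile(np.array([0, 0, 255, 255], dtype=np.uint8), (8, 2))
washed = (100 + stripes.astype(float) * 55 / 255).astype(np.uint8)
```

On the toy pair, `brenner(stripes)` exceeds `brenner(washed)` because fog-like contrast loss shrinks the grey-level differences, which is the behaviour the comparison in Table 1 relies on.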

Table 1. Image sharpness index.

3. The Improved YOLOv5s Algorithm

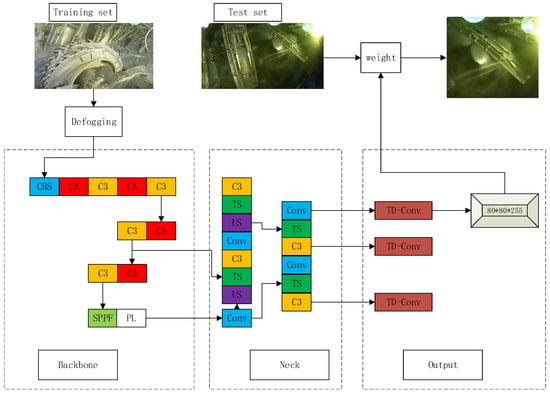

The shearer drum training set is defogged and then input into the improved YOLOv5s. The trained weight model is evaluated on the test set. The general flow chart for shearer drum identification is presented in Figure 3.

Figure 3. General flow chart for shearer drum identification.

A defogging algorithm based on guided filtering is applied to the image data of a fully mechanized mining face. The CA mechanism is added to the YOLOv5s backbone. The processed dataset images are input into the improved YOLOv5s for training to obtain the weights. The test set is used to verify the trained model. Finally, the shearer drum in the image is identified.

3.1. CA Mechanism-Based Approach to Shearer Drum Recognition Accuracy Enhancement

In the shearer drum images, the features extracted by the algorithm are incomplete because of the foggy environment and occlusion by other objects. In addition, the original target detection algorithm has a low recognition rate for obscured targets. The shearer drum position in the image is relatively stable and provides good position and direction information. Therefore, we use Coordinate Attention to improve the YOLOv5s algorithm. Compared to other attention mechanisms, Coordinate Attention is lightweight and flexible and considers both channel and position relationships. It not only captures cross-channel information but also includes direction-aware and position-sensitive information.

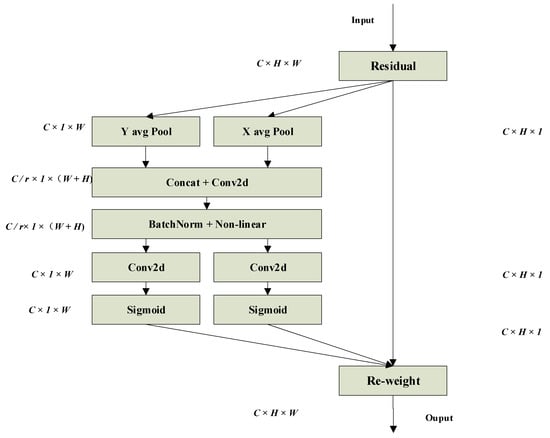

The Coordinate Attention mechanism is a novel and efficient attention mechanism. By embedding position information into channel attention, it captures shearer drum feature information over a wider range without introducing large overhead. To avoid the loss of shearer drum location information caused by 2D global pooling, this attention mechanism decomposes channel attention into two parallel 1D feature encodings. The input features are aggregated into two separate direction-aware feature mappings along the vertical and horizontal directions, respectively, which efficiently integrates the spatial coordinate information. The two feature mappings with embedded direction-specific information are subsequently encoded into two separate attention mappings, each capturing long-range dependencies of the input feature map along one spatial direction. The location information is therefore stored in the generated attention mappings. The two attention mappings are then multiplied with the input feature mapping to emphasize the representations of interest. The Coordinate Attention mechanism is presented in Figure 4.

Figure 4. Structure of the Coordinate Attention mechanism.

Specifically, given an input $X$, each channel is encoded along the horizontal and vertical coordinates using two spatial pooling kernels ($H$, 1) and (1, $W$), respectively. Thus, the output of the $c$-th channel at height $h$ can be presented in Equation (10).

$$z_c^h(h) = \frac{1}{W}\sum_{0 \le i < W} x_c(h, i) \tag{10}$$

Similarly, the output of the $c$-th channel at width $w$ can be presented in Equation (11).

$$z_c^w(w) = \frac{1}{H}\sum_{0 \le j < H} x_c(j, w) \tag{11}$$

The above two transformations aggregate features along the two spatial directions to generate a pair of direction-aware feature mappings. The aggregated feature mappings generated by Equations (10) and (11) are first concatenated and then passed to a shared 1 × 1 convolutional transform function $F_1$, as presented in Equation (12).

$$f = \delta\left(F_1\left(\left[z^h, z^w\right]\right)\right) \tag{12}$$

where $[\cdot,\cdot]$ denotes the concatenation operation along the spatial dimension, $\delta$ is a nonlinear activation function, and $f$ is an intermediate feature mapping that encodes spatial information in the horizontal and vertical directions. Then, $f$ is split into two independent tensors $f^h$ and $f^w$ along the spatial dimension. Two further convolutional transform functions $F_h$ and $F_w$ transform $f^h$ and $f^w$, respectively, into tensors with the same number of channels as the input $X$, as presented in Equations (13) and (14).

$$g^h = \sigma\left(F_h\left(f^h\right)\right) \tag{13}$$

$$g^w = \sigma\left(F_w\left(f^w\right)\right) \tag{14}$$

where $\sigma$ is the sigmoid function. The outputs $g^h$ and $g^w$ are expanded and used as attention weights, respectively. Finally, the output $Y$ of the Coordinate Attention mechanism is presented in Equation (15).

$$y_c(i, j) = x_c(i, j) \times g_c^h(i) \times g_c^w(j) \tag{15}$$
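The forward pass just described (directional pooling, concatenation, shared transform, split, two sigmoid gates, reweighting) can be traced with a small NumPy sketch. It is an illustration under simplifying assumptions rather than the network used in the paper: 1 × 1 convolutions are written as matrix products over the channel axis, batch normalization is omitted, the nonlinearity δ is taken to be h-swish, and the weight matrices `w1`, `wh` and `ww` are hypothetical random placeholders.

```python
import numpy as np

def h_swish(x):
    return x * np.clip(x + 3.0, 0.0, 6.0) / 6.0

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def coordinate_attention(x, w1, wh, ww):
    """Coordinate Attention forward pass for one feature map.

    x:  (C, H, W) input features
    w1: (M, C) shared 1x1 transform, M = reduced channel count
    wh: (C, M) 1x1 transform for the height branch
    ww: (C, M) 1x1 transform for the width branch
    """
    C, H, W = x.shape
    z_h = x.mean(axis=2)                       # (C, H): pool along width
    z_w = x.mean(axis=1)                       # (C, W): pool along height
    z = np.concatenate([z_h, z_w], axis=1)     # (C, H + W): spatial concatenation
    f = h_swish(w1 @ z)                        # (M, H + W): shared transform
    f_h, f_w = f[:, :H], f[:, H:]              # split back into the two directions
    g_h = sigmoid(wh @ f_h)                    # (C, H) attention along height
    g_w = sigmoid(ww @ f_w)                    # (C, W) attention along width
    return x * g_h[:, :, None] * g_w[:, None, :]

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 5, 7))
w1 = rng.standard_normal((4, 8))
wh = rng.standard_normal((8, 4))
ww = rng.standard_normal((8, 4))
y = coordinate_attention(x, w1, wh, ww)
```

Because both gates lie in (0, 1), each output value is a damped copy of the input value at the same position, with the damping depending on the row and the column separately. That separation is what lets the mechanism keep positional information that a single global pooling step would discard.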

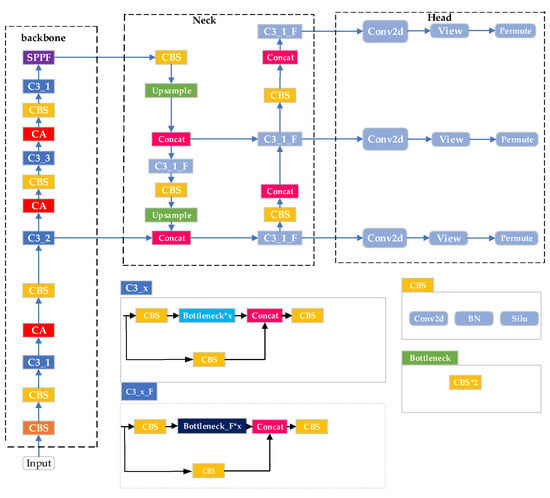

The improved YOLOv5s network structure is presented in Figure 5, in which the red module is the improved module.

Figure 5. The network structure of the improved YOLOv5s.

Features of the input image are extracted and downsampled by the CBS module and then further extracted by the first C3 convolutional module. The CA module is used in the backbone network to process the shearer drum position and attitude information extracted by C3. The last step in the backbone is the SPPF module. The information processed by the backbone is then transmitted to the neck.

3.2. Accelerated Inference of SIoU for Shearer Drum Target Detection Algorithm

YOLOv5s uses the Complete Intersection over Union (CIoU) as the loss function of the bounding box, while the logits loss function and binary cross-entropy (BCE) are used to calculate the loss of the target score and class probability, respectively. The CIoU is presented in Equations (16) and (17).

where $IoU$ denotes the intersection over union of the ground truth frame and the predicted frame, $\rho$ denotes the Euclidean distance between the center points of the predicted frame and the ground truth frame, $c$ denotes the diagonal length of the minimum enclosing frame of the predicted frame and the ground truth frame, $\alpha$ is a positive trade-off parameter, and $v$ measures the consistency of the aspect ratio between the predicted shearer drum frame and the ground truth frame. $\alpha$ and $v$ are presented in Equations (18) and (19).

where $w^{gt}$, $h^{gt}$ and $w$, $h$ denote the width and height of the ground truth and prediction boxes, respectively. The CIoU loss function integrates overlap area, center distance and aspect ratio, so it measures the relative position of the boxes well and can also optimize the prediction frame in the horizontal and vertical directions. However, it does not consider the direction mismatch between the shearer drum prediction frame and the shearer drum target frame, and this deficiency leads to slower convergence. To solve this problem, we replace the CIoU loss function with the SIoU loss function in this study. The SIoU calculation is presented in Equations (20) and (21).

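where $B$ and $B^{gt}$ denote the prediction frame and the ground truth frame, $\Omega$ denotes the shape cost, and $\Delta$ denotes the distance cost redefined to take the angle cost $\Lambda$ into account. $\Omega$ and $\Delta$ are presented in Equations (22) and (23).

where $\omega_w = \frac{|w - w^{gt}|}{\max(w, w^{gt})}$, $\omega_h = \frac{|h - h^{gt}|}{\max(h, h^{gt})}$, and $\theta$ in Equation (22) denotes the degree of concern for the shape cost; $\rho_x = \left(\frac{b_{c_x}^{gt} - b_{c_x}}{c_w}\right)^2$, $\rho_y = \left(\frac{b_{c_y}^{gt} - b_{c_y}}{c_h}\right)^2$, $\gamma = 2 - \Lambda$, where $\Lambda$ in Equation (23) is defined as

$$\Lambda = 1 - 2\sin^2\left(\arcsin\left(\frac{\left|b_{c_y}^{gt} - b_{c_y}\right|}{\sqrt{\left(b_{c_x}^{gt} - b_{c_x}\right)^2 + \left(b_{c_y}^{gt} - b_{c_y}\right)^2}}\right) - \frac{\pi}{4}\right)$$

where ($b_{c_x}^{gt}$, $b_{c_y}^{gt}$) and ($b_{c_x}$, $b_{c_y}$) denote the centroid coordinates of the shearer drum ground truth frame and prediction frame, respectively, and $c_w$ and $c_h$ in $\rho_x$ and $\rho_y$ denote the width and height of the minimum enclosing frame. The SIoU redefines the distance loss by introducing the vector angle between the desired regressions, which effectively reduces the degrees of freedom of the regression, accelerates the convergence of the network and further improves regression accuracy. Therefore, the SIoU loss is used as the loss function for bounding box regression.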
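To show how the two losses differ in practice, the sketch below computes both for a single pair of boxes given as (cx, cy, w, h). It assumes the commonly published forms, CIoU loss = 1 − IoU + ρ²/c² + αv and SIoU loss = 1 − IoU + (Δ + Ω)/2, with the angle, distance and shape costs as defined in the SIoU literature. The helper names and the default `theta=4.0` are illustrative assumptions; the paper does not report the value it used.

```python
import math

def _iou_and_hull(b, g):
    """IoU plus width/height of the smallest box enclosing b and g, boxes as (cx, cy, w, h)."""
    bx1, by1, bx2, by2 = b[0] - b[2] / 2, b[1] - b[3] / 2, b[0] + b[2] / 2, b[1] + b[3] / 2
    gx1, gy1, gx2, gy2 = g[0] - g[2] / 2, g[1] - g[3] / 2, g[0] + g[2] / 2, g[1] + g[3] / 2
    iw = max(0.0, min(bx2, gx2) - max(bx1, gx1))
    ih = max(0.0, min(by2, gy2) - max(by1, gy1))
    inter = iw * ih
    union = b[2] * b[3] + g[2] * g[3] - inter
    return inter / union, max(bx2, gx2) - min(bx1, gx1), max(by2, gy2) - min(by1, gy1)

def ciou_loss(b, g):
    iou, cw, ch = _iou_and_hull(b, g)
    rho2 = (b[0] - g[0]) ** 2 + (b[1] - g[1]) ** 2          # squared center distance
    c2 = cw ** 2 + ch ** 2                                  # squared enclosing diagonal
    v = 4 / math.pi ** 2 * (math.atan(g[2] / g[3]) - math.atan(b[2] / b[3])) ** 2
    alpha = v / ((1 - iou) + v) if v > 0 else 0.0           # trade-off weight
    return 1 - iou + rho2 / c2 + alpha * v

def siou_loss(b, g, theta=4.0):
    iou, cw, ch = _iou_and_hull(b, g)
    dx, dy = g[0] - b[0], g[1] - b[1]
    sigma = math.hypot(dx, dy)
    sin_alpha = min(1.0, abs(dy) / sigma) if sigma > 0 else 0.0
    angle = 1 - 2 * math.sin(math.asin(sin_alpha) - math.pi / 4) ** 2   # angle cost
    gamma = 2 - angle
    rho_x, rho_y = (dx / cw) ** 2, (dy / ch) ** 2
    dist = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))  # distance cost
    om_w = abs(b[2] - g[2]) / max(b[2], g[2])
    om_h = abs(b[3] - g[3]) / max(b[3], g[3])
    shape = (1 - math.exp(-om_w)) ** theta + (1 - math.exp(-om_h)) ** theta  # shape cost
    return 1 - iou + (dist + shape) / 2

truth = (5.0, 0.0, 2.0, 2.0)
far, near = (0.0, 0.0, 2.0, 2.0), (4.0, 0.0, 2.0, 2.0)
```

Both losses are zero for identical boxes and shrink as the prediction moves from `far` to `near`. The SIoU distance term additionally depends on the direction of the offset through `gamma`, which is the property the text credits for faster convergence.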

4. Experiments and Results Analysis

4.1. Setup of Data Set and Experimental Platform

The dataset used for the experiments was collected at the Huangling II mine in Shaanxi. A total of 5000 real images were obtained, and 1000 images with different angles and states were selected. In this study, the sample images were annotated and saved in the format of the PASCAL VOC dataset, and 70% of the images were randomly selected for training the model. The LAMBELING APP was used to annotate the training dataset with the minimum bounding rectangles of the hydraulic support face guard and the shearer drum, ensuring that each bounding box contained only the hydraulic support face guard or the shearer drum and as few background pixels as possible.

The experimental platform equipment and parameters are presented in Table 2.

Table 2. Configuration and parameters of experimental platform.

4.2. Evaluation Indicators

In this study, we use Average Precision (AP) and Mean Average Precision (mAP) as the evaluation index for target detection to evaluate trained models.

where $P$ is Precision and $R$ is Recall. TP (true positive) means that both the predicted and true values are positive, FP (false positive) means that the predicted value is positive while the true value is negative, and FN (false negative) means that the predicted value is negative while the true value is positive.
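For readers who want to reproduce the indicators, the following sketch uses the usual definitions P = TP / (TP + FP) and R = TP / (TP + FN), computes AP as the area under the precision-recall curve with all-point interpolation, and takes mAP as the mean of the per-class AP values. The interpolation scheme and the function names are assumptions, since the paper does not specify which AP variant was used.

```python
import numpy as np

def precision_recall(tp, fp, fn):
    """Precision and recall from raw counts; returns 0.0 when a denominator is empty."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def average_precision(scores, is_tp, n_gt):
    """Area under the precision-recall curve (all-point interpolation).

    scores: confidence of each detection
    is_tp:  1 if the detection matches a ground truth box, else 0
    n_gt:   number of ground truth boxes of this class
    """
    order = np.argsort(-np.asarray(scores, dtype=float))   # sort by descending confidence
    hits = np.asarray(is_tp, dtype=float)[order]
    tp = np.cumsum(hits)
    fp = np.cumsum(1.0 - hits)
    recall = np.concatenate([[0.0], tp / n_gt, [1.0]])
    precision = np.concatenate([[1.0], tp / (tp + fp), [0.0]])
    # replace each precision by the maximum precision at any higher recall
    precision = np.maximum.accumulate(precision[::-1])[::-1]
    return float(np.sum(np.diff(recall) * precision[1:]))

def mean_average_precision(ap_per_class):
    return float(np.mean(ap_per_class))
```

With two classes, such as the face guard and the shearer drum annotated in this dataset, `mean_average_precision` would simply average the two AP values.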

4.3. Experimental Validation

We conducted ablation experiments to verify the effectiveness of the defogging algorithm, the Coordinate Attention mechanism and the SIoU loss function. In the experiments, the training set used defogged and non-defogged datasets produced from the field data of Huangling II, and the validation set used a dataset produced from the field data of Huangling II. The recognition accuracy and processing speed of the various improved algorithms are presented in Table 3.

Table 3. Ablation experimental results.

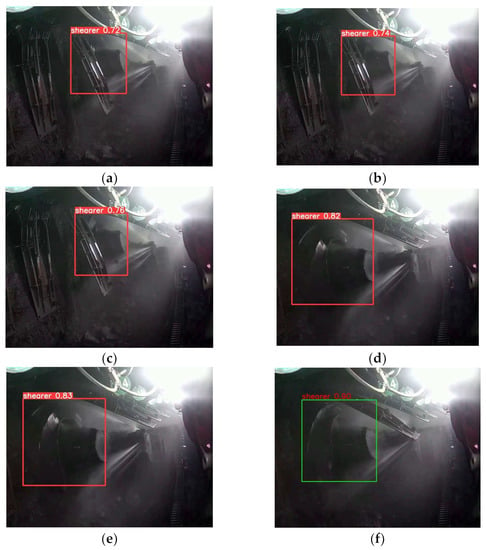

The results of the original algorithm are presented in Figure 6a. The recognition accuracy is not high because of the foggy environment and the effect of occlusion. The results of the algorithm with defogging added are presented in Figure 6b. The mAP increased from 72% to 74.1%, an increase of 2.1 percentage points. Adding defogging to detection has little impact on the detection speed of YOLOv5s, while the recognition accuracy improves. The results of the algorithm improved by CA are presented in Figure 6c. The mAP increased from 72% to 76.1%, an increase of 4.1 percentage points. CA enables the network to obtain information over a larger area by embedding shearer drum position information into the channel attention, making shearer drum information easier to extract. It can therefore be concluded that the CA mechanism improves detection accuracy by embedding shearer drum position information into channel attention and increasing the weight of the shearer drum in the interference state. The results of the algorithm improved with CA and defogging are presented in Figure 6d. The mAP increased from 72% to 82%, an increase of 10 percentage points. The improved algorithm with defogging and CA is effective in increasing recognition accuracy and speed. The results of the algorithm improved with CA, defogging and SIoU are presented in Figure 6e. The mAP increased from 72% to 83%, an increase of 11 percentage points. SIoU redefines the distance loss by introducing the vector angle between the required regressions, which effectively reduces the degrees of freedom of the regression, speeds up network convergence and further improves regression accuracy. Figure 6f shows the results of the algorithm improved with CA, defogging and SIoU on an arbitrary image from a different time period than the previous one, which verifies the improved recognition.

Figure 6. Shearer drum recognition results for various improved algorithms. (a) YOLOv5. (b) Defogging. (c) CA. (d) Defogging + CA. (e) Defogging + CA + SIoU. (f) The result of another frame.

5. Discussion

We compare the proposed method with existing deep learning methods to highlight its advantages, and we group the current mainstream algorithms into one-stage and two-stage algorithms. The detection accuracy of the proposed algorithm is 90.3%, which is higher than that of most one-stage and two-stage algorithms. Because the proposed algorithm is a one-stage algorithm, it also has a clear advantage in detection speed over two-stage algorithms. These results indicate that the designed backbone network and defogging process are effective. The specific results of the experiment are presented in Table 4.

Table 4. Individual algorithm testing indicators for fully mechanical working faces.

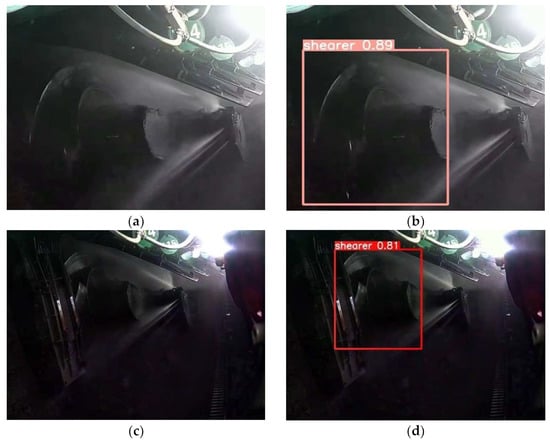

This target detection algorithm was deployed at the Huangling II mine, where we compared the original algorithm with the improved algorithm. As shown in Figure 7a, the original algorithm fails to recognize the shearer drum when the hydraulic support face guard and the shearer drum are about to interfere with each other, because of the fog and dust caused by coal mining. As shown in Figure 7b, the improved YOLOv5s recognizes the shearer drum when a collision between the hydraulic support face guard and the shearer drum is imminent, with an accuracy of 89.3%. As shown in Figure 7c, YOLOv5s is unable to recognize the shearer drum because the hydraulic support face guard obscures it. As shown in Figure 7d, the improved YOLOv5s extracts the location information and recognizes the drum. This experiment demonstrates the effectiveness of the proposed algorithm for identifying the shearer drum based on the improved YOLOv5s with dark channel-guided filtering defogging.

Figure 7. Identification results of a shearer drum in fog interference and hydraulic support face guard shielding conditions. (a) The original algorithm results in scenario one. (b) The proposed algorithm results in scenario one. (c) The original algorithm results in scenario two. (d) The proposed algorithm results in scenario two.

In this study, we propose a method to recognize the shearer drum in the harsh environment of a fully mechanized mining face. The experimental results show that occlusion by the hydraulic support face guard and the foggy environment have a great influence on the detection accuracy of the shearer drum. Our proposed method effectively addresses both problems. For future research in this field, we believe that more advanced defogging methods should be developed. We hope that these experimental results will serve as useful feedback for improving the identification of interference states between the hydraulic support face guard and the shearer drum.

6. Conclusions

An improved YOLOv5s deep learning method with dark channel guided filtering defogging is proposed for shearer drum recognition in foggy environments and under obscured conditions. The proposed method is verified by experiments. The following conclusions are drawn:

- In terms of fog interference in underground coal mines, a dark channel-guided filtering defogging algorithm is proposed. The results show that the dark channel-guided filtering defogging algorithm can reduce the impact of fog and improve the accuracy of the detection algorithm.

- To address the lack of location information extraction in the YOLOv5s target detection algorithm, an improved backbone network based on the Coordinate Attention mechanism is proposed. The results showed that the mAP of YOLOv5s increased by 5.1% from 72% to 77.1%.

- To address the slow network convergence caused by the large freedom of loss function regression in YOLOv5s, the SIoU is taken as the loss function of the YOLOv5s algorithm. The experimental results show that the accuracy and speed of YOLOv5s detection are improved overall.

- This method was deployed in the Huangling II mine to identify shearer drums. The results show an average identification accuracy of 89.3% and a recognition speed of 48.8 frames/s in the unobstructed scenario, and an average identification accuracy of 81.2% and a recognition speed of 40 frames/s in the sheltered scenario. The proposed method can meet the identification requirements in a fully mechanized mining face.

- At present we have mainly studied shearer drum identification. An anti-collision warning method for shearer drum and hydraulic support guard plates is a future research direction.

Author Contributions

For this research article, Q.M. was in charge of the methodology; M.W. was in charge of the writing, review and data analysis; J.Z., X.X. and X.H. were in charge of the data curation and field inspection. All authors have read and agreed to the published version of the manuscript.

Funding

General project of Shaanxi coal joint fund of Shaanxi Provincial Department of science and Technology (2019JLM-39). Shaanxi science and technology innovation team project (2018TD-032). Coal Mine Electromechanical System Intelligent Measurement and Control Innovation Team Project (2022TD-043).

Data Availability Statement

Not applicable.

Acknowledgments

The study was approved by Xi’an University of Science and Technology.

Conflicts of Interest

The authors declare no conflict of interest.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).