Short-Term Power Load Forecasting Based on Feature Filtering and Error Compensation under Imbalanced Samples

Abstract

1. Introduction

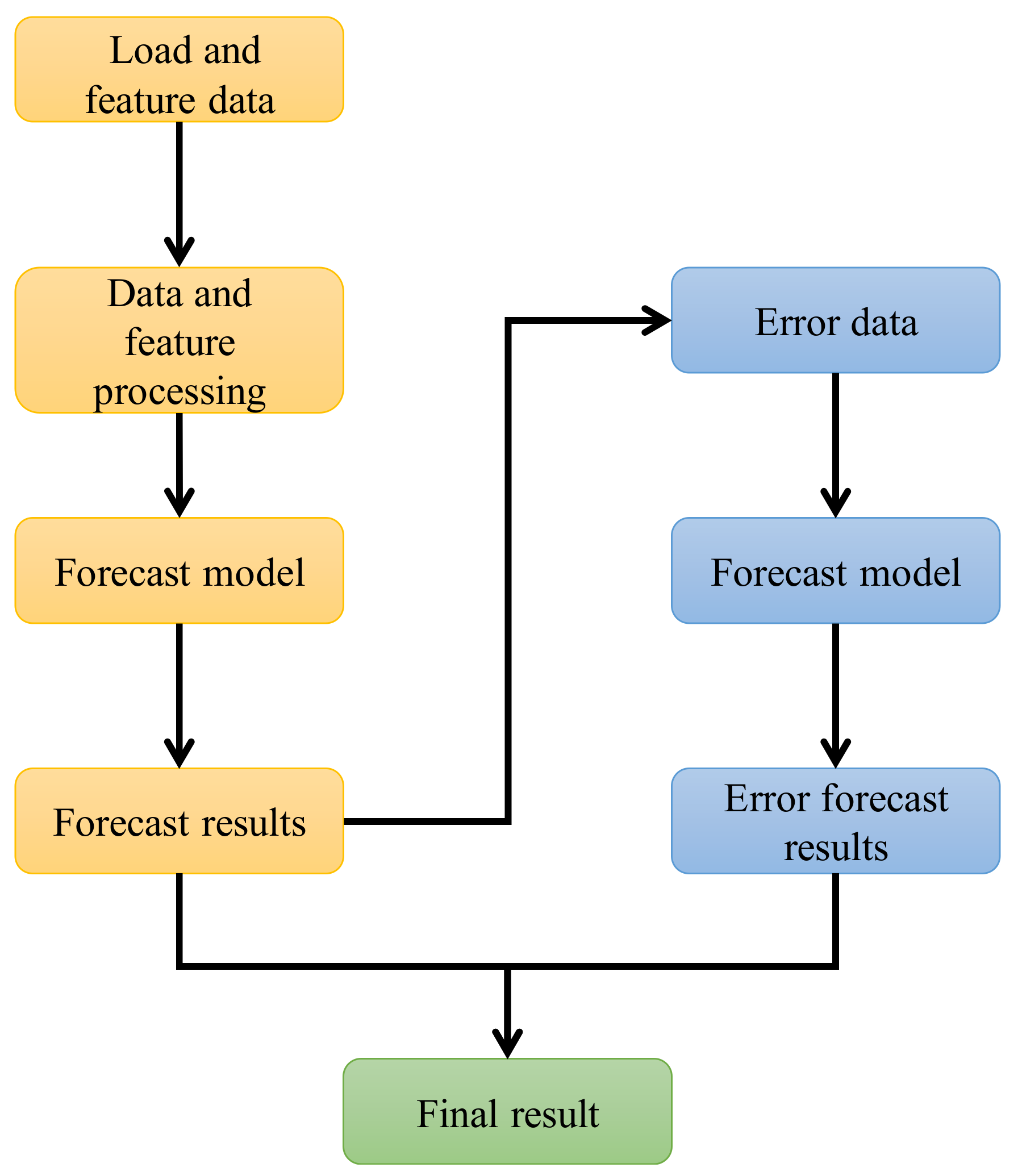

- An error compensation model predicts the residual errors of the original forecast, and adding these predicted errors back to the original results improves overall prediction accuracy.

- Expanding the minority-sample data alleviates the imbalance of the load data and improves prediction accuracy in the minority-sample region.

- The improved GRU maintains prediction accuracy while simplifying the model structure and improving overall computational efficiency.

2. Data Processing Methods

2.1. Feature Selection Process Based on Kernel Principal Component Analysis

- Standardize the data, then compute the kernel matrix K, using the radial basis function (RBF) kernel $K_{ij} = \exp\left(-\lVert x_i - x_j \rVert^2 / (2\sigma^2)\right)$ to map the original data from the data space to the feature space.

- Center the kernel matrix: $\tilde{K} = K - \mathbf{1}_N K - K \mathbf{1}_N + \mathbf{1}_N K \mathbf{1}_N$, where $\mathbf{1}_N$ is the $N \times N$ matrix with every element equal to $1/N$.

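- Calculate the eigenvalues and eigenvectors of the centered kernel matrix. The eigenvalues determine the magnitude of the variance along each principal direction. Sort the eigenvalues in descending order, $\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_N$, and arrange the corresponding eigenvectors in the same order.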

- Apply Gram-Schmidt orthogonalization and normalization to the eigenvectors to obtain the principal component sequence.

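- Calculate the contribution rate of each eigenvalue, $\eta_i = \lambda_i / \sum_{j=1}^{N} \lambda_j$, where $\lambda_i$ is the i-th largest eigenvalue, and retain the first t principal components as features when their contribution rate exceeds the preset requirement p.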
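The KPCA steps above can be sketched in NumPy as follows. This is a minimal sketch, not the authors' code: the RBF parameter `gamma` (equal to $1/(2\sigma^2)$), the threshold `p`, and the use of a cumulative contribution rate to choose t are assumptions. Because `numpy.linalg.eigh` already returns orthonormal eigenvectors for a symmetric matrix, it stands in for the explicit Gram-Schmidt step.

```python
import numpy as np


def rbf_kernel(X, gamma):
    """RBF kernel matrix K_ij = exp(-gamma * ||x_i - x_j||^2)."""
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    return np.exp(-gamma * np.maximum(d2, 0.0))  # clip tiny negative round-off


def kpca(X, gamma=0.5, p=0.85):
    """Return (projected components, contribution rates, number kept t).

    Assumption: t is the smallest count whose cumulative contribution reaches p.
    """
    # Step 1: standardize (assumes no constant columns) and build the kernel matrix.
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    N = Xs.shape[0]
    K = rbf_kernel(Xs, gamma)
    # Step 2: center the kernel matrix with the all-1/N matrix.
    one_n = np.full((N, N), 1.0 / N)
    Kc = K - one_n @ K - K @ one_n + one_n @ K @ one_n
    # Step 3: eigendecomposition, reordered to descending eigenvalues.
    eigvals, eigvecs = np.linalg.eigh(Kc)
    order = np.argsort(eigvals)[::-1]
    eigvals = np.clip(eigvals[order], 0.0, None)
    eigvecs = eigvecs[:, order]
    # Step 4: eigh's eigenvectors are already orthonormal (replaces Gram-Schmidt).
    # Step 5: contribution rates and cumulative selection of t components.
    contrib = eigvals / eigvals.sum()
    t = min(int(np.searchsorted(np.cumsum(contrib), p)) + 1, N)
    # Projections of the training points: Kc @ (v / sqrt(lambda)) = sqrt(lambda) * v.
    components = eigvecs[:, :t] * np.sqrt(eigvals[:t])
    return components, contrib, t
```

For example, `kpca(np.random.default_rng(0).normal(size=(50, 4)), gamma=0.5, p=0.85)` returns a 50 × t component matrix together with the eigenvalue contribution rates used to choose t.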

2.2. Sample Expansion Method Based on the Synthetic Minority Based on Probabilistic Distribution (SyMProD) Algorithm

1. Conduct sample screening.

2. Select minority samples and synthesize new samples from them.
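A simplified sketch of these two steps follows. It is not the full SyMProD algorithm: as assumptions, screening here only removes minority samples whose per-feature z-score exceeds a threshold, and synthesis uses SMOTE-style linear interpolation between a minority sample and one of its k nearest minority neighbors. The function `expand_minority` and its parameters are hypothetical.

```python
import numpy as np


def expand_minority(X_min, n_new, k=5, z_thresh=3.0, seed=0):
    """Screen minority samples, then synthesize n_new samples by interpolation.

    Hypothetical stand-in for SyMProD: z-score screening + SMOTE-style synthesis.
    """
    rng = np.random.default_rng(seed)
    # Step 1 (screening): drop samples with any feature |z| >= z_thresh.
    mu, sigma = X_min.mean(axis=0), X_min.std(axis=0)
    z = np.abs((X_min - mu) / np.where(sigma > 0, sigma, 1.0))
    kept = X_min[(z < z_thresh).all(axis=1)]
    # Step 2 (selection): k nearest minority neighbors of each kept sample.
    d = np.linalg.norm(kept[:, None, :] - kept[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)  # a sample is not its own neighbor
    k = min(k, len(kept) - 1)
    neighbors = np.argsort(d, axis=1)[:, :k]
    # Step 2 (synthesis): new point = seed + gap * (neighbor - seed), gap in [0, 1).
    seeds = rng.integers(len(kept), size=n_new)
    partners = neighbors[seeds, rng.integers(k, size=n_new)]
    gap = rng.random((n_new, 1))
    return kept[seeds] + gap * (kept[partners] - kept[seeds])
```

Because each synthetic point lies on a segment between two retained minority samples, the new data stay inside the region already occupied by the screened minority class.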

3. Modelling and Improvement of the Main Algorithm

3.1. Error Compensation Model
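The error compensation idea summarized in the Introduction (predict the residual errors of the base forecast, then add the predicted errors back) can be sketched as a two-stage fit. This is a minimal sketch under stated assumptions: both stages are ordinary least-squares linear models standing in for the paper's neural base forecaster and error model, the helpers `fit_linear` and `error_compensated_forecast` are hypothetical, and the error model is fitted in-sample for brevity, whereas in practice it would be trained on residuals from historical or held-out data.

```python
import numpy as np


def fit_linear(X, y):
    """Least-squares linear model with intercept; returns a predict function."""
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return lambda Xn: np.column_stack([Xn, np.ones(len(Xn))]) @ coef


def error_compensated_forecast(X_base, X_err, y):
    """Return (base forecast, compensated forecast = base + predicted error)."""
    base_model = fit_linear(X_base, y)
    base_pred = base_model(X_base)
    residual = y - base_pred              # errors of the original forecast
    err_model = fit_linear(X_err, residual)
    err_pred = err_model(X_err)           # predicted errors
    return base_pred, base_pred + err_pred


if __name__ == "__main__":
    t = np.linspace(0, 4 * np.pi, 200)
    y = 10 + 2 * t + 3 * np.sin(t)        # trend + periodic component
    base, comp = error_compensated_forecast(
        t[:, None], np.column_stack([np.sin(t), np.cos(t)]), y)
    print(np.sqrt(np.mean((y - base) ** 2)), np.sqrt(np.mean((y - comp) ** 2)))
```

In the toy demo the base model captures only the linear trend, so the periodic component remains in its residuals, and the error model recovers most of it.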

3.2. Optimizing VMD with WOA

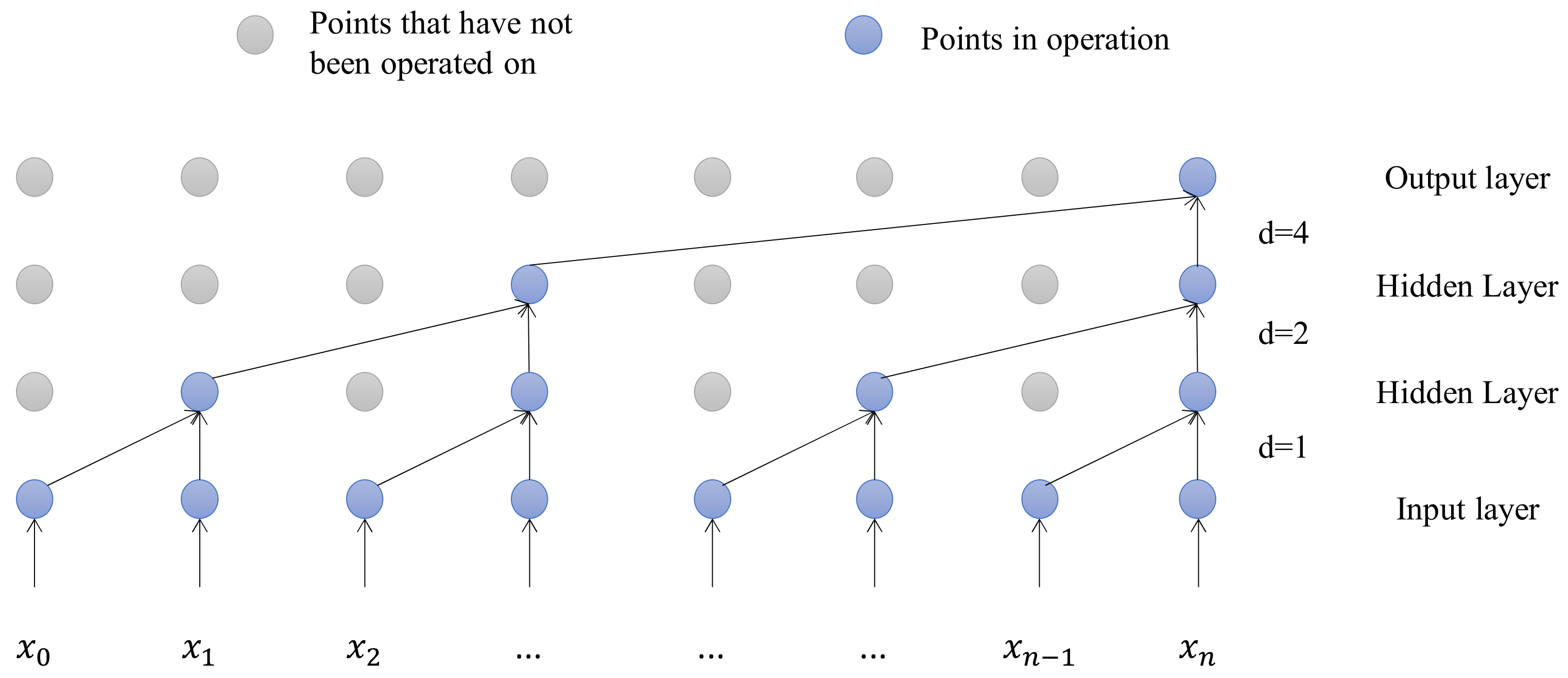
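For reference, the standard whale optimization algorithm (Mirjalili and Lewis, 2016) alternates between encircling the best solution, a spiral "bubble-net" update around it, and exploration toward a randomly chosen whale. The sketch below minimizes a generic fitness function `f`. When WOA tunes VMD, `f` would presumably score a candidate (number of modes K, penalty factor α) by a decomposition-quality criterion such as mean envelope entropy, but that coupling is an assumption and is not shown; `woa_minimize` is a hypothetical helper.

```python
import numpy as np


def woa_minimize(f, lower, upper, n_whales=20, n_iter=100, b=1.0, seed=0):
    """Minimal standard WOA minimizing f over the box [lower, upper]."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size
    X = rng.uniform(lower, upper, size=(n_whales, dim))
    scores = np.array([f(x) for x in X])
    best = X[np.argmin(scores)].copy()
    best_f = float(scores.min())
    for it in range(n_iter):
        a = 2.0 - 2.0 * it / n_iter           # decreases linearly from 2 toward 0
        for i in range(n_whales):
            A = 2.0 * a * rng.random() - a    # |A| < 1: exploit, |A| >= 1: explore
            C = 2.0 * rng.random()
            if rng.random() < 0.5:
                # Encircle the best whale, or move relative to a random whale.
                target = best if abs(A) < 1 else X[rng.integers(n_whales)]
                D = np.abs(C * target - X[i])
                X[i] = target - A * D
            else:
                # Logarithmic spiral (bubble-net) update around the best whale.
                l = rng.uniform(-1.0, 1.0)
                D = np.abs(best - X[i])
                X[i] = D * np.exp(b * l) * np.cos(2 * np.pi * l) + best
            X[i] = np.clip(X[i], lower, upper)
            score = f(X[i])
            if score < best_f:
                best_f, best = float(score), X[i].copy()
    return best, best_f
```

Usage: `woa_minimize(lambda x: float(np.sum(x ** 2)), [-5, -5], [5, 5])` should return a point close to the origin for this sphere test function.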

3.3. Feature Extraction Using Temporal Convolutional Networks

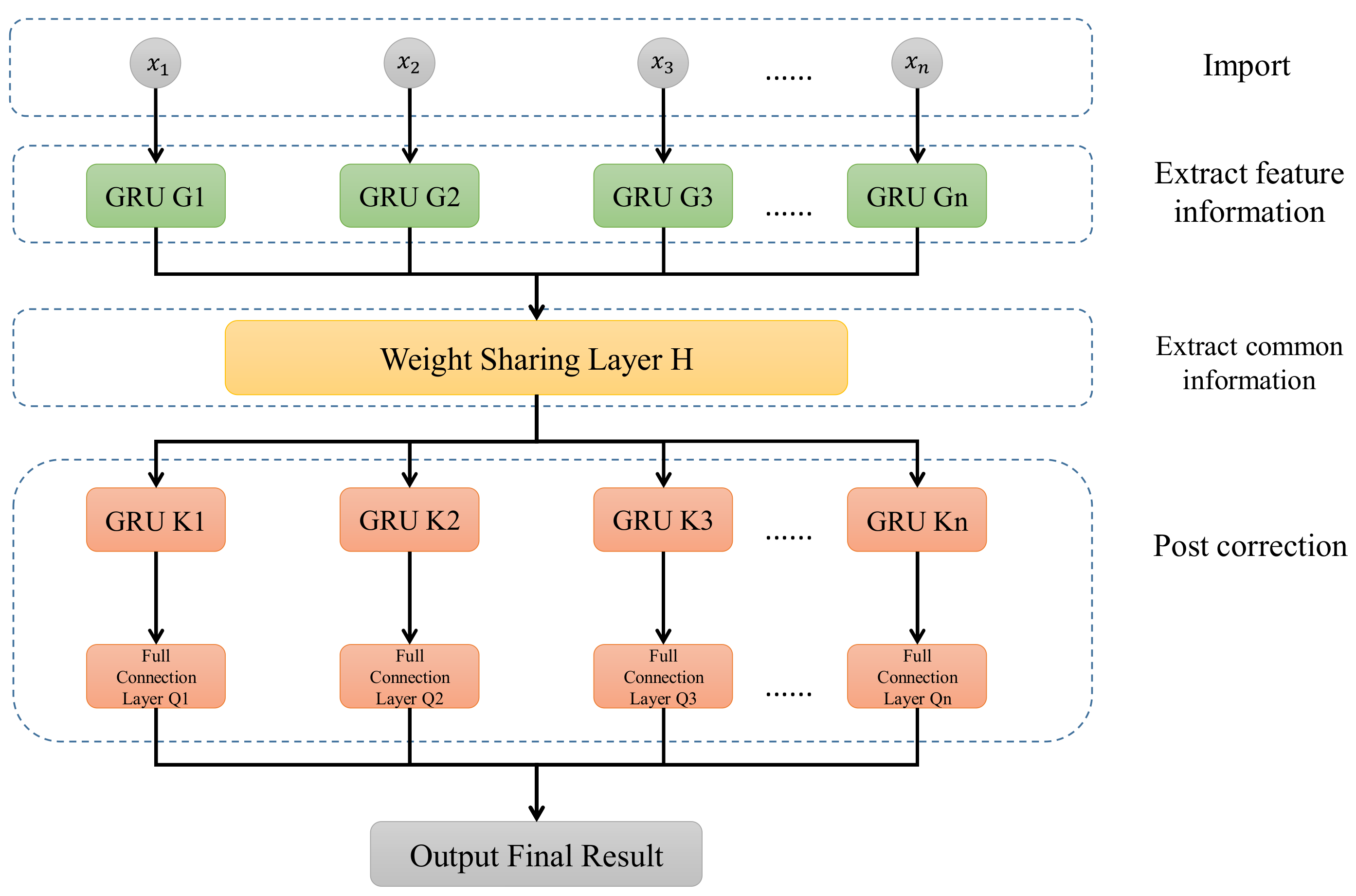
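Temporal convolutional networks are built from dilated causal convolutions: the output at time t depends only on inputs at t and earlier, and stacking layers with dilations 1, 2, 4, ... grows the receptive field exponentially. The single-channel NumPy sketch below illustrates that mechanism under simplifying assumptions (one convolution per residual block, a kernel `w` shared across layers, no weight normalization or dropout). It is not the paper's TCN configuration, and the helper names are hypothetical.

```python
import numpy as np


def dilated_causal_conv1d(x, w, dilation):
    """y[t] = sum_k w[k] * x[t - k*dilation], treating x[t < 0] as zero."""
    x = np.asarray(x, dtype=float)
    y = np.zeros_like(x)
    for k, wk in enumerate(np.asarray(w, dtype=float)):
        shift = k * dilation
        if shift == 0:
            y += wk * x
        elif shift < len(x):
            y[shift:] += wk * x[:-shift]   # only past values contribute
    return y


def receptive_field(kernel_size, dilations):
    """Number of past steps (including t) visible after stacking the layers."""
    return 1 + (kernel_size - 1) * sum(dilations)


def tcn_stack(x, w, dilations=(1, 2, 4)):
    """Residual blocks: h <- ReLU(h + conv(h)) with growing dilation."""
    h = np.asarray(x, dtype=float)
    for d in dilations:
        h = np.maximum(h + dilated_causal_conv1d(h, w, d), 0.0)
    return h
```

With kernel size 2 and dilations (1, 2, 4), `receptive_field(2, (1, 2, 4))` gives 8, so each output sees the current step and the seven preceding ones.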

3.4. Improved Gated Recurrent Unit

4. Results and Discussions

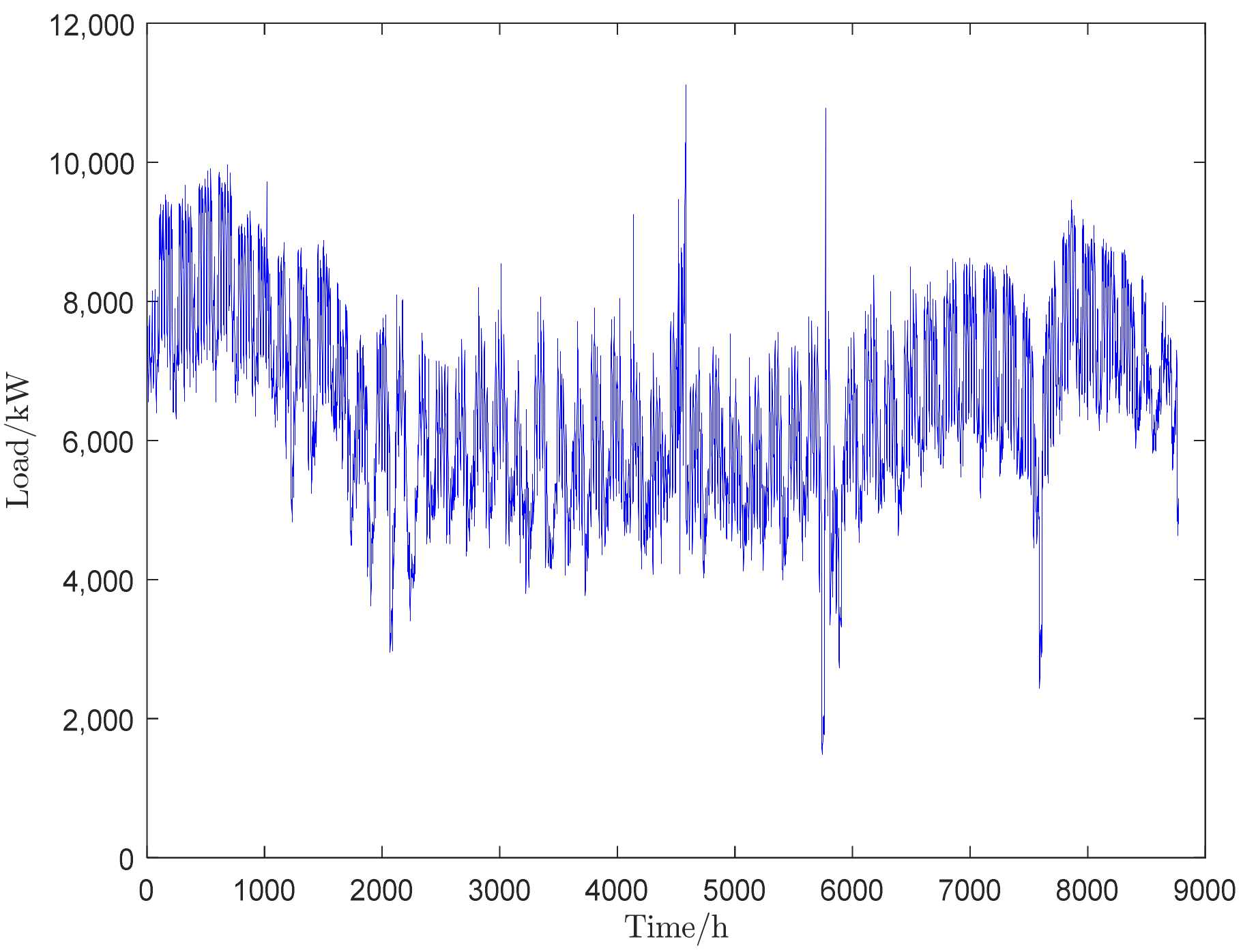

4.1. Experimental Background and Evaluation Indicators
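The comparison tables report MAE, MAPE, and RMSE. Under their standard definitions, with $y_i$ the actual load and $\hat{y}_i$ the forecast over n points, they can be computed as below. This is a minimal sketch; MAPE assumes that no actual load value is zero.

```python
import numpy as np


def mae(y, yhat):
    """Mean absolute error, in the units of y (kW here)."""
    y, yhat = np.asarray(y, float), np.asarray(yhat, float)
    return float(np.mean(np.abs(y - yhat)))


def mape(y, yhat):
    """Mean absolute percentage error, in % (undefined if any y is 0)."""
    y, yhat = np.asarray(y, float), np.asarray(yhat, float)
    return float(np.mean(np.abs((y - yhat) / y)) * 100.0)


def rmse(y, yhat):
    """Root mean square error, in the units of y (kW here)."""
    y, yhat = np.asarray(y, float), np.asarray(yhat, float)
    return float(np.sqrt(np.mean((y - yhat) ** 2)))
```

For actual loads [100, 200] kW and forecasts [110, 190] kW, MAE and RMSE are both 10 kW and MAPE is 7.5%; RMSE exceeds MAE whenever the errors differ in size, because squaring weights large errors more heavily.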

4.2. Feature Filtering Process

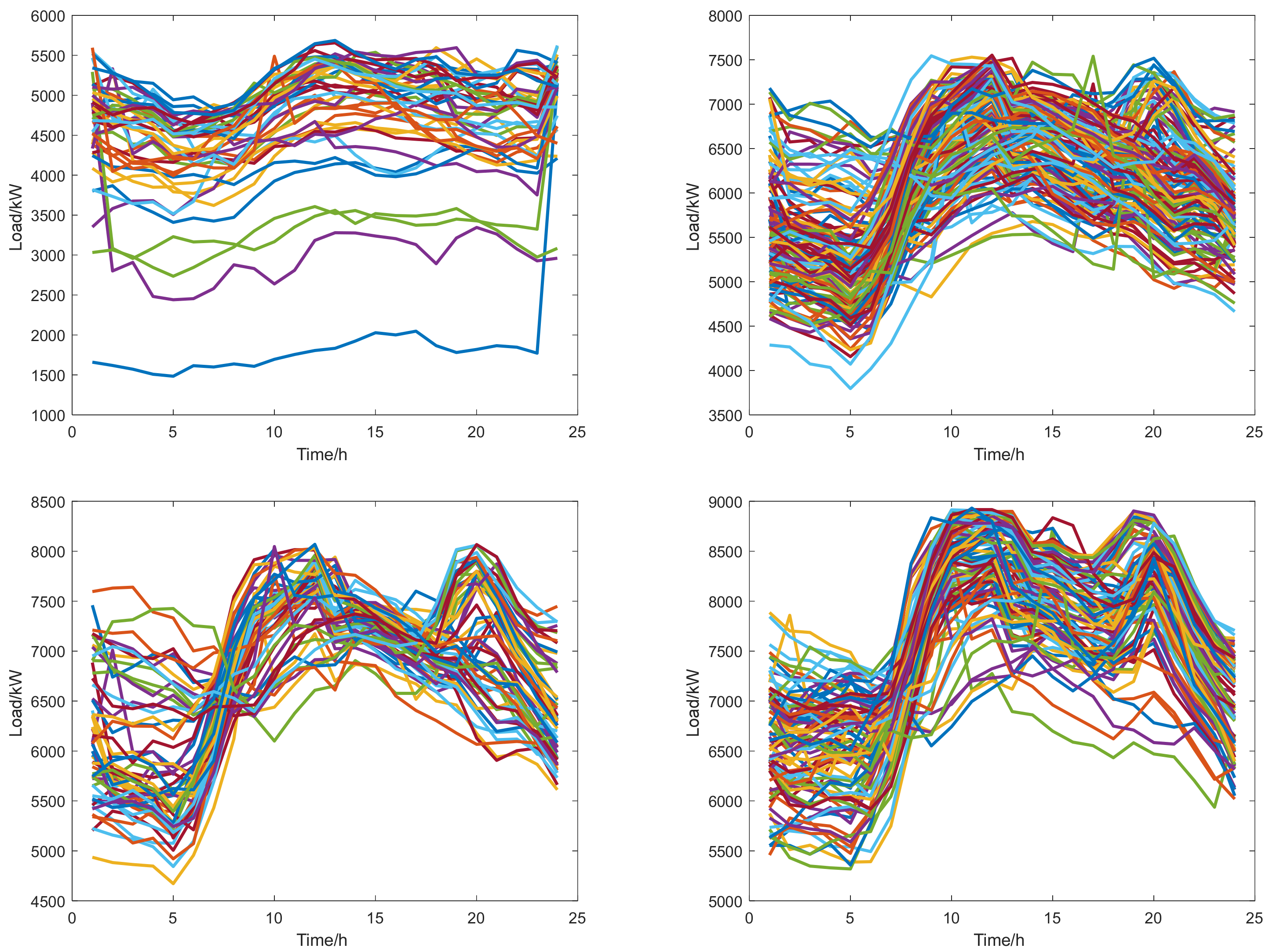

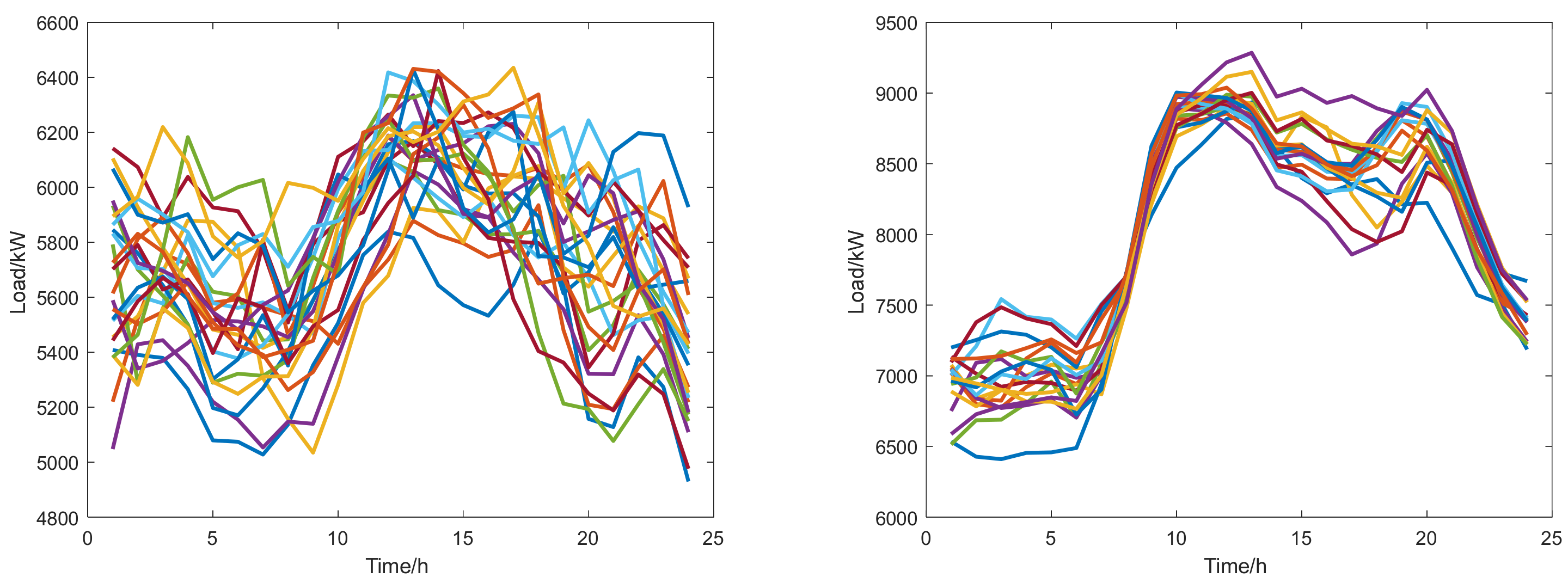

4.3. SyMProD-Based Sample Expansion

4.4. Experimental Results and Analysis

4.4.1. Comparison Experiments on Incremental Algorithm Improvements

4.4.2. Comparison Experiments with Other Algorithms

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| No. | Feature | Contribution Rate | Retained (Threshold 0.5) |
|---|---|---|---|
| 1 | Average temperature | 0.854 | Yes |
| 2 | Humidity | 0.843 | Yes |
| 3 | Wind speed | 0.732 | Yes |
| 4 | Date type | 0.712 | Yes |
| 5 | Precipitation | 0.665 | Yes |
| 6 | Illumination | 0.547 | Yes |
| 7 | Air pressure | 0.331 | No |
| 8 | Air visibility | 0.318 | No |
| 9 | Air density | 0.201 | No |

| Method | Main Algorithm Runtime | Total Running Time (Including Parameter Tuning) |
|---|---|---|
| Method 1 | VMD: 16 min; GRU: 141 min | 201 min |
| Method 2 | VMD: 16 min; TCN: 47 min; GRU: 101 min | 208 min |
| Method 3 | WOA-VMD: 23 min; TCN: 69 min; IGRU: 77 min | 189 min |
| Method 4 | WOA-VMD: 23 min; TCN: 71 min; IGRU: 94 min | 188 min |
| Method in this paper | WOA-VMD: 23 min; TCN: 67 min; IGRU: 80 min | 170 min |

Appendix B

Appendix C

- Experimental software: Python 3.8; PyCharm 2018

- Experimental environment: PyTorch; Keras; TensorFlow

- Computer configuration:

    - CPU: Intel Core i5-4200U

    - GPU: NVIDIA GeForce GT 740M

    - RAM: 16 GB

- Operating system: Windows 10 Professional Edition

Abbreviations

- Empirical mode decomposition (EMD)

- Variational mode decomposition (VMD)

- Whale optimization algorithm (WOA)

- Long short-term memory (LSTM)

- Gated recurrent unit (GRU)

- Synthetic minority based on probabilistic distribution (SyMProD)

- Kernel principal component analysis (KPCA)

- Mean envelope entropy (MEE)

- Temporal convolutional networks (TCN)

- Mean absolute error (MAE)

- Mean absolute percentage error (MAPE)

- Root mean square error (RMSE)

- Extreme gradient boosting (XGBoost)

- Light gradient boosting machine (LightGBM)

- Least squares support vector machine (LSSVM)


| Method | Algorithm Used | Algorithm Settings |
|---|---|---|
| Method 1 | VMD-GRU | Neurons: 128; activation function: ReLU; learning rate: 0.01; optimizer: Adam |
| Method 2 | VMD-TCN-GRU | Neurons: 128; activation function: ReLU; learning rate: 0.01; optimizer: Adam |
| Method 3 | WOA-VMD-TCN-improved GRU | Neurons: 128; activation function: ReLU; learning rate: 0.01; optimizer: Adam |
| Method 4 | WOA-VMD-TCN-improved GRU with minority sample expansion | Neurons: 128; activation function: ReLU; learning rate: 0.01; optimizer: Adam |
| Method in this paper | WOA-VMD-TCN-improved GRU with minority sample expansion, feature screening, and an error compensation model | Neurons: 128; activation function: ReLU; learning rate: 0.01; optimizer: Adam |

| Method | MAE/kW | MAPE/% | RMSE/kW |
|---|---|---|---|
| Method 1 | 263.393 | 4.13 | 417.397 |
| Method 2 | 250.305 | 3.87 | 302.359 |
| Method 3 | 194.916 | 3.18 | 310.693 |
| Method 4 | 108.373 | 1.72 | 177.442 |
| Method in this paper | 61.779 | 0.97 | 84.637 |

| Method | MAE/kW | MAPE/% | RMSE/kW |
|---|---|---|---|
| Method 1 | 599.462 | 8.98 | 936.248 |
| Method 2 | 441.105 | 6.61 | 479.568 |
| Method 3 | 318.029 | 4.73 | 416.602 |
| Method 4 | 128.223 | 1.92 | 297.485 |
| Method in this paper | 38.586 | 0.58 | 45.825 |

| Method | Algorithm Used | Algorithm Settings |
|---|---|---|
| Method 1 | Extreme gradient boosting (XGBoost) | Number of trees: 100; maximum tree depth: 20; leaf nodes: 40 |
| Method 2 | Light gradient boosting machine (LightGBM) | Number of trees: 100; maximum tree depth: 20; leaf nodes: 40 |
| Method 3 | Least squares support vector machine (LSSVM) | Kernel function: RBF; learning rate: 0.01 |
| Method 4 | LSTM | Neurons: 128; activation function: ReLU; learning rate: 0.01; optimizer: Adam |
| Method in this paper | WOA-VMD-TCN-improved GRU | Neurons: 128; activation function: ReLU; learning rate: 0.01; optimizer: Adam |

| Method | MAE/kW | MAPE/% | RMSE/kW |
|---|---|---|---|
| Method 1 | 124.053 | 2.53 | 153.771 |
| Method 2 | 98.312 | 1.82 | 123.594 |
| Method 3 | 94.052 | 1.79 | 119.776 |
| Method 4 | 86.351 | 1.57 | 108.573 |
| Method in this paper | 50.561 | 0.96 | 67.511 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wan, Z.; Li, H. Short-Term Power Load Forecasting Based on Feature Filtering and Error Compensation under Imbalanced Samples. Energies 2023, 16, 4130. https://doi.org/10.3390/en16104130
