Power Quality Disturbance Classification Based on Parallel Fusion of CNN and GRU

Abstract

1. Introduction

- (1)

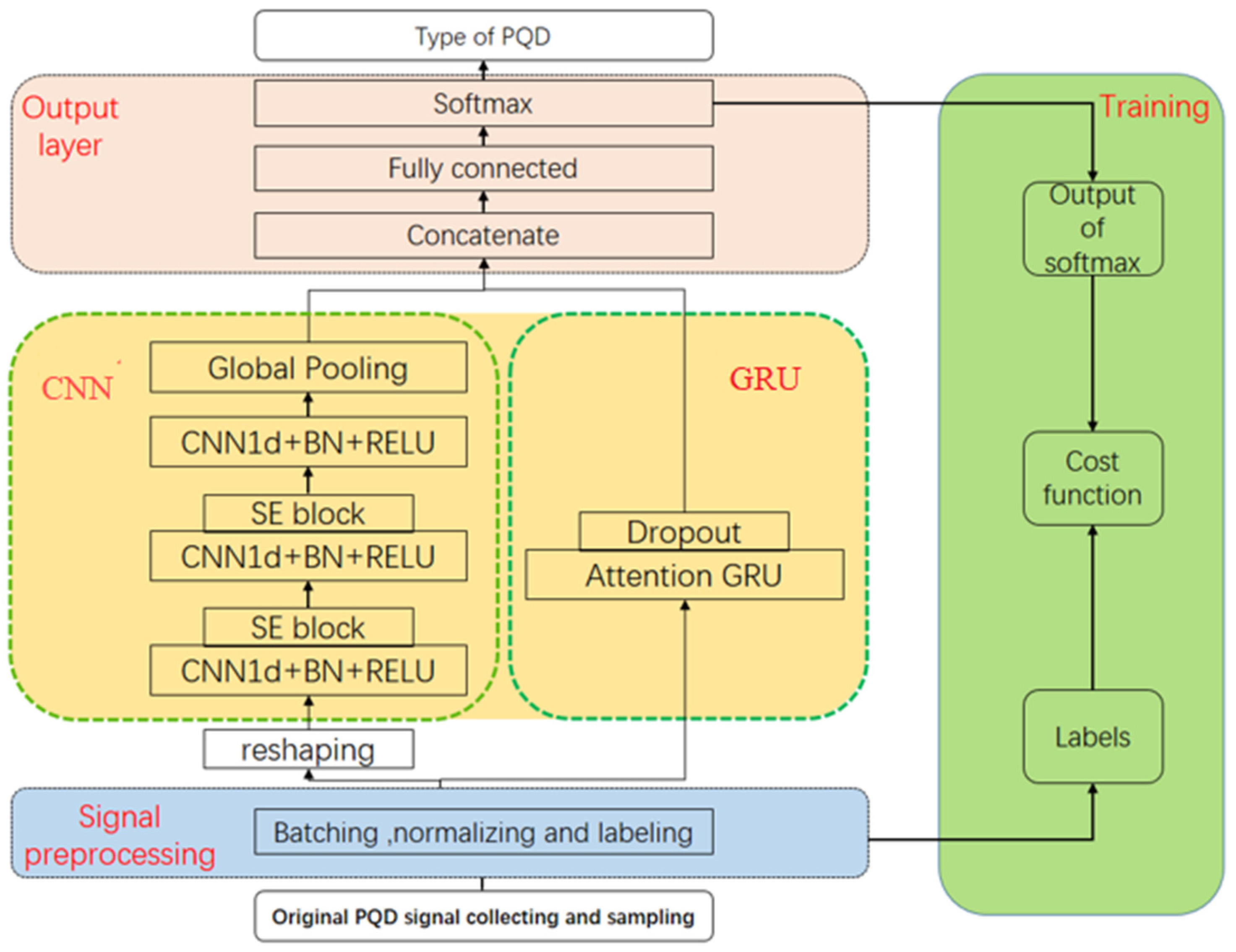

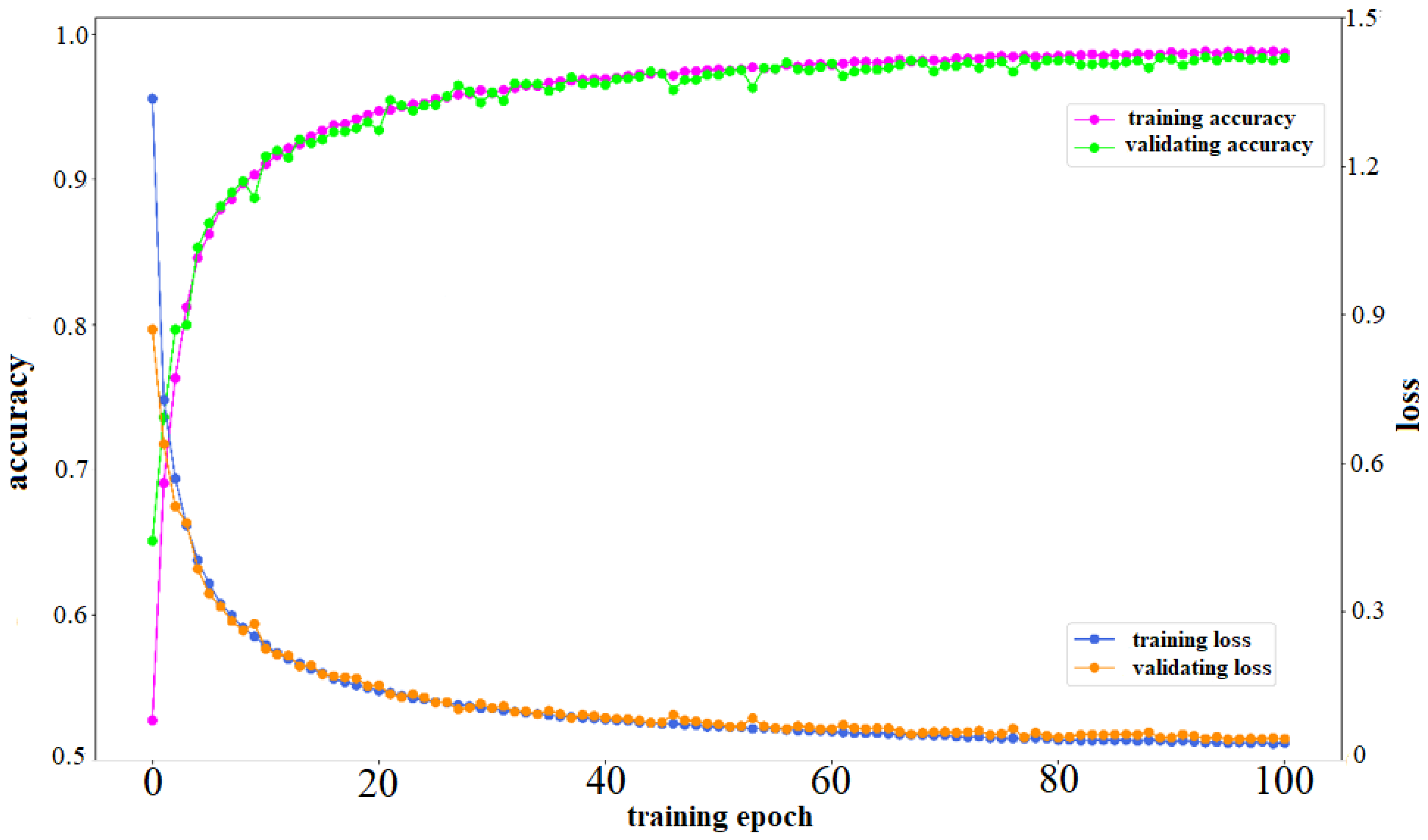

- This paper proposes a novel parallel constructional network (called CNN-GRU-P) composed of a CNN block and a GRU network block for classifying PQDs. By transmitting PQDs into both network blocks simultaneously, the proposed method provides a more comprehensive understanding of the PQDs from two different views. The output of the two networks is fused through the fully connected layer and then transmitted to the Softmax activation layer for classification to obtain a more accurate classification result.

- (2) A CNN is used to extract short-term features from the input PQDs. To further improve classification accuracy, a squeeze-and-excitation (SE) operation is incorporated into the convolutional block. The squeeze operation extracts contextual information, while the excitation operation captures channel-wise dependencies. The SE blocks recalibrate the weights of the feature channels, adaptively enhancing channels that carry important information and suppressing irrelevant ones.

- (3)

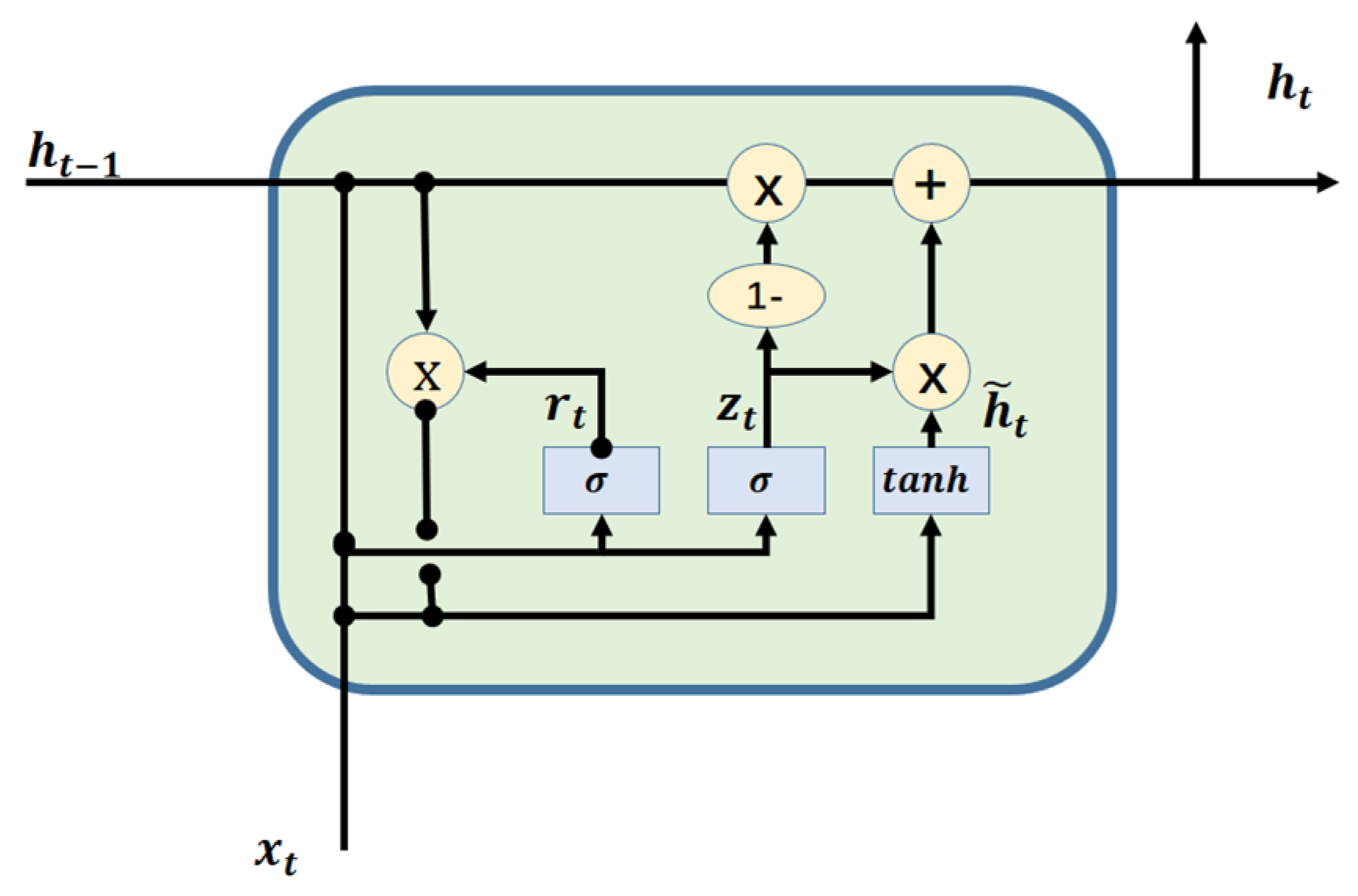

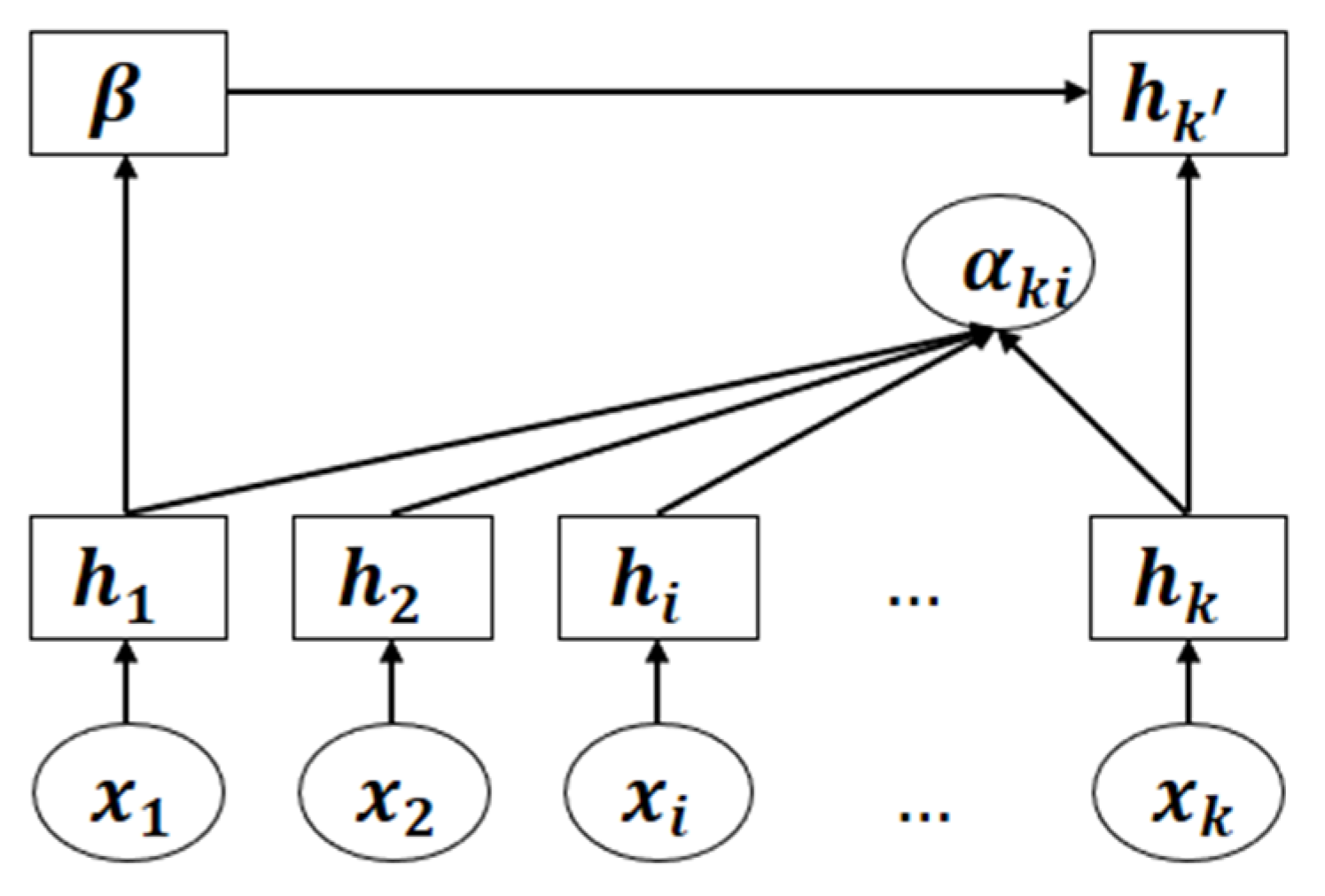

- A GRU network with an attention mechanism is utilized to extract long-term features from PQDs. The attention mechanism is able to assign correlation coefficients between each memory unit, thereby highlighting the impact of important information. This approach significantly enhances the feature extraction ability of the GRU network for PQDs.

- (4)

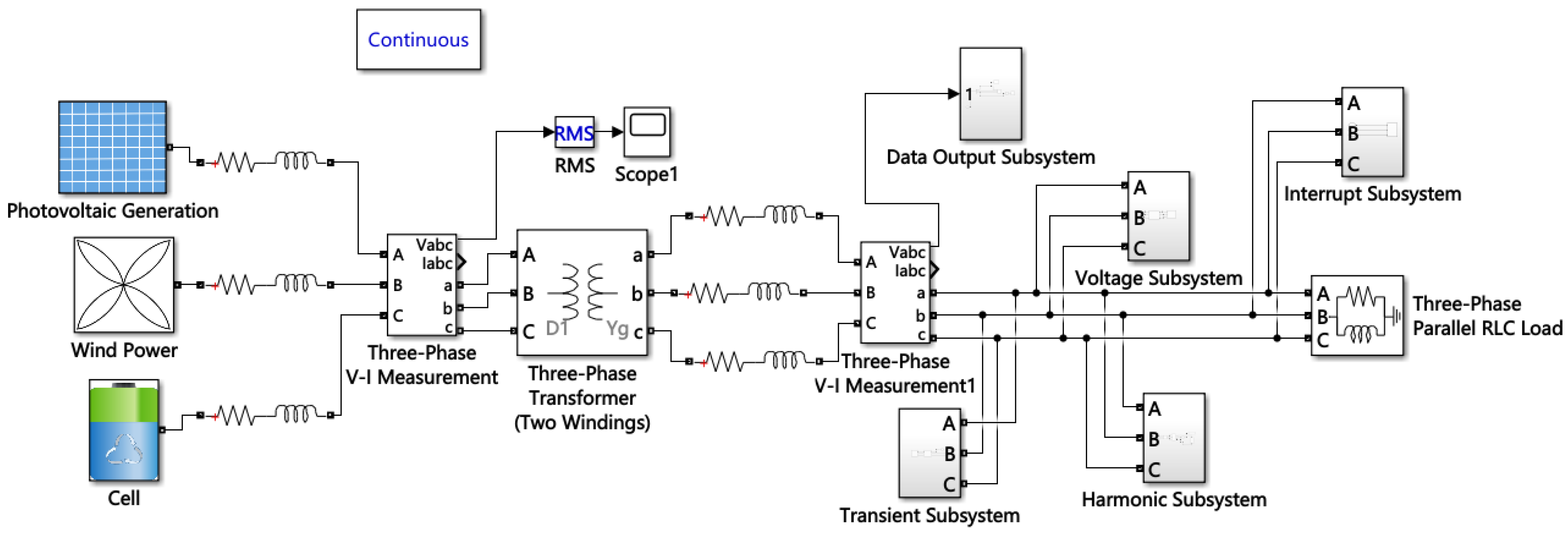

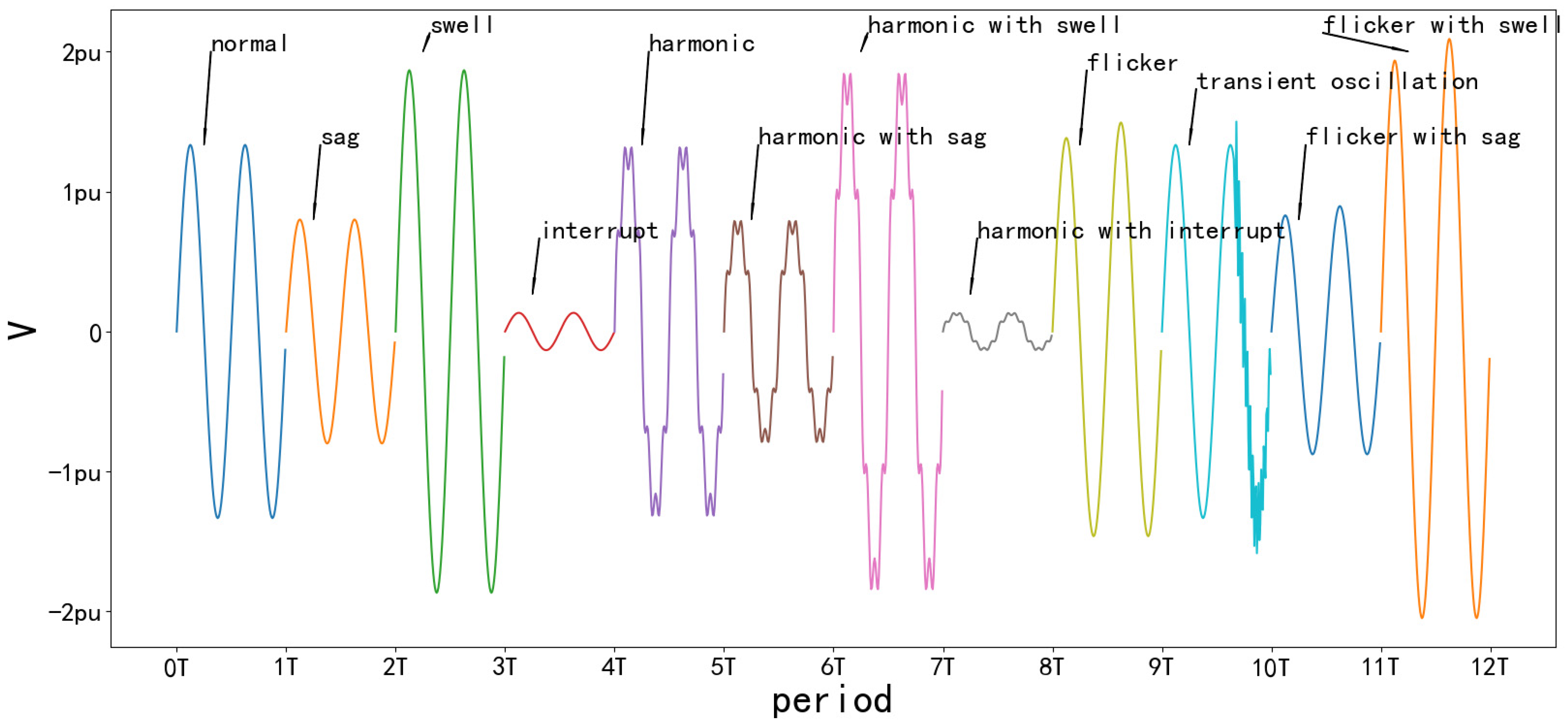

- In order to further analyze the key factors that lead to PQDs in microgrids and validate the effectiveness of the proposed method, a simulation model based on MATLAB-Simulink was established to simulate twelve different types of PQDs. These PQDs are generated through three-phase faults, switching of heavy loads and capacitor banks, and connecting nonlinear loads.

2. Basic Principles of CNN and GRU Neural Networks

2.1. Convolutional Neural Network with Squeeze-and-Excitation
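The squeeze-and-excitation operation described in contribution (2) can be illustrated with a minimal NumPy sketch for a one-dimensional feature map. This is not the authors' implementation: the function name `se_block_1d`, the weight names `w_reduce` and `w_expand`, the reduction ratio of 4, the random weights, and the omission of bias terms are all assumptions made for illustration.

```python
import numpy as np


def se_block_1d(features, w_reduce, w_expand):
    """Apply a squeeze-and-excitation step to a (channels, length) feature map.

    w_reduce has shape (channels // r, channels) and w_expand has shape
    (channels, channels // r); both are hypothetical, bias-free weights.
    """
    # Squeeze: global average pooling over time gives one value per channel.
    squeezed = features.mean(axis=1)
    # Excitation: bottleneck layer with ReLU, then a sigmoid gate per channel.
    hidden = np.maximum(0.0, w_reduce @ squeezed)
    gates = 1.0 / (1.0 + np.exp(-(w_expand @ hidden)))
    # Recalibration: scale every channel by its gate value.
    return features * gates[:, None], gates


rng = np.random.default_rng(0)
channels, length, reduction = 8, 64, 4  # assumed sizes, not from the paper
features = rng.normal(size=(channels, length))
w_reduce = rng.normal(scale=0.5, size=(channels // reduction, channels))
w_expand = rng.normal(scale=0.5, size=(channels, channels // reduction))

recalibrated, gates = se_block_1d(features, w_reduce, w_expand)
print(gates.round(3))  # one weight in [0, 1] per feature channel
```

Channels whose gate is close to 1 pass through almost unchanged, while channels with a small gate are suppressed, which is the recalibration effect the text describes.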

2.2. Gated Recurrent Unit with Attention Mechanism
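As a rough illustration of the GRU-with-attention idea in contribution (3), the sketch below runs a single-layer GRU over a short sequence and pools its hidden states with softmax attention weights. The update rule follows one common GRU convention, and the additive scoring function, the names `gru_forward` and `attention_pool`, the layer sizes, and the random bias-free weights are assumptions; the paper's exact formulation may differ.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gru_forward(x_seq, params):
    """Run a single-layer GRU over x_seq (T, input_dim); return states (T, hidden_dim)."""
    Wz, Uz, Wr, Ur, Wh, Uh = params
    h = np.zeros(Uz.shape[0])
    states = []
    for x in x_seq:
        z = sigmoid(Wz @ x + Uz @ h)             # update gate
        r = sigmoid(Wr @ x + Ur @ h)             # reset gate
        h_cand = np.tanh(Wh @ x + Uh @ (r * h))  # candidate state
        h = (1.0 - z) * h + z * h_cand           # blend old state and candidate
        states.append(h)
    return np.stack(states)


def attention_pool(states, W_att, v_att):
    """Score each hidden state, normalize with softmax, return the weighted sum."""
    scores = np.tanh(states @ W_att.T) @ v_att   # one score per time step
    scores = scores - scores.max()               # numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()
    return weights @ states, weights


rng = np.random.default_rng(0)
T, input_dim, hidden_dim, att_dim = 20, 1, 6, 4  # assumed sizes
params = (
    rng.normal(scale=0.5, size=(hidden_dim, input_dim)),
    rng.normal(scale=0.5, size=(hidden_dim, hidden_dim)),
    rng.normal(scale=0.5, size=(hidden_dim, input_dim)),
    rng.normal(scale=0.5, size=(hidden_dim, hidden_dim)),
    rng.normal(scale=0.5, size=(hidden_dim, input_dim)),
    rng.normal(scale=0.5, size=(hidden_dim, hidden_dim)),
)
x_seq = np.sin(np.linspace(0.0, 4.0 * np.pi, T))[:, None]  # toy waveform samples

states = gru_forward(x_seq, params)
context, weights = attention_pool(
    states,
    rng.normal(size=(att_dim, hidden_dim)),
    rng.normal(size=att_dim),
)
```

The attention weights sum to one over the time steps, so `context` is a weighted average of the hidden states in which the steps the scoring function rates highly contribute the most.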

3. PQD Classification Based on CNN-GRU-P
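The parallel fusion step in contribution (1) (two branch outputs, a fully connected layer, then Softmax) might look like the following NumPy sketch. Concatenating the two feature vectors before the fully connected layer is an assumption about how the fusion is done, and the function name `fuse_and_classify`, the feature sizes, and the random weights are hypothetical. The twelve output classes correspond to the twelve simulated PQD types mentioned in the text.

```python
import numpy as np


def softmax(logits):
    shifted = logits - logits.max()  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()


def fuse_and_classify(cnn_features, gru_features, W_fc, b_fc):
    """Concatenate both branch outputs, apply one dense layer, return class probabilities."""
    fused = np.concatenate([cnn_features, gru_features])
    logits = W_fc @ fused + b_fc
    return softmax(logits)


rng = np.random.default_rng(0)
num_classes = 12                       # twelve PQD types, as stated in the text
cnn_features = rng.normal(size=16)     # stand-in for the CNN branch output
gru_features = rng.normal(size=6)      # stand-in for the GRU branch output
W_fc = rng.normal(scale=0.1, size=(num_classes, cnn_features.size + gru_features.size))
b_fc = np.zeros(num_classes)

probs = fuse_and_classify(cnn_features, gru_features, W_fc, b_fc)
predicted_class = int(np.argmax(probs))
```

Because both branches see the same input and contribute to one shared dense layer, the classifier can weigh short-term and long-term features against each other when assigning a class.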

4. Simulation and Analysis

5. Conclusions

- (1) This article proposes a novel parallel neural network (CNN-GRU-P) that combines a CNN with SE blocks and a GRU network with an attention mechanism for the classification of PQDs. The CNN-GRU-P method leverages the short-term feature extraction ability of the CNN and the long-term feature extraction ability of the GRU, and adds SE modules and attention mechanisms to enhance the network’s training efficiency. End-to-end training enables automatic feature extraction and selection, merging the separate feature extraction, feature selection, and classification stages into a single network. Experimental results show that the CNN-GRU-P network classifies single and composite disturbances more accurately than the other networks compared and exhibits good noise resistance. Applying this classification network to power quality classification can help improve power quality and support the operational reliability of multi-energy systems. It should be noted that the proposed network structure is relatively complex, which leads to longer training times and requires capable training hardware. Nonetheless, the CNN-GRU-P method shows promising results and has the potential to contribute to the field of power quality classification.

- (2) A simulation model was established to analyze the factors leading to microgrid PQDs, such as three-phase faults, nonlinear loads, and large capacitor banks. The simulation results show that the proposed method can accurately identify single and composite power quality disturbances and offers a feasible approach to addressing serious power quality problems in microgrids.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Types of Disturbances | CNN, 20 dB | CNN, 30 dB | CNN, No Noise | GRU, 20 dB | GRU, 30 dB | GRU, No Noise | CNN-LSTM, 20 dB | CNN-LSTM, 30 dB | CNN-LSTM, No Noise | CNN-GRU-P, 20 dB | CNN-GRU-P, 30 dB | CNN-GRU-P, No Noise |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Sag | 75.3 | 93.7 | 99.2 | 82.7 | 97.9 | 99.7 | 98.9 | 99.5 | 99.5 | 99.4 | 99.8 | 100.0 |
| Swell | 90.2 | 99.8 | 99.9 | 93.0 | 94.3 | 100.0 | 99.8 | 99.8 | 100.0 | 99.8 | 99.9 | 99.9 |
| Interrupt | 90.7 | 98.0 | 99.8 | 100.0 | 99.7 | 98.7 | 95.7 | 99.5 | 100.0 | 94.8 | 99.1 | 99.9 |
| Oscillatory Transient | 100.0 | 100.0 | 100.0 | 99.0 | 99.7 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 |
| Harmonic | 84.3 | 83.1 | 88.0 | 79.7 | 84.0 | 95.7 | 94.5 | 91.3 | 94.0 | 98.5 | 98.6 | 99.5 |
| Flicker | 86.9 | 97.8 | 99.9 | 88.3 | 94.3 | 100.0 | 99.9 | 100.0 | 100.0 | 99.9 | 100.0 | 100.0 |
| Harmonic with Sag | 86.2 | 93.9 | 98.3 | 88.3 | 91.7 | 99.7 | 97.3 | 99.4 | 99.4 | 98.6 | 99.7 | 99.8 |
| Harmonic with Swell | 85.9 | 97.2 | 99.4 | 84.0 | 85.0 | 96.7 | 98.0 | 99.4 | 99.8 | 98.8 | 99.9 | 100.0 |
| Harmonic with Interrupt | 83.6 | 93.0 | 98.8 | 96.7 | 82.3 | 98.3 | 93.8 | 95.1 | 99.5 | 95.9 | 96.0 | 99.0 |
| Flicker with Sag | 53.0 | 71.0 | 78.7 | 78.3 | 88.3 | 94.3 | 92.2 | 93.0 | 94.5 | 97.2 | 98.0 | 98.9 |
| Flicker with Swell | 82.1 | 88.7 | 88.6 | 88.0 | 88.0 | 93.3 | 94.5 | 90.0 | 96.8 | 97.5 | 98.4 | 97.9 |
| Flicker with Harmonic | 83.6 | 93.0 | 98.8 | 96.7 | 82.3 | 98.3 | 93.8 | 95.1 | 99.5 | 95.9 | 96.0 | 99.0 |
| Average | 84.5 | 92.8 | 95.7 | 89.2 | 92.1 | 98.0 | 97.0 | 97.2 | 98.6 | 98.3 | 99.1 | 99.6 |
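The 20 dB and 30 dB columns in the table presumably refer to the signal-to-noise ratio (SNR) of the test signals. A minimal sketch of how a waveform could be corrupted to a target SNR is given below, assuming additive white Gaussian noise, which the table itself does not specify. The function names `add_awgn` and `measured_snr_db`, the 50 Hz fundamental, the 3.2 kHz sampling rate, and the 0.2 s duration are illustrative assumptions only.

```python
import numpy as np


def add_awgn(signal, snr_db, rng):
    """Add white Gaussian noise so that the result has roughly the requested SNR in dB."""
    signal_power = np.mean(signal ** 2)
    # SNR_dB = 10 * log10(P_signal / P_noise), solved for the noise power.
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    noise = rng.normal(scale=np.sqrt(noise_power), size=signal.shape)
    return signal + noise


def measured_snr_db(clean, noisy):
    """Estimate the SNR actually obtained from the clean and noisy waveforms."""
    noise = noisy - clean
    return 10.0 * np.log10(np.mean(clean ** 2) / np.mean(noise ** 2))


rng = np.random.default_rng(0)
t = np.arange(0.0, 0.2, 1.0 / 3200.0)    # assumed: 0.2 s sampled at 3.2 kHz
clean = np.sin(2.0 * np.pi * 50.0 * t)   # assumed: 50 Hz, 1 p.u. fundamental

noisy_20 = add_awgn(clean, 20.0, rng)
noisy_30 = add_awgn(clean, 30.0, rng)
print(round(measured_snr_db(clean, noisy_20), 1), round(measured_snr_db(clean, noisy_30), 1))
```

A 20 dB SNR means the noise power is one hundredth of the signal power, and 30 dB means one thousandth, so the 20 dB columns represent the harder test condition.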

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cai, J.; Zhang, K.; Jiang, H. Power Quality Disturbance Classification Based on Parallel Fusion of CNN and GRU. Energies 2023, 16, 4029. https://doi.org/10.3390/en16104029
