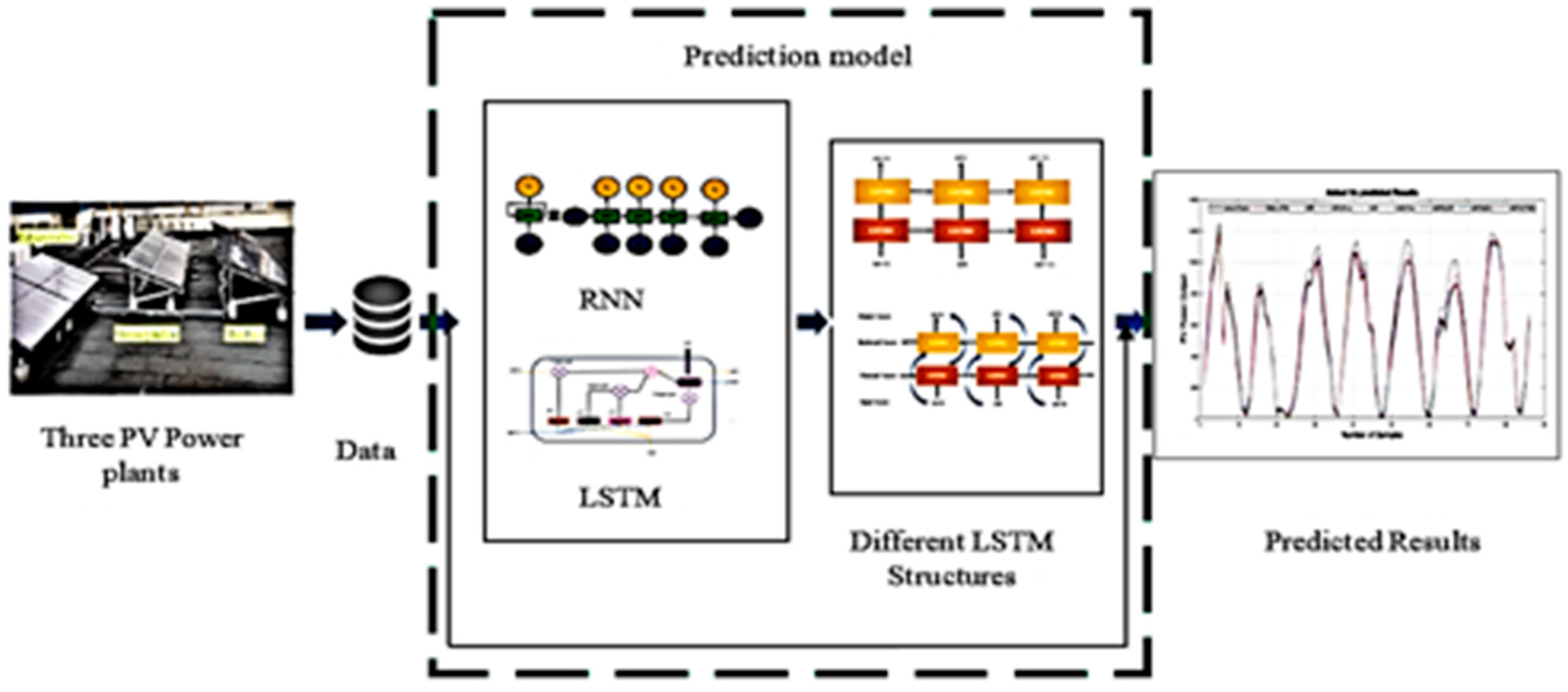

An Hour-Ahead PV Power Forecasting Method Based on an RNN-LSTM Model for Three Different PV Plants

Abstract

1. Introduction

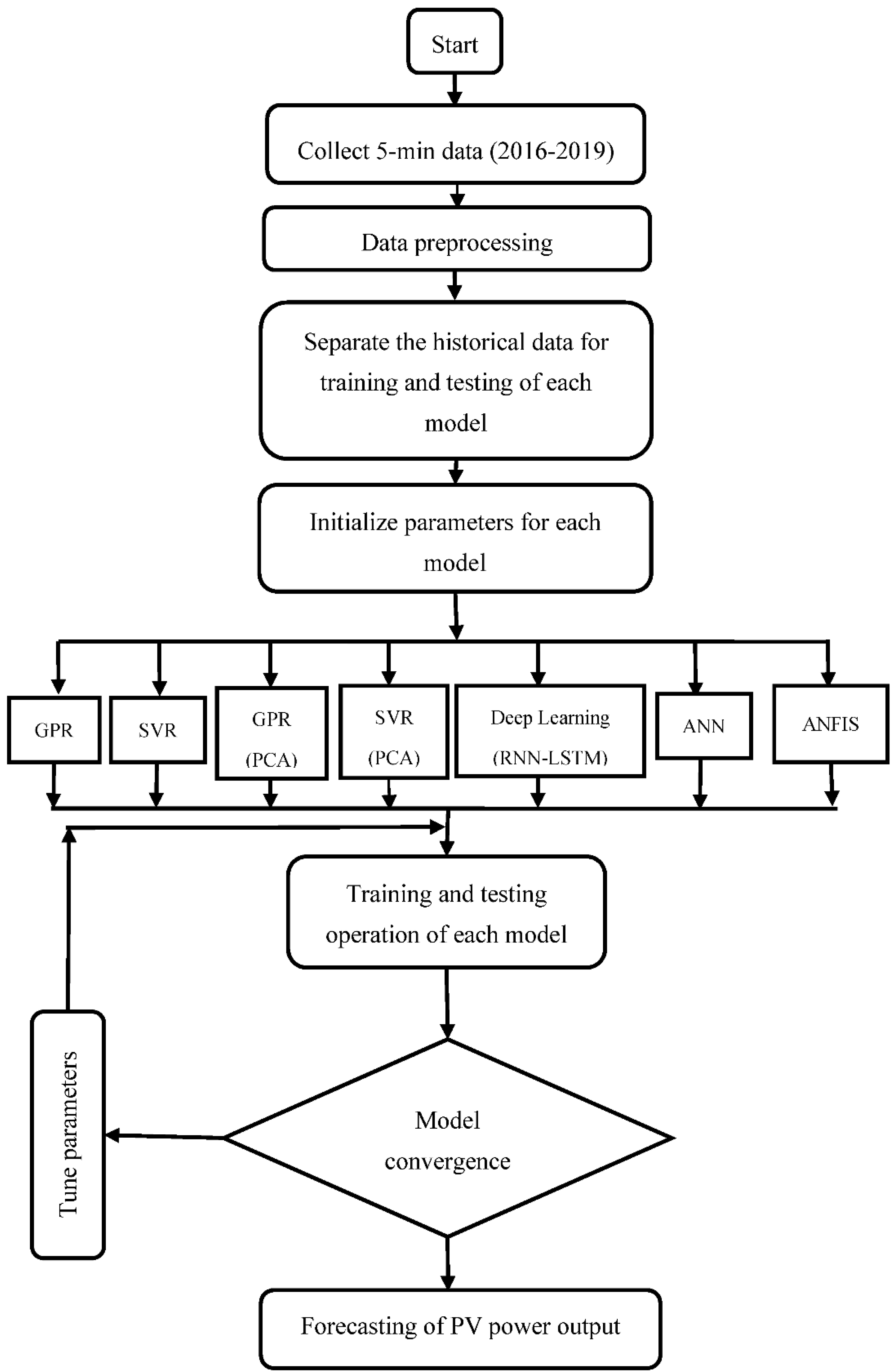

- Data preprocessing is performed on four years of actual PV data recorded at three different plants.

- A deep learning algorithm (RNN-LSTM) is proposed for hour-ahead forecasting of the output power of three independent PV plants, evaluated on a yearly basis over a four-year period.

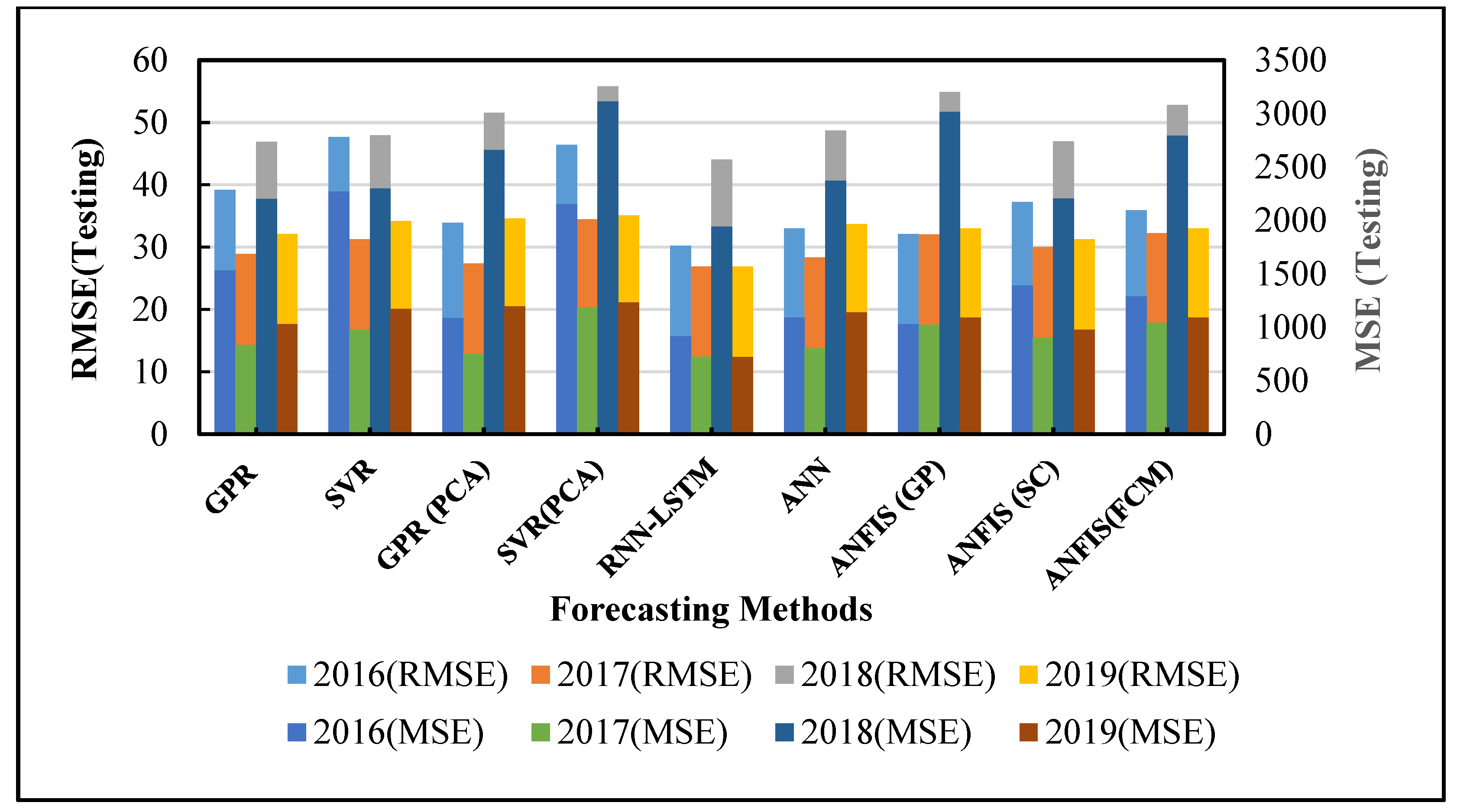

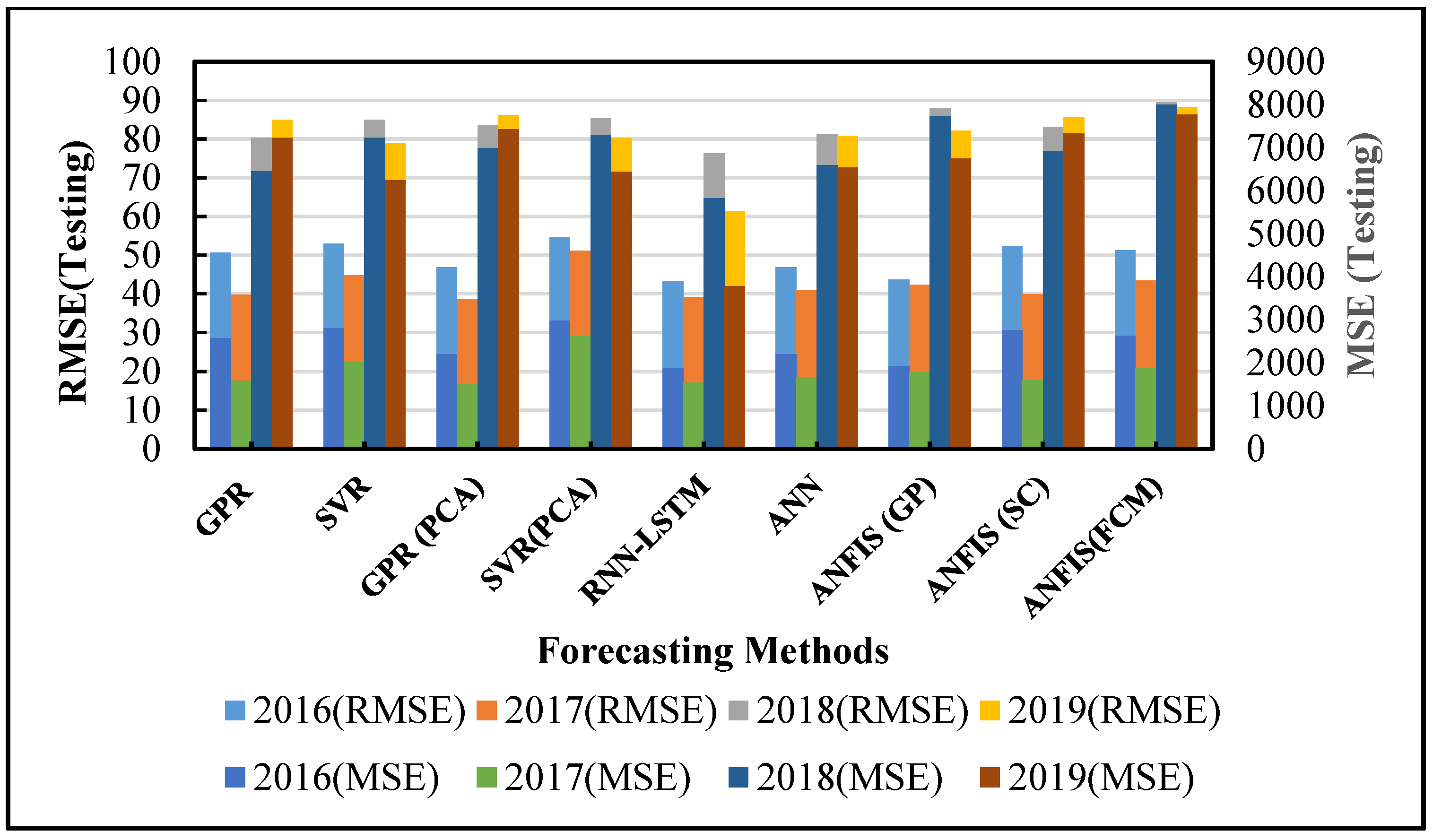

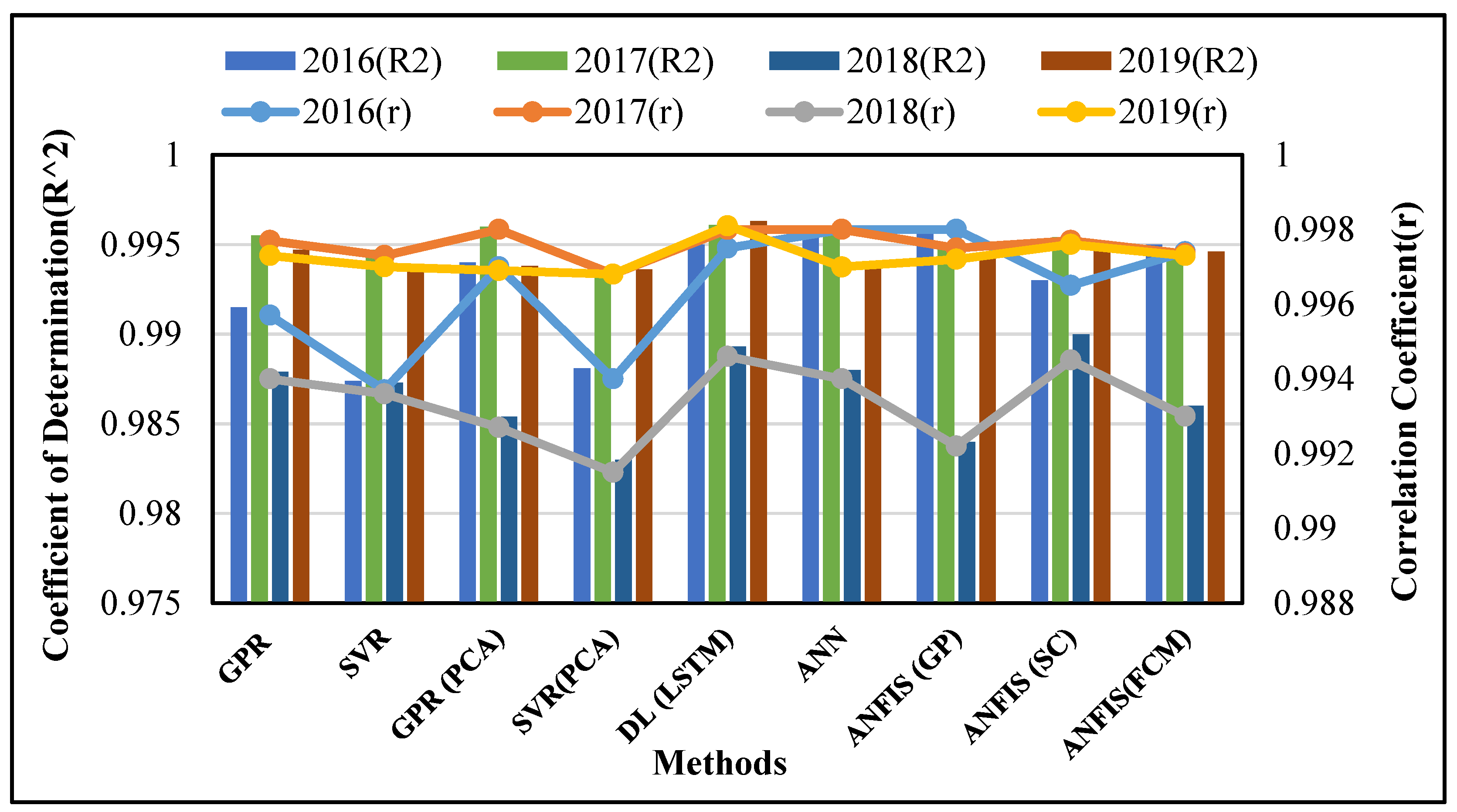

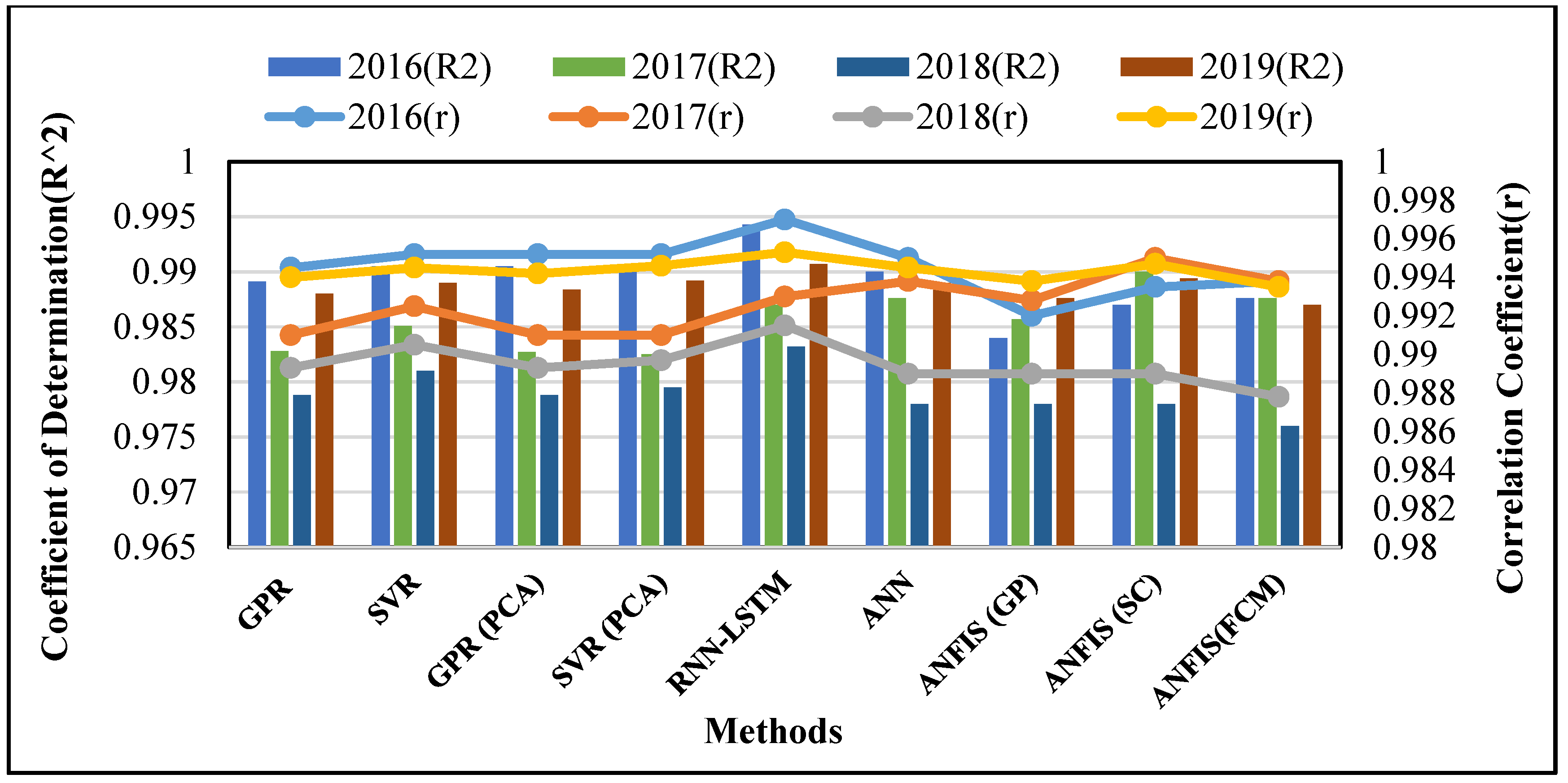

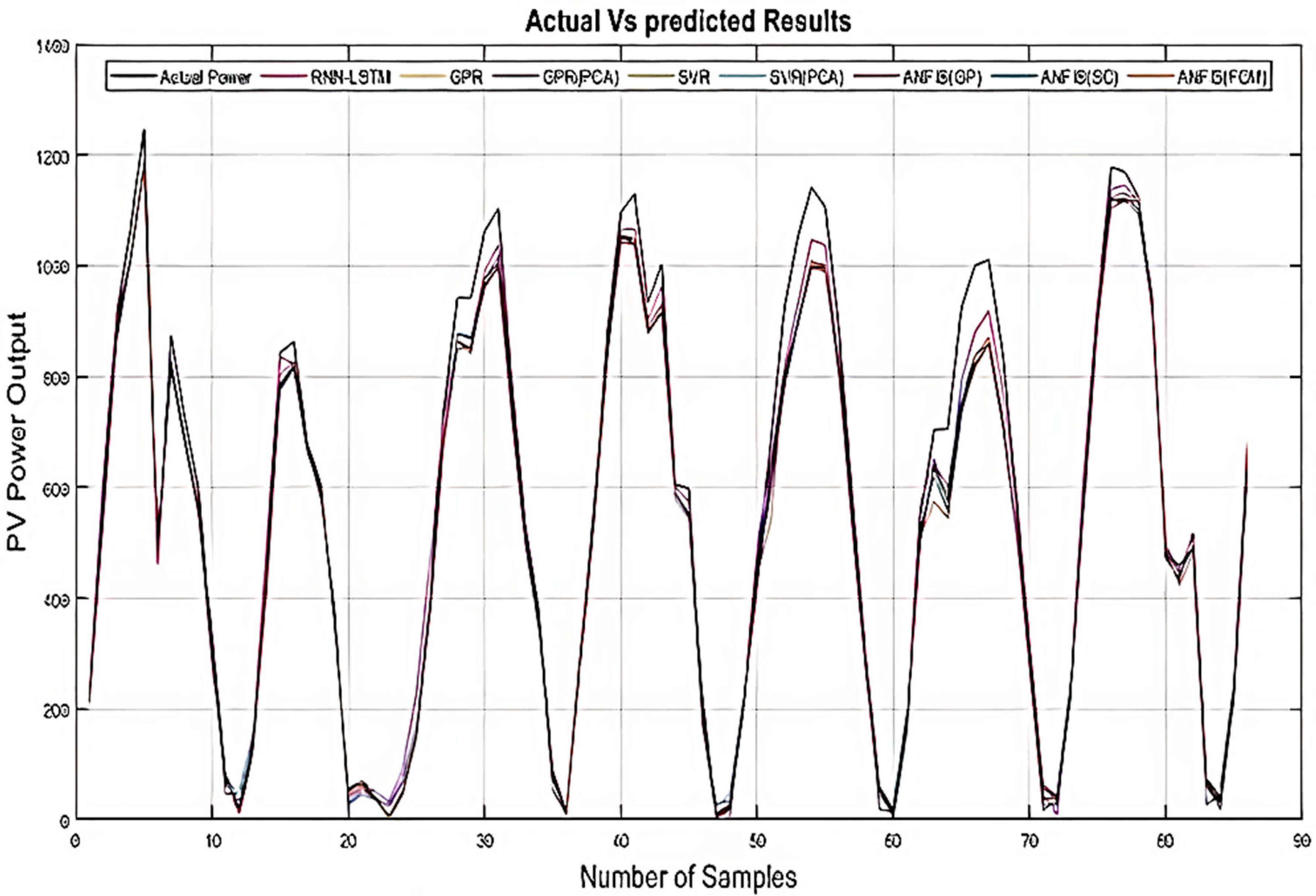

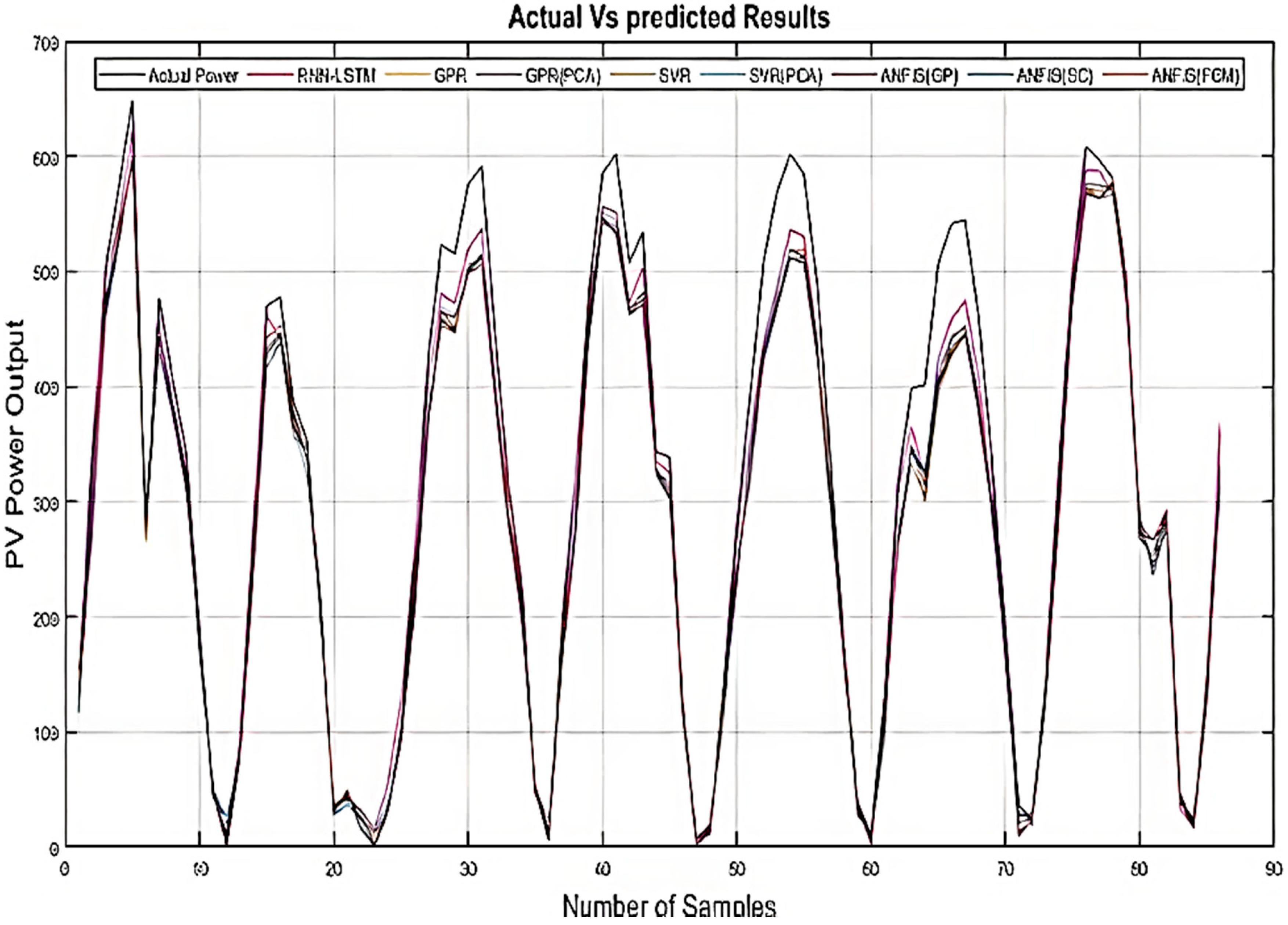

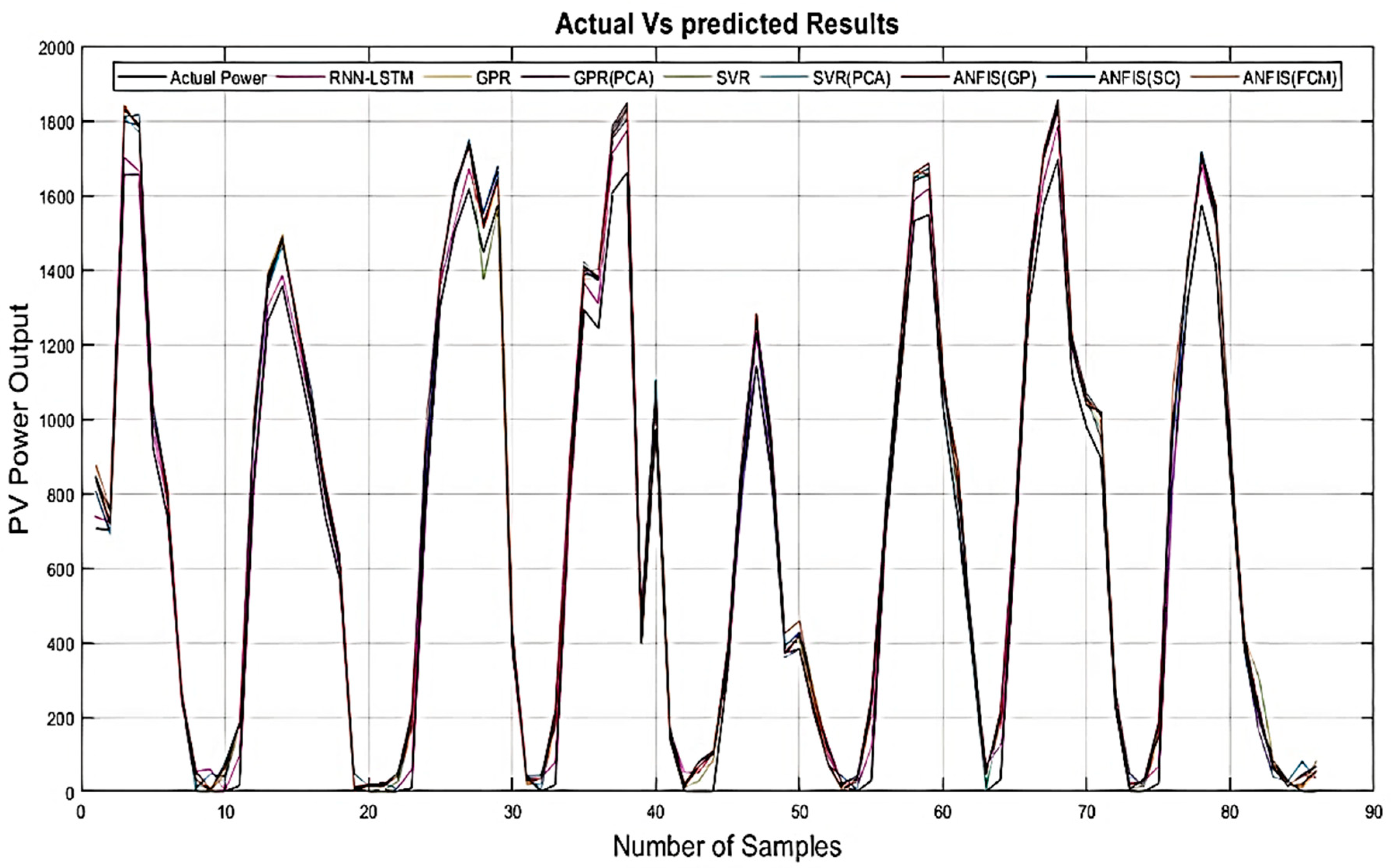

- Annual hour-ahead forecasting of PV output power using SVR, SVR (PCA), GPR, GPR (PCA), ANN, and ANFIS is also performed and compared with the proposed technique (RNN-LSTM) over the considered period (2016–2019).

- Different LSTM structures are also investigated within the RNN, using 2019 data only, to determine the most feasible structure.

2. Methodology

2.1. PV Plants Description and Data Set

2.2. Data Preprocessing

2.3. Regression for PV Power Output Prediction

2.3.1. Gaussian Process Regression

2.3.2. Support Vector Regression (SVR)

2.3.3. Principal Component Analysis (PCA)

2.4. Artificial Neural Network (ANN) for PV Power Output Prediction

2.5. Adaptive Neuro-Fuzzy Inference System (ANFIS)

2.5.1. Grid Partitioning

2.5.2. Subtractive Clustering

2.5.3. Fuzzy C-Means (FCM)

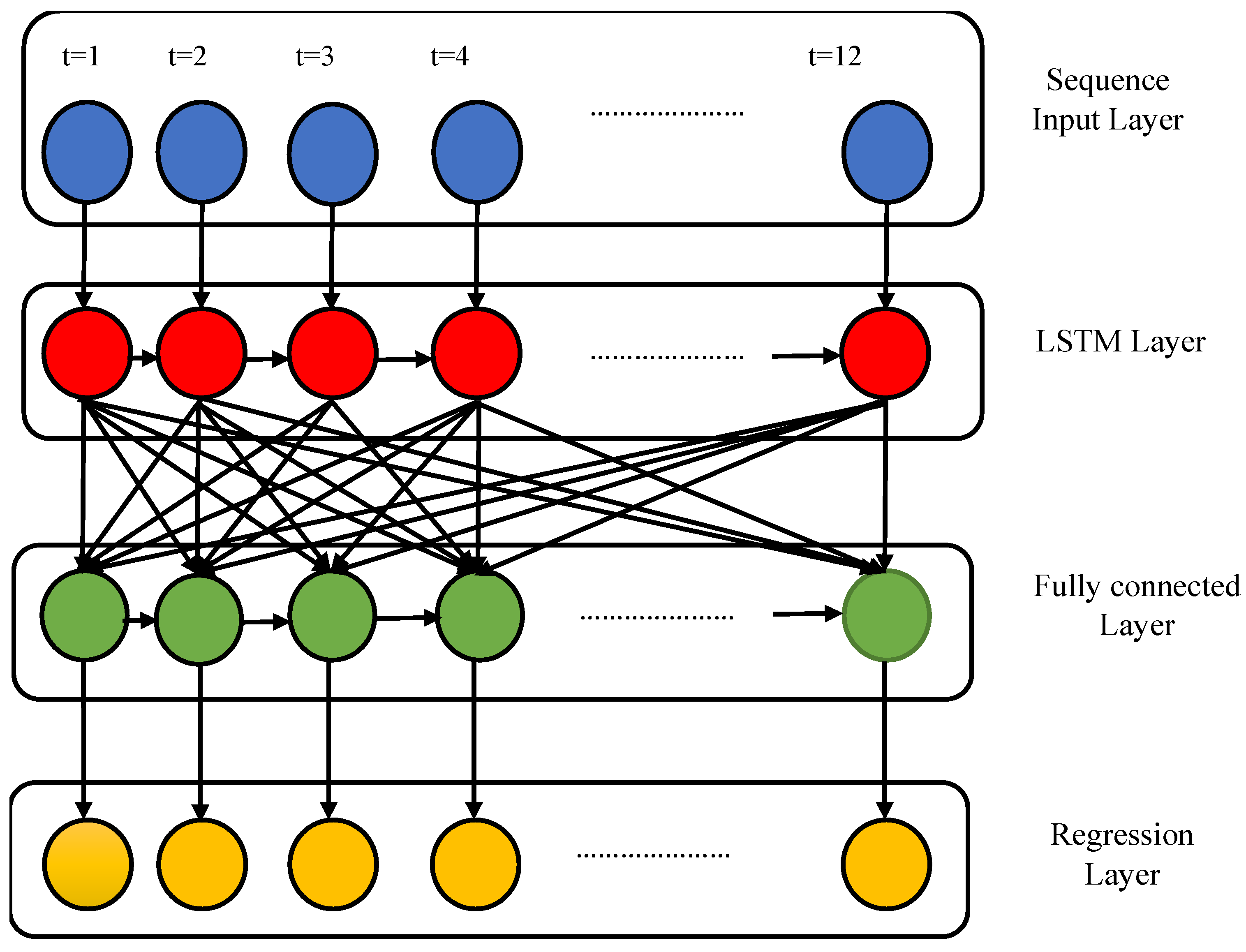

2.6. Deep Learning Network (RNN-LSTM)

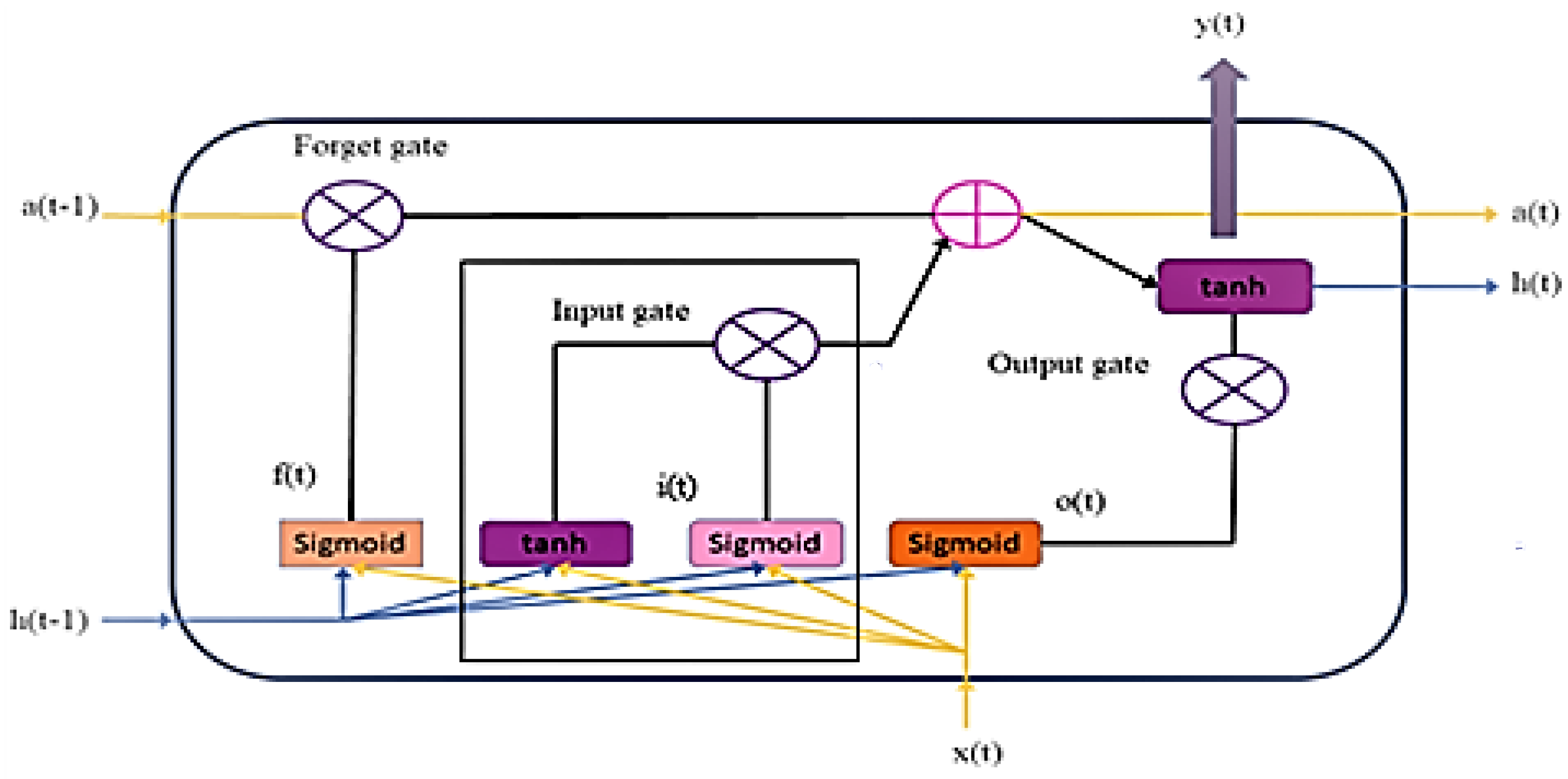

2.6.1. A Basic LSTM Structure

- The forget gate decides whether the information stored in the previous cell state a(t − 1) should be discarded. It receives the current input and the previous hidden state and, through a sigmoid activation, outputs values between zero and one: zero means the stored information is discarded completely, and one means it is kept completely. f(t) is calculated using the equation below [60].

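- The information to be stored in the cell is computed in two parts. The input gate, a sigmoid layer, decides which values to update, and a tanh layer produces a vector of candidate values. These two parts are combined to determine the next state [60].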

- The cell state is then updated using the information from the previous stages. To discard information, f(t) is multiplied element-wise by the prior state a(t − 1). The candidate values are multiplied by the output of the input gate. The two products are added to give the next state a(t), as shown in Equation (16) [60]:

- In the final stage, the output is computed. The output gate o(t) decides which parts of the cell state are passed on. The hidden state h(t) is obtained by multiplying o(t) element-wise with tanh(a(t)), which preserves the relevant information. The equations for o(t), h(t), and y(t) are as follows [60]:
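The gate computations described above correspond to the widely used LSTM formulation below. It is written in this section's notation, with a(t) as the cell state and h(t) as the hidden state. Several symbols are assumed names that may differ from the paper's numbered equations: the input x(t), the input gate i(t), the candidate state ã(t), the weight matrices W and U, and the biases b. The linear read-out for y(t) is also an assumption. Here σ is the logistic sigmoid, which keeps every gate value between 0 and 1, and ⊙ denotes element-wise multiplication.

```latex
\begin{aligned}
f(t) &= \sigma\left(W_f\,x(t) + U_f\,h(t-1) + b_f\right) \\
i(t) &= \sigma\left(W_i\,x(t) + U_i\,h(t-1) + b_i\right) \\
\tilde{a}(t) &= \tanh\left(W_a\,x(t) + U_a\,h(t-1) + b_a\right) \\
a(t) &= f(t) \odot a(t-1) + i(t) \odot \tilde{a}(t) \\
o(t) &= \sigma\left(W_o\,x(t) + U_o\,h(t-1) + b_o\right) \\
h(t) &= o(t) \odot \tanh\left(a(t)\right) \\
y(t) &= W_y\,h(t) + b_y
\end{aligned}
```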

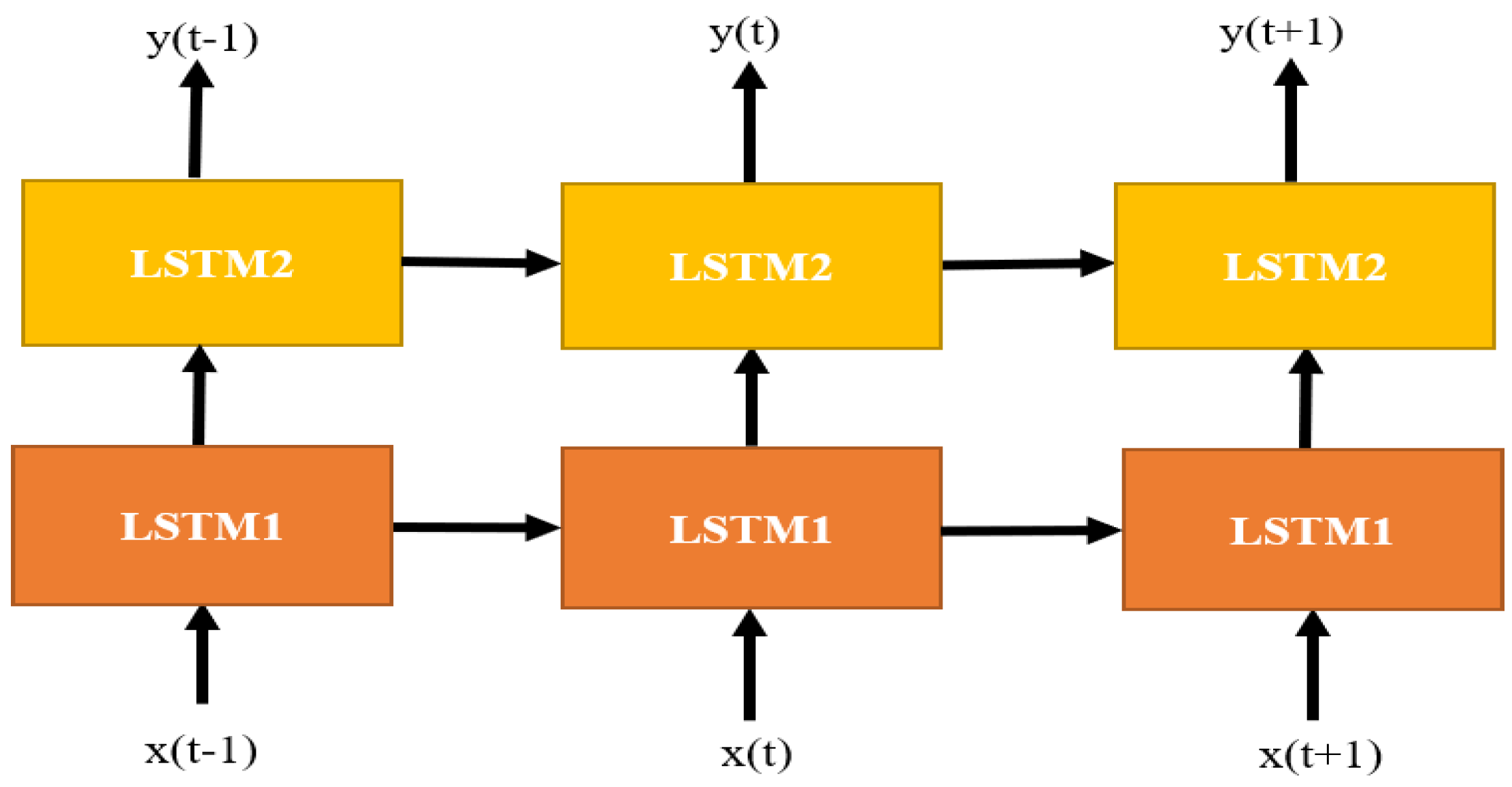
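The same gate computations can be sketched as a single NumPy time step, which is then unrolled over an input window. This is a minimal illustration under stated assumptions, not the authors' implementation. The following are all hypothetical:

- the function names (`lstm_step`, `init_params`, `lstm_forecast`);
- the parameter-dictionary layout;
- the random initialisation;
- the linear read-out.

No training is performed.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    """Logistic sigmoid; maps gate pre-activations into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, a_prev, params):
    """One LSTM time step (hypothetical helper).

    x_t:    input vector at time t, shape (n_in,)
    h_prev: previous hidden state h(t-1), shape (n_hid,)
    a_prev: previous cell state a(t-1), shape (n_hid,)
    params: dict with W_* (n_hid, n_in), U_* (n_hid, n_hid), b_* (n_hid,)
    """
    def gate(name, activation):
        # Pre-activation combines the current input and the previous hidden state.
        z = params["W_" + name] @ x_t + params["U_" + name] @ h_prev + params["b_" + name]
        return activation(z)

    f_t = gate("f", sigmoid)             # forget gate: how much of a(t-1) to keep
    i_t = gate("i", sigmoid)             # input gate: how much new information to write
    a_tilde = gate("a", np.tanh)         # candidate cell state
    a_t = f_t * a_prev + i_t * a_tilde   # new cell state
    o_t = gate("o", sigmoid)             # output gate
    h_t = o_t * np.tanh(a_t)             # new hidden state
    return h_t, a_t


def init_params(n_in, n_hid, seed=0):
    """Small random weights and zero biases for the four gate layers."""
    rng = np.random.default_rng(seed)
    params = {}
    for name in ("f", "i", "a", "o"):
        params["W_" + name] = rng.normal(scale=0.1, size=(n_hid, n_in))
        params["U_" + name] = rng.normal(scale=0.1, size=(n_hid, n_hid))
        params["b_" + name] = np.zeros(n_hid)
    return params


def lstm_forecast(sequence, params, W_y, b_y):
    """Run the cell over a (T, n_in) window and map the last h(t) to y(t)."""
    n_hid = params["b_f"].shape[0]
    h_t = np.zeros(n_hid)
    a_t = np.zeros(n_hid)
    for x_t in sequence:  # iterate over time steps (rows)
        h_t, a_t = lstm_step(x_t, h_t, a_t, params)
    return W_y @ h_t + b_y  # linear read-out (untrained in this sketch)


# Usage with a hypothetical window of 24 hourly steps and 3 input features.
params = init_params(n_in=3, n_hid=8)
window = np.random.default_rng(1).random((24, 3))
y_next = lstm_forecast(window, params, W_y=np.ones((1, 8)), b_y=np.zeros(1))
```

For an hour-ahead forecast, `sequence` would hold the preceding hourly feature vectors. Which features these would be (for example, past PV power and weather variables) is an assumption here. The read-out would be trained so that y(t) approximates the next hour's power.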

2.6.2. Multilayered LSTM Structures

2.7. Performance Metrics to Measure Forecasting Accuracy

- (a) Root mean square error (RMSE):

- (b) Mean square error (MSE):

- (c) Mean absolute error (MAE):

- (d) Correlation coefficient (r):

- (e) Coefficient of determination (R²):
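The five metrics can be computed with a minimal sketch that assumes their usual definitions. Let A_i be the measured power, P_i the forecast power, and N the number of test points. Then:

- MSE = (1/N) Σ (P_i − A_i)²
- RMSE = √MSE
- MAE = (1/N) Σ |P_i − A_i|
- r is the Pearson correlation between A and P.
- R² = 1 − Σ (P_i − A_i)² / Σ (A_i − Ā)²

Some studies report R² as r² instead. The two coincide for an ordinary least-squares fit with an intercept but not in general, so the exact definition behind the tables is an assumption here. The function name `forecast_metrics` is hypothetical.

```python
import math


def forecast_metrics(actual, predicted):
    """Return RMSE, MSE, MAE, r and R^2 for paired measured/forecast values."""
    if len(actual) != len(predicted) or len(actual) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    n = len(actual)
    errors = [p - a for a, p in zip(actual, predicted)]
    sse = sum(e * e for e in errors)                    # sum of squared errors
    mse = sse / n                                       # mean square error
    rmse = math.sqrt(mse)                               # root mean square error
    mae = sum(abs(e) for e in errors) / n               # mean absolute error
    mean_a = sum(actual) / n
    mean_p = sum(predicted) / n
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    ss_a = sum((a - mean_a) ** 2 for a in actual)       # total sum of squares
    ss_p = sum((p - mean_p) ** 2 for p in predicted)
    r = cov / math.sqrt(ss_a * ss_p)                    # Pearson correlation (undefined for constant inputs)
    r2 = 1.0 - sse / ss_a                               # coefficient of determination
    return {"RMSE": rmse, "MSE": mse, "MAE": mae, "r": r, "R2": r2}


# Usage with made-up values (W): every forecast is off by 10 W.
m = forecast_metrics([0, 100, 200, 300], [10, 90, 210, 290])
```

RMSE and MAE are in the same unit as the power data, MSE is in that unit squared, and r and R² are dimensionless.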

3. Results and Discussions

3.1. LSTM Structure Comparison

3.2. Forecasting Performance of Three PV Plants

Statistical Analysis

4. Summary

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Shafiee, S.; Topal, E. When will fossil fuel reserves be diminished? Energy Policy 2009, 37, 181–189.

2. Wang, F.; Xuan, Z.; Zhen, Z.; Li, K.; Wang, T.; Shi, M. A day-ahead PV power forecasting method based on LSTM-RNN model and time correlation modification under partial daily pattern prediction framework. Energy Convers. Manag. 2020, 212, 112766.

3. Khanlari, A.; Sözen, A.; Şirin, C.; Tuncer, A.D.; Gungor, A. Performance enhancement of a greenhouse dryer: Analysis of a cost-effective alternative solar air heater. J. Clean. Prod. 2020, 251, 119672.

4. Ağbulut, Ü.; Gürel, A.E.; Ergün, A.; Ceylan, İ. Performance assessment of a V-trough photovoltaic system and prediction of power output with different machine learning algorithms. J. Clean. Prod. 2020, 268, 122269.

5. Das, U.K.; Tey, K.S.; Seyedmahmoudian, M.; Mekhilef, S.; Idris, M.Y.I.; Van Deventer, W.; Horan, B.; Stojcevski, A. Forecasting of photovoltaic power generation and model optimization: A review. Renew. Sustain. Energy Rev. 2018, 81, 912–928.

6. Mellit, A.; Kalogirou, S.A.; Hontoria, L.; Shaari, S. Artificial intelligence techniques for sizing photovoltaic systems: A review. Renew. Sustain. Energy Rev. 2009, 13, 406–419.

7. Memon, M.A.; Mekhilef, S.; Mubin, M.; Aamir, M. Selective harmonic elimination in inverters using bio-inspired intelligent algorithms for renewable energy conversion applications: A review. Renew. Sustain. Energy Rev. 2017, 82 Pt 3, 2235–2253.

8. Xin-gang, Z.; You, Z. Technological progress and industrial performance: A case study of solar photovoltaic industry. Renew. Sustain. Energy Rev. 2018, 81, 929–936.

9. Wang, F.; Xu, H.; Xu, T.; Li, K.; Shafie-khah, M.; Catalão, J.P.S. The values of market-based demand response on improving power system reliability under extreme circumstances. Appl. Energy 2017, 193, 220–231.

10. Li, K.; Wang, F.; Mi, Z.; Fotuhi-Firuzabad, M.; Duić, N.; Wang, T. Capacity and output power estimation approach of individual behind-the-meter distributed photovoltaic system for demand response baseline estimation. Appl. Energy 2019, 253, 113595.

11. Wang, F.; Ge, X.; Li, K.; Mi, Z. Day-Ahead Market Optimal Bidding Strategy and Quantitative Compensation Mechanism Design for Load Aggregator Engaging Demand Response. IEEE Trans. Ind. Appl. 2019, 55, 5564–5573.

12. Reikard, G.; Hansen, C. Forecasting solar irradiance at short horizons: Frequency and time domain models. Renew. Energy 2019, 135, 1270–1290.

13. Wang, J.; Li, Q.; Zeng, B. Multi-layer cooperative combined forecasting system for short-term wind speed forecasting. Sustain. Energy Technol. Assess. 2021, 43, 100946.

14. Akhter, M.N.; Mekhilef, S.; Mokhlis, H.; Shah, N.M. Review on forecasting of photovoltaic power generation based on machine learning and metaheuristic techniques. IET Renew. Power Gener. 2019, 13, 1009–1023.

15. Pierro, M.; Bucci, F.; De Felice, M.; Maggioni, E.; Perotto, A.; Spada, F.; Moser, D.; Cornaro, C. Deterministic and stochastic approaches for day-ahead solar power forecasting. J. Sol. Energy Eng. 2017, 139, 021010.

16. Prema, V.; Rao, K.U. Development of statistical time series models for solar power prediction. Renew. Energy 2015, 83, 100–109.

17. Hirata, Y.; Aihara, K. Improving time series prediction of solar irradiance after sunrise: Comparison among three methods for time series prediction. Sol. Energy 2017, 149, 294–301.

18. Shireen, T.; Shao, C.; Wang, H.; Li, J.; Zhang, X.; Li, M. Iterative multi-task learning for time-series modeling of solar panel PV outputs. Appl. Energy 2018, 212, 654–662.

19. Bigdeli, N.; Salehi Borujeni, M.; Afshar, K. Time series analysis and short-term forecasting of solar irradiation, a new hybrid approach. Swarm Evol. Comput. 2017, 34, 75–88.

20. Wang, F.; Zhen, Z.; Liu, C.; Mi, Z.; Hodge, B.-M.; Shafie-khah, M.; Catalão, J.P.S. Image phase shift invariance based cloud motion displacement vector calculation method for ultra-short-term solar PV power forecasting. Energy Convers. Manag. 2018, 157, 123–135.

21. Zaher, A.; Thil, S.; Nou, J.; Traoré, A.; Grieu, S. Comparative study of algorithms for cloud motion estimation using sky-imaging data. IFAC-PapersOnLine 2017, 50, 5934–5939.

22. Cheng, H.-Y. Cloud tracking using clusters of feature points for accurate solar irradiance nowcasting. Renew. Energy 2017, 104, 281–289.

23. Lima, F.J.L.; Martins, F.R.; Pereira, E.B.; Lorenz, E.; Heinemann, D. Forecast for surface solar irradiance at the Brazilian Northeastern region using NWP model and artificial neural networks. Renew. Energy 2016, 87, 807–818.

24. Perez, R.; Lorenz, E.; Pelland, S.; Beauharnois, M.; Van Knowe, G.; Hemker, K.; Heinemann, D.; Remund, J.; Müller, S.C.; Traunmüller, W.; et al. Comparison of numerical weather prediction solar irradiance forecasts in the US, Canada and Europe. Sol. Energy 2013, 94, 305–326.

25. Verzijlbergh, R.A.; Heijnen, P.W.; de Roode, S.R.; Los, A.; Jonker, H.J.J. Improved model output statistics of numerical weather prediction based irradiance forecasts for solar power applications. Sol. Energy 2015, 118, 634–645.

26. Mathiesen, P.; Collier, C.; Kleissl, J. A high-resolution, cloud-assimilating numerical weather prediction model for solar irradiance forecasting. Sol. Energy 2013, 92, 47–61.

27. Hussain, S.; AlAlili, A. A hybrid solar radiation modeling approach using wavelet multiresolution analysis and artificial neural networks. Appl. Energy 2017, 208, 540–550.

28. Xue, X. Prediction of daily diffuse solar radiation using artificial neural networks. Int. J. Hydrog. Energy 2017, 42, 28214–28221.

29. Alzahrani, A.; Shamsi, P.; Dagli, C.; Ferdowsi, M. Solar Irradiance Forecasting Using Deep Neural Networks. Procedia Comput. Sci. 2017, 114, 304–313.

30. Bou-Rabee, M.; Sulaiman, S.A.; Saleh, M.S.; Marafi, S. Using artificial neural networks to estimate solar radiation in Kuwait. Renew. Sustain. Energy Rev. 2017, 72, 434–438.

31. Olatomiwa, L.; Mekhilef, S.; Shamshirband, S.; Petković, D. Adaptive neuro-fuzzy approach for solar radiation prediction in Nigeria. Renew. Sustain. Energy Rev. 2015, 51, 1784–1791.

32. Dolara, A.; Grimaccia, F.; Leva, S.; Mussetta, M.; Ogliari, E. A physical hybrid artificial neural network for short term forecasting of PV plant power output. Energies 2015, 8, 1138–1153.

33. De Giorgi, M.; Malvoni, M.; Congedo, P. Comparison of strategies for multi-step ahead photovoltaic power forecasting models based on hybrid group method of data handling networks and least square support vector machine. Energy 2016, 107, 360–373.

34. Jang, H.S.; Bae, K.Y.; Park, H.-S.; Sung, D.K. Solar power prediction based on satellite images and support vector machine. IEEE Trans. Sustain. Energy 2016, 7, 1255–1263.

35. Da Silva Fonseca, J.G., Jr.; Oozeki, T.; Ohtake, H.; Shimose, K.-i.; Takashima, T.; Ogimoto, K. Forecasting regional photovoltaic power generation-a comparison of strategies to obtain one-day-ahead data. Energy Procedia 2014, 57, 1337–1345.

36. Wolff, B.; Kühnert, J.; Lorenz, E.; Kramer, O.; Heinemann, D. Comparing support vector regression for PV power forecasting to a physical modeling approach using measurement, numerical weather prediction, and cloud motion data. Sol. Energy 2016, 135, 197–208.

37. Tang, P.; Chen, D.; Hou, Y. Entropy method combined with extreme learning machine method for the short-term photovoltaic power generation forecasting. Chaos Solitons Fractals 2016, 89, 243–248.

38. Hossain, M.; Mekhilef, S.; Danesh, M.; Olatomiwa, L.; Shamshirband, S. Application of extreme learning machine for short term output power forecasting of three grid-connected PV systems. J. Clean. Prod. 2017, 167, 395–405.

39. Deo, R.C.; Downs, N.; Parisi, A.V.; Adamowski, J.F.; Quilty, J.M. Very short-term reactive forecasting of the solar ultraviolet index using an extreme learning machine integrated with the solar zenith angle. Environ. Res. 2017, 155, 141–166.

40. Youssef, A.; El-Telbany, M.; Zekry, A. The role of artificial intelligence in photo-voltaic systems design and control: A review. Renew. Sustain. Energy Rev. 2017, 78, 72–79.

41. Wang, H.; Lei, Z.; Zhang, X.; Zhou, B.; Peng, J. A review of deep learning for renewable energy forecasting. Energy Convers. Manag. 2019, 198, 111799.

42. Narvaez, G.; Giraldo, L.F.; Bressan, M.; Pantoja, A. Machine learning for site-adaptation and solar radiation forecasting. Renew. Energy 2021, 167, 333–342.

43. Wang, K.; Qi, X.; Liu, H. A comparison of day-ahead photovoltaic power forecasting models based on deep learning neural network. Appl. Energy 2019, 251, 113315.

44. Luo, X.; Zhang, D.; Zhu, X. Deep learning based forecasting of photovoltaic power generation by incorporating domain knowledge. Energy 2021, 225, 120240.

45. Chang, G.W.; Lu, H.-J. Integrating Gray Data Preprocessor and Deep Belief Network for Day-Ahead PV Power Output Forecast. IEEE Trans. Sustain. Energy 2018, 11, 185–194.

46. Qing, X.; Niu, Y. Hourly day-ahead solar irradiance prediction using weather forecasts by LSTM. Energy 2018, 148, 461–468.

47. Nour-eddine, I.O.; Lahcen, B.; Fahd, O.H.; Amin, B.; Aziz, O. Power forecasting of three silicon-based PV technologies using actual field measurements. Sustain. Energy Technol. Assess. 2021, 43, 100915.

48. Halabi, L.M.; Mekhilef, S.; Hossain, M. Performance evaluation of hybrid adaptive neuro-fuzzy inference system models for predicting monthly global solar radiation. Appl. Energy 2018, 213, 247–261.

49. Akhter, M.N.; Mekhilef, S.; Mokhlis, H.; Olatomiwa, L.; Muhammad, A. Performance assessment of three grid-connected photovoltaic systems with combined capacity of 6.575 kWp in Malaysia. J. Clean. Prod. 2020, 277, 123242.

50. Najibi, F.; Apostolopoulou, D.; Alonso, E. Enhanced performance Gaussian process regression for probabilistic short-term solar output forecast. Int. J. Electr. Power Energy Syst. 2021, 130, 106916.

51. Quej, V.H.; Almorox, J.; Arnaldo, J.A.; Saito, L. ANFIS, SVM and ANN soft-computing techniques to estimate daily global solar radiation in a warm sub-humid environment. J. Atmos. Sol.-Terr. Phys. 2017, 155, 62–70.

52. Lotfi, H.; Adar, M.; Bennouna, A.; Izbaim, D.; Oum’bark, F.; Ouacha, H. Silicon Photovoltaic Systems Performance Assessment Using the Principal Component Analysis Technique. Mater. Today Proc. 2021, 51, 966–1974.

53. Adar, M.; Najih, Y.; Gouskir, M.; Chebak, A.; Mabrouki, M.; Bennouna, A. Three PV plants performance analysis using the principal component analysis method. Energy 2020, 207, 118315.

54. Khosravi, A.; Koury, R.; Machado, L.; Pabon, J.J.G. Prediction of wind speed and wind direction using artificial neural network, support vector regression and adaptive neuro-fuzzy inference system. Sustain. Energy Technol. Assess. 2018, 25, 146–160.

55. Jang, J.-S.R.; Sun, C.-T.; Mizutani, E. Neuro-Fuzzy and Soft Computing: A Computational Approach to Learning and Machine Intelligence. IEEE Trans. Autom. Control 1997, 42, 1482–1484.

56. Benmouiza, K.; Cheknane, A. Clustered ANFIS network using fuzzy c-means, subtractive clustering, and grid partitioning for hourly solar radiation forecasting. Theor. Appl. Climatol. 2019, 137, 31–43.

57. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

58. Shalev-Shwartz, S.; Zhang, T. Accelerated proximal stochastic dual coordinate ascent for regularized loss minimization. Math. Program. 2016, 155, 105–145.

59. Zheng, J.; Zhang, H.; Dai, Y.; Wang, B.; Zheng, T.; Liao, Q.; Liang, Y.; Zhang, F.; Song, X. Time series prediction for output of multi-region solar power plants. Appl. Energy 2020, 257, 114001.

60. Massaoudi, M.; Chihi, I.; Sidhom, L.; Trabelsi, M.; Refaat, S.S.; Abu-Rub, H.; Oueslati, F.S. An Effective Hybrid NARX-LSTM Model for Point and Interval PV Power Forecasting. IEEE Access 2021, 9, 36571–36588.

61. Liu, G.; Guo, J. Bidirectional LSTM with attention mechanism and convolutional layer for text classification. Neurocomputing 2019, 337, 325–338.

62. Zhang, J.; Verschae, R.; Nobuhara, S.; Lalonde, J.-F. Deep photovoltaic nowcasting. Sol. Energy 2018, 176, 267–276.

63. Srivastava, S.; Lessmann, S. A comparative study of LSTM neural networks in forecasting day-ahead global horizontal irradiance with satellite data. Sol. Energy 2018, 162, 232–247.

| Hyperparameter | Range |
|---|---|
| Total number of hidden units | 80–250 |
| Maximum number of epochs | 100–400 |
| Initial learning rate | 50–200 |
| Learn rate drop period | 0.0001–0.01 |
| Learn rate drop factor | 0.002–1 |
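The three learning-rate hyperparameters in the table describe a step-decay schedule. The sketch below assumes piecewise semantics like those of MATLAB's `trainingOptions` (`InitialLearnRate`, `LearnRateDropPeriod`, `LearnRateDropFactor`). Under these semantics, the rate starts at the initial value and is multiplied by the drop factor after every drop-period epochs. The function name `piecewise_learning_rate` and the example values are hypothetical and are not taken from the study.

```python
def piecewise_learning_rate(epoch, initial_rate, drop_period, drop_factor):
    """Learning rate for a 1-based epoch under piecewise (step) decay.

    The rate equals initial_rate for the first drop_period epochs and is
    multiplied by drop_factor after each further block of drop_period epochs.
    """
    if epoch < 1 or drop_period < 1:
        raise ValueError("epoch and drop_period must be >= 1")
    if not 0 < drop_factor <= 1:
        raise ValueError("drop_factor must lie in (0, 1]")
    completed_drops = (epoch - 1) // drop_period  # number of drops applied so far
    return initial_rate * drop_factor ** completed_drops


# Hypothetical settings: initial rate 0.005, drop every 100 epochs by a factor of 0.2.
schedule = [piecewise_learning_rate(e, 0.005, 100, 0.2) for e in (1, 100, 101, 201)]
```

With these hypothetical settings, epochs 1–100 train at 0.005, epochs 101–200 at 0.001, and epochs 201–300 at 0.0002. A drop factor of 1 disables the decay.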

| LSTM structure | p-si MAE | p-si RMSE | m-si MAE | m-si RMSE | a-si MAE | a-si RMSE |
|---|---|---|---|---|---|---|
| RNN-LSTM (single-layered) | 18.92 | 26.86 | 15.04 | 21.28 | 46.66 | 61.44 |
| RNN-LSTM (double-layered) | 19.43 | 32.72 | 17.13 | 25.4 | 49.54 | 63.93 |
| RNN-BiLSTM (single-layered) | 41.96 | 49 | 23.93 | 29.14 | 53.43 | 68.41 |

| Metric | PV plant | RNN-LSTM | ANN | SVR | ELM [38] | ANN [38] | SVR [38] |
|---|---|---|---|---|---|---|---|
| RMSE | p-si | 30.25 | 33 | 47.63 | 54.96 | 60.27 | 71.92 |
| | m-si | 19.78 | 26.03 | 25.49 | 59.93 | 101.23 | 103.61 |
| | a-si | 43.37 | 46.9 | 52.92 | 90.41 | 101.99 | 145.38 |
| R² | p-si | 0.995 | 0.996 | 0.9874 | 0.9809 | 0.9798 | 0.9750 |
| | m-si | 0.9943 | 0.99 | 0.9905 | 0.8675 | 0.8647 | 0.8618 |
| | a-si | 0.996 | 0.996 | 0.9928 | 0.9783 | 0.9754 | 0.9704 |

| Prediction methodology | Ref. | Year | Testing RMSE (W/m²) |
|---|---|---|---|
| RNN-LSTM (p-si) | Present study | - | 26.85 |
| RNN-LSTM (m-si) | Present study | - | 19.78 |
| RNN-LSTM (thin film) | Present study | - | 39.2 |
| LSTM | [62] | 2018 | 139.3 |
| LSTM | [46] | 2018 | 122.7 |
| LSTM | [46] | 2018 | 76.24 |
| LSTM | [63] | 2018 | <29.26 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Akhter, M.N.; Mekhilef, S.; Mokhlis, H.; Almohaimeed, Z.M.; Muhammad, M.A.; Khairuddin, A.S.M.; Akram, R.; Hussain, M.M. An Hour-Ahead PV Power Forecasting Method Based on an RNN-LSTM Model for Three Different PV Plants. Energies 2022, 15, 2243. https://doi.org/10.3390/en15062243
