Abstract

The transition towards net-zero emissions is inevitable for humanity’s future. Of all sectors, electrical energy systems produce the most emissions. This makes it urgent to harness the accelerating technological landscape to transition towards an emission-free smart grid, which involves massive integration of intermittent wind- and solar-powered resources into future power grids. Additionally, new paradigms such as large-scale integration of distributed resources into the grid, proliferation of Internet of Things (IoT) technologies, and electrification of different sectors are envisioned as essential enablers for a net-zero future. However, these changes will lead to unprecedented growth in the size, complexity, and data volume of the planning and operation problems of future grids. It is thus important to discuss and consider High Performance Computing (HPC), parallel computing, and cloud computing prospects in any future electrical energy studies. This article recounts the dawn of parallel computation in power system studies, providing a thorough history and paradigm background for the reader, leading to the most impactful recent contributions. The reviews are split into Central Processing Unit (CPU) based, Graphics Processing Unit (GPU) based, and Cloud-based studies and smart grid applications. The state-of-the-art is also discussed, highlighting the issue of standardization and the future of the field. The reviewed papers are predominantly focused on classical, long-standing electrical system problems. This indicates the need for further research on parallel and HPC approaches applied to future smarter grid challenges, particularly to the integration of renewable energy into the smart grid.

1. Introduction

To date, the global mean temperature continues to rise, and emissions continue to grow, creating great risk to humanity. Many jurisdictions have drawn up efforts and pathways to limit warming to 2 °C and reach net-zero CO₂ emissions [1]. The growth in emissions is largely due to outdated electrical energy system operation and infrastructure, which cause the largest share of emissions of all sectors. Electrical energy systems are, however, witnessing a transition, consequently causing a growth in the scale and complexity of their planning and operation problems. The changing grid topology, decarbonization, electricity market decentralization, and grid modernization mean innovations and new elements are continuously introduced to the inventory of factors considered in grid operation and planning. Moreover, with the accelerating technological landscape and policy changes, the number of potential future paths to Net-Zero increases, and finding the optimal transition plan becomes an inconceivable task.

The use of parallel techniques becomes inescapable, and adopting HPC competence as a default in the electrical energy and power system community is inevitable in the face of the presumed future and its upcoming challenges. With some algorithmic modifications, parallel computing unlocks the potential to solve huge power system problems that are conventionally intractable. This helps reduce cost and CO₂ emissions indirectly through detailed models that help find less conservative operational solutions, which reduce thermal generation commitment and dispatch, and plan the transition to a net-zero grid with optimal placement of the continually growing inventory of Renewable Energy (RE) resources and smart component investment, which help achieve the Net-Zero goal and future-proof the grid. Moreover, parallel processing on multiple units is inherently more efficient and reduces energy use. Using multi-threading causes the energy consumption of multi-processors to increase drastically [2]. Thus, in the case of resource abundance, it is more efficient to distribute work on separate hardware. Resource sharing is more effective than resource distribution, which reduces the demand for hardware investment and larger servers. All of these factors make it increasingly important for electrical engineering scientists to familiarize themselves with efficient resource allocation and parallel computation strategies.

North America [3], the EU [4], and many other countries [5] set a target to completely retire coal plants earlier than 2035 and decarbonize the power system by 2050. In addition, the development of Carbon Capture and Storage Facilities is growing [6]. Renewable energy penetration targets are set, with evidence of fast-growing proliferation across the globe, including both transmission-connected Variable Renewable Energy (VRE) [7] and behind-the-meter distributed resources [8]. The demand profile is changing with increased electrification of various industrial sectors [9] and the transportation sector [10], building electrification, energy efficiency [11,12], and the venture into a Sharing Economy [13].

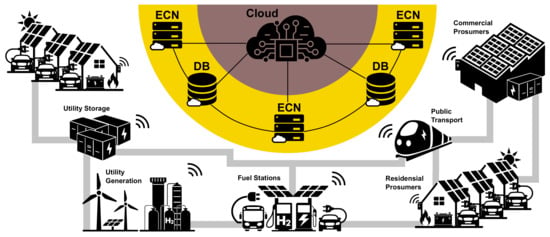

The emerging IoT, facilitated by low-latency, low-cost next-generation 5G communication networks, helps roll out advanced control technologies and Advanced Metering Infrastructure [14,15]. This gives more options for contingency remedial operational actions to increase grid reliability and cost-effectiveness, such as Transmission Switching [16], Demand Response [17], adding more micro-grids, and other Transmission–Distribution coordination mechanisms [18]. Additionally, they allow lower investment in transmission lines and open the way to other future planning solutions, such as flow management devices and FACTS [19], Distributed Variable Renewable Energy [20], and Bulk Energy Storage [21].

Moreover, the future grid faces non-parametric uncertainties in the form of new policies such as different carbon taxation, pricing, and certification schemes [22], feed-in tariffs [23] and time-of-use tariffs [24], and other incentives. More uncertainties are introduced in smart grid visions and network topological and economic model conceptual transformations. These include the Internet of Energy [25], Power-to-X integration [26], and the transactive grid through Peer-to-Peer energy trading facilitated by distributed ledgers or Blockchain Energy [27]. Such disruptions create access to cheap renewable energy and potential revenue streams for prosumers and encourage load shifting and dynamic pricing. Many of these concepts already have pilot projects in various locations in the world [28]. Due to all the factors mentioned above, cost-effective real-time operations and decision-making while maintaining reliability become extremely difficult, as does planning the network transition to sustain such a dynamic nature and stochastic future.

Many current electrical system operational models are non-convex, mixed-integer, and non-linear programming problems [29] and incorporate stochastic framework accounting for weather, load, and contingency planning [30]. Operators must solve such problems for day-ahead and real-time electricity markets and ensure reliability standards are met. In NERC, for example, the reliability standards require transmission operators to perform a real-time reliability assessment every 30 min [31]. The computational burden to solve these decision-making problems, even with our current grid topology and standards, forces the recourse to cutting-edge computational technology and high-performance computing strategies for online real-time applications and offline calculations to achieve tractability. This is part of the reason for the increased funding for High-Performance algorithms for complex energy systems and processes [32].

Operators already use high-performance computation facilities or services in areas such as Transmission and Generation Investment Planning, Grid Control, Cost Optimization, Losses Reduction, Power System Simulation, and Analysis and Control Room Visualization, as seen in [33,34]. However, for operational purposes where problems need to be solved on a short time horizon, system models are usually simplified, and heuristic methods are used, relying on the experience of operators, such as in [35]. As a consequence, these models tend to be conservative, reaching a reliable solution at the expense of reduced efficiency [36]. According to the Federal Energy Regulatory Commission, finding more accurate solution techniques for complex problems such as AC Optimal Power Flow (ACOPF) could save billions of dollars [37]. This motivates the search for methods to produce high-quality solutions in a reasonable time, and one of the ways to push the limits of speedup is through parallel techniques and HPC. Finding appropriate techniques, formulations, and proper parallel implementation of HPC for electrical system studies has been a research area of interest. Progress has been made toward making it practical to solve complex, accurate power system models for real-time decisions.

The first work loosely related to parallelism on a high-level task in a power system might have been that of Harvey H. Happ in 1969 [38], in which a hierarchical decentralized multi-computer configuration was suggested, targeting Unit Commitment (UC) and Economic Dispatch (ED). Other similar work in multi-level multi-computer frameworks followed, soon targeting Security and voltage control in the 1970s [39,40,41]. P. M. Anderson from the Electrical Power Research Institute created a special report in 1977, collecting various studies and simulations performed at the time, which explored the potential applications for power system analysis on parallel processing machines [42]. Additionally, several papers came out suggesting new hardware to accommodate power-system-related calculations [43].

In 1992, D. J. Tylavsky et al. made what might be the first comprehensive review of the state-of-the-art of parallel processing for power systems studies [44]. They discussed challenges still relevant today, such as different machine architectures, transparency, and portability of the codes used. A few parallel power system study reviews have been conducted throughout the development of computational hardware. Some had similar goals to this paper, reviewing HPC applications for power systems [45,46] and distributed computing for online real-time applications [47]. Computational paradigms changed exponentially, reducing those reviews to pieces of history. The latest relevant, comprehensive reviews on the topic were by R. C. Green et al. for general power system applications [48], and again focused on innovative smart grid applications [49]. These two handle a variety of topics in power systems. This work adds to existing reviews, providing a fresh overview of the state-of-the-art. It distinguishes itself by providing the full context and history of parallel computation and HPC in electrical system optimization and its development up to the latest work in the field. It also provides a thorough base and background for newcomers to the field of power system optimization in terms of both computational paradigms and applied algorithms. It highlights the importance of making HPC utilization the default in a net-zero future grid. Finally, it brings to light the necessity of integrating HPC in future studies amidst the energy transition and suggests a framework that encourages future collaboration to accelerate HPC deployment. Table 1 shows a side-by-side comparison of related reviews.

Most of the existing work in the literature applies to classical problems that are still relevant to the future grid. For example, the electrical system stability problems only become bigger and more complex with the new grid paradigms, especially with the introduction of synthetic inertia coming from massive wind and solar plants at many data points. However, the amount of HPC and cloud computing consolidation to the future smart grid vision is small, so only one section of this work introduces frameworks of cloud-smart grid integration with different network architectures and topologies. Accompanying cloud usage in future studies is important, as it introduces new considerations and complications, such as optimal resource allocation and cloud security issues.

This paper is organized as follows: Section 2 and Section 3 identify the main HPC components and parallel computation paradigms. The next six sections review parallel algorithms under both Multiple Instruction Multiple Data (MIMD) and Single Instruction Multiple Data (SIMD) paradigms, split into their early development and state-of-the-art for each study. Section 4 covers Power Flow (PF), Section 5 Optimal Power Flow (OPF), and Section 6 UC. Section 7 reviews power system stability studies, Section 8 System State Estimation (SSE), and Section 9 unique formulations and studies. Section 10 reviews grid and cloud computation applications of classical problems. Section 11 reviews smart grid cloud-based applications. In these studies, novel approaches and algorithms and modifications to existing complex models are made and parallelized to achieve tractability, or their processing time is reduced below that needed for real-time applications. Many studies showed promising outcomes and made a strong case for further opportunities in using complex system models on HPC and Cloud for operational applications. Section 12 highlights and discusses the present challenges in the studies, re-projects the future of HPC in power systems and energy markets, and recommends a framework for future studies. Section 13 concludes the review.

Table 1.

Previous work and related reviews.

| Paper | [44] | [45] | [46] | [47] | [48] | [49] | This Work |
|---|---|---|---|---|---|---|---|
| Year | 1992 | 1993 | 1996 | 1996 | 2011 | 2013 | 2022 |
| Focus | AR 1 | DS 2 | AR | OA 3 | AR | SG 4 | AR |
| Historical Overview | • | • | • | • | |||
| Comprehensive Review | • | • | • | • | • | ||
| Leading edge (2013–2022) | • | ||||||
| Critical Review | • | ||||||
| HPC Tutorial | • | • | • | • | |||
| Cloud/Smart Grid | • | • | |||||
| RE and Net-Zero | • | ||||||
| Guideline and Recommendations | • |

1 All-round; 2 Dynamic Simulation; 3 Online Applications; 4 Smart Grid.

This review does not include machine learning or meta-heuristic parallel applications such as particle swarm and genetic algorithms. Furthermore, while it does include some studies related to the co-optimization of Transmission and Distribution systems, the study excludes parallel analysis and simulations of distribution systems. It is also important to note that this review does not include Transmission and generation system planning problems or models related to grid transitioning because of the lack of parallel or HPC application in the literature on such models, which is addressed in the discussion section.

2. Parallel Hardware

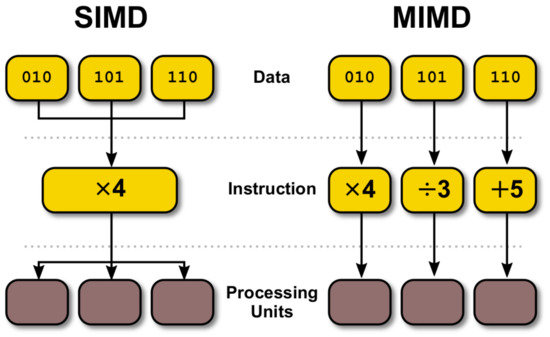

Parallel computation involves several tasks being performed simultaneously by multiple workers on a parallel machine. Here, a worker is a loose term and could refer to different processing hardware (e.g., a core, Central Processing Unit (CPU), or a compute node). Predominantly, parallel machines can be placed under two categories based on the Von Neumann architecture [50]: MIMD and SIMD machines, as shown in Figure 1.

Figure 1.

MIMD is versatile, allowing multiple heterogeneous instructions to be carried out on a pool of data. SIMD is simple and performs the same operation on a pool of data. Instructions are not limited to arithmetics and can take different forms (logical, transfer, etc.).

SIMD architecture dominated supercomputing with vector processors, but that changed soon after general-purpose processors became scalable in the 1990s [51,52], followed by transputers and microprocessors designed specifically for aggregation and scalability [53]. The effect of this shift in architecture on the algorithm design can be observed in the coinciding studies mentioned in this paper. The following subsections detail the function of the present default computer hardware.

2.1. CPUs

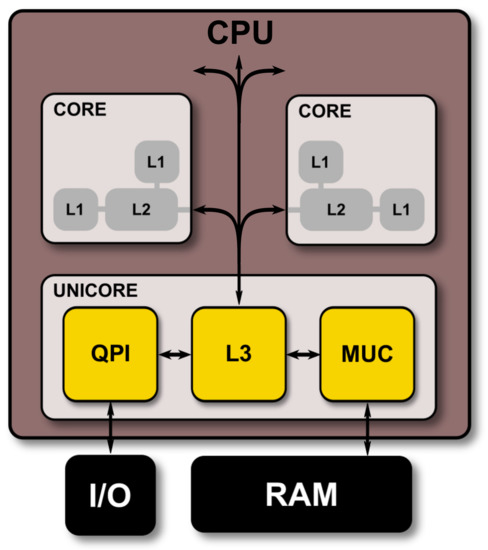

CPUs were initially optimized for scalar programming and executing complex logical tasks effectively until they hit a power wall, leading to multicore architecture [54]. Today, they function as miniature superscalar computers that enable pipelining, task-level parallelism, and multi-threading [55]. They employ a variety of self-optimizing techniques, such as “Speculation”, “Hyperthreading” or “Simultaneous Multi-threading”, “Auto vectorization”, and “task dependency detection” [55]. They contain an extra SIMD layer that supports data-level parallelism, vectorization, and fused multiply-add with high register capacity [56,57]. Furthermore, CPUs use a hierarchy of memory and caches, as shown in Figure 2, ranging from high-speed, low-capacity caches (L1) to lower-speed, higher-capacity caches (L2 then L3), which allows complex operations without Random Access Memory (RAM) fetching. These features give the CPU a distinct functional advantage over GPUs.

Figure 2.

Architecture illustration resembles a generic CPU from Intel Xeon series [58] showing the interconnection of Quick Path Interconnect (QPI), Memory Control Unit (MCU), with caches and RAM.

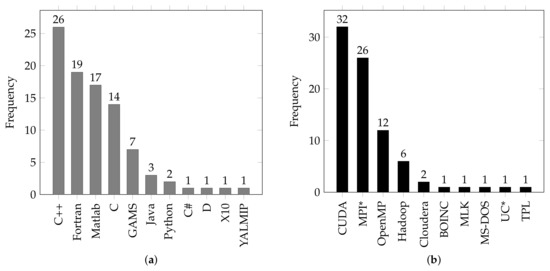

A workstation CPU can have up to 16 processing cores, and server-level CPUs can have up to 128 cores in certain products [59]. Multi-threading is carried out through Application Programming Interfaces (APIs) such as Cilk or OpenMP, which allow parallelism of functions and loops. Using several server-level CPUs in multi-processing to solve massive decomposed problems is facilitated by APIs such as MPI.

2.2. GPUs

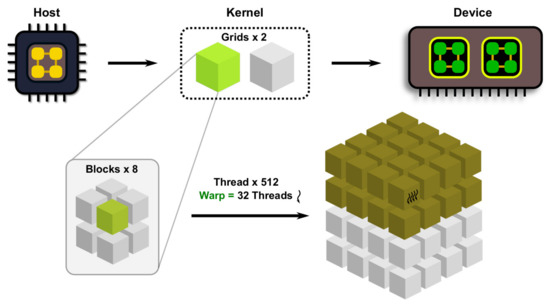

GPUs function very similarly to Vector Processing Units or Array processors, which used to dominate supercomputer design. They are additional components attached to a “Host” machine, essentially the CPU, which sends them kernels. GPUs were originally designed to render 3D graphics. They are especially good at vector calculations. The representation of 3D graphics has a “grid” nature and requires the same process for a vast number of input data points. This execution has been extended to many applications in scientific computing and machine learning, solving massive symmetrical problems or performing symmetrical tasks. Unlike with CPUs, achieving efficiency in GPU parallelism is a more tedious task due to the fine-grained SIMD nature and rigid architecture. Figure 3 shows a simple breakdown of the GPU instruction cycle.

Figure 3.

GPU instruction cycle and Compute Unified Device Architecture (CUDA) abstractions.

The GPU (Device) interfaces with the CPU (Host) through PCI express bus from which it receives instruction “Kernels”. In each cycle, a Kernel function is sent and processed by vast amounts of GPU threads with limited communication between them. Thus, the symmetry of the parallelized task is a requisite, and the number of parallel threads has to be of specific multiple factors to avoid the sequential execution of tasks. Specifically, they need to be executed in multiples of 32 threads (a warp) and multiples of two streaming processors per block for the highest efficiencies.
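The warp constraint described above can be illustrated with a short calculation. The following is a minimal sketch in plain Python, not CUDA code: the function name `launch_config` and the default block size of 256 threads are assumptions made for illustration, and only the warp size of 32 comes from the text.

```python
import math

WARP_SIZE = 32  # threads per warp, as stated in the text


def launch_config(n_items, threads_per_block=256):
    """Return (blocks, threads_per_block, idle_threads) for n_items work items.

    Hypothetical helper: the requested block size is rounded up to a whole
    number of warps, and enough blocks are launched to cover every item.
    """
    if n_items <= 0:
        raise ValueError("n_items must be positive")
    # Round the block size up to the next multiple of the warp size.
    threads_per_block = math.ceil(threads_per_block / WARP_SIZE) * WARP_SIZE
    # Ceiling division: enough blocks to cover all work items.
    blocks = math.ceil(n_items / threads_per_block)
    # Threads launched beyond n_items do no useful work.
    idle_threads = blocks * threads_per_block - n_items
    return blocks, threads_per_block, idle_threads
```

For example, `launch_config(1000, 100)` rounds the block size up to 128 threads (four warps) and launches 8 blocks, leaving 24 of the 1024 threads idle. Choosing problem partitions that keep this idle count small is one way the symmetry requirement shows up in practice.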

GPUs can be programmed in C or C++, and many APIs exist to program them, such as OpenCL, HIP, C++ AMP, DirectCompute, and OpenACC. These APIs provide high-level functions, instructions, and hardware abstractions, making GPU utilization more accessible. The most relevant interface is CUDA by NVIDIA, since NVIDIA dominates the GPU market in desktop and HPC/Cloud [60]. CUDA’s libraries make the power of NVIDIA’s GPUs much more accessible to the scientific and engineering communities.

The GPU’s different architecture may cause discrepancies and lower accuracy in results, as floating-point numbers are often rounded in a different manner and precision than in CPUs [61]. Nevertheless, these challenges can be worked around with CUDA and sparse techniques that reduce the number of ALUs required to achieve a massive speedup. Finally, GPUs can offer a huge advantage over CPUs in terms of energy efficiency and cost if their resources are used effectively and appropriately.

2.3. Other Hardware

There are two more notable parallel devices to mention. One is the Field Programmable Gate Arrays (FPGA). This chip consists of configurable logic blocks, allowing the user to have complete flexibility in programming the internal hardware and architecture of the chip itself. They are attractive as they are parallel, and their logic can be optimized for desired parallel processes. However, they consume a considerable amount of power compared to other devices, such as the Advanced RISC Machine. Those are processors that consume very little energy due to their reduced instruction set, making them suitable for portable devices and applications [62].

3. Aggregation and Paradigms

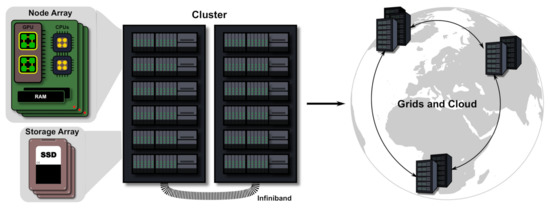

In the late 1970s, project ARPANET took place [63], UNIX was developed [64], and advancement in networking and communication hardware was achieved. The first commercial LAN clustering system/adaptor, ARCNET, was released in 1977 [65], and hardware abstraction sprang up in the form of virtual memory, such as OpenVM, which was adopted by operating systems and supercomputers [66]. Around that same time, the concept of computer clusters was forming. Many research facilities and customers of commercial supercomputers started developing their in-house clusters of more than one supercomputer. Today’s HPC facilities are highly scalable and are composed of specialized aggregate hardware, as displayed in Figure 4. The communication between processes through aggregate hardware is aided by high-level software such as MPI, which is available in various implementations and packages such as mpi4py in Python, and by tools such as Apache, Slurm, and mrjob, which aid in data management, job scheduling, and other routines.

Figure 4.

Powerful chips to Cloud computing.

Specific clusters might be designed or equipped with components geared more toward specific computing needs or paradigms. HPC usually includes tasks with rigid time constraints (minutes to days or maybe weeks) that require a large amount of computation. The High-Throughput Computing (HTC) paradigm involves long-term tasks that require a large amount of computation (months to years) [67]. The Many Task Computing (MTC) paradigm involves computing various distinct HPC tasks and revolves around applications that require a high level of communication and data management [68]. Grid or Cloud facilities provide the flexibility to adopt all the mentioned paradigms.

3.1. Grid Computing

The information age that took off in the 1990s set off the trend of wide-area distributed computing and “Grid Computing”, the predecessor of the Cloud. Ian Foster coined the term with Carl Kesselman and Steve Tuecke, who developed the Globus toolkit that provides grid computing solutions [69]. Many Grid organizations exist today, such as NASA 3-EGEE and Open Science Grid. Grid computing shaped the field of “Metacomputing”, which revolves around the decentralized management and coordination of Grid resources, often carried out by virtual organizations with malleable boundaries and responsibilities. The infrastructure of grids tends to be very secure and reliable, with an exclusive network of users (usually scientists and experts), discouraging virtualization and interactive applications. Hardware is not available on demand; thus, it is only suitable for sensitive, close-ended, non-urgent applications. Grid computing features provenance performance monitoring and is mainly adopted by research organizations.

3.2. Cloud Computing

Cloud computing is essentially the commercialization and effective scaling of Grid Computing driven by demand, and it is all about the scalability of computational resources for the masses. It mainly started with Amazon’s demand for computational resources for its e-commerce activities, which prompted Amazon to start the first successful Infrastructure-as-a-Service platform, Elastic Compute Cloud [70], for other businesses that conduct similar activities.

The distinction between Cloud and Grid is an implication of their business model. Cloud computing is far more flexible and versatile than Grid when it comes to accommodating different customers and applications. It relies heavily on virtualization and resource sharing. This makes Cloud inherently less secure, less efficient in performance than Grid, and more challenging to manage, yet far more scalable, available on demand, and overall more resource efficient. It achieves a delicate balance between efficiency and the cost of computation.

Today, AWS, Microsoft Azure, Oracle Cloud, Google Cloud, and many other cloud commercial services provide massive computational resources for various companies such as Netflix, Airbnb, ESPN, HSBC, GE, Shell, and the NSA. It only makes sense that the electrical industry will adopt the Cloud.

3.2.1. Virtualization

The appearance of virtualization caused a considerable leap in massive parallel computing, especially after the software tool Parallel Virtual Machine (PVM) [71] was created in 1989. Since then, tens and hundreds of virtualization platforms have been developed, and they are used today on the smallest devices with processing power [72]. Virtualization allows resources to be shared in a pool, where multiple instances of different types of hardware can be emulated on the same metal. This means less hardware can be allocated or invested in Cloud computing for a more extensive user base. Often, the percentage of hardware used is low compared to the requested hardware. Idle hardware is reallocated to other user processes that need it. The instances initiated by users float on the hardware like clouds, shifting and moving or shrinking and expanding depending on the actual need of the process.

3.2.2. Containers

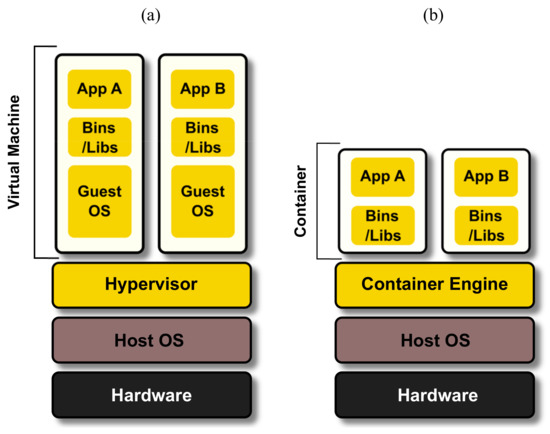

While virtualization makes hardware processes portable, containers make software portable. Developing applications, software, or programs in containers allows them to be used on any Operating System (OS) as long as it supports container engines. That means one can develop Linux-based software (e.g., software that works on Ubuntu 20.04) in a container and run that same application on a machine with Windows OS or iOS installed. This flexibility applies to service-based applications that utilize HPC facilities. An application can be developed on containers, and clients can use it on their cluster or a cloud service. Figure 5 compares virtual machines with container layers. While the figure shows that a host OS is required, containers can also run on bare metal, removing latency and development complexity. Docker and Apptainer (formerly known as Singularity) are commonly used containerization engines in Cloud and Grid, respectively [73,74].

Figure 5.

A comparison between Virtual Machine layers (a), Container Layers (b).

3.2.3. Fog Computing

Cloudlets, edge nodes, and edge computing are all related to an emerging IoT trend, Fog Computing. Fogs are compute nodes associated with a cloud that are geographically closer to the end-user or control devices. Fogs mediate between extensive data or cloud computing centers and users. This topology aims to achieve data locality, offering several advantages, such as low latency, higher efficiency, and decentralized computation.

3.3. Volunteer Computing

Volunteer computing is an interesting distributed computing model that originated in 1996 with the Great Internet Mersenne Prime Search [75], allowing individuals connected to the internet to donate their personal computer’s idle resources for a scientific research computing task. Volunteer computing remains active today, with many users and various middleware and projects, both scientific and non-scientific, primarily based on BOINC [76], and in commercial services such as macOS Server Resources [77].

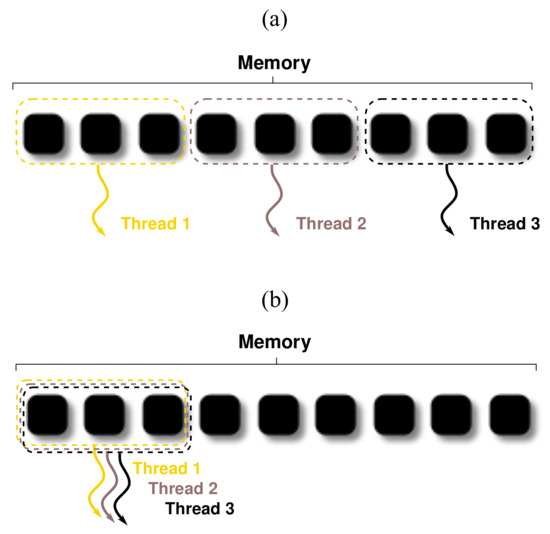

3.4. Granularity

Fine-grained parallelism appears in algorithms that frequently repeat a simple homogeneous operation on a vast dataset. They are often associated with embarrassingly parallel problems. The problems can be divided into many highly, if not wholly symmetrical simple tasks, providing high throughput. Fine-grained algorithms are also often associated with multi-threading and shared memory resources. Coarse-grained algorithms imply moderate or low task parallelism that sometimes involves heterogeneous operations. Today, coarse-grained algorithms are almost synonymous with Multi-Processing, where the algorithms use distributed memory resources to divide tasks into different processors or logical CPU cores.

3.5. Centralized vs. Decentralized

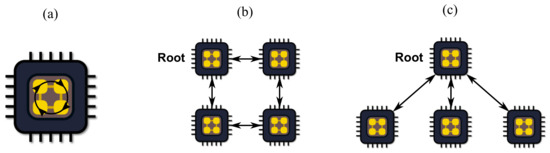

Centralized algorithms refer to problems with a single task or objective function, solved by a single processor, with data stored at a single location. When a centralized problem is decomposed into N subproblems, sent to N processors to be solved, and retrieved by the central controller to update variables, re-iterate, and verify convergence, the algorithm becomes a “Distributed” algorithm. The terms distributed and decentralized are often used interchangeably and are often confused in the literature, but there is an important distinction to make between them. A Decentralized Algorithm is one in which the decomposed subproblems do not have a central coordinator or a master problem. Instead, the processes responsible for the subproblems communicate with neighboring processes to reach a solution consensus (several local subproblems with coupling variables, where subproblems communicate without a central coordinator). The value of each type is determined not only by computational performance but also by the decision-making policy.

In large-scale complex problems, distributed algorithms sometimes outperform centralized algorithms. The speedup keeps growing with the problem size if the problem has “strong scalability”. Distributed algorithms’ subproblems share many global variables. This means a higher communication frequency, as all the variables need to be communicated back and forth to the central coordinator. Moreover, in some real-life problems, central coordination of distributed computation might not be possible. Fully decentralized algorithms solve this problem as their processes communicate laterally, and only neighboring processes have shared variables. Figure 6 illustrates the three schemes.

Figure 6.

Information exchange between processors in different parallel schemes, (a) Centralized, (b) Decentralized, (c) Distributed. The arrow points in the direction of communication.
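The difference between the distributed and decentralized schemes can be sketched on a toy consensus problem in which every process holds one local value and all processes must agree on the average. This is an illustrative sketch only: the function names, the ring topology, and the equal-weight update rule are assumptions chosen for simplicity, not a method taken from the reviewed studies.

```python
def distributed_average(values):
    """Distributed scheme: a central coordinator gathers and broadcasts."""
    mean = sum(values) / len(values)   # gather and reduce at the coordinator
    return [mean] * len(values)        # broadcast the result to every process


def decentralized_average(values, neighbors, iterations=200):
    """Decentralized scheme: each process talks to its neighbors only.

    neighbors[i] lists the processes that process i exchanges data with.
    Equal weights are assumed; they preserve the global mean when every
    process has the same number of neighbors (e.g., a ring).
    """
    x = list(values)
    for _ in range(iterations):
        # Every process updates from the previous iterate of its neighbors.
        x = [
            (x[i] + sum(x[j] for j in neighbors[i])) / (1 + len(neighbors[i]))
            for i in range(len(x))
        ]
    return x


# Hypothetical data: four processes connected in a ring.
values = [10.0, 20.0, 30.0, 40.0]
ring = {0: [3, 1], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
central = distributed_average(values)
lateral = decentralized_average(values, ring)
```

Both schemes reach the same value (25 for this data), but the distributed version needs a coordinator that sees every value, whereas the decentralized version only exchanges data laterally and pays for that with repeated iterations.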

3.6. Synchronous vs. Asynchronous

Synchronous algorithms are ones in which the algorithm does not move forward until all the parallel tasks at a certain step or iteration are executed. Synchronous algorithms are more accurate and efficient for tasks with symmetrical data and complexity. Their efficiency suffers, however, when the tasks are not symmetrical, which is usually the case in power system optimization studies. Asynchronous algorithms allow idling workers to take on new tasks, even if not all the adjacent processes are complete. This is possible only at the cost of accuracy when there are dependencies between parallel tasks. To achieve better accuracy in asynchronous algorithms, “Formation” needs to be ensured, meaning that while subproblems may deviate in the direction of convergence, they should keep a global tendency toward the solution.
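The idle time caused by asymmetrical tasks can be shown with a small scheduling calculation. The sketch below assumes fully independent tasks with known durations, so it captures only the waiting-time effect and not the accuracy trade-off that arises when tasks depend on each other; the function names and the task durations are hypothetical.

```python
import heapq


def synchronous_makespan(durations, n_workers):
    """Tasks are issued in rounds of n_workers; a barrier ends each round."""
    total = 0.0
    for start in range(0, len(durations), n_workers):
        # Every worker waits for the slowest task of the round.
        total += max(durations[start:start + n_workers])
    return total


def asynchronous_makespan(durations, n_workers):
    """An idle worker immediately takes the next task (no barrier)."""
    finish_times = [0.0] * n_workers   # min-heap of worker finish times
    heapq.heapify(finish_times)
    for d in durations:
        earliest = heapq.heappop(finish_times)   # first worker to become idle
        heapq.heappush(finish_times, earliest + d)
    return max(finish_times)


# Hypothetical task durations with two slow tasks among fast ones.
durations = [4.0, 1.0, 1.0, 4.0, 1.0, 1.0]
sync_time = synchronous_makespan(durations, n_workers=2)
async_time = asynchronous_makespan(durations, n_workers=2)
```

With two workers, the synchronous schedule takes 9 time units because each round waits for its slowest task, while the asynchronous schedule finishes in 6.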

3.7. Problem Splitting and Task Scheduling

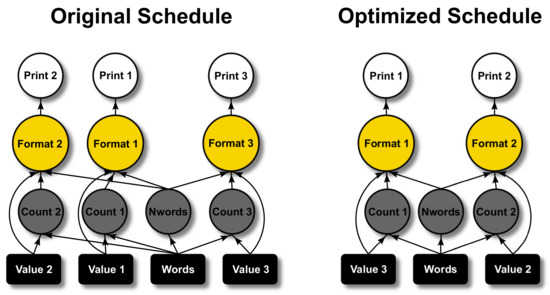

Large emphasis must be placed on task scheduling when designing parallel algorithms. In multi-threading, synchronization of tasks is required to avoid “Race Conditions” that cause numerical errors due to multiple threads accessing the same memory location simultaneously. Hence, synchronization does not necessarily imply that processes will execute every instruction simultaneously but rather in a coordinated manner. Coordination mechanisms involve pipelining or task overlapping, which can increase efficiency and reduce the latency of parallel performance. For example, sub-tasks that take the longest time in synchronous algorithms can utilize idle workers of completed sub-tasks if no dependencies prevent such allocation. Dependency analysis is occasionally carried out when splitting tasks. In an elaborate parallel framework, such as in multi-domain simulations or smart grid applications, task scheduling becomes its own complex optimization problem, which is often solved heuristically. However, there exist packages such as DASK [78], a Python library for parallel computing that helps with task planning and optimizes parallel task scheduling, as shown in Figure 7. The boxes in Figure 7 represent data, and the circles represent operations.

Figure 7. Typical task graph generated and optimized by DASK [78].
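The dependency analysis behind such a task graph can be sketched without any third-party package. The example below uses Python's standard `graphlib` module rather than DASK itself, and the task names are hypothetical; it groups tasks into "waves" whose members have no unmet dependencies and could therefore run in parallel.

```python
from graphlib import TopologicalSorter


def schedule_in_waves(dependencies):
    """Group tasks into waves; all tasks within one wave are mutually independent."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose predecessors are all done
        waves.append(ready)
        sorter.done(*ready)  # mark the whole wave as finished
    return waves


# Hypothetical task graph: each task maps to the set of tasks it depends on.
graph = {
    "load_data": set(),
    "build_ybus": {"load_data"},
    "build_scenarios": {"load_data"},
    "solve_pf": {"build_ybus", "build_scenarios"},
    "report": {"solve_pf"},
}
waves = schedule_in_waves(graph)
for number, wave in enumerate(waves, start=1):
    print(number, wave)
```

A real scheduler would also weigh task durations and data movement; this sketch only exposes which tasks are allowed to overlap.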

3.8. Parallel Performance Metrics

Solution time and accuracy are the main measures of the success of a parallel algorithm. According to Amdahl’s law of strong scaling, there is an upper limit to the speedup achieved for a fixed-size problem: dividing a fixed-size problem into more subproblems does not result in a linear speedup. However, if the parallel portion of the workload grows with the problem size, then proportionally increasing the subproblems or the number of processors can keep increasing the speedup, according to Gustafson’s law of weak scaling. The good news is that Gustafson’s law applies to large decomposed power system problems.
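Both laws can be written in a few lines. The sketch below uses the standard textbook forms; the function and parameter names are illustrative assumptions, and the 90% parallel fraction is a made-up value.

```python
def amdahl_speedup(parallel_fraction, n_workers):
    """Strong scaling: fixed problem size, serial part limits the speedup."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_workers)


def gustafson_speedup(parallel_fraction, n_workers):
    """Weak scaling: problem size grows with the workers.

    parallel_fraction is measured on the parallel run, as in Gustafson's law.
    """
    return (1.0 - parallel_fraction) + parallel_fraction * n_workers


# Hypothetical code that is 90% parallelizable.
for n in (10, 100, 1000):
    print(n, round(amdahl_speedup(0.9, n), 2), round(gustafson_speedup(0.9, n), 2))
```

Under Amdahl's law the speedup of this example can never exceed 1/0.1 = 10, however many workers are added, while the scaled speedup keeps growing with the number of workers.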

There are three types of metrics most frequently used in the literature, as shown in Equations (1)–(3).
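A common textbook form of these three metrics is given here as an assumption, not necessarily in the article's exact notation. The symbols are illustrative: $T_s$ is the sequential solution time, $T_p(N)$ the parallel solution time on $N$ processors.

```latex
\begin{align}
\text{Speedup:}     \quad & S(N) = \frac{T_s}{T_p(N)} \\
\text{Efficiency:}  \quad & E(N) = \frac{S(N)}{N} \\
\text{Scalability:} \quad & \mathit{Sc}(N) = \frac{T_p(1)}{T_p(N)}
\end{align}
```

In this form, speedup and efficiency compare the parallel run against the sequential code, while scalability compares the parallel code against itself on a single processor.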

A linear speedup is considered optimal, while a sublinear speedup is the norm because there is always a serial portion in a parallel code. Efficiency and scalability are loosely related. Efficiency is mostly used to compare a specific parallel setup to a sequential one, whereas scalability is used to see how the parallel algorithm scales with increased hardware. A scalable algorithm is not necessarily an efficient one. When creating a parallel algorithm, the quality of the serial portion of the algorithm must be emphasized. A parallel algorithm, after all, is contained and executed by a serial code. Both sequential and parallel programs are vulnerable to major random errors caused by the Cosmic Ray Effect [79], which has been known to cause terrestrial computational problems [80]. Soft errors might be of some concern regarding real-time power system applications. However, in parallel programming, especially in multi-processing, reordering floating-point computations is unavoidable; thus, a tiny deviation in accuracy from the sequential counterpart is expected and should be tolerated, given that the speedup achieved justifies it. All the computing paradigms mentioned above are points to tweak and consider when creating and applying any parallel algorithm.

4. Power Flow

Power Flow (PF) studies are central to all power system studies involving network constraints. The principal goal of PF is to solve for the network’s bus voltage magnitudes and angles in order to calculate the flows of the network. For some applications, PF is solved using DC power flow equations, which are linear approximations based on realistic assumptions. Solving these equations is easy and relatively fast and results in an excellent approximation of the network PF [29]. On the other hand, non-linear full AC power flow equations need to be solved to obtain an accurate solution, and these require numerical approximation methods. The most popular ones in power system analysis are the Newton–Raphson (NR) method and the Interior Point Method (IPM) [37]. However, these methods are computationally expensive and too slow for real-time applications, making them a target for parallel execution.
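To make the DC approximation concrete, the following minimal sketch solves a DC power flow on a hypothetical three-bus network in pure Python. The network data (reactances and injections in p.u.) and all function names are assumptions made for illustration; the small Gaussian elimination routine stands in for the sparse solvers used in practice.

```python
def solve_linear(a, b):
    """Solve a small dense system a @ x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def dc_power_flow(n_bus, lines, injections, slack=0):
    """Return bus angles (rad) and line flows (p.u.) from B' * theta = P."""
    b = [[0.0] * n_bus for _ in range(n_bus)]
    for i, j, x in lines:  # build the susceptance matrix from line reactances
        y = 1.0 / x
        b[i][i] += y
        b[j][j] += y
        b[i][j] -= y
        b[j][i] -= y
    keep = [k for k in range(n_bus) if k != slack]  # drop the slack bus row/column
    b_red = [[b[r][c] for c in keep] for r in keep]
    p_red = [injections[k] for k in keep]
    theta = [0.0] * n_bus  # slack angle is the reference (0 rad)
    for k, t in zip(keep, solve_linear(b_red, p_red)):
        theta[k] = t
    flows = {(i, j): (theta[i] - theta[j]) / x for i, j, x in lines}
    return theta, flows


# Hypothetical data: (from_bus, to_bus, reactance); injections are gen minus load.
lines = [(0, 1, 0.1), (0, 2, 0.2), (1, 2, 0.25)]
injections = [0.0, -1.0, 0.5]  # the slack entry is ignored
theta, flows = dc_power_flow(3, lines, injections)
print(theta, flows)
```

The slack bus picks up the remaining 0.5 p.u., which can be checked by summing the flows on the two lines leaving bus 0.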

4.1. MIMD Based Studies

Table 2, at the end of this section, summarizes the contributions of MIMD-based PF studies. Parallelism in PF in some earlier studies was achieved by restructuring the hardware to suit the algorithm. In what might be the first parallel power flow implementation, Taoka H. et al. designed their multi-processor system around the Gauss Iterative method in 1981, such that each i8085 processor in the system would solve the power flow for each bus [81]. Instead of modifying the algorithm, the hardware was tailored to it, achieving a linear speedup compared to the sequential implementation in [82]. Similarly, one year later, S. Chan and J. Faizo [83] reprogrammed the CDC supercomputer to solve the Fast-Decoupled Power Flow (FDPF) algorithm in parallel. FPGAs were also used to parallelize LU decomposition for PF [84,85]. The hardware modification approach, while effective, is, for the most part, impractical, and it is more sensible to modify algorithms to fit existing scalable hardware.

The other way to parallelize PF (or OPF) is through network partitioning. While network partitioning usually occurs at the problem level in OPF, in PF, the partitioning often happens at the solution/matrix level. Such partitioning methods for PF use sparsity techniques involving LU decomposition, forward/backward substitution, and diakoptics that trace back to the late 1960s, predominantly by H. H. Happ [86,87] for load flow on typical systems [88,89] and dense systems [41]. Parallel implementation of PF using this method started in the 1980s on array processors such as the VAX11 [90] and later in the 1990s on the iPSC hypercube [91]. Techniques such as FDPF were also parallelized on the iPSC using Successive Over-relaxation (SOR) on Gauss–Seidel (GS) [92], and on vector computers such as the Cray X/MP using Newton’s FDPF [93]. PF can also be treated as an unconstrained non-linear minimization problem, which is precisely what E. Housos and O. Wing [94] did to solve it using a parallelizable modified conjugate directions method.

When general processors started dominating parallel computers, their architecture was homogenized, and the enhancements achieved by parallel algorithms became comparable and easier to experiment with. This enabled a new target of optimizing the parallel techniques themselves. Chen and Chen used transputer-based clusters to test the best workload/node distribution on clusters [95] and a novel BBDF approach for power flow analysis [96]. The advent of the Message Passing Interface (MPI) allowed the exploration of scalability with the Generalized Minimal Residual Method (GMRES) in [97] and the multi-port inverse matrix method [98], as opposed to the direct LU method. Beyond this point, parallel PF shifted heavily towards using SIMD hardware (GPUs particularly), except for a few studies involving elaborate schemes. Some examples include transmission/distribution smart grid PF calculation [99] and optimal network partitioning for fast PF calculation [100].

Table 2. Power flow MIMD-based state-of-the-art studies.

| Paper | Contribution |
|---|---|
| [81] | Gauss Iterative method on a specialized machine |
| [82] | Tailored parallel hardware for FDPF |
| [83] | Reprogrammed CDC supercomputer to parallelize FDPF |
| [84,85] | Reprogrammed FPGA to parallelize LU method |
| [90] | LU method on VAX11 |
| [91] | LU method on iPSC hypercube |
| [92] | FDPF using SOR on Gauss–Seidel on iPSC hypercube |
| [93] | Newton FDPF on Cray X/MP |
| [94] | Parallel conjugate directions method |
| [95] | Novel BBDF on transputer-based cluster |
| [97] | MPI scheme parallelizing GMRES |
| [98] | MPI scheme parallelizing multi-port inverse matrix method |

4.2. SIMD Based Studies

4.2.1. Development

GPUs dominate recent parallel power system studies. The first GPU power flow implementation might have been achieved by using a preconditioned Biconjugate Gradient algorithm and sparsity techniques to implement the NR method on an NVIDIA Tesla C870 GPU [101]. Some elementary approaches parallelized the computation of connection matrices for networks where more than one generator could exist on a bus on an NVIDIA GPU [102]. CPUs were also used in SIMD-based power flow studies since modern CPUs have multiple cores; hence, multi-threading with OpenMP can be used to vectorize NR with LU factorization [103]. Some resorted to GPUs to solve massive batches of PF for Probabilistic Power Flow (PPF) or contingency analysis, with one thread per scenario, such as in [104]. Others modified the power flow equations to improve their suitability and performance on GPU [105,106].

While many papers limit their applications to NVIDIA GPUs by using CUDA, OpenCL, a general parallel hardware API, has also been used occasionally [107]. Some experimented with and compared the performance of different CUDA routines on different NVIDIA GPU models [108]. Similar experimentation on routines was conducted to solve ACOPF using FDPF [109]. In [110], NR, Gauss–Seidel, and Decoupled PF were tested and compared against each other on GPU. Improvements to Newton’s method, and the parallelization of its different steps, were reported in [111]. Asynchronous PF algorithms were applied on GPU, which sounds difficult, as the efficiency of GPUs depends on synchronicity and homogeneity [112]. Even with the existence of CUDA, many still venture into creating their own routines with OpenCL [113,114] or direct C coding [115] of GPU hardware to fit their needs for PF. Very recently, a few authors made thorough overviews of parallel power flow algorithms on GPU, covering general trends [116,117] and specifically AC power flow GPU algorithms [118]. In the State-of-the-Art subsection, the most impactful work is covered.

4.2.2. State-of-the-Art

Table 3 summarizes the contributions of the latest SIMD-based PF studies. A lot of the recent work in this area focuses on pre-conditioning and fixing ill-conditioning issues in the iterative algorithms used to solve the resulting Sparse Linear Systems (SLS). An ill-conditioned problem exhibits a massive change in output for a minimal change in input, making the solution to the problem hard to find iteratively. Most sequential algorithms are LU-based direct solvers, as they do not suffer from ill-conditioning. However, iterative solvers such as the Conjugate Gradient method, which have been around since the 1990s [119], are regaining traction because of their scalability and advances in parallel computing.

The DC Power Flow (DCPF) problem was solved using the Chebyshev preconditioner and the conjugate gradient iterative method in a GPU (448-core Tesla M2070) implementation in [120,121]. The vector operations involved are easily and efficiently parallelized with CUDA libraries such as cuBLAS and cuSPARSE, which are the Basic Linear Algebra Subroutine and Sparse Matrix Operation libraries, respectively. This work compared sparse matrix storage formats, such as Compressed Sparse Row (CSR) and Block Compressed Sparse Row (BSR). On the largest system size, a speedup of 46× for the pre-conditioning step and 10.79× for the conjugate gradient step was achieved compared to a sequential MATLAB implementation (8-core CPU).
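The building blocks named above can be sketched in pure Python. The example below is an illustration under stated assumptions, not the method of [120,121]: it stores a small matrix in CSR format and runs a conjugate gradient loop with a simple Jacobi (diagonal) preconditioner swapped in for the Chebyshev one. The matrix, right-hand side, and function names are hypothetical.

```python
def dense_to_csr(matrix):
    """Convert a dense matrix to CSR arrays: (values, col_idx, row_ptr)."""
    values, col_idx, row_ptr = [], [], [0]
    for row in matrix:
        for j, v in enumerate(row):
            if v != 0.0:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))  # where the next row starts
    return values, col_idx, row_ptr


def csr_matvec(csr, x):
    """Sparse matrix-vector product; each row is independent (parallelizable)."""
    values, col_idx, row_ptr = csr
    return [
        sum(values[k] * x[col_idx[k]] for k in range(row_ptr[i], row_ptr[i + 1]))
        for i in range(len(row_ptr) - 1)
    ]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def jacobi_pcg(csr, b, tol=1e-10, max_iter=100):
    """Conjugate gradient with a Jacobi preconditioner for an SPD matrix."""
    values, col_idx, row_ptr = csr
    n = len(b)
    diag = [0.0] * n  # diagonal entries form the Jacobi preconditioner
    for i in range(n):
        for k in range(row_ptr[i], row_ptr[i + 1]):
            if col_idx[k] == i:
                diag[i] = values[k]
    x = [0.0] * n
    r = b[:]  # residual for the zero initial guess
    if dot(r, r) ** 0.5 < tol:
        return x
    z = [ri / di for ri, di in zip(r, diag)]
    p = z[:]
    rz = dot(r, z)
    for _ in range(max_iter):
        ap = csr_matvec(csr, p)
        alpha = rz / dot(p, ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        if dot(r, r) ** 0.5 < tol:
            break
        z = [ri / di for ri, di in zip(r, diag)]
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x


# Hypothetical SPD system whose exact solution is [1, 1, 1].
a = [[4.0, -1.0, 0.0], [-1.0, 4.0, -1.0], [0.0, -1.0, 4.0]]
csr = dense_to_csr(a)
solution = jacobi_pcg(csr, [3.0, 2.0, 3.0])
print(csr[2], solution)
```

On a GPU, the row loop in `csr_matvec` and the vector updates are the parts that map naturally onto library routines such as those in cuSPARSE and cuBLAS.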

Later, the same author went on to parallelize the FDPF using the same hardware and pre-conditioning steps [122]. Two real systems were used: the Polish system, which had groups of locally connected systems, and the Pan-European system, which consisted of several large coupled systems. This topology difference results in a difference in the sparsity patterns of the SLS matrix, which offers a unique perspective. Their proposed GPU-based FDPF was implemented with MATLAB on top of MATPOWER v4.1. In their algorithm, the CPU communicates regularly with the GPU, sending information back and forth within each iteration. Their tests showed that the FDPF performed better on the Pan-European system because its connections were more ordered than those of the Polish system. This frequent CPU–GPU communication most likely bottlenecked the speedup of their algorithm (less than 3× achieved compared to CPU only).

Instead of adding pre-conditioning steps, M. Wang et al. [123] focused on improving the continuous Newton’s method such that a stable solution is found even for an ill-conditioned power flow problem. For example, the NR method fails to converge if any load or generator power exceeds 3.2 p.u. in the IEEE-118 test case, whereas their algorithm still converges to the solution even if such a value is reached in any iteration. This was achieved using different-order numerical integration methods. The CPU loads data into the GPU and extracts the results only upon convergence, making the algorithm very efficient. The approach substantially improved over the previous work by removing the pre-conditioning step and reducing CPU–GPU communication (speedup of 11× compared to a CPU-only implementation).

Sometimes, dividing the bulk of the computational load between the CPU and GPU (a hybrid approach) can be more effective, depending on the distribution of processes. In one hybrid CPU–GPU approach, heavy emphasis was placed on the sparsity analysis of PF-generated matrices [124]. When using a sparse technique, the matrices operated on are reduced to ignore the zero terms. For example, the matrix is turned into a vector of indices referring to the non-zero values to confine operations to these values. Seven parallelization schemes were compared, varying the techniques used (dense vs. sparse treatment), the storage order (row-major vs. column-major), and the threading strategy. Row-major or column-major signifies whether the data of the same row or the same column of the matrix are stored consecutively. The thread invocation strategies varied in splitting or combining the calculation of P and Q of the mismatch vectors. In this work, two sparsity techniques were experimented with, showing a reduction in operations down to 0.1% of the original number and two or even three orders of magnitude performance enhancement for power mismatch vector operations. In 100 trials, their best scheme converged within six iterations on a four-core host and a GeForce GTX 950M GPU, with a small deviation in solution time between trials. CPU–GPU communication took about 7.79–10.6% of the time, a fairly low share. However, even with all of these reductions, the proposed approach did not consistently outperform a CPU-based solution. The authors suggested that this was due to using higher-grade CPU hardware than the GPU.

Zhou et al. might have conducted the most extensive research in GPU-accelerated batch solvers in a series of studies between 2014 and 2020. They fine-tuned the process of solving PPF for GPU architecture in [125,126]. The strategies used include Jacobian matrix packaging, contiguous memory addresses, and thread block assignment to avoid divergence of the solution. Subsequently, they used the LU-factorization solver from previous work to finally create a batch decoupled power flow (batch-DPF) algorithm [127]. They tested their batch-DPF algorithm on three cases: 1354-bus, 3375-bus, and 9241-bus systems. For 10,000 scenarios, they solved the largest case in less than 13 s, showing the potential for online application.

Most of the previous studies solve the PF problem in a bare and limited setup when compared to the work by J. Kardos et al. [128], which involves similar techniques in a massive HPC framework. Namely, preventative Security Constrained Optimal Power Flow (SCOPF) is solved by building on an already existing suite called BELTISTOS [129]. BELTISTOS specifically includes SCOPF solvers and has an established Schur complement algorithm that factorizes the Karush–Kuhn–Tucker (KKT) conditions, allowing for a great degree of parallelism in using IPM to solve general-purpose Non-Linear Programming (NLP) problems. Thus, the main contribution of this work is in removing some bottlenecks and ill-conditioning that exist in the Schur complement steps by introducing a modified framework (BELTISTOS-SC). The parallel Schur algorithm is bottlenecked by a dense matrix associated with the solution’s global part. This matrix is solved in a single process. Since GPUs are well suited to dense systems, they factorize the system and apply forward–backward substitution, solving it using cuSOLVER, a GPU-accelerated library for solving dense linear algebraic systems.
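The idea behind a Schur complement solve can be shown on a toy block-bordered (arrowhead) system. The sketch below is a simplification under loud assumptions: each scenario block is a single scalar instead of a sparse KKT matrix, the numbers are made up, and the function name is hypothetical; it is not BELTISTOS code. It shows that the per-scenario terms are independent, while the small coupled system for the global variable is the single sequential step.

```python
def schur_solve(a, b, c, f, d, g):
    """Solve a_i*x_i + b_i*y = f_i (for each i) and sum(c_i*x_i) + d*y = g.

    Scalars stand in for the per-scenario blocks; y is the global variable.
    """
    # Per-scenario contributions: independent, so they can be computed in parallel.
    s_terms = [ci * bi / ai for ai, bi, ci in zip(a, b, c)]
    r_terms = [ci * fi / ai for ai, ci, fi in zip(a, c, f)]
    # Global (coupled) step: the Schur complement system for y.
    schur = d - sum(s_terms)
    y = (g - sum(r_terms)) / schur
    # Back-substitution: again independent per scenario.
    x = [(fi - bi * y) / ai for ai, bi, fi in zip(a, b, f)]
    return x, y


# Hypothetical two-scenario system whose exact solution is x = [2, 3], y = 1.
x, y = schur_solve(a=[2.0, 4.0], b=[1.0, 2.0], c=[1.0, 2.0],
                   f=[5.0, 14.0], d=5.0, g=13.0)
print(x, y)
```

With matrix blocks, `schur` becomes a dense matrix whose size depends on the number of global variables, which is the part described above as being offloaded to the GPU.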

Table 3. Power flow SIMD-based state-of-the-art studies.

| Paper | Contribution |
|---|---|
| [120,121] | Chebyshev pre-conditioner and conjugate gradient iterative method |
| [122] | Parallel FDPF |
| [123] | Improving the continuous Newton’s method |
| [124] | 7 parallelization strategies with sparsity analysis |
| [125,126] | Probabilistic Power Flow parallelization |
| [127] | Batch decoupled power flow |
| [128] | Security Constrained OPF (BELTISTOS software) |

Kardos et al. [128] performed their experiments using a multicore Cray XC40 computer at the Swiss National Supercomputing Centre. They used 18 cores at 2.1 GHz, an NVIDIA Tesla P100 with 16 GB memory, and many other BELTISTOS and hardware-associated libraries. They tested their modification on several system sizes from PEGASE1354 to PEGASE13659. Their approach sped up the solution of the dense Schur complement system by 30× for the largest system over the CPU solution of that step, achieving a notable speedup in all system sizes tested. They later performed a large-scale performance study, where they increased the number of computing cores used from 16 to 1024 on the cluster. The BELTISTOS-SC augmented approach achieved up to 500× speedup for the PEGASE1354 system and 4200× for the PEGASE9241 system when 1024 cores were used, demonstrating strong scalability up to 512 cores.

5. Optimal Power Flow

Like PF, OPF studies are the basis of many operational assessments such as System Stability Analysis (SSA), UC, ED, and other market decisions [29]. Variations of these assessments include Security Constrained Economic Dispatch (SCED) and SCOPF, both involving contingencies. OPF ensures the satisfaction of network constraints over cost or power-loss minimization objectives. The full ACOPF version has non-linear, non-convex constraints, making it computationally complex and making it difficult to reach a global optimum. DC Optimal Power Flow (DCOPF) and other methods, such as decoupled OPF, linearize and simplify the problem, and when solved, they produce a fast but sub-optimal solution. Because DCOPF makes voltage and reactive power assumptions, it becomes less reliable with increased RE penetration, as RE causes significant deviations in the voltages and reactive powers of the network. This is one of the main drivers behind speeding up ACOPF in real-time applications for all algorithms involving it. The first formulation of OPF was achieved by J. Carpentier in 1962 [130], followed by an enormous volume of OPF formulations and studies, as surveyed in [131].

5.1. MIMD Based Studies

5.1.1. Development

OPF and SCOPF decomposition approaches started appearing in the early 1980s using P-Q decomposition [132,133] and including corrective rescheduling [134]. The first introduction to parallel OPF algorithms might have been by Garng M. Huang and Shih-Chieh Hsieh in 1992 [135], who proposed a “textured” OPF algorithm that involved network partitioning. In a different work, they proved that their algorithm would converge to a stationary point and that with certain conditions, optimality is guaranteed. Later, they implemented the algorithm on the nCUBE2 machine [136], showing that both their sequential and parallel textured algorithms are superior to non-textured algorithms. It was atypical for studies at the time to highlight portability, which makes Huang’s work in [92] special. It contributed another OPF algorithm using Successive Over-relaxation by making it “Adaptive”, reducing the number of iterations. The code was applied on the nCUBE2 and ported to the Intel iPSC/860 hypercube, demonstrating its portability.

In 1990, M. Teixeria et al. [137] demonstrated what might be the first parallel SCOPF on a 16-CPU system developed by the Brazilian Telecom R&D center. The implementation was somewhat “makeshift” and coarse to the level where each CPU was installed with a whole MS-DOS OS for the multi-area reliability simulation. Nevertheless, it outperformed a VAX 11/780 implementation, running about 2.5 times faster, and exhibited strong scalability.

Distributed OPF algorithms started appearing in the late 1990s with a coarse-grained multi-region coordination algorithm using the Auxiliary Problem Principle (APP) [138,139]. This approach was broadened much later by [140] using Semi-Definite Programming and the Alternating Direction Method of Multipliers (ADMM). Prior to that, ADMM was also compared against the method of partial duality in [141]. The convergence properties of the previously mentioned techniques and more were compared comprehensively in [142].

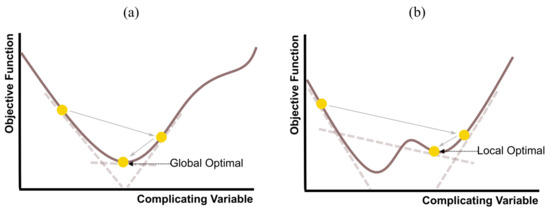

Asynchronous parallelization of OPF first appeared for preventative [143] and corrective SCOPF [144], targeting online applications [47] and motivated by the heterogeneity of the solution times of different scenarios. Both SIMD and MIMD machines were used, emphasizing portability as “Getsub and Fifo” routines were carried out. By the same token, MPI protocols were used to distribute and solve SCOPF, decomposing the problem with GMRES and solving it with the non-linear IPM while varying the number of processors [145]. Real-time application potential was later demonstrated by using Benders decomposition instead for distributed SCOPF [146]. Benders decomposition is one of the most commonly used techniques to create parallel structures in power system optimization problems, and it shows up in different variations in the present literature. As Figure 8 shows, Benders decomposition is applied by fixing the complicating variables of the objective function to a different value in every iteration and constructing a profile with Benders cuts to find the minimum objective function value with respect to the complicating variables. If the profile is non-convex, then optimality is not guaranteed since the value is changed in steps descending the slope of the cuts.

Figure 8. The convergence steps of the complicating variable when the objective function is convex (a) and non-convex (b) with respect to it.
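The cut-building loop described above can be sketched on a one-dimensional toy problem. Everything in the example is an illustrative assumption: the complicating variable `y` is a capacity chosen from a small grid, the second stage is a convex shortage penalty with made-up demand and cost values, and the master problem is solved by enumeration rather than by an LP or MILP solver.

```python
def recourse(y, demand=6.0, penalty=3.0):
    """Second-stage cost Q(y) and one subgradient dQ/dy (convex in y)."""
    shortage = demand - y
    if shortage > 0:
        return penalty * shortage, -penalty
    return 0.0, 0.0


def benders(candidates, unit_cost=1.0, tol=1e-9, max_iter=50):
    """Minimize unit_cost*y + Q(y) over the candidates using Benders cuts."""
    cuts = []  # each cut (q_k, g_k, y_k) encodes eta >= q_k + g_k * (y - y_k)
    upper, best_y = float("inf"), None
    for iteration in range(1, max_iter + 1):

        def master_cost(y):
            # eta is the tightest lower estimate of Q(y) given the cuts so far;
            # 0.0 is a valid initial bound because Q(y) is never negative.
            eta = max([0.0] + [q + g * (y - yk) for q, g, yk in cuts])
            return unit_cost * y + eta

        y = min(candidates, key=master_cost)  # master problem (by enumeration)
        lower = master_cost(y)                # lower bound on the optimum
        q, g = recourse(y)                    # subproblem with y fixed
        if unit_cost * y + q < upper:
            upper, best_y = unit_cost * y + q, y  # best feasible cost so far
        if upper - lower <= tol:
            return best_y, upper, iteration
        cuts.append((q, g, y))
    return best_y, upper, max_iter


candidates = [float(v) for v in range(11)]  # hypothetical capacity options 0..10
best_y, best_cost, iterations = benders(candidates)
print(best_y, best_cost, iterations)
```

Because the recourse function here is convex, the lower and upper bounds meet; with a non-convex profile the same loop could stop at a point that is not the global minimum. In scenario-based problems, one `recourse` call per scenario can be evaluated in parallel.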

5.1.2. State-of-the-Art

Table 4 summarizes the contributions of the MIMD-based OPF studies of this section. As mentioned, involving AC equations in large-scale power system studies is crucial and might soon become the standard. The difficulty of achieving this feat varies depending on the application. For example, precise nodal price estimation is attained by solving ACOPF multiple times under different possible system states (Probabilistic ACOPF). This can be effortlessly scaled as each proposed scenario can be solved independently, but a large number of scenarios can exhaust available resources. This is when researchers resort to scenario reduction techniques such as Two-Point Estimation [147]. In this study, it was applied to 10k Monte Carlo (MC) scenarios, and the reduced set was used to solve a conically relaxed ACOPF model following the approach in [148]. Using 40 cores from the HPC cluster of the KTH Royal Institute of Technology, the approach resulted in an almost linear speedup with high accuracy on all test cases.

In contrast, parallelizing a monolithic ACOPF problem itself is much more complicated. However, the same authors did this readily since their model was already decomposable due to the conic relaxation [149]. Here, the choice of network partitions is treated as an optimization problem to realize the least number of lines between sub-networks. A graph partitioning algorithm and a modified Benders decomposition approach were used, providing analytical and numerical proof that they converge to the same value as the original Benders decomposition. This approach achieved a lower–upper bound gap of around 0–2%, demonstrating scalability. A maximum of eight partitions (eight subproblems) were distributed over a four-core 2.4 GHz workstation. Beyond four partitions, hyper-threading or sequential execution must have occurred. This is a shortcoming, as only four threads can genuinely run in parallel at any time. Hyper-threading only allows a core to alternate between two tasks. Their algorithm might have even more potential if distributed on an HPC platform.

The ACOPF formulation is further coupled and complicated when considering Optimal Transmission Switching (OTS). The addition of binary variables ensures the non-convexity of the problem, turning it from an NLP to a Mixed Integer Non-Linear Programming (MINLP) problem. In Lan et al. [150], this formulation is parallelized for battery storage embedded systems, where temporal decomposition was performed, recording the State of Charge (SoC) at the end of every 6 h (four subproblems). They employed a two-stage scheme with an NLP first stage to find the ACOPF of a 24 h time horizon and transmission switching in the second stage. The recorded SoCs of the first stage are added as constraints to the corresponding subproblems, which are entirely separable. They tested the algorithm on the IEEE-118 test case and solved it with Bonmin in GAMS on a four-core workstation. While the coupled ACOPF-OTS formulation achieved a 4.6% optimality gap at a 16 h 41 min time limit, their scheme converged to a similar gap within 24 min. The result is impressive, considering the granularity of the decomposition. This is yet another example in which a better test platform could have shown more exciting results, as the authors were limited to parallelizing four subproblems. Algorithm-wise, an asynchronous approach or better partition strategy is needed, as one of the subproblems took double the time of all the others to solve.

The inclusion of voltage and reactive power underpins the benefits gained by ACOPF. However, it is their effect on the optimal solution that matters, and there are ways to preserve that while linearizing the ACOPF. The DCOPF model is turned into a Mixed Integer Linear Programming (MILP) problem in [151] by adding on/off series reactance controllers (RCs) to the model. The effect of the reactance is implied by approximating its value and adding it to the DC power flow term as a constant without actually modeling reactive power. The binary variables are relaxed using the Big M approach to linearize the problem, the first-order KKT conditions are derived, and the problem is solved using a decentralized iterative approach. Each node solved its own subproblem, making this a fine-grained algorithm, and each subproblem had coupling variables with adjacent buses only. The approach promised scalability, and its convergence was proven in [152]. However, it was not implemented in parallel, and the simulation-based assumptions are debatable.

Decentralization, in that manner, reduces the number of coupling variables and communication overhead. However, this also depends on the topology of the network, as shown in [111]. In this work, a stochastic DCOPF formulation incorporating demand response was introduced. The model network was decomposed using ADMM and different partitioning strategies where limited information exchange occurs between adjacent subsystems. Recent surveys on distributed OPF algorithms showed that ADMM and APP are the preferred decomposition techniques in most OPF decomposition and parallelization studies [149,153]. The strategies were implemented using MATLAB and CPLEX on a six-bus system to verify solution accuracy and later on larger systems. The ADMM-based Fully Decentralized DCOPF and the Accelerated ADMM for Distributed DCOPF were compared. The distributed version converged faster, while the decentralized version exhibited better communication efficiency. More importantly, a separate test showed that decentralized algorithms work better on subsystems that exhibit less coupling (are less interconnected) and vice versa. This suggests that decentralized algorithms are better suited for ring or radial network topologies, while distributed algorithms are better for meshed networks [153,154].
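The coordination pattern behind ADMM can be sketched with a consensus toy problem. This is not the formulation of [111]: each hypothetical "area" has a quadratic local cost pulling a shared boundary variable toward its own target value, and the areas must agree on one common value. The targets, the penalty `rho`, and the function name are assumptions made for illustration.

```python
def consensus_admm(targets, rho=1.0, iterations=100):
    """Minimize sum_i (x_i - a_i)^2 subject to x_i = z for all areas i."""
    n = len(targets)
    x = [0.0] * n  # local copies of the shared variable, one per area
    u = [0.0] * n  # scaled dual variables (prices on disagreement)
    z = 0.0        # consensus value exchanged between areas
    for _ in range(iterations):
        # Local updates: closed-form minimizers, independent per area (parallel).
        x = [(2.0 * a + rho * (z - ui)) / (2.0 + rho) for a, ui in zip(targets, u)]
        # Coordination step: average of the local proposals.
        z = sum(xi + ui for xi, ui in zip(x, u)) / n
        # Dual update: penalize each area's remaining disagreement with z.
        u = [ui + xi - z for xi, ui in zip(x, u)]
    return z, x


# Hypothetical targets for three areas; the consensus optimum is their mean.
z, x = consensus_admm([1.0, 3.0, 8.0])
print(z, x)
```

Only `z` and the local proposals cross area boundaries in each iteration, which is the limited information exchange that makes such schemes attractive for distributed OPF.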

Table 4. Optimal Power Flow MIMD-based state-of-the-art studies.

| Paper | Contribution |
|---|---|
| [147] | Two-Point estimation for scenario reduction (Monte Carlo Solves) |
| [148] | Conically Relaxed ACOPF (Monte Carlo Solves) |
| [149] | Monolithic ACOPF model parallelization using conic relaxation |
| [150] | Battery storage and Transmission Switching with Temporal Decomposition |
| [111] | Comparing decentralized and distributed algorithms on different network topologies |
| [155] | Transmission–Distribution network co-optimization |
| [99,156] | Two-stage stochastic algorithm accounting for DER |

Distribution networks tend to be radial. A ring topology is rare, except in microgrids. Aside from their topology, they differ from transmission networks in many ways, which is why their studies and OPF formulations are treated separately. OPF for Transmission–Distribution co-optimization makes a great case for HPC use in power system studies, as co-optimizing the two together is considered peak complexity. S. Tu et al. [155] decomposed a very large-scale ACOPF problem in a Transmission–Distribution network co-optimization attempt. They adopted a previously used approach in which the whole network was divided by its feeders, with each distribution network forming a subproblem. The novelty in their approach lies in a smoothing technique that allows gradient-based non-linear solvers to be used, particularly the Primal-Dual Interior Point Method, which is the most commonly used method for solving ACOPF. Similar two-stage stochastic algorithms were implemented to account for the uncertainties in Distributed Energy Resources (DER) at a simpler level [99,156].

S. Tu et al. used an augmented IEEE-118 network, adding distribution systems to all buses, resulting in 9206 buses. Their most extreme test produced 11,632,758 bus solutions (1280 scenarios). Compared to a generic sequential Primal-Dual Interior Point Method (PDIPM), the speedup of their parallelized approach increased linearly with the number of scenarios and scaled strongly with the number of cores used in their cluster. In contrast, the serial solution time increased superlinearly, and the serial approach failed to converge within a reasonable time even for a relatively small number of scenarios. While their approach proved to solve large-scale ACOPF much faster than a serial approach, it falls short in addressing Transmission–Distribution co-optimization because it merely considered the distribution networks as sub-networks with the same objective as the transmission network, which is unrealistic.

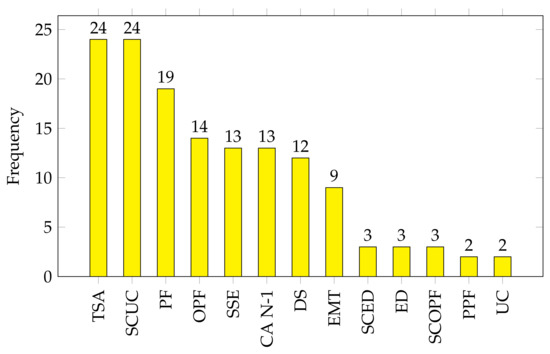

6. Unit Commitment

The UC problem goes back to the 1960s [157]. In restructured electricity markets, Security Constrained Unit Commitment (SCUC) is used to determine the generation schedule at each time point at the lowest cost possible while maintaining system security. A typical formulation used in today’s industry can be found in [158]. Often, implementations use immensely detailed stochastic models involving N-1 or N-2 contingencies [159], ACOPF constraints, RE resources and DER [160], and distribution networks [161]. This leads to a large number of scenarios and a tremendously complex problem. Decomposition of the problem using Lagrangian Relaxation (LR) methods is very common [36,162], and many formulations are ready for HPC parallel implementation. This includes global optimal solution methods for AC-SCUC, as in [163]. Recent literature on parallel UC is abundant, making this section the largest in this review.

6.1. MIMD-Based Studies

6.1.1. Development

Simulations of parallel environments to implement parallel UC algorithms started appearing around 1994, modeling hydrothermal systems [164] and stochasticity [165] on supercomputers [166] and workstation networks [167]. The earliest UC implementations on parallel hardware used embarrassingly parallel metaheuristics, such as simulated annealing [165] and genetic algorithms [168,169]. However, the first mathematical programming approach might have been conducted by Misra in 1994 [166] using dynamic programming and vector processors. Three years later, K.K. Lau and M.J. Kumar also used dynamic programming to create a decomposable master–slave structure of the problem. It was then distributed over a network of workstations using PVM libraries, with each subproblem solved asynchronously [167]. These, however, were not network-constrained problems.

In 2000, Murillo-Sanchez and Robert J. Thomas [170] attempted full non-linear AC-SCUC in parallel by decomposing the problem using APP but failed to produce any results, underscoring the problem’s complexity. In quite an interesting case, volunteer computing with the BOINC system was used to parallelize the MC simulations of stochastic load demand in UC problems with hydrothermal operation [171]. However, that did not include network constraints either. There has been some work conducted on decomposition algorithms for network-constrained UC, but this has rarely been applied in a practical parallel setup. Most parallel implementations happened in the last decade.

6.1.2. State-of-the-Art

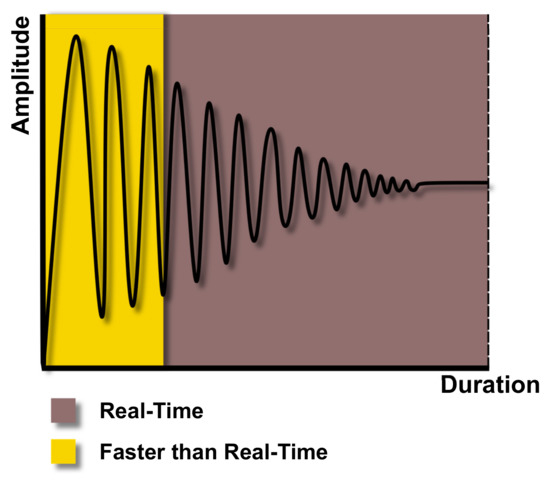

Table 5 summarizes the latest contributions of MIMD-based UC studies of this section. Papavasiliou et al. [172] set up a scenario-based two-stage stochastic framework for UC with reserve requirements for wind power integration, emphasizing wind forecasting and scenario selection methodology. In later work, Papavasiliou compared a Benders decomposition approach that removes the second-stage bottleneck with a Lagrangian Relaxation (LR) algorithm based on [162], where the impact of contingencies on decisions was implied in the constraints [160]. The LR approach proved to be more scalable for that formulation. As a result, Papavasiliou chose the LR approach to solve the same formulation with added DC network constraints [173]. Even though the wind scenarios were carefully selected, there were instances in which specific subproblems took about double the time of the next most complex subproblem. In a follow-up work, Aravena and Papavasiliou resorted to an asynchronous approach in [174] that allows time sharing. This work showed that the synchronous approach to solving subproblems could be highly inefficient, as the idle time of computational resources can reach up to 84% compared to the asynchronous algorithm.
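The cost of synchronization can be illustrated with a minimal sketch. It assumes one worker per subproblem and a purely hypothetical set of solve times (the numbers below are not taken from [174]), and it computes the share of aggregate worker time spent waiting when every worker must reach a barrier before the next iteration starts.

```python
def synchronous_idle_fraction(durations):
    """Share of total worker time spent idle in one synchronous iteration.

    durations: solve time of each subproblem, one worker per subproblem.
    The iteration lasts as long as the slowest subproblem, so every other
    worker waits for the difference.
    """
    if not durations or max(durations) <= 0:
        raise ValueError("need at least one positive duration")
    wall_time = max(durations)             # barrier: wait for the slowest
    busy_time = sum(durations)             # time actually spent solving
    capacity = len(durations) * wall_time  # worker-time reserved overall
    return 1.0 - busy_time / capacity


if __name__ == "__main__":
    # Hypothetical timings in seconds: the slowest subproblem takes twice
    # as long as the next most complex one.
    print(synchronous_idle_fraction([8.0, 4.0, 2.0, 2.0]))
```

With these made-up timings, half of the reserved worker time is idle. An asynchronous scheme aims to recover that time by letting finished workers proceed without waiting.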

While many parallel SCUC formulations use DC networks, the real challenge is using ACOPF, as exhibited in earlier failed attempts [170]. In [175], the conic relaxation approach mentioned earlier [148] was used to turn the AC-SCUC into a Mixed Integer Second-Order Conic (MISOC) program. This allowed the problem to be decomposed into a master problem, where the UC is determined, and a subproblem in which the ACOPF is solved iteratively. In their approach, the active power determined in the master problem is held fixed, and only the reactive power is solved for in the subproblem to check whether the commitment is feasible. Fixing the active power allows for time decomposition since ramping constraints no longer apply in the subproblems. They compared the computational efficiency of their approach against a coupled DC-SCUC and AC-SCUC, solved using commercial solvers such as GAMS, DICOPT, and SBB. Their approach took only 3.3% of the time taken by previous similar work [176] to find a solution at a 0.56% gap. However, their approach faced accuracy and feasibility issues, and the parallelism strategy was unclear since they created eight subproblems while using three threads.

Temporal decomposition was also used on a unique formulation, Voltage Stability Constrained SCUC (VSC-SCUC) [177]. The problem is an MINLP with AC power flow constraints and added voltage stability constraints that use an index borrowed from [178]. APP decomposition was used to decompose the model into 24 subproblems, and it was compared against conventional AC-SCUC on several test cases. It converged after 55 iterations, compared to 44 for the AC-SCUC solution on the IEEE-118 case. The structure and goals of VSC-SCUC make the problem hard to solve tractably, so the approach is promising in itself. However, the study gave no details about the parallel routine it claims to have used.

Nevertheless, SCUC decentralization is valued for more than performance enhancement. It can help achieve privacy and security, and it fits the general future of the smart grid, IoT, and market decentralization. In a market in which the ISO sets prices of energy and generators are merely price takers, a decentralization framework called “Self-Commitment” can be created from the UC formulation [179]. Inspired by self-commitment, Feizollahi et al. decentralized the SCUC problem relevant to bidding markets, including temporal constraints [180]. They implemented a “Release-and-Fix” process, which consists of three ADMM stages of decomposing the network. The first stage finds a good starting point by solving a relaxed model. The second and third stages are iterative: a feasible binary solution is found, followed by a refinement of the continuous variables. They used two test cases (3012-bus and 3375-bus) and applied different partitions from 20 to 200 sub-networks with different root node (coordinator node) combinations. They also varied the level of constraints in three cases, from no network up to AC network and temporal constraints. A sub-1% gap was achieved in all cases, outperforming the centralized solution in the most complicated cases and showing scalability where the centralized solution was intractable. The scalability saturated, however, at 100 partitions, and one of the key conclusions was that the choice of the root node and partition topologies is crucial to achieving gains.

Multi-Area formulations often involve ED, but rarely UC, as seen in [181]. The UC formulation in this study includes wind generation. The wind uncertainty is incorporated using Adjustable Interval Robust Scheduling of wind power, a novel extension of Interval Optimization Algorithms, a chance-constrained algorithm similar to Robust Optimization. The resulting Mixed-Integer Quadratic Programming (MIQP) model is decentralized using an asynchronous consensus ADMM approach. They verified the solution quality on a three-area six-bus system (achieving a 0.06% gap) and then compared their model against Robust Optimization and Stochastic Optimization models on a three-area system composed of one IEEE-118 bus system each. For a lower CPU time, their model achieved a much higher level of security than the other mentioned models. The study mentions that the parallel procedure took half the time the sequential implementation did, promising scalability. However, no details were given regarding the parallel scheme used and implementation.
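To make the consensus mechanism concrete, the following is a minimal sketch of synchronous consensus ADMM in scaled form on a convex toy problem. It is not the asynchronous MIQP scheme of [181]: it assumes each area has a hypothetical quadratic cost 0.5·a·(x − b)² over a single shared quantity (for example, a tie-line flow), and all names and numbers are illustrative.

```python
def consensus_admm(a, b, rho=1.0, iters=200):
    """Scaled-form consensus ADMM for min sum_i 0.5*a[i]*(x_i - b[i])**2
    subject to x_i = z for every area i.

    Each area updates its local copy x_i on its own (this is the step that
    can run in parallel); only x_i + u_i is sent to the coordinator.
    """
    n = len(a)
    x = [0.0] * n  # local copies of the shared variable
    u = [0.0] * n  # scaled dual variables, one per area
    z = 0.0        # consensus value held by the coordinator
    for _ in range(iters):
        # Local step: closed-form minimizer of the augmented Lagrangian.
        x = [(a[i] * b[i] + rho * (z - u[i])) / (a[i] + rho) for i in range(n)]
        # Coordination step: average the areas' proposals.
        z = sum(x[i] + u[i] for i in range(n)) / n
        # Dual step: accumulate each area's disagreement with the consensus.
        u = [u[i] + x[i] - z for i in range(n)]
    return z, x


if __name__ == "__main__":
    # Two hypothetical areas that prefer different values of the shared flow.
    z, x = consensus_admm(a=[1.0, 3.0], b=[10.0, 2.0])
    print(z, x)
```

For this convex toy, the consensus value approaches the cost-weighted average of the areas' preferred values. With binary commitment variables the problem is no longer convex, and this convergence behavior is not guaranteed.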

Similarly, Ramanan et al. employed an asynchronous ADMM approach to achieve multi-area decentralization slightly differently, as their formulation is not a consensus problem [182]. Here, the algorithm is truly decentralized, as the balance in coupling variables needs only to be achieved between a region and its neighboring one. The solution approach is similar to the one in [172], where UC and ED are solved iteratively. They divided the IEEE 118-bus system into ten regions, and each region (subproblem) was solved by one Intel Xeon 2.8 GHz processor. They ensured asynchronous operation by adding 0.2 s of delay for some subproblems. The mean results of the 50 runs demonstrated the time-saving and scaling potential of the asynchronous approach that was not evident in other similar studies [183]. However, the solution quality varied significantly and deteriorated, with an optimality gap reaching 10% for some runs, and no comparison was drawn against a centralized algorithm.

In later work, the authors improved the asynchronous approach by adding some mechanisms, such as solving local convex relaxations of subproblems while consensus is being established. This allowed the subproblem to move to the next solution phase if the binary solution was found to be consistent over several iterations [184]. In addition, they introduced a globally redundant constraint based on production and demand to improve privacy further. Moreover, they used point-to-point communication without compromising the decentralized structure. They implemented their improved approach on an IEEE 3012-bus system divided into 75, 100, and 120 regions. A 2.8 GHz core was assigned to solve each region and controller subproblem. This time, they compared their approach against Feizollahi’s implementation from 2015 [180] and a centralized approach. The idle time of the synchronous approach was higher than the computation time of the asynchronous approach, doubling the scalability for higher region subdivisions. The gap achieved in all cases was larger than that of the centralized solution by around 1.5%, which is a huge improvement, considering the previous work and the 18× speedup achieved.

Consensus ADMM methods typically do not converge for MILP problems such as UC without a step size diminishing property [185]. Lagrangian methods, in general, are known to suffer from a zigzagging problem. To overcome the issue, the Surrogate Lagrangian Relaxation (SLR) algorithm was used in [186] to create a distributed asynchronous UC. In later work, their approach was compared against a Distributed Synchronous SLR and a sequential SLR [187] using four threads to parallelize the subproblems. With that, better scalability against the synchronous approach was demonstrated, and a significant speedup was achieved (12× speedup to achieve a 0.03% duality gap in one instance).
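The role of a diminishing step size can be seen in a minimal sketch of plain subgradient-based Lagrangian relaxation on a toy single-period UC. This is not the SLR algorithm of [186,187]; the unit data, the demand, and the step rule a/(k+1) are hypothetical choices made for illustration only.

```python
from itertools import product

# Hypothetical toy data: (fixed cost, marginal cost per MW, capacity in MW).
UNITS = [(100.0, 10.0, 50.0), (50.0, 20.0, 40.0), (20.0, 35.0, 30.0)]
DEMAND = 70.0  # MW, single period


def unit_subproblem(unit, lam):
    """Relaxed single-unit problem: min f*u + (c - lam)*p, 0 <= p <= pmax*u."""
    fixed, marginal, pmax = unit
    p = pmax if lam > marginal else 0.0
    value = fixed + (marginal - lam) * p
    return (value, p) if value < 0.0 else (0.0, 0.0)  # otherwise stay off


def dual_value(lam):
    """Dual function q(lam) and a subgradient (demand minus relaxed output)."""
    results = [unit_subproblem(u, lam) for u in UNITS]  # independent solves
    q = lam * DEMAND + sum(v for v, _ in results)
    return q, DEMAND - sum(p for _, p in results)


def subgradient_ascent(iters=200, a=1.0):
    """Maximize q(lam) over lam >= 0 with the diminishing step a / (k + 1)."""
    lam, best, history = 0.0, float("-inf"), []
    for k in range(iters):
        q, g = dual_value(lam)
        best = max(best, q)
        history.append(lam)
        lam = max(0.0, lam + a / (k + 1) * g)
    return best, history


def primal_optimum():
    """Brute-force the true optimum: enumerate commitments, dispatch by merit."""
    best = float("inf")
    for commit in product([0, 1], repeat=len(UNITS)):
        on = sorted((u for u, c in zip(UNITS, commit) if c), key=lambda u: u[1])
        if sum(u[2] for u in on) < DEMAND:
            continue  # committed capacity cannot cover demand
        remaining, cost = DEMAND, sum(u[0] for u in on)
        for _, marginal, pmax in on:
            p = min(pmax, remaining)
            cost += marginal * p
            remaining -= p
        best = min(best, cost)
    return best


if __name__ == "__main__":
    bound, lams = subgradient_ascent()
    print(bound, primal_optimum(), lams[:10])
```

On this toy instance the multiplier overshoots and then oscillates around the price at which the second unit switches on, with the oscillation shrinking only because the step size shrinks. The best dual bound also stays below the brute-forced primal optimum, which is the duality gap expected for a mixed-integer problem.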

To avoid the same zigzagging issue, but for Multi-Area SCUC, Kargarian et al. opted for Analytical Target Cascading (ATC) since multiple options exist for choosing the penalty function in ATC [188]. They take the model from [189] and apply ATC from [190] to decompose the problem into a distributed bi-level formulation, with a central coordinator being the leader and subproblems followers. In this work, they switched the hierarchy by putting the coordinator first, making it the follower instead of the leader. This convexified the followers’ problem, allowing the use of KKT conditions, turning it into a Mixed Complementarity Problem (MCP). Those steps turned the formulation into a decentralized one, as only neighboring subproblems became coupled. They numerically demonstrated that with their reformulation, the convergence properties of ATC were still upheld virtually and that the convex quadratic penalty functions act as local convexifiers of the subproblems. Moreover, they demonstrated numerically how the decentralized algorithm is less vulnerable to cyber attacks. Unfortunately, the approach was not implemented practically in parallel; rather, the parallel solution time was estimated based on the sequential execution of the longest subproblem.

In a similar work tackling Multi-Area SCUC, a variation of ATC is used [191] in which the master problem determines the daily transmission plan, and each area becomes an isolated SCUC subproblem. This problem is much more complicated, as it involves AC power flow equations, HVDC tie-lines, and wind generation. The power injection of the tie-lines is treated as a pseudo generator with generation constraints that encapsulate the line flow constraints. This approach removes the need for consistency constraints used in traditional ATC-based distributed SCUC, such as the ones used in [190]. In the case study, they subdivided several systems into three-area networks and split their work into three threads. Comparisons were drawn against a centralized implementation, the traditional distributed form, and four different tie-line modes of operation with varying load and wind generation. Their approach consistently converged in less time than the traditional ATC algorithm. It slightly surpassed the centralized formulation on the largest network, a 354-bus system, which suggests the approach has the potential for scalability.

Finding the solution of a UC formulation that involves the transmission network, active distribution network, microgrids, and DER is quite a leap in total network coordination. This challenge was taken up by [161] in a multi-level interactive UC (MLI-UC). The objective function of this problem contained three parts: the cost of UC at the transmission level, the cost of dispatch at the distribution level, and the cost of dispatch at the microgrid level. The three levels’ network constraints were decomposed using the ATC algorithm, turning it into a multi-level problem. A few reasonable assumptions were made to aid in the tractability of the problem. The scheme creates a fine-grained structure at the microgrid level and a coarser structure at the distribution level, both of which were parallelized. The distribution of calculation and information exchange between the three levels provides more information regarding costs at each level and the Distribution Locational Marginal Pricing (DLMP).

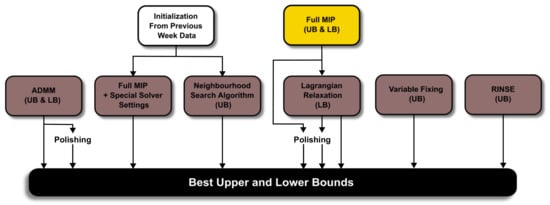

Most of the previous work in power system problems, apart from UC, focuses on the solution process rather than the database operations involved. In [192], a parallel SCUC formulation for hydrothermal power systems is proposed, incorporating pumped hydro. This paper uses graph computing technologies and graph databases (NoSQL) rather than relational databases to parallelize the formulation of their MIP framework. Their framework involves Convex Hull Reformulation, a Special Ordered Set method to reduce the number of variables of the model, constraint relaxation techniques [193], and LU decomposition. The graph-based approach showed a significant speedup over a conventional MIP solution method on a TigerGraph v2.51 database. Similar applications of NoSQL were explored in other power system studies [194,195,196].