Data-Free and Data-Efficient Physics-Informed Neural Network Approaches to Solve the Buckley–Leverett Problem

Abstract

1. Introduction

2. Mathematical Formulations

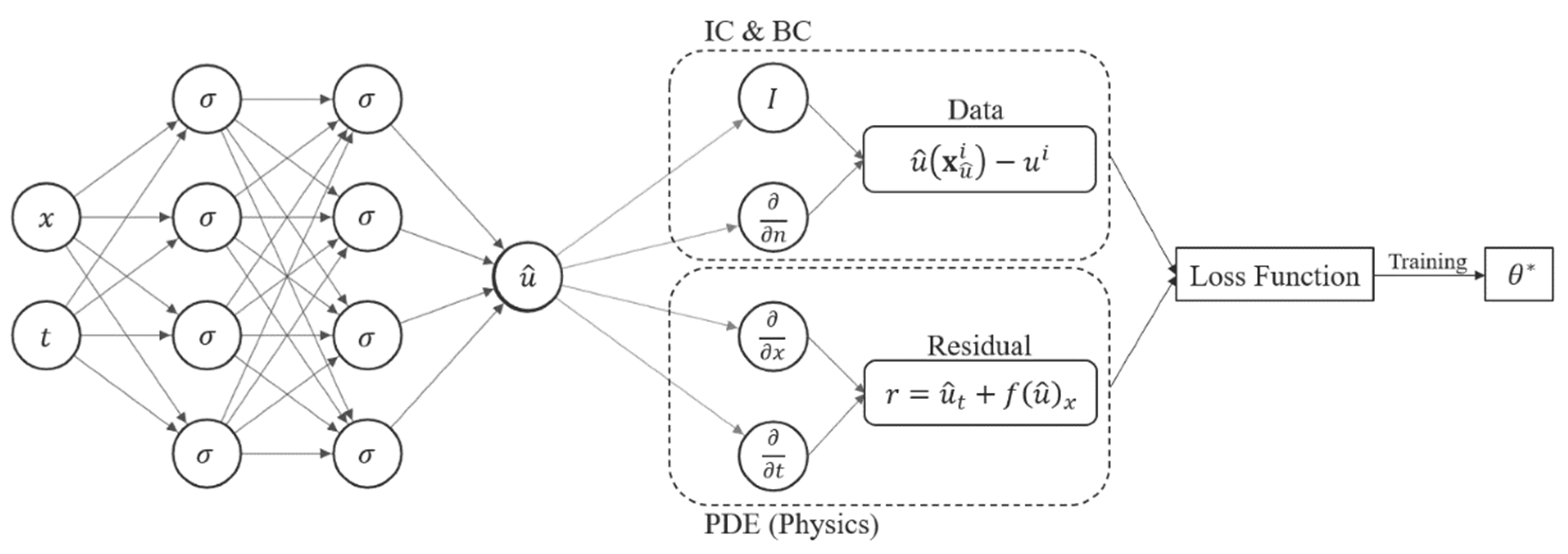
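The one-dimensional Buckley–Leverett problem ∂S/∂t + ∂f(S)/∂x = 0 has a classical self-similar solution when water is injected at x = 0 (S = 1) into a domain initially at S = 0. That solution is a rarefaction behind a shock, and the shock saturation S* follows from Welge's tangent construction (Welge, 1952): f'(S*) = f(S*)/S*. It is the usual reference against which PINN solutions of this problem are judged.

The Python sketch below computes that solution. It assumes quadratic Corey relative permeabilities with zero residual saturations, so f(S) = S²/(S² + (1 − S)²/M) with M = μo/μw, together with dimensionless x and t. These choices and the function names are illustrative assumptions, not necessarily the paper's exact setup.

```python
import math


def frac_flow(s: float, m: float) -> float:
    """Water fractional flow f(S) = S^2 / (S^2 + (1 - S)^2 / M), M = mu_o / mu_w.
    Assumes Corey exponent 2 and zero residual saturations (illustrative choice)."""
    a = s * s
    b = (1.0 - s) ** 2 / m
    return a / (a + b)


def frac_flow_deriv(s: float, m: float) -> float:
    """Analytic derivative f'(S) = 2 S (1 - S) / (M (S^2 + (1 - S)^2 / M)^2)."""
    denom = s * s + (1.0 - s) ** 2 / m
    return 2.0 * s * (1.0 - s) / (m * denom * denom)


def shock_saturation(m: float, iters: int = 100) -> float:
    """Welge tangent point S* with f'(S*) = f(S*) / S* (initial saturation 0)."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        # g(S) = S f'(S) - f(S) is positive left of S* and negative right of it.
        if mid * frac_flow_deriv(mid, m) - frac_flow(mid, m) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def saturation(x: float, t: float, m: float, iters: int = 100) -> float:
    """Self-similar solution S(x, t) for S(x, 0) = 0 and S(0, t) = 1, with x >= 0, t > 0."""
    s_star = shock_saturation(m)
    xi = x / t
    if xi > frac_flow_deriv(s_star, m):
        return 0.0  # ahead of the shock front: initial saturation
    # Rarefaction behind the shock: f' decreases on [S*, 1], so invert f'(S) = xi.
    lo, hi = s_star, 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if frac_flow_deriv(mid, m) > xi:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    print(shock_saturation(1.0))  # approximately 1 / sqrt(2)
    print([round(saturation(x, 1.0, 1.0), 4) for x in (0.0, 0.5, 1.0, 1.3)])
```

For M = 1 the tangent condition reduces to 2S² = 1, so `shock_saturation(1.0)` is about 0.7071 (1/√2) and the front travels at (1 + √2)/2 ≈ 1.207. Bisection is used instead of Newton's method because the tangent residual S f'(S) − f(S) changes sign exactly once on (0, 1], so no initial guess is needed.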

3. Physics-Informed Neural Networks (PINNs)
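A PINN enforces the governing equation by penalising its residual at collocation points scattered over the space-time domain. Latin hypercube sampling (McKay, Beckman and Conover) is one common way to spread those points evenly. The sketch below is a minimal standard-library version. The function name `latin_hypercube`, the box bounds and the `seed` argument are hypothetical illustrations, not the authors' sampling code.

```python
import random


def latin_hypercube(n, bounds, seed=None):
    """Draw n points in a box given as [(lo, hi), ...], one (lo, hi) pair per dimension.

    Each dimension is split into n equal strata and receives exactly one point
    per stratum; strata are paired at random across dimensions by shuffling.
    """
    rng = random.Random(seed)
    columns = []
    for lo, hi in bounds:
        width = (hi - lo) / n
        # One uniform draw inside each stratum k, i.e. in [lo + k*width, lo + (k+1)*width).
        col = [lo + (k + rng.random()) * width for k in range(n)]
        rng.shuffle(col)  # decouple the stratum order from the other dimensions
        columns.append(col)
    return list(zip(*columns))


if __name__ == "__main__":
    # Hypothetical (x, t) collocation points on the unit square.
    pts = latin_hypercube(1000, [(0.0, 1.0), (0.0, 1.0)], seed=0)
    print(len(pts), pts[0])
```

Stratifying each axis guarantees that every slice of x and of t receives exactly one point, which plain uniform sampling does not; the random pairing keeps the joint (x, t) pattern unstructured.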

4. Neural Network Architectures

4.1. Multilayer Perceptron NN Architecture

4.2. Attention-Based NN Architecture

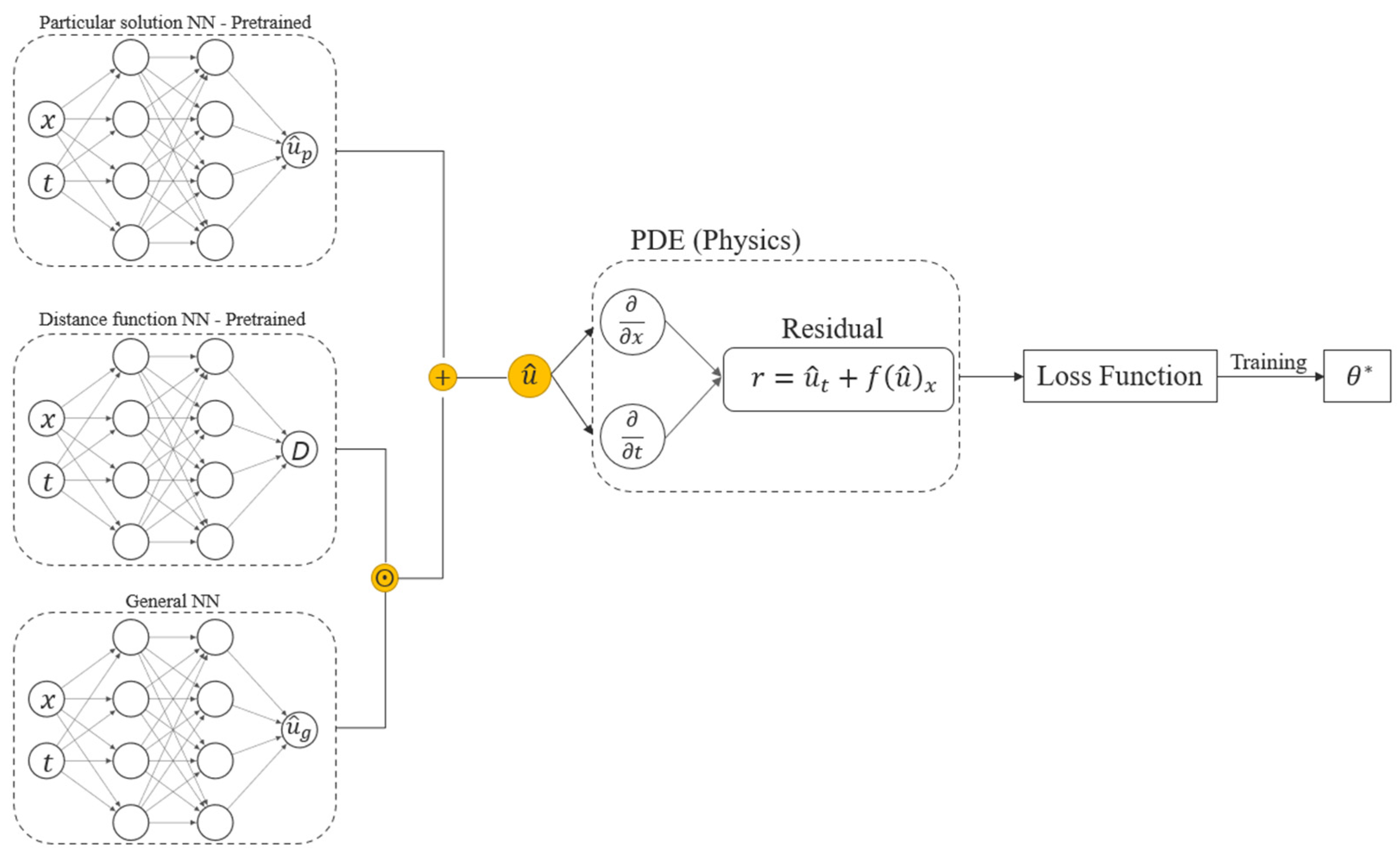

4.3. Data-Free Composite NN Model

5. Results

5.1. Solution of the Buckley–Leverett Problem Using PINNs

5.1.1. MLP Model—Buckley–Leverett

5.1.2. Attention-Based Model—Buckley–Leverett

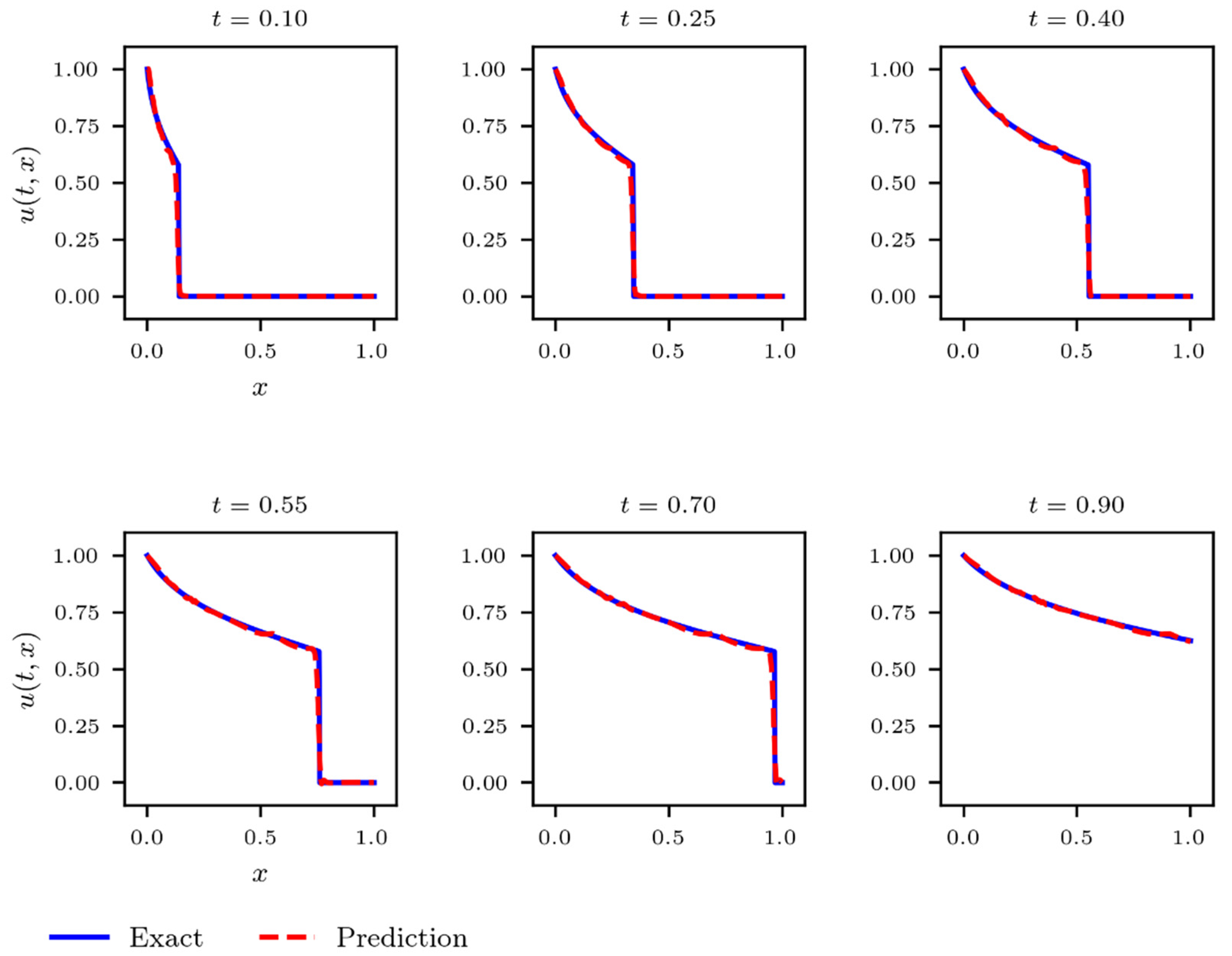

5.2. Data-Free Composite NN Model Solution of the Buckley–Leverett Problem

6. Conclusions and Remarks

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model | # of Layers | | | Error |
|---|---|---|---|---|
| MLP | 8 | 10,000 | 300 | |
| Attention | 6 | 2,000 | 250 | |
| Data-free | 4 | 90,000 | 0 | |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Diab, W.; Chaabi, O.; Zhang, W.; Arif, M.; Alkobaisi, S.; Al Kobaisi, M. Data-Free and Data-Efficient Physics-Informed Neural Network Approaches to Solve the Buckley–Leverett Problem. Energies 2022, 15, 7864. https://doi.org/10.3390/en15217864
