A Multi-Agent Reinforcement Learning Framework for Lithium-ion Battery Scheduling Problems

Abstract

1. Introduction

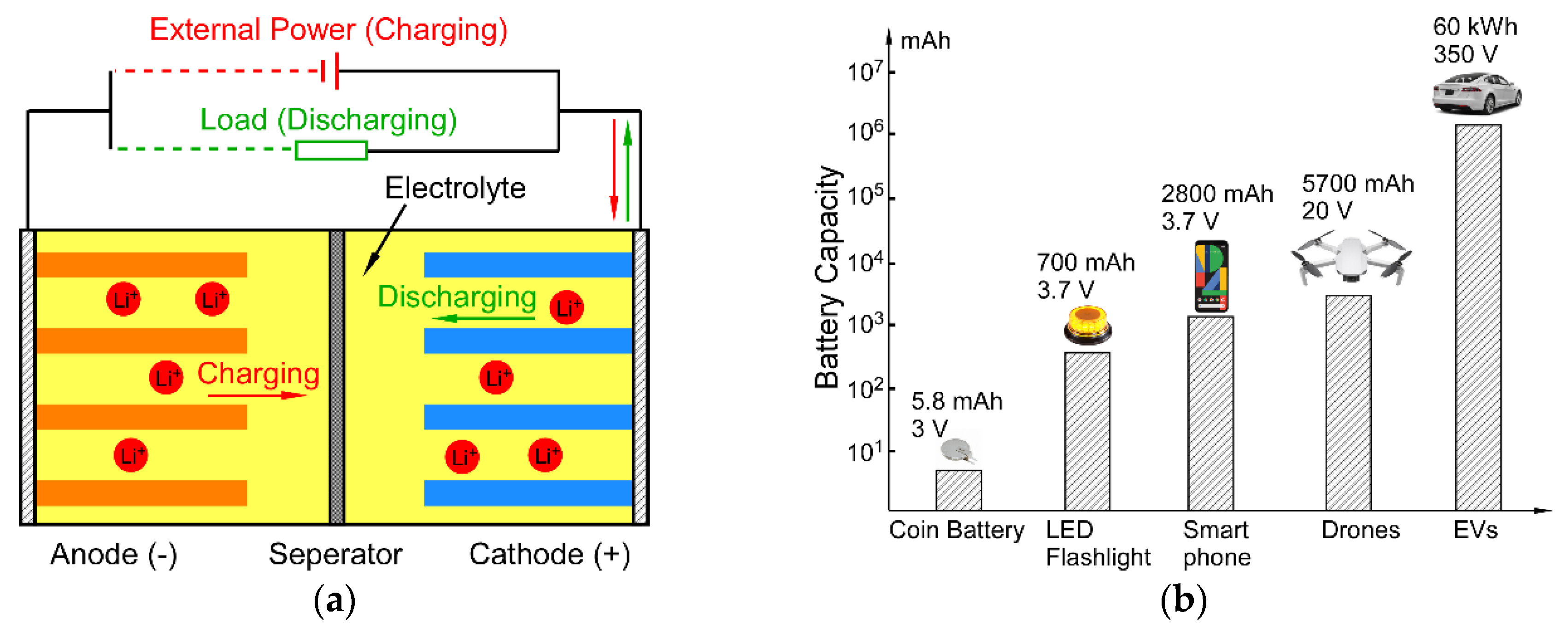

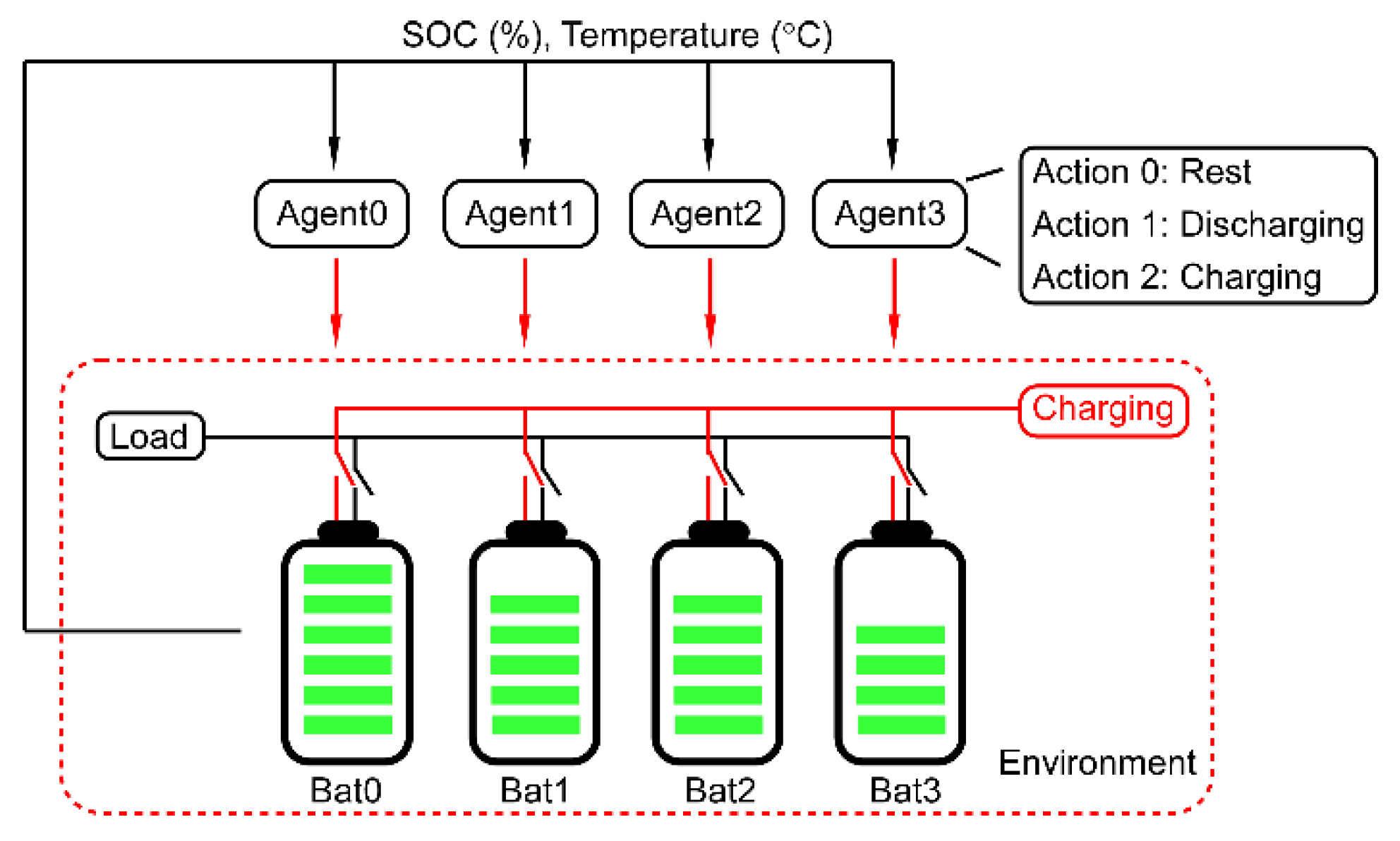

2. Battery Model

3. Reinforcement Learning Algorithm

4. Experiment

4.1. Electrical Only

4.2. Thermal Effect

4.3. Imbalanced Battery

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Parameter | Value |
|---|---|
| Base model | Murata VTC6 18650 |
| Electrical Parameters | |
| Capacity | 3000 mAh |
| Nominal voltage | 3.7 V |
| Capacity ratio c | 0.5 |
| Fractional order α | 0.9 or 0.99 |
| Recovery flow rate | 0.001 |
| Discharge current | 4 A |
| Charge current | 0.3 A |
| Step time duration | 1 min |
| Physical & Thermal Parameters | |
| Battery dimension | 18 mm Ø × 65 mm |
| Battery mass | ~45 g |
| Max operating temp. | 60 °C |
| Battery specific heat capacity | ~0.96 ± 0.02 J/(g·K) |
| Internal resistance | 0.1 Ω |
| Entropy change ΔS | −30 J/(mol·K) |
| Heat transfer coeff. h | 13 W·m⁻²·K⁻¹ |
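
To give a feel for the magnitudes these parameters imply, the following is a minimal back-of-envelope sketch rather than the thermal model used in this work. It assumes a lumped (single-temperature) cell, Joule heating only (the entropic term from ΔS is ignored), and that the full cylinder surface is exposed to convection. The function names are hypothetical and chosen for illustration.

```python
import math

# Values copied from the parameter table; everything else is an assumption.
MASS_G = 45.0          # battery mass, g (approximate)
SPECIFIC_HEAT = 0.96   # specific heat capacity, J/(g*K)
R_INTERNAL = 0.1       # internal resistance, ohm
I_DISCHARGE = 4.0      # discharge current, A
STEP_S = 60.0          # step time duration (1 min), s
DIAMETER_M = 0.018     # 18 mm
LENGTH_M = 0.065       # 65 mm
H_CONV = 13.0          # heat transfer coefficient, W/(m^2*K)


def thermal_mass():
    """Lumped heat capacity of the cell in J/K."""
    return MASS_G * SPECIFIC_HEAT


def joule_power(current, resistance=R_INTERNAL):
    """Ohmic heat generation I^2 * R in W (entropic heat ignored)."""
    return current ** 2 * resistance


def cylinder_area(diameter=DIAMETER_M, length=LENGTH_M):
    """Side wall plus both end caps, in m^2 (assumes all of it is exposed)."""
    radius = diameter / 2.0
    return math.pi * diameter * length + 2.0 * math.pi * radius ** 2


def adiabatic_rise_per_step(current, step_s=STEP_S):
    """Temperature rise in K over one step if no heat left the cell."""
    return joule_power(current) * step_s / thermal_mass()


def convective_conductance():
    """h * A in W/K, the heat removed per kelvin above ambient."""
    return H_CONV * cylinder_area()


if __name__ == "__main__":
    print(f"thermal mass       : {thermal_mass():.1f} J/K")
    print(f"Joule power at 4 A : {joule_power(I_DISCHARGE):.2f} W")
    print(f"adiabatic rise/step: {adiabatic_rise_per_step(I_DISCHARGE):.2f} K")
    print(f"h*A                : {convective_conductance():.4f} W/K")
```

Under these assumptions the cell has a thermal mass of about 43.2 J/K and dissipates about 1.6 W at the 4 A discharge current, which corresponds to roughly 2.2 K of heating per one-minute step before any cooling is accounted for.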

| Experiment | Sequential | RR | 2RR | All-Way (4RR) | RL Agent |
|---|---|---|---|---|---|
| Discharge only (α = 0.99) | 84 | 92 | 92 | 92 | 92 |
| Discharge only (α = 0.9) | 112 | 124 | 136 | 145 | 145 |
| Charge enabled (α = 0.99) | 84 | 116 | 108 | 92 | 116 |
| Charge enabled (α = 0.9) | 112 | 152 | 154 | 145 | 154 |
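
The comparison in this table can be summarized with a short script. The sketch below copies the tabulated values and computes each policy's relative gain over the Sequential baseline, along with the top-scoring policies per experiment. It assumes that a higher score is better; the helper names `relative_gain` and `best_policies` are hypothetical.

```python
# Scores copied from the results table (unit as reported there).
RESULTS = {
    "Discharge only (alpha = 0.99)": {
        "Sequential": 84, "RR": 92, "2RR": 92, "All-Way (4RR)": 92, "RL Agent": 92,
    },
    "Discharge only (alpha = 0.9)": {
        "Sequential": 112, "RR": 124, "2RR": 136, "All-Way (4RR)": 145, "RL Agent": 145,
    },
    "Charge enabled (alpha = 0.99)": {
        "Sequential": 84, "RR": 116, "2RR": 108, "All-Way (4RR)": 92, "RL Agent": 116,
    },
    "Charge enabled (alpha = 0.9)": {
        "Sequential": 112, "RR": 152, "2RR": 154, "All-Way (4RR)": 145, "RL Agent": 154,
    },
}


def relative_gain(row, policy, baseline="Sequential"):
    """Fractional improvement of `policy` over `baseline` within one experiment."""
    return (row[policy] - row[baseline]) / row[baseline]


def best_policies(row):
    """All policies that tie for the highest score (assumes higher is better)."""
    top = max(row.values())
    return [name for name, score in row.items() if score == top]


if __name__ == "__main__":
    for experiment, row in RESULTS.items():
        gain = relative_gain(row, "RL Agent")
        winners = ", ".join(best_policies(row))
        print(f"{experiment}: RL Agent {gain:+.1%} vs Sequential; best: {winners}")
```

On these numbers the RL agent ties for the best score in all four experiments, while no single fixed policy does: 4RR matches it only in the discharge-only cases, RR only when α = 0.99, and 2RR only in the charge-enabled α = 0.9 case and the discharge-only α = 0.99 case.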

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sui, Y.; Song, S. A Multi-Agent Reinforcement Learning Framework for Lithium-ion Battery Scheduling Problems. Energies 2020, 13, 1982. https://doi.org/10.3390/en13081982
