Temperature Measurement Method of Flame Image Fusion with Different Exposures

Abstract

1. Introduction

2. Principle and Methods

2.1. Two-Color Pyrometry

2.2. Fusion Method

3. Experimental Setup

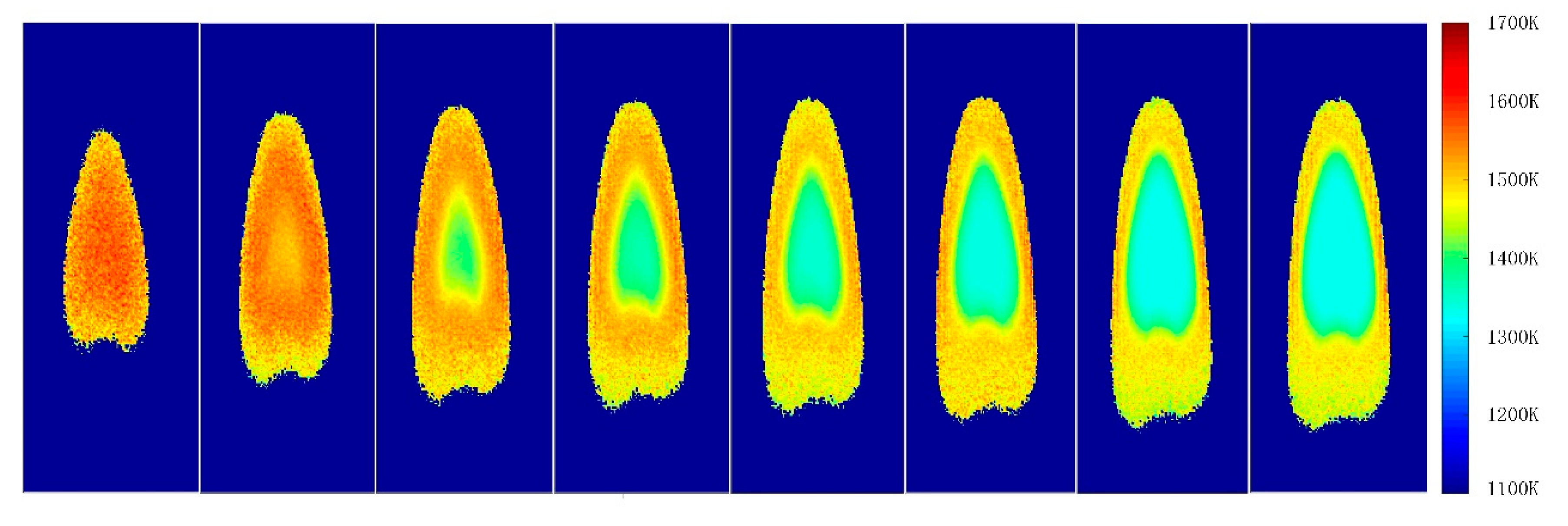

4. Results and Discussion

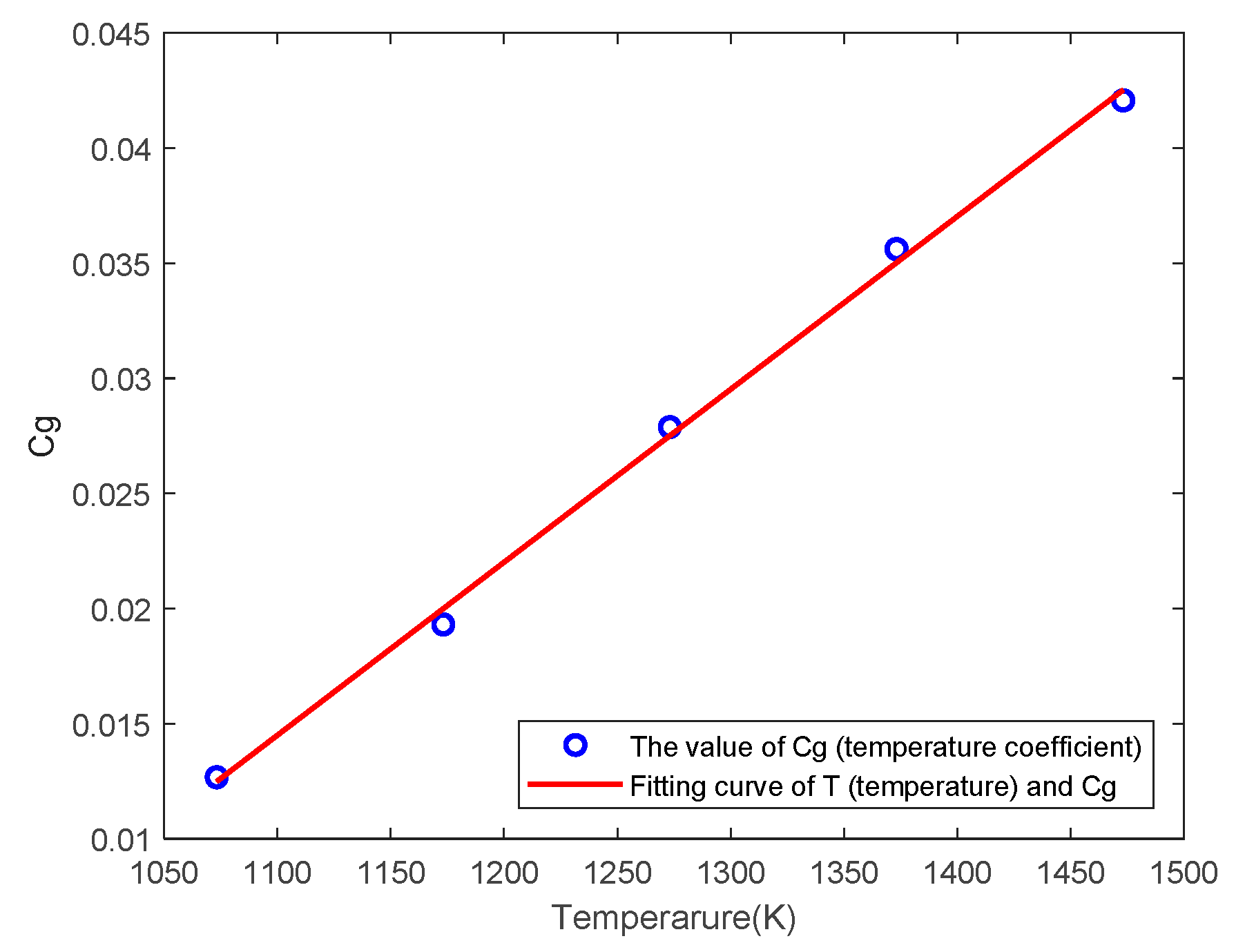

4.1. Temperature Measurement Coefficient Calibration

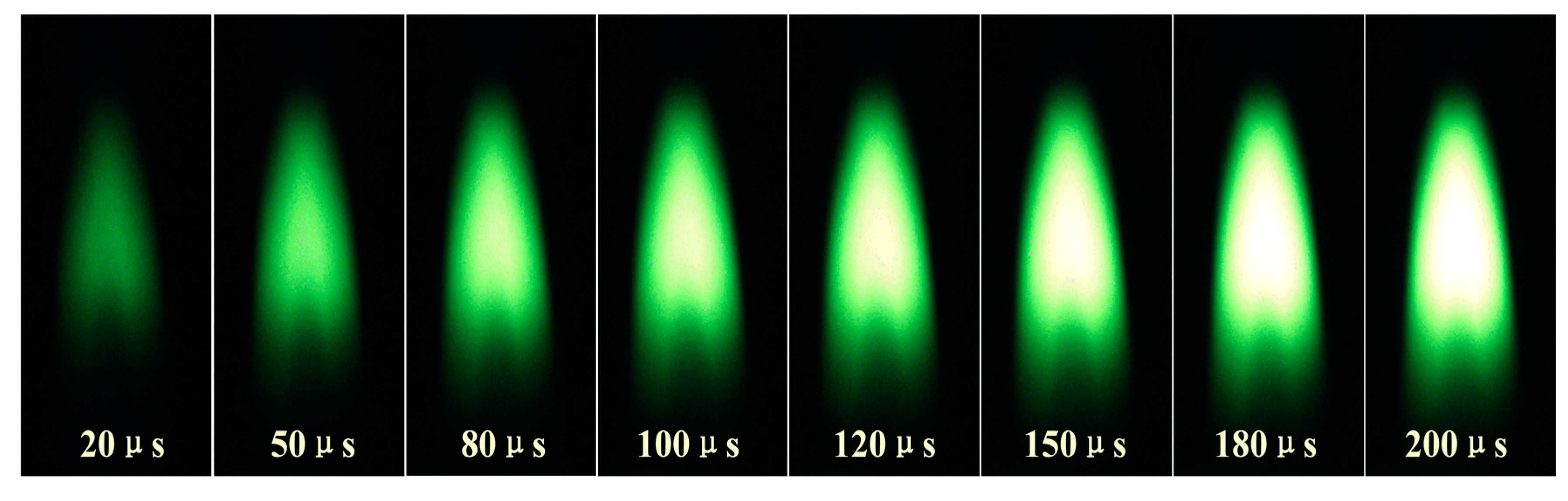

4.2. Image Fusion of Multi-Exposure Times

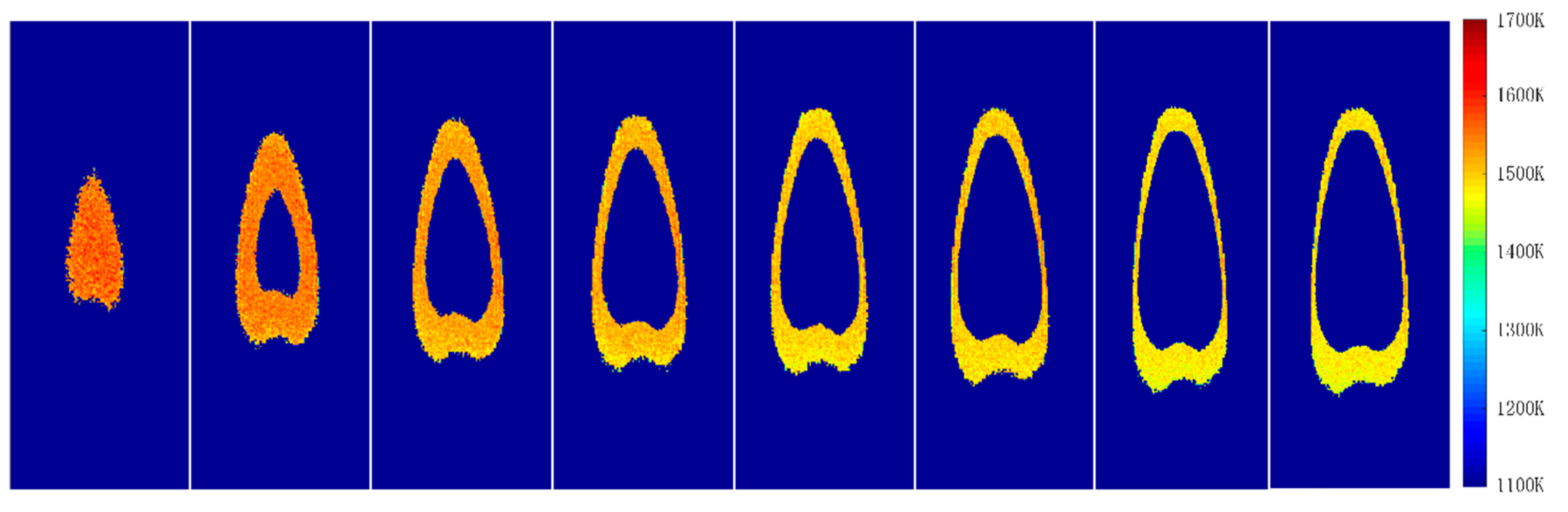

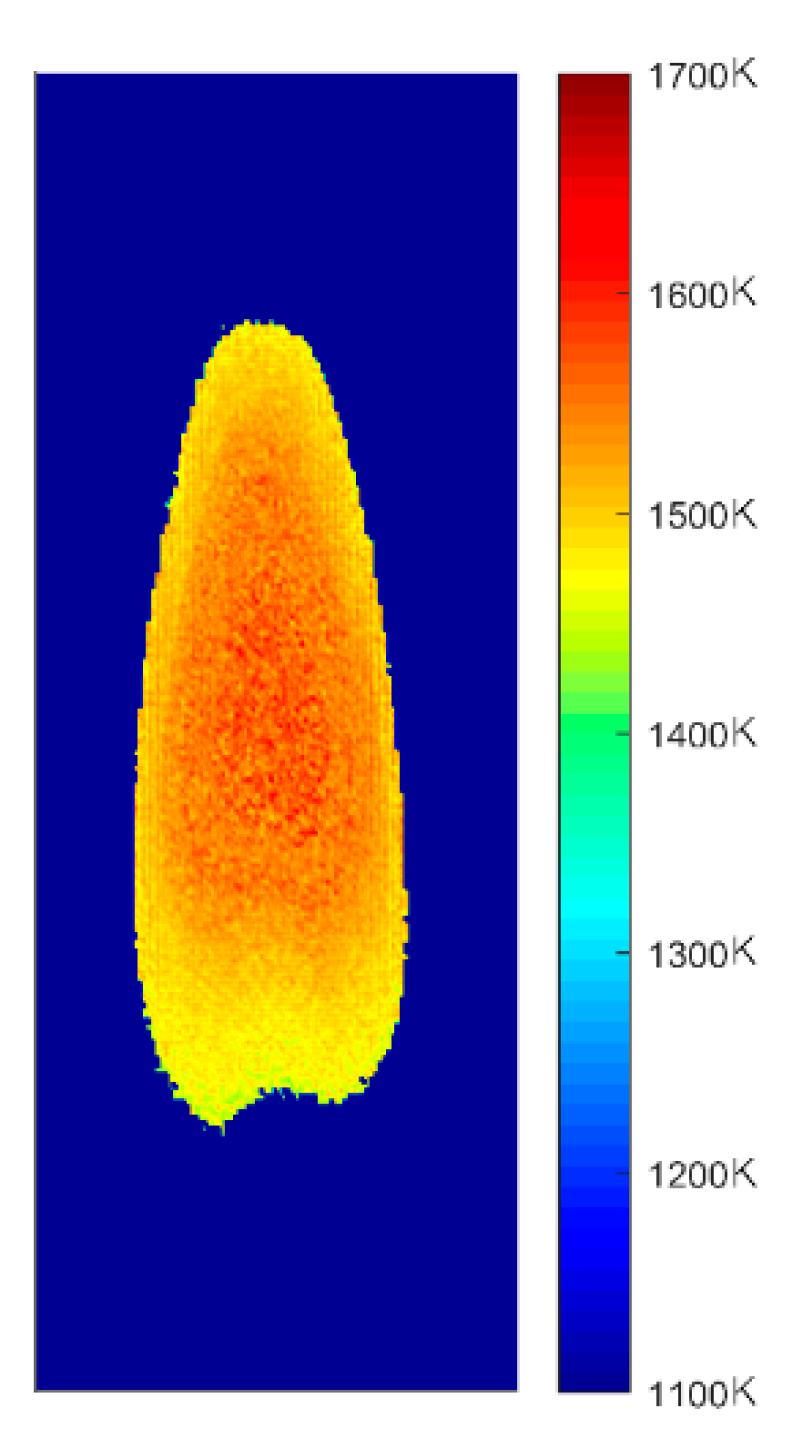

5. Conclusions

- Based on our previous experimental research and the literature, the optimal pixel gray range was determined to be 50 to 220, which ensures the accuracy of two-color pyrometry.

- From the relationship between exposure time and radiation intensity (image pixel gray value) at different temperatures, the appropriate exposure intervals for different flame temperatures were determined, which ensures that the selected areas of the flame images are neither overexposed nor underexposed.

- Flame images with different exposures were collected, and the effective measurement areas were extracted by threshold segmentation. Image fusion was then performed to obtain the complete two-dimensional flame temperature distribution.

- The method was shown to be effective for measuring two-dimensional flame temperature distributions, which provides a foundation for applying it to accurate three-dimensional flame temperature field reconstruction.
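The segmentation and fusion steps summarized above can be illustrated with a minimal Python sketch. It assumes each exposure already has a per-pixel temperature map from two-color pyrometry, and that a single gray image per exposure stands in for the color channels. The function names `valid_mask` and `fuse_temperatures` are hypothetical, and averaging the overlapping valid pixels is an assumption; the fusion rule actually used is not restated in this section.

```python
GRAY_MIN, GRAY_MAX = 50, 220  # optimal gray range stated in the conclusions


def valid_mask(gray):
    """Threshold segmentation: True where a pixel lies inside the usable gray range."""
    return [[GRAY_MIN <= g <= GRAY_MAX for g in row] for row in gray]


def fuse_temperatures(gray_images, temp_maps):
    """Fuse per-exposure temperature maps into one map.

    For every pixel, average the temperatures from the exposures whose gray
    value is valid there (averaging is an assumption made for this sketch).
    Pixels with no valid exposure stay None.
    """
    rows, cols = len(gray_images[0]), len(gray_images[0][0])
    masks = [valid_mask(g) for g in gray_images]
    fused = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            vals = [t[r][c] for m, t in zip(masks, temp_maps) if m[r][c]]
            if vals:
                fused[r][c] = sum(vals) / len(vals)
    return fused


# Toy 1x3 example with a short and a long exposure (made-up numbers).
short_gray = [[30, 120, 200]]
long_gray = [[90, 210, 255]]
short_temp = [[0.0, 1400.0, 1500.0]]  # temperatures in K; 0.0 marks an unusable pixel
long_temp = [[1250.0, 1420.0, 0.0]]

fused = fuse_temperatures([short_gray, long_gray], [short_temp, long_temp])
print(fused)  # [[1250.0, 1410.0, 1500.0]]
```

In the toy example the dark pixel is taken from the long exposure, the saturated pixel from the short exposure, and the pixel that is valid in both is averaged.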

Author Contributions

Funding

Conflicts of Interest

| Temperature (K) | Exposure Range (μs) |
|---|---|
| 1073.15 | (6234, 27421) |
| 1173.15 | (1260, 6317) |
| 1273.15 | (251, 1354) |
| 1373.15 | (29, 245) |
| 1473.15 | (20, 55) |
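As a rough illustration of how the tabulated ranges might be used, the sketch below looks up which calibrated temperatures a given exposure time can serve. The helper name `temperatures_covered` is hypothetical, and treating the tabulated bounds as open intervals is an assumption based on the parentheses in the table.

```python
# Exposure ranges in microseconds, copied from the table above.
EXPOSURE_RANGES_US = {
    1073.15: (6234, 27421),
    1173.15: (1260, 6317),
    1273.15: (251, 1354),
    1373.15: (29, 245),
    1473.15: (20, 55),
}


def temperatures_covered(exposure_us):
    """Return the tabulated temperatures (K) whose exposure range contains exposure_us.

    Bounds are treated as exclusive (an assumption about the table's notation).
    """
    return [
        temp
        for temp, (low, high) in sorted(EXPOSURE_RANGES_US.items())
        if low < exposure_us < high
    ]


print(temperatures_covered(1300))  # [1173.15, 1273.15]
print(temperatures_covered(40))    # [1373.15, 1473.15]
print(temperatures_covered(248))   # []
```

Neighboring ranges mostly overlap, so one exposure can serve two adjacent temperatures. The exception is the gap between 245 μs and 251 μs, where no tabulated temperature is covered.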

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shan, L.; Huang, H.; Hong, B.; Zhao, J.; Wang, D.; Kong, M. Temperature Measurement Method of Flame Image Fusion with Different Exposures. Energies 2020, 13, 1487. https://doi.org/10.3390/en13061487