Time Series Forecasting with Multi-Headed Attention-Based Deep Learning for Residential Energy Consumption

Abstract

1. Introduction

- Multi-headed attention effectively models the short-term patterns of time-series data, yielding the most accurate energy demand predictor among the compared deep learning models.

- The class activation map (CAM) visualizes which portions of the input time series the proposed method relies on when forecasting energy demand.
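
A class activation map, as introduced by Zhou et al. [40], weights each channel of the last convolutional feature map by the output layer's weight for that channel and sums over channels. The sketch below adapts this idea to a 1D time series with a single regression output. It assumes the feature maps feed global average pooling and a linear output unit, which the architecture table does not list, so the authors' own procedure may differ. The min-max scaling is a hypothetical presentation choice.

```python
import numpy as np


def class_activation_map_1d(feature_maps: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Class activation map for a 1D feature map and a single output unit.

    feature_maps: shape (T, K), activations of the last convolutional layer
                  over T time steps and K channels.
    weights:      shape (K,), output-layer weight for each channel
                  (assumes global average pooling feeds a linear output).
    Returns a (T,) array scaled to [0, 1] for plotting.
    """
    # Weighted sum over channels gives one relevance score per time step.
    cam = feature_maps @ weights
    # Min-max scaling (a presentation choice, not part of the CAM definition).
    cam = cam - cam.min()
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy example: 3 time steps, 2 channels (hypothetical values).
maps = np.array([[1.0, 0.0],
                 [0.0, 1.0],
                 [2.0, 1.0]])
w = np.array([0.5, 1.0])
print(class_activation_map_1d(maps, w))  # the third time step contributes most
```

Plotting the returned array under the input series highlights the time steps that push the forecast up the most.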

2. Related Works

3. Convolutional Recurrent Neural Network with Multi-Headed Attention

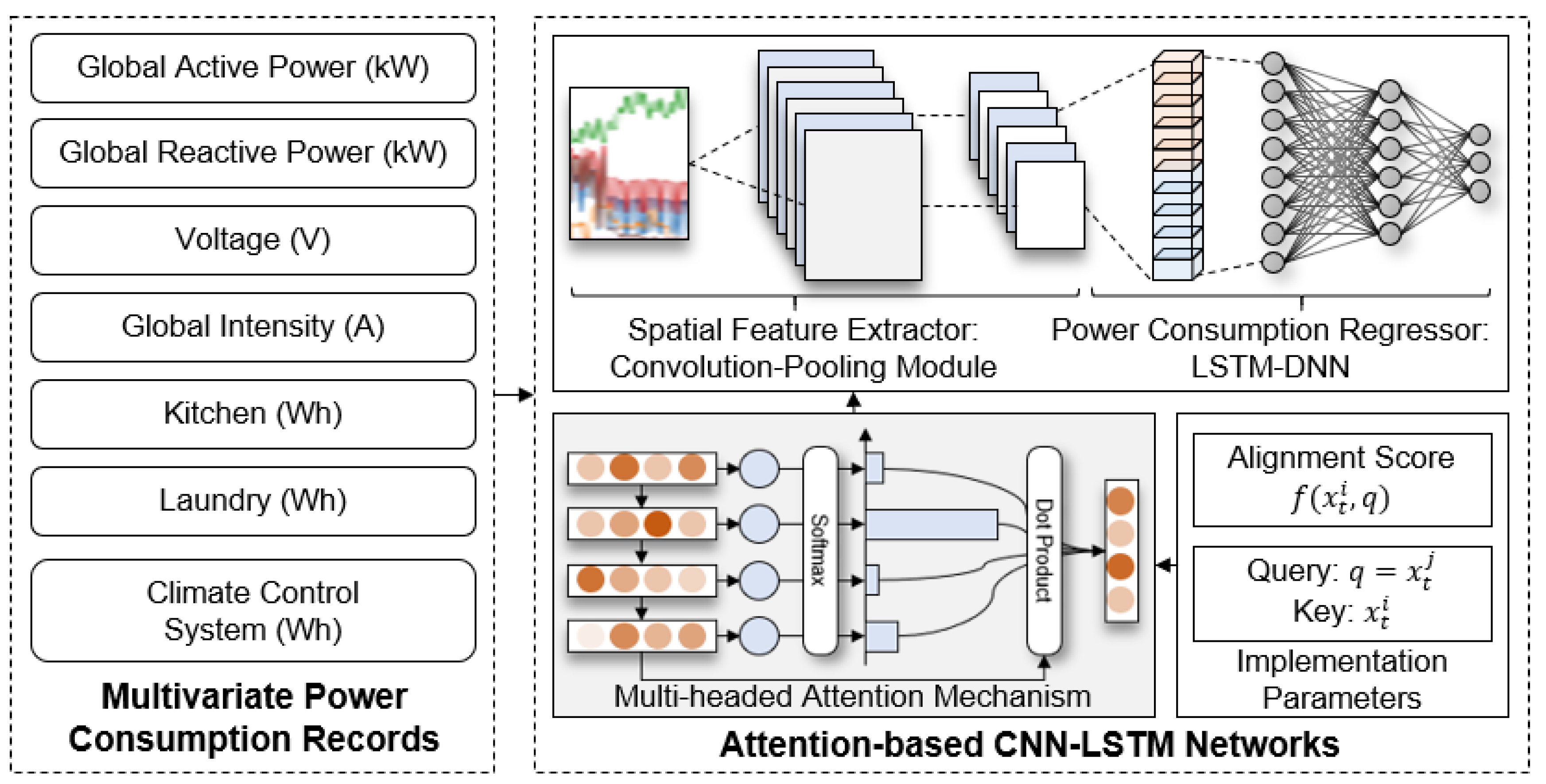

3.1. Structure Overview

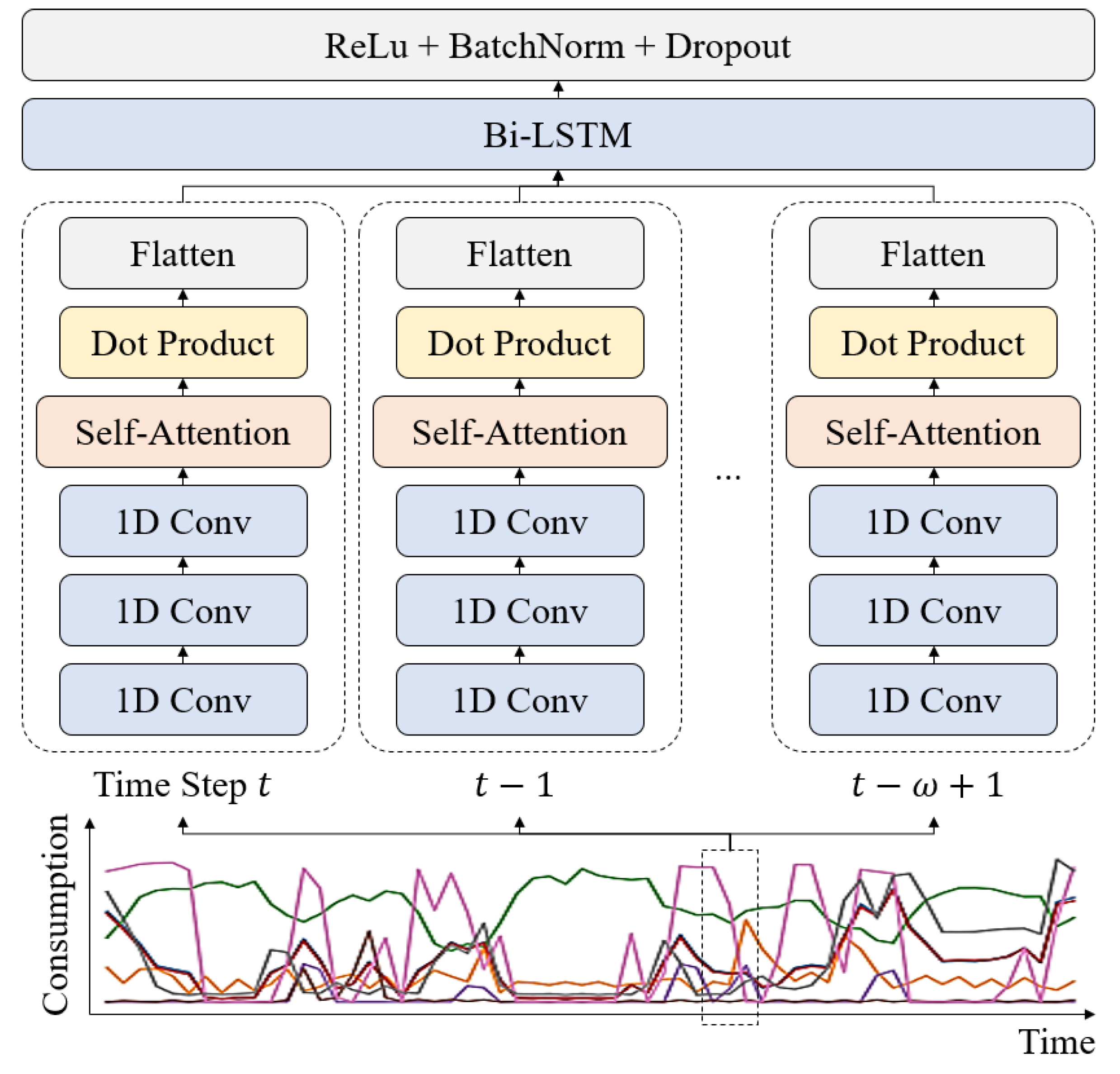

3.2. Convolutional Recurrent Neural Networks

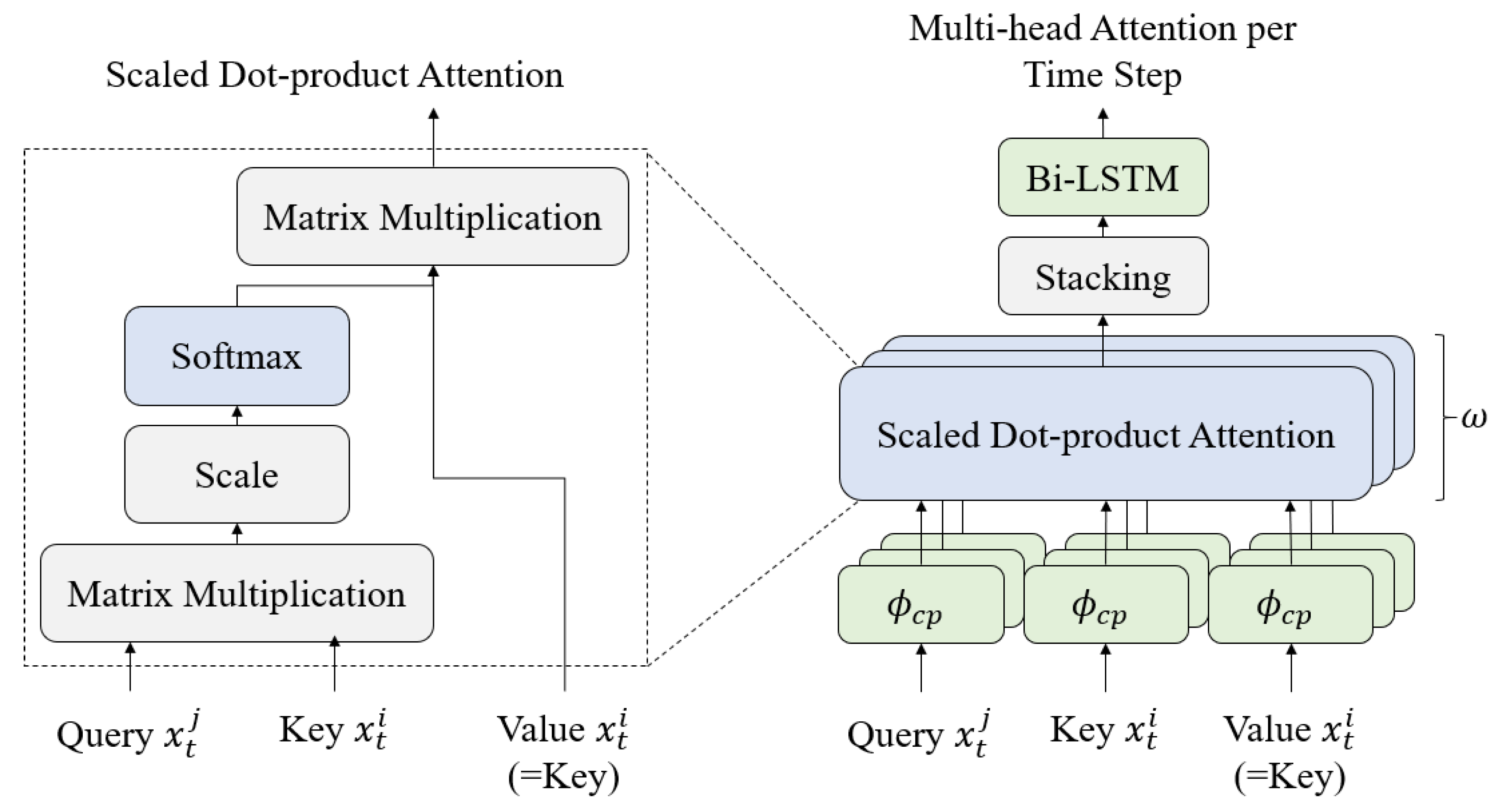
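
The TimeDistributed(Conv1D) layers in the architecture table imply that each input window is split into subsequences: the same convolutional filters run over every subsequence, and the LSTM layers then read the subsequences in order. The 192 parameters of the first Conv1D (64 filters × kernel 2 × 1 channel + 64 biases) are consistent with a single input channel. The minimal NumPy sketch below shows one way to build such inputs; `make_subsequence_windows`, the subsequence count and length, and the one-step-ahead target are hypothetical choices, not the paper's settings.

```python
import numpy as np


def make_subsequence_windows(series, n_subseq, subseq_len):
    """Turn a univariate series into inputs for a TimeDistributed(Conv1D) -> LSTM model.

    Each sample holds n_subseq * subseq_len consecutive readings, reshaped to
    (n_subseq, subseq_len, 1) so the same Conv1D filters run over every
    subsequence and the LSTM then reads the subsequences in order.
    The target is the single reading that follows the window (one-step-ahead).
    """
    series = np.asarray(series, dtype=float)
    window = n_subseq * subseq_len
    n_samples = len(series) - window
    if n_samples <= 0:
        raise ValueError("series must be longer than one window")
    # Sliding windows with stride 1 over the series.
    X = np.stack([series[i:i + window] for i in range(n_samples)])
    y = series[window:]  # y[i] is the reading right after window X[i]
    return X.reshape(n_samples, n_subseq, subseq_len, 1), y


# Hypothetical settings: 2 subsequences of 3 readings each.
X, y = make_subsequence_windows(np.arange(10), n_subseq=2, subseq_len=3)
print(X.shape, y.shape)  # (4, 2, 3, 1) (4,)
```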

3.3. Multi-Headed Attention

4. Experimental Results

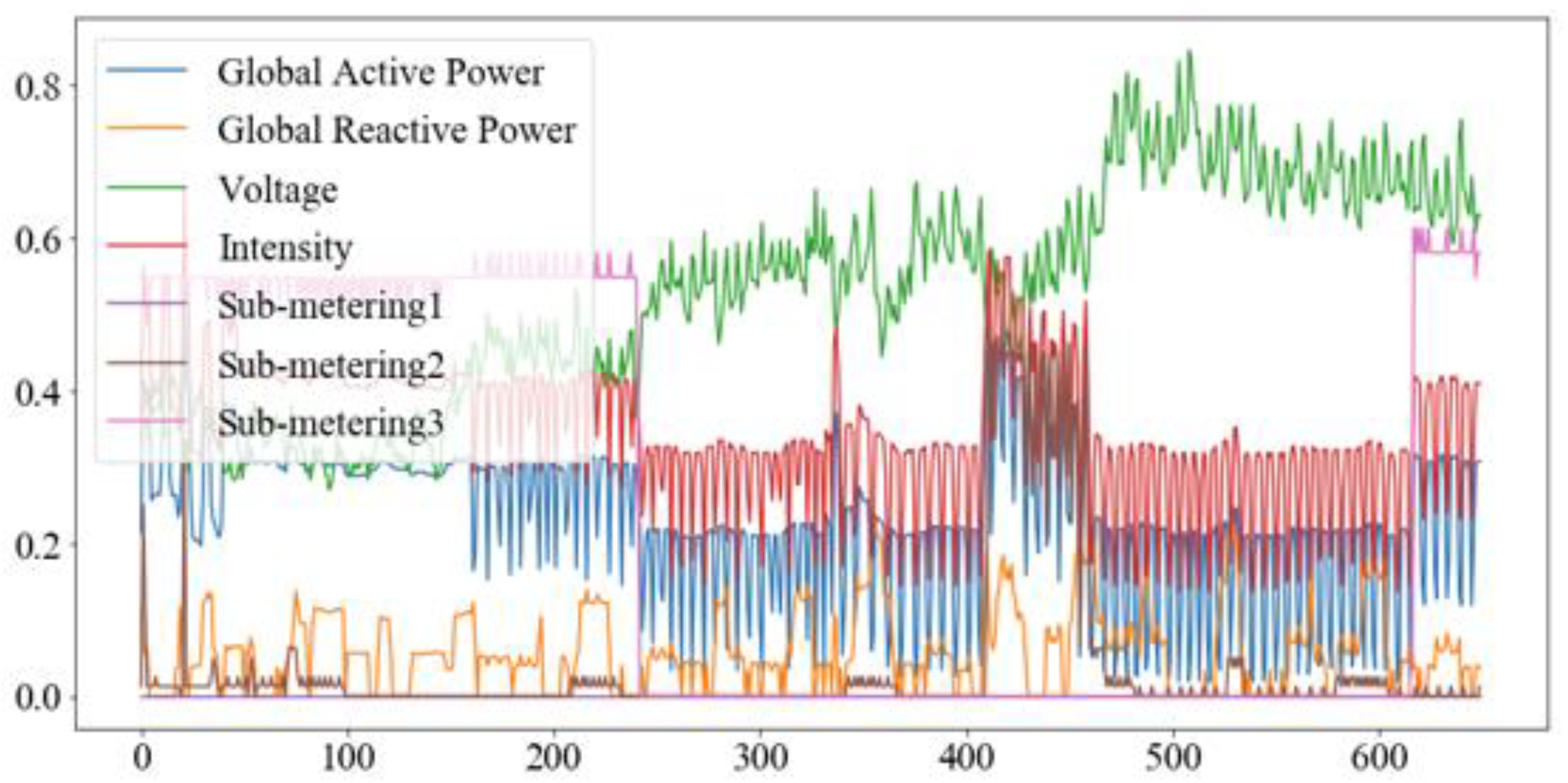

4.1. Dataset and Implementation

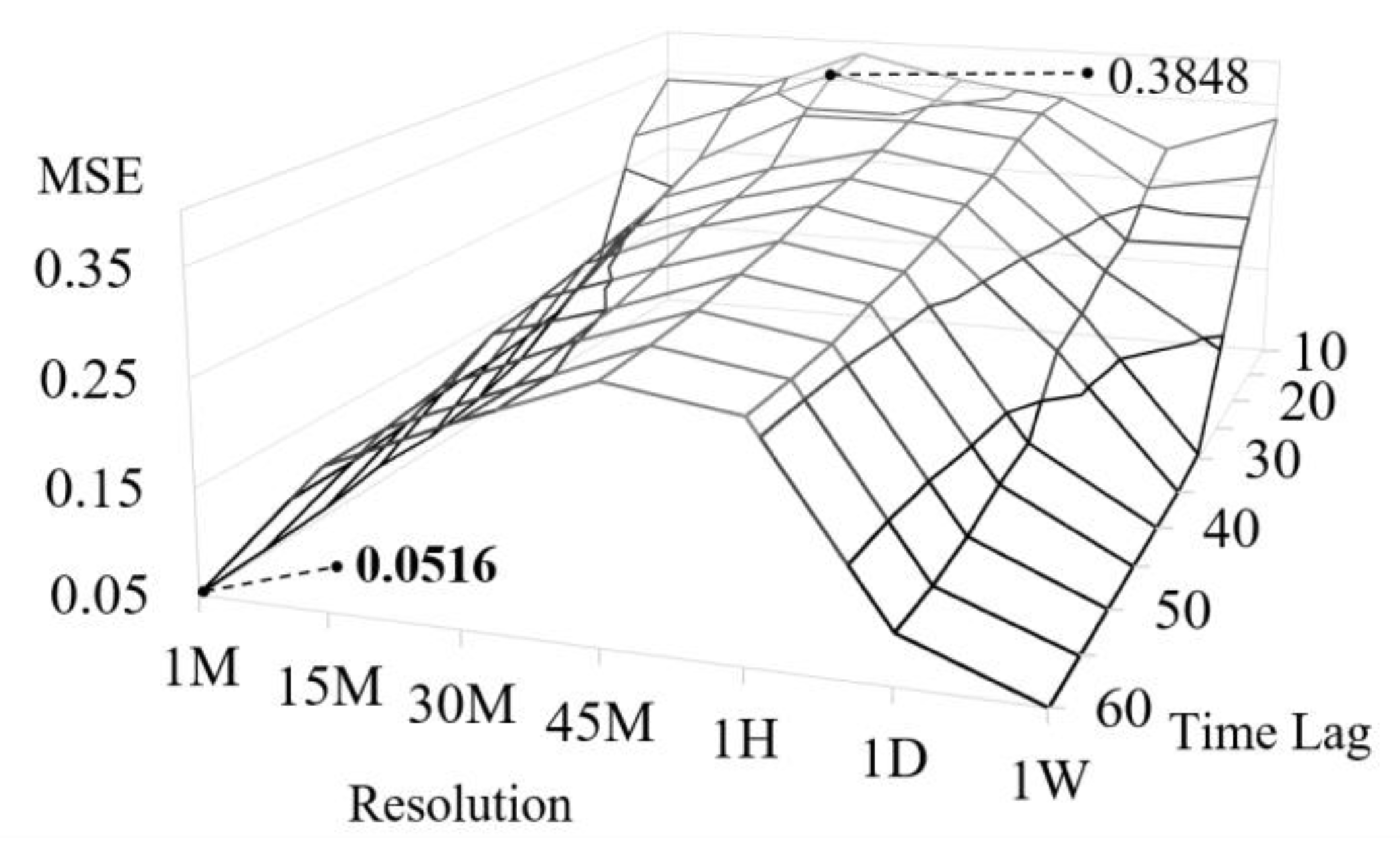

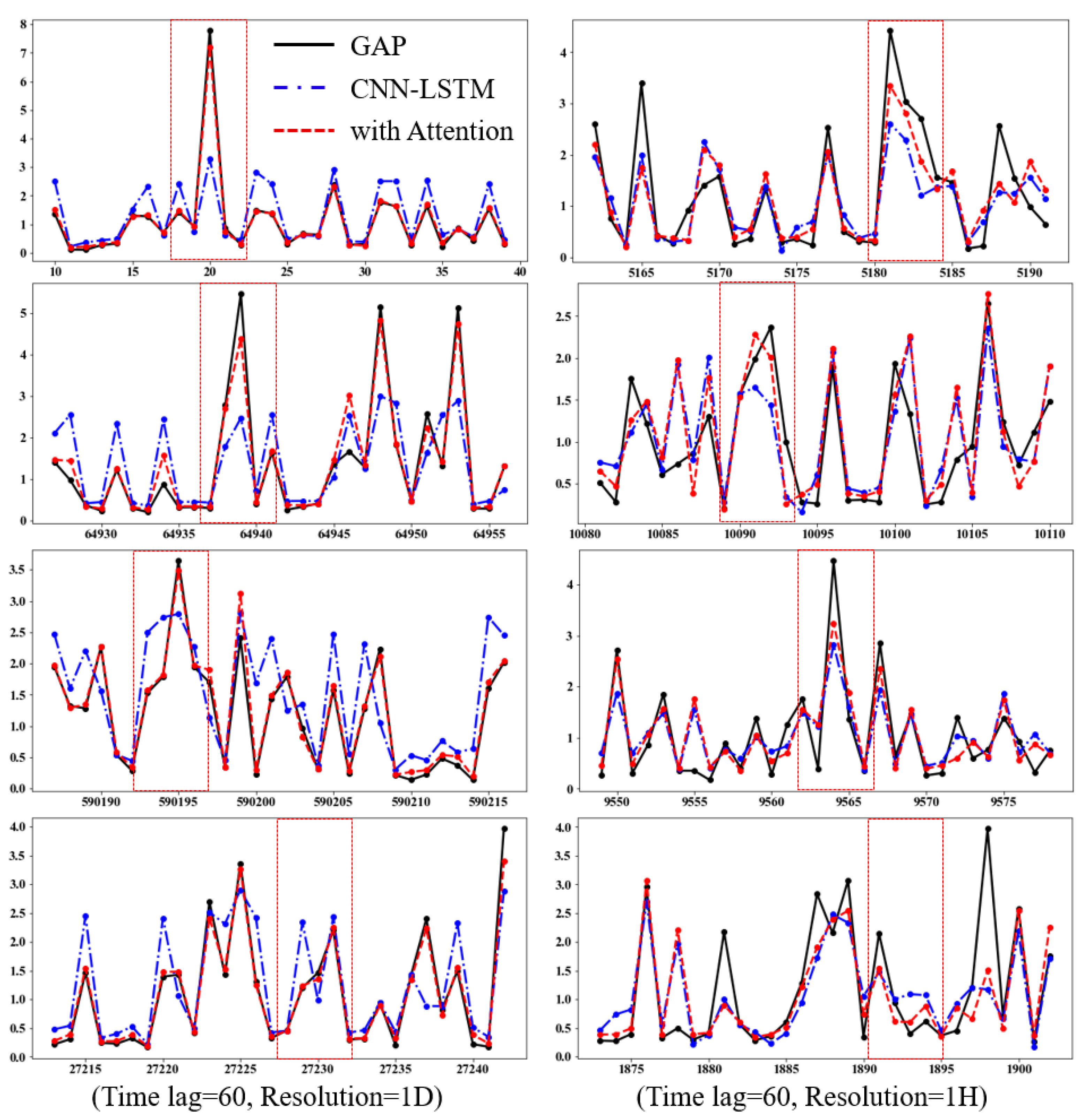

4.2. Power Consumption Prediction Performance

4.3. Effects of Multi-Headed Attention

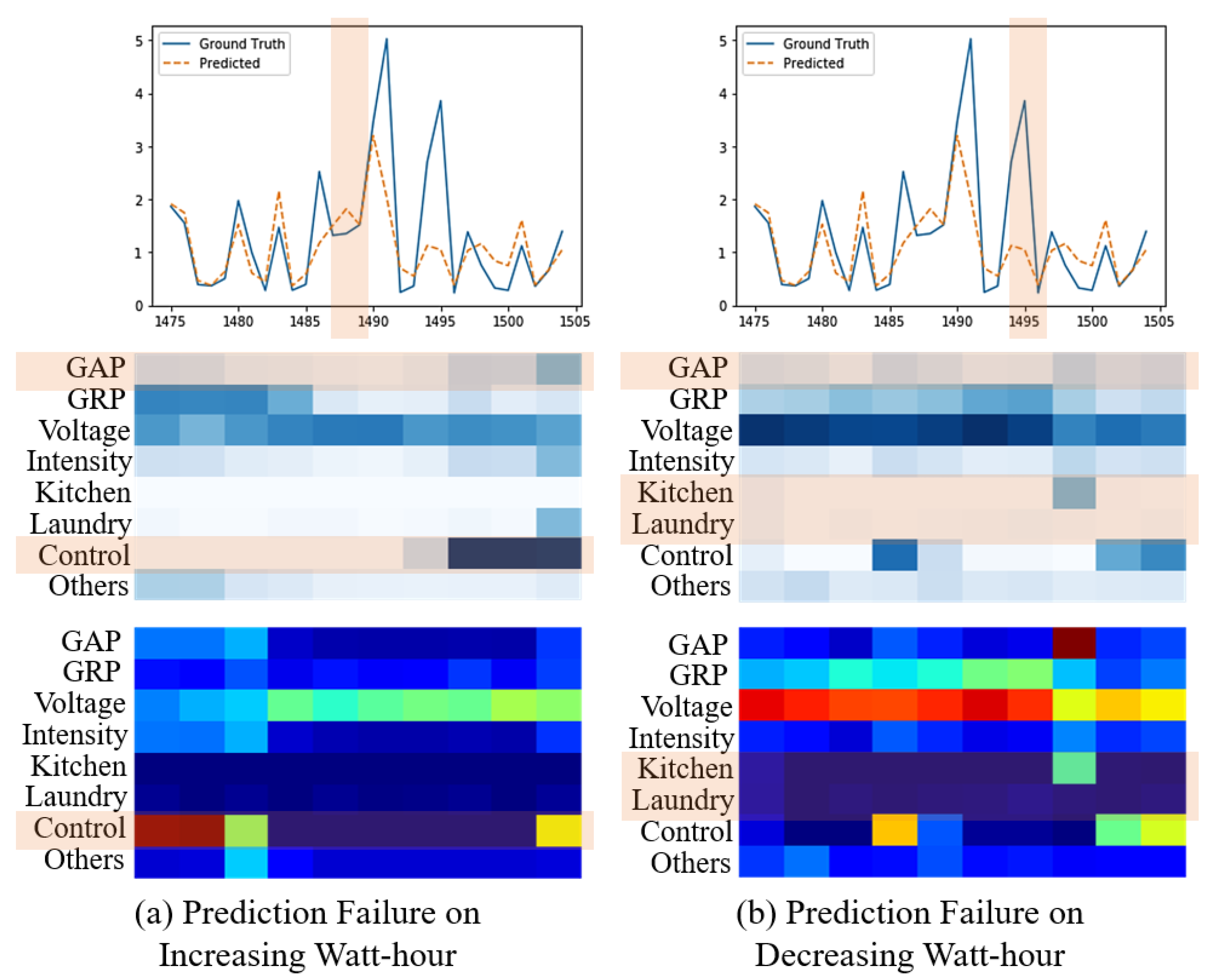

4.4. Discussion

5. Concluding Remarks

Author Contributions

Funding

Conflicts of Interest

References

1. IEA. World Energy Outlook 2019; IEA: Paris, France, 2019; Available online: http://www.iea.org/reports/world-energy-outlook-2019 (accessed on 13 November 2019).

2. Nejat, P.; Jomehzadeh, F.; Taheri, M.M.; Gohari, M.; Majid, M.Z.A. A global review of energy consumption, CO2 emissions and policy in the residential sector (with an overview of the top ten CO2 emitting countries). Renew. Sustain. Energy Rev. 2015, 43, 843–862.

3. Zhao, G.; Liu, Z.; He, Y.; Cao, H.; Guo, Y.B. Energy consumption in machining: Classification, prediction, and reduction strategy. Energy 2017, 133, 142–157.

4. Deb, C.; Zhang, F.; Yang, J.; Lee, S.E.; Shah, K.W. A review on time series forecasting techniques for building energy consumption. Renew. Sustain. Energy Rev. 2017, 74, 902–924.

5. Arghira, N.; Hawarah, L.; Ploix, S.; Jacomino, M. Prediction of appliances energy use in smart homes. Energy 2012, 48, 128–134.

6. Prashar, A. Adopting PDCA (Plan-Do-Check-Act) cycle for energy optimization in energy-intensive SMEs. J. Clean. Prod. 2017, 145, 277–293.

7. Makridakis, S.; Spiliotis, E.; Assimakopoulos, V. Statistical and Machine Learning forecasting methods: Concerns and ways forward. PLoS ONE 2018, 13, e0194889.

8. Gonzalez-Briones, A.; Hernandez, G.; Corchado, J.M.; Omatu, S.; Mohamad, M.S. Machine Learning Models for Electricity Consumption Forecasting: A Review. In Proceedings of the 2019 2nd International Conference on Computer Applications & Information Security, Riyadh, Saudi Arabia, 19–21 March 2019; pp. 1–6.

9. Burgio, A.; Menniti, D.; Sorrentino, N.; Pinnarelli, A.; Leonowicz, Z. Influence and Impact of Data Averaging and Temporal Resolution on the Assessment of Energetic, Economic and Technical Issues of Hybrid Photovoltaic-Battery Systems. Energies 2020, 13, 354.

10. Kim, T.-Y.; Cho, S.-B. Predicting residential energy consumption using CNN-LSTM neural networks. Energy 2019, 182, 72–81.

11. Lago, J.; De Ridder, F.; De Schutter, B. Forecasting spot electricity prices: Deep learning approaches and empirical comparison of traditional algorithms. Appl. Energy 2018, 221, 386–405.

12. Fan, H.; MacGill, I.; Sproul, A. Statistical analysis of driving factors of residential energy demand in the greater Sydney region, Australia. Energy Build. 2015, 105, 9–25.

13. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

14. Zheng, H.; Fu, J.; Mei, T.; Luo, J. Learning Multi-attention Convolutional Neural Network for Fine-Grained Image Recognition. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5219–5227.

15. Ray, A. Symbolic dynamic analysis of complex systems for anomaly detection. Signal Process. 2004, 84, 1115–1130.

16. Rajagopalan, V.; Ray, A. Symbolic time series analysis via wavelet-based partitioning. Signal Process. 2006, 86, 3309–3320.

17. Lin, J.; Khade, R.; Li, Y. Rotation-invariant similarity in time series using bag-of-patterns representation. J. Intell. Inf. Syst. 2012, 39, 287–315.

18. Tso, G.K.F.; Yau, K.K.W. Predicting electricity energy consumption: A comparison of regression analysis, decision tree and neural networks. Energy 2007, 32, 1761–1768.

19. Ekonomou, L. Greek long-term energy consumption prediction using artificial neural networks. Energy 2010, 35, 512–517.

20. Li, W.; Yang, X.; Li, H.; Su, L. Hybrid Forecasting Approach Based on GRNN Neural Network and SVR Machine for Electricity Demand Forecasting. Energies 2017, 10, 44.

21. Mocanu, E.; Nguyen, P.H.; Gibescu, M.; Kling, W.L. Deep learning for estimating building energy consumption. Sustain. Energy Grids Netw. 2016, 6, 91–99.

22. Marino, D.L.; Amarasinghe, K.; Manic, M. Building energy load forecasting using Deep Neural Networks. In Proceedings of the IECON 2016—42nd Annual Conference of the IEEE Industrial Electronics Society, Florence, Italy, 23–26 October 2016; pp. 7046–7051.

23. Kong, W.; Dong, Z.Y.; Jia, Y.; Hill, D.; Xu, Y.; Zhang, Y. Short-Term Residential Load Forecasting Based on LSTM Recurrent Neural Network. IEEE Trans. Smart Grid 2017, 10, 841–851.

24. Li, C.; Ding, Z.; Zhao, D.; Yi, J.; Zhang, G. Building Energy Consumption Prediction: An Extreme Deep Learning Approach. Energies 2017, 10, 1525.

25. Rahman, A.; Srikumar, V.; Smith, A.D. Predicting electricity consumption for commercial and residential buildings using deep recurrent neural networks. Appl. Energy 2018, 212, 372–385.

26. Shah, I.; Iftikhar, H.; Ali, S.; Wang, D. Short-Term Electricity Demand Forecasting Using Components Estimation Technique. Energies 2019, 12, 2532.

27. Fan, C.; Wang, J.; Gang, W.; Li, S. Assessment of deep recurrent neural network-based strategies for short-term building energy predictions. Appl. Energy 2019, 236, 700–710.

28. Wang, Y.; Gan, D.; Sun, M.; Zhang, N.; Lu, Z.-X.; Kang, C. Probabilistic individual load forecasting using pinball loss guided LSTM. Appl. Energy 2019, 235, 10–20.

29. Kim, T.-Y.; Cho, S.-B. Particle Swarm Optimization-based CNN-LSTM Networks for Forecasting Energy Consumption. In Proceedings of the 2019 IEEE Congress on Evolutionary Computation, Wellington, New Zealand, 10–13 June 2019; pp. 1510–1516.

30. Shi, H.; Xu, M.; Li, R. Deep Learning for Household Load Forecasting—A Novel Pooling Deep RNN. IEEE Trans. Smart Grid 2017, 9, 5271–5280.

31. Bouktif, S.; Fiaz, A.; Ouni, A.; Serhani, M.A. Optimal Deep Learning LSTM Model for Electric Load Forecasting using Feature Selection and Genetic Algorithm: Comparison with Machine Learning Approaches. Energies 2018, 11, 1636.

32. Guo, Z.; Zhou, K.; Zhang, X.; Yang, S. A deep learning model for short-term power load and probability density forecasting. Energy 2018, 160, 1186–1200.

33. Fan, C.; Sun, Y.; Zhao, Y.; Song, M.; Wang, J. Deep learning-based feature engineering methods for improved building energy prediction. Appl. Energy 2019, 240, 35–45.

34. Hinton, G.E. Deep belief networks. Scholarpedia 2009, 4, 5947.

35. Taieb, S.B. Machine Learning Strategies for Multi-Step ahead Time Series Forecasting; Université Libre de Bruxelles: Bruxelles, Belgium, 2014; pp. 75–86.

36. Wang, K.; Qi, X.; Liu, H. Photovoltaic power forecasting based LSTM-Convolutional Network. Energy 2019, 189, 116225.

37. Bu, S.-J.; Cho, S.-B. A convolutional neural-based learning classifier system for detecting database intrusion via insider attack. Inf. Sci. 2020, 512, 123–136.

38. Qing, X.; Niu, Y. Hourly day-ahead solar irradiance prediction using weather forecasts by LSTM. Energy 2018, 148, 461–468.

39. Chorowski, J.; Bahdanau, D.; Serdyuk, D.; Cho, K.; Bengio, Y. Attention-Based Models for Speech Recognition. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 577–585.

40. Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning Deep Features for Discriminative Localization. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

41. Kim, J.-Y.; Cho, S.-B. Electric Energy Consumption Prediction by Deep Learning with State Explainable Autoencoder. Energies 2019, 12, 739.

42. Sainath, T.N.; Mohamed, A.R.; Kingsbury, B.; Ramabhadran, B. Deep convolutional neural networks for LVCSR. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 8614–8618.

43. Ronao, C.A.; Cho, S.-B. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 2016, 59, 235–244.

44. Shen, T.; Zhou, T.; Long, G.; Jiang, J.; Pan, S.; Zhang, C. DiSAN: Directional self-attention network for RNN/CNN-free language understanding. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 5446–5455.

45. Miyazaki, K.; Komatsu, T.; Hayashi, T.; Watanabe, S.; Toda, T.; Takeda, K. Weakly-Supervised Sound Event Detection with Self-Attention. In Proceedings of the ICASSP 2020 IEEE International Conference on Acoustics, Speech and Signal Processing, Barcelona, Spain, 4–8 May 2020; pp. 66–70.

46. Bache, K.; Lichman, M. Individual Household Electric Power Consumption Dataset; University of California, School of Information and Computer Science: Irvine, CA, USA, 2013; Volume 206.

47. Gal, Y.; Ghahramani, Z. Dropout as a Bayesian approximation: Representing model uncertainty in deep learning. In Proceedings of the International Conference on Machine Learning, New York City, NY, USA, 19–24 June 2016; pp. 1050–1059.

| Time-Scale | Author | Domain/Data | Forecasting Method | Prediction MSE (Time-Scale) |
|---|---|---|---|---|
| Short (30-min or 1-h) | Mocanu [21] | UCI Household Power | Factored Conditional Restricted Boltzmann Machine | 0.6211 (1-h) |
| | Marino [22] | UCI Household Power | Sequence-to-sequence LSTM | 0.6420 (1-h) |
| | Li [20] | New South Wales and Victoria, Australia | RNN-SVR Ensemble | 0.4059 (1-h) |
| | Kong [23] | Smart Grid Smart City (SGSC), Australia | RNN, LSTM | 0.2903 (1-h) |
| | Li [24] | Applied Building Energy | Stacked Autoencoder, Extreme Learning Machine | 40.6747 (30-min) |
| | Makridakis [7] | M3-competition Time-series Data | ARIMA, NN, GP, k-NN, SVR | 0.3252 (1-h) |
| | Rahman [25] | Salt Lake City Public Safety Building | LSTM | 0.2903 (1-h) |
| | Gonzalez-Briones [8] | Shoe Store Power Data, Spain | LR, DT, RF, k-NN, SVR | 0.4234 (1-h) |
| | Shah [26] | Nord Pool Electricity Data | Spline Function-based, Polynomial Regression, ARIMA | 0.2428 (1-h) |
| | Fan [27] | Hong Kong Educational Building Operational Data | NN, RNN, LSTM | 118.2 (24-h) |
| | Kim [10] | UCI Household Power | CNN-LSTM | 0.2803 (1-h) |
| | Wang [28] | Irish Customer Behavior Trials, Low Carbon London | Quantile Loss-guided LSTM | 0.1552 (1-h) |
| | Kim [29] | UCI Household Power | CNN-LSTM with Particle Swarm Optimization-based Architecture Optimization | 0.3258 (30-min) |
| Medium (1-month) | Shi [30] | Smart Metering Electricity Customer Behavior Trial (CBT) | Pooling-based RNN | 0.4505 (1-month) |
| | Bouktif [31] | French National Energy Consumption | LSTM with Genetic Algorithm-based Time Lag Optimization | 270.4 (1-month) |
| Long (1-year) | Guo [32] | Jiangsu Province Power, China | LSTM, Quantile Regression | 594.8 (1-year) |

| Attributes | Date | Global Active Power (kW) | Global Reactive Power (kW) | Voltage (V) | Global Intensity (A) | Kitchen (Wh) | Laundry (Wh) | Climate Controls (Wh) |
|---|---|---|---|---|---|---|---|---|
| Average | - | 1.0916 | 0.1237 | 240.8399 | 4.6278 | 1.1219 | 1.2985 | 6.4584 |
| Std. Dev. | - | 1.0573 | 0.1127 | 3.2400 | 4.4444 | 6.1530 | 5.8220 | 8.4372 |
| Max | 26/11/2010 | 11.1220 | 1.3900 | 254.1500 | 48.4000 | 88.0000 | 80.0000 | 31.0000 |
| Min | 16/12/2006 | 0.0760 | 0.0000 | 223.2000 | 0.2000 | 0.0000 | 0.0000 | 0.0000 |
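
The household power dataset [46] records one-minute readings, while the results table reports errors at resolutions from 1 minute to 1 week. The sketch below builds the coarser series under the assumption that each resolution averages consecutive, non-overlapping one-minute readings of a variable such as global active power; the paper's exact aggregation is not stated here, and `resample_mean` and `FACTORS` are hypothetical names.

```python
import numpy as np


def resample_mean(minute_values, factor):
    """Average consecutive, non-overlapping blocks of `factor` one-minute readings.

    Readings left over at the end that do not fill a whole block are dropped.
    Missing readings (NaN) are ignored inside a block; a block that is entirely
    missing yields NaN (NumPy also emits a RuntimeWarning in that case).
    """
    x = np.asarray(minute_values, dtype=float)
    n_blocks = len(x) // factor
    blocks = x[: n_blocks * factor].reshape(n_blocks, factor)
    return np.nanmean(blocks, axis=1)


# Assumed block sizes, in minutes, for the coarser resolutions in the results table.
FACTORS = {"15 min": 15, "30 min": 30, "45 min": 45, "1 h": 60, "1 day": 1440, "1 week": 10080}

minute_power = np.array([1.0, np.nan, 3.0, 4.0, 5.0])  # toy kW readings with one gap
print(resample_mean(minute_power, 2))  # two 2-minute averages; the fifth reading is dropped
```

Averaging (rather than summing) keeps every resolution in kW, which is consistent with the small MSE values reported at the 1-day and 1-week resolutions, though that is an inference rather than a stated detail.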

| Operation | No. of Convolution Filters/Nodes (or Dropout Rate) | Kernel Size | Stride | Activation Function | No. of Parameters |
|---|---|---|---|---|---|
| TimeDistributed(Conv1D) | 64 | 2 × 1 | 1 | tanh | 192 |
| TimeDistributed(MaxPool1D) | - | 2 | - | tanh | 0 |
| TimeDistributed(Multi-Attention) | - | - | - | softmax | 0 |
| TimeDistributed(Conv1D) | 64 | 2 × 1 | 1 | tanh | 8,256 |
| TimeDistributed(MaxPool1D) | - | 2 | - | tanh | 0 |
| TimeDistributed(Multi-Attention) | - | - | - | softmax | 0 |
| Dropout | 0.5 | - | - | - | 0 |
| LSTM | 64 | - | - | tanh | 73,984 |
| LSTM | 64 | - | - | tanh | 33,024 |
| Dropout | 0.5 | - | - | - | 0 |
| Dense | 64 | - | - | tanh | 4,160 |
| Dense | 64 | - | - | tanh | 4,160 |
| Dense | 1 | - | - | linear | 65 |
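
The parameter column can be checked with the standard counting formulas for convolutional, LSTM and dense layers (the formulas Keras-style frameworks use; that the table was produced this way is an assumption). The first Conv1D count of 192 matches a single input channel, and under the LSTM formula the 73,984 figure implies a 224-dimensional input per step, a size the table itself does not state. The helper names below are hypothetical.

```python
def conv1d_params(in_channels, filters, kernel_size):
    # Each filter has kernel_size * in_channels weights plus one bias.
    return filters * kernel_size * in_channels + filters


def lstm_params(input_dim, units):
    # Four gate blocks (input, forget, cell candidate, output), each with
    # input weights, recurrent weights and a bias vector.
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim, units):
    # Full weight matrix plus one bias per output unit.
    return input_dim * units + units


def lstm_input_dim(n_params, units):
    """Invert lstm_params to recover the per-step input size from a parameter count."""
    return (n_params // 4 - units) // units - units


inferred_dim = lstm_input_dim(73_984, 64)  # 224, not stated in the table

# Layer-by-layer counts for the trainable layers in the architecture table.
layers = {
    "Conv1D #1 (1 input channel)": conv1d_params(1, 64, 2),  # 192
    "Conv1D #2": conv1d_params(64, 64, 2),                   # 8,256
    "LSTM #1": lstm_params(inferred_dim, 64),                # 73,984
    "LSTM #2": lstm_params(64, 64),                          # 33,024
    "Dense #1": dense_params(64, 64),                        # 4,160
    "Dense #2": dense_params(64, 64),                        # 4,160
    "Dense (output)": dense_params(64, 1),                   # 65
}
print(inferred_dim)          # 224
print(sum(layers.values()))  # 123841
```

Summing the column gives 123,841 parameters; the Dropout, MaxPool1D and Multi-Attention layers contribute none.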

| Resolution | LR | ARIMA [7] | DT | RF | SVR [8] | MLP | CNN | LSTM | CNN-LSTM [10] | Ours |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 min | 0.0844 | 0.0838 | 0.1026 | 0.0802 | 0.0797 | 0.1003 | 0.0666 | 0.0741 | 0.0660 | 0.0516 |
| 15 min | 0.2515 | 0.2428 | 0.4234 | 0.3972 | 0.3228 | 0.2370 | 0.2123 | 0.2208 | 0.2085 | 0.1838 |
| 30 min | 0.3050 | 0.2992 | 0.5199 | 0.3951 | 0.3619 | 0.2813 | 0.2684 | 0.2773 | 0.2592 | 0.2366 |
| 45 min | 0.3321 | 0.3431 | 0.4804 | 0.4315 | 0.4247 | 0.3208 | 0.3183 | 0.3220 | 0.3133 | 0.2838 |
| 1 h | 0.3398 | 0.3252 | 0.5259 | 0.4344 | 0.4059 | 0.3072 | 0.2865 | 0.2903 | 0.2803 | 0.2662 |
| 1 day | 0.1083 | 0.0980 | 0.1891 | 0.1190 | 0.1311 | 0.1134 | 0.1069 | 0.1129 | 0.1013 | 0.0969 |
| 1 week | 0.0624 | 0.0616 | 0.0706 | 0.0617 | 0.0620 | 0.0441 | 0.0333 | 0.0387 | 0.0328 | 0.0305 |
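
The results table reports mean squared error (MSE). The snippet below states that metric and measures how much of the error of CNN-LSTM [10], the strongest deep learning baseline in every row, the proposed model removes. The MSE values are copied from the table; only the arithmetic is new, and the function names are hypothetical.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean squared error, the metric reported in the results table."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))


def relative_reduction(baseline, proposed):
    """Fraction of the baseline error removed by the proposed model."""
    return (baseline - proposed) / baseline


# (CNN-LSTM [10], Ours) MSE pairs copied from the results table.
results = {
    "1 min": (0.0660, 0.0516),
    "15 min": (0.2085, 0.1838),
    "30 min": (0.2592, 0.2366),
    "45 min": (0.3133, 0.2838),
    "1 h": (0.2803, 0.2662),
    "1 day": (0.1013, 0.0969),
    "1 week": (0.0328, 0.0305),
}
for resolution, (cnn_lstm, ours) in results.items():
    print(f"{resolution:>7}: {relative_reduction(cnn_lstm, ours):.1%}")
```

At 1 minute, for example, (0.0660 - 0.0516) / 0.0660 ≈ 21.8%, while at 1 hour the reduction is about 5.0%. At the 1-day resolution ARIMA [7] (0.0980) outperforms CNN-LSTM, but the proposed model (0.0969) still has the lowest MSE in that row.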

| Attention Type | CNN (1D) | CNN (2D) | LSTM | CNN-LSTM |
|---|---|---|---|---|
| None | 0.0724 | 0.0666 | 0.0741 | 0.0676 |
| Single attention | 0.0701 | 0.0542 | 0.0706 | 0.0660 |
| Multi-headed attention | 0.0688 | 0.0538 | 0.0683 | 0.0516 |
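
In the architecture table, each Multi-Attention layer has zero trainable parameters and a softmax activation, which suggests attention weights computed directly from the convolutional features rather than through the learned query, key and value projections of Vaswani et al. [13]. The sketch below is one hypothetical parameter-free reading of the single-attention and multi-headed-attention rows: single attention scores all channels together, while the multi-headed variant splits the channels into groups that each weight the time steps independently. The head count and the channel-splitting scheme are assumptions, not the authors' implementation.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # shift by the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x):
    """Parameter-free scaled dot-product self-attention over time steps.

    x: (T, d) feature map used directly as queries, keys and values.
    Returns the attended features (T, d) and the (T, T) weights (rows sum to 1).
    """
    d = x.shape[-1]
    weights = softmax(x @ x.T / np.sqrt(d), axis=-1)
    return weights @ x, weights


def multi_head_self_attention(x, n_heads):
    """Split channels into n_heads equal groups, attend within each, concatenate."""
    d = x.shape[-1]
    if d % n_heads != 0:
        raise ValueError("channel count must be divisible by n_heads")
    heads = np.split(x, n_heads, axis=-1)  # n_heads arrays of shape (T, d // n_heads)
    return np.concatenate([self_attention(h)[0] for h in heads], axis=-1)


# Hypothetical feature map: 6 time steps, 64 channels (the Conv1D filter count).
features = np.random.default_rng(0).normal(size=(6, 64))
single = self_attention(features)[0]
multi = multi_head_self_attention(features, n_heads=4)
print(single.shape, multi.shape)  # (6, 64) (6, 64)
```

Because each head normalizes its own scores, different channel groups can emphasize different time steps, which is one plausible way a multi-headed variant could capture several short-term patterns at once.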

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Bu, S.-J.; Cho, S.-B. Time Series Forecasting with Multi-Headed Attention-Based Deep Learning for Residential Energy Consumption. Energies 2020, 13, 4722. https://doi.org/10.3390/en13184722
