1. Introduction

With the rapid development of the economy, electricity has become increasingly widespread in all aspects of production and daily life [1]. Because electrical energy is difficult to store, power plants must generate electricity according to the requirements of the power grid [2]. Short-term load forecasting (STLF) provides a decision-making basis for generation dispatchers to draw up a reasonable generation dispatching plan [3], and it plays a vital role in unit commitment, economic dispatch, optimal power flow, and power market transactions [4]. However, the short-term load is sensitive to the external environment, such as climate change, date types, and social activities [5], and these uncertainties increase the randomness of the load sequence [6]. Therefore, identifying the factors strongly correlated with the load from a host of influencing factors and accurately predicting the short-term load are urgent problems to be solved in this research.

STLF is strongly affected by the external environment [7]. The load can be affected by various factors, such as temperature, weather, and date type [8]. When temperatures are extreme, heating and cooling appliances increase the load [9]. The date type changes the load by affecting whether factories are in operation [10]. Therefore, extracting effective features from the uncertain factors that influence the load lays the foundation for improving short-term load forecasting. Feature selection, a key part of feature engineering, refers to selecting a representative subset of features from the feature set [11]. The selected features are highly correlated with the output variables, and feature selection is the most common way to extract effective features. Common feature selection methods include autocorrelation (AC), mutual information (MI), ReliefF (RF), correlation-based feature selection (CFS), and so on [12]. Moon et al. used CFS to extract relevant features for rainfall prediction [13]; the feature subset selected by this method can shorten model training time and reduce over-fitting. Yang et al. used the autocorrelation function (ACF) to select features and least squares support vector machines to predict the load [14]. Existing feature processing methods can hardly guarantee that the selected features form the optimal feature subset [15]. Therefore, this study uses a hybrid feature extraction method to solve this problem.

For the same load forecasting case, the upper limit of prediction accuracy differs among feature sets; for the same set of features, the performance of each prediction model also differs [16]. Over the past decades, a large number of advanced methods have been proposed to predict the power load [17]. These methods can be broadly divided into time series methods, machine learning methods, and deep learning methods [18]. Traditional time series methods are relatively mature and easy to implement [19]. They include the autoregressive integrated moving average (ARIMA) [20], exponential smoothing [21], semi-parametric models (SPM) [22], multiple linear regression (MLR) [23], and so on. However, they are all based on linear analysis and cannot accurately capture the actual trend of the short-term load [24].

As machine learning has become a research hotspot, its methods have been applied to various prediction fields. Liu et al. put forward a runoff prediction method that combines a hidden Markov model with Gaussian process regression [25]. Liu et al. proposed an ultra-short-term forecasting method for wind power and wind speed based on the Takagi–Sugeno (T–S) fuzzy model; this method uses meteorological measurements as input and obtains accurate prediction results [26]. Machine learning has also performed well in STLF [27]. Khwaja et al. combined an artificial neural network (ANN) with ensemble machine learning to improve short-term load prediction [18]; compared with traditional models such as exponential smoothing and the autoregressive moving average (ARMA) model, it achieves higher accuracy.

With the development of artificial intelligence, many traditional machine learning methods have been outperformed by deep learning methods [28]. Numerous improved deep learning models have since appeared and been applied to prediction in various fields. Tao et al. developed a convolutional-based bidirectional gated recurrent unit (CBGRU) method to forecast air pollution [29]. Liu et al. proposed an experimental Bayesian deep learning model that predicts wind speed with good accuracy [30]. Similarly, deep learning has achieved significant results in STLF [31]. Guo et al. combined a multilayer perceptron (MLP) with quantile regression (QR) for point and probabilistic prediction of the short-term load [32]; the model forecast electricity consumption more accurately than random forest and gradient boosting models.

The prediction of time series by recurrent neural networks (RNN) has been adopted by more and more people [

33]. However, when RNN works out the long-term dependence problem, it would face problems of gradient disappearance and gradient explosion [

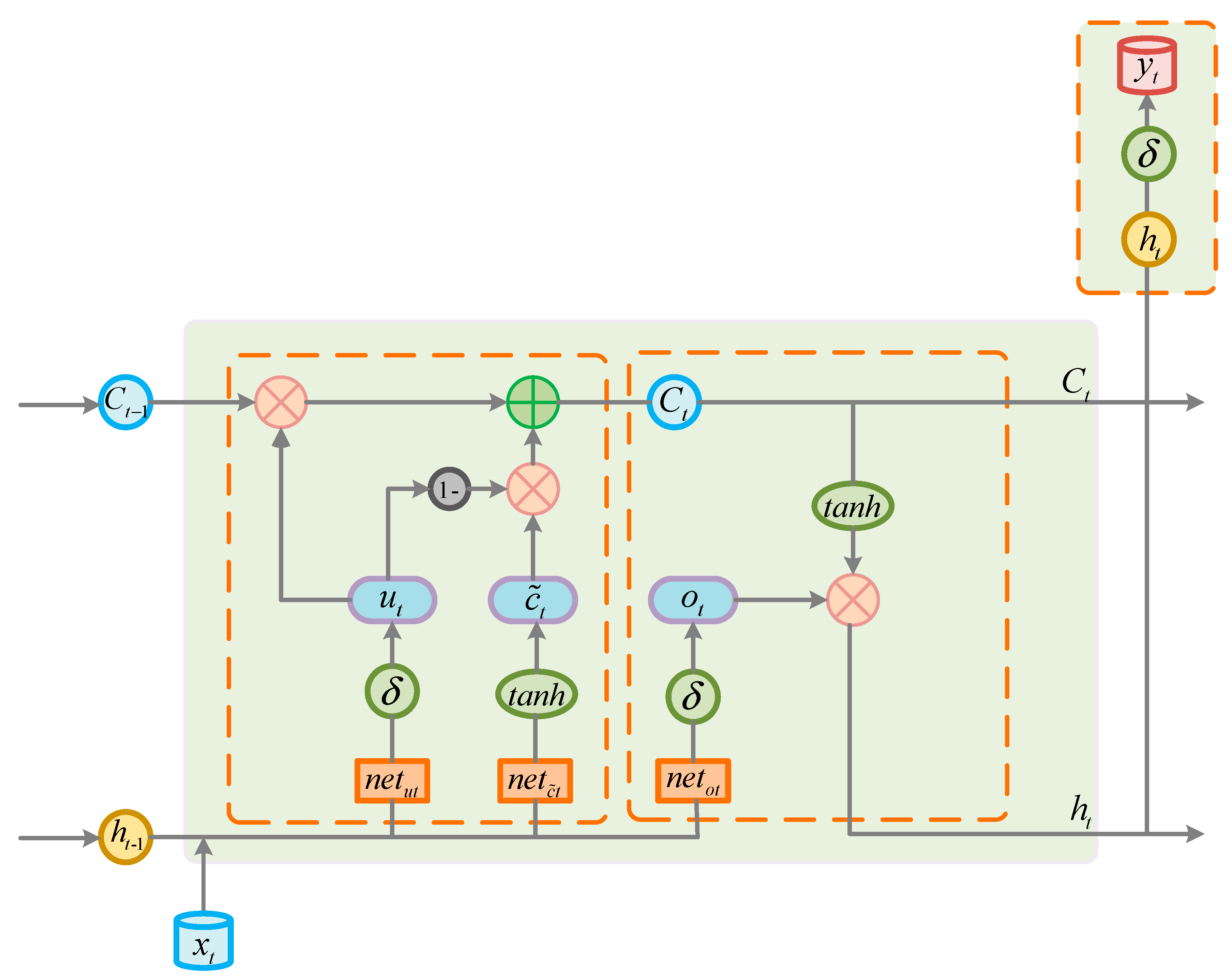

34]. To solve such problems, the Long Short Memory Network (LSTM) was proposed by Hochreiter and Schmidhuber in 1997 [

35]. The concepts of gate, input gate, output gate, and forget gate were proposed for the application of the network. After years of testing, LSTM showed a more prominent contribution in timing prediction than RNN. In recent years, numerous LSTM variants have been proposed. Zhang et al. unified the gates in the LSTM network into one gate, and these gates share the same set of weights, thereby reducing training time [

36]. Pei et al. changed the structure in the LSTM network to achieve better prediction results and shorter training time [

37]. These variants of LSTM are well applied to short-term prediction.
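The gate mechanism described above can be illustrated with a minimal NumPy sketch of one step of a standard LSTM cell. This is the baseline that ILSTM modifies. The ILSTM changes to the gates and to cell-state transfer are not reproduced here. Stacking the four gate weight matrices into a single matrix `W`, and the names `lstm_step` and `lstm_forward`, are illustrative assumptions, not the paper's code.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One step of a standard LSTM cell.

    x: input vector, shape (n_in,)
    h_prev, c_prev: previous hidden and cell states, shape (n_hid,)
    W: stacked gate weights, shape (4 * n_hid, n_in + n_hid)
    b: stacked gate biases, shape (4 * n_hid,)
    """
    n_hid = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    f = sigmoid(z[:n_hid])                  # forget gate: how much old cell state to keep
    i = sigmoid(z[n_hid:2 * n_hid])         # input gate: how much new content to write
    g = np.tanh(z[2 * n_hid:3 * n_hid])     # candidate cell content
    o = sigmoid(z[3 * n_hid:])              # output gate: how much cell state to expose
    c = f * c_prev + i * g                  # new cell state
    h = o * np.tanh(c)                      # new hidden state
    return h, c

def lstm_forward(xs, W, b, n_hid):
    """Run the cell over a sequence and return the final (h, c)."""
    h, c = np.zeros(n_hid), np.zeros(n_hid)
    for x in xs:
        h, c = lstm_step(x, h, c, W, b)
    return h, c

rng = np.random.default_rng(0)
n_in, n_hid, steps = 3, 4, 24
W = rng.normal(scale=0.1, size=(4 * n_hid, n_in + n_hid))
b = np.zeros(4 * n_hid)
xs = rng.normal(size=(steps, n_in))
h, c = lstm_forward(xs, W, b, n_hid)
print(h.shape, c.shape)  # (4,) (4,)
```

In practice, the Keras `LSTM` layer implements this recurrence. The explicit version above only makes the role of each gate visible.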

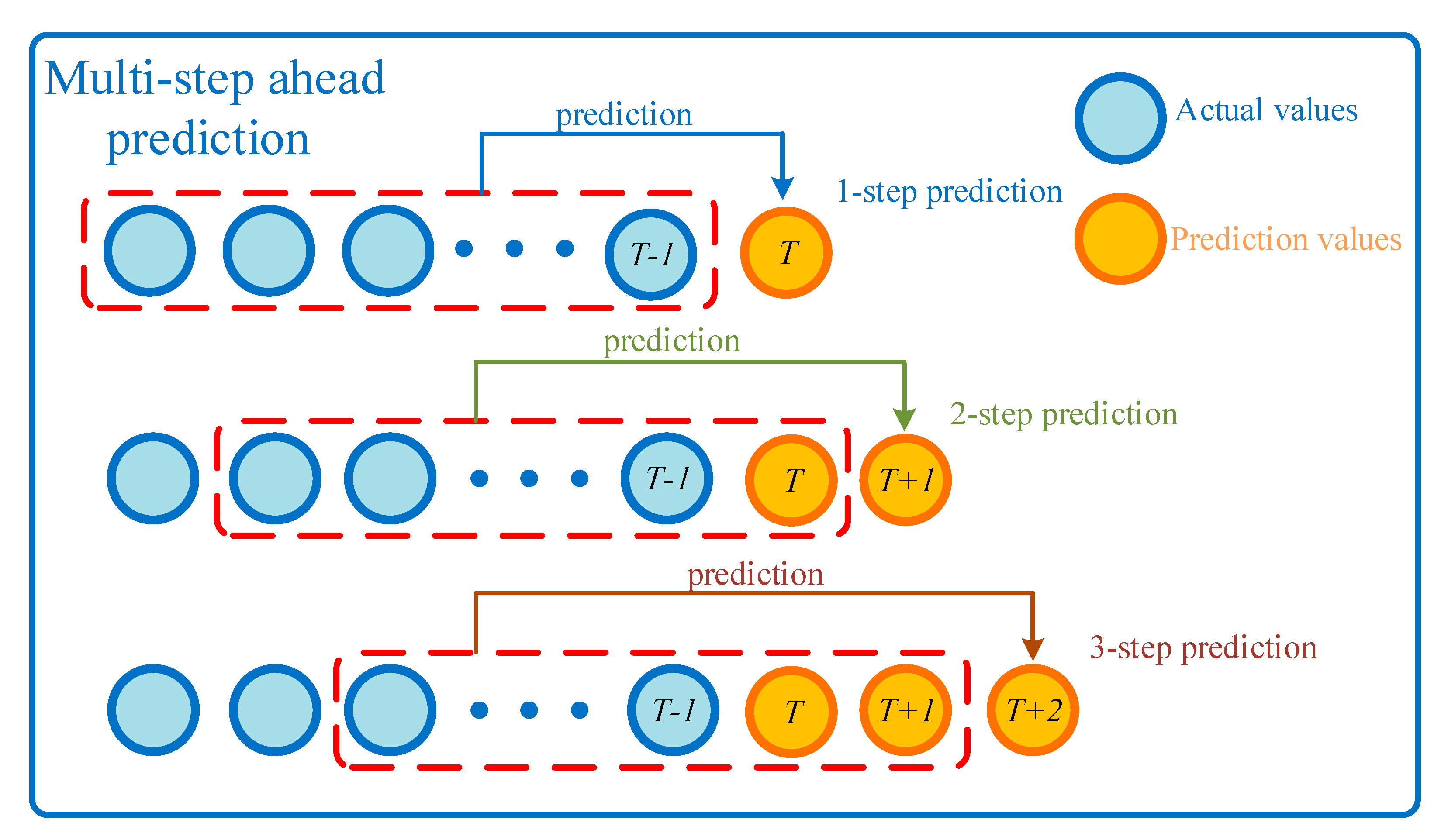

Nowadays, most research focuses on single-step STLF. However, accurate multi-step STLF is more important for formulating generation scheduling plans [38]: it allows longer-term plans to be made for power dispatching and brings greater benefits to power operators [39]. This paper is devoted to exploring multi-step STLF. To test the limit of the model's prediction ability under the accuracy requirements of short-term load forecasting, the proposed model is used to make multi-step predictions of the power load in Hubei Province. This study provides technical support for power systems in formulating generation plans.

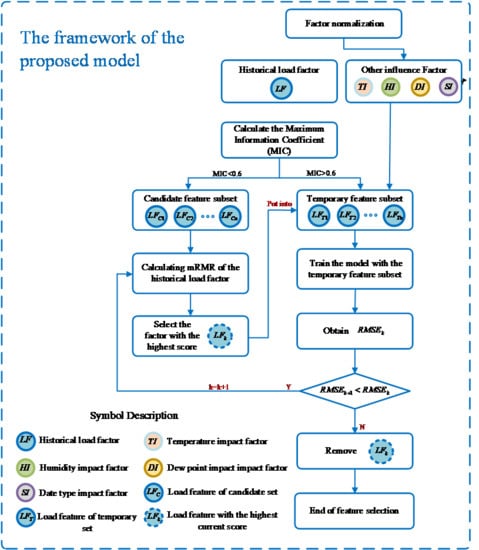

Compared with existing research on short-term load forecasting, the highlights and advantages of our study are as follows:

(1) The model not only considers the influence of environmental and other factors on short-term load forecasting, but also pays more attention to the influence of the historical load, adopting a two-stage feature selection method to select load features from the 168 time periods of the previous week;

(2) This paper proposes an Improved Long Short-Term Memory (ILSTM) network for load prediction. The network changes the characteristics of the original gates and the way the cell state is transferred. Compared with the traditional LSTM, it has higher prediction accuracy;

(3) Most popular short-term load forecasting models predict the load of the next period (hour-ahead or day-ahead). This article is dedicated to predicting the load of the next several periods (multi-step ahead), which is more conducive to rationally arranging the power generation tasks of power stations and ensuring the stable operation of the power system. This research therefore has greater practical significance.

4. Case Study

In this section, we first introduce the power load data and the corresponding influencing factors used by the model. To verify that the proposed model produces high-precision predictions, it is tested on four datasets and compared with existing popular models. In addition, experiments over multiple prediction horizons further confirm the practicability of the model. All deep learning models are implemented with the Keras framework, and SVR is implemented with the “sklearn” (scikit-learn) library in Python.

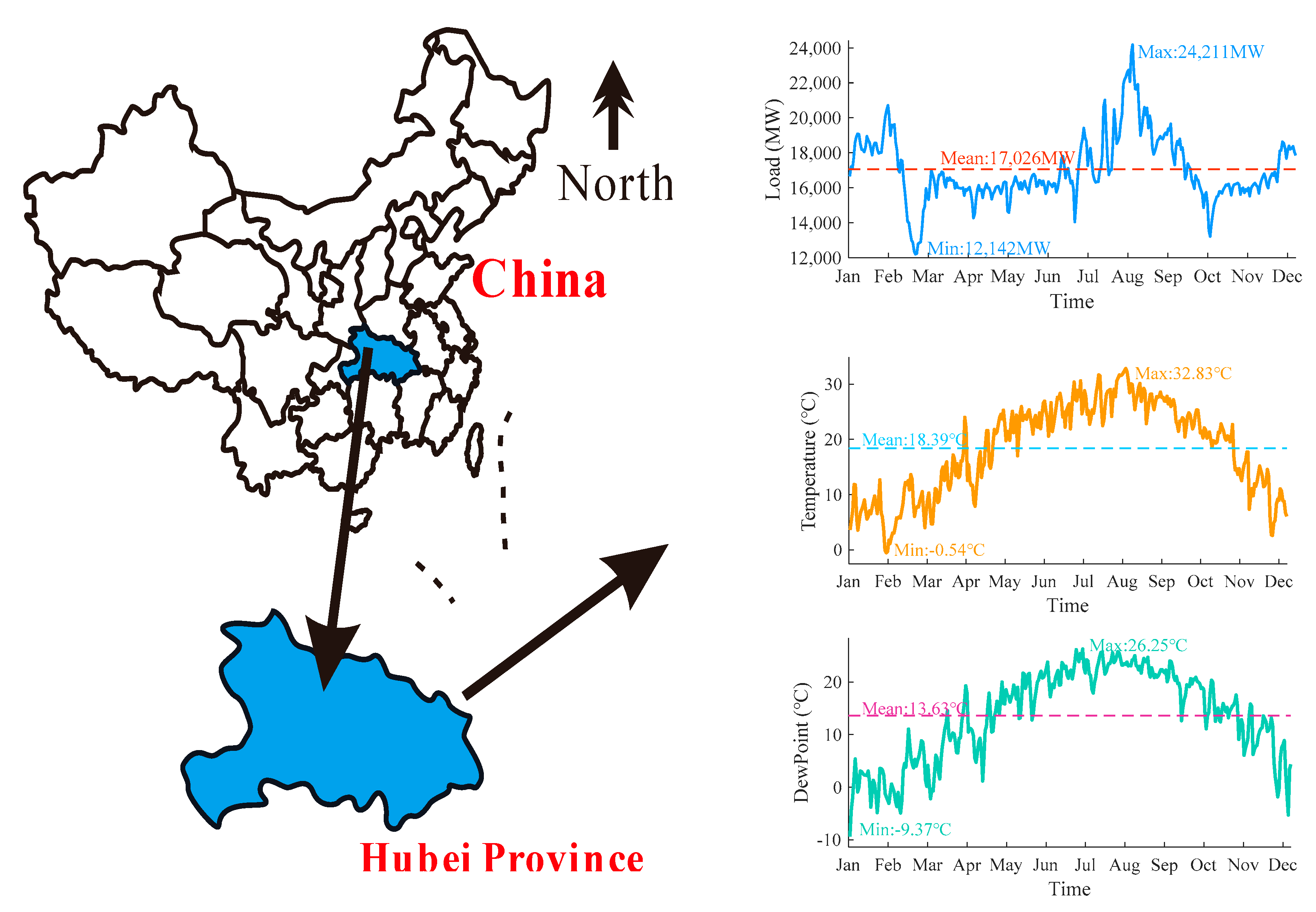

4.1. Data Introduction

In this section, the power load and related influencing factors are first introduced. The data used in this paper come from the Huazhong Power Grid Corporation and consist of the hourly load data of Hubei Province, China, for 2015. That year, the average annual temperature in Hubei was about 18 °C. January and February were the coldest months, with minimum temperatures below 0 °C. Temperatures rose in July and August, when the maximum reached 40 °C or more. The power load in January and February was higher than the annual average because of the use of heating equipment. From March to June, the temperature remained stable and below the average. In summer, the large-scale use of cooling systems significantly increased the power load, and the load during this period was the highest of the year. As the weather cooled from September to November, the load decreased accordingly until the temperature began to rise steadily. The lowest load of the year occurred during the Spring Festival because factories were on holiday. The annual electrical load data and environmental data are shown in Figure 4.

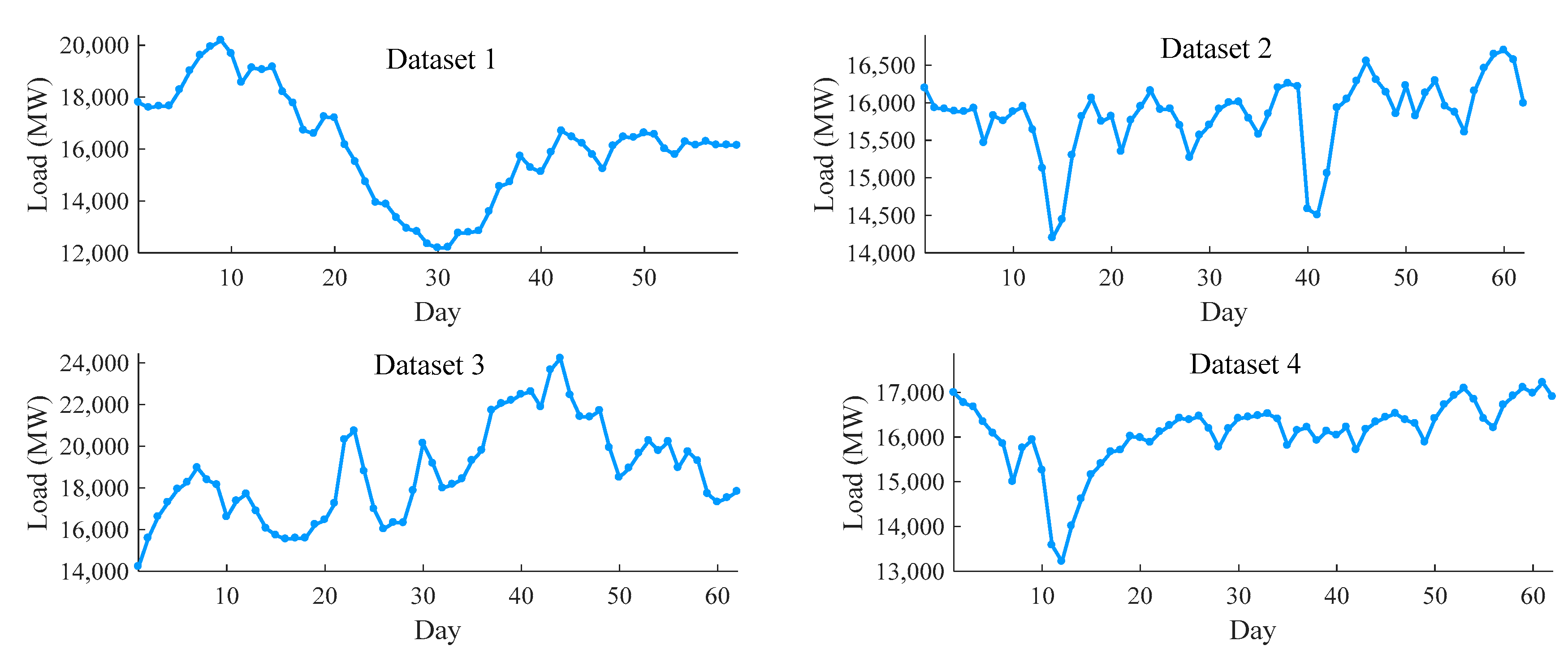

To better evaluate the performance of the model, the load data are divided into four datasets by quarter. As the figure shows, the first dataset fluctuates over a wide range, the second is relatively stable, the third shows high peak values, and in the fourth the power load drops sharply and then rises slowly. With this division, each dataset represents a distinct load pattern and the models can be trained better. The detailed parameters of the datasets are shown in Table 3.

In this paper, missing values are filled with the average of the loads in the preceding and following periods. The first 80% of the original data is used as training data and the remaining 20% as test data. The models are trained using cross-validation [41,42]. In Table 3, Tr and Te represent the numbers of training and test samples, respectively, and Sum represents the total number of samples. The four datasets are shown in Figure 5.
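The preprocessing described above can be sketched in a few lines of Python, assuming missing hours are stored as `None` or NaN. When several consecutive hours are missing, this sketch averages the nearest valid values on either side. That is an assumption, because the text only specifies the previous and next periods. The cross-validation scheme is also not specified, so the expanding-window folds below are a hypothetical choice that keeps each validation block strictly after its training window.

```python
import math

def is_missing(v):
    """True for None or a float NaN."""
    return v is None or (isinstance(v, float) and math.isnan(v))

def fill_missing(load):
    """Replace each missing hour with the mean of the nearest valid hours
    before and after it (or the single available neighbour at the edges)."""
    values = list(load)
    filled = values[:]
    n = len(values)
    for t in range(n):
        if not is_missing(values[t]):
            continue
        prev = next((values[k] for k in range(t - 1, -1, -1)
                     if not is_missing(values[k])), None)
        nxt = next((values[k] for k in range(t + 1, n)
                    if not is_missing(values[k])), None)
        if prev is not None and nxt is not None:
            filled[t] = (prev + nxt) / 2.0
        else:
            filled[t] = prev if prev is not None else nxt
    return filled

def chronological_split(series, train_frac=0.8):
    """First train_frac of the series for training, the rest for testing (no shuffling)."""
    cut = int(len(series) * train_frac)
    return series[:cut], series[cut:]

def expanding_window_folds(n_train, n_folds=3):
    """Hypothetical time-ordered CV: each fold validates on the block after its training window."""
    size = n_train // (n_folds + 1)
    for k in range(1, n_folds + 1):
        yield range(0, k * size), range(k * size, (k + 1) * size)

load = [100.0, None, 110.0, 120.0, float("nan"), 130.0, 125.0, 118.0, 112.0, 105.0]
filled = fill_missing(load)
train, test = chronological_split(filled)
print(filled[1], filled[4], len(train), len(test))  # 105.0 125.0 8 2
```

The chronological split matters for load data. Shuffling before the 80/20 cut would let the model see hours that come after the test period.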

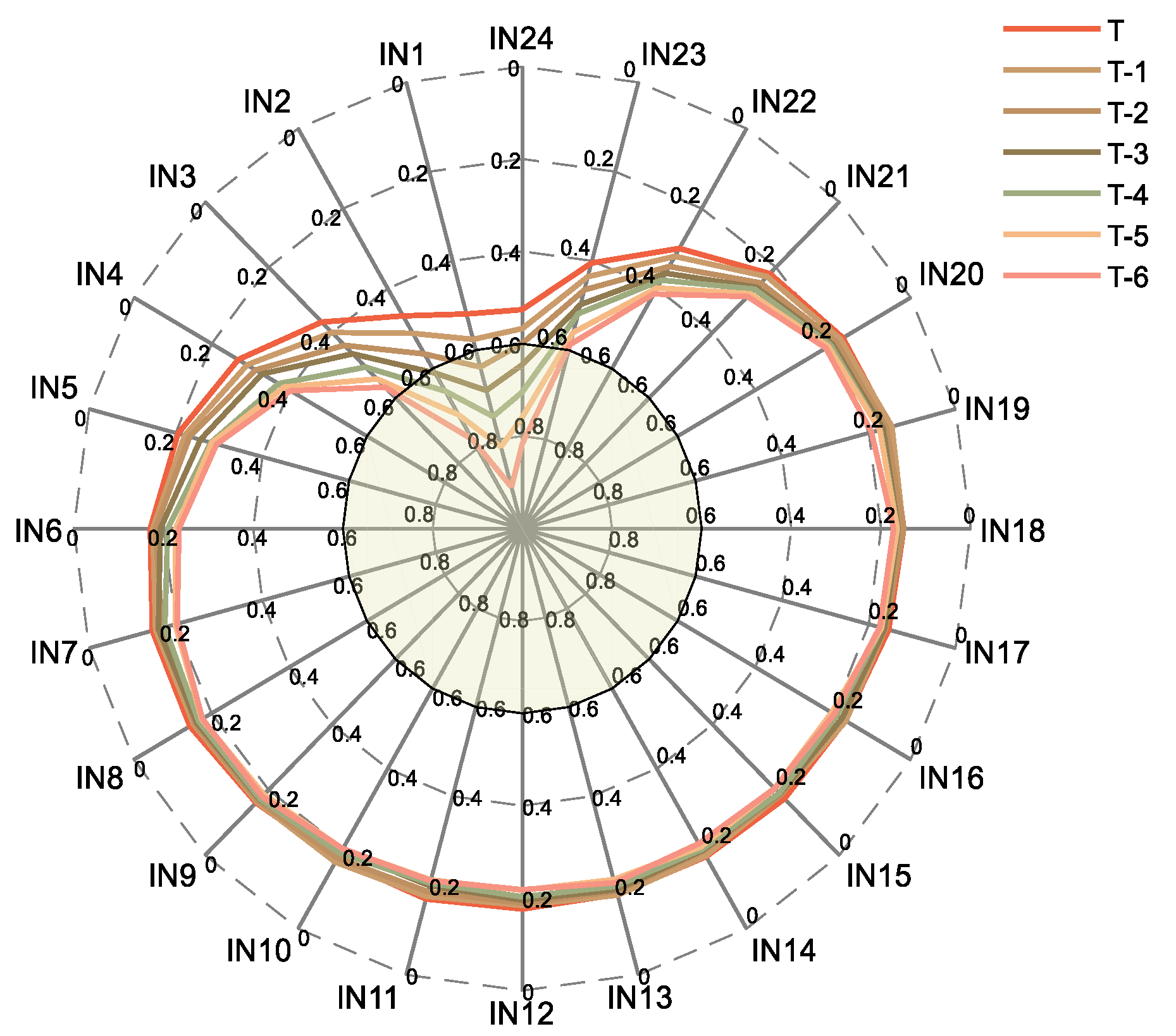

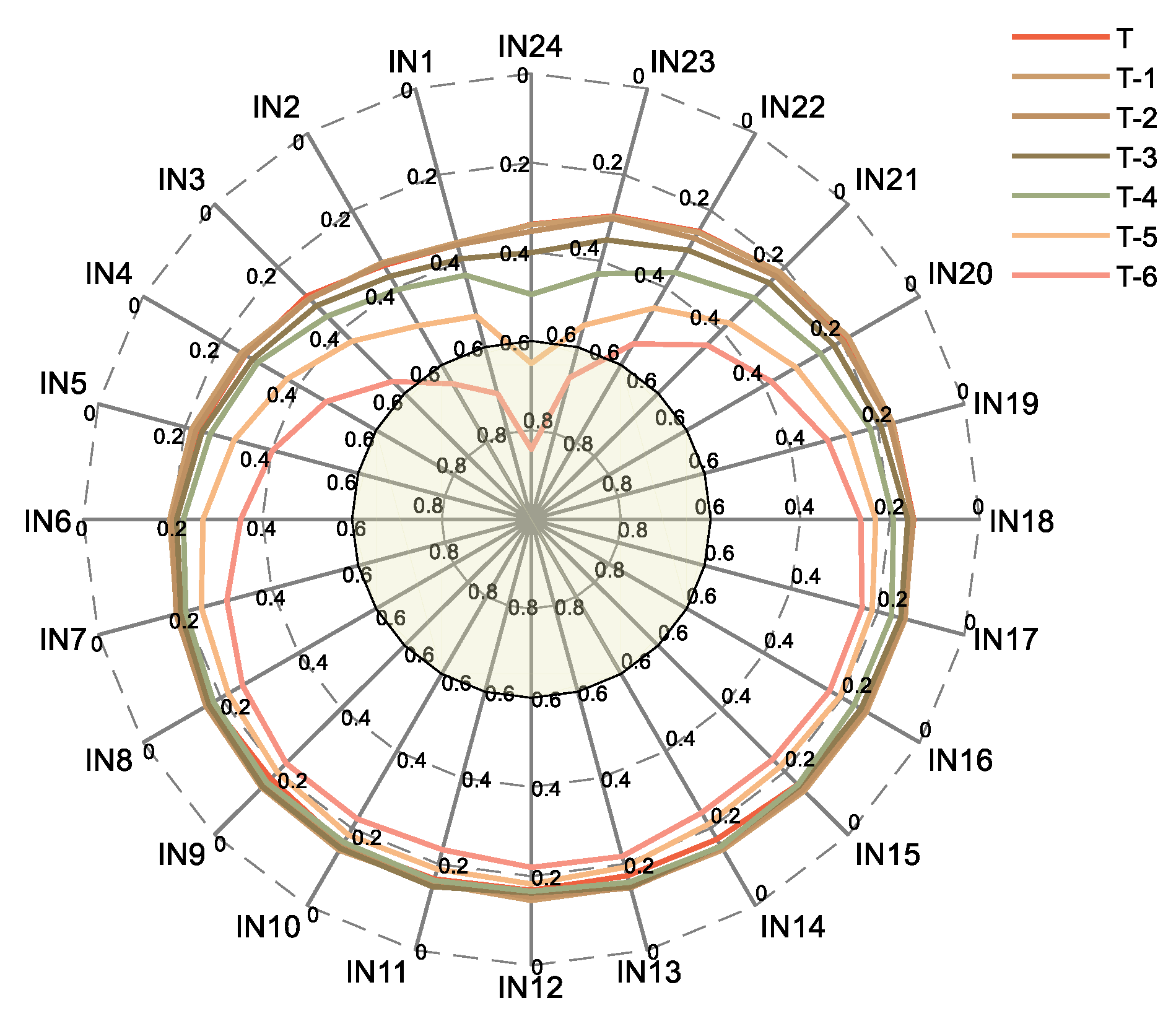

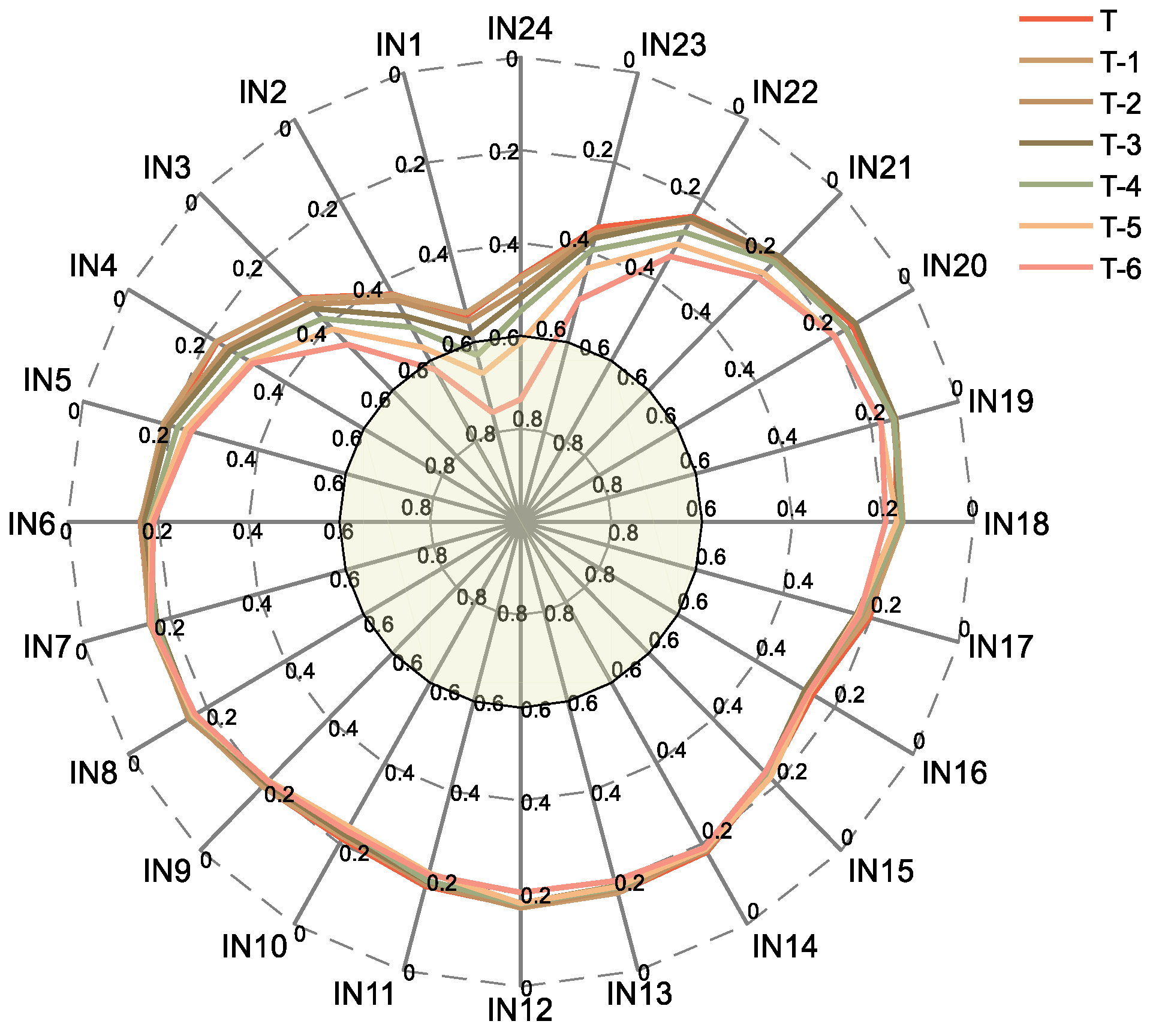

4.2. Feature Combination Selection

In the proposed method, the original load features are first pre-processed. The maximal information coefficient (MIC) between the current-period load and the load in each of the previous seven days (168 time periods) is calculated, and features with an MIC value greater than 0.6 are selected. The MIC values between the loads of the previous 168 periods and the current-period load for datasets 1 to 4 are shown in Figures 6, 7, 8 and 9.

Here, T denotes the day on which the load is to be predicted, T-1 the day before, IN1 the load one hour before the current period, IN2 the load two hours before, and so on.
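The first selection stage can be sketched as follows: build the 168 lagged load columns, score each column against the current load, and keep the columns scoring above 0.6. Exact MIC needs the dynamic-programming grid search of Reshef et al., which is available, for example, in the minepy package. As a simplifying assumption, this sketch only evaluates equal-frequency grids with a·b ≤ n^0.6. Its score therefore approximates the exact MIC and, for data without ties, cannot exceed it. All function names are hypothetical.

```python
import numpy as np

def lag_matrix(load, max_lag=168):
    """Row j holds load[t-1], ..., load[t-max_lag] for target t = max_lag + j."""
    load = np.asarray(load, dtype=float)
    n = len(load)
    X = np.column_stack([load[max_lag - k:n - k] for k in range(1, max_lag + 1)])
    y = load[max_lag:]
    return X, y

def _equal_freq_bins(v, k):
    # Rank-based equal-frequency binning into k bins labelled 0..k-1.
    ranks = np.argsort(np.argsort(v, kind="stable"), kind="stable")
    return ranks * k // len(v)

def _mutual_info(xb, yb, a, b):
    # Mutual information (nats) of two discretized variables.
    joint = np.zeros((a, b))
    np.add.at(joint, (xb, yb), 1)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)
    py = pxy.sum(axis=0, keepdims=True)
    nz = pxy > 0
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))

def approx_mic(x, y, alpha=0.6):
    """Max of MI / log(min(a, b)) over equal-frequency a-by-b grids with a*b <= n**alpha."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    B = max(4, int(len(x) ** alpha))
    x_bins = {a: _equal_freq_bins(x, a) for a in range(2, B // 2 + 1)}
    y_bins = {b: _equal_freq_bins(y, b) for b in range(2, B // 2 + 1)}
    best = 0.0
    for a in range(2, B // 2 + 1):
        for b in range(2, B // a + 1):
            mi = _mutual_info(x_bins[a], y_bins[b], a, b)
            best = max(best, mi / np.log(min(a, b)))
    return best

def mic_filter(X, y, threshold=0.6):
    """Indices of columns whose approximate MIC with y exceeds threshold, plus all scores."""
    scores = np.array([approx_mic(X[:, j], y) for j in range(X.shape[1])])
    return np.flatnonzero(scores > threshold), scores

rng = np.random.default_rng(1)
x = rng.normal(size=500)
noise = rng.normal(size=500)
keep, scores = mic_filter(np.column_stack([x, noise]), 2 * x + 1)
print(keep)  # [0]: only the column functionally related to the target passes 0.6
```

With real data, the call would be `X, y = lag_matrix(hourly_load)` followed by `keep, scores = mic_filter(X, y)`, where `hourly_load` is a hypothetical array holding one quarter's hourly loads.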

After this preliminary screening, a second selection is performed on the remaining features. The environmental features and the encoded date features are added to the feature subset, and the remaining candidate features are ranked by the minimum redundancy maximum relevance (mRMR) method. The top-ranked candidate is then added to the feature subset and the model is trained. If the prediction accuracy improves, the feature is retained and the process continues with the next candidate; if the accuracy decreases, the selection stops. The selected features for the four datasets are listed in Table 4, where t-n denotes the load n hours before the forecast time. The final feature set is used by the model to make predictions.
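The second stage can be sketched in the same way. The mRMR ranking below uses the common difference criterion: relevance to the target minus mean redundancy with the already-ranked features. Mutual information is estimated from eight equal-frequency bins. Both the criterion variant and the bin count are assumptions. `forward_select` implements the stopping rule described above. `evaluate` is a hypothetical callable that trains the model on a feature list and returns a validation error. `base_features` stands for the environmental and date features that are added directly.

```python
import numpy as np

def _discretize(v, k):
    # Equal-frequency binning by rank into k bins labelled 0..k-1.
    ranks = np.argsort(np.argsort(v, kind="stable"), kind="stable")
    return ranks * k // len(v)

def _mi(a, b, k):
    # Mutual information (nats) between two discretized variables.
    joint = np.zeros((k, k))
    np.add.at(joint, (a, b), 1)
    p = joint / joint.sum()
    pa = p.sum(axis=1, keepdims=True)
    pb = p.sum(axis=0, keepdims=True)
    nz = p > 0
    return float(np.sum(p[nz] * np.log(p[nz] / (pa @ pb)[nz])))

def _mrmr_score(j, order, relevance, Xd, k):
    # Relevance of candidate j minus its mean redundancy with ranked features.
    if not order:
        return relevance[j]
    redundancy = np.mean([_mi(Xd[j], Xd[s], k) for s in order])
    return relevance[j] - redundancy

def mrmr_rank(X, y, k=8):
    """Greedy mRMR ranking (difference form) of the columns of X."""
    Xd = [_discretize(X[:, j], k) for j in range(X.shape[1])]
    yd = _discretize(y, k)
    relevance = [_mi(xj, yd, k) for xj in Xd]
    remaining = list(range(X.shape[1]))
    order = []
    while remaining:
        best = max(remaining, key=lambda j: _mrmr_score(j, order, relevance, Xd, k))
        order.append(best)
        remaining.remove(best)
    return order

def forward_select(ranked, base_features, evaluate):
    """Add ranked candidates one at a time; stop at the first that does not lower the error."""
    selected = list(base_features)
    best_err = evaluate(selected)
    for j in ranked:
        err = evaluate(selected + [j])
        if err >= best_err:
            break
        selected.append(j)
        best_err = err
    return selected, best_err

rng = np.random.default_rng(0)
x0, x2 = rng.normal(size=2000), rng.normal(size=2000)
X = np.column_stack([x0, x0.copy(), x2])   # column 1 duplicates column 0
y = 2 * x0 + x2
order = mrmr_rank(X, y)
print(order)  # [0, 2, 1]: the redundant copy is ranked last
```

The duplicate column is the case mRMR is designed for. A pure relevance ranking would place it second, because it is exactly as relevant as column 0. The redundancy penalty pushes it below the weaker but independent column 2.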

4.3. Parameter Settings

To validate the performance of the model, other popular models (LSTM, GRU, and SVR) are used for comparison. For fairness, the parameters of the different models are kept as consistent as possible. All parameters in this article are either optimized with a genetic algorithm (GA) or set to commonly used values. To eliminate errors caused by randomness, each model is run multiple times and the results are averaged. The specific parameters are shown in Table 5.
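This section does not give the GA configuration (population size, operators, number of generations). The following is therefore only a minimal integer-coded GA sketch of how such hyperparameter tuning could work, using tournament selection, uniform crossover, random-reset mutation, and elitism. The `fitness` callable is hypothetical. An example would be a function that trains a network with a given number of hidden units and input window and returns its validation RMSE.

```python
import random

def ga_minimize(fitness, bounds, pop_size=20, generations=30,
                mutation_rate=0.2, seed=0):
    """Minimal integer-coded GA that minimizes fitness(genome).

    bounds: one inclusive (low, high) integer range per hyperparameter.
    """
    rng = random.Random(seed)
    cache = {}

    def score(genome):
        # Cache results: each call may mean training a whole model.
        key = tuple(genome)
        if key not in cache:
            cache[key] = fitness(list(genome))
        return cache[key]

    def tournament(pop, size=3):
        return min(rng.sample(pop, size), key=score)

    pop = [[rng.randint(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    for _ in range(generations):
        children = [list(min(pop, key=score))]  # elitism: keep the best genome
        while len(children) < pop_size:
            p1, p2 = tournament(pop), tournament(pop)
            # Uniform crossover: each gene comes from either parent.
            child = [g1 if rng.random() < 0.5 else g2 for g1, g2 in zip(p1, p2)]
            # Random-reset mutation within each gene's bounds.
            for i, (lo, hi) in enumerate(bounds):
                if rng.random() < mutation_rate:
                    child[i] = rng.randint(lo, hi)
            children.append(child)
        pop = children
    best = min(pop, key=score)
    return best, score(best)

# Toy objective standing in for a validation error over (hidden_units, window_hours).
def toy_error(genome):
    units, window = genome
    return (units - 64) ** 2 + 10 * (window - 24) ** 2

best, err = ga_minimize(toy_error, bounds=[(8, 128), (1, 48)], pop_size=30, generations=50)
print(best, err)
```

Elitism guarantees that the best error found never gets worse from one generation to the next. Caching keeps repeated genomes from triggering another expensive training run.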

4.4. Experiment Results and Discussion

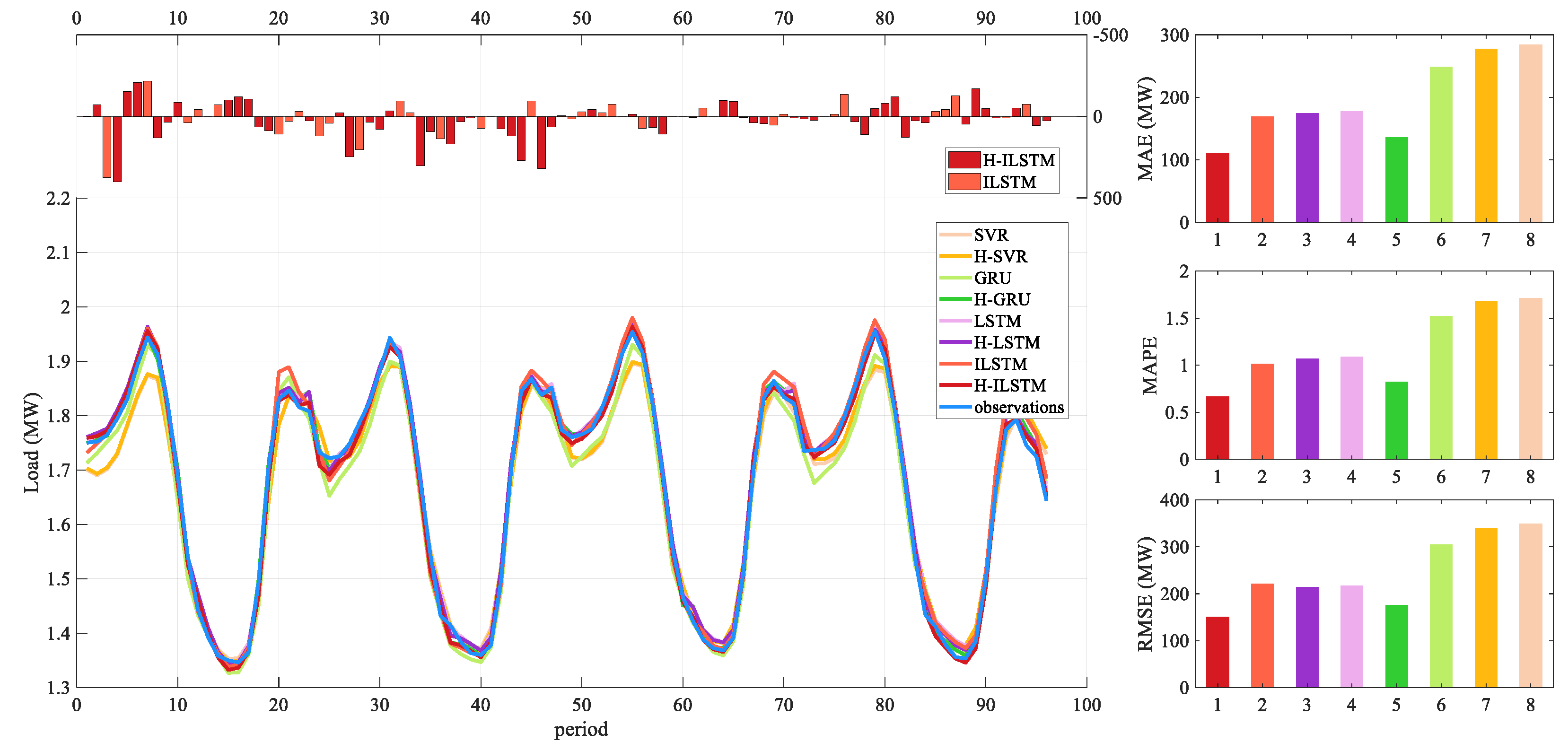

In this section, the proposed method is compared with currently popular models for short-term load prediction. The results of the models for 1-step, 2-step, and 3-step load forecasting are presented below, and MAE, MAPE, RMSE, and R² are used to evaluate model accuracy. The CPU time of each algorithm (CT) indicates the time required for model computation, and S is used to evaluate the future operational risk arising from the model predictions.
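For reference, the four accuracy metrics and the CPU-time measurement can be computed as follows. These are the standard definitions: MAPE is in percent, and R² is one minus the ratio of the residual to the total sum of squares. The paper's exact formulas are not shown in this section. S is omitted because its definition is not given here.

```python
import math
import time

def mae(y, p):
    return sum(abs(a - b) for a, b in zip(y, p)) / len(y)

def mape(y, p):
    # Percent; assumes no actual load value is zero.
    return 100.0 * sum(abs((a - b) / a) for a, b in zip(y, p)) / len(y)

def rmse(y, p):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, p)) / len(y))

def r2(y, p):
    mean = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, p))
    ss_tot = sum((a - mean) ** 2 for a in y)
    return 1.0 - ss_res / ss_tot

def cpu_time(fn, *args, **kwargs):
    """Run fn and return (result, CPU seconds) measured with time.process_time()."""
    start = time.process_time()
    result = fn(*args, **kwargs)
    return result, time.process_time() - start

y_true = [100.0, 200.0, 300.0, 400.0]
y_pred = [110.0, 190.0, 310.0, 390.0]
print(mae(y_true, y_pred), rmse(y_true, y_pred), r2(y_true, y_pred), mape(y_true, y_pred))
```

Here every forecast is off by 10, so MAE and RMSE both equal 10. MAPE (about 5.2%) weights the same absolute error more heavily at low load levels.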

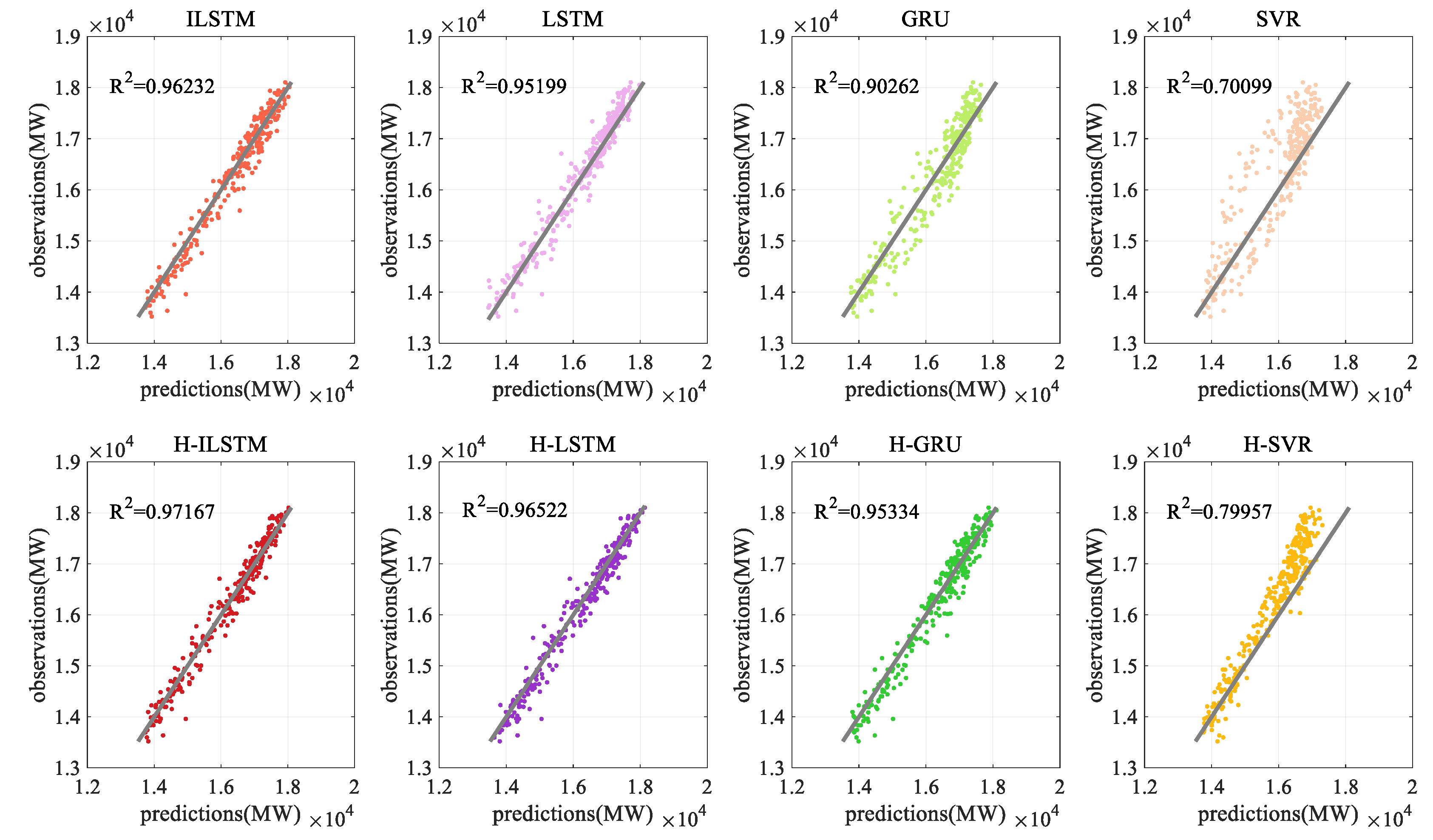

(1) The analysis of the one-step prediction

The prediction results of each model are shown in Table 6, where H-ILSTM denotes the model that combines hybrid feature selection with ILSTM. Detailed index comparisons for dataset 1 are shown in Figures 10 and 11, which present the load forecasts for 96 time periods of the dataset 1 test set. Bold values in the table indicate the best results among the eight models. The hybrid feature selection method improves the models to varying degrees on every dataset. Among them, the H-ILSTM model has the highest prediction accuracy and the smallest standard deviation of prediction error. Its improvement over the model without hybrid feature selection is shown in Table 7: the forecast accuracy improved by nearly 50%.
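This section reports relative improvements, such as the nearly 50% above, without restating how they are computed. A common definition is assumed here for illustration. Subscript b denotes the baseline model and p the proposed model:

```latex
P_{\mathrm{MAE}} = \frac{\mathrm{MAE}_{b} - \mathrm{MAE}_{p}}{\mathrm{MAE}_{b}} \times 100\%,
\qquad
P_{R^2} = \frac{R^2_{p} - R^2_{b}}{R^2_{b}} \times 100\%
```

P_MAPE and P_RMSE are defined in the same way as P_MAE. For example, suppose a baseline has an MAE of 200 and the proposed model an MAE of 100. Then P_MAE = (200 - 100)/200 × 100% = 50%.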

H-ILSTM is also very competitive with recently popular models. Table 8 compares its accuracy with that of the other prediction models, with each evaluation index averaged over the four datasets. H-ILSTM outperforms the original LSTM network, improving prediction accuracy by about 20%, and it improves significantly on the machine learning model.

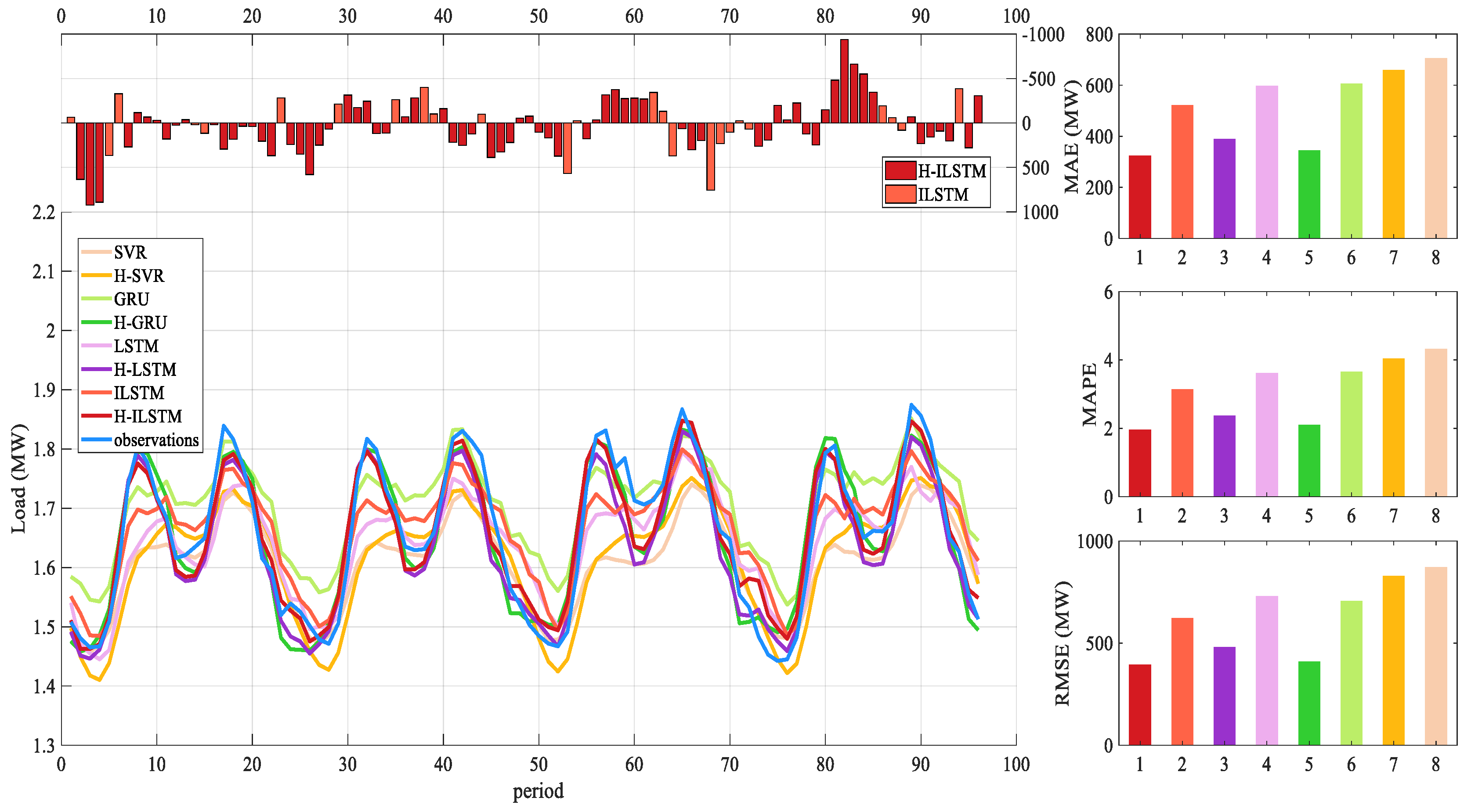

(2) The analysis of the two-step prediction

Prediction accuracy is lower than in the one-step case, but the model still maintains high accuracy. The two-step prediction results of the models on the four datasets are shown in Table 9, where bold values indicate the best results among the eight models. The forecast indicators are compared in Figures 12 and 13, which show the load forecasts for 96 time periods of the dataset 2 test set. H-ILSTM performs best on all four datasets.

Compared with the other models, H-ILSTM improves to varying degrees on the four datasets; the improvements are shown in Table 11.

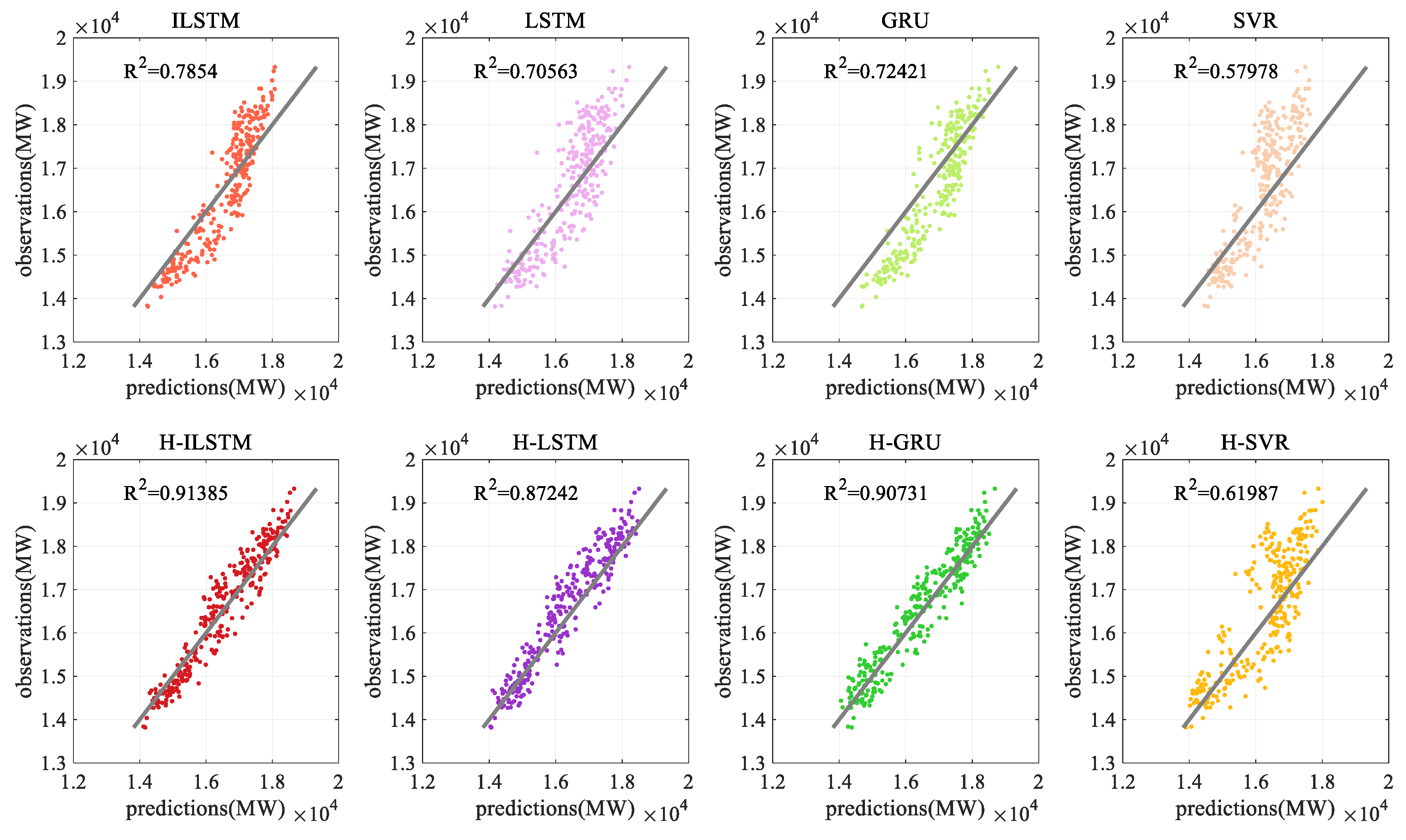

(3) The analysis of the three-step prediction

The model still maintains relatively high prediction accuracy. The three-step prediction results of the models on the four datasets are shown in Table 12, where bold values indicate the best results among the eight models. The forecast indicators are compared in Figures 14 and 15, which show the load forecasts for 96 time periods of the dataset 4 test set.

The hybrid feature selection method improves the ILSTM model, with each forecast evaluation index improving by more than 20%. The details are shown in Table 13.

Compared with the other models, H-ILSTM improves the average of each evaluation index over the four datasets by about 15%. The details are shown in Table 14.

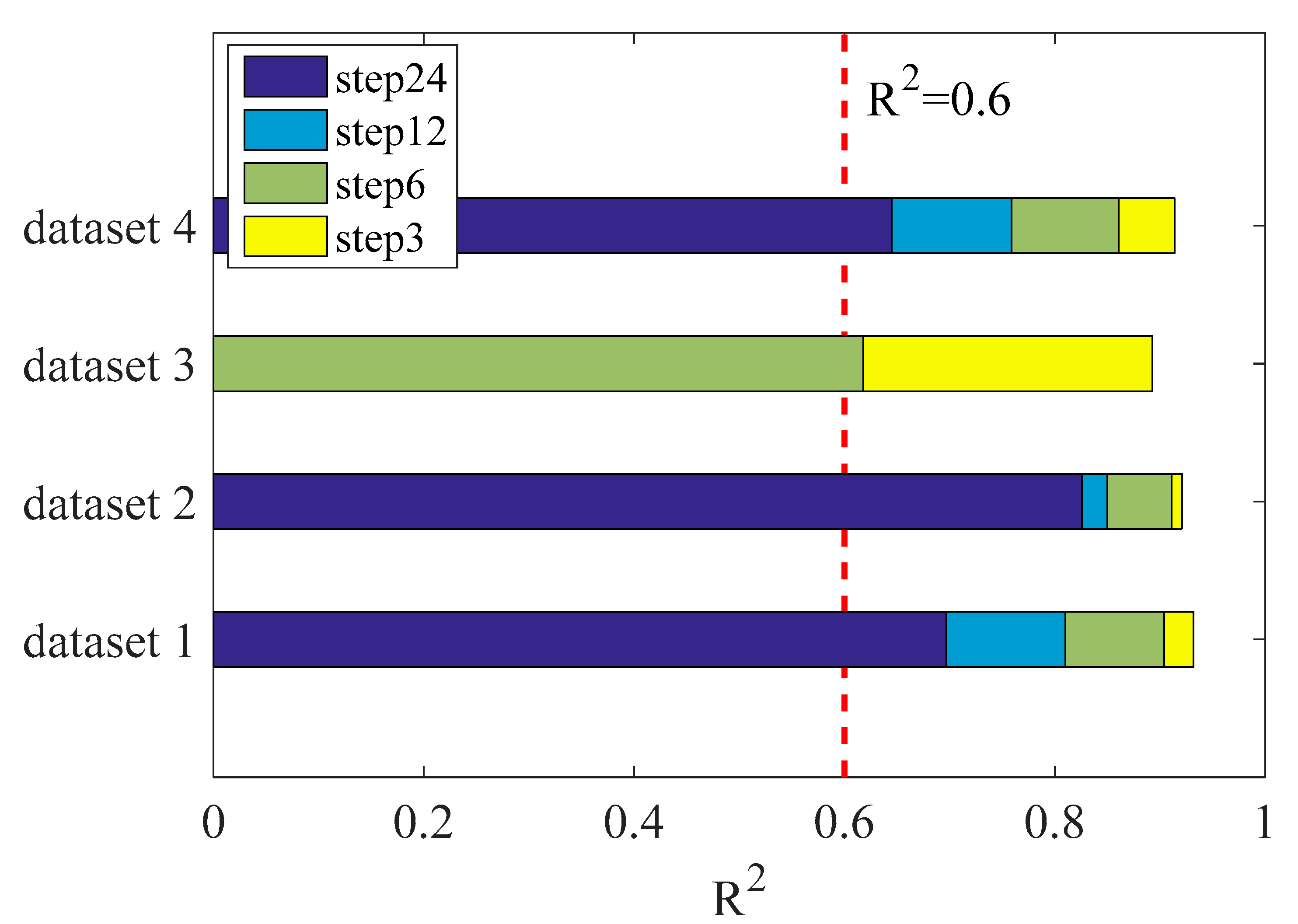

(4) The analysis of the multi-step prediction

This section tests the limit of the prediction ability of the proposed H-ILSTM model. Multi-step predictions are made for each of the four datasets with a prediction threshold: when the prediction accuracy falls below this threshold, prediction stops. The threshold is evaluated using the coefficient of determination R². The results are shown in Figure 16.

Figure 16 shows that the model performs best on dataset 2, where it accurately predicts the load for the next 24 h. On datasets 1 and 4, the model also predicts the load for the next 24 periods fairly accurately. Performance on dataset 3 is only moderate: the model can predict the load for the next 6 h only, because the large fluctuations in the peaks limit its multi-step ability. On datasets 1, 2, and 4, by contrast, the model performs well: it accurately predicts the load for the next 6 h and fairly accurately for the next 24 h. The model therefore still has room for improvement on load datasets with large fluctuations.
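The threshold procedure can be sketched as below. This section does not state the R² threshold, and it does not say whether multi-step forecasts are produced recursively or directly. The threshold value 0.9, the recursive strategy, and the callables `one_step` and `evaluate_horizon` are therefore all hypothetical.

```python
def r2(y_true, y_pred):
    mean = sum(y_true) / len(y_true)
    ss_res = sum((a - b) ** 2 for a, b in zip(y_true, y_pred))
    ss_tot = sum((a - mean) ** 2 for a in y_true)
    return 1.0 - ss_res / ss_tot

def recursive_forecast(history, one_step, steps, window):
    """Recursive multi-step strategy: each prediction is fed back as an input."""
    buf = list(history[-window:])
    preds = []
    for _ in range(steps):
        y_hat = one_step(buf[-window:])
        preds.append(y_hat)
        buf.append(y_hat)
    return preds

def max_reliable_horizon(evaluate_horizon, threshold=0.9, max_steps=48):
    """Largest horizon h whose h-step-ahead forecasts still reach R^2 >= threshold.

    evaluate_horizon(h) is a hypothetical callable returning (y_true, y_pred)
    for all h-step-ahead forecasts on the test set.
    """
    reliable = 0
    for h in range(1, max_steps + 1):
        y_true, y_pred = evaluate_horizon(h)
        if r2(y_true, y_pred) < threshold:
            break  # accuracy fell below the threshold: stop extending the horizon
        reliable = h
    return reliable

# Toy check: a naive "last value + 1" one-step model rolled forward three steps.
print(recursive_forecast([1.0, 2.0, 3.0], lambda w: w[-1] + 1.0, steps=3, window=2))  # [4.0, 5.0, 6.0]
```

In the recursive strategy, errors compound because each prediction becomes an input to the next. This is consistent with accuracy degrading first on the most volatile dataset.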

(5) Comparison experiment between the proposed model and the persistence model

A good baseline for time series forecasting is the persistence model, in which the last observation is carried forward as the prediction; it embodies the “today equals tomorrow” concept [43]. To better evaluate the proposed method, we compared it with the persistence model using MAE, MAPE, RMSE, and R². This section reports the single-step prediction experiment on dataset 1.

Figure 17 shows the predictions of the two models, and the evaluation indicators are listed in Table 15. The persistence model is close to the proposed model in R², but worse on all other indicators.
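The persistence baseline is simple enough to state in a few lines. With `lag=1` it carries the last hourly observation forward, as in the single-step comparison above. The `lag=24` option (same hour of the previous day) is only an illustrative variant of the “today equals tomorrow” idea, not the configuration behind Table 15.

```python
def persistence_forecast(series, lag=1):
    """Persistence baseline: predict y[t] = y[t - lag]. Returns (y_true, y_pred)."""
    if lag < 1:
        raise ValueError("lag must be at least 1")
    series = list(series)
    return series[lag:], series[:-lag]

def mae(y_true, y_pred):
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)

hourly = [10.0, 12.0, 11.0, 13.0]
y_true, y_pred = persistence_forecast(hourly)   # last observation carried forward
print(y_true, y_pred, mae(y_true, y_pred))
```

Persistence can score a high R² on smooth hourly load simply because consecutive hours are strongly correlated. This helps explain why it comes close to the proposed model on R² while trailing on the error-based metrics.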

5. Conclusions

STLF plays a very important guiding role in power grid operation. To improve the accuracy of short-term load forecasting, this paper starts from feature engineering: it takes into account the factors that affect the load, such as weather conditions and date types, and adopts hybrid feature selection. The improved LSTM network is then used for multi-step prediction. Datasets covering four periods in Hubei Province are selected, and the proposed model is compared with LSTM, GRU, and SVR models that also use hybrid feature selection. Prediction performance is evaluated with MAE, RMSE, MAPE, and R². , , and are used to reflect the difference of prediction results between models. The experimental results show that, averaged over the four datasets, the prediction accuracy of the ILSTM model with hybrid feature selection is more than 20% higher than that of the ILSTM model without it, and the accuracy of the H-ILSTM model is about 15% higher than that of the other models using hybrid feature selection. We also tested the multi-step prediction ability of the proposed model, and its performance was satisfactory. The conclusions can be summarized as follows:

(1) The hybrid feature selection method can improve the prediction accuracy of the model;

(2) The ILSTM model is better than other traditional forecasting models in short-term load forecasting;

(3) The H-ILSTM model has a good prediction effect in multi-step prediction.

Therefore, the proposed method performs very well in short-term multi-step load forecasting and can accurately predict the load over the next few periods. The model is competitive in this field.

The proposed model also has some shortcomings. First, feature selection only considers the optimal combination of historical loads; other influencing factors are simply normalized and used as model inputs. Second, feature selection is time-consuming. We will address these issues in future research.