A Dynamic Adam Based Deep Neural Network for Fault Diagnosis of Oil-Immersed Power Transformers

Abstract

1. Introduction

2. Feasibility Analysis of the DADDNN

2.1. Dynamic Adam Optimization Algorithm

- It is appropriate for non-stationary objectives and problems.

- The magnitude of each parameter update is invariant to rescaling of the gradient. The upper limit of the step size is set by the hyper-parameters, which keeps every update within a stable range.

- It is invariant to diagonal rescaling of the gradients and copes better with noisy samples or sparse gradients.

- The hyper-parameters generalize well, so only minor tuning is needed for different datasets.

Specific Implementation of Dynamic Adam

- t: Iteration t

- $f(\theta)$: Stochastic objective function with parameters $\theta$

- $\alpha$: Initial learning rate

- : Attenuation coefficient of learning rate

- $\epsilon$: A constant for numerical stability

- $\beta_1, \beta_2$: Exponential decay rates for the moment estimates

- $m_t$: First moment estimate at iteration $t$

- $v_t$: Second moment estimate at iteration $t$

- $\hat{m}_t$: Bias-corrected first moment estimate at iteration $t$

- $\hat{v}_t$: Bias-corrected second moment estimate at iteration $t$

- $g_t^2$: The element-wise square $g_t \odot g_t$ of the gradient $g_t$

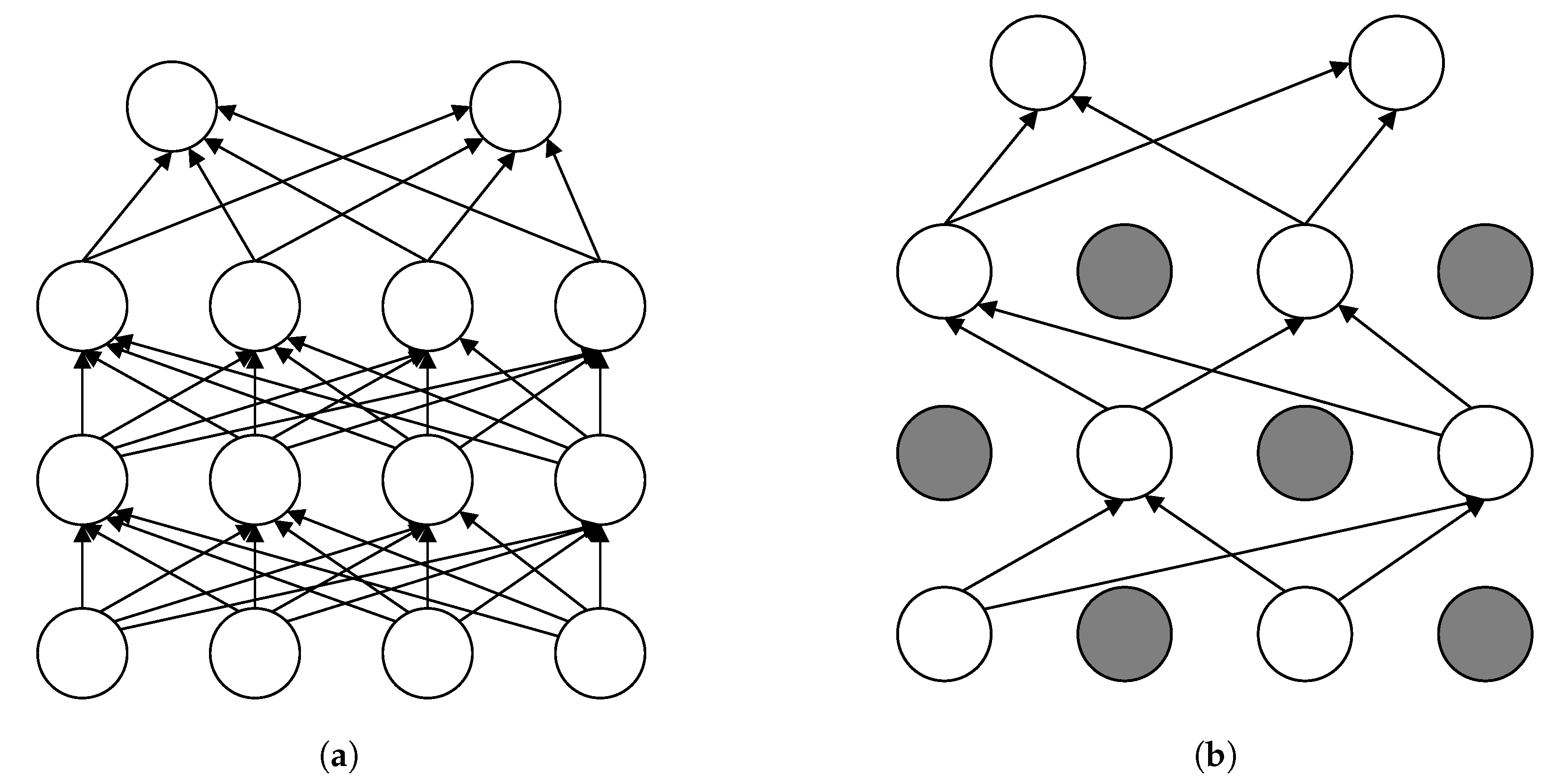
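
The quantities listed above (initial learning rate, attenuation coefficient, stability constant, decay rates, and the raw and bias-corrected moment estimates) can be put together in a short NumPy sketch. This is an illustration under stated assumptions, not the authors' implementation: the function names `dynamic_adam_step` and `minimize`, the parameter name `decay`, and in particular the inverse-time schedule `alpha / (1 + decay * t)` are assumptions, since the exact attenuation rule is not recoverable from the text here. The moment updates follow the standard Adam formulation.

```python
import numpy as np


def dynamic_adam_step(theta, grad, m, v, t, alpha=0.001, decay=1e-4,
                      beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update with a decayed learning rate (schedule is an assumption)."""
    # Assumed attenuation rule: inverse-time decay of the initial learning rate.
    alpha_t = alpha / (1.0 + decay * t)
    # Exponential moving averages of the gradient and its element-wise square.
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad ** 2
    # Bias correction compensates for the zero initialization of m and v.
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    theta = theta - alpha_t * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v


def minimize(grad_fn, theta0, steps=2000, **kwargs):
    """Run `steps` updates from theta0; grad_fn returns the gradient at theta."""
    theta = np.asarray(theta0, dtype=float)
    m = np.zeros_like(theta)
    v = np.zeros_like(theta)
    for t in range(1, steps + 1):  # t starts at 1 so the bias correction is defined
        theta, m, v = dynamic_adam_step(theta, grad_fn(theta), m, v, t, **kwargs)
    return theta


# Toy usage: minimize f(theta) = (theta - 3)^2, whose gradient is 2 * (theta - 3).
theta_star = minimize(lambda th: 2.0 * (th - 3.0), [0.0], steps=2000, alpha=0.05)
```

On the very first step the bias-corrected ratio equals the sign of the gradient, so the update magnitude is at most the learning rate regardless of how large or small the gradient is. That is the step-size bound and the scale invariance described in the list of properties above.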

2.2. Dropout Technique
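
As a rough illustration of the technique named in this subsection, the sketch below applies dropout to a batch of activations with NumPy. It uses the common "inverted" variant, which rescales the surviving units during training so that no scaling is needed at test time; whether the DADDNN model uses this variant is an assumption, and the function name `dropout` and its parameters are hypothetical.

```python
import numpy as np


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` during training."""
    if not training or rate == 0.0:
        # At test time the layer is the identity.
        return activations
    keep_prob = 1.0 - rate
    # Boolean mask: True for units that are kept in this forward pass.
    mask = rng.random(activations.shape) < keep_prob
    # Dividing by keep_prob keeps the expected activation unchanged.
    return activations * mask / keep_prob


rng = np.random.default_rng(0)
hidden = np.ones((1000, 100))
dropped = dropout(hidden, rate=0.5, rng=rng)
```

With a rate of 0.5, each entry of `dropped` is either 0 or 2, and the mean stays close to 1.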

3. Realization and Discussions of Transformer Fault Diagnosis Based on DADDNN Model

3.1. Transformer Fault Type and Data Acquisition

3.2. Selection of the Feature Vector

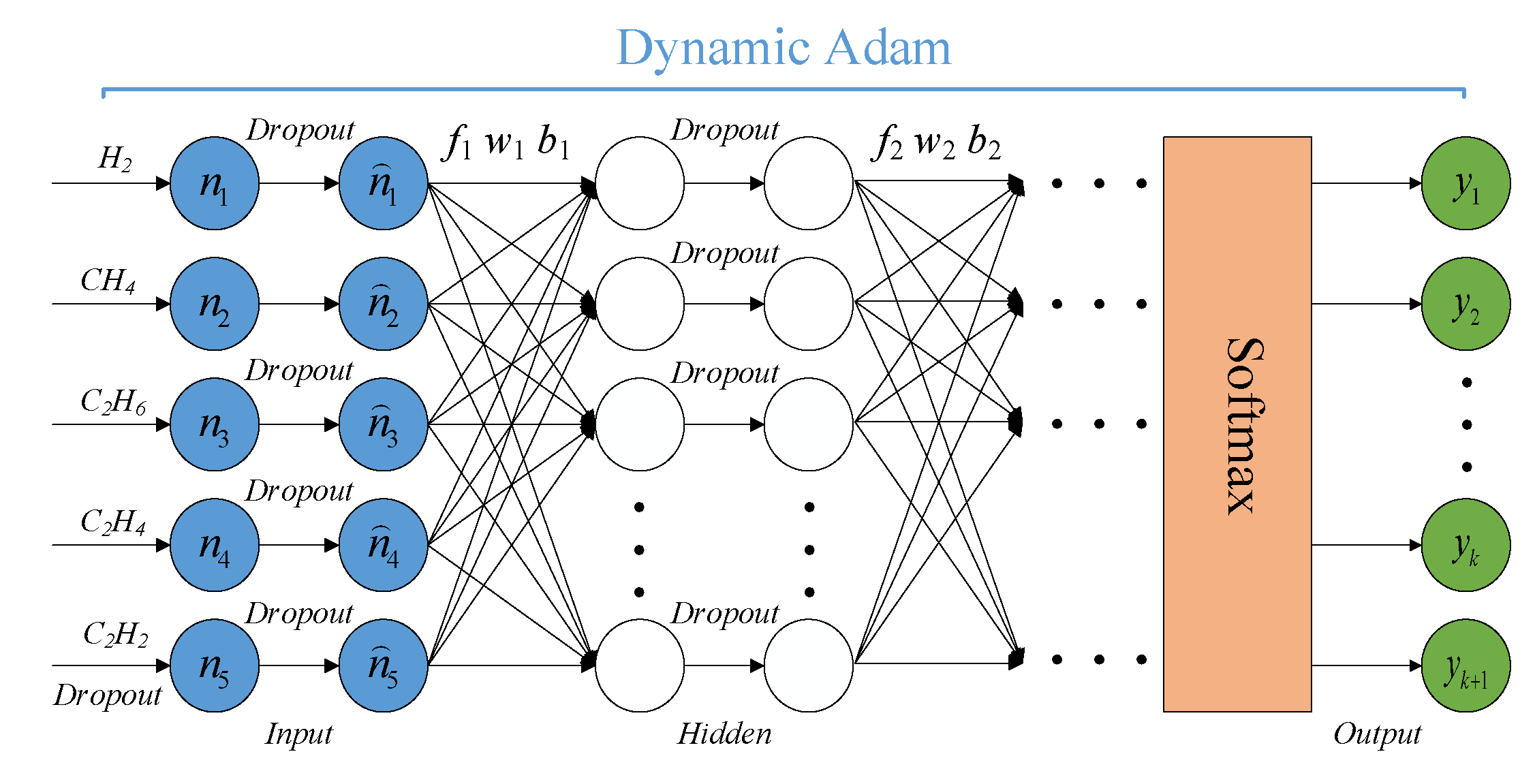

3.3. Transformer Fault Diagnosis Instantiation Model

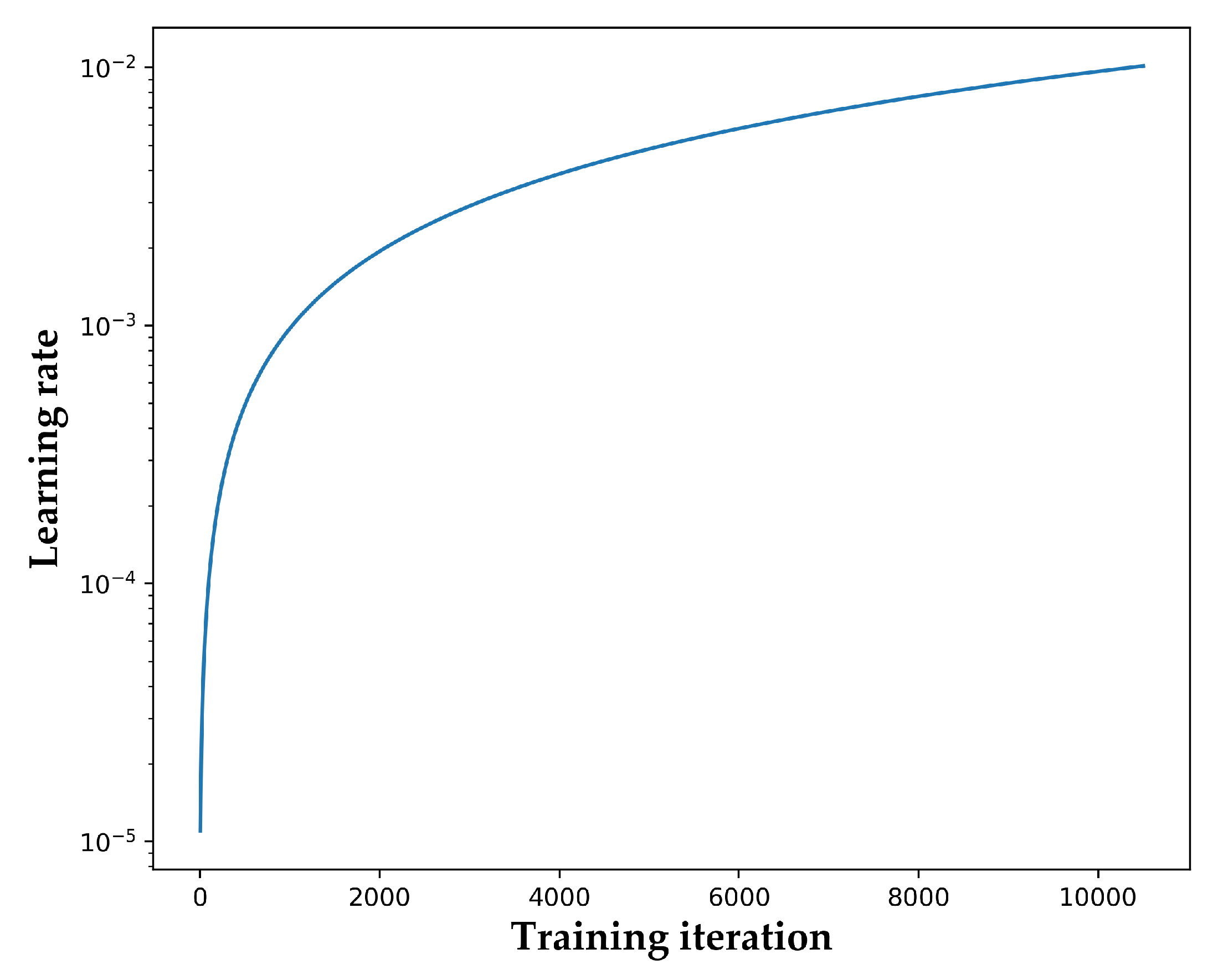

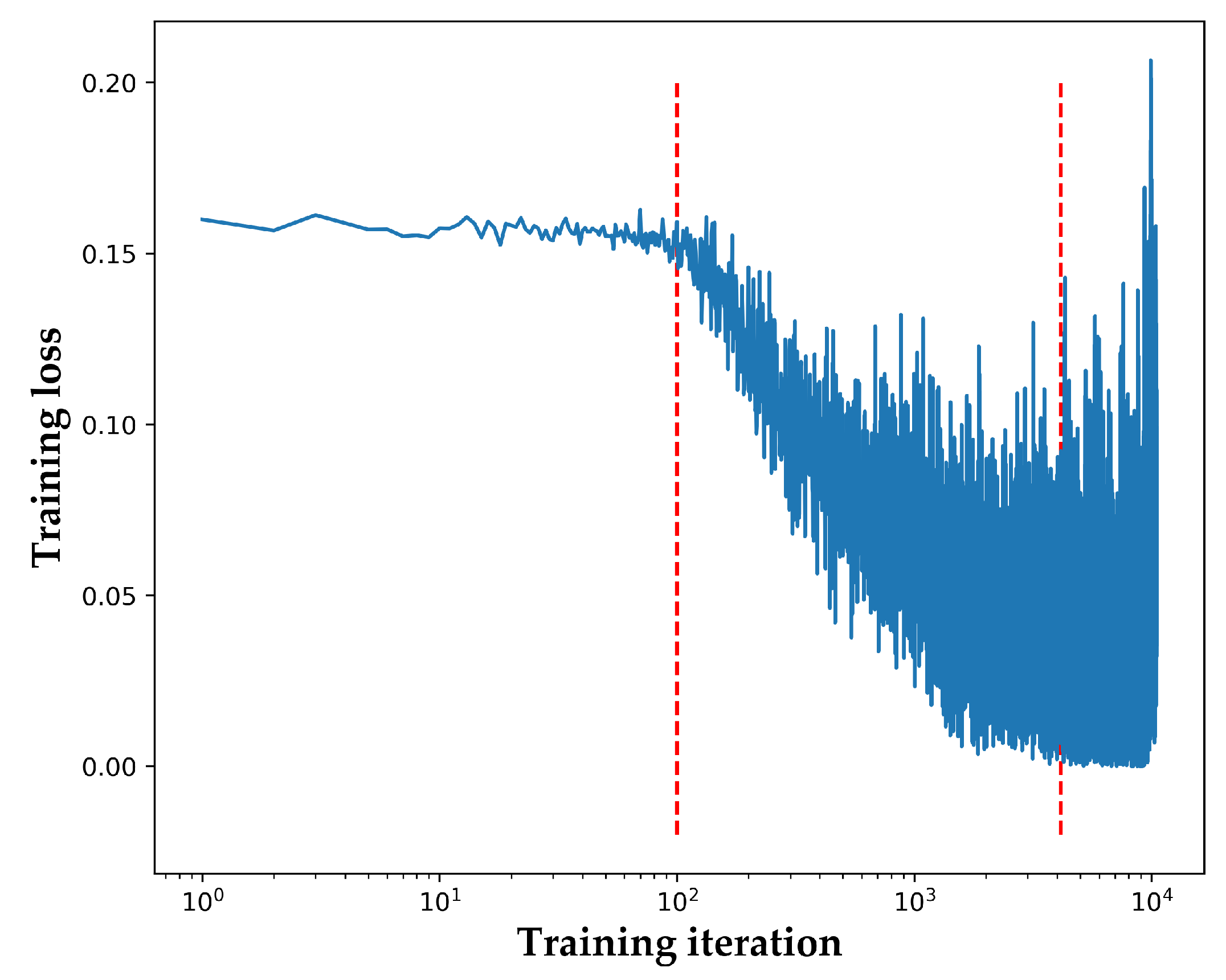

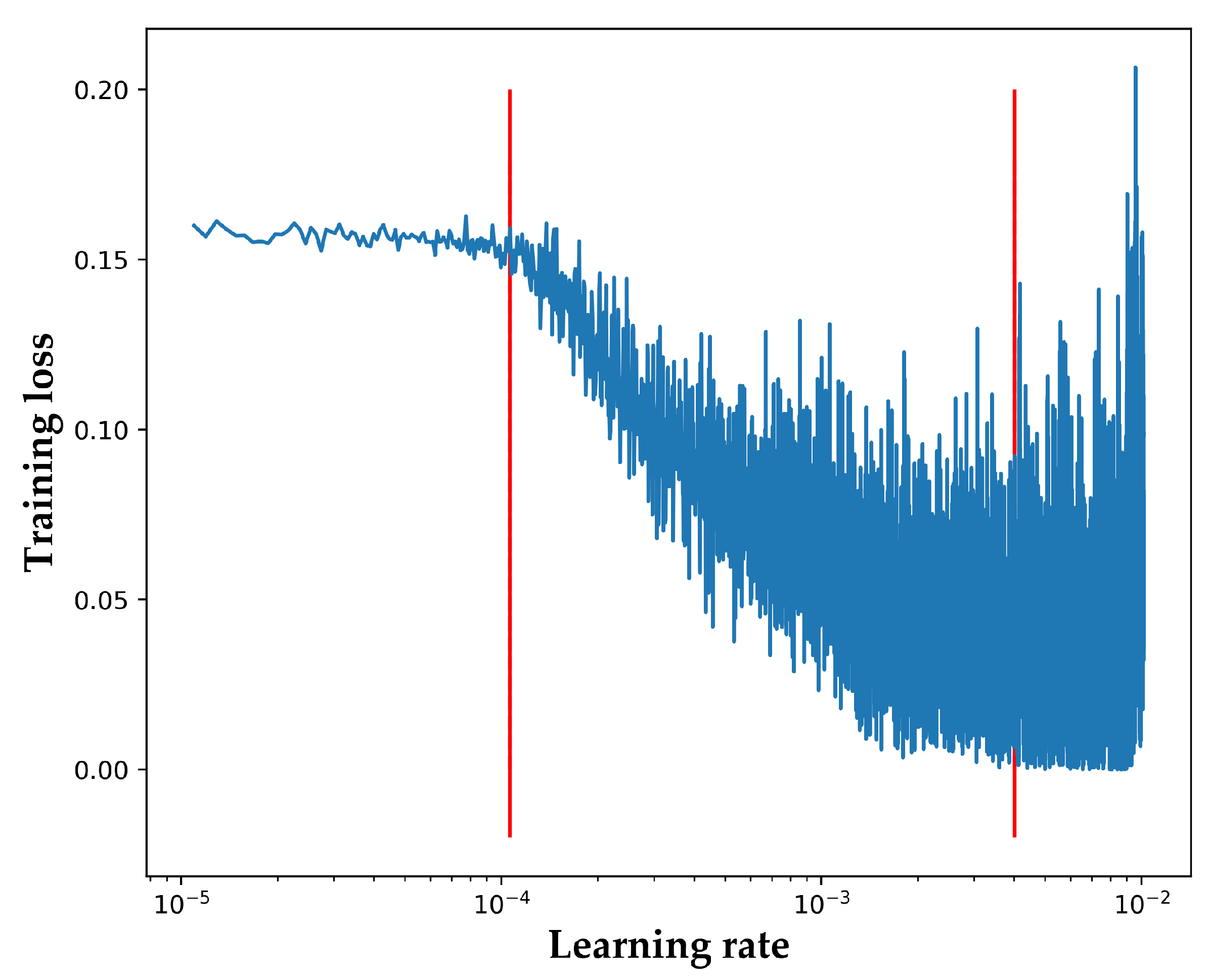

3.3.1. Learning Rate

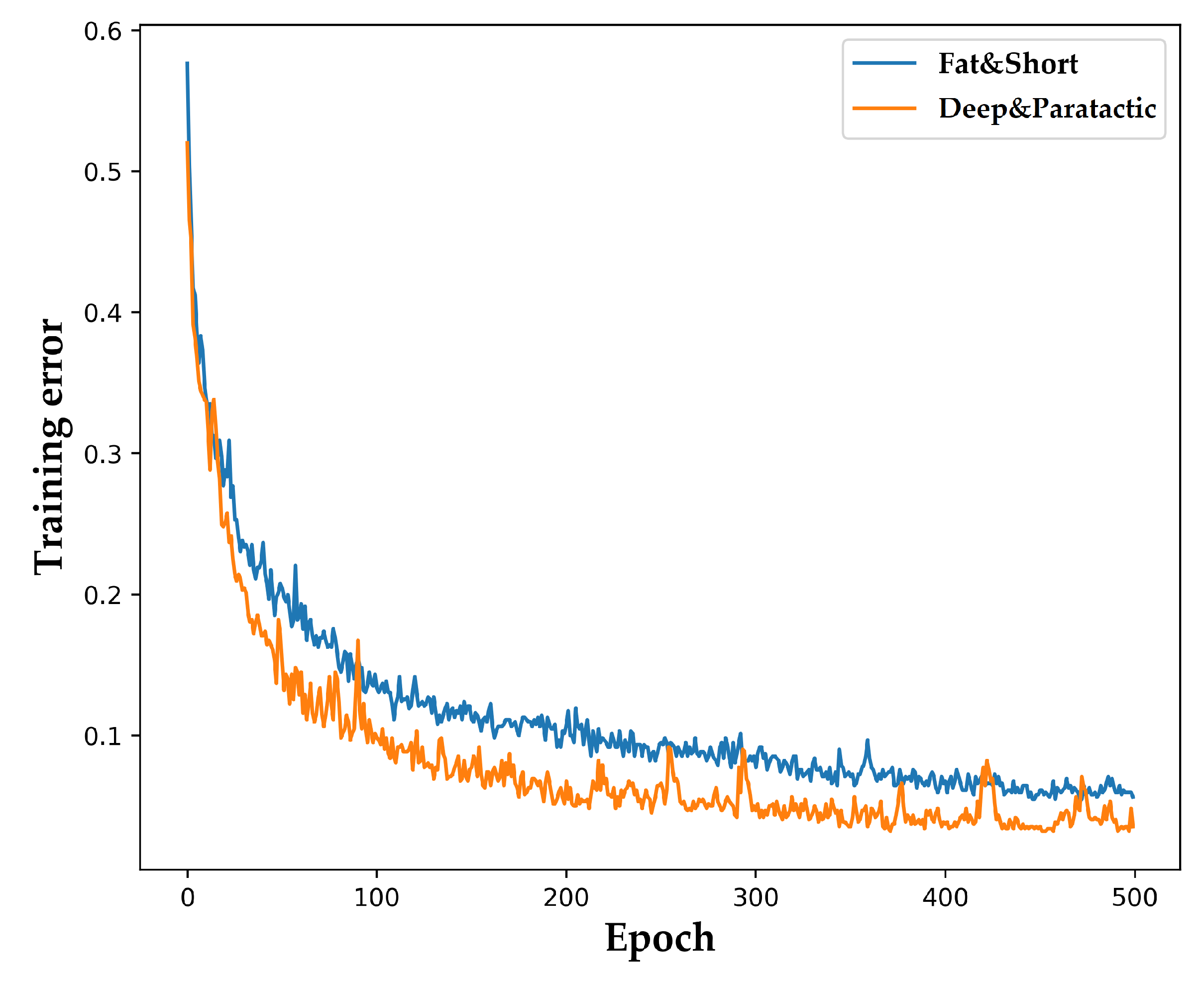

3.3.2. Paratactic Network Structure

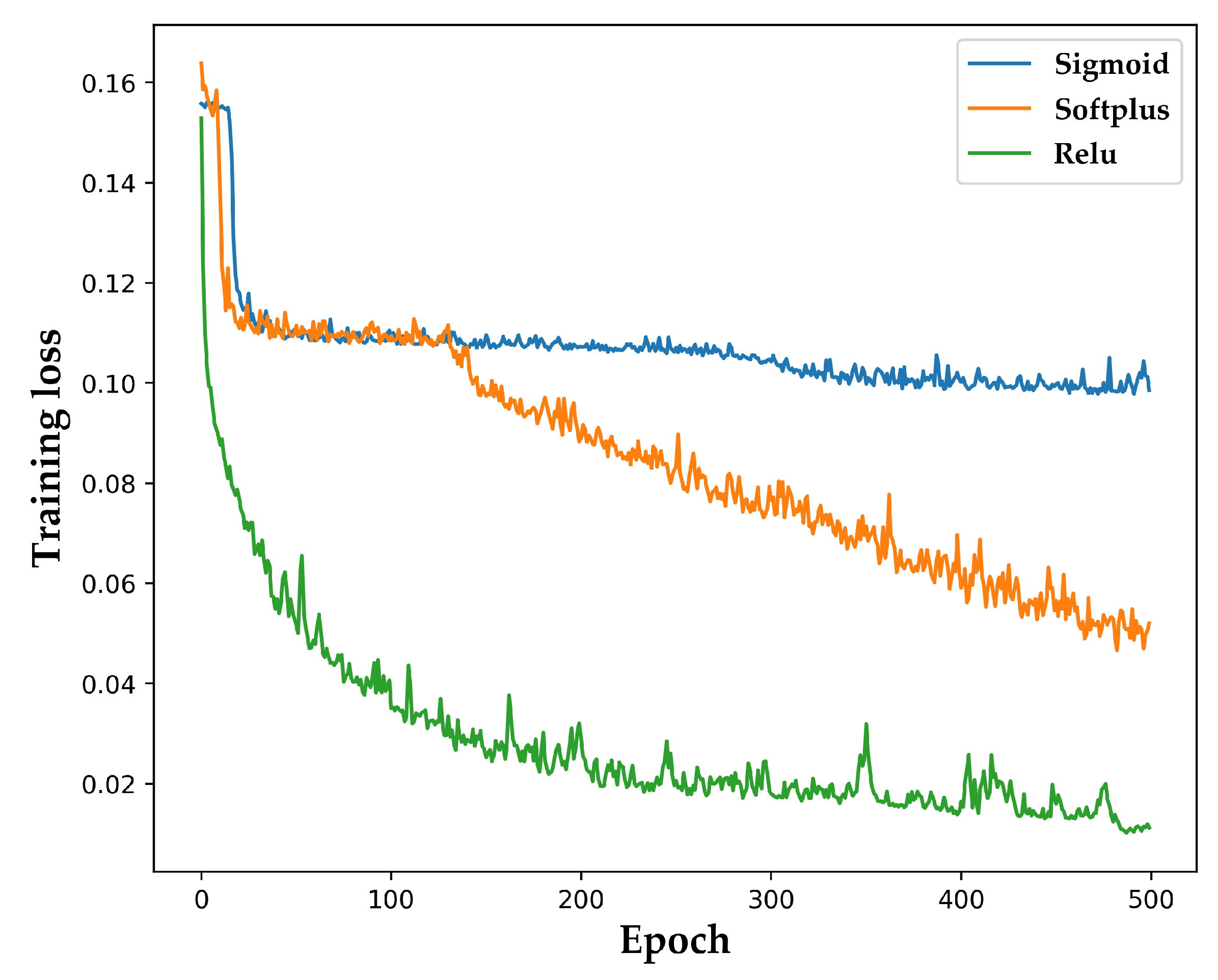

3.3.3. Activation Function “Relu”

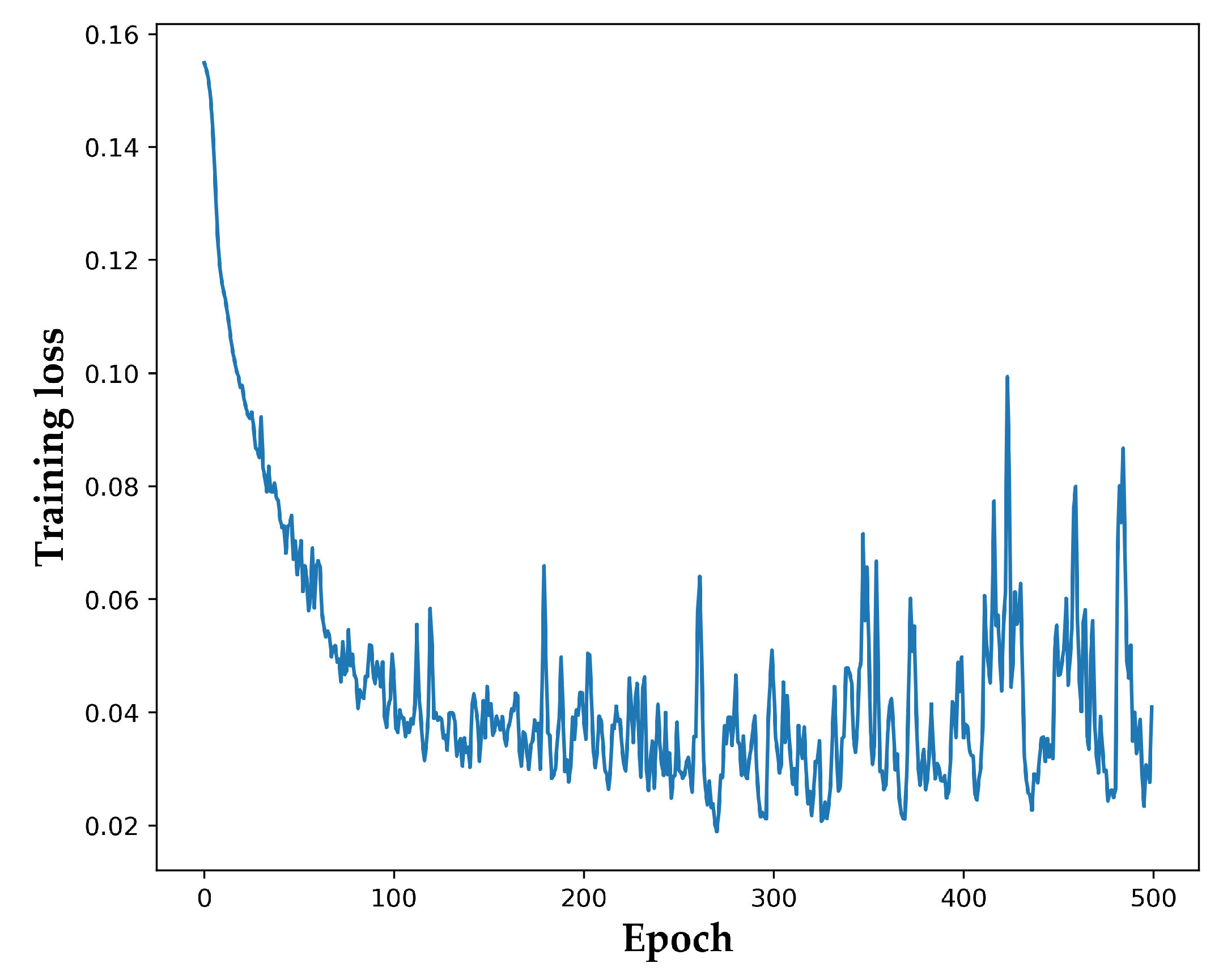

3.3.4. Optimization Algorithm “Dynamic Adam”

4. DADDNN Model Effect Analysis

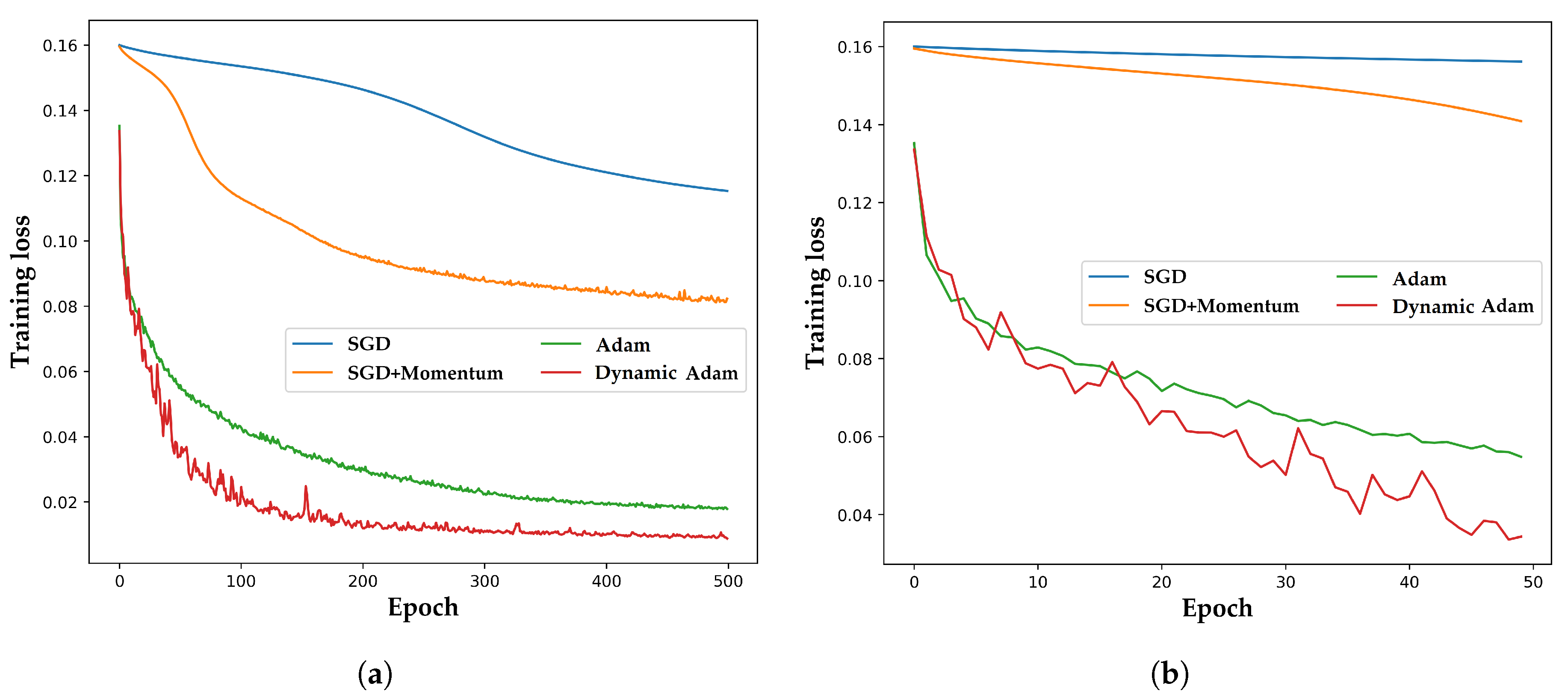

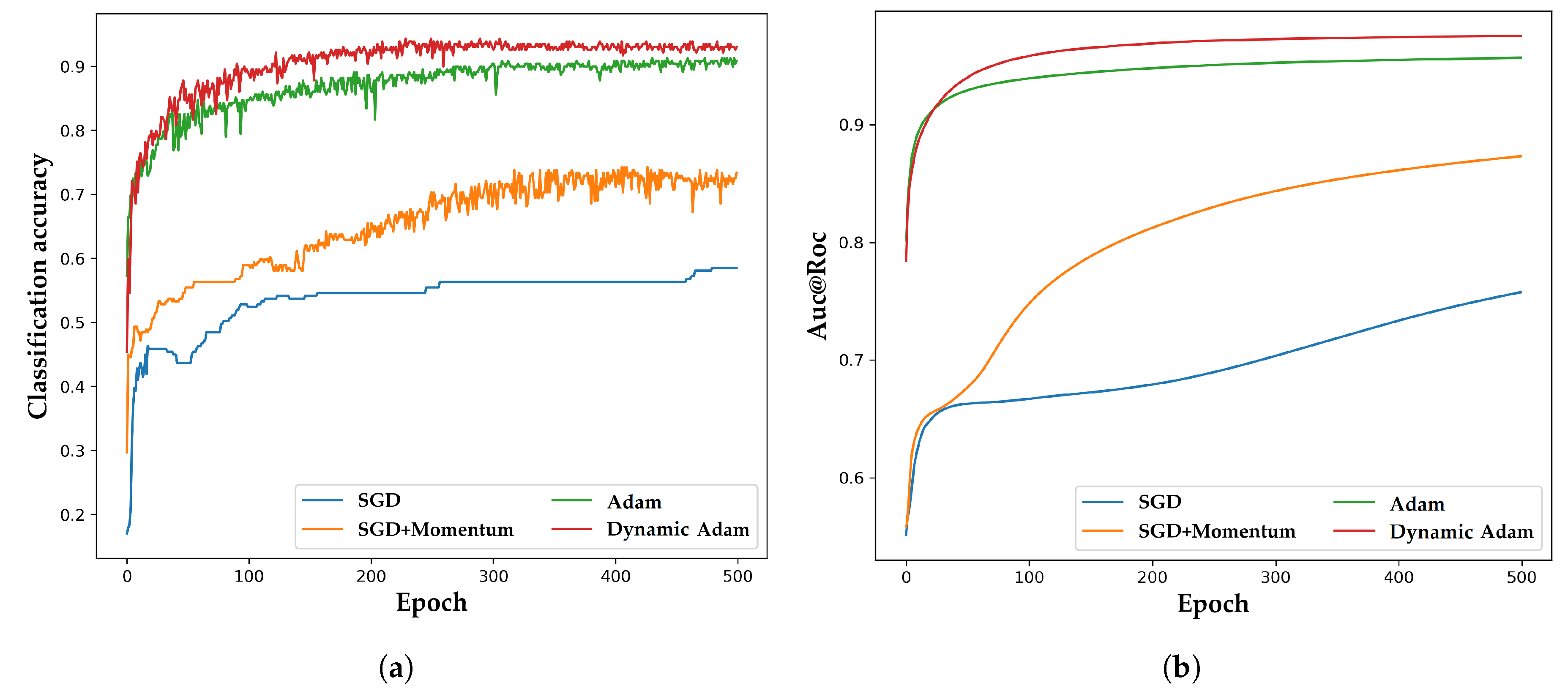

4.1. Method Performance Comparison

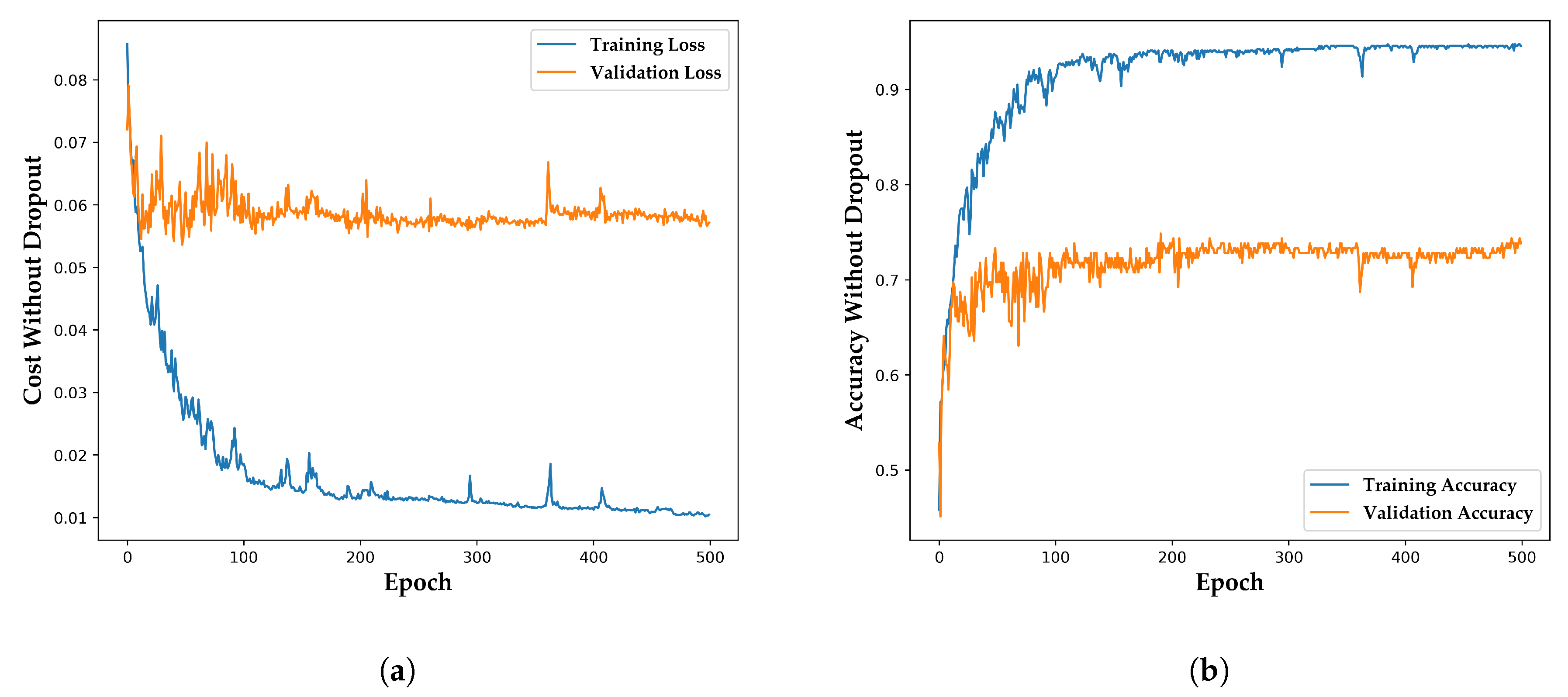

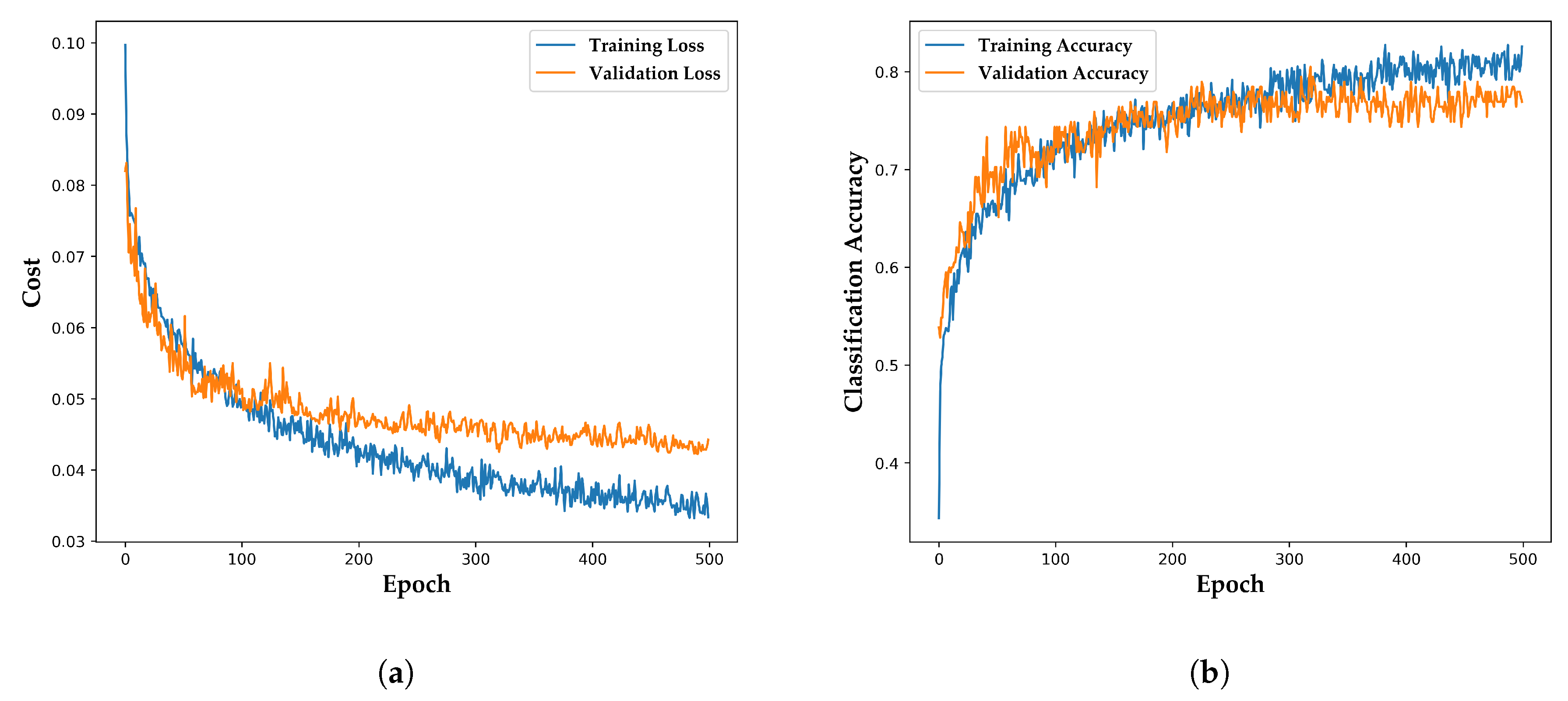

4.2. Analysis of Generalization Performance

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Category | Dataset 1 | Dataset 2 | Dataset 3 | Total Dataset |
|---|---|---|---|---|
| PD | 11 | 0 | 20 | 31 |
| LD | 13 | 23 | 68 | 104 |
| HD | 32 | 45 | 128 | 205 |
| LT | 7 | 0 | 17 | 24 |
| MT | 22 | 0 | 47 | 69 |
| MLT | 0 | 10 | 44 | 54 |
| HT | 50 | 14 | 157 | 221 |
| NC | 0 | 26 | 52 | 78 |
| Total | 135 | 118 | 533 | 786 |

| Dataset | IEC 60599 | Duval Triangle | SAE | DBN | GSSVM | DADDNN |
|---|---|---|---|---|---|---|
| Dataset 1 | 57.6 | 66.7 | 82.9 | 77.1 | 82.9 | 93.9 |
| Dataset 2 | 48.3 | 65.5 | 71.4 | 67.9 | 82.1 | 92.9 |
| Dataset 3 | 42.1 | 48.1 | 63.2 | 59.4 | 62.4 | 75.2 |
| Total dataset | 42.6 | 60.0 | 66.3 | 62.2 | 70.3 | 80.5 |
| Average accuracy | 47.7 | 60.1 | 71.0 | 66.7 | 74.4 | 85.6 |
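
The "Average accuracy" row can be reproduced from the four rows above it. The short check below suggests that it is the arithmetic mean over Dataset 1, Dataset 2, Dataset 3 and the total dataset, rounded half-up to one decimal place. The rounding rule is inferred from the numbers rather than stated in the table, and the names `ACCURACY`, `average_accuracy` and `AVERAGES` are illustrative.

```python
from decimal import Decimal, ROUND_HALF_UP

# Accuracies (%) copied from the table, in row order:
# Dataset 1, Dataset 2, Dataset 3, Total dataset.
ACCURACY = {
    "IEC 60599": ["57.6", "48.3", "42.1", "42.6"],
    "Duval Triangle": ["66.7", "65.5", "48.1", "60.0"],
    "SAE": ["82.9", "71.4", "63.2", "66.3"],
    "DBN": ["77.1", "67.9", "59.4", "62.2"],
    "GSSVM": ["82.9", "82.1", "62.4", "70.3"],
    "DADDNN": ["93.9", "92.9", "75.2", "80.5"],
}


def average_accuracy(values):
    """Mean of the given percentages, rounded half-up to one decimal place."""
    total = sum(Decimal(v) for v in values)
    return (total / len(values)).quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)


AVERAGES = {method: average_accuracy(vals) for method, vals in ACCURACY.items()}

for method, avg in AVERAGES.items():
    print(f"{method}: {avg}")
```

Decimal arithmetic is used instead of floats so that values such as 47.65 round the same way as in the table.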

| Category | IEC 60599 | Duval Triangle | SAE | DBN | GSSVM | DADDNN |
|---|---|---|---|---|---|---|
| PD | 100.0 | 0.0 | 87.5 | 0.0 | 87.5 | 87.5 |
| LD | 30.8 | 61.5 | 11.5 | 69.2 | 46.2 | 57.7 |
| HD | 23.5 | 68.6 | 88.2 | 72.5 | 82.4 | 90.2 |
| LT | 16.7 | 50.0 | 16.7 | 16.7 | 16.7 | 50.0 |
| MT | 76.5 | 41.2 | 70.6 | 70.6 | 70.6 | 82.4 |
| MLT | 0.0 | 0.0 | 15.4 | 0.0 | 15.4 | 53.8 |
| HT | 72.7 | 96.4 | 87.3 | 78.2 | 85.5 | 90.9 |
| NC | 0.0 | 0.0 | 63.2 | 57.9 | 57.9 | 57.9 |

| Data Set | Classes | Attributes | Instances | Train Samples | Test Samples |
|---|---|---|---|---|---|
| Breast cancer | 2 | 30 | 569 | 469 | 100 |
| Iris | 3 | 4 | 150 | 120 | 30 |
| Wine | 3 | 13 | 178 | 142 | 36 |
| Glass identification | 6 | 9 | 214 | 171 | 43 |

| Data Set | Model | Structure | Activation Function | Algorithm | Train Accuracy (%) | Test Accuracy (%) |
|---|---|---|---|---|---|---|
| Breast cancer | DNN | 6 layers 600 units | Tanh | SGD | 92.5 | 93.9 |
| Breast cancer | DNN | 6 layers 600 units | Tanh | SGD+Momentum | 93.2 | 93.9 |
| Breast cancer | DNN | 6 layers 600 units | Tanh | Adagrad | 94.9 | 96.0 |
| Breast cancer | DNN | 6 layers 600 units | Tanh | Dynamic Adam | 96.0 | 97.0 |
| Iris | DNN | 5 layers 500 units | Relu | SGD | 98.3 | 100.0 |
| Iris | DNN | 5 layers 500 units | Relu | SGD+Momentum | 99.2 | 100.0 |
| Iris | DNN | 5 layers 500 units | Relu | Adagrad | 99.2 | 100.0 |
| Iris | DNN | 5 layers 500 units | Relu | Dynamic Adam | 99.2 | 100.0 |
| Wine | DNN | 6 layers 600 units | Tanh | SGD | 67.0 | 72.2 |
| Wine | DNN | 6 layers 600 units | Tanh | SGD+Momentum | 69.0 | 88.9 |
| Wine | DNN | 6 layers 600 units | Tanh | Adagrad | 95.1 | 100.0 |
| Wine | DNN | 6 layers 600 units | Tanh | Dynamic Adam | 97.2 | 100.0 |
| Glass identification | DNN | 6 layers 600 units | Tanh | SGD | 35.7 | 44.2 |
| Glass identification | DNN | 6 layers 600 units | Tanh | SGD+Momentum | 35.7 | 38.9 |
| Glass identification | DNN | 6 layers 600 units | Tanh | Adagrad | 74.8 | 72.7 |
| Glass identification | DNN | 6 layers 600 units | Tanh | Dynamic Adam | 83.6 | 81.4 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ou, M.; Wei, H.; Zhang, Y.; Tan, J. A Dynamic Adam Based Deep Neural Network for Fault Diagnosis of Oil-Immersed Power Transformers. Energies 2019, 12, 995. https://doi.org/10.3390/en12060995