Sample Selection Based on Active Learning for Short-Term Wind Speed Prediction

Abstract

1. Introduction

2. Active Learning

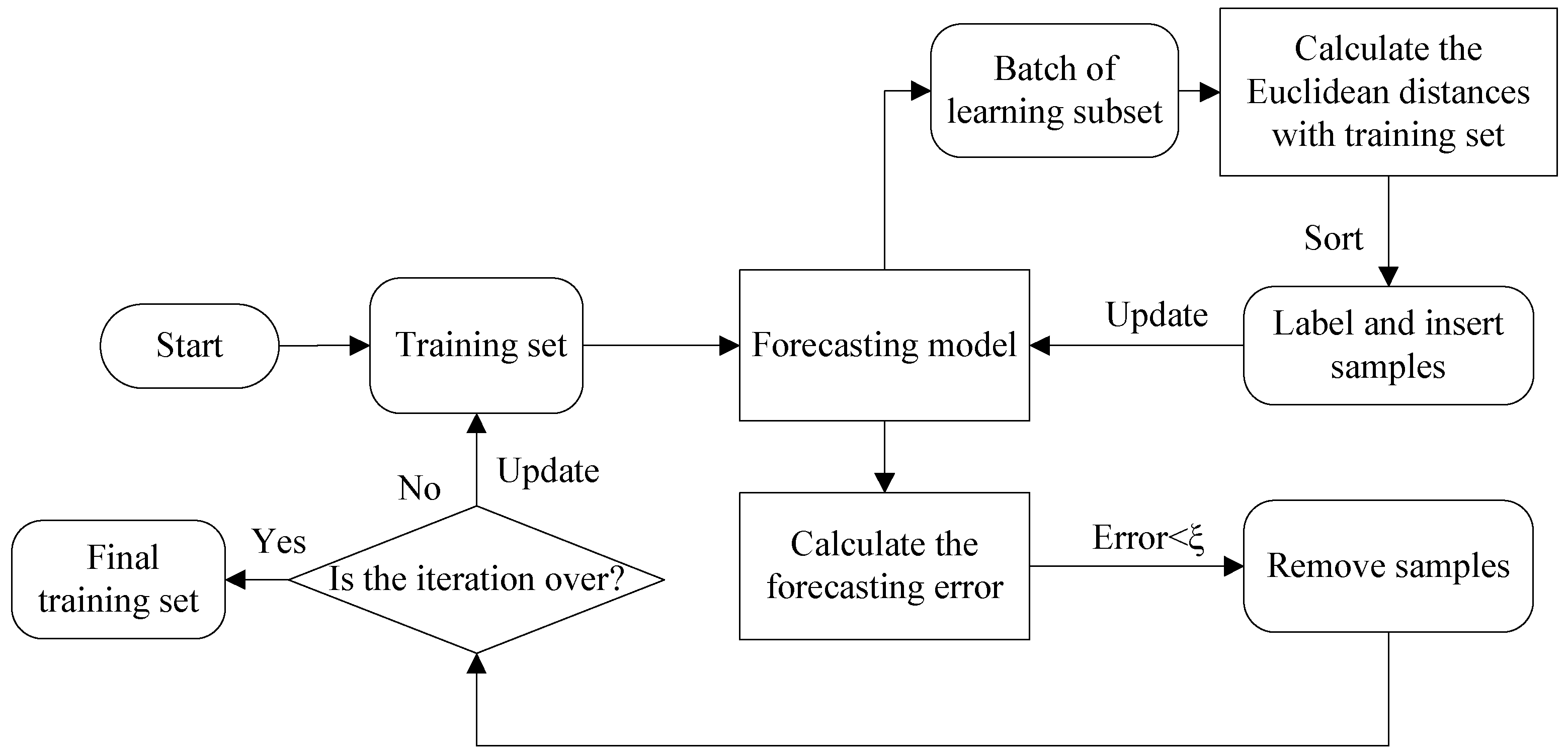

2.1. Euclidean Distance and Error (EDE-AL)

- Step (1) Define the initial training samples $x_t$ ($t = 1, 2, \ldots, n$) and the learning subsets $U_i$ ($i = 1, 2, \ldots, k$);

- Step (2) For each sample $x_l$ ($l = n + 1, n + 2, \ldots, n + m$) of the learning subset, compute the Euclidean distances $Ed_l = \{Ed_{l,t}\}$ ($t = 1, 2, \ldots, n$) to the $n$ training samples $x_t$;

- Step (3) Define the sample similarity as $f_{ED}(l) = -\min\{Ed_l\}$, so that a sample lying far from every training sample has a low similarity;

- Step (4) Label the $N$ most distant samples (those with the lowest $f_{ED}(l)$), insert them into the training set, and update the forecasting model;

- Step (5) Calculate the forecasting errors of the $N$ newly inserted samples and remove those whose errors are less than the threshold $\xi$;

- Step (6) Re-establish the model and repeat the procedure on the next learning subset until the iteration stops.
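The six EDE-AL steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the forecaster `fit_linear` is a hypothetical ordinary-least-squares stand-in for the ANN and SVR models used in the paper, the names `ede_al`, `subsets`, `N` and `xi` are illustrative, and Step (5) is read as scoring the newly inserted samples with the model updated in Step (4).

```python
import numpy as np


def fit_linear(X, y):
    """Hypothetical stand-in forecaster: least squares with a bias term.
    Any fit(X, y) -> predict(Z) callable (e.g. an ANN or SVR) could replace it."""
    A = np.hstack([X, np.ones((len(X), 1))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return lambda Z: np.hstack([Z, np.ones((len(Z), 1))]) @ coef


def ede_al(X_train, y_train, subsets, N, xi, fit=fit_linear):
    """Sketch of EDE-AL. X_train, y_train: initial samples x_t and targets.
    subsets: list of (X_l, y_l) pairs, one per learning subset U_i."""
    X, y = X_train.copy(), y_train.copy()
    model = fit(X, y)
    for X_l, y_l in subsets:
        # Step (2): distance from every learning sample x_l to every current training sample
        dist = np.linalg.norm(X_l[:, None, :] - X[None, :, :], axis=2)  # shape (m, n)
        # Step (3): similarity f_ED(l) = -min_t Ed_{l,t}
        f_ed = -dist.min(axis=1)
        # Step (4): insert the N least similar (most distant) samples, update the model
        idx = np.argsort(f_ed)[:N]
        n_old = len(X)
        X = np.vstack([X, X_l[idx]])
        y = np.concatenate([y, y_l[idx]])
        model = fit(X, y)
        # Step (5): drop newly inserted samples whose forecasting error is below xi
        err = np.abs(y_l[idx] - model(X_l[idx]))
        keep = np.concatenate([np.ones(n_old, dtype=bool), err >= xi])
        X, y = X[keep], y[keep]
        # Step (6): re-establish the model before the next learning subset
        model = fit(X, y)
    return X, y, model


if __name__ == "__main__":
    # Synthetic data for illustration only (not wind speed measurements)
    rng = np.random.default_rng(0)
    w_true = np.array([0.5, -0.2, 0.1])
    X0 = rng.normal(size=(20, 3))
    subsets = []
    for _ in range(4):
        Xs = rng.normal(size=(50, 3))
        subsets.append((Xs, Xs @ w_true + 0.3 * rng.normal(size=50)))
    X, y, model = ede_al(X0, X0 @ w_true, subsets, N=10, xi=0.1)
    print(f"training set grew from {len(X0)} to {len(X)} samples")
```

Setting `xi=0` keeps every inserted sample, so each subset adds exactly `N` samples (or all of them if the subset is smaller); a larger `xi` discards more of the samples that the updated model already predicts well.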

2.2. Support Vector Regression (SVR-AL)

- Step (1) Define the initial training samples $x_t$ ($t = 1, 2, \ldots, n$) and the learning subsets $U_i$ ($i = 1, 2, \ldots, k$);

- Step (2) Establish an ε-SVR model from the training samples, and calculate the model error of each sample $x_l$ ($l = n + 1, n + 2, \ldots, n + m$) of the learning subset;

- Step (3) Label the samples whose model errors fall outside the ε-insensitive band and insert them into the training set;

- Step (4) Update the training set and re-establish the ε-SVR model to predict the next learning subset until the iteration stops.
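The SVR-AL loop can likewise be sketched as below. To keep the example dependent on NumPy only, the ε-SVR is replaced by a simplified *linear* ε-SVR trained with full-batch subgradient descent (`fit_linear_esvr`, a hypothetical helper whose `C`, learning rate and epoch count are arbitrary choices); the kernel, hyperparameters and solver used in the paper are not reproduced. The key point the sketch shows is that the same ε both defines the training loss and decides which learning samples are inserted.

```python
import numpy as np


def fit_linear_esvr(X, y, eps, C=10.0, lr=0.05, epochs=2000):
    """Simplified linear eps-SVR (hypothetical stand-in): full-batch subgradient
    descent on 0.5*||w||^2 + C * mean(max(0, |y - (Xw + b)| - eps))."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        r = y - (X @ w + b)                      # residuals
        g = -np.sign(r) * (np.abs(r) > eps)      # d(loss_i)/d(prediction_i); zero inside the band
        w = w - lr * (w + C * X.T @ g / len(y))
        b = b - lr * C * g.mean()
    return lambda Z: Z @ w + b


def svr_al(X_train, y_train, subsets, eps, fit=fit_linear_esvr):
    """Sketch of SVR-AL. subsets: list of (X_l, y_l) pairs, one per learning subset U_i."""
    X, y = X_train.copy(), y_train.copy()
    model = fit(X, y, eps)                       # Step (2): eps-SVR on the training samples
    for X_l, y_l in subsets:
        err = np.abs(y_l - model(X_l))           # Step (2): model error of each learning sample x_l
        outside = err > eps                      # Step (3): errors outside the eps-insensitive band
        X = np.vstack([X, X_l[outside]])
        y = np.concatenate([y, y_l[outside]])
        model = fit(X, y, eps)                   # Step (4): re-establish the eps-SVR model
    return X, y, model
```

With scikit-learn installed, a kernel ε-SVR could be passed in instead, for example `fit=lambda X, y, e: SVR(epsilon=e).fit(X, y).predict` after `from sklearn.svm import SVR`.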

3. Materials and Experiment

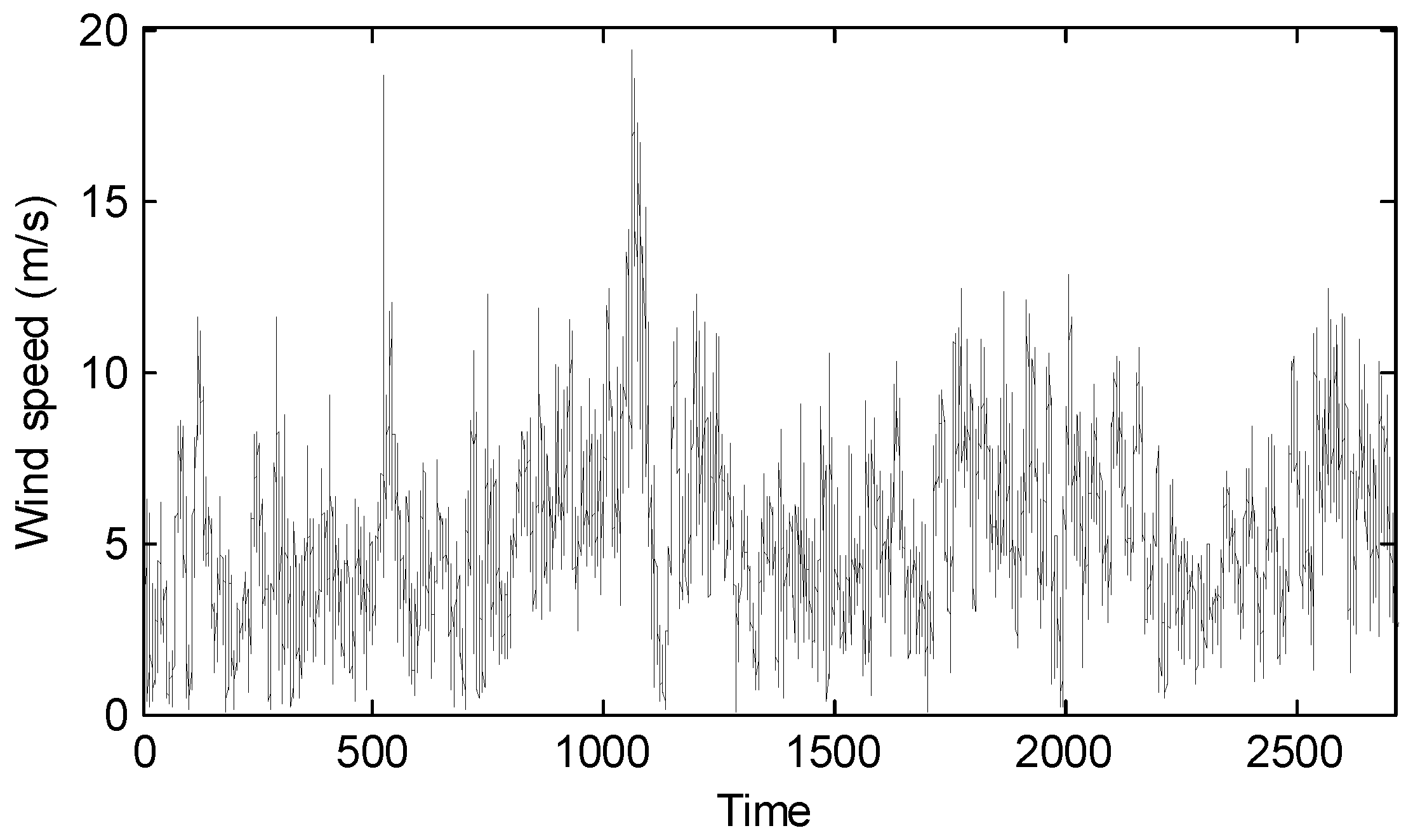

3.1. Wind Speed Data Sets

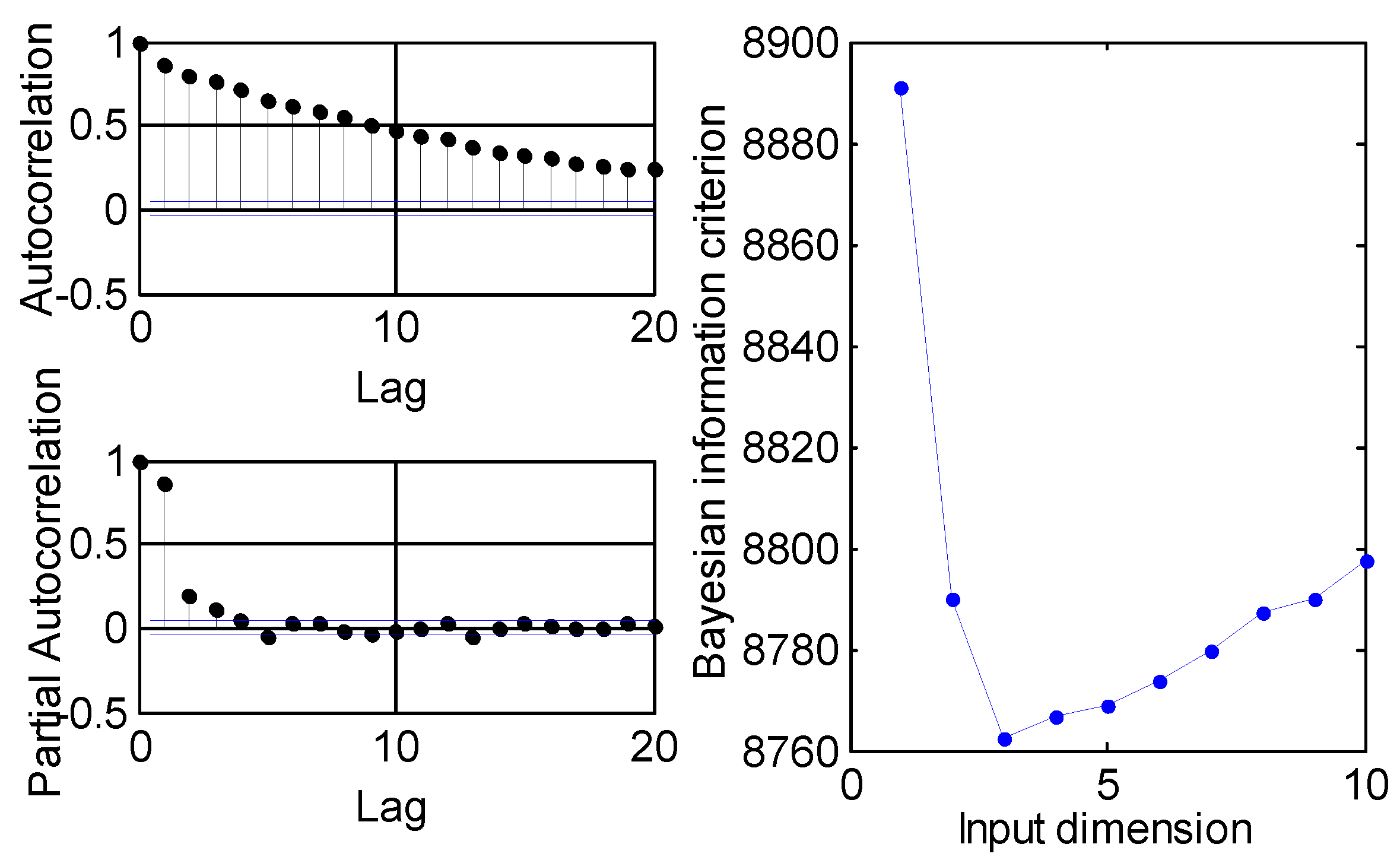

3.2. Model Selection

3.3. Prediction Models

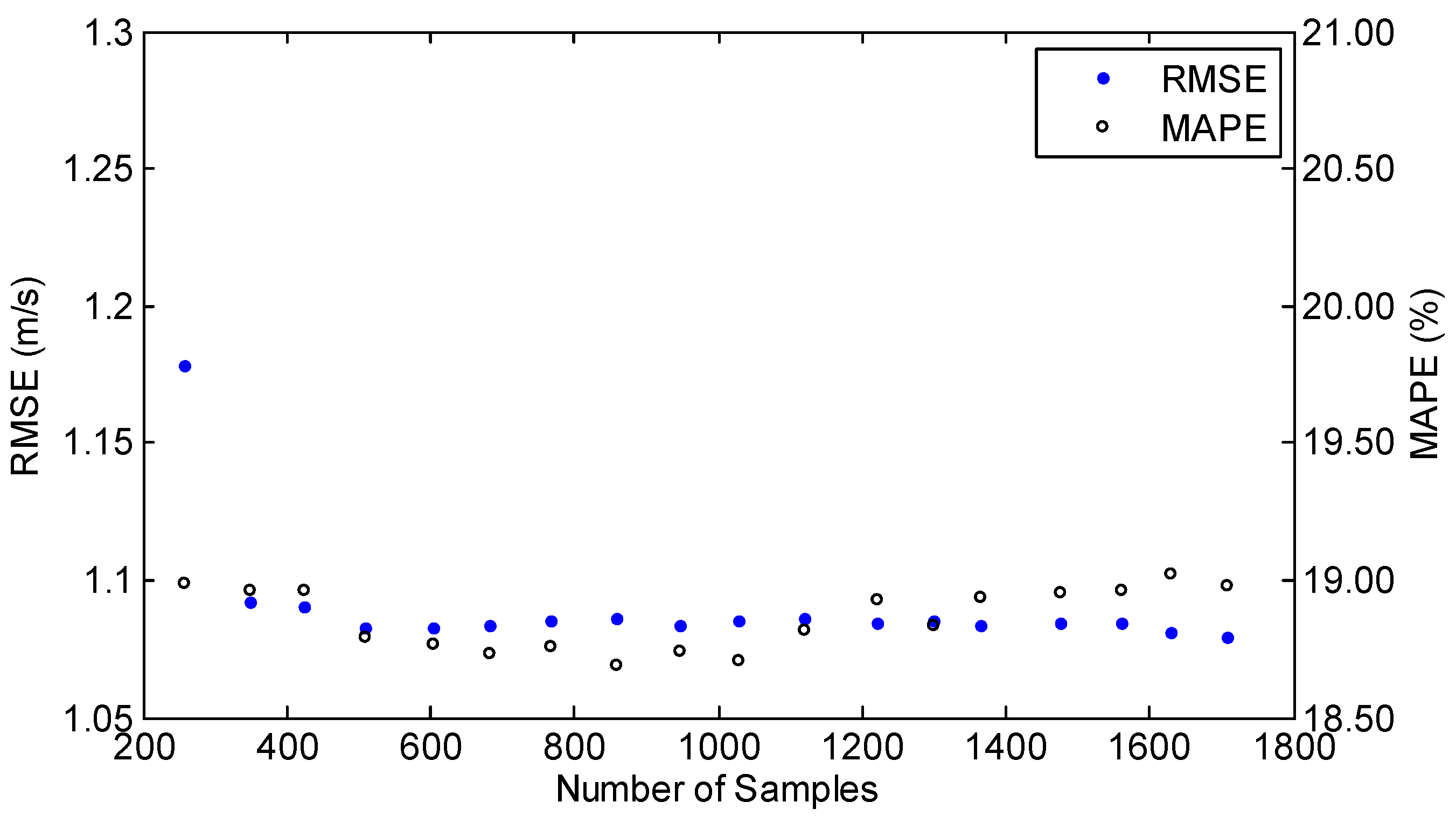

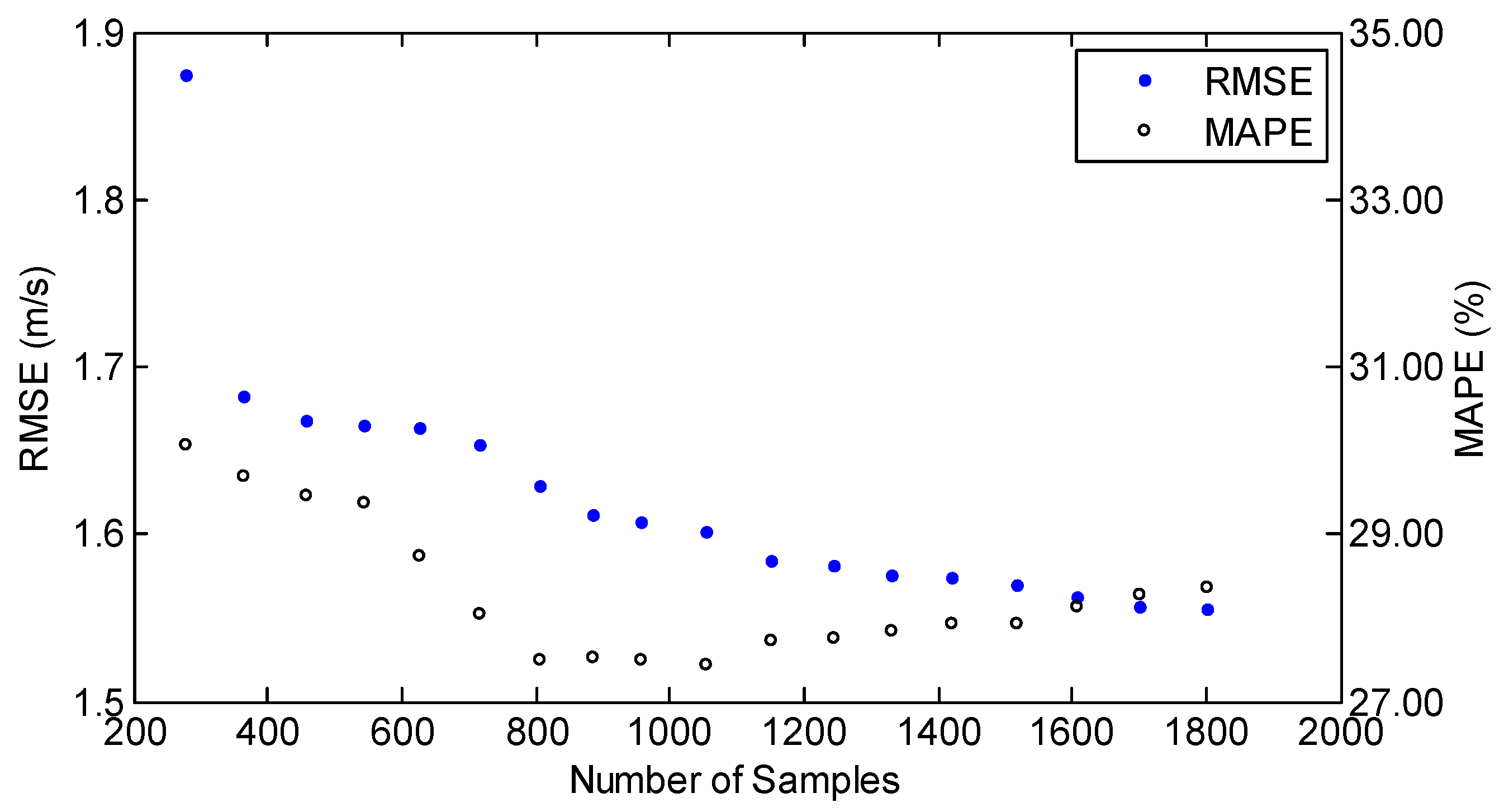

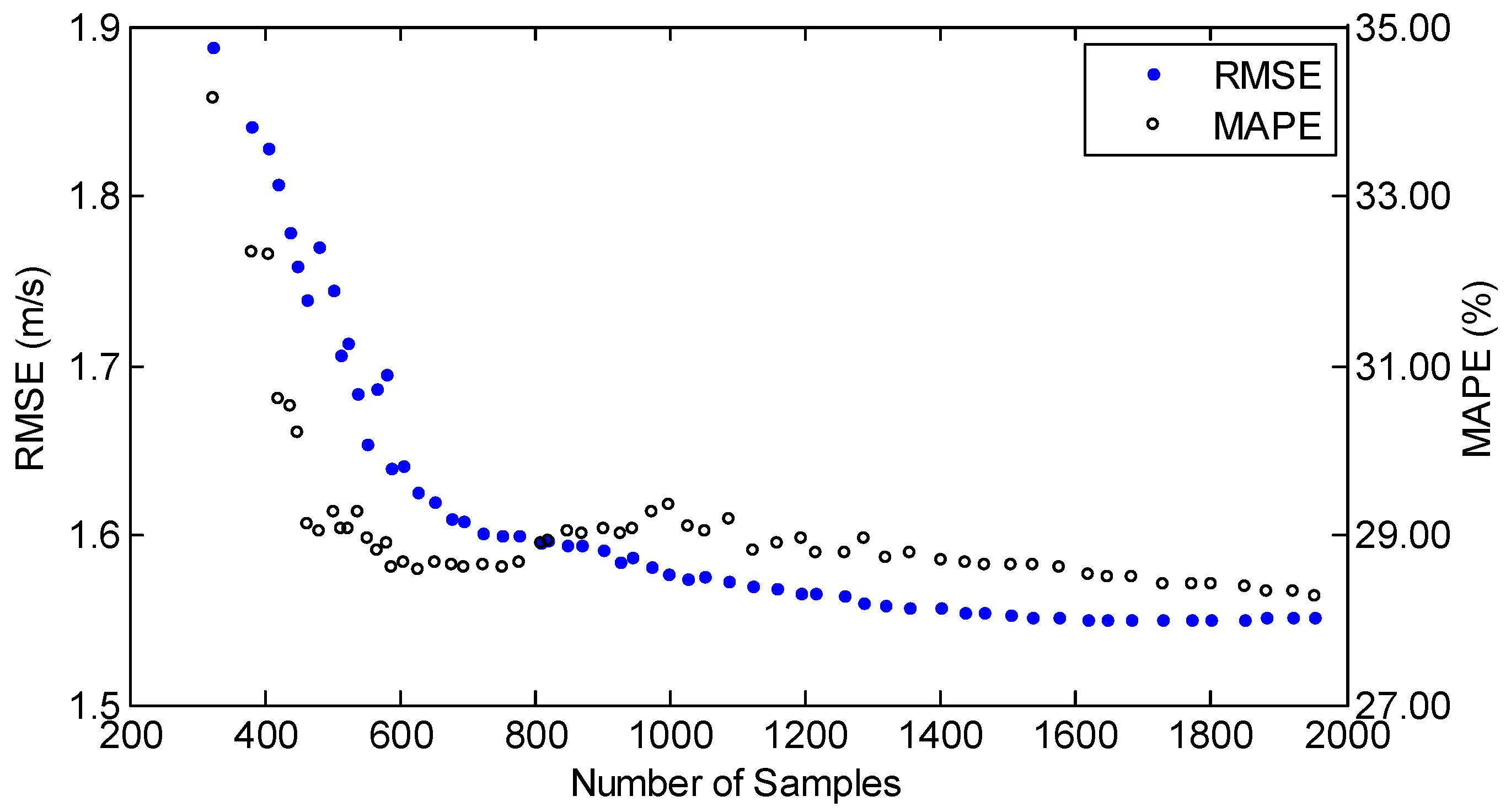

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial neural network |
| SVR | Support vector regression |
| MLP | Multilayer perceptron |
| EDE-AL | Active learning approach based on Euclidean distance and error |
| SVR-AL | Active learning approach based on support vector regression |
| BIC | Bayesian information criterion |
| ACF | Autocorrelation function |
| PACF | Partial autocorrelation function |
| RMSE | Root mean square error |
| MAE | Mean absolute error |
| MAPE | Mean absolute percentage error |
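The three error measures listed above can be written out as a short sketch, assuming their standard definitions (MAPE in percent) and assuming that the Persistence rows of the results tables refer to the usual persistence forecast, in which the value $h$ steps ahead is predicted by the latest observation. The paper's exact formulas and forecast horizons are not stated in this section, and the function names are illustrative.

```python
import numpy as np


def rmse(y, y_hat):
    """Root mean square error, in the units of y (e.g. m/s)."""
    return float(np.sqrt(np.mean((np.asarray(y) - np.asarray(y_hat)) ** 2)))


def mae(y, y_hat):
    """Mean absolute error, in the units of y."""
    return float(np.mean(np.abs(np.asarray(y) - np.asarray(y_hat))))


def mape(y, y_hat):
    """Mean absolute percentage error in percent; undefined if any measured value is 0."""
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    return float(100.0 * np.mean(np.abs((y - y_hat) / y)))


def persistence(series, h=1):
    """Persistence forecast for horizon h: predict y[t + h] with y[t].
    Returns aligned (measured, predicted) arrays."""
    s = np.asarray(series, dtype=float)
    return s[h:], s[:-h]


measured, predicted = persistence([5.0, 6.0, 4.0, 5.0])
print(rmse(measured, predicted), mae(measured, predicted), mape(measured, predicted))
```

For this toy series the errors are 1, −2 and 1, which gives RMSE = √2 ≈ 1.414, MAE ≈ 1.333 and MAPE ≈ 28.9 %. Because MAPE divides by the measured value, near-calm wind speeds can dominate it.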


| Data Set | Mean (m/s) | Standard Deviation (m/s) | Minimum (m/s) | Maximum (m/s) |
|---|---|---|---|---|
| All | 5.25 | 2.50 | 0.04 | 19.37 |
| Training | 5.14 | 2.58 | 0.04 | 19.37 |
| Testing | 5.54 | 2.17 | 0.59 | 11.41 |

| Variable | Description |
|---|---|
| $x_t$ | Training samples |
| $x_l$ | Learning samples |
| $N$ | The number of samples inserted in each iteration of EDE-AL |
| $\xi$ | The error threshold for removing samples in EDE-AL |
| $\varepsilon$ | The threshold of the ε-insensitive band in SVR-AL |
| | Measured wind speed |
| | Predicted wind speed |

| Model | Sample Set | RMSE (m/s) | MAE (m/s) | MAPE (%) | Number of Samples |
|---|---|---|---|---|---|
| Persistence | - | 1.271 | 0.942 | 23.97 | - |
| ANN | All | 1.081 | 0.832 | 18.96 | 2000 |
| | Random | 1.131 | 0.859 | 21.04 | 900 |
| | EDE-AL | 1.082 | 0.831 | 18.93 | 680 |
| | SVR-AL | 1.092 | 0.844 | 18.96 | 813 |
| SVR | All | 1.080 | 0.831 | 18.97 | 2000 |
| | Random | 1.125 | 0.853 | 19.96 | 900 |
| | EDE-AL | 1.083 | 0.830 | 18.73 | 680 |
| | SVR-AL | 1.084 | 0.830 | 18.72 | 813 |

| Model | Sample Set | RMSE (m/s) | MAE (m/s) | MAPE (%) | Number of Samples |
|---|---|---|---|---|---|
| Persistence | - | 1.876 | 1.448 | 39.49 | - |
| ANN | All | 1.578 | 1.214 | 28.74 | 2000 |
| | Random | 1.620 | 1.229 | 29.91 | 1300 |
| | EDE-AL | 1.584 | 1.215 | 28.71 | 1329 |
| | SVR-AL | 1.583 | 1.214 | 28.73 | 1318 |
| SVR | All | 1.552 | 1.192 | 28.53 | 2000 |
| | Random | 1.621 | 1.231 | 29.90 | 1300 |
| | EDE-AL | 1.576 | 1.200 | 27.86 | 1329 |
| | SVR-AL | 1.559 | 1.196 | 28.72 | 1318 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yang, J.; Zhao, X.; Wei, H.; Zhang, K. Sample Selection Based on Active Learning for Short-Term Wind Speed Prediction. Energies 2019, 12, 337. https://doi.org/10.3390/en12030337
