Do Graph Readers Prefer the Graph Type Most Suited to a Given Task? Insights from Eye Tracking

Abstract

Introduction

Graph Reading Tasks

Computational Properties of Graphs

Graph Reading and Learning Effects

Research Questions

Additionally, we investigated how graph readers’ preferences develop across the experimental trials. Although there is some evidence that graph readers become more efficient at exploiting the computational advantages of graphs over time (Peebles and Cheng, 2003), this second research question is comparatively novel and more exploratory in nature.

In summary, we expected the following:

Method

Sample and Study Design

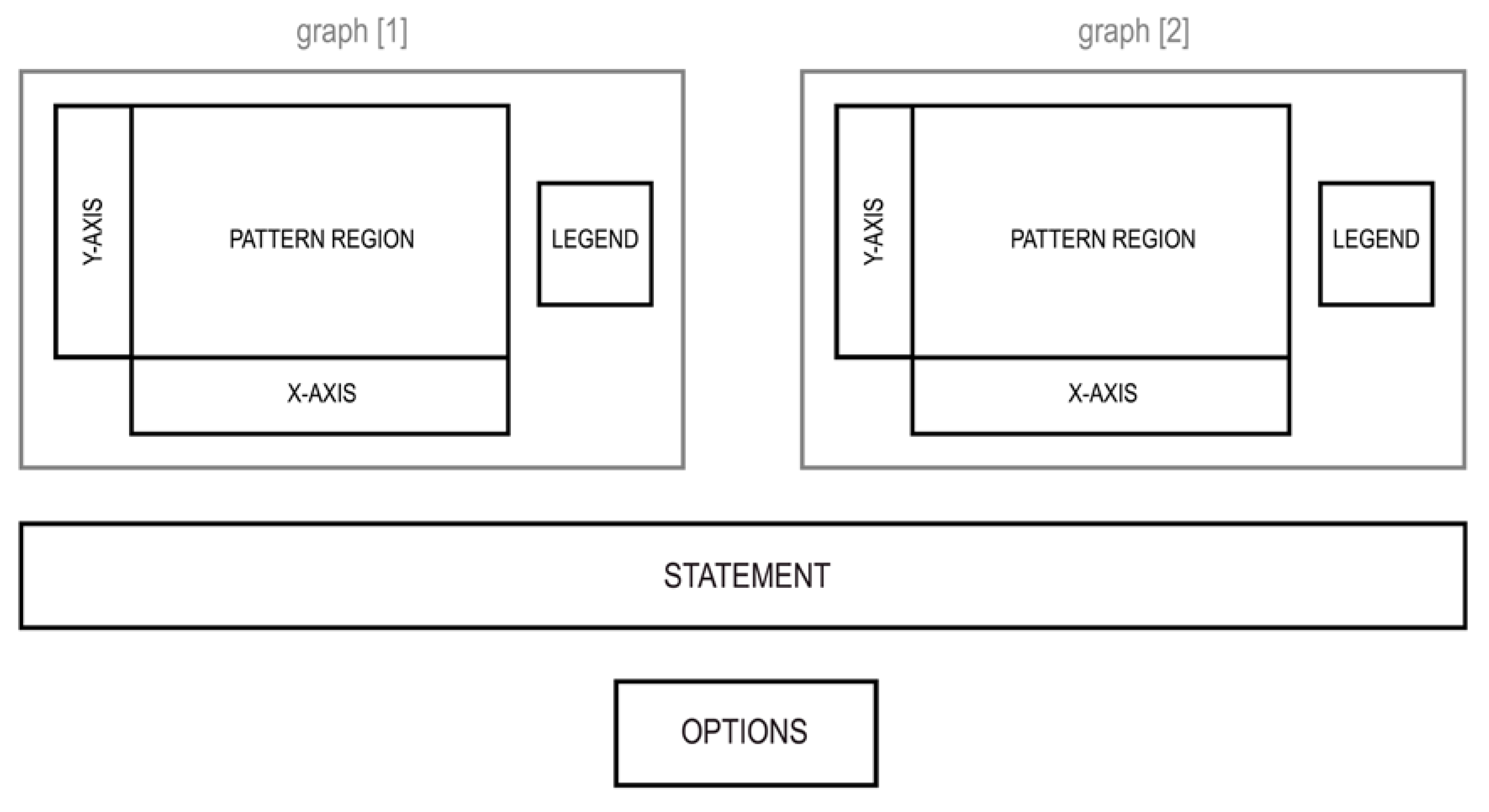

Material and Measures

Apparatus

Procedure

Analysis

Results

Overall Graph Preference

Preferential Graph Processing

Preference Development

Discussion

Overall Graph Preference

Preferential Graph Processing

Preference Development

Conclusions

Acknowledgments

References

- Ali, N., and D. Peebles. 2013. The effect of gestalt laws of perceptual organization on the comprehension of three-variable bar and line graphs. Human Factors: The Journal of the Human Factors and Ergonomics Society 55, 1: 183–203.

- Baddeley, A. D. 1994. The magical number seven: Still magic after all these years? Psychological Review 101: 353–356.

- Baddeley, A. D., and G. Hitch. 1974. Working memory. Psychology of Learning and Motivation 8: 47–89.

- Baker, R. S., A. T. Corbett, and K. R. Koedinger. 2001. Toward a model of learning data representations. Edited by J. D. Moore and K. Stenning. In Proceedings of the Twenty-Third Annual Conference of the Cognitive Science Society. Mahwah, NJ: Erlbaum: pp. 45–50.

- Barr, D. J. 2008. Analyzing ‘visual world’ eyetracking data using multilevel logistic regression. Journal of Memory and Language 59: 457–474.

- Barr, D. J., R. Levy, C. Scheepers, and H. J. Tily. 2013. Random effects structure for confirmatory hypothesis testing: Keep it maximal. Journal of Memory and Language 68: 255–278.

- Bates, D., M. Maechler, B. Bolker, and S. Walker. 2015. Fitting linear mixed-effects models using lme4. Journal of Statistical Software 67: 1–48.

- Bell, A., and C. Janvier. 1981. The interpretation of graphs representing situations. For the Learning of Mathematics 2: 34–42. Retrieved from http://eric.ed.gov/?id=EJ255422.

- Bertin, J. 1983. Semiology of graphics: Diagrams, networks, maps. Madison, WI: University of Wisconsin Press.

- Blackwell, A. F. 2001. Introduction: Thinking with diagrams. In Thinking with Diagrams. Netherlands: Springer: pp. 1–3.

- Cuoco, A. A., and F. Curcio. 2001. The role of representation in school mathematics: National Council of Teachers of Mathematics Yearbook. Reston, VA: NCTM.

- Curcio, F. R. 1987. Comprehension of mathematical relationships expressed in graphs. Journal for Research in Mathematics Education 18: 382–393.

- De Jong, T. 2010. Cognitive load theory, educational research, and instructional design: Some food for thought. Instructional Science 38: 105–134.

- Djamasbi, S., M. Siegel, J. Skorinko, and T. Tullis. 2011. Online viewing and aesthetic preferences of generation y and the baby boom generation: Testing user web site experience through eye tracking. International Journal of Electronic Commerce 15, 4: 121–158.

- Dorman, J. P. 2008. The effect of clustering on statistical tests: An illustration using classroom environment data. Educational Psychology 28: 583–595.

- Ericsson, K. A., and H. A. Simon. 1980. Verbal reports as data. Psychological Review 87: 215–251.

- Glazer, N. 2011. Challenges with graph interpretation: A review of the literature. Studies in Science Education 47: 183–210.

- Goldberg, J., and J. Helfman. 2011. Eye tracking for visualization evaluation: Reading values on linear versus radial graphs. Information Visualization 10: 182–195.

- Grosse, M. E., and B. D. Wright. 1985. Validity and reliability of true-false tests. Educational and Psychological Measurement 45: 1–13.

- Haladyna, T. M. 2004. Developing and validating multiple-choice test items. New York, NY: Routledge.

- Halford, G. S., R. Baker, J. E. McCredden, and J. D. Bain. 2005. How many variables can humans process? Psychological Science 16: 70–76.

- Hartig, J., and J. Buchholz. 2012. A multilevel item response model for item position effects and individual persistence. Psychological Test and Assessment Modeling 54: 418–431.

- Hoffman, L., and M. J. Rovine. 2007. Multilevel models for the experimental psychologist: Foundations and illustrative examples. Behavior Research Methods 39: 101–117.

- Holmqvist, K., M. Nyström, R. Andersson, R. Dewhurst, H. Jarodzka, and J. De Weijer. 2011. Eye tracking: A comprehensive guide to methods and measures. Oxford, UK: Oxford University Press.

- Just, M. A., and P. A. Carpenter. 1980. A theory of reading: From eye fixations to comprehension. Psychological Review 87: 329–354.

- Kim, S., and L. Lombardino. 2015. Comparing graphs and text: Effects of complexity and task. Journal of Eye Movement Research 8, 3: 1–17.

- Koerber, S. 2011. Der Umgang mit visuell-grafischen Repräsentationen im Grundschulalter. Unterrichtswissenschaft 39: 49–62.

- Kosslyn, S. M. 1989. Understanding charts and graphs. Applied Cognitive Psychology 3: 185–225.

- Larkin, J. H., and H. A. Simon. 1987. Why a diagram is (sometimes) worth ten thousand words. Cognitive Science 11: 65–100.

- Lohse, G. L., K. Biolsi, N. Walker, and H. H. Rueter. 1994. A classification of visual representations. Communications of the ACM 37: 36–49.

- Lindner, M. A., A. Eitel, G.-B. Thoma, I. M. Dalehefte, J. M. Ihme, and O. Köller. 2014. Tracking the decision making process in multiple-choice assessment: Evidence from eye movements. Applied Cognitive Psychology 28: 738–752.

- Lowrie, T., and C. M. Diezmann. 2007. Solving graphics problems: Student performance in junior grades. The Journal of Educational Research 100: 369–378.

- Mayer, R. E. 2009. Multimedia learning, 2nd ed. New York, NY: Cambridge University Press.

- Meissner, M., and R. Decker. 2010. Eye-tracking information processing in choice-based conjoint analysis. International Journal of Market Research 52: 591–610.

- Miller, G. A. 1956. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review 101: 343–352.

- Palmer, S. E. 1978. Fundamental aspects of cognitive representation. Edited by E. Rosch and B. B. Lloyd. In Cognition and categorization. Hillsdale, NJ: Lawrence Erlbaum: pp. 259–303.

- Peebles, D., and P. C. H. Cheng. 2003. Modeling the effect of task and graphical representation on response latency in a graph reading task. Human Factors: The Journal of the Human Factors and Ergonomics Society 45: 28–46.

- Pereira-Mendoza, L., S. L. Goh, and W. Bay. 2004. Interpreting graphs from newspapers: Evidence of going beyond the data. Paper presented at the ERAS Conference, Singapore, November; Available online: https://repository.nie.edu.sg/bitstream/10497/15549/1/ERAS-2004-277_a.pdf.

- Pinheiro, J., and D. Bates. 2006. Mixed-effects models in S and S-PLUS. New York: Springer.

- Pinker, S. 1990. A theory of graph comprehension. Edited by R. O. Freedle. In Artificial Intelligence and the Future of Testing. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc.: pp. 73–126.

- Purchase, H. C. 2014. Twelve years of diagrams research. Journal of Visual Languages & Computing 25: 57–75.

- Quené, H., and H. Van den Bergh. 2008. Examples of mixed-effects modeling with crossed random effects and with binomial data. Journal of Memory and Language 59: 413–425.

- R Core Team. 2015. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. https://www.R-project.org/.

- Ren, X., T. Wang, M. Altmeyer, and K. Schweizer. 2014. A learning-based account of fluid intelligence from the perspective of the position effect. Learning and Individual Differences 31: 30–35.

- Schnotz, W. 1994. Wissenserwerb mit logischen Bildern. Edited by B. Weidenmann. In Wissenserwerb mit Bildern. Bern: Huber: pp. 95–148.

- Schnotz, W. 2002. Commentary: Towards an integrated view of learning from text and visual displays. Educational Psychology Review 14: 101–120.

- Shah, P. 1997. A model of the cognitive and perceptual processes in graphical display comprehension. In Proceedings of American Association for Artificial Intelligence Spring Symposium. AAAI Technical Report FS-97-03. Stanford University.

- Shah, P. 2002. Graph comprehension: The role of format, content, and individual differences. Edited by M. Anderson, B. Meyer and P. Olivier. In Diagrammatic Representation and Reasoning. New York, NY: Springer.

- Shah, P., and J. Hoeffner. 2002. Review of graph comprehension research: Implications for instruction. Educational Psychology Review 14: 47–69.

- Shah, P., and P. A. Carpenter. 1995. Conceptual limitations in comprehending line graphs. Journal of Experimental Psychology 124: 43–61.

- Shah, P., R. E. Mayer, and M. Hegarty. 1999. Graphs as aids to knowledge construction: Signaling techniques for guiding the process of graph comprehension. Journal of Educational Psychology 91: 690–702.

- Simkin, D., and R. Hastie. 1987. An information-processing analysis of graph perception. Journal of the American Statistical Association 82: 454–465.

- Snijders, T. A. B., and R. J. Bosker. 2012. Multilevel Analysis: An Introduction to Basic and Advanced Multilevel Modeling, 2nd ed. Los Angeles, CA: SAGE.

- Sweller, J. 1988. Cognitive load during problem solving: Effects on learning. Cognitive Science 12: 257–285.

- Tan, J. K., and I. Benbasat. 1990. Processing of graphical information: A decomposition taxonomy to match data extraction tasks and graphical representations. Information Systems Research 1: 416–439.

- Trickett, S. B., and J. G. Trafton. 2006. Toward a comprehensive model of graph comprehension: Making the case for spatial cognition. Edited by D. Barker-Plummer, R. Cox and N. Swoboda. In Diagrammatic representation and inference. Berlin, Germany: Springer: pp. 286–300.

- Van Gog, T., L. Kester, F. Nievelstein, B. Giesbers, and F. Paas. 2009. Uncovering cognitive processes: Different techniques that can contribute to cognitive load research and instruction. Computers in Human Behavior 25: 325–331.

- Wainer, H. 1992. Understanding graphs and tables. Educational Researcher 21: 14–23.

- Wertheimer, M. 1938. Laws of organization in perceptual forms. Edited by W. D. Ellis. In A source book of Gestalt psychology. London: Routledge & Kegan Paul.

- Winn, W. D. 1990. A theoretical framework for research on learning from graphics. International Journal of Educational Research 14: 553–564.

- Zacks, J., and B. Tversky. 1999. Bars and lines: A study of graphic communication. Memory & Cognition 27: 1073–1079.

| Area of Interest | Average total fixation time (in ms), Difference Task | Average total fixation time (in ms), Trend Task | Average percentage of processing time (in %), Difference Task | Average percentage of processing time (in %), Trend Task |
| --- | --- | --- | --- | --- |
| Bar graph (total) | 6828.8 (6551.0) | 2767.8 (4181.6) | 22.9 (17.5) | 13.8 (16.5) |
| Bar graph: Pattern | 4044.3 (4236.0) | 1823.1 (3006.5) | 13.4 (11.5) | 9.1 (12.0) |
| Bar graph: Legend | 1731.0 (1971.4) | 682.0 (1119.9) | 6.0 (6.2) | 3.6 (5.2) |
| Bar graph: X-axis | 819.4 (1464.4) | 165.4 (489.2) | 2.8 (4.3) | 0.8 (2.0) |
| Bar graph: Y-axis | 234.1 (692.2) | 97.4 (364.1) | 0.6 (1.7) | 0.4 (1.7) |
| Line graph (total) | 4524.5 (4538.5) | 5139.5 (4978.4) | 17.7 (16.4) | 30.3 (16.9) |
| Line graph: Pattern | 2548.1 (2857.0) | 3324.6 (3712.0) | 9.8 (10.1) | 18.9 (13.2) |
| Line graph: Legend | 1231.5 (1517.3) | 1477.1 (1289.1) | 4.9 (5.9) | 9.6 (6.8) |
| Line graph: X-axis | 539.5 (1004.8) | 198.9 (445.3) | 2.3 (4.0) | 1.2 (2.5) |
| Line graph: Y-axis | 205.4 (554.0) | 138.9 (433.8) | 0.6 (1.6) | 0.7 (2.0) |
| Statement | 9004.7 (5737.1) | 4341.8 (3582.5) | 33.1 (13.1) | 26.9 (15.2) |
| Options | 1104.7 (636.1) | 944.7 (571.3) | 4.6 (3.0) | 6.8 (4.8) |
| Total processing time | 27685.8 (14533.3) | 16988.2 (11274.9) | | |

| Fixed effect | M0 Estimate | M0 SE | M0 t-value¹ | M1 Estimate | M1 SE | M1 t-value¹ | M2 Estimate | M2 SE | M2 t-value¹ | M3 Estimate | M3 SE | M3 t-value¹ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Intercept | -3.02 | 1.89 | -1.60 | 9.84** | 2.81 | 3.50 | 9.18** | 2.79 | 3.30 | 9.23** | 2.83 | 3.26 |
| Trial no. | | | | -0.53** | 0.19 | -2.83 | -0.52** | 0.19 | -2.83 | -0.53* | 0.22 | -2.39 |
| Task type (trend) | | | | -15.36*** | 3.57 | -4.31 | -15.36*** | 3.57 | -4.30 | -14.92** | 4.06 | -3.68 |
| Task type x Trial no. | | | | 0.16 | 0.27 | 0.60 | 0.16 | 0.27 | 0.26 | 0.14 | 0.27 | 0.52 |
| Overall preference | | | | | | | 1.79* | 0.77 | 2.36 | 1.42* | 0.64 | 2.23 |

| Random effect (variance component) | Model 0 (M0) | Model 1 (M1) | Model 2 (M2) | Model 3 (M3) |
| --- | --- | --- | --- | --- |
| Stimulus | 83.03 | 28.78 | 28.80 | 31.00 |
| Subject | 47.61 | 47.54 | 40.31 | 46.19 |
| Trial number | | | | 0.40 |
| Task type (trend) | | | | 108.35 |
| Residual | 309.19 | 309.23 | 309.21 | 262.20 |
| Deviance | 11191 | 11148 | 11142 | 11024 |
| LR-Test | | M0-M1: χ²(3) = 43.34*** | M1-M2: χ²(1) = 5.30* | M2-M3: χ²(5) = 118.58*** |
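The likelihood-ratio tests in the last row of the model table compare nested models through the drop in deviance, which is referred to a χ² distribution with the listed degrees of freedom. The following is a minimal Python sketch of that arithmetic, not the authors' analysis code: the function names `chi2_sf` and `lr_tests` are hypothetical, the deviances are the rounded values printed in the table, and the reading of the asterisks as p < .05, p < .01, and p < .001 is an assumption, since the table's footnote is not reproduced here.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with integer df."""
    if x <= 0:
        return 1.0
    z = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-z)              # Q(1, z) = exp(-z)
    else:
        a, q = 0.5, math.erfc(math.sqrt(z))   # Q(1/2, z) = erfc(sqrt(z))
    # Recurrence for the regularized upper incomplete gamma function:
    # Q(a + 1, z) = Q(a, z) + z**a * exp(-z) / Gamma(a + 1)
    while a < df / 2.0:
        q += z ** a * math.exp(-z) / math.gamma(a + 1.0)
        a += 1.0
    return q


# Deviances as printed in the model table (rounded to integers there).
DEVIANCE = {"M0": 11191, "M1": 11148, "M2": 11142, "M3": 11024}

# (simpler model, richer model, df, reported chi-square) from the LR-Test row.
REPORTED = [("M0", "M1", 3, 43.34), ("M1", "M2", 1, 5.30), ("M2", "M3", 5, 118.58)]


def lr_tests():
    """Return (label, deviance difference, reported chi-square, p-value) per comparison."""
    rows = []
    for simple, rich, df, chi2 in REPORTED:
        diff = DEVIANCE[simple] - DEVIANCE[rich]
        rows.append((f"{simple}-{rich}", diff, chi2, chi2_sf(chi2, df)))
    return rows


if __name__ == "__main__":
    for label, diff, chi2, p in lr_tests():
        print(f"{label}: deviance difference = {diff}, reported chi2 = {chi2}, p = {p:.3g}")
```

The differences of the rounded deviances (43, 6, and 118) agree with the reported statistics to within the rounding of the deviance values, and the resulting p-values fall in the ranges that the asterisks would indicate under the assumed convention.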

Copyright © 2016. This article is licensed under a Creative Commons Attribution 4.0 International License.

Strobel, B.; Saß, S.; Lindner, M.A.; Köller, O. Do Graph Readers Prefer the Graph Type Most Suited to a Given Task? Insights from Eye Tracking. J. Eye Mov. Res. 2016, 9, 1-15. https://doi.org/10.16910/jemr.9.4.4
