Abstract

This paper presents an experimental cartographic study of the minimum duration threshold required for central vision to detect a moving point symbol on cartographic backgrounds. The threshold is investigated using backgrounds with different levels of information. The experimental process is based on the collection (under free viewing conditions) and analysis of eye movement recordings. The computation of fixation-derived statistical metrics allows the calculation of the examined threshold as well as the study of the general visual reaction of map users. The critical duration threshold calculated in the present study corresponds to a time span of approximately 400msec. The results of the analysis provide meaningful evidence on these issues, while the suggested approach can be applied to the examination of perception thresholds related to changes occurring in dynamic stimuli.

Introduction

Map symbolization constitutes the fundamental process for the visualization of geographic information during cartographic production. Today, the majority of distributed cartographic products are directly related to the use of digital monitors (e.g. computer monitors or monitors of mobile devices) and include “animated” material. Animated maps constitute a special type of cartographic product, characterized by continuous changes during their observation (Slocum, McMaster, Kessler, & Howard, 2009). In traditional mapping, “visual variables” (Bertin, 1967/1983) are the basic graphic elements for implementing the map symbolization process. Additional design tools, the so-called “dynamic variables”, are used in conjunction with visual variables for the production of animated maps. The original list of dynamic variables was introduced by DiBiase, MacEachren, Krygier, and Reeves (1992) and consists of three variables: duration, rate of change and order. Subsequently, MacEachren (1995) extended this list with the variables of display date, frequency and synchronization.

Many researchers (e.g. Karl, 1992; Griffin, MacEachren, Hardisty, Steiner & Li, 2006; Harrower, 2007a) point out the need to evaluate the effectiveness of animated maps, as well as visual attention issues in animated diagrams compared to static ones (e.g. Lowe & Boucheix, 2010). The study of the influence of animated maps on knowledge acquisition is also considered important (Fabrikant, 2005; Harrower & Fabrikant, 2008), while other researchers (Kraak & MacEachren, 1994; Xiaofang, Qingyun, Zhiyong, & Na, 2005) call for experimental studies to investigate the function of dynamic variables in map symbolization.

The study of the map reading process can reveal interesting points related to map symbolization. Considering that map reading is a complex cognitive process, the study of the related concepts requires the contribution of theories, methods and approaches adopted from visual perception and visual attention research. This has become clear in many research studies in cartography (MacEachren, 1995; Keates, 1996; Lloyd, 1997; Sluter, 2001; Montello, 2002; Lloyd, 2005; Griffin & Bell, 2009 etc.). Studying the reaction of map readers during the map reading process requires psychological methods for the examination of visual behavior. As Ciołkosz-Styk (2012) suggested, “the assumptions of psychological research were easy to transfer into the language of cartography: the cartographic symbols were treated as a stimulus, and their perception, i.e. the way the map user reads them, was the reaction to the stimuli”.

Several research studies have already been performed in cartography and related disciplines (e.g. Geographic Information Science) to examine the effectiveness of animated and interactive maps. Animated maps are characterized by the existence of motion. Motion is produced by graphic changes in the successive visual scenes (frames) that compose an animated map. Therefore, the examination of how map readers perceive these changes is of critical importance in the field of map perception. Several empirical studies examine map readers' reactions to visual scene changes: Harrower (2007b) investigates the influence of classification methods in animated choropleth maps, while other research studies examine the influence of change blindness and spatial distribution in the context of dynamic cartographic visualizations (Fish, 2010; Fish, Goldsberry, & Battersby, 2011; Moon, Kim, & Hwang, 2014). The aforementioned studies examine these aspects using choropleth maps, one of the most popular methods in cartographic visualization.

Over the last years, eye tracking has become a suitable technique for the examination of visual perception. The methodological framework of eye tracking has had a great influence on several research disciplines (Duchowski, 2007). In cartographic research, eye tracking has also become a robust tool for the examination of several related topics. Although early eye tracking cartographic studies (e.g. Jenks, 1973) followed a general approach, without specific questions, to examine visual behavior during the map reading process (Steinke, 1987), recent studies examine fundamental design elements of map symbolization (Garlandini & Fabrikant, 2009; Krassanakis, 2013; Dong, Zhang, Liao, Liu, Li, & Yang, 2014; Kiik, 2015) based on theoretical frameworks of vision and visual attention. Furthermore, recent eye tracking cartographic experimentation includes a variety of studies related to different map types, including static (e.g. Krassanakis, Filippakopoulou, & Nakos, 2011a), animated (e.g. Opach & Nossum, 2011; Opach, Gołębiowska, & Fabrikant, 2013), interactive (e.g. Ooms, De Maeyer, Fack, Van Assche, & Witlox, 2012) and web (e.g. Alaçam & Dalci, 2009) maps. Moreover, specific cartographic processes are also examined using eye tracking methodology, including the evaluation of critical points in map generalization (Bargiota, Mitropoulos, Krassanakis, & Nakos, 2013), the comparison between paper and digital maps (Incoul, Ooms, & De Maeyer, 2015), the comparison between 2D and 3D terrain visualization (Popelka & Brychtova, 2013), comparisons of performance between expert and novice groups (Ooms, De Maeyer, & Fack, 2014; Stofer & Che, 2014), and the introduction of new visualization methods of eye movement data referred to as cartographic lines (Karagiorgou, Krassanakis, Vescoukis, & Nakos, 2014).

Animations are an alternative visualization method that may be used instead of text (Russo, Pettit, Çöltekin, Imhof, Cox, & Bayliss, 2014) or multiple small maps (Griffin, MacEachren, Hardisty, Steiner, & Li, 2006) for data representation. The investigation of cognitive aspects of animated mapping may produce critical suggestions about its function. Hence, several experimental approaches aim to deliver such suggestions about this type of mapping and the related cognitive tasks (e.g. Maggi & Fabrikant, 2014; Maggi, Fabrikant, Imbert, & Hurter, 2015). For example, Nossum (2012) concludes, through a web-based experimental study, that semi-static animations (examined for the case of weather maps) may increase the performance of map users during the execution of map reading tasks. Most of these cartographic studies examine cognitive issues related to map tasks. The study of graphic design variables and of their perception may shed more light in this direction.

Considering the aforementioned issues and studies, we propose that eye tracking can be a valuable tool for the examination of concepts related to map perception. Especially in the case of animated maps, eye tracking can provide objective evidence (Duchowski, 2002) on map users' reactions, revealing meaningful information about limits and thresholds related to the values of dynamic variables, which is an important element in understanding their function and effectiveness.

Duration constitutes one of the fundamental design tools for animated mapping (DiBiase et al., 1992; MacEachren, 1995; Slocum et al., 2009). More specifically, this design variable can be used for the visualization of thematic data with spatiotemporal characteristics (e.g. flow maps, population changes, navigational applications). The present paper contributes to map perception research by determining the lower functional limit of this variable. A cartographic experiment is performed in order to examine the minimum threshold of the dynamic variable of duration required for the detection of a moving point symbol on cartographic backgrounds with different levels of geographic information. Eye tracking data are collected during the experimental process, and the eye movement analysis is based on fixation-derived statistical metrics. The analysis provides meaningful evidence about the minimum duration threshold required for the reaction of map users, while the proposed approach can be applied to the investigation of detection thresholds for changes in dynamic stimuli.

Methods

An experimental study is designed and performed based on eye movement recording and analysis. Subjects observe a moving point symbol on cartographic backgrounds with different levels of graphic information. The experimental process is executed under free viewing conditions, without any specific task being assigned to subjects, and examines the reaction of foveal vision to moving point symbols on cartographic stimuli. Base maps with different levels of visual abstraction are produced by applying intensity changes to the base map symbols. According to Marr's theory of vision (1982), the detection of intensity changes is the first reaction and plays a critical role during the perception of visual stimuli. Motion of the point symbol is produced by changing the symbol's location, with each change measured as a Euclidean distance. The experimental process is performed in three parts (see section Stimuli).

Experimental design

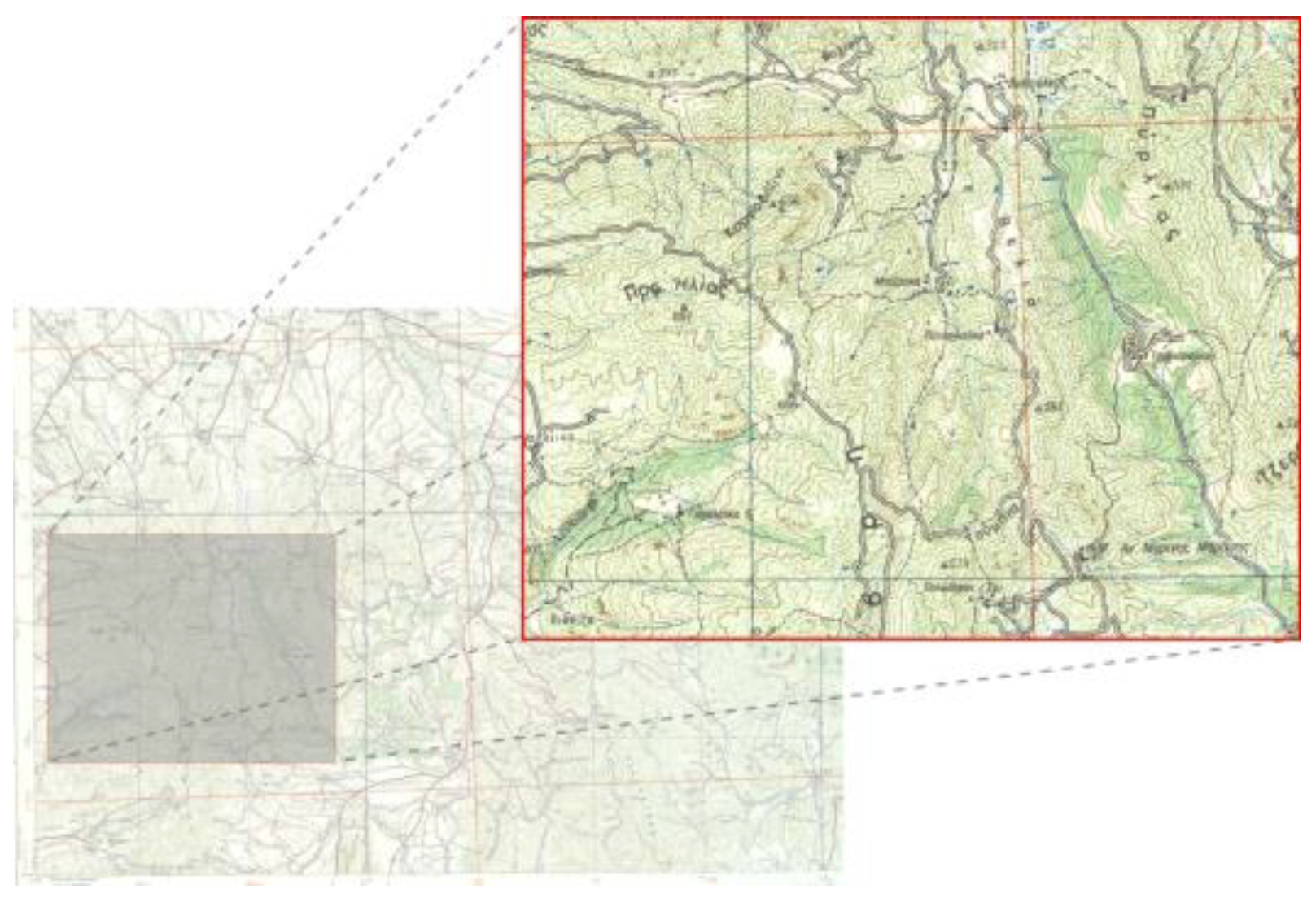

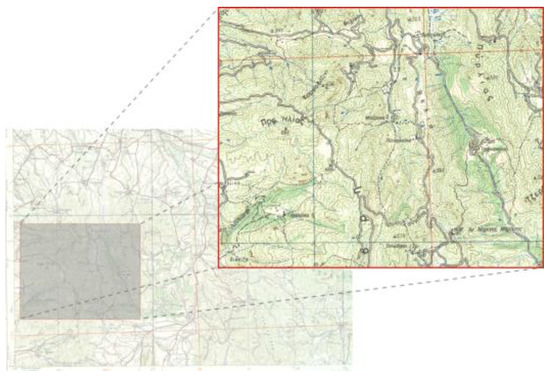

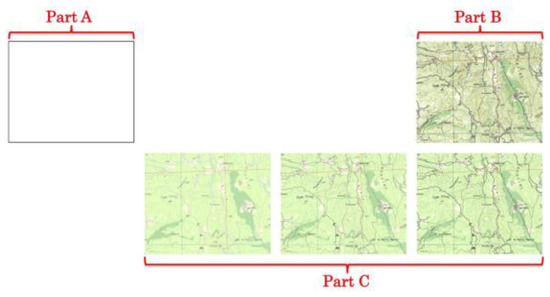

Stimuli. A part of a real topographic map, provided in paper form by the Hellenic Military Cartographic Service (HMCS) (Figure 1), constitutes the main cartographic background, serving as the base map on which the point symbols' location changes are depicted. As shown in Figure 1, the visual complexity of the base map is very high; the map involves a variety of cartographic symbols representing several entities, such as the road network, hydrological features (e.g. rivers), names, point symbols (e.g. heights), contours, as well as several area symbols.

Figure 1.

The main cartographic background used for the experimental design is a part of a real topographic map.

Additionally, as mentioned above, base maps with different levels of information are produced by modifying the intensity value of each map symbol, a process made feasible by digitizing the original source map. This process was performed in the open source Geographic Information System (GIS) Quantum GIS. The selected intensity changes correspond to percentages of 35% and 70%. Although these values are selected empirically, the intensity changes are in accordance with the characteristic points of the percentage reflectance and color value curve of the Munsell color system cited by Robinson, Morrison, Muehrcke, Kimerling & Guptill (1995).

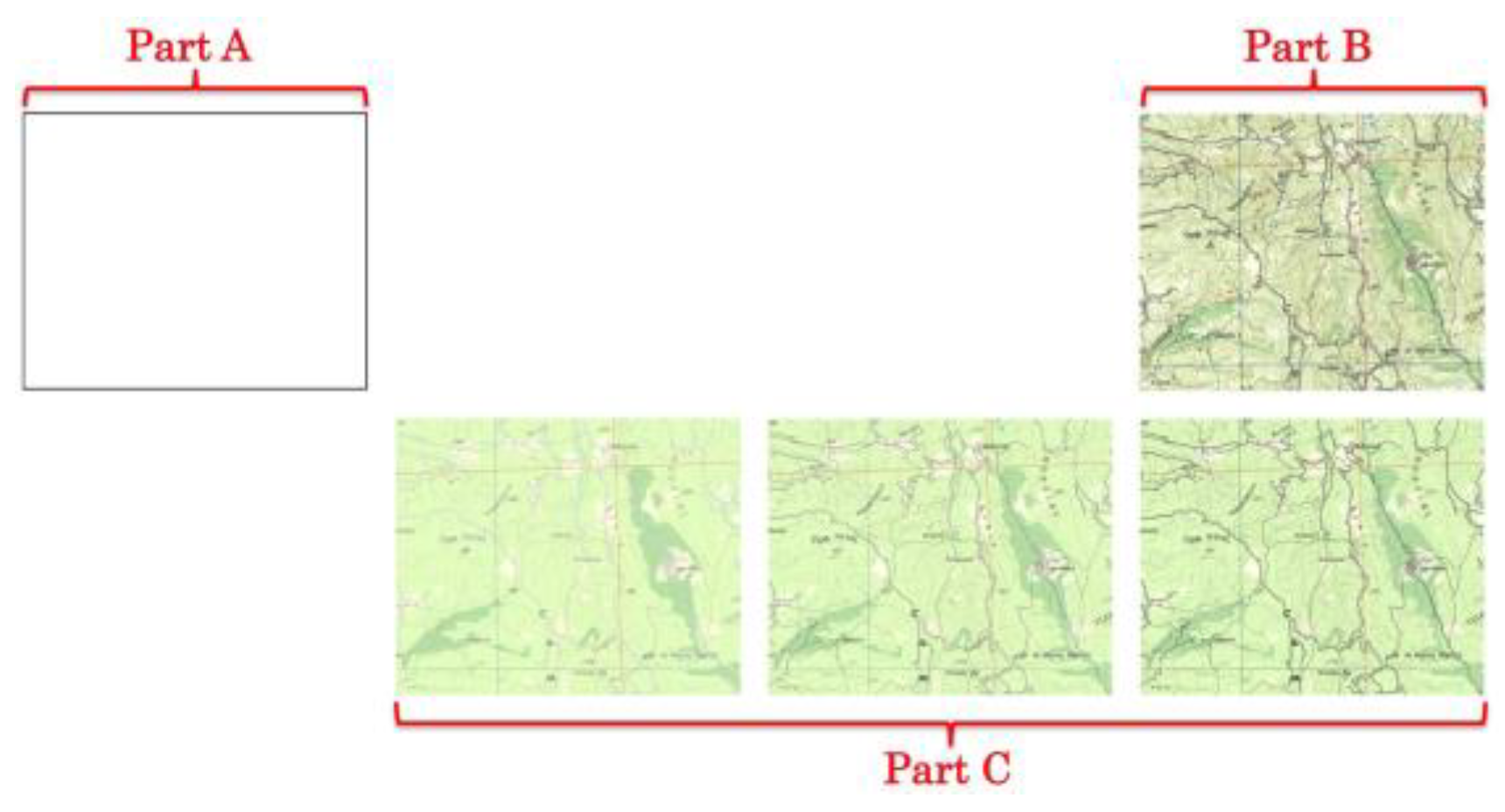

A blank (white, (R,G,B)=(255,255,255)) background is also used as an experimental stimulus. The experimental process is executed in three parts depending on the base map used: Part A includes the blank background, Part B the original source map, and Part C the digitized map together with the two base maps produced by the intensity changes (Figure 2).

Figure 2.

Backgrounds with different levels of information used in the three experimental parts (Part A, B, & C).

The resolution of both the screen and the stimuli is 1280x1024pixels, and a compact black dot of 15x15pixels, corresponding approximately to 4.5x4.5mm on the stimulus monitor, serves as the moving point symbol of the experiment. The moving point is chosen to be compact because topological features such as holes and/or line terminations may affect the process of visual perception (Michaelidou, Filippakopoulou, Nakos, & Petropoulou, 2005; Krassanakis, 2009; Krassanakis, Filippakopoulou, & Nakos, 2011a). Changing the location of the point symbol in successive visual scenes allows subjects to perceive this difference as motion. Specifically, this change is parameterized by two variables: the distance (change of location) between successive point symbols and the duration of each visual scene. Taking into consideration the diagonal size of the stimulus monitor, all distances are classified into three categories so that they are equally grouped within the stimuli: small (0-500pixels), medium (500-1000pixels), and large (1000-1560pixels) distances. The values of the chosen distances are selected randomly within these predefined ranges. The selection of duration values, on the other hand, is based on the minimum reported duration of fixation events, which corresponds approximately to 100msec (see section Fixation detection).
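The distance grouping can be sketched as follows. This is a minimal illustration, assuming Euclidean distances in screen pixels, half-open group bounds (with the last group closed at 1560 pixels), and uniform random sampling within each group. The text states only that distances are selected randomly within the predefined ranges, so the sampling scheme and all function names here are hypothetical.

```python
import math
import random

# Distance groups in pixels, as listed in the text. Treating the bounds as
# half-open [lo, hi) and closing the last group at 1560 px is an assumption.
DISTANCE_GROUPS = {
    "small": (0.0, 500.0),
    "medium": (500.0, 1000.0),
    "large": (1000.0, 1560.0),
}


def euclidean_distance(p, q):
    """Displacement in pixels between two successive symbol locations (x, y)."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


def classify_distance(d):
    """Return the name of the distance group that a displacement falls into."""
    for name, (lo, hi) in DISTANCE_GROUPS.items():
        if lo <= d < hi:
            return name
    if d == DISTANCE_GROUPS["large"][1]:
        return "large"
    raise ValueError(f"distance {d:.1f}px is outside the 0-1560px range")


def sample_distance(group, rng):
    """Draw a distance uniformly at random within a group (hypothetical scheme)."""
    lo, hi = DISTANCE_GROUPS[group]
    return rng.uniform(lo, hi)


rng = random.Random(7)
d = sample_distance("medium", rng)
print(round(d, 1), classify_distance(d))
print(classify_distance(euclidean_distance((0, 0), (300, 400))))  # medium (500 px)
```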

Part A and Part B, which correspond to the two “limit” backgrounds (the blank one and the one with the highest level of geographic information), are used for a preliminary study that serves as an initial validation of the suggested approach. For the preliminary study, the selected duration values are 100msec, 150msec, 200msec, 250msec, 300msec, 350msec, 400msec, 450msec, 500msec, 600msec, 700msec, 800msec, 900msec, 1sec, 2sec and 3sec. The selected durations differ by 50msec in the range 100-500msec (where the investigated threshold is expected), while in the range 500msec-1sec the differences equal the minimum fixation duration threshold (100msec). Additionally, the duration values of 1sec, 2sec, and 3sec are used in order to validate the experimental approach. Because the eye tracking equipment allows only a limited number of visual scenes per recording, the durations are examined in two time groups (Durations A & B). Both backgrounds (blank and topographic map) are examined with both time groups. Table A1 (Appendix) presents the location coordinates of the point map symbols as well as the selected distances and durations (in two groups), while the results of the preliminary study indicate that moving point detection is achieved within the range of 200-750msec (Krassanakis, Lelli, Lokka, Filippakopoulou, & Nakos, 2013).

Hence, the selected durations for Part C are 200msec, 337.5msec, 475msec, 612.5msec and 750msec. The step between successive values (137.5msec) is greater than the minimum duration of fixation events; however, considering that the threshold can be estimated within half of this step (approximately 69msec), the duration threshold can be examined with a precision finer than the minimum reported fixation duration. Table A2 (Appendix) presents the location coordinates for each selected combination of duration and background intensity level. The locations of the point symbol (and hence the distances) in combinations T1-T44 are the same as those used in the preliminary study, while combinations T45* and T46* have been added in order to select distances equally from the three distance groups. In total, 144 visual scenes with different background-moving point symbol combinations are produced: 49 visual scenes for Part A, 49 for Part B, and 46 for Part C. There are no pauses between stimuli; therefore, the total duration of each Part equals the sum of the corresponding duration values.
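The Part C durations form an evenly spaced grid between the two limits found in the preliminary study. The short sketch below (the function name is hypothetical) reproduces the grid, the 137.5 msec step, and the half-step of about 69 msec discussed above, and compares both with the 100 msec minimum fixation duration.

```python
def duration_grid(start_ms, stop_ms, n):
    """Return n evenly spaced durations from start_ms to stop_ms and the step."""
    step = (stop_ms - start_ms) / (n - 1)
    return [start_ms + i * step for i in range(n)], step


durations, step = duration_grid(200.0, 750.0, 5)
print(durations)       # [200.0, 337.5, 475.0, 612.5, 750.0]
print(step, step / 2)  # 137.5 68.75

MIN_FIXATION_MS = 100.0
# The step exceeds the minimum fixation duration, but half of it does not.
print(step > MIN_FIXATION_MS, step / 2 < MIN_FIXATION_MS)  # True True
```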

Subjects. In total, 86 subjects participate in the present study, aged between 19 and 60 years for Parts A and B and between 20 and 35 years for Part C. More specifically, for the first group of durations 7 females and 14 males participate in both Part A and Part B, while for the second group of durations 9 females and 12 males participate in both Part A and Part B. Additionally, 26 females and 18 males participate in Part C. All subjects of this study are volunteers and have normal vision, without the need for glasses or contact lenses. Each subject is examined separately under free viewing conditions. Subjects are asked to observe a computer monitor on which stimuli are presented. The only instructions given to the subjects concern the eye tracker calibration and validation process.

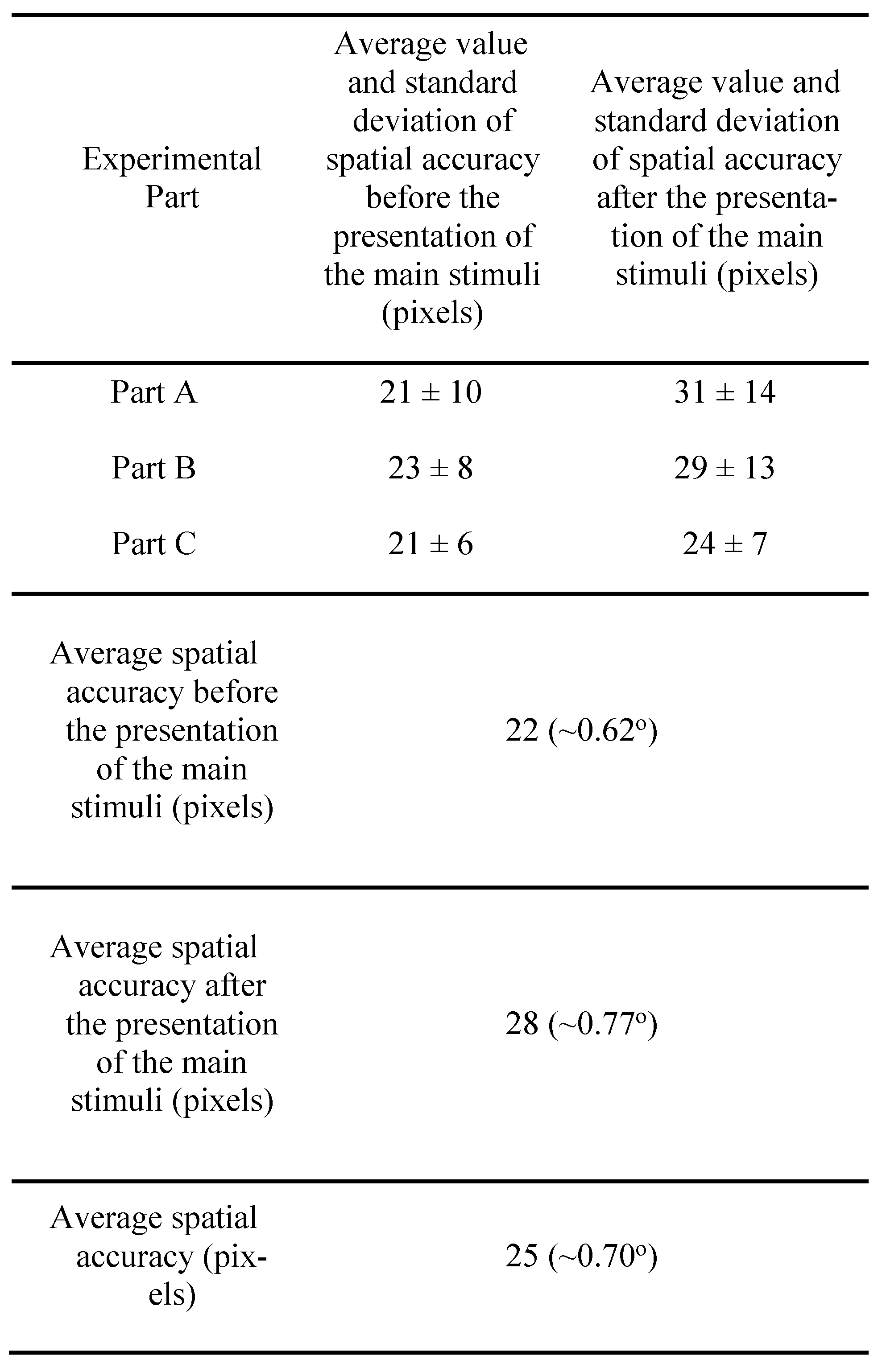

The eye movement recording process is validated in order to ensure the quality of the raw data used in the subsequent analysis (see next section for further details). Taking into account the lowest accuracy (~1° of visual angle) of the eye tracking equipment used, only gaze data of subjects recorded within this accuracy threshold are considered for the analysis. The final dataset used for fixation calculation includes 16 subjects for the first group of durations in Part A, 16 subjects for the second group of durations in Part A, 17 subjects for the first group of durations in Part B, 16 subjects for the second group of durations in Part B, and 28 subjects for Part C of the experimental study. Average spatial accuracy is computed before and after the presentation of the main experimental stimuli. The results of the validation process for each experimental part, as well as the total achieved spatial accuracy, are presented in Table 1.

Table 1.

Average value and standard deviation of spatial accuracy before and after the presentation of the main stimuli for all experimental Parts and in total.

Experimental setup & eye tracker’s function validation. The Viewpoint Eye Tracker® by Arrington Research is used for the execution of the experimental study. Eye movements are recorded at 60Hz, which means that the location of human gaze is recorded approximately every 16.67ms. Furthermore, gaze detection is implemented using the pupil location method, while the spatial accuracy of the eye tracker lies within the range of 0.25°-1° of visual angle. A chin rest is also used for head stabilization, allowing the eye tracker to achieve its optimal accuracy. Stimuli are presented on a 19-inch computer monitor with 1280x1024pixels resolution, while the distance between monitor and subject is 60cm, which corresponds to the typical distance for monitor viewing (Jenny, Jenny, & Räber, 2008). More information about the function and capabilities of the laboratory equipment is given in a previous study (Krassanakis, Filippakopoulou, & Nakos, 2011b).
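The correspondence between visual angle and screen pixels used throughout the study (e.g. 1° of visual angle being about 36 pixels) can be checked with basic viewing geometry. The sketch below assumes square pixels and that the nominal 19-inch diagonal equals the active display area. Both are assumptions, so the numbers are approximate, and the function names are illustrative.

```python
import math


def pixel_pitch_mm(diag_inch, res_x, res_y):
    """Physical size of one pixel, assuming square pixels and the nominal diagonal."""
    return diag_inch * 25.4 / math.hypot(res_x, res_y)


def deg_to_px(deg, distance_mm, pitch_mm):
    """On-screen extent (pixels) subtending `deg` degrees at `distance_mm`."""
    size_mm = 2.0 * distance_mm * math.tan(math.radians(deg) / 2.0)
    return size_mm / pitch_mm


pitch = pixel_pitch_mm(19, 1280, 1024)
print(round(deg_to_px(1.0, 600.0, pitch)))  # 36
print(round(15 * pitch, 1))                 # 4.4 (mm, for the 15x15 px symbol)
print(round(1000 / 60, 2))                  # 16.67 (ms between samples at 60 Hz)
```

Under these assumptions, the 15-pixel symbol measures about 4.4 mm, in line with the approximate 4.5 mm stated for the stimulus monitor.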

In order to validate the results of the eye movement recording process, a calibration process is performed before and after the presentation of the experimental stimuli. This process is based on a stimulus with five fixed targets, whose spatial distribution is selected to cover the stimulus monitor uniformly. Subjects observe each target for 3sec. The average gaze location corresponding to each fixed target is estimated with the fuzzy C-means (FCM) algorithm (Bezdek, 1981), which is executed for the two datasets collected during the experimental process (before and after the presentation of the experimental stimuli), using the number of fixed targets in each dataset (n=5) as the number of classes for the clustering process. In this way, the spatial accuracy achieved during each experimental trial can be reported, indicating the effectiveness of the procedure.
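The calibration-based accuracy check can be sketched with a plain fuzzy C-means implementation. This is not the authors' code. The farthest-point initialisation, the fuzzifier m = 2, the target layout, and the synthetic gaze offset are all assumptions chosen for illustration. Each target is matched to its nearest cluster centre, and the mean offset is converted to degrees of visual angle using the viewing geometry given above (60 cm distance, 19-inch 1280x1024 monitor).

```python
import math
import random


def fcm_centres(points, c, m=2.0, max_iter=100, tol=1e-6):
    """Fuzzy C-means (Bezdek, 1981) on 2D gaze samples; returns c cluster centres."""
    # Farthest-point initialisation: an assumption made for determinism.
    centres = [points[0]]
    while len(centres) < c:
        centres.append(max(points, key=lambda p: min(math.dist(p, q) for q in centres)))
    for _ in range(max_iter):
        # Membership u[i][k] of sample i in cluster k for fuzzifier m.
        u = []
        for p in points:
            d = [max(math.dist(p, q), 1e-12) for q in centres]
            u.append([1.0 / sum((d[k] / dj) ** (2.0 / (m - 1.0)) for dj in d)
                      for k in range(c)])
        # Centres are membership-weighted (u^m) means of the samples.
        new = []
        for k in range(c):
            w = [row[k] ** m for row in u]
            sw = sum(w)
            new.append((sum(wi * p[0] for wi, p in zip(w, points)) / sw,
                        sum(wi * p[1] for wi, p in zip(w, points)) / sw))
        shift = max(math.dist(a, b) for a, b in zip(centres, new))
        centres = new
        if shift < tol:
            break
    return centres


def accuracy_deg(targets, centres, pitch_mm, distance_mm):
    """Mean angular offset between each target and its nearest cluster centre."""
    errors = []
    for t in targets:
        err_px = min(math.dist(t, q) for q in centres)
        errors.append(math.degrees(math.atan(err_px * pitch_mm / distance_mm)))
    return sum(errors) / len(errors)


rng = random.Random(42)
targets = [(160, 128), (1120, 128), (640, 512), (160, 896), (1120, 896)]  # hypothetical layout
bias = (10.0, -5.0)  # hypothetical systematic gaze offset (pixels)
gaze = [(tx + bias[0] + rng.gauss(0, 5), ty + bias[1] + rng.gauss(0, 5))
        for tx, ty in targets for _ in range(180)]  # 3 s per target at 60 Hz
centres = fcm_centres(gaze, c=5)
pitch = 19 * 25.4 / math.hypot(1280, 1024)  # mm per pixel, square pixels assumed
acc = accuracy_deg(targets, centres, pitch, 600.0)
print(f"average accuracy: {acc:.2f} deg; retained: {acc <= 1.0}")
```

With the 1° criterion described in the text, a subject with this synthetic average accuracy would be retained for the analysis.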

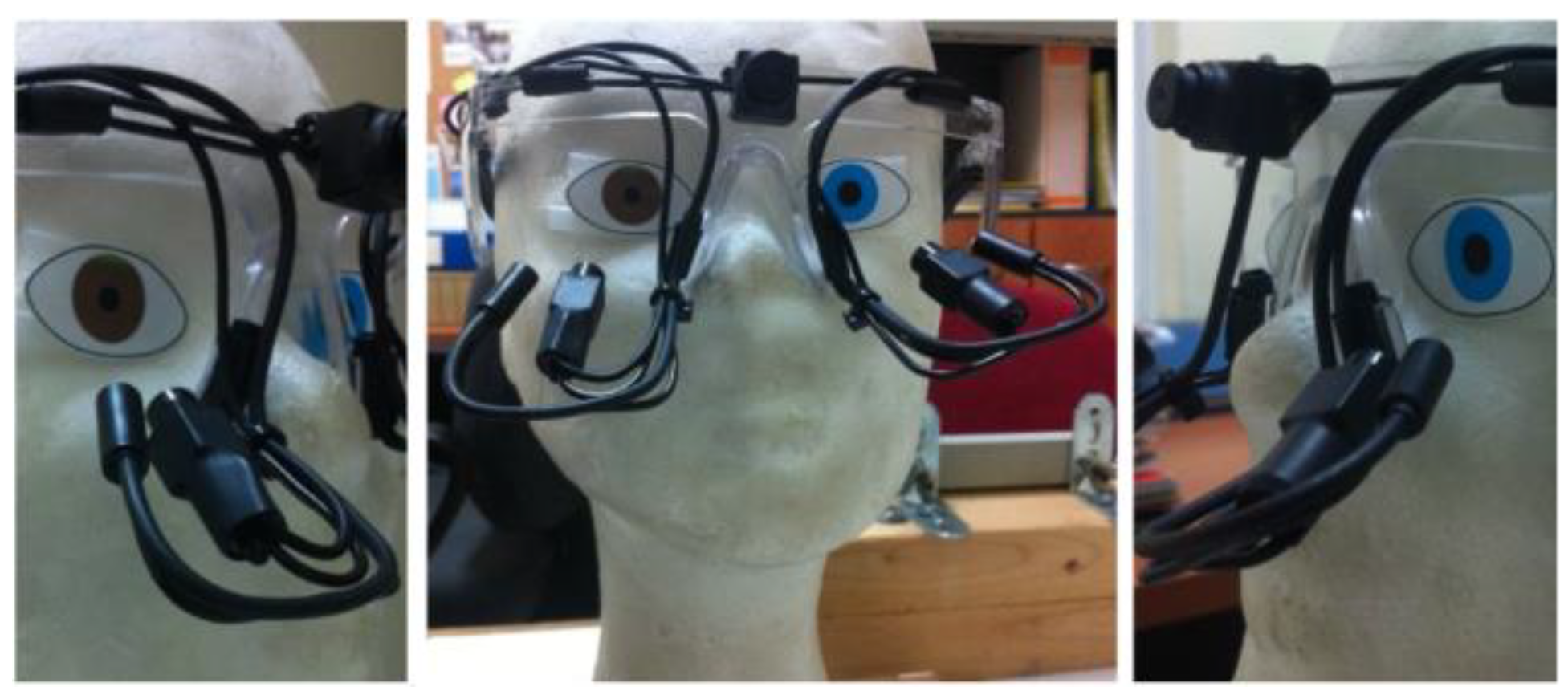

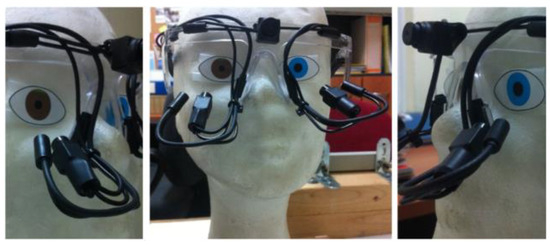

Furthermore, in order to ensure the normal function of the eye tracker, a validation procedure is executed using a pair of artificial eyes, a blue and a brown one, mounted on an artificial head (Figure 3). Artificial eyes have already been used in previous studies, either for the examination of pupil detection (e.g. Bodale & Talbar, 2010, 2011) or for checking eye tracker noise (Coey, Wallot, Richardson, & Van Orden, 2012).

Figure 3.

Adaptation of the eye tracking equipment to an artificial head with a pair of artificial eyes.

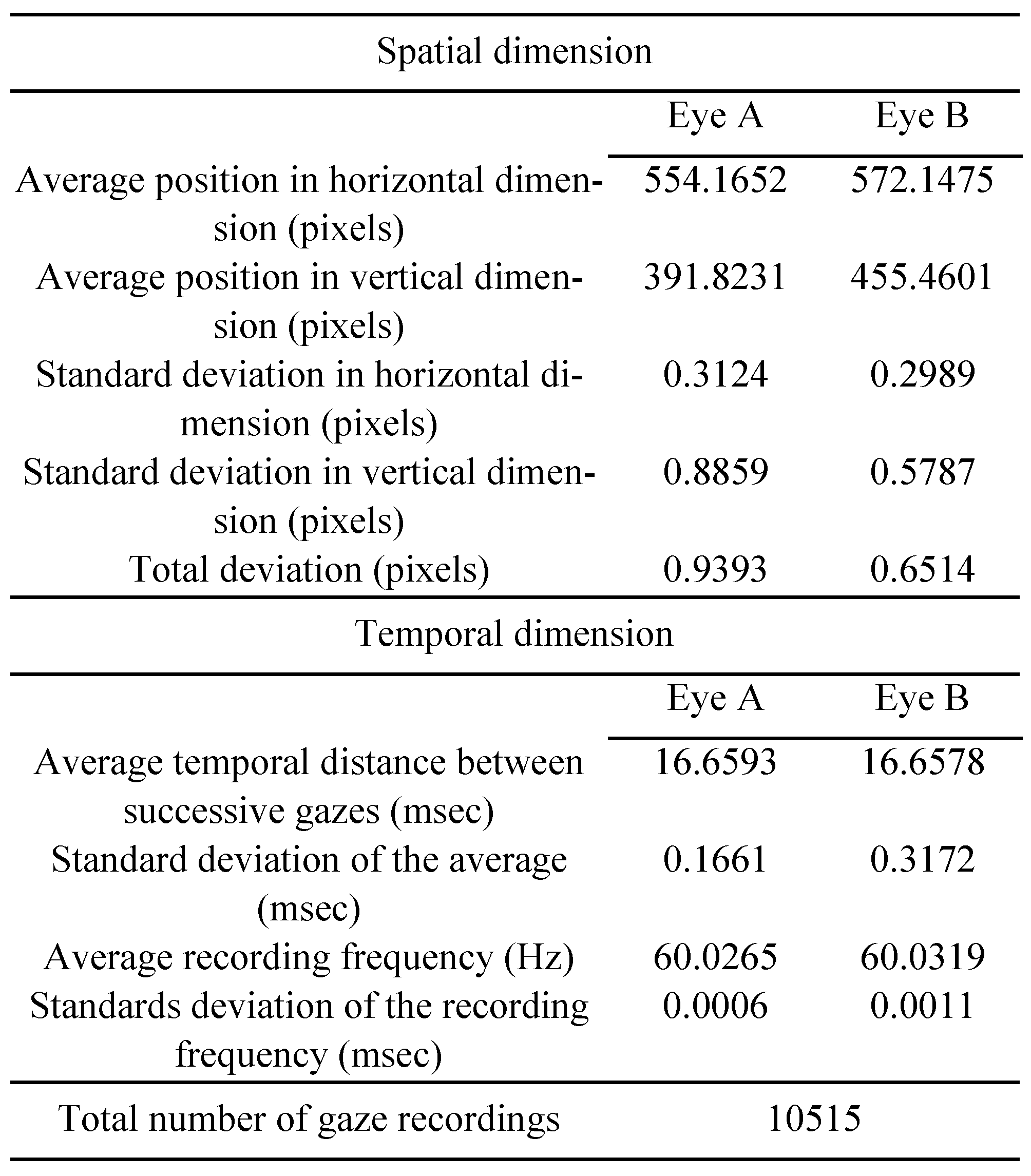

Apart from validating the eye tracker's function, in the present study the eye tracker's noise is recorded in order to serve as a critical indication for configuring the fixation detection algorithm (see section Fixation detection). In total, 10,515 gaze points are recorded within 2.92min at a sampling frequency of 60Hz. The noise of the eye tracking system is computed in both the spatial and the temporal dimension. Regarding the spatial noise, the total deviation is computed using the formula $s_{\text{total}}^{\text{spatial}} = \sqrt{s_x^2 + s_y^2}$, where $s_x$ and $s_y$ correspond to the standard deviation in the horizontal and vertical dimensions, respectively. Additionally, the temporal noise is computed as the standard deviation of the time between successive gaze points and the standard deviation of the recorded frequency. All the noise measures above are computed for both eyes, and the results are presented in Table 2.
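The spatial and temporal noise measures can be computed directly from the recorded samples. The following is a minimal sketch, assuming timestamps in milliseconds and using the sample standard deviation. The text does not say whether the sample or the population deviation was used, and the input values here are hypothetical.

```python
import math
import statistics


def spatial_noise(xs, ys):
    """Total spatial deviation s_total = sqrt(s_x^2 + s_y^2) of gaze coordinates."""
    return math.hypot(statistics.stdev(xs), statistics.stdev(ys))


def temporal_noise(timestamps_ms):
    """Mean and SD of the inter-sample interval (ms) and of the sampling rate (Hz)."""
    dt = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    hz = [1000.0 / d for d in dt]
    return (statistics.mean(dt), statistics.stdev(dt),
            statistics.mean(hz), statistics.stdev(hz))


# Hypothetical samples from a static artificial eye.
xs = [640, 641, 639, 640]
ys = [512, 512, 513, 511]
print(round(spatial_noise(xs, ys), 4))  # 1.1547

ts = [i * 1000.0 / 60.0 for i in range(10)]  # ideal 60 Hz timestamps
mean_dt, sd_dt, mean_hz, sd_hz = temporal_noise(ts)
print(round(mean_dt, 2), round(mean_hz, 1))  # 16.67 60.0

# 10,515 samples over 2.92 min correspond to roughly 60 samples per second.
print(round(10515 / (2.92 * 60), 1))  # 60.0
```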

Table 2.

Noise measurement in both the spatial and temporal dimensions during the recording of eye tracking data using a pair of artificial eyes.

Eye Movement Analysis

The analysis of the collected eye movement recordings aims at delivering critical indications about the minimum duration threshold required for the detection of the moving point symbols on the examined cartographic backgrounds. It should be noted that detection here refers only to central (not peripheral) vision, which can be recorded with eye tracking equipment. The examined threshold is investigated through the detection of fixation events and the computation of specific fixation-derived statistical metrics.

Fixation detection. Fixation detection constitutes the most fundamental process in eye tracking experimentation, as it has a great influence on higher-level analysis (Salvucci & Goldberg, 2000). In the present study, fixation detection is performed using the dispersion-based (I-DT) algorithm introduced by Krassanakis, Filippakopoulou, and Nakos (2014). This algorithm is executed through the EyeMMV toolbox (Krassanakis et al., 2014), which was designed to serve as a post-experimental analysis tool. The algorithm requires the selection of three parameters: two spatial parameters (t1, t2) and a temporal one corresponding to the minimum fixation duration. The spatial parameter t1 is linked to the spatial range of foveal vision, while the spatial parameter t2 may be used to remove the noise produced by the recording process.

The selection of fixation spatial dispersion thresholds is considered very important for the fixation identification process (Blignaut & Beelders, 2009). The spatial parameters of the algorithm are based on Euclidean distances within each fixation cluster. Hence, expressing the spatial thresholds as radial values of fixation clusters, as indicated in several studies (see e.g. Camilli, Nacchia, Terenzi, & Di Nocera, 2008), allows the selection of appropriate thresholds. Several studies (Salvucci & Goldberg, 2000; Jacob & Karn, 2003; Camilli et al., 2008) suggest selecting this radial threshold within the range of 0.25°-1° of visual angle, while more recent studies (Blignaut, 2009; Blignaut & Beelders, 2009) indicate that it may lie within the range of 0.7°-1.3° of visual angle. Taking into consideration the above studies and the fact that the noise produced by the eye tracking equipment is quite small, practically very close to zero (Table 2), the same value is selected for both spatial parameters, t1 = t2 = 1° of visual angle (approximately 36pixels on the stimulus monitor).

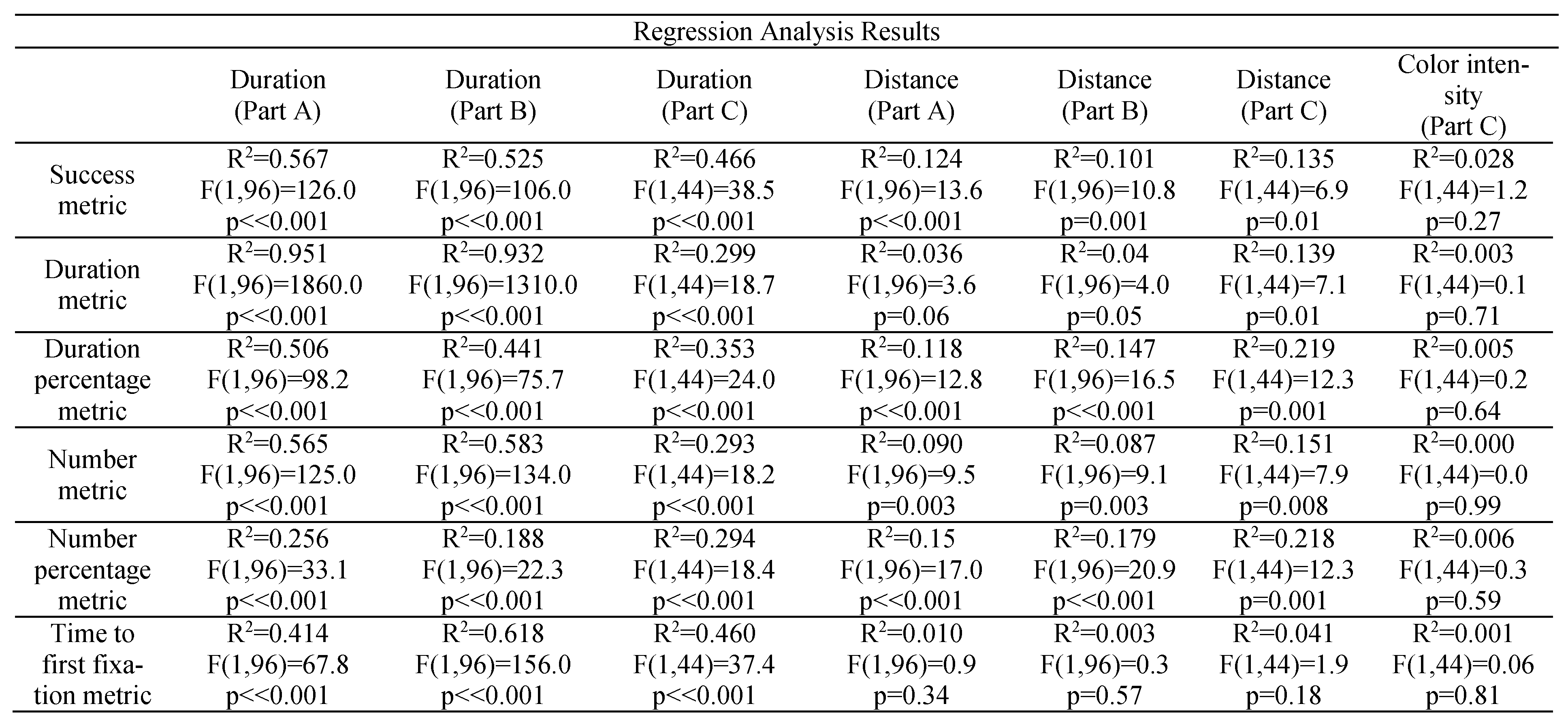

Table 3.

Regression analysis results (R², F, p values). Experimental parameters and statistical metrics serve as the independent and the dependent variables, respectively, in the regression analysis.

On the other hand, the temporal parameter of the algorithm corresponds to the minimum duration of fixation events. Several studies discuss the temporal threshold for fixation detection. According to Jacob and Karn (2003) and Poole and Ball (2005), minimum fixation duration values are reported within the range of 100-200ms. Additionally, Goldberg and Kotval (1999) suggest that this range lies between 100-150msec, while Duchowski (2007) mentions a minimum fixation duration of 150ms. The selection of the temporal threshold is also very important for fixation analysis and must be compatible with the experimental conditions. The present study is executed under free viewing conditions. According to Manor and Gordon (2003), the optimal temporal threshold in free viewing tasks corresponds to 100ms. Hence, the temporal parameter of the algorithm is set to 100ms.
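The role of the three parameters can be illustrated with a simplified two-threshold dispersion sketch. It follows the verbal description above: t1 groups samples around a running cluster mean, t2 discards outlying samples, and a minimum duration filters out short clusters. It is not the EyeMMV source code. The step order, the use of a running mean, and measuring duration from the first to the last retained sample are assumptions. With t1 = t2 = 36 pixels (about 1°) and a 100 ms minimum, a 3-sample excursion at 60 Hz is rejected as too short.

```python
import math


def _mean_xy(points):
    """Mean (x, y) of samples given as (t_ms, x, y)."""
    return (sum(p[1] for p in points) / len(points),
            sum(p[2] for p in points) / len(points))


def detect_fixations(samples, t1_px, t2_px, min_dur_ms):
    """Simplified two-threshold dispersion (I-DT style) fixation detection.

    samples: (t_ms, x_px, y_px) tuples sorted by time.
    Returns dicts with the fixation centre, start, end and duration.
    """
    # Step 1: grow a cluster while each new sample lies within t1 of its mean.
    clusters, current = [], []
    for s in samples:
        if current and math.dist(_mean_xy(current), (s[1], s[2])) > t1_px:
            clusters.append(current)
            current = []
        current.append(s)
    if current:
        clusters.append(current)

    fixations = []
    for cluster in clusters:
        # Step 2: discard samples farther than t2 from the cluster mean (noise).
        centre = _mean_xy(cluster)
        kept = [p for p in cluster if math.dist(centre, (p[1], p[2])) <= t2_px]
        if not kept:
            continue
        # Step 3: keep only clusters lasting at least the minimum duration.
        start, end = kept[0][0], kept[-1][0]
        if end - start >= min_dur_ms:
            x, y = _mean_xy(kept)
            fixations.append({"x": x, "y": y, "start": start,
                              "end": end, "duration": end - start})
    return fixations


dt = 1000.0 / 60.0  # 60 Hz sampling interval
path = [(100, 100)] * 20 + [(800, 500)] * 3 + [(400, 400)] * 12
samples = [(i * dt, x, y) for i, (x, y) in enumerate(path)]
for f in detect_fixations(samples, t1_px=36, t2_px=36, min_dur_ms=100):
    print(f"fixation at ({f['x']:.0f}, {f['y']:.0f}), {f['duration']:.0f} ms")
```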

Moving point detection. The investigation of the research question posed in the present study requires a definition of moving point detection. As mentioned above, the process refers to the function of foveal vision. Taking into account that the location of human gaze is relatively stationary during fixation events (Poole & Ball, 2005), a spatial buffer may be considered for the process of moving point detection: in the present study, all fixations located within this area are linked with the detection of the point symbol. The buffer radius can be selected based on the physical characteristics of the human eye as well as on the spatial accuracy of the recorded data. Although the spatial limits of the fovea are not sharply defined (Strasburger, Rentschler, & Jüttner, 2011), its central region spans 5.2° of visual angle, while the highest acuity corresponds to a range of 1° of visual angle (Wandell, 1995). Hence, the spatial buffer around each moving point is generated with a radius of 1° of visual angle, which is greater than the average spatial accuracy achieved in the experimental study (see section Results). The generated areas constitute the Areas of Interest (AOIs) of the experimental study.
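Linking fixations to a moving point then reduces to a distance test against the buffer radius. The following is a minimal sketch, assuming fixation centres in screen pixels, a radius of 36 pixels (about 1° on the stimulus monitor), and an inclusive buffer boundary. The boundary treatment and the function name are assumptions.

```python
import math

AOI_RADIUS_PX = 36.0  # ~1 degree of visual angle on the stimulus monitor


def fixations_in_aoi(fixations, point_xy, radius_px=AOI_RADIUS_PX):
    """Return the fixations whose centre lies within the buffer around the point."""
    return [f for f in fixations
            if math.dist((f["x"], f["y"]), point_xy) <= radius_px]


fixations = [{"x": 100, "y": 100}, {"x": 120, "y": 120},
             {"x": 130, "y": 120}, {"x": 300, "y": 300}]
hits = fixations_in_aoi(fixations, (100, 100))
print(len(hits) > 0, len(hits))  # True 2
```

The fixation at (130, 120) lies about 36.06 pixels from the point and so falls just outside the buffer.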

Statistical Metrics based on fixation analysis. Several authors suggest examining eye tracking data through the definition of statistical metrics (e.g. Just & Carpenter, 1976; Goldberg & Kotval, 1999; Poole & Ball, 2005). In the present work, both the investigation of the minimum required duration threshold and the study of subjects' general reaction during the observation of the moving point symbol are based on fixation-derived statistical metrics. More specifically, six statistical metrics are considered for the analysis of each visual scene:

- “Success metric”: This metric is computed as the proportion of subjects who detect the moving point symbol. Its values lie in the range [0,1], where 0 indicates that no subject detects the moving point symbol and 1 indicates that all subjects detect it.

- “Duration metric”: This metric expresses the average total duration of the fixations linked with the detection of the moving point symbol, computed over all subjects' recordings.

- “Duration percentage metric”: This metric expresses the average percentage of fixation duration in the visual scene that is linked with the detection of the moving point symbol, computed over all subjects' recordings. Its values lie in the range [0,1], where 0 indicates that none of the fixations performed within the visual scene are linked with the detection of the moving point symbol and 1 indicates that all of them are.

- “Number metric”: This metric expresses the average number of fixations related to moving point detection.

- “Number percentage metric”: This metric refers to the average ratio of the number of fixations linked with moving point detection to the total number of fixations in the visual scene. Its values lie in the range [0,1], where 0 indicates that none of the fixations performed within the visual scene are linked with the detection of the moving point symbol and 1 indicates that all of them are.

- “Time to first fixation metric”: This metric indicates the average time required for the first fixation on the moving point symbol in the examined visual scene, i.e. the time from the appearance of the new frame until the first fixation (by central vision) on the moving point symbol.
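The six metrics can be computed per visual scene from each subject's fixations. The sketch below is a hedged illustration, and the text does not specify its conventions. The data layout is hypothetical. Non-detecting subjects contribute zero to the averaged metrics. The time to first fixation is averaged over detecting subjects only and reported as 0 when nobody detects the point.

```python
import math
from statistics import mean


def scene_metrics(subject_fixations, point_xy, radius_px=36.0):
    """Per-scene metrics from one list per subject of (onset_ms, duration_ms, x, y).

    onset_ms is measured from the appearance of the visual scene (assumption).
    """
    hits, dur, dur_pct, num, num_pct, ttff = [], [], [], [], [], []
    for fixes in subject_fixations:
        in_aoi = [f for f in fixes if math.dist((f[2], f[3]), point_xy) <= radius_px]
        total_dur = sum(f[1] for f in fixes)
        aoi_dur = sum(f[1] for f in in_aoi)
        hits.append(1 if in_aoi else 0)
        dur.append(aoi_dur)
        dur_pct.append(aoi_dur / total_dur if total_dur else 0.0)
        num.append(len(in_aoi))
        num_pct.append(len(in_aoi) / len(fixes) if fixes else 0.0)
        if in_aoi:
            ttff.append(min(f[0] for f in in_aoi))  # onset of first AOI fixation
    return {
        "success": mean(hits),
        "duration": mean(dur),
        "duration_pct": mean(dur_pct),
        "number": mean(num),
        "number_pct": mean(num_pct),
        "ttff": mean(ttff) if ttff else 0.0,
    }


point = (500, 500)
subjects = [
    [(0, 200, 100, 100), (250, 300, 505, 498)],  # fixates the point at 250 ms
    [(0, 400, 900, 900)],                        # never fixates the point
]
print(scene_metrics(subjects, point))
```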

The statistical metrics mentioned above serve as the dependent variables of the experimental study. Since they are produced from the gaze data of all subjects, the metrics express the visual behavior of the whole group. Additionally, it should be noted that a statistical metric takes a positive value only when the moving point symbol is detected. Among the examined statistical metrics, the “Time to first fixation metric” is considered the most important for the computation of the investigated duration threshold, which is computed based only on the positive values of this metric. The “Duration metric” is used to validate the experimental approach, which assumes that the duration of the moving point symbol directly affects the total duration of the fixations linked with its detection. The remaining metrics are used to examine the general visual reaction of subjects through their correlation with the experimental variables.

Results

Overall visual reaction

The computation of the statistical metrics from the fixations performed during the presentation of the experimental stimuli allows the study of the visual reaction process. More specifically, the overall visual reaction is examined by comparing all statistical metric values (including zero values of the “Time to first fixation metric”) with the parameters of the visual scenes (i.e. duration for all experimental parts, distance for Parts A and B, and level of information, expressed by intensity changes, for Part C). Linear regression is used to examine possible correlations between the statistical metrics and the experimental parameters, using the gaze data collected in all experimental parts (Parts A, B, and C). The regression analysis results are presented in Table 3.
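The regression statistics reported in Table 3 and in the Discussion (R², and F with 1 and n-2 degrees of freedom) can be reproduced for a single metric-parameter pair with ordinary least squares. The following is a minimal standard-library sketch with hypothetical example data. The p-value is omitted because it would require an F-distribution implementation (e.g. from SciPy), which the text does not specify.

```python
def linear_regression(x, y):
    """OLS fit y = a + b*x; returns slope b, intercept a, R^2 and F(1, n-2)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    r2 = 1.0 - ss_res / ss_tot
    # With one predictor, F = (R^2 / 1) / ((1 - R^2) / (n - 2)).
    f = float("inf") if ss_res == 0 else r2 / (1.0 - r2) * (n - 2)
    return b, a, r2, f


# Hypothetical "Duration metric" values (ms) against scene duration (ms).
durations = [200, 337.5, 475, 612.5, 750]
metric = [120.0, 230.0, 310.0, 450.0, 520.0]
b, a, r2, f = linear_regression(durations, metric)
print(f"slope={b:.3f}, intercept={a:.1f}, R2={r2:.3f}, F(1,{len(durations) - 2})={f:.1f}")

print(round(0.951 / (1 - 0.951) * 96))  # 1863
```

For one predictor, F = R²(n-2)/(1-R²). For instance, R² = 0.951 with n - 2 = 96 gives approximately 1863, in line with the F(1,96)=1860.0 reported for Part A.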

The diagrams corresponding to the linear regression results in Table 3, including the equation of each linear model, can be found on pp. 163-169 of the PDF file available at this link: http://dspace.lib.ntua.gr/handle/123456789/39974 (Krassanakis, 2014).

Duration threshold

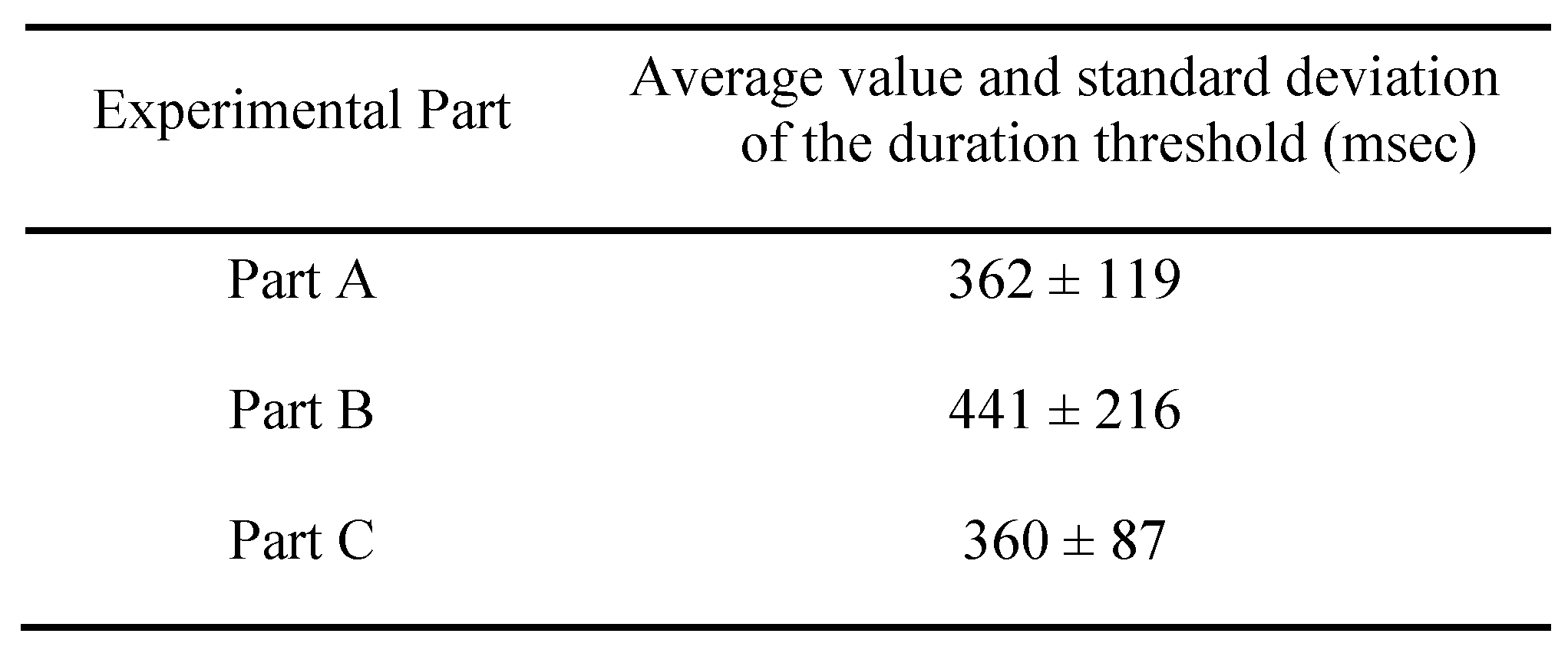

The investigated duration threshold is computed from the “Time to first fixation metric”. As mentioned above, since this metric is calculated only for the cases in which the moving point symbol is detected by the subjects, its values may reveal the average duration required for the examined visual process. Hence, the duration threshold is defined as the average value of this statistical metric, and it indicates the time required for the detection of the moving point symbol by the central vision. The computation is performed for all experimental parts (A, B, & C) and the results are presented in Table 4.
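
The threshold rule above (mean of the positive “Time to first fixation” values, with zero values marking non-detection) can be sketched as follows. The function name is hypothetical, the input values are invented for illustration, and the use of the sample standard deviation (rather than the population one) is an assumption, since the text does not say which was used.

```python
import statistics


def duration_threshold(ttff_values_ms):
    """Mean and sample standard deviation of the positive time-to-first-fixation values.

    Zero values (symbol not detected) are excluded, following the rule that the
    threshold is computed only from positive values of the metric.
    """
    positive = [v for v in ttff_values_ms if v > 0]
    if len(positive) < 2:
        raise ValueError("need at least two positive values")
    return statistics.mean(positive), statistics.stdev(positive)


# Hypothetical metric values in msec; 0 marks a scene where the symbol was not detected
values = [0, 300, 0, 420, 390]
mean_ms, sd_ms = duration_threshold(values)
print(f"threshold = {mean_ms:.0f} ± {sd_ms:.0f} msec")  # threshold = 370 ± 62 msec
```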

Table 4.

Average value and standard deviation of the duration threshold (msec) for all experimental Parts.

Discussion

The analysis of the collected gaze data, based on statistical metrics derived from fixation events, allows both an overall examination of the subjects' visual reaction and the computation of the minimum duration threshold required for the detection of moving point symbols on the experimental base maps. Concerning the overall visual reaction, the comparison of the “Success metric” with the parameter of duration indicates that a critical zone for moving point detection exists in a range around 400 msec (see Table 3), validating earlier findings from a preliminary examination (Krassanakis et al., 2013). Additionally, the comparison between the “Duration metric” and the parameter of moving point duration indicates that duration is the most critical parameter for the detection of the moving point symbol. This finding is supported by the parameters of the linear regression model for the blank background in Part A (R²=0.951, F(1,96)=1860.0, p<<0.001) as well as for the topographic map in Part B (R²=0.932, F(1,96)=1310.0, p<<0.001). In Part C, the “Duration metric” is only weakly correlated with the duration of the moving point symbol (R²=0.299, F(1,96)=18.7, p<<0.001). Hence, the selected range (200-750 msec) for the parameter of moving point duration is well designed. Furthermore, the values of the “Duration percentage metric” are not strongly correlated with the parameter of duration (R²≈0.5 for both the blank and the topographic background), and a similar result is obtained for the “Number percentage metric”. This is expected, since subjects' gazes move away from the moving point once it has been detected. On the other hand, the “Number metric” has similar values for the blank (Part A) and the map (Part B) background, indicating that the level of information does not affect the process of moving point detection.

While the examination of the aforementioned statistical metrics provides critical indications about the detection process and the overall visual reaction of the subjects, the examined duration threshold is computed from the values of the “Time to first fixation metric”. Although the threshold is computed only from the positive values of this metric, all of its values (including zero values) are used to examine the overall visual reaction. The performance of the whole experimental population is shown in the regression diagrams and in the parameters of the regression analysis. Since the form of the relationship between the examined variables is unknown, a linear regression model is used as a first approach. The linear regression diagrams indicate that the critical zone corresponds to a time span around 400 msec for the blank and map backgrounds, while for the map backgrounds with different color intensities the corresponding range lies between 337.5 and 475 msec. The final duration threshold corresponds to 360±87 msec (Table 4) and is calculated from the values of the “Time to first fixation metric” in experimental Part C. Notably, this value has a standard deviation smaller than the minimum duration of a fixation event.

The computed minimum duration threshold for the detection of the moving point symbol is greater than the average fixation duration, which is 275 msec according to Rayner (1998); note that the threshold in the present study is examined using static backgrounds. Other studies suggest that visual search for a moving point symbol is more effective when the symbol moves among static objects than when the target moves among other moving objects (Royden, Wolfe, & Klempen, 2001; Matsuno & Tomonaga, 2006). More specifically, Royden et al. (2001) measured subjects' reaction times during visual search for moving point symbols, examining different types of movement (uniform, random, and Brownian motion), and obtained values smaller than 500 msec. This threshold value is also confirmed by Matsuno & Tomonaga (2006). Both Royden et al. (2001) and Matsuno & Tomonaga (2006) examine subjects' visual reaction during a visual search task, which implies that several cognitive parameters are involved in the examination of the visual scene. Introducing a task (a cognitive process that adds cognitive load) affects the results obtained when computing perceptual thresholds. In the present study, the duration threshold is calculated under free viewing conditions (without any visual search task), so the examined value corresponds to a perceptual threshold that can be measured from the unconscious reaction of the subjects' central vision. Hence, it is reasonable that the calculated duration threshold is smaller than the values computed in the aforementioned studies.

The investigation of the examined threshold also considers the variable of distance, which expresses the magnitude of change of the moving point symbol in terms of location differences between successive scenes. As indicated by the regression analysis models (Table 3) for Parts A, B, and C, this parameter does not affect the process of moving point symbol detection: the R² of the linear regression model takes low values (<0.22) for all statistical metrics, which means that the parameters are practically uncorrelated. As mentioned above, a similar result holds for the parameter of information level, expressed by cartographic backgrounds with different color intensity levels, since no correlation can be concluded from the linear regression results (Table 3). Hence, these findings indicate the dominance of motion during the observation of blank and cartographic backgrounds consisting of several static features that could serve as distractors. This outcome is in line with psychological studies reporting that motion is dominant during visual processing and serves as a pre-attentive feature able to guide vision in the stage of selective attention (Wolfe & Horowitz, 2004; Wolfe, 2005).
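
A short sketch of the distance variable, assuming the “Distance” column of Table A1 is the Euclidean distance between the position of a symbol and that of the preceding symbol (the Conclusion refers to “Euclidean distance changes”). Recomputing it from the coordinates of the first ten rows reproduces the listed values to within about 15 pixels, which suggests the listed distances are rounded nominal values; that reading is an inference, not something the text states. The names `TABLE_A1_HEAD` and `successive_distances` are hypothetical.

```python
import math

# First ten point symbols of Table A1: (label, x, y, listed distance in pixels)
TABLE_A1_HEAD = [
    ("T1", 73, 101, None),
    ("T2", 1039, 743, 1160),
    ("T3", 270, 873, 780),
    ("T4", 1245, 86, 1260),
    ("T5", 932, 78, 300),
    ("T6", 465, 455, 600),
    ("T7", 606, 566, 180),
    ("T8", 1198, 941, 700),
    ("T9", 1242, 1008, 80),
    ("T10", 49, 38, 1540),
]


def successive_distances(rows):
    """Euclidean distance (pixels) between each symbol and the previous one."""
    result = []
    for (_, x0, y0, _), (label, x1, y1, listed) in zip(rows, rows[1:]):
        result.append((label, math.hypot(x1 - x0, y1 - y0), listed))
    return result


for label, computed, listed in successive_distances(TABLE_A1_HEAD):
    print(f"{label}: computed {computed:7.1f} px, listed {listed} px")
```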

The present study examines the minimum duration threshold for moving point detection. In cartographic research, the variable of duration is directly connected to the rate of change. Rate of change can be defined as the ratio m/d, where m is the magnitude of change between frames and d is the duration of each frame; this variable can refer either to geographic position or to an attribute (Slocum et al., 2009). In the present experiment, the magnitude of change corresponds to one change in point position per frame. Therefore, the rate of change equals 1/d, which means that it is determined directly by the values of the duration variable. Representative duration values (within the range 100 msec-3 sec) and different point positions are examined in order to avoid biases in the computed threshold, while the number of changes is always one per frame, in accordance with the definition of the rate of change variable. The attribute of motion in point symbols could also be described by the variable of speed. Hence, the experimental design of future studies could be based on this variable, extending the results of the present approach.
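
With m = 1 change per frame, the rate of change m/d depends only on the frame duration d. The sketch below tabulates it for the Part C durations listed in Table A2; expressing the result in changes per second is a choice made here for readability, and the function name is illustrative.

```python
def rate_of_change(duration_ms, magnitude=1):
    """Rate of change m/d, expressed as changes per second.

    magnitude: number of position changes per frame (1 in this experiment).
    duration_ms: frame duration d in milliseconds.
    """
    if duration_ms <= 0:
        raise ValueError("duration must be positive")
    return magnitude / (duration_ms / 1000.0)


# Frame durations used in Part C (Table A2), in msec
for d in (200, 337.5, 475, 612.5, 750):
    print(f"d = {d:>5} msec -> {rate_of_change(d):.2f} changes/sec")
```

Because m is fixed at 1, every duration value maps to exactly one rate-of-change value, which is why the two variables cannot be separated in this design.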

Conclusion

The results of the paper contribute to the basic research that leads to the standardization of visual variables in animated mapping. The contribution of the present experimental study to cartographic research is threefold: (a) The minimum threshold of the dynamic variable of duration required for the detection of a moving point symbol on cartographic backgrounds is computed, with a standard deviation smaller than the minimum reported fixation duration. Hence, duration values smaller than the computed threshold are unlikely to be perceived by map readers. This outcome can be helpful in the design of maps and map-based systems. (b) Important evidence is produced about the perception of point symbol location changes, based on Euclidean distance changes, as well as of the level of background information, expressed by color intensity changes, indicating that neither affects the process of moving point detection and confirming the dominance of movement on cartographic backgrounds. (c) An integrated methodology based on eye movement recording and analysis is presented, serving as a robust approach for the investigation of perception thresholds related to map design variables. Within this work, the collected gaze data are analyzed after a validation procedure based on measurements with a pair of artificial eyes, which provides a practical method for verifying the quality of the collected data. The process of selecting fixation analysis parameters serves as a reference example for future studies that use dispersion-based algorithms (I-DT) for fixation identification. The experimental results could be applied directly to the design of animated cartographic products mainly used to highlight the position of a point entity (e.g. vehicles or an observer's position during real-time navigation).
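
For readers unfamiliar with dispersion-based fixation identification, the following is a minimal sketch of the classic I-DT algorithm as described by Salvucci and Goldberg (2000), where dispersion is (max x - min x) + (max y - min y). It is not the two-step spatial dispersion method implemented in the EyeMMV toolbox (Krassanakis et al., 2014) used for this kind of analysis; the sample format (time in msec, x and y in pixels) and the parameter names `max_dispersion` and `min_duration_ms` are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    start_ms: float
    end_ms: float
    x: float
    y: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def dispersion(window):
    """I-DT dispersion: (max x - min x) + (max y - min y)."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def idt(samples, max_dispersion, min_duration_ms):
    """Classic I-DT fixation identification.

    samples: time-ordered list of (t_ms, x, y) gaze samples.
    Returns a list of Fixation objects (centroid of the samples in each window).
    """
    fixations = []
    i, n = 0, len(samples)
    while i < n:
        # Initial window: smallest run of samples spanning min_duration_ms
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j == n:
            break  # not enough data left for another fixation
        if dispersion(samples[i:j + 1]) <= max_dispersion:
            # Grow the window while dispersion stays within the threshold
            while j + 1 < n and dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            cx = sum(s[1] for s in window) / len(window)
            cy = sum(s[2] for s in window) / len(window)
            fixations.append(Fixation(window[0][0], window[-1][0], cx, cy))
            i = j + 1  # remove the window points
        else:
            i += 1  # drop the first point and slide the window on
    return fixations


# Usage with synthetic 100 Hz data: two stable gaze positions
samples = [(t * 10, 100, 100) for t in range(30)] + [(t * 10, 500, 500) for t in range(30, 60)]
for f in idt(samples, max_dispersion=25, min_duration_ms=100):
    print(f)
```

The fixation duration here runs from the first to the last sample in the window, so it slightly underestimates the true duration by up to one sampling interval.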

Appendix

Table A1.

The location coordinates (in pixels) of the point map symbols, as well as the selected distances (in pixels) and durations (in two groups, in msec).

| Point symbol | X (pixels) | Y (pixels) | Distance (pixels) | Duration A (msec) | Duration B (msec) |
|---|---|---|---|---|---|
| T1 | 73 | 101 | - | 2000 | 700 |
| T2 | 1039 | 743 | 1160 | 800 | 450 |
| T3 | 270 | 873 | 780 | 400 | 300 |
| T4 | 1245 | 86 | 1260 | 200 | 250 |
| T5 | 932 | 78 | 300 | 2000 | 700 |
| T6 | 465 | 455 | 600 | 600 | 350 |
| T7 | 606 | 566 | 180 | 400 | 300 |
| T8 | 1198 | 941 | 700 | 800 | 450 |
| T9 | 1242 | 1008 | 80 | 600 | 350 |
| T10 | 49 | 38 | 1540 | 200 | 250 |
| T11 | 156 | 568 | 540 | 100 | 150 |
| T12 | 1200 | 220 | 1100 | 2000 | 700 |
| T13 | 861 | 250 | 340 | 3000 | 900 |
| T14 | 288 | 864 | 840 | 600 | 350 |
| T15 | 1221 | 47 | 1240 | 1000 | 500 |
| T16 | 1252 | 155 | 100 | 800 | 450 |
| T17 | 74 | 911 | 1400 | 100 | 150 |
| T18 | 634 | 915 | 560 | 1000 | 500 |
| T19 | 610 | 56 | 860 | 400 | 300 |
| T20 | 1029 | 898 | 940 | 2000 | 700 |
| T21 | 753 | 389 | 580 | 400 | 300 |
| T22 | 324 | 489 | 440 | 800 | 450 |
| T23 | 125 | 501 | 200 | 400 | 300 |
| T24 | 1223 | 18 | 1200 | 600 | 350 |
| T25 | 335 | 224 | 920 | 3000 | 900 |
| T26 | 531 | 395 | 260 | 800 | 450 |
| T27 | 212 | 950 | 640 | 3000 | 900 |
| T28 | 1137 | 318 | 1120 | 200 | 250 |
| T29 | 89 | 943 | 1220 | 100 | 150 |
| T30 | 159 | 432 | 500 | 600 | 350 |
| T31 | 810 | 741 | 720 | 2000 | 700 |
| T32 | 1224 | 836 | 420 | 100 | 150 |
| T33 | 79 | 179 | 1320 | 400 | 300 |
| T34 | 329 | 53 | 280 | 1000 | 500 |
| T35 | 1000 | 974 | 1140 | 1000 | 500 |
| T36 | 543 | 392 | 740 | 200 | 250 |
| T37 | 619 | 599 | 220 | 600 | 350 |
| T38 | 448 | 938 | 380 | 3000 | 900 |
| T39 | 1233 | 57 | 1180 | 800 | 450 |
| T40 | 122 | 972 | 1440 | 100 | 150 |
| T41 | 41 | 176 | 800 | 2000 | 700 |
| T42 | 1244 | 612 | 1280 | 200 | 250 |
| T43 | 77 | 39 | 1300 | 3000 | 900 |
| T44 | 490 | 580 | 680 | 200 | 250 |
| T45 | 1144 | 666 | 660 | 1000 | 500 |
| T46 | 1025 | 678 | 120 | 100 | 150 |
| T47 | 1004 | 644 | 40 | 3000 | 900 |
| T48 | 1198 | 785 | 240 | 1000 | 500 |
| T49 | 102 | 14 | 1340 | 2000 | 700 |

Table A2.

The location coordinates (in pixels) for each selected combination of duration (in msec) and background color intensity level, expressed as a change (%) relative to the main cartographic background.

| Point symbol | X (pixels) | Y (pixels) | Duration (msec) | Intensity change |
|---|---|---|---|---|
| T1 | 73 | 101 | 475 | 0.00% |
| T2 | 1039 | 743 | 750 | 35.00% |
| T3 | 270 | 873 | 200 | 70.00% |
| T4 | 1245 | 86 | 612.5 | 35.00% |
| T5 | 932 | 78 | 337.5 | 70.00% |
| T6 | 465 | 455 | 612.5 | 0.00% |
| T7 | 606 | 566 | 475 | 0.00% |
| T8 | 1198 | 941 | 750 | 70.00% |
| T9 | 1242 | 1008 | 337.5 | 0.00% |
| T10 | 49 | 38 | 200 | 35.00% |
| T11 | 156 | 568 | 475 | 70.00% |
| T12 | 1200 | 220 | 337.5 | 70.00% |
| T13 | 861 | 250 | 475 | 35.00% |
| T14 | 288 | 864 | 612.5 | 35.00% |
| T15 | 1221 | 47 | 200 | 0.00% |
| T16 | 1252 | 155 | 750 | 0.00% |
| T17 | 74 | 911 | 475 | 70.00% |
| T18 | 634 | 915 | 612.5 | 35.00% |
| T19 | 610 | 56 | 200 | 0.00% |
| T20 | 1029 | 898 | 337.5 | 35.00% |
| T21 | 753 | 389 | 475 | 35.00% |
| T22 | 324 | 489 | 200 | 70.00% |
| T23 | 125 | 501 | 612.5 | 0.00% |
| T24 | 1223 | 18 | 200 | 70.00% |
| T25 | 335 | 224 | 475 | 0.00% |
| T26 | 531 | 395 | 612.5 | 0.00% |
| T27 | 212 | 950 | 200 | 35.00% |
| T28 | 1137 | 318 | 612.5 | 70.00% |
| T29 | 89 | 943 | 337.5 | 35.00% |
| T30 | 159 | 432 | 612.5 | 70.00% |
| T31 | 810 | 741 | 337.5 | 0.00% |
| T32 | 1224 | 836 | 200 | 0.00% |
| T33 | 79 | 179 | 475 | 70.00% |
| T34 | 329 | 53 | 750 | 35.00% |
| T35 | 1000 | 974 | 337.5 | 0.00% |
| T36 | 543 | 392 | 750 | 35.00% |
| T37 | 619 | 599 | 612.5 | 70.00% |
| T38 | 448 | 938 | 337.5 | 70.00% |
| T39 | 1233 | 57 | 475 | 35.00% |
| T40 | 122 | 972 | 750 | 0.00% |
| T41 | 41 | 176 | 750 | 70.00% |
| T42 | 1244 | 612 | 337.5 | 35.00% |
| T43 | 77 | 39 | 750 | 70.00% |
| T44 | 490 | 580 | 200 | 35.00% |
| T45* | 578 | 599 | 750 | 0.00% |
| T46* | 740 | 422 | 475 | 0.00% |

References

- Alaçam, Ö., and M. Dalci. 2009. A Usability Study of WebMaps with Eye Tracking Tool: The Effect of Iconic Representation of Information. In Human-Computer Interaction. Edited by J. A. Jacko. LNCS 5610. Berlin Heidelberg: Springer-Verlag, pp. 12–21.

- Bargiota, T., V. Mitropoulos, V. Krassanakis, and B. Nakos. 2013. Measuring locations of critical points along cartographic lines with eye movements. Proceedings of the 26th International Cartographic Association Conference, Dresden, Germany.

- Bertin, J. C. 1967/1983. Semiology of Graphics: Diagrams, Networks, Maps. Madison: University of Wisconsin Press (French edition 1967).

- Bezdek, J. C. 1981. Pattern Recognition with Fuzzy Objective Function Algorithms. New York: Plenum Press.

- Blignaut, P. 2009. Fixation identification: the optimum threshold for a dispersion algorithm. Attention, Perception, & Psychophysics 71, 4: 881–895.

- Blignaut, P., and T. Beelders. 2009. The effect of fixational eye movements on fixation identification with dispersion-based fixation detection algorithm. Journal of Eye Movement Research 2, (5):4: 1–14.

- Bodade, R., and S. Talbar. 2010. Novel approach of accurate iris localisation from high resolution eye images suitable for fake iris detection. International Journal of Information Technology and Knowledge Management 3, 2: 685–690.

- Camilli, M., R. Nacchia, M. Terenzi, and F. Di Nocera. 2008. ASTEF: A simple tool for examining fixations. Behavior Research Methods 40, 2: 373–382.

- Ciołkosz-Styk, A. 2012. The visual search method in map perception research. Geoinformation Issues 4, 1: 33–42.

- Coey, C. A., S. Wallot, M. J. Richardson, and G. Van Orden. 2012. On the Structure of Measurement Noise in Eye-Tracking. Journal of Eye Movement Research 5, (4):5: 1–10.

- DiBiase, D., A. M. MacEachren, J. B. Krygier, and C. Reeves. 1992. Animation and the Role of Map Design in Scientific Visualization. Cartography and Geographic Information Systems 19, 4: 201–214.

- Dong, W., H. Liao, F. Xu, Z. Liu, and S. Zhang. 2014. Using eye tracking to evaluate the usability of animated maps. Science China Earth Sciences 57, 3: 512–522.

- Duchowski, A. T. 2002. A breadth-first survey of eye-tracking applications. Behavior Research Methods, Instruments, & Computers 34, 4: 455–470.

- Duchowski, A. T. 2007. Eye Tracking Methodology: Theory and Practice, 2nd ed. London: Springer-Verlag.

- Fabrikant, S. 2005. Towards an understanding of geovisualization with dynamic displays: Issues and Prospects. Proceedings of Reasoning with Mental and External Diagrams: Computational Modeling and Spatial Assistance. Stanford: American Association for Artificial Intelligence.

- Fish, C. 2010. Change detection in animated choropleth maps. MSc Thesis, Michigan State University.

- Fish, C., K. P. Goldsberry, and S. Battersby. 2011. Change blindness in animated choropleth maps: an empirical study. Cartography and Geographic Information Science 38, 4: 350–362.

- Garlandini, S., and S. I. Fabrikant. 2009. Evaluating the Effectiveness and Efficiency of Visual Variables for Geographic Information Visualization. In COSIT 2009, LNCS 5756. Edited by K. S. Hornsby et al. Berlin Heidelberg: Springer-Verlag, pp. 195–211.

- Goldberg, J. H., and X. P. Kotval. 1999. Computer interface evaluation using eye movements: methods and constructs. International Journal of Industrial Ergonomics 24: 631–645.

- Griffin, A. L., and S. Bell. 2009. Applications of signal detection theory to geographic information science. Cartographica: The International Journal for Geographic Information and Geovisualization 44, 3: 145–158.

- Griffin, A. L., A. M. MacEachren, F. Hardisty, E. Steiner, and B. Li. 2006. A Comparison of Animated Maps with Static Small-Multiple Maps for Visually Identifying Space-Time Clusters. Annals of the Association of American Geographers 96, 4: 740–753.

- Harrower, M. 2007a. The Cognitive Limits of Animated Maps. Cartographica 42, 4: 349–357.

- Harrower, M. 2007b. Unclassed animated choropleth maps. The Cartographic Journal 44, 4: 313–320.

- Harrower, M., and S. Fabrikant. 2008. The role of map animation for geographic visualization. In Geographic Visualization. Edited by M. Dodge, M. McDerby and M. Turner. London: John Wiley & Sons, pp. 49–65.

- Incoul, A., K. Ooms, and P. De Maeyer. 2015. Comparing paper and digital topographic maps using eye tracking. In Modern Trends in Cartography. Edited by J. Brus et al. Springer International Publishing, pp. 339–356.

- Jacob, R. J. K., and K. S. Karn. 2003. Eye Tracking in Human-Computer Interaction and Usability Research: Ready to Deliver the Promises. In The Mind's Eye: Cognitive and Applied Aspects of Eye Movement Research. Edited by J. Hyönä, R. Radach and H. Deubel. Oxford: Elsevier Science, pp. 573–605.

- Jenks, G. F. 1973. Visual Integration in Thematic Mapping: Fact or Fiction? International Yearbook of Cartography 13: 27–35.

- Just, M. A., and P. A. Carpenter. 1976. Eye fixations and cognitive processes. Cognitive Psychology 8: 441–480.

- Karagiorgou, S., V. Krassanakis, V. Vescoukis, and B. Nakos. 2014. Experimenting with polylines on the visualization of eye tracking data from observations of cartographic lines. Proceedings of the 2nd International Workshop on Eye Tracking for Spatial Research (co-located with the 8th International Conference on Geographic Information Science, GIScience 2014). Edited by P. Kiefer, I. Giannopoulos, M. Raubal and A. Krüger. Vienna, Austria, pp. 22–26.

- Karl, D. 1992. Cartographic Animation: Potential and Research Issues. Cartographic Perspectives 13: 3–9.

- Keates, J. S. 1996. Understanding maps, 2nd ed. Harlow, Essex, England: Longman.

- Kiik, A. 2015. Cartographic design of thematic polygons: a comparison using eye-movements metrics analysis. MSc Thesis, Department of Physical Geography and Ecosystem Science, Lund University, Sweden.

- Kraak, M. J., and A. M. MacEachren. 1994. Visualization of spatial data's temporal component. Proceedings of Spatial Data Handling, Advances in GIS Research, Edinburgh.

- Krassanakis, V. 2013. Exploring the map reading process with eye movement analysis. In Eye Tracking for Spatial Research, Proceedings of the 1st International Workshop (in conjunction with COSIT 2013). Edited by P. Kiefer, I. Giannopoulos, M. Raubal and M. Hegarty. Scarborough, United Kingdom, pp. 2–7.

- Krassanakis, V. 2014. Development of a methodology of eye movement analysis for the study of visual perception in animated maps. Doctoral Dissertation, School of Rural and Surveying Engineering, National Technical University of Athens.

- Krassanakis, V., V. Filippakopoulou, and B. Nakos. 2011a. The influence of attributes of shape in map reading process. Proceedings of the 25th International Cartographic Association Conference, Paris, France.

- Krassanakis, V., V. Filippakopoulou, and B. Nakos. 2011b. An Application of Eye Tracking Methodology in Cartographic Research. Proceedings of EyeTrackBehavior 2011 (Tobii), Frankfurt, Germany.

- Krassanakis, V., V. Filippakopoulou, and B. Nakos. 2014. EyeMMV toolbox: An eye movement post-analysis tool based on a two-step spatial dispersion threshold for fixation identification. Journal of Eye Movement Research 7, (1):1: 1–10.

- Krassanakis, V., A. Lelli, I. E. Lokka, V. Filippakopoulou, and B. Nakos. 2013. Investigating dynamic variables with eye movement analysis. Proceedings of the 26th International Cartographic Association Conference, Dresden, Germany.

- Lloyd, R. 1997. Visual Search Processes Used in Map Reading. Cartographica 34, 1: 11–32.

- Lloyd, R. 2005. Attention on Maps. Cartographic Perspectives 52: 28–57.

- Lowe, R., and J. M. Boucheix. 2010. Attention direction in static and animated diagrams. In Diagrammatic Representation and Inference. Edited by A. K. Goel, M. Jamnik and N. H. Narayanan. Berlin Heidelberg: Springer, pp. 250–256.

- MacEachren, A. M. 1995. How maps work: Representation, Visualization, and Design. New York: The Guilford Press.

- Maggi, S., and S. I. Fabrikant. 2014. Triangulating Eye Movement Data of Animated Displays. Proceedings of the 2nd International Workshop on Eye Tracking for Spatial Research (co-located with the 8th International Conference on Geographic Information Science, GIScience 2014). Edited by P. Kiefer, I. Giannopoulos, M. Raubal and A. Krüger. Vienna, Austria, pp. 27–31.

- Maggi, S., S. I. Fabrikant, J. P. Imbert, and C. Hurter. 2015. How Do Display Design and User Characteristics Matter in Animated Visualizations of Movement Data? Proceedings of the 27th International Cartographic Association Conference, Rio de Janeiro, Brazil.

- Manor, B. R., and E. Gordon. 2003. Defining the temporal threshold for ocular fixation in free-viewing visuocognitive tasks. Journal of Neuroscience Methods 128: 85–93.

- Marr, D. 1982. Vision: A Computational Investigation into the Human Representation and Processing of Visual Information. New York: W. H. Freeman and Company.

- Matsuno, T., and M. Tomonaga. 2006. Visual search for moving and stationary items in chimpanzees (Pan troglodytes) and humans (Homo sapiens). Behavioural Brain Research 172: 219–232.

- Michaelidou, E., V. Filippakopoulou, B. Nakos, and A. Petropoulou. 2005. Designing Point Map Symbols: The effect of preattentive attribute of shape. Proceedings of the 22nd International Cartographic Association Conference, A Coruña, Spain.

- Montello, D. R. 2002. Cognitive Map-Design Research in the Twentieth Century: Theoretical and Empirical Approaches. Cartography and Geographic Information Science 29, 3: 283–304.

- Moon, S., E. K. Kim, and C. S. Hwang. 2014. Effects of Spatial Distribution on Change Detection in Animated Choropleth Maps. Journal of the Korean Society of Surveying, Geodesy, Photogrammetry and Cartography 32, 6: 571–580.

- Nossum, A. S. 2012. Semistatic Animation: Integrating Past, Present and Future in Map Animations. The Cartographic Journal 49, 1: 43–54.

- Ooms, K., P. De Maeyer, V. Fack, E. Van Assche, and F. Witlox. 2012. Interpreting maps through the eyes of expert and novice users. International Journal of Geographical Information Science 26, 10: 1773–1788.

- Ooms, K., P. De Maeyer, and V. Fack. 2014. Study of the attentive behavior of novice and expert map users using eye tracking. Cartography and Geographic Information Science 41, 1: 37–54.

- Opach, T., I. Gołębiowska, and S. I. Fabrikant. 2013. How Do People View Multi-Component Animated Maps? The Cartographic Journal, 1–13.

- Opach, T., and A. Nossum. 2011. Evaluating the usability of cartographic animations with eye-movement analysis. Proceedings of the 25th International Cartographic Association Conference, Paris, France.

- Poole, A., and L. J. Ball. 2005. Eye Tracking in Human-Computer Interaction and Usability Research: Current Status and Future Prospects. In Encyclopedia of Human Computer Interaction. Edited by C. Ghaoui. Pennsylvania: Idea Group, pp. 211–219.

- Popelka, S., and A. Brychtova. 2013. Eye-tracking Study on Different Perception of 2D and 3D Terrain Visualization. The Cartographic Journal 50, 3: 240–246.

- Robinson, A. H., J. L. Morrison, P. C. Muehrcke, A. J. Kimerling, and S. C. Guptill. 1995. Elements of Cartography. New York: John Wiley & Sons.

- Royden, C. S., J. M. Wolfe, and N. Klempen. 2001. Visual search asymmetries in motion and optic flow fields. Perception & Psychophysics 63, 3: 436–444.

- Russo, P., C. Pettit, A. Çöltekin, M. Imhof, M. Cox, and C. Bayliss. 2014. Understanding soil acidification process using animation and text: An empirical user evaluation with eye tracking. In Cartography from Pole to Pole. Edited by M. Buchroithner et al. Berlin Heidelberg: Springer, pp. 431–448.

- Salvucci, D. D., and J. H. Goldberg. 2000. Identifying Fixations and Saccades in Eye-Tracking Protocols. Proceedings of the Symposium on Eye Tracking Research and Applications, pp. 71–78.

- Slocum, T. A., R. B. McMaster, F. C. Kessler, and H. H. Howard. 2009. Thematic Cartography and Geovisualization, 3rd ed. Prentice Hall Series in Geographic Information Science.

- Sluter, R. S. 2001. New theoretical research trends in cartography. Revista Brasileira de Cartografia 53: 29–37.

- Steinke, T. R. 1987. Eye movement studies in cartography and related fields. Studies in Cartography, Monograph 37, Cartographica 24, 2: 40–73.

- Stofer, K., and X. Che. 2014. Comparing experts and novices on scaffolded data visualizations using eye-tracking. Journal of Eye Movement Research 7, (5):2: 1–15.

- Strasburger, H., I. Rentschler, and M. Jüttner. 2011. Peripheral vision and pattern recognition: A review. Journal of Vision 11, (5):13: 1–82.

- Wandell, B. A. 1995. Foundations of vision. Sinauer Associates.

- Wolfe, J. M. 2005. Guidance of Visual Search by Preattentive Information. In Neurobiology of attention. Edited by L. Itti, G. Rees and J. Tsotsos. San Diego, CA: Academic Press/Elsevier.

- Wolfe, J. M., and T. S. Horowitz. 2004. What attributes guide the deployment of visual attention and how do they do it? Nature Reviews Neuroscience 5: 1–7.

- Xiaofang, W., D. Qingyun, X. Zhiyong, and L. Na. 2005. Research and Design of Dynamic symbol in GIS. Proceedings of the International Symposium on Spatio-temporal Modeling, Spatial Reasoning, Analysis, Data Mining and Data Fusion, Beijing.

Copyright © 2016. This article is licensed under a Creative Commons Attribution 4.0 International License.