Gaze Path Stimulation in Retrospective Think-Aloud

Abstract

Introduction

Previous Research on Think-Aloud and Eye Tracking in Usability Evaluation

Studies of Retrospective and Concurrent Think-Aloud Protocols

Eye Tracking in Usability Tests

Studies Combining Eye Tracking and Think-Aloud

- Will participants using gaze path stimulated RTA produce as many useful comments as users using CTA, or more?

- Do we find fewer or more usability problems with gaze path stimulated RTA than with CTA?

- How will the need for capturing the user’s gaze path and collecting retrospective verbalizations affect planning and running usability tests?

Experimental Setup

Apparatus

Participants

Procedure

Design

Results

Qualitative Analysis

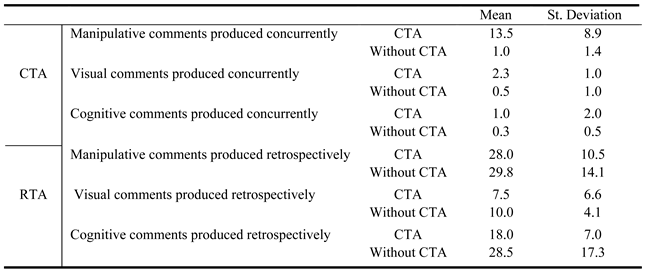

Quantitative Evaluation

“I write the name into this field”,

“Of course, I could have clicked all of those…”, or

“Oh, I gave an erroneous input…”.

“I saw it here somewhere…”,

“Then I look for a picture of the car…”, or

“I read it from the previous page…”.

“I remember seeing it before”,

“Now I finally understand that there is a scroll bar on the right”, or

“I found out that I can’t make a search from this page”.

Discussion

Participants

Mobility Restrictions

Duration of the Test

Instructions for the Participants

Role of the Auditory Data

Quality of the Data

Special User Groups

Veridicality

Conclusions

Acknowledgments

Copyright © 2008. This article is licensed under a Creative Commons Attribution 4.0 International License.

Hyrskykari, A.; Ovaska, S.; Majaranta, P.; Räihä, K.-J.; Lehtinen, M. Gaze Path Stimulation in Retrospective Think-Aloud. J. Eye Mov. Res. 2008, 2, 1-18. https://doi.org/10.16910/jemr.2.4.5
