Quantifying Dwell Time With Location-based Augmented Reality: Dynamic AOI Analysis on Mobile Eye Tracking Data With Vision Transformer

Abstract

Introduction

Background

Mobile Eye Tracking for Educational Technology Research

Deep Learning for Eye Tracking Data Analysis

Methods

General Approach

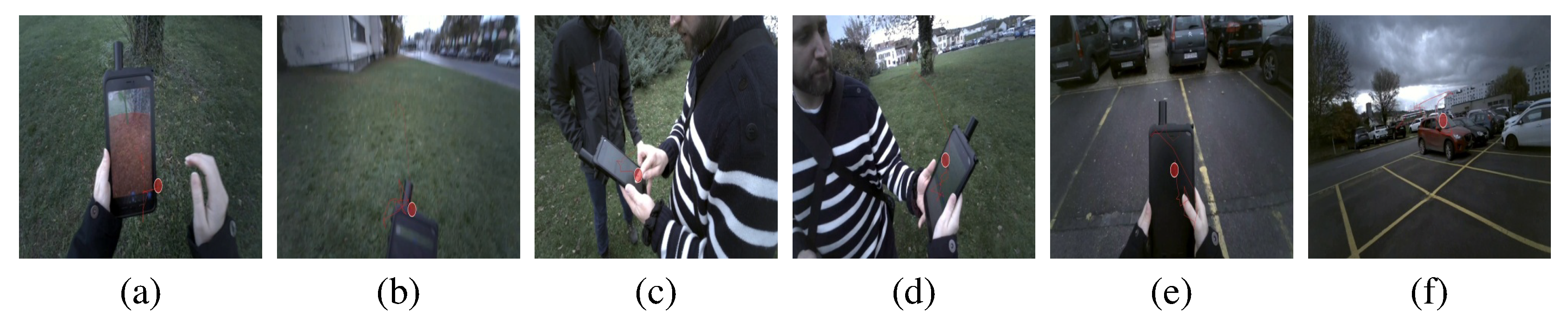

Data Collection

Data Pre-processing

Training Set and Manual Image Labeling

Model Training

- Epochs: the number of times the model processes the entire dataset. We first ran a test with 3 epochs, then trained the model for a further 10 epochs, saving a checkpoint file at the end of each epoch.

- Batch size: the number of images processed per step. Larger values generally speed up training, but the batch size is limited by the available memory, since all images in a batch are held and processed simultaneously. We used a batch size of 24, a commonly used value.

- Loss function: the function that quantifies the error that training seeks to minimize. Cross-entropy loss was used. Its output is non-negative: it approaches 0 when the model assigns a high probability to the correct class and grows without an upper bound as the predictions move further from ground truth. The smaller the loss, the better the model performs with the current weights (on training data for the training loss, on unseen data for the validation loss). Together with accuracy, it may be used to determine the optimal checkpoint.

- Learning rate: the size of the adjustments made to the weights of our neurons at each step, relative to the gradient of the loss. If the learning rate is too high, the updates overshoot the optimal weights and we miss the optimal checkpoint. If it is too low, it takes too many steps to reach the optimal solution. With a suitable rate, the loss should decrease steadily rather than jump up and down. We kept the pretrained model’s preset value of 5 × 10⁻⁵.

- Evaluation metric: a model’s performance may be evaluated on its accuracy, precision, or recall, during and after training. We used accuracy, which represents how often the model is correct overall.
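To make the loss and the evaluation metric concrete, the following is a minimal sketch in plain Python, not the training code used in this study. It computes the cross-entropy of a single prediction as the negative log of the softmax probability assigned to the true class, and the accuracy of a small batch. The class names are taken from the results table; the logit values and the helper names (`softmax`, `cross_entropy`, `batch_metrics`) are assumptions chosen for illustration.

```python
import math

# Class names as used in the results table; the index order is an assumption.
CLASSES = ["in", "out", "none"]


def softmax(logits):
    """Convert raw model outputs (logits) into probabilities that sum to 1."""
    highest = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - highest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target_index):
    """Negative log-probability assigned to the true class."""
    return -math.log(softmax(logits)[target_index])


def batch_metrics(batch_logits, batch_targets):
    """Return (mean cross-entropy loss, accuracy) for one batch."""
    losses = [cross_entropy(l, t) for l, t in zip(batch_logits, batch_targets)]
    predictions = [max(range(len(l)), key=l.__getitem__) for l in batch_logits]
    correct = sum(p == t for p, t in zip(predictions, batch_targets))
    return sum(losses) / len(losses), correct / len(batch_targets)


# Hypothetical logits for two images whose true class is "in" (index 0).
confident_right = cross_entropy([6.0, 0.0, 0.0], 0)  # close to 0
confident_wrong = cross_entropy([0.0, 6.0, 0.0], 0)  # well above 1

mean_loss, batch_accuracy = batch_metrics(
    [[6.0, 0.0, 0.0], [0.0, 6.0, 0.0]], [0, 0]
)
```

In this toy batch one of two predictions is correct, so the accuracy is 0.5, while the mean loss is dominated by the confidently wrong prediction. This is why loss and accuracy can point to different checkpoints and are best read together.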

Hold-out Validation

Inference

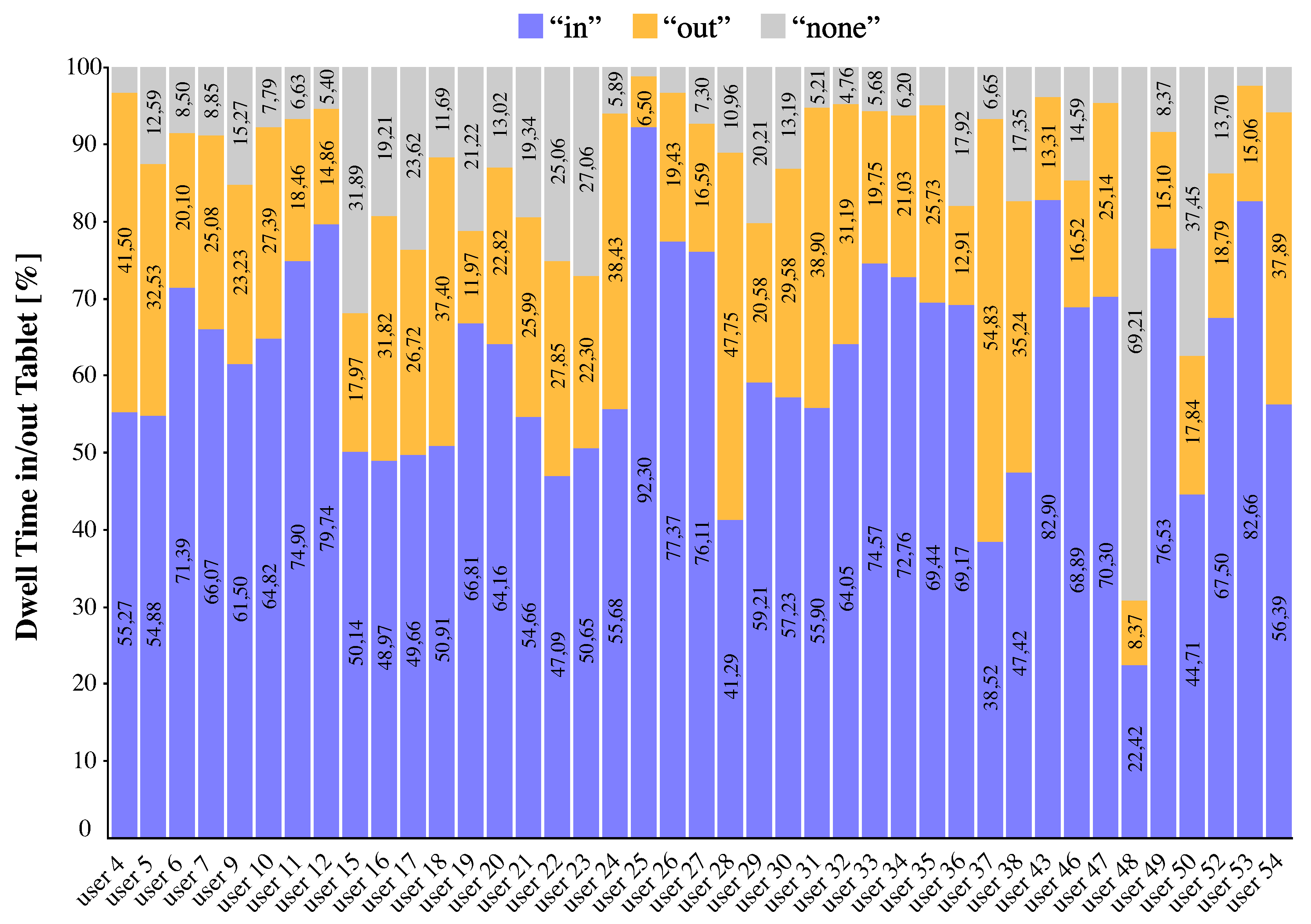

Data Post-Processing and Data-Visualization

Results

Discussion

Code Availability

Ethics and Conflict of Interest

Acknowledgements

Appendix A Image Data Embedding in Deep Learning

Appendix B Convolutional Neural Network

Appendix C Vision Transformer

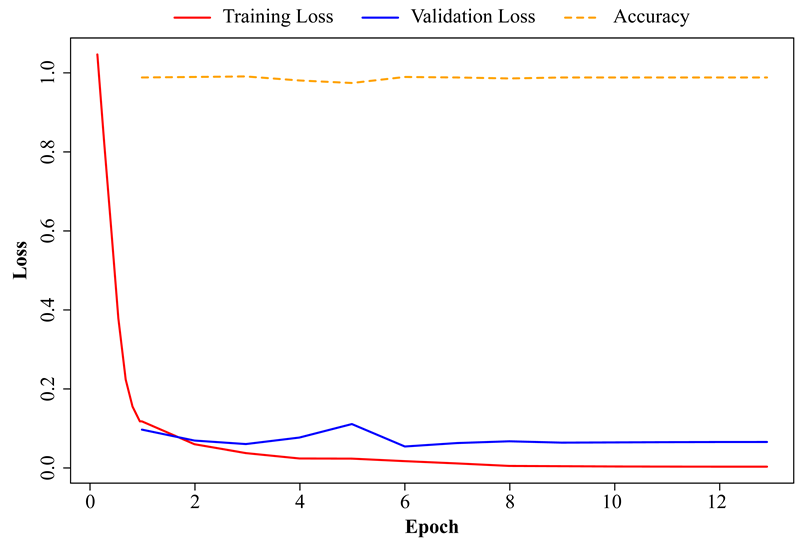

Appendix D Training results.

| Epoch | Step | Training Loss | Validation Loss | Accuracy |
|---|---|---|---|---|
| 1 | 73 | 0.1179 | 0.0977 | 98.85% |
| 2 | 147 | 0.06 | 0.0693 | 98.98% |
| 3 | 219 | 0.0376 | 0.0604 | 99.11%¹ |
| 4 | 292 | 0.024 | 0.0769 | 98.09% |
| 5 | 366 | 0.0236 | 0.1111 | 97.45% |
| 6 | 440 | 0.0172 | 0.0542 | 98.98%² |
| 7 | 514 | 0.0114 | 0.0630 | 98.85% |
| 8 | 587 | 0.0051 | 0.0674 | 98.60% |
| 9 | 661 | 0.0044 | 0.0640 | 98.85% |
| 10 | 735 | 0.0037 | 0.0646 | 98.85% |
| 11 | 809 | 0.0034 | 0.0652 | 98.85% |
| 12 | 882 | 0.0032 | 0.0656 | 98.85% |
| 13 | 949 | 0.0032 | 0.0657 | 98.85% |

¹ After epoch 3, training reached the best accuracy overall (as evaluated on a test set that was split from the training set) and the checkpoint was saved as Model v1.

² After epoch 6, training reached the best accuracy of the second training run and the checkpoint was saved as Model v2.
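The checkpoint choice described in the two table notes can be reproduced from the logged values. The sketch below is illustrative only: it assumes the first run covers epochs 1 to 3 and the second run epochs 4 to 13, and it breaks ties in accuracy by preferring the lower validation loss, a rule that is our assumption rather than something stated in the table. The names `EpochLog` and `best_checkpoint` are hypothetical.

```python
from typing import NamedTuple


class EpochLog(NamedTuple):
    epoch: int
    step: int
    train_loss: float
    val_loss: float
    accuracy: float  # percent


# Values copied from the Appendix D table.
LOG = [
    EpochLog(1, 73, 0.1179, 0.0977, 98.85),
    EpochLog(2, 147, 0.06, 0.0693, 98.98),
    EpochLog(3, 219, 0.0376, 0.0604, 99.11),
    EpochLog(4, 292, 0.024, 0.0769, 98.09),
    EpochLog(5, 366, 0.0236, 0.1111, 97.45),
    EpochLog(6, 440, 0.0172, 0.0542, 98.98),
    EpochLog(7, 514, 0.0114, 0.0630, 98.85),
    EpochLog(8, 587, 0.0051, 0.0674, 98.60),
    EpochLog(9, 661, 0.0044, 0.0640, 98.85),
    EpochLog(10, 735, 0.0037, 0.0646, 98.85),
    EpochLog(11, 809, 0.0034, 0.0652, 98.85),
    EpochLog(12, 882, 0.0032, 0.0656, 98.85),
    EpochLog(13, 949, 0.0032, 0.0657, 98.85),
]


def best_checkpoint(rows):
    """Highest accuracy wins; ties go to the lower validation loss (assumed rule)."""
    return max(rows, key=lambda r: (r.accuracy, -r.val_loss))


first_run = [r for r in LOG if r.epoch <= 3]   # initial 3-epoch test
second_run = [r for r in LOG if r.epoch > 3]   # further 10 epochs

model_v1 = best_checkpoint(first_run)
model_v2 = best_checkpoint(second_run)
print(f"Model v1: epoch {model_v1.epoch}, Model v2: epoch {model_v2.epoch}")
```

The training loss keeps falling through epoch 13 while the validation loss stops improving after epoch 6, which is why the selection relies on the validation columns rather than on the training loss.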

Appendix E Training plot.


| Method | “in” | “out” | “none” | Errors¹ | Accuracy¹ |
|---|---|---|---|---|---|
| Ground truth² | 5714 (55.96%) | 4298 (42.1%) | 198 (1.94%) | ø | 100% |
| Model v1 | 5691 (55.74%) | 4318 (42.3%) | 201 (1.96%) | 67 (0.66%) | 99.34% |
| Manual labeling | 5726 (56.08%) | 4327 (42.38%) | 157 (1.54%) | 86 (0.84%) | 99.16% |
| Model v2 | 5608 (54.93%) | 4394 (43.07%) | 208 (2.04%) | 126 (1.24%) | 98.76% |
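The relation between the Errors and Accuracy columns can be sketched as follows, assuming that an error is one labeled item whose class differs from ground truth and that the total is the sum of the three ground-truth counts. The helper names `count_errors` and `accuracy_from_errors` and the four-item toy sequence are hypothetical and serve only as an illustration.

```python
# Total number of labeled items, taken as the sum of the ground-truth counts
# in the table (an assumption about how the percentages were derived).
TOTAL_ITEMS = 5714 + 4298 + 198


def count_errors(predicted, truth):
    """Number of positions where the predicted label differs from ground truth."""
    if len(predicted) != len(truth):
        raise ValueError("label sequences must have the same length")
    return sum(p != t for p, t in zip(predicted, truth))


def accuracy_from_errors(errors, total):
    """Accuracy in percent, rounded to two decimals."""
    return round(100.0 * (1 - errors / total), 2)


# Toy example: one disagreement out of four items.
toy_errors = count_errors(["in", "out", "none", "in"], ["in", "out", "in", "in"])

# Error counts from the table for Model v1 and for manual labeling.
model_v1_accuracy = accuracy_from_errors(67, TOTAL_ITEMS)
manual_accuracy = accuracy_from_errors(86, TOTAL_ITEMS)
```

Under these assumptions the recomputed values match the table for Model v1 (99.34%) and manual labeling (99.16%).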

Copyright © 2024. This article is licensed under a Creative Commons Attribution 4.0 International License.

Share and Cite

Mercier, J.; Ertz, O.; Bocher, E. Quantifying Dwell Time With Location-based Augmented Reality: Dynamic AOI Analysis on Mobile Eye Tracking Data With Vision Transformer. J. Eye Mov. Res. 2024, 17, 1-22. https://doi.org/10.16910/jemr.17.3.3
