Introduction

The relationship between a wide range of aspects of ensemble playing and musicians’ gaze behaviour has recently gained more attention. This may be partly due to the realisation that bodily movement, a visual aspect of musical performance that has been studied extensively, must be attended to if it is to play a role in interperformer communication. Yet observations regarding gaze as a communication channel in ensemble playing, whether as a means for gathering visual information on the partner or for cueing, are still scarce. The current literature that addresses gaze behaviour tends to do so anecdotally within the context of qualitative studies that describe gaze based on video recordings. However, researchers wishing to focus on musicians’ gaze behaviour in a relatively natural setting may consider making use of the recently developed technique of mobile eye-tracking.

The current paper reports on the initial results of such an undertaking and addresses methodological issues. The type of ensemble studied is the trio since this constellation combines the interactional richness of a group (as opposed to a duo) (

Brandler & Peynircioglu, 2015) with a minimum of complexities. Our research agenda is motivated by the aim to explain how musicians’ gazing at the partner may relate to their sense-making of the musical task. This means we eventually hope to relate gazing at the partner to the characteristics of the musical score and to the decision-making process during rehearsal. Thereby, we consider each individual musician a single case to be studied in depth, after which cross-case comparison will take place.

The current article reports on an initial exploration of a part of our data set. First, we describe how often gazing at the partner occurred to identify possible contrasting cases. Second, we compare the amount of partner-gazes across uninterrupted runs through the entire musical fragment in order to determine whether gazing at the partner increased or decreased. Our observations are based on a data set that shows four trio ensembles playing the same musical fragment, running through it four times each (sixteen run-throughs in total) in a rehearsal setting. The procedure also required the participants to work collaboratively on the musical fragment between the runthroughs (two times for half an hour), but data on these activities are not discussed here.

Below, we situate our research by presenting an overview of the main data collection methods used in studies that have addressed gaze behaviour in ensemble playing. We proceed by providing some technical insights into mobile eye-tracking. Last, we review results on gaze, as far as it relates to the musical task within ensemble playing. We note that gaze in performer-audience communication (see e.g.,

Antonietti et al., 2009), or in orchestral or choral conducting (see

Silvey, 2014), was deemed lying outside the scope of the current research.

State of the Art

The topic of gaze behaviour in ensemble playing has been illuminated by naturalistic and experimental research that employed data collection methods other than mobile eye-tracking. Gaze has been included in surveys on ensemble playing (

Blank & Davidson, 2007;

Ford & Davidson, 2003;

Pennill & Timmers, 2017). A wide range of qualitative studies using video data, too, have addressed gaze as part of broader ensemble-related topics (

Davidson, 2012;

Davidson & Good, 2002;

Fulford & Ginsborg, 2014; Geeves, McIlwain, & Sutton, 2014;

King & Ginsborg, 2011;

Kurosawa & Davidson, 2005;

Williamon & Davidson, 2002). Gaze has also been the focus of more detailed study, on the one hand by using video recordings of ensembles playing in natural settings (

Biasutti et al., 2016;

Kawase, 2009;

Moran, 2010), on the other by employing video cameras in experimental settings (

Kawase, 2014a,

2014b; Morgan, Gunes, & Bryan-Kinns, 2015b). Finally, some studies proceeded by employing several visual conditions, whereby gaze (at certain body parts or at the entire body) was either possible or obstructed (

Kawase, 2014b;

Keller & Appel, 2010; Vera, Chew, & Healey, 2013). The existence of these studies indicates that the topic of gaze appears of interest to various researchers studying ensemble performance.

A particular challenge when using video data seems to be to avoid a trade-off between ecological validity and fine-grained gaze measurements. For example, some authors take head direction as a measurement for gaze direction, as is clearly stated in

Moran (

2010) and Dardard, Gnecco, and Glowinski (2016). The latter refers to

Stiefelhagen (

2002), stating that head direction is sometimes a good approximation for gaze direction.

Kawase (

2014b) on the other hand, obtained the more finegrained distinction between mutual gaze (gazing at each other’s body) and eye-contact (gazing into each other’s eyes) by means of an elaborate experimental design, using a screen between the musicians and a chinrest to fix their heads. Seen in this light, mobile eye-tracking can be considered an appropriate tool for measuring eye gaze in an interactional setting, as it allows a compromise between ecological validity and the need for measurements that capture the alternation between saccades (jerky movements from one target to another) and fixations (moments where the eyes remain relatively static and focused on the same target) (Liversedge, Gilchrist, & Everling, 2011).

Still, there are some limitations. First, we note that eye-tracking is not entirely new within the musical domain, as there is a growing body of research on music reading (see

Madell & Hébert, 2008;

Puurtinen, 2018) in which various forms of video-based eye-tracking are used. In these studies, however, regardless of any methodological and technical varieties, the stimulus (in this case the musical score) is always presented as a stationary object (usually on a screen). When studying the eye movements of musicians playing in an interactional setting, visual targets are not known in advance and this calls for a different eye-tracking technique. Mobile eyetracking (equally video-based) offers the advantage of allowing for a relatively naturalistic setting in which participants can direct their gaze at any point in space. In addition, they can move more freely in order to handle their instruments. On the downside, due to a relatively low sampling rate, gaze measurements require careful interpretation. High-accuracy eye-tracking systems collect data at up to 2000 Hz, whereas mobile eye trackers generally have a sampling rate of 60 Hz (Anantrasirichai, Gilchrist, & Bull, 2016), although higher sampling rates are available as well. Since saccades may be shorter than 50 ms, Anantrasirichai, Gilchrist, and Bull (2016) argue that mobile eye trackers with a frame rate below 40 Hz may be inadequate to reliably distinguish between fixations and saccades.

Regardless of what kind of eye tracker is used, a second limitation is that the obtained data provide information about what lies in the participant’s central vision. Hence, peripheral vision, while it may play an important role in collaborative music making, cannot be studied. A third limitation, finally, concerns the occasional loss of data caused by the fact that the image of the videorecorded visual field is slightly smaller than the actual visual field. Therefore, the gaze cursor that moves across the video-recorded image of the visual field, thus indicating the point of regard, cannot be mapped onto the visual field image when participants look from the corner of their eyes.

To our knowledge, three studies thus far have incorporated mobile eye-tracking into the study of ensemble playing, other than our own pilot study (

Vandemoortele et al., 2015,

2016) and current research. Morgan, Gunes, and Bryan-Kinns (2015a) devised a tool for real-time feedback on the body motion and eye gaze of an invisible co-performer employing eye-tracking headsets and small wireless accelerometers.

Yamada et al. (

2014) tracked the gaze shifts of an expert and non-expert Japanese drum player playing together, calculating gaze shifts and percentages of time looking at the self, the opposite person, and other areas. An ongoing study by

Bishop and Goebl (

2017) analyses mobile eye-tracking data, alongside motion capture and audio/MIDI data, from clarinet-piano duos to test whether visual communication between performers facilitates coordination and how. The duos performed three run-throughs, at the start, middle, and end of a rehearsal, followed by a run-through during which musicians’ views of each other were obscured. The use of several run-throughs renders their research somewhat comparable to our own pilot and current study, the design of which we explain in the next section.

Most studies with results on gaze have dealt with aspects of the musical task, whether as a task set by the musical score or by the demands of ensemble performance. Some studies reveal that certain moments in the score can indeed be said to bear a relationship with gaze behaviour.

Davidson (

2012) who video-recorded clarinetflute duos observed that gazes at the partner do not happen regularly, but rather at major boundaries (the start and the end of the work and section endings). Furthermore,

Williamon and Davidson (

2002, p. 61) state that the proportion of “direct, simultaneous eye-contact” (out of the total amount) increased across the two rehearsals, and public performance, at places in the score that were identified by the musicians (a piano duo) as important for coordination.

Results in other studies point out that gaze behaviour may also relate to aspects of the sounding performance. For instance, the topic of coordination was also addressed by measuring timing lags between musicians’ note onsets. Morgan, Gunes, and Bryan-Kinns (2015b) and Vera, Chew, and Healey (2013) concluded that gazing at the partner enhanced synchronisation. Furthermore,

Kawase (

2014b) studied piano duos and found that mutual gazing, but not eye-contact, enhanced synchronisation at tempo changes. In

Keller and Appel (

2010), visual contact was found to cause a higher variability of key stroke asynchronies between the two pianists than in the condition where performers could not see each other, although ensemble coordination was not affected markedly. The authors suggest that visual contact could have encouraged the performers to be more expressive with the timing. In a second experiment by

Kawase (

2014b) it was suggested that gazing itself provided some coordination cues, although movement cues were necessary for strict coordination. This is not necessarily in contradiction with

Keller and Appel’s (

2010) finding that the absence of visual contact did not affect coordination markedly, since the musical materials in their study contained no tempo changes and maintained a continuous metrical pulse (in contrast with Kawase’s study).

Another performance aspect that gaze has been shown to be related to is the relationship between leaders/soloists and followers/accompanists, as can be learnt from

Moran (

2010) and

Kawase (

2014a). In both studies, accompanists looked at soloists longer than vice versa. As Kawase remarks, this shows that similar mechanisms regarding gaze may be at work in musical interaction (when considering melody allocation or leadership allocation) as in spoken interaction (when considering social status). For example, participants in a study by

Foulsham et al. (

2010) looked more frequently and longer at highstatus individuals than at low-status individuals when watching a clip of a group decision-making task.

Our own study tracked the gaze behaviour of musicians playing in trios consisting of a clarinet, violin and piano using Tobii Pro Glasses 2 (sampling rate 50 Hz). Gazing at the partner will eventually be related to the characteristics of the musical score and to the decisionmaking process during rehearsal via in-depth study of each individual musician. The aim of the current article, however, is to report on an initial exploration of a part of the eye-tracked data set, namely the four run-throughs each of the four trios played. First, we describe how often gazing at the partner occurred to identify possible contrasting cases. As studies on conversational interaction show, gaze behaviour is not only a means to an end, for instance to regulate turn-taking in unscripted conversation, but it is also a learnt behaviour shaped by social norms. As such, people tend to have an idea of what constitutes ordinary or deviant gaze behaviour (

Rossano, 2012). Not considering that gazing at the partner may be generally recommended in certain musical situations (i.e., at tempo changes), it may be hard to define what constitutes ordinary or deviant gaze behaviour in musical interaction. Indeed, the lack of clear norms regarding gaze behaviour presents musicians with the opportunity to display themselves as various sorts of artistic personae and allows them to actively engage with the audience (as was the case with

The Corrs according to a study by (

Kurosawa and Davidson, 2005) or to purposely ignore them. Given this flexibility and given the additional fact that, in conversations, individuals’ amount of gazing at the partner has been shown to differ substantially (

Kendon, 1967), we expect that the number of gazes at the partner in our study will differ regardless of the musical instrument of the participant.

Second, we compare the amount of partner-gazes across run-throughs in order to determine whether gazing at the partner increased or decreased.

Williamon and Davidson (

2002) found that eye-contact increasingly occurred at places in the score that were identified by the musicians as important for coordination. The increase was found after comparing two rehearsals and a performance, i.e., three stages of different duration. As the authors suggest, gaze may have started to function increasingly as a coordinating device over the course of the rehearsal process, however the increase may also have been supported by a growing ease to look up from the score. Both the study by

Williamon and Davidson (

2002) and our own study deal with interactions between unfamiliar musicians rehearsing unfamiliar music. The current design differs in that it allows for a comparison between runs through the same fragment, all in a rehearsal setting, thus providing the opportunity to determine whether a tendency can be detected regardless of any links to specific moments in the score.

Methods

Participants

Our data set consists of twelve musicians, who follow higher music education in Belgium and agreed to participate in an eye-tracking experiment. Except one, all study at LUCA School of Arts and were selected on the basis of their musical abilities as judged by the chamber music coordinator of the institution. One participant was found through social media and studies at the Royal Conservatory of Brussels. Four clarinet-violin-piano trios were formed making sure that no musician had ever played chamber music with any of the partners before. Two of the trios were all female, one included a male musician, and one included two male musicians. Ages ranged from 18–28 years (Mean age = 23 years). None of the musicians had ever played the composition chosen for the recording session. They consented in writing to taking part in the study and to the use of still images and audiovisual recordings for scientific purposes. At the end of the session each musician received a voucher.

Setting, Apparatus, Stimuli

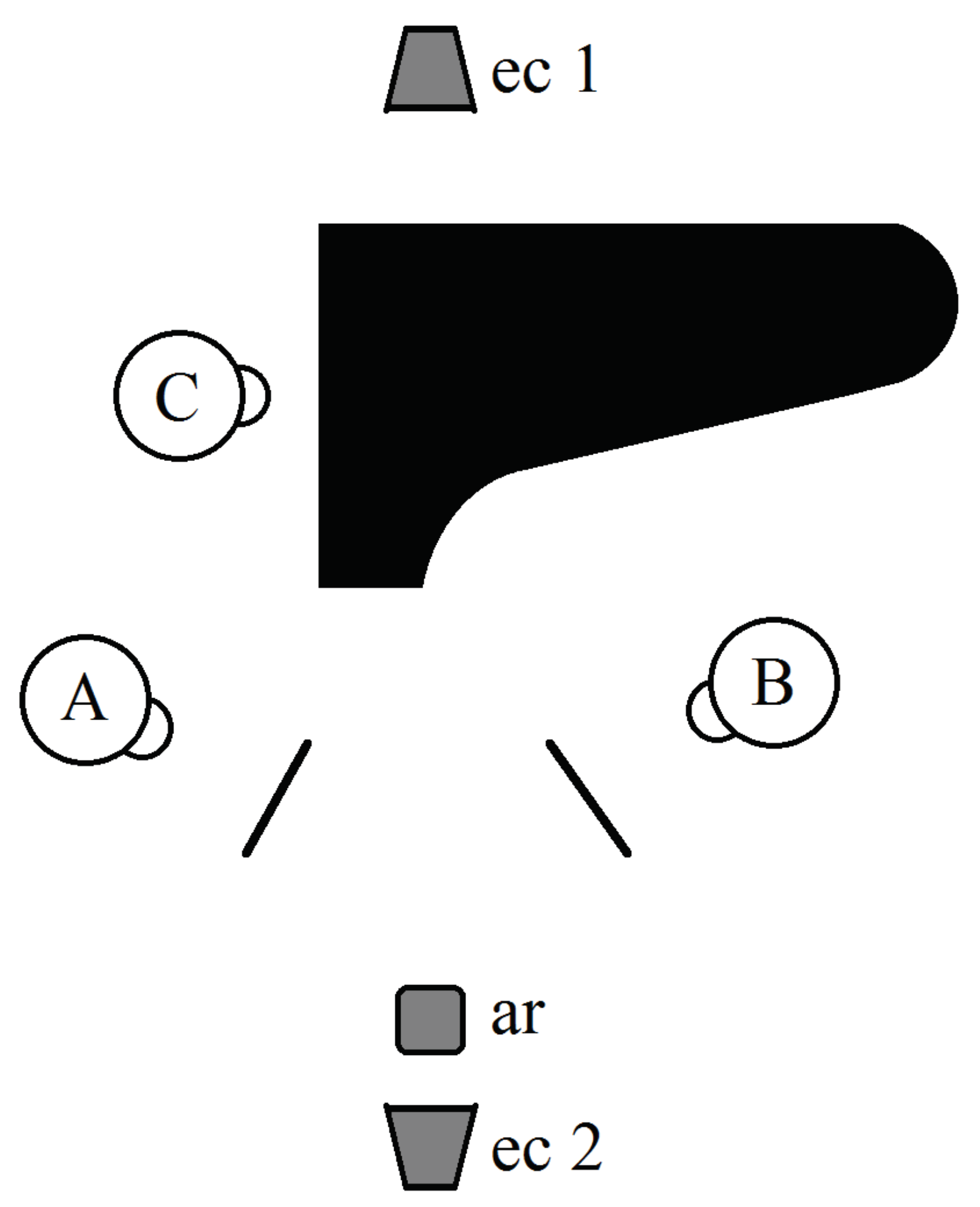

The recording session took place in the concert hall of the institution. The musicians were positioned the way they would be in a natural condition (

Figure 1), i.e., the clarinetist stood inside the wing of the piano, while the violinist stood next to where the pianist was seated. Clarinetist and violinist were faced toward each other and the height of their music stands was lowered just enough so that the participants could see each other’s head. The distance between the musicians was such that gazes at different large areas of the body could be distinguished (i.e., head, torso, legs, arms…). Smaller parts (for instance, eyes and mouth) could not be detected separately.

Each musician in the trio wore a binocular mobile eye tracker (Tobii Pro Glasses 2, sampling rate 50 Hz). For those who normally wore prescription glasses the eye tracker was fitted with lenses with approximately the same strength as the participants’ own. Two external cameras (frame rate 50 fps) captured the overall interaction. One was positioned in the seating area of the hall, filming a frontal view of the trio; the other filmed the back of the trio from the balcony above the stage. An audio recorder (TASCAM DR-2d) was placed on one of the front seats and guaranteed a reasonable sound quality.

The musical excerpt was taken from the last movement of Milhaud’s Suite for violin, clarinet and piano (measure 1 to 103 of the Vif-section). The musical parts carried a metronome marking of 120 bpm. At this speed the excerpt lasts about 2 minutes in performance. The marking could inform the participants about the envisaged performance tempo, however the researchers gave no instructions as to what tempo was expected. The musicians were also told not to use a metronome. The music was deemed appropriate for the study of individual differences and interactional dynamics, as the instruments are treated more or less as equal partners through an almost equal share in the melody and through passages that combine the melody with countermelodic material, rather than accompaniment patterns.

Procedure

Upon arrival at the concert hall, the participants were briefly introduced to each other. The researcher explained the schedule for the session, handed over the musical parts and guided the clarinetist and violinist to an individual practice room. The pianist remained in the concert hall. The musicians were allowed to practice for half an hour, after which they were assembled for the eyetracked rehearsal. The rehearsal followed a predetermined schedule that alternated between uninterrupted runs through the musical fragment and rehearsal periods during which the participants were expected to work collaboratively on the fragment. The schedule was organised as follows: rehearsal period 1 (30′)—run-through—rehearsal period 2 (30′)—run-through—run-through. Except for the individual practice, the entire session was recorded with mobile eye trackers, cameras, and audio device. The eye trackers were recalibrated before each run-through. At the very end, each participant filled out a post-performance questionnaire. On the one hand, the questionnaire obtained information about the participants’ experience of the equipment and procedure. On the other, it aimed to collect possible data points for analysis by enquiring about the difficulties in the musical excerpt and by asking where in the score participants thought they had looked at a partner. Gaze will be analysed in the light of these responses at a later stage of our research. It is well worth noting that all participants, with one exception, considered the amount of individual practice time either adequate or too long. Regarding the amount of rehearsal time, participants stated either that no additional time was needed or that additional rehearsing on another day could be useful if they were to study the full musical piece. It therefore seems that the musical excerpt was not too difficult for the participants. The questions were formulated in Dutch and English. However, quite a few participants were not native English or Dutch speakers. Hence, after participants completed the form a brief oneto-one discussion followed with the researcher, mostly to ensure that the questions and answers were clear to both participant and researcher.

Annotation of Gaze Behaviour

Prior to annotation, the gaze data of each trio member were exported as video files. These were synchronised with each other, with one of the external camera recordings and the audio recording in Adobe Premier Pro (but only the eye-tracked data and audio recording are of importance for the current publication). Synchronisation was enabled through the claps that were executed at the beginning and end of each run-through and rehearsal period. In the resulting quadvid, the audio of the eyetracking videos and the external camera recording was disabled, leaving only the sound from the audio recorder. The synchronised data were exported at 25 fps and imported into the editing tool ELAN (

Wittenburg et al., 2006) to be annotated manually. The procedure thus far followed that of researchers in conversation analysis (see for instance

Holler & Kendrick, 2015; Jehoul, Brône, & Feyaerts, 2016).

Whenever the gaze cursor approached one of the partners, the annotator checked the location of the cursor frame by frame in order to determine the start and end of a partner-gaze. Partner-gazes were annotated as such when the gaze cursor fell onto the partner, including (parts of) the instrument, i.e., the clarinet, the violin and bow, and the keys of the piano. The annotated data set did not include moments where the gaze cursor fell near the partner. We will investigate these moments separately (in a later stage of our research), since these moments were clearly marked by a gaze shift toward the partner and therefore could be relevant for the study of gaze in ensemble interaction. Equally excluded from the annotated data set were 20 instances where it was unclear whether the gaze cursor pointed toward the partner due to overlap. These cases concerned some of the violinists. Due to the particular posture of violinists (who hold the instrument at the left side of the body) and their particular position in the trio (at the right side of the pianist), it was at times hard to distinguish gazes at the scroll of the violin and the violinist’s left hand from gazes at the pianist, who was seated ‘behind’ the scroll and left hand.

Annotation of the Sounding Music

Musical bars were annotated manually on an additional tier in ELAN by listening to the audio recording. This included checking each bar in an initial annotation in order to eliminate traces of sound that belonged to the previous bar. Since ensemble playing always involves some asynchrony, this means that in our annotations the new bar started once all three musicians had arrived there. We note that asynchronies were overall hard to detect by ear so, for our research purposes, this procedure seemed adequate.

After analysis of the score, score characteristics were annotated on additional tiers, using the audio recordings, in a similar fashion as described above. The score analysis identified structural features (e.g., section endings, phrase endings, and smaller phrase segments), role allocation (indicating which instrument held the melody, countermelody, or accompaniment), role switches (moments where role allocation changed), rests, entrances and exits.

Results

The analysis we report here is based on only the runthroughs, not the rehearsal periods, which will be analysed in a next stage of the project. Accidentally, all trios started their first rehearsal period with a complete run-through. This enabled us to compare four runthroughs across trios (one spontaneous run-through and three that were demanded by the researchers). They can be situated within the rehearsal schedule as follows: runthrough (1) as part of rehearsal period 1—remainder of rehearsal period 1—run-through (2)—rehearsal period 2—run-through (3)—run-through (4).

The musical fragment contained 103 bars of music. As was observed in our pilot study (

Vandemoortele et al., 2016), playing always starts after a mutual gaze and very often finishes with a cluster of gazes at, and after, the end of the musical piece. This points towards two gaze situations that are different from the situation where one is in the midst of playing. Similar observations were made in the current data set. Since we did not want to smooth out differences between individual musicians during playing, the last bar was eliminated from analysis, as were gazes before the start of the music.

Individual Musicians’ Amount of Gazing at the Partner

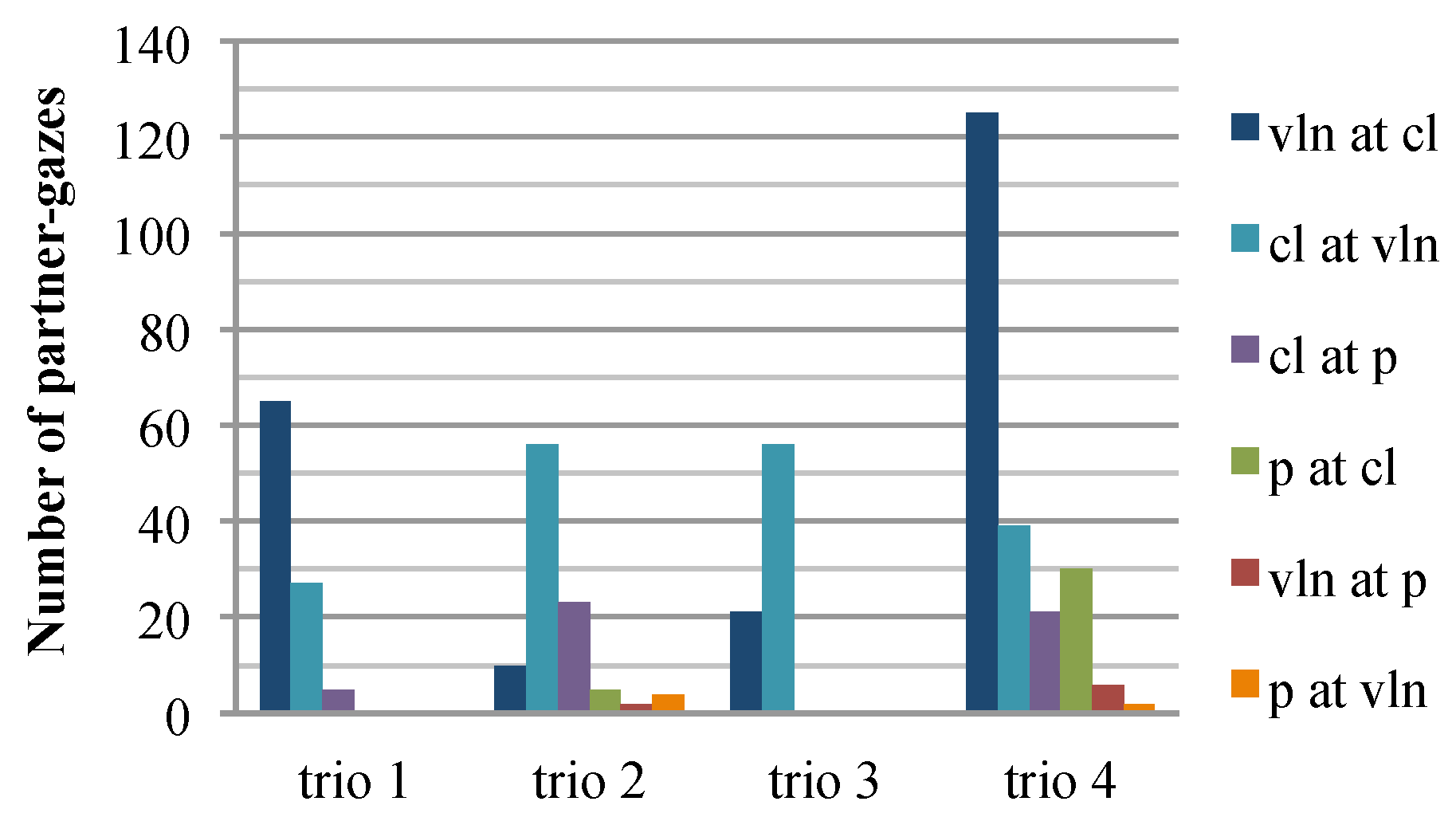

Gazing at the partner in a trio constellation can occur in six directions, in this particular study between violin and clarinet, between clarinet and piano, and between violin and piano, each time in two directions. As regards the number of partner-gazes in all four run-throughs in total (

Figure 2), gazes occurred in both directions between violin and clarinet in all trios. However, in trios 1 and 4 the violinist looked more often at the clarinetist than vice versa. In trios 2 and 3, the clarinetist looked more often at the violinist than vice versa. Regardless of the specific gaze direction, in each trio the highest amount of partnergazes happened between the violinist and the clarinetist.

Much less gazing at the partner can be seen in the interactions that involve the pianist. In trio 3, no one looked at the pianist and the pianist did not look at anyone. To be sure that, indeed, there was no visual interaction at all with the pianist in this trio, we checked whether gazes near (as opposed to on) the partner occurred. This was not the case. In trio 1, the clarinetist only looked five times at the pianist across all run-throughs. The pianist, again, did not look at anyone (nor gaze near the partner). There were no gazes at the pianist by the violinist (although overlaps occurred four times). In trios 2 and 4, gazes happened in all six directions. In both trios, gazes between violinist and pianist occurred only sporadically. Compared to that, the pianist received more than sporadic visual attention from the clarinetist in trio 2. In trio 4, both pianist and clarinetist looked more than sporadically at each other.

Distribution of Partner-Gazes Across Runthroughs

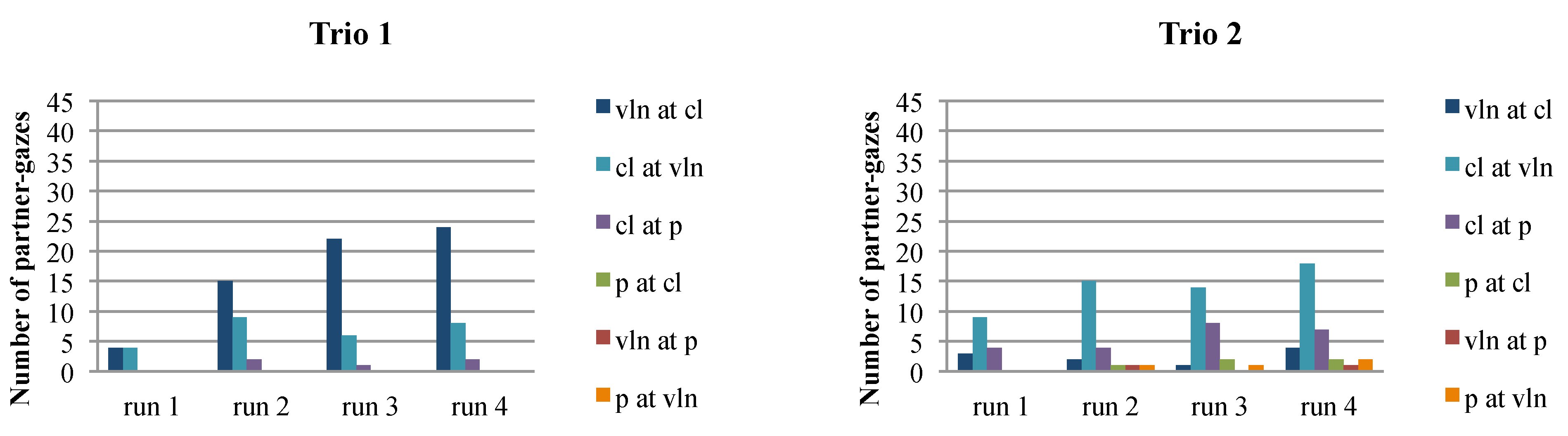

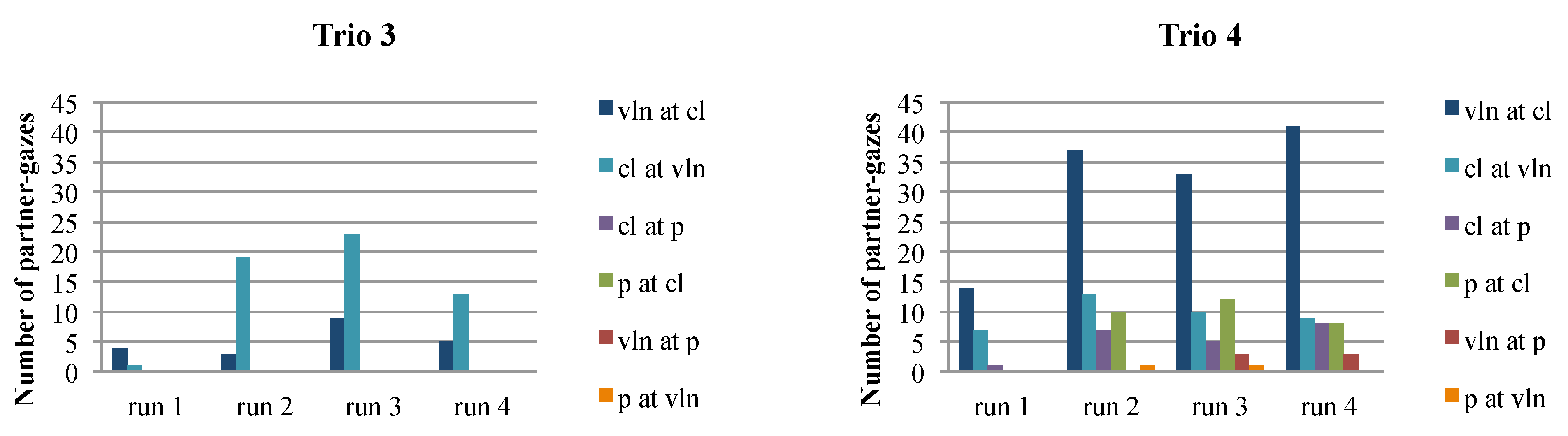

When we view each run-through separately, we see that gazing at the partner occurred least often in the first run-through (

Figure 3). In terms of the amount of gaze directions that were represented in this first run-through, most trios (1, 2 and 4) reveal that gazes occurred in fewer directions than in the other run-throughs. Also, pianists never looked at anyone during the first run-through. As is the case with the amount of gaze directions, the first runthrough tended to contain a lesser amount of partnergazes than the other run-throughs. More specifically, this was the case in trio 1 (in all directions), in trio 2 (in all directions, except from violin to clarinet), in trio 3 (except from violin to clarinet), and in trio 4 (in all directions).

While still attending to each gaze direction within each trio, we consider the possibility of a tendency across all four run-throughs. Some instances of the last runthrough contain the highest number of partner-gazes, namely in trio 1 (from violin to clarinet), trio 2 (from clarinet to violin), and trio 4 (from violin to clarinet). A steady increase of partner-gazes across run-throughs can be observed in trio 1 (from violin to clarinet) and—if one considers the total amount of gazes by a musician to both partners—in trio 2 (by the clarinetist and by the pianist). Finally, there are no musicians, whose gazing at the partner decreases across run-throughs and there are no musicians either, whose last run-through reveals the lowest number of partner-gazes.

Discussion

Individual Musicians’ Amount of Gazing at the Partner

As regards the amount of partner-gazes for all runthroughs in total, it was found that most visual interaction occurred between clarinetists and violinists, some between clarinetists and pianists, and no or only sporadic visual interaction between violinists and pianists. A possible explanation could lie in the particular musical excerpt used. Although the three instrumentalists overall are treated as equal partners (through an almost equal share in playing the melody and through passages that are polyphonic in nature), exchanges of the melody happen at a quicker pace between the violinist and clarinetist than with the pianist. According to our analysis, the violinist and clarinetist take over the melody each 12 times, whereas the pianist does so only 4 times but for a longer stretch of time.

Another plausible interpretation relates to the (natural) set-up of the musicians. Violinists and clarinetists only have to look up from the score in order to see each other, whereas a slight turn of the head to the right is needed for the clarinetists and pianists, and a turn of the body for the violinists and pianists. The same remark applies to the study by

King and Ginsborg (

2011), where (relatively) little observable gazing at the partner was found. In their study a head turn was necessary for the singers and pianists to see each other within central vision, similarly to the clarinet-piano interaction in our study. While a common explanation for both studies might be that pianists do not often look at their partners, the set-up was insufficiently accounted for in order to enable a meaningful interpretation of the frequency of partner-gazes.

While the musical fragment and the set-up in our study may have caused differences between instrumentalists within the trio constellation, a comparison across trios seems to defy attempts at generalisation, confirming our expectation that differences in gaze behaviour would occur regardless of the specific instrument played. Between clarinetists and violinists, both the scenario of clarinetists looking more often at violinists (Trios 2 and 3) and the opposite scenario (Trios 1 and 4) occurred. In addition, the absolute frequency of gazing at the partner differed widely: In trio 2 the violinist only looked 10 times at the clarinetist, whereas the violinist in trio 4 did so 125 times. Also, while in some trios gaze occurred in all six directions (Trios 2 and 4), in other trios certain gaze directions were not represented in any of the four run-throughs. In extreme cases, the pianist was not looked at by anyone and/or did not look at anyone. Individuals thus differed to the extent that they looked at both partners, only looked at one partner and ignored another, or never even used (foveal) gaze as a means of communication.

As for the pianists’ infrequent looking at the partner, the need for seeing the keys may have to be taken into account. However, the pianists reported only few technical difficulties that required close visual attention. Pianists 1 and 3 reported a jump and a glissando. Pianist 2 indicated only the glissando and pianist 4 did not report any technical difficulties. Pianist 3 pointed out additional difficulties that required looking at the keyboard in two passages, each six bars long. We also note that they may have experienced a higher need for reading the score than the other musicians because of having to read two staves, but our self-reports did not cover this issue.

A substantial difference as to how often musicians looked at their partner can also be seen in

Biasutti et al. (

2016). In daily conversations, too, participants’ amount of gazing at the partner may differ substantially. Specifically,

Kendon (

1967) found that the amount of time spent gazing at the partner varied from 28% to over 70% of the total duration of the analysed samples. We note that, in our data set, the total amount of time spent looking at the partner was much less, as can be expected since the musicians also had a reading task to fulfill, and varied between 0% and approximately 15% of the entire duration of a run-through. As for the musicians who did not look at all at their partner (pianists 1 and 3), their situation mirrors the experimental conditions in

Keller and Appel (

2010) and

Kawase (

2014b), whereby musicians could not see each other. The former study, using a fragment without metrical changes, found no remarkable effect of visual conditions on ensemble coordination, while in Kawase’s study there was an effect on the coordination of tempo changes. In line with

Keller and Appel’s (

2010) study, our musical fragment did not contain tempo changes or changes in metrical pulse. Consequently, it may be that pianists 1 and 3 did not consider gazing at the partner, at least via central vision, necessary for the sake of temporal coordination. In fact, when listening to the audio, no disturbing asynchronies could be heard in any of their trio’s performances (as was the case for all trios). Thus, the musical sounds themselves may have provided them with the necessary cues to synchronise. Evidence from a study by Vera, Chew, and Healey (2013) could support such an argument. While other studies have mentioned synchronisation and musical coordination as a possible role of gazing at the partner (

Davidson, 2012;

Davidson & Good, 2002;

Kawase, 2009; Morgan, Gunes, & Bryan-Kinns, 2015b;

Williamon & Davidson, 2002), this functionality has to be considered with respect to the musical characteristics and to competing coordination strategies that individuals may draw on to different extents.

Distribution of Partner-Gazes Across Runthroughs

We also looked at the distribution of partner-gazes across run-throughs to see whether a tendency could be observed. In the first run-through, partner-gazes were fewer and represented less gaze directions than in the other run-throughs. Since at this moment the musicians were still unfamiliar with each other and with the overall sound of the musical fragment, familiarity may well be a good explanation for the frequency of partner-gaze. Once the newness of the music and the social pressure to perform well in front of unfamiliar partners have been overcome, musicians are better able to let go of the notes on the score. Also, additional rehearsing after individual practice may have contributed to a diminished/diminishing need for close note-to-note reading in run-throughs 2, 3, and 4. Alternatively, the first runthrough was not an ‘official’ one in the sense that it was not requested by the researcher. As it was an inherent part of the rehearsal period, there may have been less pressure on the participants to perform well, causing a difference in their gaze behaviour. Last, we note that the first runthrough was played slower than the other run-throughs by trios 2 and 3, causing the conditions in which gaze occurred to be slightly different in the first run-through for those trios. (Specifically, all trios played run-throughs 2, 3 and 4 at performance tempo, with the total playing time varying between 1′45″ and 1′55″. Trios 1 and 4 stayed within this range for the first run-through, while in trios 2 and 3 the total playing time was 2′51″ and 2′20″ respectively.)

A tendency across all run-throughs was otherwise hard to find. The last run-through was found to contain the most partner-gazes in the case of a few musicians, while sometimes the amount of partner-gazes also increased across all run-throughs. The opposite—the last run-through containing the least partner-gazes or a decrease across run-throughs—was not found. Although this is little evidence for a general tendency, this somewhat confirms

Williamon and Davidson’s (

2002) suggestion that the increase of eye-contact at important momments for coordination should not be explained solely by a tendency for gaze to increasingly function as a coordinating device. By contrast, in our pilot study (

Vandemoortele et al., 2016), no tendency for partner-gaze to occur more frequently could be found in any of the three duos that were analysed. This may be due to the fact that the four run-throughs in the pilot study took place on two different days and constituted ‘snapshots’ in the middle of a rehearsal process, which was initiated by the musicians themselves before they were asked to take part in the study. The tendencies found in the current study may thus be typical for a very first rehearsal when performers do not know each other and try out a new piece for the first time.

Future Analysis and Research

The current analysis focused on the direction in which gazing at the partner occurred. Further analysis may delve deeper into the interactional patterning of gaze by the three musical partners. For instance, a gaze at the partner may or may not be returned by the addressee. As regards this patterning,

Kawase’s (

2014a) terminology may be useful, as he distinguishes between “mutual gaze” (gazing at the partner’s body) and the sub-category “eyecontact” (looking into each other’s eyes). Some authors fail to do so and use the term eye-contact without clearly defining it (

Blank & Davidson, 2007;

Ford & Davidson, 2003; Geeves, McIlwain, & Sutton, 2014;

Pennill & Timmers, 2017), which renders their results somewhat difficult to interpret. We also note that conversationanalytical research has much to offer regarding the roles and mechanisms of mutual gaze (see for instance

Argyle & Cook, 1976;

Bavelas et al., 2002). In our own data set, instances of mutual gazing were found, but eye-contact could not be distinguished separately from mutual gaze since the distance between the musicians was too large.

More complex patterns that do not take place in dyadic interactions may also be investigated.

Biasutti et al. (

2016) found instances of “multiple-direction eye contact”, i.e., gazing at more than one different musical partner in immediate succession. Research in non-musical domains on shared attention (Frischen, Bayliss, & Tipper, 2007) allows us to distinguish an additional gaze pattern, hitherto unreported in music studies, namely the simultaneous gazing by two persons at a third. Our own data set showed that both gaze patterns occurred, however only rarely. We add that studying bodily communication

multimodally would be a useful (and sought-after) way to advance the study of gaze patterning between partners. For instance, investigations into the mechanisms of interpersonal synchronisation could benefit from studying cueing gestures in relation to gaze (

Bishop & Goebl, 2017,

2018).

Our own research will proceed by studying the relationship between gaze and score characteristics. However, we foresee some challenges. First, the duration of the gazes at the partners may differ substantially: from as short as 40 ms (meaning that the cursor appeared twice in a row at more or less the same spot at an interval of 40 ms, which was the duration of a single frame during annotation) to around 2400 ms, although the mean duration for a partner-gaze was approximately 400 ms. Leaving aside that an explanation for such outliers would be interesting, long gazes have the ‘disadvantage’ that they cover a lot of the ongoing music and hence, become difficult to relate to just one moment in the ongoing stream of music. Furthermore, if a partner-gaze is at all related to a single moment in the score, it may precede or follow a score event. Alternatively, a gaze can be related to a passage of music without it being deliberately timed to precede or follow a certain moment within that passage. Indeed, the gaze patterning of a single musician may or may not change along contrasting sections in the music. Yet another scenario occurs when gazes occur quickly after one another, opening up the possibility that they may relate to the same moment in the music. This shows the complexity of gaze behaviour in our data set and indicates that data enabling access to the participants’ own processing of the score and their experience during playing would be valuable input for analysis. Thus, in addition to an analysis in relation to the score, we will analyse gaze in relation to the decision-making process during the rehearsal periods and the participants’ answers on the postperformance questionnaire. We hope that this will enable us to relate gaze to the musical task as perceived by the musicians and that this will prove a promising method to study the way gaze functions in ensemble playing. Meanwhile, the current study forms a complement to an artistic research project, in which the first author investigates gaze behaviour in her own trio (equally consisting of violin, clarinet and piano). It is expected that her selftuition will be strengthened by the results from the current observational enquiry.