A GIS Pipeline to Produce GeoAI Datasets from Drone Overhead Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. GIS Pipeline to Produce GeoAI Datasets

2.2. Raster Layers: Drone Imagery

2.2.1. Geometric Augmentation

- Rotation: small clockwise rotations of images; the suggested value is 10 degrees [22].

- Mirroring: a transformation in which the upper and lower, or the left and right, parts of an image exchange positions. These are commonly referred to as vertical and horizontal mirroring, respectively.

- Resizing or zooming: the magnification (zooming in) or reduction (zooming out) of an image or of certain parts of it.

- Cropping: the trimming of an image at a certain location.

- Deformation: an elastic change in the proportions of the image dimensions. It commonly occurs at the borders of orthomosaics [17].

- Overlapping: the repetition of part of an image in an adjacent image tile, measured as a percentage (%).

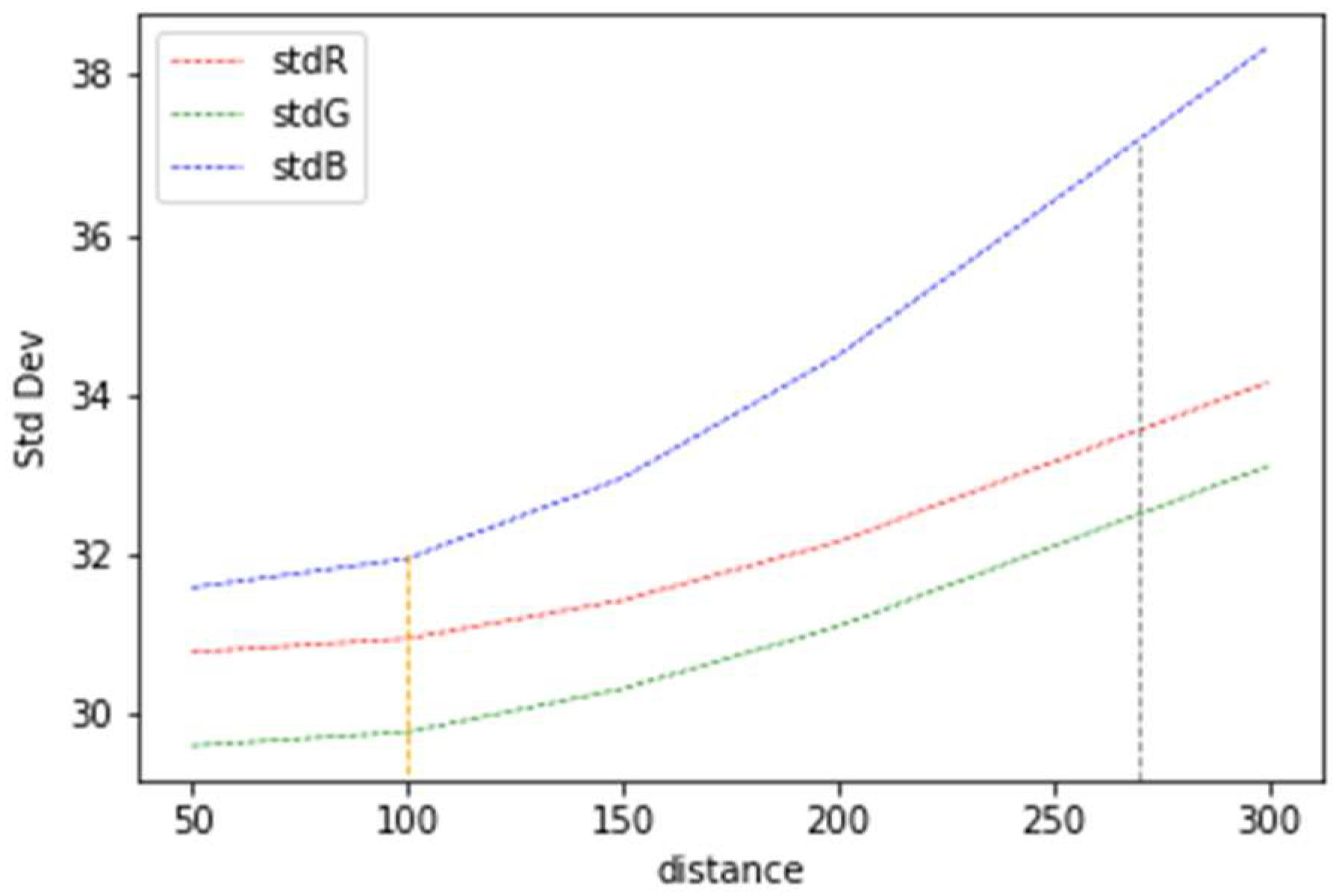
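The geometric augmentations listed above can be sketched with plain NumPy. This is a minimal illustration under stated assumptions, not the pipeline's own code: the function names (`rotate_clockwise`, `mirror`, `zoom_nearest`, `crop`, `tile_origins`) are hypothetical, all resampling is nearest-neighbour, and overlap is interpreted as the fraction of a tile shared with the next tile along one axis. Elastic deformation is omitted.

```python
import numpy as np


def rotate_clockwise(img: np.ndarray, angle_deg: float, fill=0) -> np.ndarray:
    """Nearest-neighbour clockwise rotation about the image centre; output keeps the input size."""
    h, w = img.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = np.deg2rad(angle_deg)
    rows, cols = np.indices((h, w))
    xo, yo = cols - cx, rows - cy  # output coords relative to the centre (y axis points down)
    # Inverse mapping: locate the source pixel that lands on each output pixel.
    xs = np.rint(xo * np.cos(t) + yo * np.sin(t) + cx).astype(int)
    ys = np.rint(-xo * np.sin(t) + yo * np.cos(t) + cy).astype(int)
    valid = (xs >= 0) & (xs < w) & (ys >= 0) & (ys < h)
    out = np.full_like(img, fill)  # corners not covered by the rotated image get `fill`
    out[rows[valid], cols[valid]] = img[ys[valid], xs[valid]]
    return out


def mirror(img: np.ndarray, mode: str) -> np.ndarray:
    """'vertical' swaps upper and lower parts; 'horizontal' swaps left and right parts."""
    if mode == "vertical":
        return img[::-1].copy()
    if mode == "horizontal":
        return img[:, ::-1].copy()
    raise ValueError(f"unknown mirroring mode: {mode!r}")


def zoom_nearest(img: np.ndarray, factor: float) -> np.ndarray:
    """Resize by `factor` (>1 zooms in, <1 zooms out) with nearest-neighbour sampling."""
    h, w = img.shape[:2]
    new_h, new_w = max(1, round(h * factor)), max(1, round(w * factor))
    r = np.minimum((np.arange(new_h) / factor).astype(int), h - 1)
    c = np.minimum((np.arange(new_w) / factor).astype(int), w - 1)
    return img[r][:, c]


def crop(img: np.ndarray, top: int, left: int, height: int, width: int) -> np.ndarray:
    """Trim the image to a window whose upper-left corner is (top, left)."""
    return img[top:top + height, left:left + width].copy()


def tile_origins(length: int, tile: int, overlap: float) -> list:
    """Start offsets of tiles along one axis; `overlap` is a fraction (0.5 means 50%)."""
    stride = max(1, int(round(tile * (1.0 - overlap))))
    # Any remainder at the end that does not fill a whole tile is dropped in this sketch.
    return list(range(0, max(length - tile, 0) + 1, stride))
```

For example, `rotate_clockwise(tile, 10)` applies the suggested 10-degree rotation, and `tile_origins(width, 512, 0.5)` gives the column offsets of 512-pixel tiles with 50% overlap. Nearest-neighbour sampling is used so that the same functions can be applied unchanged to class masks, where interpolated values would create invalid labels.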

2.2.2. Spectral Augmentation

- Brightness: the amount of light in an image. Increasing it raises the overall lightness of the image, for example, making dark colors lighter and light colors whiter (GIS Mapping Software, Location Intelligence and Spatial Analytics|Esri, www.esri.com (accessed on 2 May 2022)).

- Contrast: the difference between the darkest and lightest colors of an image. Adjusting contrast may produce a crisper image in which features are easier to distinguish (GIS Mapping Software, Location Intelligence and Spatial Analytics|Esri, www.esri.com (accessed on 2 May 2022)).

- Intensity or gamma value: the degree of contrast between the mid-level gray values of an image. It does not change the extreme pixel values (black or white); it only affects the middle values [21]. A gamma correction controls the brightness of an image. Gamma values lower than one decrease the contrast in darker areas and increase it in lighter areas. This changes the image without saturating the dark or light areas and brings out the details of lighter features, such as building roofs. Conversely, gamma values greater than one increase the contrast in darker areas, such as shadows cast by buildings or trees on roads. They also help bring out details in lower-elevation areas when working with elevation data such as a DSM or DTM. Gamma can modify not only the brightness but also the ratios of red to green to blue (GIS Mapping Software, Location Intelligence and Spatial Analytics|Esri, www.esri.com (accessed on 2 May 2022)).
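The three spectral adjustments above can be sketched for 8-bit imagery as follows. This is an illustrative sketch, not the authors' implementation; the function names and parameters are hypothetical. For gamma, the convention out = in^(1/γ) on values normalised to [0, 1] is an assumption chosen because it reproduces the behaviour described above (black and white stay fixed, γ > 1 stretches dark tones, γ < 1 stretches light tones). Other libraries define it the other way round; for instance, scikit-image's `exposure.adjust_gamma` uses out = in^γ.

```python
import numpy as np


def adjust_brightness(img: np.ndarray, delta: float) -> np.ndarray:
    """Add `delta` to every pixel; positive values lighten, negative values darken."""
    out = img.astype(np.float64) + delta
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


def adjust_contrast(img: np.ndarray, factor: float) -> np.ndarray:
    """Scale deviations from the image mean (over all bands).

    factor > 1 widens the gap between dark and light values; factor < 1 narrows it.
    """
    x = img.astype(np.float64)
    mean = x.mean()
    return np.clip(np.rint((x - mean) * factor + mean), 0, 255).astype(np.uint8)


def adjust_gamma(img: np.ndarray, gamma: float) -> np.ndarray:
    """Apply out = in ** (1 / gamma) on values normalised to [0, 1] (assumed convention).

    Black (0) and white (255) map to themselves; only mid-level values move.
    """
    x = img.astype(np.float64) / 255.0
    return np.clip(np.rint(255.0 * x ** (1.0 / gamma)), 0, 255).astype(np.uint8)
```

Because `adjust_gamma` acts on each band independently, a non-neutral pixel generally changes its red-to-green-to-blue ratios as well as its brightness, which is consistent with the last sentence of the gamma description.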

2.2.3. Data Fusion

- Height: the DSM, which contains the height of objects in an image, can be fused with the orthomosaic by adding it either algebraically or logarithmically to each of the red (R), green (G), and blue (B) bands, as stated in (1) and (2). Another option is to replace one of the bands with the DSM, as in (3).

- Index: one of the RGB bands of a drone orthomosaic may be replaced with the values of an index. The Visible Atmospherically Resistant Index (VARI) was developed by [29], based on measurements of corn and soybean crops in the midwestern United States, to estimate the fraction of vegetation in a scene with low sensitivity to atmospheric effects in the visible portion of the spectrum. This matches the conditions of low-altitude drone imagery [26]. Equation (5), VARI = (G − R) / (G + R − B), allows the calculation of the VARI for an orthomosaic using the red, green, and blue bands of an image.
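Both fusion options can be sketched with NumPy. This is a minimal sketch under loud assumptions. The exact forms of (1) and (2) are not reproduced in this section, so `fuse_height` assumes they add the DSM height, or its logarithm, to every band and then rescale to 8 bits. `replace_band` is an assumed reading of (3). `vari` uses the standard definition VARI = (G − R) / (G + R − B) from [29]. All function names, the relative-height offset, and the 0 to 255 rescaling are hypothetical choices, not the authors' code.

```python
import numpy as np


def to_uint8(arr, lo=None, hi=None) -> np.ndarray:
    """Linearly rescale values in [lo, hi] to 0-255 (defaults: data min/max); NaN becomes 0."""
    a = np.asarray(arr, dtype=np.float64)
    lo = np.nanmin(a) if lo is None else lo
    hi = np.nanmax(a) if hi is None else hi
    if hi <= lo:
        return np.zeros(a.shape, dtype=np.uint8)
    scaled = (np.clip(a, lo, hi) - lo) / (hi - lo) * 255.0
    return np.rint(np.nan_to_num(scaled, nan=0.0)).astype(np.uint8)


def fuse_height(rgb: np.ndarray, dsm: np.ndarray, mode: str = "add") -> np.ndarray:
    """Assumed reading of (1)/(2): add height (or log height) to every band, then rescale."""
    h = dsm.astype(np.float64) - np.nanmin(dsm)  # heights relative to the lowest DSM cell
    if mode == "log":
        h = np.log1p(h)
    elif mode != "add":
        raise ValueError(f"unknown fusion mode: {mode!r}")
    fused = rgb.astype(np.float64) + h[..., np.newaxis]  # broadcast height over R, G, B
    return to_uint8(fused)  # one shared rescale for all three bands


def replace_band(rgb: np.ndarray, layer, band: int, lo=None, hi=None) -> np.ndarray:
    """Assumed reading of (3): swap one band (0=R, 1=G, 2=B) for a rescaled layer."""
    out = rgb.copy()
    out[..., band] = to_uint8(layer, lo, hi)
    return out


def vari(rgb: np.ndarray, eps: float = 1e-9) -> np.ndarray:
    """VARI = (G - R) / (G + R - B); NaN where the denominator is (near) zero."""
    r, g, b = (rgb[..., i].astype(np.float64) for i in range(3))
    denom = g + r - b
    out = np.full(denom.shape, np.nan)
    ok = np.abs(denom) > eps
    out[ok] = (g[ok] - r[ok]) / denom[ok]
    return out
```

For example, `replace_band(rgb, dsm, 2)` yields an R, G, DSM stack and `replace_band(rgb, vari(rgb), 1, lo=-1.0, hi=1.0)` yields an R, VARI, B stack. Mapping these to the dataset names RGDSM and RVARIB is an inference from the names, not something this section states. Clipping VARI to [−1, 1] before rescaling is also an assumed choice, since the index can leave that range when G + R − B is small.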

2.3. Vector Layers: Ground Truth

Vector Masks, Raster Masks, and Color Masks

2.4. Image Tessellation, Imbalance Check, Pairing, and Splitting

3. Results

3.1. Method for Producing Primitive Masks

3.2. Dataset Production

3.3. Dataset Evaluation

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

1. Song, Y.; Huang, B.; Cai, J.; Chen, B. Dynamic Assessments of Population Exposure to Urban Greenspace Using Multi-Source Big Data. Sci. Total Environ. 2018, 634, 1315–1325.

2. Ballesteros, J.R.; Sanchez-Torres, G.; Branch, J.W. Automatic Road Extraction in Small Urban Areas of Developing Countries Using Drone Imagery and Image Translation. In Proceedings of the 2021 2nd Sustainable Cities Latin America Conference (SCLA), Online, 25–27 August 2021; pp. 1–6.

3. Vanschoren, J. Aerial Imagery Pixel-Level Segmentation. Available online: https://www.semanticscholar.org/paper/Aerial-Imagery-Pixel-level-Segmentation-Aerial-Vanschoren/7dadc3affe05783f2b49282c06a2aa6effbd4267 (accessed on 26 February 2022).

4. Gao, X.; Sun, X.; Zhang, Y.; Yan, M.; Xu, G.; Sun, H.; Jiao, J.; Fu, K. An End-to-End Neural Network for Road Extraction From Remote Sensing Imagery by Multiple Feature Pyramid Network. IEEE Access 2018, 6, 39401–39414.

5. Ng, V.; Hofmann, D. Scalable Feature Extraction with Aerial and Satellite Imagery. In Proceedings of the 17th Python in Science Conference (SCIPY 2018), Austin, TX, USA, 9–15 July 2018; pp. 145–151.

6. Perri, D.; Simonetti, M.; Gervasi, O. Synthetic Data Generation to Speed-Up the Object Recognition Pipeline. Electronics 2022, 11, 2.

7. Ratner, A.; Bach, S.H.; Ehrenberg, H.; Fries, J.; Wu, S.; Ré, C. Snorkel: Rapid Training Data Creation with Weak Supervision. In Proceedings of the VLDB Endowment. International Conference on Very Large Data Bases, Munich, Germany, 28 August–1 September 2017; Volume 11, p. 269.

8. Golubev, A.; Chechetkin, I.; Parygin, D.; Sokolov, A.; Shcherbakov, M. Geospatial Data Generation and Preprocessing Tools for Urban Computing System Development. Procedia Comput. Sci. 2016, 101, 217–226.

9. Al-Azizi, J.I.; Shafri, H.Z.M.; Hashim, S.J.B.; Mansor, S.B. DeepAutoMapping: Low-Cost and Real-Time Geospatial Map Generation Method Using Deep Learning and Video Streams. Earth Sci. Inf. 2020, 15, 1481–1494.

10. Abdollahi, A.; Pradhan, B.; Shukla, N.; Chakraborty, S.; Alamri, A. Deep Learning Approaches Applied to Remote Sensing Datasets for Road Extraction: A State-Of-The-Art Review. Remote Sens. 2020, 12, 1444.

11. Zhang, Q.; Qin, R.; Huang, X.; Fang, Y.; Liu, L. Classification of Ultra-High Resolution Orthophotos Combined with DSM Using a Dual Morphological Top Hat Profile. Remote Sens. 2015, 7, 16422–16440.

12. Abdollahi, A.; Pradhan, B.; Alamri, A. RoadVecNet: A New Approach for Simultaneous Road Network Segmentation and Vectorization from Aerial and Google Earth Imagery in a Complex Urban Set-Up. GISci. Remote Sens. 2021, 58, 1151–1174.

13. Yang, W.; Gao, X.; Zhang, C.; Tong, F.; Chen, G.; Xiao, Z. Bridge Extraction Algorithm Based on Deep Learning and High-Resolution Satellite Image. Sci. Program. 2021, 2021, e9961963.

14. Gong, Z.; Xu, L.; Tian, Z.; Bao, J.; Ming, D. Road Network Extraction and Vectorization of Remote Sensing Images Based on Deep Learning. In Proceedings of the 2020 IEEE 5th Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 12–14 June 2020; pp. 303–307.

15. Ballesteros, J.R.; Sanchez-Torres, G.; Branch-Bedoya, J.W. HAGDAVS: Height-Augmented Geo-Located Dataset for Detection and Semantic Segmentation of Vehicles in Drone Aerial Orthomosaics. Data 2022, 7, 50.

16. Avola, D.; Pannone, D. MAGI: Multistream Aerial Segmentation of Ground Images with Small-Scale Drones. Drones 2021, 5, 111.

17. Kameyama, S.; Sugiura, K. Effects of Differences in Structure from Motion Software on Image Processing of Unmanned Aerial Vehicle Photography and Estimation of Crown Area and Tree Height in Forests. Remote Sens. 2021, 13, 626.

18. Heffels, M.; Vanschoren, J. Aerial Imagery Pixel-Level Segmentation. arXiv 2020, arXiv:2012.02024.

19. Shermeyer, J.; Etten, A. The Effects of Super-Resolution on Object Detection Performance in Satellite Imagery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019; pp. 1432–1441.

20. Weir, N.; Lindenbaum, D.; Bastidas, A.; Etten, A.; Kumar, V.; Mcpherson, S.; Shermeyer, J.; Tang, H. SpaceNet MVOI: A Multi-View Overhead Imagery Dataset. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 992–1001.

21. Buslaev, A.; Iglovikov, V.I.; Khvedchenya, E.; Parinov, A.; Druzhinin, M.; Kalinin, A.A. Albumentations: Fast and Flexible Image Augmentations. Information 2020, 11, 125.

22. Blaga, B.-C.-Z.; Nedevschi, S. A Critical Evaluation of Aerial Datasets for Semantic Segmentation. In Proceedings of the 2020 IEEE 16th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 3–5 September 2020; pp. 353–360.

23. Long, Y.; Xia, G.-S.; Li, S.; Yang, W.; Yang, M.Y.; Zhu, X.X.; Zhang, L.; Li, D. On Creating Benchmark Dataset for Aerial Image Interpretation: Reviews, Guidances, and Million-AID. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4205–4230.

24. Song, A.; Kim, Y. Semantic Segmentation of Remote-Sensing Imagery Using Heterogeneous Big Data: International Society for Photogrammetry and Remote Sensing Potsdam and Cityscape Datasets. ISPRS Int. J. Geo-Inf. 2020, 9, 601.

25. Xu, Y.; Xie, Z.; Feng, Y.; Chen, Z. Road Extraction from High-Resolution Remote Sensing Imagery Using Deep Learning. Remote Sens. 2018, 10, 1461.

26. Eng, L.S.; Ismail, R.; Hashim, W.; Baharum, A. The Use of VARI, GLI, and VIgreen Formulas in Detecting Vegetation In Aerial Images. IJTech 2019, 10, 1385.

27. López-Tapia, S.; Ruiz, P.; Smith, M.; Matthews, J.; Zercher, B.; Sydorenko, L.; Varia, N.; Jin, Y.; Wang, M.; Dunn, J.B.; et al. Machine Learning with High-Resolution Aerial Imagery and Data Fusion to Improve and Automate the Detection of Wetlands. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102581.

28. Sun, W.; Wang, R. Fully Convolutional Networks for Semantic Segmentation of Very High Resolution Remotely Sensed Images Combined With DSM. IEEE Geosci. Remote Sens. Lett. 2018, 15, 474–478.

29. Gitelson, A.A.; Stark, R.; Grits, U.; Rundquist, D.; Kaufman, Y.; Derry, D. Vegetation and Soil Lines in Visible Spectral Space: A Concept and Technique for Remote Estimation of Vegetation Fraction. Int. J. Remote Sens. 2002, 23, 2537–2562.

30. Wang, S.; Liu, W.; Wu, J.; Cao, L.; Meng, Q.; Kennedy, P.J. Training Deep Neural Networks on Imbalanced Data Sets. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 4368–4374.

31. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

| Settlement | Geographic Extent (Lon_min; Lat_min) (Lon_max; Lat_max) | Flight Height (m) | GSD (cm/px) | Size (Columns × Rows, px) | Area (ha) |
|---|---|---|---|---|---|
| El Retiro, Ant. | (−75.5057858485094; 6.05456672000301) (−75.4995986448169; 6.06544416605448) | 120 | 7 | 11,276 × 16,914 | 82.9 |
| La Ceja, Ant. | (−75.4379001836735; 6.03130980894862) (−75.4332962779884; 6.0342695019348) | 80 | 5.5 | 12,162 × 10,181 | 16.8 |
| Prado_largo, Ant. | (−75.5311888383421; 6.15636546472326) (−75.5226877620765; 6.16018600622437) | 90 | 5.7 | 20,826 × 16,829 | 40 |
| Rionegro, Ant. | (−75.3809074659528; 6.13947401033623) (−75.3760197352806; 6.14988050247727) | 80 | 5.5 | 8,847 × 18,895 | 62.7 |
| Andes, Ant. | (−75.8893603823231; 5.64578355169507) (−75.8715703129762; 5.67187862641961) | 180 | 8.6 | 20,744 × 30,428 | 572.0 |

| Metric | RGDSM | RVARIB | HRGB | HRVARIB |
|---|---|---|---|---|
| mIoU | 0.725 | 0.549 | 0.621 | 0.508 |
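The values above are reported as mean Intersection over Union (mIoU). For readers reproducing this kind of evaluation, a minimal NumPy sketch of mIoU computed from a confusion matrix follows. The function name and the choice to skip classes absent from both the ground truth and the prediction are assumptions; this is not necessarily the evaluation code behind the table.

```python
import numpy as np


def mean_iou(y_true: np.ndarray, y_pred: np.ndarray, num_classes: int) -> float:
    """Mean Intersection over Union over classes present in the ground truth or the prediction."""
    t = np.asarray(y_true, dtype=np.int64).ravel()
    p = np.asarray(y_pred, dtype=np.int64).ravel()
    # Confusion matrix: rows are ground-truth classes, columns are predicted classes.
    cm = np.bincount(num_classes * t + p, minlength=num_classes ** 2).reshape(num_classes, num_classes)
    tp = np.diag(cm).astype(np.float64)
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp  # TP + FP + FN per class
    present = union > 0  # classes absent from both masks are skipped (an assumed convention)
    return float(np.mean(tp[present] / union[present]))
```

For two classes where one of four ground-truth pixels of class 0 is predicted as class 1, the per-class IoUs are 1/2 and 2/3, so the mIoU is their mean, about 0.583.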

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ballesteros, J.R.; Sanchez-Torres, G.; Branch-Bedoya, J.W. A GIS Pipeline to Produce GeoAI Datasets from Drone Overhead Imagery. ISPRS Int. J. Geo-Inf. 2022, 11, 508. https://doi.org/10.3390/ijgi11100508
