Assessment of Digital Pathology Imaging Biomarkers Associated with Breast Cancer Histologic Grade

Abstract

1. Introduction

2. Methods

2.1. Patients and Dataset

2.2. Specimen Preparation

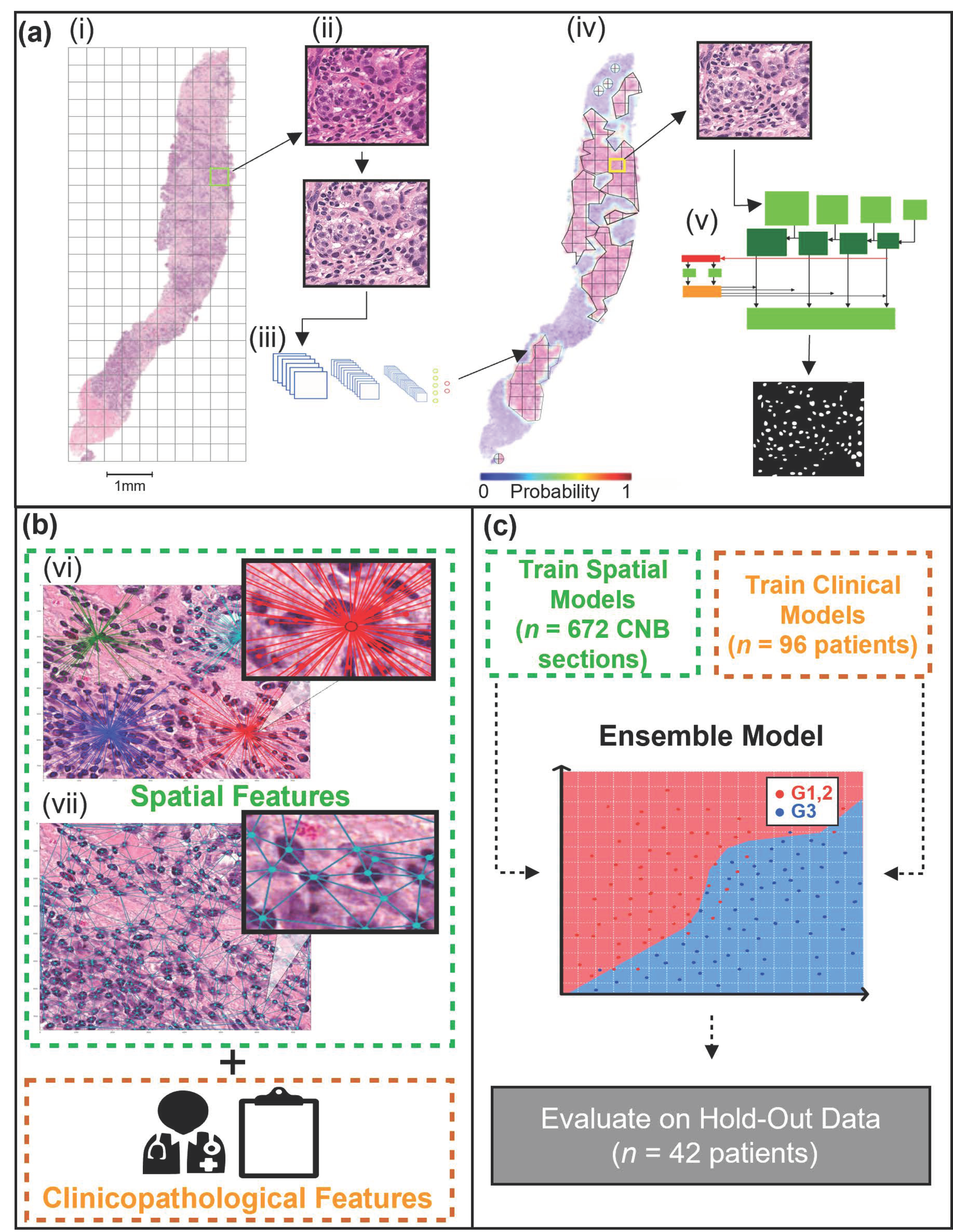

2.3. WSI Pre-Processing and Tumor Bed Identification

2.4. Instance Segmentation Network

2.5. Spatial Feature Extraction

2.6. Machine Learning

2.7. Software and Hardware

3. Results

3.1. Clinicopathological Characteristics

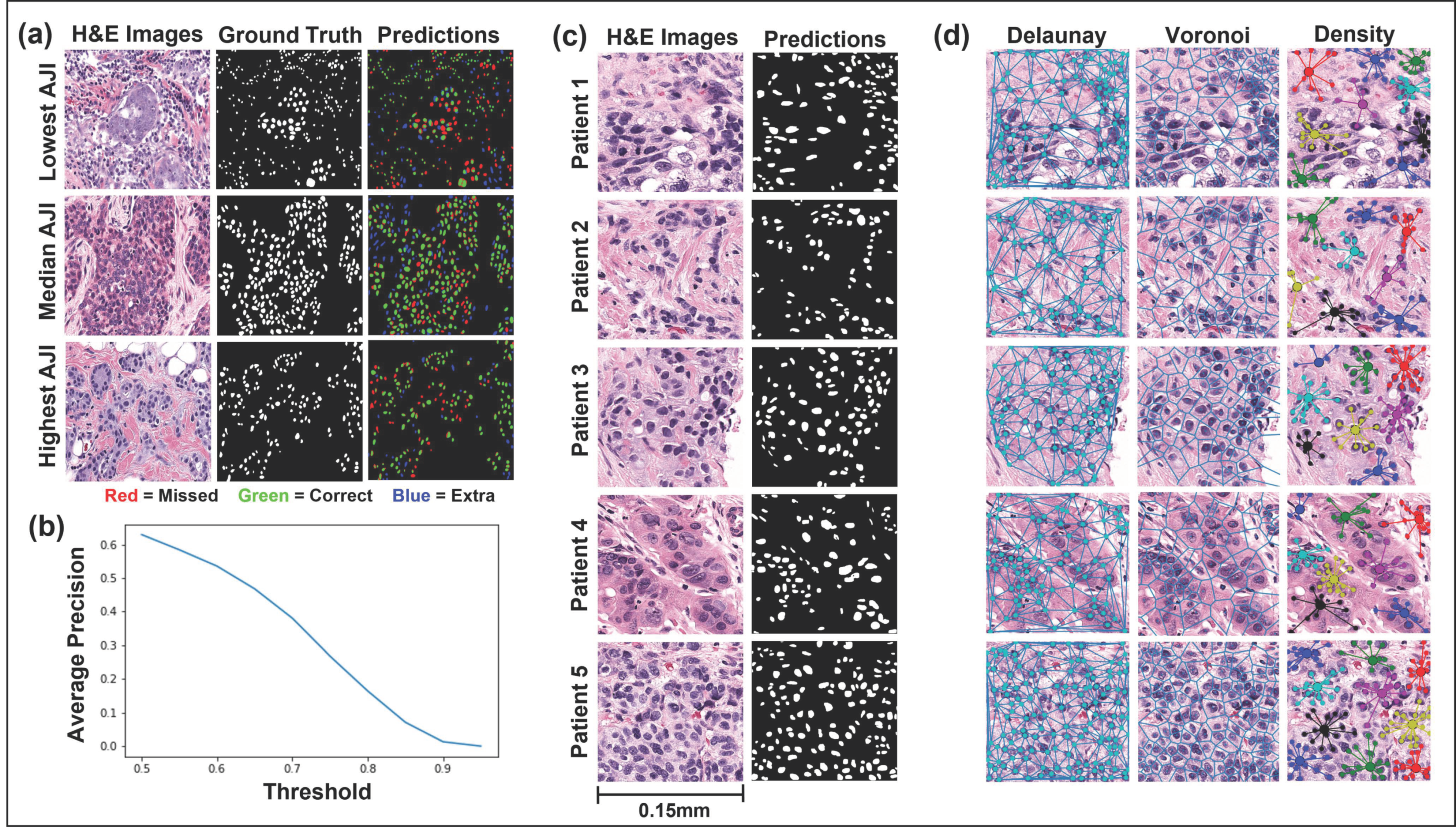

3.2. Mask R-CNN Segmentation

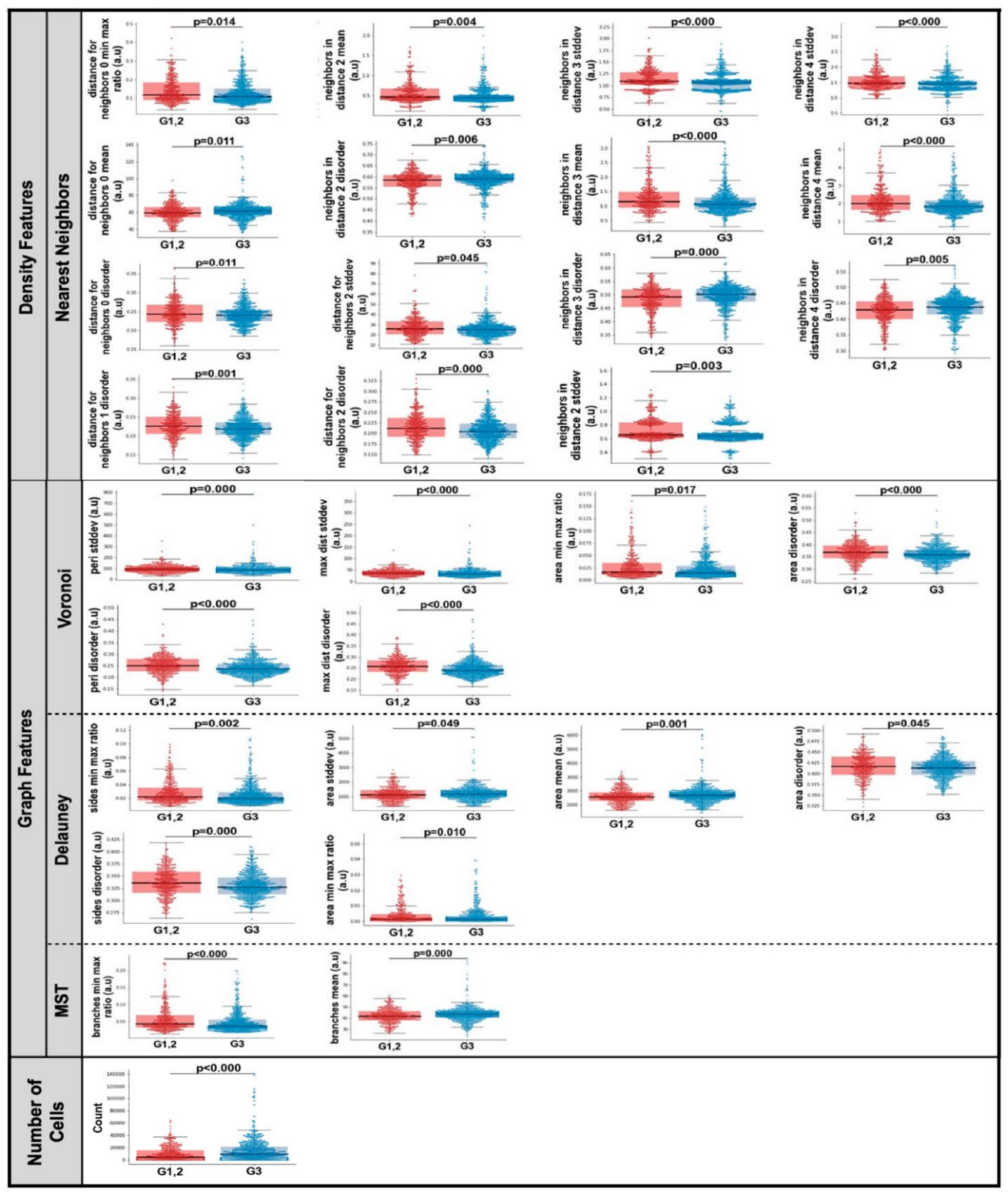

3.3. Computationally Derived Spatial Features

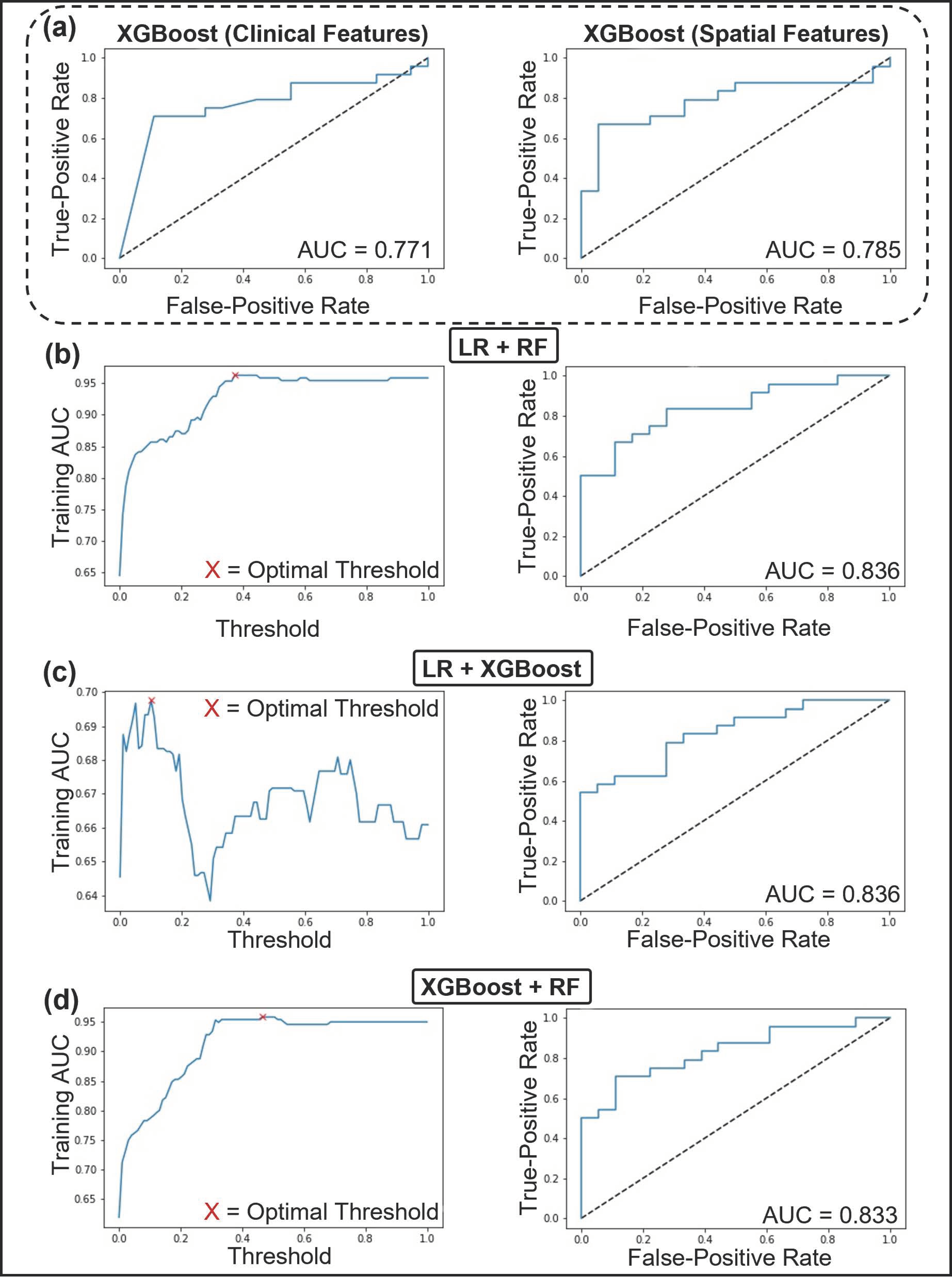

3.4. Predictive Modeling Using Machine Learning

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Patient Clinicopathological Characteristics (Study Cohort, n = 138) | G1, 2 (n = 58), n (%) | G3 (n = 80), n (%) | p-Value |
|---|---|---|---|
| Age | | | |
| Mean Age ± SD (y) | 51.6 ± 10.8 | 50.3 ± 9.2 | 0.423 |
| ≤50 years | 23 (39.7) | 39 (48.8) | 0.289 |
| >50 years | 35 (60.3) | 41 (51.3) | |
| Menopausal Status | | | |
| Pre | 30 (51.7) | 38 (47.5) | 0.624 |
| Post | 28 (48.3) | 42 (52.5) | |
| Tumor Laterality | | | |
| Left | 25 (43.1) | 38 (47.5) | 0.609 |
| Right | 33 (56.9) | 42 (52.5) | |
| Receptor Status | | | |
| Median ER ± SD (%) | 90 ± 44.1 | 0 ± 43.9 | <0.001 |
| ER-positive | 43 (74.1) | 34 (42.5) | <0.001 |
| ER-negative | 15 (25.9) | 46 (57.5) | |
| Median PR ± SD (%) | 4 ± 42.8 | 0 ± 36.3 | 0.013 |
| PR-positive | 35 (60.3) | 30 (37.5) | 0.008 |
| PR-negative | 23 (39.7) | 50 (62.5) | |
| HER2-positive | 26 (44.8) | 39 (48.8) | 0.649 |
| HER2-negative | 32 (55.2) | 41 (51.3) | |
| Tumor Size | | | |
| Mean Size ± SD (mm) | 48.3 ± 27.8 | 44.5 ± 25.1 | 0.445 |
| Clinical T Stage | | | |
| 1 | 5 (8.6) | 4 (5.0) | 0.314 |
| 2 | 32 (55.2) | 54 (67.5) | |
| 3 | 21 (36.2) | 22 (27.5) | |
| 4 | 0 (0.0) | 0 (0.0) | |
| Clinical N Stage | | | |
| 0 | 12 (20.7) | 28 (35.0) | 0.183 |
| 1 | 40 (69.0) | 46 (57.5) | |
| 2 | 6 (10.3) | 6 (7.5) | |
| 3 | 0 (0.0) | 0 (0.0) | |
| Node Status | | | |
| Node-positive | 46 (79.3) | 52 (65.0) | 0.067 |
| Node-negative | 12 (20.7) | 28 (35.0) | |
| Inflammatory Breast Cancer | | | |
| Yes | 5 (8.6) | 8 (10.0) | 0.784 |
| No | 53 (91.4) | 72 (90.0) | |
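
The table does not say which statistical test produced its p-values. As a rough check, and assuming a Pearson chi-square test without continuity correction was used for the two-category rows (an assumption the table itself does not confirm), the age-group row can be recomputed with the Python standard library. The helper name `chi_square_2x2` is hypothetical and introduced only for this sketch.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square test (no continuity correction) for the table
    [[a, b], [c, d]]. Returns (statistic, p_value) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    # Closed-form statistic for a 2x2 contingency table.
    stat = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # Survival function of chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Age row of the table: <=50 years vs >50 years, split by grade group.
#              G1,2   G3
# <=50 years    23    39
# >50 years     35    41
stat, p = chi_square_2x2(23, 39, 35, 41)
print(f"chi2 = {stat:.3f}, p = {p:.3f}")
```

Under this assumption the result rounds to the 0.289 listed for the age-group row. Rows with more than two categories, such as clinical T and N stage, and the continuous variables would need a different test and are not covered by this sketch.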

| Model | Feature Type | Feature 1 | Feature 2 | Feature 3 | Feature 4 | Feature 5 | Feature 6 | Feature 7 | Feature 8 | Feature 9 | Feature 10 | ƒ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Naïve Bayes | Spatial | V max dist disorder | MST branches min max ratio | | | | | | | | | 33 |
| | Clinical | | | | | | | | | | | |
| K-NN | Spatial | # of nuclei | V max dist disorder | ρ neighbors in dist 1 disorder | ρ neighbors in dist 4 stddev | ρ min | | | | | | 16 |
| | Clinical | Age (years) | ER (%) | PR (%) | HER2 status | | | | | | | 63 |
| LR | Spatial | V max dist stddev | V max dist disorder | MST branches min max ratio | ρ neighbors in dist 1 disorder | ρ dist for neighbors 2 min max ratio | | | | | | 43 |
| | Clinical | ER (%) | PR (%) | | | | | | | | | 81 |
| RF | Spatial | # of nuclei | V max dist stddev | V max dist disorder | ρ neighbors in dist 1 disorder | ρ neighbors in dist 4 stddev | ρ dist for neighbors 2 min max ratio | ρ dist for neighbors 2 disorder | ρ min | ρ med | | 15 |
| | Clinical | PR (%) | HER2 status | | | | | | | | | 62 |
| SVM | Spatial | # of nuclei | V max dist disorder | MST branches min max ratio | ρ neighbors in dist 1 disorder | ρ neighbors in dist 4 stddev | ρ dist for neighbors 2 disorder | ρ min | ρ med | | | 25 |
| | Clinical | Age (years) | ER (%) | PR (%) | HER2 status | | | | | | | 84 |
| XGBoost | Spatial | # of nuclei | V max dist stddev | V max dist disorder | MST branches min max ratio | ρ neighbors in dist 1 disorder | ρ neighbors in dist 4 stddev | ρ dist for neighbors 2 min max ratio | ρ dist for neighbors 2 disorder | ρ min | ρ med | 7 |
| | Clinical | ER (%) | PR (%) | | | | | | | | | 22 |

| Feature Set | Model | Training Mean AUC ± SD | Training Mean ACC ± SD (%) | Testing AUC | Testing Acc (%) | Testing Sn (%) | Testing Sp (%) | Testing Prev (%) | Testing FNR (%) | Testing FPR (%) | Testing PPV (%) | Testing NPV (%) | Testing FDR (%) | Testing FOR (%) | Testing LR+ | Testing LR- | Testing DOR | Testing f1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Clinical | K-NN | 0.66 ± 0.16 | 62 ± 14 | 0.62 | 64.29 | 75.00 | 50.00 | 57.14 | 25.00 | 50.00 | 66.67 | 60.00 | 33.33 | 40.00 | 1.50 | 0.50 | 3.00 | 0.71 |
| | LR | 0.66 ± 0.22 | 64 ± 19 | 0.77 | 73.81 | 75.00 | 72.22 | 57.14 | 25.00 | 27.78 | 78.26 | 68.42 | 21.74 | 31.58 | 2.70 | 0.35 | 7.80 | 0.77 |
| | RF | 0.68 ± 0.15 | 59 ± 14 | 0.56 | 66.67 | 83.33 | 44.44 | 57.14 | 16.67 | 55.56 | 66.67 | 66.67 | 33.33 | 33.33 | 1.50 | 0.38 | 4.00 | 0.74 |
| | SVM | 0.64 ± 0.25 | 52 ± 16 | 0.50 | 66.67 | 75.00 | 55.56 | 57.14 | 25.00 | 44.44 | 69.23 | 62.50 | 30.77 | 37.50 | 1.69 | 0.45 | 3.75 | 0.72 |
| | XGBoost | 0.63 ± 0.23 | 59 ± 16 | 0.77 | 73.81 | 75.00 | 72.22 | 57.14 | 25.00 | 27.78 | 78.26 | 68.42 | 21.74 | 31.58 | 2.70 | 0.35 | 7.80 | 0.77 |
| Spatial | Naïve Bayes | 0.65 ± 0.07 | 59 ± 6 | 0.68 | 64.29 | 87.50 | 33.33 | 57.14 | 12.50 | 66.67 | 63.64 | 66.67 | 36.36 | 33.33 | 1.31 | 0.38 | 3.50 | 0.74 |
| | K-NN | 0.87 ± 0.03 | 76 ± 3 | 0.64 | 66.67 | 66.67 | 66.67 | 57.14 | 33.33 | 33.33 | 72.73 | 60.00 | 27.27 | 40.00 | 2.00 | 0.50 | 4.00 | 0.70 |
| | LR | 0.67 ± 0.04 | 62 ± 5 | 0.73 | 66.67 | 62.50 | 72.22 | 57.14 | 37.50 | 27.78 | 75.00 | 59.09 | 25.00 | 40.91 | 2.25 | 0.52 | 4.33 | 0.68 |
| | RF | 0.88 ± 0.04 | 79 ± 5 | 0.75 | 64.29 | 79.17 | 44.44 | 57.14 | 20.83 | 55.56 | 65.52 | 61.54 | 34.48 | 38.46 | 1.43 | 0.47 | 3.04 | 0.72 |
| | SVM | 0.79 ± 0.06 | 77 ± 5 | 0.69 | 69.05 | 75.00 | 61.11 | 57.14 | 25.00 | 38.89 | 72.00 | 64.71 | 28.00 | 35.29 | 1.93 | 0.41 | 4.71 | 0.73 |
| | XGBoost | 0.88 ± 0.03 | 79 ± 3 | 0.78 | 71.43 | 87.50 | 50.00 | 57.14 | 12.50 | 50.00 | 70.00 | 75.00 | 30.00 | 25.00 | 1.75 | 0.25 | 7.00 | 0.78 |
| Ensemble | LR + RF | 0.96 ± 0.12 | 88 ± 14 | 0.84 | 78.57 | 83.33 | 72.22 | 57.14 | 16.67 | 27.78 | 80.00 | 76.47 | 20.00 | 23.53 | 3.00 | 0.23 | 13.00 | 0.82 |
| | LR + XGBoost | 0.70 ± 0.23 | 56 ± 14 | 0.84 | 73.81 | 75.00 | 72.22 | 57.14 | 25.00 | 27.78 | 78.26 | 68.42 | 21.74 | 31.58 | 2.70 | 0.35 | 7.80 | 0.77 |
| | XGBoost + RF | 0.96 ± 0.13 | 92 ± 13 | 0.83 | 73.81 | 87.50 | 55.56 | 57.14 | 12.50 | 44.44 | 72.41 | 76.92 | 27.59 | 23.08 | 1.97 | 0.23 | 8.75 | 0.79 |
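
All testing-set columns other than AUC follow from a single 2×2 confusion matrix, so each row can be checked for internal consistency. The sketch below uses the standard definitions of these metrics. The counts for the LR + RF ensemble (TP = 20, FN = 4, TN = 13, FP = 5) are not given in the table; they are back-calculated from its percentages on the assumption of a 42-sample test set. `Confusion` and `diagnostic_metrics` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    tp: int  # grade 3 predicted as grade 3
    fn: int  # grade 3 predicted as grade 1-2
    tn: int  # grade 1-2 predicted as grade 1-2
    fp: int  # grade 1-2 predicted as grade 3


def diagnostic_metrics(c: Confusion) -> dict[str, float]:
    """Derive the table's testing-set metrics (as fractions) from counts.

    Assumes no denominator is zero, i.e. both classes are present and the
    classifier is not perfect on either class.
    """
    n = c.tp + c.fn + c.tn + c.fp
    sn = c.tp / (c.tp + c.fn)   # sensitivity
    sp = c.tn / (c.tn + c.fp)   # specificity
    ppv = c.tp / (c.tp + c.fp)  # positive predictive value
    npv = c.tn / (c.tn + c.fn)  # negative predictive value
    lr_pos = sn / (1 - sp)      # positive likelihood ratio
    lr_neg = (1 - sn) / sp      # negative likelihood ratio
    return {
        "Acc": (c.tp + c.tn) / n,
        "Sn": sn,
        "Sp": sp,
        "Prev": (c.tp + c.fn) / n,
        "FNR": 1 - sn,
        "FPR": 1 - sp,
        "PPV": ppv,
        "NPV": npv,
        "FDR": 1 - ppv,
        "FOR": 1 - npv,
        "LR+": lr_pos,
        "LR-": lr_neg,
        "DOR": lr_pos / lr_neg,  # diagnostic odds ratio
        "f1": 2 * c.tp / (2 * c.tp + c.fp + c.fn),
    }


# Assumed counts, inferred from the LR + RF row; not reported in the table.
m = diagnostic_metrics(Confusion(tp=20, fn=4, tn=13, fp=5))
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

With these assumed counts the derived values agree with the LR + RF row after rounding, for example accuracy 78.57%, DOR 13.00 and f1 0.82. AUC depends on the ranking of predicted scores rather than on one thresholded matrix, so it cannot be reconstructed this way.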

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lagree, A.; Shiner, A.; Alera, M.A.; Fleshner, L.; Law, E.; Law, B.; Lu, F.-I.; Dodington, D.; Gandhi, S.; Slodkowska, E.A.; et al. Assessment of Digital Pathology Imaging Biomarkers Associated with Breast Cancer Histologic Grade. Curr. Oncol. 2021, 28, 4298-4316. https://doi.org/10.3390/curroncol28060366

Lagree, A., Shiner, A., Alera, M. A., Fleshner, L., Law, E., Law, B., Lu, F.-I., Dodington, D., Gandhi, S., Slodkowska, E. A., Shenfield, A., Jerzak, K. J., Sadeghi-Naini, A., & Tran, W. T. (2021). Assessment of Digital Pathology Imaging Biomarkers Associated with Breast Cancer Histologic Grade. Current Oncology, 28(6), 4298-4316. https://doi.org/10.3390/curroncol28060366