Affective States and Virtual Reality to Improve Gait Rehabilitation: A Preliminary Study

Abstract

1. Introduction

1.1. Related Works on Virtual Reality and Rehabilitation

1.2. Related Works on Neurological Disorders and Headsets

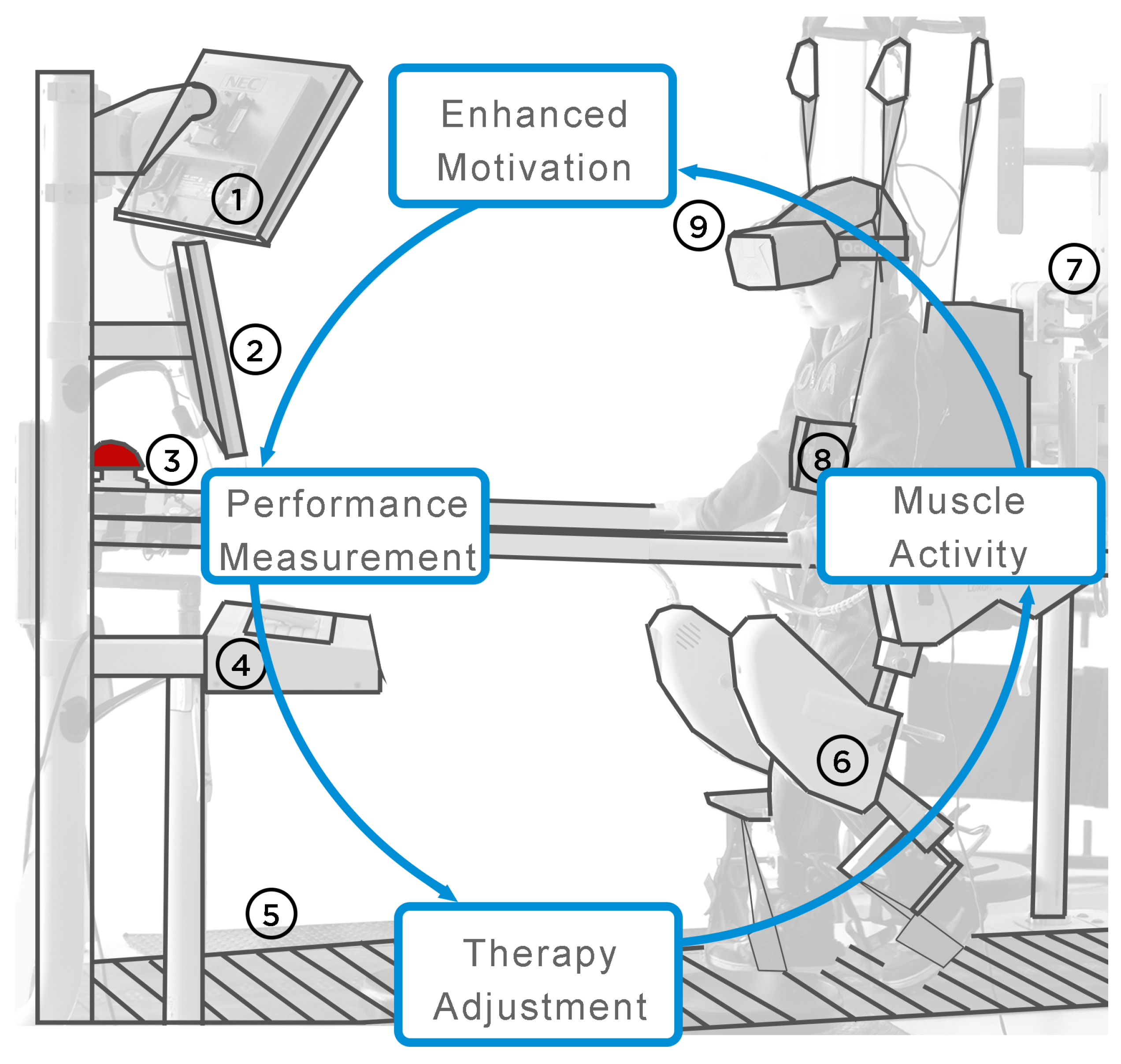

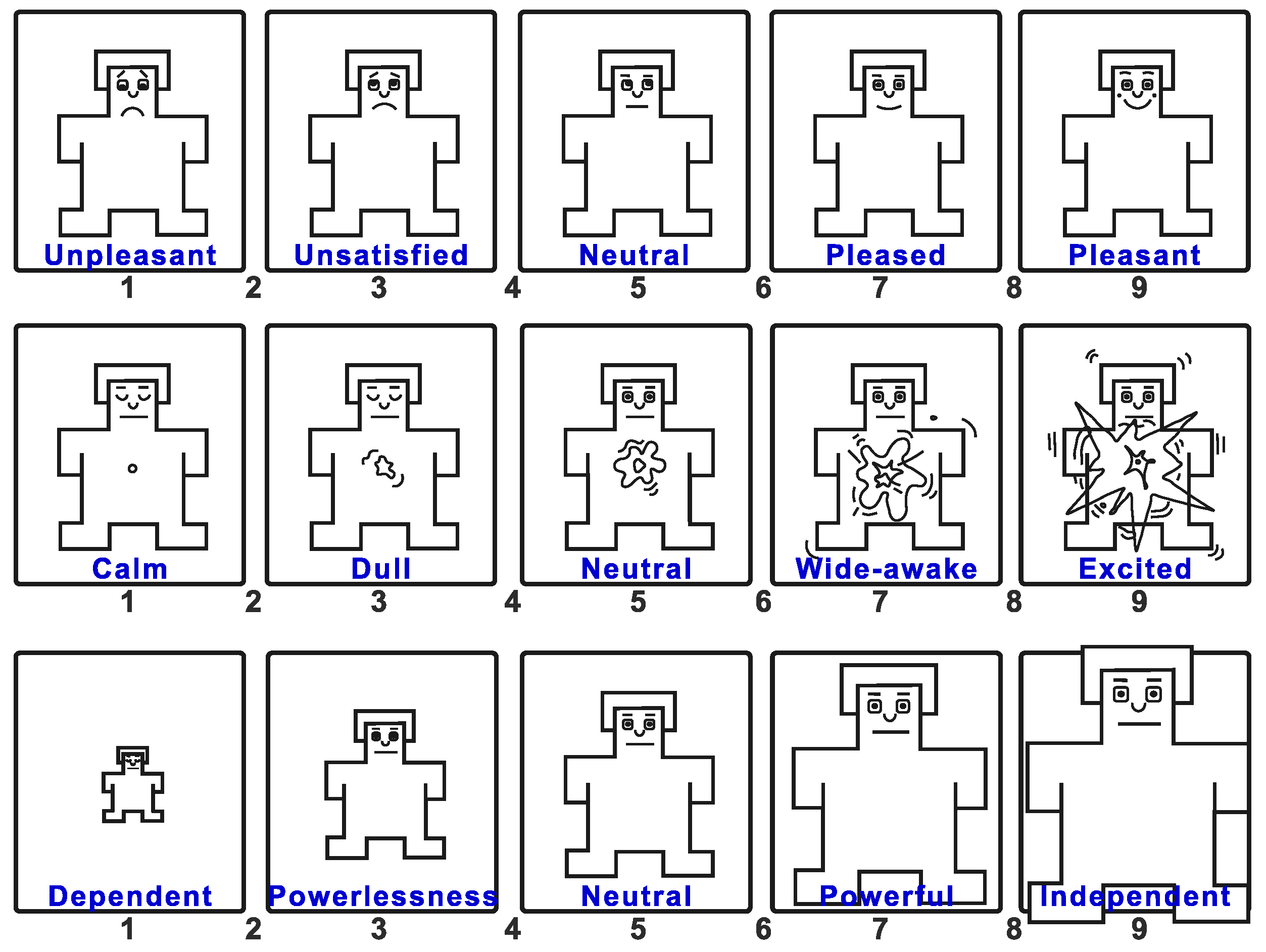

1.3. Potential of Using Affective Recognition and Gait Therapy

2. Materials and Methods

2.1. Participants

2.2. VR Environment, Device Selection, and Specification

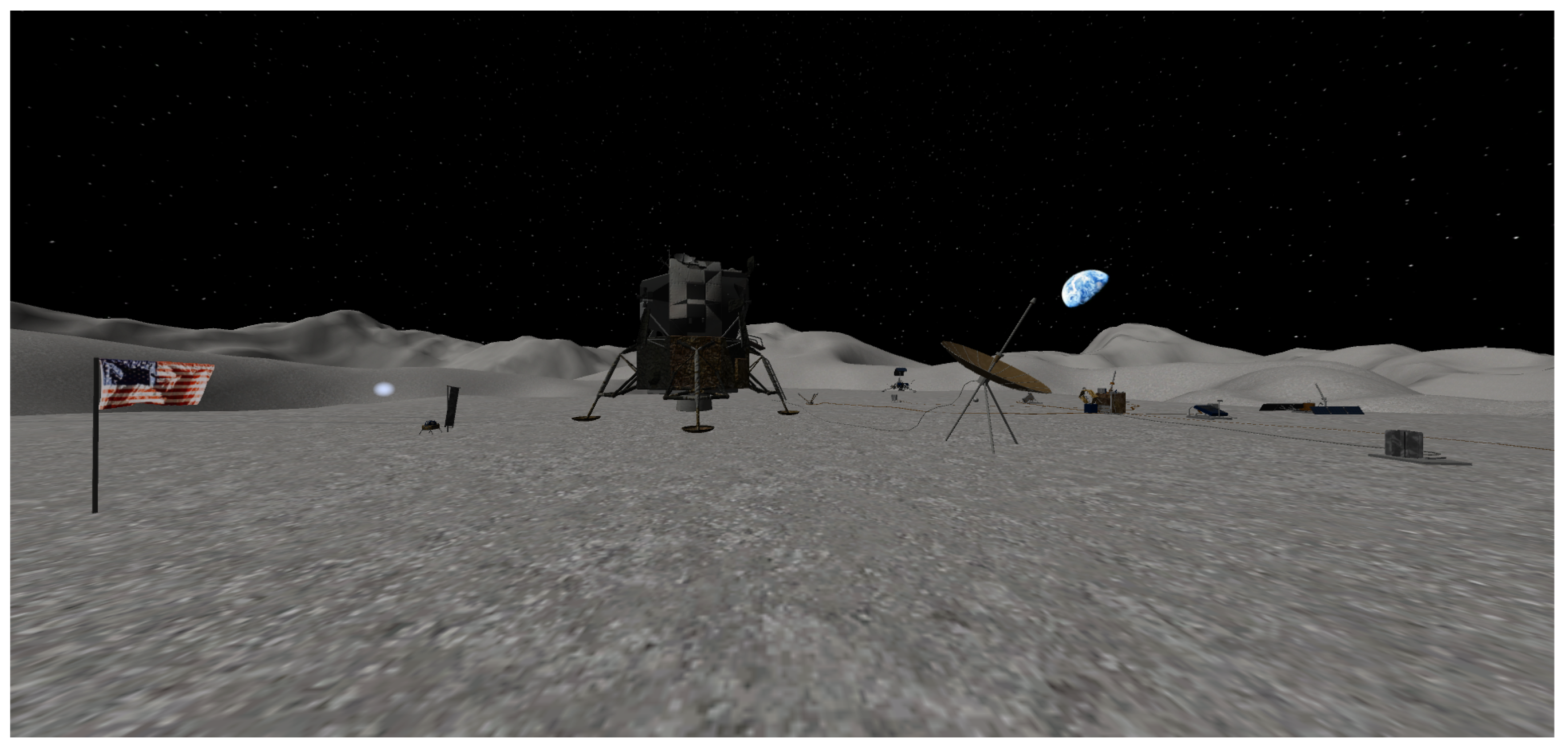

2.2.1. VR Environment

2.2.2. Lokomat

2.2.3. Emotiv Insight

2.2.4. Oculus Go

2.3. Procedure

2.4. Data Analysis

3. Results

4. Discussion

Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Reviewer | Video 1 | Video 2 | Video 3 | Video 4 | Video 5 | Video 6 | Video 7 | Video 8 |
|---|---|---|---|---|---|---|---|---|
| 1 | AGS1 | AGS2 | AGS3 | AGS4 | GDL1 | GDL2 | GDL3 | GDL4 |
| 2 | AGS2 | AGS3 | AGS4 | GDL1 | GDL2 | GDL3 | GDL4 | AGS1 |
| 3 | AGS3 | AGS4 | GDL1 | GDL2 | GDL3 | GDL4 | AGS1 | AGS2 |
| 4 | AGS4 | GDL1 | GDL2 | GDL3 | GDL4 | AGS1 | AGS2 | AGS3 |
| 5 | GDL1 | GDL2 | GDL3 | GDL4 | AGS1 | AGS2 | AGS3 | AGS4 |
| 6 | GDL2 | GDL3 | GDL4 | AGS1 | AGS2 | AGS3 | AGS4 | GDL1 |
| 7 | GDL3 | GDL4 | AGS1 | AGS2 | AGS3 | AGS4 | GDL1 | GDL2 |
| 8 | GDL4 | AGS1 | AGS2 | AGS3 | AGS4 | GDL1 | GDL2 | GDL3 |
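The reviewer table appears to follow a cyclic Latin square: each row is the previous row shifted one position to the left, so every label appears exactly once per reviewer and exactly once per column (Video 1 to Video 8). The sketch below rebuilds the table under that assumption. The rotation rule is inferred from the table rather than taken from the authors' materials, the helper names are hypothetical, and the labels AGS1 to GDL4 are treated as opaque identifiers.

```python
# Sketch: rebuild the reviewer-by-video order as a cyclic Latin square.
# Labels are copied from the table; the rotation rule is inferred from it.
STIMULI = ["AGS1", "AGS2", "AGS3", "AGS4", "GDL1", "GDL2", "GDL3", "GDL4"]


def cyclic_latin_square(items):
    """Row r is `items` rotated left by r positions (hypothetical helper)."""
    n = len(items)
    return [items[r:] + items[:r] for r in range(n)]


def is_latin_square(rows):
    """True if every row and every column contains each item exactly once."""
    n = len(rows)
    expected = set(rows[0])
    if len(expected) != n:
        return False
    rows_ok = all(len(row) == n and set(row) == expected for row in rows)
    cols_ok = all({row[c] for row in rows} == expected for c in range(n))
    return rows_ok and cols_ok


if __name__ == "__main__":
    square = cyclic_latin_square(STIMULI)
    for reviewer, order in enumerate(square, start=1):
        print(reviewer, " ".join(order))
    print("Latin square:", is_latin_square(square))
```

A cyclic rotation is the simplest construction that balances position effects for eight stimuli with eight reviewers; it does not balance first-order carryover (each label is always followed by the same next label), which a Williams design would address.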

| Patient | Sex | Headset Placement | Session | Unmounting | Total |
|---|---|---|---|---|---|
| 1 | Male | 88 s | 2075 s | 150 s | 2313 s |
| 2 | Female | 188 s | 2696 s | 77 s | 2961 s |
| 3 | Male | 111 s | 1640 s | 91 s | 1842 s |
| 4 | Male | 129 s | 1996 s | 100 s | 2225 s |
| 5 | Male | 75 s | 1716 s | 118 s | 1909 s |
| 6 | Female | 233 s | 1513 s | 74 s | 1820 s |
| 7 | Female | 194 s | 1655 s | 86 s | 1935 s |
| 8 | Female | 310 s | 2378 s | 153 s | 2841 s |
| Average | | 166 s | 1959 s | 106 s | 2231 s |
| Std Dev | | 80 s | 412 s | 31 s | 451 s |
| Median | | 159 s | 1856 s | 96 s | 2080 s |
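The summary rows of the timing table can be reproduced from the eight per-patient values. The sketch assumes the Std Dev row is the sample standard deviation (n - 1 denominator) and that medians ending in .5 were rounded up; both assumptions reproduce every printed value, whereas Python's built-in round-half-to-even would give 158 s instead of 159 s for the headset-placement median. It also checks that each Total equals the sum of the three phases. Function names are illustrative.

```python
import math
import statistics

# Per-patient durations in seconds, copied from the timing table.
DURATIONS = {
    "Headset placement": [88, 188, 111, 129, 75, 233, 194, 310],
    "Session": [2075, 2696, 1640, 1996, 1716, 1513, 1655, 2378],
    "Unmounting": [150, 77, 91, 100, 118, 74, 86, 153],
    "Total": [2313, 2961, 1842, 2225, 1909, 1820, 1935, 2841],
}


def round_half_up(x):
    """Round to the nearest integer with .5 going up (158.5 -> 159)."""
    return math.floor(x + 0.5)


def summarize(values):
    """Mean, sample standard deviation (n - 1), and median in whole seconds."""
    return {
        "mean": round_half_up(statistics.mean(values)),
        "stdev": round_half_up(statistics.stdev(values)),
        "median": round_half_up(statistics.median(values)),
    }


def totals_consistent(d):
    """Check that Total = placement + session + unmounting for every patient."""
    phases = zip(d["Headset placement"], d["Session"], d["Unmounting"])
    return [sum(p) for p in phases] == d["Total"]


if __name__ == "__main__":
    for phase, values in DURATIONS.items():
        print(phase, summarize(values))
    print("Totals consistent:", totals_consistent(DURATIONS))
```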

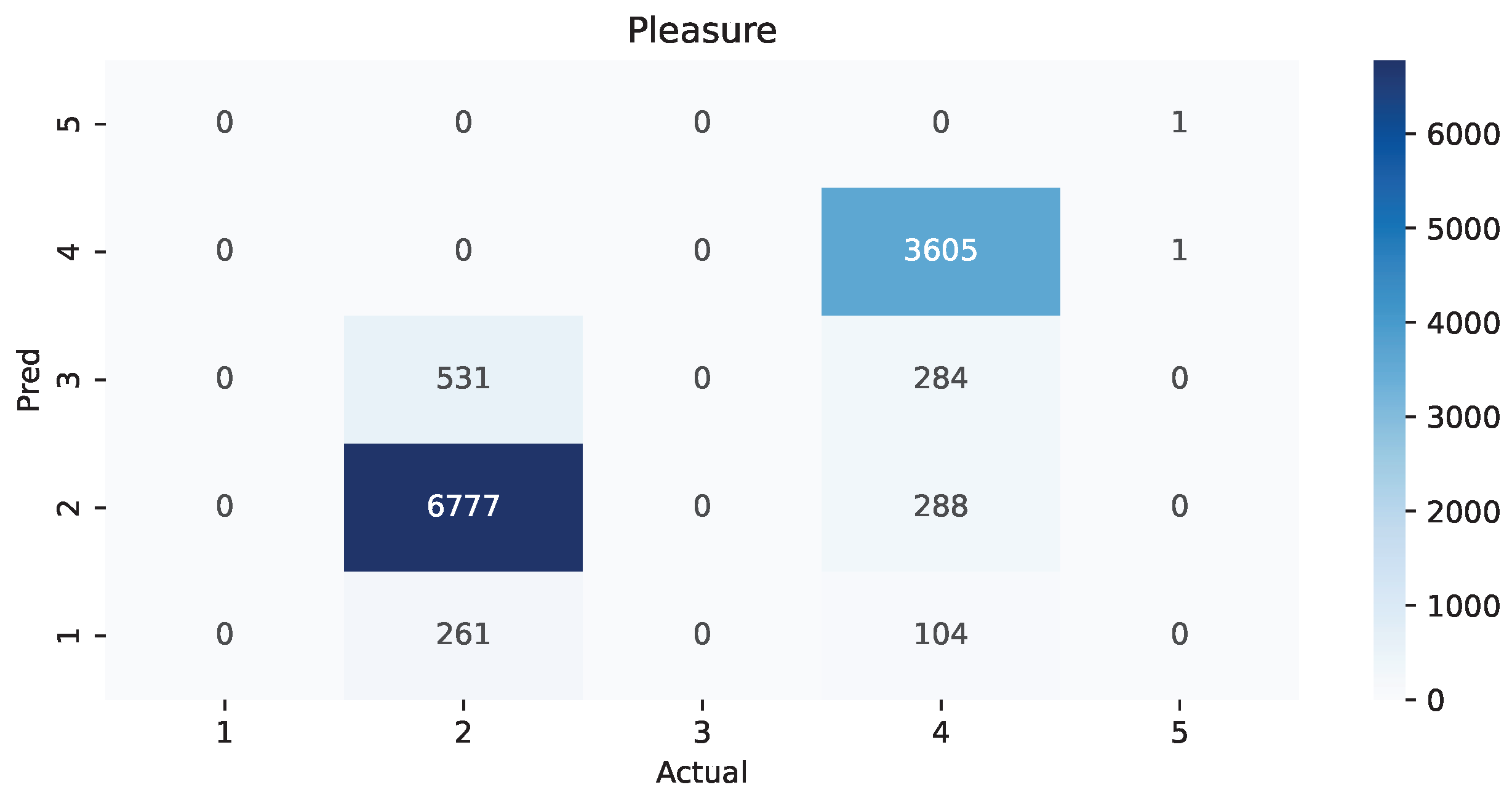

Pleasure

| Region | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| 1 | 0.000000 | 0.000000 | 0.000000 | 0 |
| 2 | 0.959236 | 0.895363 | 0.926199 | 7569 |
| 3 | 0.000000 | 0.000000 | 0.000000 | 0 |
| 4 | 0.999723 | 0.842093 | 0.914163 | 4281 |
| 5 | 1.000000 | 0.500000 | 0.666667 | 2 |
| accuracy | | | 0.876055 | 11,852 |
| macro avg | 0.591792 | 0.447491 | 0.501406 | 11,852 |
| weighted avg | 0.973867 | 0.876055 | 0.921808 | 11,852 |
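The averaged rows of a report like this one (its layout resembles scikit-learn's `classification_report`, though the source does not say which tool produced it) follow from the per-region rows. For Pleasure, the macro average matches an unweighted mean over all five listed regions, including the two with zero support, while the weighted average weights each region by its support. The weighted recall also equals the accuracy (0.876055), as expected when every sample has exactly one true region. The sketch below checks these relations; the values are copied from the table and the helper names are hypothetical.

```python
# Per-region rows of the Pleasure report: (precision, recall, f1, support).
PLEASURE = {
    1: (0.000000, 0.000000, 0.000000, 0),
    2: (0.959236, 0.895363, 0.926199, 7569),
    3: (0.000000, 0.000000, 0.000000, 0),
    4: (0.999723, 0.842093, 0.914163, 4281),
    5: (1.000000, 0.500000, 0.666667, 2),
}


def macro_average(rows):
    """Unweighted mean of precision, recall, F1 over every listed region."""
    n = len(rows)
    return tuple(sum(r[i] for r in rows.values()) / n for i in range(3))


def weighted_average(rows):
    """Mean of precision, recall, F1 weighted by each region's support."""
    total = sum(r[3] for r in rows.values())
    return tuple(sum(r[i] * r[3] for r in rows.values()) / total for i in range(3))


if __name__ == "__main__":
    print("macro    P/R/F1:", [round(v, 6) for v in macro_average(PLEASURE)])
    print("weighted P/R/F1:", [round(v, 6) for v in weighted_average(PLEASURE)])
```

Because the two zero-support regions contribute zeros, the macro average here is pulled well below the per-region scores of the regions that actually occur; the weighted average is dominated by regions 2 and 4.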

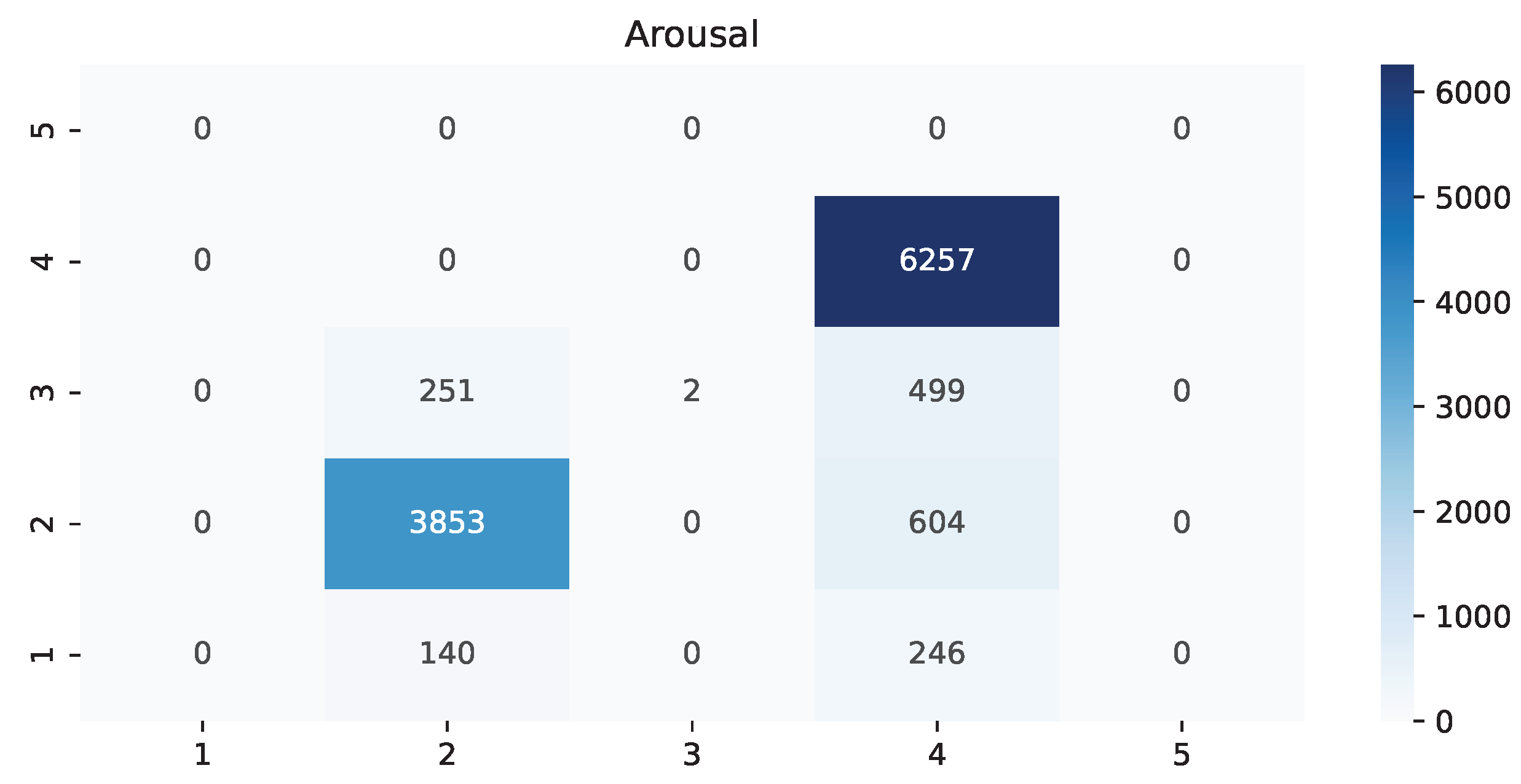

Arousal

| Region | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| 1 | 0.000000 | 0.000000 | 0.000000 | 0 |
| 2 | 0.864483 | 0.907870 | 0.885645 | 4244 |
| 3 | 0.002660 | 1.000000 | 0.005305 | 2 |
| 4 | 1.000000 | 0.822640 | 0.902691 | 7606 |
| 5 | 0.000000 | 0.000000 | 0.000000 | 0 |
| accuracy | | | 0.853189 | 11,852 |
| macro avg | 0.466786 | 0.682627 | 0.448410 | 11,852 |
| weighted avg | 0.951305 | 0.853189 | 0.896436 | 11,852 |

Dominance

| Region | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| 1 | 0.000000 | 0.000000 | 0.000000 | 0 |
| 2 | 1.000000 | 0.906751 | 0.951095 | 11,850 |
| 3 | 0.002494 | 1.000000 | 0.004975 | 2 |
| 4 | 0.000000 | 0.000000 | 0.000000 | 0 |
| 5 | 0.000000 | 0.000000 | 0.000000 | 0 |
| accuracy | | | 0.906767 | 11,852 |
| macro avg | 0.334165 | 0.635584 | 0.318690 | 11,852 |
| weighted avg | 0.999832 | 0.906767 | 0.950936 | 11,852 |
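Each F1-score is the harmonic mean of the precision and recall in the same row, and the overall accuracy can be recovered by summing recall × support (the correctly classified samples per region) and dividing by the total support. The sketch checks both relations for the two Dominance regions with non-zero support; it shows that the 0.906767 accuracy is almost entirely determined by region 2, which holds 11,850 of the 11,852 samples. Values are copied from the table and the helper names are hypothetical.

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Dominance regions with non-zero support: region -> (precision, recall, support).
DOMINANCE = {
    2: (1.000000, 0.906751, 11850),
    3: (0.002494, 1.000000, 2),
}


def accuracy_from_recall(rows):
    """Sum recall * support (correct predictions) and divide by total support."""
    correct = sum(row[1] * row[2] for row in rows.values())
    total = sum(row[2] for row in rows.values())
    return correct / total


if __name__ == "__main__":
    for region, (p, r, _) in DOMINANCE.items():
        print(f"region {region}: F1 = {f1(p, r):.6f}")
    print(f"accuracy = {accuracy_from_recall(DOMINANCE):.6f}")
```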

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rodriguez, J.; Del-Valle-Soto, C.; Gonzalez-Sanchez, J. Affective States and Virtual Reality to Improve Gait Rehabilitation: A Preliminary Study. Int. J. Environ. Res. Public Health 2022, 19, 9523. https://doi.org/10.3390/ijerph19159523
