Assessing the Influence of Physical Activity Upon the Experience Sampling Response Rate on Wrist-Worn Devices

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

3.1. Materials

- Software

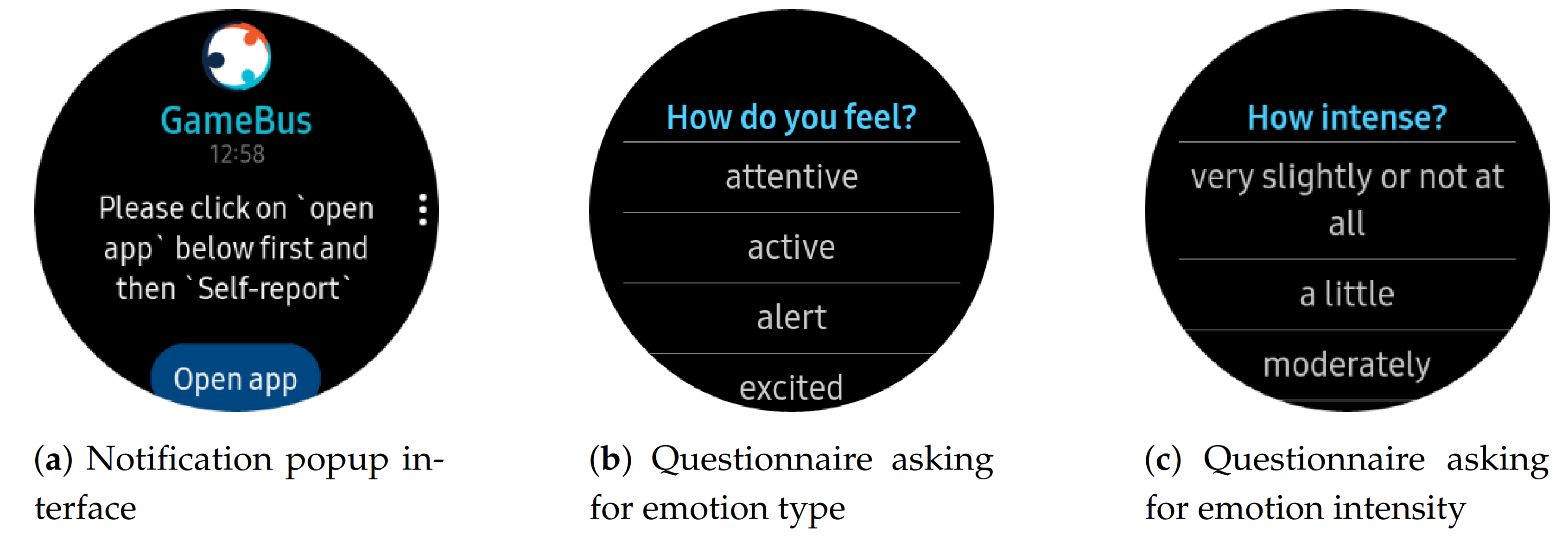

- We created Experiencer [55], a GDPR-compliant ESM platform. The software is implemented in JavaScript using the Web API of Tizen OS, which runs on Samsung smartwatches. In our experiments, we used Samsung Galaxy Watch Active 2 devices. To ensure seamless data collection, our prototype is integrated with GameBus [56], an mHealth platform developed to support the design, implementation, and evaluation of health promotion campaigns [57,58] (Figure 1).

- Experiencer was designed to support (1) dynamic configurability, which lets researchers adjust the ESM parameters on the fly; (2) stand-alone operation, collecting data in situ and syncing it once reliable network connectivity is detected; and (3) a user interface compliant with wearable usability standards, so that participants can easily answer the questionnaires on the smartwatch screen (Figure 2).

3.2. Methods

3.2.1. Study Design

- Recruitment

- Our study was conducted in the context of the TU/e Samen Gezond program, an online program designed to promote healthy activities among the students and staff members of the Eindhoven University of Technology. During the program, participants received a set of healthy suggestions in a web application and were awarded points for acting upon those suggestions. To enhance their experience of the lifestyle program (by providing a step tracker built on top of our ESM application), participants also received a Samsung Galaxy Watch Active 2 equipped with our prototype ESM application.

- Duration

- The duration of the study was 5 weeks, which is as long as the TU/e Samen Gezond program lasted.

- Number of participants

- Constrained by the number of available smartwatches at the time of the study, and the recruitment process described, we could ultimately recruit participants.

- Treatment groups

- The participants were randomly assigned to two treatment groups which we called ’resting’ and ’active’: Half were assigned to the resting group who received beeps while not moving, and the other half to the active group who received beeps while being physically active (e.g., walking). Due to some early dropouts, ultimately the active group consisted and the resting group participants.
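The random split into two equally sized groups can be illustrated with a minimal sketch. The text does not describe the actual randomization procedure, so the function name `assign_groups`, the shuffle-and-halve approach, and the optional `seed` parameter are assumptions made purely for illustration.

```python
import random


def assign_groups(participant_ids, seed=None):
    """Randomly split participants into 'resting' and 'active' halves.

    Hypothetical helper: the study only states that participants were
    randomly assigned, half to each group.
    """
    rng = random.Random(seed)          # a seed makes the split reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # With an odd number of participants, the active group gets one more.
    return {"resting": shuffled[:half], "active": shuffled[half:]}


groups = assign_groups(range(10), seed=1)
```

Shuffling first and then cutting the list in half guarantees balanced group sizes, which a per-participant coin flip would not.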

- Compensation

- Depending on the allocated treatment group in the TU/e Samen Gezond program, participants could be rewarded with a giveaway voucher of €25 in exchange for their points. Note that the participants were not rewarded for wearing the smartwatch, nor for any other interaction with it (e.g., checking the smartwatch for notifications or replying to the questions they received). Rather, they were rewarded for doing healthy activities, which they could register via a separate web application dedicated to the TU/e Samen Gezond program or via unobtrusive sensing by the smartwatch.

- Schedule

- Following our hypothesis, the schedule of choice was event-contingent. The monitored event was the level of physical activity. As soon as a physical activity event was detected by our prototype, a beep was delivered to the participant’s smartwatch. The beeps were administered depending on the type of physical activity (e.g., walking, running, not moving), the treatment group a participant was in, and the defined inter-notification time.

- Inquiry limit

- Because our schedule was event-contingent, a sensible limit on the number of inquiries could reduce participant burden. According to the literature, around 7 beeps per day may yield an optimal balance of recall and annoyance [59]. We instructed participants to wear the smartwatch while they were awake. Assuming one wears the smartwatch ∼12 h per day, an inter-notification time of 105 min (1.75 h) would result in about 7 inquiries per day (12/1.75 ≈ 6.9), in line with the literature.

- Inter-notification time

- This notion is defined as the time between two consecutive notifications. In our case, since the schedule was event-contingent, a participant might be beeped rarely or frequently depending on their level of physical activity and their treatment group. As described above, to avoid overwhelming the participants, we set a 105 min inter-notification time.

- Notification expiry

- The literature offers many heuristics and hypotheses, depending on the scenario, for determining the notification expiry time (or lifetime), such as 5 min [60] or 3 min [61]. In this study, a notification remained in the notification area of the smartwatch unless the participant cleared it or the next beep from our prototype ESM software arrived (our beeps did not stack up). A pending notification could therefore also act as a reminder whenever the participant happened to visit the notification area.

- Questionnaire

- To assess the impact of the event-contingent strategy upon response rates, we chose to survey user emotions, which is a typical use of ESM. Furthermore, we were motivated by earlier research that aims to infer emotions from wearable sensors (see [37,46,62]). Thus, at sampling moments, participants were requested to complete the Positive and Negative Affect Schedule (PANAS), which is a standard scale that consists of different words that describe feelings and emotions [63].

3.2.2. Data Analysis and Cleaning

- Physical activity recognition

- To detect the physical activity levels of participants, we used the built-in Samsung pedometer API, which applies a proprietary algorithm for physical activity detection. We adopted this API to capture changes in physical activity in real time and to manage the sending of beeps based on the physical activity levels of participants in the active and resting treatment groups. More specifically, the pedometer API of the smartwatch can detect and distinguish not moving, walking, and running activities [64]. When the algorithm fails to categorize a physical activity, it marks it as unknown. In the active group, a beep was sent as soon as either walking or running was detected, provided that the inter-notification time had elapsed. In contrast, in the resting group, a beep was sent when the not moving activity was detected, subject to the same inter-notification time constraint. The inter-notification time was set to control the number of notifications sent to the participants, that is, to avoid overwhelming them with a beep every time the pedometer detects a physical activity. With these constraints, the participants received at most about 7 beeps per day. Additionally, to capture a wider range of physical activities, we also leveraged the detection of activities that fell under the unknown category. The details of this inclusion are described below in the Analysis section.
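The delivery rule described above can be sketched as a small decision function. This is an illustrative Python sketch, not the prototype's JavaScript code: the status strings, the function name `should_beep`, and in particular the `unknown_speed_threshold` cut-off are assumptions, since the text does not report the speed value used to classify unknown events.

```python
from datetime import datetime, timedelta

INTER_NOTIFICATION_TIME = timedelta(minutes=105)  # value stated in the study design

# Activity statuses named in the text; the exact string spellings are assumed.
ACTIVE_STATUSES = {"WALKING", "RUNNING"}
RESTING_STATUSES = {"NOT_MOVING"}


def should_beep(group, status, now, last_beep_at, speed=None,
                unknown_speed_threshold=3.0):
    """Decide whether an activity event should trigger a beep.

    `unknown_speed_threshold` is a hypothetical cut-off; the paper only says
    that the speed of unknown events was checked.
    """
    if group not in ("active", "resting"):
        raise ValueError(f"unexpected treatment group: {group!r}")

    if status == "UNKNOWN":
        if speed is None:
            return False  # cannot tell whether the event is active or resting
        is_active_event = speed >= unknown_speed_threshold
    elif status in ACTIVE_STATUSES:
        is_active_event = True
    elif status in RESTING_STATUSES:
        is_active_event = False
    else:
        return False

    # The event must match the participant's treatment group.
    if is_active_event != (group == "active"):
        return False

    # Enforce the inter-notification time between consecutive beeps.
    return last_beep_at is None or now - last_beep_at >= INTER_NOTIFICATION_TIME
```

For example, a walking event for an active-group participant 60 min after the previous beep is suppressed, whereas the same event 105 min after the previous beep is delivered.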

- Analysis

- The response rate is calculated as the ratio of the number of self-reports to the total number of received beeps. In the results section, we report it at the treatment group level, both for the whole study period and for each week:

$$\mathrm{ResponseRate}(G, t) = \frac{\text{number of self-reports submitted by } G \text{ during } t}{\text{number of beeps received by } G \text{ during } t}$$

  where G refers to a collection of participants containing either all members of a treatment group or a single participant, and t refers to the time window.

- The built-in physical activity monitor API of our smartwatch could detect walking, running, and not moving activities. Additionally, to capture other physical activities (such as household chores), we also enabled the detection of the built-in unknown physical activity [64]. By doing so, we were able to capture a wider range of physical activities (other than just walking and running), in line with our methodological decisions. The unknown event covers a spectrum of physical activities from subtle to vigorous and is triggered whenever the built-in activity monitor of the smartwatch fails to categorize a physical activity as not moving, walking, or running. The unknown event may therefore be detected at both lower (resting) and higher (active) levels of physical activity. Accordingly, we also checked the speed property of unknown events so that beeps were only delivered at the intended levels of physical activity (e.g., for a participant in the active group, a beep could be delivered if an unknown activity of high speed was detected).
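The response rate for a group G and time window t can be computed as in the following minimal sketch. The record layout (pairs of participant identifier and timestamp), the half-open window, and the function name `response_rate` are assumptions for illustration; the text does not describe how the analysis was implemented.

```python
def response_rate(beeps, reports, group, window):
    """Ratio of self-reports to received beeps for `group` within `window`.

    beeps, reports: iterables of (participant_id, timestamp) pairs.
    group: set of participant ids (a whole treatment group or one person).
    window: (start, end) pair, treated as the half-open interval [start, end).
    Returns None when the group received no beeps in the window.
    """
    start, end = window

    def count(events):
        return sum(1 for pid, ts in events
                   if pid in group and start <= ts < end)

    n_beeps = count(beeps)
    if n_beeps == 0:
        return None  # the ratio is undefined without any beeps
    return count(reports) / n_beeps


# Toy data with day numbers as timestamps (illustrative only, not study data).
beeps = [("p1", 1), ("p1", 2), ("p2", 1), ("p2", 9)]
reports = [("p1", 1), ("p2", 1)]
week_one = (0, 7)
rate = response_rate(beeps, reports, {"p1", "p2"}, week_one)  # 2 reports / 3 beeps
```

Passing a single-participant set as `group` yields the per-participant rate, and passing a weekly window yields the per-week rate.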

- Cleaning

- The gathered data consisted of beep-related information, self-reports, and sensor data. The beep-related information consisted of timestamps of when a beep was received and when it was read. The self-report data included the timestamp of when the self-report was submitted and the selected emotion from the PANAS scale along with its corresponding intensity (the intensity levels are “very slightly or not at all”, “a little”, “moderately”, “quite a bit”, and “extremely”). The sensor recordings included the physiological data monitored to detect events of interest, i.e., active and resting states.

- As discussed in previous sections, the inter-notification time and the inquiry limit were set to specific values congruent with common strategies in the literature (see [3,65]). However, at the beginning of our experiment, a technical malfunction in the first version of our prototype caused violations of the inquiry limit and the inter-notification time. As a result, beeps arrived sooner than the intended inter-notification time and exceeded the inquiry limit. In other words, participants received more beeps than intended. Although the issue was fixed during the first week of the study, some noisy data was generated. To clean this noise, in our analysis we only considered, for each participant, the first 7 beeps delivered on each day. With the cleaned data, we tested our hypothesis by calculating and then comparing the response rates of our treatment groups.
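The cleaning rule (keep only the first 7 beeps per participant per day) can be sketched as follows. The record layout and the function name `keep_first_beeps_per_day` are assumptions for illustration, and calendar days are taken from the timestamps as given, without any time zone handling.

```python
from collections import defaultdict
from datetime import datetime, timedelta

DAILY_BEEP_LIMIT = 7  # inquiry limit stated in the study design


def keep_first_beeps_per_day(beeps, limit=DAILY_BEEP_LIMIT):
    """Keep at most `limit` earliest beeps per participant per calendar day.

    beeps: iterable of (participant_id, received_at) with datetime timestamps.
    Returns the retained beeps in chronological order.
    """
    kept = []
    seen = defaultdict(int)  # (participant_id, date) -> beeps kept so far
    for pid, received_at in sorted(beeps, key=lambda b: b[1]):
        key = (pid, received_at.date())
        if seen[key] < limit:
            seen[key] += 1
            kept.append((pid, received_at))
    return kept


# Toy example: ten beeps on one day, as could happen during the malfunction.
start = datetime(2021, 3, 1, 8, 0)
noisy = [("p1", start + timedelta(minutes=30 * i)) for i in range(10)]
cleaned = keep_first_beeps_per_day(noisy)  # retains the 7 earliest beeps
```

Sorting by timestamp before counting makes sure that the discarded beeps are the later ones of each day, regardless of the order in which records were stored.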

4. Results

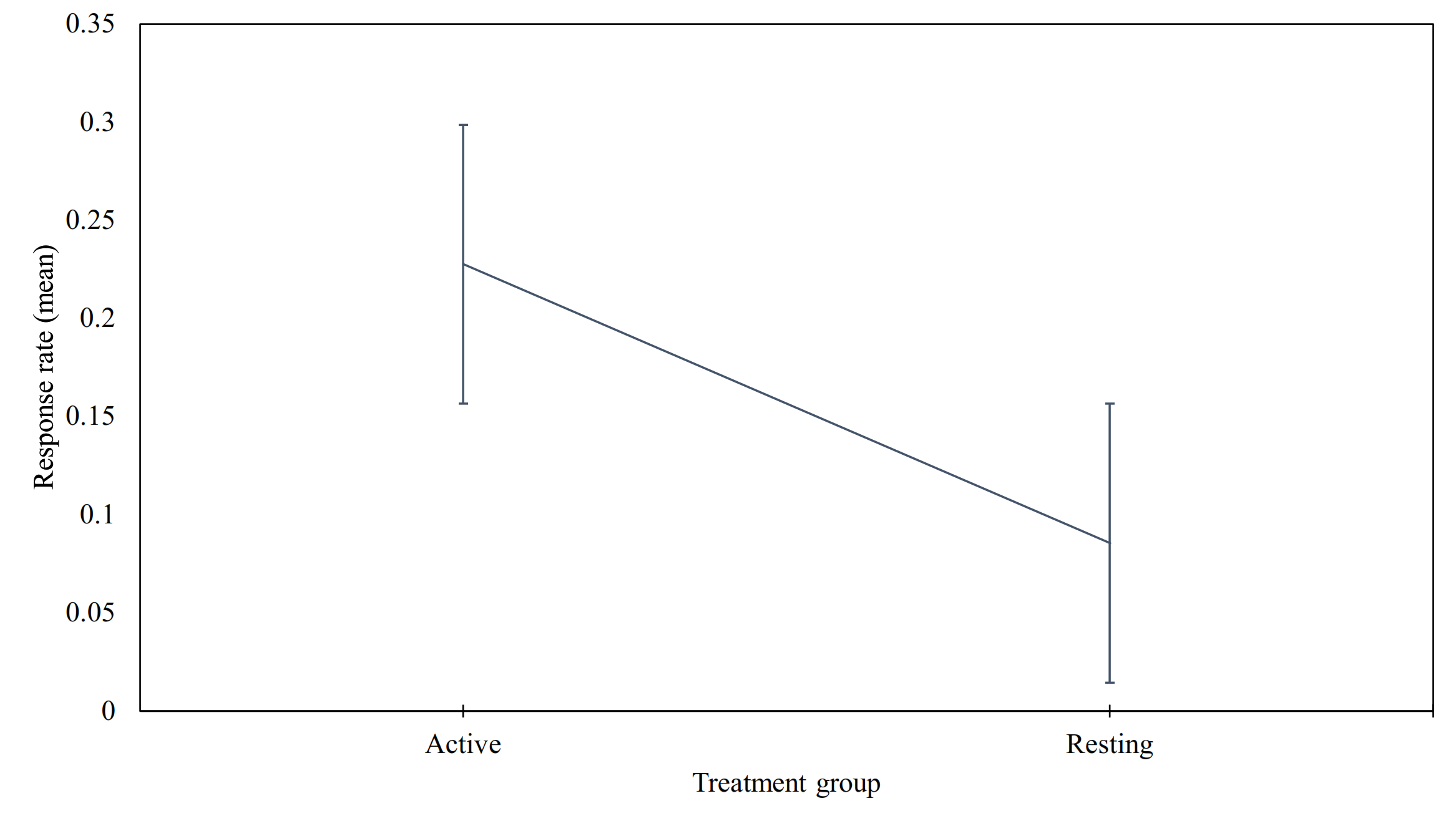

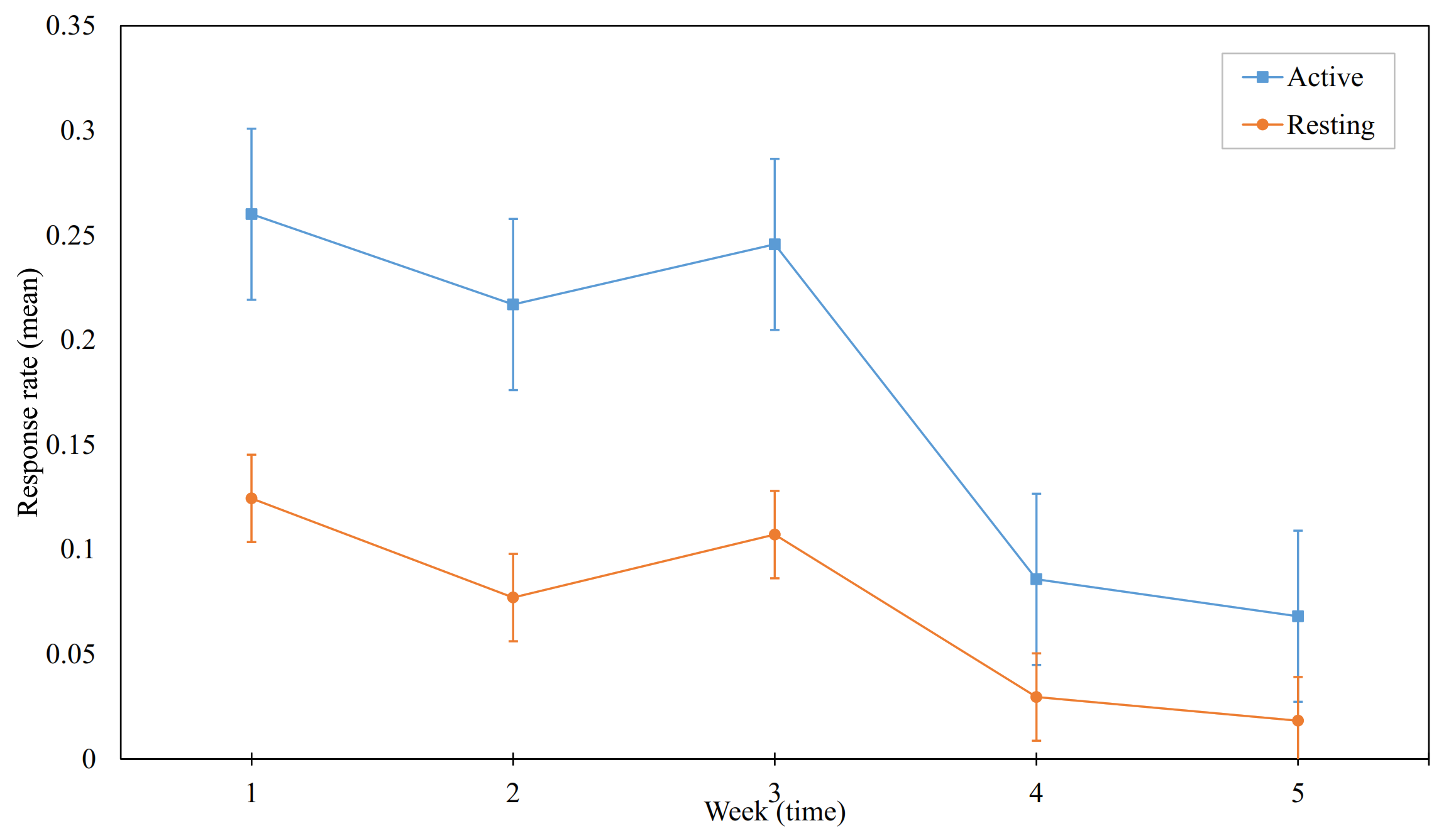

4.1. Response Rate

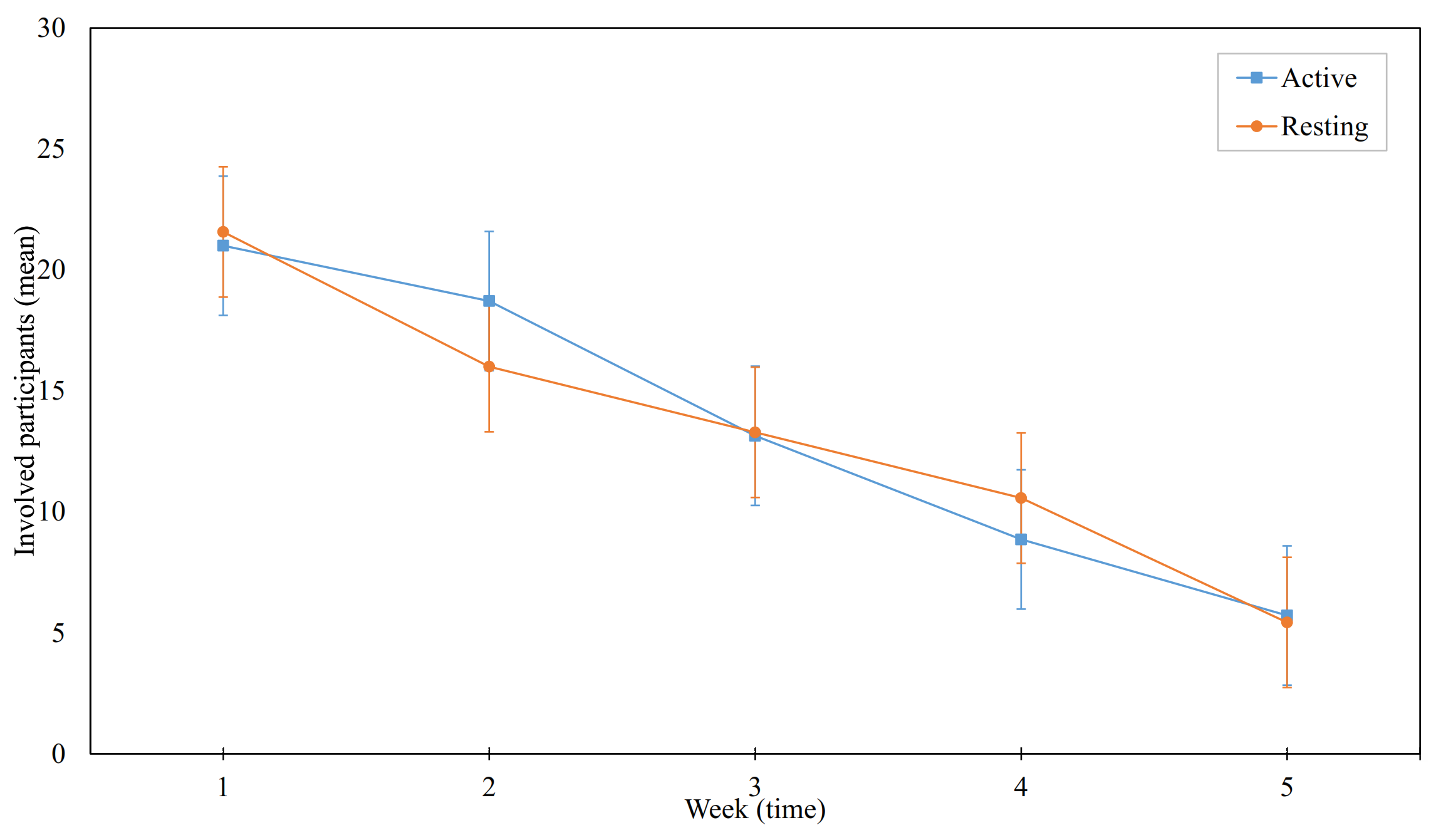

4.2. Dropouts

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Barrett, L.F.; Barrett, D.J. An introduction to computerized experience sampling in psychology. Soc. Sci. Comput. Rev. 2001, 19, 175–185. [Google Scholar] [CrossRef] [Green Version]

- Larson, R.; Csikszentmihalyi, M. The Experience Sampling Method. In Flow and the Foundations of Positive Psychology: The Collected Works of Mihaly Csikszentmihalyi; Csikszentmihalyi, M., Ed.; Springer Netherlands: Dordrecht, The Netherlands, 2014; pp. 21–34. [Google Scholar] [CrossRef]

- Csikszentmihalyi, M.; Larson, R. Validity and Reliability of the Experience-Sampling Method. In Flow and the Foundations of Positive Psychology: The Collected Works of Mihaly Csikszentmihalyi; Csikszentmihalyi, M., Ed.; Springer Netherlands: Dordrecht, The Netherlands, 2014; pp. 35–54. [Google Scholar] [CrossRef]

- Naughton, F.; Riaz, M.; Sutton, S. Response Parameters for SMS Text Message Assessments Among Pregnant and General Smokers Participating in SMS Cessation Trials. Nicotine Tobacco Res. 2016, 18, 1210–1214. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Stone, A.A.; Kessler, R.C.; Haythornthwaite, J.A. Measuring Daily Events and Experiences: Decisions for the Researcher. J. Personal. 1991, 59, 575–607. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Reynolds, B.M.; Robles, T.F.; Repetti, R.L. Measurement reactivity and fatigue effects in daily diary research with families. Dev. Psychol. 2016, 52, 442–456. [Google Scholar] [CrossRef]

- Van Berkel, N.; Goncalves, J.; Hosio, S.; Kostakos, V. Gamification of Mobile Experience Sampling Improves Data Quality and Quantity. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2017, 1, 107:1–107:21. [Google Scholar] [CrossRef] [Green Version]

- Consolvo, S.; Walker, M. Using the experience sampling method to evaluate ubicomp applications. IEEE Pervasive Comput. 2003, 2, 24–31. [Google Scholar] [CrossRef] [Green Version]

- Wen, C.K.F.; Schneider, S.; Stone, A.A.; Spruijt-Metz, D. Compliance With Mobile Ecological Momentary Assessment Protocols in Children and Adolescents: A Systematic Review and Meta-Analysis. J. Med. Internet Res. 2017, 19, e6641. [Google Scholar] [CrossRef] [Green Version]

- Kini, S. Please Take My Survey: Compliance with Smartphone-Based EMA/ESM studies. Undergraduate Thesis, Dartmouth College, Hanover, NH, USA, 2013. [Google Scholar]

- Trull, T.J.; Ebner-Priemer, U.W. Using experience sampling methods/ecological momentary assessment (ESM/EMA) in clinical assessment and clinical research: Introduction to the special section. Psychol. Assess. 2009, 21, 457–462. [Google Scholar] [CrossRef] [Green Version]

- Morren, M.; Dulmen, S.v.; Ouwerkerk, J.; Bensing, J. Compliance with momentary pain measurement using electronic diaries: A systematic review. Eur. J. Pain 2009, 13, 354–365. [Google Scholar] [CrossRef]

- Intille, S.S.; Rondoni, J.; Kukla, C.; Ancona, I.; Bao, L. A Context-Aware Experience Sampling Tool. In CHI ’03 Extended Abstracts on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 2003; CHI EA ’03; pp. 972–973. [Google Scholar] [CrossRef] [Green Version]

- Hsieh, G.; Li, I.; Dey, A.; Forlizzi, J.; Hudson, S.E. Using visualizations to increase compliance in experience sampling. In Proceedings of the 10th International Conference on Ubiquitous Computing, Seoul, Korea, 21–24 September 2008; Association for Computing Machinery: New York, NY, USA, 2008. UbiComp ’08. pp. 164–167. [Google Scholar] [CrossRef]

- Markopoulos, P.; Batalas, N.; Timmermans, A. On the Use of Personalization to Enhance Compliance in Experience Sampling. In Proceedings of the European Conference on Cognitive Ergonomics 2015, Warsaw, Poland, 1–3 July 2015; Association for Computing Machinery: New York, NY, USA, 2015. ECCE ’15. pp. 1–4. [Google Scholar] [CrossRef]

- Raento, M.; Oulasvirta, A.; Eagle, N. Smartphones: An Emerging Tool for Social Scientists. Sociol. Methods Res. 2009, 37, 426–454. [Google Scholar] [CrossRef] [Green Version]

- van Berkel, N.; Luo, C.; Anagnostopoulos, T.; Ferreira, D.; Goncalves, J.; Hosio, S.; Kostakos, V. A Systematic Assessment of Smartphone Usage Gaps. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; Association for Computing Machinery: New York, NY, USA, 2016. CHI ’16. pp. 4711–4721. [Google Scholar] [CrossRef] [Green Version]

- Fischer, J.E.; Greenhalgh, C.; Benford, S. Investigating episodes of mobile phone activity as indicators of opportune moments to deliver notifications. In Proceedings of the 13th International Conference on Human Computer Interaction with Mobile Devices and Services, Stockholm, Sweden, 30 August–2 September 2011; Association for Computing Machinery: New York, NY, USA, 2011. MobileHCI ’11. pp. 181–190. [Google Scholar] [CrossRef]

- Harbach, M.; Zezschwitz, E.v.; Fichtner, A.; Luca, A.D.; Smith, M. It’s a Hard Lock Life: A Field Study of Smartphone (Un)Locking Behavior and Risk Perception. In Proceedings of the 10th Symposium On Usable Privacy and Security, Menlo Park, CA, USA, 9–11 July 2014; pp. 213–230. [Google Scholar]

- Zhang, X.; Pina, L.R.; Fogarty, J. Examining Unlock Journaling with Diaries and Reminders for In Situ Self-Report in Health and Wellness. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; Association for Computing Machinery: New York, NY, USA, 2016. CHI ’16. pp. 5658–5664. [Google Scholar] [CrossRef] [Green Version]

- Lathia, N.; Rachuri, K.K.; Mascolo, C.; Rentfrow, P.J. Contextual dissonance: Design bias in sensor-based experience sampling methods. In Proceedings of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Zurich, Switzerland, 8–12 September 2013; Association for Computing Machinery: New York, NY, USA, 2013. UbiComp ’13. pp. 183–192. [Google Scholar] [CrossRef]

- Van Berkel, N.; Ferreira, D.; Kostakos, V. The Experience Sampling Method on Mobile Devices. ACM Comput. Surv. 2017, 50, 93:1–93:40. [Google Scholar] [CrossRef]

- van Berkel, N.; Goncalves, J.; Lovén, L.; Ferreira, D.; Hosio, S.; Kostakos, V. Effect of experience sampling schedules on response rate and recall accuracy of objective self-reports. Int. J. Hum.-Comput. Stud. 2019, 125, 118–128. [Google Scholar] [CrossRef]

- Burgin, C.J.; Silvia, P.J.; Eddington, K.M.; Kwapil, T.R. Palm or Cell? Comparing Personal Digital Assistants and Cell Phones for Experience Sampling Research. Soc. Sci. Comput. Rev. 2013, 31, 244–251. [Google Scholar] [CrossRef] [Green Version]

- Seebregts, C.J.; Zwarenstein, M.; Mathews, C.; Fairall, L.; Flisher, A.J.; Seebregts, C.; Mukoma, W.; Klepp, K.I. Handheld computers for survey and trial data collection in resource-poor settings: Development and evaluation of PDACT, a Palm™ Pilot interviewing system. Int. J. Med. Inform. 2009, 78, 721–731. [Google Scholar] [CrossRef]

- Mehl, M.R.; Conner, T.S. (Eds.) Handbook of Research Methods for Studying Daily Life; The Guilford Press: New York, NY, USA, 2012; pp. xxvii, 676. [Google Scholar]

- Miller, G. The Smartphone Psychology Manifesto. Perspect. Psychol. Sci. 2012, 7, 221–237. [Google Scholar] [CrossRef] [Green Version]

- Stone, A.A.; Shiffman, S.; Schwartz, J.E.; Broderick, J.E.; Hufford, M.R. Patient non-compliance with paper diaries. BMJ 2002, 324, 1193–1194. [Google Scholar] [CrossRef] [Green Version]

- Ilumivu. 2021. Available online: https://ilumivu.com/ (accessed on 5 October 2021).

- LifeData Experience Sampling App. 2021. Available online: https://www.lifedatacorp.com/ (accessed on 5 October 2021).

- Metricwire Inc. Real-World Data|Real-Life Impact. 2021. Available online: https://metricwire.com/ (accessed on 5 October 2021).

- Experience Sampling—movisensXS. Available online: https://www.movisens.com/en/products/movisensxs/ (accessed on 5 October 2021).

- Stieger, S.; Schmid, I.; Altenburger, P.; Lewetz, D. The Sensor-Based Physical Analogue Scale as a Novel Approach for Assessing Frequent and Fleeting Events: Proof of Concept. Front. Psychiatry 2020. [Google Scholar] [CrossRef]

- Intille, S.; Haynes, C.; Maniar, D.; Ponnada, A.; Manjourides, J. μEMA: Microinteraction-based ecological momentary assessment (EMA) using a smartwatch. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; Association for Computing Machinery: New York, NY, USA, 2016. UbiComp ’16. pp. 1124–1128. [Google Scholar] [CrossRef]

- Mehrotra, A.; Vermeulen, J.; Pejovic, V.; Musolesi, M. Ask, but don’t interrupt: The case for interruptibility-aware mobile experience sampling. In Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2015 ACM International Symposium on Wearable Computers, Osaka, Japan, 7–11 September 2015; Association for Computing Machinery: New York, NY, USA, 2015. UbiComp/ISWC’15 Adjunct. pp. 723–732. [Google Scholar] [CrossRef]

- Pejovic, V.; Lathia, N.; Mascolo, C.; Musolesi, M. Mobile-Based Experience Sampling for Behaviour Research. In Emotions and Personality in Personalized Services: Models, Evaluation and Applications; Tkalčič, M., De Carolis, B., de Gemmis, M., Odić, A., Košir, A., Eds.; Human–Computer Interaction Series; Springer International Publishing: Cham, Switzerland, 2016; pp. 141–161. [Google Scholar] [CrossRef] [Green Version]

- Ghosh, S.; Ganguly, N.; Mitra, B.; De, P. Designing An Experience Sampling Method for Smartphone based Emotion Detection. IEEE Trans. Affect. Comput. 2019. [Google Scholar] [CrossRef] [Green Version]

- Ho, J.; Intille, S.S. Using context-aware computing to reduce the perceived burden of interruptions from mobile devices. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Portland, OR, USA, 2–7 April 2005; Association for Computing Machinery: New York, NY, USA, 2005. CHI ’05. pp. 909–918. [Google Scholar] [CrossRef] [Green Version]

- Beukenhorst, A.L.; Howells, K.; Cook, L.; McBeth, J.; O’Neill, T.W.; Parkes, M.J.; Sanders, C.; Sergeant, J.C.; Weihrich, K.S.; Dixon, W.G. Engagement and Participant Experiences With Consumer Smartwatches for Health Research: Longitudinal, Observational Feasibility Study. JMIR mHealth uHealth 2020, 8, e14368. [Google Scholar] [CrossRef] [Green Version]

- Ponnada, A.; Haynes, C.; Maniar, D.; Manjourides, J.; Intille, S. Microinteraction Ecological Momentary Assessment Response Rates: Effect of Microinteractions or the Smartwatch? Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2017, 1, 92:1–92:16. [Google Scholar] [CrossRef]

- Pizza, S.; Brown, B.; McMillan, D.; Lampinen, A. Smartwatch in vivo. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; Association for Computing Machinery: New York, NY, USA, 2016. CHI ’16. pp. 5456–5469. [Google Scholar] [CrossRef]

- Ashbrook, D.L.; Clawson, J.R.; Lyons, K.; Starner, T.E.; Patel, N. Quickdraw: The impact of mobility and on-body placement on device access time. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Florence, Italy, 5–10 April 2008; Association for Computing Machinery: New York, NY, USA, 2008. CHI ’08. pp. 219–222. [Google Scholar] [CrossRef]

- Nadal, C.; Earley, C.; Enrique, A.; Vigano, N.; Sas, C.; Richards, D.; Doherty, G. Integration of a smartwatch within an internet-delivered intervention for depression: Protocol for a feasibility randomized controlled trial on acceptance. Contemp. Clin. Trials 2021, 103, 106323. [Google Scholar] [CrossRef]

- Ekiz, D.; Can, Y.S.; Dardagan, Y.C.; Ersoy, C. Is Your Smartband Smart Enough to Know Who You Are: Continuous Physiological Authentication in The Wild. IEEE Access 2020, 8, 59402–59411. [Google Scholar] [CrossRef]

- Ashry, S.; Ogawa, T.; Gomaa, W. CHARM-Deep: Continuous Human Activity Recognition Model Based on Deep Neural Network Using IMU Sensors of Smartwatch. IEEE Sens. J. 2020, 20, 8757–8770. [Google Scholar] [CrossRef]

- Quiroz, J.C.; Geangu, E.; Yong, M.H. Emotion Recognition Using Smart Watch Sensor Data: Mixed-Design Study. JMIR Ment. Health 2018, 5, e10153. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Jayathissa, P.; Quintana, M.; Sood, T.; Nazarian, N.; Miller, C. Is your clock-face cozie? A smartwatch methodology for the in-situ collection of occupant comfort data. J. Phys. Conf. Ser. 2019, 1343, 012145. [Google Scholar] [CrossRef]

- Goodman, S.; Kirchner, S.; Guttman, R.; Jain, D.; Froehlich, J.; Findlater, L. Evaluating Smartwatch-based Sound Feedback for Deaf and Hard-of-hearing Users Across Contexts. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–13. [Google Scholar]

- Perez, M.V.; Mahaffey, K.W.; Hedlin, H.; Rumsfeld, J.S.; Garcia, A.; Ferris, T.; Balasubramanian, V.; Russo, A.M.; Rajmane, A.; Cheung, L.; et al. Large-Scale Assessment of a Smartwatch to Identify Atrial Fibrillation. N. Engl. J. Med. 2019, 381, 1909–1917. [Google Scholar] [CrossRef] [PubMed]

- Zhao, J.; Lin, Y.; Wu, J.; Nyein, H.Y.Y.; Bariya, M.; Tai, L.C.; Chao, M.; Ji, W.; Zhang, G.; Fan, Z.; et al. A Fully Integrated and Self-Powered Smartwatch for Continuous Sweat Glucose Monitoring. ACS Sens. 2019, 4, 1925–1933. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Weiss, G.M.; Yoneda, K.; Hayajneh, T. Smartphone and Smartwatch-Based Biometrics Using Activities of Daily Living. IEEE Access 2019, 7, 133190–133202. [Google Scholar] [CrossRef]

- Baudisch, P.; Chu, G. Back-of-device interaction allows creating very small touch devices. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Boston, MA, USA, 4–9 April 2009; Association for Computing Machinery: New York, NY, USA, 2009. CHI ’09. pp. 1923–1932. [Google Scholar] [CrossRef] [Green Version]

- Xiao, R.; Laput, G.; Harrison, C. Expanding the input expressivity of smartwatches with mechanical pan, twist, tilt and click. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014; Association for Computing Machinery: New York, NY, USA, 2014. CHI ’14. pp. 193–196. [Google Scholar] [CrossRef]

- Hernandez, J.; McDuff, D.; Infante, C.; Maes, P.; Quigley, K.; Picard, R. Wearable ESM: Differences in the experience sampling method across wearable devices. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services, Florence, Italy, 6–9 September 2016; Association for Computing Machinery: New York, NY, USA, 2016. MobileHCI ’16. pp. 195–205. [Google Scholar] [CrossRef]

- Experiencer. 2021. Available online: https://experiencer.eu/ (accessed on 5 October 2021).

- GameBus—Social Health Games for the Entire Family. 2021. Available online: https://www.gamebus.eu/ (accessed on 5 October 2021).

- Khanshan, A. GameBus Wear Application User Guide. 2021. Available online: https://blog.gamebus.eu/?p=1191 (accessed on 5 October 2021).

- Shahrestani, A.; Gorp, P.V.; Blanc, P.L.; Greidanus, F.; de Groot, K.; Leermakers, J. Unified Health Gamification can significantly improve well-being in corporate environments. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju Island, Korea, 11–15 July 2017; pp. 4507–4511. [Google Scholar] [CrossRef] [Green Version]

- Klasnja, P.; Harrison, B.L.; LeGrand, L.; LaMarca, A.; Froehlich, J.; Hudson, S.E. Using wearable sensors and real time inference to understand human recall of routine activities. In Proceedings of the 10th International Conference on Ubiquitous Computing, Seoul, Korea, 21–24 September 2008; Association for Computing Machinery: New York, NY, USA, 2008. UbiComp ’08. pp. 154–163. [Google Scholar] [CrossRef]

- Khan, V.J.; Markopoulos, P.; Eggen, B.; IJsselsteijn, W.; de Ruyter, B. Reconexp: A way to reduce the data loss of the experiencing sampling method. In Proceedings of the 10th International Conference on Human Computer Interaction with Mobile Devices and Services, Amsterdam, The Netherlands, 2–5 September 2008; Association for Computing Machinery: New York, NY, USA, 2008. MobileHCI ’08. pp. 471–476. [Google Scholar] [CrossRef]

- Ferreira, D.; Goncalves, J.; Kostakos, V.; Barkhuus, L.; Dey, A.K. Contextual experience sampling of mobile application micro-usage. In Proceedings of the 16th International Conference on Human-Computer Interaction with Mobile Devices & Services, Toronto, ON, Canada, 23–26 September 2014; Association for Computing Machinery: New York, NY, USA, 2014. MobileHCI ’14. pp. 91–100. [Google Scholar] [CrossRef]

- Udovičić, G.; Ðerek, J.; Russo, M.; Sikora, M. Wearable Emotion Recognition System based on GSR and PPG Signals. In Proceedings of the 2nd International Workshop on Multimedia for Personal Health and Health Care, Mountain View, CA, USA, 23 October 2017; Association for Computing Machinery: New York, NY, USA, 2017. MMHealth ’17. pp. 53–59. [Google Scholar] [CrossRef]

- Watson, D.; Clark, L.A.; Tellegen, A. Development and validation of brief measures of positive and negative affect: The PANAS scales. J. Pers. Soc. Psychol. 1988, 54, 1063–1070. [Google Scholar] [CrossRef]

- Samsung Corp. HumanActivityMonitor API. 2021. Available online: https://docs.tizen.org/application/web/api/5.5/device_api/mobile/tizen/humanactivitymonitor.html#PedometerStepStatus (accessed on 5 October 2021).

- Csikszentmihalyi, M. Flow: The Psychology of Optimal Experience; Harper & Row: New York, NY, USA, 1990. [Google Scholar]

- Nuijten, R.; Van Gorp, P.; Khanshan, A.; Le Blanc, P.; Van den Berg, P.; Kemperman, A.; Simons, M. Evaluating the impact of personalized goal setting on engagement levels of government staff with a gamified mHealth tool: Results from a two-month randomized intervention trial. J. Med. Internet Res. 2021. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khanshan, A.; Van Gorp, P.; Nuijten, R.; Markopoulos, P. Assessing the Influence of Physical Activity Upon the Experience Sampling Response Rate on Wrist-Worn Devices. Int. J. Environ. Res. Public Health 2021, 18, 10593. https://doi.org/10.3390/ijerph182010593
