Artificial Intelligence in the Diagnosis and Imaging-Based Assessment of Pelvic Organ Prolapse: A Scoping Review

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Protocol

2.2. Eligibility Criteria

2.3. Search Methodology

2.4. Screening and Eligibility Assessment

2.5. Data Charting and Extraction

2.6. Level of Evidence Assessment

3. Results

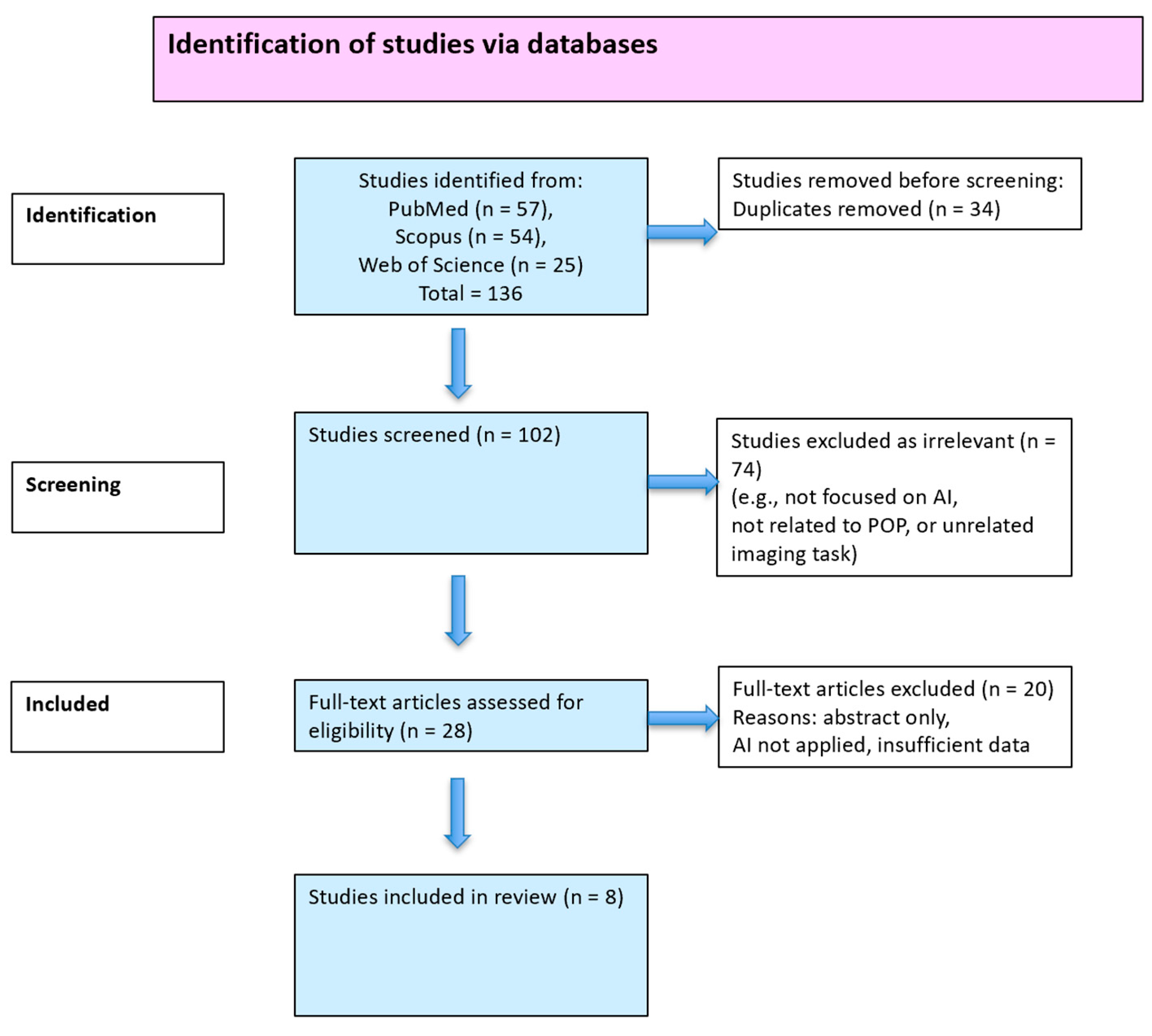

3.1. Study Selection

3.2. Study Characteristics

3.3. Imaging Modalities and AI Approaches

3.3.1. Ultrasound

3.3.2. MRI

3.3.3. AI Methodologies

4. Discussion

4.1. Summary of the Main Findings

4.2. Advances in AI Architectures

4.3. Clinical Relevance and Integration

4.4. Unresolved Technical and Methodological Issues

4.5. Challenges in Imaging and AI Performance

4.6. Limitations of the Study

4.7. Ethical, Regulatory, and Global Considerations

4.8. Implementation Challenges and Opportunities

4.9. Potential Bias and Reporting Gaps

4.10. Future Perspectives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| POP | Pelvic organ prolapse |
| AI | Artificial intelligence |
| AUC | Area under the curve |
| CLAIM | Checklist for Artificial Intelligence in Medical Imaging |
| CNN | Convolutional neural network |
| CONSORT | Consolidated Standards of Reporting Trials |
| CT | Computed tomography |
| DE | Deep encoder (Encoder–Decoder) |
| DL | Deep learning |
| GDPR | General Data Protection Regulation |
| JBI | Joanna Briggs Institute |
| MRI | Magnetic resonance imaging |
| PCC | Population–Concept–Context |
| PRISMA-ScR | Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews |
| SHAP | Shapley Additive exPlanations |
| SVM | Support vector machine |
| VGG | Visual Geometry Group |
| ViT | Vision transformer |
| ResNet-18 | Residual Neural Network with 18 layers |
| XGBoost | eXtreme Gradient Boosting |
| PACS | Picture Archiving and Communication Systems |
| FDA | Food and Drug Administration |
| EMA | European Medicines Agency |
| POP-Q | Pelvic organ prolapse quantification system |

References

1. Recker, F.; Gembruch, U.; Strizek, B. Clinical Ultrasound Applications in Obstetrics and Gynecology in the Year 2024. J. Clin. Med. 2024, 13, 1244.

2. Nguyen, P.N.; Nguyen, V.T. Additional Value of Doppler Ultrasound to B-Mode Ultrasound in Assessing for Uterine Intracavitary Pathologies among Perimenopausal and Postmenopausal Bleeding Women: A Multicentre Prospective Observational Study in Vietnam. J. Ultrasound 2022, 26, 459–469.

3. Salvador, J.C.; Coutinho, M.P.; Venâncio, J.M.; Viamonte, B. Dynamic Magnetic Resonance Imaging of the Female Pelvic Floor—A Pictorial Review. Insights Imaging 2019, 10, 4.

4. Chen, L.; Xue, Z.; Wu, Q. Review of MRI-Based Three-Dimensional Digital Model Reconstruction of Female Pelvic Floor Organs. J. Shanghai Jiaotong Univ. (Med. Sci.) 2022, 42, 381–386.

5. Collins, S.; Lewicky-Gaupp, C. Pelvic Organ Prolapse. Gastroenterol. Clin. N. Am. 2022, 51, 177–193.

6. van der Steen, A.; Jochem, K.Y.; Consten, E.C.J.; Simonis, F.F.J.; Grob, A.T.M. POP-Q Versus Upright MRI Distance Measurements: A Prospective Study in Patients with POP. Int. Urogynecol. J. 2024, 35, 1255–1261.

7. Hainsworth, A.J.; Gala, T.; Johnston, L.; Solanki, D.; Ferrari, L.; Schizas, A.M.P.; Santoro, G. Integrated Total Pelvic Floor Ultrasound in Pelvic Floor Dysfunction. Continence 2023, 8, 101045.

8. Wang, X.; He, D.; Feng, F.; Ashton-Miller, J.; DeLancey, J.; Luo, J. Multi-Label Classification of Pelvic Organ Prolapse Using Stress Magnetic Resonance Imaging with Deep Learning. Int. Urogynecol. J. 2022, 33, S88–S89.

9. Dietz, H.P. Translabial Ultrasonography. In Pelvic Floor Disorders: Imaging and Multidisciplinary Approach to Management; Santoro, G.A., Wieczorek, A.P., Bartram, C.I., Eds.; Springer: Milan, Italy, 2010; pp. 405–428. ISBN 978-88-470-1542-5.

10. Krupinski, E.A. Current Perspectives in Medical Image Perception. Atten. Percept. Psychophys. 2010, 72, 1205–1217.

11. Pinto-Coelho, L. How Artificial Intelligence Is Shaping Medical Imaging Technology: A Survey of Innovations and Applications. Bioengineering 2023, 10, 1435.

12. Srivastav, S.; Chandrakar, R.; Gupta, S.; Babhulkar, V.; Agrawal, S.; Jaiswal, A.; Prasad, R.; Wanjari, M.B. ChatGPT in Radiology: The Advantages and Limitations of Artificial Intelligence for Medical Imaging Diagnosis. Cureus 2023, 15, e41435.

13. Mienye, I.D.; Swart, T.G.; Obaido, G.; Jordan, M.; Ilono, P. Deep Convolutional Neural Networks in Medical Image Analysis: A Review. Information 2025, 16, 195.

14. Sreelakshmi, S.; Malu, G.; Sherly, E.; Mathew, R. M-Net: An Encoder-Decoder Architecture for Medical Image Analysis Using Ensemble Learning. Results Eng. 2023, 17, 100927.

15. Azad, R.; Kazerouni, A.; Heidari, M.; Aghdam, E.K.; Molaei, A.; Jia, Y.; Jose, A.; Roy, R.; Merhof, D. Advances in Medical Image Analysis with Vision Transformers: A Comprehensive Review. Med. Image Anal. 2024, 91, 103000.

16. Jiao, W.; Hao, X.; Qin, C. The Image Classification Method with CNN-XGBoost Model Based on Adaptive Particle Swarm Optimization. Information 2021, 12, 156.

17. Mak, S.; Thomas, A. Steps for Conducting a Scoping Review. J. Grad. Med. Educ. 2022, 14, 565–567.

18. Pollock, D.; Evans, C.; Menghao Jia, R.; Alexander, L.; Pieper, D.; Brandão De Moraes, É.; Peters, M.D.J.; Tricco, A.C.; Khalil, H.; Godfrey, C.M.; et al. “How-to”: Scoping Review? J. Clin. Epidemiol. 2024, 176, 111572.

19. Levac, D.; Colquhoun, H.; O’Brien, K.K. Scoping Studies: Advancing the Methodology. Implement. Sci. 2010, 5, 69.

20. Brignardello-Petersen, R.; Santesso, N.; Guyatt, G.H. Systematic Reviews of the Literature: An Introduction to Current Methods. Am. J. Epidemiol. 2025, 194, 536–542.

21. Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L.; et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018, 169, 467–473.

22. Szentimrey, Z.; Ameri, G.; Hong, C.X.; Cheung, R.Y.K.; Ukwatta, E.; Eltahawi, A. Automated Segmentation and Measurement of the Female Pelvic Floor from the Mid-Sagittal Plane of 3D Ultrasound Volumes. Med. Phys. 2023, 50, 6215–6227.

23. Zhu, S.; Zhu, X.; Zheng, B.; Wu, M.; Li, Q.; Qian, C. Building a Pelvic Organ Prolapse Diagnostic Model Using Vision Transformer on Multi-Sequence MRI. Med. Phys. 2025, 52, 553–564.

24. Yang, F.; Hu, R.; Wu, H.; Li, S.; Peng, S.; Luo, H.; Lv, J.; Chen, Y.; Mei, L. Combining Pelvic Floor Ultrasonography with Deep Learning to Diagnose Anterior Compartment Organ Prolapse. Quant. Imaging Med. Surg. 2025, 15, 2.

25. Duan, L.; Wang, Y.; Li, J.; Zhou, N. Exploring the Clinical Diagnostic Value of Pelvic Floor Ultrasound Images for Pelvic Organ Prolapses through Deep Learning. J. Supercomput. 2021, 77, 10699–10720.

26. Feng, F.; Ashton-Miller, J.A.; DeLancey, J.O.L.; Luo, J. Convolutional Neural Network-Based Pelvic Floor Structure Segmentation Using Magnetic Resonance Imaging in Pelvic Organ Prolapse. Med. Phys. 2020, 47, 4281–4293.

27. Feng, F.; Ashton-Miller, J.A.; DeLancey, J.O.L.; Luo, J. Feasibility of a Deep Learning-Based Method for Automated Localization of Pelvic Floor Landmarks Using Stress MR Images. Int. Urogynecol. J. 2021, 32, 3069–3075.

28. Garcia-Mejido, J.A.; Fernandez-Palacin, F.; Sainz-Bueno, J.A. Systematic Review and Meta-Analysis of the Ultrasound Diagnosis of Pelvic Organ Prolapse (MUDPOP). Clin. E Investig. Ginecol. Y Obstet. 2025, 52, 101018.

29. Howick, J.; Chalmers, I.; Glasziou, P.; Greenhalgh, T.; Heneghan, C.; Liberati, A.; Moschetti, I.; Phillips, B.; Thornton, H. The 2011 Oxford CEBM Levels of Evidence (Introductory Document). Oxford Centre for Evidence-Based Medicine. Available online: http://www.cebm.net/index.aspx?o=5653 (accessed on 10 June 2025).

30. Ponce-Bobadilla, A.V.; Schmitt, V.; Maier, C.S.; Mensing, S.; Stodtmann, S. Practical Guide to SHAP Analysis: Explaining Supervised Machine Learning Model Predictions in Drug Development. Clin. Transl. Sci. 2024, 17, e70056.

31. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

32. Madhu, C.; Swift, S.; Moloney-Geany, S.; Drake, M.J. How to Use the Pelvic Organ Prolapse Quantification (POP-Q) System? Neurourol. Urodyn. 2018, 37, S39–S43.

33. Billone, V.; Gullo, G.; Perino, G.; Catania, E.; Cucinella, G.; Ganduscio, S.; Vassiliadis, A.; Zaami, S. Robotic versus Mini-Laparoscopic Colposacropexy to Treat Pelvic Organ Prolapse: A Retrospective Observational Cohort Study and a Medicolegal Perspective. J. Clin. Med. 2024, 13, 4802.

34. Culmone, S.; Speciale, P.; Guastella, E.; Puglisi, T.; Cucinella, G.; Piazza, F.; Morreale, C.; Buzzaccarini, G.; Gullo, G. Sacral Neuromodulation for Refractory Lower Urinary Tract Dysfunctions: A Single-Center Retrospective Cohort Study. Ital. J. Gynaecol. Obstet. 2022, 34, 317.

35. Rubin, N.; Cerentini, T.M.; Schlöttgen, J.; do Nascimento Petter, G.; Bertotto, A.; La Verde, M.; Gullo, G.; Telles da Rosa, L.H.; Viana da Rosa, P.; Della Méa Plentz, R. Urinary Incontinence and Quality of Life in High-Performance Swimmers: An Observational Study. Health Care Women Int. 2024, 45, 1446–1455.

36. Laganà, A.S.; La Rosa, V.L.; Palumbo, M.A.; Rapisarda, A.M.; Noventa, M.; Vitagliano, A.; Gullo, G.; Vitale, S.G. The Impact of Stress Urinary Incontinence on Sexual Function and Quality of Life. Gazz Med. Ital.-Arch. Sci. Med. 2018, 177, 415–416.

37. Di Giovanni, A.; Exacoustos, C.; Guerriero, S. Guidelines for Diagnosis and Treatment of Endometriosis. Ital. J. Gynaecol. Obstet. 2018, 30, 23–26.

38. Dhadve, R.U.; Krishnani, K.S.; Kalekar, T.; Durgi, E.C.; Agarwal, U.; Madhu, S.; Kumar, D. Imaging of Pelvic Floor Disorders Involving the Posterior Compartment on Dynamic MR Defaecography. S. Afr. J. Radiol. 2024, 28, 2935.

39. Grob, A.T.M.; Olde Heuvel, J.; Futterer, J.J.; Massop, D.; Veenstra van Nieuwenhoven, A.L.; Simonis, F.F.J.; van der Vaart, C.H. Underestimation of Pelvic Organ Prolapse in the Supine Straining Position, Based on Magnetic Resonance Imaging Findings. Int. Urogynecol. J. 2019, 30, 1939–1944.

40. Voigt, P.; Von Dem Bussche, A. The EU General Data Protection Regulation (GDPR); Springer International Publishing: Cham, Switzerland, 2017; ISBN 978-3-319-57958-0.

41. Tejani, A.S.; Klontzas, M.E.; Gatti, A.A.; Mongan, J.T.; Moy, L.; Park, S.H.; Kahn, C.E.; for the CLAIM 2024 Update Panel; Abbara, S.; Afat, S.; et al. Checklist for Artificial Intelligence in Medical Imaging (CLAIM): 2024 Update. Radiol. Artif. Intell. 2024, 6, e240300.

42. Liu, X.; Cruz Rivera, S.; Moher, D.; Calvert, M.J.; Denniston, A.K.; The SPIRIT-AI and CONSORT-AI Working Group; SPIRIT-AI and CONSORT-AI Steering Group; Chan, A.-W.; Darzi, A.; Holmes, C.; et al. Reporting Guidelines for Clinical Trial Reports for Interventions Involving Artificial Intelligence: The CONSORT-AI Extension. Nat. Med. 2020, 26, 1364–1374.

| Study (Author, Year) | Dataset Type | Annotation Method | Annotation Tool (If Stated) | Augmentation Reported | Notes on Interobserver Reliability |
|---|---|---|---|---|---|
| Wang et al., 2022 [8] | Labeled stress MRI (multi-label POP classification) | Manual expert labeling | Not reported | Yes, but no details | Not reported |
| Szentimrey et al., 2023 [22] | Labeled 3D ultrasound (mid-sagittal plane segmentation) | Manual segmentation | Not reported | Not stated | Not reported |
| Zhu et al., 2025 [23] | Labeled multi-sequence MRI for POP diagnosis | Manual annotation (details limited) | Not reported | Yes, geometric transforms | Not reported |
| Yang et al., 2025 [24] | Labeled ultrasound for anterior compartment POP | Manual expert annotations | Not reported | Yes, flipping, rotation | Not reported |
| Duan et al., 2021 [25] | Labeled ultrasound for POP identification | Manual annotation (POP stage) | Not reported | Yes, flipping, brightness | Not reported |
| Feng et al., 2020 [26] | Labeled MRI for pelvic floor segmentation | Manual delineation | Not reported | No | Not reported |
| Feng et al., 2021 [27] | Labeled stress MRI for landmark localization | Manual landmark placement | Not reported | Not stated | Not reported |
| García-Mejido et al., 2025 [28] | Ultrasound dataset labeled for POP compartments | Manual annotation by urogynecologists | Not reported | Yes, general augmentation | Not reported |
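Several studies in the table above report flipping, rotation, or brightness changes as augmentation without further detail. The following is a minimal sketch of what such transforms do to a 2D grayscale image, assuming intensities normalized to [0, 1]. The function names, the 50% application probability, and the 0.8 to 1.2 brightness range are illustrative assumptions, not parameters taken from any reviewed study.

```python
import random
from typing import List

Image = List[List[float]]  # 2D grayscale image, intensities assumed in [0, 1]


def hflip(img: Image) -> Image:
    """Mirror the image left-right."""
    return [list(reversed(row)) for row in img]


def rotate90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale intensities by `factor` and clip the result to [0, 1]."""
    return [[min(1.0, max(0.0, px * factor)) for px in row] for row in img]


def augment(img: Image, rng: random.Random) -> Image:
    """Hypothetical pipeline: random flip, random rotation, random brightness.

    The probabilities and brightness range are assumed, for illustration only.
    """
    out = img
    if rng.random() < 0.5:
        out = hflip(out)
    if rng.random() < 0.5:
        out = rotate90(out)
    return adjust_brightness(out, rng.uniform(0.8, 1.2))
```

For example, `rotate90([[1, 2], [3, 4]])` returns `[[3, 1], [4, 2]]`. For segmentation masks or landmark coordinates, the same geometric transform has to be applied to the labels as to the image, while brightness changes apply to the image only; whether a flip is anatomically plausible also depends on the imaging plane and orientation conventions.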

| Study (First Author, Year) | Study Design | AI Focus | OCEBM Level of Evidence |
|---|---|---|---|
| García-Mejido et al., 2025 [28] | Prospective observational | 2D ultrasound, CNN + XGBoost | Level 2 |
| Yang et al., 2025 [24] | Retrospective cohort | 2D ultrasound, DL architectures | Level 3 |
| Duan et al., 2021 [25] | Retrospective comparative | 3D ultrasound, DL classification | Level 3 |
| Szentimrey et al., 2023 [22] | Technical segmentation | 3D ultrasound, anatomical mapping | Level 4 |
| Zhu et al., 2025 [23] | Model development + validation | MRI, vision transformer | Level 2 |
| Feng et al., 2021 [27] | Feasibility study | Stress MRI, landmark localization | Level 4 |
| Feng et al., 2020 [26] | Technical segmentation study | MRI, CNN | Level 4 |
| Wang et al., 2022 [8] | Retrospective model development | Stress MRI, ResNet-50 | Level 3 |

| Article | Modality | AI Method | Other Metrics | Article Type |
|---|---|---|---|---|
| Ultrasound Diagnosis of POP Using AI [28] | 2D Ultrasound | CNN + XGBoost | | Prospective Observational Study |
| Building a POP Diagnostic Model Using Vision Transformer [23] | MRI (multi-sequence) | Vision transformer | Kappa: 0.77 | Model Development and Validation |
| Exploring the Diagnostic Value of PF Ultrasound via DL [25] | 3D Ultrasound | CNN | Specificity: 84% | Comparative Study |
| Automated Segmentation of the Female Pelvic Floor (3D US) [22] | 3D Ultrasound | Segmentation (DL) | | Technical Segmentation Study |
| Combining Pelvic Floor US with DL to Diagnose Anterior Compartment POP [24] | 2D Ultrasound | AlexNet/VGG-16/ResNet-18 | Inference time: 13.4 ms | Retrospective Study |
| Convolutional NN-Based Pelvic Floor Segmentation Using MRI in POP [26] | MRI | CNN | No diagnostic metrics reported | Segmentation Feasibility Study |
| Feasibility of DL-Based Landmark Localization on Stress MRI [27] | Stress MRI | Encoder–decoder CNN | Localization error: 0.9 to 3.6 mm; time: 0.015 s | Feasibility Study |
| Multi-label Classification of POP Using Stress MRI with DL [8] | Stress MRI | Modified ResNet-50 | | Model Development and Validation |
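The "Other Metrics" column mixes agreement, timing, and distance measures. A minimal sketch of two of them follows, assuming that the kappa reported for [23] is unweighted Cohen's kappa between model output and reference labels, and that the localization error for [27] is the Euclidean distance between predicted and reference landmarks converted to millimetres via pixel spacing. Neither definition is confirmed by the table (an ordinal outcome such as POP stage could instead be scored with a weighted kappa), and the function names are hypothetical.

```python
import math
from collections import Counter
from typing import Hashable, Sequence, Tuple


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa between two label sequences of equal length."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(a)
    # Observed agreement: fraction of cases with identical labels.
    p_observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: product of each rater's marginal frequency per category.
    p_expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if p_expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_observed - p_expected) / (1.0 - p_expected)


def landmark_error_mm(
    pred: Tuple[float, float],
    ref: Tuple[float, float],
    spacing_mm: Tuple[float, float],
) -> float:
    """Euclidean distance between predicted and reference landmarks, in mm.

    Coordinates are (row, col) in pixels; spacing_mm is mm per pixel on each axis.
    """
    d_row = (pred[0] - ref[0]) * spacing_mm[0]
    d_col = (pred[1] - ref[1]) * spacing_mm[1]
    return math.hypot(d_row, d_col)
```

With an assumed isotropic spacing of 0.5 mm per pixel, `landmark_error_mm((10, 10), (13, 14), (0.5, 0.5))` gives 2.5 mm; scaling by spacing before taking the distance keeps the result correct when pixels are not square.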

| Article | Accuracy | Recall | Precision | F1-Score | AUC |
|---|---|---|---|---|---|
| Ultrasound Diagnosis of POP Using AI [28] | 98.31% | 100% | 98.18% | | |
| Building a POP Diagnostic Model Using Vision Transformer [23] | 0.76 | 0.86 | 0.86 | | |
| Exploring the Diagnostic Value of PF Ultrasound via DL [25] | 86% | 89% | 0.79 | | |
| Automated Segmentation of the Female Pelvic Floor (3D US) [22] | | | | | |
| Combining Pelvic Floor US with DL to Diagnose Anterior Compartment POP [24] | 93.53% | 0.852 | | | |
| Convolutional NN-Based Pelvic Floor Segmentation Using MRI in POP [26] | | | | | |
| Feasibility of DL-Based Landmark Localization on Stress MRI [27] | | | | | |
| Multi-label Classification of POP Using Stress MRI with DL [8] | | 0.72 | 0.84 | 0.77 | 0.91 |
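The metrics in the table above derive from a binary confusion matrix, although the table mixes percentages and fractions and does not state how multi-class or multi-label outputs were averaged. The sketch below assumes a single binary task (POP present vs. absent); the `Confusion` type and the function names are hypothetical. AUC is computed with the rank-based (Mann-Whitney) formulation: the probability that a randomly chosen positive case scores higher than a randomly chosen negative one, with ties counted as one half.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class Confusion:
    """Counts for a single binary task (hypothetical type)."""
    tp: int  # true positives
    fp: int  # false positives
    tn: int  # true negatives
    fn: int  # false negatives


def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def binary_metrics(c: Confusion) -> Dict[str, float]:
    """Accuracy, recall, precision, specificity, and F1.

    Raises ZeroDivisionError if a denominator is zero (e.g. no positive cases).
    """
    recall = c.tp / (c.tp + c.fn)     # sensitivity
    precision = c.tp / (c.tp + c.fp)  # positive predictive value
    return {
        "accuracy": (c.tp + c.tn) / (c.tp + c.fp + c.tn + c.fn),
        "recall": recall,
        "precision": precision,
        "specificity": c.tn / (c.tn + c.fp),
        "f1": f1_from(precision, recall),
    }


def auc_rank(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """AUC as P(random positive scores higher than random negative); ties count 0.5."""
    wins = 0.0
    for p in pos_scores:
        for q in neg_scores:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))
```

Because F1 is the harmonic mean of precision and recall, it offers a quick consistency check on reported values: for example, `f1_from(0.84, 0.72)` is about 0.775. The pairwise AUC loop is quadratic in the number of cases, which is adequate for illustration; sorting-based implementations scale better.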

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the Lithuanian University of Health Sciences. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Botoncea, M.; Molnar, C.; Butiurca, V.O.; Nicolescu, C.L.; Molnar-Varlam, C. Artificial Intelligence in the Diagnosis and Imaging-Based Assessment of Pelvic Organ Prolapse: A Scoping Review. Medicina 2025, 61, 1497. https://doi.org/10.3390/medicina61081497

Botoncea M, Molnar C, Butiurca VO, Nicolescu CL, Molnar-Varlam C. Artificial Intelligence in the Diagnosis and Imaging-Based Assessment of Pelvic Organ Prolapse: A Scoping Review. Medicina. 2025; 61(8):1497. https://doi.org/10.3390/medicina61081497

Chicago/Turabian Style: Botoncea, Marian, Călin Molnar, Vlad Olimpiu Butiurca, Cosmin Lucian Nicolescu, and Claudiu Molnar-Varlam. 2025. "Artificial Intelligence in the Diagnosis and Imaging-Based Assessment of Pelvic Organ Prolapse: A Scoping Review" Medicina 61, no. 8: 1497. https://doi.org/10.3390/medicina61081497

APA Style: Botoncea, M., Molnar, C., Butiurca, V. O., Nicolescu, C. L., & Molnar-Varlam, C. (2025). Artificial Intelligence in the Diagnosis and Imaging-Based Assessment of Pelvic Organ Prolapse: A Scoping Review. Medicina, 61(8), 1497. https://doi.org/10.3390/medicina61081497