Automated Image-Based Wound Area Assessment in Outpatient Clinics Using Computer-Aided Methods: A Development and Validation Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Wound Area Analysis

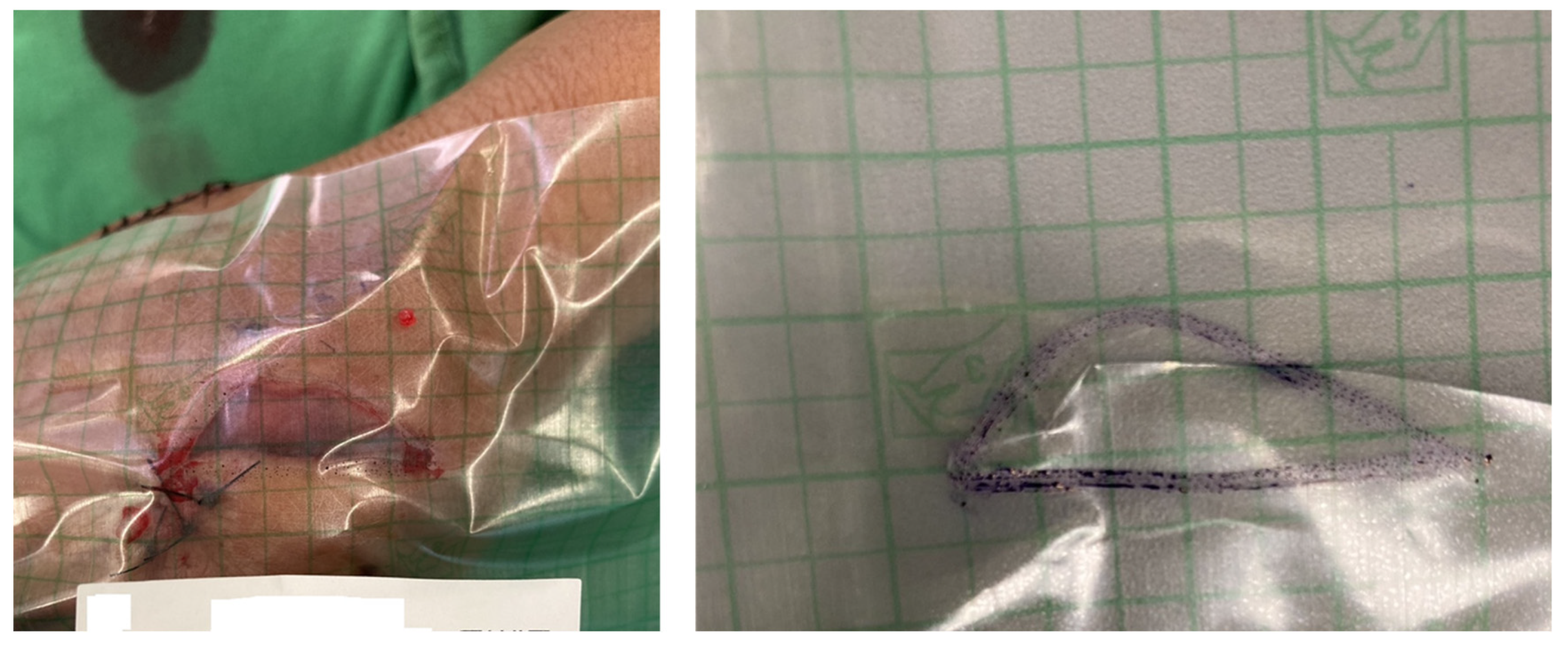

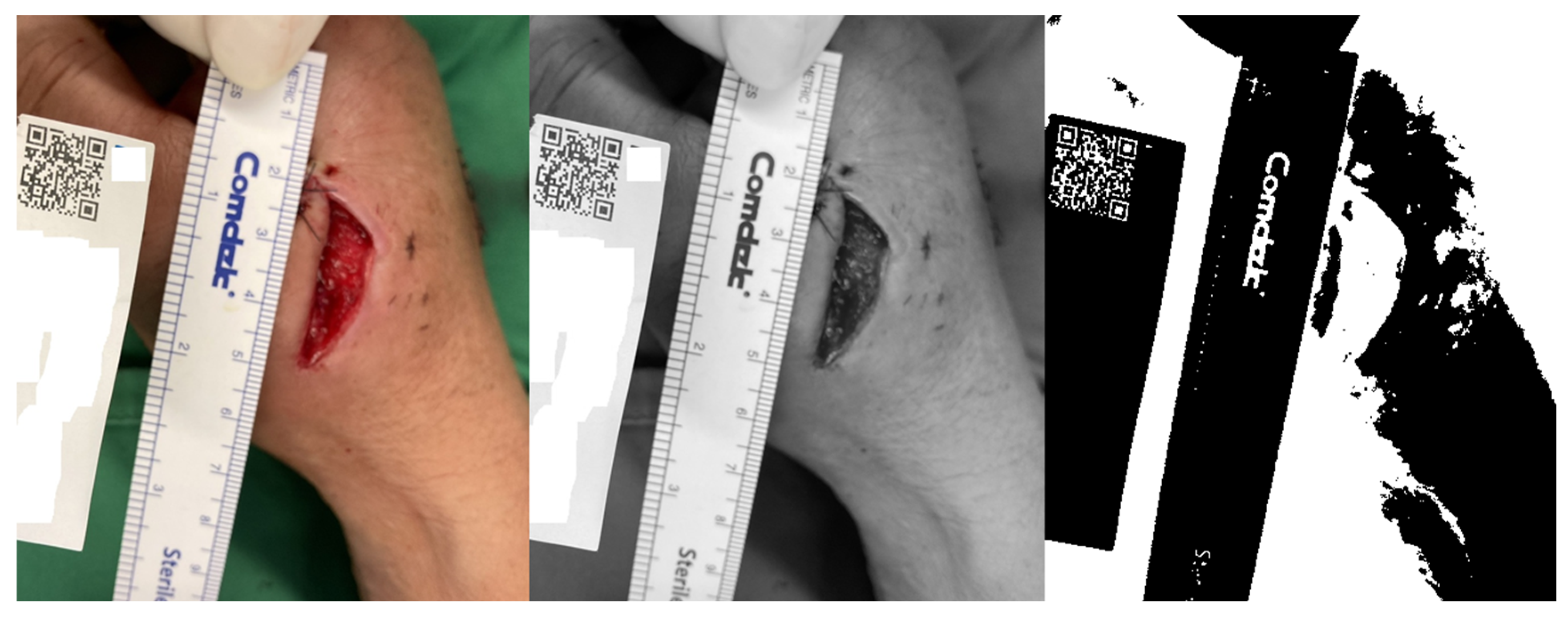

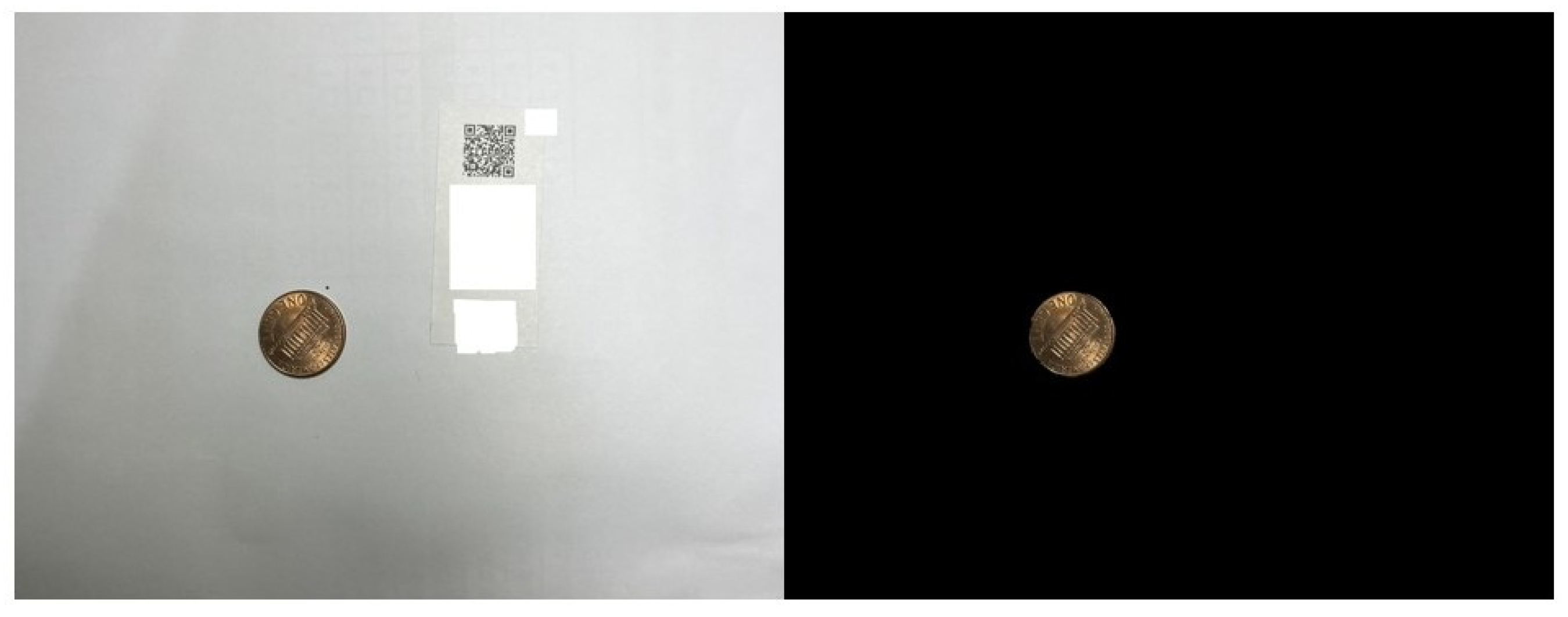

2.3. QR Code Detection Algorithm

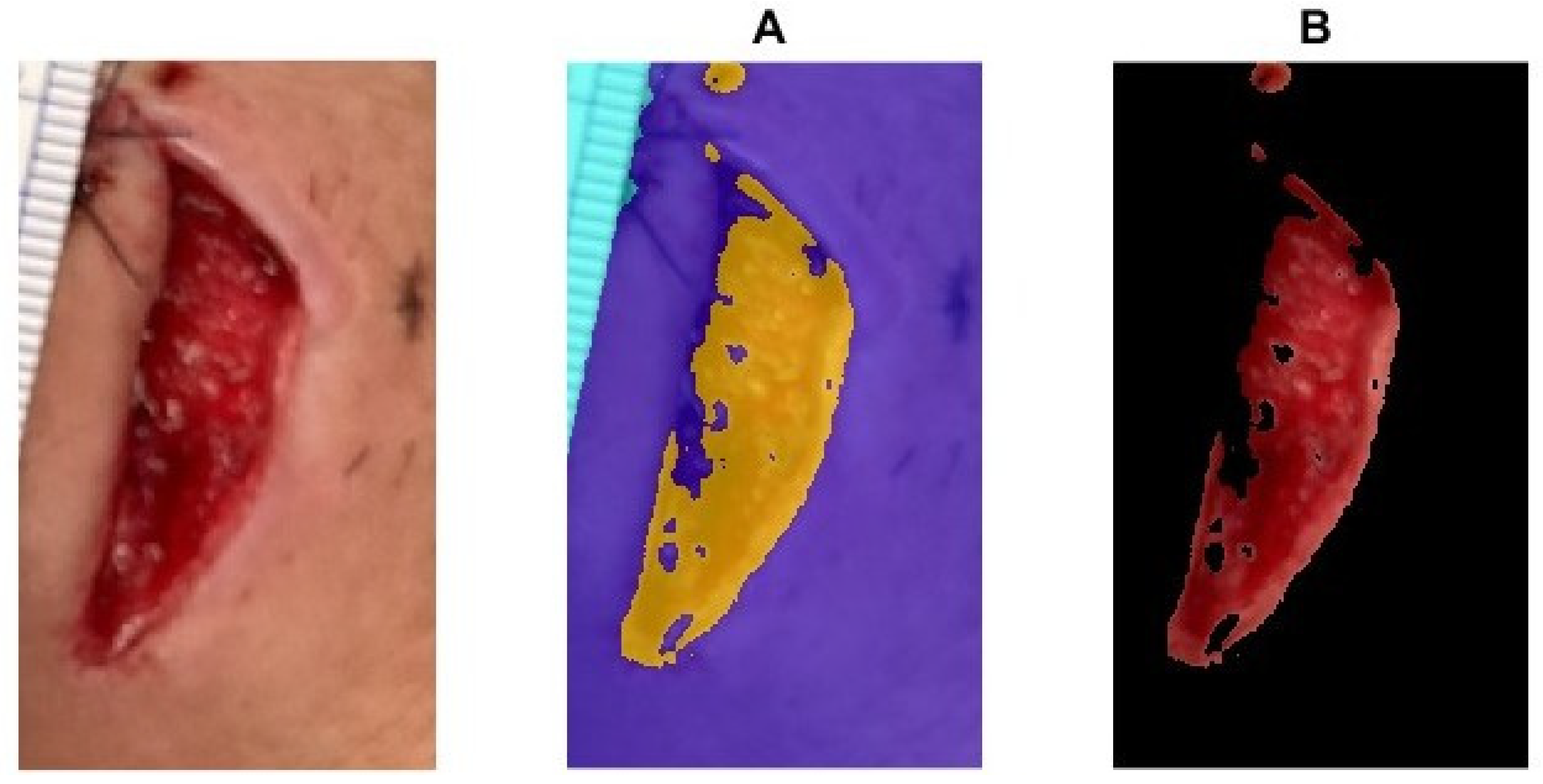

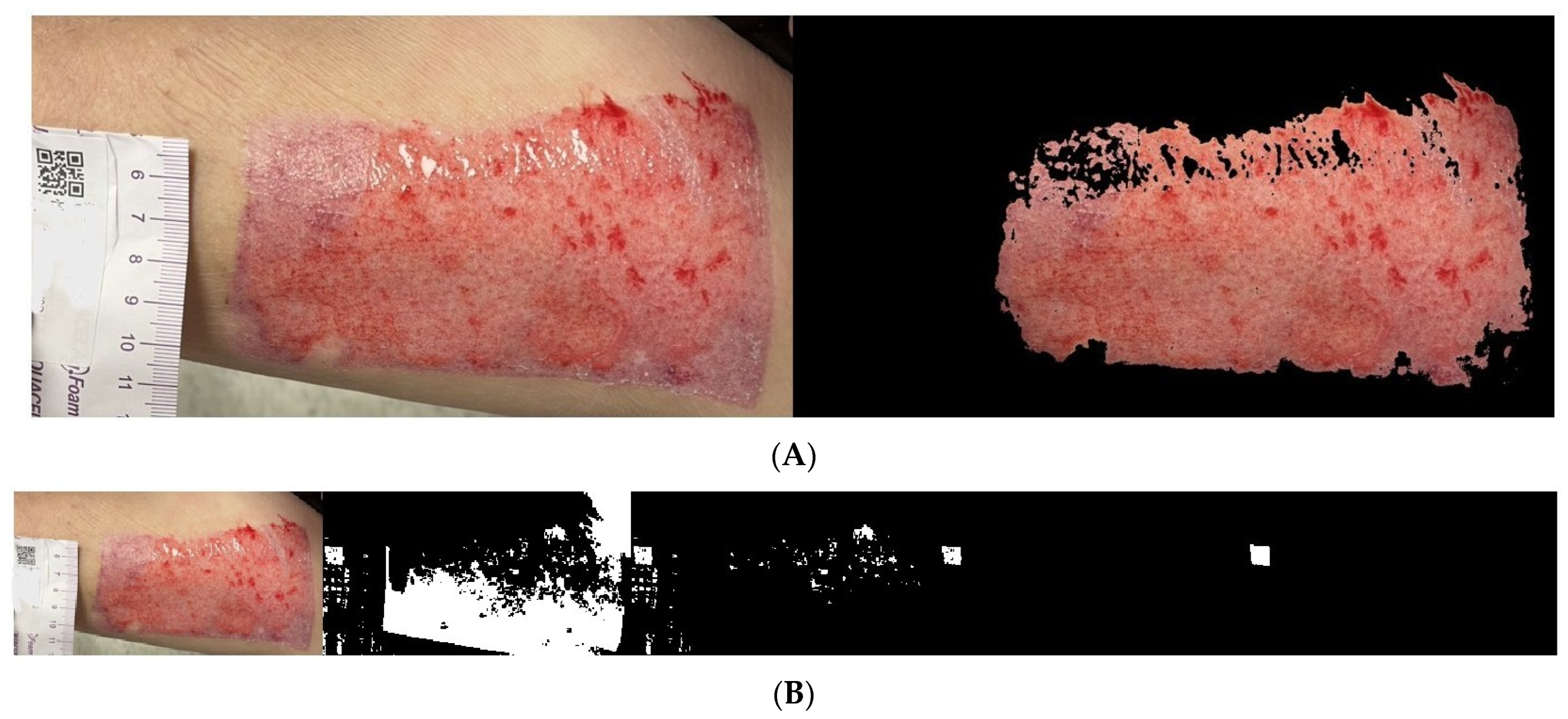

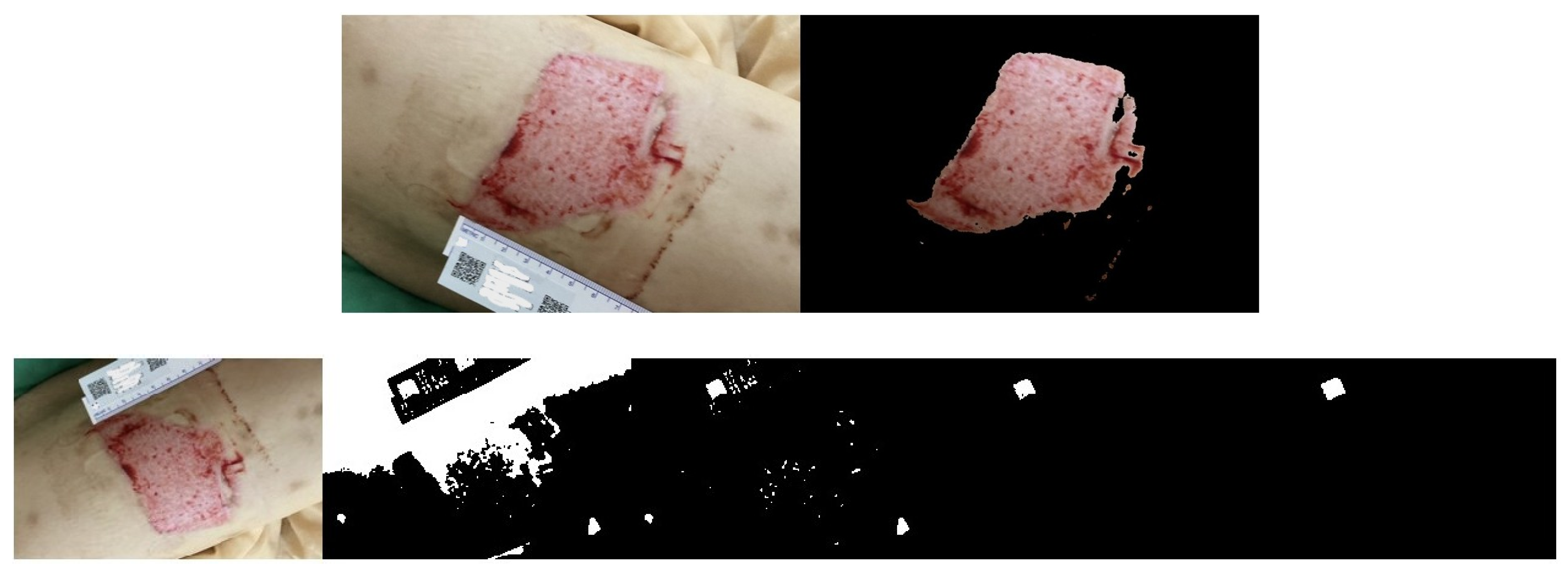

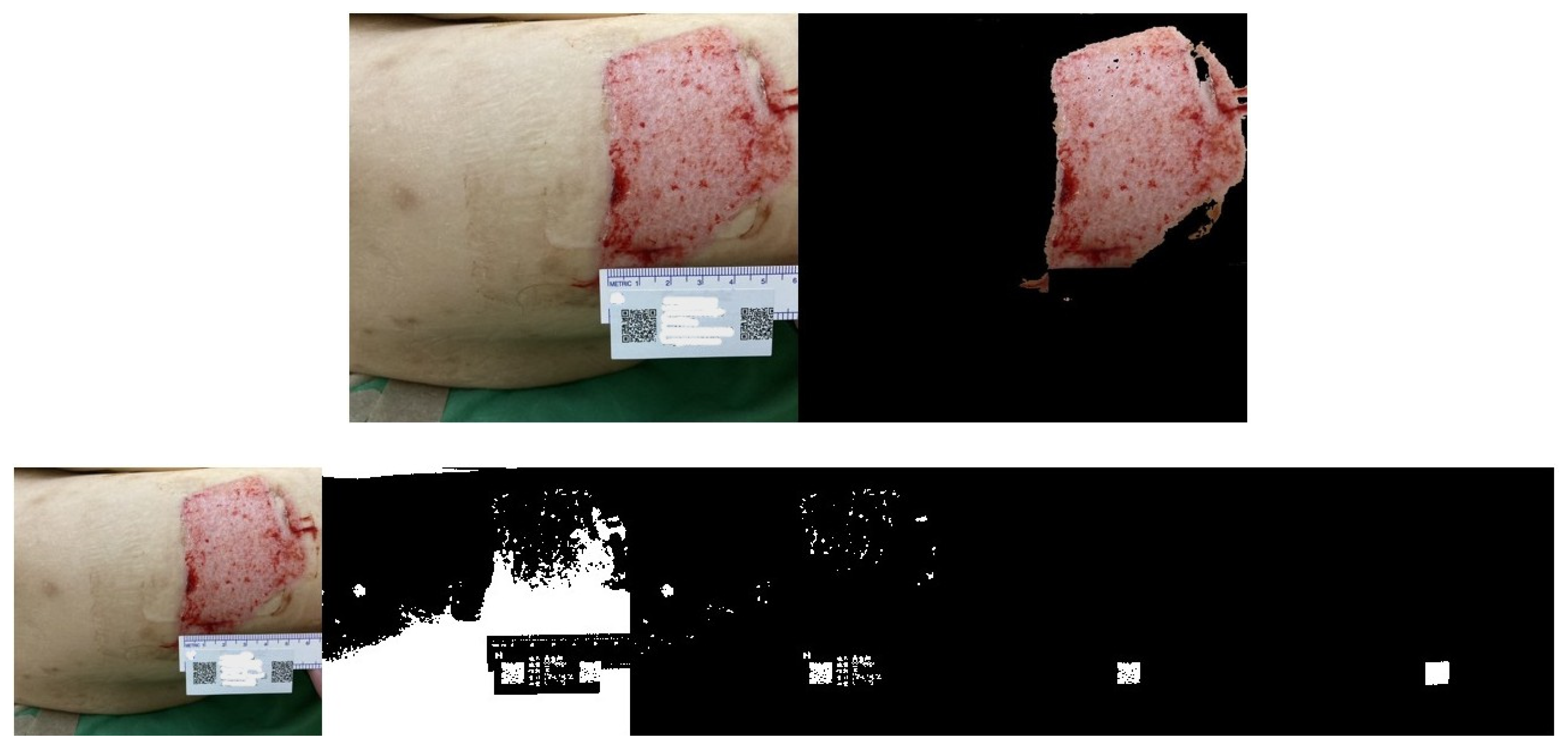

2.4. Wound Boundary Detection Algorithm

2.5. Verification of the Accuracy of the Proposed Algorithm

3. Results

Wound Area Assessment

4. Discussion

4.1. Limitations

4.2. Recommendations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Photo Shooting | Coin Area Pixel | QR Code Pixel | Ratio |
|---|---|---|---|
| 1st | 10,806 | 5470 | 1.9755 |
| 2nd | 13,831 | 6958 | 1.9877 |
| 3rd | 12,050 | 6190 | 1.9466 |
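The ratio column can be reproduced directly from the two pixel counts in each row. The following is a minimal Python sketch. The helper `pixels_to_cm2` and its `qr_area_cm2` parameter are hypothetical. They illustrate reference-object scaling, which converts a pixel count to physical area through a marker of known printed size. This is not the authors' published code, and the QR code's physical dimensions are not given in this section.

```python
# Pixel counts reported for the three photo shootings: (coin area, QR code area).
shots = {
    "1st": (10_806, 5_470),
    "2nd": (13_831, 6_958),
    "3rd": (12_050, 6_190),
}


def area_ratio(coin_px: int, qr_px: int) -> float:
    """Ratio of the coin's pixel area to the QR code's pixel area in one image."""
    return coin_px / qr_px


def pixels_to_cm2(region_px: int, qr_px: int, qr_area_cm2: float) -> float:
    """Hypothetical helper: convert a pixel count to cm^2 using the QR code as scale.

    Assumes the QR code's true printed area (qr_area_cm2) is known and that the
    QR code lies in roughly the same plane as the measured region.
    """
    return region_px * (qr_area_cm2 / qr_px)


ratios = {name: area_ratio(c, q) for name, (c, q) in shots.items()}
mean_ratio = sum(ratios.values()) / len(ratios)
# Relative spread across the shootings: (max - min) / mean
spread = (max(ratios.values()) - min(ratios.values())) / mean_ratio

for name, r in ratios.items():
    print(f"{name}: ratio = {r:.4f}")
print(f"mean ratio = {mean_ratio:.4f}, relative spread = {spread:.2%}")
```

Run as-is, it prints ratios of 1.9755, 1.9878 and 1.9467, with a relative spread of about 2.1% across the three shootings. The table's 2nd and 3rd values (1.9877, 1.9466) appear to be truncated rather than rounded at the fourth decimal place. A stable ratio across shots taken at different distances is what makes the QR code usable as a scale reference.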

| Photo Shooting | Mean ± Std | Pearson Correlation | ANOVA F-Statistic | ANOVA p-Value |
|---|---|---|---|---|
| 1st photo shot | 29.43 ± 5.40 | - | 0.0049 | 0.9951 |
| 2nd photo shot | 29.49 ± 5.46 | 0.997 (vs. Test 1) | | |
| 3rd photo shot | 29.55 ± 5.51 | 0.995 (vs. Test 1), 0.993 (vs. Test 2) | | |
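The correlation and ANOVA figures in this table can be sketched with the Python standard library. The version below assumes a standard one-way ANOVA that treats the three shots as independent groups. The section does not state which ANOVA variant was used. The input lists in the usage lines are toy values, not study data. For p-values, `scipy.stats.f_oneway` returns both the F-statistic and the p-value. On Python 3.10+, `statistics.correlation` is a built-in alternative to `pearson_r`.

```python
from statistics import mean


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equal-length measurement series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two series of equal length >= 2")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def one_way_anova_f(*groups: list[float]) -> float:
    """F = MS_between / MS_within for k groups treated as independent."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of each value around its own group mean
    ss_within = sum(sum((v - m) ** 2 for v in g) for g, m in zip(groups, group_means))
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between / ms_within


# Toy example (not study data): three "shots" of the same three wounds
shot1, shot2, shot3 = [1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [3.0, 4.0, 5.0]
print(pearson_r(shot1, shot2))               # 1.0
print(one_way_anova_f(shot1, shot2, shot3))  # 3.0
```

A very small F-statistic, such as the reported 0.0049, means that the variation between the shot means is tiny relative to the variation within each shot. This is consistent with the high p-value in the table.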

| Comparison | t-Statistic | p-Value | Significant Difference? (p < 0.05) |
|---|---|---|---|
| 1st photo shot vs. 2nd photo shot | −0.908 | 0.370 | No |
| 1st photo shot vs. 3rd photo shot | −1.367 | 0.179 | No |
| 2nd photo shot vs. 3rd photo shot | −0.608 | 0.547 | No |
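The pairwise t-statistics can be sketched as a paired t-test. This assumes that each comparison pairs measurements of the same wounds across shots. The section does not name the test variant. The sign convention (first series minus second) matches the table: the earlier shot has the lower mean in each comparison and yields a negative t. The lists in the usage line are toy values, not study data. `scipy.stats.ttest_rel` computes the same statistic together with a two-sided p-value.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(x: list[float], y: list[float]) -> tuple[float, int]:
    """Paired t-statistic and degrees of freedom for matched measurements.

    The sign follows mean(x - y): negative when x tends to be smaller than y.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired series of equal length >= 2")
    diffs = [a - b for a, b in zip(x, y)]
    sd = stdev(diffs)  # sample standard deviation of the paired differences
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean(diffs) / (sd / sqrt(len(diffs)))
    return t, len(diffs) - 1


# Toy example (not study data): the same four wounds measured in two shots
t, df = paired_t([1.0, 2.0, 3.0, 4.0], [2.0, 2.0, 4.0, 5.0])
print(f"t = {t:.3f}, df = {df}")  # t = -3.000, df = 3
```

A paired test suits repeated photographs of the same wound because it removes between-wound variability. Only the shot-to-shot differences enter the statistic.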

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the Lithuanian University of Health Sciences. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, K.-C.; Lee, Y.-H.; Lin, Y.-H. Automated Image-Based Wound Area Assessment in Outpatient Clinics Using Computer-Aided Methods: A Development and Validation Study. Medicina 2025, 61, 1099. https://doi.org/10.3390/medicina61061099
