The Impact of Artificial Intelligence in the Endoscopic Assessment of Premalignant and Malignant Esophageal Lesions: Present and Future

Abstract

1. Introduction

2. Methods

3. Definitions of Artificial Intelligence Terminology

3.1. Artificial Intelligence (AI)

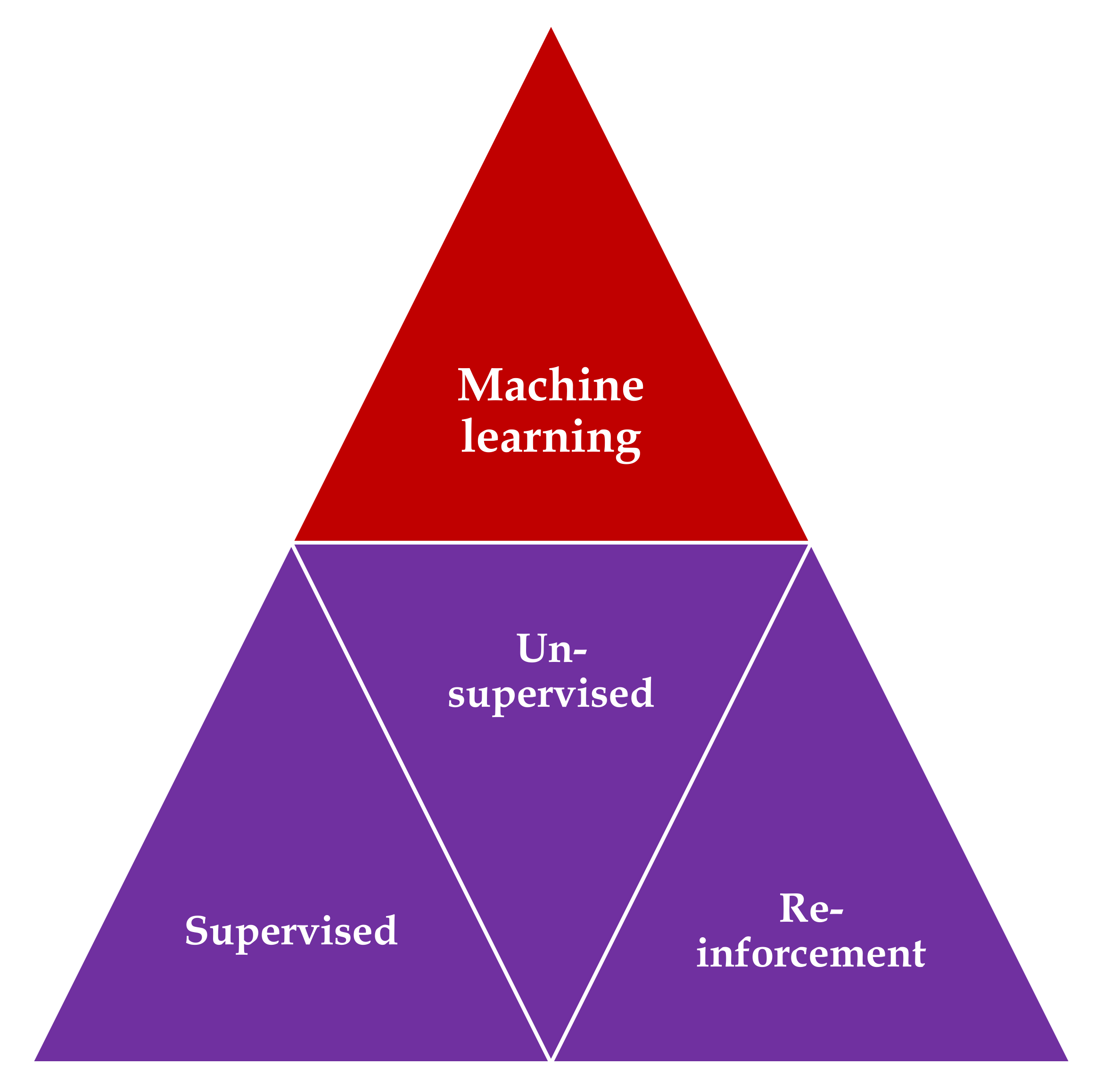

3.2. Machine Learning (ML)

3.2.1. ML Using Hand-Crafted Features (Conventional Algorithms)

3.2.2. ML Using Deep Learning (DL)

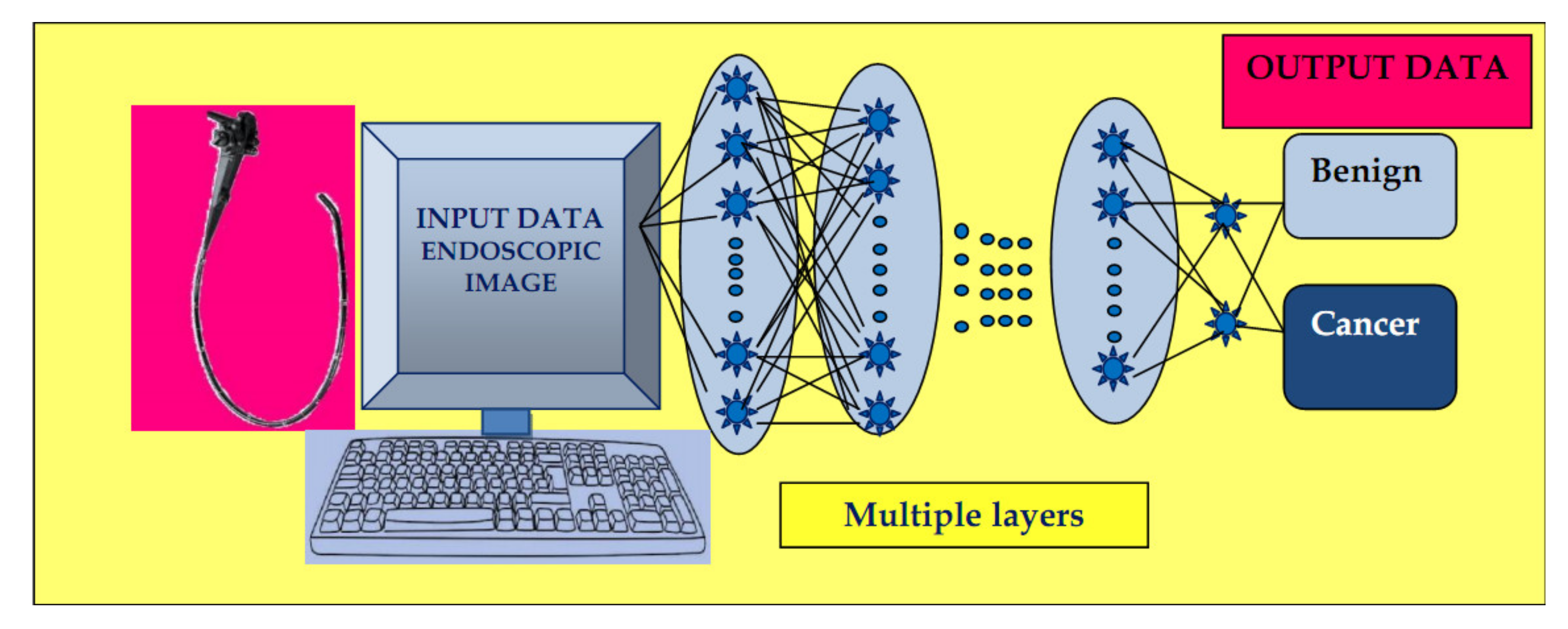

Neural Networks

Computer Vision

3.3. Automated Endoscopy Report Writing

4. Principal Applications of AI for Assessment of Precancerous and Cancerous Esophageal Lesions

4.1. Identification of Dysplasia/Early Neoplasia in Barrett’s Esophagus (BE)

4.1.1. CAD Using White-Light Endoscopy/Narrow-Band Imaging (WLE/NBI)

4.1.2. CAD Using Wide-Area Transepithelial Sampling (WATS)

4.1.3. CAD Using Volumetric Laser Endomicroscopy (VLE)

4.1.4. CAD Using I-SCAN

4.1.5. Novel Research Toward Real-Time Recognition of BE

4.2. Esophageal Squamous Cell Carcinoma

4.2.1. Identification of Premalignant Lesions/Early Esophageal Squamous Cell Carcinoma (ESCC)

CAD Using Narrow-Band Imaging (NBI)

CAD Using the LASEREO System

Detection of Early Esophageal Squamous Cell Carcinoma (ESCC) Plus ESCC Invasion Depth

CAD Using Esophageal Intrapapillary Capillary Loops (IPCLs)

CAD Using the Endocytoscopic System (ECS)

4.3. Esophageal Cancer Detection (SCC or AC)

5. Future Perspectives and Challenges

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Topol, E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44–56. [Google Scholar] [CrossRef] [PubMed]

- Shung, D.L.; Au, B.; Taylor, R.A.; Tay, J.K.; Laursen, S.B.; Stanley, A.J.; Dalton, H.R.; Ngu, J.; Schultz, M.; Laine, L. Validation of a Machine Learning Model That Outperforms Clinical Risk Scoring Systems for Upper Gastrointestinal Bleeding. Gastroenterology 2020, 158, 160–167. [Google Scholar] [CrossRef] [PubMed]

- Neil, S.; Leiman, D.A. Improving Acute GI Bleeding Management Through Artificial Intelligence: Unnatural Selection? Dig. Dis. Sci. 2019, 64, 2061–2064. [Google Scholar] [CrossRef]

- Le Berre, C.; Sandborn, W.J.; Aridhi, S.; Devignes, M.-D.; Fournier, L.; Smaïl-Tabbone, M.; Danese, S.; Peyrin-Biroulet, L. Application of artificial intelligence to gastroenterology and hepatology. Gastroenterology 2020, 158, 76–94. [Google Scholar] [CrossRef]

- Kangi, A.K.; Bahrampour, A. Predicting the survival of gastric cancer patients using artificial and bayesian neural networks. Asian Pac. J. Cancer Prev. 2018, 19, 487–490. [Google Scholar] [CrossRef]

- Oh, S.E.; Seo, S.W.; Choi, M.G.; Sohn, T.S.; Bae, J.M.; Kim, S. Prediction of overall survival and novel classification of patients with gastric cancer using the survival recurrent network. Ann. Surg. Oncol. 2018, 25, 1153–1159. [Google Scholar] [CrossRef]

- Ruffle, J.K.; Farmer, A.D.; Aziz, Q. Artificial intelligence-assisted gastroenterology- promises and pitfalls. Am. J. Gastroenterol. 2019, 114, 422–428. [Google Scholar] [CrossRef]

- Van der Sommen, F.; Zinger, S.; Curvers, W.L.; Bisschops, R.; Pech, O.; Weusten, B.L.; Bergman, J.J.; de With, P.H.; Schoon, E.J. Computer aided detection of early neoplastic lesions in Barrett’s esophagus. Endoscopy 2016, 48, 617–624. [Google Scholar] [CrossRef]

- Trindade, A.J.; McKinley, M.J.; Fan, C.; Leggett, C.L.; Kahn, A.; Pleskow, D.K. Endoscopic surveillance of Barrett’s esophagus using volumetric laser endomicroscopy with artificial intelligence image enhancement. Gastroenterology 2019, 157, 303–305. [Google Scholar] [CrossRef]

- Guo, L.; Xiao, X.; Wu, C.; Zeng, X.; Zhang, Y.; Du, J.; Bai, S.; Xie, J.; Zhang, Z.; Li, Y.; et al. Real-time automated diagnosis of precancerous lesions and early esophageal squamous cell carcinoma using a deep learning model (with videos). Gastrointest. Endosc. 2020, 91, 41–51. [Google Scholar] [CrossRef]

- Turing, A.M. Computing machinery and intelligence. Mind 1950, 59, 433–460. [Google Scholar] [CrossRef]

- Russell, S.J.; Norvig, P. Artificial Intelligence, a Modern Approach, 3rd ed.; Pearson Education: Upper Saddle River, NJ, USA, 2009; pp. 1–5. [Google Scholar]

- Topol, E. Deep Medicine; Hachette Book Group: New York, NY, USA, 2019; pp. 17–24. [Google Scholar]

- Ebigbo, A.; Palm, C.; Probst, A.; Mendel, R.; Manzeneder, J.; Prinz, F.; de Souza, L.A.; Papa, J.P.; Siersema, P.; Messmann, H. A technical review of artificial intelligence as applied to gastrointestinal endoscopy: Clarifying the terminology. Endosc. Int. Open 2019, 7, E1616–E1623. [Google Scholar] [CrossRef] [PubMed]

- Dey, A. Machine learning algorithms: A review. Int. J. Comput. Sci. Inf. Technol. 2016, 7, 1174–1179. [Google Scholar]

- Yang, Y.J.; Bang, C.S. Application of artificial intelligence in gastroenterology. World J. Gastroenterol. 2019, 25, 1666–1683. [Google Scholar] [CrossRef] [PubMed]

- Boser, B.E.; Guyon, I.M.; Vapnik, V.N. A training algorithm for optimal margin classifiers. In Proceedings of the Fifth Annual Workshop on Computational Learning Theory (COLT’92), Pittsburgh, PA, USA, 27–29 July 1992; pp. 144–152. [Google Scholar] [CrossRef]

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117. [Google Scholar] [CrossRef]

- Mori, Y.; Kudo, S.; Mohmed, H.; Misawa, M.; Ogata, N.; Itoh, H.; Oda, M.; Mori, K. Artificial intelligence and upper gastrointestinal endoscopy: Current status and future perspective. Dig. Endosc. 2019, 31, 378–388. [Google Scholar] [CrossRef]

- Khan, S.; Yong, S. A comparison of deep learning and handcrafted features in medical image modality classification. In Proceedings of the 2016 3rd International Conference on Computer and Information Sciences (ICCOINS), Kuala Lumpur, Malaysia, 15–17 August 2016; pp. 633–638. [Google Scholar] [CrossRef]

- Philbrick, K.A.; Yoshida, K.; Inoue, D.; Akkus, Z.; Kline, T.L.; Weston, A.D.; Korfiatis, P.; Takahashi, N.; Erickson, B.J. What does deep learning see? Insights from a classifier trained to predict contrast enhancement phase from CT images. Am. J. Roentgenol. 2018, 211, 1184–1193. [Google Scholar] [CrossRef]

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Handwritten digit recognition with a back-propagation network. In Proceedings of the Advances in Neural Information Processing Systems 1990, Denver, CO, USA, 27–30 November 1989; pp. 396–404. [Google Scholar]

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241. [Google Scholar]

- Ebigbo, A.; Mendel, R.; Probst, A.; Manzeneder, J.; Prinz, F.; de Souza, L., Jr.; Papa, J.; Palm, C.; Messmann, H. Real-time use of artificial intelligence in the evaluation of cancer in Barrett’s oesophagus. Gut 2020, 69, 615–616. [Google Scholar] [CrossRef]

- Hirasawa, T.; Aoyama, K.; Tanimoto, T.; Ishihara, S.; Shichijo, S.; Ozawa, T.; Ohnishi, T.; Fujishiro, M.; Matsuo, K.; Fujisaki, J.; et al. Application of artificial intelligence using a convolutional neural network for detecting gastric cancer in endoscopic images. Gastric Cancer 2018, 21, 653–660. [Google Scholar] [CrossRef] [PubMed]

- East, J.E.; Rees, C.J. Making optical biopsy a clinical reality in colonoscopy. Lancet Gastroenterol. Hepatol. 2018, 3, 10–12. [Google Scholar] [CrossRef]

- Yuan, Y.; Meng, M.Q. Deep learning for polyp recognition in wireless capsule endoscopy images. Med. Phys. 2017, 44, 1379–1389. [Google Scholar] [CrossRef] [PubMed]

- Groenen, M.J.M.; Kuipers, E.J.; van Berge Henegouwen, G.P.; Fockens, P.; Ouwendijk, R.J. Computerisation of endoscopy reports using standard reports and text blocks. Neth. J. Med. 2006, 6, 78–83. [Google Scholar] [CrossRef]

- Abadir, A.P.; Ali, M.F.; Karnes, W.; Samarasena, J.B. Artificial Intelligence in Gastrointestinal Endoscopy. Clin. Endosc. 2020, 53, 132–141. [Google Scholar] [CrossRef] [PubMed]

- Bretthauer, M.; Aabakken, L.; Dekker, E.; Kaminski, M.F.; Rösch, T.; Hultcrantz, R.; Suchanek, S.; Jover, R.; Kuipers, E.J.; Bisschops, R.; et al. ESGE Quality Improvement Committee Requirements and standards facilitating quality improvement for reporting systems in gastrointestinal endoscopy: European Society of Gastrointestinal Endoscopy (ESGE) Position Statement. United Eur. Gastroenterol. J. 2016, 4, 172–176. [Google Scholar] [CrossRef]

- Bray, F.; Ferlay, J.; Soerjomataram, I.; Siegel, R.L.; Torre, L.A.; Jemal, A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 2018, 68, 394–424. [Google Scholar] [CrossRef] [PubMed]

- Coleman, H.G.; Xie, S.-H.; Lagergren, J. The epidemiology of esophageal adenocarcinoma. Gastroenterology 2018, 154, 390–405. [Google Scholar] [CrossRef]

- Weismüller, J.; Thieme, R.; Hoffmeister, A.; Weismüller, T.; Gockel, I. Barrett-Screening: Rationale, aktuelle Konzepte und Perspektiven [Barrett-Screening: Rationale, current concepts and perspectives]. Z. Gastroenterol. 2019, 57, 317–326. [Google Scholar] [CrossRef]

- American Gastroenterological Association; Spechler, S.J.; Sharma, P.; Souza, R.F.; Inadomi, J.M.; Shaheen, N.J. American Gastroenterological Association medical position statement on the management of Barrett’s esophagus. Gastroenterology 2011, 140, 1084–1091. [Google Scholar] [CrossRef]

- Pech, O.; May, A.; Manner, H.; Behrens, A.; Pohl, J.; Weferling, M.; Hartmann, U.; Manner, N.; Huijsmans, J.; Gossner, L.; et al. Long-term efficacy and safety of endoscopic resection for patients with mucosal adenocarcinoma of the esophagus. Gastroenterology 2014, 146, 652–660. [Google Scholar] [CrossRef]

- Weusten, B.; Bisschops, R.; Coron, E.; Dinis-Ribeiro, M.; Dumonceau, J.M.; Esteban, J.M.; Hassan, C.; Pech, O.; Repici, A.; Bergman, J.; et al. Endoscopic management of Barrett’s esophagus: European Society of Gastrointestinal Endoscopy (ESGE) Position Statement. Endoscopy 2017, 49, 191–198. [Google Scholar] [CrossRef] [PubMed]

- Choi, S.E.; Hur, C. Screening and surveillance for Barrett’s esophagus: Current issues and future directions. Curr. Opin. Gastroenterol. 2012, 28, 377–381. [Google Scholar] [CrossRef] [PubMed]

- Sharma, P. Review article: Emerging techniques for screening and surveillance in Barrett’s oesophagus. Aliment Pharmacol. Ther. 2004, 20, 63–96. [Google Scholar] [CrossRef] [PubMed]

- Spechler, S.J. Clinical practice. Barrett’s Esophagus. N. Engl. J. Med. 2002, 346, 836–842. [Google Scholar] [CrossRef] [PubMed]

- Sharma, P.; Savides, T.J.; Canto, M.I.; Corley, D.A.; Falk, G.W.; Goldblum, J.R.; Wang, K.K.; Wallace, M.B.; Wolfsen, H.C. The American Society for Gastrointestinal Endoscopy PIVI (Preservation and Incorporation of Valuable Endoscopic Innovations) on imaging in Barrett’s Esophagus. Gastrointest. Endosc. 2012, 76, 252–254. [Google Scholar] [CrossRef]

- ASGE Technology Committee; Thosani, N.; Dayyeh, B.K.A.; Sharma, P.; Aslanian, H.R.; Enestvedt, B.K.; Komanduri, S.; Manfredi, M.; Navaneethan, U.; Maple, J.T.; et al. ASGE Technology Committee systematic review and meta-analysis assessing the ASGE Preservation and Incorporation of Valuable Endoscopic Innovations thresholds for adopting real-time imaging-assisted endoscopic targeted biopsy during endoscopic surveillance of Barrett’s esophagus. Gastrointest. Endosc. 2016, 83, 684–698. [Google Scholar] [CrossRef] [PubMed]

- Sami, S.S.; Iyer, P.G. Recent Advances in Screening for Barrett’s Esophagus. Curr. Treat. Options Gastroenterol. 2018, 16, 1–14. [Google Scholar] [CrossRef]

- Sharma, P.; Hawes, R.H.; Bansal, A.; Gupta, N.; Curvers, W.; Rastogi, A.; Singh, M.; Hall, M.; Mathur, S.C.; Wani, S.B.; et al. Standard endoscopy with random biopsies versus narrow band imaging targeted biopsies in Barrett’s oesophagus: A prospective, international, randomised controlled trial. Gut 2013, 62, 15–21. [Google Scholar] [CrossRef]

- Curvers, W.L.; van Vilsteren, F.G.; Baak, L.C.; Böhmer, C.; Mallant-Hent, R.C.; Naber, A.H.; van Oijen, A.; Ponsioen, C.Y.; Scholten, P.; Schenk, E.; et al. Endoscopic trimodal imaging versus standard video endoscopy for detection of early Barrett’s neoplasia: A multicenter, randomized, crossover study in general practice. Gastrointest. Endosc. 2011, 73, 195–203. [Google Scholar] [CrossRef]

- Kara, M.A.; Smits, M.E.; Rosmolen, W.D.; Bultje, A.C.; Ten Kate, F.J.; Fockens, P.; Tytgat, G.N.; Bergman, J.J. A randomized crossover study comparing light-induced fluorescence endoscopy with standard videoendoscopy for the detection of early neoplasia in Barrett’s esophagus. Gastrointest. Endosc. 2005, 61, 671–678. [Google Scholar] [CrossRef]

- Mendel, R.; Ebigbo, A.; Probst, A.; Messmann, H.; Palm, C. Barrett’s Esophagus Analysis Using Convolutional Neural Networks. In Bildverarbeitung für die Medizin; Informatik Aktuell; Maier-Hein, K.H., Deserno, T.M., Handels, H., Tolxdorff, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2017; pp. 80–85. [Google Scholar] [CrossRef]

- Ebigbo, A.; Mendel, R.; Probst, A.; Manzeneder, J.; Souza, L.A., Jr.; Papa, J.P.; Palm, C.; Messmann, H. Computer-aided diagnosis using deep learning in the evaluation of early oesophageal adenocarcinoma. Gut 2019, 68, 1143–1145. [Google Scholar] [CrossRef] [PubMed]

- Ghatwary, N.; Zolgharni, M.; Ye, X. Early esophageal adenocarcinoma detection using deep learning methods. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 611–621. [Google Scholar] [CrossRef] [PubMed]

- Hashimoto, R.; Requa, J.; Dao, T.; Ninh, A.; Tran, E.; Mai, D.; Lugo, M.; Chehade, N.E.-H.; Chang, K.J.; Karnes, W.E.; et al. Artificial intelligence using convolutional neural networks for real-time detection of early esophageal neoplasia in Barrett’s esophagus (with video). Gastrointest. Endosc. 2020, 5107, 30026-2. [Google Scholar] [CrossRef] [PubMed]

- Vennalaganti, P.R.; Kanakadandi, V.N.; Gross, S.A.; Parasa, S.; Wang, K.K.; Gupta, N.; Sharma, P. Inter-observer agreement among pathologists using wide-area transepithelial sampling with computer-assisted analysis in patients with Barrett’s esophagus. Am. J. Gastroenterol. 2015, 110, 1257–1260. [Google Scholar] [CrossRef]

- Johanson, J.F.; Frakes, J.; Eisen, D.; EndoCDx Collaborative Group. Computer-assisted analysis of abrasive transepithelial brush biopsies increases the effectiveness of esophageal screening: A multicenter prospective clinical trial by the EndoCDx Collaborative Group. Dig. Dis. Sci. 2011, 56, 767–772. [Google Scholar] [CrossRef]

- Anandasabapathy, S.; Sontag, S.; Graham, D.Y.; Frist, S.; Bratton, J.; Harpaz, N.; Waye, J.D. Computer-assisted brush-biopsy analysis for the detection of dysplasia in a high-risk Barrett’s esophagus surveillance population. Dig. Dis. Sci. 2011, 56, 761–766. [Google Scholar] [CrossRef]

- Vennalaganti, P.R.; Kaul, V.; Wang, K.K.; Falk, G.W.; Shaheen, N.J.; Infantolino, A.; Johnson, D.A.; Eisen, G.; Gerson, L.B.; Smith, M.S.; et al. Increased detection of Barrett’s esophagus-associated neoplasia using wide-area trans-epithelial sampling: A multicenter, prospective, randomized trial. Gastrointest. Endosc. 2018, 87, 348–355. [Google Scholar] [CrossRef]

- Swager, A.F.; de Groof, A.J.; Meijer, S.L.; Weusten, B.L.; Curvers, W.L.; Bergman, J.J. Feasibility of laser marking in Barrett’s esophagus with volumetric laser endomicroscopy: First-in-man pilot study. Gastrointest. Endosc. 2017, 86, 464–472. [Google Scholar] [CrossRef]

- Smith, M.S.; Cash, B.; Konda, V.; Trindade, A.J.; Gordon, S.; DeMeester, S.; Joshi, V.; Diehl, D.; Ganguly, E.; Mashimo, H.; et al. Volumetric laser endomicroscopy and its application to Barrett’s esophagus: Results from a 1000 patient registry. Dis. Esophagus 2019, 32, doz029. [Google Scholar] [CrossRef]

- Evans, J.A.; Poneros, J.M.; Bouma, B.E.; Bressner, J.; Halpern, E.F.; Shishkov, M.; Lauwers, G.Y.; Mino-Kenudson, M.; Nishioka, N.S.; Tearney, G.J. Optical coherence tomography to identify intramucosal carcinoma and high-grade dysplasia in Barrett’s esophagus. Clin. Gastroenterol. Hepatol. 2006, 4, 38–43. [Google Scholar] [CrossRef]

- Leggett, C.L.; Gorospe, E.C.; Chan, D.K.; Muppa, P.; Owens, V.; Smyrk, T.C.; Anderson, M.; Lutzke, L.S.; Tearney, G.; Wang, K.K. Comparative diagnostic performance of volumetric laser endomicroscopy and confocal laser endomicroscopy in the detection of dysplasia associated with Barrett’s esophagus. Gastrointest. Endosc. 2016, 83, 880–888. [Google Scholar] [CrossRef]

- Struyvenberg, M.R.; van der Sommen, F.; Swager, A.F.; de Groof, A.J.; Rikos, A.; Schoon, E.J.; Bergman, J.J.; de With, P.H.N.; Curvers, W.L. Improved Barrett’s neoplasia detection using computer-assisted multiframe analysis of volumetric laser endomicroscopy. Dis. Esophagus 2020, 33, doz065. [Google Scholar] [CrossRef] [PubMed]

- Sehgal, V.; Rosenfeld, A.; Graham, D.G.; Lipman, G.; Bisschops, R.; Ragunath, K.; Rodriguez-Justo, M.; Novelli, M.; Banks, M.R.; Haidry, R.J.; et al. Machine Learning Creates a Simple Endoscopic Classification System that Improves Dysplasia Detection in Barrett’s Oesophagus amongst Non-expert Endoscopists. Gastroenterol. Res. Pract. 2018, 2018, 1872437. [Google Scholar] [CrossRef] [PubMed]

- Bergman, J.J.G.H.M.; de Groof, A.J.; Pech, O.; Ragunath, K.; Armstrong, D.; Mostafavi, N.; Lundell, L.; Dent, J.; Vieth, M.; Tytgat, G.N.; et al. An interactive web-based educational tool improves detection and delineation of Barrett’s esophagus-related neoplasia. Gastroenterology 2019, 156, 1299–1308. [Google Scholar] [CrossRef] [PubMed]

- De Groof, J.; van der Sommen, F.; van der Putten, J.; Struyvenberg, M.R.; Zinger, S.; Curvers, W.L.; Pech, O.; Meining, A.; Neuhaus, H.; Bisschops, R.; et al. The Argos project: The development of a computer-aided detection system to improve detection of Barrett’s neoplasia on white light endoscopy. United Eur. Gastroenterol. J. 2019, 7, 538–547. [Google Scholar] [CrossRef]

- Kuramoto, M.; Kubo, M. Principles of NBI and BLI-blue laser imaging. In NBI/BLI Atlas: New Image-Enhanced Endoscopy; Tajiri, H., Kato, M., Tanaka, S., Saito, Y., Eds.; Nihon Medical Center Inc.: Tokyo, Japan, 2014; pp. 16–21. [Google Scholar]

- Kaneko, K.; Oono, Y.; Yano, T.; Ikematsu, H.; Odagaki, T.; Yoda, Y.; Yagishita, A.; Sato, A.; Nomura, S. Effect of novel bright image enhanced endoscopy using blue laser imaging (BLI). Endosc. Int. Open 2014, 2, E212–E219. [Google Scholar] [CrossRef]

- Togashi, K.; Nemoto, D.; Utano, K.; Isohata, N.; Kumamoto, K.; Endo, S.; Lefor, A.K. Blue laser imaging endoscopy system for the early detection and characterization of colorectal lesions: A guide for the endoscopist. Therap. Adv. Gastroenterol. 2016, 9, 50–56. [Google Scholar] [CrossRef]

- Ishihara, R.; Takeuchi, Y.; Chatani, R.; Kidu, T.; Inoue, T.; Hanaoka, N.; Yamamoto, S.; Higashino, K.; Uedo, N.; Iishi, H.; et al. Prospective evaluation of narrow-band imaging endoscopy for screening of esophageal squamous mucosal high-grade neoplasia in experienced and less experienced endoscopists. Dis. Esophagus 2010, 23, 480–486. [Google Scholar] [CrossRef]

- Nagami, Y.; Tominaga, K.; Machida, H.; Nakatani, M.; Kameda, N.; Sugimori, S.; Okazaki, H.; Tanigawa, T.; Yamagami, H.; Kubo, N.; et al. Usefulness of non-magnifying narrow-band imaging in screening of early esophageal squamous cell carcinoma: A prospective comparative study using propensity score matching. Am. J. Gastroenterol. 2014, 109, 845–854. [Google Scholar] [CrossRef]

- Ohmori, M.; Ishihara, R.; Aoyama, K.; Nakagawa, K.; Iwagami, H.; Matsuura, N.; Shichijo, S.; Yamamoto, K.; Nagaike, K.; Nakahara, M.; et al. Endoscopic detection and differentiation of esophageal lesions using a deep neural network. Gastrointest. Endosc. 2020, 91, 301–309. [Google Scholar] [CrossRef]

- Kuwano, H.; Nishimura, Y.; Oyama, T.; Kato, H.; Kitagawa, Y.; Kusano, M.; Shimada, H.; Takiuchi, H.; Toh, Y.; Doki, Y.; et al. Guidelines for Diagnosis and Treatment of Carcinoma of the Esophagus April 2012 edited by the Japan Esophageal Society. Esophagus 2015, 12, 1–30. [Google Scholar] [CrossRef] [PubMed]

- Tokai, Y.; Yoshio, T.; Aoyama, K.; Horie, Y.; Yoshimizu, S.; Horiuchi, Y.; Ishiyama, A.; Tsuchida, T.; Hirasawa, T.; Sakakibara, Y.; et al. Application of artificial intelligence using convolutional neural networks in determining the invasion depth of esophageal squamous cell carcinoma. Esophagus 2020, 17, 250–256. [Google Scholar] [CrossRef] [PubMed]

- Horie, Y.; Yoshio, T.; Aoyama, K.; Yoshimizu, S.; Horiuchi, Y.; Ishiyama, A.; Hirasawa, T.; Tsuchida, T.; Ozawa, T.; Ishihara, S.; et al. Diagnostic outcomes of esophageal cancer by artificial intelligence using convolutional neural networks. Gastrointest. Endosc. 2019, 89, 25–32. [Google Scholar] [CrossRef] [PubMed]

- Kumagai, Y.; Kawada, K.; Yamazaki, S.; Iida, M.; Ochiai, T.; Kawano, T.; Takubo, K. Prospective replacement of magnifying endoscopy by a newly developed endocytoscope, the ‘GIF-Y0002’. Dis. Esophagus 2010, 23, 627–632. [Google Scholar] [CrossRef]

- Inoue, H.; Honda, T.; Nagai, K.; Kawano, T.; Yoshino, K.; Takeshita, K.; Endo, M. Ultra-high magnification endoscopic observation of carcinoma in situ of the esophagus. Dig. Endosc. 1997, 9, 16–18. [Google Scholar] [CrossRef]

- Sato, H.; Inoue, H.; Ikeda, H.; Sato, C.; Onimaru, M.; Hayee, B.; Phlanusi, C.; Santi, E.G.; Kobayashi, Y.; Kudo, S.E. Utility of intrapapillary capillary loops seen on magnifying narrow-band imaging in estimating invasive depth of esophageal squamous cell carcinoma. Endoscopy 2015, 47, 122–128. [Google Scholar] [CrossRef]

- Kumagai, Y.; Toi, M.; Kawada, K.; Kawano, T. Angiogenesis in superficial esophageal squamous cell carcinoma: Magnifying endoscopic observation and molecular analysis. Dig. Endosc. 2010, 22, 259–267. [Google Scholar] [CrossRef]

- Inoue, H.; Kaga, M.; Ikeda, H.; Sato, C.; Sato, H.; Minami, H.; Santi, E.G.; Hayee, B.; Eleftheriadis, N. Magnification endoscopy in esophageal squamous cell carcinoma: A review of the intrapapillary capillary loop classification. Ann. Gastroenterol. 2015, 28, 41–48. [Google Scholar]

- Gono, K.; Obi, T.; Yamaguchi, M.; Ohyama, N.; Machida, H.; Sano, Y.; Yoshida, S.; Hamamoto, Y.; Endo, T. Appearance of enhanced tissue features in narrow-band endoscopic imaging. J. Biomed. Opt. 2004, 9, 568–577. [Google Scholar] [CrossRef]

- Arima, M.; Tada, M.; Arima, H. Evaluation of micro-vascular patterns of superficial esophageal cancers by magnifying endoscopy. Esophagus 2005, 2, 191–197. [Google Scholar] [CrossRef]

- Oyama, T.; Inoue, H.; Arima, M.; Momma, K.; Omori, T.; Ishihara, R.; Hirasawa, D.; Takeuchi, M.; Tomori, A.; Goda, K. Prediction of the invasion depth of superficial squamous cell carcinoma based on microvessel morphology: Magnifying endoscopic classification of the Japan Esophageal Society. Esophagus 2017, 14, 105–112. [Google Scholar] [CrossRef] [PubMed]

- Oyama, T.; Momma, K. A new classification of magnified endoscopy for superficial esophageal squamous cell carcinoma. Esophagus 2011, 8, 247–251. [Google Scholar] [CrossRef]

- Kim, S.J.; Kim, G.H.; Lee, M.W.; Jeon, H.K.; Baek, D.H.; Lee, B.E.; Song, G.A. New magnifying endoscopic classification for superficial esophageal squamous cell carcinoma. World J. Gastroenterol. 2017, 23, 4416–4421. [Google Scholar] [CrossRef]

- Zhao, Y.Y.; Xue, D.X.; Wang, Y.L.; Zhang, R.; Sun, B.; Cai, Y.P.; Feng, H.; Cai, Y.; Xu, J.M. Computer-assisted diagnosis of early esophageal squamous cell carcinoma using narrow-band imaging magnifying endoscopy. Endoscopy 2019, 51, 333–341. [Google Scholar] [CrossRef] [PubMed]

- Everson, M.; Herrera, L.C.G.P.; Li, W.; Muntion Luengo, I.; Ahmad, O.; Banks, M.; Magee, C.; Alzoubaidi, D.; Hsu, H.M.; Graham, D.; et al. Artificial intelligence for the real-time classification of intrapapillary capillary loop patterns in the endoscopic diagnosis of early oesophageal squamous cell carcinoma: A proof-of-concept study. United Eur. Gastroenterol. J. 2019, 7, 297–306. [Google Scholar] [CrossRef]

- García-Peraza-Herrera, L.C.; Everson, M.; Lovat, L.; Wang, H.P.; Wang, W.L.; Haidry, R.; Stoyanov, D.; Ourselin, S.; Vercauteren, T. Intrapapillary capillary loop classification in magnification endoscopy: Open dataset and baseline methodology. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 651–659. [Google Scholar] [CrossRef]

- Inoue, H.; Kazawa, T.; Sato, Y.; Satodate, H.; Sasajima, K.; Kudo, S.E.; Shiokawa, A. In vivo observation of living cancer cells in the esophagus, stomach, and colon using catheter-type contact endoscope, “Endo-Cytoscopy system”. Gastrointest. Endosc. Clin. N. Am. 2004, 14, 589–594. [Google Scholar] [CrossRef]

- Kumagai, Y.; Kawada, K.; Yamazaki, S.; Iida, M.; Momma, K.; Odajima, H.; Kawachi, H.; Nemoto, T.; Kawano, T.; Takubo, K. Endocytoscopic observation for esophageal squamous cell carcinoma: Can biopsy histology be omitted? Dis. Esophagus 2009, 22, 505–512. [Google Scholar] [CrossRef]

- Kumagai, Y.; Takubo, K.; Kawada, K.; Higashi, M.; Ishiguro, T.; Sobajima, J.; Fukuchi, M.; Ishibashi, K.I.; et al. A newly developed continuous zoom-focus endocytoscope. Endoscopy 2017, 49, 176–180. [Google Scholar] [CrossRef]

- Kumagai, Y.; Monma, K.; Kawada, K. Magnifying chromoendoscopy of the esophagus: In vivo pathological diagnosis using an endocytoscopy system. Endoscopy 2004, 36, 590–594. [Google Scholar] [CrossRef]

- Kumagai, Y.; Kawada, K.; Higashi, M.; Ishiguro, T.; Sobajima, J.; Fukuchi, M.; Ishibashi, K.; Baba, H.; Mochiki, E.; Aida, J.; et al. Endocytoscopic observation of various esophageal lesions at ×600: Can nuclear abnormality be recognized? Dis. Esophagus 2015, 28, 269–275. [Google Scholar] [CrossRef] [PubMed]

- Kumagai, Y.; Takubo, K.; Kawada, K.; Higashi, M.; Ishiguro, T.; Sobajima, J.; Fukuchi, M.; Ishibashi, K.; Mochiki, E.; Aida, J.; et al. Endocytoscopic observation of various types of esophagitis. Esophagus 2016, 13, 200–207. [Google Scholar] [CrossRef]

- Thakkar, S.J.; Kochhar, G.S. Artificial intelligence for real-time detection of early esophageal cancer: Another set of eyes to better visualize. Gastrointest. Endosc. 2020, 91, 52–54. [Google Scholar] [CrossRef] [PubMed]

- Kodashima, S.; Fujishiro, M.; Takubo, K.; Kammori, M.; Nomura, S.; Kakushima, N.; Muraki, Y.; Goto, O.; Ono, S.; Kaminishi, M.; et al. Ex vivo pilot study using computed analysis of endo-cytoscopic images to differentiate normal and malignant squamous cell epithelia in the oesophagus. Dig. Liver Dis. 2007, 39, 762–766. [Google Scholar] [CrossRef] [PubMed]

- Shin, D.; Protano, M.A.; Polydorides, A.D.; Dawsey, S.M.; Pierce, M.C.; Kim, M.K.; Schwarz, R.A.; Quang, T.; Parikh, N.; Bhutani, M.S.; et al. Quantitative analysis of high-resolution microendoscopic images for diagnosis of esophageal squamous cell carcinoma. Clin. Gastroenterol. Hepatol. 2015, 13, 272–279. [Google Scholar] [CrossRef] [PubMed]

- Quang, T.; Schwarz, R.A.; Dawsey, S.M.; Tan, M.C.; Patel, K.; Yu, X.; Wang, G.; Zhang, F.; Xu, H.; Anandasabapathy, S.; et al. A tablet-interfaced high-resolution microendoscope with automated image interpretation for real-time evaluation of esophageal squamous cell neoplasia. Gastrointest. Endosc. 2016, 84, 834–841. [Google Scholar] [CrossRef]

- Kumagai, Y.; Takubo, K.; Kawada, K.; Aoyama, K.; Endo, Y.; Ozawa, T.; Hirasawa, T.; Yoshio, T.; Ishihara, S.; Fujishiro, M.; et al. Diagnosis using deep-learning artificial intelligence based on the endocytoscopic observation of the esophagus. Esophagus 2019, 16, 180–187. [Google Scholar] [CrossRef]

- Wu, L.; Zhang, J.; Zhou, W.; An, P.; Shen, L.; Liu, J.; Jiang, X.; Huang, X.; Mu, G.; Wan, X.; et al. Randomised controlled trial of WISENSE, a real-time quality improving system for monitoring blind spots during esophagogastroduodenoscopy. Gut 2019, 68, 2161–2169. [Google Scholar] [CrossRef]

- Su, J.R.; Li, Z.; Shao, X.J.; Ji, C.R.; Ji, R.; Zhou, R.C.; Li, G.C.; Liu, G.Q.; He, Y.S.; Zuo, X.L.; et al. Impact of real-time automatic quality control system on colorectal polyp and adenoma detection: A prospective randomized controlled study (with videos). Gastrointest. Endosc. 2020, 91, 415–424. [Google Scholar] [CrossRef]

- Xu, J.; Jing, M.; Wang, S.; Yang, C.; Chen, X. A review of medical image detection for cancers in digestive system based on artificial intelligence. Expert Rev. Med. Devices 2019, 16, 877–889. [Google Scholar] [CrossRef]

- Kanesaka, T.; Lee, T.C.; Uedo, N.; Lin, K.P.; Chen, H.Z.; Lee, J.Y.; Wang, H.P.; Chang, H.T. Computer-aided diagnosis for identifying and delineating early gastric cancers in magnifying narrow-band imaging. Gastrointest. Endosc. 2018, 87, 1339–1344. [Google Scholar] [CrossRef] [PubMed]

- Yoon, H.J.; Kim, S.; Kim, J.H.; Keum, J.S.; Oh, S.I.; Jo, J.; Chun, J.; Youn, Y.H.; Park, H.; Kwon, I.G.; et al. A Lesion-Based Convolutional Neural Network Improves Endoscopic Detection and Depth Prediction of Early Gastric Cancer. J. Clin. Med. 2019, 8, 1310. [Google Scholar] [CrossRef] [PubMed]

- Byrne, M.F.; Chapados, N.; Soudan, F.; Oertel, C.; Pérez, M.L.; Kelly, R.; Iqbal, N.; Chandelier, F.; Rex, D.K. Real-time differentiation of adenomatous and hyperplastic diminutive colorectal polyps during analysis of unaltered videos of standard colonoscopy using a deep learning model. Gut 2019, 68, 94–100.

- Wang, P.; Xiao, X.; Glissen Brown, J.R.; Berzin, T.M.; Tu, M.; Xiong, F.; Hu, X.; Liu, P.; Song, Y.; Zhang, D.; et al. Development and validation of a deep-learning algorithm for the detection of polyps during colonoscopy. Nat. Biomed. Eng. 2018, 2, 741–748.

- Ding, Z.; Shi, H.; Zhang, H.; Meng, L.; Fan, M.; Han, C.; Zhang, K.; Ming, F.; Xie, X.; Liu, H.; et al. Gastroenterologist-Level Identification of Small-Bowel Diseases and Normal Variants by Capsule Endoscopy Using a Deep-Learning Model. Gastroenterology 2019, 157, 1044–1054.

- Park, S.H.; Han, K. Methodologic Guide for Evaluating Clinical Performance and Effect of Artificial Intelligence Technology for Medical Diagnosis and Prediction. Radiology 2018, 286, 800–809.

- Wang, P.; Berzin, T.M.; Glissen Brown, J.R.; Bharadwaj, S.; Becq, A.; Xiao, X.; Liu, P.; Li, L.; Song, Y.; Zhang, D.; et al. Real-time automatic detection system increases colonoscopic polyp and adenoma detection rates: A prospective randomised controlled study. Gut 2019, 68, 1813–1819.

- England, J.R.; Cheng, P.M. Artificial Intelligence for Medical Image Analysis: A Guide for Authors and Reviewers. Am. J. Roentgenol. 2019, 212, 513–519.

- He, Y.S.; Su, J.R.; Li, Z.; Zuo, X.L.; Li, Y.Q. Application of artificial intelligence in gastrointestinal endoscopy. J. Dig. Dis. 2019, 20, 623–630.

- Park, S.H. Artificial intelligence in medicine: Beginner’s guide. J. Korean Soc. Radiol. 2018, 78, 301–308.

- Bae, H.J.; Kim, C.W.; Kim, N.; Park, B.; Kim, N.; Seo, J.B.; Lee, S.M. A Perlin Noise-Based Augmentation Strategy for Deep Learning with Small Data Samples of HRCT Images. Sci. Rep. 2018, 8, 17687.

- Tavanaei, A.; Ghodrati, M.; Kheradpisheh, S.R.; Masquelier, T.; Maida, A. Deep learning in spiking neural networks. Neural Netw. 2019, 111, 47–63.

- Luo, H.; Xu, G.; Li, C.; He, L.; Luo, L.; Wang, Z.; Jing, B.; Deng, Y.; Jin, Y.; Li, Y.; et al. Real-time artificial intelligence for detection of upper gastrointestinal cancer by endoscopy: A multicentre, case-control, diagnostic study. Lancet Oncol. 2019, 20, 1645–1654.

| Ref. | Published Year | Aim of Study | Design of Study | Type of AI (AI Classifier) | AI Validation Methods | Training Dataset: No. Cases (Negative/Positive) | Training Dataset: No. Images (Negative/Positive) | Training Dataset: Endoscopic Procedure | Test Dataset: No. Cases (Negative/Positive) | Test Dataset: No. Images (Negative/Positive) | Test Dataset: Endoscopic Procedure | Accuracy (%) | Sensitivity/Specificity (%) | AUC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Van der Sommen et al. [8] | 2016 | Detection of early neoplasia in BE | R | color filters, specific texture, and ML (“Filter with Gabor bank”, SVM) | leave-one-out CV on a per-patient basis | 44 pts with BE (23/21); 100 EGD images | WLE | 83 (per image); 86/87 (per patient) | - | |||||

| Mendel et al. [50] | 2017 | Detection of early neoplasia in BE | R | CNN | 50/50 EGD images (Endoscopic Vision Challenge MICCAI 2015) | HD-WLE | 94/88 | - | ||||||

| Ebigbo et al. [51] | 2019 | Detection of early Barrett AC | R | deep CNN (ResNet) | leave-one-patient-out CV | Local dataset: 41/33 pts, 148 HD-WLE/NBI; MICCAI 2015 dataset: 22/17 pts, 100 HD-WLE | HD-WLE/NBI | Local dataset: 97/88 (WLE), 94/80 (NBI); MICCAI dataset: 92/100 (WLE) | - | |||||

| Ghatwary et al. [52] | 2019 | Detection of early Barrett AC | R | R-CNN, Fast R-CNN, Faster R-CNN, SSD | 2- and 5-fold CV, leave-one-patient-out CV | MICCAI dataset: 21 pts (9/12) (training dataset) | 60 (30/30) EGD images | HD-WLE | MICCAI dataset: 9 pts (4/5) (validation dataset); 9 pts (4/5) (test dataset) | 40 (20/20) EGD images | HD-WLE | 83 (ARR for Faster R-CNN) | 96/92 (SSD) | - |

| Hashimoto et al. [53] | 2020 | Detection of early esophageal neoplasia in BE | R | CNN based on Xception architecture, YOLO v2 | Internal validation | 100 pts (30/70) | 1832 (916/916) EGD images | WLE/NBI | 39 pts (13/26) (validation dataset) | 458 (233/225) EGD images (validation dataset) | WLE/NBI | 95.4 | 96.4/94.2 | - |

| Vennalaganti et al. [57] NCT03008980 | 2017 | Detection of early esophageal neoplasia in BE | P | neural network-based, high-speed computer scan | 160 pts (134 ND/LGD, 26 HGD/EAC) randomized: 76 pts biopsy → WATS; 84 pts WATS → biopsy | WATS | Addition of WATS: absolute increase in detection rate of 14.4% | |||||||

| Swager et al. [58] | 2017 | Detection of early BE neoplasia | R | ML methods: SVM, discriminant analysis, AdaBoost, random forest, k-nearest neighbors, etc. | Leave-one-out CV | 19 BE pts; 60 (30/30) images | Ex vivo VLE images | 90/93 | 0.95 | |||||

| Struyvenberg et al. [62] NCT01862666 | 2019 | Detection of Barrett’s neoplasia | P | 8 predictive models (e.g., SVM, random forest, Naive Bayes); best = CAD multi-frame image analysis | leave-one-out CV | 52 endoscopic resection specimens from 29 BE pts; 60 (30/30) regions of interest + 25 neighboring frames → 3060 VLE frames | Ex vivo VLE images | - | - | 0.94 | ||||

| Sehgal et al. [63] UK national clinical trial (REC reference 08/H0808/8, study no. 08/0018) | 2018 | Detection of dysplasia arising in BE | P | ML algorithm: DT (WEKA package) | 40 pts BE ± dysplasia | Video HD-EGD, i-Scan | 92 | 97/88 | - | |||||

| Ebigbo et al. [28] | 2020 | Real-time detection of early neoplasia in BE | R/P | DeepLab V.3+, an encoder–decoder ANN (ResNet 101 layers) | classification (global prediction), segmentation (dense prediction) | 129 EGD images | HD-WLE/gNBI | 14 pts BE (validation dataset) | 26/36 images (validation dataset) | random images from real-time camera livestream | 89.9 | 83.7/100.0 | - | |

| De Groof et al. [65] (the ARGOS project) | 2019 | Recognition of Barrett’s neoplasia | P | supervised ML models (trained on color/texture features), SVM | leave-one-out CV | 60 pts (20/40); 60 EGD images | HD-WLE | 92 | 95/85 | 0.92 | ||||

| Guo et al. [10] | 2020 | Real-time automated diagnosis of precancerous lesions and ESCCs | R/P | DL model: SegNet = deep encoder–decoder architecture for multi-class pixelwise segmentation | AI probability heat map generated for each input (ESD image) | 358/191 pts | 6473 (3703/2770) images | NBI images | Validation: 59 consecutive cc cases (dataset A); 2004 consecutive non-cc cases (dataset B); 27 non-ME cc cases + 20 ME cc cases (dataset C); 33 normal cases (dataset D) | Validation: 1480 cc images (dataset A); 5191 non-cc images (dataset B); 27 non-ME cc images + 20 ME cc images (dataset C); 33 normal images (dataset D) | NBI images (datasets A, B); NBI video EGD images (datasets C, D) | - | 98.04/95.03 (datasets A, B); sensitivity per frame/lesion: 60.8/100 (non-ME video C), 96.1/100 (ME video C); specificity per frame/lesion: 99.9/90.9 (video D) | 0.989 (datasets A, B) |

| Ohmori et al. [71] | 2020 | Detect and differentiate esophageal SCC | R | deep neural network-SSD | Caffe deep learning framework | 804 SCC pts | 9591 non-ME/7844 ME, SCC images; 1692 non-ME/3435 ME, non-cc images | ME/non-ME ESD images | 135 pts | 255 non-ME WLE; 268 non-ME, NBI/BLI; 204 ME-NBI/BLI ESD images | non-ME WLE; non-ME/ME NBI, BLI | 83 | 98/68 | - |

| Tokai et al. [73] | 2020 | Diagnostic ability of AI to measure ESCC invasion depth | R | deep neural network-SSD, GoogLeNet | Caffe deep learning framework | pre-training: 8428 images; training: 1751 EGD images | WLE/NBI images | 55 consecutive patients, 42 with EP-SM1 ESCC and 13 with SM2 ESCC | 291 images | WLE/NBI images | 95.5 (SCC diagnosis); 80.9 (invasion depth) | 84.1 (invasion depth) | - | |

| Zhao et al. [85] | 2019 | Automated classification of IPCLs to improve the detection of esophageal SCC | P | double-labelling FCN, self-transfer learning | VGG16 net architecture, 3-fold CV | 219 pts (30 inflammation, 24 LGD, 165 ESCC); 1350 images → 1383 lesions (207 type A, 970 type B1, 206 type B2) | ME-NBI images | 89.2 (lesion level), 93 (pixel level) | 87.0/84.1 (lesion level) | - | ||||

| Everson et al. [86] | 2019 | Real-time classification of IPCL patterns in the diagnosis of ESCC | P | CNN, eCAMs (discriminative areas normal/abnormal) | five-fold CV | 17 pts (7 normal, 10 ESCC); 7046 sequential HD images | ME-NBI images (Video EGD) | 93.7 (normal/abnormal IPCL) | 89.3/98 | - | ||||

| García-Peraza-Herrera et al. [87] | 2020 | Classify still images or video frames as normal or abnormal IPCL patterns (esophageal SCC detection) | P | CNN architecture for the binary classification task (explainability) ResNet18CAM-DS | 114 pts (45/69); 67,742 annotated frames (28,078/39,662), with an average of 593 frames per patient | ME-NBI video | 91.7 | 93.7/92.4 | - | |||||

| Kodashima et al. [95] | 2007 | Discrimination of normal/malignant esophageal tissue at the cellular level | P, ex vivo pilot | ImageJ program | 10 pts | Endocytoscopy | Difference in the mean ratio of total nuclei: 6.4 ± 1.9% in normal vs. 25.3 ± 3.8% in malignant samples | |||||||

| Shin et al. [96] | 2015 | Diagnosis of esophageal squamous dysplasia | P | Linear discriminant analysis | 177 pts; 375 sites (training set 104 sites; test set 104 sites; validation set 167 sites) | Laptop-interfaced HRME | 87/97 | - | ||||||

| Quang et al. [97] | 2016 | Diagnosis of esophageal SCC | R | Linear discriminant analysis | Same data as for [124] | Tablet-interfaced HRME | 95/91 | - | ||||||

| Kumagai et al. [98] | 2019 | Diagnosing ESCC based on ECS images (optical biopsy) | R/P | CNN based on GoogLeNet, 22 layers-backpropagation | Caffe deep learning framework | 240 pts (114/126) → 308 ECS | 4715 (3574/1141) images | ECS images | 55 consecutive pts (28/27) | 1520 images | ECS images | 90.9 | 92.6/89.3 | 0.85; 0.90 (HMP) 0.72 (LMP) |

| Horie et al. [74] | 2019 | Detection of esophageal cancer (SCC and AC) | R | deep CNN-SSD | Caffe deep learning framework | 384 pts esophageal cc (397 lesions ESCC, 32 lesions EAC) | 8428 images esophageal cc | WLE/NBI images | 50/47 pts (49 lesions: 41 ESCC, 8 EAC) | 1118 images | WLE/NBI images | 98 (superficial/advanced cc); 99 for ESCC, 90 for EAC | 98 | - |

| Luo et al. | 2019 | AI for the diagnosis of upper gastrointestinal cancers | R/P | GRAIDS: DL semantic segmentation model (encoder-decoder DeepLab’s V3+ algorithm) | internal validation, external validation (5 hospitals), prospective validation | 1,036,496 endoscopy images from 84,424 individuals used to develop and test GRAIDS | HD-WLE EGD | 95.5 (internal validation set); 92.7 (prospective set); 91.5–97.7 (5 external validation sets) | 94.2/92.3 (prospective set) | 0.966–0.990 (five external validation datasets) | ||||

| Status | Study Title | Number ID/Acronym | Study Type | Conditions | Design/Interventions | Outcomes | Target Sample Size (No. Participants) | Region |
|---|---|---|---|---|---|---|---|---|
| Recruiting | The analysis of WATS3D increased yield of Barrett’s esophagus and esophageal dysplasia | NCT03008980 | Observational | | Diagnostic test: patients will undergo routine-care EGD with WATS3D brush samples and forceps biopsies; collection of cytology/pathology results | Primary: outcomes of patients undergoing WATS sampling, specifically the incremental yield for Barrett’s esophagus and esophageal dysplasia due to WATS sampling above that noted from routine forceps biopsies in various clinical settings | 75,000 | US |
| Recruiting | Volumetric laser endomicroscopy with intelligent real-time image segmentation (IRIS) | NCT03814824 | Interventional | | Diagnostic tests: IRIS, VLE. Patients will undergo a VLE exam ± IRIS per the standard of care. They will be randomized into VLE without IRIS first vs. VLE with IRIS first | Primary: time for image interpretation; biopsy yield; number of biopsies | 200 | US |
| Completed | A comparison of Volumetric Laser Endomicroscopy and endoscopic mucosal resection in patients with Barrett’s dysplasia or intramucosal adenocarcinoma | NCT01862666 | Observational | | To evaluate the ability of physicians to use VLE to visualize HGIN/IMC in both the ex vivo and in vivo setting and to correlate those images with standard histology of EMR specimens as the gold standard | Primary: the correlation of features seen on VLE images with those seen on histopathology from EMR specimens. Secondary: the creation of an image atlas to determine the intra- and inter-observer agreement on VLE images in correlation with histopathology → refinement of the existing VLE image interpretation criteria and validation of the VLE classification | 30 | The Netherlands |
| Preinitiation | The additional effect of AI support system to detect esophageal cancer-exploratory randomized control trial | UMIN 000039924/AIDEC | Interventional | | To investigate the efficacy of AI for the diagnosis of esophageal cancer | Primary: improvement of the detection rate with the AI support system among less experienced endoscopists. Secondary: improvement of the detection rate with the AI support system among experienced endoscopists | 300 | Japan |
| Recruiting | Automatic diagnosis of early esophageal squamous neoplasia using pCLE with AI | NCT04136236 | Observational | | Diagnostic test: diagnosis by AI and by endoscopist | Primary: the diagnostic efficiency of AI for esophageal mucosal disease on real-time pCLE examination. Secondary: comparison of the diagnostic efficiency of AI with that of endoscopists | 60 | China |
| Recruiting | Research on development of AI for detection and classification of upper gastrointestinal cancers in endoscopic images | UMIN000039597 | Observational | | Collection of endoscopic images of upper GI cancer; development of an AI system for detection of upper GI cancer; assessment of the AI system’s performance by expert endoscopists | Primary: accuracy of the AI system for detection of upper GI cancers in endoscopic images. Secondary: accuracy of the AI system for classification of upper GI cancers in endoscopic images | 200 | Japan |
| Completed (April 2020) | AI for early diagnosis of esophageal squamous cell carcinoma during optical enhancement magnifying endoscopy | NCT03759756 | Observational | | Arm group label: AI visible/invisible group. The endoscopic novices analyzing the image can/cannot see the automatic diagnosis | Primary: the diagnostic efficiency (sensitivity, specificity, and accuracy) of the AI model | 119 | China |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lazăr, D.C.; Avram, M.F.; Faur, A.C.; Goldiş, A.; Romoşan, I.; Tăban, S.; Cornianu, M. The Impact of Artificial Intelligence in the Endoscopic Assessment of Premalignant and Malignant Esophageal Lesions: Present and Future. Medicina 2020, 56, 364. https://doi.org/10.3390/medicina56070364
