1. Introduction

Throughout history, caves have acquired a broad range of uses and meanings, formed over long periods that span prehistoric, historic, and contemporary phases. These uses have generated distinct values and meanings for diverse groups, including local communities, international researchers, and tourists. Caves are unique in that they fulfill, at least to some extent, all of the criteria for natural, tangible, intangible, and historical heritage protection, which makes them difficult to manage. The past, present, and future importance of caves and their multiple uses and meanings have not been consistently considered in measures to protect or manage them. Increasing pressure from economic development practices, including logging, mining, and tourism, further complicates their management and conservation [1].

From a preservation perspective, the protection of cave heritage is extremely important. Underground sites have become attractive tourist destinations for an increasing number of visitors. This flow of visitors has made sustainability a major issue, namely, how tourism development can bring economic benefits to host communities and respect local identity without compromising environmental resources [2]. Underground sites have intrinsic scientific (e.g., geological record), cultural (e.g., superstitious rituals, archaeological artifacts), and recreational (e.g., exploring inner caves) value [3], which is leveraged by “cave tourism”, including, among other forms of tourism, geo-tourism, mining tourism, and adventure tourism [4,5].

In this paper, we study the Alistrati cave, which is situated in the area of the town of Serres, Greece. The entrance of the cave lies on the side of a hill near the Serres–Drama railroad track, at a place called Ambartsiki. The cave is formed within masses of marble with silex. The main corridors have a horizontal length of about 1438 m, while the total length exceeds 2500 m; the average height is 5–10 m, with a maximum of 30 m. The average width of the cave is 5 m, with a maximum of 20–35 m. A variety of formations, including numerous stalactites and stalagmites, richly decorate the cave, which is considered one of the most important in Europe. The cave was first explored in 1976 by a team of Austrian scientists (R. Seeman). According to these scientists, the Alistrati cave might be connected with Peristeriona, a smaller but equally interesting cave with a separate entrance [6].

The main contribution of this work is the implementation and provision of a virtual reality (VR) tour of the Alistrati cave that could extend the site's reach to a wider audience, enhance its visibility and reputation, and, at the same time, enable alternative valorization schemes that make visits to the heritage site more sustainable.

2. Background, Related Work, and Methods

2.1. 3D Reconstruction Technologies for Heritage Sites

Technologies and techniques for digitizing a three-dimensional (3D) object into a 3D model have been well researched in the literature. Photogrammetry, one of the most widely used, is the process of obtaining, measuring, and interpreting information about an object based on how light rays reflect from different points of the object [7]. Today, anyone who wants a fairly accurate digital representation of a 3D object can capture many high-resolution images from different angles and process them with photogrammetry software or even a simple mobile application. This approach has been applied many times. For example, in the ARCO pipeline [8], stereophotogrammetry was the main tool used to create 3D representations of museum artifacts for a fully digital exhibition. Neural networks and deep learning are now accelerating this technology in terms of both time and memory complexity [9,10].

Photogrammetry has even been used to reconstruct entire geographical locations. Agarwal et al. used a distributed system of computer vision algorithms to gather and match photographs from Internet photo-sharing sites and reconstruct the entire city of Rome, Italy, in 3D [11].

In [12], two emblematic caves of northern Spain were reconstructed using a multi-sensor hybrid approach based on Terrestrial Laser Scanning (TLS), and the authors provide a useful summary of the advantages and disadvantages of existing photogrammetry and laser scanning technologies. Similarly, Grussenmeyer et al. [13] attempted to reconstruct specific structural elements and prehistoric wall art found in a cave in Perigord, France, combining several 3D reconstruction technologies, namely TLS, photogrammetry, and spatial imaging. Another example is the ‘African Cultural Heritage and Landscape Database’ project, which aimed to create a digital library of spatial and non-spatial materials relating to cultural heritage sites in Africa. The archaeological site of Wonderwerk Cave, South Africa, is one of the 19 sites documented to date using laser scanning, conventional survey, digital photogrammetry, and 3D modeling [14].

2.2. 3D Reconstruction in the Game Industry

Building virtual 3D scenes requires artistic sense, specialized and expensive software, computational resources, and manual effort [15]. Traditionally, this process required several visual references [16], with higher-budget games investing in field trips during preproduction to capture authentic photographs on location [17,18]. Until recently, photogrammetry was used only sporadically, because dense meshes of millions of polygons are highly unsuitable for real-time rendering. In May 2015, EA announced that “Star Wars: Battlefront” would rely heavily on photogrammetry [19]. This was a radical change in game development: the team used photogrammetry to recreate not only props and outfits previously used in the movies but also the epic locations familiar to Star Wars fans. Creating realistic photogrammetry scans is still computationally demanding and, as demonstrated by [20], populating extensive game worlds with photogrammetry assets demands larger-scale solutions. Another consideration is the post-processing that photogrammetry reconstructions require [21]. In the past, artists struggled to create visually convincing replications of the real world and were eventually surpassed in this respect by photography. Photography revolutionized art mainly because it relieved artists of the burden of realism and allowed them to explore their creativity freely [22]. Recently, several approaches that use 3D reconstructions to provide immersive cultural experiences have been proposed [23,24,25], including games [26] that present sites of cultural significance, such as the Chios exploration game [27].

2.3. Virtual Exhibitions

Research on virtual exhibitions (VEs) and the digital preservation of our cultural heritage began in the early 2000s, with most published works focusing on systems based on Web technologies such as HTML/XML [28,29]. The advantages of VEs, namely wide accessibility, ease of use, and minimal cost, quickly became apparent. In the early 2010s, research focused on the quality characteristics of virtual exhibitions (localization, relevance, interaction, and accessibility); this work produced a web-based VE promoting rare historical manuscripts and seals and contributed basic guidelines for creating interesting and compelling VEs [30,31,32]. At the same time, digital technology was also explored as a way of enhancing the museum experience through on-site VEs [33,34] and of extending the target user population through inclusive technologies [35].

Around the same time as [29], Martin White et al. [8] presented ARCO, a complete architecture for the digitization, management, and presentation of VEs. ARCO was introduced as a complete solution for museums of the time to enter the era of 3D virtual exhibitions, providing both complete 3D VEs and augmented reality capabilities with interactive elements.

In 2009, a survey by Beatriz et al. [36] described emerging technologies, such as VR, AR, and Web3D, that were widely used to create virtual museum exhibitions, both in the museum environment through informative kiosks and on the World Wide Web. To further extend the user's experience and immersion, 3D models and free user navigation are necessary. This requires recreating in 3D not only the exhibits but also the exhibition space.

Recently, platforms that allow users to create interactive and immersive virtual 3D/VR exhibitions have been proposed, such as the InvisibleMuseum platform, which offers a collaborative authoring environment to support (a) user-designed dynamic virtual exhibitions, (b) personalized suggestions and exhibition tours, (c) visualization in web-based 3D/VR technologies, and (d) immersive navigation and interaction [37].

2.4. VR Technologies for Virtual Visits to Archeological Sites

In recent times, as virtual reality (VR) technology and head-mounted displays (HMDs) have become increasingly widespread and part of our culture, research on VR and VEs has naturally grown. In 2017, Filomena Izzo [38] conducted a case study on the effects of VR on the customer experience in museums. The experiment transported users into a virtual world inspired by a painting called “Four Visions Afterlife” and showed them the perspective of great artists from five centuries ago. The author reports that the results of this experiment were very positive. Another work by Deac et al. [39] used VR to create a world in which companies, professionals, and industry experts can exhibit their work in a virtual trade show. Another notable approach to visiting an archeological site in VR was carried out by Guy Schofield et al. [40]. In their implementation, the user is immersed in a 9th-century Viking encampment, experiencing its sights and sounds firsthand. Their team consisted of artists, archeologists, curators, and researchers, and their main goal was authenticity. In this way, the VR environment can reach its full potential, serving as a window to the past rather than merely as another way to preview museum exhibits.

3. Alistrati Cave Model Implementation

A key aspect of this work, and our most important requirement, was to capture the original topology of the cave with laser scanning and photogrammetry and to reconstruct the entire cave as a 3D model.

3.1. Laser Scanning

A fixed laser scanner with a scanning range of 70 m was used to scan areas within the Alistrati cave. A series of scans was performed from multiple scanner positions to achieve full coverage. The Alistrati cave comprises eight points of interest (stops), which were covered with 29 grayscale scans. The data were processed in the Faro Scene software [41], using special spheres that the software identifies for automated registration. These spheres are placed in the scene so as to maximize the visibility of each one from the different scanning positions; the software then recognizes the spheres shared between scans and performs the registration automatically. The scans were registered into a point cloud for each point of interest and exported. Each point of interest was subdivided into nine point clouds, and each point cloud was imported into Faro Scene again to create the mesh. Highly detailed mesh models were generated from the point clouds and exported.
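The idea behind sphere-based registration can be illustrated with a simplified sketch; it is not a description of Faro Scene's internal algorithm. It assumes, as a simplification we introduce, that each scan is already levelled, so two scans differ only by a rotation about the vertical axis plus a translation, and that sphere correspondences are known (the same sphere appears at the same list index in both scans). Under these assumptions, the least-squares rotation has a closed form. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def register_levelled_scans(source: List[Point], target: List[Point]) -> Tuple[float, Point]:
    """Estimate the yaw (radians) and translation mapping `source` sphere centres onto `target`.

    Assumes both scans are levelled (only rotation about the vertical z axis remains)
    and that source[i] and target[i] are the same physical sphere.
    """
    if len(source) != len(target) or len(source) < 2:
        raise ValueError("need at least two matched sphere centres")
    n = len(source)
    sc = [sum(p[k] for p in source) / n for k in range(3)]  # source centroid
    tc = [sum(q[k] for q in target) / n for k in range(3)]  # target centroid

    # Accumulate 2D dot and cross products of the centred horizontal coordinates.
    s_dot = s_cross = 0.0
    for p, q in zip(source, target):
        px, py = p[0] - sc[0], p[1] - sc[1]
        qx, qy = q[0] - tc[0], q[1] - tc[1]
        s_dot += px * qx + py * qy
        s_cross += px * qy - py * qx

    # Closed-form least-squares yaw for a rotation about z.
    yaw = math.atan2(s_cross, s_dot)
    c, s = math.cos(yaw), math.sin(yaw)
    # Translation = target centroid - R(yaw) * source centroid.
    t = (tc[0] - (c * sc[0] - s * sc[1]),
         tc[1] - (s * sc[0] + c * sc[1]),
         tc[2] - sc[2])
    return yaw, t


def apply_transform(yaw: float, t: Point, p: Point) -> Point:
    """Rotate `p` about z by `yaw`, then translate it by `t`."""
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1], p[2] + t[2])
```

Without the levelling assumption, a full 3D rigid fit (e.g., an SVD-based Kabsch solution) would be needed instead.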

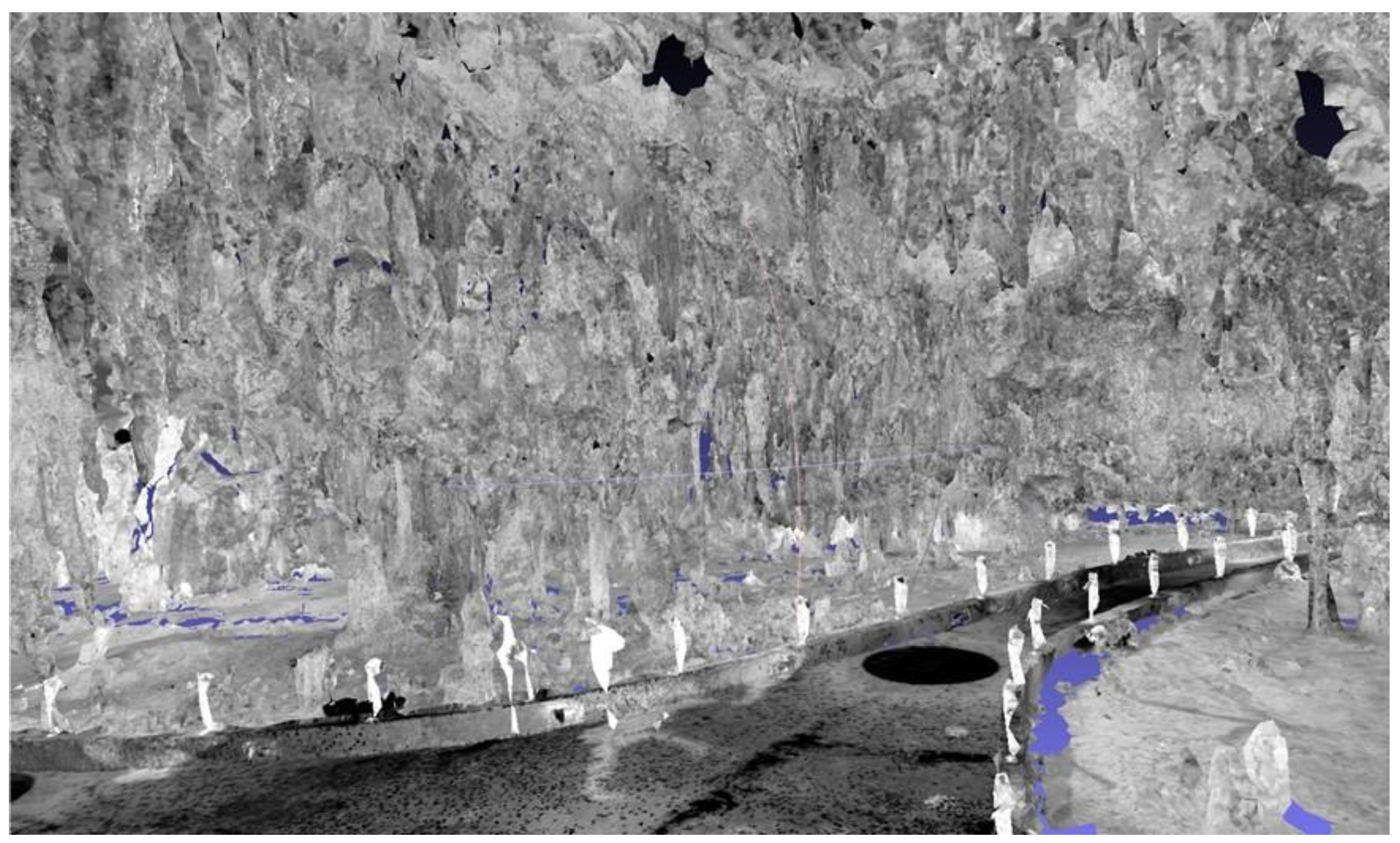

Individual scans were acquired without color information for two main reasons: (a) to reduce the time needed to cover a path of almost 150 m within the cave and (b) because the peculiar lighting conditions within the cave would have made the color information unreliable. An example can be seen in Figure 1.

3.2. Aerial Photogrammetry

For the aerial reconstruction of the exterior of the Alistrati cave, the Phantom 4 Pro V2 drone was used to collect aerial photos and videos. The drone was equipped with a camera with a fixed 24 mm lens, capable of capturing 20-megapixel aerial photographs and recording 4K video (also known as ultra-high definition, UHD) in H.264 and H.265 (High Efficiency Video Coding, HEVC). Three batteries were available, each offering a flight time of about half an hour. For the aerial photography and video recording, specific flight paths were created in the Pix4DCapture software for the drone to follow, and appropriate filters were fitted to the camera lens depending on the lighting conditions on the day of the flights. The flying height was determined based on the morphology of the area, and an altitude of 40 m was judged sufficient to capture the desired level of detail for the final photogrammetry model. More specifically, the “Double Grid” mission was selected in Pix4DCapture, with a camera angle of 70 degrees, a front overlap of 80%, and a side overlap of 70%. The software recommends this option for point-cloud reconstruction of surfaces with height variations.
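To make the effect of the 40 m altitude and the overlap settings concrete, the sketch below computes the ground sampling distance (GSD), the image footprint, and the resulting spacing between photos. The camera constants are assumed nominal values for the Phantom 4 Pro V2 (a 13.2 × 8.8 mm sensor, an 8.8 mm physical focal length behind the "24 mm" 35 mm-equivalent lens, 5472-pixel image width) and should be checked against the manufacturer's specification. The formulas assume a nadir-pointing camera, whereas the mission used a 70-degree camera angle, so the numbers are first-order estimates only. Which image axis runs along the flight line is also an assumption.

```python
from typing import Tuple

# Assumed nominal camera values (verify against the manufacturer's spec sheet).
SENSOR_WIDTH_MM = 13.2
SENSOR_HEIGHT_MM = 8.8
FOCAL_LENGTH_MM = 8.8   # physical focal length behind the "24 mm" equivalent lens
IMAGE_WIDTH_PX = 5472


def ground_sampling_distance_cm(altitude_m: float) -> float:
    """Ground size of one pixel (cm/px) for a nadir-pointing camera."""
    return (SENSOR_WIDTH_MM * altitude_m * 100.0) / (FOCAL_LENGTH_MM * IMAGE_WIDTH_PX)


def footprint_m(altitude_m: float) -> Tuple[float, float]:
    """Ground footprint (width, height) in metres of one nadir image."""
    return (altitude_m * SENSOR_WIDTH_MM / FOCAL_LENGTH_MM,
            altitude_m * SENSOR_HEIGHT_MM / FOCAL_LENGTH_MM)


def spacing_m(footprint_extent_m: float, overlap: float) -> float:
    """Distance between consecutive photos (or flight lines) for a given overlap ratio."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_extent_m * (1.0 - overlap)


width, height = footprint_m(40.0)
print(f"GSD at 40 m: {ground_sampling_distance_cm(40.0):.2f} cm/px")
print(f"photo spacing, 80% front overlap (image height along track): {spacing_m(height, 0.80):.1f} m")
print(f"line spacing, 70% side overlap (image width across track): {spacing_m(width, 0.70):.1f} m")
```

Under these assumptions, the 40 m flight gives roughly 1.1 cm per pixel, a photo every 8 m along each line, and flight lines about 18 m apart.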

The data were post-processed using the Pix4D Mapper photogrammetry software [42], into which the aerial photographs were uploaded. The photographs had been collected with Pix4DCapture, which performs a fully autonomous flight and scan of the area of interest along a predetermined path. The reconstructed site can be seen in Figure 2.

3.3. 3D Model Synthesis and Coloring

In this stage, the interior and exterior models were registered to create the synthetic environment used by the VR tour guide. The interior required extensive rework to (a) fill the gaps caused by visual occlusions during laser scanning and (b) color the interior using reference photography from the heritage site.

For this, an extensive, artist-driven approach was followed to fill the missing parts using the 3D application Blender [43]. Owing to the chaotic geometry of the stalactites and stalagmites, an abstract low-polygon approach was used for the newly created meshes. To balance feature preservation with satisfactory performance on a stand-alone VR headset, a budget of half a million polygons on average was set for each point-of-interest (POI) scene. The main target mesh, with 350,000 faces, was generated from the point cloud using FARO Studio, covering as much surface area as possible. Unneeded polygons, such as those of columns and ropes, were deleted and replaced with repeated instances of a cleaner version. The target mesh was further enhanced by manually modeling the blank parts with a topological structure equivalent to that of the neighboring LiDAR data. Modeling started by extracting the boundary edge loops, thus ensuring polygon continuity. Every POI consists of overlapping scans of 10–15 million faces, and all available features, such as albedo, normals, and coverage area, were baked onto the target mesh as multiple 8K textures, with a ray distance of 5 cm for the albedo and 2 cm for the normals to minimize artifacts from negative angles at the polygonal gaps. To retain the best texture quality, new UVs were created by manually marking seams in areas near the main path followed by the user, combined with Smart UV Project at a 30-degree angle limit for the chaotic structures in distant areas, with an island margin of 1/16,384. For every scan extraction, the bake output margin was set to 3 pixels, as this retains textures on acute-angled triangles while also avoiding texture bleeding into the non-overlapping parts.
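To relate the UV settings above to the 8K (8192 × 8192 pixel) bake targets, it helps to convert between UV units and pixels. The conversion below assumes that the island margin is measured as a fraction of the unit UV square; how Blender interprets this value may depend on the version and margin settings. Here R is the texture resolution, m the island margin, and b the bake margin:

```latex
% Assumption: the island margin m is a fraction of the unit UV square [0, 1]
m_{\text{px}} = R \cdot m_{\text{UV}} = 8192 \times \frac{1}{16\,384} = 0.5\ \text{px}
\qquad
b_{\text{UV}} = \frac{b_{\text{px}}}{R} = \frac{3}{8192} \approx 3.7 \times 10^{-4}
```

Under this assumption, neighboring UV islands sit only about half a pixel apart on an 8K texture, while the bake margin extends each island's baked colors by 3 pixels.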

A shader node tree was used to combine and enhance all of the textures. Multiple Mix Color nodes set to Lighten combined the albedo textures, brightening the dark areas with contributions from all of the scans, while the alpha channel was used to mask in the secondary textures cloned from neighboring regions for the reimagined missing parts, for both albedo and normals. Masks were used to separate the cave from the concrete pathway, and a brown color filter that uniformly shifted the hue towards green and blue was applied to match reference images of the decomposed granite and stalagmite composition of the cave. Finally, colored lights matching those present in the real cave were added for extra vibrancy. The entire process is summarized in Figure 3.
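The per-texel logic of the node tree described above can be sketched in a few lines. This is an illustration of the standard lighten blend (keep the brighter value per channel) chained over several scans, followed by an alpha mask that swaps in the cloned fill texture; it assumes a mix factor of 1 and linear RGB values in [0, 1], and the function names are hypothetical rather than Blender API calls.

```python
from functools import reduce
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def lighten(a: RGB, b: RGB) -> RGB:
    """Lighten blend (factor 1): keep the brighter value in each channel."""
    return (max(a[0], b[0]), max(a[1], b[1]), max(a[2], b[2]))


def combine_scans(albedos: Sequence[RGB]) -> RGB:
    """Chain lighten blends over every scan's albedo sample for the same texel."""
    return reduce(lighten, albedos)


def mask_in(base: RGB, fill: RGB, alpha: float) -> RGB:
    """Alpha mask: 0 keeps the scanned value, 1 uses the cloned neighboring texture."""
    return (base[0] * (1.0 - alpha) + fill[0] * alpha,
            base[1] * (1.0 - alpha) + fill[1] * alpha,
            base[2] * (1.0 - alpha) + fill[2] * alpha)
```

Because lighten only ever keeps the brighter sample, a texel that is dark in one scan (for example, because it was grazed at a shallow angle) is brightened by any other scan that saw it better.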

3.4. Requirements for the VR Visit Scenario Definition

Before implementing the VR application for the Alistrati cave, specific system requirements had to be defined. First, the VR tour should match the real-life one in terms of information, although the means of providing the information about the cave could vary. Ideally, we would have used the 3D model described in Section 3.3 in our app, but this proved to be a near-impossible task. Reconstruction techniques based on point cloud data produce 3D models with highly unoptimized topology and complex UV maps that are simply unfit for VR purposes. Even on high-end computers, simply loading the entire cave in computer-aided design (CAD) software proved to be a rather cumbersome process. Moreover, it was decided that the app should run smoothly on stand-alone VR devices, to reach users who cannot afford expensive desktop VR gear. Another requirement was multi-language support for the VR tour, and, last but not least, the experience had to immerse the user with high-fidelity visuals and environmental sounds.

4. The VR Tour Guide

For the implementation of the VR tour guide app, the Unity game engine was used [44]. Unity is a general-purpose engine that enables fast development iterations together with high-fidelity 3D rendering. Specifically, the Universal Render Pipeline (URP) was used, which comes with the node-based Shader Graph for creating custom shaders without writing graphics processing unit (GPU) shader code directly. In addition, Unity's own XR SDK was the main VR library used in this project. Using the XR SDK greatly accelerated the development cycle of the VR app, and Unity's multi-platform support made it easy to port the app across different HMDs. During development, the Oculus (Meta) Quest 2 was the main testing device; at the time of writing, it was the industry's de facto standard and most affordable stand-alone VR headset.

4.1. Digital Cave Game Level Optimization

VR development is notoriously demanding in terms of performance, since every frame has to be rendered twice, once for each of the user's eyes, to simulate spatial depth. Rendering everything twice carries a heavy performance cost, which means settling for lower-fidelity graphics and optimizing as much as possible. Low frame rates or frame drops in VR applications may make the user feel nauseous and ultimately create negative feelings both towards the tour of the cave and towards VR as a whole.
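A quick way to reason about this constraint is the per-frame time budget: at a display refresh rate of f Hz, both eye views must be finished within 1000/f milliseconds, or a frame is dropped. The Quest 2 can run at refresh rates such as 72 Hz and 90 Hz; the sketch below, with hypothetical function names and made-up example frame times, converts a refresh rate into a budget and counts frames that exceed it.

```python
from typing import Iterable


def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render both eye views of one frame."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


def missed_frames(frame_times_ms: Iterable[float], refresh_hz: float) -> int:
    """Count frames whose total render time exceeded the budget (i.e. would be dropped)."""
    budget = frame_budget_ms(refresh_hz)
    return sum(1 for t in frame_times_ms if t > budget)


print(f"72 Hz budget: {frame_budget_ms(72):.2f} ms, 90 Hz budget: {frame_budget_ms(90):.2f} ms")
```

The same 13 ms frame that fits comfortably at 72 Hz (about 13.9 ms) is already over budget at 90 Hz (about 11.1 ms), which is why the optimizations below target a stand-alone headset's worst case.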

4.1.1. Handling the Vast Amount of Data

As mentioned in Section 3.4, the reconstruction technique outputs a highly unoptimized 3D model, unfit for a stand-alone VR application. A 3D artist could recreate the entire cave by hand, using the photo-scanned model as a base, and export a final optimized model that contains far fewer, but better-defined, vertices. This step of recreating a model with clean geometry, known as retopology, is common in game development when creating virtual characters that were initially conceptualized through digital sculpting. However, re-modeling the complete Alistrati cave dataset would require intensive manual work, and it was decided that it would not be worth the effort for this project. Our solution was to divide and conquer: the cave was split into multiple parts, each represented as a separate game level in the VR application. To move from one part of the cave to the next, the user enters magical portals positioned at the start and end of each part. This greatly reduces the amount of information that has to be handled in each scene. Specifically, each part of the final application contains one or two points of interest, where the virtual tour assistant stops and provides information to the user. The assistant then moves towards the portal and waits until the user approaches before entering it.
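The divide-and-conquer split can be sketched as a greedy grouping of consecutive POIs into levels, each capped at two POIs and at a polygon budget, with every level's exit portal pointing to the next one. The one-million-polygon per-level budget is our assumption, loosely derived from the half-million-polygon average per POI scene mentioned in Section 3.3; the class and function names are hypothetical and not part of the Unity project.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Level:
    index: int
    pois: List[str] = field(default_factory=list)
    polygons: int = 0
    next_level: Optional[int] = None  # level loaded by the exit portal; None for the last level


def partition_into_levels(pois: List[Tuple[str, int]], max_pois: int = 2,
                          polygon_budget: int = 1_000_000) -> List[Level]:
    """Greedily group consecutive (name, polygon_count) POIs into levels along the tour path."""
    levels: List[Level] = []
    current = Level(index=0)
    for name, polys in pois:
        over_budget = bool(current.pois) and current.polygons + polys > polygon_budget
        if len(current.pois) >= max_pois or over_budget:
            levels.append(current)  # close this level and start the next one
            current = Level(index=len(levels))
        current.pois.append(name)
        current.polygons += polys
    if current.pois:
        levels.append(current)
    for level in levels[:-1]:
        level.next_level = level.index + 1  # portal at the end of each part
    return levels
```

With eight POIs of about half a million polygons each, this yields four levels of two POIs, chained by portals; a single oversized POI still gets a level of its own.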

4.1.2. Baked Lighting

When a frame is rendered in a computer graphics application, many complex calculations take place behind the scenes, ranging from applying a transformation matrix to each object (move, rotate, scale) to calculating how light realistically illuminates the scene. Naturally, the more complex the illumination model, the more realistic the final result, but also the more expensive it is to compute. Fortunately, there is an exception that allows realistic results at almost no runtime cost: if an object and a light source are static, meaning that they will not move for the rest of their lifetime, the diffuse lighting result will be the same for the entire duration of the application. We can therefore avoid repeating those calculations every frame by computing them once, before the app runs. This technique is called baked lighting, and it is commonly used to dramatically increase performance in real-time computer graphics applications.
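The principle of baking can be shown with a deliberately simplified sketch: one static point light, Lambertian (diffuse) surfaces, direct light only, and a single scalar value per texel, whereas the Blender bake described below also includes indirect light and color. The lighting is evaluated once per texel when the lightmap is built; at runtime, shading reduces to looking up the stored value, as an unlit shader would. All names are hypothetical.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def lambert_direct(position: Vec3, normal: Vec3, light_pos: Vec3, intensity: float) -> float:
    """Direct diffuse lighting from a static point light with inverse-square falloff."""
    to_light = _sub(light_pos, position)
    dist2 = _dot(to_light, to_light)
    if dist2 == 0.0:
        return 0.0
    cos_theta = _dot(normal, to_light) / math.sqrt(dist2)  # normal assumed to be unit length
    return intensity * max(0.0, cos_theta) / dist2


def bake_lightmap(texels: Dict[int, Tuple[Vec3, Vec3]], light_pos: Vec3,
                  intensity: float) -> Dict[int, float]:
    """Offline step: evaluate lighting once per texel (id -> (position, normal))."""
    return {tid: lambert_direct(p, n, light_pos, intensity) for tid, (p, n) in texels.items()}


def shade_unlit(albedo: float, lightmap: Dict[int, float], texel_id: int) -> float:
    """Runtime step: a lookup and a multiply, with no light evaluated per frame."""
    return albedo * lightmap[texel_id]
```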

In our implementation, we initially tried to use Unity's integrated baking pipeline, but it did not work well with the geometry of our cave 3D model, which was mostly created by automated point-cloud triangulation. Our next solution was to use Blender to bake the lighting. The process involved placing the light sources by hand in the places that matched the photographic material available from the actual cave facility. Using Blender's rendered viewport, we could preview the results of the lighting placement in near real time. Finally, we baked the direct, indirect, and color passes of the diffuse light type into a new texture of 8192 × 8192 pixels. By repeating this process for each separate part of the cave, we obtained image textures that can be used with unlit shaders, which perform no lighting calculations. The results of the baking technique are shown in Figure 4. Note that since there are no light sources in the scene, normal maps cannot be used to further increase the visual fidelity of the final result. Specialized techniques exist for applying normal maps even in such cases, but they were not explored in this work.

4.1.3. Navigating the Cave and Controls

It is standard practice in the industry to provide at least two ways of moving in a VR environment: (a) joystick walking and (b) point-and-teleport. In the Alistrati cave application, the user can move as if walking by pushing the left-hand joystick in the desired direction; by clicking the right-hand joystick, the user can turn the viewing direction by 30 degrees; and, lastly, by pressing the right-hand trigger, the user can target a position on the floor and teleport there instantly. Both methods are offered because some people tend to feel nauseous when only the walking method is available: the user perceives motion in the virtual environment, while the body senses that it is not actually moving. Finally, since the only user interface in the application is the language selection, the user can interact with its elements by pressing either the primary or the secondary button on the controllers.
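The two non-walking controls described above reduce to simple geometry, sketched below under stated assumptions: snap turning adds a fixed 30-degree step to the yaw, and teleport targeting intersects a straight controller ray with a flat floor plane. The real cave floor is not flat and VR toolkits often use curved teleport arcs, so the flat plane at height 0 (in a y-up convention, as Unity uses) and the straight ray are simplifications; the function names are hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def snap_turn(yaw_deg: float, direction: int, step_deg: float = 30.0) -> float:
    """Rotate the view by one fixed step; direction is +1 (right) or -1 (left)."""
    if direction not in (-1, 1):
        raise ValueError("direction must be +1 or -1")
    return (yaw_deg + direction * step_deg) % 360.0


def teleport_target(origin: Vec3, direction: Vec3, floor_y: float = 0.0) -> Optional[Vec3]:
    """Intersect a straight controller ray with a horizontal floor plane (y-up)."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dy >= 0.0:
        return None  # ray is not pointing down towards the floor
    t = (floor_y - oy) / dy  # ray parameter where the ray reaches the floor
    return (ox + t * dx, floor_y, oz + t * dz)
```

For example, a controller held 1.5 m above the floor and pointing 45 degrees downward along the z axis targets a spot 1.5 m ahead.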

4.2. The Virtual Tour Assistant

To assist the user in the VR experience, a 3D model of a robot was created. The robot assistant was designed as a small, flying, friendly robot to appeal to the user (the final result is shown in Figure 5). The assistant acts as a guide, escorting the user through the cave while narrating the history and importance of specific points of interest.

4.2.1. Assistant’s States

The robot assistant executes a simple logic loop while the application is running. First, it moves towards the designated position in the cave. It then waits until the user is close enough, at which point it starts playing the sound associated with that point of interest. When the sound finishes, the robot repeats these steps, moving toward the next stop. The robot's movement is handled by Unity's NavMesh system. To use this system, a walkable path had to be designated so that the AI agent knows where it can move. Cutouts of the original paths of the cave mesh were used as the walkable path, and after these meshes were baked into the NavMesh, the robot assistant could move independently.
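The loop above is a small state machine. The sketch below expresses it engine-independently: the inputs (whether the robot has arrived, the user's distance, whether the clip has finished) would come from the navigation and audio systems in the real app, and the 2 m trigger radius is an assumed value. The class and state names are hypothetical, not the project's Unity scripts.

```python
from enum import Enum, auto
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


class State(Enum):
    MOVING = auto()    # navigating toward the current stop
    WAITING = auto()   # at the stop, waiting for the user to come close
    SPEAKING = auto()  # playing the clip for the current stop
    DONE = auto()      # no stops left


class TourAssistant:
    def __init__(self, stops: List[Vec3], trigger_radius: float = 2.0) -> None:
        self.stops = stops                    # e.g. positions of the stop markers
        self.trigger_radius = trigger_radius  # assumed "close enough" distance in metres
        self.stop_index = 0
        self.state = State.MOVING if stops else State.DONE

    def current_target(self) -> Optional[Vec3]:
        """Destination to hand to the navigation system, or None when the tour is over."""
        return None if self.state is State.DONE else self.stops[self.stop_index]

    def update(self, arrived: bool, user_distance: float, clip_finished: bool) -> State:
        """Advance at most one transition per call (e.g. once per frame)."""
        if self.state is State.MOVING and arrived:
            self.state = State.WAITING
        elif self.state is State.WAITING and user_distance <= self.trigger_radius:
            self.state = State.SPEAKING  # start the clip for stop_index here
        elif self.state is State.SPEAKING and clip_finished:
            self.stop_index += 1
            self.state = State.MOVING if self.stop_index < len(self.stops) else State.DONE
        return self.state
```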

To define where the robot should stop, wait for the user, and play a specific audio file, an empty game object called RobotPath was created. RobotPath contains multiple empty game objects positioned at the places in the cave where the robot should stop and advance to its next state. It also contains another empty game object, PathSounds, which holds a public list of the sound files for every stop in every language supported by the system. When the robot enters the moving phase of its state machine, it uses the NavMesh system to move to the position of the next RobotPath child. When it arrives and stops moving, it plays the sound from the PathSounds list that matches the index of the current stop. The advantage of this setup is that a level designer can place as many stops and sounds as needed in Unity's editor without any knowledge of the code.
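The index-matching convention can be sketched as a small lookup structure. Organizing the clips as one list per language is our assumption (the text only states that the list covers every stop in every language); the key point it illustrates is that every language must provide exactly one clip per stop, so that the current stop index selects the right clip. The class name mirrors the PathSounds object, but this Python analogue is hypothetical.

```python
from typing import Dict, List


class PathSounds:
    """Hypothetical analogue of the PathSounds object: clip names per language, indexed by stop."""

    def __init__(self, clips_by_language: Dict[str, List[str]]) -> None:
        lengths = {len(clips) for clips in clips_by_language.values()}
        # Every language must provide one clip per stop, otherwise indices would drift apart.
        if len(lengths) > 1:
            raise ValueError("all languages must have the same number of clips")
        self._clips = clips_by_language

    def clip_for(self, language: str, stop_index: int) -> str:
        """Clip for the current stop; raises KeyError/IndexError for unknown input."""
        return self._clips[language][stop_index]
```

Validating the list lengths up front turns a misplaced or missing clip into an immediate error instead of the robot silently narrating the wrong stop.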

4.2.2. Animations

As described so far, the robot assistant can perform its main function of roaming around the space and providing information about the cave, but it lacks interactivity and visual appeal, so simple animations were added to enrich the overall experience. First, since the robot levitates, a subtle constant up-and-down motion was added. When moving, the robot tilts forward, as a helicopter would, to visually suggest that its thrusters are propelling it forward. In addition, custom shaders were used in the visor area of the robot to make it feel livelier. Two animated shaders were implemented for the visor with Unity's URP Shader Graph: one for the idle state, in which the robot's eyes blink periodically, and another in which the eyes transform into a sound-wave visualizer while the robot is speaking. Furthermore, a particle system was created that emits particles in the form of sound waves to further emphasize the current status of the robot assistant, as seen in Figure 6. A key advantage of URP in Unity is its support for Shader Graph, which allows shaders to be built visually. As Unity describes it: “Instead of writing code, you create and connect nodes in a graph framework. Shader Graph gives instant feedback that reflects your changes, and it’s simple enough for users who are new to shader creation”.
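The three procedural effects described above (hovering, forward tilt, and periodic blinking) can each be expressed as a small function of time or speed. The amplitudes, frequencies, maximum speed, and blink timings below are assumed values chosen for illustration, not the parameters used in the application, and the function names are hypothetical.

```python
import math


def hover_offset(t: float, amplitude: float = 0.05, frequency_hz: float = 0.5) -> float:
    """Vertical offset (m) for a gentle, constant up-and-down bob at time t (s)."""
    return amplitude * math.sin(2.0 * math.pi * frequency_hz * t)


def forward_tilt(speed: float, max_speed: float = 1.5, max_tilt_deg: float = 15.0) -> float:
    """Pitch (degrees) proportional to speed, clamped, like a helicopter leaning into motion."""
    if max_speed <= 0.0:
        return 0.0
    ratio = min(max(speed / max_speed, 0.0), 1.0)
    return ratio * max_tilt_deg


def eyes_closed(t: float, period_s: float = 4.0, blink_s: float = 0.15) -> bool:
    """Idle visor state: eyes close for blink_s seconds at the start of every period."""
    return (t % period_s) < blink_s
```

In a shader graph, the same blink test would typically drive a mask from the time input rather than return a boolean.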

4.3. Sound Design

Sound is another important ingredient for engaging the user in a virtual world. First, environmental sounds were placed in the three-dimensional space of the scene; for example, the sound of water droplets falling from stalactites, with a light echo to convey spatial depth. In addition, an ambient sound imitating a light breeze blowing through the interior of the cave was added. Lastly, a muffled booming sound was added to simulate the thrusters that allow the robot assistant to fly.
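A light echo like the one applied to the droplet sounds is commonly produced with a feedback delay line: each output sample is the input plus an attenuated copy of the output from a fixed delay earlier. The sketch below shows that technique on a list of samples; the delay length and feedback gain are assumed values, and the function name is hypothetical. Unity provides an Audio Echo Filter component with comparable delay and decay parameters, but this sketch does not reproduce its implementation.

```python
from typing import List


def add_echo(samples: List[float], delay_samples: int, feedback: float = 0.3) -> List[float]:
    """Feedback delay line: y[n] = x[n] + feedback * y[n - delay]."""
    if delay_samples <= 0:
        raise ValueError("delay must be positive")
    if not 0.0 <= feedback < 1.0:
        raise ValueError("feedback must be in [0, 1) to keep the echo decaying")
    out: List[float] = []
    for n, x in enumerate(samples):
        echo = feedback * out[n - delay_samples] if n >= delay_samples else 0.0
        out.append(x + echo)
    return out


# A single click followed by silence yields repeats that decay by the feedback factor.
print(add_echo([1.0, 0.0, 0.0, 0.0, 0.0], delay_samples=2, feedback=0.5))
```

At a 48 kHz sample rate, for instance, a delay of 4800 samples corresponds to a 100 ms echo; keeping the feedback below 1 ensures the repeats fade out.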

4.4. An Example of a Visit Scenario

In this section, we describe a visit scenario from the perspective of a hypothetical user named Bob. Bob is a VR enthusiast who happens to come across the Alistrati cave VR application. When he launches the app, he is transported inside the Alistrati cave, where a small flying robot and a user interface asking him to select his preferred language await him. After he selects the “English” option by pointing his controller at the button and pressing the trigger, the user interface disappears and the flying robot starts moving deeper into the cave, stopping after a short distance.

As is common in VR applications, Bob quickly figures out that he can move either by tilting his left-hand joystick or, as he prefers, by teleporting to a destination he designates with the trigger button. He also notices that the robot keeps looking at him, as if it is waiting for him. He approaches the robot, and when he gets close enough, its eyes change shape, expanding sound waves start appearing around it, and it speaks in a female voice: “My name is Persephone. I am the daughter of the goddess Demeter and the wife of Pluto, the god of the underworld. I welcome you to my underground kingdom, the Alistrati Cave. At this point, three corridors start: the left one, which is 700 m long and not yet accessible; the right one, which is 300 m long; and the main corridor, which is one kilometer long, where I will guide you. So… let’s start!” Having finished its introduction, the robot assistant, now known as Persephone, begins moving to the first point of interest, and Bob immediately follows. Persephone stops and gives Bob a small piece of information about the cave, “The temperature inside the cave is between seventeen and twenty degrees Celsius”, and then continues flying down the corridor. Finally, Persephone stops at a part of the corridor where the path becomes significantly wider. When Bob arrives, Persephone narrates: “We have arrived at the largest space of the cave, with a length of 45 m and a height of 15 m. This is the oldest part of the cave, at least three million years old. In front of us are various formations such as stalactites…” After her narration, Persephone moves on to the next destination, occasionally making short stops to give Bob a quick fact about the cave.

At some point, Bob and Persephone reach a blue, twisting, portal-like formation. Persephone waits until Bob is close enough and then enters the portal. As Bob follows, he is teleported to the next part of the cave, where Persephone is waiting for him. The tour continues in the same way until its end. Indicative screenshots taken by Bob during this guided tour can be seen in Figure 7.

5. Evaluation

Heuristic evaluation [45] is a commonly used method in the HCI field, especially in early design iterations, because it is effective, produces results quickly, and does not require many resources. In addition, it can, in principle, take into account a wider range of users and tasks than user-based evaluation and assess whether the application or system satisfies user requirements. In a heuristic evaluation, the inspection is ideally conducted by HCI usability experts who base their judgement on prior experience and knowledge of common human factors and ergonomics guidelines, principles, and standards. Heuristic evaluations, as well as cognitive walkthroughs, can also be performed by technology domain experts with experience in common design practices in their field of expertise. In cognitive walkthroughs [46], the expert examines the working, or non-working, prototype through the eyes of the user, performing typical tasks and identifying areas in the design or functionality that could potentially cause user errors.

Two levels of evaluation have been conducted so far on the VR tour guide. The first involved expert walkthroughs conducted by three accessibility and usability experts from the FORTH Human-Computer Interaction Lab. The second involved testing with three staff members of the Alistrati cave on the installation day. The main objective was to assess the overall usability of the system and to provide recommendations for improving the design. The findings of both evaluations are reported below.

5.1. Expert Walkthroughs

The expert walkthroughs consisted of simple tasks narrated to the experts through a scripted scenario; at the end of each walkthrough, the experts were also given the chance to explore the VR environment freely. Afterwards, the experts discussed the overall usability of the system with the development team. This process was repeated twice before the end of the project, and each iteration gave the development team insightful advice for improving the application’s usability and interactivity. Although these feedback sessions were not formally recorded, the most important improvements that resulted from them are summarized in the following paragraph.

In the first iteration cycle of the expert walkthroughs, the robot assistant had neither the soundbar visor nor the soundwave emission. The experts suggested both features, explaining that a system should always keep users informed about what is going on through appropriate feedback. In the second iteration cycle, the experts experienced the level transitions for the first time. In that iteration, the robot would reach the portal at the end of a level and wait for the user to enter the portal first. The experts suggested that the robot should take the initiative and verbally ask the user to follow it as it enters the portal, since the entire tour is heavily guided by the robot and its behavior should be consistent. In addition, they suggested that the robot could make brief stops between the predetermined information points to provide short fun facts about the cave or about itself, making the tour more entertaining. Finally, they recommended adding a constant mechanical noise coming from the robot to match users’ likely real-life expectations: the robot is, after all, a flying machine, and it should sound like one.
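The text does not describe how the soundbar visor reacts to speech. One common way to give this kind of "the robot is talking" feedback is to drive the bars from the loudness (RMS amplitude) of each block of audio samples; in Unity such a block could, for example, be read with `AudioSource.GetOutputData`. The Python sketch below illustrates only that mapping; the function names, the eight-bar visor, and the full-scale level of 0.5 are assumptions, not details from this work.

```python
import math


def rms(samples: list[float]) -> float:
    """Root-mean-square amplitude of one block of audio samples in [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def visor_bars(samples: list[float], num_bars: int = 8, full_scale: float = 0.5) -> int:
    """Number of visor bars to light for this audio block.

    full_scale is a hypothetical RMS level at which every bar is lit;
    louder blocks are clamped so the visor never overflows.
    """
    level = min(rms(samples) / full_scale, 1.0)
    return round(level * num_bars)
```

Silence leaves the visor dark and speech lights it in proportion to its loudness, so the visitor can tell at a glance whether Persephone is currently speaking.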

5.2. Informal User-Based Evaluation

Unfortunately, due to the COVID-19 pandemic, the user-based evaluation involved only three staff members of the Alistrati cave facility. Once the movement controls had been explained to them, each staff member completed a run of the application and then filled in a System Usability Scale (SUS) form. Each statement in the SUS questionnaire had four possible responses, ranging from “Strongly Disagree” (1) to “Strongly Agree” (4). After completing the questionnaire, the participants were given the chance to provide comments and feedback. Although these results cannot be considered reliable given the small sample size, the final SUS score was a positive 81.4/100. The individual answers of each user can be found in Figure 8. The statement “I think I would use this system frequently” was removed because it does not fit the context of the application: visitors rarely tour the same exhibition space repeatedly.
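Standard SUS scoring uses ten items on a five-point scale: odd-numbered (positively worded) items contribute the answer minus 1, even-numbered (negatively worded) items contribute 5 minus the answer, and the sum is multiplied by 2.5. The form described here differs in two ways (a four-point scale and item 1 removed), and the text does not state how the score was rescaled. The sketch below assumes the simplest option, a linear rescale of the items actually administered to 0 to 100; the function names and the example answers are hypothetical and are not the study's data.

```python
def sus_score(responses: dict[int, int], scale_max: int = 5) -> float:
    """SUS score (0-100) for one participant.

    responses maps the original SUS item number (1-10) to the chosen answer,
    from 1 (strongly disagree) to scale_max (strongly agree). Items that were
    not administered are simply absent.
    """
    if not responses:
        raise ValueError("no responses")
    total = 0
    for item, answer in responses.items():
        if not 1 <= item <= 10:
            raise ValueError(f"unknown SUS item {item}")
        if not 1 <= answer <= scale_max:
            raise ValueError(f"answer {answer} outside 1..{scale_max}")
        # Odd-numbered SUS items are positively worded, even-numbered ones negatively.
        total += (answer - 1) if item % 2 == 1 else (scale_max - answer)
    # Linear rescale to 0-100 (assumption); with all ten items on a 5-point
    # scale this reduces exactly to the standard "sum x 2.5" rule.
    return 100 * total / (len(responses) * (scale_max - 1))


def mean_sus(participants: list[dict[int, int]], scale_max: int = 5) -> float:
    """Average SUS score over several participants."""
    return sum(sus_score(p, scale_max) for p in participants) / len(participants)


# One hypothetical participant on a 4-point form with item 1 removed.
answers = {2: 1, 3: 4, 4: 2, 5: 3, 6: 1, 7: 4, 8: 1, 9: 3, 10: 2}
print(round(sus_score(answers, scale_max=4), 1))  # 85.2
```

Whatever rescaling is chosen, scores from a modified form are not strictly comparable with published SUS benchmarks, which is one more reason to treat a three-person result with caution.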

One recommendation from the staff, later incorporated into the application, was to add environmental sounds to the tour. Furthermore, they stated that they had expected better visual quality. Lastly, they felt that using portals to move between the different parts of the cave was inconsistent with the cave setting. That said, they all agreed that, despite being inexperienced with virtual reality, they could easily move and roam around the cave after using the app for a short time.

6. Discussion

Although a larger sample for the user-based evaluation would have been more insightful and accurate, the evaluation did give us the chance to observe inexperienced users running the application. Thanks to multiple iterations of the expert walkthroughs, many interaction problems were addressed early in the development stage. This was reflected in the high SUS score and was further highlighted in the discussions with the users: most of their suggestions and complaints concerned aesthetics and visuals rather than the interaction of the app. Even though some of the users were wearing an HMD for the first time, they were confidently roaming the virtual cave after using the basic movement functions a couple of times, and, last but not least, the way to interact with the robot was clear to all of the users from the beginning.

7. Conclusions

In this work, we present an alternative visiting experience for cave heritage sites, powered by advances in VR and 3D reconstruction technologies. The approach is demonstrated through a use case for the heritage site of the Alistrati cave near Serres, Greece. By employing digital preservation of heritage sites, the presented VR solution aspires to offer immersive, close-to-reality, and engaging visiting experiences.

During this work, several challenges were encountered, mainly related to the heritage site itself and to the technical requirements of delivering high-end experiences on new, low-cost VR devices.

3D reconstruction of caves: The visit to the Alistrati cave includes eight points of interest (stops), which were covered with 29 grayscale scans. Although the number and quality of the acquired scans seemed sufficient for the specific use case, which is not an archaeological reconstruction but a simplified rendering of the heritage site, we learned that extensive post-processing was ultimately required because of numerous gaps and blind spots caused by the structural characteristics of the cave.
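The text does not detail how the gaps were located during post-processing. A standard way to find holes in a triangle mesh is to look for boundary edges: in a closed surface every edge is shared by exactly two triangles, so edges used by only one triangle outline a hole or the open rim of a scan. The Python sketch below shows that check on an index-based triangle list; the function names and the toy meshes are illustrative assumptions.

```python
from collections import Counter, defaultdict

Triangle = tuple[int, int, int]  # three vertex indices


def boundary_edges(triangles: list[Triangle]) -> set[tuple[int, int]]:
    """Return the undirected edges that belong to exactly one triangle."""
    counts: Counter = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            counts[(min(u, v), max(u, v))] += 1  # order-independent edge key
    return {edge for edge, n in counts.items() if n == 1}


def boundary_loops(edges: set[tuple[int, int]]) -> int:
    """Count connected groups of boundary edges (roughly one per hole or open rim).

    Two holes that touch at a single vertex are counted as one group.
    """
    neighbours: defaultdict = defaultdict(set)
    for u, v in edges:
        neighbours[u].add(v)
        neighbours[v].add(u)
    seen: set = set()
    groups = 0
    for start in neighbours:
        if start in seen:
            continue
        groups += 1
        stack = [start]
        while stack:  # depth-first walk over one connected group
            x = stack.pop()
            if x in seen:
                continue
            seen.add(x)
            stack.extend(neighbours[x] - seen)
    return groups
```

On a watertight mesh `boundary_edges` returns an empty set; on a cave scan, the larger boundary loops point to the blind spots that need filling or manual modelling.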

Performance issues when using 3D reconstructions in VR: VR development is notoriously demanding when it comes to performance optimization, since every frame has to be rendered twice, once for each eye of the user, to simulate spatial depth. We learned that combining VR with 3D-reconstructed heritage sites aggravates performance issues because of the large size of the produced meshes. In the Alistrati cave VR tour implementation, both mesh optimization at design time and mesh splitting at development time were needed to cope with these performance issues and to ensure high frame rates throughout the tour.
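The text states that the meshes were split but not how. A common approach is to partition the triangles with a uniform spatial grid, so that each chunk becomes a separate object that the engine can cull on its own when it lies outside the view, instead of submitting the whole cave every frame. The Python sketch below assigns each triangle to the grid cell containing its centroid, which avoids duplicating geometry at cell borders; the function name and the cell size are hypothetical, and a real pipeline would also re-index each chunk into its own vertex buffer.

```python
import math
from collections import defaultdict

Vec3 = tuple[float, float, float]
Triangle = tuple[int, int, int]
Cell = tuple[int, int, int]


def split_by_grid(vertices: list[Vec3], triangles: list[Triangle],
                  cell_size: float) -> dict[Cell, list[Triangle]]:
    """Group triangles into chunks keyed by the grid cell that contains their centroid."""
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    chunks: defaultdict = defaultdict(list)
    for tri in triangles:
        # Centroid = average of the three vertex positions, per axis.
        centroid = [sum(vertices[i][k] for i in tri) / 3 for k in range(3)]
        cell = tuple(math.floor(c / cell_size) for c in centroid)
        chunks[cell].append(tri)
    return dict(chunks)
```

Larger cells mean fewer draw calls but coarser culling; for a long, narrow cave corridor a cell size of a few metres along the walking direction is a reasonable starting point to tune against the target frame rate.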

Realism and lighting: Cave heritage sites are, in most cases, very dark and require artificial lighting to be visitable. When providing a virtual experience of a heritage site, lighting is important, since the objective is to replicate the feeling of being there. To achieve this, we could not rely on the light captured within the cave through laser scanning, because it would result in a combination of very dark and very bright textures. The decision taken was to capture the site in grayscale and then apply artificial coloring and lighting. We learned that this was a good decision, as it allowed us (a) to manually paint the resulting mesh based on photographic documentation and (b) to place lights in the game engine to control the “atmosphere” of the heritage site.
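The mesh was painted by hand from photographic documentation. For comparison only, a common automatic starting point for coloring grayscale scan data is a gradient map, which maps each intensity to a color between a dark and a light endpoint. This is a swapped-in technique, not the authors' method, and the function names and endpoint colors in the Python sketch below are hypothetical.

```python
def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation written so that t=0 gives exactly a and t=1 exactly b."""
    return (1 - t) * a + t * b


def gradient_map(gray: float,
                 dark: tuple[float, float, float],
                 light: tuple[float, float, float]) -> tuple[float, float, float]:
    """Map a grayscale intensity in [0, 1] to an RGB color between two endpoints."""
    t = min(max(gray, 0.0), 1.0)  # clamp out-of-range scan intensities
    return tuple(lerp(d, l, t) for d, l in zip(dark, light))


# Hypothetical endpoints: dark brown in crevices, pale ochre on exposed rock.
DARK = (0.2, 0.1, 0.05)
LIGHT = (0.9, 0.8, 0.6)
```

Such a map yields only a plausible base tone; the hand painting and in-engine lighting described above remain what actually conveys the cave's appearance and atmosphere.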

The scale of the site: The Alistrati cave has a total length of more than 2500 m, with a visiting section of 1500 m. Reconstructing the entire cave would require immense time and resources, and the result would be almost impossible to render on a low-cost VR device. To handle this, we decided to capture the eight points of interest and their surroundings, resulting in a virtual visit with a total length of about 200 m. To provide a continuous visiting flow, the portal metaphor was used, allowing the visitor to jump from one point to the next. Based on the reactions of users trying the app at the cave, we learned that this was considered a good compromise and was even liked by younger users, because it gave a sense of futurism to the whole visiting experience.

Author Contributions

Conceptualization, E.Z.; methodology, E.Z.; software, Z.P., A.A., E.Z., A.K. and T.E.; validation, E.Z.; formal analysis, E.Z.; investigation, E.Z.; resources, T.E.; data curation, A.K.; writing—original draft preparation, Z.P., N.P., A.K. and T.E.; writing—review and editing, Z.P., N.P., A.K., T.E. and X.Z.; visualization, Z.P., A.A. and A.K.; supervision, E.Z., X.Z., N.P. and C.S.; project administration, E.Z.; funding acquisition, N.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been conducted in the context of the project “Modernization, promotion, and exploitation of caves” (MyCaves, INTERREG V-A GREECE-BULGARIA 2014–2020) and has been co-financed by the European Regional Development Fund (ERDF) and Greek national funds (date of contract: 23 October 2020). The main contractor of the project is the CVRL Lab of ICS-FORTH (www.ics.forth.gr/cvrl, last accessed on 10 March 2022), and the HCI Lab (www.ics.forth.gr/hci, last accessed on 10 March 2022) was responsible for implementing the VR tour guide presented in this article, which accompanies the robotic tour guide implemented by CVRL.

Institutional Review Board Statement

Ethical review and approval were waived for this study because the three accessibility and usability experts were staff members of the FORTH Human-Computer Interaction Lab and the users were staff members of the heritage site (Alistrati cave).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are available upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Roberts, N. The cultural and natural heritage of caves in the Lao PDR: Prospects and challenges related to their use, management and conservation. J. Lao Stud. 2015, 5, 113–139.

2. Buonincontri, P.; Micera, R.; Murillo-Romero, M.; Pianese, T. Where Does Sustainability Stand in Underground Tourism? A Literature Review. Sustainability 2021, 13, 12745.

3. Shavanddasht, M.; Karubi, M.; Sadry, B.N. An examination of the relationship between cave tourism motivations and satisfaction: The case of Alisadr cave, Iran. Geoj. Tour. Geosites 2017, 20, 165–176.

4. Parga-Dans, E.; Gonzalez, P.A.; Enriquez, R.O. The social value of heritage: Balancing the promotion-preservation relationship in the Altamira World Heritage Site, Spain. J. Destin. Mark. Manag. 2020, 18, 100499.

5. Torabi Farsani, N.; Reza Bahadori, S.; Abolghasem Mirzaei, S. An Introduction to Mining Tourism Route in Yazd Province. Geoconserv. Res. 2020, 3, 33–39.

6. Dermitzakis, M.D.; Papadopoulos, N.K. The most important caves and potholes of Greece = The most interesting caves and precipices of Greece. Bull. Hell. Speleol. Soc. 1977, 14, 1–15.

7. Yemez, Y.; Schmitt, F. 3D reconstruction of real objects with high resolution shape and texture. Image Vis. Comput. 2004, 22, 1137–1153.

8. Martin, W. ARCO—An Architecture for Digitization, Management and Presentation of Virtual Exhibitions. Comput. Graph. Int. Conf. 2004, 22, 622–625.

9. Dosovitskiy, A.; Tobias Springenberg, J.; Brox, T. Learning to generate chairs with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1538–1546.

10. Choy, C.B.; Xu, D.; Gwak, J.; Chen, K.; Savarese, S. 3D-R2N2: A unified approach for single and multi-view 3D object reconstruction. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 628–644.

11. Agarwal, S.; Furukawa, Y.; Snavely, N.; Simon, I.; Curless, B.; Seitz, S.M.; Szeliski, R. Building Rome in a day. Commun. ACM 2011, 54, 105–112.

12. González-Aguilera, D.; Muñoz-Nieto, A.; Gómez-Lahoz, J.; Herrero-Pascual, J.; Gutierrez-Alonso, G. 3D Digital Surveying and Modelling of Cave Geometry: Application to Paleolithic Rock Art. Sensors 2009, 9, 1108–1127.

13. Grussenmeyer, P.; Landes, T.; Alby, E.; Carozza, L. High resolution 3D recording and modelling of the Bronze Age cave “les Fraux” in Périgord (France). ISPRS Comm. V Symp. 2020, 38, 262–267.

14. Rüther, H.; Chazan, M.; Schroeder, R.; Neeser, R.; Held, C.; Walker, S.J.; Matmon, A.; Horwitz, L.K. Laser scanning for conservation and research of African cultural heritage sites: The case study of Wonderwerk Cave, South Africa. J. Archaeol. Sci. 2009, 36, 1847–1856.

15. Parys, R.; Schilling, A. Incremental large-scale 3D reconstruction. In Proceedings of the IEEE International Conference on 3D Imaging, Modeling, Processing, Visualization & Transmission, Zurich, Switzerland, 13–15 October 2012; IEEE: Piscataway, NJ, USA; pp. 416–423.

16. Statham, N. Use of photogrammetry in video games: A historical overview. Games Cult. 2020, 15, 289–307.

17. Savvidis, D. Chios Mastic Tree; Kyriakidis Bros S.A. Publications: Thessaloniki, Greece, 2000.

18. Sweetser, P.; Wyeth, P. GameFlow: A model for evaluating player enjoyment in games. Comput. Entertain. 2005, 3, 3.

19. Brown, K.; Hamilton, A. Photogrammetry and “Star Wars Battlefront”. 2016. Available online: https://www.gdcvault.com/play/1023272/Photogrammetry-and-Star-Wars-Battlefront (accessed on 10 March 2022).

20. Azzam, J. Porting a Real-Life Castle into Your Game When You’re Broke. 2017. Available online: http://www.gdcvault.com/play/1023997/Porting-a-Real-Life-Castle (accessed on 10 March 2022).

21. Bishop, L.; Chris, C.; Michal, J. Photogrammetry for Games: Art, Technology and Pipeline Integration for Amazing Worlds. 2017. Available online: https://www.gdcvault.com/play/1024340/Photogrammetry-for-Games-Art-Technology (accessed on 10 March 2022).

22. Maximov, A. Future of Art Production in Games. 2017. Available online: https://www.gdcvault.com/play/1024104/Future-of-Art-Production-in (accessed on 10 March 2022).

23. Partarakis, N.; Zabulis, X.; Patsiouras, N.; Chatjiantoniou, A.; Zidianakis, E.; Mantinaki, E.; Kaplanidi, D.; Ringas, C.; Tasiopoulou, E.; Carre, A.L.; et al. Multi-Scale Presentation of Spatial Context for Cultural Heritage Applications. Electronics 2022, 11, 195.

24. Ringas, C.; Tasiopoulou, E.; Kaplanidi, D.; Partarakis, N.; Zabulis, X.; Zidianakis, E.; Patakos, A.; Patsiouras, N.; Karuzaki, E.; Foukarakis, M.; et al. Traditional Craft Training and Demonstration in Museums. Heritage 2022, 5, 431–459.

25. Carre, A.L.; Dubois, A.; Partarakis, N.; Zabulis, X.; Patsiouras, N.; Mantinaki, E.; Zidianakis, E.; Cadi, N.; Baka, E.; Thalmann, N.M.; et al. Mixed-Reality Demonstration and Training of Glassblowing. Heritage 2022, 5, 103–128.

26. Photomodeler Technologies. How Is Photogrammetry Used in Video Games? Available online: https://www.photomodeler.com/how-is-photogrammetry-used-in-video-games/ (accessed on 10 March 2022).

27. Partarakis, N.; Patsiouras, N.; Evdemon, T.; Doulgeraki, P.; Karuzaki, E.; Stefanidi, E.; Ntoa, S.; Meghini, C.; Kaplanidi, D.; Zabulis, X.; et al. Enhancing the Educational Value of Tangible and Intangible Dimensions of Traditional Crafts through Role-Play Gaming. In Proceedings of the International Conference on ArtsIT, Interactivity and Game Creation, Faro, Portugal, 21–22 November 2022; Springer: Cham, Switzerland, 2020; pp. 243–254.

28. Su, C.J. An internet based virtual exhibition system: Conceptual design and infrastructure. Comput. Ind. Eng. 1998, 35, 615–618.

29. Lim, J.C. Creating Virtual Exhibitions from an XML-Based Digital Archive. Sage J. 2003, 29, 143–157.

30. Gabriela, D.; Cornel, L.; Cristian, C. Creating Virtual Exhibitions for Educational and Cultural Development; Informatica Economica, Academy of Economic Studies-Bucharest: Bucharest, Romania, 2014; Volume 18, pp. 102–110.

31. Foo, S. Online Virtual Exhibitions: Concepts and Design Considerations. DESIDOC J. Libr. Inf. Technol. 2010, 28, 22–34.

32. Rong, W. Some Thoughts on Using VR Technology to Communicate Culture. Open J. Soc. Sci. 2018, 6, 88–94.

33. Partarakis, N.; Antona, M.; Stephanidis, C. Adaptable, personalizable and multi user museum exhibits. In Curating the Digital; Springer: Cham, Switzerland, 2016; pp. 167–179.

34. Partarakis, N.; Grammenos, D.; Margetis, G.; Zidianakis, E.; Drossis, G.; Leonidis, A.; Metaxakis, G.; Antona, M.; Stephanidis, C. Digital cultural heritage experience in Ambient Intelligence. In Mixed Reality and Gamification for Cultural Heritage; Springer: Cham, Switzerland, 2017; pp. 473–505.

35. Partarakis, N.; Klironomos, I.; Antona, M.; Margetis, G.; Grammenos, D.; Stephanidis, C. Accessibility of cultural heritage exhibits. In Proceedings of the International Conference on Universal Access in Human-Computer Interaction, Washington, DC, USA, 24–29 July 2016; Springer: Cham, Switzerland, 2016; pp. 444–455.

36. Beatriz, M.; Paula, C. 3D Virtual Exhibitions. DESIDOC J. Libr. Inf. Technol. 2013, 33, 225–235.

37. Zidianakis, E.; Partarakis, N.; Ntoa, S.; Dimopoulos, A.; Kopidaki, S.; Ntagianta, A.; Ntafotis, E.; Xhako, A.; Pervolarakis, Z.; Stephanidis, C.; et al. The invisible museum: A user-centric platform for creating virtual 3D exhibitions with VR support. Electronics 2021, 10, 363.

38. Izzo, F. Museum Customer Experience and Virtual Reality: H.BOSCH Exhibition Case Study. Mod. Econ. 2017, 8, 531–536.

39. Deac, G.C.; Georgescu, C.N.; Popa, C.L.; Ghinea, M.; Cotet, C.E. Virtual Reality Exhibition Platform. In Proceedings of the 29th DAAAM International Symposium, Zadar, Croatia, 24–27 October 2018; DAAAM International: Vienna, Austria, 2018; pp. 0232–0236, ISBN 978-3-902734-20-4, ISSN 1726-9679.

40. Schofield, G. Viking VR: Designing a Virtual Reality Experience for a Museum. DIS 18. In Proceedings of the 2018 Designing Interactive Systems Conference, Hong Kong, China, 9–13 June 2018; pp. 805–815.

41. FARO SCENE Software—For Intuitive, Efficient 3D Point Cloud Capture, Processing and Registration. Available online: https://www.faro.com/ (accessed on 4 March 2022).

42. Pix4D SA—A Unique Photogrammetry Software Suite for Mobile and Drone Mapping. EPFL Innovation Park, Building F, 1015 Lausanne, Switzerland. Available online: https://www.pix4d.com/ (accessed on 3 March 2022).

43. Community BO. Blender—A 3D Modelling and Rendering Package; Stichting Blender Foundation: Amsterdam, The Netherlands, 2018; Available online: http://www.blender.org (accessed on 2 March 2022).

44. Unity—A Real-Time Development Platform. Available online: https://www.unity.com (accessed on 8 March 2022).

45. Nielsen, J.; Molich, R. Heuristic evaluation of user interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Seattle, WA, USA, 1–5 April 1990; pp. 249–256.

46. Rieman, J.; Franzke, M.; Redmiles, D. Usability evaluation with the cognitive walkthrough. In Proceedings of the Conference Companion on Human Factors in Computing Systems, Denver, CO, USA, 7–11 May 1995; pp. 387–388.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).