Consistency and Asymptotic Normality of Estimator for Parameters in Multiresponse Multipredictor Semiparametric Regression Model

Abstract

1. Introduction

2. Materials and Methods

2.1. Multiresponse Multipredictor Semiparametric Regression (MMSR) Model

2.2. Truncated Spline

2.3. Pivotal Quantity

2.4. Simulation

3. Results and Discussions

3.1. Estimating MMSR Model

3.2. Estimating Confidence Interval of Parameters in MMSR Model

3.3. Investigating Consistency of Estimator for Parameters in MMSR Model

- (a) Based on the Strong Law of Large Numbers [45], we have: it implies as .

- (b) Note that as hold if as . On the other hand, we have: It means that: Therefore, we obtain: □
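The consistency claim can be illustrated numerically. The block below is a minimal sketch and not the authors' procedure: as a stand-in it uses a single-response linear model fitted by ordinary least squares, and the coefficient values, the uniform design, and the standard normal errors are all assumptions made for illustration. It only shows the qualitative behaviour that the proof establishes, namely that the estimation error shrinks as the sample size grows.

```python
import numpy as np

def ols_error(n, beta, rng):
    """Largest absolute error of the OLS estimate for one sample of size n."""
    X = rng.uniform(0.0, 1.0, size=(n, beta.size))   # uniform design (assumption)
    y = X @ beta + rng.normal(0.0, 1.0, size=n)      # standard normal errors (assumption)
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    return np.max(np.abs(beta_hat - beta))

rng = np.random.default_rng(0)
beta = np.array([-1.0, 2.0, 4.0])    # hypothetical true coefficients
sizes = [50, 500, 5000, 50000]       # hypothetical sample sizes

# Average the error over 50 replications per sample size.
errors = {n: float(np.mean([ols_error(n, beta, rng) for _ in range(50)]))
          for n in sizes}

for n, err in errors.items():
    print(n, round(err, 4))
```

The printed errors should decrease as the sample size increases, which is the finite-sample counterpart of convergence of the estimator to the true parameter.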

3.4. Determining Asymptotic Normality of Estimator for Parameters in MMSR Model

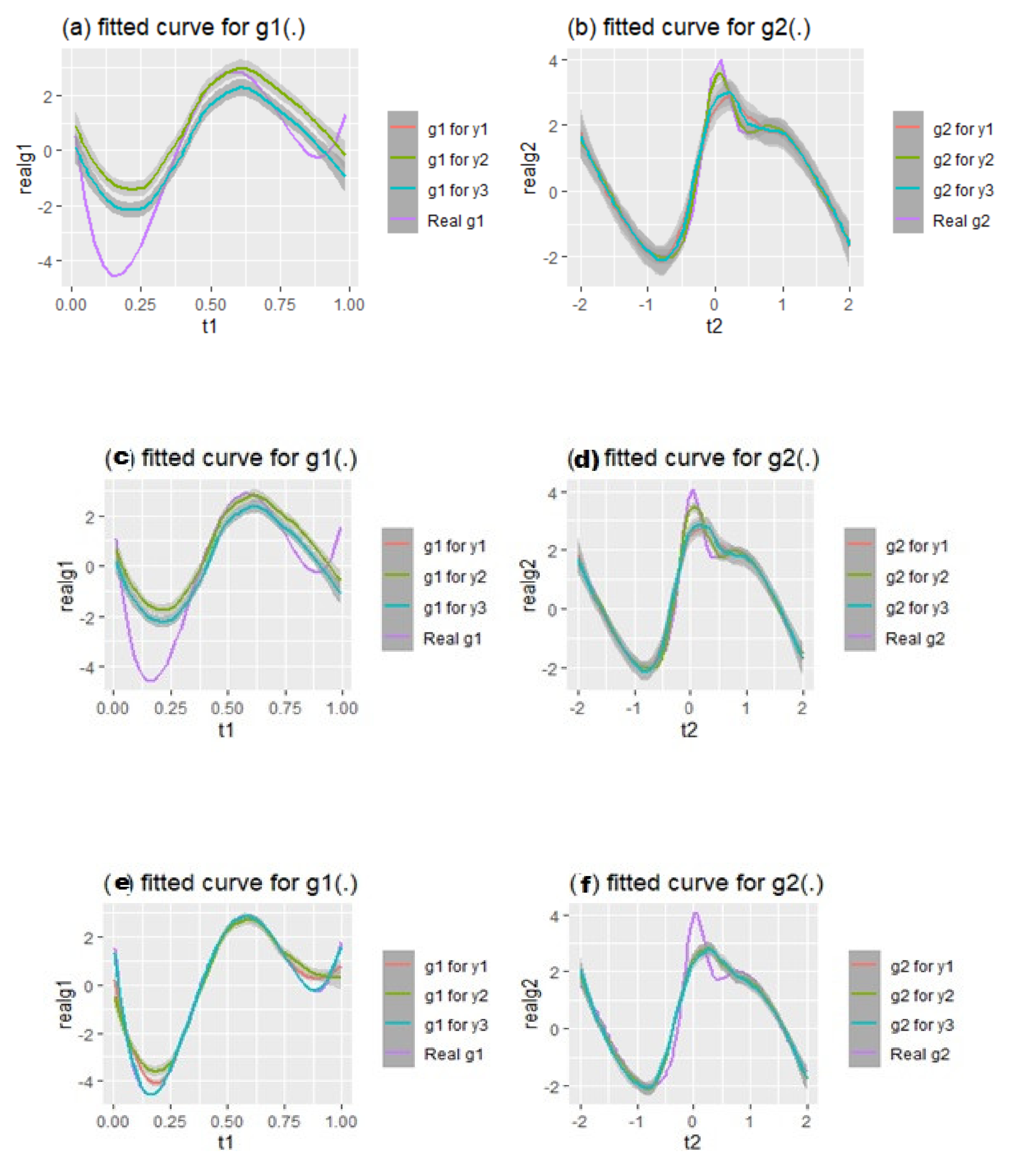

3.5. Simulation Study

- (i) Simulation Design: The simulation scenarios are set up as follows:

  - We generate three samples sized .

  - The response vector , consisting of three response variables, is created from the MMSR model given in (ii).

  - The design vector is produced from a uniform distribution.

  - For each model, a total of nine regression coefficients, specified as , are considered.

  - In addition, the MMSR model to be generated includes two different smooth functions, and , with nonparametric covariates and , respectively.

  - Each sample size is replicated 1000 times in the simulation experiments.

- (ii) Data Generation: According to the simulation design, the MMSR model can be written as follows: where is a -dimensional design matrix and each is generated from a uniform distribution, that is, . The vector of regression coefficients is defined in (i) above.

  - and are computed by using and as follows:

  - Finally, the random error terms ’s are independent and identically distributed from the multivariate normal distribution for the three models.
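The data-generation steps in (i) and (ii) can be sketched as follows. This is a hedged illustration under stated assumptions, not the authors' code: the coefficient matrix `B`, the error covariance `Sigma`, the two smooth functions, the knot positions, and the sample size are all hypothetical and are not taken from the source. The smooth part is assumed to be shared by the three responses, and the fit uses equation-by-equation ordinary least squares on a linear truncated spline basis as a simplification of the estimator studied in the paper.

```python
import numpy as np

def truncated_linear_basis(t, knots):
    """Columns t, (t - k_1)_+, ..., (t - k_q)_+ of a linear truncated spline."""
    return np.column_stack([t] + [np.clip(t - k, 0.0, None) for k in knots])

def simulate_and_fit(n, B, Sigma, knots, rng):
    """Generate one three-response data set and return the estimate of B."""
    r, p = B.shape
    X = rng.uniform(0.0, 1.0, size=(n, p))           # parametric design
    t1 = rng.uniform(0.0, 1.0, size=n)               # nonparametric covariates
    t2 = rng.uniform(0.0, 1.0, size=n)
    f = np.sin(2 * np.pi * t1) + (t2 - 0.5) ** 2     # hypothetical smooth functions
    E = rng.multivariate_normal(np.zeros(r), Sigma, size=n)  # correlated errors
    Y = X @ B.T + f[:, None] + E                     # n x 3 response matrix

    # Design: parametric part, intercept, then one spline basis per covariate.
    Z = np.column_stack([X, np.ones(n),
                         truncated_linear_basis(t1, knots),
                         truncated_linear_basis(t2, knots)])
    coef, *_ = np.linalg.lstsq(Z, Y, rcond=None)     # one column per response
    return coef[:p].T                                # rows match the rows of B

rng = np.random.default_rng(1)
B = np.array([[-1.0, 2.0, 4.0],      # illustrative values only
              [-2.0, 3.0, 5.0],
              [-0.5, 1.0, 3.0]])
Sigma = 0.25 * np.array([[1.0, 0.5, 0.5],
                         [0.5, 1.0, 0.5],
                         [0.5, 0.5, 1.0]])
knots = np.linspace(0.1, 0.9, 9)     # hypothetical knot positions

B_hat = simulate_and_fit(2000, B, Sigma, knots, rng)
print(np.round(B_hat, 3))
```

Repeating `simulate_and_fit` over many replications and several sample sizes would give the empirical distribution of the estimates, which is the kind of summary reported in the simulation tables.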

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ana, E.; Chamidah, N.; Andriani, P.; Lestari, B. Modeling of hypertension risk factors using local linear of additive nonparametric logistic regression. J. Phys. Conf. Ser. 2019, 1397, 012067.

2. Cheruiyot, L.R. Local linear regression estimator on the boundary correction in nonparametric regression estimation. J. Statist. Theory Appl. 2020, 19, 460–471.

3. Chamidah, N.; Yonani, Y.S.; Ana, E.; Lestari, B. Identification the number of mycobacterium tuberculosis based on sputum image using local linear estimator. Bullet. Elect. Eng. Inform. (BEEI) 2020, 9, 2109–2116.

4. Cheng, M.-Y.; Huang, T.; Liu, P.; Peng, H. Bias reduction for nonparametric and semiparametric regression models. Statistica Sinica 2018, 28, 2749–2770.

5. Delaigle, A.; Fan, J.; Carroll, R.J. A design-adaptive local polynomial estimator for the errors-in-variables problem. J. Amer. Stat. Assoc. 2009, 104, 348–359.

6. Francisco-Fernandez, M.; Vilar-Fernandez, J.M. Local polynomial regression estimation with correlated errors. Comm. Statist. Theory Methods 2001, 30, 1271–1293.

7. Benhenni, K.; Degras, D. Local polynomial estimation of the mean function and its derivatives based on functional data and regular designs. ESAIM Probab. Stat. 2014, 18, 881–899.

8. Kikechi, C.B. On local polynomial regression estimators in finite populations. Int. J. Stats. Appl. Math. 2020, 5, 58–63.

9. Wand, M.P.; Jones, M.C. Kernel Smoothing, 1st ed.; Chapman and Hall/CRC: New York, NY, USA, 1995.

10. Cui, W.; Wei, M. Strong consistency of kernel regression estimate. Open J. Stats. 2013, 3, 179–182.

11. De Brabanter, K.; de Brabanter, J.; Suykens, J.A.K.; de Moor, B. Kernel regression in the presence of correlated errors. J. Mach. Learn. Res. 2011, 12, 1955–1976.

12. Wahba, G. Spline Models for Observational Data; SIAM: Philadelphia, PA, USA, 1990.

13. Eubank, R.L. Nonparametric Regression and Spline Smoothing, 2nd ed.; Marcel Dekker: New York, NY, USA, 1999.

14. Wang, Y. Smoothing Splines: Methods and Applications; Taylor & Francis Group: Boca Raton, FL, USA, 2011.

15. Liu, A.; Qin, L.; Staudenmayer, J. M-type smoothing spline ANOVA for correlated data. J. Multivar. Anal. 2010, 101, 2282–2296.

16. Chamidah, N.; Lestari, B.; Massaid, A.; Saifudin, T. Estimating mean arterial pressure affected by stress scores using spline nonparametric regression model approach. Commun. Math. Biol. Neurosci. 2020, 2020, 1–12.

17. Eilers, P.H.C.; Marx, B.D. Flexible smoothing with B-splines and penalties. Statist. Sci. 1996, 11, 86–121.

18. Lu, M.; Liu, Y.; Li, C.-S. Efficient estimation of a linear transformation model for current status data via penalized splines. Stat. Meth. Medic. Res. 2020, 29, 3–14.

19. Wang, Y.; Guo, W.; Brown, M.B. Spline smoothing for bivariate data with applications to association between hormones. Stat. Sinica 2000, 10, 377–397.

20. Yilmaz, E.; Ahmed, S.E.; Aydin, D. A-Spline regression for fitting a nonparametric regression function with censored data. Stats 2020, 3, 11.

21. Aydin, D. A comparison of the nonparametric regression models using smoothing spline and kernel regression. World Acad. Sci. Eng. Tech. 2007, 36, 253–257.

22. Lestari, B.; Fatmawati; Budiantara, I.N.; Chamidah, N. Smoothing parameter selection method for multiresponse nonparametric regression model using spline and kernel estimators approaches. J. Phys. Conf. Ser. 2019, 1397, 012064.

23. Aydin, D.; Güneri, Ö.I.; Fit, A. Choice of bandwidth for nonparametric regression models using kernel smoothing: A simulation study. Int. J. Sci. Basic Appl. Research (IJSBAR) 2016, 26, 47–61.

24. Osmani, F.; Hajizadeh, E.; Mansouri, P. Kernel and regression spline smoothing techniques to estimate coefficient in rates model and its application in psoriasis. Medic. J. Islamic Repub. Iran (MJIRI) 2019, 33, 90.

25. Fatmawati; Budiantara, I.N.; Lestari, B. Comparison of smoothing and truncated spline estimators in estimating blood pressures models. Int. J. Innov. Creat. Change (IJICC) 2019, 5, 1177–1199.

26. Lestari, B.; Fatmawati; Budiantara, I.N. Spline estimator and its asymptotic properties in multiresponse nonparametric regression model. Songklanakarin J. Sci. Tech. (SJST) 2020, 42, 533–548.

27. Mariati, M.P.A.M.; Budiantara, I.N.; Ratnasari, V. The application of mixed smoothing spline and Fourier series model in nonparametric regression. Symmetry 2021, 13, 2094.

28. Ruppert, D.; Wand, M.P.; Carroll, R.J. Semiparametric Regression; Cambridge University Press: New York, NY, USA, 2003.

29. Heckman, N.E. Spline smoothing in a partly linear model. J. R. Stats. Soc. Ser. B. 1986, 48, 244–248.

30. Mohaisen, A.J.; Abdulhussein, A.M. Spline semiparametric regression models. J. Kufa Math. Comp. 2015, 2, 1–10.

31. Sun, X.; You, J. Iterative weighted partial spline least squares estimation in semiparametric modeling of longitudinal data. Science in China Series A (Mathematics) 2003, 46, 724–735.

32. Chamidah, N.; Zaman, B.; Muniroh, L.; Lestari, B. Designing local standard growth charts of children in East Java province using a local linear estimator. Int. J. Innov. Creat. Change (IJICC) 2020, 13, 45–67.

33. Aydin, D. Comparison of regression models based on nonparametric estimation techniques: Prediction of GDP in Turkey. Int. J. Math. Models Methods Appl. Sci. 2007, 1, 70–75.

34. Aydın, D.; Ahmed, S.E.; Yılmaz, E. Estimation of semiparametric regression model with right-censored high-dimensional data. J. Stat. Comp. Simul. 2019, 89, 985–1004.

35. Gao, J.; Shi, P. M-Type smoothing splines in nonparametric and semiparametric regression models. Stat. Sinica 1997, 7, 1155–1169.

36. Wang, Y.; Ke, C. Smoothing spline semiparametric nonlinear regression models. J. Comp. Graph. Stats. 2009, 18, 165–183.

37. Diana, R.; Budiantara, I.N.; Purhadi; Darmesto, S. Smoothing spline in semiparametric additive regression model with Bayesian approach. J. Math. Stats. 2013, 9, 161–168.

38. Xue, L.; Xue, D. Empirical likelihood for semiparametric regression model with missing response data. J. Multivar. Anal. 2011, 102, 723–740.

39. Wibowo, W.; Haryatmi, S.; Budiantara, I.N. On multiresponse semiparametric regression model. J. Math. Stats. 2012, 8, 489–499.

40. Li, J.; Zhang, C.; Doksum, K.A.; Nordheim, E.V. Simultaneous confidence intervals for semiparametric logistics regression and confidence regions for the multi-dimensional effective dose. Stat. Sinica 2010, 20, 637–659.

41. Lestari, B.; Chamidah, N. Estimating regression function of multiresponse semiparametric regression model using smoothing spline. J. Southwest Jiaotong Univ. 2020, 55, 1–9.

42. Hidayati, L.; Chamidah, N.; Budiantara, I.N. Confidence interval of multiresponse semiparametric regression model parameters using truncated spline. Int. J. Acad. Appl. Res. (IJAAR) 2020, 4, 14–18.

43. Sahoo, P. Probability and Mathematical Statistics; University of Louisville: Louisville, KY, USA, 2013.

44. Cramér, H.; Wold, H. Some theorems on distribution functions. J. London Math. Soc. 1936, 11, 290–295.

45. Sen, P.K.; Singer, J.M. Large Sample Methods in Statistics: An Introduction with Applications; Chapman & Hall: London, UK, 1993.

95% Confidence Interval

| Coefficients Vector | Lower | Estimate | Upper | Lower | Estimate | Upper | Lower | Estimate | Upper |
|---|---|---|---|---|---|---|---|---|---|
| | −1.308 | −0.917 | −0.527 | −1.164 | −0.929 | −0.693 | −1.033 | −0.923 | −0.813 |
| | 1.714 | 2.114 | 2.513 | 1.895 | 2.120 | 2.346 | 1.977 | 2.089 | 2.200 |
| | 3.644 | 4.044 | 4.444 | 3.811 | 4.041 | 4.271 | 3.979 | 4.087 | 4.196 |
| | −2.308 | −1.917 | −1.527 | −2.164 | −1.929 | −1.693 | −2.033 | −1.923 | −1.813 |
| | 2.714 | 3.114 | 3.513 | 2.895 | 3.120 | 3.346 | 2.977 | 3.089 | 3.200 |
| | 4.644 | 5.044 | 5.444 | 4.811 | 5.041 | 5.271 | 4.979 | 5.087 | 5.196 |
| | −0.808 | −0.417 | −0.027 | −0.664 | −0.429 | −0.193 | −0.533 | −0.423 | −0.313 |
| | 0.714 | 1.114 | 1.513 | 0.895 | 1.120 | 1.346 | 0.977 | 1.089 | 1.200 |
| | 2.644 | 3.044 | 3.444 | 2.811 | 3.041 | 3.271 | 2.979 | 3.087 | 3.196 |

| | | p-Value | | p-Value | | p-Value |
|---|---|---|---|---|---|---|
| | 0.567 | 0.911 * | 0.566 | 0.806 * | 0.603 | 0.690 * |
| | 0.842 | 0.830 * | 0.675 | 0.817 * | 0.675 | 0.789 * |
| | 1.005 | 0.030 | 0.644 | 0.086 * | 0.694 | 0.033 |
| | 0.804 | 0.030 | 0.701 | 0.046 | 0.671 | 0.020 |
| | 0.568 | 0.912 * | 0.566 | 0.807 * | 0.603 | 0.689 * |
| | 0.638 | 0.830 * | 0.675 | 0.817 * | 0.675 | 0.790 * |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chamidah, N.; Lestari, B.; Budiantara, I.N.; Saifudin, T.; Rulaningtyas, R.; Aryati, A.; Wardani, P.; Aydin, D. Consistency and Asymptotic Normality of Estimator for Parameters in Multiresponse Multipredictor Semiparametric Regression Model. Symmetry 2022, 14, 336. https://doi.org/10.3390/sym14020336
