Machine Learning Techniques for Increasing Efficiency of the Robot’s Sensor and Control Information Processing

Abstract

1. Introduction

- Increasing the accuracy of the information supplied by tactile or remote sensors;

- Minimizing the sensor signal formation time;

- Decreasing the processing time for sensor and control information;

- Decreasing the decision-making time of the robot’s control system under uncertain conditions or in a dynamic working environment with obstacles;

- Extending the functional capabilities of robots through efficient sensors and high-speed computation algorithms.

2. Related Works and Problem Statement

2.1. Machine Learning Techniques for Robotics in Industrial Automation

2.2. Machine Learning in Robot Path Planning and Control

2.3. Machine Learning for Information Processing in Robot Tactile and Remote Sensors

2.4. Machine Learning in Robot Computer Vision

2.5. Machine Learning for Increasing Reliability and Fault Diagnostics

- Implementing machine learning algorithms to extend the functional features of adaptive robots, in particular, using fuzzy and neural network approaches to process sensor information and recognize the slippage direction of manipulated objects in the robot gripper upon contact with obstacles;

- Approximating the nonstationary “clamping force–air gap” functional dependence with a neuro-fuzzy technique for the mobile robot control system, which increases the reliability of robot movement on inclined ferromagnetic surfaces;

- Applying statistical learning theory to increase the efficiency of a robot’s sensor system through the developed prediction control algorithms;

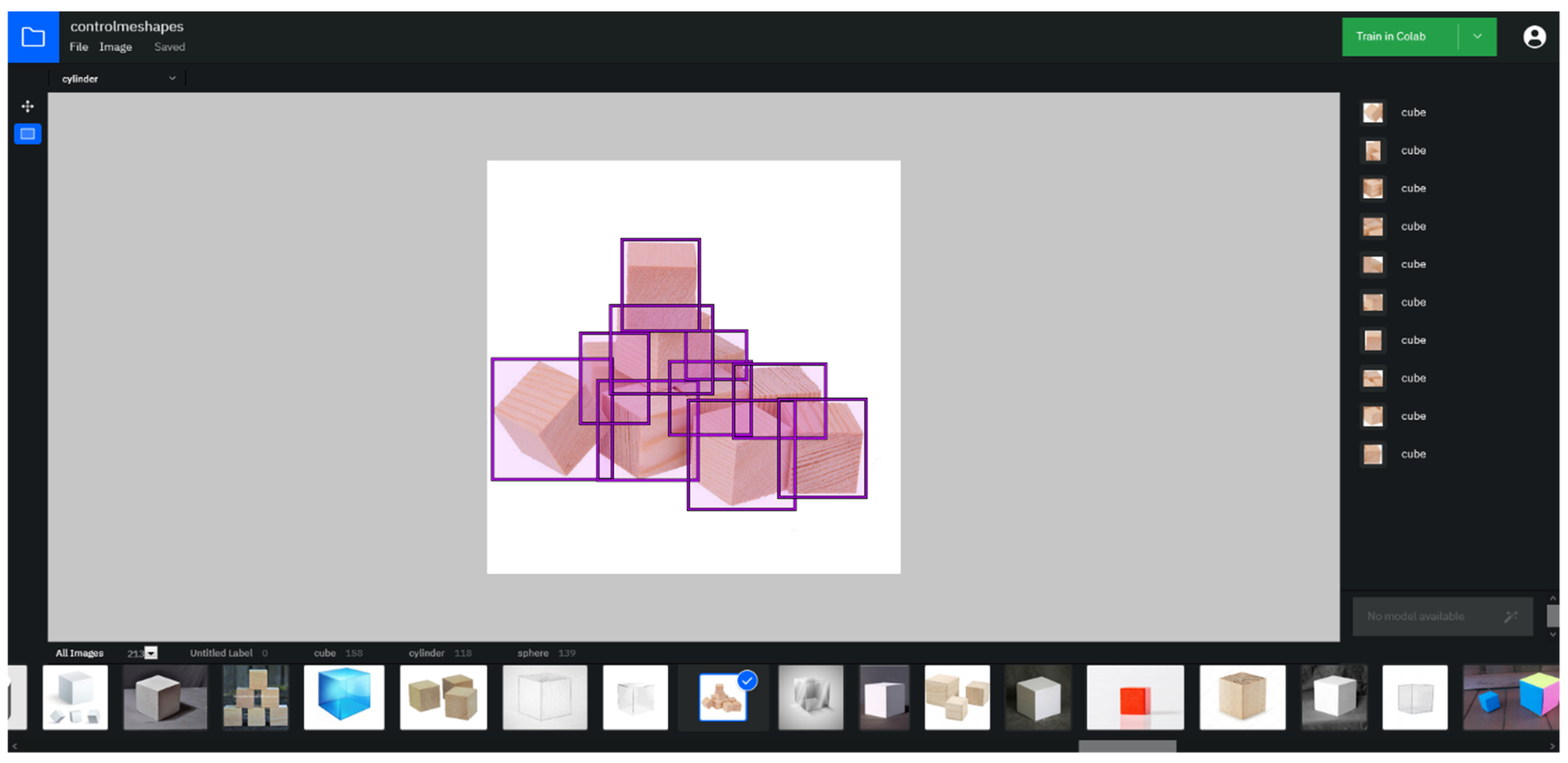

- Developing machine learning models and the corresponding software for recognizing manipulated objects [99] from video sensor information, and discussing the peculiarities of the convolutional neural network’s training process.

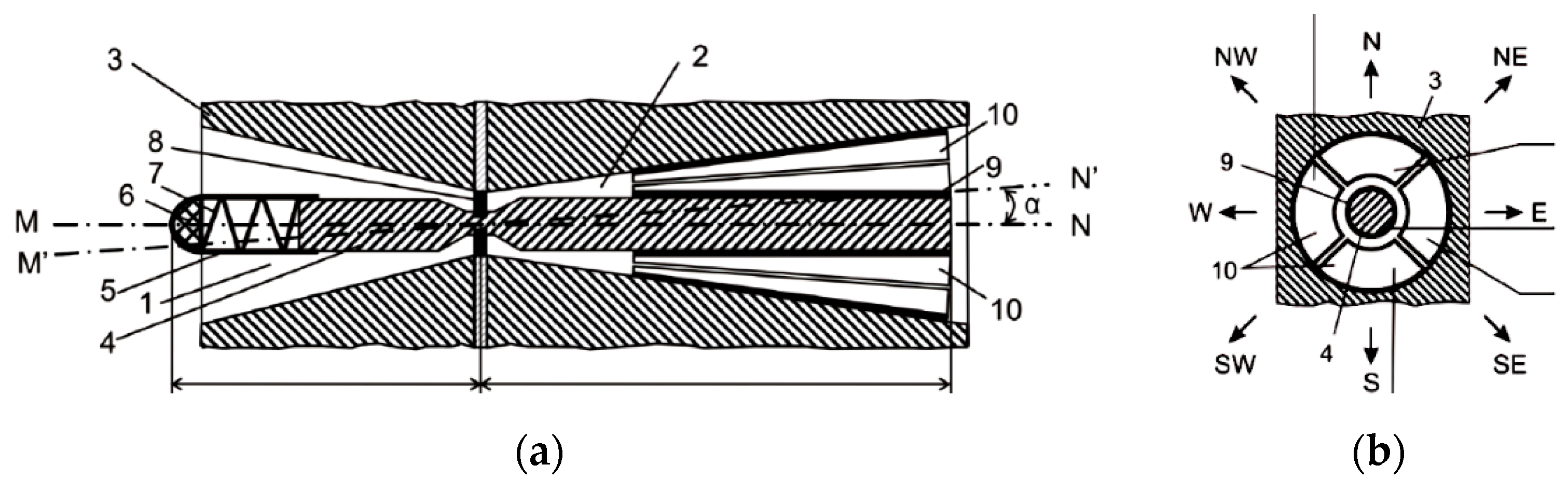

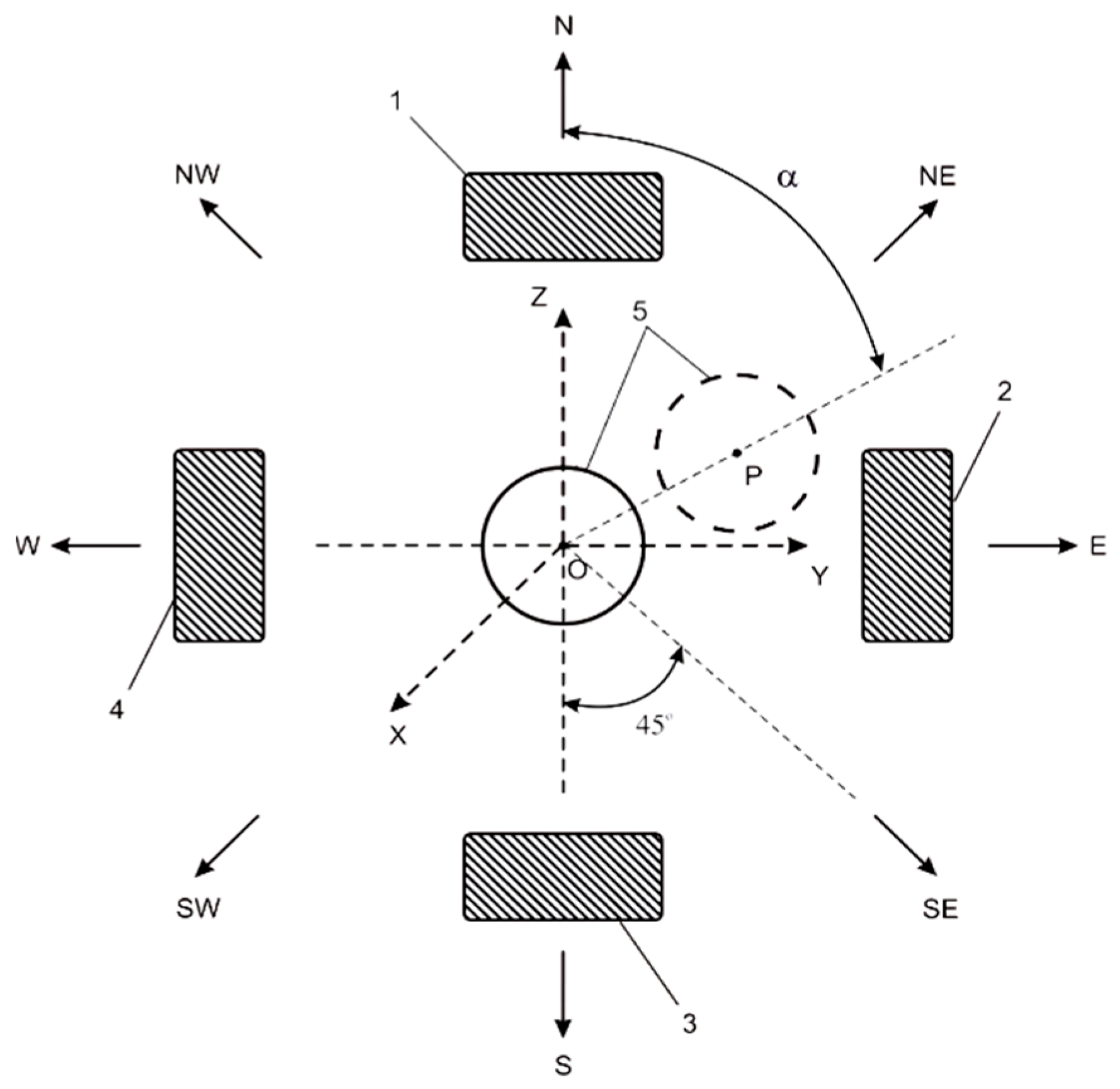

3. The Machine Learning Algorithms for Extension of Functional Properties of Adaptive Robots with Slip Displacement Sensors

4. Neuro-Fuzzy Techniques in Control Systems of Mobile Robots That Can Move the Operation Tool on Inclined, Vertical, and Ceiling Ferromagnetic Surfaces

5. Prediction Control of Robot Sensor and Control Systems Based on the Canonical Decomposition of the Statistical Data

- Critical operating conditions (high/low temperature, humidity, pressure, pollution, illumination, etc.);

- Autonomous operation;

- Changes in the mutual orientation of the sensor and the object being recognized;

- Real-time operation (in almost all cases);

- Limited resources.

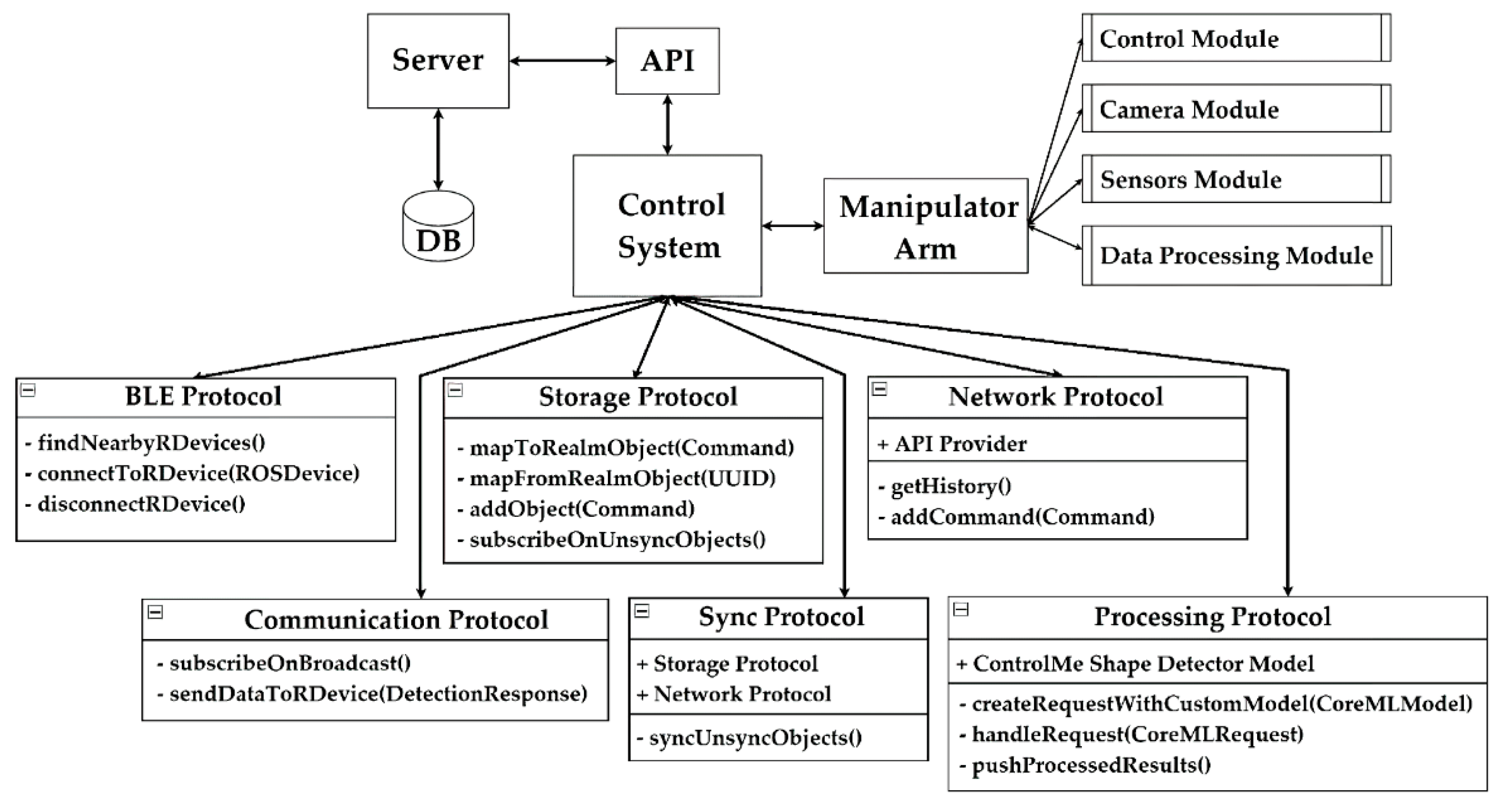

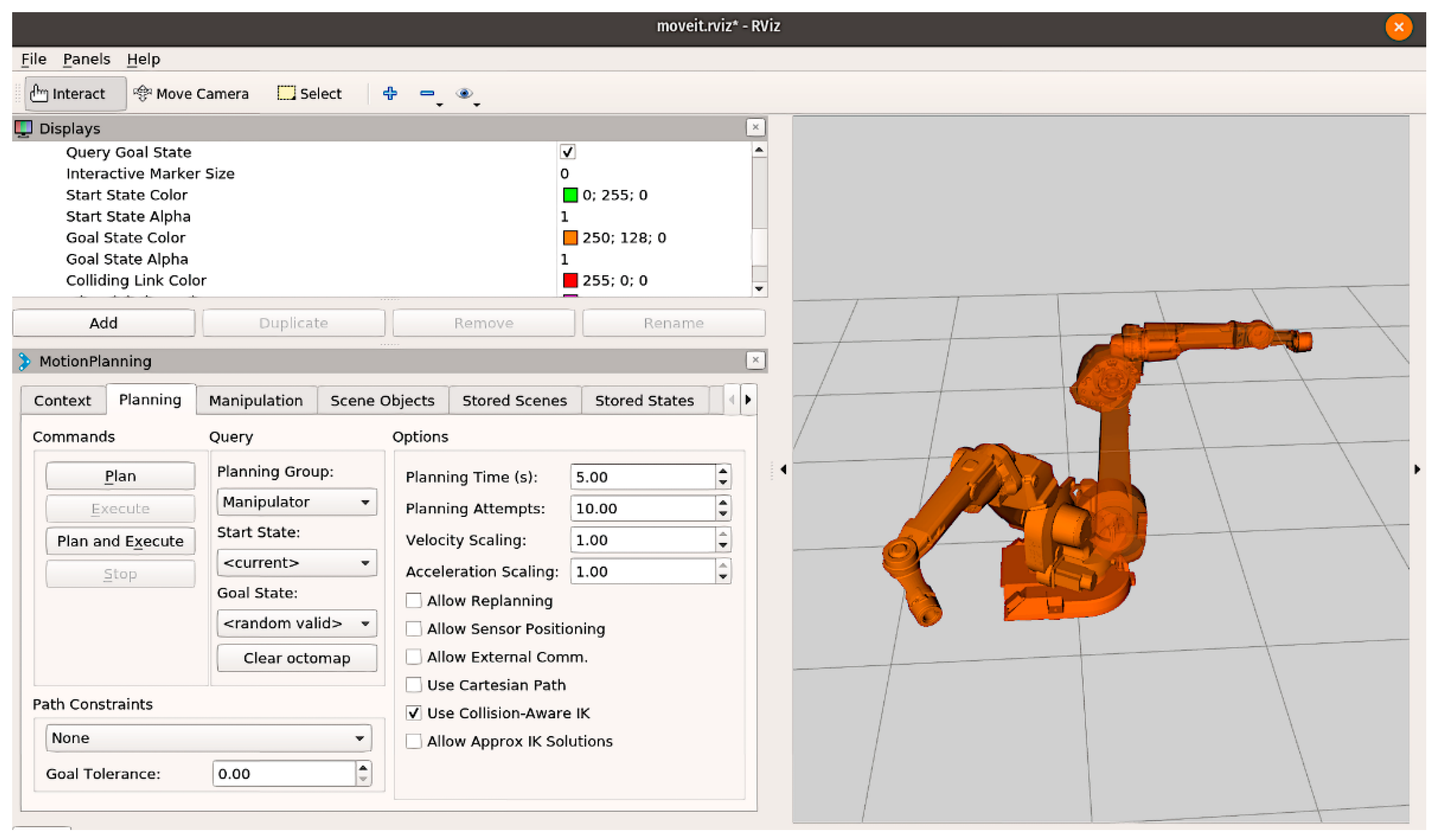

6. Control System Design and Robot Arm Simulation

6.1. “Control System” Component

6.2. “Server” Component

6.3. “Manipulator Arm” Component

7. Object Recognition in Robot Working Space Using Convolutional Neural Network

8. Conclusions

9. Patents

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Kawana, E.; Yasunobu, S. An Intelligent Control System Using Object Model by Real-Time Learning. In Proceedings of the SICE Annual Conference, Takamatsu, Japan, 17–20 September 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 2792–2797.

- Ayvaz, S.; Alpay, K. Predictive maintenance system for production lines in manufacturing: A machine learning approach using IoT data in real-time. Expert Syst. Appl. 2021, 173, 114598.

- Kondratenko, Y.; Duro, R. (Eds.) Advances in Intelligent Robotics and Collaborative Automation; River Publishers: Aalborg, Denmark, 2015; ISBN 9788793237032.

- Kondratenko, Y.; Khalaf, P.; Richter, H.; Simon, D. Fuzzy Real-Time Multi-objective Optimization of a Prosthesis Test Robot Control System. In Advanced Control Techniques in Complex Engineering Systems: Theory and Applications, Dedicated to Professor Vsevolod M. Kuntsevich. Studies in Systems, Decision and Control; Springer Nature Switzerland AG: Cham, Switzerland, 2019; Volume 203, pp. 165–185.

- Guo, J.; Xian, B.; Wang, F.; Zhang, X. Development of a three degree-of-freedom testbed for an unmanned helicopter and attitude control design. In Proceedings of the 32nd Chinese Control Conference, Xi’an, China, 26–28 July 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 733–738.

- Kondratenko, Y.; Klymenko, L.; Kondratenko, V.; Kondratenko, G.; Shvets, E. Slip Displacement Sensors for Intelligent Robots: Solutions and Models. In Proceedings of the 2013 IEEE 7th International Conference on Intelligent Data Acquisition and Advanced Computing Systems (IDAACS), Berlin, Germany, 12–14 September 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 861–866.

- Derkach, M.; Matiuk, D.; Skarga-Bandurova, I. Obstacle Avoidance Algorithm for Small Autonomous Mobile Robot Equipped with Ultrasonic Sensors. In Proceedings of the 2020 IEEE 11th International Conference on Dependable Systems, Services and Technologies (DESSERT), Kyiv, Ukraine, 14–18 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 236–241.

- Tkachenko, A.N.; Brovinskaya, N.M.; Kondratenko, Y.P. Evolutionary adaptation of control processes in robots operating in non-stationary environments. Mech. Mach. Theory 1983, 18, 275–278.

- Kondratenko, Y.; Khademi, G.; Azimi, V.; Ebeigbe, D.; Abdelhady, M.; Fakoorian, S.A.; Barto, T.; Roshanineshat, A.; Atamanyuk, I.; Simon, D. Robotics and Prosthetics at Cleveland State University: Modern Information, Communication, and Modeling Technologies. In Communications in Computer and Information Science, Proceedings of the 12th International Conference on Information and Communication Technologies in Education, Research, and Industrial Applications (ICTERI 2016), Kyiv, Ukraine, 21–24 June 2016; Springer: Berlin/Heidelberg, Germany, 2016; Volume 783, pp. 133–155.

- Patel, U.; Hatay, E.; D’Arcy, M.; Zand, G.; Fazli, P. A Collaborative Autonomous Mobile Service Robot. In Proceedings of the AAAI Fall Symposium on Artificial Intelligence for Human-Robot Interaction (AI-HRI), Arlington, VA, USA, 9–11 November 2017.

- Li, Z.; Huang, Z. Design of a type of cleaning robot with ultrasonic. J. Theor. Appl. Inf. Technol. 2013, 47, 1218–1222.

- Kondratenko, Y.P. Robotics, Automation and Information Systems: Future Perspectives and Correlation with Culture, Sport and Life Science. In Decision Making and Knowledge Decision Support Systems. Lecture Notes in Economics and Mathematical Systems; Gil-Lafuente, A.M., Zopounidis, C., Eds.; Springer: Cham, Switzerland, 2015; Volume 675, pp. 43–56.

- Taranov, M.O.; Kondratenko, Y. Models of Robot’s Wheel-Mover Behavior on Ferromagnetic Surfaces. Int. J. Comput. 2018, 17, 8–14.

- D’Arcy, M.; Fazli, P.; Simon, D. Safe Navigation in Dynamic, Unknown, Continuous, and Cluttered Environments. In Proceedings of the IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Shanghai, China, 11–13 October 2017; IEEE: Piscataway, NJ, USA, 2017.

- Taranov, M.; Wolf, C.; Rudolph, J.; Kondratenko, Y. Simulation of Robot’s Wheel-Mover on Ferromagnetic Surfaces. In Proceedings of the 9th IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Bucharest, Romania, 21–23 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 283–288.

- Driankov, D.; Saffiotti, A. (Eds.) Fuzzy Logic Techniques for Autonomous Vehicle Navigation; Physica: Heidelberg, Germany, 2013; ISBN 978-3-7908-1341-8.

- Kondratenko, Y.P.; Rudolph, J.; Kozlov, O.V.; Zaporozhets, Y.M.; Gerasin, O.S. Neuro-fuzzy observers of clamping force for magnetically operated movers of mobile robots. Tech. Electrodyn. 2017, 5, 53–61.

- Gerasin, O.S.; Topalov, A.M.; Taranov, M.O.; Kozlov, O.V.; Kondratenko, Y.P. Remote IoT-based control system of the mobile caterpillar robot. In Proceedings of the 16th International Conference on ICT in Education, Research and Industrial Applications. Integration, Harmonization and Knowledge Transfer (ICTERI 2020), Kharkiv, Ukraine, 6–10 October 2020; CEUR: Aachen, Germany, 2020; Volume 2740, pp. 129–136.

- Inoue, K.; Kaizu, Y.; Igarashi, S.; Imou, K. The development of autonomous navigation and obstacle avoidance for a robotic mower using machine vision technique. IFAC PapersOnLine 2019, 52, 173–177.

- Zhang, Y.; Zhao, J.; Sun, J. Robot Path Planning Method Based on Deep Reinforcement Learning. In Proceedings of the 2020 IEEE 3rd International Conference on Computer and Communication Engineering Technology (CCET), Beijing, China, 14–16 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 49–53.

- Qijie, Z.; Yue, Z.; Shihui, L. A path planning algorithm based on RRT and SARSA (λ) in unknown and complex conditions. In Proceedings of the 2020 Chinese Control and Decision Conference (CCDC), Hefei, China, 22–24 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2035–2040.

- Koza, J.R.; Bennett, F.H.; Andre, D.; Keane, M.A. Automated Design of Both the Topology and Sizing of Analog Electrical Circuits Using Genetic Programming. In Artificial Intelligence in Design ’96; Gero, J.S., Sudweeks, F., Eds.; Springer: Dordrecht, The Netherlands, 1996; pp. 151–170.

- Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F. Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning. IEEE Trans. Veh. Technol. 2020, 69, 14413–14423.

- Sokoliuk, A.; Kondratenko, G.; Sidenko, I.; Kondratenko, Y.; Khomchenko, A.; Atamanyuk, I. Machine Learning Algorithms for Binary Classification of Liver Disease. In Proceedings of the 2020 IEEE International Conference on Problems of Infocommunications. Science and Technology (PIC S&T), Kharkiv, Ukraine, 6–9 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 417–421.

- Sheremet, A.; Kondratenko, Y.; Sidenko, I.; Kondratenko, G. Diagnosis of Lung Disease Based on Medical Images Using Artificial Neural Networks. In Proceedings of the 2021 IEEE 3rd Conference on Electrical and Computer Engineering (UKRCON), Lviv, Ukraine, 26–28 August 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 561–566.

- Kondratenko, Y.; Sidenko, I.; Kondratenko, G.; Petrovych, V.; Taranov, M.; Sova, I. Artificial Neural Networks for Recognition of Brain Tumors on MRI Images. In Communications in Computer and Information Science, Proceedings of the 16th International Conference on Information and Communication Technologies in Education, Research, and Industrial Applications (ICTERI 2020), Kharkiv, Ukraine, 6–10 October 2020; Springer: Cham, Switzerland, 2020; Volume 1308, pp. 119–140.

- Sova, I.; Sidenko, I.; Kondratenko, Y. Machine learning technology for neoplasm segmentation on brain MRI scans. In Proceedings of the 2020 PhD Symposium at ICT in Education, Research, and Industrial Applications (ICTERI-PhD 2020), Kharkiv, Ukraine, 6–10 October 2020; CEUR: Aachen, Germany, 2020; Volume 2791, pp. 50–59.

- Kassahun, Y.; Yu, B.; Tibebu, A.T.; Stoyanov, D.; Giannarou, S.; Metzen, J.H.; Poorten, E.V. Surgical robotics beyond enhanced dexterity instrumentation: A survey of machine learning techniques and their role in intelligent and autonomous surgical actions. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 553–568.

- Striuk, O.; Kondratenko, Y. Generative Adversarial Neural Networks and Deep Learning: Successful Cases and Advanced Approaches. Int. J. Comput. 2021, 20, 339–349.

- Mohammadi, A.; Meniailov, A.; Bazilevych, K.; Yakovlev, S.; Chumachenko, D. Comparative study of linear regression and SIR models of COVID-19 propagation in Ukraine before vaccination. Radioelectron. Comput. Syst. 2021, 3, 5–14.

- Striuk, O.; Kondratenko, Y.; Sidenko, I.; Vorobyova, A. Generative adversarial neural network for creating photorealistic images. In Proceedings of the 2nd IEEE International Conference on Advanced Trends in Information Theory (ATIT), Kyiv, Ukraine, 25–27 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 368–371.

- Ren, C.; Kim, D.-K.; Jeong, D. A Survey of Deep Learning in Agriculture: Techniques and Their Applications. J. Inf. Process. Syst. 2020, 16, 1015–1033.

- Kumar, M.; Kumar, A.; Palaparthy, V.S. Soil Sensors-Based Prediction System for Plant Diseases Using Exploratory Data Analysis and Machine Learning. IEEE Sens. J. 2021, 21, 17455–17468.

- Atamanyuk, I.; Kondratenko, Y.; Sirenko, N. Management System for Agricultural Enterprise on the Basis of Its Economic State Forecasting. In Complex Systems: Solutions and Challenges in Economics, Management and Engineering. Studies in Systems, Decision and Control; Berger-Vachon, C., Gil Lafuente, A., Kacprzyk, J., Kondratenko, Y., Merigó, J., Morabito, C., Eds.; Springer: Berlin, Germany, 2018; Volume 125, pp. 453–470.

- Atamanyuk, I.; Kondratenko, Y.; Poltorak, A.; Sirenko, N.; Shebanin, V.; Baryshevska, I.; Atamaniuk, V. Forecasting of Cereal Crop Harvest on the Basis of an Extrapolation Canonical Model of a Vector Random Sequence. In Proceedings of the 15th International Conference on Information and Communication Technologies in Education, Research, and Industrial Applications. Volume II: Workshops (ICTERI 2019), Kherson, Ukraine, 12–15 June 2019; Ermolaev, V., Mallet, F., Yakovyna, V., Kharchenko, V., Kobets, V., Kornilowicz, A., Kravtsov, H., Nikitchenko, M., Semerikov, S., Spivakovsky, A., Eds.; CEUR: Aachen, Germany, 2019; Volume 2393, pp. 302–315.

- Atamanyuk, I.P.; Kondratenko, Y.P.; Sirenko, N.N. Forecasting Economic Indices of Agricultural Enterprises Based on Vector Polynomial Canonical Expansion of Random Sequences. In Proceedings of the 12th International Conference on Information and Communication Technologies in Education, Research, and Industrial Applications (ICTERI 2016), Kyiv, Ukraine, 21–24 June 2016; CEUR: Aachen, Germany, 2016; Volume 1614, pp. 458–468. Available online: https://ceur-ws.org/Vol-1614/paper_91.pdf (accessed on 15 December 2021).

- Werners, B.; Kondratenko, Y. Alternative Fuzzy Approaches for Efficiently Solving the Capacitated Vehicle Routing Problem in Conditions of Uncertain Demands. In Complex Systems: Solutions and Challenges in Economics, Management and Engineering. Studies in Systems, Decision and Control; Berger-Vachon, C., Gil Lafuente, A., Kacprzyk, J., Kondratenko, Y., Merigó, J., Morabito, C., Eds.; Springer: Berlin, Germany, 2018; Volume 125, pp. 521–543.

- Kondratenko, G.V.; Kondratenko, Y.P.; Romanov, D.O. Fuzzy Models for Capacitive Vehicle Routing Problem in Uncertainty. In Proceedings of the 17th International DAAAM Symposium Intelligent Manufacturing and Automation: Focus on Mechatronics & Robotics, Vienna, Austria, 8–11 November 2006; pp. 205–206.

- Zinchenko, V.; Kondratenko, G.; Sidenko, I.; Kondratenko, Y. Computer vision in control and optimization of road traffic. In Proceedings of the 2020 IEEE 3rd International Conference on Data Stream Mining and Processing (DSMP), Lviv, Ukraine, 21–25 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 249–254.

- Jingyao, W.; Manas, R.P.; Nallappan, G. Machine learning-based human-robot interaction in ITS. Inf. Process. Manag. 2022, 59, 102750.

- Leizerovych, R.; Kondratenko, G.; Sidenko, I.; Kondratenko, Y. IoT-complex for Monitoring and Analysis of Motor Highway Condition Using Artificial Neural Networks. In Proceedings of the 2020 IEEE 11th International Conference on Dependable Systems, Services and Technologies (DESSERT), Kyiv, Ukraine, 14–18 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 207–212.

- Kondratenko, Y.P.; Kondratenko, N.Y. Reduced library of the soft computing analytic models for arithmetic operations with asymmetrical fuzzy numbers. In Soft Computing: Developments, Methods and Applications. Series: Computer Science, Technology and Applications; Casey, A., Ed.; NOVA Science Publishers: Hauppauge, NY, USA, 2016; pp. 1–38.

- Gozhyj, A.; Nechakhin, V.; Kalinina, I. Solar Power Control System based on Machine Learning Methods. In Proceedings of the 2020 IEEE 15th International Conference on Computer Sciences and Information Technologies (CSIT), Zbarazh, Ukraine, 23–26 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 24–27.

- Chornovol, O.; Kondratenko, G.; Sidenko, I.; Kondratenko, Y. Intelligent forecasting system for NPP’s energy production. In Proceedings of the 2020 IEEE 3rd International Conference on Data Stream Mining and Processing (DSMP), Lviv, Ukraine, 21–25 August 2020; pp. 102–107.

- Borysenko, V.; Kondratenko, G.; Sidenko, I.; Kondratenko, Y. Intelligent forecasting in multi-criteria decision-making. In Proceedings of the 3rd International Workshop on Computer Modeling and Intelligent Systems (CMIS-2020), Zaporizhzhia, Ukraine, 27 April–1 May 2020; CEUR: Aachen, Germany, 2020; Volume 2608, pp. 966–979.

- Lavrynenko, S.; Kondratenko, G.; Sidenko, I.; Kondratenko, Y. Fuzzy Logic Approach for Evaluating the Effectiveness of Investment Projects. In Proceedings of the 2020 IEEE 15th International Scientific and Technical Conference on Computer Sciences and Information Technologies (CSIT), Zbarazh, Ukraine, 23–26 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 297–300.

- Bidyuk, P.I.; Gozhyj, A.; Kalinina, I.; Vysotska, V.; Vasilev, M.; Malets, M. Forecasting nonlinear nonstationary processes in machine learning task. In Proceedings of the 2020 IEEE 3rd International Conference on Data Stream Mining and Processing (DSMP), Lviv, Ukraine, 21–25 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 28–32.

- Osborne, M.A.; Roberts, S.J.; Rogers, A.; Ramchurn, S.D.; Jennings, N.R. Towards Real-Time Information Processing of Sensor Network Data Using Computationally Efficient Multi-output Gaussian Processes. In Proceedings of the 2008 International Conference on Information Processing in Sensor Networks (IPSN 2008), St. Louis, MO, USA, 22–24 April 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 109–120.

- Atamanyuk, I.; Shebanin, V.; Kondratenko, Y.; Havrysh, V.; Lykhach, V.; Kramarenko, S. Identification of the Optimal Parameters for Forecasting the State of Technical Objects Based on the Canonical Random Sequence Decomposition. In Proceedings of the 2020 IEEE 11th International Conference on Dependable Systems, Services and Technologies (DESSERT), Kyiv, Ukraine, 14–18 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 259–264.

- Li, X.; Shang, W.; Cong, S. Model-Based Reinforcement Learning for Robot Control. In Proceedings of the 2020 5th International Conference on Advanced Robotics and Mechatronics (ICARM), Shenzhen, China, 18–21 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 300–305.

- Alsamhi, S.H.; Ma, O.; Ansari, S. Convergence of Machine Learning and Robotics Communication in Collaborative Assembly: Mobility, Connectivity and Future Perspectives. J. Intell. Robot. Syst. 2020, 98, 541–566.

- Samadi, G.M.; Jond, H.B. Speed Control for Leader-Follower Robot Formation Using Fuzzy System and Supervised Machine Learning. Sensors 2021, 21, 3433.

- Krishnan, S.; Wan, Z.; Bharadwaj, K.; Whatmough, P.; Faust, A.; Neuman, S.; Wei, G.-Y.; Brooks, D.; Reddi, V.J. Machine learning-based automated design space exploration for autonomous aerial robots. arXiv 2021, arXiv:2102.02988v1.

- El-Shamouty, M.; Kleeberger, K.; Lämmle, A.; Huber, M.F. Simulation-driven machine learning for robotics and automation. Tech. Mess. 2019, 86, 673–684.

- Rajawat, A.S.; Rawat, R.; Barhanpurkar, K.; Shaw, R.N.; Ghosh, A. Robotic process automation with increasing productivity and improving product quality using artificial intelligence and machine learning. Artif. Intell. Future Gener. Robot. 2021, 1, 1–13.

- Yaseer, A.; Chen, H. Machine learning based layer roughness modeling in robotic additive manufacturing. J. Manuf. Process. 2021, 70, 543–552.

- Wang, X.V.; Pinter, J.S.; Liu, Z.; Wang, L. A machine learning-based image processing approach for robotic assembly system. Procedia CIRP 2021, 104, 906–911.

- Mayr, A.; Kißkalt, D.; Lomakin, A.; Graichen, K.; Franke, J. Towards an intelligent linear winding process through sensor integration and machine learning techniques. Procedia CIRP 2021, 96, 80–85.

- Al-Mousawi, A.J. Magnetic Explosives Detection System (MEDS) based on wireless sensor network and machine learning. Measurement 2020, 151, 107112.

- Martins, P.; Sá, F.; Morgado, F.; Cunha, C. Using machine learning for cognitive Robotic Process Automation (RPA). In Proceedings of the 2020 15th Iberian Conference on Information Systems and Technologies (CISTI), Seville, Spain, 24–27 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6.

- Segreto, T.; Teti, R. Machine learning for in-process end-point detection in robot-assisted polishing using multiple sensor monitoring. Int. J. Adv. Manuf. Technol. 2019, 103, 4173–4187.

- Klingspor, V.; Morik, K.; Rieger, A. Learning Concepts from Sensor Data of a Mobile Robot. Mach. Learn. 1996, 23, 305–332.

- Zheng, Y.; Song, Q.; Liu, J.; Song, Q.; Yue, Q. Research on motion pattern recognition of exoskeleton robot based on multimodal machine learning model. Neural Comput. Appl. 2020, 32, 1869–1877.

- Radouan, A.M. Deep Learning for Robotics. J. Data Anal. Inf. Process. 2021, 9, 63–76.

- Teng, X.; Lijun, T. Adoption of Machine Learning Algorithm-Based Intelligent Basketball Training Robot in Athlete Injury Prevention. Front. Neurorobot. 2021, 14, 117.

- Shih, B.; Shah, D.; Li, J.; Thuruthel, T.G.; Park, J.-L.; Iida, F.; Bao, Z.; Kramer-Bottiglio, R.; Tolley, M.T. Electronic skins and machine learning for intelligent soft robots. Sci. Robot. 2020, 5, 1–11.

- Ibrahim, A.; Younes, H.; Alameh, A.; Valle, M. Near Sensors Computation based on Embedded Machine Learning for Electronic Skin. Procedia Manuf. 2020, 52, 295–300.

- Keser, S.; Hayber, Ş.E. Fiber optic tactile sensor for surface roughness recognition by machine learning algorithms. Sens. Actuators A 2021, 332, 113071.

- Gonzalez, R.; Fiacchini, M.; Iagnemma, K. Slippage prediction for off-road mobile robots via machine learning regression and proprioceptive sensing. Rob. Auton. Syst. 2018, 105, 85–93.

- Wei, P.; Wang, B. Multi-sensor detection and control network technology based on parallel computing model in robot target detection and recognition. Comput. Commun. 2020, 159, 215–221.

- Martinez-Hernandez, U.; Rubio-Solis, A.; Prescott, T.J. Learning from sensory predictions for autonomous and adaptive exploration of object shape with a tactile robot. Neurocomputing 2020, 382, 127–139.

- Scholl, C.; Tobola, A.; Ludwig, K.; Zanca, D.; Eskofier, B.M. A Smart Capacitive Sensor Skin with Embedded Data Quality Indication for Enhanced Safety in Human–Robot Interaction. Sensors 2021, 21, 7210.

- Joshi, S.; Kumra, S.; Sahin, F. Robotic Grasping using Deep Reinforcement Learning. In Proceedings of the 2020 IEEE 16th International Conference on Automation Science and Engineering (CASE), Hong Kong, China, 20–21 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1461–1466.

- Bilal, D.K.; Unel, M.; Tunc, L.T.; Gonul, B. Development of a vision based pose estimation system for robotic machining and improving its accuracy using LSTM neural networks and sparse regression. Robot. Comput. Integr. Manuf. 2022, 74, 102262.

- Mishra, S.; Jabin, S. Recent trends in pedestrian detection for robotic vision using deep learning techniques. In Artificial Intelligence for Future Generation Robotics; Shaw, R., Ghosh, A., Balas, V., Bianchini, M., Eds.; Elsevier: Amsterdam, The Netherlands, 2021; pp. 137–157.

- Long, J.; Mou, J.; Zhang, L.; Zhang, S.; Li, C. Attitude data-based deep hybrid learning architecture for intelligent fault diagnosis of multi-joint industrial robots. J. Manuf. Syst. 2021, 61, 736–745.

- Subha, T.D.; Subash, T.D.; Claudia Jane, K.S.; Devadharshini, D.; Francis, D.I. Autonomous Under Water Vehicle Based on Extreme Learning Machine for Sensor Fault Diagnostics. Mater. Today 2020, 24, 2394–2402.

- Severo de Souza, P.S.; Rubin, F.P.; Hohemberger, R.; Ferreto, T.C.; Lorenzon, A.F.; Luizelli, M.C.; Rossi, F.D. Detecting abnormal sensors via machine learning: An IoT farming WSN-based architecture case study. Measurement 2020, 164, 108042.

- Kamizono, K.; Ikeda, K.; Kitajima, H.; Yasuda, S.; Tanaka, T. FDC Based on Neural Network with Harmonic Sensor to Prevent Error of Robot. IEEE Trans. Semicond. Manuf. 2021, 34, 291–295.

- Bidyuk, P.; Kalinina, I.; Gozhyj, A. An Approach to Identifying and Filling Data Gaps in Machine Learning Procedures. In Lecture Notes in Computational Intelligence and Decision Making. ISDMCI 2021. Lecture Notes on Data Engineering and Communications Technologies; Babichev, S., Lytvynenko, V., Eds.; Springer: Cham, Switzerland, 2021; Volume 77, pp. 164–176.

- Kondratenko, Y.; Kondratenko, N. Real-Time Fuzzy Data Processing Based on a Computational Library of Analytic Models. Data 2018, 3, 59.

- Kondratenko, Y.; Gordienko, E. Implementation of the neural networks for adaptive control system on FPGA. In Proceedings of the 23rd Int. DAAAM Symp. Intelligent Manufacturing and Automation, Vienna, Austria, 24–27 October 2012; Volume 23, pp. 389–392.

- Choi, W.; Heo, J.; Ahn, C. Development of Road Surface Detection Algorithm Using CycleGAN-Augmented Dataset. Sensors 2021, 21, 7769.

- Kondratenko, Y.P.; Kuntsevich, V.M.; Chikrii, A.A.; Gubarev, V.F. (Eds.) Advanced Control Systems: Theory and Applications; Series in Automation, Control and Robotics; River Publishers: Gistrup, Denmark, 2021; ISBN 9788770223416.

- Kuntsevich, V.M.; Gubarev, V.F.; Kondratenko, Y.P.; Lebedev, D.; Lysenko, V. (Eds.) Control Systems: Theory and Applications; Series in Automation, Control and Robotics; River Publishers: Gistrup, Denmark; Delft, The Netherlands, 2018; ISBN 8770220247.

- Gerasin, O.; Kondratenko, Y.; Topalov, A. Dependable robot’s slip displacement sensors based on capacitive registration elements. In Proceedings of the IEEE 9th International Conference on Dependable Systems, Services and Technologies (DESSERT), Kiev, Ukraine, 24–27 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 378–383.

- Kondratenko, Y.; Gerasin, O.; Topalov, A. A simulation model for robot’s slip displacement sensors. Int. J. Comput. 2016, 15, 224–236.

- Kondratenko, Y.P.; Gerasin, O.S.; Topalov, A.M. Modern Sensing Systems of Intelligent Robots Based on Multi-Component Slip Displacement Sensors. In Proceedings of the 2015 IEEE 8th International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Warsaw, Poland, 24–26 September 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 902–907.

- Kondratenko, Y.P.; Kondratenko, V.Y. Advanced Trends in Design of Slip Displacement Sensors for Intelligent Robots. In Advances in Intelligent Robotics and Collaborative Automation. Series on Automation, Control and Robotics; Kondratenko, Y., Duro, R., Eds.; River Publishers: Gistrup, Denmark, 2015; pp. 167–191.

- Zaporozhets, Y.M.; Kondratenko, Y.P.; Shyshkin, O.S. Mathematical model of slip displacement sensor with registration of transversal constituents of magnetic field of sensing element. Tech. Electrodyn. 2012, 4, 67–72.

- Kondratenko, Y.; Shvets, E.; Shyshkin, O. Modern Sensor Systems of Intelligent Robots Based on the Slip Displacement Signal Detection. In Proceedings of the 18th Int. DAAAM Symp. Intelligent Manufacturing and Automation, Vienna, Austria, 24–27 October 2007; pp. 381–382.

- Kondratenko, Y.P. Measurement Methods for Slip Displacement Signal Registration. In Proceedings of the Second International Symposium on Measurement Technology and Intelligent Instruments, Wuhan, China, 29 October–5 November 1993; Volume 2101, pp. 1451–1461.

- Massalim, Y.; Kappassov, Z.; Varol, H.A.; Hayward, V. Robust Detection of Absence of Slip in Robot Hands and Feet. IEEE Sens. J. 2021, 21, 27897–27904.

- Kondratenko, Y.; Gerasin, O.; Kozlov, O.; Topalov, A.; Kilimanov, B. Inspection mobile robot’s control system with remote IoT-based data transmission. J. Mob. Multimed. 2021, 17, 499–522.

- Kondratenko, Y.; Zaporozhets, Y.; Rudolph, J.; Gerasin, O.; Topalov, A.; Kozlov, O. Modeling of clamping magnets interaction with ferromagnetic surface for wheel mobile robots. Int. J. Comput. 2018, 17, 33–46.

- Kondratenko, Y.Y.; Zaporozhets, Y.; Rudolph, J.; Gerasin, O.; Topalov, A.; Kozlov, O. Features of clamping electromagnets using in wheel mobile robots and modeling of their interaction with ferromagnetic plate. In Proceedings of the 9th IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Bucharest, Romania, 21–23 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 453–458.

- Taranov, M.; Rudolph, J.; Wolf, C.; Kondratenko, Y.; Gerasin, O. Advanced approaches to reduce number of actors in a magnetically-operated wheel-mover of a mobile robot. In Proceedings of the 2017 13th International Conference Perspective Technologies and Methods in MEMS Design (MEMSTECH), Lviv, Ukraine, 20–23 April 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 96–100.

- Kondratenko, Y.P.; Kozlov, O.V.; Gerasin, O.S.; Zaporozhets, Y.M. Synthesis and research of neuro-fuzzy observer of clamping force for mobile robot automatic control system. In Proceedings of the 2016 IEEE First International Conference on Data Stream Mining & Processing (DSMP), Lviv, Ukraine, 23–27 August 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 90–95.

- Kondratenko, Y.; Sichevskyi, S.; Kondratenko, G.; Sidenko, I. Manipulator’s Control System with Application of the Machine Learning. In Proceedings of the 11th IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Cracow, Poland, 22–25 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 363–368.

- Ueda, M.; Iwata, K.; Shingu, H. Tactile sensors for an industrial robot to detect a slip. In Proceedings of the 2nd Int. Symp. on Industrial Robots, Chicago, IL, USA, 18–21 April 1972; pp. 63–76.

- Ueda, M.; Iwata, K. Adaptive grasping operation of an industrial robot. In Proceedings of the 3rd Int. Symp. Ind. Robots, Zurich, Switzerland, 29–31 May 1973; pp. 301–310.

- Tiwana, M.I.; Shashank, A.; Redmond, S.J.; Lovell, N.H. Characterization of a capacitive tactile shear sensor for application in robotic and upper limb prostheses. Sens. Actuators A 2011, 165, 164–172.

- Kondratenko, Y.P.; Kondratenko, V.Y.; Shvets, E.A. Intelligent Slip Displacement Sensors in Robotics. In Sensors, Transducers, Signal Conditioning and Wireless Sensors Networks; Yurish, S.Y., Ed.; Book Series: Advances in Sensors: Reviews; IFSA Publishing: Barcelona, Spain, 2016; Volume 3, pp. 37–66.

- Sheng, Q.; Xu, G.Y.; Liu, G. Design of PZT Micro-displacement acquisition system. Sens. Transducers 2014, 182, 119–124.

- Zadeh, L.A. Fuzzy sets. Inf. Control. 1965, 8, 338–353. [Google Scholar] [CrossRef]

- Mamdani, E.H. Application of fuzzy algorithm for control of a simple dynamic plant. Proc. Inst. Electr. Eng. 1974, 121, 1585–1588. [Google Scholar] [CrossRef]

- Kondratenko, Y.P.; Simon, D. Structural and parametric optimization of fuzzy control and decision making systems. In Recent Developments and the New Direction in Soft-Computing Foundations and Applications. Studies in Fuzziness and Soft Computing; Zadeh, L., Yager, R., Shahbazova, S., Reformat, M., Kreinovich, V., Eds.; Springer: Cham, Switzerland, 2018; Volume 361, pp. 273–289. [Google Scholar] [CrossRef]

- Kondratenko, Y.P.; Klymenko, L.P.; Al Zu’bi, E.Y.M. Structural Optimization of Fuzzy Systems’ Rules Base and Aggregation Models. Kybernetes 2013, 42, 831–843. [Google Scholar] [CrossRef]

- Kondratenko, Y.P.; Altameem, T.A.; Al Zubi, E.Y.M. The optimisation of digital controllers for fuzzy systems design. Adv. Model. Anal. 2010, 47, 19–29. [Google Scholar]

- Kondratenko, Y.P.; Al Zubi, E.Y.M. The Optimisation Approach for Increasing Efficiency of Digital Fuzzy Controllers. In Proceedings of the Annals of DAAAM for 2009 & Proceeding of the 20th Int. DAAAM Symp. Intelligent Manufacturing and Automation, Vienna, Austria, 25–28 November 2009; pp. 1589–1591. [Google Scholar]

- Kondratenko, Y.P.; Kozlov, A.V. Parametric optimization of fuzzy control systems based on hybrid particle swarm algorithms with elite strategy. J. Autom. Inf. Sci. 2019, 51, 25–45. [Google Scholar] [CrossRef]

- Pedrycz, W.; Li, K.; Reformat, M. Evolutionary reduction of fuzzy rule-based models. In Fifty Years of Fuzzy Logic and Its Applications. Studies in Fuzziness and Soft Computing; Tamir, D., Rishe, N., Kandel, A., Eds.; Springer: Cham, Switzerland, 2015; Volume 326, pp. 459–481. [Google Scholar] [CrossRef]

- Christensen, L.; Fischer, N.; Kroffke, S.; Lemburg, J.; Ahlers, R. Cost-Effective Autonomous Robots for Ballast Water Tank Inspection. J. Ship Prod. Des. 2011, 27, 127–136. [Google Scholar]

- Souto, D.; Faiña, A.; López-Peña, F.; Duro, R.J. Lappa: A new type of robot for underwater non-magnetic and complex hull cleaning. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 3394–3399. [Google Scholar] [CrossRef]

- Ross, B.; Bares, J.; Fromme, C. A Semi-Autonomous Robot for Stripping Paint from Large Vessels. Int. J. Robot. Res. 2003, 22, 617–626. [Google Scholar] [CrossRef]

- Kondratenko, Y.; Kozlov, O.; Gerasin, O. Neuroevolutionary approach to control of complex multicoordinate interrelated plants. Int. J. Comput. 2019, 18, 502–514. [Google Scholar] [CrossRef]

- Gerasin, O.; Kozlov, O.; Kondratenko, G.; Rudolph, J.; Kondratenko, Y. Neural controller for mobile multipurpose caterpillar robot. In Proceedings of the 2019 10th IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Metz, France, 18–21 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 222–227. [Google Scholar] [CrossRef]

- Michael, S. Metrological Characterization and Comparison of D415, D455, L515 RealSense Devices in the Close Range. Sensors 2021, 21, 7770. [Google Scholar] [CrossRef]

- Palar, P.S.; Vargas Terres, V.; Oliveira, A.S. Human–Robot Interface for Embedding Sliding Adjustable Autonomy Methods. Sensors 2020, 20, 5960. [Google Scholar] [CrossRef]

- Atamanyuk, I.P.; Kondratenko, V.Y.; Kozlov, O.V.; Kondratenko, Y.P. The Algorithm of Optimal Polynomial Extrapolation of Random Processes. In Lecture Notes in Business Information Processing, Proceedings of the International Conference Modeling and Simulation in Engineering, Economics and Management, New Rochelle, NY, USA, 30 May–1 June 2012; Engemann, K.J., Gil-Lafuente, A.M., Merigo, J.L., Eds.; Springer: Berlin, Germany, 2012; Volume 115, pp. 78–87. [Google Scholar] [CrossRef]

- Ryguła, A. Influence of Trajectory and Dynamics of Vehicle Motion on Signal Patterns in the WIM System. Sensors 2021, 21, 7895. [Google Scholar] [CrossRef]

- Khan, F.; Ahmad, S.; Gürüler, H.; Cetin, G.; Whangbo, T.; Kim, C.-G. An Efficient and Reliable Algorithm for Wireless Sensor Network. Sensors 2021, 21, 8355. [Google Scholar] [CrossRef]

- Yu, H.; Chen, C.; Lu, N.; Wang, C. Deep Auto-Encoder and Deep Forest-Assisted Failure Prognosis for Dynamic Predictive Maintenance Scheduling. Sensors 2021, 21, 8373. [Google Scholar] [CrossRef] [PubMed]

- Atamanyuk, I.; Kondratenko, Y.; Shebanin, V.; Mirgorod, V. Method of Polynomial Predictive Control of Fail-Safe Operation of Technical Systems. In Proceedings of the XIIIth International Conference the Experience of Designing and Application of CAD Systems in Microelectronics (CADSM), Lviv, Ukraine, 24–27 February 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 248–251. [Google Scholar] [CrossRef]

- Atamanyuk, I.; Shebanin, V.; Kondratenko, Y.; Volosyuk, Y.; Sheptylevskyi, O.; Atamaniuk, V. Predictive Control of Electrical Equipment Reliability on the Basis of the Non-linear Canonical Model of a Vector Random Sequence. In Proceedings of the IEEE International Conference on Modern Electrical and Energy Systems (MEES), Kremenchuk, Ukraine, 23–25 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 130–133. [Google Scholar] [CrossRef]

- Everitt, B.S. (Ed.) The Cambridge Dictionary of Statistics; Cambridge University Press: New York, NY, USA, 2006; pp. 1–432. [Google Scholar]

- Nagrath, I.J.; Shripal, P.P.; Chand, A. Development and Implementation of Intelligent Control Strategy for Robotic Manipulator. In Proceedings of the IEEE/IAS International Conference on Industrial Automation and Control, Hyderabad, India, 5–7 January 1995; IEEE: Piscataway, NJ, USA, 1995; pp. 215–220. [Google Scholar] [CrossRef]

- Alagöz, Y.; Karabayır, O.; Mustaçoğlu, A.F. Target Classification Using YOLOv2 in Land-Based Marine Surveillance Radar. In Proceedings of the 28th Signal Processing and Communications Applications Conference (SIU), Gaziantep, Turkey, 5–7 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–4. [Google Scholar] [CrossRef]

- Zhang, H.; Zhang, L.; Li, P.; Gu, D. Yarn-dyed Fabric Defect Detection with YOLOV2 Based on Deep Convolution Neural Networks. In Proceedings of the 7th Data Driven Control and Learning Systems Conference (DDCLS), Enshi, China, 25–27 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 170–174. [Google Scholar] [CrossRef]

- Wang, M.; Liu, M.; Zhang, F.; Lei, G.; Guo, J.; Wang, L. Fast Classification and Detection of Fish Images with YOLOv2. In Proceedings of the OCEANS—MTS/IEEE Kobe Techno-Oceans Conference (OTO), Kobe, Japan, 28–31 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–4. [Google Scholar] [CrossRef]

- Li, L.; Chou, W.; Zhou, W.; Lou, M. Design Patterns and Extensibility of REST API for Networking Applications. IEEE Trans. Netw. Serv. Manag. 2016, 13, 154–167. [Google Scholar] [CrossRef]

- Rivera, S.; Iannillo, A.K.; Lagraa, S.; Joly, C.; State, R. ROS-FM: Fast Monitoring for the Robotic Operating System (ROS). In Proceedings of the 25th International Conference on Engineering of Complex Computer Systems (ICECCS), Singapore, 28–31 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 187–196. [Google Scholar] [CrossRef]

- Görner, M.; Haschke, R.; Ritter, H.; Zhang, J. MoveIt! Task Constructor for Task-Level Motion Planning. In Proceedings of the International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 190–196. [Google Scholar] [CrossRef]

- Deng, H.; Xiong, J.; Xia, Z. Mobile Manipulation Task Simulation using ROS with MoveIt. In Proceedings of the IEEE International Conference on Real-time Computing and Robotics (RCAR), Okinawa, Japan, 14–18 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 612–616. [Google Scholar] [CrossRef]

- Youakim, D.; Ridao, P.; Palomeras, N.; Spadafora, F.; Ribas, D.; Muzzupappa, M. MoveIt!: Autonomous Underwater Free-Floating Manipulation. IEEE Robot. Autom. Mag. 2017, 24, 41–51. [Google Scholar] [CrossRef]

- Salameen, L.; Estatieh, A.; Darbisi, S.; Tutunji, T.A.; Rawashdeh, N.A. Interfacing Computing Platforms for Dynamic Control and Identification of an Industrial KUKA Robot Arm. In Proceedings of the 21st International Conference on Research and Education in Mechatronics (REM), Cracow, Poland, 9–11 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–5. [Google Scholar] [CrossRef]

- Gugnani, S.; Lu, X.; Panda, D.K. Swift-X: Accelerating OpenStack Swift with RDMA for Building an Efficient HPC Cloud. In Proceedings of the 17th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGRID), Madrid, Spain, 14–17 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 238–247. [Google Scholar] [CrossRef]

| Number of Epochs | Loss | Time in Seconds | Training Accuracy in % | Validation Accuracy in % |
|---|---|---|---|---|
| 1000 | 2.123 | 1860 | 87 | 82 |
| 2000 | 1.211 | 3660 | 95 | 90 |
| 3000 | 1.044 | 5340 | 95 | 93 |
| 4000 | 0.857 | 7020 | 99 | 93 |
| 5000 | 0.752 | 8760 | 100 | 95 |

| Number of Epochs | Loss | Time in Seconds | Training Accuracy in % | Validation Accuracy in % |
|---|---|---|---|---|
| 450 | 1.7 | 780 | 85 | 80 |
| 510 | 1.7 | 900 | 86 | 81 |
| 1000 | 1.2 | 1800 | 87 | 82 |
| 2000 | 0.95 | 3540 | 95 | 90 |
| 3000 | 0.73 | 5220 | 95 | 93 |
| 4000 | 0.68 | 6960 | 99 | 96 |
| 5000 | 0.63 | 8700 | 100 | 100 |

| Number of Epochs | Training Loss | Testing Loss | Training Accuracy in % | Testing Accuracy in % |
|---|---|---|---|---|
| 1 | 0.1187 | 0.0857 | 96.21 | 97.07 |
| 2 | 0.0599 | 0.0611 | 98.02 | 98.00 |
| 3 | 0.0515 | 0.0661 | 98.28 | 97.87 |

| Number of Epochs | Training Loss | Testing Loss | Training Accuracy in % | Testing Accuracy in % |
|---|---|---|---|---|
| 1 | 0.0469 | 0.0341 | 98.38 | 98.68 |
| 2 | 0.0092 | 0.0582 | 99.67 | 97.68 |
| 3 | 0.0060 | 0.0263 | 99.75 | 99.20 |
| 4 | 0.0058 | 0.0677 | 99.76 | 97.16 |

| Number of Epochs | Training Loss | Testing Loss | Training Accuracy in % | Testing Accuracy in % |
|---|---|---|---|---|
| 1 | 0.6128 | 0.3412 | 77.80 | 88.40 |
| 2 | 0.1623 | 0.1902 | 94.80 | 93.60 |
| 3 | 0.1330 | 0.2070 | 95.30 | 90.90 |
| 4 | 0.1011 | 0.1240 | 96.70 | 96.80 |
| 5 | 0.0302 | 0.0601 | 99.10 | 98.00 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation: Kondratenko, Y.; Atamanyuk, I.; Sidenko, I.; Kondratenko, G.; Sichevskyi, S. Machine Learning Techniques for Increasing Efficiency of the Robot’s Sensor and Control Information Processing. Sensors 2022, 22, 1062. https://doi.org/10.3390/s22031062