1. Introduction

Cavitation is the process of formation, growth, and collapse of bubbles created around tiny particles under special pressure conditions in a liquid [1]. The phenomenon is characterized by a discontinuity of the liquid’s state when the pressure undergoes a sudden local drop [2]. Cavitation that appears when ultrasound propagates in fluids is called ultrasound cavitation [3,4,5].

Effects such as corrosion–erosion [6,7,8], the appearance of vibration and noise [9,10,11], sonoluminescence [12], and the depassivation of solid materials are associated with ultrasonic cavitation. Ultrasonic cavitation is employed for bleaching, soldering, emulsification, cleaning, extraction, nanoparticle synthesis, and separation [3,9].

Researchers have analyzed acoustic cavitation and the phenomena associated with sound propagation in liquids for the last twenty years. Still, the physical processes related to the formation and collapse of the bubbles are not entirely explained. They result from the interaction of bubbles whose growth and collapse cycles are not simultaneous, giving rise to multiple interactions [13,14].

Industrial processes (among them ultrasonic cleaning and sonochemical processing) depend on acoustic cavitation, which, in turn, is influenced by the conditions of the ultrasonic process [15]. Recently, an increasing interest in understanding the ultrasound cavitation mechanism and explaining its effects has been noticed [11,12,16,17,18].

Scientists have presented applications of acoustic cavitation based on the analysis of the spectrum of the signal that appears in the cavitation field [19]. It has been shown that the signal generated by exciting the transducer at a high frequency (in this case, 20 kHz) depends on the nature of the liquid and the power regime. The electrical signal is alternating as a result of the cyclical growth and collapse process undergone by the bubbles [11,16,17,20].

Other researchers focused on eliminating the noise produced in acoustic cavitation [17] and explained the appearance of the electrical signal in cavitating liquids using the double-layer theory from plasma physics [16,20]. Differential equations, ARIMA, and artificial neural networks were proposed for characterizing the signal in the time domain [3,19,21,22].

Despite the good performance of the Box–Jenkins approaches in modeling such signals, they cannot capture the nonlinear behavior of the time series. Artificial intelligence (AI) methods have come to fill this gap. Generalized Regression Neural Networks (GRNN) have proved their modeling capabilities in domains such as medicine, IoT, agriculture, meteorology, and finance [23,24,25,26,27,28]. Although they require a longer time to provide results than other algorithms, GRNNs [29] have some features that lead many to recommend their use: they learn quickly, do not use backpropagation, are not sensitive to noise, and can be modified to permit a multidimensional output [29,30].

Many scientists, among them Anjoy and Paul [31], Fard and Akbari-Zadehb [32], Khandelwal et al. [33], Lopes et al. [34], and Wang et al. [35], showed that hybrid approaches often provide better models than the Box–Jenkins or AI models used separately, capturing both the linear and nonlinear behavior.

In the above context, the main contributions of this work are the following. (1) Building an experimental installation for the study of ultrasound cavitation in different liquids. (2) Collecting the electrical signals induced by cavitation in seawater. (3) Studying the statistical properties of these signals. (4) Proposing four alternative approaches (ARIMA, Generalized Regression Neural Network (GRNN), Wavelet-ARIMA, and Wavelet-ANN, the last two being hybrid) for modeling the signals collected in seawater. (5) Using the built models for forecasting the series. (6) Comparing the performances of these approaches based on the forecast quality.

2. Materials and Methods

2.1. Experiment

The installation used for this experiment was built for studies in stationary and circulating liquids. It has the following main components (the numbers in brackets refer to Figure 1) [20]:

The tank where the studied liquid (seawater, here) is introduced (1).

The high-frequency generator (HAMEG HM8130, Germany), which worked at 20 kHz in this case (8).

A ceramic transducer (7), excited by the generator.

A pair of copper electrodes (13), used to collect the electrical signal produced when the generator works. They can be placed at various distances (from 6 to 61 cm) from the transducer. In the experiment presented here, the distance between the transducer and the electrodes was 30 cm.

An acquisition board (14), connected to a computer (15) for recording the signal.

A cooling fan (11), used for keeping the liquid at a constant temperature (in this case 20 °C) during the experiment (given that ultrasound cavitation releases heat).

The command block (12), used for selecting different powers for the generator regime (80 W, 120 W, or 180 W). Here, 80 W was selected.

When the experiment is performed in a stationary liquid, the other components of the experimental setup are not involved. Details on the functioning of the setup are given in [20,22].

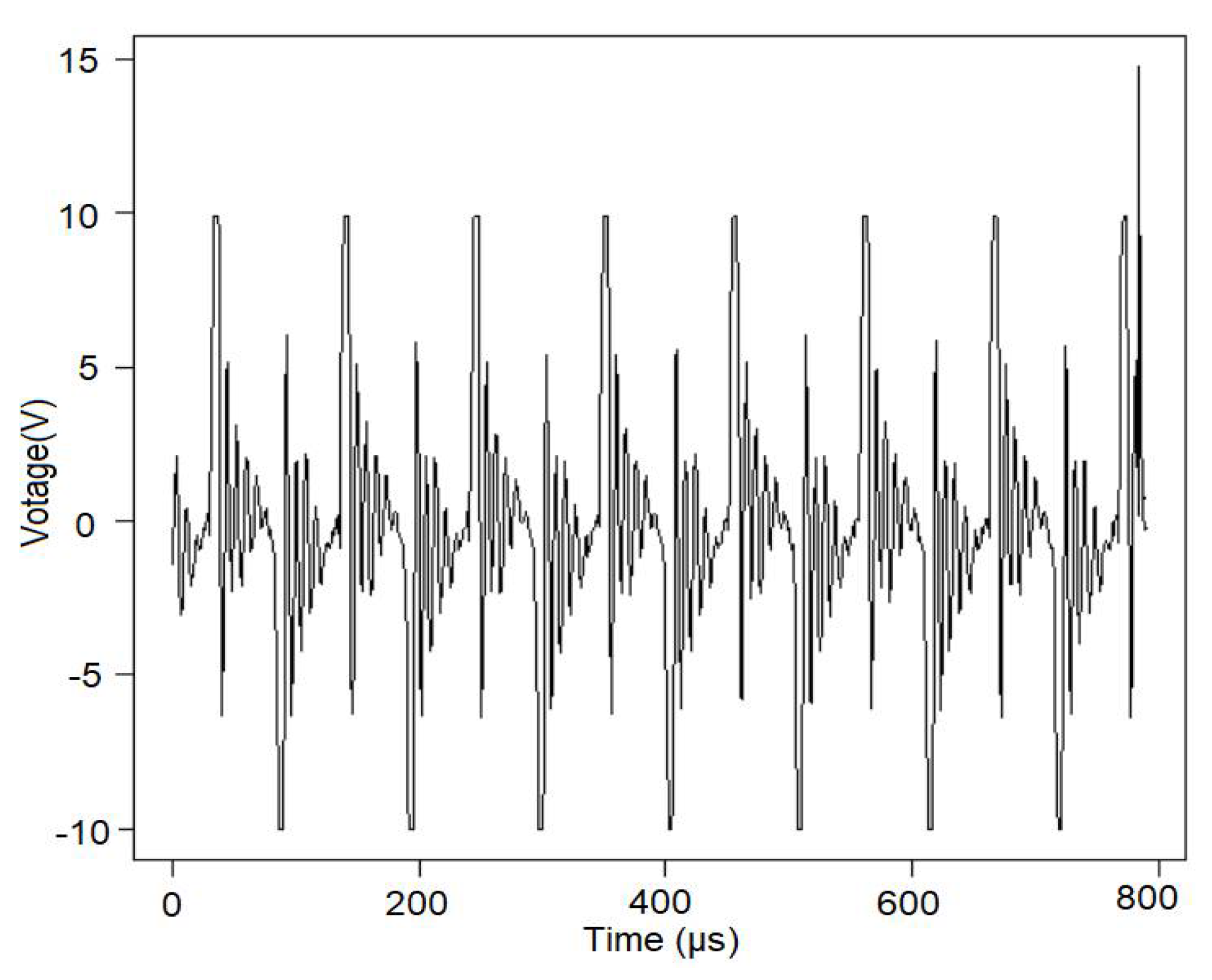

The studied signal is represented in Figure 2.

2.2. Methodology

The signal, studied in the time domain, was subject to statistical tests performed at the significance level of 5%. The null hypotheses, the alternatives, and the tests performed are as follows:

Normality, against non-normality: the Shapiro–Wilk test [36].

Homoskedasticity, against heteroskedasticity: the Levene test [37].

Randomness, against non-randomness: the runs test [38].

Stationarity, against non-stationarity: the KPSS test [39].
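As an illustration of one of the tests listed above, the runs test can be sketched in a few lines. This is a minimal version of the Wald–Wolfowitz runs test about the median, using the normal approximation; the choice of the median as the cut point, the handling of ties, and the name `runs_test` are assumptions made here, not details reported for the software used in the study.

```python
import math
from statistics import median

def runs_test(series):
    """Two-sided runs test about the median (normal approximation)."""
    cut = median(series)
    # Values equal to the cut point are dropped; the rest are coded above/below.
    signs = [x > cut for x in series if x != cut]
    n1 = sum(signs)              # number of points above the median
    n2 = len(signs) - n1         # number of points below the median
    if n1 == 0 or n2 == 0:
        raise ValueError("need observations on both sides of the median")
    # A run ends each time the above/below code changes.
    runs = 1 + sum(a != b for a, b in zip(signs, signs[1:]))
    n = n1 + n2
    mean = 2.0 * n1 * n2 / n + 1.0
    var = 2.0 * n1 * n2 * (2.0 * n1 * n2 - n) / (n ** 2 * (n - 1.0))
    z = (runs - mean) / math.sqrt(var)
    p_value = math.erfc(abs(z) / math.sqrt(2.0))   # two-sided p-value
    return runs, z, p_value

# Hypothetical example: a strictly alternating series has too many runs to be random.
runs, z, p_value = runs_test([1.0, 2.0] * 10)
```

A p-value below 0.05 would lead to rejecting the randomness hypothesis at the significance level used in this study.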

The statistical analysis and mathematical modeling were performed in Minitab17, DTREG, and R software (version 4.0.5).

2.2.1. ARMA Models

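A discrete-time process is a sequence of real random variables, $(X_t;\ t \in \mathbb{Z})$.

A process $(X_t;\ t \in \mathbb{Z})$ is called stationary if it satisfies the following conditions:

$M(X_t^2) < \infty$ and $M(X_t) = \mu$ is invariant in time ($M$ denotes the expectation),

$\mathrm{Cov}(X_t, X_{t+h}) = \gamma(h)$ for all $t, h \in \mathbb{Z}$ (i.e., the covariance of $X_t$ and $X_{t+h}$ depends only on the lag $h$).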

An ARMA(p,q) is a model whose equation is

$$X_t = \sum_{i=1}^{p} \varphi_i X_{t-i} + \varepsilon_t + \sum_{j=1}^{q} \theta_j \varepsilon_{t-j},$$

where $(\varepsilon_t)$ are Gaussian, independent random variables with the same variance and the mean equal to zero.

The first p terms are autoregressive, and the last q are moving-average terms.

Given a data series, the model selection depends on whether the series is stationary. If a series is not stationary, stationarity can be reached by differencing the series d times.

ARMA(p,q) is a particular type of autoregressive integrated moving average process, ARIMA(p,d,q) [40]. The order of differencing, d, in ARIMA(p,d,q) processes is chosen so that the differenced series is stationary. If the series is stationary, then d = 0, and the model is of ARIMA(p,0,q) = ARMA(p,q) type [40].

The chart of the series autocorrelation function provides information for selecting an ARMA(p,q) model. If p > q, the values of the autocorrelation function belong to a curve formed by a mixture of exponentially decreasing and damped sinusoid functions.
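A short sketch of the two operations mentioned above, differencing of order d and the sample autocorrelation function, is given below. The function names and the simulated AR(2) series are illustrative assumptions; they are not the signal or the code used in the study.

```python
import random

def difference(series, d=1):
    """Apply first-order differencing d times."""
    out = list(series)
    for _ in range(d):
        out = [b - a for a, b in zip(out, out[1:])]
    return out

def sample_acf(series, max_lag):
    """Sample autocorrelations r_0..r_max_lag (biased estimator, r_0 = 1)."""
    n = len(series)
    mean = sum(series) / n
    dev = [v - mean for v in series]
    c0 = sum(v * v for v in dev)
    return [sum(dev[t] * dev[t + h] for t in range(n - h)) / c0
            for h in range(max_lag + 1)]

# Hypothetical stationary AR(2) series with complex characteristic roots:
# its theoretical ACF is a damped sinusoid.
rng = random.Random(0)
x = [0.0, 0.0]
for _ in range(500):
    x.append(1.5 * x[-1] - 0.75 * x[-2] + rng.gauss(0.0, 1.0))
acf = sample_acf(x, 20)
```

Plotting `acf` against the lag gives the kind of chart used to judge whether an ARMA model with p > q is a reasonable candidate.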

The model validation is done by applying the t-test to the model’s coefficients and testing the hypotheses on $(\varepsilon_t)$ (which should form a white noise). The selection of the best model among the ARMA competitors that fulfill the statistical tests on the coefficients and residuals $(\varepsilon_t)$ is made based on the Akaike (AIC) or Schwarz (SCH) criteria [40]. The lower the AIC (SCH) is, the better the model is.
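For reference, the two criteria are usually written as follows. This is the textbook form, not an expression taken from this study; the symbols $\hat{L}$ (maximized likelihood), $k$ (number of estimated parameters), and $n$ (number of observations) are notation introduced here.

```latex
\mathrm{AIC} = -2\ln\hat{L} + 2k, \qquad
\mathrm{SCH} = -2\ln\hat{L} + k\ln n
```

Both criteria penalize the number of parameters. Since $\ln n > 2$ for $n \geq 8$, the Schwarz criterion penalizes each additional parameter more heavily than AIC for series of that length or longer.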

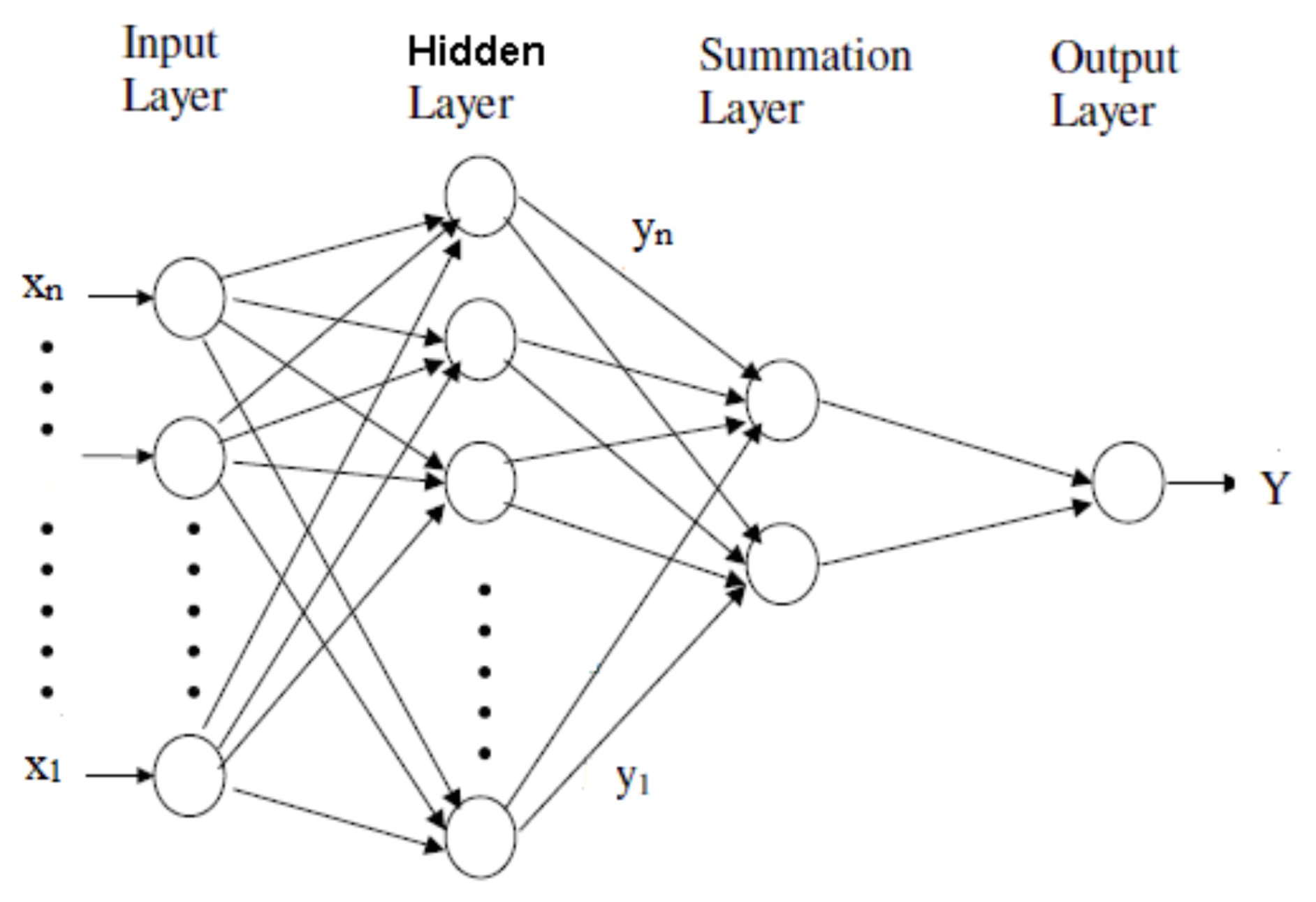

2.2.2. Generalized Regression Neural Networks

The Generalized Regression Neural Network belongs to the group of probabilistic neural networks. The GRNN architecture is presented in Figure 3. It contains four layers: Input, Hidden, Summation, and Output [29]. Given its ability to capture nonlinearities, its learning without backpropagation, and its use of nonparametric regression, GRNN has been widely utilized for solving classification, regression, and forecast problems that involve continuous variables [29,30].

The input layer is formed by the vector of the recorded values, X (Figure 3); in this study, these are the signal’s values.

The neurons in the hidden layer apply a kernel function to the distances between the training data and the prediction point. The σ values are used for estimating the influence radius. The best σ should be determined when training the network, as it controls the spread of the kernel function [29]. The most common approach for finding the optimum σ is minimizing the mean squared error (MSE). This study employed the conjugate gradient algorithm to estimate the best σ for the entire model.

The RBF and reciprocal kernels have been utilized in this research. As no significant improvement was noticed in the second case, we report the results obtained using the RBF kernel.

After training, the number of neurons in the hidden layer is the same as the number of training samples involved in the modeling [29]. The hidden layer also stores all the variables’ values.

The summation layer consists of the D- and S-summation neurons, which collect the information from all the neurons in the previous layer. Both neurons sum the values from the hidden layer: the D-summation neuron computes an unweighted sum (all connection weights equal to 1), while the S-summation neuron computes a sum weighted by the target values of the training samples [29].

The output layer divides the value of the S-summation neuron by that of the D-summation neuron, providing the most probable value for the dependent variable, Y.

An optimization process may also be performed to remove unnecessary neurons. In this case, the optimization criterion was error minimization.

To perform the modeling, the series was split into training and test sets in the ratios 80:20 and 70:30. As the best results were obtained using the first partition, we present this result. The number of iterations was fixed to 5000 (maximum) and 1000 (without improvement). The values of σ were searched in the interval 0.0001–10.
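The four layers described above can be sketched as a kernel-weighted average. The code below is a minimal illustration with a Gaussian (RBF) kernel and a single σ for the whole model; it is not the DTREG implementation. In particular, the leave-one-out grid search in `pick_sigma` is a simple stand-in for the conjugate gradient search used in the study, and building the inputs from lagged values (`make_lagged`) is an assumption about how the regressors were formed.

```python
import math

def grnn_predict(train_x, train_y, query, sigma):
    """Predict the target at `query` from the stored training samples."""
    # Hidden layer: one neuron per training sample, Gaussian kernel of the distance.
    weights = []
    for x in train_x:
        dist2 = sum((a - b) ** 2 for a, b in zip(x, query))
        weights.append(math.exp(-dist2 / (2.0 * sigma ** 2)))
    # Summation layer: D-neuron (unweighted sum) and S-neuron (sum weighted by targets).
    d_sum = sum(weights)
    s_sum = sum(w * y for w, y in zip(weights, train_y))
    if d_sum == 0.0:
        raise ZeroDivisionError("sigma too small: all kernel values underflowed")
    # Output layer: ratio of the two summation neurons.
    return s_sum / d_sum

def make_lagged(series, lag):
    """Turn a series into (input vector of `lag` past values, next value) pairs."""
    xs = [list(series[t - lag:t]) for t in range(lag, len(series))]
    ys = [series[t] for t in range(lag, len(series))]
    return xs, ys

def pick_sigma(train_x, train_y, candidates):
    """Return (sigma, mse) with the smallest leave-one-out MSE among the candidates."""
    best = None
    for sigma in candidates:
        sq_err = 0.0
        for i in range(len(train_x)):
            rest_x = train_x[:i] + train_x[i + 1:]
            rest_y = train_y[:i] + train_y[i + 1:]
            sq_err += (grnn_predict(rest_x, rest_y, train_x[i], sigma) - train_y[i]) ** 2
        mse = sq_err / len(train_x)
        if best is None or mse < best[1]:
            best = (sigma, mse)
    return best
```

Because every training sample becomes a hidden neuron, prediction cost grows with the size of the training set, which is consistent with the remark that the hidden layer stores all the variables’ values.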

2.2.3. Wavelet-ARIMA Model

A discrete wavelet transform (DWT) is used to decompose a signal into several sets; each of them is a time series of coefficients that describes the evolution of the signal in time in the corresponding frequency band [41].

The discrete wavelet transform, usually utilized for modeling purposes, is not invariant to translation. To overcome this drawback, the non-decimated wavelet transform (NDWT) may be employed [42].

Considering the signal X = (X1, …, XN), its decomposition by the à trous wavelet transform results in

$$X_t = c_{J,t} + \sum_{j=1}^{J} w_{j,t},$$

where the first term represents a smooth version of the initial signal, and the term under the sum is the signal’s ‘detail’ at the scale $2^{-j}$ [43,44].

In this article, we used the Wavelet-ARIMA algorithm proposed in [43] and developed by Aminghafari and Poggi [45].

The non-decimated Haar algorithm employs the filter h = (1/2, 1/2), leading to the following equation for the reconstruction of the value $X_{N+1}$:

$$X_{N+1} = c_{J,N+1} + \sum_{j=1}^{J} w_{j,N+1},$$

where $c_{J,N+1}$ and $w_{j,N+1}$ are the approximation and detail coefficients in the NDWT.

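Thus, for predicting $X_{N+1}$, it is necessary to estimate the non-decimated wavelet (NDW) coefficients $c_{J,N+1}$ and $w_{j,N+1}$, using the equations [45]

$$\hat{w}_{j,N+1} = \sum_{k=1}^{A_j} \hat{a}_{j,k}\, w_{j,N-2^{j}(k-1)}, \qquad \hat{c}_{J,N+1} = \sum_{k=1}^{A_{J+1}} \hat{a}_{J+1,k}\, c_{J,N-2^{J}(k-1)}.$$

Therefore, the prediction equation will be written as

$$\hat{X}_{N+1} = D_N^{T} \hat{\alpha}, \tag{5}$$

where T signifies the transposition, $D_N$ is formed by the dyadic lagged coefficients contained in the vectors $w_j$ and $c_J$, $\hat{\alpha}$ is the vector of the unknown parameters, determined as

$$\hat{\alpha} = \arg\min_{\alpha} \sum_{t=N-M}^{N-1} \left(X_{t+1} - D_t^{T} \alpha\right)^2,$$

and M is a fixed integer.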

Two other vectors are involved in finding the solution of (5):

c, which contains the coefficients ,

w, which contains the weights in the linear combination of the actual versus the previous variables’ values.

Therefore, the procedure has the following stages [45].

Perform the NDWT using Haar wavelets.

For a sequence from a time series (X1, ..., XN) at a level J, the output will be formed of (J + 1) vectors of size N.

Build Equation (5).

For this aim, the maximum number of lagged predictor variables is set at each decomposition level. Denote them by ($A_1$, …, $A_{J+1}$). $A_j$ (j = 1, …, J) is defined to be “the order of the AR process fitted on the dyadically downsampled version of Wj (or CJ) starting from the last coefficient”.

Estimate Equation (5) utilizing the stepwise regression that links Xt and Dt−1.

Compute the prediction using Equation (5).
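The first stage of the procedure, the non-decimated Haar decomposition with the filter h = (1/2, 1/2), can be sketched as follows. The recursion averages each smooth coefficient with the one 2^j steps earlier and stores the difference as the detail. The function name and the boundary rule (early samples reuse the first available value) are assumptions made for this illustration; the regression stages are not shown.

```python
def haar_a_trous(signal, levels):
    """Non-decimated Haar transform.

    Returns (details, smooth): details[j - 1] holds the detail series at level j,
    smooth holds the final approximation; every series has len(signal) entries.
    """
    c = [float(v) for v in signal]
    details = []
    for j in range(levels):
        step = 2 ** j
        # Smooth at the next level: mean of the current value and the one `step`
        # samples earlier. Indices before the start are clamped to 0 (assumed rule).
        nxt = [0.5 * (c[t] + c[max(t - step, 0)]) for t in range(len(c))]
        # Detail at this level: what the smoothing removed.
        details.append([a - b for a, b in zip(c, nxt)])
        c = nxt
    return details, c

# Hypothetical input; J = 3 gives J + 1 = 4 vectors of size N.
x = [float(i % 5) for i in range(16)]
details, smooth = haar_a_trous(x, 3)
rebuilt = [smooth[t] + sum(d[t] for d in details) for t in range(len(x))]
```

Summing the smooth series and all detail series recovers the input up to rounding, which is the additive reconstruction property the prediction equation relies on.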

2.2.4. Wavelet-ANN Model

A wavelet-artificial neural network (WANN) is obtained by combining a feed-forward neural network with the wavelet model. Given that WANNs permit an efficient selection of the network input, can capture nonlinearities, and converge to the global minimum of the objective function, they have been successfully utilized in various applications in atmospheric sciences, hydrology, and economics for short- and long-term forecasting [46,47,48,49,50,51,52].

In this study, a WANN with the following layers has been utilized (Figure 4) [31].

Input layer, where the regressors’ values (lagged variables) are introduced.

Hidden layer, formed by wavelons, instead of neurons. The variables coming from the previous layer are firstly transformed and decomposed in this layer. Then, the network processes separately each part, utilizing as activation functions (logistic, in this case) elements of an orthonormal wavelet basis.

In this study, the maximal overlap discrete wavelet transform (MODWT) and the Morlet function have been utilized. For details, see [31].

Output, which estimates the recorded (target) values.

The number of parameters in the WANN from Figure 4 is pq + 2q + 1, where p is the lag and q is the number of nodes in the hidden layer.

For running the algorithm, the maximum decomposition level was determined to be 6. The number of lags was varied from 1 to 12. The best result, reported here, was obtained with lag = 5.
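The count pq + 2q + 1 can be checked with a small worked example. The breakdown into weights and biases below is the usual one for a network with a single hidden layer and one output, and is an assumption about how the formula arises; the values of q are illustrative only, since the number of hidden nodes is not stated here.

```python
def wann_parameter_count(p, q):
    """Number of parameters for p lagged inputs, q hidden nodes, and one output."""
    input_to_hidden = p * q   # one weight per (input, hidden node) pair
    hidden_biases = q         # one bias per hidden node (assumed)
    hidden_to_output = q      # one weight per hidden node
    output_bias = 1           # single output bias (assumed)
    return input_to_hidden + hidden_biases + hidden_to_output + output_bias

# Lag p = 5 as in the best reported result; the q values are hypothetical.
counts = {q: wann_parameter_count(5, q) for q in (2, 4, 8)}
```

For a fixed lag, the count grows linearly with q, by p + 2 parameters per additional hidden node.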

3. Results

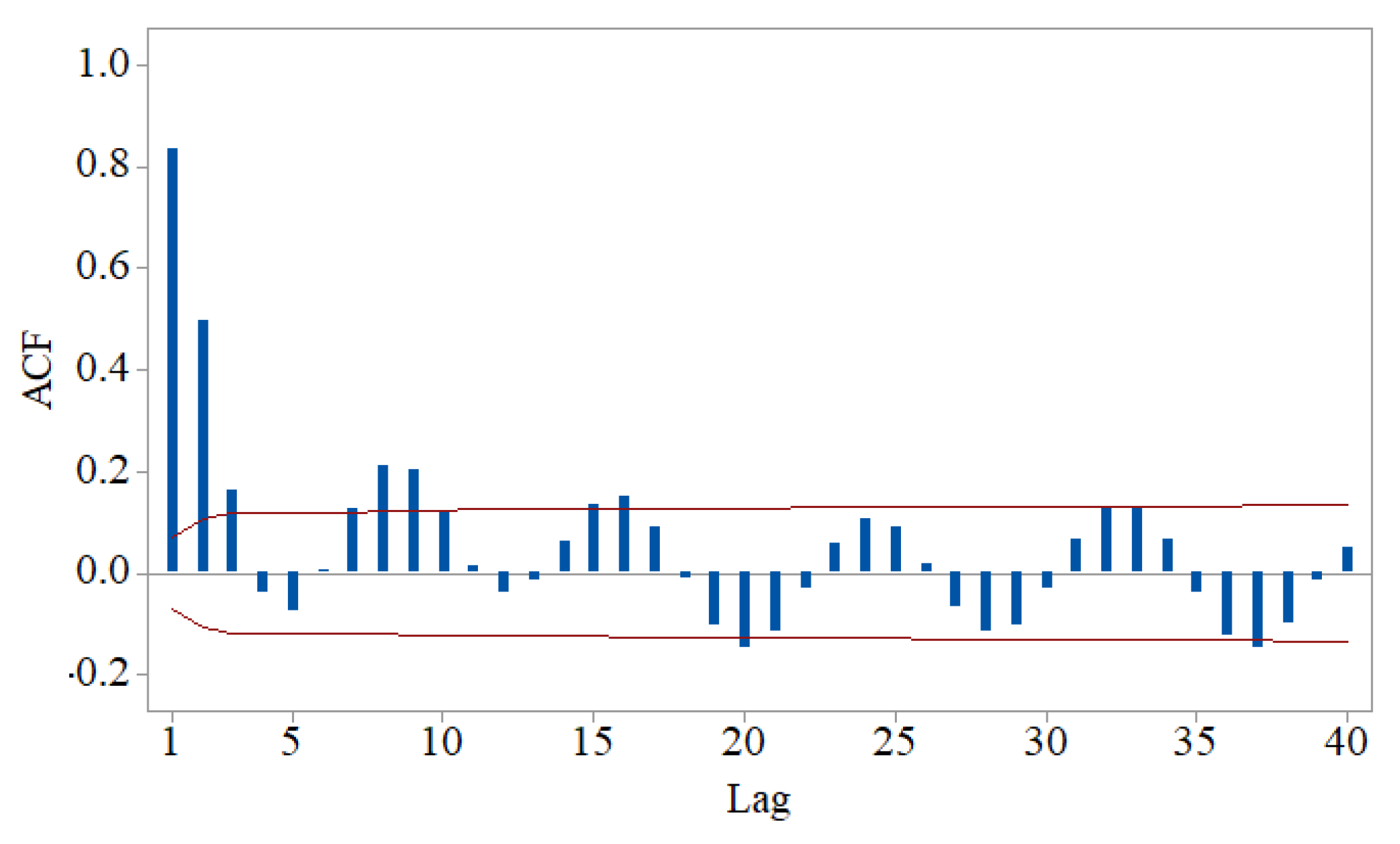

The Shapiro–Wilk and the runs tests rejected the normality and randomness hypotheses, respectively. The KPSS and Levene tests did not reject the stationarity and homoskedasticity hypotheses. The autocorrelation function (ACF) chart from Figure 5 confirms the signal’s autocorrelation.

As the stationarity hypothesis cannot be rejected, and the ACF has a decreasing damped sinusoid form, one may search for an ARMA(p,q) model with p > q. The best model found by applying the Box–Jenkins methodology was of ARMA(3,1) type, with the coefficients (standard errors, respectively):

Therefore, the model’s equation is

After applying the Student t-test, it was found that the coefficients are significant at the 0.001 level. The Box–Ljung test did not detect autocorrelation in the residuals. The Shapiro–Wilk and Levene tests failed to reject the null hypotheses of normality and homoskedasticity, respectively, for the residual series. Thus, model (7) is correct from a statistical viewpoint.
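The residual check with the Box–Ljung test can be illustrated by computing its statistic directly. The sketch below returns only the statistic Q; the function name and the alternating test series are assumptions for illustration, and the number of lags used in the study is not reported here.

```python
def ljung_box_q(residuals, max_lag):
    """Ljung-Box statistic Q = n (n + 2) * sum_{k=1..h} r_k^2 / (n - k)."""
    n = len(residuals)
    mean = sum(residuals) / n
    dev = [e - mean for e in residuals]
    c0 = sum(v * v for v in dev)
    total = 0.0
    for k in range(1, max_lag + 1):
        # Sample autocorrelation of the residuals at lag k.
        r_k = sum(dev[t] * dev[t + k] for t in range(n - k)) / c0
        total += r_k ** 2 / (n - k)
    return n * (n + 2) * total

# Hypothetical residuals that are clearly autocorrelated (strict alternation).
q_stat = ljung_box_q([1.0, -1.0] * 50, 10)
```

Under the hypothesis of uncorrelated residuals, Q is compared with a chi-square quantile with h - p - q degrees of freedom for an ARMA(p,q) fit. For example, with h = 10 lags (an assumed value) and an ARMA(3,1) model there are 6 degrees of freedom, and the 5% critical value is 12.592.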

The model was used to predict 48 values. These values are plotted in Figure 6 (blue), after the recorded ones (black), accompanied by the confidence intervals at 0.95 and 0.99 (in grey in Figure 6). The patterns of the signal and forecast are not similar, so the model should be improved. Therefore, the GRNN was built as described in Section 2.2.2.

Figure 7 contains the plot of the values computed by the algorithm vs. those recorded. Note that the point cloud lies along the green line, which represents the ideal case in which the recorded and computed values coincide, indicating a good fit.

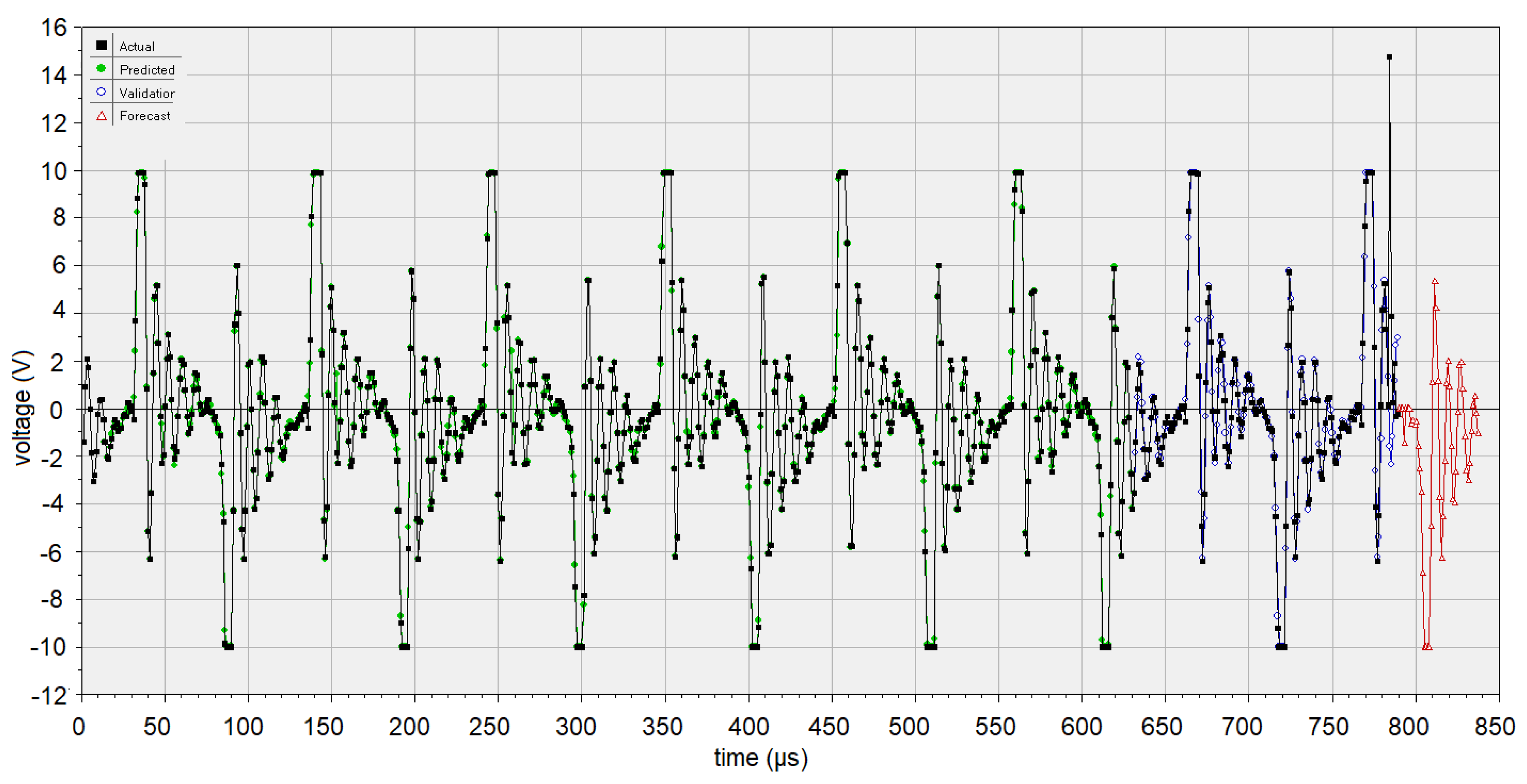

Using the trained network, a forecast of 48 (unknown) values of the data series was produced. The pattern of the subseries formed by these new values is similar to that of the initial signal (Figure 8, the red curve), confirming the model performances (discussed in the next section).

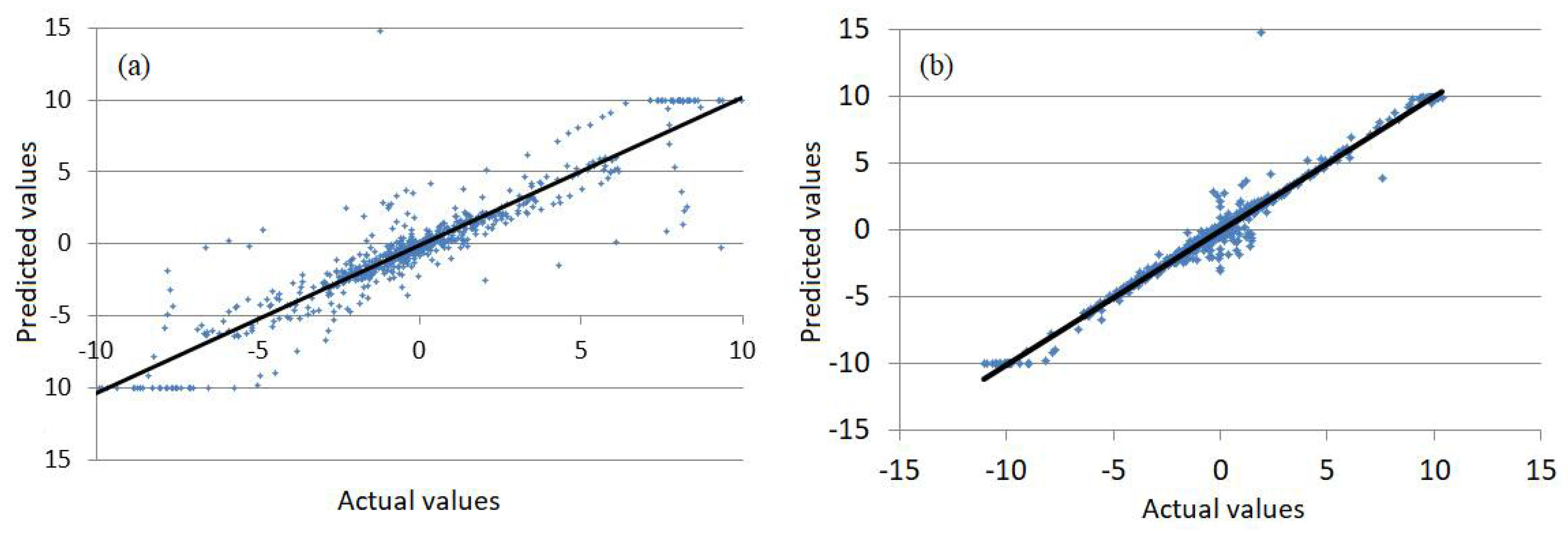

The charts of the recorded and computed values of the signal obtained using the hybrid algorithms are presented in Figure 9. Comparing Figure 9a,b, one may remark that the Wavelet-ARIMA model generally underestimates the maximum recorded values. The Wavelet-ANN model does not fit the first ten series values well but fits the rest of the data series better.

Figure 10 emphasizes the better forecast quality of the Wavelet-ANN model. It contains the chart of the computed values plotted against the recorded values. The points with the coordinates (actual, predicted) are situated along the first bisector of the coordinate axes in both Figure 10a,b. Still, they are more dispersed in Figure 10a, corresponding to the Wavelet-ARIMA model, than in Figure 10b, corresponding to the Wavelet-ANN model.

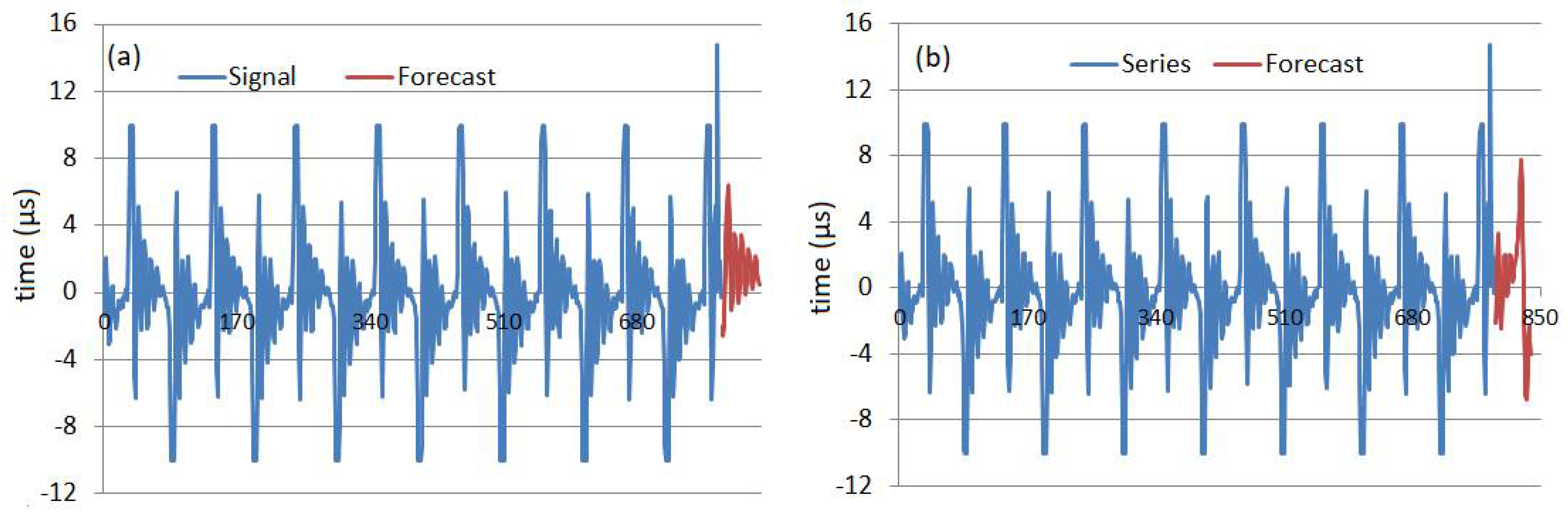

The charts of the signal and the forecast of the next 48 values obtained using the Wavelet-ARIMA and Wavelet-ANN are presented in Figure 11.

The Wavelet-ARIMA forecast series (in red, Figure 11a) is a decreasing sinusoid. The Wavelet-ANN forecast series (in red, Figure 11b) and the signal have the same shape. A comparison of the forecasts obtained by the GRNN and Wavelet-ARIMA models shows the same shapes and the highest amplitude for the last signal.

4. Discussion

To compare the models’ performances, we analyze the quality indicators.

For the ARMA(3,1) model, the mean squared error (MSE) = 2.4278 and the mean absolute error (MAE) = 0.7747. The mean absolute percentage error (MAPE) could not be computed because some recorded values were equal to zero, and the formula would require division by zero.
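The indicators used in this section can be computed as in the sketch below, which also shows why MAPE is unavailable when a recorded value is zero. The definitions are the common textbook ones (R2 as the percentage of variance explained, rap as the Pearson correlation between actual and predicted values) and are assumed here; the exact formulas implemented in the software used for the study may differ.

```python
import math

def forecast_metrics(actual, predicted):
    """Return MSE, MAE, MAPE (%), R2 (%), and r_ap for two equal-length series."""
    n = len(actual)
    errors = [a - p for a, p in zip(actual, predicted)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    # MAPE divides by the actual values, so it is undefined if any of them is zero.
    if any(a == 0 for a in actual):
        mape = None
    else:
        mape = 100.0 * sum(abs(e / a) for e, a in zip(errors, actual)) / n
    mean_a = sum(actual) / n
    mean_p = sum(predicted) / n
    ss_a = sum((a - mean_a) ** 2 for a in actual)
    ss_p = sum((p - mean_p) ** 2 for p in predicted)
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    r2 = 100.0 * (1.0 - sum(e * e for e in errors) / ss_a)  # percent of variance explained
    r_ap = cov / math.sqrt(ss_a * ss_p)                     # Pearson correlation
    return {"MSE": mse, "MAE": mae, "MAPE": mape, "R2": r2, "r_ap": r_ap}

# Hypothetical values, not data from the study.
with_zero = forecast_metrics([0.0, 2.0], [1.0, 2.0])        # MAPE is None here
without_zero = forecast_metrics([1.0, 2.0, 4.0], [1.0, 3.0, 3.0])
```

Because each relative error is scaled by the recorded value, recorded values close to zero can inflate MAPE even when MAE and MSE are small.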

The indicators of the GRNN model’s quality are listed in Table 1, together with their values.

On the training set, the proportion of variance explained by the model (R2) and the correlation between the actual and predicted values (rap) are very high, the mean squared error (MSE) and mean absolute error (MAE) are small, while the mean absolute percentage error (MAPE) is 18.43%, indicating a good behavior of the algorithm on the training set. On the test set, the values of R2 and rap are smaller than on the training set, and MAE and MSE remain low. A significant increase in MAPE is noticed (to 71.577%).

After using the hybrid approaches, the values computed for the goodness-of-fit indicators on the test sets are:

for the Wavelet-ARIMA: R2 = 82.4829%, MAE = 0.858, MSE = 2.656, MAPE (%) = 98.81, rap = 0.907;

for the Wavelet-ANN: R2 = 96.74%, MAE = 0.300, MSE = 0.489, MAPE (%) = 42.76, rap = 0.984.

Comparing the performances of the artificial intelligence-based models on the test sets (relevant for how well the model learns the data and can use it), the best model is WANN, no matter what indicator is considered. This is confirmed by comparing Figure 8 and Figure 11, which show the forecast for 48 μs.

ARIMA models have been widely used in modeling real-life problems given their ability to capture the linear dynamics of phenomena. Still, as many processes present nonlinear patterns, the use of ARIMA is not always the best choice (given that it assumes the existence of linear correlation in the data series) [31]. The main advantages of ANNs are their flexibility in modeling nonlinear features of data series and the absence of the constraint of specifying the model form a priori [52]. The methods relying on the wavelet transform can describe the signal’s multiscale features well [51]. Combining these algorithms benefits from all their properties.

The results of this study are consistent with the findings of other researchers who emphasized that the use of hybrid approaches may improve the models’ performances [31,32,51,52,53].

5. Conclusions

The statistical analysis of the electrical signal induced by ultrasound cavitation in seawater showed that the signal is not random but is stationary and homoskedastic. Based on these characteristics, an ARMA model has been proposed and validated from a statistical viewpoint. As the ARMA model did not provide a good forecast of the following 48 values, alternative models have been proposed and compared to find the best one. The first one, the GRNN model, learned the data well, gave good results on the test set, and was successfully employed for forecasting the next (unknown) 48 values of the series. The last two models were of the hybrid type.

The Wavelet-ARIMA model improved the forecast by comparison with the ARMA one; still, R2 = 82.4829% and MAPE = 98.81% (which is high). Considering that the lower the MAPE is, the better the model is, the best model was the Wavelet-ANN one (with R2 = 96.74% and MAPE = 42.76%).

This study provided, for the first time, such modeling and comparison for the signal collected in seawater in the cavitation field, using the experimental setup built by our team. Four alternative models were built, the hybrid ones being able to provide good forecasts. Similar analyses will be performed to verify whether the last approach is the best one for modeling the signals collected in the same or different liquids (diesel, tap water, and transformer oil) at other powers of the ultrasound generator. These liquids were selected because our research team has published some preliminary results on them, and good models have not yet been obtained for some of them. The most important feature that recommends this hybrid model is its flexibility to capture the series’ nonlinearities. This procedure has no limitation related to the liquid type.

Author Contributions

Conceptualization, A.B.; methodology, A.B.; software, A.B. and C.Ș.D.; validation, A.B. and C.Ș.D.; formal analysis, A.B. and C.Ș.D.; investigation, A.B. and C.Ș.D.; data curation, A.B.; writing—original draft preparation, A.B. and C.Ș.D.; writing—review and editing, A.B. and C.Ș.D.; supervision, A.B.; project administration, A.B. All authors have read and agreed to the published version of the manuscript.

Funding

The APC was funded by Transylvania University of Brașov, Romania.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be available on request from the authors.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Flynn, H.G. Physics of acoustic cavitation in liquids. In Physical Acoustics; Mason, W.P., Ed.; Academic Press: New York, NY, USA, 1963; Volume 1, Part B; pp. 57–172.

2. Bai, L.; Yan, J.; Zeng, Z.; Ma, Y. Cavitation in thin liquid layer: A review. Ultrason. Sonochem. 2020, 66, 105092.

3. Hadi, N.A.H.; Ahmad, A. Experimental study of the characteristics of acoustic cavitation bubbles under the influence of ultrasonic wave. IOP Conf. Ser. Mater. Sci. Eng. 2020, 808, 012042.

4. Ferrari, A. Fluid dynamics of acoustic and hydrodynamic cavitation in hydraulic power systems. Proc. R. Soc. A Math. Phys. Eng. Sci. 2017, 473, 20160345.

5. Nguyen, T.; Asakura, Y.; Koda, S.; Yasuda, K. Dependence of cavitation, chemical effect, and mechanical effect thresholds on ultrasonic frequency. Ultrason. Sonochem. 2017, 39, 301–306.

6. Chahine, G.L.; Franc, J.P.; Karimi, A. Cavitation and cavitation erosion. In Advanced Experimental and Numerical Techniques for Cavitation Erosion Prediction. Fluid Mechanics and Its Applications; Kim, K.H., Chahine, G., Franc, J.P., Karimi, A., Eds.; Springer: Dordrecht, The Netherlands, 2014; Volume 106, pp. 3–20.

7. Lin, C.; Zhao, Q.; Zhao, X.; Yang, Y. Cavitation erosion of metallic materials. Int. J. Geogr. Environ. 2018, 4, 1–8.

8. Wharton, J.A.; Wood, R.J.K. Influence of flow conditions on the corrosion of AISI 304L stainless steel. Wear 2004, 256, 525–536.

9. Vanhille, C.; Campos-Pozuelo, C. Acoustic cavitation mechanism: A nonlinear model. Ultrason. Sonochem. 2012, 19, 217–220.

10. Fortes-Patella, R.; Choffat, T.; Reboud, J.L.; Archer, A. Mass loss simulation in cavitation erosion: Fatigue criterion approach. Wear 2013, 300, 205–215.

11. Petkovsek, M.; Dular, M. Simultaneous observation of cavitation structures and cavitation erosion. Wear 2013, 300, 55–64.

12. Thiemann, A.; Holsteyns, F.; Cairós, C.; Mettin, R. Sonoluminescence and dynamics of cavitation bubble populations in sulfuric acid. Ultrason. Sonochem. 2017, 34, 663–676.

13. Ashokkumar, M. The characterization of acoustic cavitation bubbles – An overview. Ultrason. Sonochem. 2011, 18, 864.

14. Hauptmann, M.; Struyf, H.; Mertens, P.; Heyns, M.; De Gendt, S.; Glorieux, C.; Brems, S. Towards an understanding and control of cavitation activity in 1MHz ultrasound fields. Ultrason. Sonochem. 2013, 20, 77–88.

15. Menzl, G.; Gonzalez, M.A.; Geiger, P.; Caupin, F.; Abascal, J.F.; Valeriani, C.; Dellago, C. Cavitation in water under tension. Proc. Nat. Acad. Sci. USA 2016, 113, 13582–13587.

16. Bărbulescu, A.; Mârza, V. Electrical effect induced at the boundary of an acoustic cavitation zone. Acta Phys. Pol. B. 2006, 37, 507–518.

17. Song, J.H.; Johansen, K.; Prentice, P. An analysis of the acoustic cavitation noise spectrum: The role of periodic shock waves. J. Acoust. Soc. Am. 2016, 140, 2494.

18. Li, B.; Gu, Y.; Chen, M. An experimental study on the cavitation of water with dissolved gases. Exp. Fluids 2017, 58, 164.

19. Bărbulescu, A.; Dumitriu, C.S. ARIMA and Wavelet-ARIMA models for the signal produced by ultrasound in diesel. In Proceedings of the 25th ICSTCC 2021, Iasi, Romania, 20–23 October 2021.

20. Bărbulescu, A.; Mârza, V.; Dumitriu, C.S. Installation and Method for Measuring and Determining the Effects Produced by Cavitation in Ultrasound Field in Stationary and Circulating Media. Romanian Patent No. RO 123086-B1, 30 April 2010.

21. Dumitriu, C.Ș.; Dragomir, F. Modeling the signals collected in cavitation field by stochastic and Artificial intelligence methods. In Proceedings of the 13th International Conference on Electronics, Computers and Artificial Intelligence (ECAI), Pitești, Romania, 1–3 July 2021; pp. 1–4.

22. Dumitriu, C.Ș.; Barbulescu, A. Studies on the Copper Based Alloys Used in Naval Constructions-Modeling the Mass Loss in Different Media; Sitech: Craiova, Romania, 2007. (In Romanian)

23. Hannan, S.; Manza, R.; Ramteke, R. Generalized regression neural network and radial basis function for heart disease diagnosis. Int. J. Comput. Appl. 2010, 7, 7–13.

24. Niu, D.; Liang, Y.; Hong, W.-C. Wind speed forecasting based on EMD and GRNN optimized by FOA. Energies 2017, 10, 2001.

25. Tkachenko, R.; Izonin, I.; Kryvinska, N.; Dronyuk, I.; Zub, K. An Approach towards increasing prediction accuracy for the recovery of missing IoT data based on the GRNN-SGTM ensemble. Sensors 2020, 20, 2625.

26. Yu, P.; Lu, Y.; Wei, L.; Lomg, W.; Su, X. Application of general regression neural network (GRNN) on predicting yield of Cassava. Southwest China J. Agr. Sci. 2009, 22, 1709–1713.

27. Kişi, Ö. Generalized regression neural networks for evapotranspiration modeling. Hydrol. Sci. J. 2006, 51, 1092–1105.

28. Lin, W.-Y.; Chu, Y.-D.; Liao, D.-Y. Using artificial intelligence technology for corporate financial diagnostics. Int. J. Bus. Financ. Manage. Res. 2018, 6, 7–21.

29. Specht, D.F. A General Regression Neural Network. IEEE T. Neural Netw. 1991, 2, 568–576.

30. Zaknich, A. Neural Networks for Intelligent Signal Processing; World Scientific: Hackensack, NJ, USA, 2003.

31. Anjoy, P.; Paul, R.K. Comparative performance of wavelet-based neural network approaches. Neural Comput. Appl. 2019, 31, 3443–3453.

32. Fard, A.K.; Akbari-Zadehb, M.R. A hybrid method based on wavelet, ANN and ARIMA model for short-term load forecasting. J. Exp. Theor. Artif. Intell. 2014, 26, 167–182.

33. Khandelwal, I.; Adhikari, R.; Verma, G. Time Series Forecasting using Hybrid ARIMA and ANN Models based on DWT Decomposition. Procedia Comp. Sci. 2015, 173–179.

34. Lopes, R.L.F.; Simone, G.C.; Gomes, H.S.; Lima, V.D.; Cavalcante, G.P.S. Application of hybrid ARIMA and artificial neural network modelling for electromagnetic propagation: An alternative to the least squares method and ITU recommendation P.1546–5 for Amazon urbanized cities. Int. J. Antennas Propag. 2020, 2020, 8494185.

35. Wang, L.; Zou, H.; Su, J.; Li, L.; Chaudhry, S. An ARIMA-ANN hybrid model for time series forecasting. Syst. Res. Behav. Sci. 2013, 30, 244–259.

36. Shapiro, S.S.; Wilk, M.B. An analysis of variance test for normality (complete samples). Biometrika 1965, 52, 591–611.

37. Brown, M.B.; Forsythe, A.B. Robust tests for the equality of variances. J. Am. Stat. Assoc. 1974, 69, 364–367.

38. Bradley, J.V. Distribution-Free Statistical Tests, 1st ed.; Prentice-Hall: Hoboken, NJ, USA, 1968.

39. Kwiatkowski, D.; Phillips, P.C.B.; Schmidt, P.; Shin, Y. Testing the null hypothesis of stationarity against the alternative of a unit root. J. Econom. 1992, 54, 159–178.

40. Brockwell, P.J.; Davis, R.A. Time Series: Theory and Methods, 2nd ed.; Springer: New York, NY, USA, 2009.

41. Hosseinzadeh, M. 4 – Robust control applications in biomedical engineering: Control of depth of hypnosis. In Control Applications for Biomedical Engineering Systems; Azar, A.T., Ed.; Academic Press: Cambridge, MA, USA, 2020; pp. 89–125.

42. Percival, D.B.; Walden, A.T. Wavelet Methods for Time Series Analysis; Cambridge University Press: Cambridge, UK, 2000.

43. Renaud, O.; Starck, J.-L.; Murtagh, F. Prediction based on a multiscale decomposition. Int. J. Wavelets Multiresolution Inf. Processing 2003, 1, 217–232.

44. Starck, J.L.; Murtagh, F.; Bijaoui, A. Image Processing and Data Analysis: The Multiscale Approach; Cambridge University Press: Cambridge, UK, 1998.

45. Aminghafari, M.; Poggi, J.-M. Forecasting time series using wavelets. Int. J. Wavelets Multiresolution Inf. Processing 2007, 05, 709–724.

46. Alexandridis, A.K.; Zapranis, A.D. Wavelet neural networks: A practical guide. Neural Netw. 2013, 42, 1–27.

47. Paul, R.K.; Prajneshu, G.H. Wavelet frequency domain approach for modelling and forecasting of Indian monsoon rainfall time-series data. J. Indian Soc. Agric. Stat. 2013, 67, 319–327.

48. Diaz-Robles, L.A.; Ortega, J.C.; Fu, J.S.; Reed, G.D.; Chow, J.C.; Watson, J.G.; Moncada-Herrera, J.A. A hybrid ARIMA and artificial neural networks model to forecast particulate matter in urban areas: The case of Temuco, Chile. Atmos Environ. 2008, 42, 8331–8340.

49. Benaouda, D.; Murtagh, G.; Starck, J.-L.; Renaud, O. Wavelet-based nonlinear multiscale decomposition model for electricity load forecasting. Neurocomputing 2006, 70, 139–154.

50. Chen, Y.; Yang, B.; Dong, J. Time-series prediction using a local linear wavelet neural network. Neurocomputing 2006, 69, 449–465.

51. Solgi, A.; Nourani, V.; Pourhaghi, A. Forecasting daily precipitation using hybrid model of Wavelet-Artificial neural network and comparison with adaptive neurofuzzy inference system (Case Study: Verayneh Station, Nahavand). Adv. Civil Eng. 2014, 2014, 279368.

52. Zhang, G.P. Time series forecasting using a hybrid ARIMA and neural network model. Neurocomputing 2003, 50, 159–175.

53. Nourani, V.; Komasi, M.; Mano, A. A multivariate ANN-wavelet approach for rainfall-runoff modeling. Water Resour. Manag. 2009, 23, 2877–2894.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).