Digital Video Tampering Detection and Localization: Review, Representations, Challenges and Algorithm

Abstract

1. Introduction

Organization of This Study

2. Distinction from Other Surveys

| Major Focus | References | Conference/Journal | Publisher | Publication Year | Citations |
|---|---|---|---|---|---|
| Image forgery detection with partial focus on video forgery detection | [4] | Journal | ACM | 2011 | 305 |
| | [11] | Journal | Others | 2012 | 277 |
| | [10] | Journal | Elsevier | 2016 | 37 |
| Video forgery detection | [13] | Conference | - | 2012 | 280 |
| | [12] | Journal | Others | 2013 | 7 |
| | [24] | Journal | Others | 2013 | 3 |
| | [14] | Conference | IEEE | 2014 | 38 |
| | [15] | Journal | Others | 2015 | 15 |
| | [16] | Journal | Others | 2015 | 32 |
| | [9] | Journal | Elsevier | 2016 | 85 |
| | [17] | Conference | - | 2016 | 9 |
| | [18] | Conference | - | 2017 | 3 |
| | [19] | Journal | Others | 2017 | 11 |
| | [8] | Journal | Springer | 2018 | 54 |
| | [21] | Journal | Elsevier | 2019 | 16 |
| | [20] | Conference | - | 2019 | 10 |
| | [22] | Journal | Others | 2020 | 6 |
| | [25] | Journal | Springer | 2020 | 2 |
| | [23] | Journal | Springer | 2021 | 2 |

Contribution of This Work

- Almost all papers published to date in the domain of video forgery/tampering are considered, giving an overall picture of the research contributions in the field.

- To our knowledge, this is the first systematic and comprehensive survey that screens the literature to identify the most substantial research contributions in the domain.

- The survey is organized by the proposed methodologies, which eases comparison of their reported performance and selection of the most suitable technique.

- This review will help new researchers understand the issues and challenges faced by the community in this domain. Moreover, it analyzes the research gaps found in the literature, which will help future researchers identify and explore new avenues in video forensics.

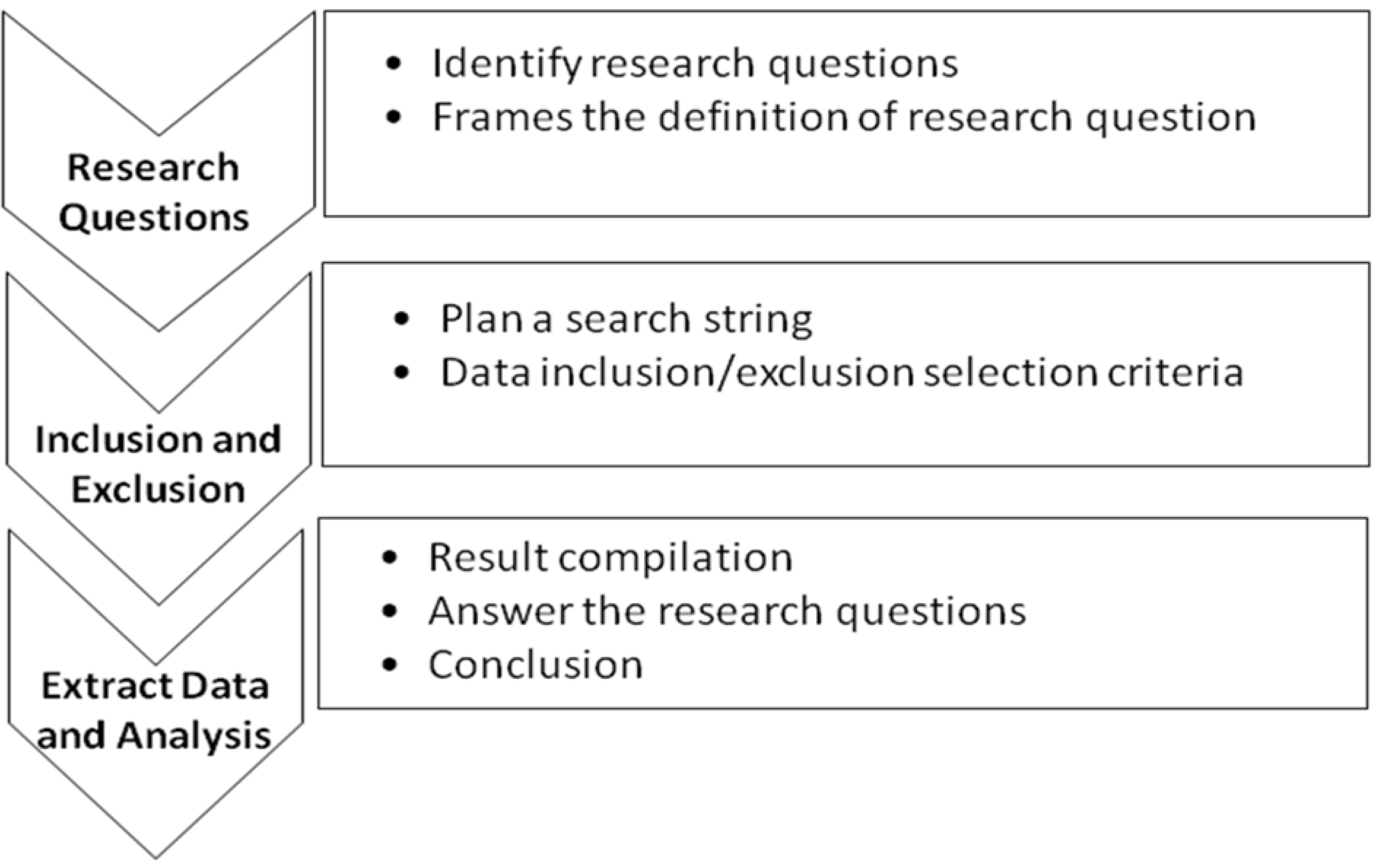

3. Survey Protocol

3.1. Research Questions

- Q1. What are the various types of video tampering?

- Q2. What are the various techniques for video tampering detection available in the literature?

- Q3. What are the pros and cons of existing techniques?

- Q4. What are the challenges faced by the researchers?

- Q5. What are the evaluation measures and datasets used to evaluate video tampering detection and localization?

3.2. Search Strategy

3.3. Research Inclusion/Exclusion Criteria

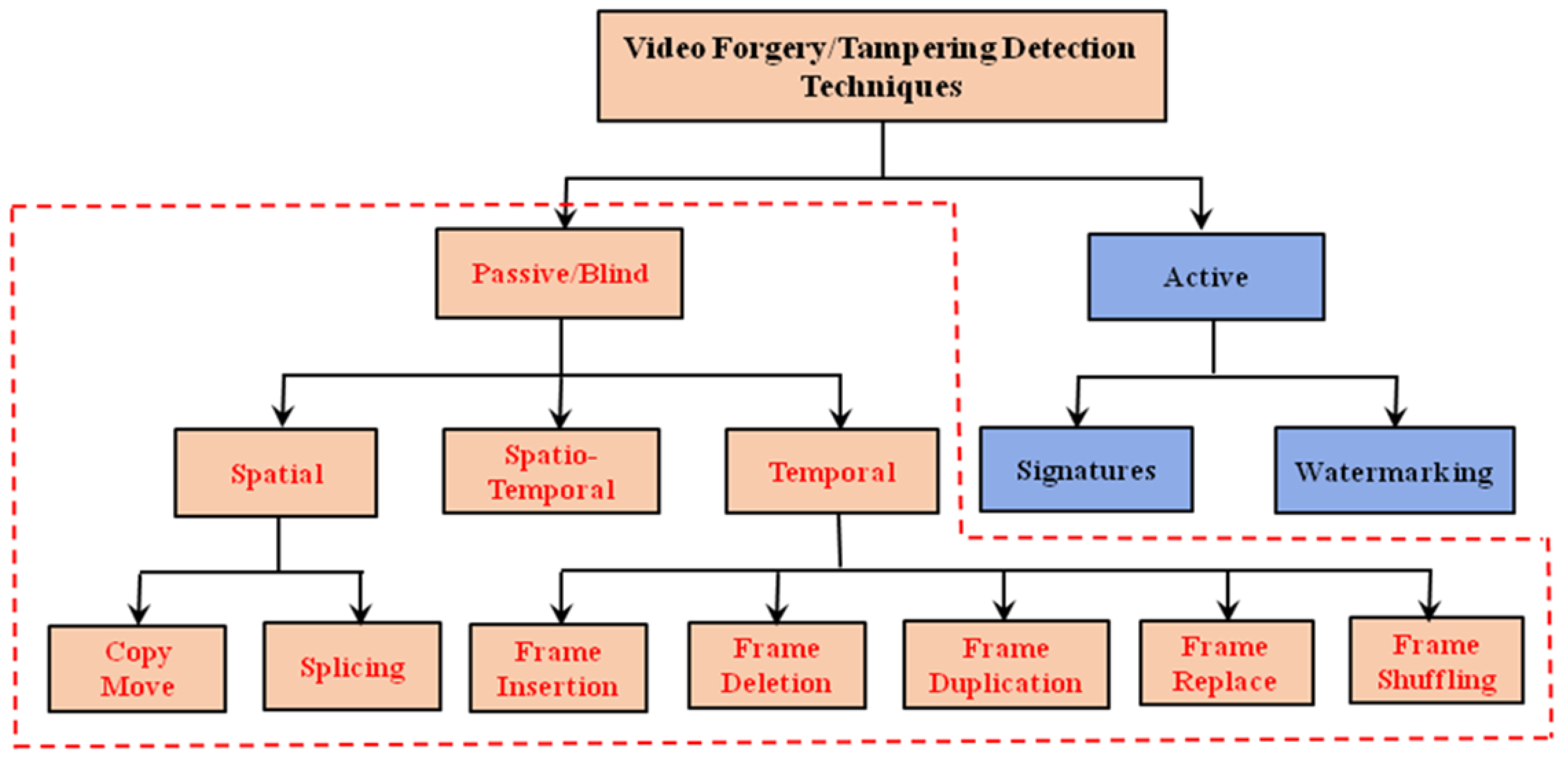

4. Types of Video Tampering (Forgery)

5. Video Tampering Detection

5.1. Active Approaches
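Active approaches depend on information, such as a watermark or a digital signature, that is embedded in or attached to the video when it is created and checked later. As a toy illustration only (not a scheme from the surveyed literature), the Python sketch below implements a frame-level fragile watermark. It assumes 8-bit grayscale frames stored as NumPy `uint8` arrays with at least 256 pixels: each frame is hashed with SHA-256 after its least significant bits (LSBs) are cleared, and the 256 digest bits are written into the LSBs of the first 256 pixels. `embed_watermark` and `verify_watermark` are hypothetical names.

```python
import hashlib

import numpy as np

N_BITS = 256  # SHA-256 digest length in bits


def _digest_bits(frame: np.ndarray) -> np.ndarray:
    """SHA-256 of the frame with every LSB cleared, unpacked into 256 bits."""
    cleared = frame & 0xFE  # the watermark lives in the LSBs, so exclude them
    digest = hashlib.sha256(cleared.tobytes()).digest()
    return np.unpackbits(np.frombuffer(digest, dtype=np.uint8))


def embed_watermark(frame: np.ndarray) -> np.ndarray:
    """Return a copy of an 8-bit frame whose first 256 LSBs carry its digest."""
    if frame.dtype != np.uint8 or frame.size < N_BITS:
        raise ValueError("expected a uint8 frame with at least 256 pixels")
    flat = frame.flatten()  # flatten() always returns a copy
    flat[:N_BITS] = (flat[:N_BITS] & 0xFE) | _digest_bits(frame)
    return flat.reshape(frame.shape)


def verify_watermark(frame: np.ndarray) -> bool:
    """True if the embedded bits still match the digest of the frame content."""
    embedded = frame.reshape(-1)[:N_BITS] & 1
    return bool(np.array_equal(embedded, _digest_bits(frame)))
```

Any change to the upper seven bits of any pixel alters the digest, so verification fails on the edited frame. The sketch cannot say where the change occurred, ignores edits confined to LSBs outside the embedded region, and breaks under lossy re-encoding, which rewrites LSBs; embedding check bits block by block is one way such schemes add localization.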

5.2. Passive Approaches

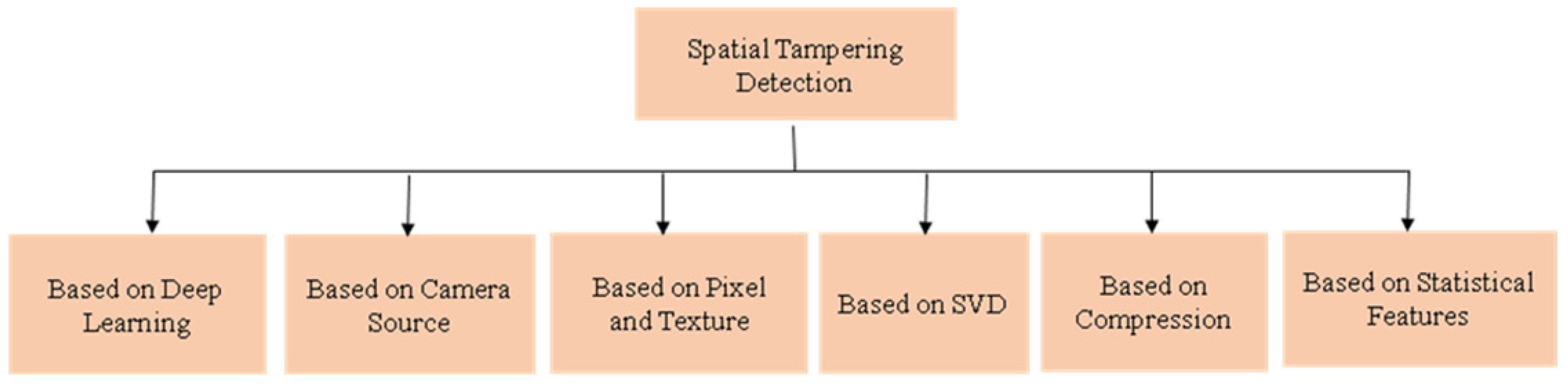

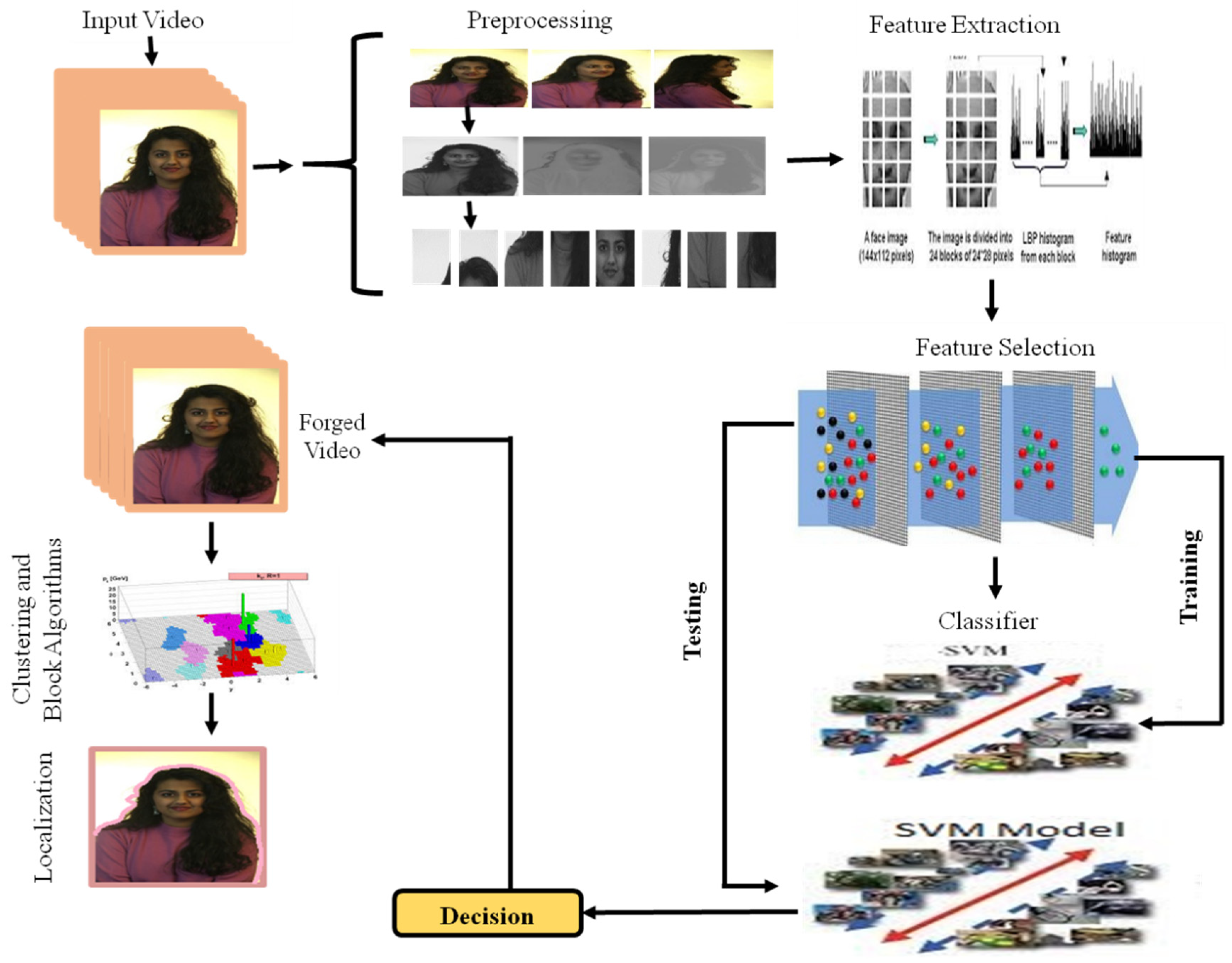

6. Review of Spatial (Intra-Frame) Video Tampering Detection Techniques

6.1. Methods Based on Deep Learning

6.2. Methods Based on Camera Source

6.3. Methods Based on Pixels and Texture Features

6.4. Methods Based on SVD

6.5. Methods Based on Compression

6.6. Methods Based on Statistical Features

6.7. Discussion and Analysis of Spatial Video Tampering Detection Techniques

7. Review of Temporal (Inter-Frame) Video Tampering Detection Techniques

7.1. Methods Based on Statistical Features
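One statistical cue for inter-frame forgery is the correlation of gray values between consecutive frames: within an untouched shot it changes smoothly, whereas an inserted or deleted frame tends to cause an abrupt drop (compare the correlation-coefficient consistency approach of Wang et al., 2014, in the reference list). The sketch below illustrates only this cue and does not reimplement any surveyed method. It assumes the video is already decoded into a grayscale NumPy array of shape (T, H, W); the function names and the median/MAD outlier rule with k = 5 are illustrative assumptions.

```python
import numpy as np


def adjacent_correlations(frames: np.ndarray) -> np.ndarray:
    """Pearson correlation of gray values between frames t and t + 1.

    frames: grayscale video of shape (T, H, W); returns shape (T - 1,).
    """
    flat = frames.reshape(frames.shape[0], -1).astype(np.float64)
    return np.array([np.corrcoef(a, b)[0, 1] for a, b in zip(flat[:-1], flat[1:])])


def flag_correlation_drops(corrs: np.ndarray, k: float = 5.0) -> np.ndarray:
    """Indices t whose correlation falls more than k median absolute deviations
    below the median (an illustrative outlier rule, not a published threshold)."""
    median = np.median(corrs)
    mad = np.median(np.abs(corrs - median)) + 1e-12  # guard against MAD == 0
    return np.flatnonzero(corrs < median - k * mad)
```

A single inserted frame at position t typically lowers two neighbouring values (indices t - 1 and t), while a deletion point lowers one. Scene cuts and fast motion also reduce the correlation, which this sketch does not attempt to separate from tampering.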

7.2. Methods Based on Frequency Domain Features

7.3. Methods Based on Residual and Optical Flow
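Residual-based methods examine the difference (residual) between consecutive frames. When a run of frames is removed from a shot with steady motion, the residual across the deletion point is typically larger than elsewhere in the shot. The sketch below shows only this residual-energy cue (optical flow is not covered). It assumes a grayscale array of shape (T, H, W); the function names and the "3 times the median" decision rule are assumptions made for illustration.

```python
import numpy as np


def residual_energy(frames: np.ndarray) -> np.ndarray:
    """Mean squared residual between frames t and t + 1; shape (T - 1,)."""
    f = frames.astype(np.float64)
    residual = f[1:] - f[:-1]
    return (residual ** 2).mean(axis=(1, 2))


def candidate_cut_points(energy: np.ndarray, ratio: float = 3.0) -> np.ndarray:
    """Indices t whose residual energy exceeds `ratio` times the median
    (an assumed decision rule chosen for illustration)."""
    return np.flatnonzero(energy > ratio * np.median(energy))
```

For example, on a synthetic clip in which a horizontal sinusoidal pattern shifts by one pixel per frame and frames 10 to 14 are removed, only index 9 (the transition between the 10th and 11th remaining frames) is flagged. Real footage with varying motion needs a motion-adaptive threshold, which is one reason the surveyed methods combine residuals with optical flow or other features.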

7.4. Methods Based on Pixel and Texture
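Texture-based inter-frame methods first encode every frame with a texture operator and then compare consecutive encoded frames; the reference list includes, for instance, correlation between local binary pattern (LBP) coded frames (Zhang et al., 2015). The following sketch assumes a basic 8-neighbour LBP without interpolation or uniform-pattern mapping and uses hypothetical function names. It only produces the correlation sequence, to which an outlier rule would then be applied.

```python
import numpy as np

# Neighbour offsets (dy, dx), clockwise from the top-left; bit i of the code
# is set when neighbour i is at least as bright as the centre pixel.
_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(frame: np.ndarray) -> np.ndarray:
    """Basic 8-neighbour LBP of a 2-D frame (border pixels are dropped)."""
    f = frame.astype(np.float64)
    h, w = f.shape
    centre = f[1:h - 1, 1:w - 1]
    code = np.zeros(centre.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(_OFFSETS):
        neighbour = f[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        code += (neighbour >= centre).astype(np.int64) << bit
    return code  # values in 0..255


def lbp_correlations(frames: np.ndarray) -> np.ndarray:
    """Correlation between consecutive LBP-coded frames; shape (T - 1,)."""
    codes = [lbp_code(f).ravel().astype(np.float64) for f in frames]
    return np.array([np.corrcoef(a, b)[0, 1] for a, b in zip(codes[:-1], codes[1:])])
```

Because LBP depends only on the ordering of neighbouring intensities, the codes are unaffected by monotonic brightness changes, which is one motivation for comparing coded frames rather than raw gray values.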

7.5. Methods Based on Deep Learning

7.6. Others

7.7. Discussion and Analysis of Temporal Video Tampering Detection Techniques

8. Research Challenges

8.1. Benchmark Dataset

8.2. Performance and Evaluation
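Frame-level localization results are often summarized with precision, recall and F1 score computed over the indices of frames labelled as tampered. The helper below is a minimal sketch that assumes predictions and ground truth are given as sets of frame indices; the function name is hypothetical, and other protocols (for example video-level accuracy or pixel-level scores for spatial forgeries) are not covered.

```python
def frame_level_scores(predicted: set[int], ground_truth: set[int]) -> dict[str, float]:
    """Precision, recall and F1 over frame indices labelled as tampered."""
    tp = len(predicted & ground_truth)  # tampered frames correctly flagged
    fp = len(predicted - ground_truth)  # authentic frames wrongly flagged
    fn = len(ground_truth - predicted)  # tampered frames that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Example: frames 11-15 were tampered; a detector flagged frames 10-13.
scores = frame_level_scores({10, 11, 12, 13}, {11, 12, 13, 14, 15})
print({name: round(value, 3) for name, value in scores.items()})
# → {'precision': 0.75, 'recall': 0.6, 'f1': 0.667}
```

Reporting all three numbers, rather than accuracy alone, matters because tampered frames are usually a small fraction of a video, so a detector that flags nothing can still reach high accuracy.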

8.3. Automation

8.4. Localization

8.5. Robustness

9. Future Directions

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Su, P.-C.; Suei, P.-L.; Chang, M.-K.; Lain, J. Forensic and anti-forensic techniques for video shot editing in H.264/AVC. J. Vis. Commun. Image Represent. 2015, 29, 103–113.

- Wang, W. Digital Video Forensics in Dartmouth College; Computer Science Department: Hanover, NH, USA, 2009.

- Pan, X.; Lyu, S. Region duplication detection using image feature matching. IEEE Trans. Inf. Forensics Secur. 2010, 5, 857–867.

- Rocha, A.; Scheirer, W.; Boult, T.; Goldenstein, S. Vision of the unseen: Current trends and challenges in digital image and video forensics. ACM Comput. Surv. 2011, 43, 1–42.

- Lee, J.-C.; Chang, C.-P.; Chen, W.-K. Detection of copy–move image forgery using histogram of orientated gradients. Inf. Sci. 2015, 321, 250–262.

- Zhao, Y.; Wang, S.; Zhang, X.; Yao, H. Robust hashing for image authentication using Zernike moments and local features. IEEE Trans. Inf. Forensics Secur. 2013, 8, 55–63.

- Asghar, K.; Habib, Z.; Hussain, M. Copy-move and splicing image forgery detection and localization techniques: A review. Aust. J. Forensic Sci. 2016, 49, 1–27.

- Singh, R.D.; Aggarwal, N. Video content authentication techniques: A comprehensive survey. Multimed. Syst. 2018, 24, 211–240.

- Sitara, K.; Mehtre, B. Digital video tampering detection: An overview of passive techniques. Digit. Investig. 2016, 18, 8–22.

- Pandey, R.C.; Singh, S.K.; Shukla, K.K. Passive forensics in image and video using noise features: A review. Digit. Investig. 2016, 19, 1–28.

- Milani, S.; Fontani, M.; Bestagini, P.; Barni, M.; Piva, A.; Tagliasacchi, M.; Tubaro, S. An overview on video forensics. APSIPA Trans. Signal Inf. Process. 2012, 1, 1–18.

- Jaiswal, S.; Dhavale, S. Video Forensics in Temporal Domain using Machine Learning Techniques. Int. J. Comput. Netw. Inf. Secur. 2013, 5, 58.

- Bestagini, P.; Fontani, M.; Milani, S.; Barni, M.; Piva, A.; Tagliasacchi, M.; Tubaro, S. An overview on video forensics. In Proceedings of the 20th European Signal Processing Conference (EUSIPCO), Bucharest, Romania, 27–31 August 2012.

- Wahab, A.W.A.; Bagiwa, M.A.; Idris, M.Y.I.; Khan, S.; Razak, Z.; Ariffin, M.R.K. Passive video forgery detection techniques: A survey. In Proceedings of the 10th International Conference on Information Assurance and Security (IAS), Okinawa, Japan, 28–30 November 2014.

- Al-Sanjary, O.I.; Sulong, G. Detection of video forgery: A review of literature. J. Theor. Appl. Inf. Technol. 2015, 74, 208–220.

- Sowmya, K.; Chennamma, H. A Survey On Video Forgery Detection. Int. J. Comput. Eng. Appl. 2015, 9, 18–27.

- Sharma, S.; Dhavale, S.V. A review of passive forensic techniques for detection of copy-move attacks on digital videos. In Proceedings of the 3rd International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 22–23 January 2016.

- Tao, J.; Jia, L.; You, Y. Review of passive-blind detection in digital video forgery based on sensing and imaging techniques. In Proceedings of the International Conference on Optoelectronics and Microelectronics Technology and Application. International Society for Optics and Photonics, Shanghai, China, 5 January 2017.

- Mizher, M.A.; Ang, M.C.; Mazhar, A.A.; Mizher, M.A. A review of video falsifying techniques and video forgery detection techniques. Int. J. Electron. Secur. Digit. Forensics 2017, 9, 191–208.

- Sharma, H.; Kanwal, N.; Batth, R.S. An Ontology of Digital Video Forensics: Classification, Research Gaps & Datasets. In Proceedings of the 2019 International Conference on Computational Intelligence and Knowledge Economy (ICCIKE), Dubai, United Arab Emirates, 11–12 December 2019.

- Johnston, P.; Elyan, E. A review of digital video tampering: From simple editing to full synthesis. Digit. Investig. 2019, 29, 67–81.

- Kaur, H.; Jindal, N. Image and Video Forensics: A Critical Survey. Wirel. Pers. Commun. 2020, 112, 67–81.

- Shelke, N.A.; Kasana, S.S. A comprehensive survey on passive techniques for digital video forgery detection. Multimed. Tools Appl. 2021, 80, 6247–6310.

- Parmar, Z.; Upadhyay, S. A Review on Video/Image Authentication and Temper Detection Techniques. Int. J. Comput. Appl. 2013, 63, 46–49.

- Alsmirat, M.A.; Al-Hussien, R.A.; Al-Sarayrah, W.a.T.; Jararweh, Y.; Etier, M. Digital video forensics: A comprehensive survey. Int. J. Adv. Intell. Paradig. 2020, 15, 437–456.

- Kitchenham, B.; Brereton, O.P.; Budgen, D.; Turner, M.; Bailey, J.; Linkman, S. Systematic literature reviews in software engineering–a systematic literature review. Inf. Softw. Technol. 2009, 51, 7–15.

- Wang, W.; Farid, H. Exposing digital forgeries in interlaced and deinterlaced video. IEEE Trans. Inf. Forensics Secur. 2007, 2, 438–449.

- Wang, W.; Farid, H. Exposing digital forgeries in video by detecting duplication. In Proceedings of the 9th Workshop on Multimedia & Security, New York, NY, USA, 20–21 September 2007.

- Hsu, C.-C.; Hung, T.-Y.; Lin, C.-W.; Hsu, C.-T. Video forgery detection using correlation of noise residue. In Proceedings of the IEEE 10th Workshop on Multimedia Signal Processing, Cairns, Australia, 8–10 October 2008.

- Shih, T.K.; Tang, N.C.; Hwang, J.-N. Exemplar-based video inpainting without ghost shadow artifacts by maintaining temporal continuity. IEEE Trans. Circuits Syst. Video Technol. 2009, 19, 347–360.

- Su, Y.; Zhang, J.; Liu, J. Exposing digital video forgery by detecting motion-compensated edge artifact. In Proceedings of the IEEE International Conference on Computational Intelligence and Software Engineering, CiSE, Wuhan, China, 11–13 December 2009.

- Zhang, J.; Su, Y.; Zhang, M. Exposing digital video forgery by ghost shadow artifact. In Proceedings of the First ACM Workshop on Multimedia in Forensics, Beijing, China, 23 October 2009.

- Kobayashi, M.; Okabe, T.; Sato, Y. Detecting video forgeries based on noise characteristics. In Advances in Image and Video Technology; Springer: Tokyo, Japan, 2009; pp. 306–317.

- Kobayashi, M.; Okabe, T.; Sato, Y. Detecting forgery from static-scene video based on inconsistency in noise level functions. IEEE Trans. Inf. Forensics Secur. 2010, 5, 883–892.

- Chetty, G. Blind and passive digital video tamper detection based on multimodal fusion. In Proceedings of the 14th WSEAS International Conference on Communications, Corfu, Greece, 23–25 July 2010.

- Goodwin, J.; Chetty, G. Blind video tamper detection based on fusion of source features. In Proceedings of the IEEE International Conference on Digital Image Computing Techniques and Applications (DICTA), Noosa, Australia, 6–8 December 2011.

- Stamm, M.C.; Liu, K.R. Anti-forensics for frame deletion/addition in MPEG video. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 22–27 May 2011.

- Conotter, V.; O’Brien, J.F.; Farid, H. Exposing digital forgeries in ballistic motion. IEEE Trans. Inf. Forensics Secur. 2012, 7, 283–296.

- Stamm, M.C.; Lin, W.S.; Liu, K.R. Temporal forensics and anti-forensics for motion compensated video. IEEE Trans. Inf. Forensics Secur. 2012, 7, 1315–1329.

- Dong, Q.; Yang, G.; Zhu, N. A MCEA based passive forensics scheme for detecting frame-based video tampering. Digit. Investig. 2012, 9, 151–159.

- Subramanyam, A.; Emmanuel, S. Video forgery detection using HOG features and compression properties. In Proceedings of the IEEE 14th International Workshop on Multimedia Signal Processing (MMSP), Banff, AB, Canada, 17–19 September 2012.

- Vazquez-Padin, D.; Fontani, M.; Bianchi, T.; Comesaña, P.; Piva, A.; Barni, M. Detection of video double encoding with GOP size estimation. In Proceedings of the IEEE International Workshop on Information Forensics and Security (WIFS), Tenerife, Spain, 2–5 December 2012.

- Chao, J.; Jiang, X.; Sun, T. A novel video inter-frame forgery model detection scheme based on optical flow consistency, in Digital Forensics and Watermarking. In Proceedings of the 11th International Workshop, IWDW 2012, Shanghai, China, 31 October–3 November 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 267–281.

- Shanableh, T. Detection of frame deletion for digital video forensics. Digit. Investig. 2013, 10, 350–360.

- Bestagini, P.; Milani, S.; Tagliasacchi, M.; Tubaro, S. Local tampering detection in video sequences. In Proceedings of the 15th International Workshop on Multimedia Signal Processing (MMSP), Pula, Italy, 30 September–2 October 2013.

- Labartino, D.; Bianchi, T.; De Rosa, A.; Fontani, M.; Vazquez-Padin, D.; Piva, A.; Barni, M. Localization of forgeries in MPEG-2 video through GOP size and DQ analysis. In Proceedings of the 15th International Workshop on Multimedia Signal Processing, Pula, Italy, 30 September–2 October 2013.

- Li, L.; Wang, X.; Zhang, W.; Yang, G.; Hu, G. Detecting removed object from video with stationary background. In Proceedings of the International Workshop on Digital Forensics and Watermarking, Taipei, Taiwan, 1–4 October 2013.

- Liao, S.-Y.; Huang, T.-Q. Video copy-move forgery detection and localization based on Tamura texture features. In Proceedings of the 6th International Congress on Image and Signal Processing (CISP), Hangzhou, China, 16–18 December 2013.

- Lin, C.-S.; Tsay, J.-J. Passive approach for video forgery detection and localization. In Proceedings of the Second International Conference on Cyber Security, Cyber Peacefare and Digital Forensic (CyberSec2013), The Society of Digital Information and Wireless Communication, Kuala Lumpur, Malaysia, 4–6 March 2013.

- Subramanyam, A.; Emmanuel, S. Pixel estimation based video forgery detection. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013.

- Wang, W.; Jiang, X.; Wang, S.; Wan, M.; Sun, T. Identifying video forgery process using optical flow, in Digital-Forensics and Watermarking. In Proceedings of the 11th International Workshop, IWDW 2012, Shanghai, China, 31 October–3 November 2012; Springer: Berlin/Heidelberg, Germany, 2013; pp. 244–257.

- Lin, C.-S.; Tsay, J.-J. A passive approach for effective detection and localization of region-level video forgery with spatio-temporal coherence analysis. Digit. Investig. 2014, 11, 120–140.

- Richao, C.; Gaobo, Y.; Ningbo, Z. Detection of object-based manipulation by the statistical features of object contour. Forensic Sci. Int. 2014, 236, 164–169.

- Wang, Q.; Li, Z.; Zhang, Z.; Ma, Q. Video Inter-Frame Forgery Identification Based on Consistency of Correlation Coefficients of Gray Values. J. Comput. Commun. 2014, 2, 51.

- Feng, C.; Xu, Z.; Zhang, W.; Xu, Y. Automatic location of frame deletion point for digital video forensics. In Proceedings of the 2nd ACM Workshop on Information Hiding and Multimedia Security, Salzburg, Austria, 11–13 June 2014.

- Gironi, A.; Fontani, M.; Bianchi, T.; Piva, A.; Barni, M. A video forensic technique for detecting frame deletion and insertion. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014.

- Liu, H.; Li, S.; Bian, S. Detecting frame deletion in H.264 video. In Proceedings of the International Conference on Information Security Practice and Experience, Fuzhou, China, 5–8 May 2014.

- Pandey, R.C.; Singh, S.K.; Shukla, K. Passive copy-move forgery detection in videos. In Proceedings of the International Conference on Computer and Communication Technology (ICCCT), Allahabad, India, 26–28 September 2014.

- Wu, Y.; Jiang, X.; Sun, T.; Wang, W. Exposing video inter-frame forgery based on velocity field consistency. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014.

- Chen, S.; Tan, S.; Li, B.; Huang, J. Automatic detection of object-based forgery in advanced video. IEEE Trans. Circuits Syst. Video Technol. 2016, 26, 2138–2151.

- Zheng, L.; Sun, T.; Shi, Y.-Q. Inter-frame video forgery detection based on block-wise brightness variance descriptor. In Proceedings of the International Workshop on Digital Watermarking, Tokyo, Japan, 3 June 2014.

- Jung, D.-J.; Hyun, D.-K.; Lee, H.-K. Recaptured video detection based on sensor pattern noise. EURASIP J. Image Video Process. 2015, 2015, 40.

- Kang, X.; Liu, J.; Liu, H.; Wang, Z.J. Forensics and counter anti-forensics of video inter-frame forgery. Multimed. Tools Appl. 2015, 75, 1–21.

- Su, L.; Huang, T.; Yang, J. A video forgery detection algorithm based on compressive sensing. Multimed. Tools Appl. 2014, 74, 6641–6656.

- Patel, H.C.; Patel, M.M. An Improvement of Forgery Video Detection Technique using Error Level Analysis. Int. J. Comput. Appl. 2015, 111.

- Zhang, Z.; Hou, J.; Ma, Q.; Li, Z. Efficient video frame insertion and deletion detection based on inconsistency of correlations between local binary pattern coded frames. Secur. Commun. Netw. 2015, 8, 311–320.

- Bidokhti, A.; Ghaemmaghami, S. Detection of regional copy/move forgery in MPEG videos using optical flow. In Proceedings of the International symposium on Artificial intelligence and signal processing (AISP), Mashhad, Iran, 3–5 March 2015.

- D’Amiano, L.; Cozzolino, D.; Poggi, G.; Verdoliva, L. Video forgery detection and localization based on 3D patchmatch. In Proceedings of the IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Torino, Italy, 29 June–3 July 2015.

- Tan, S.; Chen, S.; Li, B. GOP based automatic detection of object-based forgery in advanced video. In Proceedings of the Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA), Hong Kong, China, 16–19 December 2015.

- Feng, C.; Xu, Z.; Jia, S.; Zhang, W.; Xu, Y. Motion-adaptive frame deletion detection for digital video forensics. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 2543–2554.

- Yang, J.; Huang, T.; Su, L. Using similarity analysis to detect frame duplication forgery in videos. Multimed. Tools Appl. 2014, 75, 1793–1811.

- Aghamaleki, J.A.; Behrad, A. Inter-frame video forgery detection and localization using intrinsic effects of double compression on quantization errors of video coding. Signal Process. Image Commun. 2016, 47, 289–302.

- Yu, L.; Wang, H.; Han, Q.; Niu, X.; Yiu, S.; Fang, J.; Wang, Z. Exposing frame deletion by detecting abrupt changes in video streams. Neurocomputing 2016, 205, 84–91.

- Mathai, M.; Rajan, D.; Emmanuel, S. Video forgery detection and localization using normalized cross-correlation of moment features. In Proceedings of the IEEE Southwest Symposium on Image Analysis and Interpretation (SSIAI), Santa Fe, NM, USA, 6–8 March 2016.

- Liu, Y.; Huang, T.; Liu, Y. A novel video forgery detection algorithm for blue screen compositing based on 3-stage foreground analysis and tracking. Multimed. Tools Appl. 2017, 77, 7405–7427.

- Kingra, S.; Aggarwal, N.; Singh, R.D. Inter-frame forgery detection in H.264 videos using motion and brightness gradients. Multimed. Tools Appl. 2017, 76, 25767–25786.

- Singh, R.D.; Aggarwal, N. Detection and localization of copy-paste forgeries in digital videos. Forensic Sci. Int. 2017, 281, 75–91.

- Fadl, S.M.; Han, Q.; Li, Q. Authentication of Surveillance Videos: Detecting Frame Duplication Based on Residual Frame. J. Forensic Sci. 2017, 63, 1099–1109.

- Yao, Y.; Shi, Y.; Weng, S.; Guan, B. Deep learning for detection of object-based forgery in advanced video. Symmetry 2017, 10, 3.

- Bozkurt, I.; Bozkurt, M.H.; Ulutaş, G. A new video forgery detection approach based on forgery line. Turk. J. Electr. Eng. Comput. Sci. 2017, 25, 4558–4574.

- D’Avino, D.; Cozzolino, D.; Poggi, G.; Verdoliva, L. Autoencoder with recurrent neural networks for video forgery detection. Electron. Imaging 2017, 2017, 92–99.

- Huang, C.C.; Zhang, Y.; Thing, V.L. Inter-frame video forgery detection based on multi-level subtraction approach for realistic video forensic applications. In Proceedings of the IEEE 2nd International Conference on Signal and Image Processing (ICSIP), Singapore, 4–6 August 2017.

- Al-Sanjary, O.I.; Ghazali, N.; Ahmed, A.A.; Sulong, G. Semi-automatic Methods in Video Forgery Detection Based on Multi-view Dimension. In Proceedings of the International Conference of Reliable Information and Communication Technology, Johor, Malaysia, 23–24 April 2017.

- D’Amiano, L.; Cozzolino, D.; Poggi, G.; Verdoliva, L. A patchmatch-based dense-field algorithm for video copy-move detection and localization. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 669–682.

- Jia, S.; Xu, Z.; Wang, H.; Feng, C.; Wang, T. Coarse-to-fine copy-move forgery detection for video forensics. IEEE Access 2018, 6, 25323–25335.

- Su, L.; Li, C.; Lai, Y.; Yang, J. A Fast Forgery Detection Algorithm Based on Exponential-Fourier Moments for Video Region Duplication. IEEE Trans. Multimed. 2018, 20, 825–840.

- Su, L.; Li, C. A novel passive forgery detection algorithm for video region duplication. Multidimens. Syst. Signal Process. 2018, 29, 1173–1190.

- Zhao, D.-N.; Wang, R.-K.; Lu, Z.-M. Inter-frame passive-blind forgery detection for video shot based on similarity analysis. Multimed. Tools Appl. 2018, 77, 25389–25408.

- Huang, T.; Zhang, X.; Huang, W.; Lin, L.; Su, W. A multi-channel approach through fusion of audio for detecting video inter-frame forgery. Comput. Secur. 2018, 77, 412–426.

- Al-Sanjary, O.I.; Ahmed, A.A.; Jaharadak, A.A.; Ali, M.A.; Zangana, H.M. Detection clone an object movement using an optical flow approach. In Proceedings of the IEEE Symposium on Computer Applications & Industrial Electronics (ISCAIE), Penang, Malaysia, 28–29 April 2018.

- Guo, C.; Luo, G.; Zhu, Y. A detection method for facial expression reenacted forgery in videos. In Proceedings of the Tenth International Conference on Digital Image Processing (ICDIP 2018), International Society for Optics and Photonics, Shanghai, China, 11–14 May 2018.

- Bakas, J.; Naskar, R. A Digital Forensic Technique for Inter-Frame Video Forgery Detection Based on 3D CNN. In Proceedings of the International Conference on Information Systems Security, Funchal, Portugal, 22–24 January 2018.

- Antony, N.; Devassy, B.R. Implementation of Image/Video Copy-Move Forgery Detection Using Brute-Force Matching. In Proceedings of the 2nd International Conference on Trends in Electronics and Informatics (ICOEI), Tamil Nadu, India, 11–12 May 2018.

- Kono, K.; Yoshida, T.; Ohshiro, S.; Babaguchi, N. Passive Video Forgery Detection Considering Spatio-Temporal Consistency. In Proceedings of the International Conference on Soft Computing and Pattern Recognition, Porto, Portugal, 13–15 December 2018.

- Bakas, J.; Bashaboina, A.K.; Naskar, R. MPEG double compression based intra-frame video forgery detection using CNN. In Proceedings of the International Conference on Information Technology (ICIT), Bhubaneswar, India, 19–21 December 2018.

- Afchar, D.; Nozick, V.; Yamagishi, J.; Echizen, I. MesoNet: A Compact Facial Video Forgery Detection Network. arXiv 2018, arXiv:1809.00888.

- Fadl, S.M.; Han, Q.; Li, Q. Inter-frame forgery detection based on differential energy of residue. IET Image Process. 2019, 13, 52–528.

- Singh, G.; Singh, K. Video frame and region duplication forgery detection based on correlation coefficient and coefficient of variation. Multimed. Tools Appl. 2019, 78, 11527–11562.

- Joshi, V.; Jain, S. Tampering detection and localization in digital video using temporal difference between adjacent frames of actual and reconstructed video clip. Int. J. Inf. Technol. 2019, 78, 11527–11562.

- Bakas, J.; Naskar, R.; Dixit, R. Detection and localization of inter-frame video forgeries based on inconsistency in correlation distribution between Haralick coded frames. Multimed. Tools Appl. 2018, 78, 4905–4935.

- Sitara, K.; Mehtre, B. Differentiating synthetic and optical zooming for passive video forgery detection: An anti-forensic perspective. Digit. Investig. 2019, 30, 1–11.

- Hong, J.H.; Yang, Y.; Oh, B.T. Detection of frame deletion in HEVC-Coded video in the compressed domain. Digit. Investig. 2019, 30, 23–31.

- Aparicio-Díaz, E.; Cumplido, R.; Pérez Gort, M.L.; Feregrino-Uribe, C. Temporal Copy-Move Forgery Detection and Localization Using Block Correlation Matrix. J. Intell. Fuzzy Syst. 2019, 36, 5023–5035.

- Saddique, M.; Asghar, K.; Mehmood, T.; Hussain, M.; Habib, Z. Robust Video Content Authentication using Video Binary Pattern and Extreme Learning Machine. IJACSA 2019, 10, 264–269.

- Saddique, M.; Asghar, K.; Bajwa, U.I.; Hussain, M.; Habib, Z. Spatial Video Forgery Detection and Localization using Texture Analysis of Consecutive Frames. Adv. Electr. Comput. Eng. 2019, 19, 97–108.

- Zampoglou, M.; Markatopoulou, F.; Mercier, G.; Touska, D.; Apostolidis, E.; Papadopoulos, S.; Cozien, R.; Patras, I.; Mezaris, V.; Kompatsiaris, I. Detecting Tampered Videos with Multimedia Forensics and Deep Learning. In Proceedings of the International Conference on Multimedia Modeling, Thessaloniki, Greece, 8–11 January 2019.

- Al-Sanjary, O.I.; Ahmed, A.A.; Ahmad, H.; Ali, M.A.; Mohammed, M.; Abdullah, M.I.; Ishak, Z.B. Deleting Object in Video Copy-Move Forgery Detection Based on Optical Flow Concept. In Proceedings of the IEEE Conference on Systems, Process and Control (ICSPC), Melaka, Malaysia, 13–14 December 2019.

- Cozzolino, D.; Poggi, G.; Verdoliva, L. Extracting camera-based fingerprints for video forensics. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019.

- Long, C.; Basharat, A.; Hoogs, A.; Singh, P.; Farid, H. A Coarse-to-fine Deep Convolutional Neural Network Framework for Frame Duplication Detection and Localization in Forged Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 16–20 June 2019.

- Saddique, M.; Asghar, K.; Bajwa, U.I.; Hussain, M.; Aboalsamh, H.A.; Habib, Z. Classification of Authentic and Tampered Video Using Motion Residual and Parasitic Layers. IEEE Access 2020, 8, 56782–56797.

- Fadl, S.; Megahed, A.; Han, Q.; Qiong, L. Frame duplication and shuffling forgery detection technique in surveillance videos based on temporal average and gray level co-occurrence matrix. Multimed. Tools Appl. 2020, 79, 1–25.

- Kharat, J.; Chougule, S. A passive blind forgery detection technique to identify frame duplication attack. Multimed. Tools Appl. 2020, 79, 8107–8123.

- Fayyaz, M.A.; Anjum, A.; Ziauddin, S.; Khan, A.; Sarfaraz, A. An improved surveillance video forgery detection technique using sensor pattern noise and correlation of noise residues. Multimed. Tools Appl. 2020, 79, 5767–5788.

- Kohli, A.; Gupta, A.; Singhal, D. CNN based localisation of forged region in object-based forgery for HD videos. IET Image Process. 2020, 14, 947–958.

- Wang, Y.; Hu, Y.; Liew, A.W.-C.; Li, C.-T. ENF Based Video Forgery Detection Algorithm. Int. J. Digit. Crime Forensics (IJDCF) 2020, 12, 131–156.

- Kaur, H.; Jindal, N. Deep Convolutional Neural Network for Graphics Forgery Detection in Video. Wirel. Pers. Commun. 2020, 14, 1763–1781.

- Huang, C.C.; Lee, C.E.; Thing, V.L. A Novel Video Forgery Detection Model Based on Triangular Polarity Feature Classification. Int. J. Digit. Crime Forensics (IJDCF) 2020, 12, 14–34.

- Fadl, S.; Han, Q.; Li, Q. CNN spatiotemporal features and fusion for surveillance video forgery detection. Signal Process. Image Commun. 2021, 90, 116066.

- Pu, H.; Huang, T.; Weng, B.; Ye, F.; Zhao, C. Overcome the Brightness and Jitter Noises in Video Inter-Frame Tampering Detection. Sensors 2021, 21, 3953.

- Shelke, N.A.; Kasana, S.S. Multiple forgeries identification in digital video based on correlation consistency between entropy coded frames. Multimed. Syst. 2021, 34, 1–14.

- Huang, Y.; Li, X.; Wang, W.; Jiang, T.; Zhang, Q. Towards Cross-Modal Forgery Detection and Localization on Live Surveillance Videos. arXiv 2021, arXiv:2101.00848.

- Bennett, E.P.; McMillan, L. Video enhancement using per-pixel virtual exposures. ACM Trans. Graph. 2005, 24, 845–852.

- Hernandez-Ardieta, J.L.; Gonzalez-Tablas, A.I.; De Fuentes, J.M.; Ramos, B. A taxonomy and survey of attacks on digital signatures. Comput. Secur. 2013, 34, 67–112.

- Chen, H.; Chen, Z.; Zeng, X.; Fan, W.; Xiong, Z. A novel reversible semi-fragile watermarking algorithm of MPEG-4 video for content authentication. In Proceedings of the Second International Symposium on Intelligent Information Technology Application, Shanghai, China, 20–22 December 2008.

- Di Martino, F.; Sessa, S. Fragile watermarking tamper detection with images compressed by fuzzy transform. Inf. Sci. 2012, 195, 62–90.

- Hu, X.; Ni, J.; Pan, R. Detecting video forgery by estimating extrinsic camera parameters. In Proceedings of the International Workshop on Digital Watermarking, Tokyo, Japan, 7–10 October 2015.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 6, 610–621. [Google Scholar] [CrossRef] [Green Version]

- Bagiwa, A.M. Passive Video Forgery Detection Using Frame Correlation Statistical Features. Ph.D. Thesis, University of Malaya, Kuala Lumpur, Malaysia, 2017.

- Yu, J.; Srinath, M.D. An efficient method for scene cut detection. Pattern Recognit. Lett. 2001, 22, 1379–1391.

- Kancherla, K.; Mukkamala, S. Novel blind video forgery detection using Markov models on motion residue. In Intelligent Information and Database Systems; Springer: Berlin/Heidelberg, Germany, 2012; pp. 308–315.

- Bondi, L.; Baroffio, L.; Güera, D.; Bestagini, P.; Delp, E.J.; Tubaro, S. First steps toward camera model identification with convolutional neural networks. IEEE Signal Process. Lett. 2016, 24, 259–263.

- Xu, G.; Wu, H.-Z.; Shi, Y.-Q. Structural design of convolutional neural networks for steganalysis. IEEE Signal Process. Lett. 2016, 23, 708–712.

- Bayar, B.; Stamm, M.C. A deep learning approach to universal image manipulation detection using a new convolutional layer. In Proceedings of the 4th ACM Workshop on Information Hiding and Multimedia Security, Vigo, Galicia, Spain, 20–22 June 2016.

- Rao, Y.; Ni, J. A deep learning approach to detection of splicing and copy-move forgeries in images. In Proceedings of the IEEE International Workshop on Information Forensics and Security (WIFS), Abu Dhabi, United Arab Emirates, 4–7 December 2016.

- Oh, S.; Hoogs, A.; Perera, A.; Cuntoor, N.; Chen, C.-C.; Lee, J.T.; Mukherjee, S.; Aggarwal, J.; Lee, H.; Davis, L. A large-scale benchmark dataset for event recognition in surveillance video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Washington, DC, USA, 20–25 June 2011.

- Johnston, P.; Elyan, E.; Jayne, C. Video tampering localisation using features learned from authentic content. Neural Comput. Appl. 2020, 32, 12243–12257.

- Qadir, G.; Yahaya, S.; Ho, A.T. Surrey university library for forensic analysis (SULFA) of video content. In Proceedings of the IET Conference on Image Processing (IPR), London, UK, 3–4 July 2012.

- Shullani, D.; Al Shaya, O.; Iuliani, M.; Fontani, M.; Piva, A. A dataset for forensic analysis of videos in the wild. In Proceedings of the International Tyrrhenian Workshop on Digital Communication, Palermo, Italy, 18–20 September 2017.

- Panchal, H.D.; Shah, H.B. Video tampering dataset development in temporal domain for video forgery authentication. Multimed. Tools Appl. 2020, 79, 24553–24577.

- Ulutas, G.; Ustubioglu, B.; Ulutas, M.; Nabiyev, V.V. Frame duplication detection based on bow model. Multimed. Syst. 2018, 24, 549–567.

- Le, T.T.; Almansa, A.; Gousseau, Y.; Masnou, S. Motion-consistent video inpainting. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017.

- Al-Sanjary, O.I.; Ahmed, A.A.; Sulong, G. Development of a video tampering dataset for forensic investigation. Forensic Sci. Int. 2016, 266, 565–572.

- Guo, Z.; Zhang, L.; Zhang, D. A completed modeling of local binary pattern operator for texture classification. IEEE Trans. Image Process. 2010, 19, 1657–1663.

- Hussain, M.; Muhammad, G.; Saleh, S.Q.; Mirza, A.M.; Bebis, G. Image forgery detection using multi-resolution Weber local descriptors. In Proceedings of the IEEE International Conference on Computer as a Tool (EUROCON), Zagreb, Croatia, 1–4 July 2013.

- Satpathy, A.; Jiang, X.; Eng, H.-L. LBP-based edge-texture features for object recognition. IEEE Trans. Image Process. 2014, 23, 1953–1964.

- Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52.

- Suykens, J.A.; Vandewalle, J. Least squares support vector machine classifiers. Neural Process. Lett. 1999, 9, 293–300.

- Muhammad, G.; Al-Hammadi, M.H.; Hussain, M.; Bebis, G. Image forgery detection using steerable pyramid transform and local binary pattern. Mach. Vis. Appl. 2014, 25, 985–995.

- Chen, J.; Kang, X.; Liu, Y.; Wang, Z.J. Median Filtering Forensics Based on Convolutional Neural Networks. IEEE Signal Process. Lett. 2015, 22, 1849–1853.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Montréal, QC, Canada, 3–6 December 2012.

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.E.; Mohamed, A.-R.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.N. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process. Mag. 2012, 29, 82–97.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Ma, J.; Sheridan, R.P.; Liaw, A.; Dahl, G.E.; Svetnik, V. Deep neural nets as a method for quantitative structure–activity relationships. J. Chem. Inf. Model. 2015, 55, 263–274.

- Helmstaedter, M.; Briggman, K.L.; Turaga, S.C.; Jain, V.; Seung, H.S.; Denk, W. Connectomic reconstruction of the inner plexiform layer in the mouse retina. Nature 2013, 500, 168–174.

- Xiong, H.Y.; Alipanahi, B.; Lee, L.J.; Bretschneider, H.; Merico, D.; Yuen, R.K.; Hua, Y.; Gueroussov, S.; Najafabadi, H.S.; Hughes, T.R. The human splicing code reveals new insights into the genetic determinants of disease. Science 2015, 347, 1254806.

- Leung, M.K.; Xiong, H.Y.; Lee, L.J.; Frey, B.J. Deep learning of the tissue-regulated splicing code. Bioinformatics 2014, 30, i121–i129.

- Le Cun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Handwritten digit recognition with a back-propagation network. In Proceedings of the Advances in Neural Information Processing Systems, Lakewood, CO, USA, 26–29 June 1990.

- Peng, D.; Xu, Y.; Wang, Y.; Geng, Z.; Zhu, Q. Soft-sensing in complex chemical process based on a sample clustering extreme learning machine model. IFAC-PapersOnLine 2015, 48, 801–806.

- Peng, Y.; Wang, S.; Long, X.; Lu, B.-L. Discriminative graph regularized extreme learning machine and its application to face recognition. Neurocomputing 2015, 149, 340–353.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Zhang, Z. Detect Forgery Video by Performing Transfer Learning on Deep Neural Network. Ph.D. Thesis, Sam Houston State University, Huntsville, TX, USA, 2019.

- Duan, L.; Xu, D.; Tsang, I. Learning with augmented features for heterogeneous domain adaptation. arXiv 2012, arXiv:1206.4660.

- Cook, D.; Feuz, K.D.; Krishnan, N.C. Transfer learning for activity recognition: A survey. Knowl. Inf. Syst. 2013, 36, 537–556.

- Chen, L.; Duan, L.; Xu, D. Event recognition in videos by learning from heterogeneous web sources. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 23–28 June 2013.

- Nam, J.; Kim, S. Heterogeneous defect prediction. In Proceedings of the 10th Joint Meeting on Foundations of Software Engineering, Bergamo, Italy, 30 August–4 September 2015.

- Prettenhofer, P.; Stein, B. Cross-language text classification using structural correspondence learning. In Proceedings of the 48th Annual Meeting of the Association for Computational Linguistics, Uppsala, Sweden, 11–16 July 2010.

| Years | IEEE | Springer | Elsevier | Other Journals | Conferences | Total |
|---|---|---|---|---|---|---|
| 2007 | [27] | - | - | - | [28] | 2 |
| 2008 | - | - | - | - | [29] | 1 |
| 2009 | [30] | - | - | - | [31,32,33] | 4 |
| 2010 | [34] | - | - | - | [35] | 2 |
| 2011 | - | - | - | - | [36,37] | 2 |
| 2012 | [38,39] | - | [40] | - | [41,42,43] | 6 |
| 2013 | - | - | [44] | - | [45,46,47,48,49,50,51] | 8 |
| 2014 | - | - | [52,53] | [54] | [55,56,57,58,59,60] | 9 |
| 2015 | [61] | [62,63,64] | [1] | [65,66] | [67,68,69] | 10 |
| 2016 | [70] | [71] | [72,73] | - | [74] | 5 |
| 2017 | - | [75,76] | [77,78] | [79,80,81] | [82,83] | 9 |
| 2018 | [84,85,86] | [87,88] | [89] | - | [90,91,92,93,94,95,96] | 13 |
| 2019 | [97] | [98,99,100] | [21,101,102] | [103,104,105] | [20,106,107,108,109] | 15 |
| 2020 | [110] | [111,112,113] | - | [22,114,115,116,117] | - | 9 |
| 2021 | - | - | [118] | [119,120] | [121] | 4 |
| Total | 12 | 14 | 14 | 16 | 43 | 99 |

| References | Methods | Dataset | Accuracy | Recall | Precision | Others | Limitations/Issues |
|---|---|---|---|---|---|---|---|
| Methods Based on Deep Learning | | | | | | | |
| 2019 [106] | Q4 + Cobalt forensic filters + GoogLeNet + ResNet networks | Dev1: 30 authentic and 30 forged videos; Dev2: 86 pairs of videos containing 44 k and 134 k frames | 85.09% | - | 93.69% | - | Cannot detect small forgeries |
| 2017 [79] | CNN + absolute difference of consecutive frames + high-pass filter layer | 100 authentic and 100 forged videos | 98.45% | 91.05% | 97.31% | F1 score 94.07%; FFACC 89.90% | Cannot detect small forgeries |
| 2018 [94] | CNN + recurrent neural network | Inpainting-CDnet2014: 89 forged videos; Modification Database: 34 forged videos | - | - | - | AUC 0.977; EER 0.061 | Does not work well in the presence of different types of object modification |
| 2017 [81] | Auto-encoder + recurrent neural network | 10 authentic and 10 forged videos | - | - | - | ROC | Does not work in the presence of post-processing operations (scaling, rotation, translation) |
| Methods Based on Source Camera Features | | | | | | | |
| 2008 [29] | Noise residual + Bayesian classifier | Three videos of 200 frames; camera: JVC GZ-MG50TW; 30 fps; resolution 720 × 480 pixels; bit rate 8.5 Mbps | - | 96% | 55% | FPR 4%; miss rate 32% | Not robust to quantization noise; hardware-dependent; forged regions are not localized; evaluated on a very small dataset |
| 2009 [33] | Noise characteristics | 128 grayscale frames at 30 fps; resolution 640 × 480 pixels; compressed with the lossless Huffyuv codec | - | 94% | 75% | - | Hardware-dependent; limited to spatial forgery; relatively small dataset |
| Methods Based on Pixels and Texture Features | | | | | | | |
| 2012 [41] | HOG features + matching module | Spatial forgery: 6000 frames from 15 different videos; temporal forgery: 150 GOPs of 12 frames each; original videos compressed at 9 Mbps with the MPEG-2 codec; forgeries made by copying and pasting regions of 40 × 40, 60 × 60 and 80 × 80 pixels within the same and different frames | 94% for 60 × 60 pixels | - | - | - | Detection depends on block size; not robust to geometric operations such as large scaling; forged regions are not localized; only 12 videos are used for experimentation |
| 2013 [45] | Motion residual + correlation | 120 videos; resolution 320 × 240 pixels; 300 frames | 90% | - | - | AUC 0.92 | Experiments performed only at 10%, 15% and 20% compression rates; relatively poor accuracy at compression rates of 30% and above |
| 2018 [86] | Exponential Fourier moments + fast compression tracking algorithm | Videos downloaded from the internet and the SULFA dataset | 93.1% | - | - | - | Does not work across different compression rates |
| 2019 [107] | Optical flow | 3 videos from SULFA and 6 videos from the video tampering dataset (VTD) | 96% | - | - | - | Performance is not suitable for high-resolution videos |
| Methods Based on SVD | | | | | | | |
| 2015 [64] | K-SVD + K-means | Camera: SONY DSC-P10; seven handmade videos; 25 fps; bit rate 3 Mbps; forged videos generated with Mokey 4.1.4 (Imagineer Systems) | 89.6% | 90.5% | 89.9% | - | Not evaluated at different compression rates; forged objects are not localized; small dataset |
| Methods Based on Compression | | | | | | | |
| 2013 [46] | Double quantization (DQ) | Videos downloaded from http://media.xiph.org/video/derf/ (accessed on 22 November 2021) | - | - | - | AUC 0.8; ROC | Assumes the video is forged (by changing the contents of a group of frames) before the second compression takes place |
| 2018 [95] | CNN + DCT | Video TRACE library, available online at http://trace.eas.asu.edu/yuv (accessed on 16 November 2021) | Detection 90%; localization 70% | - | - | - | High computational complexity |
| Methods Based on Statistical Features | | | | | | | |
| 2013 [49] | Correlation coefficients + saliency-guided region segmentation | One video of 75 frames; resolution 360 × 240 pixels | - | - | - | High accuracy claimed without a statistical measure | Only one video is used for experimentation |
| 2014 [53] | Moment + average gradient + SVM | 20 videos; resolution 320 × 240 pixels | 95% | - | - | AUC 0.948; ROC | Small dataset |
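The evaluation measures reported in these tables (accuracy, recall, precision, F1 score, FPR, miss rate) are all derived from the same confusion counts. The following is a minimal Python sketch of the standard definitions, assuming forged items are the positive class; the `Confusion` class and the function names are illustrative and are not taken from any surveyed method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Confusion counts, assuming "forged" is the positive class."""
    tp: int  # forged items correctly flagged as forged
    fp: int  # authentic items wrongly flagged as forged
    tn: int  # authentic items correctly passed as authentic
    fn: int  # forged items missed by the detector


def accuracy(c: Confusion) -> float:
    """Share of all items classified correctly."""
    return (c.tp + c.tn) / (c.tp + c.fp + c.tn + c.fn)


def precision(c: Confusion) -> float:
    """Share of flagged items that are really forged."""
    return c.tp / (c.tp + c.fp)


def recall(c: Confusion) -> float:
    """Also called the true positive rate (TPR); the miss rate is 1 - recall."""
    return c.tp / (c.tp + c.fn)


def false_positive_rate(c: Confusion) -> float:
    """FPR: share of authentic items wrongly flagged as forged."""
    return c.fp / (c.fp + c.tn)


def f1_from(p: float, r: float) -> float:
    """F1 score: harmonic mean of precision p and recall r."""
    return 2 * p * r / (p + r)
```

For instance, `f1_from(0.9731, 0.9105)` gives about 0.9408, which agrees to within rounding with the F1 score of 94.07% reported for [79] from its precision and recall.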

| References | Methods | Dataset | Accuracy | Recall | Precision | Others | Limitations/Issues |
|---|---|---|---|---|---|---|---|
| Methods Based on Statistical Features | | | | | | | |
| 2007 [28] | Correlation coefficient | Camera: SONY HDR-HC3; two videos of 10,000 frames, one from a stationary tripod-mounted camera and one from a hand-held moving camera; bit rates of 3, 6 and 9 Mbps | 85.7% and 95.2% | - | - | FPR 6% | Does not detect frame deletion; small dataset |
| 2013 [44] | KNN + logistic regression + SVM + SRDA | 36 video sequences with 1 to 10 frames deleted | - | - | - | TPR 94%; FPR 5.5% | Does not localize the forgery; only detects frame deletion; small training dataset |
| 2014 [54] | Correlation coefficients + SVM | 598 videos at 25 fps in five categories: original, 25 frames inserted, 100 frames inserted, 25 frames deleted, 100 frames deleted | 96.21% | - | - | - | Does not localize the forgery; not evaluated at different compression levels |
| 2019 [98] | Correlation coefficient + DCT | 24 videos from SULFA; 6 videos downloaded from the internet | 99.5% and 96.6% | 99% | 100% | F1 99.4%; F2 99.1% | Cannot detect a small number of duplicated frames; cannot detect small duplicated regions |
| 2014 [59] | Consistency of velocity + cross-correlation | 120 self-created videos | 90% | - | - | - | Forged region is not localized; small dataset |
| Methods Based on Frequency Domain Features | | | | | | | |
| 2009 [31] | MCEA + DCT | 5 videos with 3, 6 or 9 consecutive frames deleted from the original | - | - | - | Impact factor α | Does not localize the forgery; small dataset |
| 2012 [40] | MCEA + FFT spikes after double MPEG compression | 4 videos in CIF and QCIF format; 3rd, 6th, 9th, 12th and 15th frames deleted; re-saved with the same GOP size (15) | - | - | - | No quantitative measure reported | Does not localize the forgery; small dataset |
| 2018 [89] | Quaternion discrete cosine transform (QDCT) features | SULFA: 101 videos; OV (Open Video Project Analysis digital video collection): 14 videos; self-recorded: 124 videos | - | 98.47% | 98.76% | - | Requires the audio file along with the video; poor localization; not evaluated on an unseen dataset |
| Methods Based on Residual and Optical Flow | | | | | | | |
| 2014 [55] | Motion residual | 130 raw YUV videos, tampered by deleting 5, 10, 15, 20, 25 or 30 frames | - | - | - | TPR 90%; FAR 0.8% | Only localizes the frame-deletion point; frame insertion is not addressed |
| 2016 [73] | Variation of prediction residual (PR) and the number of intra macro-blocks (NIMBs) | Self-created videos | - | 81% | 88% | F1 score 84% | Fails when the number of deleted frames is small and the video is in slow motion |
| 2016 [72] | Motion residual + wavelet | 22 raw YUV videos | 92.73% | - | - | ROC | Does not work well at low compression rates |
| 2013 [43] | Optical flow | TRECVID Content-Based Copy Detection (CBCD) scripts are used with 3000 for frame insertion and deletion in the KTH database | - | 95.43% | 95.34% | - | Does not localize the forged region |
| 2016 [70] | Optical flow + IPE (intra-prediction elimination) process | Group 1: 44 raw YUV files with slow-motion content; group 2: 78 raw YUV files with quick motion; 5, 10, 15, 20, 25 or 30 frames deleted | - | - | - | TPR 90% | Not applicable to slow-motion videos; high false alarm rate for long video sequences; no machine learning scheme is applied |
| 2017 [76] | OF gradient + PR (prediction residual) gradient | Raw videos from DIC Punjab University (surveillance camera and Xperia Z2 mobile videos); tampered frames: 1% to 6% | Detection accuracy 83%; localization accuracy 80% | - | - | - | Performance decreases on videos with high illumination |
| 2018 [85] | Correlation coefficient + optical flow | 115 downloaded videos, self-forged with 10, 20 and 40 duplicated frames | - | 5.5% | 98.5% | - | Poor performance on videos from static cameras |
| 2019 [99] | Frame prediction error + optical flow | 200 videos | 87.5% | - | - | - | Does not work well for videos shorter than 7 s |
| Methods Based on Pixels and Texture Features | | | | | | | |
| 2013 [12] | DCT + DFT + DWT from prediction error sequence (PES) + SVM, ensemble-based classifier | 20 videos; resolution 176 × 144 | - | - | - | ROC | Limited to frame-deletion detection; does not localize the forged region; small training and test datasets |
| 2013 [48] | Tamura texture + Euclidean distance | 10 videos captured with stationary and moving hand-held cameras; resolution 640 × 480; 25–30 fps | - | - | 99.6% | - | Weak at detecting highly similar frames; weak at detecting duplicated frames when sharpness changes slowly |
| 2015 [66] | Local binary pattern (LBP) + correlation | 599 videos at 25 fps in five categories: original, 25 frames inserted, 100 frames inserted, 25 frames deleted, 100 frames deleted | - | 85.80% | 88.16% | - | Forged region is not localized; not tested at different compression rates |
| 2018 [88] | SVD + Euclidean distance | 10 videos | 99.01% | 100% | 98.07% | - | Does not work on grayscale videos |
| 2019 [100] | Haralick features | 30 videos from different sources with 10, 20, 30, 40 and 50 frames inserted or deleted | - | 96% | 86% | F1 score 91% | Does not work in the presence of compression |
| Methods Based on Deep Learning | | | | | | | |
| 2019 [109] | I3D + ResNet network | Media Forensics Challenge dataset (MFC18): 231 videos in MFC-Eval and 1036 videos in MFC-Dev; static-camera raw videos from VIRAT: 12 videos; self-recorded iPhone 4 videos: 17 videos; segments of 0.5 s, 1 s, 2 s, 5 s and 10 s inserted into the same source video | - | - | - | AUC 99.9% | Performance degrades when a video contains multiple sequences of duplicated frames |
| 2019 [106] | CNN | NIST 2018 Media Forensics Challenge 2 (video manipulation detection task): 116 tampered and 116 original videos; InVID Fake Video Corpus: 35 real and 33 fake videos | 85% | - | - | - | Requires labeled video data; localization is incomplete |
| 2020 [136] | CNN | Face Forensics VTD dataset provided by [81] | - | - | - | MCC 0.67; F1 0.81 | Only applicable to videos with a fixed GOP size and static background; handles only a single type of tampering |
| 2021 [118] | 2D-CNN + SSIM + RBF-MSVM | Raw videos from the VIRAT, SULFA, LASIESTA and IVY datasets | - | - | - | TPR for insertion, deletion and duplication: 0.999, 0.987, 0.985 | No cross-dataset evaluation on an unseen dataset |
| Others | | | | | | | |
| 2014 [56] | Variation of prediction footprint | 14 videos; resolution 352 × 288 pixels; 1250 frames; 100, 300 and 700 frames removed and inserted | 91% | - | - | - | Does not localize the forged object; small dataset |
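Several temporal methods in the table above build on the correlation between frames ([28], [54], [98]). The sketch below illustrates only that general idea and is not a reproduction of any cited algorithm: it computes the Pearson correlation coefficient between consecutive grayscale frames and flags positions where it drops sharply, which can mark a frame-insertion or frame-deletion boundary. It is written in pure Python for self-containment; the list-of-rows frame representation, the k-sigma threshold and all function names are assumptions for illustration.

```python
import math
from statistics import mean, pstdev
from typing import List, Sequence

# Assumption: a grayscale frame is a sequence of rows of pixel intensities.
Frame = Sequence[Sequence[float]]


def pearson(a: Frame, b: Frame) -> float:
    """Pearson correlation coefficient between two equally sized frames."""
    xs = [p for row in a for p in row]
    ys = [p for row in b for p in row]
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        # Flat frames: two flat frames count as identical, otherwise uncorrelated.
        return 1.0 if vx == vy else 0.0
    return cov / math.sqrt(vx * vy)


def inter_frame_correlations(frames: List[Frame]) -> List[float]:
    """corr[i] is the correlation between frame i and frame i + 1."""
    return [pearson(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]


def suspicious_boundaries(frames: List[Frame], k: float = 2.0) -> List[int]:
    """Indices i where corr[i] falls more than k population standard
    deviations below the mean correlation (hypothetical k-sigma rule)."""
    corr = inter_frame_correlations(frames)
    if len(corr) < 2:
        return []
    mu, sigma = mean(corr), pstdev(corr)
    if sigma == 0:
        return []  # uniform correlation: no boundary stands out
    return [i for i, c in enumerate(corr) if c < mu - k * sigma]


def shifted(frame: Frame, t: float) -> List[List[float]]:
    """Add a uniform brightness offset t to every pixel."""
    return [[p + t for p in row] for row in frame]


# Synthetic demo: 10 frames of a brightening gradient, one unrelated frame
# inserted, then 10 more gradient frames.
base = [[4 * r + c for c in range(4)] for r in range(4)]
other = [[(4 * r + c) * 7 % 16 for c in range(4)] for r in range(4)]
video = [shifted(base, t) for t in range(10)] + [other] + [shifted(base, t) for t in range(10, 20)]
print(suspicious_boundaries(video))  # [9, 10]
```

Because the Pearson coefficient ignores uniform brightness offsets, the gradual brightening in the demo does not trigger a detection; only the two transitions around the inserted frame do. Real footage with camera motion or scene cuts would need a more robust decision rule, for example pairing correlation coefficients with an SVM as in [54].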

| Dataset Name and Reference | Number of Videos | Video Length (in s) | Video Source | Static/Moving Camera | Type of Video Forgery | Scenario (Morning, Evening, Night, Fog) | Available in Public Domain |
|---|---|---|---|---|---|---|---|
| TDTVD, Panchal, Shah et al., 2020 [139] | Original: 40; tampered: 210 | 6–18 s | SULFA, YouTube | Static and moving | Frame insertion, deletion, duplication, and smart tampering | N/A | Yes |
| Pu, Huang et al., 2021 [119] | Original + tampered: 200 | N/A | CDNET Video Library, SULFA, VFDD Video Library (Video Forgery Detection Database of South China University of Technology Version 1.0) | Static and moving | Frame deletion, insertion, replacement, and copy-move | N/A | No |
| Shelke and Kasana, 2021 [120] | Original: 30; tampered: 30 | N/A | SULFA, REWIND, and VTL | Static and moving | Frame insertion, deletion, duplication, and frame splicing | N/A | No |
| Test Database, Ulutas et al. [140] | Original + tampered: 31 | N/A | SULFA and different movie scenes | Static and moving | Frame duplication | N/A | Yes |
| Le, Almansa et al., 2017 [141] | Tampered: 53 | N/A | N/A | Static and moving | Video inpainting | N/A | Yes |
| VTD dataset, Al-Sanjary, Ahmed et al., 2016 [142] | Original: 7; tampered: 26 | 14–16 s | YouTube | Static and moving | Copy-move, frame swapping, splicing | N/A | Yes |
| Feng, Xu et al., 2016 [70] | Original: 122; tampered: 732 | N/A | YUV files: http://trace.eas.asu.edu/yuv/, http://media.xiph.org/video/derf/, ftp://ftp.tnt.uni-hannover.de/pub/svc/testsequences/ *, http://202.114.114.212/quick_motion/yuv_download.html * (accessed on 16 November 2021) | Static and moving | Frame deletion | N/A | No |
| SYSU-OBJFORG, Chen et al. [61] | Total: 100 | 11 s | Commercial surveillance cameras | Static | Object-based | N/A | No |
| Su, Huang et al., 2015 [64] | Original + tampered: 20 | N/A | SONY DSC-P10 | Static | Copy-move | N/A | No |
| REWIND PROJECT, Bestagini et al., 2013 [45] | Original: 10; tampered: 10 | 7–19 s | Canon SX220, Nikon S3000, Fujifilm S2800HD | Static | Copy-move | N/A | Yes |
| SULFA Dataset, Qadir, Yahaya et al., 2012 [137] | Original: 166; tampered: 5 | 4–18 s | Canon SX220, Nikon S3000, Fujifilm S2800HD | Static | Copy-move | N/A | No * |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Akhtar, N.; Saddique, M.; Asghar, K.; Bajwa, U.I.; Hussain, M.; Habib, Z. Digital Video Tampering Detection and Localization: Review, Representations, Challenges and Algorithm. Mathematics 2022, 10, 168. https://doi.org/10.3390/math10020168
