1. Introduction

The paradigm of building large-scale foundation models, first established in Natural Language Processing (NLP), has now firmly taken root in Computer Vision, aiming to create single, powerful models capable of addressing a wide array of visual tasks. Among the myriad of visual tasks, image segmentation, which involves fine-grained, pixel-level understanding, stands as a cornerstone for in-depth scene perception. A class-agnostic segmentation model capable of segmenting any object in any image is not only a long-sought goal in academia but also a core technology driving critical applications such as autonomous driving and medical image analysis. The advent of the Segment Anything Model (SAM) [1] in 2023, with its remarkable zero-shot generalization capabilities, has propelled the concept of a class-agnostic segmentation model to new heights.

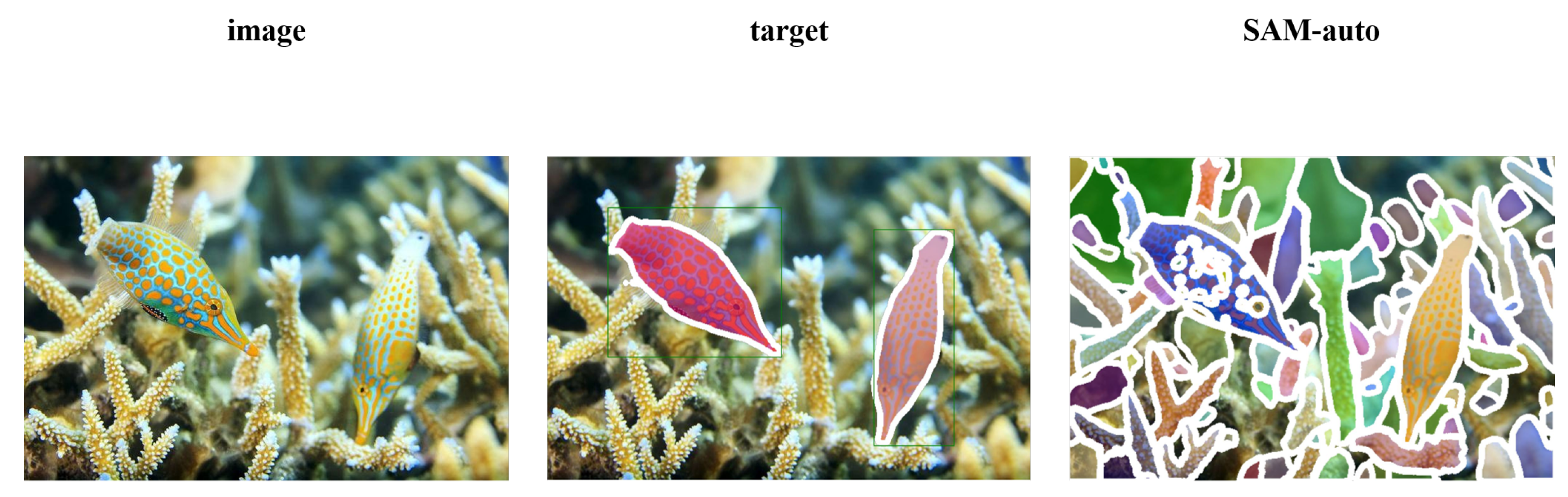

The remarkable power of SAM stems from its interactive, prompt-based design, allowing it to segment virtually any object specified by user-provided cues such as points, boxes, or masks. However, a significant gap exists between its “segment everything” automatic mode and the needs of many real-world applications that require fully automatic segmentation of specific objects of interest. The automatic mode of SAM employs an exhaustive strategy, placing a dense grid of points across the image without any localization guidance. While enabling prompt-free operation, this design philosophy leads to two critical deficiencies: first, localization blindness, where the model fails to identify and prioritize salient objects, resulting in fragmented and semantically incoherent masks (Figure 1); and second, computational redundancy, where resources are expended on irrelevant background regions. Consequently, its performance on targeted segmentation tasks like Dichotomous Image Segmentation (DIS) [2] is severely compromised. As research [3,4] shows, the automatic mode’s performance is drastically inferior to its prompt-guided counterpart, even when the latter is provided with a simple ground-truth bounding box (GT-Bbox). This highlights a core bottleneck: SAM’s original automatic design lacks the intrinsic capability for targeted, autonomous localization.

To overcome these limitations, existing research has predominantly explored two directions: enhancing precision through more sophisticated prompt engineering or user interaction [5,6], or adapting the model to specific domains via adapters or fine-tuning [7,8]. The former, however, compromises the model’s autonomy, while the latter risks impairing its valuable generalization ability.

Diverging from these approaches, our work addresses the problem at a more fundamental level. Instead of generating sparse external prompts or performing post hoc refinement, we propose to redesign the information flow within the automatic segmentation process itself. We introduce an internal, end-to-end guidance mechanism that operates without manual prompts. This mechanism first performs a coarse global analysis to efficiently generate a preliminary mask identifying salient regions, and then leverages this mask to guide a fine, detailed segmentation within those specific areas.

To this end, we propose a Pre-Mask Guided framework for SAM (PMG-SAM). The central concept is inspired by human cognitive processes: first, a lightweight, dual-branch encoder rapidly generates a global Pre-Mask that identifies potential objects of interest within the image. Subsequently, this Pre-Mask is utilized as a high-quality internal prompt to guide a powerful decoder in performing fine-grained contour segmentation. This coarse-to-fine mechanism replaces the exhaustive search with globally informed guidance, addressing the position sensitivity issue at its source and opening a new avenue for designing efficient, class-agnostic segmentation models. In summary, the contributions of this paper are fourfold:

We propose a “locate-and-refine” paradigm implemented through Pre-Mask Guidance. This approach shifts SAM’s automatic mode from an exhaustive grid-based search to a targeted process, directly addressing the core issue of localization blindness by providing a dense, global prior before segmentation.

We present an effective implementation of this paradigm, featuring a dual-branch encoder that leverages the complementary strengths of Transformer and CNN architectures. A novel Dense Residual Fusion Module (DRFM) is designed to synergistically fuse these features, generating a high-quality Pre-Mask that is crucial for the guidance mechanism.

To enhance boundary details in the “refine” stage, we integrate a high-resolution feature injection pathway. This pathway preserves crucial spatial information from the encoder, leading to more precise segmentation contours.

We conduct extensive experiments demonstrating the effectiveness and efficiency of our approach. Our fully automatic model not only significantly outperforms the standard auto-modes of SAM/SAM2 but also surpasses their GT-Bbox prompted modes. This is achieved with only M trainable parameters, showcasing a superior balance of performance and efficiency and validating the power of our proposed paradigm.

2. Related Work

2.1. Visual Backbones for Segmentation

The performance of segmentation models is heavily reliant on the quality of features extracted by their visual backbone. Modern architectures often face a trade-off between capturing fine-grained local details and robust global context.

Hierarchical Vision Transformers, such as Hiera [9], represent a significant advancement in this area. Their strength is twofold. Architecturally, by employing window-based local attention in their early stages, they excel at preserving high-frequency spatial information like edges and textures. Furthermore, their pre-training under the masked autoencoder (MAE) framework [10] is crucial. This self-supervised strategy forces the model to reconstruct randomly masked image patches, compelling it to learn rich and robust local representations without relying on extensive labeled data. This combination of an efficient architecture and a powerful pre-training scheme makes them ideal for tasks requiring precise boundary delineation.

On the other hand, CNN-based architectures, particularly those with a U-shaped structure like U-Net [11], have long been the standard for semantic segmentation. Their design of progressive downsampling to capture semantic information followed by upsampling to recover spatial resolution makes them powerful at abstracting the global context of an image. U2-Net [12] further enhances this paradigm with its nested ReSidual U-blocks (RSUs), enabling even deeper feature abstraction across multiple scales. Our work leverages the complementary nature of these two architectural paradigms by designing a novel fusion mechanism to combine their respective strengths.

2.2. Advances in Improving SAM

Research on improving SAM has predominantly advanced along four key directions: enhancing its automation, broadening its domain adaptability, refining its output precision, and fostering synergy with other foundation models.

Automated Prompt Generation. The core interactive mechanism of SAM relies on manual prompts, which limits its efficiency in fully automated applications. Consequently, a significant line of research focuses on developing techniques for automatic prompt generation. A mainstream approach involves training an auxiliary network to predict prompt cues like points or boxes [13,14], or refining an initial set of prompts into a more representative sparse point collection [15]. Another strategy leverages the semantic understanding of multimodal large models, such as CLIP [16], to generate guiding signals like pseudo-points, pseudo-masks, textual, or auditory prompts [17,18], providing SAM with initial localization information. However, these methods typically generate sparse prompts (points or boxes) or depend on implicit guidance from external models, limiting their ability to provide a global, structurally rich prior for the segmentation target.

SAM Adaptation and Enhancement. To adapt SAM for specialized domains like medical imaging, remote sensing, and video, research has proceeded along two main avenues: Parameter-Efficient Fine-Tuning (PEFT) and architectural modification. PEFT methods, such as Adapters and Low-Rank Adaptation (LoRA), aim to adjust SAM’s parameters with minimal computational overhead [19,20]. Some studies have also designed novel fine-tuning mechanisms, like prompt-bridging, to balance the optimization between the encoder and decoder [21]. Architectural enhancements, on the other hand, focus on tailoring the model to specific data types. This includes introducing temporal modeling modules for video [22,23,24] and integrating state-space models like Mamba [25] to capture domain-specific spatiotemporal dependencies. These works concentrate on adjusting the model’s internal parameters or structure to accommodate new tasks or data modalities.

Output Quality Refinement. To address the coarse boundaries of SAM’s output masks, research has primarily adopted post-processing refinement strategies. This involves introducing an additional network to polish the initial masks generated by SAM [26]. Alternatively, some approaches focus on decoder optimization by fusing multi-scale features or improving feature aggregation methods to enhance the detail quality of the native output [19,20,27]. Such methods are characteristically post hoc, concentrating on correcting or enhancing the segmentation result after it has been generated.

Collaboration with Other Foundation Models. Beyond single-model adaptation, a body of work explores the deep integration of SAM with other large models, notably CLIP, at a systemic level. This includes constructing explicit pipelines, such as using CLIP for localization followed by SAM for segmentation [17], deconstructing SAM and coupling it with a CLIP encoder to create an efficient single-stage open-vocabulary segmentation architecture [15], or utilizing SAM for contextual segmentation while introducing uncertainty modeling to improve robustness [28]. These efforts demonstrate SAM’s potential as a versatile component within more complex, multimodal systems.

2.3. Positioning of Our Work

Our work carves a distinct and novel path within this research landscape. While existing research has largely focused on two avenues—either enhancing the promptable mode through automated prompt generation or adapting SAM’s internal architecture for specific domains—our work addresses a more fundamental issue: the inherent limitation of the automatic mode for targeted segmentation tasks.

Unlike automated prompt generation methods that aim to replace manual clicks with predicted sparse cues like points or boxes, our Pre-Mask Guided paradigm introduces a new, fully automatic pipeline. It provides a dense, explicit mask rich in spatial and structural priors, shifting the operational model from a “prompt-and-segment” process to an end-to-end “locate-and-refine” workflow. This approach directly targets the localization blindness of the grid-based “segment everything” approach. In contrast to methods that fine-tune SAM’s parameters or add post-processing steps to refine coarse masks, our framework operates at the input level of the decoder. By furnishing a high-quality shape prior before the main segmentation process begins, we preemptively guide the model towards a precise solution. In essence, while other methods focus on what to prompt SAM with, our work redefines how SAM’s automatic mode should fundamentally operate. This makes PMG-SAM an architectural innovation rather than a prompting technique, a domain-specific adaptation, or a corrective afterthought, and offers a blueprint for building general-purpose automatic segmentation models that are both efficient and target-aware.

3. Methods

The native prompt-free mode of SAM is hampered by two fundamental limitations: a localization sensitivity defect and a redundant computation bottleneck. To address these challenges head-on, we propose PMG-SAM, a framework that replaces SAM’s exhaustive grid-based search with a targeted, cognition-inspired “locate-and-refine” paradigm. This section first revisits the architecture and limitations of SAM in Section 3.1, before detailing the design and components of our proposed solution in Sections 3.2 to 3.5.

3.1. Preliminaries: SAM

3.1.1. Architectural Framework

SAM comprises three core components: an image encoder, a prompt encoder, and a mask decoder.

The image encoder, accounting for the majority of model parameters, employs a Vision Transformer (ViT) [29] pre-trained via Masked Autoencoding (MAE) [10] to process high-resolution inputs, with each image undergoing single-pass feature extraction.

The prompt encoder is a core component that establishes SAM as a multimodal architecture, processing two distinct prompt categories: dense and sparse. Dense prompts, which consist of mask inputs, are processed by a convolutional encoder, and their embeddings are added element-wise to the image embeddings. To handle cases where no mask is provided, the model incorporates a learnable “no-mask” embedding. This special token serves as a placeholder to signify the absence of a mask, ensuring the architectural consistency of the model. In contrast, sparse prompts encompass three modalities: points and boxes, which are encoded as positional embeddings combined with modality-specific learnable embeddings, and textual prompts, which are encoded with CLIP’s text encoder.

The mask decoder integrates image features and prompt embeddings through bidirectional cross-attention and self-attention mechanisms, subsequently employing a multilayer perceptron (MLP) to project output tokens to a dynamic linear classifier that computes foreground probability masks at each spatial location.

3.1.2. Prompt-Free Segmentation

SAM implements prompt-free segmentation through a two-stage cascade. It begins with grid sampling: a default regular grid of point prompts is overlaid on the input image, each grid point is processed to generate candidate object masks, and the procedure is repeated on two levels of cropped, high-resolution image regions using denser point sampling. This is followed by mask refinement through a three-phase filtering mechanism: (1) elimination of edge-affected masks from cropped regions; (2) application of Non-Maximum Suppression (NMS) for local-global mask merging; and (3) quality control via three criteria: retention of masks whose predicted IoU scores exceed a threshold, stability verification by binarizing the soft mask at two offset thresholds and keeping a prediction only when the two resulting masks agree closely (high IoU), and rejection of masks that cover an excessively large fraction of the image area as non-informative.
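To make the filtering stage concrete, the following Python sketch applies the three quality-control criteria to candidate masks represented as grids of logits. It is an illustration under assumptions rather than SAM’s own code: the default thresholds mirror the documented defaults of the public `segment_anything` `SamAutomaticMaskGenerator` (`pred_iou_thresh=0.88`, `stability_score_thresh=0.95`, `stability_score_offset=1.0`), `max_area_frac` is a hypothetical cut-off, and the crop-edge and NMS phases are omitted.

```python
from typing import List, Tuple

Logits = List[List[float]]  # one candidate mask as a grid of logits


def stability_score(logits: Logits, mask_threshold: float = 0.0,
                    offset: float = 1.0) -> float:
    """IoU between the mask binarized at threshold+offset and at threshold-offset."""
    high = sum(v > mask_threshold + offset for row in logits for v in row)
    low = sum(v > mask_threshold - offset for row in logits for v in row)
    # The high-threshold mask is contained in the low-threshold mask,
    # so intersection = high and union = low.
    return high / low if low else 0.0


def keep_mask(logits: Logits, pred_iou: float,
              pred_iou_thresh: float = 0.88,
              stability_thresh: float = 0.95,
              max_area_frac: float = 0.95) -> bool:
    """Apply the three quality criteria to one candidate mask.

    max_area_frac is a hypothetical value chosen for illustration.
    """
    area = sum(v > 0.0 for row in logits for v in row)
    total = len(logits) * len(logits[0])
    return (pred_iou > pred_iou_thresh
            and stability_score(logits) >= stability_thresh
            and area / total < max_area_frac)


def filter_candidates(candidates: List[Tuple[Logits, float]]) -> List[int]:
    """Indices of (logits, predicted IoU) candidates that survive filtering."""
    return [i for i, (m, s) in enumerate(candidates) if keep_mask(m, s)]
```

In this sketch, a crisp mask with a high predicted IoU survives, whereas a mask covering the whole image, or one whose logits sit close to the binarization threshold, is discarded. Note that none of these criteria uses any notion of which object the user actually cares about, which is the localization blindness discussed next.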

This paradigm reveals two critical limitations. First, it suffers from a localization sensitivity defect: grid-based dense prompts are vulnerable to semantic fragmentation, leading to erroneous multi-fragment segmentation of single objects. This observation is corroborated by the work of Sun et al. [5], which demonstrates that SAM’s native geometric prompts struggle in complex scenes precisely because they lack semantic guidance. Second, the paradigm introduces a redundant computation bottleneck. The per-point cross-attention in the decoder has super-linear complexity, which leads to a computational explosion when high-resolution grids are employed, violating linear complexity expectations. The drive to mitigate such computational inefficiencies is a key motivation in related research, such as the model merging approach proposed by Wang et al. [30] to create a more efficient, unified model.

3.2. Architecture

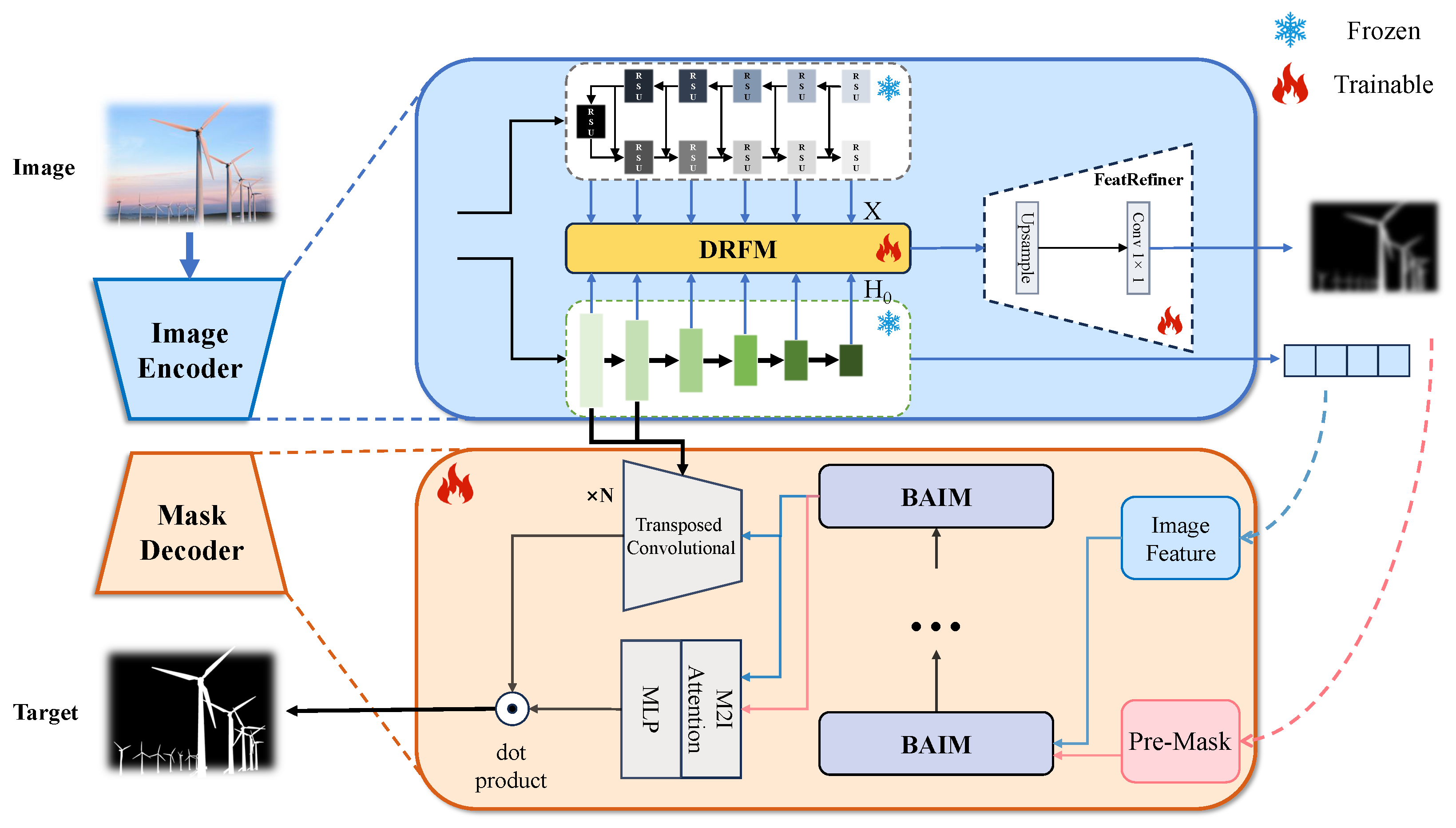

To overcome the inherent limitations of SAM’s automatic mode, namely its localization blindness and computational inefficiency, we introduce a Pre-Mask Guided SAM (PMG-SAM). Our approach is inspired by the “coarse-to-fine” cognitive mechanism characteristic of human vision. The overall architecture of PMG-SAM, illustrated in Figure 2, is streamlined and comprises two primary components: a novel image encoder and an enhanced mask decoder. To operationalize our proposed “locate-and-refine” paradigm, the architecture is conceptually divided into two functional stages: a Pre-Mask Generator, comprising our dual-branch encoder and fusion modules, which performs the “locate” step; and a Guided Mask Decoder, which performs the “refine” step by leveraging the generated Pre-Mask for precise segmentation.

The core innovation of our framework lies in the Pre-Mask guided paradigm. Specifically, we replace SAM’s original image encoder, together with the grid of point prompts on which its automatic mask generation relies, with our specialized encoder. This new encoder is designed to automatically generate a high-quality, dense Pre-Mask from the input image. The Pre-Mask serves as a global, structural prompt, providing a strong prior about the locations and shapes of potential objects. It is then fed into our enhanced mask decoder to guide the segmentation process. To further boost precision, the decoder is also augmented with high-resolution features extracted by the new encoder. This dual-input design, combining the high-level semantic guidance of the Pre-Mask with the edge detail carried by the features, enables the production of highly accurate and detailed segmentation masks. By replacing an exhaustive strategy with a learnable, content-aware guidance mechanism, PMG-SAM shifts the operational process from exhaustive search to targeted refinement.
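The data flow described above can be summarized in a short, hypothetical Python sketch. The names `pre_mask_generator`, `guided_decoder`, `EncoderOutput`, and the toy stand-ins are illustrative placeholders rather than the authors’ code, and the maps are plain nested lists so that the example runs without any deep-learning framework.

```python
from dataclasses import dataclass
from typing import Callable, List

Map = List[List[float]]


@dataclass
class EncoderOutput:
    pre_mask: Map           # dense global prior produced in the "locate" stage
    image_features: Map     # features the decoder attends to
    high_res_features: Map  # early-stage features injected for boundary detail


def pmg_sam_forward(image: Map,
                    pre_mask_generator: Callable[[Map], EncoderOutput],
                    guided_decoder: Callable[[Map, Map, Map], Map]) -> Map:
    # Locate: a single encoder pass yields the Pre-Mask; no point grid is enumerated.
    enc = pre_mask_generator(image)
    # Refine: one decoder call guided by the Pre-Mask and high-resolution features.
    return guided_decoder(enc.pre_mask, enc.image_features, enc.high_res_features)


# Toy stand-ins (hypothetical) that only exercise the data flow.
def toy_generator(image: Map) -> EncoderOutput:
    pre_mask = [[1.0 if v > 0.5 else 0.0 for v in row] for row in image]
    return EncoderOutput(pre_mask, image, image)


def toy_decoder(pre_mask: Map, feats: Map, high_res: Map) -> Map:
    return [[p * f for p, f in zip(pr, fr)] for pr, fr in zip(pre_mask, feats)]


print(pmg_sam_forward([[0.2, 0.9], [0.7, 0.1]], toy_generator, toy_decoder))
# [[0.0, 0.9], [0.7, 0.0]]
```

The design point the sketch isolates is the call count: the decoder runs once per image, guided by a dense prior, whereas the grid-based automatic mode issues one prompt per grid point.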

To effectively capture the full spectrum of visual information required for high-quality segmentation, our feature extractor is designed with a dual-pathway architecture. This design is motivated by the observation that different architectural paradigms excel at capturing distinct types of features: hierarchical transformers are superior for fine-grained local details, while U-Net-like structures are powerful for abstracting global semantic context. For the local feature pathway, we adopt Hiera as our backbone, replacing the original ViT from SAM. The choice of Hiera is deliberate, owing to its powerful combination of architectural design and pre-training strategy. Its hierarchical structure with local attention is inherently suited for capturing fine-grained details, a capability significantly amplified by its MAE-based pre-training. This training regime compels the model to develop a profound understanding of local patterns and textures, which aligns with findings from [31] on the importance of local features for precise segmentation. Concurrently, for the global feature pathway, we employ a U2-Net-like architecture. Its deeply supervised, nested U-structure is highly adept at progressive semantic abstraction, yielding robust global feature representations that capture the overall structure and context of target objects.

The core novelty of our approach lies not in the individual backbones, but in their synergistic fusion. To combine the complementary strengths of Hiera’s local acuity and the U2-Net pathway’s global understanding, we introduce our Dense Residual Fusion Module (DRFM). This module systematically integrates feature maps from both pathways. The process involves three key stages: (1) extraction of corresponding feature maps from both architectures (six pairs by default); (2) pairwise feature fusion with DRFM (detailed in Section 3.3); and (3) processing of the fused features through FeatRefiner (detailed in Section 3.4).

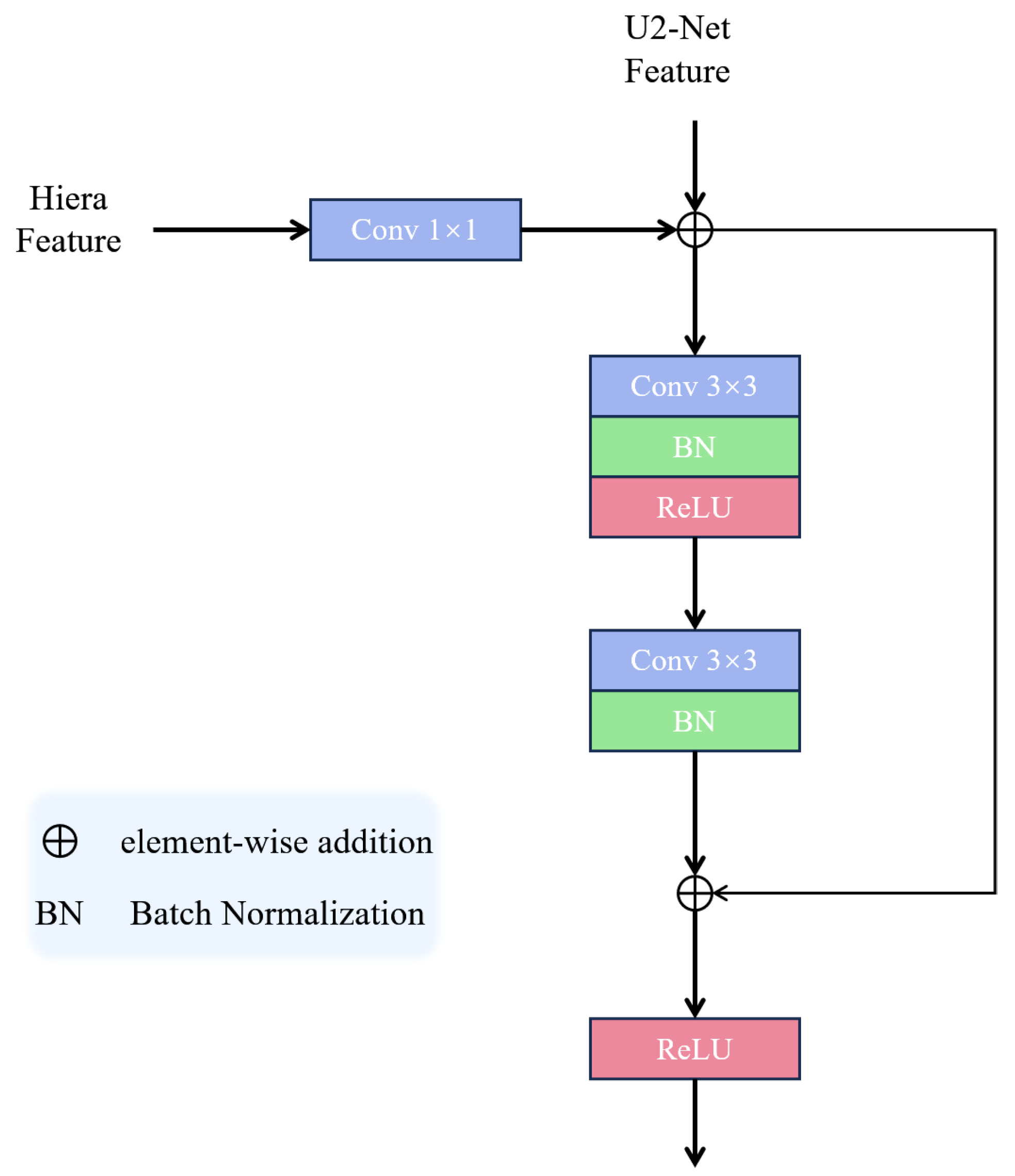

3.3. Dense Residual Fusion Module

Dense Residual Fusion Module (DRFM) is designed to effectively merge the strengths of the Hiera and U2-Net feature streams. We adopt a layer-wise fusion strategy, where features from corresponding layers of the two models are fused pairwise. This approach is rooted in the belief that integrating local, detail-oriented features (from Hiera) with multi-scale semantic features (from U2-Net) at the same hierarchical level ensures meaningful feature interaction. In contrast to a global fusion approach, this targeted strategy prevents the mixing of features from disparate semantic levels, which could lead to information misalignment and redundant interference. Furthermore, by confining interactions to corresponding layers, we significantly reduce computational overhead.

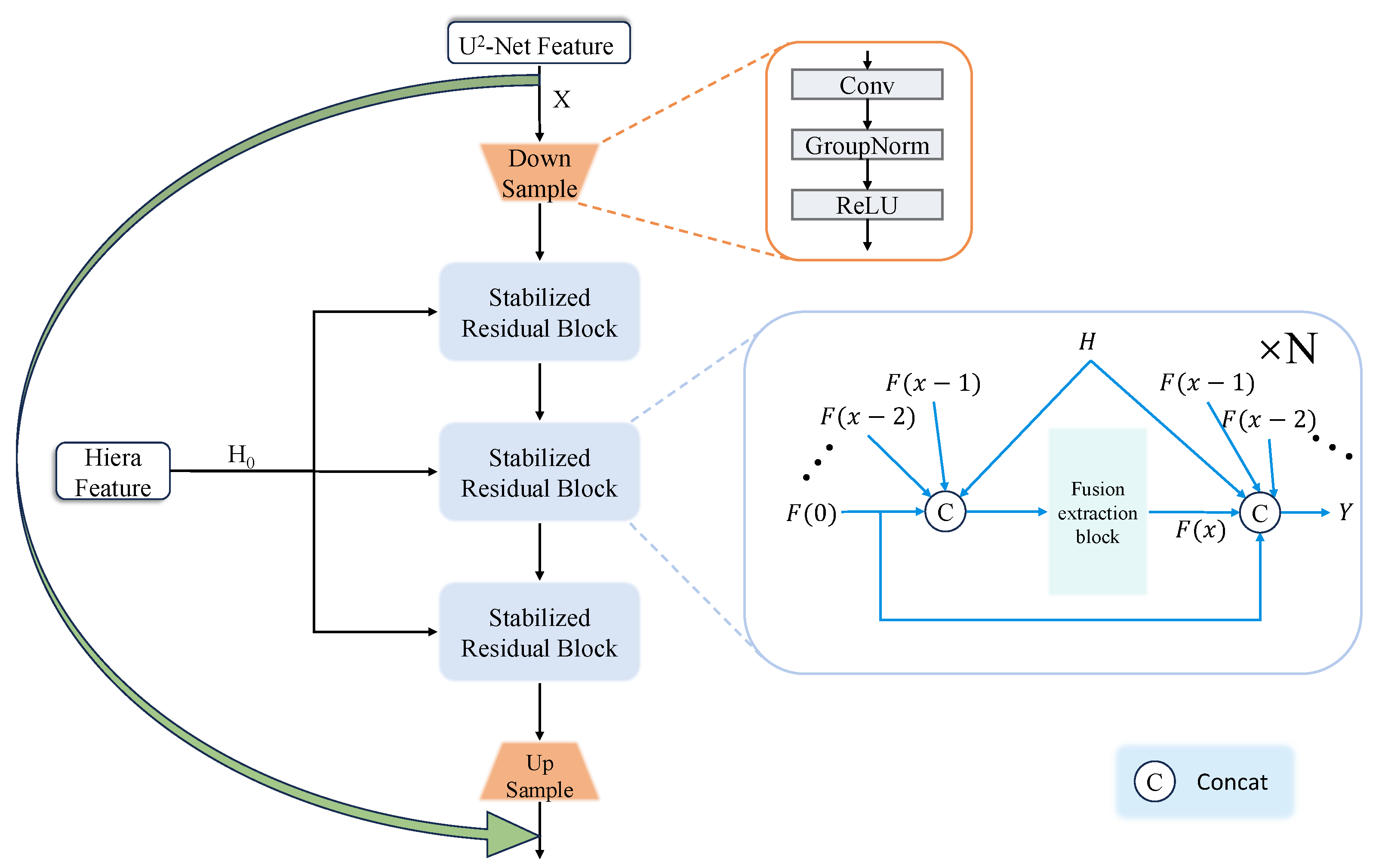

As depicted in

Figure 3, the operational flow of DRFM designates a feature map from a U

2-Net layer (U

2-Net Feature) as the primary backbone for fusion. This feature is progressively refined by passing through a cascade of

Stabilized Residual Blocks (SRBs), where

is set to three by default. Within each SRB, the corresponding feature map from the Hiera model (H-Feature) is integrated. Finally, the output from the SRB cascade is scaled and added back to the original U

2-Net Feature via a long-range skip connection. As previously discussed, this process enriches the robust global context from U

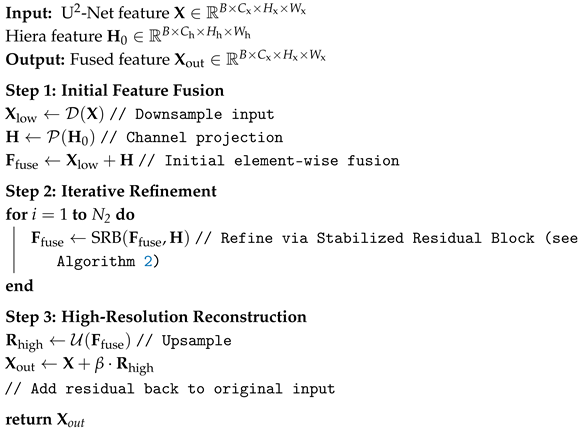

2-Net with high-fidelity local details from Hiera. Consequently, the fused features facilitate the generation of a pre-mask that possesses both precise localization and complete edge information, thereby providing superior guidance for the mask decoder to extract a highly refined mask of the object of interest. The overall fusion process of DRFM is summarized in Algorithm 1.

Algorithm 1: The Overall Pipeline of DRFM.

![Sensors 26 00365 i001]()
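A minimal Python sketch of the DRFM data flow may help fix the order of operations. It assumes that each paired feature map has already been flattened to a list of equal length, treats each SRB as an opaque callable (`SRBFn`), and uses a hypothetical residual scale of `0.1`, since the text does not state the scaling value; the same stand-in SRBs are shared across levels only for brevity.

```python
from typing import Callable, List

Vec = List[float]                  # a feature map flattened to one list
SRBFn = Callable[[Vec, Vec], Vec]  # stand-in for a Stabilized Residual Block


def drfm(u2net_feat: Vec, h_feat: Vec, srbs: List[SRBFn],
         scale: float = 0.1) -> Vec:
    """Fuse one U2-Net/Hiera feature pair; the U2-Net feature is the backbone.

    `scale` is a hypothetical value for the residual scaling factor.
    """
    if len(u2net_feat) != len(h_feat):
        raise ValueError("paired features must have the same shape")
    x = u2net_feat
    for block in srbs:        # cascade of SRBs; each one injects the H-Feature
        x = block(x, h_feat)
    # Long-range skip connection: scaled cascade output added to the input.
    return [u + scale * v for u, v in zip(u2net_feat, x)]


def fuse_all_levels(u2net_feats: List[Vec], h_feats: List[Vec],
                    srbs: List[SRBFn]) -> List[Vec]:
    """Layer-wise, pairwise fusion: level i of U2-Net only meets level i of Hiera."""
    return [drfm(u, h, srbs) for u, h in zip(u2net_feats, h_feats)]
```

Because `fuse_all_levels` zips the two feature lists, features from different hierarchical levels never interact, which is the layer-wise design choice argued for at the start of this section.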

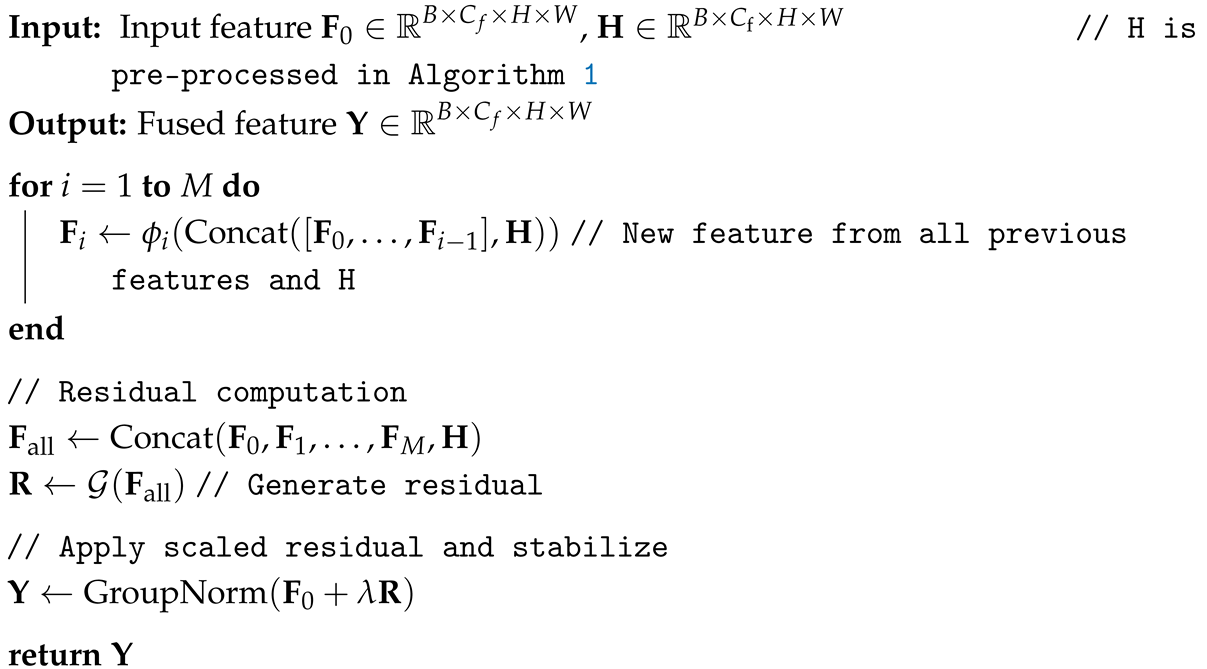

The internal architecture of the Stabilized Residual Block (SRB) is also illustrated in Figure 3. An SRB takes an input feature and the corresponding H-Feature H. It performs M iterations of residual fusion, with M defaulting to four in our implementation. A key characteristic of this process is its dense connectivity: the new feature at each iteration is derived from a residual connection involving all preceding features in the block as well as the H-Feature H. This mechanism is termed a “Dense Residual”. The total accumulated residual from these iterations is then scaled and added to the original input, followed by a Group Normalization layer to produce the final output Y. This scaling of the residual, applied both in the overarching DRFM structure and within each SRB, serves as a crucial stabilization technique to prevent gradient explosion during training. The internal fusion process of the SRB is detailed in Algorithm 2.

Algorithm 2: The details of SRB.

![Sensors 26 00365 i002]()
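The dense residual update inside an SRB can be sketched in plain Python as follows. This is not the authors’ layer: the learned fusion operator is replaced by a hypothetical fixed function `fuse` (a tanh of the mean of all earlier states plus the H-Feature), GroupNorm is applied without its learnable affine parameters, and `scale` and `num_groups` are illustrative values. What the sketch does preserve is the structure described above: M iterations, each depending on every preceding state and on H, followed by a scaled accumulated residual and a normalization.

```python
import math
from typing import List

Feature = List[List[float]]  # [channel][flattened spatial position]


def add(a: Feature, b: Feature, w: float = 1.0) -> Feature:
    """Element-wise a + w * b."""
    return [[x + w * y for x, y in zip(ca, cb)] for ca, cb in zip(a, b)]


def fuse(states: List[Feature], h: Feature) -> Feature:
    """Hypothetical stand-in for a learned layer that sees ALL earlier states and H."""
    n = len(states)
    return [[math.tanh(sum(s[c][i] for s in states) / n + h[c][i])
             for i in range(len(h[c]))] for c in range(len(h))]


def group_norm(x: Feature, num_groups: int, eps: float = 1e-5) -> Feature:
    """GroupNorm without the learnable affine parameters."""
    if len(x) % num_groups:
        raise ValueError("channel count must be divisible by num_groups")
    per = len(x) // num_groups
    out: Feature = []
    for g in range(num_groups):
        group = x[g * per:(g + 1) * per]
        vals = [v for ch in group for v in ch]
        mean = sum(vals) / len(vals)
        var = sum((v - mean) ** 2 for v in vals) / len(vals)
        out += [[(v - mean) / math.sqrt(var + eps) for v in ch] for ch in group]
    return out


def srb(x: Feature, h: Feature, m: int = 4, scale: float = 0.2,
        num_groups: int = 2) -> Feature:
    states = [x]
    total_residual = [[0.0] * len(ch) for ch in x]
    for _ in range(m):                  # M iterations (four by default in the text)
        residual = fuse(states, h)      # dense: depends on every earlier state and H
        states.append(add(states[-1], residual))
        total_residual = add(total_residual, residual)
    # Scale the accumulated residual, add it to the input, then normalize.
    return group_norm(add(x, total_residual, scale), num_groups)
```

Scaling the accumulated residual before adding it keeps the block close to an identity mapping early in training, which is consistent with the stabilization role the text assigns to it.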

3.4. FeatRefiner

The FeatRefiner module is designed with the explicit purpose of processing the multi-scale, hierarchical feature maps generated by the preceding DRFM fusion stages. Its primary function is to distill these diverse representations into a single, cohesive Pre-Mask. The refinement process commences by upsampling all fused feature maps from the different layers to a uniform spatial resolution. This ensures spatial alignment before integration. Subsequently, the resized feature maps are concatenated along the channel axis, creating a high-dimensional composite tensor that aggregates rich information from all semantic levels. To conclude the process, a lightweight convolutional layer is applied to this concatenated tensor. This operation serves the dual purpose of compressing the channel-wise information and effectively extracting the most salient features from the aggregated representation. The final output of this module is the Pre-Mask, which provides a comprehensive initial localization of the object of interest to guide the subsequent mask decoder.
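The three FeatRefiner steps (upsample, concatenate, compress) can be illustrated with the following sketch. It assumes square maps whose sizes differ by integer factors, uses nearest-neighbour upsampling as a stand-in for whatever interpolation the authors employ, and models the lightweight convolution as a 1×1 convolution with hypothetical weights `w` and bias `b`, followed by a sigmoid so that the Pre-Mask reads as a probability map.

```python
import math
from typing import List

Grid = List[List[float]]
FeatureMap = List[Grid]  # [channel][row][col]


def upsample_nearest(g: Grid, size: int) -> Grid:
    """Nearest-neighbour upsampling; assumes square maps and an integer factor."""
    f = size // len(g)
    return [[g[r // f][c // f] for c in range(size)] for r in range(size)]


def feat_refiner(maps: List[FeatureMap], w: List[float], b: float) -> Grid:
    size = max(len(m[0]) for m in maps)  # common (largest) spatial resolution
    # Upsample every channel of every level, then concatenate along channels.
    stacked = [upsample_nearest(ch, size) for m in maps for ch in m]
    if len(stacked) != len(w):
        raise ValueError("need one 1x1-conv weight per concatenated channel")
    # 1x1 convolution (per-pixel weighted sum over channels), then sigmoid.
    return [[1.0 / (1.0 + math.exp(-(b + sum(wk * ch[r][c]
                                             for wk, ch in zip(w, stacked)))))
             for c in range(size)] for r in range(size)]
```

A 1×1 kernel is the simplest layer that both compresses the concatenated channels and mixes information across levels at each pixel; a larger kernel would add spatial context at a higher cost.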

3.5. Mask Decoder

The outputs from the image encoder are subsequently processed by our enhanced mask decoder. This decoder is architecturally defined by a cascade of Bidirectional Attention Interaction Modules (BAIMs), which iteratively refine the representations. As illustrated in the overall framework (Figure 2), after passing through the BAIM stack, the refined image embeddings are upsampled with transposed convolutions. Critically, to enhance the generation of high-resolution details, we inject fine-grained feature maps from the first two stages of the Hiera encoder directly into these transposed convolution layers. Unlike standard ViT features, which may lose high-frequency details, the early stages of Hiera, benefiting from its local attention mechanism and MAE pre-training, preserve rich spatial texture and edge information that proves vital for producing crisp and accurate segmentation boundaries. Finally, an MLP maps the output tokens to a dynamic linear classifier, and the foreground probability at each pixel location is computed with a dot product to yield the final mask.
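The final prediction step, in which an MLP turns an output token into the weights of a per-image linear classifier that is then applied to every pixel embedding, is sketched below. The two-layer ReLU MLP, its weight matrices `w1` and `w2`, and the sigmoid output are assumptions made for illustration.

```python
import math
from typing import List

Vec = List[float]


def mlp(token: Vec, w1: List[Vec], w2: List[Vec]) -> Vec:
    """Hypothetical two-layer MLP: ReLU hidden layer, linear output."""
    hidden = [max(0.0, sum(a * t for a, t in zip(row, token))) for row in w1]
    return [sum(a * h for a, h in zip(row, hidden)) for row in w2]


def predict_mask(token: Vec, w1: List[Vec], w2: List[Vec],
                 pixel_embeddings: List[List[Vec]]) -> List[List[float]]:
    weights = mlp(token, w1, w2)  # classifier weights generated for this image
    # Dot product with each pixel's embedding gives a logit; sigmoid gives a probability.
    return [[1.0 / (1.0 + math.exp(-sum(a * e for a, e in zip(weights, emb))))
             for emb in row] for row in pixel_embeddings]
```

Because the classifier weights are produced from the token rather than learned as fixed parameters, the same decoder can express a different foreground/background boundary for every image.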

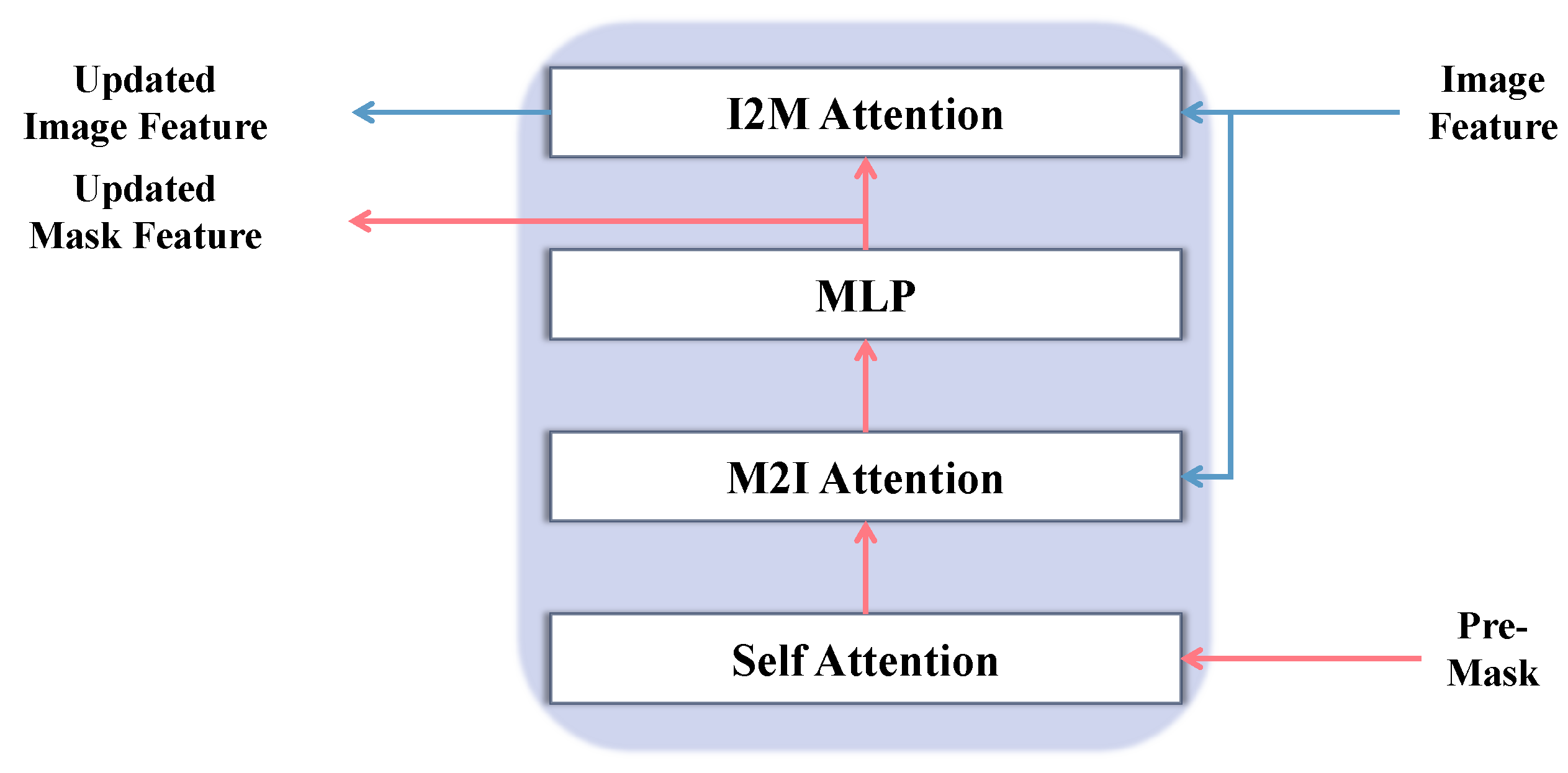

The internal architecture of the BAIM, detailed in Figure 4, is meticulously designed to facilitate a comprehensive, bidirectional information flow. The input Pre-Mask is first partitioned into a grid of non-overlapping square patches, each of which is linearly embedded to form a sequence of mask tokens. The processing pipeline within a single BAIM block commences with a self-attention layer applied to these mask tokens, enabling them to model their internal spatial dependencies. Following this intra-mask modeling, the refined tokens serve as queries in a Mask-to-Image (M2I) cross-attention mechanism, attending to the image features to gather contextually relevant visual information. Each token is then independently updated by a pointwise MLP block. The bidirectional interaction culminates in an Image-to-Mask (I2M) cross-attention layer. In this crucial step, the roles are inverted: the image features now act as queries to attend to the updated mask tokens, allowing the image representation itself to be refined based on the focused guidance from the mask. This complete cycle of self-attention, dual cross-attention, and MLP-based updates constitutes one interaction block, and its outputs are passed to the subsequent BAIM for further iterative refinement, ensuring the guidance from the Pre-Mask is fully leveraged.
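One BAIM iteration can be sketched with single-head attention in plain Python. The sketch keeps only the order of operations described above (patchify the Pre-Mask, self-attention over mask tokens, Mask-to-Image cross-attention, a pointwise MLP, then Image-to-Mask cross-attention) and deliberately omits learned query/key/value projections, multi-head splitting, and normalization layers; flattening each patch stands in for the learned linear embedding, and the ReLU update is a hypothetical stand-in for the MLP.

```python
import math
from typing import List, Tuple

Vec = List[float]


def patchify(mask: List[List[float]], p: int) -> List[Vec]:
    """Non-overlapping p x p patches in row-major order, each flattened.

    Flattening stands in for the learned linear patch embedding.
    """
    h, w = len(mask), len(mask[0])
    if h % p or w % p:
        raise ValueError("mask size must be divisible by the patch size")
    return [[mask[r + i][c + j] for i in range(p) for j in range(p)]
            for r in range(0, h, p) for c in range(0, w, p)]


def attention(queries: List[Vec], keys: List[Vec], values: List[Vec]) -> List[Vec]:
    """Single-head scaled dot-product attention without learned projections."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]  # numerically stable softmax
        z = sum(weights)
        out.append([sum(wt * v[i] for wt, v in zip(weights, values)) / z
                    for i in range(len(values[0]))])
    return out


def plus(x: List[Vec], y: List[Vec]) -> List[Vec]:
    """Residual connection."""
    return [[a + b for a, b in zip(u, v)] for u, v in zip(x, y)]


def baim_block(mask_tokens: List[Vec],
               image_tokens: List[Vec]) -> Tuple[List[Vec], List[Vec]]:
    t = plus(mask_tokens, attention(mask_tokens, mask_tokens, mask_tokens))  # 1. self-attention
    t = plus(t, attention(t, image_tokens, image_tokens))                    # 2. Mask-to-Image
    t = plus(t, [[max(0.0, v) for v in tok] for tok in t])                   # 3. MLP stand-in
    img = plus(image_tokens, attention(image_tokens, t, t))                  # 4. Image-to-Mask
    return t, img
```

Stacking BAIMs amounts to feeding the returned pair back into `baim_block`; both the mask tokens and the image tokens change at every step, which is what makes the interaction bidirectional.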

4. Experiment

In this section, we present a comprehensive set of experiments to empirically validate our central thesis: that the Pre-Mask Guided “locate-and-refine” paradigm represents a superior architectural solution for automatic segmentation compared to SAM’s native “segment everything” approach. We conduct our evaluation on two distinct tasks that represent opposite extremes of visual perceptibility: Dichotomous Image Segmentation (DIS), which is characterized by high saliency but complex structure, and Camouflaged Object Segmentation (COS), which is characterized by low saliency and texture ambiguity. Validating on these extremes tests the model’s robustness across the full spectrum of segmentation challenges. We begin by introducing the datasets and evaluation metrics employed for each task. Subsequently, we describe the implementation details, including the experimental environment, model configuration, and the specific training and inference procedures. We then report and analyze the quantitative results on the DIS5K [2], COD10K [32], and NC4K [33] benchmark datasets, comparing our method against other approaches. Finally, we conduct extensive ablation studies to investigate the impact of individual components on the overall performance.

SAM-B, SAM-L, and SAM-H represent ViT-B, ViT-L, and ViT-H model types of SAM, respectively. SAM2-T, SAM2-B+, and SAM2-L represent Hiera-Tiny, Hiera-Base+, and Hiera-Large model types of SAM2, respectively.

4.1. Datasets and Evaluation Metrics

4.1.1. DIS Task: Dataset and Metrics

Our experiments use the DIS-5K dataset, the first large-scale benchmark specifically designed for high-resolution (2K, 4K, and beyond) binary image segmentation. The dataset consists of 5470 meticulously annotated images, organized into 22 groups across 225 categories. These images include diverse objects camouflaged in complex backgrounds, salient entities, and structurally dense targets. Each image was manually annotated at the pixel level, with an average labeling time of 30 min per image, extending up to 10 h for particularly complex instances. According to the official partition, the dataset is divided into 3000 training images (DIS-TR), 470 validation images (DIS-VD), and 2000 test images. The test set is further divided into four subsets (DIS-TE1 to DIS-TE4) of 500 images each, representing ascending difficulty levels based on the product of structural complexity and boundary complexity.

Evaluation uses six established metrics: maximal F-measure ($F_\beta^{\max}$↑) [34], weighted F-measure ($F_\beta^w$↑) [35], Mean Absolute Error ($M$↓) [36], Structural measure ($S_\alpha$↑) [37], mean Enhanced alignment measure ($E_\phi^m$↑) [38], and Human Correction Efforts ($HCE_\gamma$↓) [2]. Arrows indicate the preferred direction (↑: higher is better, ↓: lower is better).

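The maximal F-measure evaluates the optimal trade-off between precision and recall. It calculates the F-measure at varying binarization thresholds ($t$) and selects the maximum value. Following the standard setting in saliency detection, we set $\beta^2 = 0.3$ to emphasize precision. It can be expressed as

$$F_\beta = \frac{(1+\beta^2)\times \mathrm{Precision}\times \mathrm{Recall}}{\beta^2\times \mathrm{Precision}+\mathrm{Recall}}, \qquad F_\beta^{\max} = \max_{t} F_\beta(t)$$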
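For reference, the maximal F-measure can be computed as in the following pure-Python sketch. It assumes a flattened prediction with values in [0, 1], a flattened binary ground truth, and 256 uniformly spaced thresholds, which is a common toolkit convention rather than a setting stated in this paper.

```python
from typing import List


def max_f_measure(pred: List[float], gt: List[int], beta2: float = 0.3,
                  num_thresholds: int = 256) -> float:
    """Maximum F_beta over uniformly spaced binarization thresholds."""
    best = 0.0
    positives = sum(gt)
    for k in range(num_thresholds):
        t = k / (num_thresholds - 1)
        binary = [p >= t for p in pred]
        tp = sum(1 for b, g in zip(binary, gt) if b and g)
        if tp == 0:
            continue  # precision or recall is zero, so F_beta is zero here
        precision, recall = tp / sum(binary), tp / positives
        f = (1 + beta2) * precision * recall / (beta2 * precision + recall)
        best = max(best, f)
    return best
```

With $\beta^2 = 0.3$, a threshold that keeps only confident foreground pixels (high precision, lower recall) can outscore one that captures all foreground together with some background.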

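The weighted F-measure ($F_\beta^w$) utilizes a weighted precision ($\mathrm{Precision}^w$) and a weighted recall ($\mathrm{Recall}^w$) to address the flaw that standard measures treat all pixels equally; it considers spatial dependence and pixel importance, with $\beta^2$ balancing the two terms. It can be expressed as

$$F_\beta^w = \frac{(1+\beta^2)\times \mathrm{Precision}^w\times \mathrm{Recall}^w}{\beta^2\times \mathrm{Precision}^w+\mathrm{Recall}^w}$$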

The Mean Absolute Error quantifies the global accuracy of predictions by computing the average absolute difference between the predicted map ($P$) and the ground-truth map ($G$) across all pixels. A lower $M$ indicates higher consistency between predictions and ground truths. It can be expressed as

$$M = \frac{1}{H\times W}\sum_{x=1}^{H}\sum_{y=1}^{W}\left|P(x,y)-G(x,y)\right|$$

where $H$ and $W$ are the height and width of the map.
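The MAE formula translates directly into code; the sketch below assumes both maps are given as nested lists of equal size with values in [0, 1].

```python
from typing import List


def mean_absolute_error(pred: List[List[float]], gt: List[List[float]]) -> float:
    """M = (1 / (H * W)) * sum over all pixels of |P(x, y) - G(x, y)|."""
    h, w = len(pred), len(pred[0])
    if len(gt) != h or any(len(row) != w for row in gt):
        raise ValueError("prediction and ground truth must have the same size")
    return sum(abs(p - g) for pr, gr in zip(pred, gt)
               for p, g in zip(pr, gr)) / (h * w)


print(mean_absolute_error([[0.5, 1.0], [0.0, 0.25]], [[1, 1], [0, 0]]))  # 0.1875
```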

The Structural measure (S-measure) simultaneously evaluates the region-aware structural similarity ($S_r$) and object-aware structural similarity ($S_o$) between the prediction and ground truth, with the balance parameter $\alpha$ set to $0.5$. It can be expressed as

$$S_\alpha = \alpha\times S_o + (1-\alpha)\times S_r$$

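The mean Enhanced alignment measure calculates the average E-value (which integrates pixel-level and region-level errors) across multiple binarization thresholds ($t$), reflecting error distribution characteristics at both local and global scales. It can be expressed as

$$E_\phi^m = \frac{1}{T}\sum_{t=1}^{T}\frac{1}{H\times W}\sum_{x=1}^{H}\sum_{y=1}^{W}\phi_t(x,y)$$

where $\phi_t$ is the enhanced alignment matrix obtained at threshold $t$, $T$ is the number of thresholds, and $H$ and $W$ are the height and width of the map.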
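The enhanced alignment term is less obvious than the other metrics, so a pure-Python sketch of the mean E-measure follows. It follows the commonly used definition from [38]: mean-removed bias matrices, an alignment matrix, and the enhancement function $(1+\xi)^2/4$, with special handling of all-background and all-foreground ground truths. The 256 thresholds, the `EPS` constant, and normalization by the pixel count (some reference implementations divide by $H\times W-1$) are assumptions.

```python
from typing import List

EPS = 1e-8  # hypothetical small constant to avoid division by zero


def enhanced_alignment(fm: List[int], gt: List[int]) -> float:
    """Mean enhanced-alignment score of one binary map against a binary GT."""
    n = len(gt)
    if sum(gt) == 0:              # all-background GT: reward predicted background
        enhanced = [1.0 - f for f in fm]
    elif sum(gt) == n:            # all-foreground GT: reward predicted foreground
        enhanced = [float(f) for f in fm]
    else:
        mu_f, mu_g = sum(fm) / n, sum(gt) / n
        enhanced = []
        for f, g in zip(fm, gt):
            af, ag = f - mu_f, g - mu_g                    # bias (mean-removed) terms
            align = 2 * af * ag / (af * af + ag * ag + EPS)
            enhanced.append((align + 1) ** 2 / 4)          # enhancement function
    return sum(enhanced) / n


def mean_e_measure(pred: List[float], gt: List[int],
                   num_thresholds: int = 256) -> float:
    """Average enhanced-alignment score over uniformly spaced thresholds."""
    scores = []
    for k in range(num_thresholds):
        t = k / (num_thresholds - 1)
        fm = [1 if p >= t else 0 for p in pred]
        scores.append(enhanced_alignment(fm, gt))
    return sum(scores) / len(scores)
```

A binary map identical to the ground truth scores close to 1 at a single threshold, whereas its exact inverse scores close to 0.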

The Human Correction Efforts is a metric designed to quantify the barriers between model predictions and real-world applications. Unlike standard metrics that measure the geometric gap (e.g., IoU), HCE approximates the human effort required to correct the faulty regions (False Positives and False Negatives) in a segmentation mask. Specifically, it estimates the number of mouse-clicking operations needed for correction, including dominant point selection for boundary refinement and region selection for area fixing. A lower $HCE_\gamma$ value indicates a reduced manual revision workload, signifying that the model’s output satisfies high-accuracy requirements with fewer human interventions. Its formal definition is given in [2].

4.1.2. COS Task: Datasets and Metrics

For the COS task, our experiments are based on the COD10K and NC4K datasets. COD10K is the first large-scale benchmark for camouflaged object detection, spanning 10 super-classes and 78 sub-classes and capturing diverse camouflage scenarios in natural environments. We follow the official split of 3040 images for training and 2026 for testing. The NC4K dataset, containing 4121 images of camouflaged objects in nature, serves as a supplementary benchmark to validate the generalization capability of the models.

To evaluate segmentation performance, we adopt the standard COCO-style metrics: Average Precision (AP), AP$_{50}$, and AP$_{75}$.

Average Precision (AP) is the primary metric, calculated as the mean of the AP values over multiple IoU (Intersection over Union) thresholds (from 0.50 to 0.95 with a step of 0.05). It provides a comprehensive measure of instance segmentation quality. AP$_{50}$ and AP$_{75}$ are variants of AP calculated at single, fixed IoU thresholds of 0.50 and 0.75, respectively. AP$_{50}$ evaluates basic detection and localization accuracy, while AP$_{75}$ imposes a stricter criterion that rewards more precise mask predictions.
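To make the averaging over IoU thresholds concrete, the sketch below computes COCO-style AP, AP$_{50}$, and AP$_{75}$ for a single image and a single class: at each IoU threshold, predictions are greedily matched in descending score order, and precision is sampled at 101 recall points, as in the COCO protocol. It omits crowd regions, area ranges, and detection limits, so it is a simplified illustration rather than a replacement for the official `pycocotools` evaluator; all function names are hypothetical.

```python
import numpy as np

# IoU thresholds 0.50, 0.55, ..., 0.95 used for the primary AP metric.
IOU_THRESHOLDS = np.linspace(0.5, 0.95, 10)


def mask_iou(a: np.ndarray, b: np.ndarray) -> float:
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union > 0 else 0.0


def ap_at_threshold(preds, scores, gts, thr):
    """101-point interpolated AP at a single IoU threshold."""
    if len(gts) == 0:
        return 0.0
    matched, is_tp = set(), []
    for i in np.argsort(-np.asarray(scores, dtype=np.float64), kind="stable"):
        # Match to the best still-unmatched ground truth with IoU >= thr.
        best_j, best_iou = -1, thr
        for j, g in enumerate(gts):
            iou = mask_iou(preds[i], g)
            if j not in matched and iou >= best_iou:
                best_j, best_iou = j, iou
        if best_j >= 0:
            matched.add(best_j)
        is_tp.append(best_j >= 0)
    tp = np.cumsum(np.asarray(is_tp, dtype=np.float64))
    recall = tp / len(gts)
    precision = tp / np.arange(1, len(tp) + 1)
    # Precision envelope: make precision non-increasing in recall.
    for k in range(len(precision) - 2, -1, -1):
        precision[k] = max(precision[k], precision[k + 1])
    idx = np.searchsorted(recall, np.linspace(0.0, 1.0, 101), side="left")
    return float(np.mean([precision[k] if k < len(precision) else 0.0 for k in idx]))


def coco_style_ap(preds, scores, gts):
    aps = [ap_at_threshold(preds, scores, gts, t) for t in IOU_THRESHOLDS]
    return {"AP": float(np.mean(aps)), "AP50": aps[0], "AP75": aps[5]}
```

A high-scoring false positive ranked above a correct mask halves the precision at every recall level in this toy setting, which is why AP penalizes confident spurious proposals.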

4.2. Experiment Settings

Our proposed PMG-SAM is an enhanced architecture based on SAM. To enhance the multi-scale feature representation, we employ a Feature Pyramid Network (FPN) [39] to process the features extracted by the Hiera encoder before they are fed into the mask decoder. We benchmark PMG-SAM against numerous leading models, including SAM2 [40], a recent advancement for both image and video segmentation. All experiments were conducted on a system running Ubuntu 20.04.6, equipped with a single NVIDIA Tesla A100-PCIE-40GB GPU (NVIDIA, Santa Clara, CA, USA), and built upon a stack of PyTorch 2.5.1, CUDA 11.8, and Segment-Anything 1.0.

4.3. Training and Inference Procedure

To leverage powerful prior knowledge, accelerate training, ensure computational efficiency, and prevent overfitting on limited downstream data, the image encoder components, Hiera and U2-Net, are initialized with pre-trained weights and remain fully frozen throughout training. The Hiera-base+ model was pre-trained on the ImageNet-1K dataset [41] using the MAE self-supervised learning framework, and the U2-Net component uses weights pre-trained on the DUTS dataset [42]. The total loss is a weighted sum of three standard segmentation losses: the Binary Cross-Entropy loss ($\mathcal{L}_{BCE}$), the Dice loss ($\mathcal{L}_{Dice}$), and the IoU loss ($\mathcal{L}_{IoU}$). The total loss $\mathcal{L}$ is computed as

$$\mathcal{L} = \lambda_1 \mathcal{L}_{BCE} + \lambda_2 \mathcal{L}_{Dice} + \lambda_3 \mathcal{L}_{IoU},$$

where the weights $\lambda_1$, $\lambda_2$, and $\lambda_3$ are set to 1, 1, and 10, respectively. Following the original SAM, the number of BAIM modules is set to 2. The batch size is set to 4. For data preprocessing, input images undergo a series of augmentations, including random cropping, random horizontal flipping, random rotation within a bounded angle range, and random color jittering. Subsequently, all images are resized and padded to the fixed input resolution required by the Hiera encoder.
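The weighted objective (BCE, Dice, and IoU losses weighted 1, 1, and 10) can be illustrated numerically. The NumPy sketch below assumes soft, probability-based Dice and IoU losses with a smoothing constant of 1; these are common choices but are assumptions here, as are the function names. In actual training the same formulas would be written in a deep learning framework so that gradients flow through them; NumPy is used only to show the arithmetic.

```python
import numpy as np


def bce_loss(prob, target, eps=1e-7):
    # Binary cross-entropy on probabilities, clipped to avoid log(0).
    p = np.clip(prob, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(p) + (1 - target) * np.log(1 - p)))


def dice_loss(prob, target, smooth=1.0):
    # Soft Dice: 1 - (2|P∩G| + s) / (|P| + |G| + s).
    inter = np.sum(prob * target)
    return float(1.0 - (2.0 * inter + smooth) / (prob.sum() + target.sum() + smooth))


def iou_loss(prob, target, smooth=1.0):
    # Soft IoU: 1 - (|P∩G| + s) / (|P∪G| + s).
    inter = np.sum(prob * target)
    union = prob.sum() + target.sum() - inter
    return float(1.0 - (inter + smooth) / (union + smooth))


def total_loss(prob, target, weights=(1.0, 1.0, 10.0)):
    """Weighted sum of the three losses (default weights 1, 1, 10)."""
    w_bce, w_dice, w_iou = weights
    return (w_bce * bce_loss(prob, target)
            + w_dice * dice_loss(prob, target)
            + w_iou * iou_loss(prob, target))
```

Because the soft IoU term is small near convergence, the weight of 10 keeps its gradient contribution comparable to the other two terms, which matches the motivation given in the ablation on loss weights.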

For the DIS task, the model is trained for 300 epochs using the AdamW optimizer [43] with a fixed initial learning rate, weight decay, and momentum coefficient. A learning rate warm-up is applied for the first 10 epochs, followed by a step decay schedule in which the learning rate is reduced by a fixed factor every 40 epochs. An early stopping mechanism terminates training if the validation loss does not improve for 40 consecutive epochs.
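The DIS training schedule can be sketched in plain Python as follows. The linear shape of the warm-up, counting the 40-epoch decay steps from the end of warm-up, and the decay factor `gamma=0.5` are placeholders chosen for illustration, and the names `lr_at_epoch` and `EarlyStopping` are hypothetical.

```python
def lr_at_epoch(epoch: int, base_lr: float, warmup_epochs: int = 10,
                step_size: int = 40, gamma: float = 0.5) -> float:
    """Learning rate for a 0-indexed epoch: linear warm-up, then step decay.

    gamma (the per-step decay factor) is a placeholder assumption.
    """
    if epoch < warmup_epochs:
        # Ramp linearly from base_lr / warmup_epochs up to base_lr.
        return base_lr * (epoch + 1) / warmup_epochs
    n_steps = (epoch - warmup_epochs) // step_size
    return base_lr * gamma ** n_steps


class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 40, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

In a training loop, the optimizer's learning rate would be set from `lr_at_epoch` at the start of each epoch, and the loop would break as soon as `step` returns True.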

For the COS task, we fine-tune the model starting from the best-performing weights obtained on the DIS task. We conduct two separate fine-tuning processes: one on the COD10K training set and another on the NC4K training set. The NC4K dataset is first partitioned into training and testing sets. In both fine-tuning pipelines, the respective training set is further split into training and validation subsets. The initial learning rate is set lower than that used for the DIS task. We employ a learning rate scheduler that halves the learning rate whenever the validation loss plateaus for 5 consecutive epochs, subject to a minimum learning rate. The optimizer remains AdamW with weight decay. The model is trained for a total of 90 epochs, with a 10-epoch warm-up and an early stopping patience of 20 epochs.
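The plateau-based halving used for COS fine-tuning can be sketched as below. `min_lr=1e-7` is a placeholder, resetting the counter after each reduction is a design assumption, and the class name is hypothetical. PyTorch’s built-in `torch.optim.lr_scheduler.ReduceLROnPlateau` (with `factor=0.5, patience=5, min_lr=...`) implements the same idea, although it lowers the rate only once the number of non-improving epochs exceeds `patience` and, by default, requires a small relative improvement before an epoch counts as better.

```python
class PlateauHalving:
    """Halve the learning rate after `patience` consecutive non-improving epochs."""

    def __init__(self, lr: float, patience: int = 5, factor: float = 0.5,
                 min_lr: float = 1e-7):
        self.lr = lr
        self.patience = patience
        self.factor = factor
        self.min_lr = min_lr          # placeholder lower bound on the learning rate
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> float:
        """Record one epoch's validation loss and return the learning rate to use next."""
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.bad_epochs = 0   # restart the count after each reduction
        return self.lr
```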

During inference, the input image is passed through PMG-SAM to generate a binary segmentation map. For the DIS task, inference is fully end-to-end and requires no post-processing. For the COS task, a dedicated post-processing pipeline is employed to split the binary output into individual object instances. During training, the multiple instance masks of each COD10K ground truth are merged into a single binary mask, guiding the model to learn the general concept of a “camouflaged object”. At inference time, we apply Connected Component Analysis (CCA) [44] to the model’s binary output to separate the binary mask into individual object proposals. The Hungarian algorithm [45] is then used to perform one-to-one matching between the predicted instances and the ground-truth masks. Finally, the matched predictions are saved in the standard COCO JSON format to enable evaluation with the AP metrics.
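A minimal sketch of this post-processing, assuming NumPy boolean masks and SciPy: `scipy.ndimage.label` performs the connected component analysis (4-connectivity by default in 2-D), and `scipy.optimize.linear_sum_assignment` solves the one-to-one assignment, here maximizing the total mask IoU. The IoU-based cost, the `min_area` filter, and the function names are illustrative assumptions rather than the paper’s exact settings.

```python
import numpy as np
from scipy import ndimage
from scipy.optimize import linear_sum_assignment


def split_instances(binary_mask: np.ndarray, min_area: int = 0):
    """CCA: turn one binary map into a list of per-component proposals."""
    labels, num = ndimage.label(binary_mask)  # 4-connectivity by default in 2-D
    proposals = [labels == k for k in range(1, num + 1)]
    return [m for m in proposals if m.sum() > min_area]


def iou_matrix(preds, gts) -> np.ndarray:
    ious = np.zeros((len(preds), len(gts)))
    for i, p in enumerate(preds):
        for j, g in enumerate(gts):
            union = np.logical_or(p, g).sum()
            ious[i, j] = np.logical_and(p, g).sum() / union if union else 0.0
    return ious


def match_instances(preds, gts):
    """Hungarian one-to-one matching that maximizes the total IoU."""
    if len(preds) == 0 or len(gts) == 0:
        return []
    ious = iou_matrix(preds, gts)
    rows, cols = linear_sum_assignment(ious, maximize=True)
    return [(int(r), int(c), float(ious[r, c])) for r, c in zip(rows, cols)]
```

Each matched mask could then be run-length encoded (e.g., with `pycocotools.mask.encode`) and written to a COCO results JSON file. Note that two touching objects form a single component under this scheme, which is precisely the failure mode discussed in Section 4.6.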

4.4. Results on DIS Task

We begin our analysis with the DIS task, which serves as the primary benchmark to evaluate the core segmentation quality of our model against baselines and specialized methods. The fine-grained and complex nature of objects in the DIS5K dataset provides an ideal testbed to assess the efficacy of our approach.

4.4.1. Efficiency and Complexity Analysis

Table 1 presents a comprehensive comparison of model complexity and inference efficiency. A key advantage of our approach is its superior balance between parameter efficiency and practical speed.

First, regarding parameter efficiency, PMG-SAM requires fewer trainable parameters than even the smallest SAM2 variant (SAM2-T), making it significantly more lightweight to train.

Second, we address the concern regarding computational cost. Although our total FLOPs are relatively high due to the use of powerful frozen backbones (Hiera-B+ and U2-Net), our method achieves the highest inference speed (in FPS) among the compared models. This result reveals a critical insight: the standard “automatic mode” of SAM and SAM2 relies on a dense grid-prompting strategy, which suffers from severe computational redundancy and slows down inference. In contrast, our “locate-and-refine” paradigm generates a global prior in a single pass, avoiding exhaustive grid search. Thus, despite higher theoretical FLOPs per forward pass, our actual wall-clock inference time is significantly lower.
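The distinction between per-pass FLOPs and wall-clock speed can be made concrete. In the public `segment_anything` package, `SamAutomaticMaskGenerator` defaults to `points_per_side=32` (a 32 × 32 grid of 1024 point prompts) processed in batches of `points_per_batch=64`, so a single image already requires 16 batched decoder passes before mask filtering, whereas a single-pass prior needs one. The sketch below, whose function names are hypothetical, computes that count and measures throughput with `time.perf_counter`. When timing a GPU model, the device should be synchronized (e.g., with `torch.cuda.synchronize()`) before reading the clock, because CUDA kernels run asynchronously.

```python
import math
import time


def grid_decoder_batches(points_per_side: int = 32, points_per_batch: int = 64) -> int:
    """Decoder passes needed to process a square grid of point prompts."""
    return math.ceil(points_per_side ** 2 / points_per_batch)


def measure_fps(infer, inputs, warmup: int = 5) -> float:
    """Average images per second of `infer` over `inputs` (wall-clock)."""
    for x in inputs[:warmup]:
        infer(x)                      # warm-up calls are excluded from timing
    start = time.perf_counter()
    for x in inputs:
        infer(x)
    elapsed = time.perf_counter() - start
    return len(inputs) / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    print(grid_decoder_batches())     # 1024 prompts / 64 per batch -> 16
    print(measure_fps(lambda x: sum(range(1000)), list(range(50))) > 0)
```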

Finally, regarding memory consumption, PMG-SAM operates with a peak memory of approximately 3420 MB. This is comparable to the base-sized models and significantly lower than the large variants (e.g., SAM-H requires 5731 MB), ensuring deployability on standard GPUs.

4.4.2. Quantitative Results

We extensively evaluated PMG-SAM against baseline models (SAM, SAM2) and specialized methods, including HRNet [46], STDC [47], IS-Net [2], and SINetV2 [48], on the DIS-VD and DIS-TE1–4 datasets. The quantitative results on the DIS5K validation and test sets (Table 2) reveal consistent improvements over the SAM and SAM2 baselines across all metrics.

Table 2 presents the quantitative comparison of our method against both baseline and task-specific models on the DIS5K dataset. PMG-SAM delivers a comprehensive improvement across multiple evaluation metrics on both the validation and test sets. Notably, its HCE value is substantially lower than that of the baseline models, which reflects not only numerical superiority but also the targeted design of our architecture to address key segmentation challenges. Beyond these gains over the baselines, our model rivals, and on several metrics surpasses, methods specifically designed for the DIS task.

4.4.3. Analysis Against Baseline Models

Our analysis against baseline models is structured to answer two key questions: (1) How effective is our framework at overcoming the limitations of SAM’s original “segment everything” automatic mode? (2) Is our approach merely an automated prompter, or does it represent a fundamentally more powerful segmentation system?

As shown in Table 2, compared with the standard automatic mode of all SAM and SAM2 variants, PMG-SAM delivers a substantial leap in performance. For instance, on the challenging DIS-TE2 set, our model achieves a far higher maximal F-measure than both SAM-H and SAM2-L in automatic mode. This large improvement across all metrics indicates that our Pre-Mask Guided paradigm effectively addresses the localization blindness and fragmentation issues inherent in the grid-based approach, enabling precise and coherent segmentation of target objects without human intervention.

Crucially, to explicitly test whether the localization failure of SAM stems from a lack of domain knowledge, we compared PMG-SAM against a fine-tuned version of SAM-H (the largest variant). As shown in Table 2, although fine-tuning improves the performance of SAM-H in automatic mode on DIS-VD, it still lags significantly behind PMG-SAM. This substantial gap indicates that parameter optimization alone cannot resolve the inherent “localization blindness” of the grid-search strategy. In contrast, our Pre-Mask paradigm provides the necessary structural prior, achieving superior performance with significantly fewer trainable parameters.

To further investigate the effectiveness of our paradigm, we compare our fully automatic method against baseline models guided by ground-truth bounding boxes (GT-Bbox), which represents an ideal scenario for prompt-based methods. As shown in the tables, PMG-SAM consistently outperforms these perfectly prompted baselines on the challenging test sets (e.g., DIS-TE2, TE3, and TE4). This finding suggests that the dense, structural information provided by our internally generated Pre-Mask offers a richer and more effective guidance signal to the decoder than a sparse bounding box. It validates that our “locate-and-refine” approach creates a more capable segmentation pipeline, going beyond simple prompt automation.

In summary, these comparisons, combined with the model size analysis in Table 1, robustly demonstrate that PMG-SAM’s performance gains stem from a superior and more efficient architectural paradigm, not merely from model scaling or automated prompting. It charts a new path toward truly automatic and highly accurate general-purpose segmentation models.

4.4.4. Analysis Against Specialized Methods

When compared with other classic DIS methods, our model also exhibits strong competitiveness. PMG-SAM matches, and on some metrics exceeds, the performance of IS-Net, the established baseline for the DIS task. Nevertheless, its maximal F-measure on certain test sets still trails that of IS-Net, which points to a clear direction for future work. This competitive performance is noteworthy because PMG-SAM is not a task-specific model but a general-purpose paradigm for enhancing foundation models. Its strong results on this challenging benchmark showcase the effectiveness and potential broader applicability of our approach.

4.5. Results on COS Task

To rigorously evaluate the zero-shot transfer capability, transfer learning efficacy, and domain generalization capacity of PMG-SAM, we conducted comprehensive experiments against the baseline SAM alongside state-of-the-art models, including SAM2, Mask R-CNN [49], PointSup [50], TokenCut [51], CutLER [52], and TPNet [53]. Benchmarking was performed on the COD10K test set and the NC4K dataset.

As shown in Table 3, we first assess the zero-shot transfer capability, a key feature of the SAM series. By directly testing our best DIS-trained model on COD10K and NC4K, we find that PMG-SAM surpasses all SAM2 variants and the unsupervised task-specific method on the COD10K test set. On NC4K, it even matches or exceeds some weakly supervised methods. Although a performance gap with the original SAM-H remains, these results demonstrate that PMG-SAM learns effective and transferable representations from the DIS task, with stronger generalization than methods trained without any labels.

Next, we performed transfer learning by fine-tuning the best DIS model on the COD10K training set and the NC4K training set. As seen in the “Transfer Learning” section of Table 3, the segmentation score on COD10K rises sharply after fine-tuning, closely approaching the performance of the fine-tuned SAM-H.

Finally, we tested the domain generalization of the fine-tuned models on the COD10K and NC4K test sets, evaluating each model on a dataset it was not trained on (e.g., testing the COD10K-trained model on the NC4K test set). The results are particularly striking: the model’s scores, including those under the stricter IoU criterion, surpass all compared SAM and SAM2 variants and even the fully supervised Mask R-CNN baseline.

Furthermore, we included a comparison with the fine-tuned SAM-H. On the COD10K dataset, the fine-tuned SAM-H achieves the highest AP, surpassing our transfer learning result. This is expected given SAM-H’s much larger parameter count. However, on the NC4K dataset, PMG-SAM in the “Transfer Learning” setting achieves a higher AP than the fine-tuned SAM-H. This result highlights a key advantage of our architecture: its “locate-and-refine” paradigm learns a robust, class-agnostic notion of objectness that generalizes better to unseen distributions.

Collectively, these three sets of experiments demonstrate that our model, pre-trained on the DIS dataset, acquires a powerful foundational segmentation capability that can be efficiently transferred to new domains with only minimal fine-tuning. This robust performance across diverse settings provides strong evidence that our “locate-and-refine” paradigm is not a narrow, task-specific trick, and positions PMG-SAM as an effective blueprint for building the next generation of versatile, fully automatic segmentation models.

Qualitative Analysis

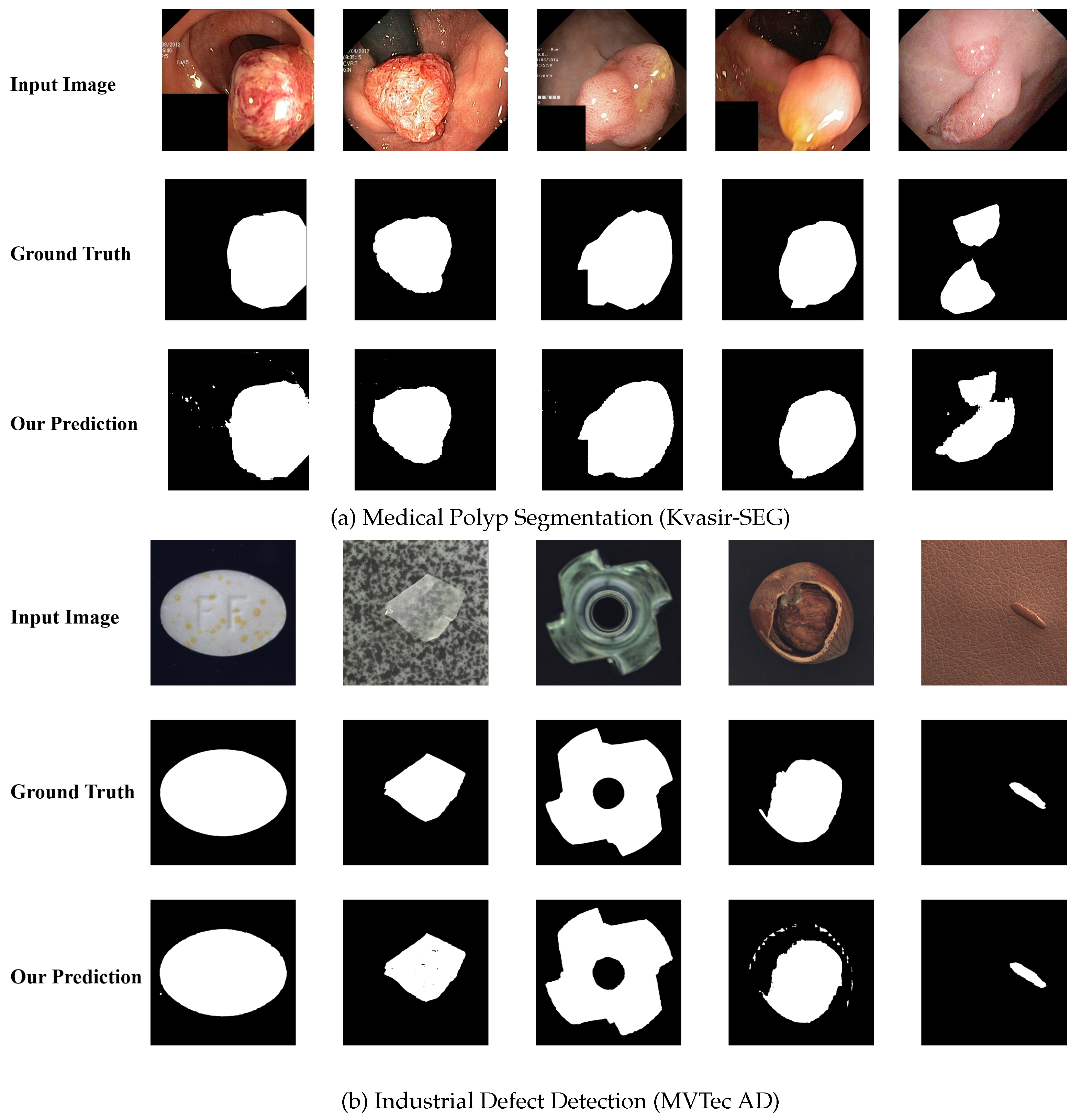

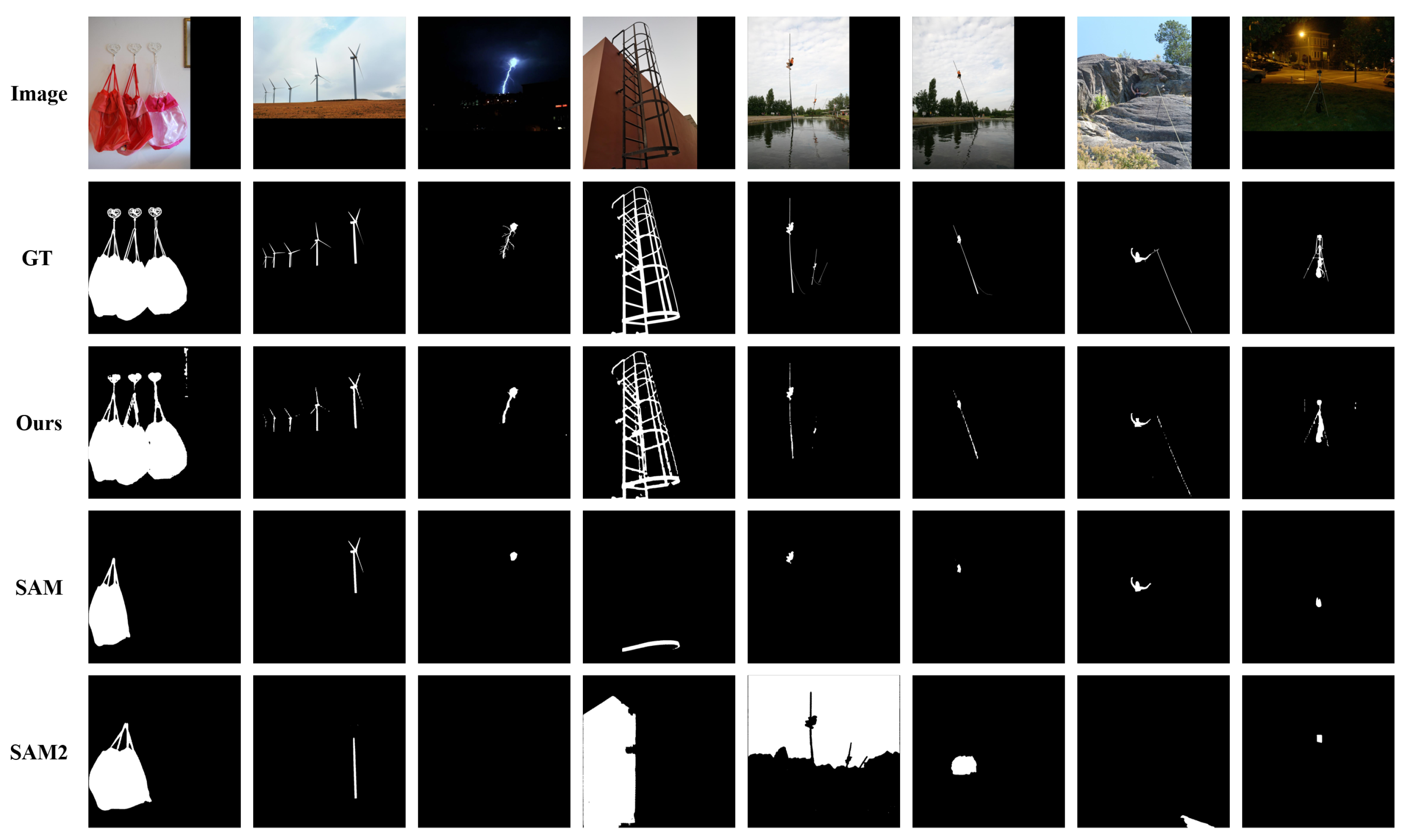

To provide an intuitive understanding of our model’s capabilities, we present visual comparisons in Figure 5 for the DIS task and Figure 6 for the COS task. As shown in Figure 5, PMG-SAM clearly outperforms the baseline models across various challenging scenarios in the DIS5K dataset. First, in scenes with complex backgrounds or multiple objects (e.g., columns 1 and 2), our method accurately segments the contours of the bag and the wind turbine, whereas the baseline fails to identify the objects completely. Second, our model demonstrates a clear advantage in edge handling: for regions with intricate details, such as the lightning in column 3, PMG-SAM captures the fine edges with high fidelity, while the baseline suffers from significant omissions. Third, our method shows stronger background suppression: in column 5, where the background contains multiple distracting elements, PMG-SAM remains focused on the primary target, unlike the baseline, which is disturbed by background noise. Finally, for small or low-contrast objects, such as the faint support structure in column 8, our model successfully identifies and segments the target, whereas the baseline fails. In summary, these qualitative results corroborate our quantitative findings, indicating that PMG-SAM is a more robust and precise solution for fully automatic segmentation, particularly in complex scenes.

The qualitative results for the COS task in Figure 6 further underscore the advantages of our method. When dealing with challenging camouflaged targets, PMG-SAM consistently outperforms SAM and SAM2. For instance, it successfully preserves the slender legs of the crab (column 6), which are entirely missed by the baselines. Similarly, it produces a single, coherent mask for the sea snake (column 3), whereas the baseline outputs are fragmented. These examples highlight our model’s superior ability to understand global context and perceive boundaries, enabling it to generate far more accurate and structurally complete masks. This provides compelling visual evidence for the effectiveness and generalization capability of our Pre-Mask-Guided segmentation mechanism.

To further demonstrate the robust localization capability of PMG-SAM across diverse visual domains, we provide additional qualitative results on medical (Kvasir-SEG) and industrial defect (MVTec AD) datasets in Appendix B. These zero-shot inference results confirm that our Pre-Mask mechanism can effectively locate salient targets even in domains completely unseen during training.

4.6. Limitation and Future Work

To systematically analyze the boundaries of PMG-SAM, we evaluated the model under extreme scenarios. As illustrated in Figure 7, we categorize the primary failure cases into four distinct types, revealing different underlying limitations:

First, Instance Distinctions (Column a): In scenarios with overlapping instances (e.g., the shrimp), the model correctly identifies the salient region but merges adjacent objects into a single connected component. This explicitly exposes a fundamental limitation of our current architecture: since the Pre-Mask paradigm focuses on generating a high-quality binary prior, it relies on post-processing (CCA) rather than a genuine end-to-end mechanism to distinguish instances. Consequently, without instance-specific queries or a separate mask head, the model struggles to separate topologically connected instances.

To quantify this limitation, we filtered the COD10K and NC4K test sets to create dedicated “overlapping subsets”. Statistical analysis reveals that overlapping instances are relatively rare, accounting for only a small fraction of the images in COD10K and NC4K. However, on these subsets, the performance of PMG-SAM drops significantly: as shown in the supplementary analysis, its AP on both the COD10K and NC4K overlapping subsets falls far below its overall performance (AP 30–40%). This drastic degradation confirms that the non-end-to-end reliance on CCA is insufficient for complex instance separation, marking a clear boundary of our current architecture.
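One plausible way to build such subsets, offered here as an assumption rather than the authors’ procedure, is to flag an image when connected component analysis on the merged ground truth yields fewer components than there are annotated instances, i.e., exactly the images in which CCA-based splitting must merge at least two objects. The function name is hypothetical, and the heuristic can miss cases where a single instance is itself split into several components.

```python
import numpy as np
from scipy import ndimage


def has_merged_instances(instance_masks) -> bool:
    """True if CCA on the merged ground truth finds fewer blobs than instances.

    Touching or overlapping instances collapse into one connected component,
    which a CCA-based splitter cannot separate.
    """
    union = np.zeros(np.shape(instance_masks[0]), dtype=bool)
    for m in instance_masks:
        union |= np.asarray(m, dtype=bool)
    _, num_components = ndimage.label(union)  # 4-connectivity by default in 2-D
    return num_components < len(instance_masks)
```

Applied over a dataset’s per-image instance annotations, the images for which this returns True would form the overlapping subset.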

Second, Environmental Constraints (Column b): Under low illumination conditions (e.g., the black cat), the reduced contrast gradient between the object and the background impedes the Pre-Mask Generator’s ability to capture precise boundaries, leading to noisy and overflowing edges.

Third, Structural and Scale Challenges (Columns c and d): For objects with complex topologies (e.g., the transmission tower) or tiny scales (e.g., the frog), the downsampling operations in the visual encoder inevitably result in the loss of high-frequency spatial details. This causes fine grid structures to disappear and tiny objects to be missed or blurred in the final mask.

Fourth, Texture Ambiguity (Column e): When dealing with strong camouflage where the foreground texture is statistically nearly identical to the background (e.g., the snake), the model suffers from “feature confusion,” resulting in severe fragmentation of the segmentation map.

These findings point to two clear directions for future work: enhancing the high-resolution feature preservation in the encoder to handle complex structures and tiny objects, and replacing the post hoc CCA with an end-to-end instance-aware mechanism to fundamentally resolve the limitation in separating overlapping targets.

4.7. Ablation Study

In this section, we conduct a series of ablation studies to dissect the core mechanisms of PMG-SAM and rigorously evaluate the individual contributions of its key components.

4.7.1. Experimental Design

All ablation studies are conducted on the combined DIS-TE1–4 test sets. We use the full suite of six DIS metrics for a comprehensive evaluation. All experiments share the same training environment and hyperparameters to ensure a fair comparison.

4.7.2. Analysis of Key Components of PMG-SAM

Table 4 presents comprehensive ablation study results to verify the effectiveness of each key component in our PMG-SAM. The study is organized into two main groups: (a) evaluating the effectiveness of our proposed Dual-branch Refined Fusion Module (DRFM), and (b) examining the impact of introducing high-resolution features from different sources.

(a) Feature Fusion Module: In this group, we validate the necessity of our carefully designed DRFM by comparing it with no feature fusion and with a simple residual fusion alternative. The results reveal a critical insight: naive feature fusion is detrimental. The Residual Fusion variant, which performs simple element-wise addition followed by a Conv-BN-ReLU block (see Figure 8), performs significantly worse than both our DRFM-equipped model and even the baseline with no fusion at all.

This strongly suggests that due to the vast architectural and feature distribution differences between Hiera and U2-Net, direct addition introduces conflicting information and noise, corrupting the original feature representations. In contrast, our DRFM, with its sophisticated structure, successfully aligns and enhances these heterogeneous features, leading to consistent improvements across all metrics.

This leads to another key insight, examined in the next group: the quality and relevance of high-resolution features are more important than their mere presence.

(b) High-Resolution Feature Strategy: This group identifies the optimal strategy for supplementing the mask decoder with high-resolution features. We examine three approaches: introducing no high-resolution features, introducing features only from the first two stages of U2-Net, or introducing features from both the Hiera and U2-Net backbones. The results show that not all high-resolution features are beneficial.

Introducing only U2-Net’s features degrades performance compared to the baseline without any high-resolution features, indicating that shallow U2-Net features may contain excessive background noise or task-irrelevant details. The variant using features from both backbones shows improved performance, suggesting that the high quality of Hiera features can partially offset the negative impact of U2-Net’s features.

This result strongly corroborates our architectural analysis in Section 3.2: U2-Net features are rich in semantic context but noisy for fine details, whereas Hiera features are structurally precise. Therefore, selectively injecting high-fidelity features (from Hiera) is the optimal strategy for boundary refinement, validating the necessity of this specific dual-backbone design.

Full Model Performance: Our final PMG-SAM model combines DRFM with high-resolution features from Hiera only and achieves the best overall performance: it yields the highest scores on all four accuracy metrics and the lowest values on both error metrics, including MAE. These results validate both the independent effectiveness of our two core innovations and their synergy: a well-designed fusion module combined with a selective feature injection strategy is the key to PMG-SAM’s performance.

4.7.3. Hyperparameter Sensitivity Analysis

To verify the rationality and robustness of the proposed framework, we conducted comprehensive sensitivity analyses on critical hyperparameters. All experiments were performed on the DIS-TE1–4 test sets. The results are visualized in Figure 9. For detailed numerical results across all DIS5K datasets, please refer to Table A1 in Appendix A.

(1) Structure of DRFM: As shown in Figure 9a,b, we analyzed the number of Stabilized Residual Blocks and the number of residual fusion iterations ($M$). The model achieves peak performance at intermediate settings of both parameters: reducing them limits the network’s capacity to capture fine-grained details, while increasing them introduces redundancy without performance gains. Note that the remaining structural parameter of DRFM is fixed at 6 due to the inherent structure of the frozen backbones.

(2) Configuration of BAIM: We further investigated the optimal number of BAIM modules and the necessity of the bidirectional mechanism. As illustrated in Figure 9c, the bidirectional configuration with two modules significantly outperforms both the unidirectional counterpart (Uni-dir) and the other tested module counts. This confirms that two bidirectional interaction stages are sufficient to align multi-modal features effectively.

(3) Loss Function Weights: Finally, we evaluated the weight ratio of the BCE, Dice, and IoU loss terms. Since the IoU loss value is numerically smaller than the BCE and Dice losses, a larger weight is typically required to balance the gradients. We tested IoU loss weights of 5, 10, and 15 while keeping the BCE and Dice weights at 1. As shown in Figure 9d, the 1:1:10 setting yields the best convergence and segmentation accuracy, validating our default configuration.

4.7.4. Analysis of Prior Guidance Quality and Error Propagation

To validate the rationale behind our dual-branch encoder, we conducted a quantitative analysis of the intermediate Pre-Mask generated by the U2-Net branch.

Superiority of Structural Prior. We compared the Pre-Mask against semantic priors, specifically CLIPSeg [54], using the official weights and a generic prompt. As shown in Table 5, CLIPSeg yields an extremely low IoU, indicating that semantic-based models struggle to capture the intricate boundary details required for DIS tasks. In contrast, our Pre-Mask achieves a substantially higher IoU and S-measure, verifying that the U2-Net branch provides superior, shape-aware structural guidance.

Refinement and Error Correction. Furthermore, our Final-Mask achieves a remarkable performance leap over the Pre-Mask, further boosting the IoU and reducing the MAE. To investigate the error propagation mechanism, we visualized the correlation between Pre-Mask and Final-Mask quality in Figure 10, which also reports the Pearson correlation between the two. Notably, a dense cluster of data points appears in the upper-left region, where the Pre-Mask IoU is low but the Final-Mask IoU is high. This demonstrates a powerful error correction mechanism: even when the prior guidance is erroneous or fails to locate the target, the subsequent decoder effectively leverages image features to recover the correct segmentation, preventing error propagation.
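The analysis behind Figure 10 amounts to two per-image statistics, sketched below with NumPy: the Pearson correlation between Pre-Mask IoU and Final-Mask IoU (via `np.corrcoef`), and a count of “recovered” images in the upper-left region of the scatter plot. The cut-offs `low=0.5` and `high=0.8` are hypothetical placeholders, not the thresholds used in the paper, and the function name is illustrative.

```python
import numpy as np


def prior_vs_final(pre_iou, final_iou, low=0.5, high=0.8):
    """Return (Pearson r, number of images with a weak prior but a strong final mask)."""
    pre = np.asarray(pre_iou, dtype=np.float64)
    fin = np.asarray(final_iou, dtype=np.float64)
    r = float(np.corrcoef(pre, fin)[0, 1])
    # Upper-left region of the scatter plot: low Pre-Mask IoU, high Final-Mask IoU.
    recovered = int(np.sum((pre < low) & (fin > high)))
    return r, recovered
```

A correlation close to 1 with an empty upper-left region would mean the decoder largely inherits the quality of the prior; a well-populated upper-left region is what signals recovery from a poor Pre-Mask.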

5. Conclusions

In this paper, we presented PMG-SAM, a novel framework that represents a paradigm shift in automatic segmentation. Our work is motivated by the fundamental limitations of SAM’s “segment everything” mode: its localization blindness in complex scenes and the computational inefficiency of its grid-based prompting. To overcome these challenges, we proposed a new “locate-and-refine” architecture.

This new paradigm is operationalized by a Pre-Mask Generator, which performs the critical “locate” step. It leverages a synergistic dual-branch encoder and a novel Dense Residual Fusion Module (DRFM) to produce a high-quality, dense Pre-Mask that provides a strong global prior for the target. This internal guidance signal is then passed to an enhanced mask decoder, which executes the “refine” step, augmented by high-resolution features to ensure precise boundary delineation. This two-stage process replaces SAM’s exhaustive search with intelligent, targeted refinement, achieving both high accuracy and efficiency.

The effectiveness of this paradigm is empirically validated through extensive experiments on both salient and camouflaged targets. Our parameter-efficient PMG-SAM not only drastically outperforms the automatic modes of SAM and SAM2 but also, on the more challenging DIS test sets, surpasses their performance when they are provided with perfect ground-truth bounding box prompts. This key result highlights that our dense, internal guidance is a more powerful mechanism than sparse, external prompting. Furthermore, on the COS task, PMG-SAM demonstrates strong transfer learning and domain generalization capabilities, achieving good performance after minimal fine-tuning. Our ablation studies further confirmed that each component of our design is crucial to the framework’s success.

In summary, PMG-SAM and its underlying “locate-and-refine” paradigm offer an effective and efficient solution to the inherent localization bottleneck of SAM’s automatic mode. Our work provides a valuable blueprint for developing future foundation models that are not only powerful and versatile but also truly and intelligently automatic in prompt-free scenarios.