Low-Light Image Segmentation on Edge Computing System

Abstract

1. Introduction

2. Background and Related Work

2.1. Illumination Enhancement Method

2.1.1. Non-ML-Based Method

2.1.2. ML-Based Method

2.2. Segmentation Method

3. Low-Light Image Segmentation Algorithm

3.1. Illumination Enhancement

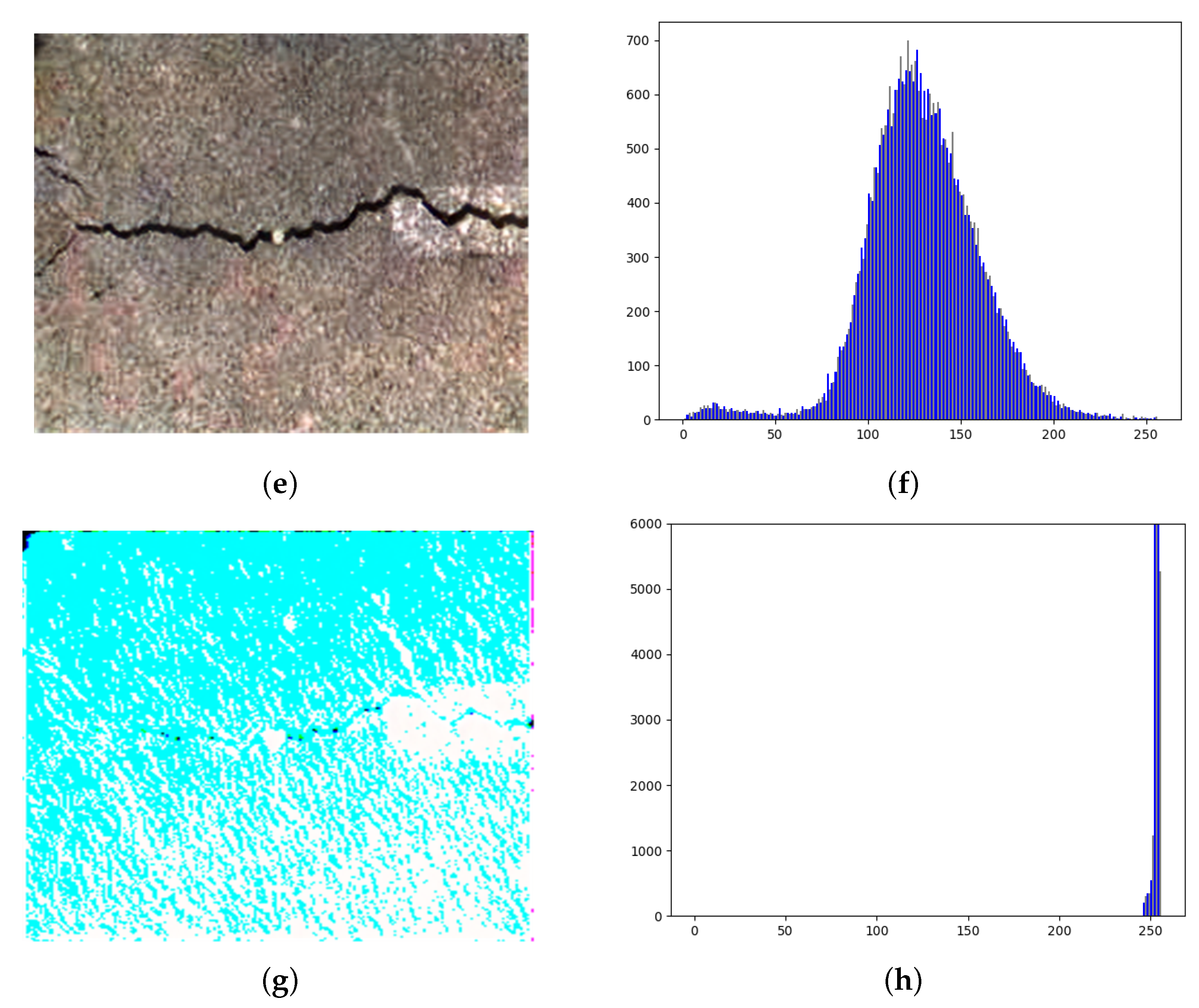

3.2. Contrast Enhancement

3.3. Image Segmentation

Algorithm 1. Low-Light Image Segmentation Algorithm

4. Experimental Result

4.1. Experimental Setup

4.2. Image Enhancement Performance

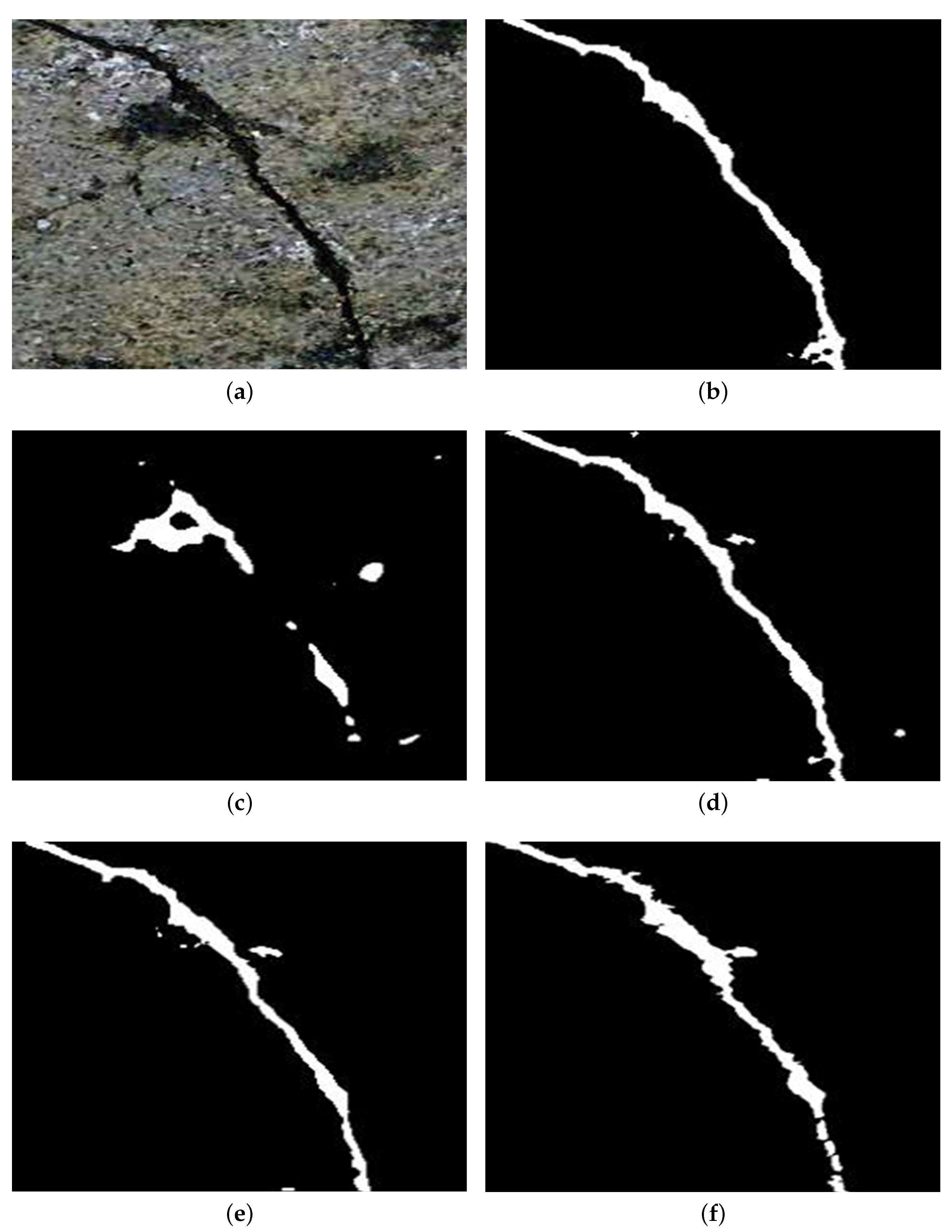

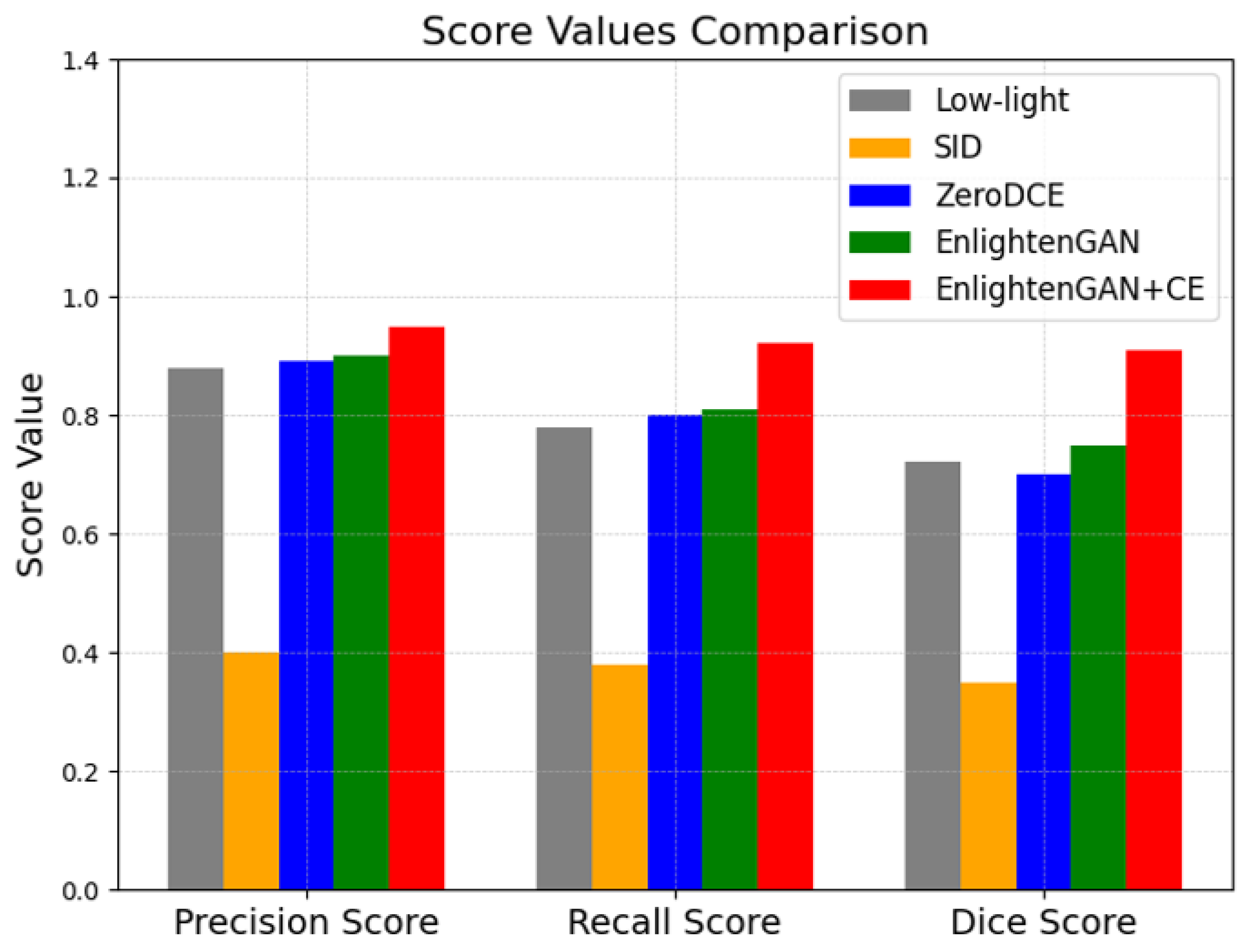

4.3. Accuracy Performance

4.4. Performance Comparison

4.5. Implementation Consideration

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Choi, S.-C.; Kim, S.-Y. Low-Light Image Segmentation on Edge Computing System. Sensors 2026, 26, 327. https://doi.org/10.3390/s26010327
