1. Introduction

With the rapid development of industrial manufacturing technology, inner-wall-shaped parts are widely used in industrial production, such as engine cylinder housings [1] and pipeline ball valves [2,3]. Among them, an inner-wall-shaped part with a narrow aperture and large cavity is a type of part with special structural characteristics and critical functions that plays an irreplaceable role in the field of industrial manufacturing. For example, in the manufacture of precision optical components, the inner wall surface quality of the injection mold affects the molding accuracy and optical performance of the optical components [4,5]. In addition, such parts are also used as carriers of certain special substances in industrial manufacturing for research in fluid mechanics and explosion characteristics [6,7]. The opening size of such parts is usually on the order of tens of millimeters, while the diameter of the inner cavity can reach hundreds of millimeters. Therefore, it is of great significance to perform a complete measurement of the inner surface 3D profile of such parts to obtain the critical geometric dimensions of structures on their inner walls. However, there are few studies on inner wall 3D measurement methods for narrow-aperture and large-cavity parts, and the special structural characteristics of such parts also bring difficulties to the design of sensors and measurement systems.

For inner-wall-shaped parts, the existing 3D measurement methods are mainly based on optical non-contact measurement. Compared with contact measurement methods, which suffer from low efficiency and poor flexibility [8], optical measurement methods are widely adopted because they are non-contact, fast, and precise. The measurement form can be divided into three types: point, line, and surface. The point measurement method is limited by measurement efficiency and data density, and its reliance on multi-degree-of-freedom motion mechanisms introduces motion errors into the measurement process, which makes it unsuitable for high-precision and rapid measurement in industrial settings. Wu et al. [9] designed an inner-wall measurement system for pipelines based on a position-sensitive detector, which realized 3D reconstruction of small-diameter pipelines. However, the measurement accuracy of the system for the inner diameter of the pipe is limited to 0.1 mm, which is insufficient for precision measurement. Zhang et al. [10] achieved 3D measurement of the groove structure on the inner surface of ball screw nuts with an inner diameter of 40.5 mm based on spectral confocal technology. The sensor measurement accuracy can reach 1 μm. However, the confocal measurement principle has problems such as high cost and small measurement range, which makes it unsuitable for full-profile measurement of the inner wall. There are two main surface measurement methods: fringe projection and digital holography [11]. Masayuki et al. [12] designed a multi-wavelength digital holographic detection system to detect and classify defects on the inner wall of copper tubes. César-Cruz et al. [13] designed a measurement system for 3D reconstruction of cylindrical objects based on the principle of fringe projection. The system can realize 3D reconstruction of objects placed on the inner surface of a cylinder, but the measurement deviation is on the millimeter scale, which indicates low accuracy. Sensors built on the surface measurement principle are relatively complex in structure and impose higher hardware requirements on the measurement system, which makes it impossible to achieve full-profile measurement of the inner wall of blind-hole structures and limits the measurement range and flexibility. In contrast, line measurement methods have the advantages of high measurement accuracy and efficiency. Owing to its simplicity of implementation, the line-structured light measurement principle can be applied to a variety of measurement scenarios. This technique has been extensively implemented for dimensional inspection of pipeline and cylinder inner walls [14,15] and, with equal effectiveness, for precision measurement of small bores and slots [16,17].

However, most of the current research on linear measurement methods for inner wall parts employs linear axis scanning to measure structures such as cylindrical inner walls, and the measurement accuracy is limited by the motion precision of the linear axis. In contrast, the rotation axis scanning method is more suitable for 3D measurement of narrow-aperture and large-cavity parts. Additionally, due to the circular closure property of angular indexing devices, the rotation axis can achieve higher motion accuracy compared to the linear axis [

18,

19]. Therefore, this article adopts a line-structured light rotary scanning method to build the measurement system. In order to avoid the problem in practical situations where the measured object has been fixed and is not suitable for movement, and to reduce the influence of the measured object load on the movement of the rotation axis, the system adopts a self-rotating scanning measurement method where the sensor rotates around the axis. When calibrating the relative position between the rotation axis and the sub-sensor in the self-rotating measurement system, the main challenge lies in the continuous change of the measurement range during rotation. This makes it difficult to calibrate the axis position by constructing feature points around the axis in the sensor coordinate system [

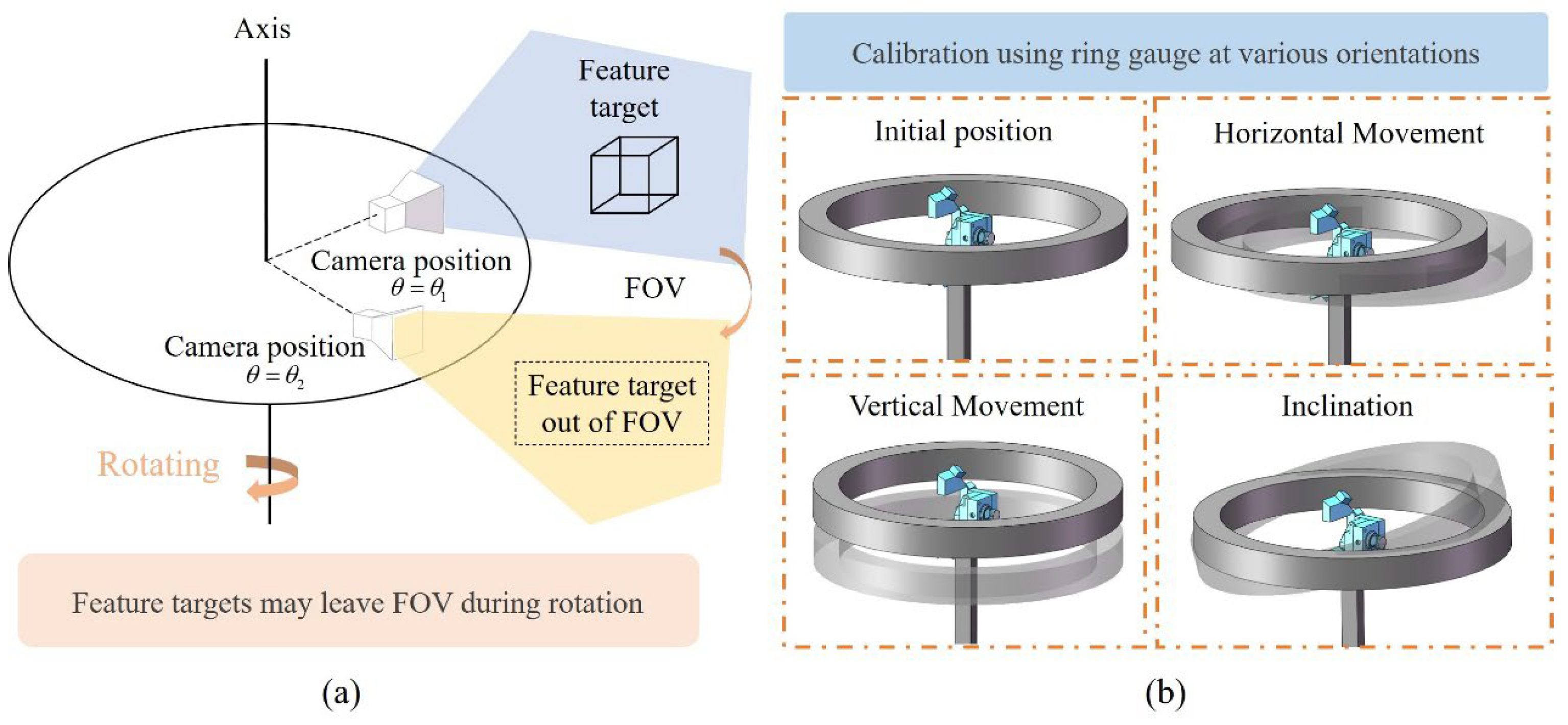

20]. In current research on calibration methods for the rotation axis in a self-rotating sensor coordinate system, Niu et al. [

21] employed an auxiliary camera and checkerboard to calibrate the rotation axis. They utilized stereo vision principles to determine the relative position between the camera on the rotating platform and the rotation axis. However, this method suffers from cumulative errors introduced by distributed calibration, and it also has limitations due to the fixed position of the auxiliary target. Liu et al. [

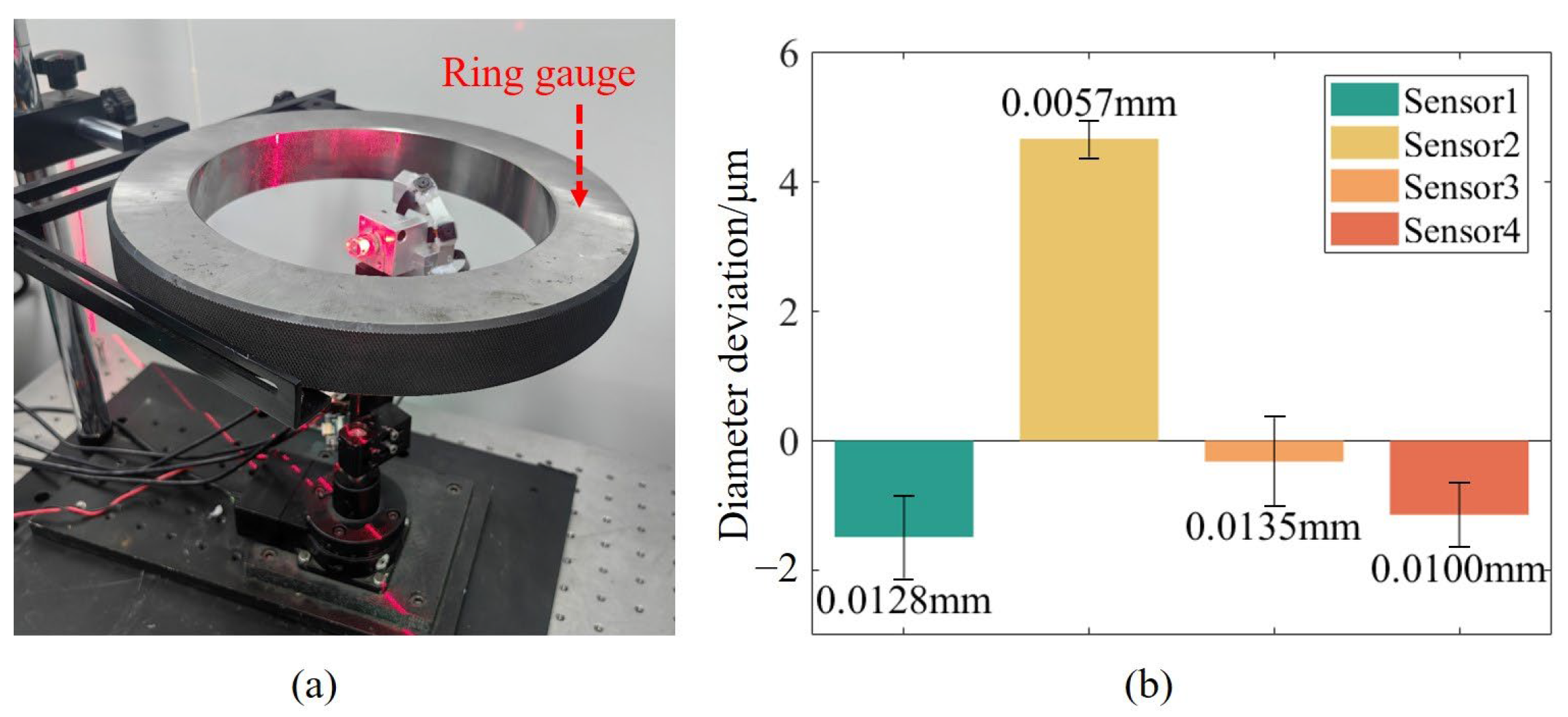

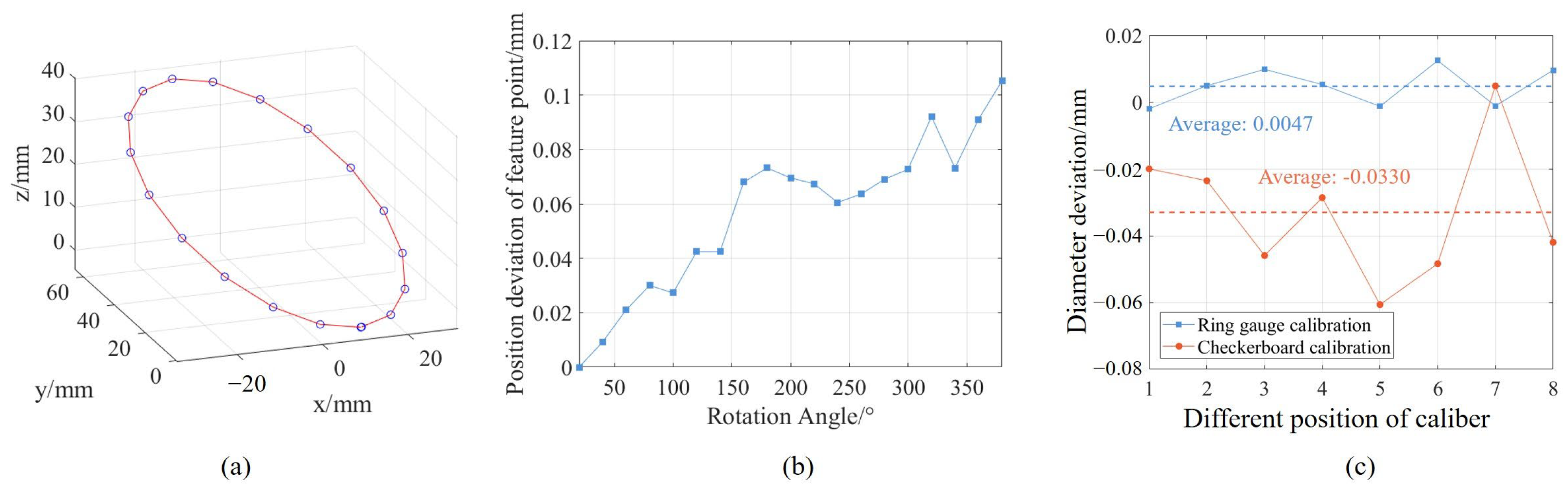

22] proposed a method where the camera and projector are rotated to different angles using a rotating mechanism to capture point cloud data of the same object from various positions. Then, they performed point cloud registration sequentially to establish the relationship between the camera and the rotation axis. However, this method tends to introduce significant cumulative errors in practical applications. To address this problem, this article proposes a calibration method for a rotation axis based on optimization, where the rotation axis position is optimized to obtain the precise relationship between the sensor and the rotation center by scanning ring gauges at different positions.

In order to achieve full-profile measurement of inner-wall-shaped parts, the measurement system must have a large field of view (FOV). Two solutions are commonly used to address the issue: a multi-axis measurement system and a multi-sensor measurement system [23,24]. Since the inner-wall-shaped parts have the characteristic of a small space, using a multi-axis measurement system will lead to a risk of collision during measurement and increase system complexity. In contrast, the multi-sensor configuration can provide complete coverage of the measurement field and has higher measurement efficiency. For narrow-aperture and large-cavity parts, their structural constraints lead to a contradiction between the measurement accuracy and the geometric parameters of the sensor. Therefore, it is necessary to design the structural parameters of the line-structured light sub-sensor to meet the various constraints present in practical measurements. Currently, many studies have analyzed the impact of parameters in line-structured light systems on measurement accuracy from various perspectives [25,26]. They have proposed many optimization design strategies to provide theoretical guidance for the design of line-structured light sensors [27,28]. However, there is a lack of application to real engineering measurement problems with multiple complex constraints.

To ensure that the sensor size meets the aperture limitations of the parts, this article adopts a configuration of low overlapping FOV for arranging multiple sub-sensors in the multi-sensor measurement system. Precisely determining the relative position among the sub-sensors is critical for achieving high-precision measurements. The key to the multi-sensor registration problem under low overlapping FOV is to construct a global feature. There are mainly two types of methods. One of them uses auxiliary targets to establish the relative position between two sub-sensors [29,30]. Another method uses high-precision 3D equipment such as theodolites or laser trackers to obtain global feature points [31,32]. These methods still have limitations in practice, as they depend on customized large calibration targets, auxiliary equipment, and complex operational procedures. This results in a lack of flexibility and generalizability, as well as higher implementation costs. Moreover, most existing calibration methods primarily focus on establishing multi-camera relationships from the perspective of camera imaging within the sensor, without analyzing the final measurement data generated by the sensor.

To address the limitations of existing measurement methods, this article proposes a multi-sensor self-rotating scanning measurement system based on the principle of line-structured light. This approach overcomes the constraints of traditional methods in addressing the 3D measurement of narrow-aperture and large-cavity parts, providing an innovative solution for the 3D measurement of inner walls with complex structures. The main contributions of this article are as follows:

(1) To address the issues of high measurement costs and low accuracy caused by axis hardware in traditional linear axis scanning measurement systems, this article proposes a self-rotating axis scanning approach to construct the measurement system. This method reduces hardware costs while improving measurement accuracy and efficiency, providing a reference for the design of three-dimensional measurement systems for inner-wall parts. In response to the challenge of rotation axis calibration in self-rotating scanning measurement systems, this article introduces a simple and high-precision method for calibrating the self-rotating axis.

(2) Due to the various structural constraints in the full-profile measurement of narrow-aperture and large-cavity parts, there exists a contradiction between the measurement accuracy and the geometric size of the sensor. This article proposes a structural parameters optimization design algorithm to achieve the best theoretical measurement accuracy while satisfying the constraints. It provides a reference solution for structural optimization under geometric constraints.

(3) To address the issues of low accuracy and high calibration costs in existing multi-sensor registration methods under low overlapping FOV, this article proposes a registration method based on the structural constraints of the measurement system itself. It provides a low-cost and high-precision solution for multi-sensor registration in such conditions.

The rest of this article is organized as follows. Section 2 introduces the geometric principle of the measurement system. Section 3 introduces the parameter analysis and optimization strategy for the line-structured light system. Section 4 introduces the rotation axis calibration and multi-sensor registration methods. Section 5 presents the experimental results of this article. Section 6 provides a summary of this article. The technical route of this article is shown in Figure 1.

2. Measurement System and Principles

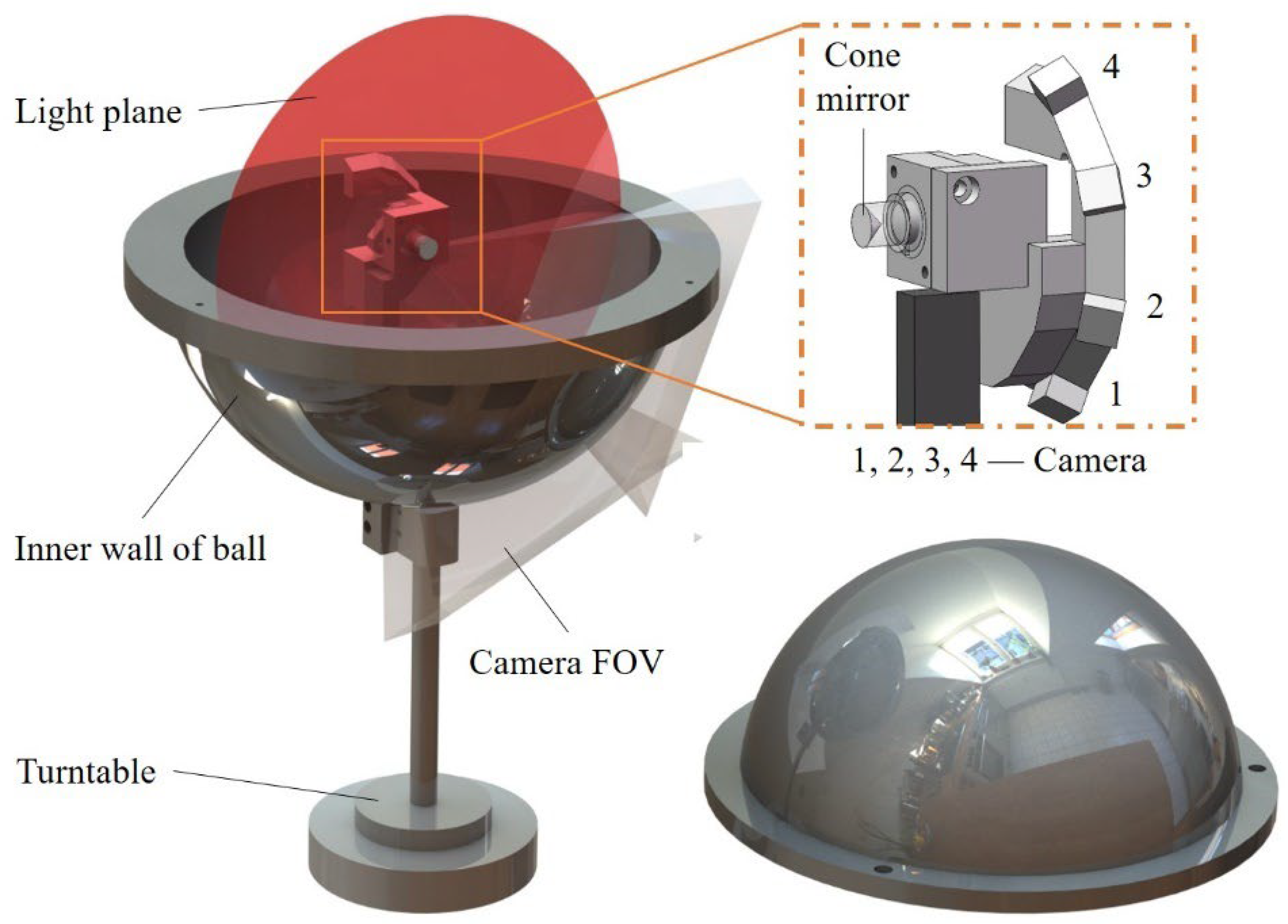

This article utilizes spherical inner cavity parts as the test objects for analysis. As shown in Figure 2, the sensor of the measurement system consists of four small cameras and a laser. The light emitted by the laser diode passes through a collimating lens and is directed onto a conical mirror with a 90° apex angle, generating the light plane. This plane intersects with the inner wall of the spherical cavity and produces a circular light stripe. The fields of view of the four cameras collectively cover half of the inner wall area. The full profile of the inner wall of the spherical cavity can be measured by driving the sensor to rotate 360° via a turntable.
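The rotary scan described above can be illustrated with a minimal sketch. It assumes that each stripe profile is already expressed in a frame whose Z axis coincides with the rotation axis, which in practice is what the rotation axis calibration of Section 4 has to provide. The function names `rotate_about_z` and `sweep_profiles` are hypothetical and are not taken from the article.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def rotate_about_z(point: Point, angle_deg: float) -> Point:
    """Rotate a 3D point about the Z axis by angle_deg (right-handed)."""
    x, y, z = point
    t = math.radians(angle_deg)
    c, s = math.cos(t), math.sin(t)
    return (c * x - s * y, s * x + c * y, z)


def sweep_profiles(profiles: Sequence[Tuple[float, Sequence[Point]]]) -> List[Point]:
    """Assemble a point cloud from (turntable_angle_deg, profile_points) pairs.

    Assumption: the profile points are given in a frame whose Z axis is the
    rotation axis of the turntable.
    """
    cloud: List[Point] = []
    for angle_deg, points in profiles:
        cloud.extend(rotate_about_z(p, angle_deg) for p in points)
    return cloud


# The same stripe point observed at two turntable positions.
cloud = sweep_profiles([(0.0, [(1.0, 0.0, 5.0)]), (90.0, [(1.0, 0.0, 5.0)])])
```

Under this assumption, a full 360° sweep of the stripe covers the whole inner wall, and any error in the assumed axis position shows up directly as a misalignment between profiles taken at different angles.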

Each camera, together with the laser, forms a sub-sensor that follows the laser triangulation principle. As shown in Equation (1), the camera imaging process is regarded as a pinhole model [33] and is combined with the light plane equation.

In Equation (1), u and v represent the pixel coordinates of the center of the light stripe in the image captured by the camera, and (Xc, Yc, Zc) represents the three-dimensional coordinates, in the camera coordinate system, of the space point corresponding to the center pixel of the light stripe. A, B, C, and D are the light plane parameters. Fx and Fy are the equivalent focal lengths in the X and Y directions in the camera coordinate system. u0 and v0 represent the coordinates of the principal point.
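Equation (1) is not reproduced in this text. The sketch below assumes the standard pinhole form u = Fx·Xc/Zc + u0, v = Fy·Yc/Zc + v0 combined with the light plane A·Xc + B·Yc + C·Zc + D = 0, and ignores lens distortion. The function name `triangulate_pixel` and the numerical values in the example are illustrative assumptions only.

```python
from typing import Tuple


def triangulate_pixel(
    u: float,
    v: float,
    fx: float,
    fy: float,
    u0: float,
    v0: float,
    plane: Tuple[float, float, float, float],
) -> Tuple[float, float, float]:
    """Intersect the viewing ray of pixel (u, v) with the light plane.

    The plane is (A, B, C, D) with A*Xc + B*Yc + C*Zc + D = 0, expressed in
    the camera coordinate system. Lens distortion is ignored (assumption).
    """
    a, b, c, d = plane
    # Normalised image coordinates: the viewing ray is (x, y, 1) * Zc.
    x = (u - u0) / fx
    y = (v - v0) / fy
    denom = a * x + b * y + c
    if abs(denom) < 1e-12:
        raise ValueError("viewing ray is parallel to the light plane")
    zc = -d / denom
    return (x * zc, y * zc, zc)


# Illustrative values: a light plane at Zc = 100 mm in front of the camera.
point = triangulate_pixel(1496.0, 972.0, 2000.0, 2000.0, 1296.0, 972.0,
                          (0.0, 0.0, 1.0, -100.0))
```

Each stripe-center pixel therefore maps to exactly one 3D point, provided the viewing ray is not parallel to the light plane.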

The point cloud data of the measured surface can be obtained by integrating the point cloud data of the four sensors, as shown in Equation (2), where (Xw, Yw, Zw) represents the point cloud coordinates in the world coordinate system and (Xci, Yci, Zci) represents the three-dimensional coordinates in the i-th camera coordinate system. RTi represents the rigid body transformation matrix from the i-th camera coordinate system to the world coordinate system.
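Equation (2) is likewise not reproduced here. The following sketch assumes that each RTi is a 4 × 4 homogeneous matrix acting on (Xci, Yci, Zci, 1) and that the merged cloud is simply the union of the transformed sub-sensor clouds. The names `to_world` and `merge_clouds` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix4 = Sequence[Sequence[float]]  # 4x4 homogeneous transform, row-major


def to_world(points: Sequence[Point], rt: Matrix4) -> List[Point]:
    """Apply a rigid transform (rotation + translation) to camera-frame points."""
    world: List[Point] = []
    for x, y, z in points:
        world.append((
            rt[0][0] * x + rt[0][1] * y + rt[0][2] * z + rt[0][3],
            rt[1][0] * x + rt[1][1] * y + rt[1][2] * z + rt[1][3],
            rt[2][0] * x + rt[2][1] * y + rt[2][2] * z + rt[2][3],
        ))
    return world


def merge_clouds(clouds: Sequence[Sequence[Point]],
                 transforms: Sequence[Matrix4]) -> List[Point]:
    """Transform every sub-sensor cloud into the world frame and concatenate."""
    if len(clouds) != len(transforms):
        raise ValueError("one transform is required per sub-sensor cloud")
    merged: List[Point] = []
    for points, rt in zip(clouds, transforms):
        merged.extend(to_world(points, rt))
    return merged


identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
shift = [[1, 0, 0, 1], [0, 1, 0, 2], [0, 0, 1, 3], [0, 0, 0, 1]]
merged = merge_clouds([[(0.0, 0.0, 0.0)], [(1.0, 1.0, 1.0)]], [identity, shift])
```

In the real system, the four transforms would come from the multi-sensor registration described in Section 4 rather than being written by hand.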

3. Structural Optimization Design of Full-Profile Measurement System Under Aperture Constraints

3.1. Precision Analysis of Line-Structured Light Measurement

The measurement accuracy of a line-structured light sensor is influenced by various hardware structural parameters. When addressing practical sensor design requirements, the values of these parameters are often subject to certain constraints. Therefore, it is necessary to analyze the impact of different parameters on measurement accuracy, which guarantees that the designed sensor achieves optimal theoretical measurement accuracy while satisfying external constraints.

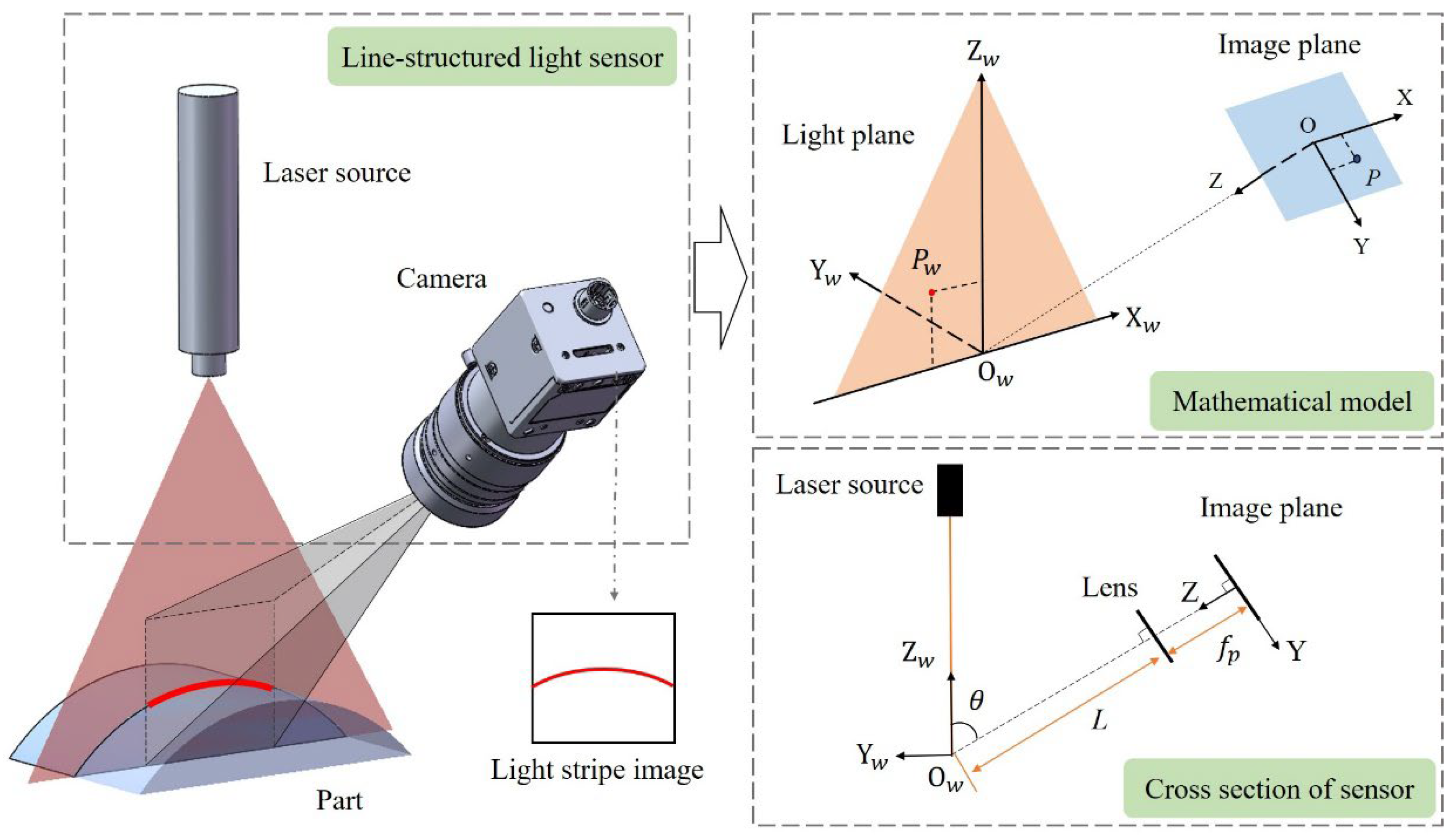

According to the laser triangulation principle, the measurement principle of the line-structured light measurement system is shown in Figure 3. The correspondence between the 2D pixel coordinates in the camera target surface and the 3D spatial coordinates in the light plane is established by calibrating the relative position between the camera and the light plane. The world coordinate system Ow-XwYwZw is established based on the position of the light plane. O-XYZ is the image coordinate system, and the X-axis in the image coordinate system is parallel to the Xw-axis in the world coordinate system. θ represents the angle between the camera optical axis OOw and the light plane; L represents the working distance of the camera; fp represents the distance from the optical center of the lens to the imaging plane. According to the geometric relationship, for any point Pw in the light plane, the coordinates of its corresponding point P in the imaging plane can be expressed by Equation (3), where d represents the pixel size of the camera.

The measurement accuracy of a line-structured light measurement system can be reflected by its measurement resolving power, which is defined as the minimum change in the measured quantity that can cause a detectable change in the indication value. In the line-structured light measurement system, the change of the indicated value can be considered as the positional change of pixels in the imaging plane of the camera. Based on Equation (3), the resolving power of the system in the Xw and Zw directions can be obtained by calculating the partial derivatives of u and v. For the convenience of analysis, the expression for the system’s measurement resolving power μ is defined as shown in Equation (4).

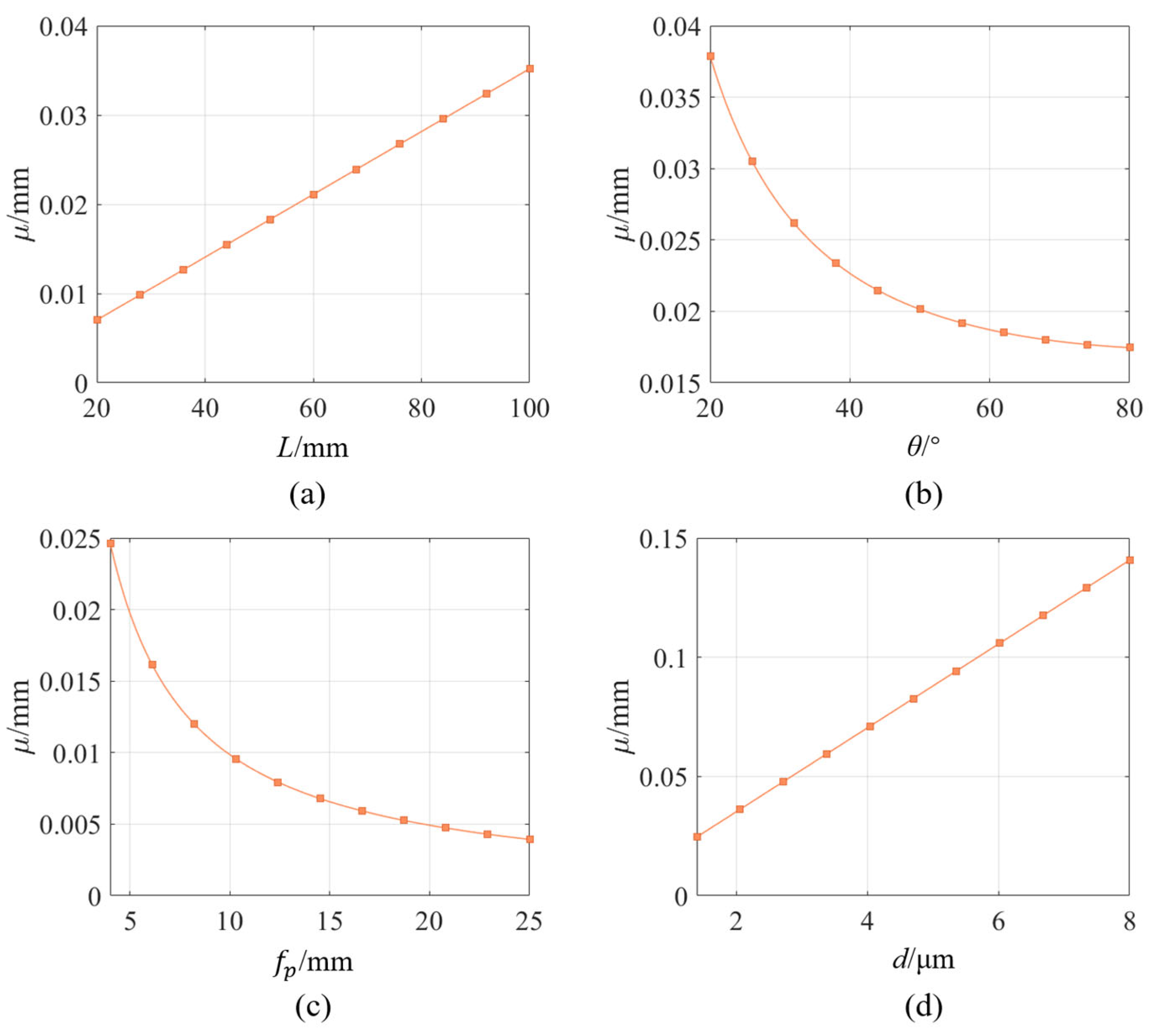

According to Equation (4), it can be seen that the resolving power of the line-structured light system is related to multiple hardware structural parameters, including the camera working distance L, focal length fp, the size of pixel d, and the angle θ between the camera’s optical axis and the light plane (observation angle). Additionally, the value of resolving power varies at different positions on the imaging plane of the camera. To further explore the influence of these structural parameters on measurement resolving power, this article simulates the relationship between each parameter and the resolving power, and plots the corresponding curves for illustration.

The preset sensor structure parameters in the simulation are as follows: working distance 70 mm, lens focal length 4 mm, observation angle 35°, pixel size 1.4 μm, and camera target resolution 2592 × 1944. From Equation (4), it can be noted that the measurement resolving power at different pixel positions in the camera imaging plane is different, but their variation with the structural parameters is uniform. Therefore, in the simulation, the measurement resolving power at the center point of the imaging plane is taken as an example for analysis, while only modifying one single variable parameter at a time.

Figure 4 illustrates the influence of different parameters on the resolving power of the line-structured light system. It can be seen that the resolving power value increases with the working distance L and the pixel size d, and decreases as the observation angle θ and the focal length fp increase. According to the definition of resolving power, a larger resolving power value means that a one-pixel change in the imaging plane corresponds to a larger displacement in real space. As a result, minor errors in extracting pixel coordinates from images can lead to significant positional deviations in three-dimensional space, which degrades the system’s measurement accuracy. Therefore, when designing a line-structured light measurement system, it is necessary to comprehensively consider the influence of multiple structural parameters to achieve the optimal resolving power for the system.
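The trends above can be checked with a rough numerical sketch. It does not implement Equation (4), which is not reproduced in this text. Instead it uses a first-order approximation that is valid only near the image center: one pixel corresponds to about L·d/fp laterally, and to about L·d/(fp·sin θ) along the in-plane direction that is foreshortened by the observation angle. Treating that foreshortened direction as the Zw direction, and the function name `resolving_power_center`, are assumptions.

```python
import math
from typing import Tuple


def resolving_power_center(L_mm: float, fp_mm: float, d_mm: float,
                           theta_deg: float) -> Tuple[float, float]:
    """Approximate displacement (mm) per pixel at the image center.

    Returns (lateral, depth): lateral is the direction parallel to the image
    X axis, depth is the in-plane direction foreshortened by sin(theta).
    First-order approximation only, not the article's Equation (4).
    """
    lateral = L_mm * d_mm / fp_mm
    depth = lateral / math.sin(math.radians(theta_deg))
    return lateral, depth


# Preset simulation parameters from the text: L = 70 mm, fp = 4 mm,
# d = 1.4 um = 0.0014 mm, theta = 35 degrees.
mu_x, mu_z = resolving_power_center(70.0, 4.0, 0.0014, 35.0)
```

With the preset parameters this approximation gives roughly 0.0245 mm per pixel laterally and roughly 0.043 mm per pixel in the foreshortened direction, and it reproduces the qualitative trends reported for Figure 4.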

3.2. Optimal Accuracy Structural Optimization Design

Equation (4) illustrates the structural parameters that influence the resolving power of the line-structured light measurement system. However, in practical engineering problems, there are still many constraints that limit the range of variation for these parameters. For the multi-sensor inner wall measurement system designed for spherical cavity parts in this article, there are primarily two constraints. One of them is the limitation of the aperture for the parts. To ensure that the sensor can penetrate into the part and avoid collision risks during measurement, the sensor size should not be too large. On the other hand, the measurement system adopts multiple sub-sensors, requiring overlapping FOV between adjacent sub-sensors to ensure complete 3D measurement of the inner wall. When improving measurement accuracy by increasing the observation angle and reducing the sensor working distance, the geometric size of the sensor increases and the sensor’s FOV decreases, resulting in gaps in the 3D data measured by the system. The influence of these structural parameters and constraints on the system’s measurement accuracy is contradictory. Therefore, it is necessary to find the optimal solution for the system’s structural parameters while considering the limitations imposed by the constraints.

The measurement system designed in this article adopts four independent cameras, each paired with a light plane to form sub-sensors, collectively performing scanning measurements on the inner wall of the spherical cavity part. Due to hardware limitations, the parameters of the cameras in the measurement system are fixed. Additionally, for the measurement of inner-wall-shaped parts, the geometric size of the sensor is a more critical requirement in practical engineering applications, which will directly determine the feasibility of the measurement system. Therefore, the optimization design focuses more on the structural parameters that are more sensitive to geometric constraints, namely the camera’s observation angle and working distance.

Based on the above approach, the constraints in the measurement process are shown in Figure 5. The two constraints mentioned above are used to define the boundary conditions in the optimization objective function. The first constraint is to ensure that there is an overlapping FOV between the adjacent cameras, but this is difficult to calculate directly due to the differences in the poses of the cameras. Therefore, the measurement range of each sub-sensor is transformed into the central angle of the circular light stripe captured by each camera, as shown in Figure 5a. In the system, the light plane intersects with the inner wall of the spherical cavity to form a circular stripe, and the four cameras are uniformly distributed within a 180° range. Considering the symmetry of the measured part, it is only necessary to ensure that the central angle of the light stripe captured by a single sub-sensor is greater than 45° to satisfy the condition. For the convenience of analysis, by neglecting the size of hardware components such as cameras and lasers in the system, the critical dimension affecting sensor penetration can be calculated using the geometric relationship between the camera’s working distance and observation angle. This dimension must be smaller than the aperture of the parts, as shown in Figure 5b.

Based on the above analysis, taking the resolving power as the objective function, the expression for this optimization problem is shown in Equation (5). Ω represents the value range of θ and L. α represents the central angle of the light stripe captured by the sensor. Dsensor represents the critical dimension of the sensor. In the boundary conditions of the sensor, α0 and D0 denote the central angle of the light stripe and the spatial dimension constraints of the sensor, respectively. According to the expression of optimization, a penalty function approach can be used to construct the optimization objective function as shown in Equation (6), where σ represents the penalty factor, which is a sufficiently large positive number that forces the optimization objective function to achieve the optimal value while satisfying the constraints.
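The penalty-function idea can be sketched as follows. Equations (5) and (6) are not reproduced in this text, so the quadratic exterior penalty used here is an assumption, and the article's exact form may differ. The models passed in for μ, α, and Dsensor are placeholders chosen only to make the example run; they are not the article's geometry. All function names are hypothetical.

```python
import math
from typing import Callable, Iterable, Optional, Tuple

Model = Callable[[float, float], float]  # maps (theta_deg, L_mm) to a value


def penalized_objective(mu: float, alpha: float, d_sensor: float,
                        alpha0: float, d0: float, sigma: float) -> float:
    """Objective plus quadratic penalties for violated constraints.

    Constraints assumed: alpha >= alpha0 (stripe coverage) and
    d_sensor <= d0 (sensor must fit through the aperture).
    """
    fov_violation = max(0.0, alpha0 - alpha)
    size_violation = max(0.0, d_sensor - d0)
    return mu + sigma * (fov_violation ** 2 + size_violation ** 2)


def grid_search(thetas: Iterable[float], lengths: Iterable[float],
                mu_fn: Model, alpha_fn: Model, size_fn: Model,
                alpha0: float, d0: float,
                sigma: float = 1e6) -> Optional[Tuple[float, float, float]]:
    """Return (value, theta, L) minimising the penalised objective on a grid."""
    lengths = list(lengths)
    best: Optional[Tuple[float, float, float]] = None
    for theta in thetas:
        for length in lengths:
            value = penalized_objective(
                mu_fn(theta, length), alpha_fn(theta, length),
                size_fn(theta, length), alpha0, d0, sigma)
            if best is None or value < best[0]:
                best = (value, theta, length)
    return best


# Placeholder models (assumptions, NOT the article's expressions).
mu_model = lambda th, L: L * 0.0014 / (4.0 * math.sin(math.radians(th)))
alpha_model = lambda th, L: 0.8 * L                         # degrees, toy model
size_model = lambda th, L: L * math.sin(math.radians(th))   # mm, toy model

best = grid_search(range(20, 65, 5), range(50, 105, 5),
                   mu_model, alpha_model, size_model, alpha0=45.0, d0=40.0)
```

With these toy models the search settles on the grid point with the largest observation angle and the shortest working distance that still satisfy both constraints, which mirrors the trade-off described in Section 3.2. A gradient-free or gradient-based optimizer could replace the grid search without changing the penalty construction.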