1. Introduction

Computer vision systems are becoming increasingly essential in several scientific fields, especially robotics and machine perception. Understanding a scene and the shape of the objects therein is a major challenge in many surveillance, navigation, and segmentation approaches. In this context, inferring the geometry of a scene using visual cues from camera images acquired at sparse locations, also known as “stereo reconstruction”, is a well-established approach. Variational methods fall into this class of applications, where the reconstruction problem is cast into a classic inversion scheme using a 2D/3D geometric model (i.e., a curve or surface), and this model is then evolved to outline the object of interest [1].

On the one hand, the mathematical framework of shape reconstruction from images is well understood. On the other hand, stereo vision is inherently limited by its reliance on information within the visible spectrum and becomes unreliable in low ambient light or in the presence of obstructing factors such as rain, fog, or smoke. The integration of radar sensing within such inversion frameworks would be highly beneficial, as radars can operate effectively at night and can probe scenes even when adverse weather conditions render the optical alternatives ineffective [2].

In order to probe the investigated scene, classical radar imaging techniques leverage actively generated signals, often linear frequency-modulated (LFM) chirps, emitted by a moving antenna. The back-scattered echoes recorded at different locations on the antenna flight path are then combined (i.e., coherently summed) to generate a high-resolution image of the area of interest. This technique is known as Synthetic Aperture Radar (SAR) imaging [3,4,5]. It should be noted that coherent summation requires the synchronization of signals and the careful control of the antenna’s position along the flight path. In addition, processing techniques typically rely on regular sampling in space. As such, SAR applications are restricted to linear or circular antenna paths, and data acquisition becomes more complex as the signal frequency increases.

SAR has also been used in connection with additional post-processing techniques to retrieve the geometric properties of scenes. Interferometry and differential interferometry [6,7], for example, are routinely used to obtain land topography or to monitor changes in the scene over time. SAR technology has been extended to three-dimensional imaging applications, where the scene is modeled as a 3D reflectivity function with values computed from measurements [8,9]. Complementing these tomographic approaches, some near-field techniques have also been proposed for 3D SAR [10].

SAR does not offer directly retrievable representations of shapes within the scene, and shape reconstruction is typically achieved by post-processing the radar imaging products, often leveraging computer vision techniques originally designed for optical images.

Although image formation requires simple but rigid antenna configurations, a reconstruction approach in which radar and camera data are collected jointly at sparse locations and combined within the same mathematical framework would be highly desirable. However, before tackling such a joint inversion task, we first demonstrate that radars can be used successfully by themselves, albeit less practically, in a shape reconstruction framework. In principle, embedding radar signals into an inversion scheme can be achieved by constructing an energy functional (or cost function) using a least-squares approach aimed at minimizing the differences between recorded signals and their simulated counterparts (with the latter computed from a suitable reference model). This methodology is commonly known as Full Waveform Inversion (FWI) and has achieved a good degree of success with acoustic signals in geophysics, medical applications, and non-destructive testing [11,12,13], where the model being optimized typically consists of the distribution of sought physical properties within the cells (or at the nodes) of a discretized volume. However, despite its sound mathematical foundation, practical FWI applications suffer from the “cycle-skipping” phenomenon [11], which becomes increasingly severe at higher frequencies. Cycle skipping is already a significant limitation when dealing with acoustic frequencies, and it would render radar-based applications, which typically operate at frequencies of the order of MHz or GHz, effectively unfeasible. In this context, Yildirim et al., 2018 [14] and Bignardi et al., 2021 [15] demonstrated that it is possible to leverage variational methods to build an inversion framework analogous in all respects to stereo reconstruction from images, but driven by radar signals instead. Even when the return signal is demodulated, its inclusion in a least-squares form leads to optimization problems affected by a large number of local minima. For this reason, shape inversion has rarely been used with this kind of data. Nevertheless, Bignardi et al., 2021 [15] demonstrated that shape reconstruction can still be achieved after applying a suitable preprocessing strategy (specifically designed to mitigate the cycle-skipping problem), showing that radar-based inversion is robust enough to enable shape reconstruction, even by itself.

Therefore, in contrast to traditional radar imaging, where the focus is to create 2D/3D images (a grid of discrete pixels/voxels), we tackle the reconstruction problem by inverting the high-frequency radar signal back-reflected from the scene using a deformable shape model. In particular, while Bignardi et al. [15] formulated the inversion of pulse-compressed signals in the time domain and demonstrated that the approach is suitable for shape reconstruction using the level-set method as an implicit representation of the scene geometry [16], we investigate here the shape reconstruction problem from deramped (i.e., stretch-processed) radar echoes in the frequency domain. We obtain a variational approach in which we minimize an energy functional designed to attain its minimum when the shape’s radar response is most similar to the input data. During minimization, our initial shape evolves continuously until the desired geometry is found.

Despite the different formulations, several of the results from our previous work [15,16] are immediately applicable here as well. In our formulation, the inversion problem relies on the capability of optimizing the geometric shape and location of a scene so that the amount of power back-reflected toward the receiving antennas from the portions of the scene located at specific distances reproduces the data. In doing so, the problem becomes dependent on the amplitude of the signals rather than on the Doppler shift (i.e., the signal phases). Unfortunately, the pulse compression approach [15] cannot be straightforwardly carried over to the frequency domain and requires a change in the preprocessing strategy. We have found that leveraging stretch processing can be successful, as it allows one to cast the back-scattered time series (i.e., time-dependent information) into a range-dependent electric field strength profile. Our approach depends mainly on the signal strength over the range for each measurement, and we do not make use of the phase relations across different measurements, which makes our method noncoherent. The immediate advantages of combining a noncoherent inversion approach with an explicit-shape model-based inversion are (1) the ability to handle sparse measurements and independence from hardware precision and tolerance considerations (coherence becomes harder to implement as the signal frequencies increase) and (2) the natural ability to handle visibility and occlusions [17,18].

Since some of these aspects were discussed in depth in Bignardi et al. (2021, 2023) [15,16], where a purely time-domain approach was taken using pulse compression, the goal of this paper is to use the stretch processing technique to lay the foundation of a frequency-domain formulation for shape reconstruction within a similar explicit geometric framework. We will show, in a simplified 2D context at this initial stage, that active contours and the level-set method (LSM) can be leveraged for shape evolution in conjunction with deramping techniques and therefore provide an ideal environment for further three-dimensional development.

In the following, Section 2 describes how we formulate the cost functional for our inversion using preprocessed data and details how we compute the observable quantities in the frequency domain. In particular, while the mathematical framework developed here is valid for general three-dimensional problems, since we already know that this class of problems can be easily formulated in three dimensions [15], we consider two-dimensional geometries for ease of implementation and focus our attention on assessing the success of the frequency-domain reconstruction instead.

Section 3 demonstrates some example reconstructions in which the geometry of the scene is represented by a polygonal object. Finally, we discuss our findings and conclusions in Section 4.

2. Materials and Methods

We consider a scene/object that we want to reconstruct, probed by a set of antennas arbitrarily located around it. We assume that the antenna locations are known and that these antennas probe the scene by transmitting linear frequency-modulated (LFM) chirp pulses and collecting the echoes. These echoes are then deramped, which provides the key observable used in our method: an electric field strength density profile over the antenna range. This is possible because all reflections coming from a certain range are represented by a unique frequency signature in the deramped signal; thus, the spectral analysis of the deramped signal yields, on average, the amount of electric field strength reflected from a given range. We will briefly discuss why extracting such information from the echoes is feasible and formulate our forward model based on this observable quantity. As a result, our forward model, given an antenna location and a shape, will generate a vector of average electric field strengths over a range decomposition of the space centered around the antenna. Our inverse model will then be responsible for comparing these vectors of electric field strengths to those obtained from our simulated measurements (i.e., the output of the forward model for the actual, unknown scene) and for evolving our deformable shape model over iterations to match the actual scene. For ease of implementation, we will use a polygonal shape representation for the scene.

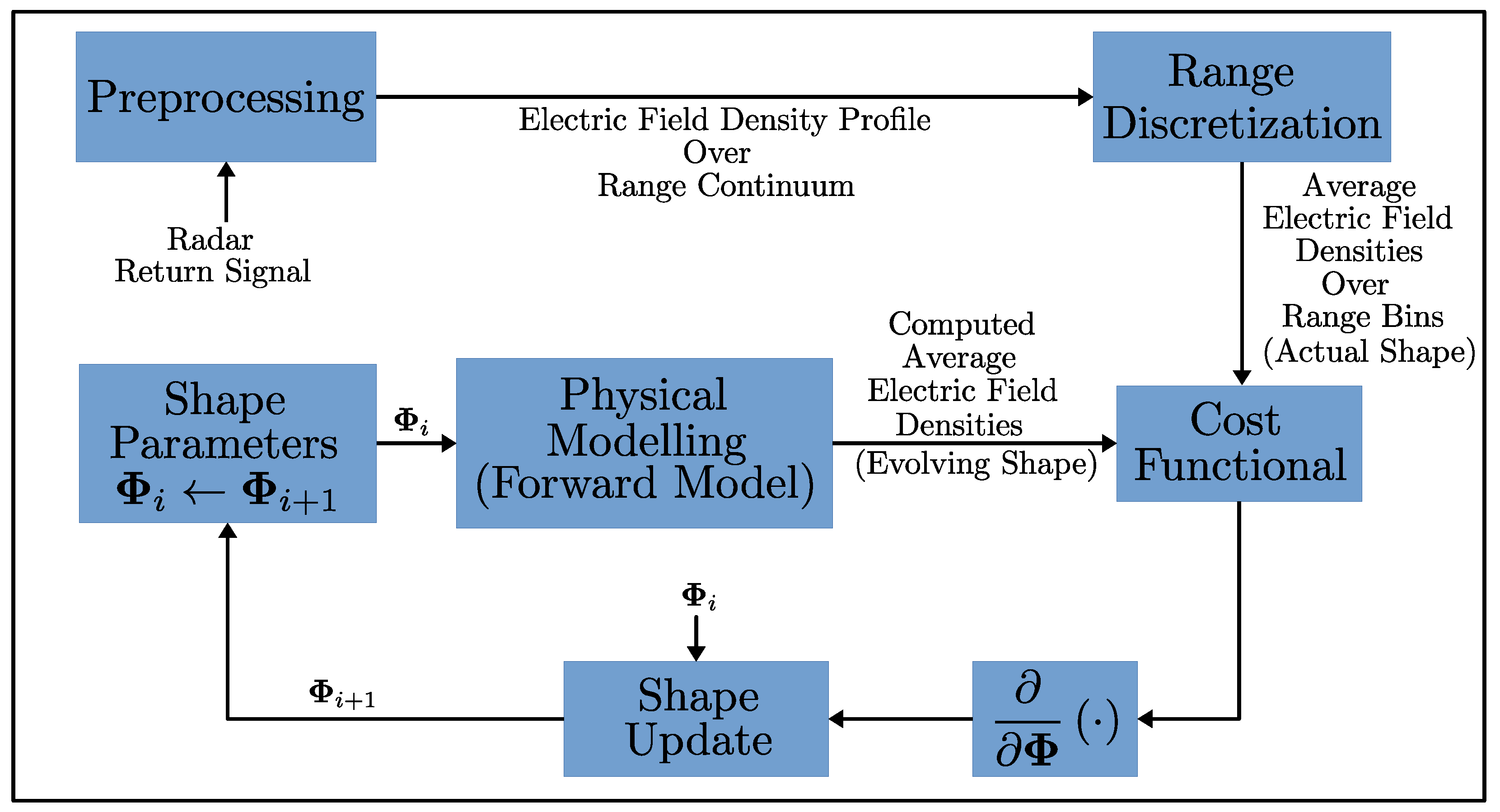

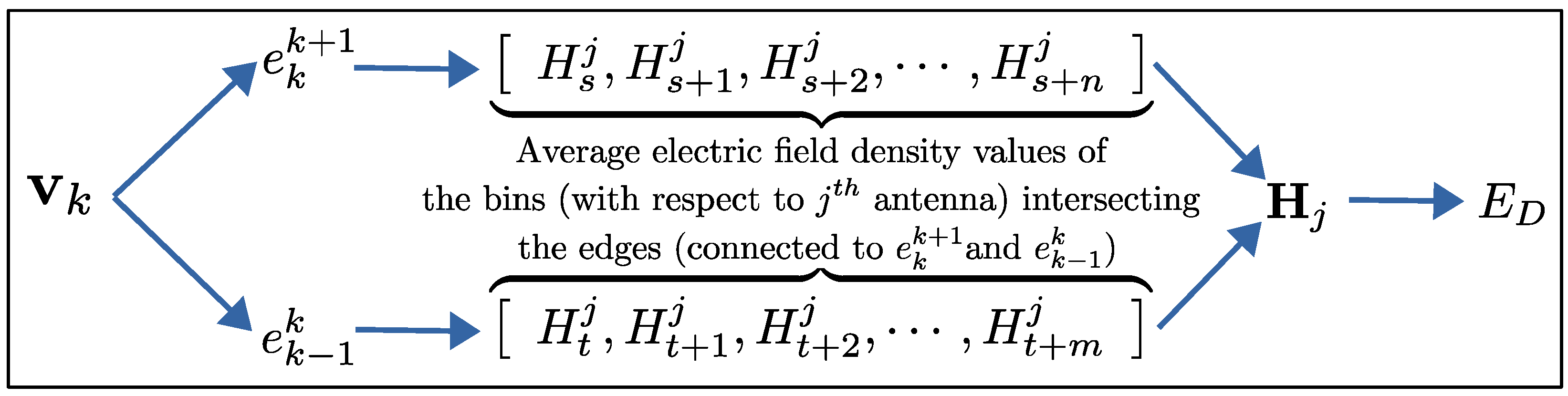

Figure 1 shows an overview of our shape reconstruction method, while the following subsections will detail several aspects of the different components listed therein.

2.1. Preprocessing

Since our shape estimation scheme relies on iteratively evolving a shape model to match the actual scene, careful design of the cost functional is critical. This is particularly challenging for radar sensing, as our primary information sources are signals oscillating at high frequencies. Incorporating radar data within a least-squares energy functional presents a significant challenge, as the resulting functional would be affected by an intractable number of local minima [15]. Consequently, a simplistic design for the cost functional would transfer this high-frequency behavior into the parameter space (shape), populating our functional with unwanted local minima due to cycle-skipping phenomena [11]. For an iterative algorithm that depends on gradient information, this complication renders the approach impractical, as gradient information will drive the shape’s evolution toward the nearest local minimum. This concern is especially relevant for applications like ours that use high-bandwidth waveforms. To address this issue, we propose a novel preprocessing method for our return signal that enables us to design our cost functional using information independent of the high-frequency waveform structure. Ideally, the information (observable) to be extracted from the radar signal should possess the following three properties for our method to work:

It should maximize the retention of the geometric information contained within the radar echo.

It should be as decoupled from the high-frequency oscillatory structure of the carrier waveform as possible.

It should exhibit smooth variations with respect to shape geometry, ensuring gradient information for guiding evolving shape models to the actual shape.

2.2. Extraction of Field Strength Profile over Range

Radar measurements comprise back-reflected returns from individual scatterers within the scene that interfere both constructively and destructively to form the observed signal. Consequently, scene shape information is embedded in the radar’s return signal in a highly convoluted manner, making the raw data unsuitable for our application. Furthermore, significant hardware constraints must be addressed, as most radar systems operate at frequencies substantially exceeding the sampling capabilities of even advanced analog-to-digital converters (ADCs) [19]. Although demodulation techniques can partially mitigate sampling requirements, challenges persist when processing high-bandwidth signals. Moreover, since a geometric object placed in front of the radar spans a continuum of range values, the resulting echo is a composite of return signals with varying time delays. To address these challenges, we propose a two-step preprocessing methodology to decompose the return signal into its range-dependent components.

The first step uses the established deramping technique (also termed stretch processing [20]). This approach involves mixing the radar return signal with a heterodyne signal, that is, a time-delayed replica of the transmitted signal. This process generates a new signal with substantially reduced frequency content, thereby significantly lowering the sampling requirements. Additionally, when the transmitted signal is a linear frequency-modulated (LFM) pulse, this technique effectively produces a range decomposition of the radar echo. From a mathematical perspective, for a scene scatterer with a range (round-trip distance) of $r$ relative to a transmitter–receiver pair, the stretch processor output (excluding pulse windowing) is expressed as follows:

$$s_{\mathrm{d}}(t) = E\, e^{\,j2\pi f_c(\tau_h-\tau)}\, e^{\,j\pi\gamma(\tau-\tau_h)^2}\, e^{\,j2\pi\gamma(\tau_h-\tau)t}, \qquad (1)$$

where $\tau = r/c$ (with the time $t$ measured from the heterodyne delay $\tau_h$); $E$ represents the strength of the return signal; $f_c$ denotes the center frequency of the transmitted pulse; $\gamma$ is the chirp rate; and $\tau$ and $\tau_h$ are the delay of the echo and of the heterodyne signal (delayed replica of the transmitted signal), respectively. The equation reveals that only the final exponential term exhibits a time dependence, with a frequency of $\gamma(\tau_h-\tau)$. Since $\tau$ and $r$ are related through the speed of light $c$, we observe a frequency value that varies linearly with range.

In our case, a geometric shape whose visible portion spans a continuum of ranges results in a deramped signal that contains a continuum of frequencies within the interval bounded by $\gamma(\tau_h - r_{\min}/c)$ and $\gamma(\tau_h - r_{\max}/c)$. This interval is determined by the minimum and maximum range values ($r_{\min}$ and $r_{\max}$) of the visible portion of the object relative to the corresponding antenna pair. Consequently, the frequency spectrum of the deramped signal encodes the distribution of the electric field strength density along the shape’s range profile.

It is important to recognize that, even though the density of the electric field strength reflected from a specific range is represented by $E$ in Equation (1), the equation also contains two additional terms (the second and third terms, i.e., the carrier-dependent and residual video phase terms) that introduce complex phase expressions varying with the time delay (or range) and that manifest themselves in the frequency spectrum of the stretch-processed signal.
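To make the range-to-frequency mapping of Equation (1) concrete, the minimal NumPy sketch below simulates a complex LFM chirp, the delayed echo of a single point scatterer, and the product of that echo with the conjugate of a delayed reference. It then checks that the strongest spectral peak sits at $\gamma(\tau_h-\tau)$. All parameter values are hypothetical and chosen only so that the beat tone falls exactly on a DFT bin. The helper names `lfm`, `deramp`, and `dominant_frequency` are illustrative and are not part of the authors' implementation.

```python
import numpy as np

def lfm(t, f_c, gamma):
    """Complex LFM chirp exp(j*2*pi*(f_c*t + gamma*t**2/2)); pulse windowing is ignored."""
    return np.exp(1j * 2 * np.pi * (f_c * t + 0.5 * gamma * t**2))

def deramp(t, f_c, gamma, tau, tau_h, strength=1.0):
    """Stretch processing: echo delayed by tau times the conjugate of a reference delayed by tau_h."""
    echo = strength * lfm(t - tau, f_c, gamma)
    reference = lfm(t - tau_h, f_c, gamma)
    return echo * np.conj(reference)

def dominant_frequency(signal, fs):
    """Frequency (Hz) of the strongest DFT bin of a complex signal."""
    spectrum = np.abs(np.fft.fft(signal))
    freqs = np.fft.fftfreq(signal.size, d=1.0 / fs)
    return freqs[np.argmax(spectrum)]

# Hypothetical parameters, not taken from the paper.
c = 3e8
f_c = 10e9            # carrier; in the product it only leaves a constant phase
gamma = 1e12          # chirp rate (Hz/s)
fs = 20e6             # sampling rate of the deramped signal
n_samples = 2000
t = np.arange(n_samples) / fs

r, r_h = 600.0, 300.0                 # round-trip ranges of scatterer and reference (m)
tau, tau_h = r / c, r_h / c

s = deramp(t, f_c, gamma, tau, tau_h)
f_beat = dominant_frequency(s, fs)
print(f_beat, gamma * (tau_h - tau))  # both approximately -1e6 Hz
```

Doubling $r - r_h$ doubles the magnitude of the beat frequency. This linear relation is what allows the spectrum of the deramped signal to be read as a range profile.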

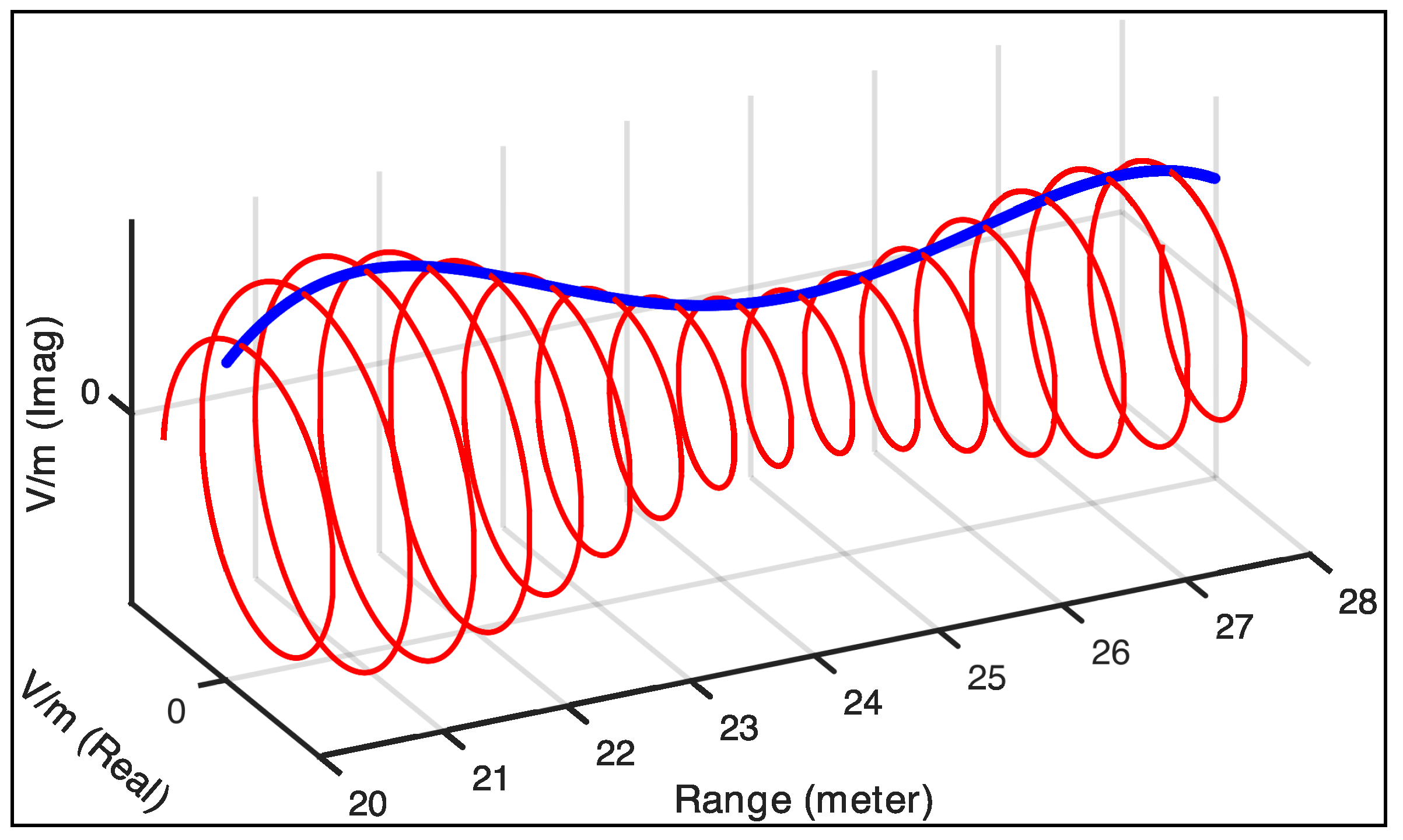

Figure 2 illustrates a typical frequency spectrum of a stretch-processed signal over the range interval $[r_{\min}, r_{\max}]$, assuming that there is no windowing in the time domain. Our primary interest lies in the electric field strength density ($E(r)$), as this component carries the geometric information relevant to our method.

Notably, our method does not require estimating the continuous profile, as we use a discretized version of the electric field strength density over range bins. Thus, our objective is to estimate a set of average electric field strength values across specified ranges using time samples of the stretch-processed signal. Although we have proposed an approach for this estimation [21], it has not been implemented in the current paper. Similar decomposition techniques (combining stretch processing with discrete Fourier transforms) have been established in the SAR literature, such as polar formatting [22], demonstrating the viability of range decomposition from stretch-processed radar returns. Furthermore, compensation methods for the phase terms in Equation (1) have also been studied [23].
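The authors' estimator [21] is not implemented in this paper. The sketch below therefore only illustrates the generic DFT-based idea shared with the polar-formatting literature. Each DFT bin of the deramped signal is mapped to a range through $r = r_h - c f/\gamma$, which inverts $f = \gamma(\tau_h - \tau)$ with $\tau = r/c$ and $\tau_h = r_h/c$. The spectral magnitude is then averaged inside each range bin. The function name `range_bin_profile`, the magnitude averaging, and the neglect of the phase terms in Equation (1) are all assumptions made for illustration.

```python
import numpy as np

def range_bin_profile(s, fs, gamma, r_h, bin_edges, c=3e8):
    """Average normalized |DFT| of a deramped signal inside each range bin.

    ASSUMPTION: beat-frequency convention f = gamma * (tau_h - tau), so a DFT bin
    at frequency f corresponds to the round-trip range r = r_h - c * f / gamma.
    """
    spectrum = np.abs(np.fft.fft(s)) / s.size
    freqs = np.fft.fftfreq(s.size, d=1.0 / fs)
    ranges = r_h - c * freqs / gamma              # range associated with each DFT bin
    idx = np.digitize(ranges, bin_edges) - 1      # range-bin index of each DFT bin
    n_bins = len(bin_edges) - 1
    profile = np.zeros(n_bins)
    for i in range(n_bins):
        mask = idx == i
        if np.any(mask):
            profile[i] = spectrum[mask].mean()
    return profile

# Usage: two point scatterers of different strength (hypothetical values).
c, gamma, fs, n = 3e8, 1e12, 20e6, 2000
t = np.arange(n) / fs
r_h = 300.0
tau_h = r_h / c
s = np.zeros(n, dtype=complex)
for r_s, amp in [(450.0, 1.0), (750.0, 0.5)]:
    s += amp * np.exp(1j * 2 * np.pi * gamma * (tau_h - r_s / c) * t)  # beat term only
edges = np.arange(300.0, 901.0, 100.0)            # six 100 m range bins
profile = range_bin_profile(s, fs, gamma, r_h, edges)
print(np.round(profile, 4))
```

The stronger scatterer lands in the 400 to 500 m bin and the weaker one in the 700 to 800 m bin, with a 2:1 ratio between them. A real extended target would instead fill many adjacent DFT bins.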

2.3. Forward Model

Our forward model is one of the core components of our inversion, and it is used to simulate the radar response of a given shape. The inputs to this component are the evolving shape estimate

, the associated reflectivity that rules the strength of the scattered signal, and the configuration of the transmitting/receiving antenna pair (TX/RX). For simplicity, let us consider just one transmitter–receiver pair with known locations. The scene

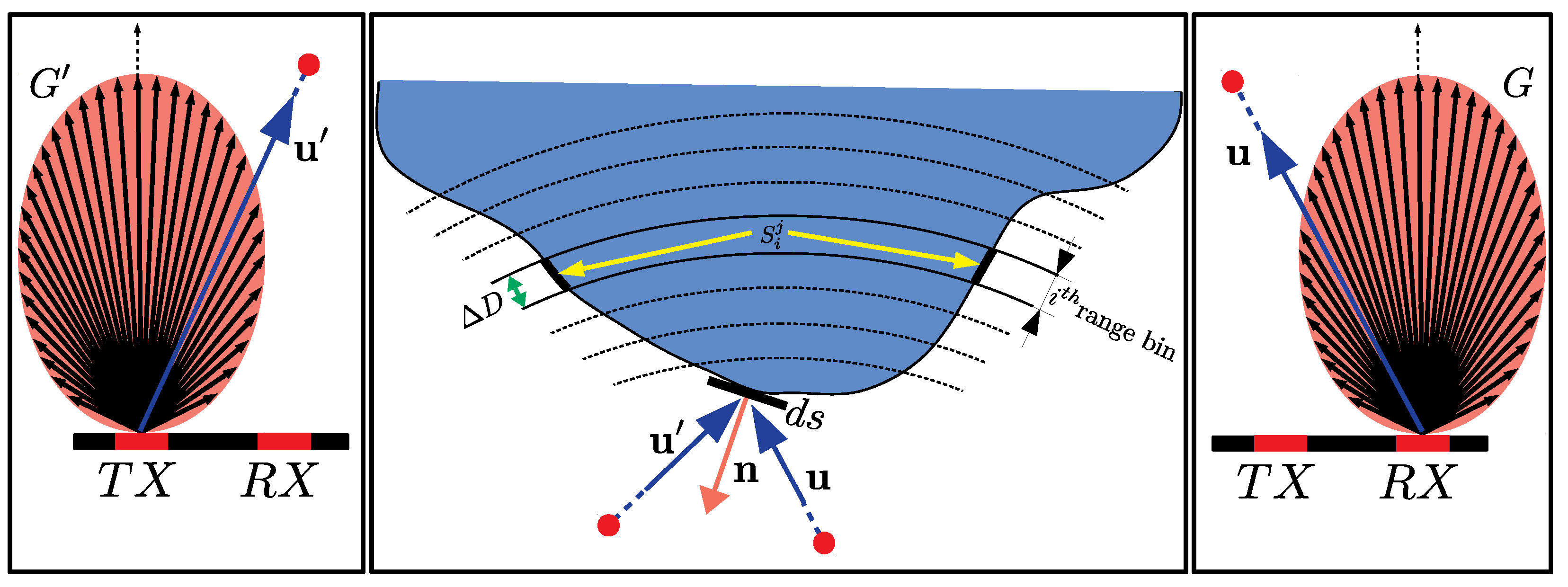

is partitioned into range bins. The part of the scene with a range that falls within a specific bin determines the value of our observable quantity

for that bin. The range bins can be visualized as a set of ellipsoids with foci that coincide with the locations of TX and RX (

Figure 3). For a given range bin, the value of the corresponding sample

is obtained by integrating over the portion of the surface included within the bin, which is also visible from both the transmitting and receiving antennas.

In our simplified 2D development, we model the infinitesimal electric field strength $dE(\mathbf{x})$ at the receiver induced by a scatterer of infinitesimal length $dl$ located at $\mathbf{x}$ as follows:

where the scatterer’s unit normal is denoted by $\mathbf{n}$. In practice, for computational purposes, we adopt several assumptions, namely the following: (1) the transmitting and receiving antennas are directional; (2) the scene consists of a continuum of ideal point scatterers that behave as Lambertian reflectors; and (3) multipath scattering is neglected. Consequently, $dE(\mathbf{x})$ becomes the following:

where $\hat{\mathbf{u}}_T$ and $\hat{\mathbf{u}}_R$ are unit vectors from TX and RX pointing to the scene, $R_T$ and $R_R$ are the corresponding distances, and $G_T$ and $G_R$ are antenna directivity multipliers for the transmitted/received signal strengths in the direction of point $\mathbf{x}$ with respect to the antenna’s normal. In this paper, we use the term “antenna gains” to refer to these quantities, although within our model framework these values are characterized in terms of the electric field strength rather than, as in the conventional definition, in terms of transmitted/received power.

In summary, given an estimate of the shape of the scene $S$ and the known locations of TX and RX, the radar signal emitted by TX probes the scene and is scattered back toward receiver RX. Our observable quantity $\bar{E}_{ij}$, while being a function of frequency (discretized in bins), still retains information on the depth at which different portions of the scene are encountered (increasing frequency is associated with increasing range). In addition, the strength of the electric field measured from a specific depth carries information about the physical properties and the size of the portion of the scene included within the corresponding bin. The latter is computed as follows:

$$\bar{E}_{ij}(S) = \frac{1}{\Delta r}\int_{S_{ij}} \chi_j(\mathbf{x})\, dE(\mathbf{x}), \qquad (4)$$

where $\Delta r$ is the width of the range bin, and $S_{ij}$ is the set of scatterers on the shape boundary contained within the $i$-th range bin associated with the $j$-th antenna pair. Finally, $\chi_j(\mathbf{x})$ is an indicator function that accounts for whether the scene at $\mathbf{x}$ is hidden from either the emitter’s or the receiver’s point of view. In this way, our model explicitly accounts for visibility and self-occlusions (which is not the case for radar imaging). The present model retains the same properties both in three dimensions, where the shape is a surface, and in the simplified 2D case, in which the scene is modeled as a curve and Equation (4) becomes a contour integral.
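The discretized forward model can be sketched as follows. Points are sampled along each polygon edge, their bistatic range $R_T + R_R$ is computed, and their contributions are accumulated into range bins. Samples hidden from TX or RX are skipped, which mimics the visibility indicator, and the sums are divided by the bin width. The elementary scattering law given above for $dE(\mathbf{x})$ is not reproduced here. The function `element_strength` is therefore a hypothetical stand-in: a product of the cosines between the outward normal and the directions to TX and RX, divided by $R_T R_R$, with unit antenna gains. Only the binning, the visibility test, and the $1/\Delta r$ averaging are meant to mirror the text. All names are illustrative.

```python
import numpy as np

def segments_cross(p1, p2, q1, q2):
    """True if segments p1-p2 and q1-q2 properly intersect (endpoint contacts ignored)."""
    def orient(a, b, c):
        return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    return (orient(q1, q2, p1) * orient(q1, q2, p2) < 0 and
            orient(p1, p2, q1) * orient(p1, p2, q2) < 0)

def element_strength(x, normal, tx, rx):
    """HYPOTHETICAL scattering law: cosine lobes toward TX and RX over R_T * R_R, unit gains."""
    to_tx, to_rx = tx - x, rx - x
    r_t, r_r = np.linalg.norm(to_tx), np.linalg.norm(to_rx)
    cos_t = max(np.dot(normal, to_tx) / r_t, 0.0)
    cos_r = max(np.dot(normal, to_rx) / r_r, 0.0)
    return cos_t * cos_r / (r_t * r_r)

def forward_model(vertices, tx, rx, bin_edges, samples_per_edge=200):
    """Average field strength per range bin for one TX/RX pair (counter-clockwise polygon)."""
    v = np.asarray(vertices, dtype=float)
    tx, rx = np.asarray(tx, dtype=float), np.asarray(rx, dtype=float)
    bin_edges = np.asarray(bin_edges, dtype=float)
    n_v, widths = len(v), np.diff(bin_edges)
    profile = np.zeros(widths.size)
    for k in range(n_v):
        a, b = v[k], v[(k + 1) % n_v]
        edge = b - a
        length = np.linalg.norm(edge)
        normal = np.array([edge[1], -edge[0]]) / length       # outward normal for CCW order
        dl = length / samples_per_edge
        for s in (np.arange(samples_per_edge) + 0.5) / samples_per_edge:
            x = a + s * edge                                  # midpoint rule along the edge
            hidden = any(
                segments_cross(x, ant, v[m], v[(m + 1) % n_v])
                for ant in (tx, rx) for m in range(n_v) if m != k
            )
            if hidden:                                        # visibility indicator is zero
                continue
            r = np.linalg.norm(x - tx) + np.linalg.norm(x - rx)   # bistatic (round-trip) range
            i = np.searchsorted(bin_edges, r, side="right") - 1
            if 0 <= i < widths.size:
                profile[i] += element_strength(x, normal, tx, rx) * dl
    return profile / widths                                   # divide by the bin width

# Usage: unit square, monostatic antenna 10 m to the right, four 5 cm range bins.
square = [(-0.5, -0.5), (0.5, -0.5), (0.5, 0.5), (-0.5, 0.5)]
edges = np.linspace(18.9, 19.1, 5)
profile = forward_model(square, tx=(10.0, 0.0), rx=(10.0, 0.0), bin_edges=edges)
print(profile)
```

Only the face of the square that points toward the antenna contributes, and all of it falls into the bin between 19.0 and 19.05 m. The other faces are either back-facing or occluded.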

2.4. Inversion

As previously stated, the aim of our inversion is to minimize the discrepancy between the preprocessed radar data and their simulated counterpart (computed from the evolving shape) and, by doing so, to obtain an evolving shape that matches the actual scene.

It is important to understand that a radar echo carries range information (i.e., distance from the antennas) but no direct information on direction. This is why point scatterers located at the same range contribute similarly to the signal (with the same frequency signature in the deramped signal) regardless of their actual location. Therefore, there is a natural directional ambiguity that can be resolved by collecting data from different viewpoints, i.e., with multiple transmitter–receiver pairs or with a moving antenna pair.

Since we have a discretized range profile, the fidelity term of our cost functional will use the vector of the average electric field strength values of the range bins for a given antenna pair. For the $j$-th antenna pair, we denote this vector by $\bar{\mathbf{E}}_j$. Based on this, the data fidelity term is given as follows:

where $M$ is the number of antenna pairs, $\bar{\mathbf{E}}_j(S)$ is the simulated radar response computed using Equation (4), and $\hat{\mathbf{E}}_j$ is the observable extracted from the measured (preprocessed) data. The vector $\bar{\mathbf{r}}$ consists of the average range values of the range bins, which are used to compensate for the attenuation of the signal strength with range so that each bin contributes similarly to the error functional.
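The exact expression of Equation (5) is not reproduced here, so the sketch below shows one plausible reading of the text. It sums, over antenna pairs, the squared residuals between simulated and measured bin vectors, with each residual multiplied by the mean range of its bin to offset the decay of field strength with distance. The linear weighting by the mean bin range, the absence of a normalization constant, and the name `data_fidelity` are assumptions. The paper may use a different power of the range or a different scaling.

```python
import numpy as np

def data_fidelity(simulated, measured, mean_ranges):
    """Range-weighted least-squares misfit summed over antenna pairs.

    simulated, measured: shape (M, B), bin vectors of M antenna pairs with B bins.
    mean_ranges: shape (B,) or (M, B), average range of each bin.
    ASSUMPTION: residuals are weighted linearly by the mean bin range.
    """
    simulated = np.asarray(simulated, dtype=float)
    measured = np.asarray(measured, dtype=float)
    weighted = np.asarray(mean_ranges, dtype=float) * (simulated - measured)
    return float(np.sum(weighted**2))

# Usage with two antenna pairs and three range bins (made-up numbers).
sim = [[0.10, 0.05, 0.00], [0.02, 0.04, 0.01]]
meas = [[0.08, 0.05, 0.01], [0.02, 0.03, 0.01]]
r_bar = [10.0, 20.0, 30.0]
print(data_fidelity(sim, meas, r_bar))
```

Without the range weights, a far bin whose field strength is an order of magnitude weaker would barely influence the misfit even when its geometry is badly wrong.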

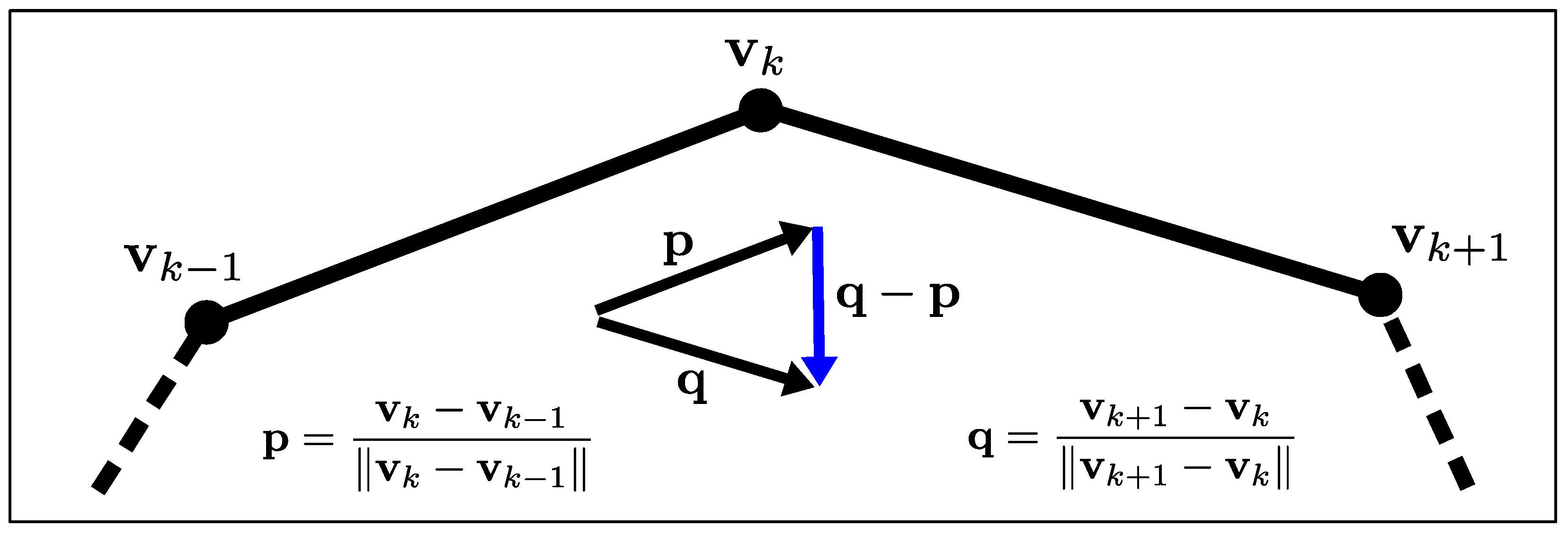

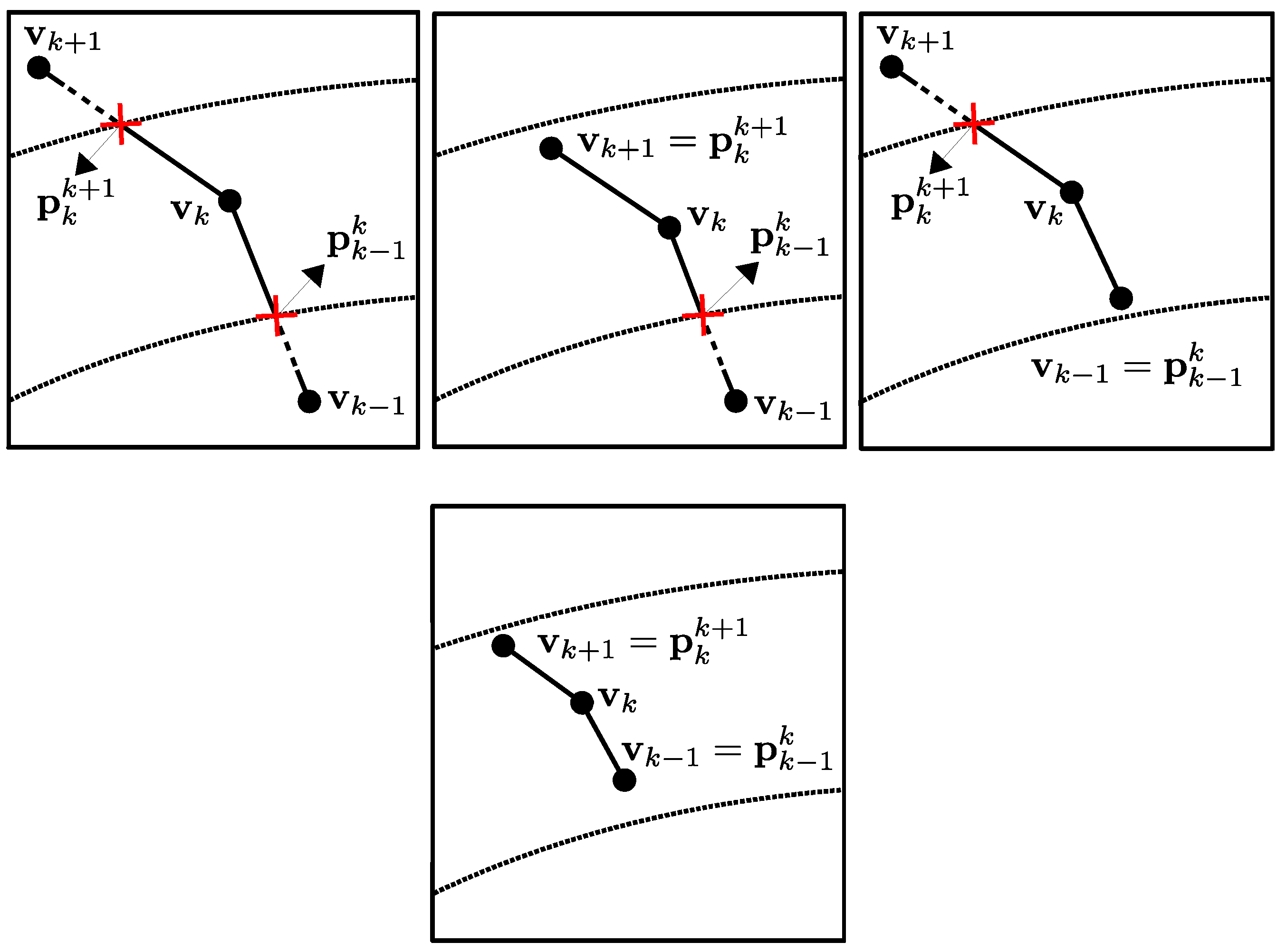

Regarding the regularization term, we have considerable flexibility in incorporating various shape priors. For the scope of this work, we define it in terms of the shape’s curvature, which naturally promotes smooth geometries. The parameterization selected for the shape becomes crucial at this stage, as we must tailor our curvature regularizer to this specific representation. For ease of implementation, we focus on 2D star-shaped objects. This class of geometries offers two advantages: they can be readily approximated using polygonal shapes (i.e., sets of interconnected segments), and they can be represented elegantly in polar coordinates. This approach simplifies curve discretization, allowing us to treat the shape as a polygonal entity and to transform the shape optimization problem into one of optimizing the radial positions of the vertex points (or control points) that define the shape relative to a predetermined origin. Our regularizer (illustrated in Figure 4) is consequently expressed as follows:

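where $N$ is the number of vertices (with indices taken cyclically, i.e., index $0$ corresponds to $N$ and index $N+1$ to $1$, because of the circular topology of the shape). By combining Equations (5) and (6), we formulate our complete energy functional as follows:

$$\mathcal{E}(S) = \mathcal{E}_{\mathrm{data}}(S) + \lambda\,\mathcal{E}_{\mathrm{reg}}(S), \qquad (7)$$

where $\mathcal{E}_{\mathrm{data}}$ promotes reductions in the disparity between the evolving shape and the actual shape (data fidelity), while $\mathcal{E}_{\mathrm{reg}}$ encourages smoothness in the evolving shape, with $\lambda$ serving as the regularization weight factor.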
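The star-shaped parameterization and the structure of Equation (7) can be sketched as follows. Equation (6) is not reproduced here, so the regularizer below is a hypothetical stand-in: the sum of squared turning angles between consecutive edges, a common discrete curvature proxy for polygons, with indices wrapped cyclically as described in the text. The names `vertices_from_radii`, `curvature_regularizer`, and `total_energy`, as well as the callable `data_term`, are illustrative.

```python
import numpy as np

def vertices_from_radii(radii, origin=(0.0, 0.0)):
    """Star-shaped polygon: vertex k sits at the fixed angle 2*pi*k/N with radius radii[k]."""
    radii = np.asarray(radii, dtype=float)
    theta = 2 * np.pi * np.arange(radii.size) / radii.size
    return np.asarray(origin) + np.stack([radii * np.cos(theta), radii * np.sin(theta)], axis=1)

def curvature_regularizer(vertices):
    """HYPOTHETICAL stand-in for Equation (6): sum of squared turning angles.

    np.roll wraps the indices, so vertex 0 follows vertex N-1 (circular topology).
    """
    e_prev = vertices - np.roll(vertices, 1, axis=0)    # edge v_{k-1} -> v_k
    e_next = np.roll(vertices, -1, axis=0) - vertices   # edge v_k -> v_{k+1}
    cross = e_prev[:, 0] * e_next[:, 1] - e_prev[:, 1] * e_next[:, 0]
    dot = np.sum(e_prev * e_next, axis=1)
    turning = np.arctan2(cross, dot)                    # signed turning angle at each vertex
    return float(np.sum(turning**2))

def total_energy(radii, data_term, lam):
    """Equation (7): data fidelity plus lam times the regularizer."""
    v = vertices_from_radii(radii)
    return data_term(v) + lam * curvature_regularizer(v)

# Usage: a regular octagon versus one with a single vertex pushed outward.
circle = np.ones(8)
bumped = circle.copy()
bumped[1] = 1.2
print(curvature_regularizer(vertices_from_radii(circle)))   # equals pi**2 / 2 (eight turns of pi/4)
print(curvature_regularizer(vertices_from_radii(bumped)))
```

For a convex polygon, the turning angles always sum to $2\pi$. Their squared sum is therefore smallest when all angles are equal, which is why this proxy favors smooth, evenly curved contours.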

2.5. Optimization

To perform the iterative evolution of the scene, we calculate the gradient of the energy functional in Equation (7) with respect to our chosen parameterization, specifically the coordinates of the vertices.

Each vertex determines the location of the two edges directly connected to it. Considering one pair of antennas, displacing either of the two edges will correspondingly affect the radar’s response provided that such edges are visible to both the transmitter and receiver. In particular, such perturbations will affect the range bins that include the affected edges. As such, for every antenna pair configuration and every associated range bin, we need to find out which edges, or portions of edges, fall within the range span of the bin and whether such segments are visible. This task is relatively simple in 2D and can be achieved using Algorithm 1.

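| Algorithm 1 Visibility analysis for the edges of the polygonal shape model. $e_k$ denotes the edge connecting $\mathbf{v}_k$ to $\mathbf{v}_{k+1}$. |

$\mathcal{V} \leftarrow \{e_1, \dots, e_n\}$

for $k = 1, \dots, n$ do

$\mathbf{m} \leftarrow$ midpoint of $e_k$

$\mathbf{a} \leftarrow$ antenna pair location

$\ell \leftarrow$ hypothetical line segment connecting $\mathbf{m}$ to $\mathbf{a}$

for $l = 1, \dots, n$, $l \neq k$ do

if $\ell$ intersects $e_l$ then

$\mathcal{V} \leftarrow \mathcal{V} \setminus \{e_k\}$

break

end if

end for

end for

return $\mathcal{V}$ |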

The pseudocode (Algorithm 1) accepts the list of $n$ control nodes defining the polygon and returns the edges visible from the transmitter–receiver pair of interest. This algorithm has a complexity of $O(n^2)$, and we chose it for its ease of implementation. Note that there are more computationally efficient alternatives that can be used when moving toward 3D applications [24,25,26].
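A direct Python transcription of Algorithm 1 is sketched below. It assumes that the edges are given by consecutive vertices of a closed polygon. It also assumes that an edge counts as visible from the antenna pair when the segments from its midpoint to both TX and RX cross no other edge. The proper-intersection test ignores segments that merely touch at endpoints, which is a simplification. The names `segments_cross` and `visible_edges` are illustrative.

```python
import numpy as np

def _orient(a, b, c):
    """Twice the signed area of triangle abc (positive if counter-clockwise)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

def segments_cross(p1, p2, q1, q2):
    """True if segments p1-p2 and q1-q2 properly intersect."""
    return (_orient(q1, q2, p1) * _orient(q1, q2, p2) < 0 and
            _orient(p1, p2, q1) * _orient(p1, p2, q2) < 0)

def visible_edges(vertices, tx, rx):
    """Algorithm 1: indices k of edges e_k = (v_k, v_{k+1}) visible from TX and RX, O(n^2)."""
    v = np.asarray(vertices, dtype=float)
    n = len(v)
    antennas = (np.asarray(tx, dtype=float), np.asarray(rx, dtype=float))
    visible = []
    for k in range(n):
        m = 0.5 * (v[k] + v[(k + 1) % n])          # midpoint of e_k
        occluded = False
        for a in antennas:
            for j in range(n):                      # test segment m -> a against every other edge
                if j != k and segments_cross(m, a, v[j], v[(j + 1) % n]):
                    occluded = True
                    break
            if occluded:
                break
        if not occluded:
            visible.append(k)
    return visible

# Usage: a unit square seen by a monostatic antenna far to the right.
square = [(-0.5, -0.5), (0.5, -0.5), (0.5, 0.5), (-0.5, 0.5)]
print(visible_edges(square, tx=(10.0, 0.0), rx=(10.0, 0.0)))
```

The doubly nested loop over edges is what gives the quadratic cost noted above. Spatial acceleration structures would replace the inner loop in 3D.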

After establishing visibility, we derive the energy gradient using the chain rule. Figure 5 illustrates the relationship between the data fidelity component of the energy functional ($\mathcal{E}_{\mathrm{data}}$) and the coordinates of a vertex $\mathbf{v}_k$.

Although the partial derivatives of $\mathcal{E}_{\mathrm{data}}$ with respect to the simulated observables are relatively straightforward to calculate, determining the partial derivatives of the simulated observables $\bar{E}_{ij}$ with respect to the location of the vertex $\mathbf{v}_k$ requires particular attention. This complexity arises because edges may span multiple range bins, causing the integration boundaries to depend on the polygon vertices through the points where the connected edges intersect the range-bin boundaries.

Figure 6 shows several possible configurations of this relationship. The expression for the partial derivative of $\bar{E}_{ij}$ with respect to the $k$-th vertex is given as follows:

Here, $S_{ij}$ represents the set of shape boundary points contained within the $i$-th range bin relative to the $j$-th antenna pair. The domain of integration becomes the portion of the edge contained within the range bin. Taking the derivative of this expression yields the following:

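where two boundary terms appear, which take the following form:

The boundary term associated with the edge connecting $\mathbf{v}_{k-1}$ to $\mathbf{v}_k$ vanishes when that edge is fully contained in a single range bin, and similarly, the boundary term associated with the edge connecting $\mathbf{v}_k$ and $\mathbf{v}_{k+1}$ vanishes when that edge is contained in a single range bin. In these cases, the dependency of the integration domain on $\mathbf{v}_k$ vanishes. In summary, our selection of star-shaped objects allows us to use a polar representation in which control nodes maintain fixed angular positions while only their radial distances vary. Consequently, we adopt the radii of the vertices as our parameter set for shape evolution. Our gradient vector collects the components of the derivative of the energy functional with respect to the vertex coordinates along the radial direction. For the $k$-th vertex, this gradient component is expressed as follows:

$$\frac{\partial \mathcal{E}}{\partial \rho_k} = \nabla_{\mathbf{v}_k}\mathcal{E} \cdot \hat{\mathbf{e}}_k, \qquad \hat{\mathbf{e}}_k = (\cos\theta_k, \sin\theta_k)^{\top},$$

where $\rho_k$ and $\theta_k$ denote the radius and the fixed polar angle of $\mathbf{v}_k$ with respect to the origin.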

Finally, the shape update is performed by moving the nodes in the direction opposite to this gradient. As a final remark, the rationale behind our choice of data preprocessing was to address the oscillatory nature of radar signals and obtain a well-behaved cost functional for inversion. However, several shallow local minima can still persist in the energy landscape. Therefore, to help avoid these shallow minima during optimization and to improve the convergence rate, we enhanced gradient descent by adopting the accelerated descent algorithm in [27], resulting in the following update scheme:

where $\mathbf{w}$ represents the velocity, $\boldsymbol{\rho}$ denotes the radii of the vertices being updated, $\mu$ is the momentum coefficient, and $\eta$ indicates the step size of the gradient descent.
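The sketch below combines two steps: projecting Cartesian vertex gradients onto the fixed radial directions, and a momentum-based update of the radii. The exact scheme of [27] is not reproduced here. The update therefore uses the classical heavy-ball form, in which the velocity is set to the momentum coefficient times the previous velocity minus the step size times the radial gradient, and the radii are then moved by the velocity. Nesterov-type variants evaluate the gradient at a look-ahead point instead. The toy quadratic energy, the parameter values, and the function names are hypothetical.

```python
import numpy as np

def radial_gradient(vertex_grads, thetas):
    """Project Cartesian vertex gradients (N, 2) onto the unit radial directions (N,)."""
    radial_dirs = np.stack([np.cos(thetas), np.sin(thetas)], axis=1)
    return np.sum(vertex_grads * radial_dirs, axis=1)

def momentum_descent(grad_fn, rho0, mu=0.9, eta=0.05, n_iter=500):
    """Heavy-ball momentum on the vertex radii (one possible accelerated scheme)."""
    rho = np.asarray(rho0, dtype=float).copy()
    w = np.zeros_like(rho)                      # velocity
    for _ in range(n_iter):
        w = mu * w - eta * grad_fn(rho)
        rho = rho + w
    return rho

# Toy energy E = 0.5 * sum_k |v_k - v_k*|^2 with targets on a circle of radius 2
# (a hypothetical stand-in for Equation (7)).
N = 8
thetas = 2 * np.pi * np.arange(N) / N
dirs = np.stack([np.cos(thetas), np.sin(thetas)], axis=1)
targets = 2.0 * dirs

def grad_fn(rho):
    vertices = rho[:, None] * dirs
    return radial_gradient(vertices - targets, thetas)   # dE/dv_k projected on e_k

rho = momentum_descent(grad_fn, np.ones(N))
print(np.round(rho, 4))
```

The velocity term accumulates past gradients, which can carry the iterate across shallow dips that would trap plain gradient descent. This motivates the accelerated scheme discussed above.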

3. Results

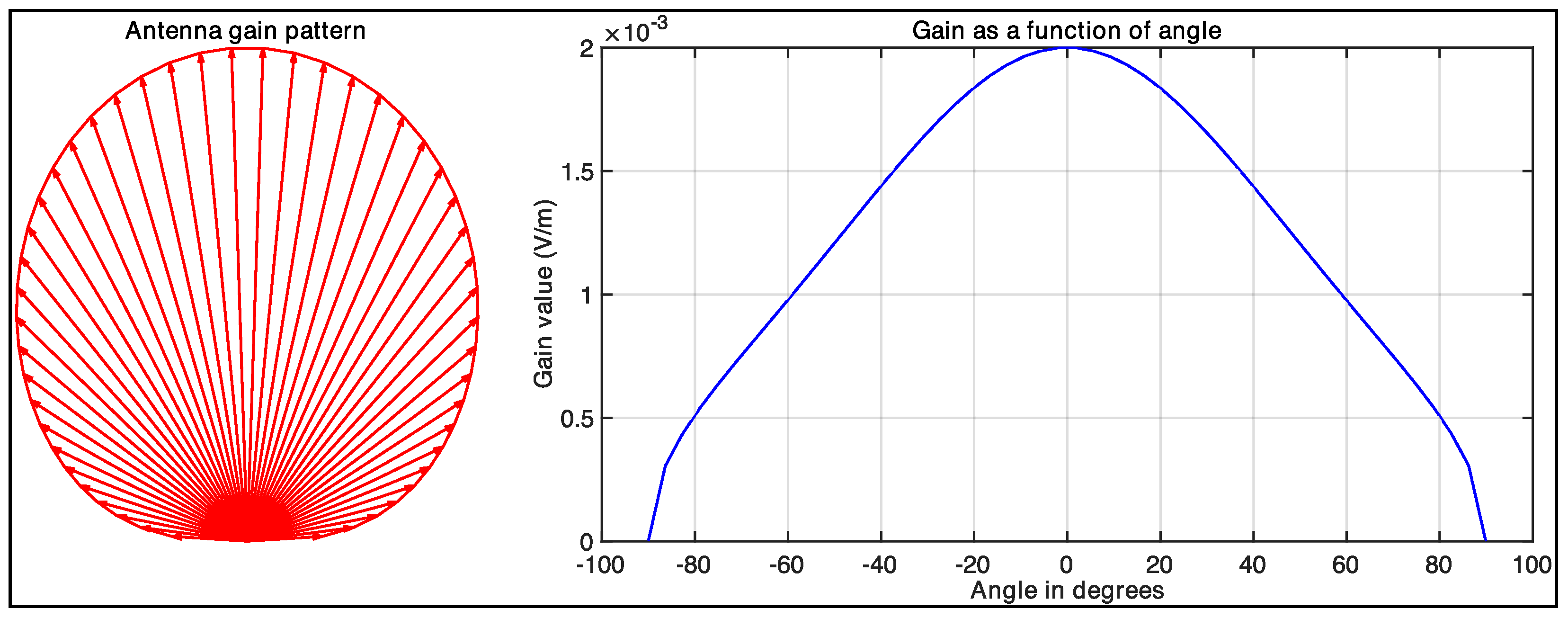

To evaluate the effectiveness of the algorithm, we conducted simulations in three distinct scenarios, each involving multiple pairs of antennas. Since we are working with a 2D case, we assume that both the transmitter (TX) and receiver (RX) antenna apertures have geometries consisting of side-by-side line segments sharing the same unit normal.

Table 1 presents several parameters that remained constant throughout all simulations.

The antenna gain terms are assumed as follows:

In these expressions, $L_T$ and $L_R$ represent the aperture lengths of the transmitter and receiver antennas, respectively. Angles $\phi_T$ and $\phi_R$ denote the angles formed by the transmitted and received rays relative to the antenna’s surface normal, while $c$ represents the speed of light. Using the parameters specified in Table 1, we computed the antenna gain pattern and plotted the gain values as a function of angle, as shown in Figure 7.
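The exact gain expressions are not reproduced here, so the following sketch uses a common model for a uniformly illuminated line aperture. Its far-field amplitude pattern is $|\mathrm{sinc}(L\sin\phi/\lambda)|$, with wavelength $\lambda = c/f_c$ and normalized sinc. This may differ from the expressions used in the paper. The aperture length and carrier frequency below are hypothetical and are not the values in Table 1.

```python
import numpy as np

def aperture_gain(phi, aperture_length, f_c, c=3e8):
    """Field-strength directivity of a uniform line aperture (hypothetical model).

    phi: angle(s) from the aperture normal, in radians.
    np.sinc is the normalized sinc: sin(pi x) / (pi x).
    """
    wavelength = c / f_c
    return np.abs(np.sinc(aperture_length * np.sin(phi) / wavelength))

# Hypothetical values: 10 GHz carrier (3 cm wavelength) and a 6 cm aperture.
phi = np.radians(np.linspace(-90, 90, 181))
g = aperture_gain(phi, aperture_length=0.06, f_c=10e9)
first_null = np.degrees(np.arcsin(0.03 / 0.06))   # sin(phi) = wavelength / L
print(g[90], first_null)
```

In this model, a longer aperture narrows the main lobe because the first null moves to smaller angles. This is the qualitative behavior one would expect from the gain curves in Figure 7.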

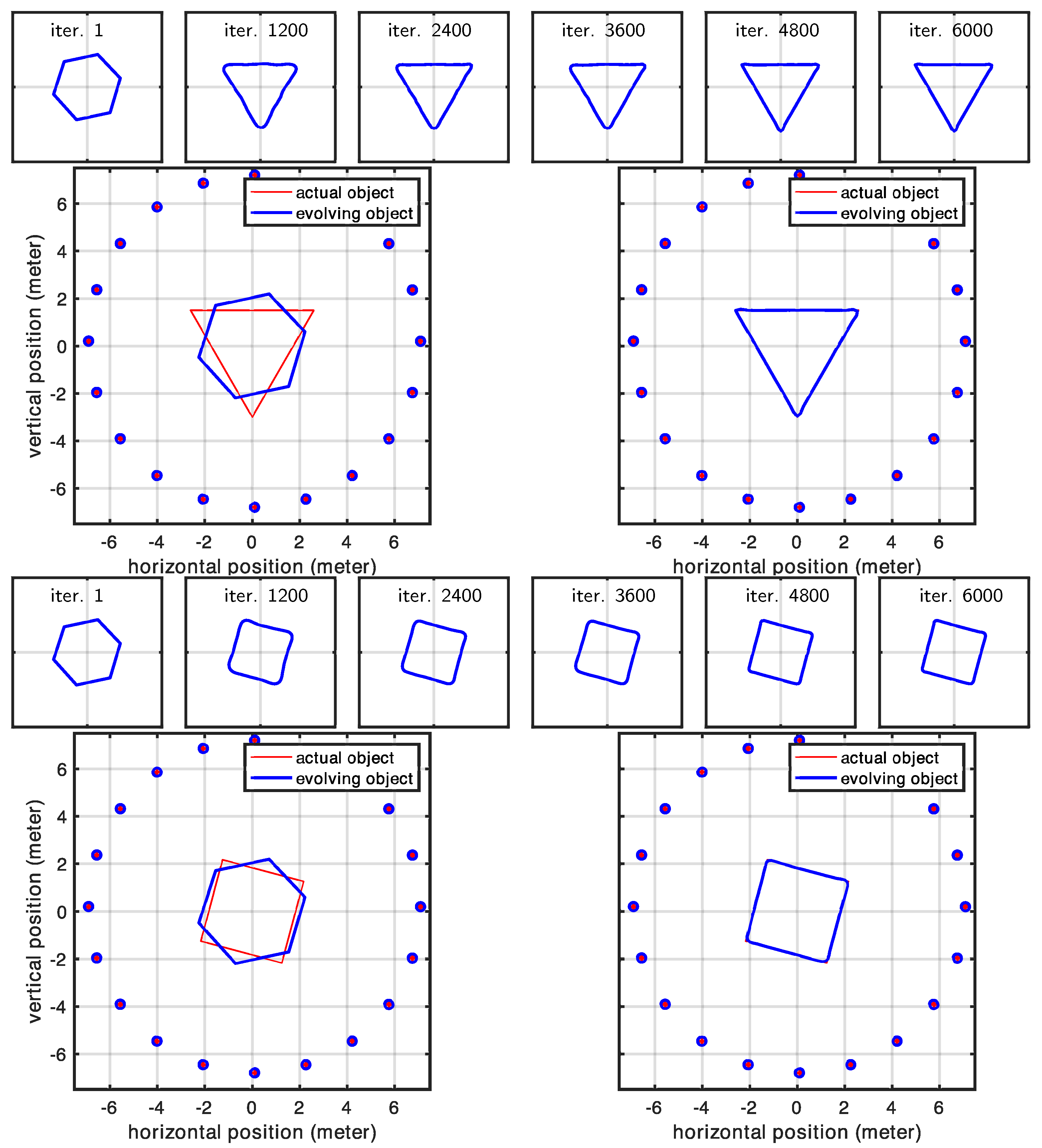

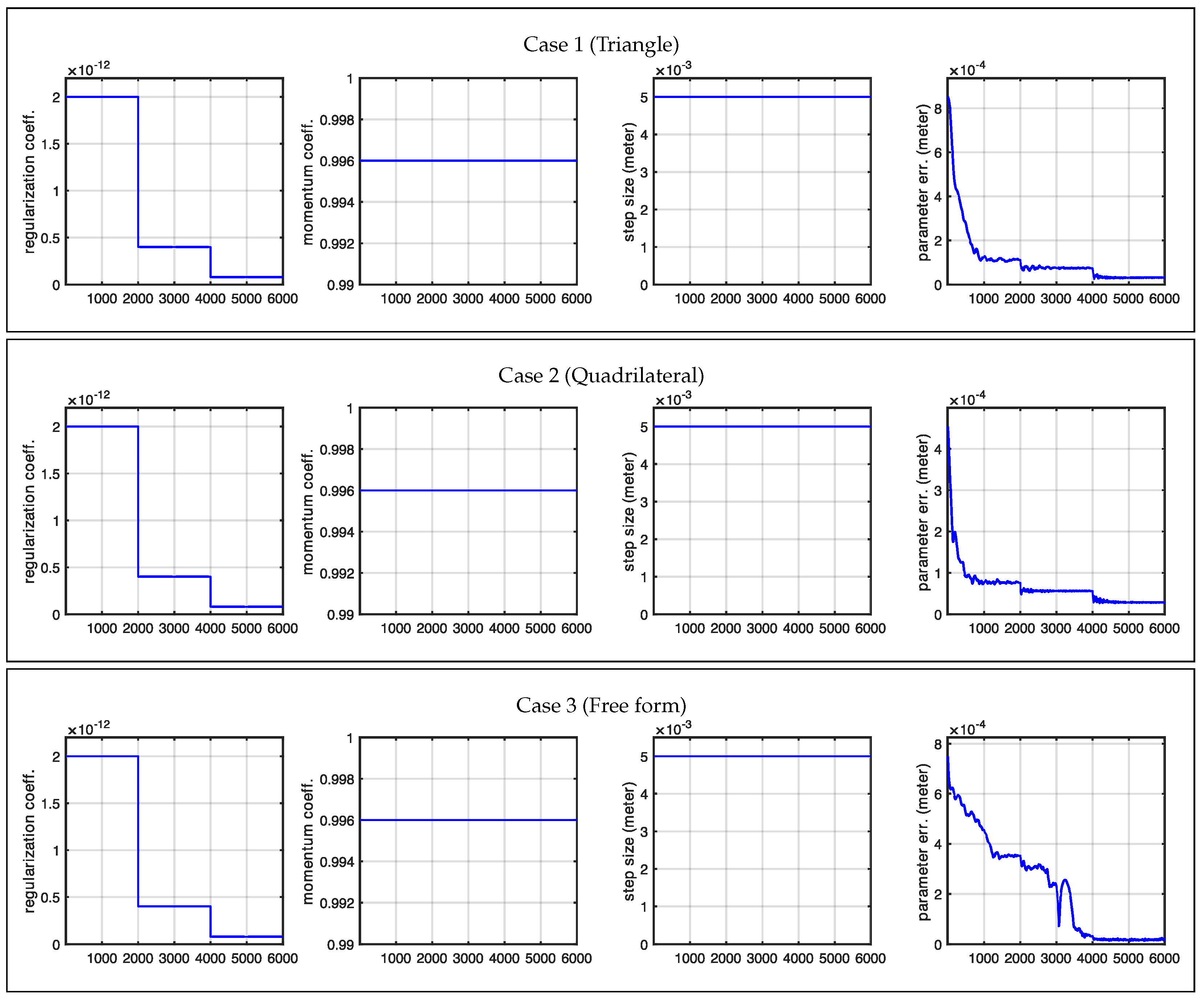

For each simulation, we used different shape configurations (consisting of an initial object and an actual object) and tested two distinct antenna arrangements for each configuration. In our first setup, we used a circular antenna array in which each antenna pair was oriented toward the center of the array, ensuring that at least one antenna pair could detect each portion of the target object. Our second setup featured antennas arranged in a linear array, mimicking configurations more commonly found in traditional radar applications. Throughout each experiment, we initially applied a high regularization coefficient to maintain shape regularity; then, we gradually reduced this coefficient to progressively capture finer details.

Table 2 provides a summary of the shapes used in our experiments.
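The coarse-to-fine strategy can be sketched with a simple schedule for the regularization weight. The text only states that the coefficient starts high and is gradually reduced, with the schedule actually used shown in Figure 10. The geometric decay, the floor value, the stage lengths, and the name `lambda_schedule` below are hypothetical.

```python
import numpy as np

def lambda_schedule(lam0, decay, n_stages, iters_per_stage, lam_min=0.0):
    """Piecewise-constant, geometrically decreasing regularization weight per iteration."""
    stages = np.maximum(lam0 * decay ** np.arange(n_stages), lam_min)
    return np.repeat(stages, iters_per_stage)

# Usage: 6 stages of 1000 iterations each (6000 iterations in total).
lams = lambda_schedule(lam0=1.0, decay=0.5, n_stages=6, iters_per_stage=1000, lam_min=0.05)
print(lams[0], lams[-1], lams.size)
```

A high initial weight keeps the contour smooth while the data term pulls it toward the overall shape. Lowering the weight later lets the data term resolve finer details such as corners.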

3.1. Circular Array of Antennas

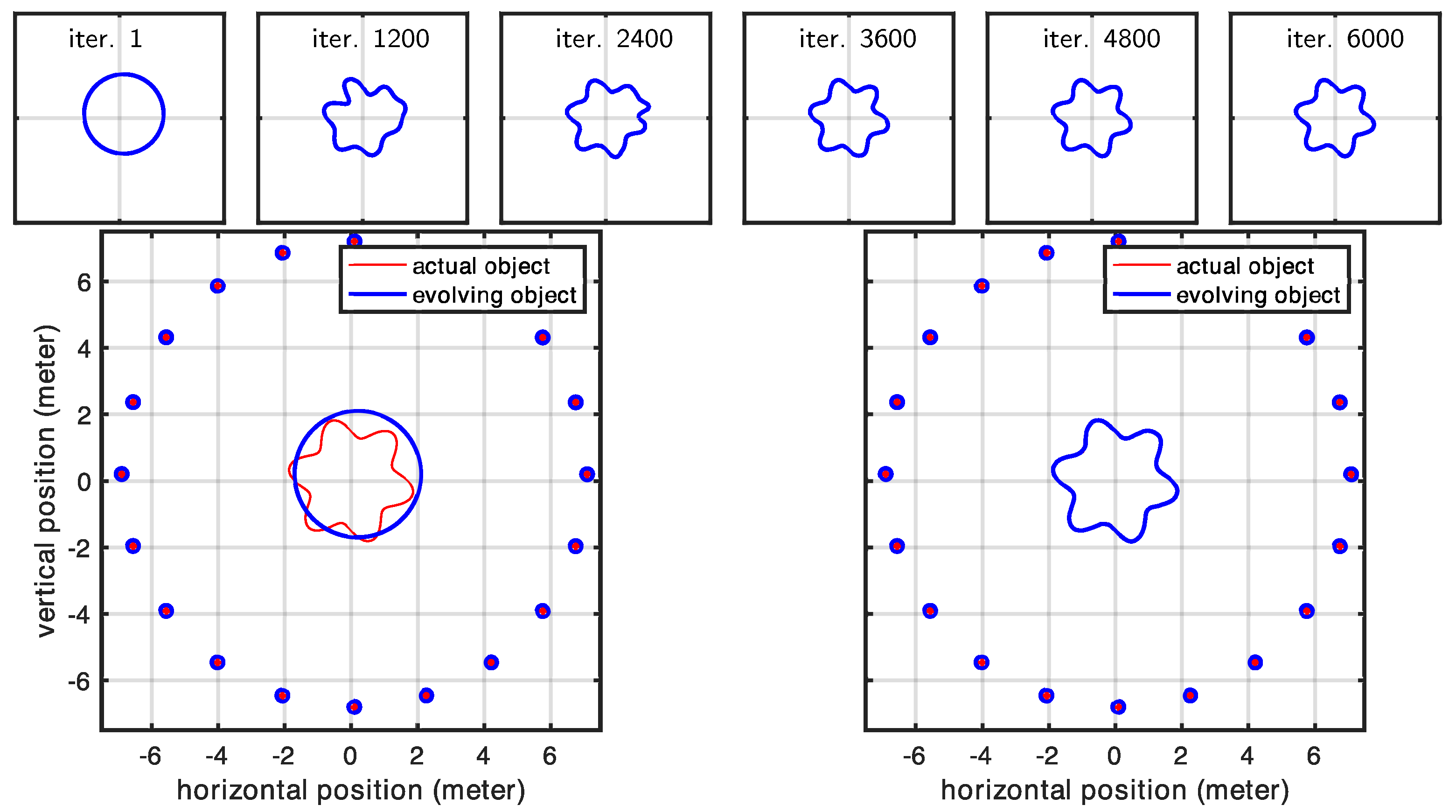

In this experiment, we examined a shape surrounded by antennas positioned to ensure that each portion of the investigated shape was visible to at least one pair of antennas. This arrangement led us to expect that our initial shape estimate would properly converge to the actual shape. Our expectations were largely met, with high-quality reconstructions. The only exceptions occurred at the very sharp corners in Cases 1 and 2 (Figure 8). However, these minor discrepancies were expected, as the regularization term naturally favors smooth shapes and suppresses high-curvature details, causing the corners of the evolving model to appear rounded. In contrast, in Case 3 (Figure 9), where the true shape had no sharp corners, the contours were correctly retrieved throughout the entire shape.

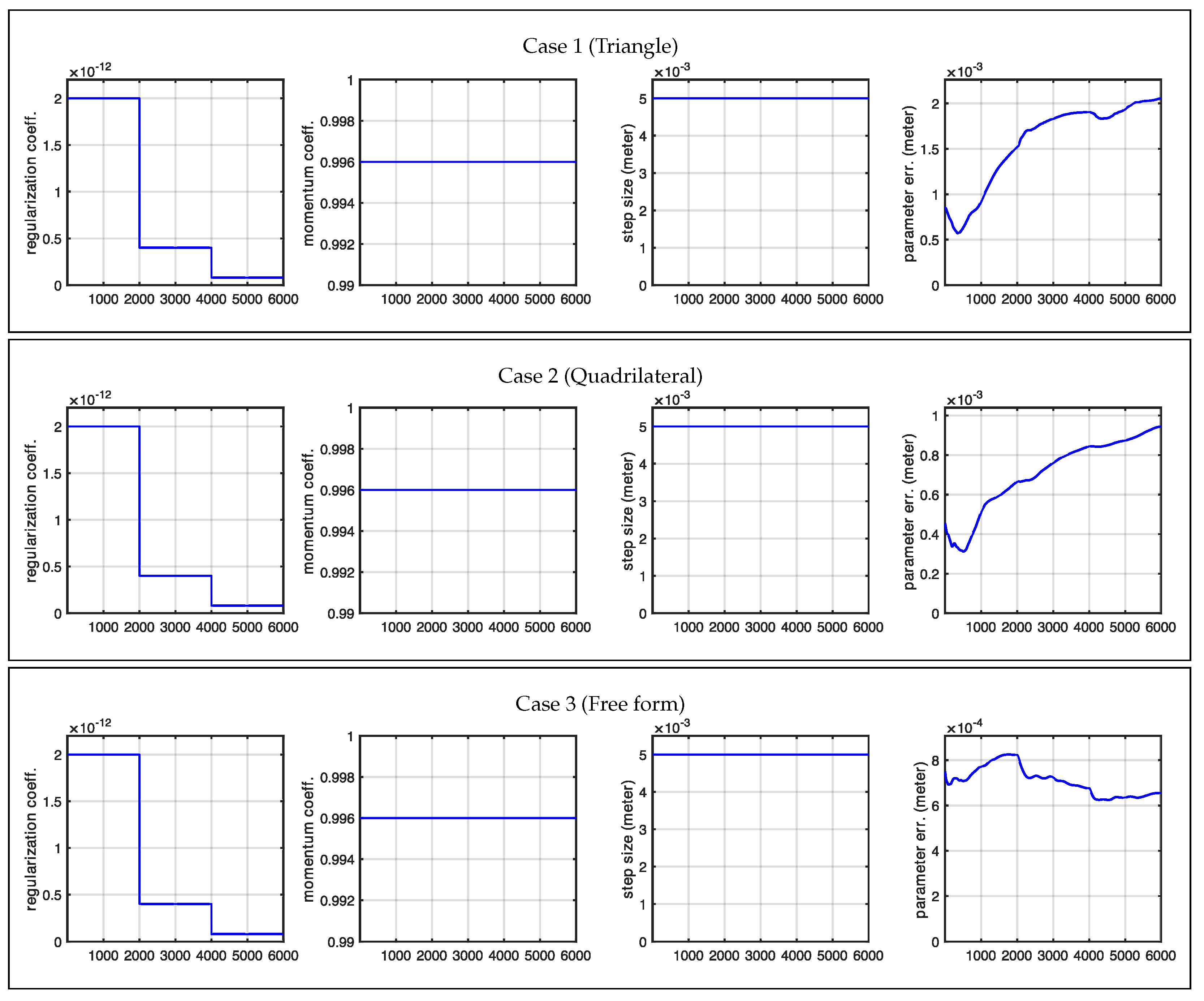

Figure 10 shows the statistics related to this experiment. In particular, the figure shows the changes we made in the regularization coefficient, which was progressively lowered to reduce the effect of the regularization term, and the cumulative distances of the shape control points from the desired location (true locations). A rough approximation of the desired shape was reached after 2000 iterations, while full convergence required 6000 iterations. The total computational time was less than two minutes for each case.
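With fixed angular positions, the distance between an estimated control point and its true location reduces to the absolute difference of their radii. The cumulative error in Figure 10 can then be computed as sketched below. This assumes that estimated and true control points share the same polar angles. That holds for the star-shaped parameterization, but it is our reading of the metric rather than its stated definition. The function name is illustrative.

```python
import numpy as np

def cumulative_control_point_error(radii_est, radii_true):
    """Sum of Euclidean distances between control points sharing the same polar angles.

    For points (r cos t, r sin t) and (s cos t, s sin t), the distance is |r - s|,
    so the angles are not needed.
    """
    diff = np.asarray(radii_est, dtype=float) - np.asarray(radii_true, dtype=float)
    return float(np.sum(np.abs(diff)))

print(cumulative_control_point_error([1.0, 1.1, 0.9], [1.0, 1.0, 1.0]))
```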

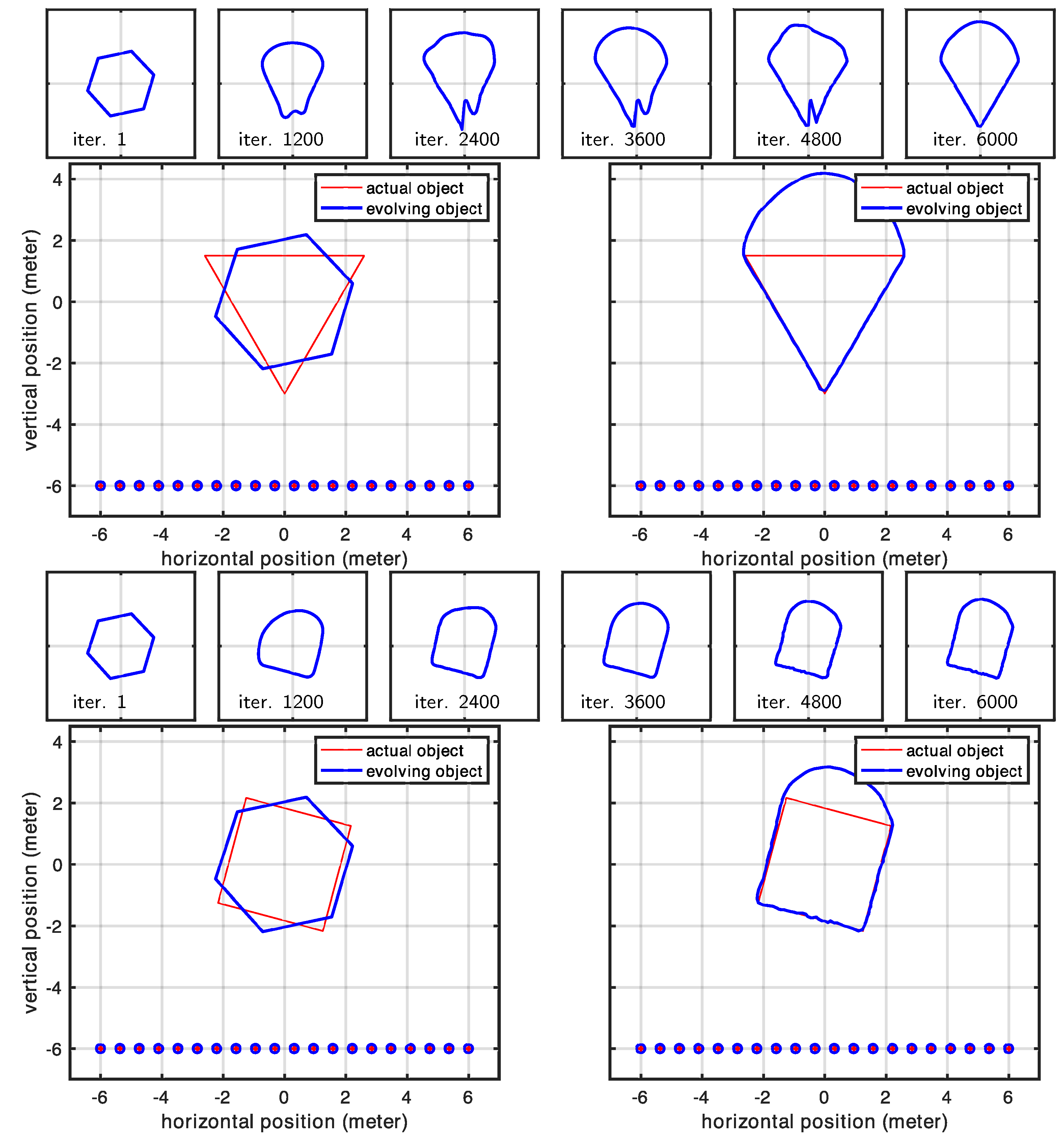

3.2. Linear Array of Antennas

In this experiment, we investigated a linear antenna array configuration. Since the shape was illuminated from only one side, portions of the scene contour remained invisible and therefore could not be reconstructed. Our results confirmed this expected behavior while also highlighting the effect of the regularizer, which served as the only force driving the evolution of the invisible parts of the contour. In contrast, the contour facing the antenna array was perfectly reconstructed, as shown in Figure 11 and Figure 12. The shaded part of the scene, evolving solely according to the regularizing term, was also responsible for the increased parameter error observed in all cases (see Figure 13). We should emphasize that although the dominance of the regularizer initially appears to create an undesired artifact (the curved boundary), this behavior is intentional by design. In practice, since we know exactly which portions of the contour are illuminated and which are not, we can precisely identify which parts of the scene are correctly retrieved and expected to match the actual shape.

Figure 13 shows the statistics related to this second experiment. As in Section 3.1, full convergence required 6000 iterations, and each case took less than two minutes to complete. The larger error observed here is due to the part of the shape that was invisible to the antennas and therefore could not be recovered.

3.3. Shape Parametrization Using Active Contours and the Level-Set Method

Note that the continuous variational framework developed here is not limited to any particular class of discrete geometric representation. In our earlier time-domain work, we began with a graph-based representation and subsequently generalized our algorithm to 3D applications. Similarly, here we chose to provide a proof of concept in 2D before generalizing it to 3D. However, the graph-based approach poses several limitations. Therefore, while we restricted this investigation to 2D for simplicity, we selected a flexible finite element representation that is capable of representing both graph-like objects and compact objects, and the present approach can be easily reformulated in 3D. Although moving from 3D to 2D simulations may seem like a step backward, the present representation offers greater geometric flexibility than the graph representations used in our earlier work. Ultimately, we seek a fully 3D method that has the flexibility to represent a broad class of geometries, including compact surfaces, graphs, multiple separate surfaces, and surfaces of complex topology (with holes). For these reasons, implicit representations (i.e., level-set methods) are a much better choice in 3D.

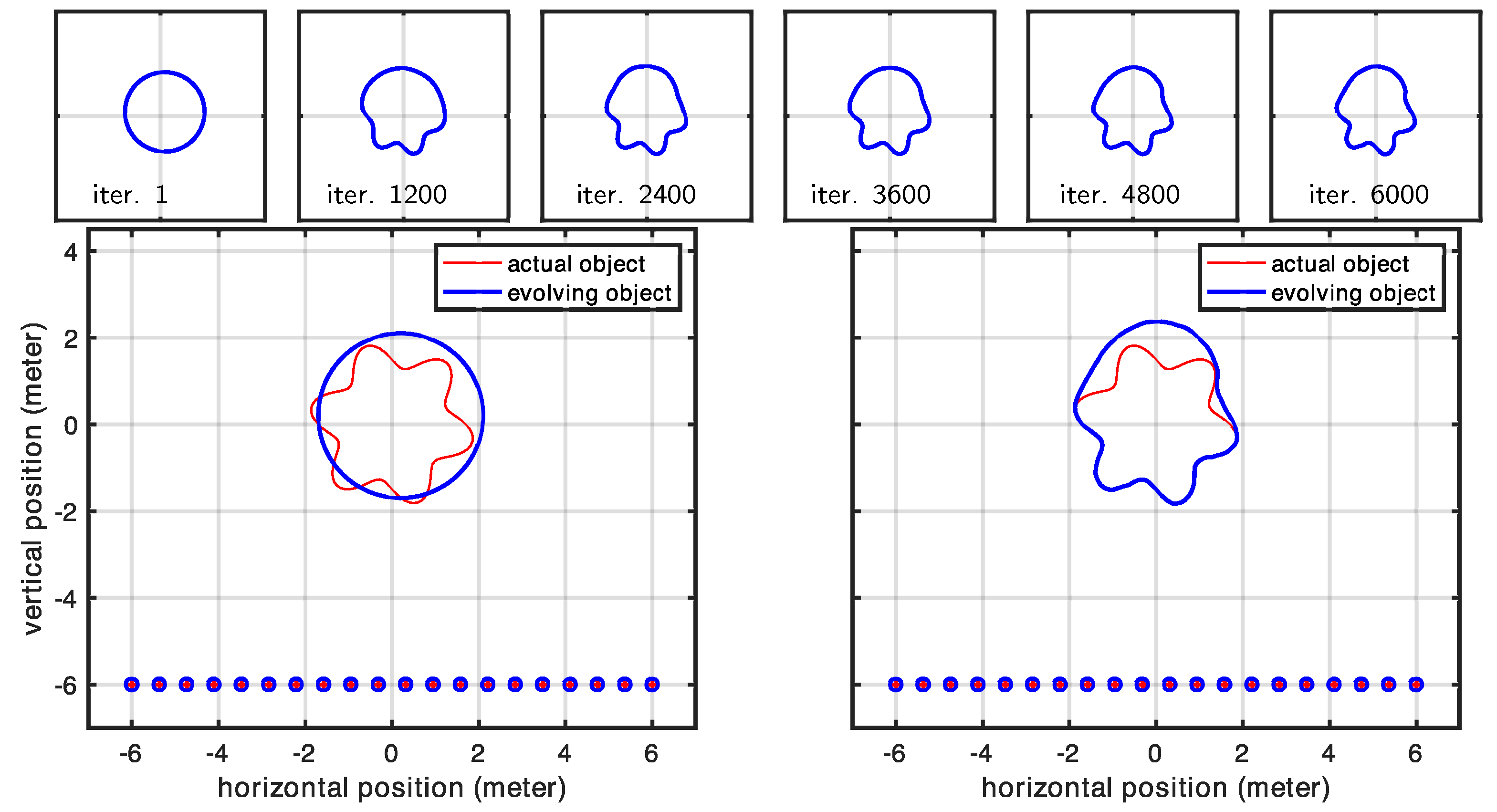

The additional details needed to develop and implement a 3D level-set method are beyond the scope of this paper; they will be the focus of an upcoming paper dedicated to fully developing the level-set framework for this radar inversion strategy. Nevertheless, we take a first step in this direction by including a 2D level-set example in our experimental results, which demonstrates a further degree of flexibility. While the 2D finite element approach can capture a number of compact shapes known a priori, the 2D level-set method extends this to a number of shapes not known a priori by allowing contours to split and merge. We omit the implementation details of the 2D level-set method here, as the same (and many more) details will necessarily be covered with the fully 3D method that is being developed for publication.
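Since the 2D level-set implementation is omitted, the following sketch only illustrates why an implicit representation accommodates an unknown number of shapes; it does not evolve a level set, it merely samples one. Two discs are represented by a single function φ, the pointwise minimum of their signed distance functions; φ is sampled on a grid, and the connected components of the interior {φ < 0} are counted with a flood fill. The grid size, domain, and function names (`discSdf`, `countInteriorComponents`) are assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

// Signed distance to a disc: negative inside, zero on the boundary, positive outside.
double discSdf(double x, double y, double cx, double cy, double r) {
    return std::hypot(x - cx, y - cy) - r;
}

// Counts 4-connected components of {phi < 0} on an N x N grid over [-L, L]^2,
// where phi is the union of two discs of radius r centred at (-s, 0) and (+s, 0).
int countInteriorComponents(double s, double r, int N = 201, double L = 3.0) {
    assert(N > 1);
    const double h = 2.0 * L / (N - 1);
    std::vector<char> inside(N * N, 0), seen(N * N, 0);
    for (int i = 0; i < N; ++i) {
        for (int j = 0; j < N; ++j) {
            const double x = -L + i * h, y = -L + j * h;
            // Union of implicit shapes = pointwise minimum of their level-set functions.
            const double phi = std::min(discSdf(x, y, -s, 0.0, r), discSdf(x, y, s, 0.0, r));
            inside[i * N + j] = phi < 0.0;
        }
    }
    int components = 0;
    std::vector<std::pair<int, int>> stack;
    for (int start = 0; start < N * N; ++start) {
        if (!inside[start] || seen[start]) continue;
        ++components;  // new region found: flood-fill it
        seen[start] = 1;
        stack.push_back({start / N, start % N});
        while (!stack.empty()) {
            auto [i, j] = stack.back();
            stack.pop_back();
            const int di[4] = {1, -1, 0, 0}, dj[4] = {0, 0, 1, -1};
            for (int k = 0; k < 4; ++k) {
                const int a = i + di[k], b = j + dj[k];
                if (a < 0 || a >= N || b < 0 || b >= N) continue;
                const int idx = a * N + b;
                if (inside[idx] && !seen[idx]) {
                    seen[idx] = 1;
                    stack.push_back({a, b});
                }
            }
        }
    }
    return components;
}
```

With radius 1, centers at ±0.5 give one merged region and centers at ±1.5 give two separate regions, yet the representation (a scalar field on a fixed grid) is identical in both cases. This is the property that lets a level-set evolution split or merge contours without explicit topological surgery on the curve.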

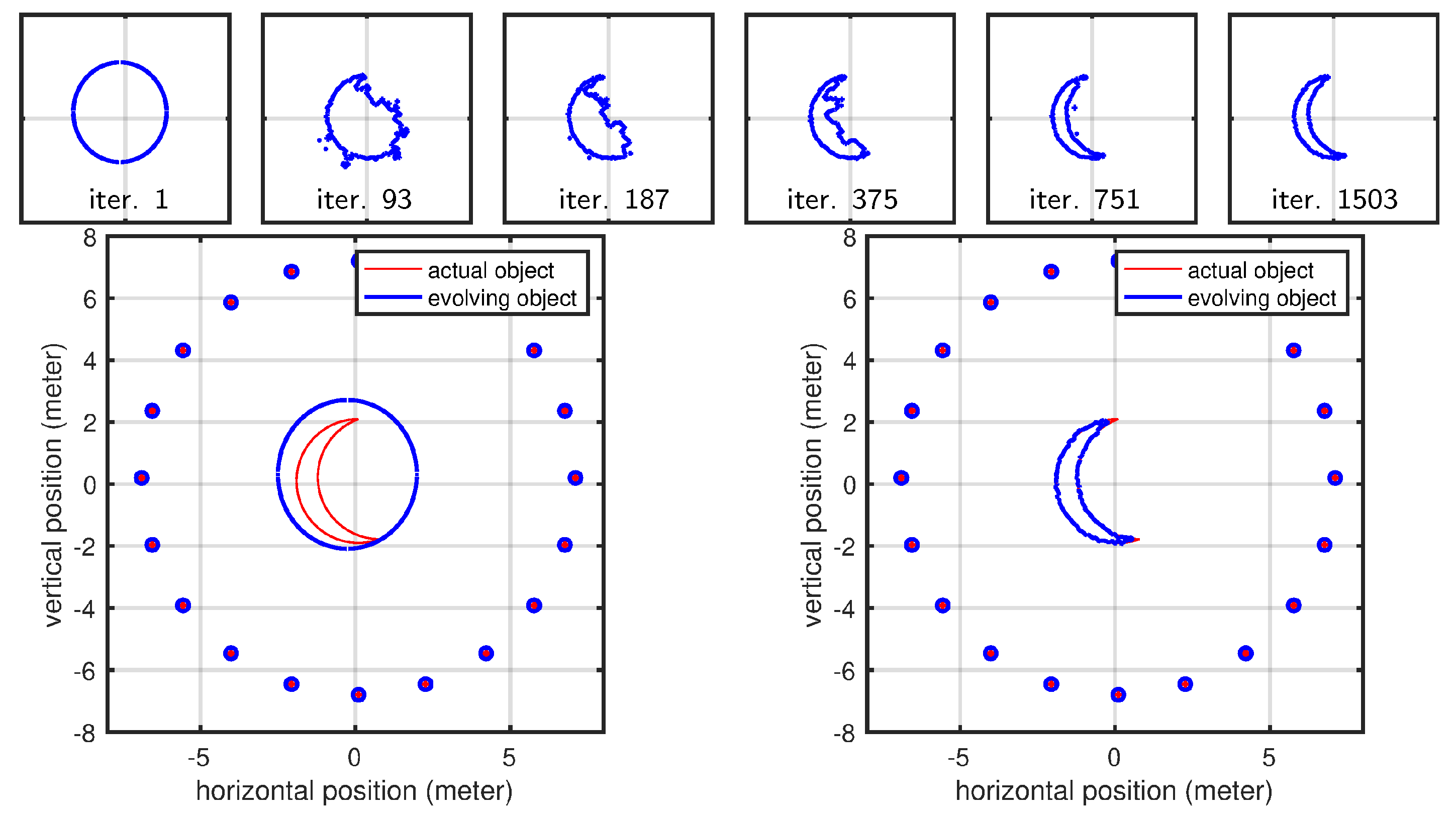

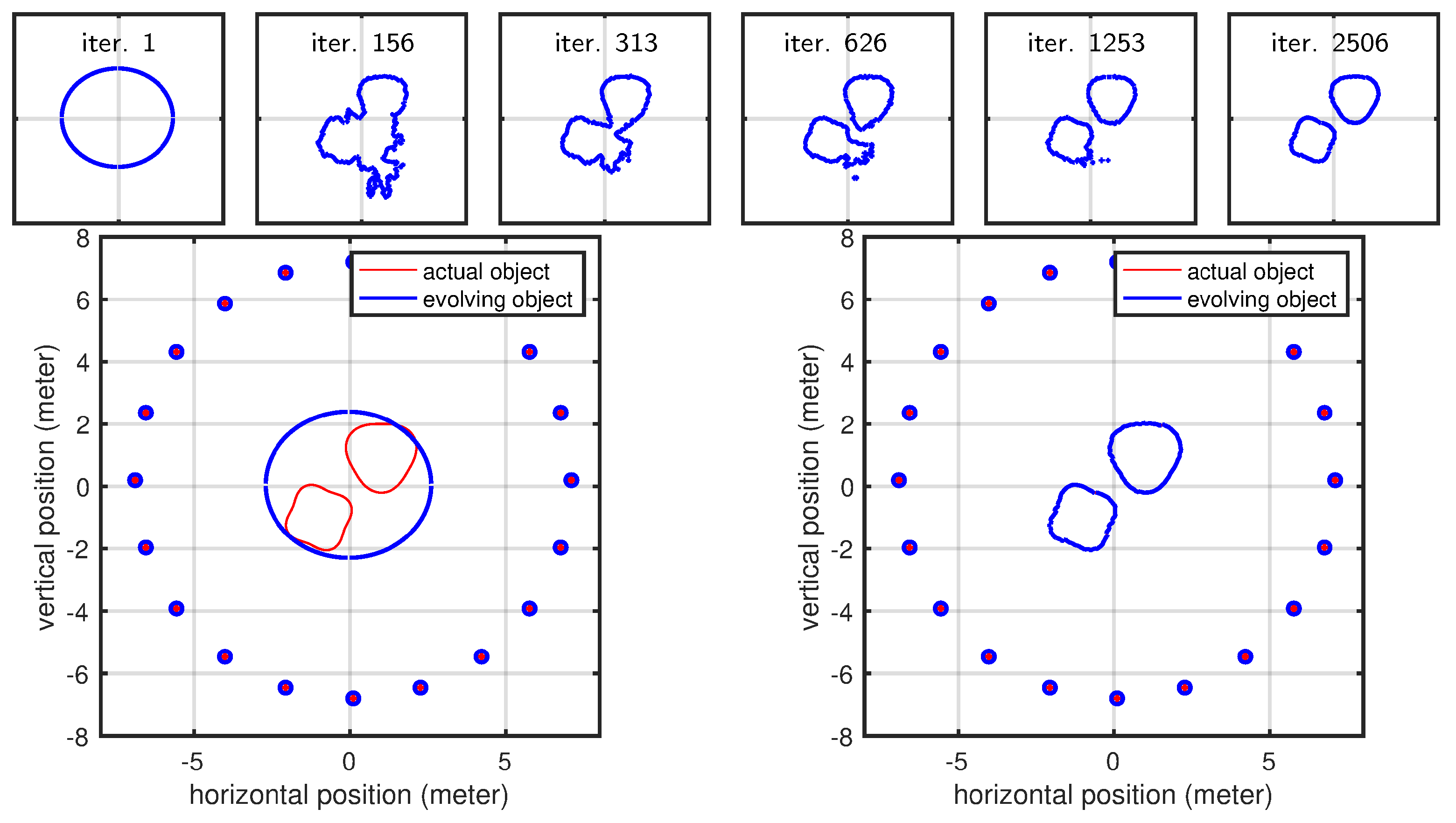

Here, we demonstrate the level-set approach using two cases that cannot be handled by our polygonal shape model: (1) a crescent shape, which cannot be expressed with the polygonal shape model used for the other results (we parameterized that model using a polar representation, and for a crescent-shaped object, the same angle corresponds to multiple radii, meaning that a ray emanating from the center can intersect the shape at multiple points); and (2) a shape that is topologically different from the initial shape model (the level-set parameterization helps here because we do not have to assume a specific topology for the initial shape model). The evolution of the curve for the crescent-shaped object is shown in Figure 14, and the curve evolution for the second case is shown in Figure 15.

The present section anticipates early results using active contours and the level-set method (LSM). The motivation for using these methodologies to parameterize the scene is twofold: (1) the LSM describes the scene implicitly, which provides greater flexibility (and simplicity) in representing virtually any shape; and (2) it naturally handles topological changes. We reserve the full discussion of these approaches for a subsequent article addressing the full three-dimensional case, which is more complex but also more relevant to real-world applications. Nevertheless, we include this early 2D result to demonstrate that this geometric approach can indeed be used in the context of radar-based inversion.
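The limitation of the polar representation can be expressed as a simple geometric test: a polygon admits a single-valued radius function r(θ) about a center only if the polar angle increases monotonically along every edge and the boundary winds around the center exactly once. The sketch below checks this condition, assuming counter-clockwise vertex order and a given center; it is an illustration rather than part of the authors' code, and the name `isPolarRepresentable` is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point {
    double x;
    double y;
};

// True if the closed counter-clockwise polygon v can be written as r(theta)
// about center c, i.e., every ray from c meets the boundary exactly once.
bool isPolarRepresentable(const std::vector<Point>& v, const Point& c) {
    assert(v.size() >= 3);
    const double kTwoPi = 2.0 * std::acos(-1.0);
    double totalAngle = 0.0;
    for (std::size_t i = 0; i < v.size(); ++i) {
        const Point& a = v[i];
        const Point& b = v[(i + 1) % v.size()];
        const double ax = a.x - c.x, ay = a.y - c.y;
        const double bx = b.x - c.x, by = b.y - c.y;
        const double crossZ = ax * by - ay * bx;  // > 0: polar angle increases along the edge
        if (crossZ <= 0.0) return false;          // angle stalls or reverses: r(theta) not single-valued
        totalAngle += std::atan2(crossZ, ax * bx + ay * by);  // angle swept from a to b
    }
    // Monotonic angles summing to one full turn: the boundary winds around c exactly once.
    return std::fabs(totalAngle - kTwoPi) < 1e-9;
}
```

A convex rectangle passes this test about its center, whereas a U-shaped polygon (a blocky stand-in for a crescent) fails about a point in its base, because the polar angle reverses along the inner walls. This is exactly the situation in which the level-set representation becomes necessary.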

4. Conclusions and Future Work

We propose a frequency-domain radar-based shape inversion method that is analogous, yet complementary, to the time-domain approach described in [15,16]. Our 2D inversion examples demonstrate that stretch-processed radar signals can be used effectively to reconstruct scene shapes when appropriate observables are extracted from them. Unlike classical radar imaging techniques that rely solely on signal processing, our approach performs data inversion using an explicit geometric model that captures the scene shape by iteratively minimizing a cost functional. This modeling approach allows various shape priors to be incorporated directly into the estimation process. In this paper, we specifically implemented a regularization term that promotes smooth shapes; however, any prior geometric knowledge could be integrated naturally as a regularization term in our cost functional. Another important benefit of geometric models is their ability to handle visibility and occlusions effectively. Our approach performs a visibility analysis to identify which segments of the objects are detectable by the radar antennas and thus influence the received signals. We observe the effects of the visibility analysis in Section 3.2, where the visible parts of the scene converge to the true shape while the occluded parts evolve toward a configuration that satisfies the mathematical prior built into the regularizer. The shape of the occluded parts is therefore an artifact, but a deliberate one: since we explicitly compute visibility, we can discern exactly which parts of the scene are correctly reconstructed.
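For readers less familiar with stretch processing: the received LFM echo is mixed with a reference chirp, and a point scatterer located ΔR beyond the reference range yields a beat tone at f_b = 2γΔR/c, where γ = B/T is the chirp rate. The sketch below encodes this standard relation (ignoring residual video phase and Doppler) together with the nominal range resolution c/(2B). It does not describe the specific observables extracted in this paper, and the names `Chirp`, `beatFrequency`, `rangeOffset`, and `rangeResolution` are hypothetical.

```cpp
#include <cassert>

// Speed of light in vacuum (m/s).
constexpr double kSpeedOfLight = 299792458.0;

// LFM chirp: bandwidth B (Hz) swept over duration T (s).
struct Chirp {
    double bandwidthHz;
    double durationS;
    double rate() const { return bandwidthHz / durationS; }  // gamma = B / T (Hz/s)
};

// Beat frequency after dechirping an echo from a scatterer located
// deltaRange metres beyond the reference range: f_b = 2 * gamma * dR / c.
double beatFrequency(const Chirp& chirp, double deltaRange) {
    assert(chirp.durationS > 0.0);
    return 2.0 * chirp.rate() * deltaRange / kSpeedOfLight;
}

// Inverse mapping: range offset corresponding to a measured beat frequency.
double rangeOffset(const Chirp& chirp, double beatHz) {
    assert(chirp.bandwidthHz > 0.0 && chirp.durationS > 0.0);
    return beatHz * kSpeedOfLight / (2.0 * chirp.rate());
}

// Nominal range resolution of an LFM waveform: c / (2 B).
double rangeResolution(const Chirp& chirp) {
    assert(chirp.bandwidthHz > 0.0);
    return kSpeedOfLight / (2.0 * chirp.bandwidthHz);
}
```

For example, a 150 MHz chirp swept over 1 ms (γ = 1.5 × 10¹¹ Hz/s) maps a 100 m range offset to a beat frequency of about 100 kHz, with a nominal range resolution of about 1 m.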

Although the current experiments used a polar-coordinate parameterization of the scene and investigated polygonal shapes, these assumptions do not affect the validity of our findings, which carry over to more complex scene representations. As in our earlier time-domain work, we provide a proof of concept in a simplified environment. However, instead of pursuing a graph-based representation of the geometry (which posed several limitations), we opted for a more flexible finite-element shape representation. Although implemented in 2D for simplicity, this representation not only removes the limitations of the graph-based approach but also provides a flexible environment for testing the viability of the method without the burden of implementing it directly in 3D.

It should be noted that although our method (the forward and inverse models and the visibility analysis) was implemented in C++17, our main focus was on correctness and simplicity rather than performance (e.g., the visibility analysis has computational complexity ). Because numerical integration is the computationally heavy part of our method, and such computations are known to benefit significantly from parallel programming and the vector extensions available in modern processors, there is considerable room for performance improvement in future work. Whereas our previous work demonstrated that radar-based shape reconstruction is feasible in the time domain, the present experiments, although limited to the simplified case of two-dimensional geometries, demonstrate that the same shape reconstruction framework can also be formulated in the frequency domain. This conclusion motivates us to pursue the challenge of implementing the approach using active surfaces, as these methods provide the flexibility needed to handle topological changes (e.g., evolving an initial contour to reconstruct multiple separate objects within the same scene). Such an effort, however, is a matter for future work.
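The point that numerical integration parallelizes and vectorizes well can be illustrated with C++17 standard algorithms. The sketch below uses a composite trapezoidal rule, which is not necessarily the quadrature used in the implementation described here, and computes the weighted sum with `std::transform_reduce`. Unlike `std::accumulate`, this algorithm is allowed to regroup partial sums, so passing an execution policy such as `std::execution::par_unseq` (from `<execution>`) as the first argument is enough to request parallel, vectorized evaluation. Whether that request is honored depends on the standard library (libstdc++, for example, delegates to Intel TBB). The function name `trapezoid` is hypothetical.

```cpp
#include <cassert>
#include <functional>
#include <numeric>
#include <vector>

// Composite trapezoidal rule for f on [a, b] with n subintervals.
// std::transform_reduce permits the partial sums to be regrouped, so an
// execution policy could be added as the first argument to parallelize it.
template <typename F>
double trapezoid(F f, double a, double b, int n) {
    assert(n > 0 && b > a);
    const double h = (b - a) / n;
    std::vector<int> nodes(n + 1);
    std::iota(nodes.begin(), nodes.end(), 0);  // node indices 0..n
    const double weightedSum = std::transform_reduce(
        nodes.begin(), nodes.end(), 0.0, std::plus<>{},
        [&](int i) {
            const double w = (i == 0 || i == n) ? 0.5 : 1.0;  // endpoint weights
            return w * f(a + i * h);
        });
    return h * weightedSum;
}
```

A parallel run may differ from the sequential one in the last bits, because floating-point addition is not associative; this is usually negligible compared with the discretization error of the quadrature itself.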