Abstract

The increasing adoption of high-resolution imaging sensors across various fields has led to a growing demand for techniques to enhance video quality. Video super-resolution (VSR) addresses this need by reconstructing high-resolution videos from lower-resolution inputs; however, directly applying single-image super-resolution (SISR) methods to video sequences neglects temporal information, resulting in inconsistent and unnatural outputs. In this paper, we propose FDI-VSR, a novel framework that integrates spatiotemporal dynamics and frequency-domain analysis into conventional SISR models without extensive modifications. We introduce two key modules: the Spatiotemporal Feature Extraction Module (STFEM), which employs dynamic offset estimation, spatial alignment, and multi-stage temporal aggregation using residual channel attention blocks (RCABs); and the Frequency–Spatial Integration Module (FSIM), which transforms deep features into the frequency domain to effectively capture global context beyond the limited receptive field of standard convolutions. Extensive experiments on the Vid4, SPMCs, REDS4, and UDM10 benchmarks, supported by detailed ablation studies, demonstrate that FDI-VSR not only surpasses conventional VSR methods but also achieves competitive results compared to recent state-of-the-art methods, with improvements of up to 0.82 dB in PSNR on the SPMCs benchmark and notable reductions in visual artifacts, all while maintaining lower computational complexity and faster inference.

1. Introduction

Over the past few years, there has been a growing demand for high-quality and high-resolution images and videos across diverse industries. Fields such as autonomous driving, medical imaging, and crime scene investigations rely heavily on converting low-resolution (LR) images or frames into high-resolution (HR) counterparts, underscoring the importance of super-resolution (SR) technologies. SR not only enhances resolution but also effectively reduces noise and blur, ultimately improving overall visual quality [1].

In particular, single-image super-resolution (SISR) has matured significantly, driven by breakthroughs in deep learning-based methods. From early convolutional architectures such as SRCNN [2] to more recent transformer-based models such as SwinIR [3], SISR methods have shown remarkable success in restoring fine details. However, directly applying these SISR solutions to videos typically processes each frame independently, ignoring temporal cues and leading to inconsistent or unnatural video outputs [4]. Therefore, video super-resolution (VSR) methods must address motion estimation, frame alignment, and temporal fusion [5], often requiring more sophisticated architectures than those used in SISR.

Early VSR research, such as the Bayesian adaptive method proposed by Liu and Sun [6], used optical flow [7] or LSTM-based networks [8] to capture inter-frame relationships, but these approaches still struggle with large motions and complex artifacts. Recent studies have endeavored to reduce computational overhead while improving temporal consistency in VSR. For example, Lee et al. [9] introduced a deformable convolution-based alignment network for better efficiency, while Zhu and Li [10] proposed a lightweight recurrent grouping attention network to aggregate temporal information effectively. Lu and Zhang [11] further addressed real-world degradations with a degradation-adaptive approach, highlighting the importance of handling a broad range of noise and blur conditions.

Building upon these advances, this paper explicitly addresses the following questions: (1) How can temporal dynamics be integrated effectively into existing SISR architectures without extensive architectural redesign? (2) How can complex, non-uniform motion between consecutive video frames be handled adaptively? (3) How can frequency-domain analysis improve the reconstruction of subtle textures and high-frequency details that are typically challenging for purely spatial approaches?

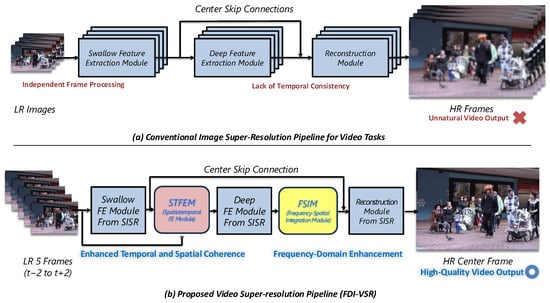

To answer these questions, we propose FDI-VSR, a novel framework designed to enhance existing single-image super-resolution architectures to better address video super-resolution tasks (Figure 1). Our method specifically introduces two innovative modules: a Spatiotemporal Feature Extraction Module (STFEM), which learns adaptive offsets to handle complex and non-uniform motions between neighboring frames without relying on explicit optical flow estimation [12], and a Frequency–Spatial Integration Module (FSIM), which transforms spatial features into the frequency domain to capture global context and enhance the recovery of subtle textures and high-frequency details beyond the limited receptive field of standard convolutions [13].

Figure 1.

Motivation for the proposed video super-resolution framework.

The main contributions of this paper are as follows: First, we propose a flexible framework that extends conventional single-image super-resolution models to video super-resolution tasks without extensive modifications, facilitating easier adoption and deployment in practical scenarios. Second, we introduce the STFEM, which effectively manages complex motions via dynamic offset estimation and enhances temporal consistency using multi-stage temporal aggregation based on residual channel attention mechanisms [14,15]. Third, we develop the FSIM, which incorporates frequency-domain processing to complement spatial features, enabling the network to reconstruct subtle textures, periodic patterns, and low-contrast regions more effectively. Finally, extensive experiments on four widely used benchmarks (Vid4 [4], SPMCs [16], REDS4 [17], and UDM10 [18]) demonstrate that our approach achieves superior performance compared to conventional methods and competitive performance against recent state-of-the-art VSR methods while maintaining lower computational complexity.

2. Related Work

2.1. Single-Image Super-Resolution (SISR)

Single-image super-resolution (SISR) aims to reconstruct a high-resolution (HR) image from a low-resolution (LR) input by restoring lost details. Early approaches primarily employed interpolation-based methods such as bilinear or bicubic scaling, often supplemented by edge priors [19] or example-based patch matching [20]. Significant progress was later achieved with the advent of deep learning, especially convolutional neural networks (CNNs). SRCNN [2], as one of the earliest CNN-based models, demonstrated the feasibility of learning an end-to-end mapping from LR to HR domains. Subsequently, residual learning inspired by ResNet [21] led to notable improvements, exemplified by EDSR [22]. Additionally, the introduction of adversarial training, such as SRGAN [23], further enhanced the realism and perceptual quality of super-resolved images. More recently, transformer-based architectures like SwinIR [3] have set new performance benchmarks by effectively modeling local and global dependencies through self-attention mechanisms. Furthermore, HMANet [24], employing a hierarchical transformer structure combined with multi-axis attention, has demonstrated state-of-the-art performance by adaptively capturing multi-scale contextual information, thereby significantly enhancing fine-detail reconstruction capabilities.

2.2. Video Super-Resolution (VSR)

Video Super-Resolution (VSR) reconstructs a high-resolution (HR) central frame from multiple adjacent low-resolution (LR) frames, exploiting both spatial details and temporal correlations [5]. Unlike single-image super-resolution (SISR), VSR involves challenges like motion estimation, frame alignment, and temporal aggregation, adding significant complexity.

Early VSR methods often relied on explicit motion estimation such as optical flow [4] to warp neighboring frames for alignment. While straightforward, these approaches frequently faced difficulties in handling large motions, occlusions, or complex movements. To mitigate these limitations, frame-recurrent models employing recurrent neural networks like LSTM were proposed, propagating temporal information without explicit motion estimation [25]. However, these recurrent methods suffered from error accumulation over multiple frames, degrading quality over time.

Recently, implicit alignment techniques based on deformable convolutions have emerged, effectively managing complex and irregular motion without explicit optical flow. Models such as TDAN [5] and EDVR [26] demonstrated significant performance gains using adaptive feature sampling. Following this trend, research has further focused on lightweight and efficient architectures. Lee et al. [9] proposed deformable convolution-based networks aiming at computational efficiency, while Zhu and Li [10] introduced recurrent grouping attention for effective temporal fusion. Lu and Zhang [11] emphasized robustness against real-world degradation scenarios, highlighting the practical applicability of modern VSR methods.

Despite these advancements, current VSR techniques still face limitations, particularly in effectively aggregating spatial details across frames and fully utilizing frequency-domain information. Approaches employing single-image super-resolution (SISR) architectures frame by frame simplify development but often fail to recover subtle textures and high-frequency details, especially in low-contrast areas where frequency-domain insights are crucial.

Thus, there remains considerable room for improvement in VSR methods, specifically in effectively integrating spatial–temporal dynamics and leveraging frequency-domain analysis for superior reconstruction quality.

3. Proposed Method

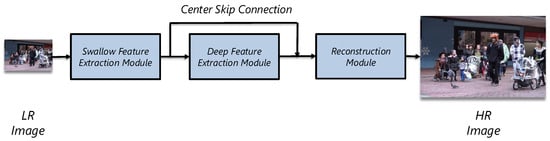

To introduce our VSR approach, we first describe a typical single-image super-resolution (SISR) pipeline, which generally comprises three core stages, as illustrated in Figure 2.

Figure 2.

A general architecture of single-image super-resolution (SISR) models, comprising shallow feature extraction, deep feature extraction, and reconstruction stages.

A standard SISR architecture initiates with shallow feature extraction, capturing fundamental low-level features such as edges, lines, and basic textures. It then proceeds to deep feature extraction, where complex, hierarchical patterns are learned through deeper convolutional or transformer-based layers. Finally, the reconstruction stage maps these rich features back to the high-resolution (HR) image space through upsampling and refinement.

Despite recent advancements, explicitly designed video super-resolution (VSR) methods often demand extensive architectural modifications and computational resources to effectively integrate spatial and temporal information. Conversely, directly applying SISR models frame by frame simplifies development but inadequately leverages inter-frame spatial correlations and frequency-domain information, thus failing to recover subtle textures and high-frequency details, especially in low-contrast scenarios.

Motivated by these limitations, our proposed FDI-VSR framework enhances mature and computationally efficient SISR architectures by introducing two novel modules: the Spatiotemporal Feature Extraction Module (STFEM) and the Frequency–Spatial Integration Module (FSIM). The theoretical advantages of this integration are threefold:

- Minimal architectural overhead: By building upon mature SISR models, we avoid extensive redesign, maintaining computational efficiency and allowing easy integration into existing pipelines.

- Effective temporal integration: The STFEM addresses temporal misalignment through adaptive feature sampling using deformable convolutions, implicitly modeling motion without explicit optical flow estimation, thus efficiently leveraging temporal dynamics.

- Enhanced frequency-domain awareness: The FSIM incorporates frequency-domain analysis, explicitly capturing global structural patterns and subtle high-frequency details often overlooked by purely spatial convolutional methods, significantly improving texture and detail reconstruction, particularly in low-contrast scenarios.

Our choice to build upon mature SISR models stems from their established computational efficiency and robustness. Unlike conventional VSR methods, which often rely on complex temporal modeling such as optical flow estimation or 3D convolutions, our proposed STFEM implicitly aligns temporal features using adaptive deformable sampling, significantly reducing computational complexity. Meanwhile, FSIM explicitly incorporates frequency-domain analysis to capture global structural context overlooked by traditional convolution-based methods.

Both STFEM and FSIM are designed to be modular, so they can be inserted into a variety of SISR architectures without structural modifications to the backbone. Specifically, STFEM fits between the shallow and deep feature extraction stages, and FSIM operates directly after deep feature extraction, preserving the simplicity of the original SISR framework and eliminating the need for extensive architectural redesign. This modularity is what distinguishes our approach from conventional VSR methods, which typically require dedicated architectures.

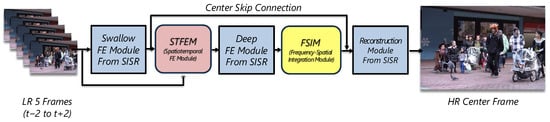

As shown in Figure 3, the input is a sequence of five consecutive LR frames, $\{I_{t-2}, I_{t-1}, I_t, I_{t+1}, I_{t+2}\}$, where $I_t$ is the center frame targeted for super-resolution. Shallow feature extraction first processes each LR frame individually. These shallow features, along with the original LR frames, are then fed into the STFEM, which incorporates motion information and aligns features across frames. The aggregated features from the STFEM are passed to the deep feature extractor, which exploits the SISR model's capacity for high-level feature learning. After deep feature extraction, the FSIM refines the features through global frequency-domain analysis. Finally, the refined features are used in the reconstruction stage to produce the high-resolution center frame.

Figure 3.

Overall structure of the proposed method. We integrate the STFEM and the FSIM into a baseline SISR model for effective video super-resolution.
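The data flow described above can be summarized in a small Python sketch. The stage callables (`shallow`, `stfem`, `deep`, `fsim`, `recon`) are hypothetical placeholders for the baseline's modules and the two proposed ones, and the skip connection from the center frame's shallow feature (Section 3.2.3) is assumed to be elementwise addition. It only illustrates where each module sits; it is not the authors' implementation.

```python
from typing import Any, Callable, List, Sequence


def fdi_vsr_forward(
    frames: Sequence[Any],
    shallow: Callable[[Any], Any],
    stfem: Callable[[List[Any], List[Any]], Any],
    deep: Callable[[Any], Any],
    fsim: Callable[[Any], Any],
    recon: Callable[[Any], Any],
) -> Any:
    """Route a five-frame LR window (t-2 .. t+2) through the FDI-VSR stages."""
    if len(frames) != 5:
        raise ValueError("expected five consecutive frames centered on t")
    center = len(frames) // 2                # index of I_t in the window
    feats = [shallow(f) for f in frames]     # per-frame shallow features (SISR stage 1)
    fused = stfem(feats, list(frames))       # STFEM: offsets, alignment, temporal fusion
    deep_feat = deep(fused)                  # unchanged SISR deep feature extractor
    refined = fsim(deep_feat)                # FSIM: local + frequency-domain refinement
    # Skip connection from the center frame's shallow feature (assumed: addition)
    return recon(refined + feats[center])


# Toy usage: scalars stand in for feature maps
out = fdi_vsr_forward(
    [1, 2, 3, 4, 5],
    shallow=lambda x: 10 * x,
    stfem=lambda feats, raw: sum(feats) / len(feats),
    deep=lambda x: x + 1,
    fsim=lambda x: 2 * x,
    recon=lambda x: x,
)
print(out)
```

Because STFEM and FSIM only wrap the deep extractor, swapping in a different SISR backbone would change only `shallow`, `deep`, and `recon` in this sketch.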

The detailed architecture and operational mechanisms of STFEM and FSIM will be elaborated on in the following subsections.

3.1. Spatiotemporal Feature Extraction Module

The Spatiotemporal Feature Extraction Module (STFEM) is designed to handle complex inter-frame motions by adaptively aligning and aggregating temporal information into spatial features. This module enhances the network’s ability to capture complex motions and temporal consistency through three sequential submodules: offset estimation, spatial aggregation, and temporal aggregation. Figure 4 illustrates the detailed architecture of STFEM, and the following subsections provide further explanations of each submodule.

Figure 4.

Architecture of the Spatiotemporal Feature Extraction Module (STFEM). The STFEM consists of offset estimation (blue), spatial aggregation (green), and temporal aggregation (red) submodules.

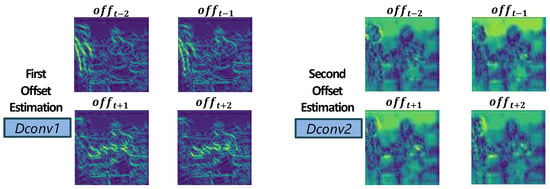

3.1.1. Offset Estimation

For each frame $I_i$, where $i \in \{t-2, \dots, t+2\}$, shallow features are extracted by a residual block and a standard convolution layer. The index $t$ denotes the center frame $I_t$.

We next estimate offsets that implicitly model motion between each neighboring frame and the center frame. For this purpose, we adopt deformable convolution [12,27], which adaptively adjusts sampling locations through learned offsets. This adaptive sampling aligns features effectively even under complex or irregular motion, without requiring explicit optical flow estimation. Specifically, we concatenate the shallow features of the center frame with those of each neighboring frame and feed the result into two consecutive deformable convolution layers to estimate the offsets.

The first deformable convolution captures coarse motion, while the second refines it further. Figure 5 visualizes these learned offsets, where regions of large or complex motion receive correspondingly larger offset values.
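To make the offset estimation concrete, the sketch below shows the bookkeeping common to deformable convolutions: for a k×k kernel, the offset branch predicts one (dy, dx) pair per kernel point, i.e. 2k² channels per offset group (plus k² modulation channels in the DCN v2 variant), and kernel point k samples at p0 + p_k + dp_k instead of the regular grid position p0 + p_k. The flat `[dy_0, dx_0, dy_1, dx_1, ...]` layout is an assumption for illustration; libraries may order channels differently.

```python
from typing import List, Sequence, Tuple


def offset_channels(kernel_size: int, offset_groups: int = 1,
                    modulated: bool = False) -> Tuple[int, int]:
    """Channels an offset branch must predict for a k x k deformable conv."""
    points = kernel_size * kernel_size                  # sampling points per kernel
    offsets = 2 * offset_groups * points                # one (dy, dx) pair per point
    masks = offset_groups * points if modulated else 0  # DCN v2 modulation scalars
    return offsets, masks


def sampling_positions(p0: Tuple[float, float], offsets: Sequence[float],
                       kernel_size: int = 3) -> List[Tuple[float, float]]:
    """Positions p0 + p_k + dp_k read by each kernel point k.

    `offsets` is a flat [dy_0, dx_0, dy_1, dx_1, ...] list (layout assumed).
    """
    r = kernel_size // 2
    grid = [(i, j) for i in range(-r, r + 1) for j in range(-r, r + 1)]  # regular p_k
    y0, x0 = p0
    return [(y0 + gy + offsets[2 * k], x0 + gx + offsets[2 * k + 1])
            for k, (gy, gx) in enumerate(grid)]


# A 3x3 kernel needs 18 offset channels (plus 9 mask channels with DCN v2)
print(offset_channels(3), offset_channels(3, modulated=True))
# Every point shifted half a pixel down and one pixel left; index 4 is the kernel center
print(sampling_positions((5, 5), [0.5, -1.0] * 9)[4])
```

With all offsets at zero the positions reduce to the ordinary 3×3 grid, so a deformable convolution strictly generalizes a standard one.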

Figure 5.

Visualization of estimated offsets between the center and adjacent frames. Green regions indicate areas of high motion.

3.1.2. Spatial Aggregation

Once the offsets are estimated, we align the shallow features of each neighboring frame. Specifically, a deformable convolution (DConv) uses the estimated offsets to spatially align the neighboring frame's features with the center frame, and a subsequent convolutional layer refines this alignment so that objects and edges from different frames are well matched. Figure 6 illustrates how the spatial aggregation step refines the alignment in areas of complex motion.
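The alignment step can be illustrated with a single-channel deformable convolution evaluated at one output position, following the standard DCN formulation y(p0) = Σ_k w_k · x(p0 + p_k + dp_k), where fractional positions are read by bilinear interpolation. This is a minimal pure-Python sketch assuming zero padding outside the map; real implementations operate on batched multi-channel tensors, and here the offsets would come from the offset estimation step.

```python
import math
from typing import List, Sequence, Tuple

Feature = List[List[float]]  # a single-channel H x W feature map


def bilinear(feat: Feature, y: float, x: float) -> float:
    """Bilinearly sample feat at (y, x); pixels outside the map count as zero."""
    h, w = len(feat), len(feat[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(y - yy)) * (1 - abs(x - xx))
                value += weight * feat[yy][xx]
    return value


def deform_conv_at(feat: Feature, weights: Sequence[float], p0: Tuple[int, int],
                   offsets: Sequence[float], kernel_size: int = 3) -> float:
    """One output of a deformable conv: sum_k w_k * x(p0 + p_k + dp_k)."""
    r = kernel_size // 2
    grid = [(i, j) for i in range(-r, r + 1) for j in range(-r, r + 1)]
    total = 0.0
    for k, (gy, gx) in enumerate(grid):
        y = p0[0] + gy + offsets[2 * k]       # learned vertical displacement
        x = p0[1] + gx + offsets[2 * k + 1]   # learned horizontal displacement
        total += weights[k] * bilinear(feat, y, x)
    return total


# Neighbor feature map whose value encodes position: 10 * row + col
neighbor = [[10.0 * r + c for c in range(5)] for r in range(5)]
center_tap = [0.0] * 4 + [1.0] + [0.0] * 4   # kernel that copies its center sample
print(deform_conv_at(neighbor, center_tap, (2, 2), [0.0] * 18))      # regular conv
print(deform_conv_at(neighbor, center_tap, (2, 2), [1.0, 1.0] * 9))  # shifted by (1, 1)
print(deform_conv_at(neighbor, center_tap, (2, 2), [0.0, 0.5] * 9))  # sub-pixel shift
```

With zero offsets the operation is an ordinary 3×3 convolution; non-zero offsets let the kernel read the neighbor's content from wherever it moved, which is how alignment happens without an explicit flow field.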

Figure 6.

Visualization of spatial aggregation. DConv refines alignment in regions with complex motion.

3.1.3. Temporal Aggregation

After spatial alignment, the aligned features from all five frames are concatenated and fused by a stack of residual channel attention blocks (RCABs) [14,15].

The RCABs adaptively re-weight channels to emphasize important spatiotemporal features, and intermediate convolutions reduce or restore the channel dimension to control memory usage while preserving essential information. The fused output is then fed into the deep feature extractor of the baseline SISR network, injecting video-specific cues into the subsequent SR layers with minimal architectural overhead.
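The channel re-weighting performed by each RCAB can be sketched after the residual channel attention design popularized by RCAN: global average pooling squeezes each channel to a scalar, a reduce/ReLU/expand bottleneck followed by a sigmoid yields one weight per channel, and the rescaled features are added back to the block input. In this sketch the two 1×1 convolutions are written as plain weight matrices, `body` stands in for the block's conv-ReLU-conv stage, and all weights are illustrative rather than learned values.

```python
import math
from typing import Callable, List, Sequence

Maps = List[List[List[float]]]  # [C][H][W] feature maps


def channel_attention(feat: Maps, w_down: Sequence[Sequence[float]],
                      w_up: Sequence[Sequence[float]]) -> Maps:
    """Scale each channel by sigmoid(W_up * ReLU(W_down * GAP(feat)))."""
    # Squeeze: global average pooling gives one descriptor per channel
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]
    # Excitation: channel reduction, ReLU, channel expansion, sigmoid
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w_down]
    scale = [1.0 / (1.0 + math.exp(-sum(w * v for w, v in zip(row, hidden))))
             for row in w_up]
    return [[[scale[c] * v for v in line] for line in ch] for c, ch in enumerate(feat)]


def rcab(feat: Maps, body: Callable[[Maps], Maps],
         w_down: Sequence[Sequence[float]], w_up: Sequence[Sequence[float]]) -> Maps:
    """Residual channel attention block: x + CA(body(x))."""
    res = channel_attention(body(feat), w_down, w_up)
    return [[[a + b for a, b in zip(la, lb)] for la, lb in zip(ca, cb)]
            for ca, cb in zip(feat, res)]


x = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]  # two 2x2 channels
# Reduction ratio 2: two channels -> one hidden unit -> two attention weights
y = rcab(x, body=lambda f: f, w_down=[[0.0, 0.0]], w_up=[[1.0], [1.0]])
print(y[0])  # zero weights give sigmoid(0) = 0.5, so channel 0 becomes 1.5 * x
```

Stacking several such blocks, as varied in the RCAB ablation of Section 4.6, yields the temporal aggregation stage in this simplified view.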

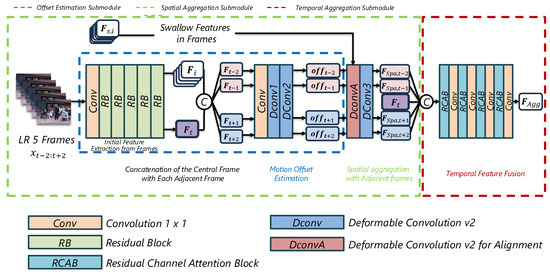

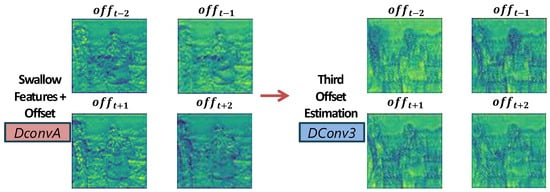

3.2. Frequency–Spatial Integration Module

While the Spatiotemporal Feature Extraction Module addresses motion and temporal consistency, convolutions alone still have a limited receptive field for capturing global context. We therefore introduce the Frequency–Spatial Integration Module (FSIM), which processes features in the frequency domain to complement local convolutions with global information [13]. The FSIM transforms spatial features into frequency representations, which inherently encode global structural patterns and periodic textures often missed by spatial convolutions. Figure 7 illustrates the detailed architecture of FSIM.

Figure 7.

Architecture of the Frequency–Spatial Integration Module (FSIM). The FSIM consists of local operation (red), global operation (blue), and fusion (green) submodules.

3.2.1. Local Operation

We first apply a residual-style local convolution block with LeakyReLU activation [28] to refine spatial details. This branch captures fine textures and edges locally, while the residual connection preserves the information in the input deep feature.

3.2.2. Global Operation (Frequency Domain)

To capture global structural information and subtle high-frequency details typically lost in low-resolution images, we integrate frequency-domain analysis using the 2D Fast Fourier Transform (FFT) [13,29,30]. Working in the frequency domain complements local spatial processing, enabling more effective reconstruction of fine textures and global patterns. Specifically, we first transform the deep feature into a frequency-aware feature and then apply a real-valued 2D FFT (RFFT) to it.

Because every frequency coefficient depends on all spatial positions, operating in the frequency domain allows the module to capture large-scale structure and to handle both high- and low-frequency components more flexibly than purely local convolutions. After the inverse RFFT, the transformed features are merged through additional convolution and residual connections.
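Why a frequency-domain operation reaches beyond a convolution's receptive field can be shown with a small example: editing a single Fourier coefficient changes the entire feature map. The sketch uses a naive 2D DFT (what an FFT computes, only more slowly) and, as a deliberately simple stand-in for FSIM's learned frequency-domain layers, multiplies each frequency by a fixed gain. The gain function is an assumption for illustration. It must satisfy gain(u, v) = gain(-u, -v) (indices taken modulo the map size) for the output to stay real, which reflects the same Hermitian symmetry that lets an RFFT store only W//2 + 1 columns.

```python
import cmath
from typing import Callable, List

Grid = List[List[float]]


def dft2(x: Grid) -> List[List[complex]]:
    """Naive 2D DFT: X[u][v] = sum_{m,n} x[m][n] * exp(-2j*pi*(u*m/H + v*n/W))."""
    h, w = len(x), len(x[0])
    return [[sum(x[m][n] * cmath.exp(-2j * cmath.pi * (u * m / h + v * n / w))
                 for m in range(h) for n in range(w))
             for v in range(w)] for u in range(h)]


def idft2_real(spec: List[List[complex]]) -> Grid:
    """Inverse 2D DFT, keeping the real part (exact when the spectrum is Hermitian)."""
    h, w = len(spec), len(spec[0])
    return [[(sum(spec[u][v] * cmath.exp(2j * cmath.pi * (u * m / h + v * n / w))
                  for u in range(h) for v in range(w)) / (h * w)).real
             for n in range(w)] for m in range(h)]


def spectral_filter(x: Grid, gain: Callable[[int, int], float]) -> Grid:
    """Scale every frequency (u, v) by gain(u, v), then return to the spatial domain."""
    spec = dft2(x)
    scaled = [[spec[u][v] * gain(u, v) for v in range(len(spec[0]))]
              for u in range(len(spec))]
    return idft2_real(scaled)


# A single impulse; zeroing only the DC coefficient subtracts the global mean,
# so every output pixel changes even though only one input pixel is non-zero.
impulse = [[1.0 if (m, n) == (0, 0) else 0.0 for n in range(4)] for m in range(4)]
out = spectral_filter(impulse, lambda u, v: 0.0 if (u, v) == (0, 0) else 1.0)
print([round(v, 6) for v in out[0]], [round(v, 6) for v in out[3]])
```

A 3×3 convolution applied to the same impulse would leave all but nine output pixels untouched, which is the locality FSIM is meant to overcome.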

3.2.3. Feature Fusion and Reconstruction

We concatenate the local and frequency-domain features in the fusion submodule.

Finally, the fusion result is skip-connected with the shallow feature of the center frame and passed through the reconstruction layer(s) to produce the final HR output.

This skip connection helps preserve low-level details from the center frame, boosting reconstruction quality.
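The reconstruction layer(s) are inherited from the baseline and are not detailed here. Many SISR reconstruction heads upsample with a sub-pixel convolution, in which a convolution produces C·r² channels that are then rearranged into an r-times larger map. Assuming such a head (an assumption, not something the text confirms for HMANet), the rearrangement step works as sketched below; the index mapping mirrors that of PyTorch's `nn.PixelShuffle`.

```python
from typing import List

Maps = List[List[List[float]]]  # [C][H][W]


def pixel_shuffle(feat: Maps, r: int) -> Maps:
    """Rearrange [C*r*r][H][W] into [C][H*r][W*r] (sub-pixel upsampling)."""
    cr2, h, w = len(feat), len(feat[0]), len(feat[0][0])
    if cr2 % (r * r):
        raise ValueError("channel count must be divisible by r * r")
    c = cr2 // (r * r)
    out = [[[0.0] * (w * r) for _ in range(h * r)] for _ in range(c)]
    for ch in range(c):
        for i in range(r):           # row offset inside each r x r output block
            for j in range(r):       # column offset inside each r x r output block
                src = feat[ch * r * r + i * r + j]
                for y in range(h):
                    for x in range(w):
                        out[ch][y * r + i][x * r + j] = src[y][x]
    return out


# x4 upsampling: 16 channels of a 1x1 map become one 4x4 map
lr_feat = [[[float(k)]] for k in range(16)]
hr = pixel_shuffle(lr_feat, 4)
print(hr[0])
```

For a ×4 scale factor, the convolution before the shuffle therefore has to output 16 channels per final output channel.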

Overall, by combining local spatial operations and frequency-domain processing, the FSIM enriches the network’s receptive field and enhances its ability to reconstruct both fine texture and global structure. When integrated with the STFEM, this design offers a powerful yet lightweight extension of standard SISR architectures to handle the challenges of video super-resolution. To provide a step-by-step summary of our entire pipeline, Algorithm 1 outlines the major procedures from shallow feature extraction through final reconstruction:

Algorithm 1: Proposed Video Super-Resolution Framework

4. Experimental Results

4.1. Training Details

Our FDI-VSR model was trained on the Vimeo-90K dataset [17], which contains 64,612 seven-frame sequences at a resolution of 448 × 256. This large and diverse dataset covers a wide range of dynamic motions and scene types, making it well suited for learning the spatiotemporal features required in video super-resolution. For each high-resolution frame, 256 × 256 patches were randomly extracted and then downsampled to 64 × 64 via bicubic interpolation, forming corresponding low–high resolution pairs. Data augmentation through random flips and rotations was employed to enhance the model's robustness and mitigate overfitting. Each training sample consists of five consecutive frames: the center frame plus the two preceding and two following frames, which provides the temporal context.
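The sampling procedure above can be sketched as follows. Which five of the seven Vimeo-90K frames are used and the exact augmentation set are assumptions here: the middle five frames, and flips plus transposition, whose combinations give the eight rotation/flip variants. The essential point is that one crop box and one augmentation choice are shared by all frames of a sample, so inter-frame motion stays consistent. Bicubic downsampling of each 256 × 256 patch to 64 × 64 would follow and is left to an image library.

```python
import random
from typing import List, Optional, Tuple

Frame = List[List[float]]  # a single-channel H x W frame


def middle_five(seq_len: int = 7) -> List[int]:
    """Indices of the centered five-frame window (t-2 .. t+2) in a sequence."""
    c = seq_len // 2
    return list(range(c - 2, c + 3))


def random_crop_box(height: int = 256, width: int = 448, patch: int = 256,
                    rng: Optional[random.Random] = None) -> Tuple[int, int]:
    """Top-left corner of one HR patch, reused for every frame in the sample."""
    rng = rng or random.Random()
    return rng.randint(0, height - patch), rng.randint(0, width - patch)


def augment(frames: List[Frame], hflip: bool, vflip: bool,
            transpose: bool) -> List[Frame]:
    """Apply the same flip/transpose choice to every frame of a sample."""
    out = []
    for f in frames:
        if hflip:
            f = [row[::-1] for row in f]
        if vflip:
            f = f[::-1]
        if transpose:
            f = [list(col) for col in zip(*f)]
        out.append(f)
    return out


rng = random.Random(0)
top, left = random_crop_box(rng=rng)   # top is always 0: 256 rows equal the patch size
flips = [rng.random() < 0.5 for _ in range(3)]
clip = augment([[[1.0, 2.0], [3.0, 4.0]]] * 5, *flips)
print(middle_five(), (top, left), flips)
```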

4.2. Evaluation Details

The proposed model was evaluated on four widely recognized VSR benchmarks: Vid4 [4], SPMCs [16], REDS4 [17], and UDM10 [18]. The Vid4 dataset comprises four sequences (city, walk, calendar, and foliage), each containing approximately 30 frames at a resolution of 720 × 480. Vid4 has long served as a standard benchmark in video super-resolution research due to its moderate motion and challenging textures. The SPMCs dataset includes 11 sequences with roughly 31 frames each at a resolution of 960 × 520, providing a more diverse and dynamic test set with pronounced motion variations. REDS4 is a subset of the REDS dataset with complex scenes and diverse motion patterns. UDM10 [18] consists of 10 video sequences with 2K-resolution frames, offering high-quality content for evaluation. Performance was measured using PSNR and SSIM computed on the Y channel (on the RGB channels for REDS4) after discarding a four-pixel border to mitigate boundary artifacts, and our approach was compared against leading VSR models.
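The evaluation protocol can be sketched as below. The sketch assumes the ITU-R BT.601 luma conversion commonly used in SR evaluation (Y = 16 + 65.481R + 128.553G + 24.966B for RGB in [0, 1], giving Y on a 0 to 255 scale) and a peak value of 255; the exact conversion and rounding used for the reported numbers are not specified in the text. For REDS4, the same computation would run over the RGB channels instead of Y.

```python
import math
from typing import List, Tuple

Image = List[List[Tuple[float, float, float]]]  # H x W pixels of (R, G, B) in [0, 1]


def rgb_to_y(r: float, g: float, b: float) -> float:
    """BT.601 luma on the [16, 235] range for RGB inputs in [0, 1]."""
    return 16.0 + 65.481 * r + 128.553 * g + 24.966 * b


def psnr_y(sr: Image, hr: Image, border: int = 4) -> float:
    """PSNR on the Y channel after discarding `border` pixels on every side."""
    h, w = len(hr), len(hr[0])
    sq_err, count = 0.0, 0
    for y in range(border, h - border):
        for x in range(border, w - border):
            d = rgb_to_y(*sr[y][x]) - rgb_to_y(*hr[y][x])
            sq_err += d * d
            count += 1
    mse = sq_err / count
    return math.inf if mse == 0 else 10.0 * math.log10(255.0 ** 2 / mse)


black = [[(0.0, 0.0, 0.0)] * 12 for _ in range(12)]
white = [[(1.0, 1.0, 1.0)] * 12 for _ in range(12)]
edge_only = [row[:] for row in black]
edge_only[0][0] = (1.0, 1.0, 1.0)   # differs only inside the discarded border
print(psnr_y(edge_only, black), psnr_y(white, black))
```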

4.3. Implementation Details

All models were trained with a batch size of 16 using the mean squared error (MSE) loss between the reconstructed and ground-truth HR center frames. Because $\mathrm{PSNR} = 10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$ decreases monotonically as the MSE grows, minimizing this loss directly targets higher PSNR.

We used the Adam optimizer [31]. The learning rate was halved at 300k, 500k, 650k, 700k, and 750k iterations, for a total of 800k iterations. This multi-step decay strategy balanced stable convergence with efficient training.
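The step schedule described above corresponds to the following helper, which follows multi-step decay semantics: the rate drops once the iteration count reaches a milestone. The initial learning rate is passed in as `base_lr` because its value is not restated here; the value 1.0 below is only for illustration.

```python
from bisect import bisect_right
from typing import Sequence

MILESTONES = (300_000, 500_000, 650_000, 700_000, 750_000)
TOTAL_ITERS = 800_000


def lr_at(iteration: int, base_lr: float,
          milestones: Sequence[int] = MILESTONES, gamma: float = 0.5) -> float:
    """Learning rate after halving once for every milestone already reached."""
    return base_lr * gamma ** bisect_right(milestones, iteration)


for it in (0, 300_000, 500_000, 750_000, TOTAL_ITERS - 1):
    print(it, lr_at(it, base_lr=1.0))
```

After the fifth milestone the rate is 1/32 of its initial value, which it keeps for the final 50k iterations.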

Implementation was performed in Python 3.8.19 and PyTorch 2.1.2, and experiments were run on an NVIDIA A6000 GPU. As the baseline SISR architecture, we primarily used HMANet [24], a hierarchical transformer model with multi-axis attention.

4.4. Quantitative Evaluation

Table 1 summarizes the quantitative performance of our FDI-VSR approach compared with several state-of-the-art methods at 4× upscaling across four benchmark datasets. As demonstrated, our FDI-VSR method achieves superior performance on all datasets, with 27.29 dB PSNR and 0.8230 SSIM on Vid4, 29.84 dB PSNR and 0.8597 SSIM on SPMCs, 31.11 dB PSNR and 0.8674 SSIM on REDS4, and 39.30 dB PSNR and 0.9629 SSIM on UDM10.

Table 1.

Quantitative comparison results (PSNR/SSIM) between FDI-VSR and several state-of-the-art methods on the Vid4, SPMCs, REDS4, and UDM10 datasets for a 4× scale factor. Values were calculated on the Y-channel except REDS4 (RGB-channel).

Notably, our method outperforms recent approaches like FDDCC-VSR [40] and L-VSR [41] across all datasets. On Vid4, our approach improves PSNR by +0.34 dB over L-VSR and +0.50 dB over FDDCC-VSR. The performance gap is even larger on SPMCs, where our method achieves a +0.82 dB higher PSNR than L-VSR. On REDS4, our approach attains a +0.40 dB improvement over L-VSR and +0.56 dB over FDDCC-VSR.
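To put these gains in perspective: since PSNR = 10 log10(MAX²/MSE), a PSNR gain of Δ dB means the competing method's MSE is 10^(Δ/10) times larger. For the +0.82 dB gain on SPMCs this is a factor of about 1.21, i.e. roughly 17% lower MSE. The relation is exact per image; for PSNR averaged over frames and sequences it is only an approximation.

```python
def mse_ratio(delta_db: float) -> float:
    """Factor by which the competing method's MSE exceeds ours for a gain of delta_db."""
    return 10 ** (delta_db / 10)


gains_over_l_vsr = {"Vid4": 0.34, "SPMCs": 0.82, "REDS4": 0.40}  # dB, from the text
for dataset, gain in gains_over_l_vsr.items():
    ratio = mse_ratio(gain)
    print(f"{dataset}: MSE ratio {ratio:.3f}, {1 - 1 / ratio:.1%} lower MSE")
```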

For the UDM10 dataset [18], our method achieves state-of-the-art performance with 39.30 dB PSNR, slightly outperforming L-VSR (39.25 dB) and significantly surpassing LRGAN (37.93 dB) and earlier methods like PFNL (35.79 dB) and DBPN (35.39 dB).

These comprehensive results across multiple benchmark datasets demonstrate the effectiveness of our proposed FDI-VSR framework, which successfully integrates spatiotemporal alignment and frequency-domain integration to achieve superior video super-resolution performance.

4.5. Model Complexity and Inference Speed

Beyond PSNR and SSIM, computational complexity and inference speed are crucial for practical VSR applications. Table 2 compares the FLOPs and inference speed (FPS) of several VSR models, including our proposed FDI-VSR.

Table 2.

Comparative analysis of model complexity and inference speed for various VSR models on input sequences. GFLOPs indicate the number of floating-point operations (in billions) per forward pass. Note: Models marked with “†” are quoted directly from their respective publications and may have been measured on different hardware. The values for our FDI-VSR model were measured on an NVIDIA A6000 GPU.

As shown in Table 2, FDI-VSR requires fewer FLOPs than most recent approaches and runs at 22.54 FPS (about 44 ms per frame) on an NVIDIA A6000 GPU, although several competing figures are quoted from their publications and may have been measured on different hardware. This efficiency arises from our lightweight spatiotemporal alignment (STFEM) and frequency–spatial fusion (FSIM), both of which improve performance without incurring heavy overhead. Consequently, FDI-VSR approaches real-time throughput while delivering superior reconstruction quality, making it well suited for practical video enhancement scenarios.
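Throughput figures such as 22.54 FPS (1000 / 22.54 ≈ 44 ms per output frame) depend on how timing is done. A minimal measurement helper might look like the sketch below. It assumes a hypothetical `run_frame` callable that produces one super-resolved frame and blocks until the work is finished; for GPU code this means synchronizing the device before reading the clock, otherwise asynchronous kernel launches make the measured time too short.

```python
import time
from typing import Callable


def measure_fps(run_frame: Callable[[], object], n_frames: int = 100,
                warmup: int = 10) -> float:
    """Average output frames per second, excluding warm-up iterations."""
    for _ in range(warmup):
        run_frame()                      # absorb one-time costs (allocation, tuning)
    start = time.perf_counter()
    for _ in range(n_frames):
        run_frame()
    elapsed = time.perf_counter() - start
    return n_frames / elapsed


calls = []
fps = measure_fps(lambda: calls.append(sum(range(1000))), n_frames=50, warmup=5)
print(f"{fps:.1f} FPS, {1000 / fps:.3f} ms per frame")
```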

4.6. Ablation Studies

Comprehensive ablation experiments were conducted to investigate the contribution of individual components within our framework. The studies examined the impact of various submodules in the STFEM, the influence of the number of residual channel attention blocks (RCABs) in temporal fusion, the benefit of substituting a standard convolution layer with our FSIM, and the difference between Deformable Convolution v1 and v2.

Effect of STFEM Submodules: Table 3 presents an ablation study evaluating the contributions of individual submodules in the proposed STFEM, based on the HMANet SISR backbone. The first row corresponds to the baseline model, where none of the submodules are applied. In the first variant (second row of Table 3), only the spatial aggregation submodule is activated, utilizing shallow features from neighboring frames without offset guidance, and integrating them using a convolution. This setting provides only marginal improvement over the baseline. The second variant (third row of Table 3) introduces the offset estimation module, which leverages both the center frame and neighboring frames to guide the spatial aggregation. This results in a substantial boost in performance—e.g., on the Vid4 dataset, the PSNR increases from 25.77 dB to 26.95 dB. Finally, the complete variant (fourth row of Table 3) incorporates all submodules, including the temporal aggregation unit, channel-wise attention, and progressive channel shrinking. This variant achieves the best performance, highlighting the effectiveness of motion-adaptive alignment and attention-driven temporal feature fusion in enhancing video reconstruction quality.

Table 3.

Ablation study on STFEM (HMANet-based) on Vid4 and SPMCs.

These results confirm that both motion-adaptive alignment and temporal feature fusion contribute meaningfully to performance gains in video reconstruction tasks.

Number of RCABs in Temporal Aggregation: Table 4 details the impact of varying the number of RCAB blocks. An increase from one to four RCAB layers leads to consistent improvements in both PSNR and SSIM, suggesting that a deeper channel attention mechanism effectively fuses temporal information, although incremental gains diminish as the number of blocks increases.

Table 4.

Effect of RCAB block repetitions.

The performance improvements observed with the addition of more RCAB blocks confirm the effectiveness of deeper channel attention in consolidating temporal information.

Frequency–Spatial Integration Module (FSIM) vs. Standard Convolution: Table 5 compares a standard convolution layer with our proposed FSIM. The FSIM provides a notable increase in PSNR and SSIM, demonstrating its ability to capture global frequency-domain features that enhance high-frequency detail recovery.

Table 5.

Comparison of standard convolution vs. FSIM.

The enhancements achieved by the FSIM indicate that incorporating global frequency-domain information is critical for recovering subtle textures and ensuring structural consistency.

Deformable Convolution Versions: Table 6 presents a comparison between Deformable Convolution v1 and v2. The switch to v2, which features learnable modulation scalars for finer control, yields modest yet consistent improvements, particularly in challenging scenarios involving complex motion.

Table 6.

Comparison of deformable convolution versions.

The improved performance observed when using DCN v2 suggests that the learnable modulation scalars enhance the alignment capability, especially in cases of non-rigid or complex motion.
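The difference between the two versions lies in how the sampled values are combined. Given samples x_k = x(p0 + p_k + dp_k) already read at the offset positions, DCN v1 computes Σ_k w_k·x_k, while DCN v2 multiplies each term by a modulation scalar m_k = sigmoid(l_k) in (0, 1) predicted alongside the offsets, so the network can also down-weight unreliable samples such as those near occlusions. The sample values and logits below are illustrative only.

```python
import math
from typing import Optional, Sequence


def combine_samples(weights: Sequence[float], samples: Sequence[float],
                    mask_logits: Optional[Sequence[float]] = None) -> float:
    """DCN v1 if mask_logits is None, otherwise DCN v2 with sigmoid modulation."""
    if mask_logits is None:
        return sum(w * s for w, s in zip(weights, samples))
    masks = [1.0 / (1.0 + math.exp(-logit)) for logit in mask_logits]  # m_k in (0, 1)
    return sum(w * m * s for w, m, s in zip(weights, masks, samples))


w = [1.0 / 9] * 9
samples = [float(k) for k in range(9)]                 # stand-ins for bilinear samples
v1 = combine_samples(w, samples)
v2_neutral = combine_samples(w, samples, [0.0] * 9)    # every m_k = 0.5
v2_gated = combine_samples(w, samples, [-20.0] * 4 + [20.0] * 5)  # mute first four taps
print(v1, v2_neutral, v2_gated)
```

Setting every modulation scalar to 1 recovers DCN v1 exactly, so v2 adds per-sample gating on top of the learned offsets rather than replacing them.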

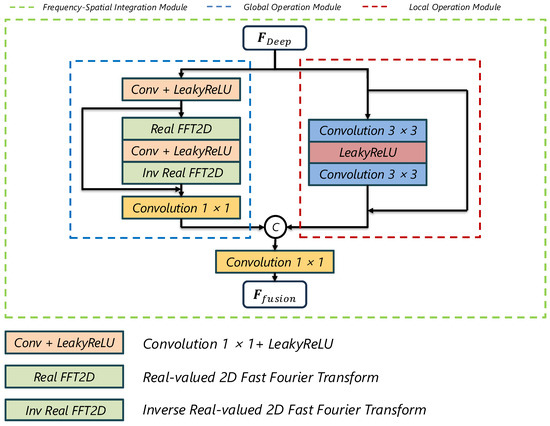

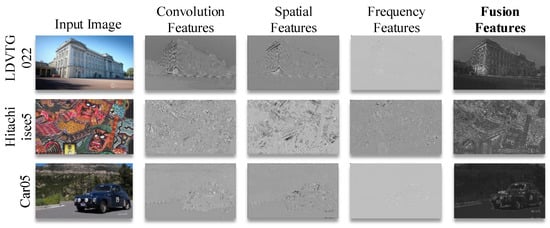

4.7. Visualization of Temporal Flow and Frequency Features

To further illustrate how our proposed modules enhance VSR performance, we visualize temporal flow alignment and attention maps in Figure 8.

Figure 8.

Visualization of temporal flow alignment and attention map.

The circular color chart encodes motion direction and magnitude through hue and saturation, where darker tones indicate larger displacements. Offset flow fields for neighboring frames (t − 2, t − 1, t + 1, t + 2) relative to the center frame t demonstrate adaptive spatial alignment. Temporal attention maps reveal selective focus on regions with stable textures and edges, showing how the model prioritizes informative pixels across frames. Brighter areas in the attention maps correspond to regions where the network assigns higher importance during temporal fusion, particularly around moving objects and structural boundaries.
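A common way to build such a color chart maps the displacement angle to hue and its magnitude (relative to a chosen maximum) to saturation, as sketched below with Python's `colorsys`. This is an assumed convention; the exact mapping behind Figure 8, where darker tones mark larger displacements, may differ.

```python
import colorsys
import math
from typing import Tuple


def flow_to_rgb(dx: float, dy: float, max_mag: float) -> Tuple[float, float, float]:
    """Color one offset vector: hue = direction, saturation = relative magnitude."""
    hue = (math.atan2(dy, dx) % (2 * math.pi)) / (2 * math.pi)   # angle mapped to [0, 1)
    sat = min(math.hypot(dx, dy) / max_mag, 1.0)                 # clipped at max_mag
    return colorsys.hsv_to_rgb(hue, sat, 1.0)


print(flow_to_rgb(0.0, 0.0, 2.0))   # no motion -> white
print(flow_to_rgb(2.0, 0.0, 2.0))   # full-strength motion along +x -> red
print(flow_to_rgb(0.0, 1.0, 2.0))   # half-strength motion along +y
```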

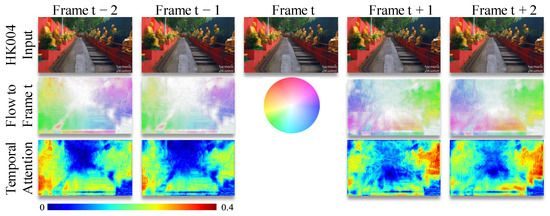

Figure 9 compares FSIM-fused features with standard convolutional features. The FSIM’s fused features exhibit enhanced high-frequency details compared to standard convolutional features. Local spatial features preserve edge structures while frequency-domain components capture periodic patterns and global context. This synergy enables better reconstruction of both textured surfaces (e.g., brick walls) and regular geometries (e.g., window frames). The frequency-domain analysis particularly improves recovery of repeating patterns and low-contrast details that are challenging for spatial-only operations.

Figure 9.

Comparison between FSIM-fused features and standard convolutional features.

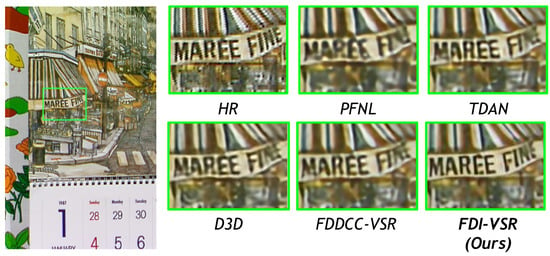

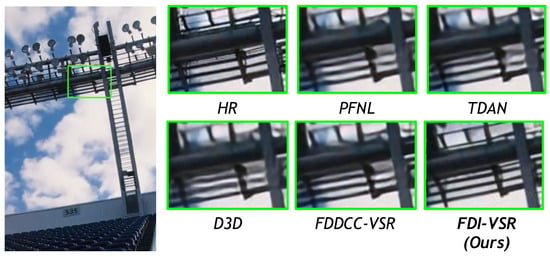

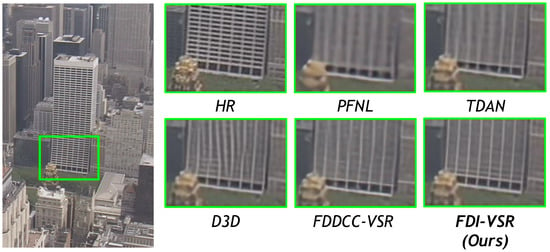

4.8. Qualitative Performance Evaluation

We compare our FDI-VSR framework against several state-of-the-art (SOTA) methods—PFNL, TDAN, D3D, and FDDCC-VSR—on a variety of challenging scenes. Overall, our approach more effectively handles complex motion and recovers subtle details such as text, thin structures, and high-frequency textures. These improvements arise from two key factors: dynamic offset estimation, which adaptively aligns frames without relying on explicit optical flow, and frequency-domain fusion, which captures global context and enhances high-frequency components. The combination of these modules allows FDI-VSR to produce sharper edges, clearer text, and fewer artifacts than competing methods.

In Figure 10, the competing methods struggle to restore the small lettering on the shop sign, leading to blurring or noise around the letters. FDI-VSR recovers more legible text and cleaner edges, indicating robust temporal alignment and effective frequency-domain enhancement.

Figure 10.

Qualitative comparison of Vid4 (Calendar) dataset for ×4 video SR.

In Figure 11, thin metallic bars and subtle gradients make it difficult to preserve straight lines and avoid jagged artifacts. FDI-VSR yields smoother edges and fewer distortions than PFNL or TDAN, reflecting the benefit of our dual-domain fusion in maintaining structural coherence.

Figure 11.

Qualitative comparison of UDM10 (002) dataset for ×4 video SR.

In Figure 12, the grid structure of the high-rise building is difficult to align because it combines high-frequency detail with motion. The competing methods tend to produce distorted or discontinuous grid lines, whereas FDI-VSR reconstructs the grid with little visible distortion, yielding sharp, clean vertical and horizontal lines. This highlights the accuracy of FDI-VSR's motion compensation and the effectiveness of frequency-domain fusion in preserving structural features.

Figure 12.

Qualitative comparison of SPMCs (HKVTG) dataset for ×4 video SR.

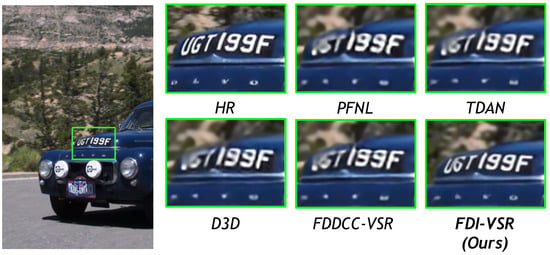

In Figure 13, the license plate demands precise reconstruction of alphanumeric characters. FDI-VSR produces visibly sharper characters than the baseline methods, highlighting the synergy between accurate frame alignment and frequency-domain analysis in resolving fine details.

Figure 13.

Qualitative comparison of the SPMCs (car05) dataset for ×4 video SR.

Overall, these visual results indicate that FDI-VSR outperforms the compared methods across diverse scenarios. By avoiding explicit optical flow and incorporating global frequency cues, our framework reconstructs sharper textures and clearer text with better temporal consistency, demonstrating strong practical utility for video enhancement tasks.

5. Conclusions

In this paper, we presented FDI-VSR, a novel framework for adapting single-image super-resolution (SISR) models to the video super-resolution (VSR) task by integrating two key modules: the Spatiotemporal Feature Extraction Module (STFEM) and the Frequency–Spatial Integration Module (FSIM). The STFEM employs dynamic offset estimation, spatial alignment, and multi-stage temporal fusion via residual channel attention blocks (RCABs) to capture complex inter-frame motion, while the FSIM applies 2D FFT-based processing to extract global contextual information and improve the recovery of high-frequency details. Together, these components broaden the network's receptive field and enable more accurate reconstruction of subtle textures and fine structures.
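For reference, a residual channel attention block (RCAB) in the form introduced by RCAN can be sketched in NumPy as follows: conv-ReLU-conv, a squeeze-and-excitation style channel gate, and an identity skip. Biases are omitted, and the channel count, reduction ratio r, and random weights are illustrative assumptions. How the STFEM stacks and configures these blocks is not reproduced here.

```python
import numpy as np


def conv3x3(x, kernel):
    """3x3 convolution with zero padding; x: (C, H, W), kernel: (C_out, C, 3, 3)."""
    _, H, W = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((kernel.shape[0], H, W))
    for dy in range(3):
        for dx in range(3):
            out += np.einsum("oc,chw->ohw", kernel[:, :, dy, dx], padded[:, dy:dy + H, dx:dx + W])
    return out


def channel_attention(x, w_down, w_up):
    """Squeeze-and-excitation style gate: one weight in (0, 1) per channel."""
    squeezed = x.mean(axis=(1, 2))                        # global average pooling -> (C,)
    hidden = np.maximum(w_down @ squeezed, 0.0)           # 1x1 conv C -> C/r, then ReLU
    gate = 0.5 * (1.0 + np.tanh(0.5 * (w_up @ hidden)))   # 1x1 conv C/r -> C, then sigmoid
    return x * gate[:, None, None], gate


def rcab(x, k1, k2, w_down, w_up):
    """Residual channel attention block: conv-ReLU-conv, channel attention, skip."""
    body = conv3x3(np.maximum(conv3x3(x, k1), 0.0), k2)
    body, gate = channel_attention(body, w_down, w_up)
    return x + body, gate


rng = np.random.default_rng(0)
C, r = 8, 4  # channels and reduction ratio (illustrative values)
x = rng.standard_normal((C, 10, 10))
k1 = 0.1 * rng.standard_normal((C, C, 3, 3))
k2 = 0.1 * rng.standard_normal((C, C, 3, 3))
w_down = rng.standard_normal((C // r, C))
w_up = rng.standard_normal((C, C // r))
y, gate = rcab(x, k1, k2, w_down, w_up)
print(y.shape, gate.shape)  # (8, 10, 10) (8,)
```

Setting the first convolution's weights to zero reduces the block to the identity mapping, which shows how the residual path lets each RCAB refine its input features rather than replace them.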

Extensive experiments on multiple benchmark datasets (Vid4, SPMCs, REDS4, and UDM10) demonstrate that our approach outperforms conventional VSR baselines such as TDAN and achieves performance competitive with recent state-of-the-art methods. Moreover, these gains come with a significantly lower parameter count, which reduces computational complexity, a critical factor for deployment in resource-constrained environments.

Future work will focus on extending the proposed approach to video frame interpolation, with the aim of developing a unified space–time video enhancement network that simultaneously improves spatial resolution and increases the temporal frame rate. We also plan to explore advanced attention mechanisms and refined loss functions to further boost reconstruction accuracy, particularly in challenging scenarios with complex motion patterns and variable lighting conditions.

Author Contributions

Methodology, D.L.; Investigation, J.C.; Writing—original draft, D.L.; Writing—review & editing, J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Republic of Korea Government (Ministry of Science and ICT (MSIT)) through the research fund of the National Research Foundation of Korea (NRF) and Institute of Information and Communications Technology Planning and Evaluation (IITP) under grants NRF-2021R1C1C2095450, RS-2024-00437756, and RS-2023-00242528.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This study uses publicly available datasets. The Vimeo-90K dataset used for training is available at http://toflow.csail.mit.edu/. The evaluation datasets are accessible via the following links (all links accessed and verified on 9 April 2025): Vid4 (https://people.csail.mit.edu/celiu/CVPR2011/videoSR.zip), SPMCs (https://github.com/jiangsutx/SPMC_VideoSR), REDS4 (https://seungjunnah.github.io/Datasets/reds.html), and UDM10 (https://drive.google.com/file/d/1G4V4KZZhhfzUlqHiSBBuWyqLyIOvOs0W/).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Wang, L.; Wang, Y.; Li, Z.; Zhao, D.; Lin, J.; Tang, S.; Zhang, G.; Zhang, Y. Deep Learning for Video Super-Resolution: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7853–7872.

2. Dong, C.; Loy, C.; He, K.; Tang, X. Learning a Deep Convolutional Network for Image Super-Resolution. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 184–199.

3. Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image Restoration Using Swin Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, BC, Canada, 11–17 October 2021; pp. 1833–1844.

4. Caballero, J.; Ledig, C.; Aitken, A.; Agustin, A.; Totz, J.; Wang, Z.; Shi, W. Real-Time Video Super-Resolution with Spatio-Temporal Networks and Motion Compensation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2848–2857.

5. Tian, Y.; Fan, Y.; Loy, C.; Wang, J. TDAN: Temporally-Deformable Alignment Network for Video Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3360–3369.

6. Liu, C.; Sun, D. On Bayesian Adaptive Video Super-Resolution. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 346–360.

7. Zhang, L.; Lu, Z.; Liao, Q. Optical Flow Super-Resolution Based on Image Guidance Using Convolutional Neural Network. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 2622–2635.

8. Lee, K. Single Image Super-Resolution Using Spatial LSTM. Master's Thesis, Seoul National University, Seoul, Republic of Korea, 2017.

9. Lee, Y.; Cho, S.; Jun, D. Video Super-Resolution Method Using Deformable Convolution-Based Alignment Network. Sensors 2022, 22, 8476.

10. Zhu, Y.; Li, G. A Lightweight Recurrent Grouping Attention Network for Video Super-Resolution. Sensors 2023, 23, 8574.

11. Lu, M.; Zhang, P. Real-World Video Super-Resolution with a Degradation-Adaptive Model. Sensors 2024, 24, 2211.

12. Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Han, H.; Wei, Y. Deformable Convolutional Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 764–773.

13. Xu, J.; Guo, Q.; Wang, H.; Zhang, Y.; Dong, C.; Zhang, L. Fourier-Based Global Convolution for Image Restoration. IEEE Trans. Image Process. 2021, 30, 5674–5688.

14. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

15. Zhang, Y.; He, K.; Sun, J. Image Super-Resolution Using Very Deep Residual Channel Attention Networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 286–301.

16. Tao, X.; Gao, H.; Liao, R.; Liu, J.; Chen, X.; Jia, J. Detail-Revealing Deep Video Super-Resolution. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4472–4480.

17. Xue, T.; Chen, B.; Fang, C.; Wang, M.; Wang, Z.; Kuo, C.C. Video Enhancement with Task-Oriented Flow. Int. J. Comput. Vis. (IJCV) 2019, 127, 1106–1125.

18. Yi, P.; Wang, Z.; Jiang, K.; Ma, L.; Zheng, W.; Wang, J. Progressive Fusion Video Super-Resolution Network via Exploiting Non-Local Spatio-Temporal Correlations. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3106–3115.

19. Fattal, R. Image Upsampling via Imposed Edge Statistics. ACM Trans. Graph. (TOG) 2007, 26, 95.

20. Freeman, W.; Jones, T.; Pasztor, E. Example-Based Super-Resolution. IEEE Comput. Graph. Appl. 2002, 22, 56–65.

21. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

22. Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K. Enhanced Deep Residual Networks for Single Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1132–1140.

23. Ledig, C.; Theis, L.; Huszar, F.; Caballero, C.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; Shi, W. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

24. Dou, B.; Qin, Z.; Yan, X.; Cao, Y.; Jiang, J.; Fan, D.P.; Cheng, M.M. HMANet: Hybrid Multi-Axis Aggregation Network for Image Super-Resolution. arXiv 2024, arXiv:2405.05001.

25. Sajjadi, M.; Vemulapalli, R.; Vidal, R. Frame-Recurrent Video Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6626–6634.

26. Wang, X.; Chan, K.C.; Yu, K.; Loy, C.C.; Yuan, C. EDVR: Video Restoration With Enhanced Deformable Convolutional Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019.

27. Zhu, X.; Qi, H.; Zhu, J.; Li, Y.; Lin, S.; Huang, G.; Bai, S. Deformable ConvNets v2: More Deformable, Better Results. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9497–9506.

28. Maas, A.; Hannun, A.; Ng, A. Rectifier Nonlinearities Improve Neural Network Acoustic Models. In Proceedings of the International Conference on Machine Learning (ICML), Atlanta, GA, USA, 16–21 June 2013; Volume 28, pp. 3–8.

29. Chi, Y.; Lin, Y.; Liu, H.; Han, S. Fast Fourier Convolution. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 6–12 December 2020; Volume 33, pp. 4479–4488.

30. Bracewell, R. The Fourier Transform and Its Applications, 2nd ed.; McGraw-Hill: New York, NY, USA, 1986.

31. Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

32. Haris, M.; Shakhnarovich, G.; Ukita, N. Deep Back-Projection Networks for Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 1664–1673.

33. Wang, L.; Guo, Y.; Liu, L.; Cao, Z.; Cui, Y.; Wang, J. Deep Video Super-Resolution Using HR Optical Flow Estimation. IEEE Trans. Image Process. 2020, 29, 4323–4336.

34. Xiang, X.; Tian, Y.; Zhang, Y.; Fu, Y.; Loy, C.; Lin, D. Zooming Slow-Mo: Fast and Accurate One-Stage Space-Time Video Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3370–3379.

35. Ying, X.; Wang, L.; Wang, Y.; Tan, X.; Wen, S.; Yuan, Y.; Tian, Q. Deformable 3D Convolution for Video Super-Resolution. IEEE Signal Process. Lett. 2020, 27, 1500–1504.

36. Yi, P.; Wang, Z.; Jiang, K.; Ma, L.; Zheng, W.; Wang, J. Omniscient Video Super-Resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 4429–4438.

37. Xu, G.; Xu, J.; Li, Z.; Guo, Y.; Xu, J.; Zhang, Y.; Yan, X.; Dong, C.; Loy, C.; Dai, B.; et al. Temporal Modulation Network for Controllable Space-Time Video Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 6388–6397.

38. Geng, Z.; Xie, L.; Zhou, D.; Wang, L.; Lu, X.; Wang, J. RSTT: Real-Time Spatial Temporal Transformer for Space-Time Video Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17441–17451.

39. Wang, H.; Xiang, X.; Tian, Y.; Yi, W.; Liao, Q. STDAN: Deformable Attention Network for Space-Time Video Super-Resolution. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 10606–10616.

40. Wang, X.; Yang, X.; Li, H.; Li, T. FDDCC-VSR: A Lightweight Video Super-Resolution Network Based on Deformable 3D Convolution and Cheap Convolution. Vis. Comput. 2024, 41, 3581–3593.

41. Huang, G.; Li, N.; Liu, J.; Zhang, M.; Zhang, L.; Li, J. LightVSR: A Lightweight Video Super-Resolution Model with Multi-Scale Feature Aggregation. Appl. Sci. 2025, 15, 1506.

42. Haris, M.; Shakhnarovich, G.; Ukita, N.; Li, K.; Sohn, K. Recurrent Back-Projection Networks For Video Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1920–1929.

43. Chan, K.C.; Zhou, S.; Xu, X.; Loy, C.C. BasicVSR++: Improving Video Super-Resolution with Contrastive Backward Propagation. IEEE Trans. Image Process. 2022, 31, 7203–7216.

44. Liang, S.; Fu, J.; Cao, D.; Huang, W.; Zhang, X.; Tian, Q. VRT: Video Restoration Transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5794–5803.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).