Invisible CMOS Camera Dazzling for Conducting Adversarial Attacks on Deep Neural Networks

Abstract

1. Introduction

- The peak irradiance is sufficient to dazzle the sensor temporarily;

- The average irradiance remains below the sensitivity threshold of the human eye.
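
The two conditions above are linked through the duty cycle of the pulse train. As a minimal sketch, assuming an ideal rectangular pulse train (so the time-averaged irradiance equals the peak irradiance multiplied by the duty cycle), the check can be written as follows. The function names and threshold values are hypothetical placeholders in arbitrary units, not values taken from the paper.

```python
def average_irradiance(peak_irradiance, duty_cycle):
    """Time-averaged irradiance of an ideal rectangular pulse train."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in [0, 1]")
    return peak_irradiance * duty_cycle


def satisfies_conditions(peak_irradiance, duty_cycle,
                         dazzle_threshold, eye_threshold):
    """True if the peak dazzles the sensor while the average stays
    below the (assumed) sensitivity threshold of the human eye."""
    dazzles_sensor = peak_irradiance >= dazzle_threshold
    invisible = average_irradiance(peak_irradiance, duty_cycle) < eye_threshold
    return dazzles_sensor and invisible


# Hypothetical numbers, for illustration only.
print(satisfies_conditions(100.0, 0.001, dazzle_threshold=50.0, eye_threshold=1.0))
print(satisfies_conditions(100.0, 0.5, dazzle_threshold=50.0, eye_threshold=1.0))
```

Under these assumed thresholds, the same peak irradiance passes at a short duty cycle and fails at a long one, because only the average changes.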

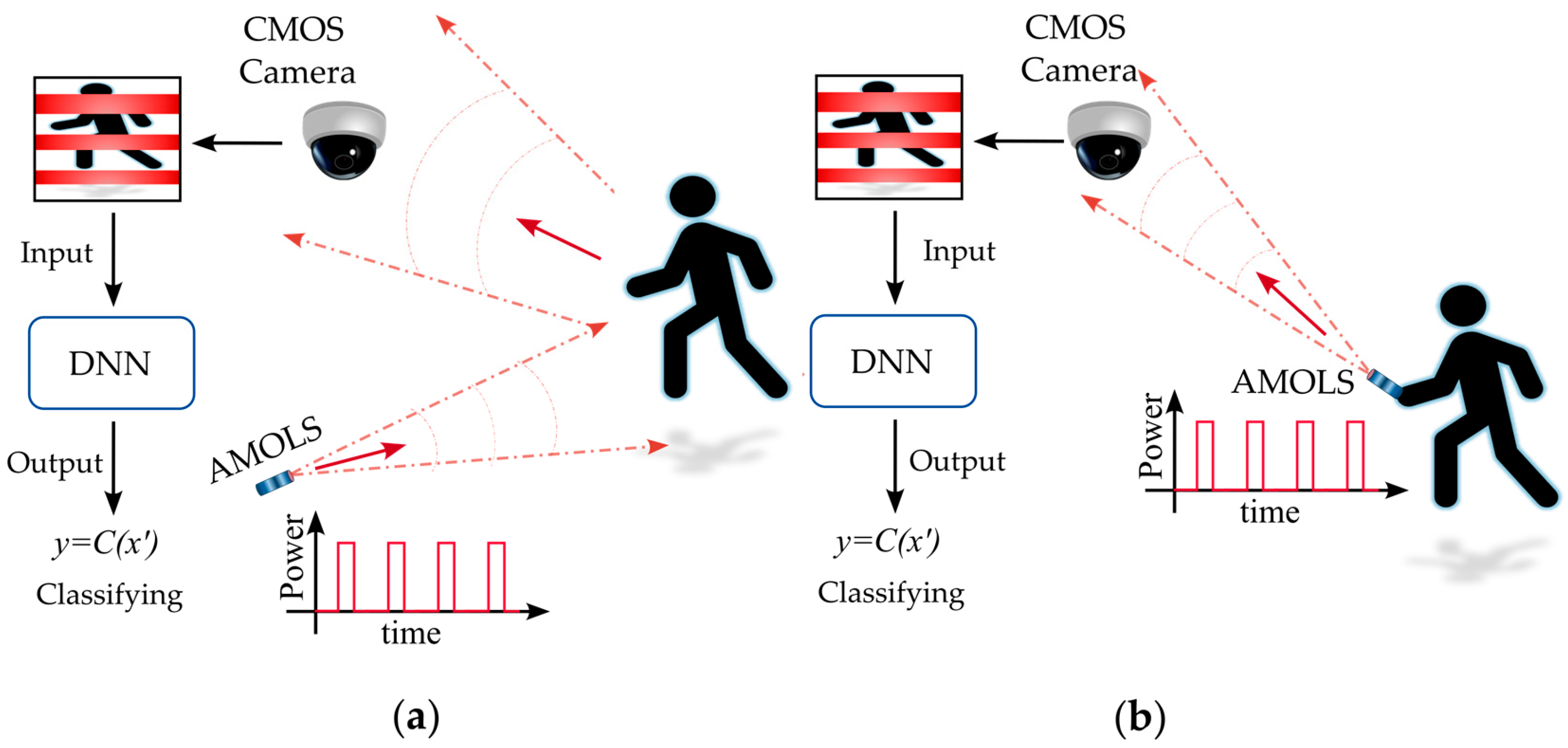

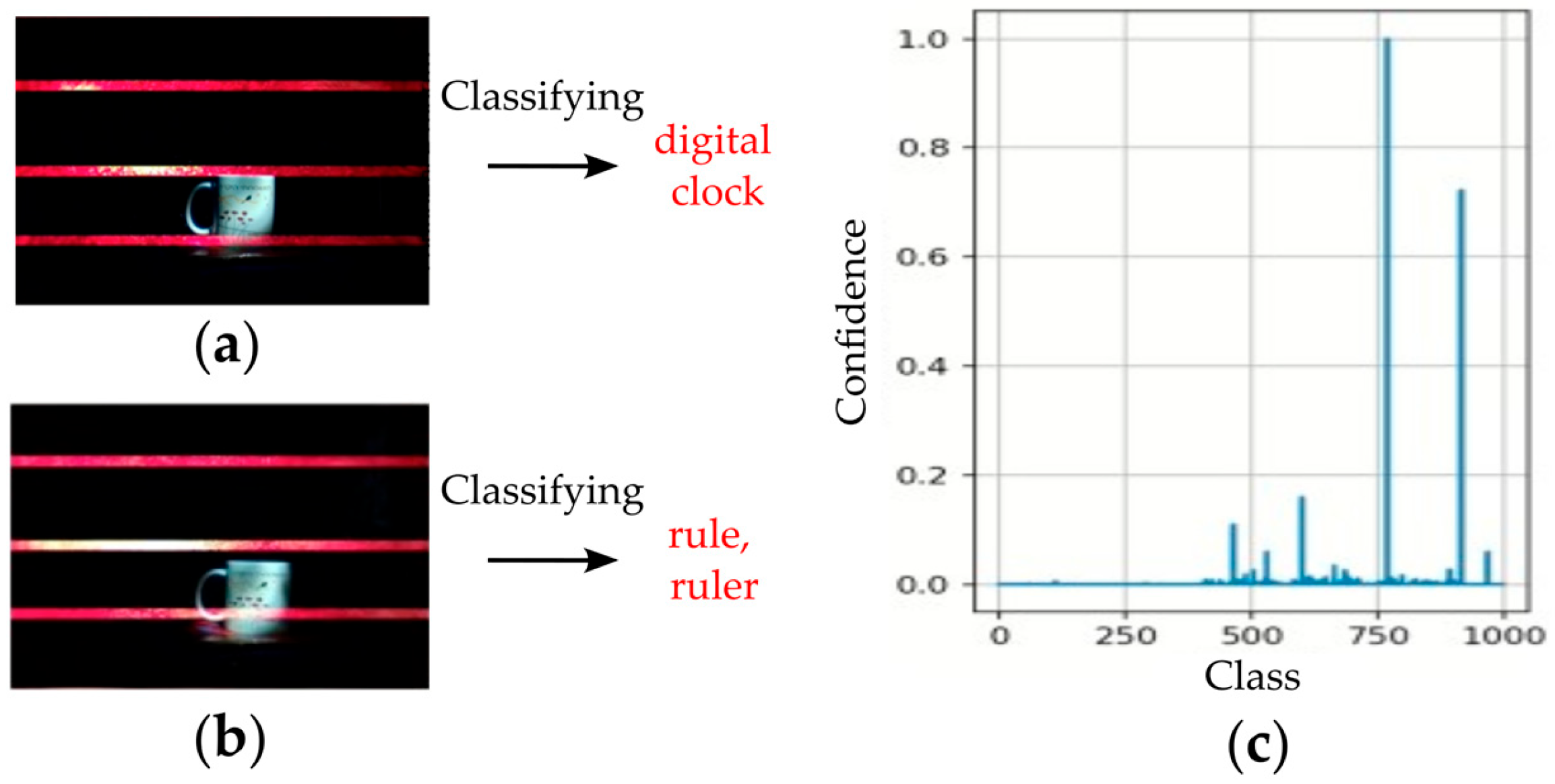

- We propose a physical-domain adversarial attack on DNNs that receive images from a CMOS camera. The attack directs a light source toward the camera, yet the projected light goes unnoticed by observers in the scene.

- We introduce an optical attack that is based on dazzling a camera sensor by sending short pulses. We investigate the effect of the projected pulses on the image captured by the CMOS camera. We evaluate the irradiance required to attack the image.

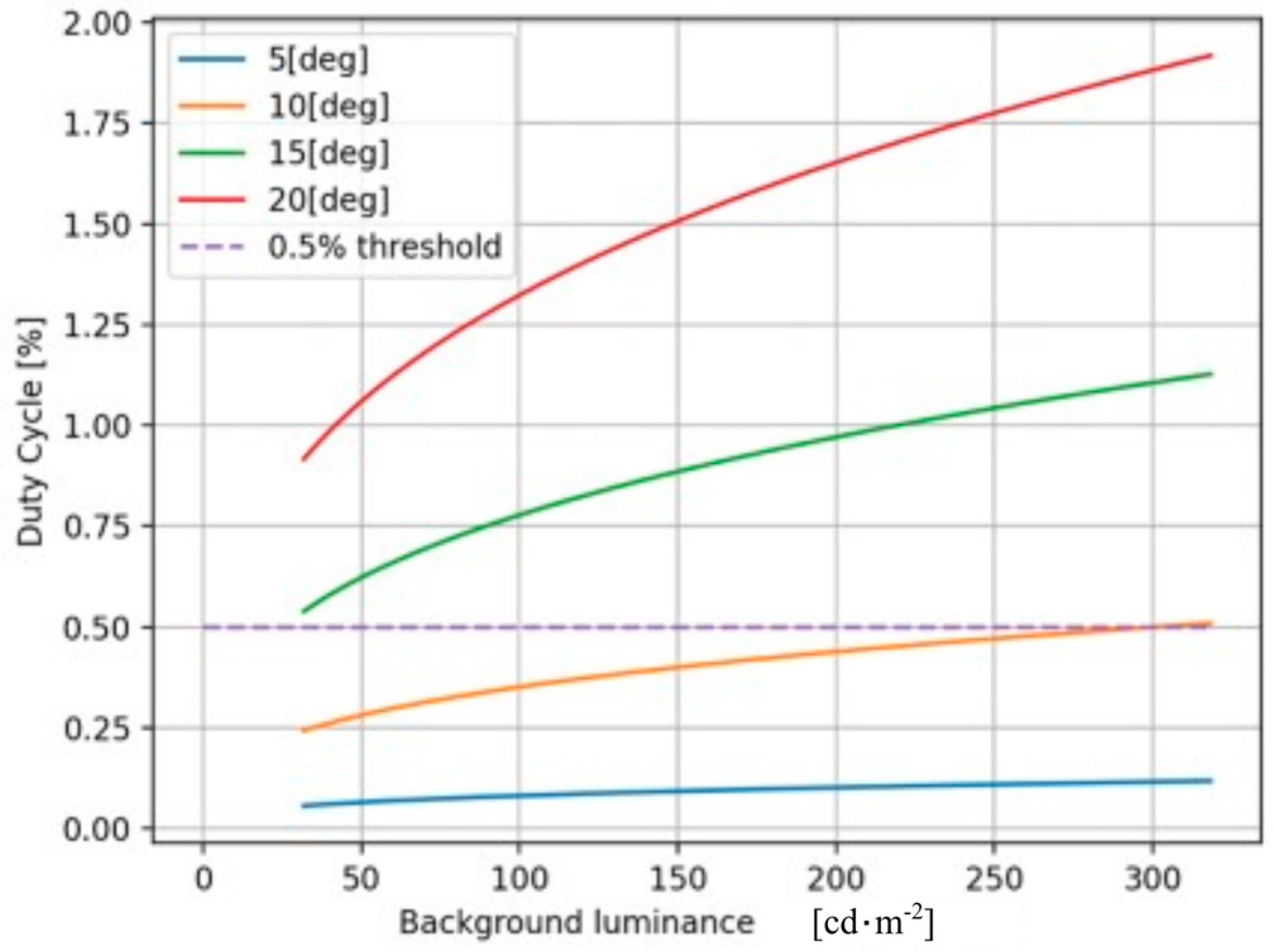

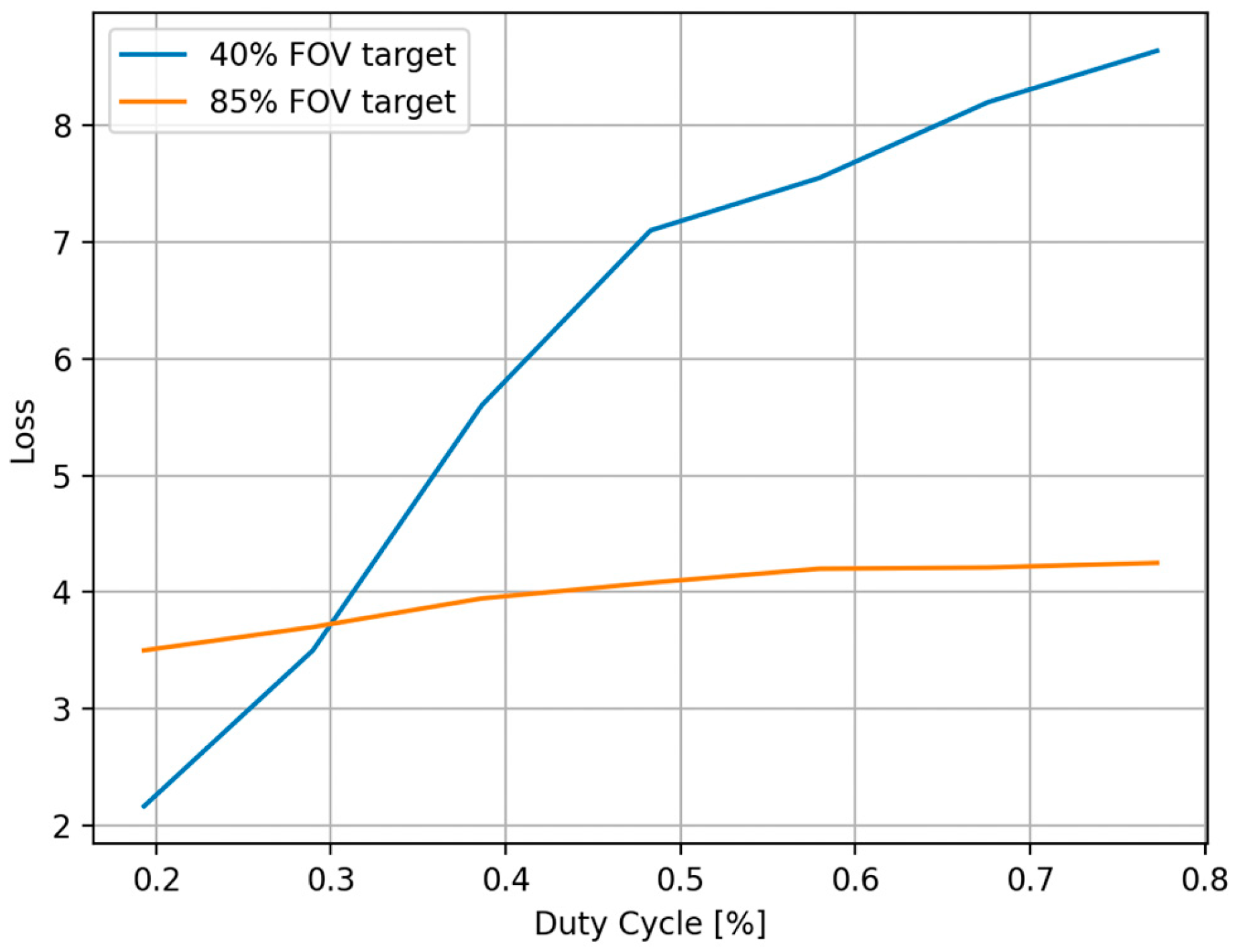

- We explore the relationship between the human eye’s ability to detect the attacking light source directed at the camera and the disruption of DNN performance caused by the pulsed laser beam. We analyze the photopic conditions under which the attacking light source remains invisible to human observers while still disrupting the acquired image enough to mislead the classifier model.

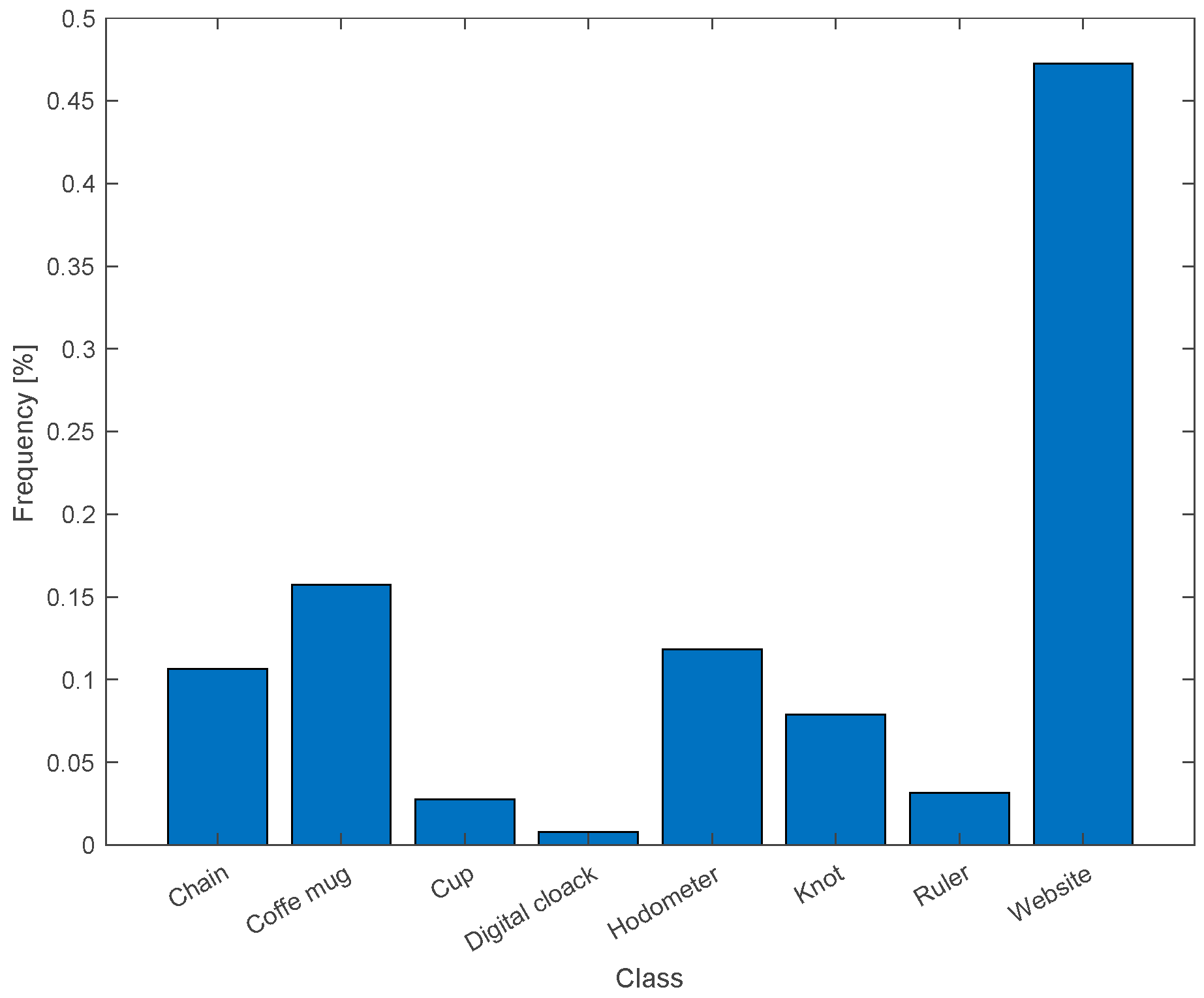

- We evaluate the trade-off between the success of DNN attacks caused by dazzling pulses and their invisibility to the human eye. Our findings indicate that the duty cycle of the light source can be adjusted to manage the balance between the attack’s success rate and the level of concealment required.

- We present simulated and real experimental results to demonstrate the effectiveness of our attack.
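
To illustrate how short pulses interact with a rolling-shutter readout, here is a toy simulation. It assumes that each sensor row starts its exposure a fixed delay after the previous row, and it marks a row as dazzled when its exposure window overlaps any pulse of a periodic pulse train. All names and parameter values are hypothetical, times are in integer microseconds, and this is a sketch under those assumptions rather than the authors' simulation model.

```python
def dazzled_rows(n_rows, row_delay, exposure, pulse_period, pulse_width):
    """Return a list of booleans, one per row, True if the row is hit.

    Row r is exposed during [r * row_delay, r * row_delay + exposure).
    Pulses occupy [k * pulse_period, k * pulse_period + pulse_width)
    for k = 0, 1, 2, ...
    """
    mask = []
    for row in range(n_rows):
        # Phase of the exposure start within the pulse period.
        phase = (row * row_delay) % pulse_period
        # The exposure starts while a pulse is still on...
        in_pulse = phase < pulse_width
        # ...or it lasts long enough to reach the start of the next pulse.
        reaches_next = phase + exposure > pulse_period
        mask.append(in_pulse or reaches_next)
    return mask


# Hypothetical settings: 1000 rows, 10 us row delay, 100 us exposure,
# 2000 us pulse period, 20 us pulse width (1% duty cycle).
mask = dazzled_rows(n_rows=1000, row_delay=10, exposure=100,
                    pulse_period=2000, pulse_width=20)
print(f"duty cycle: {20 / 2000:.1%}, rows affected: {sum(mask) / len(mask):.1%}")
```

In this toy configuration the fraction of affected rows is larger than the duty cycle, because each row integrates light over its whole exposure window. Varying `pulse_width` at a fixed `pulse_period` gives a rough feel for the duty-cycle trade-off described in the list above.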

2. Related Works

3. Materials and Methods

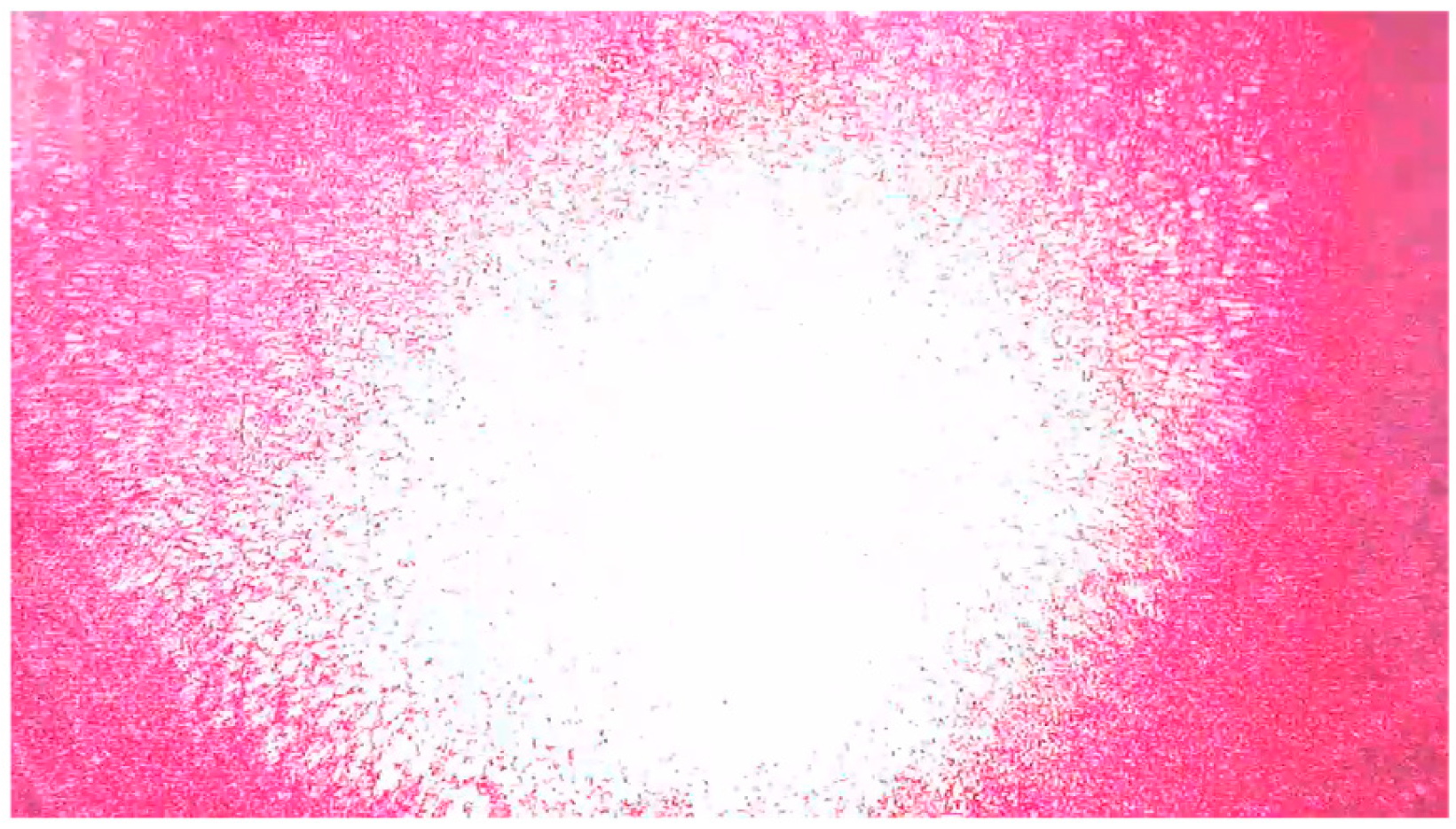

3.1. Dazzle Effect with Rolling Shutter Camera

3.2. Photopic Conditions for Invisibility

3.3. Generating the Physical Adversarial Attack

4. Results and Discussion

4.1. Effectiveness of the AMOLS

4.2. Real Experiments on Physical-World Adversarial Attack

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Physical World Attack | Attack Mechanism | Targeting Camera Sensors | Adversary Physical Access | Achievable Attack Success Rate | Invisibility Criterion |
|---|---|---|---|---|---|
| EM Injection [12] | CCD interface | ✓ | X (near distances) | a | ✓ |
| [13] | Spatial laser beam | X | X | b | X |
| CamData Lane [14] | Camera data lane | X | ✓ (camera interface) | c | |
| RS Backdoor Attack [18] | CMOS dazzling | ✓ | X | d | X f |
| Adversarial RS [19] | CMOS dazzling | ✓ | X | X f | |
| Our Attack | Invisible AMOLS | ✓ | X | e | ✓ |

References

1. Heaven, D. Why deep-learning AIs are so easy to fool. Nature 2019, 574, 163–166.

2. Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. In Proceedings of the International Conference on Learning Representations (ICLR), Banff, AB, Canada, 14–16 April 2014.

3. Yuan, X.; He, P.; Zhu, Q.; Li, X. Adversarial Examples: Attacks and Defenses for Deep Learning. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2805–2824.

4. Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

5. Fang, J.; Jiang, Y.; Jiang, C.; Jiang, Z.L.; Liu, C.; Yiu, S.M. State-of-the-art optical-based physical adversarial attacks for deep learning computer vision systems. Expert Syst. Appl. 2024, 250, 123761.

6. Wang, J.; Wang, C.; Lin, Q.; Luo, C.; Wu, C.; Li, J. Adversarial attacks and defenses in deep learning for image recognition: A survey. Neurocomputing 2022, 514, 162–181.

7. Wei, H.; Tang, H.; Jia, X.; Wang, Z.; Yu, H.; Li, Z.; Satoh, S.I.; Van Gool, L.; Wang, Z. Physical adversarial attack meets computer vision: A decade survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9797–9817.

8. Lovisotto, G.; Turner, H.; Sluganovic, I.; Strohmeier, M.; Martinovic, I. SLAP: Improving Physical Adversarial Examples with Short-Lived Adversarial Perturbations. arXiv 2020, arXiv:2007.04137.

9. Zhou, Z.; Tang, D.; Wang, X.; Han, W.; Lu, X.; Zhang, K. Invisible Mask: Practical attacks on face recognition with infrared. arXiv 2018, arXiv:1803.04683v1.

10. Athalye, A.; Engstrom, L.; Ilyas, A.; Kwok, K. Synthesizing Robust Adversarial Examples. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018.

11. Cui, J.; Guo, W.; Huang, H.; Lv, X.; Cao, H.; Li, H. Adversarial examples for vehicle detection with projection transformation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5632418.

12. Liu, Z.; Lin, F.; Ba, Z.; Lu, L.; Ren, K. MagShadow: Physical Adversarial Example Attacks via Electromagnetic Injection. IEEE Trans. Dependable Secur. Comput. 2025, 1–17.

13. Duan, R.; Mao, X.; Qin, A.K.; Chen, Y.; Ye, S.; He, Y.; Yang, Y. Adversarial laser beam: Effective physical-world attack to dnns in a blink. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 16062–16071.

14. Liu, W.; He, W.; Hu, B.; Chang, C.H. A practical man-in-the-middle attack on deep learning edge device by sparse light strip injection into camera data lane. In Proceedings of the 2022 IEEE 35th International System-on-Chip Conference (SOCC), Belfast, UK, 5–8 September 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

15. Wang, X.; Xu, Z.; Zhong, H.; Cheng, X.A.; Xing, Z.; Zhang, J. Fresnel Diffraction Model for Laser Dazzling Spots of Complementary Metal Oxide Semiconductor Cameras. Sensors 2024, 24, 5781.

16. Chia-Kai, L. Analysis and Compensation of Rolling Shutter Effect. IEEE Trans. Image Process. 2008, 17, 1323–1330.

17. Danakis, C.; Afgani, M.; Povey, G.; Underwood, I.; Haas, H. Using a CMOS Camera Sensor for Visible Light Communication. In Proceedings of the IEEE Globecom Workshops, Anaheim, CA, USA, 3–7 December 2012.

18. Li, H.; Wang, Y.; Xie, X.; Liu, Y.; Wang, S.; Wan, R.; Chau, L.P.; Kot, A.C. Light Can Hack Your Face! Black-box Backdoor Attack on Face Recognition Systems. arXiv 2020, arXiv:2009.06996.

19. Sayles, A.; Hooda, A.; Gupta, M.; Chatterjee, R.; Fernandes, E. Invisible Perturbations: Physical Adversarial Examples Exploiting the Rolling Shutter Effect. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14666–14675.

20. Chen, Z.; Lin, P.; Jiang, Z.L.; Wei, Z.; Yuan, S.; Fang, J. An illumination modulation-based adversarial attack against automated face recognition system. In Proceedings of the Information Security and Cryptology: 16th International Conference, Inscrypt 2020, Guangzhou, China, 11–14 December 2020; Revised Selected Papers. Springer International Publishing: Cham, Switzerland, 2021; pp. 53–69.

21. Shen, Y.; Cheng, Y.; Lin, Y.; Long, S.; Jiang, C.; Li, D.; Dai, S.; Jiang, Y.; Fang, J.; Jiang, Z.L.; et al. MLIA: Modulated LED illumination-based adversarial attack on traffic sign recognition system for autonomous vehicle. In Proceedings of the 2022 IEEE International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Wuhan, China, 9–11 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1020–1027.

22. Fang, J.; Yang, Z.; Dai, S.; Jiang, Y.; Jiang, C.; Jiang, Z.L.; Liu, C.; Yiu, S.M. Cross-task physical adversarial attack against lane detection system based on LED illumination modulation. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Xiamen, China, 13–15 October 2023; Springer Nature: Singapore, 2023; pp. 478–491.

23. Köhler, S.; Lovisotto, G.; Birnbach, S.; Baker, R.; Martinovic, I. They See Me Rollin’: Inherent Vulnerability of the Rolling Shutter in CMOS Image Sensors. In Proceedings of the 37th Annual Computer Security Applications Conference, Virtual, 6–10 December 2021; pp. 399–413.

24. Yan, C.; Xu, Z.; Yin, Z.; Mangard, S.; Ji, X.; Xu, W.; Zhao, K.; Zhou, Y.; Wang, T.; Gu, G.; et al. Rolling colors: Adversarial laser exploits against traffic light recognition. In Proceedings of the 31st USENIX Security Symposium (USENIX Security 22), Boston, MA, USA, 10–12 August 2022; pp. 1957–1974.

25. Nilson, D.G.; Hill, D.N.; Evans, J.C. Thomson Scattering Stray Light Reduction Techniques Using a CCD Camera; Lawrence Livermore National Laboratory: Livermore, CA, USA, 1997.

26. Schleijpen, R.H.M.A.; Dimmeler, A.; Eberle, B.; van den Heuvel, J.C.; Mieremet, A.L.; Beckman, H.; Mellier, B. Laser Dazzling of Focal Plane Array Cameras. In Proceedings of the Defense and Security Symposium, Orlando, FL, USA, 10 October 2007.

27. Schleijpen, H.M.A.; Carpenter, S.R.; Mellier, B.; Dimmeler, A. Imaging Seeker Surrogate for IRCM evaluation. In Proceedings of the Optics/Photonics in Security and Defence, Stockholm, Sweden, 5 October 2006.

28. Santos, C.N.; Chrétien, S.; Merella, L.; Vandewal, M. Visible and near-infrared laser dazzling of CCD and CMOS cameras. In Proceedings of the Technologies for Optical Countermeasures XV, Berlin, Germany, 9 October 2018.

29. Eberle, B.; Kinerk, W.T.; Koerber, M.; Öhgren, J.; Ritt, G.; Santos, C.N.; Schwarz, B.; Steinvall, O.; Tipper, S.M.; Vandewal, M.; et al. NATO SET-249 joint measurement campaign on laser dazzle effects in airborne scenarios. In Proceedings Volume 11161, Technologies for Optical Countermeasures XVI; SPIE: Bellingham, WA, USA, 2019; pp. 119–138.

30. Blackwell, H.R. Contrast Thresholds of the Human Eye. J. Opt. Soc. Am. 1946, 36, 624–643.

31. Adrian, W. Visibility of targets: Model for calculation. Light Res. Technol. 1989, 21, 181–188.

32. Paschotta, R. RP Photonics Encyclopedia. Available online: https://www.rp-photonics.com/radiance.html (accessed on 1 April 2025).

33. Williamson, C.A.; McLin, L.N. Nominal ocular dazzle distance (NODD). Appl. Opt. 2015, 54, 1564–1572.

34. McLin, L.N.; Smith, P.A.; Barnes, L.E.; Dykes, J.R. Scaling laser disability glare functions with “K” factors to predict dazzle. In International Laser Safety; AIP Publishing: Albuquerque, NM, USA, 2015.

35. Vos, J.; Cole, B.; Bodmann, H.-W.; Colombo, E.; Takeuchi, T.; van den Berg, T.J.T.P. CIE Equations for Disability Glare; CIE TC: Vienna, Austria, 2002.

36. Carlini, N.; Wagner, D. Towards Evaluating the Robustness of Neural Networks. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017.

37. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Stein, Z.; Hazan, A.; Stern, A. Invisible CMOS Camera Dazzling for Conducting Adversarial Attacks on Deep Neural Networks. Sensors 2025, 25, 2301. https://doi.org/10.3390/s25072301
