Vocal Emotion Perception and Musicality—Insights from EEG Decoding

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

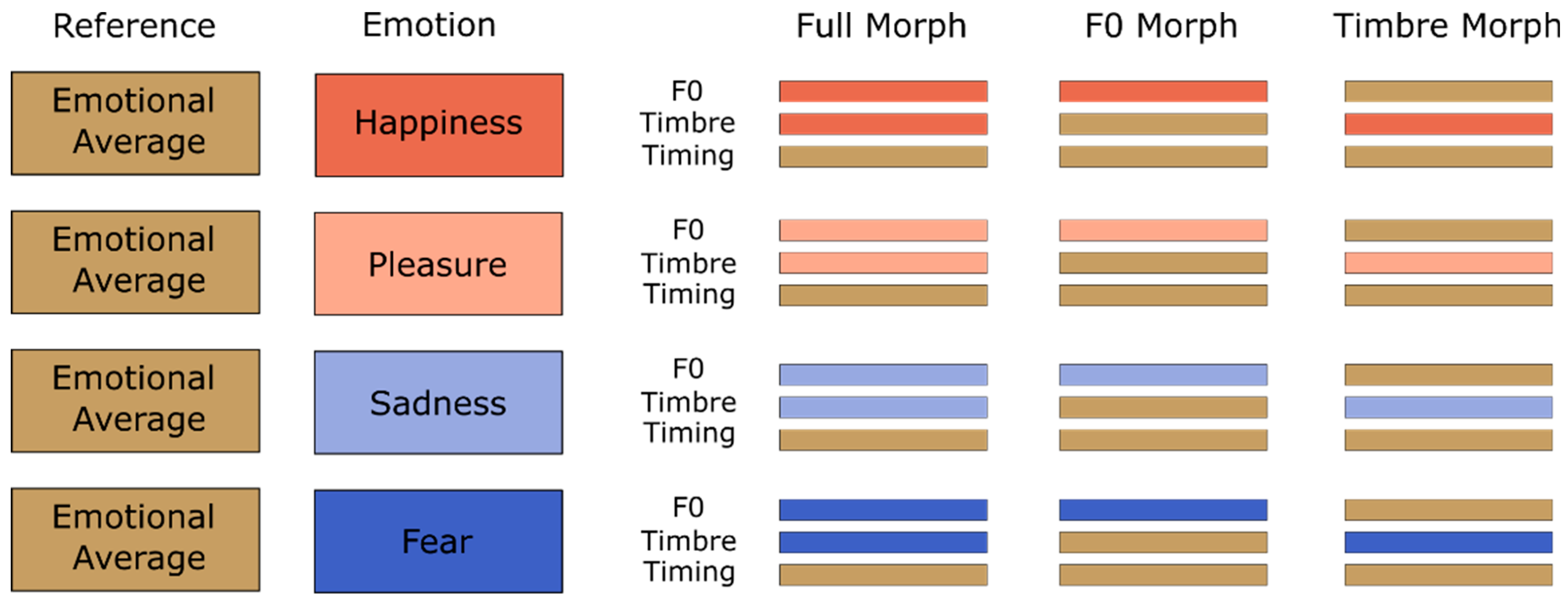

2.2. Stimuli

2.3. Design

2.3.1. EEG Setup

2.3.2. Procedure

2.4. EEG Preprocessing

2.5. Vocal Emotion Decoding

2.6. Training and Testing Procedure

2.7. Statistical Testing

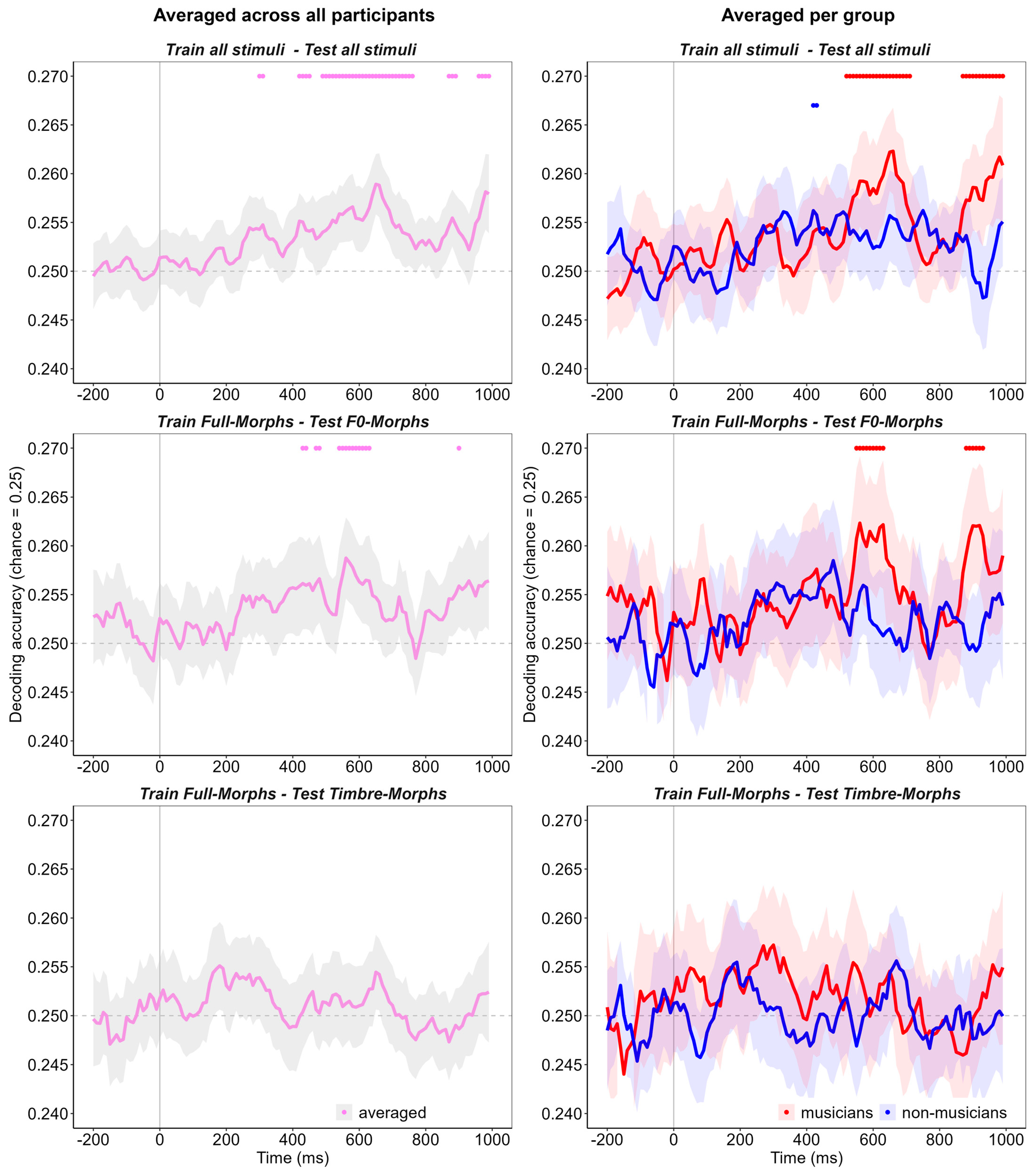

3. Results

3.1. General Emotion Decoding

3.2. Emotion Decoding and Musicality

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lehnen, J.M.; Schweinberger, S.R.; Nussbaum, C. Vocal Emotion Perception and Musicality—Insights from EEG Decoding. Sensors 2025, 25, 1669. https://doi.org/10.3390/s25061669

Lehnen JM, Schweinberger SR, Nussbaum C. Vocal Emotion Perception and Musicality—Insights from EEG Decoding. Sensors. 2025; 25(6):1669. https://doi.org/10.3390/s25061669

Chicago/Turabian Style: Lehnen, Johannes M., Stefan R. Schweinberger, and Christine Nussbaum. 2025. "Vocal Emotion Perception and Musicality—Insights from EEG Decoding" Sensors 25, no. 6: 1669. https://doi.org/10.3390/s25061669

APA Style: Lehnen, J. M., Schweinberger, S. R., & Nussbaum, C. (2025). Vocal Emotion Perception and Musicality—Insights from EEG Decoding. Sensors, 25(6), 1669. https://doi.org/10.3390/s25061669