Machine Vision-Assisted Design of End Effector Pose in Robotic Mixed Depalletizing of Heterogeneous Cargo

Abstract

1. Introduction

2. Literature Review

2.1. Automated Depalletizing

2.2. Depalletizing-Focused Machine Vision

2.3. Mechanical Grasping in Pallets

3. Materials and Methods

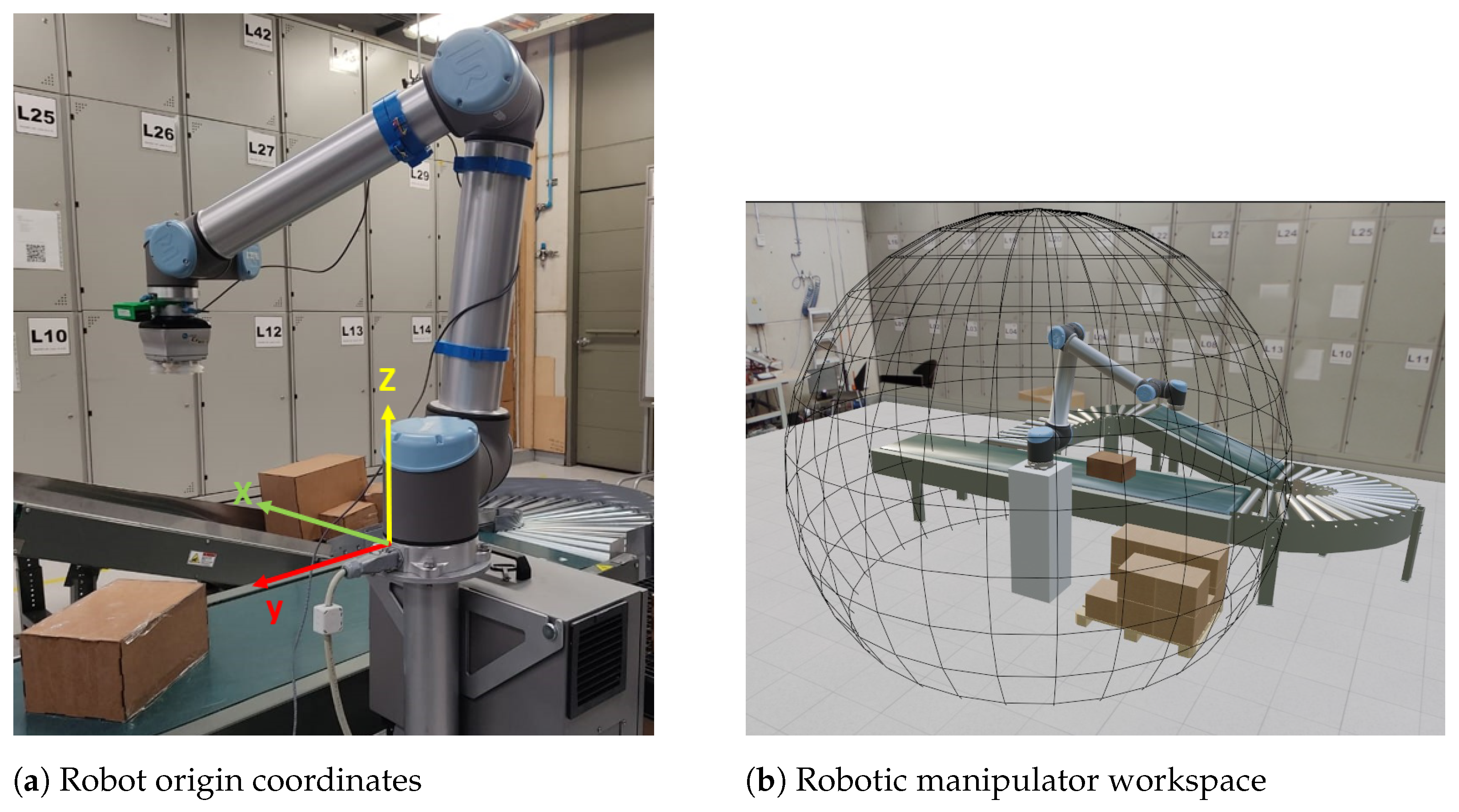

3.1. Proposed Depalletizing Scheme

3.2. Box Acquisition

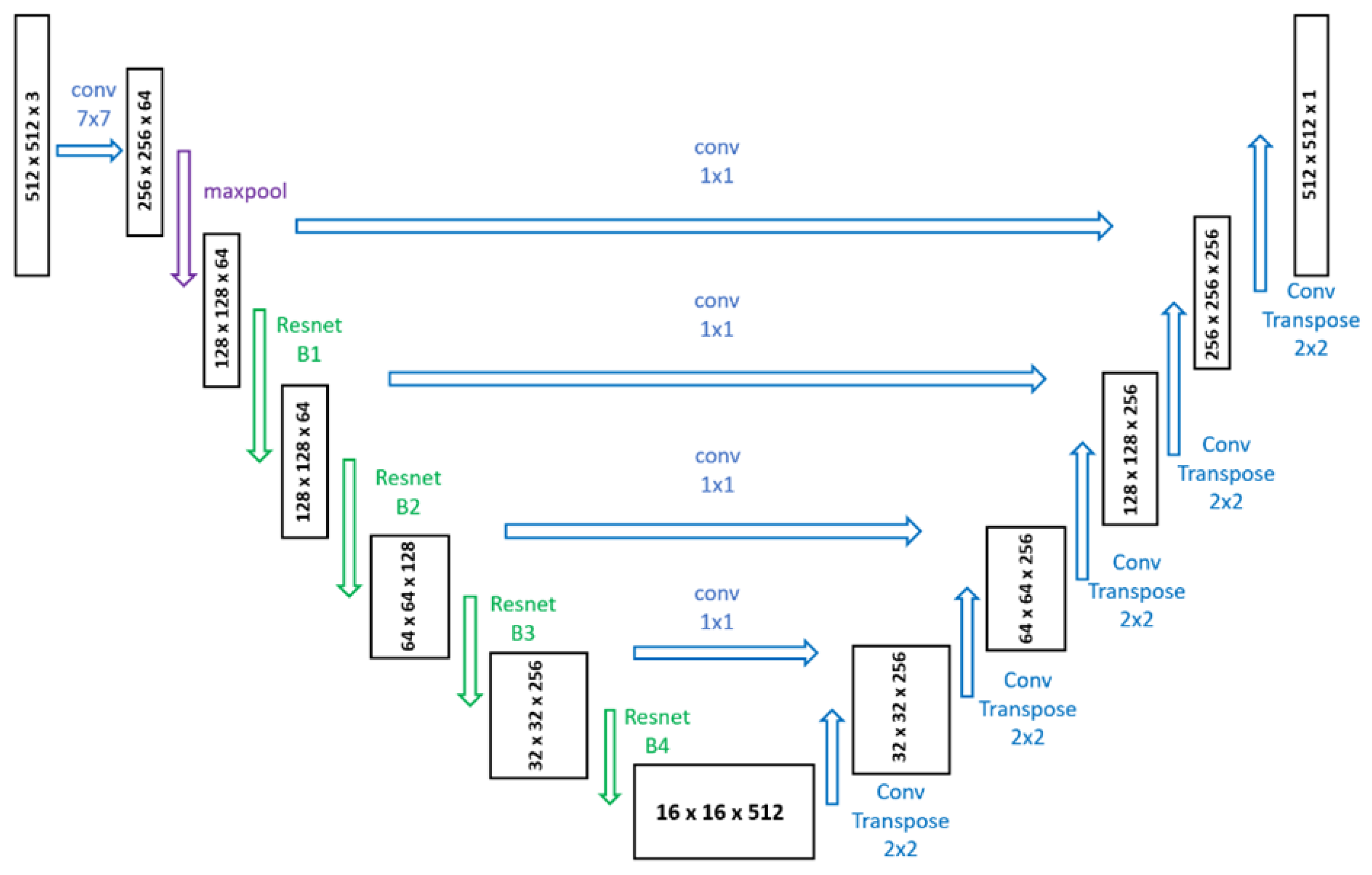

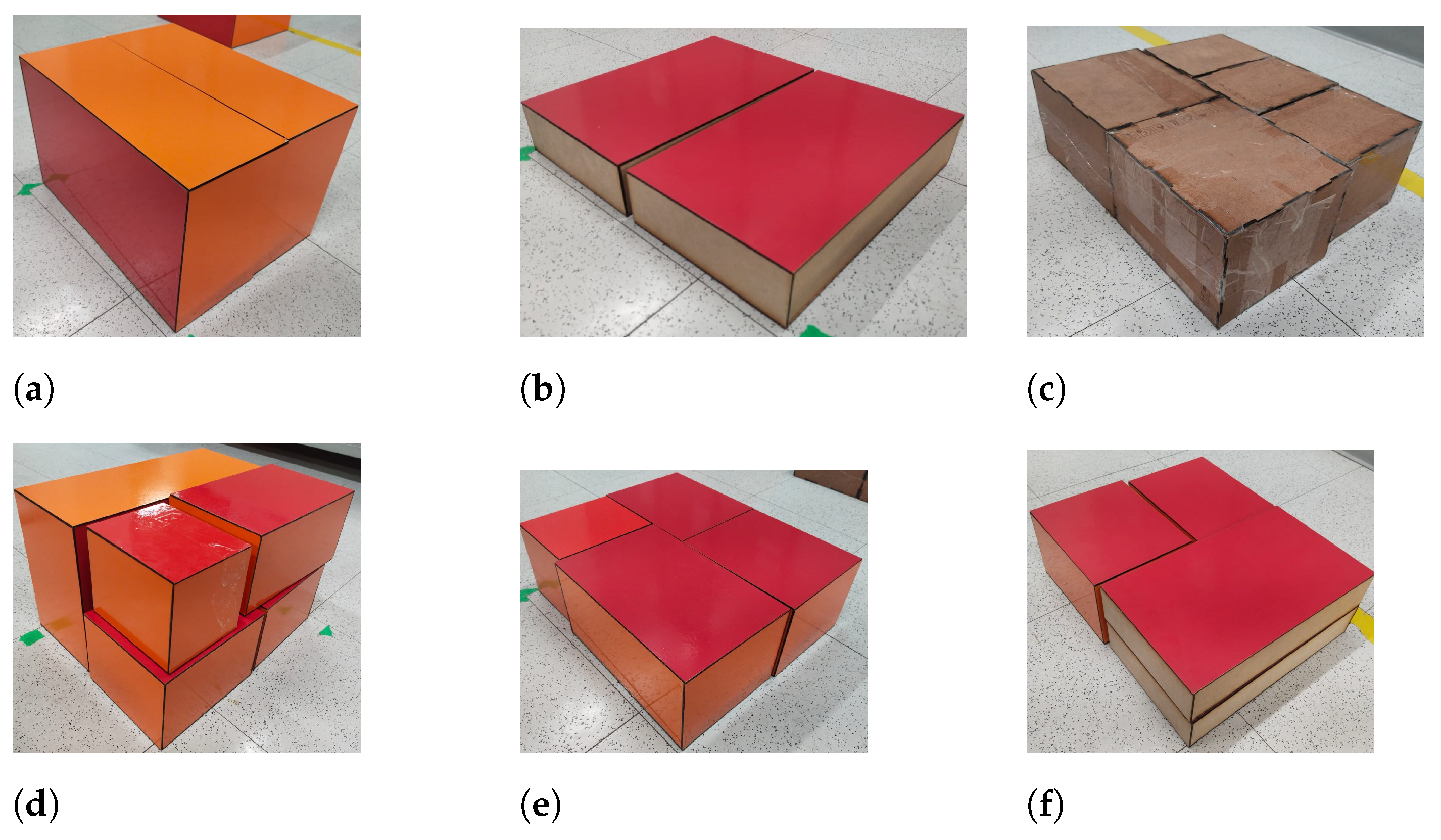

3.2.1. Box Detection

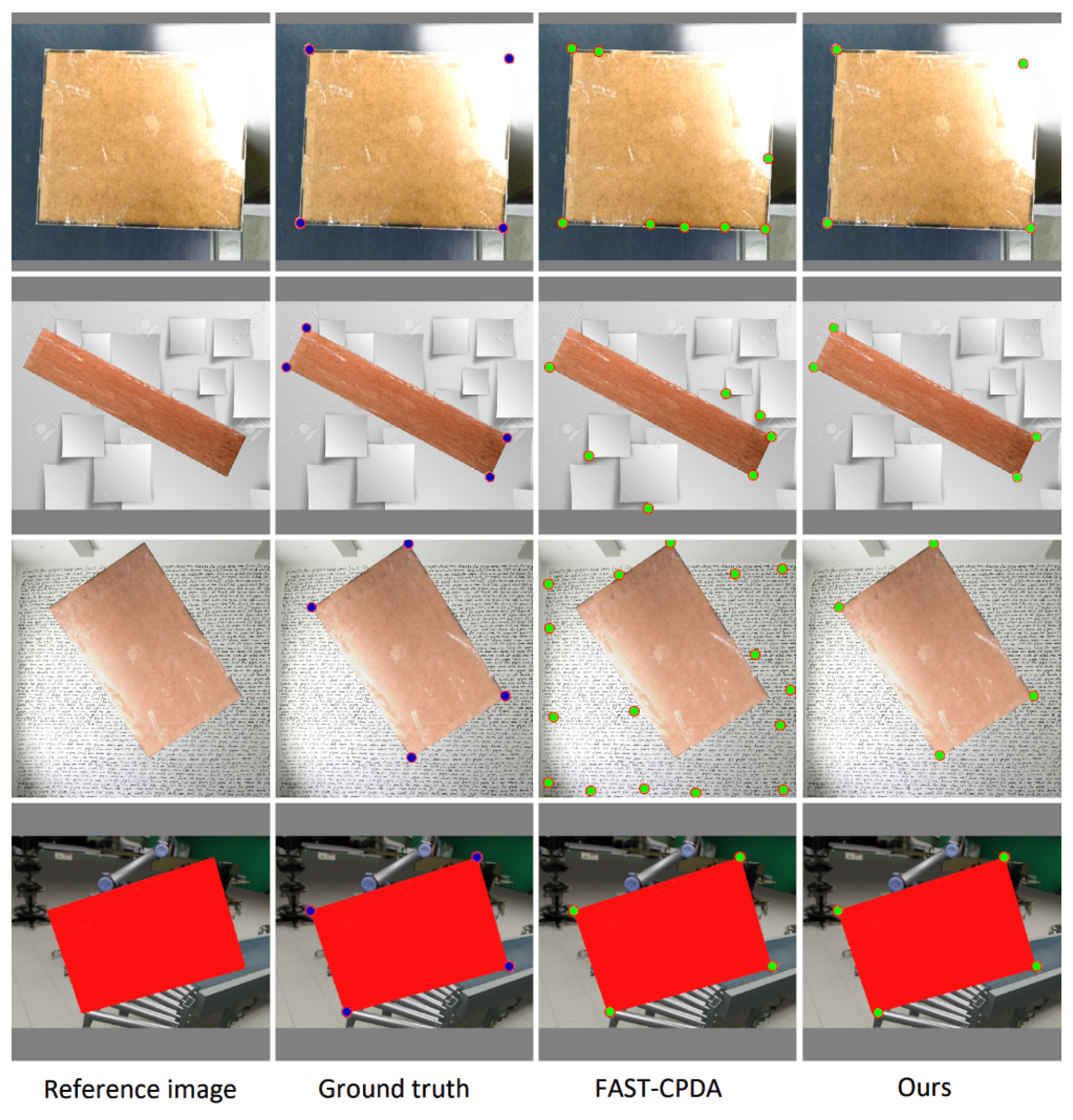

3.2.2. Pose Estimation from Corner Position

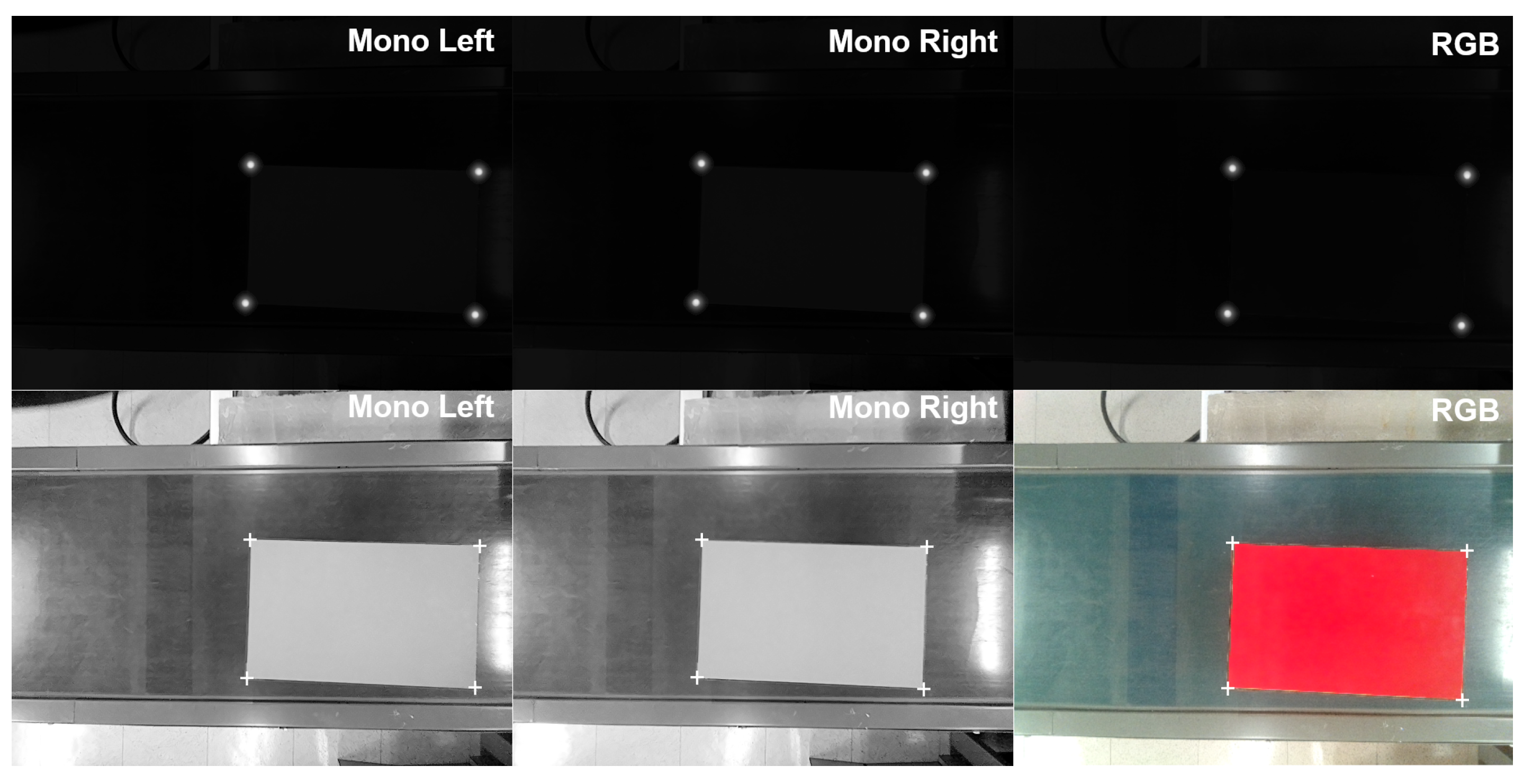

3.2.3. Hand–Eye Calibration

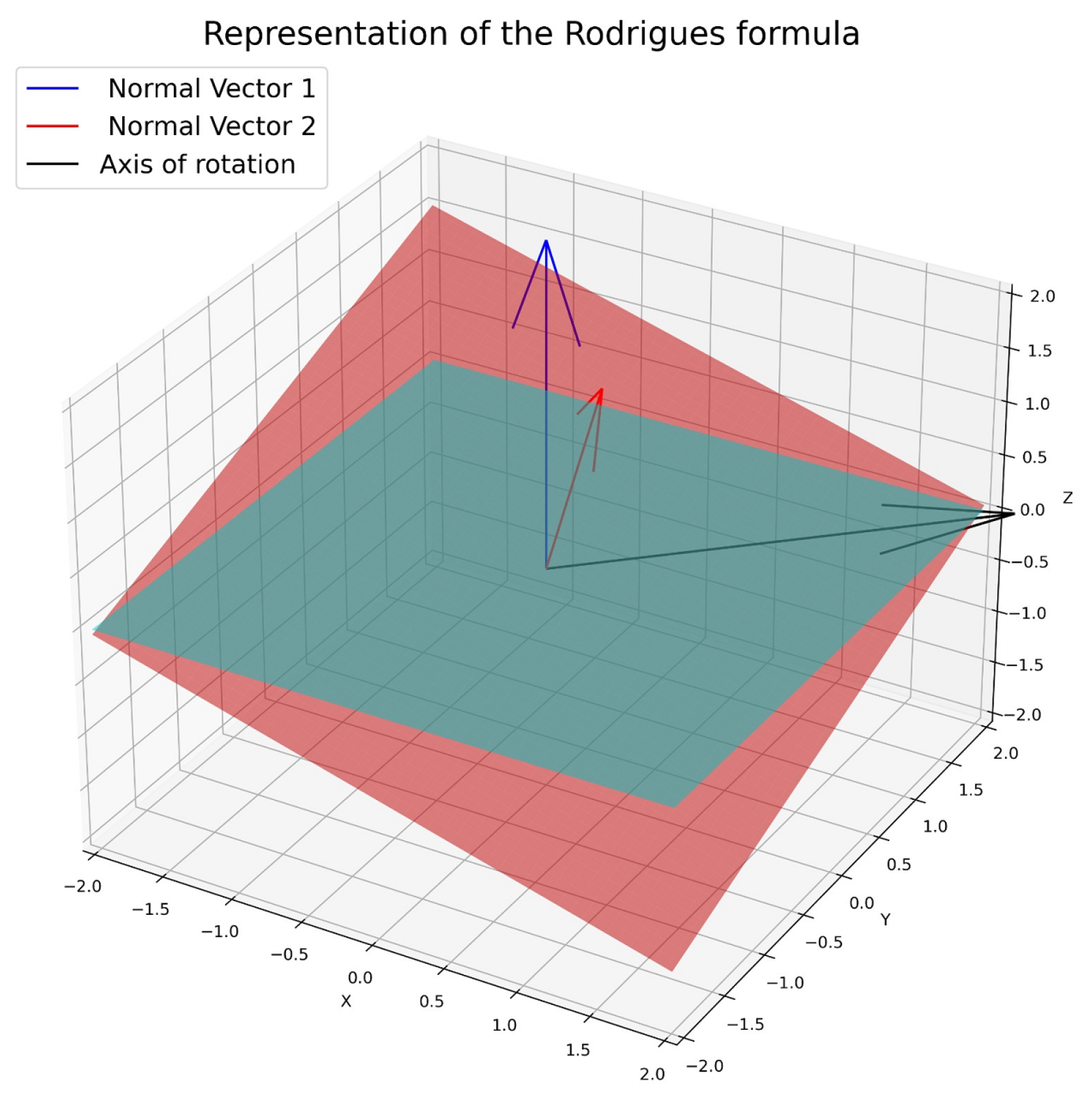

3.3. Estimating the End Effector Pose in Depalletizing Processes

Algorithm 1. Box orientation estimation

Input: 4 × 3 box corner coordinate matrix

Output: 3 × 3 rotation matrix defining box orientation

▹ Define reference plane at the origin (z = 0)

▹ Generate plane mesh points

▹ Compute plane equation for the box surface

▹ Compute rotation vector from cross-product

▹ Convert rotation vector to rotation matrix
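
The steps listed in Algorithm 1 can be illustrated with a short sketch. This is not the authors' implementation: it assumes the four corners are ordered around the perimeter of the box face, it takes the surface normal from the cross product of the two face diagonals rather than fitting a plane to mesh points, and it converts the rotation vector to a matrix with the Rodrigues formula. All function names (`surface_normal`, `rotation_vector`, `rodrigues`, `box_orientation`) are hypothetical.

```python
import math


def _cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))


def surface_normal(corners):
    """Unit normal of the box face, from the cross product of its diagonals.

    Assumption: `corners` holds four (x, y, z) points ordered around the perimeter.
    """
    p0, p1, p2, p3 = corners
    d1 = tuple(b - a for a, b in zip(p0, p2))
    d2 = tuple(b - a for a, b in zip(p1, p3))
    n = _cross(d1, d2)
    norm = math.sqrt(_dot(n, n))
    if norm < 1e-12:
        raise ValueError("degenerate corner set: diagonals are parallel")
    # Assumption: flip the normal so that it points toward +z.
    sign = -1.0 if n[2] < 0 else 1.0
    return tuple(sign * x / norm for x in n)


def rotation_vector(normal):
    """Axis-angle vector rotating the reference normal (0, 0, 1) onto `normal`."""
    reference = (0.0, 0.0, 1.0)
    axis = _cross(reference, normal)
    s = math.sqrt(_dot(axis, axis))   # sine of the tilt angle
    c = _dot(reference, normal)       # cosine of the tilt angle
    if s < 1e-12:
        # The normal was flipped toward +z, so a vanishing axis means no tilt.
        return (0.0, 0.0, 0.0)
    angle = math.atan2(s, c)
    return tuple(angle * x / s for x in axis)


def rodrigues(rvec):
    """Convert a rotation vector to a 3 x 3 rotation matrix (Rodrigues formula)."""
    theta = math.sqrt(_dot(rvec, rvec))
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (x / theta for x in rvec)
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    return [[c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
            [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
            [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v]]


def box_orientation(corners):
    """Rotation matrix that tilts the z = 0 reference plane onto the box face."""
    return rodrigues(rotation_vector(surface_normal(corners)))


# Example: a unit square tilted 30 degrees about the x axis.
c30, s30 = math.cos(math.radians(30)), math.sin(math.radians(30))
tilted = [(0, 0, 0), (1, 0, 0), (1, c30, s30), (0, c30, s30)]
R = box_orientation(tilted)
```

In this sketch the third column of `R` is the face normal. A normal alone fixes only the tilt of the face, so the rotation about that normal would have to come from another source, for example the direction of one box edge.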

3.4. Motion Planning

4. Results

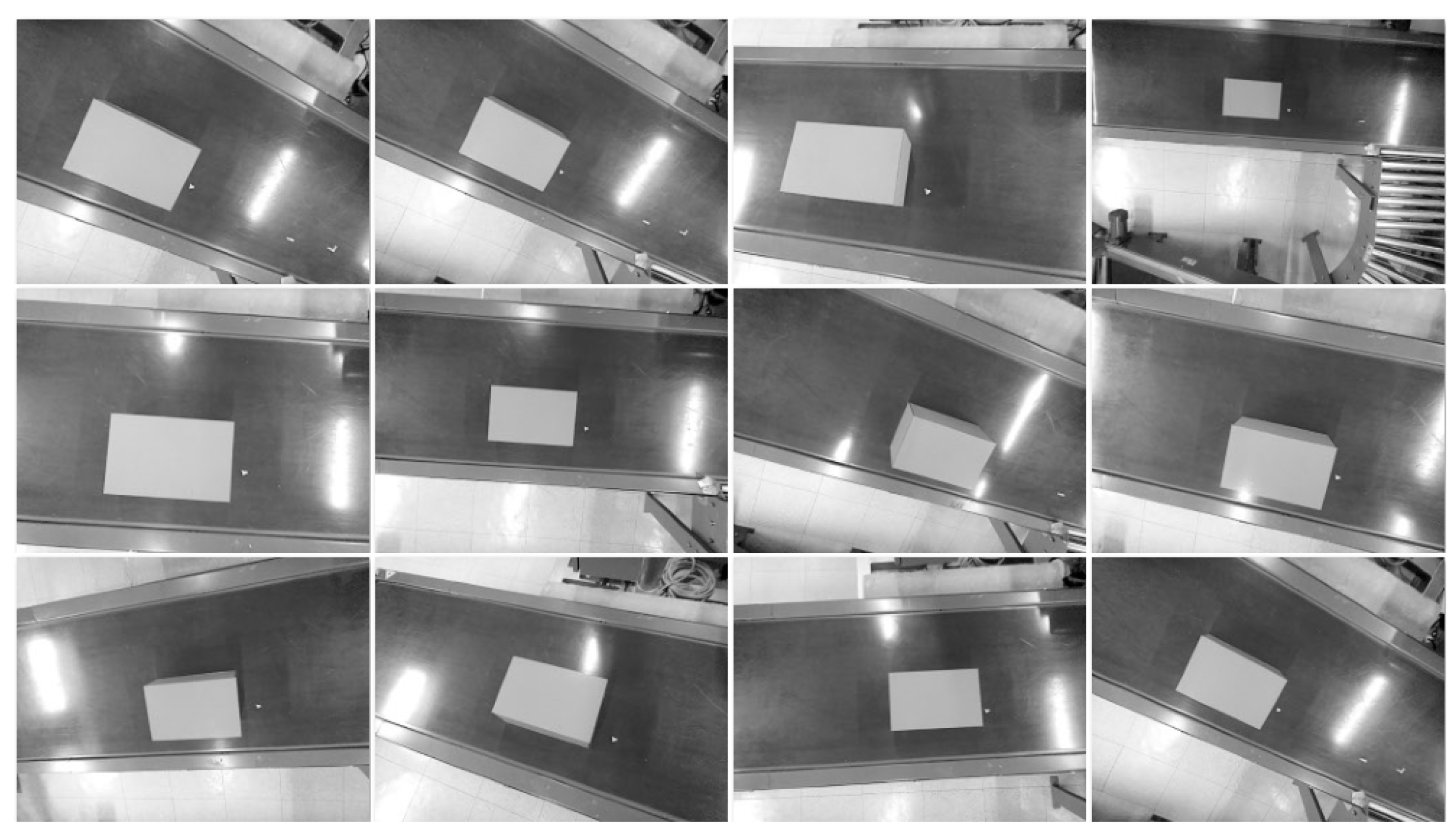

4.1. System Configuration

4.2. Box Acquisition

4.2.1. Corner Detection

4.2.2. Box Pose Estimation

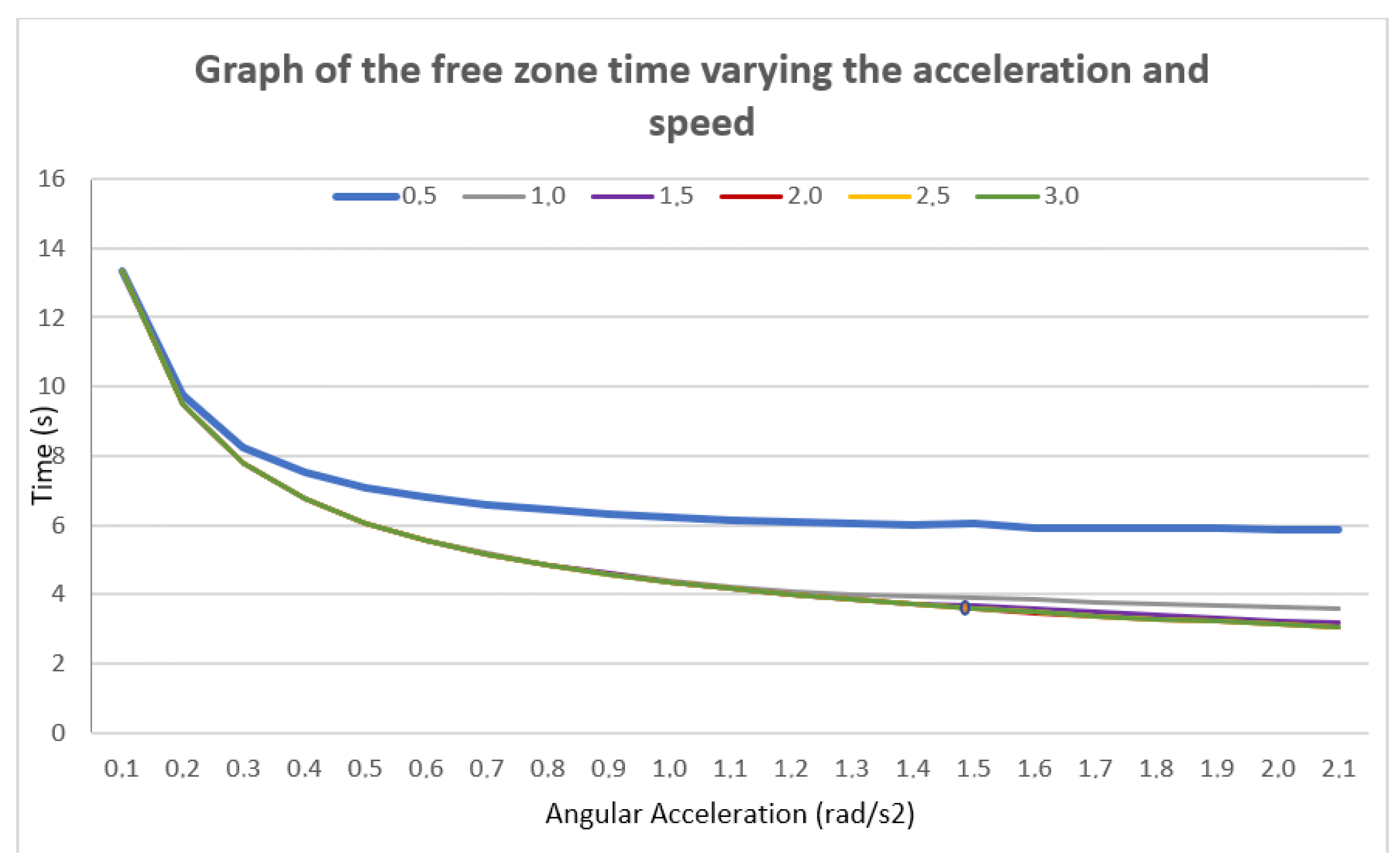

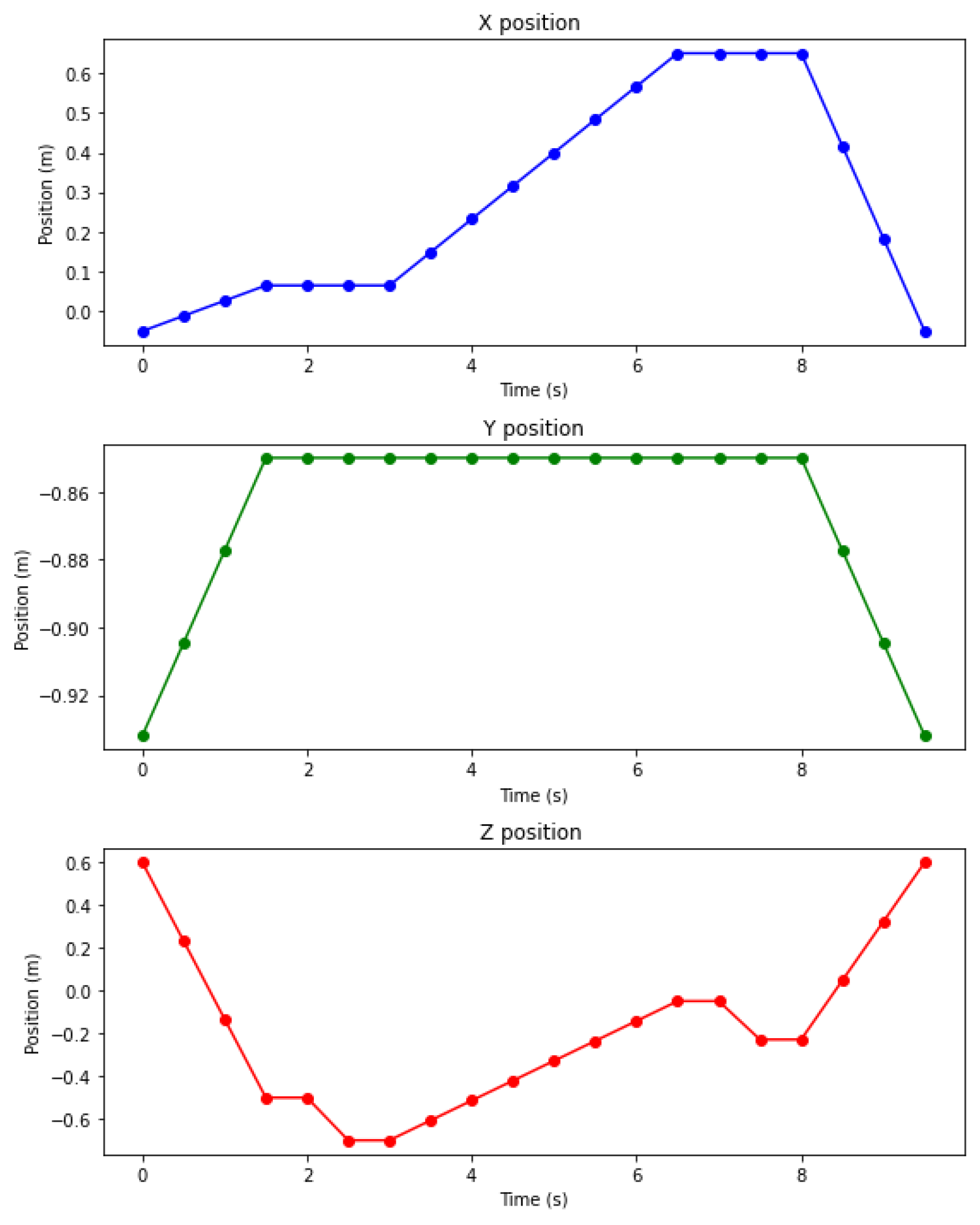

4.3. Motion Planning

4.3.1. Velocity and Acceleration Limits

4.3.2. Waypoint-Based Path Planning

4.3.3. Traveled Distance and Path Execution Time

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| IoT | Internet of Things |

| YOLO | You Only Look Once |

| PPHT | Progressive Probabilistic Hough Transform |

| DOF | Degrees of freedom |

Appendix A

| Identify | Down to Pallet | On Gripper | Moving | Off Gripper | Return | Sum |
|---|---|---|---|---|---|---|
| 1 | 1.9439 | 0.5321 | 2.7916 | 0.3327 | 2.0186 | 7.6189 |
| 2 | 1.2079 | 0.5326 | 2.2246 | 0.3325 | 1.8036 | 6.1012 |
| 3 | 1.9361 | 0.5333 | 2.223 | 0.3333 | 1.9224 | 6.9481 |
| 4 | 2.279 | 0.5323 | 2.224 | 0.3315 | 2.0198 | 7.3866 |
| 5 | 2.2239 | 0.5324 | 2.143 | 0.333 | 1.8034 | 7.0357 |
| 6 | 2.2549 | 0.5333 | 2.0166 | 0.3315 | 1.8276 | 6.9639 |
| 7 | 2.4877 | 0.5332 | 2.1522 | 0.3328 | 1.9228 | 7.4287 |
| 8 | 2.7197 | 0.5325 | 2.152 | 0.3327 | 2.0195 | 7.7564 |
| 9 | 2.6562 | 0.5329 | 2.0239 | 0.3313 | 1.9963 | 7.5406 |
| 10 | 2.4794 | 0.5329 | 2.0724 | 0.3322 | 1.8033 | 7.2202 |
| 11 | 2.5198 | 0.5328 | 1.9278 | 0.3319 | 1.8271 | 7.1394 |
| 12 | 2.711 | 0.5333 | 2.0716 | 0.3318 | 1.9242 | 7.5719 |
| 13 | 3.0157 | 0.5321 | 2.0717 | 0.332 | 2.0193 | 7.9708 |
| 14 | 2.9515 | 0.5332 | 1.9273 | 0.3331 | 1.9948 | 7.7399 |
| 15 | 2.6558 | 0.5327 | 2.0074 | 0.3321 | 1.8038 | 7.3318 |
| 16 | 3.0642 | 0.5322 | 2.0166 | 0.3314 | 1.972 | 7.9164 |
| 17 | 2.6878 | 0.532 | 1.8402 | 0.3315 | 1.8275 | 7.219 |
| 18 | 3.1117 | 0.5329 | 1.8391 | 0.3331 | 1.9942 | 7.811 |
| 19 | 3.2318 | 0.5317 | 1.8246 | 0.3326 | 1.9948 | 7.9155 |
| 20 | 2.8079 | 0.532 | 1.9281 | 0.3323 | 1.8272 | 7.4275 |
| 21 | 2.8066 | 0.5336 | 1.7277 | 0.3325 | 1.8275 | 7.2279 |
| 22 | 3.2718 | 0.5326 | 1.8237 | 0.3323 | 1.9949 | 7.9553 |
| 23 | 3.1430 | 0.5332 | 1.9126 | 0.3317 | 1.9237 | 7.8442 |
| 24 | 3.1355 | 0.5322 | 1.7273 | 0.3326 | 1.9238 | 7.6514 |
| 25 | 3.3756 | 0.5328 | 1.8235 | 0.3328 | 1.8269 | 7.8916 |
| 26 | 3.5902 | 0.5323 | 1.8235 | 0.332 | 1.9953 | 8.2733 |
| 27 | 3.4067 | 0.5335 | 1.7273 | 0.3327 | 1.8029 | 7.8031 |
| 28 | 3.616 | 0.5326 | 1.7278 | 0.3326 | 1.9704 | 8.1794 |
| 29 | 3.5431 | 0.5334 | 1.9204 | 0.3318 | 1.9226 | 8.2513 |
| 30 | 3.5363 | 0.5327 | 1.7269 | 0.3333 | 1.8274 | 7.9566 |
| 31 | 3.7756 | 0.5326 | 1.7281 | 0.3327 | 1.9952 | 8.3642 |
| Mean | 2.8434 | 0.5327 | 1.9725 | 0.3323 | 1.914 | 7.5949 |
| Sd | 0.5807 | 0.0005 | 0.2227 | 0.0006 | 0.0839 | 0.4789 |

| Identify | Down to Pallet | Moving | Return | Sum |
|---|---|---|---|---|
| 1 | 0.3202 | 1.5165 | 1.1311 | 2.9678 |
| 2 | 0.2133 | 1.3142 | 1.0873 | 2.6148 |
| 3 | 0.2771 | 1.3154 | 1.1018 | 2.6943 |
| 4 | 0.3766 | 1.3165 | 1.1311 | 2.8242 |
| 5 | 0.4053 | 1.2081 | 1.0873 | 2.7007 |
| 6 | 0.4263 | 1.046 | 1.0889 | 2.5612 |
| 7 | 0.4417 | 1.2092 | 1.1018 | 2.7527 |
| 8 | 0.5096 | 1.2103 | 1.1311 | 2.851 |
| 9 | 0.5031 | 1.0478 | 1.1218 | 2.6727 |
| 10 | 0.5237 | 1.0881 | 1.0873 | 2.6991 |
| 11 | 0.541 | 0.926 | 1.0889 | 2.5559 |
| 12 | 0.5521 | 1.0892 | 1.1018 | 2.7431 |
| 13 | 0.6075 | 1.0903 | 1.1311 | 2.8289 |
| 14 | 0.603 | 0.9278 | 1.1218 | 2.6526 |
| 15 | 0.6426 | 0.9681 | 1.0873 | 2.698 |
| 16 | 0.6866 | 0.9747 | 1.1146 | 2.7759 |
| 17 | 0.6575 | 0.806 | 1.0889 | 2.5524 |
| 18 | 0.7092 | 0.8078 | 1.1218 | 2.6388 |
| 19 | 0.8099 | 0.845 | 1.1218 | 2.7767 |
| 20 | 0.7642 | 0.935 | 1.0889 | 2.7881 |
| 21 | 0.7752 | 0.765 | 1.0889 | 2.6291 |
| 22 | 0.8663 | 0.845 | 1.1218 | 2.8331 |
| 23 | 0.8969 | 0.92 | 1.1009 | 2.9178 |
| 24 | 0.908 | 0.76 | 1.1009 | 2.7689 |
| 25 | 1.1237 | 0.845 | 1.0889 | 3.0576 |
| 26 | 1.1539 | 0.845 | 1.1218 | 3.1207 |
| 27 | 1.19 | 0.76 | 1.0873 | 3.0373 |
| 28 | 1.2128 | 0.765 | 1.1146 | 3.0924 |
| 29 | 1.1921 | 0.93 | 1.1009 | 3.223 |
| 30 | 1.3098 | 0.765 | 1.0889 | 3.1637 |
| 31 | 1.3356 | 0.765 | 1.1218 | 3.2224 |
| Mean | 0.7269 | 0.9873 | 1.1056 | 2.8198 |
| Sd | 0.3207 | 0.2045 | 0.0167 | 0.1992 |

| Box | Width | Length | Height | Quantity | Weight [g] |
|---|---|---|---|---|---|
| c1 | 12 | 15 | 12 | 10 | 326.2 |
| c2 | 16 | 19 | 12 | 9 | 474.6 |
| c3 | 15 | 39 | 24 | 3 | 1262.8 |
| c4 | 16 | 25 | 12 | 10 | 588.0 |
| c5 | 19 | 32 | 6 | 4 | 600.6 |

| Metric | FAST-CPDA | Ours |
|---|---|---|
| Average position error [px] | 0.91 | 1.26 |
| Precision | 0.791 | 0.939 |
| Recall | 0.837 | 0.939 |
| Average processing time [ms] | 36 | 52 |

| Pose | Position Error [m] | Orientation Error [°] |
|---|---|---|
| 1 | 0.0183 | 3.026 |
| 2 | 0.0091 | 2.6753 |
| 3 | 0.0223 | 4.8284 |
| 4 | 0.0446 | 3.3983 |
| 5 | 0.1091 | 2.1888 |
| 6 | 0.0207 | 2.2291 |
| 7 | 0.012 | 1.4445 |
| 8 | 0.0081 | 2.7595 |
| 9 | 0.0094 | 0.8262 |
| 10 | 0.0045 | 5.352 |
| 11 | 0.0128 | 2.1061 |
| 12 | 0.0238 | 2.3623 |
| Average | 0.0245 | 2.7663 |

| Distribution | Num Samples | Connection Radius | Num Neighbors | Time (s) | Total Samples Generated | Waypoints |
|---|---|---|---|---|---|---|
| Random centered Basic | 100 | 1.14 | 26 | 11.59 | 120 | 3 |
| Improved Random | 100 | 0.96 | 26 | 8.49 | 135 | 5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Valero, S.; Martinez, J.C.; Montes, A.M.; Marín, C.; Bolaños, R.; Álvarez, D. Machine Vision-Assisted Design of End Effector Pose in Robotic Mixed Depalletizing of Heterogeneous Cargo. Sensors 2025, 25, 1137. https://doi.org/10.3390/s25041137
