1. Introduction

The manufacturing capabilities of high-end equipment used in major projects such as aviation, aerospace, maritime engineering, and energy serve as a critical indicator of a nation’s technological advancement [

1,

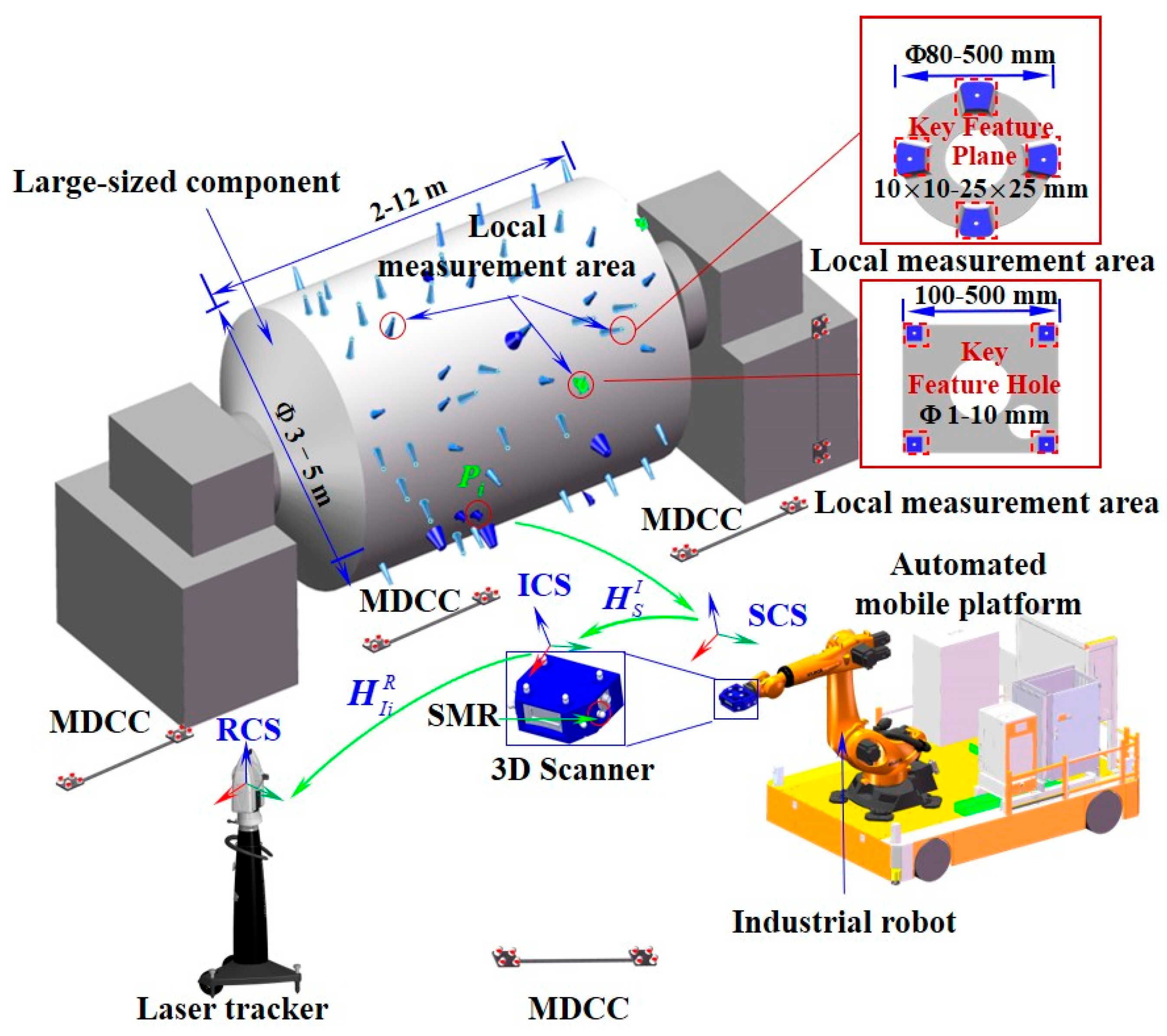

2]. Large-sized components (LSCs) serve as the core load-bearing structure of high-end equipment, featuring large size and complex structure. Among them, the small-scale key local features (KLFs, 1 × 1 mm to 25 × 25 mm) on LSCs, such as feature holes and feature surfaces, are distributed discretely over a wide area (2 to 12 m). Ensuring precise and automated 3D shape measurement of these features plays a vital role in quality assurance, product reliability improvement, and cost reduction in manufacturing processes [

3,

4]. For instance, in the production process of large-sized components, in order to ensure high-precision integrated manufacturing, it is necessary to conduct online detection of key features and use the detection results to guide the subsequent manufacturing process. Moreover, to ensure the assembly accuracy, cross-scale high-precision inspection of key features is essential to avoid forced assembly caused by geometric interference at connection interfaces. Therefore, the research on high-precision online detection methods for local small-scale key features within a large-scale space is of great significance for ensuring the high-quality and reliable manufacturing of large-sized components.

As core structural components become larger and their required manufacturing precision higher, the measurement requirements for their key features have become polarized between large measurement ranges and high accuracy. Consequently, hybrid measurement methods that combine large-scale spatial coordinate measurement systems with small-range vision-based systems have become essential. By measurement principle, the main large-scale spatial coordinate measurement systems include the laser tracker [5], total station [6], indoor global positioning system (i-GPS) [7], laser radar [8], and photogrammetry [9]. Among small-range vision-based measurement systems, structured-light systems are the most widely used. To guarantee reliable measurement of KLFs, the hybrid measurement system (HMS) undergoes a series of calibration steps before data acquisition: (1) camera calibration, (2) intrinsic calibration of the 3D scanner, (3) local extrinsic parameter calibration, and (4) global calibration to construct the measurement field. Within the HMS, extrinsic parameter calibration establishes the spatial transformation between the coordinate system of the 3D scanner and an intermediate reference frame, whereas global calibration minimizes cumulative errors in the final measurements and maintains overall accuracy across the large-scale workspace. Because camera and 3D scanner calibration is usually performed in advance, system setup focuses primarily on the local (extrinsic) and global calibration.

For extrinsic parameter calibration of structured-light vision measurement systems, methods based on cooperative targets are the most widely used among global direct pose estimation approaches [10]. Barone et al. [11,12] used a two-dimensional planar checkerboard calibration plate together with singular value decomposition (SVD) to calibrate the extrinsic parameters of a hybrid measurement system integrating a 3D structured-light scanner with global stereo vision. Liu et al. [13] adopted an indirect calibration method based on rigid-body transformation to achieve rapid extrinsic calibration. Du et al. [14] used target balls that can be identified simultaneously by the global and local measurement devices as cooperative targets. Based on the single-point multiple-point method and the rotation method, they constructed non-coplanar common feature points and pose reference coordinate systems, respectively, enabling rapid calculation of the extrinsic parameter matrix of the 3D scanner. To improve calibration accuracy, Qu et al. [15] designed a cooperative target and applied adjustment optimization to establish a calibration model that jointly considers multiple independent reference frame transformations, achieving rapid, high-accuracy extrinsic calibration. However, this model does not account for differences in the accuracy levels of the measurement data. To address this issue, Jiang et al. [16,17,18] proposed an extrinsic calibration method that takes the scale factor into account. They first established a coordinate fusion model for combined measurement with global binocular vision and local optical scanning, then used numerical simulation to study how scale differences affect global positioning accuracy, and finally established a scale-aware extrinsic calibration model refined by multi-view adjustment optimization. Garcia-D'Urso et al. [19] proposed a multi-camera extrinsic parameter estimation method based on structured 3D markers and iterative optimization; by integrating AI-driven deep learning or regression modules, it improves feature extraction, outlier removal, and initial value estimation, thereby increasing the robustness and automation of extrinsic parameter estimation. Pan et al. [20] proposed an online extrinsic calibration method that converts LiDAR and camera data into depth-map representations and uses depth-edge discontinuities as robust geometric constraints. With a miscalibration detection module and on-manifold optimization for continuous parameter refinement, the method addresses extrinsic drift induced by vibration and deformation. These works highlight emerging AI- and optimization-driven approaches to resilient, autonomous extrinsic calibration in dynamic environments. In summary, existing studies have made significant progress in calibration target design and extrinsic parameter solution algorithms for terminal measurement systems. However, two critical challenges remain unresolved. First, heterogeneous measurement errors are difficult to trace, constrain, and compensate, so transformation errors accumulate severely across multiple baselines. Second, multi-reference-frame transformations involve large-angle rotations, which make the estimation of transformation parameters unstable and its accuracy difficult to ensure.

For global calibration, constructing a large-scale global measurement field with a laser tracker is one of the most effective means of controlling global accuracy, and controlling the measurement errors of global common points (GCPs) is the key research direction for ensuring the accuracy of such a field [21]. Jin et al. [22] described in detail the construction of a large-scale measurement field with a laser tracker using the station-transfer method and established a station-transfer error model that accounts for both the GCP configuration and the measurement errors. Their study revealed the correlations among the common point layout, the laser tracker measurement errors, the transfer station parameter errors, and the resulting station-transfer errors. Predmore [23] proposed a method for optimizing coordinate transformation parameters that considers the measurement uncertainty ellipsoids of the GCPs; it uses Mahalanobis-distance bundle adjustment to solve the orientation problem of multi-station measurement systems and to weight the common point coordinate measurements. To address unknown and uncontrollable measurement errors in GCPs, Wang et al. [24] proposed a large-scale coordinate unification method based on standard artifacts. The method first replaces traditional independent common points with standard artifacts of known geometry and eliminates gross errors by setting allowable error limits, thereby obtaining qualified initial values for the common reference points. It then refines the coordinates of the common reference points through geometric-constraint-based optimization and finally unifies the coordinate systems of the large-scale measurement systems by Procrustes registration. However, this method cannot effectively reduce the influence of laser tracker angular measurement errors on the accuracy of spatial points. To improve the global accuracy of combined measurement of large, complex components, Lin et al. [25] established a large-scale spatial measurement field based on laser interferometric distance constraints. Their approach first derives preliminary 3D coordinates of the GCPs with a multi-station measurement scheme and then determines the initial orientation of the stations by SVD. An error model incorporating high-precision laser interferometric distance constraints is established to mitigate the impact of laser tracker angular errors on spatial point accuracy. In addition, multiple one-dimensional carbon fiber rods are introduced as spatial length references, length and angular constraint equations are formulated, and a nonlinear optimization algorithm calculates corrections to the reference point coordinates, thereby refining the transfer errors and improving accuracy in local regions. Fan et al. [26] built on a weighted rank-deficient adjustment model for laser interferometry by introducing a reference ruler of known length, constructing length constraints for a single pose, and establishing an adjustment model with additional constraints, which further improved the overall accuracy of the point distribution across the measurement field. However, under complex on-site conditions, these methods struggle to identify and control outlier measurements at the same time, which limits their ability to suppress the influence of laser tracker angular errors on spatial point accuracy. Recent advances in large-scale metrology have also highlighted dynamic error compensation and multi-station self-calibration for laser tracker networks. Zou et al. [27] proposed a high-precision construction strategy for multi-station laser tracker measurement networks that combines weighted optimization based on the Enhanced Reference System (ERS), iterative refinement of inter-station transformation parameters, and optimal LTMS configuration planning (number and spatial placement of stations) to minimize global network error. Ma et al. [28] introduced a registration strategy that integrates ERS point-weighted self-calibration with thermal deformation compensation, significantly improving the stability of multi-station global measurements under varying environmental conditions. These studies show a growing emphasis on intelligent, optimization-driven frameworks for improving the accuracy of large-scale automated measurement. However, because multi-domain, multi-pose geometric constraints are difficult to construct and external high-precision features provide insufficient constraints, the global datum accuracy remains difficult to control under restricted layouts.

To address these limitations, we introduce an accuracy-enhanced calibration method that integrates error control and compensation strategies. First, an accurate extrinsic parameter calibration method is proposed that integrates robust target sphere center estimation with distance-constrained optimization of local common point coordinates and establishes a correction model for reference frame transformation parameters, compensating for multi-source heterogeneous measurement errors. Second, an improved global calibration method is proposed to construct a high-accuracy large-scale spatial measurement field; it optimizes the coordinate measurement values and applies a hierarchical strategy for measurement error control. Finally, a robot-assisted laser scanning hybrid measurement system is developed, and calibration and validation experiments are conducted to verify its performance.

This paper is organized as follows. Section 2 presents the proposed measurement method and its fundamental principles. Section 3 describes the procedure for calibrating the extrinsic parameters of the 3D scanner. Section 4 describes the method for constructing a large-scale spatial measurement field. Section 5 presents the calibration and validation experiments, and Section 6 summarizes the key outcomes and future work.

3. Extrinsic Parameter Calibration

A high-precision coordinate transformation model between the SCS and ICS is constructed through extrinsic parameter calibration. As calibration is required before the RLSHS is applied to large-scale measurement tasks and cannot be repeated once operation begins, the reliability of the entire measurement process critically depends on the calibration accuracy. To suppress transformation errors, we propose a refined extrinsic calibration approach that combines robust estimation of target sphere centers with a distance-constrained optimization of local common point (LCP) coordinates.

3.1. Extrinsic Parameter Calibration Model

During calibration, a specially designed multi-source non-coplanar cooperative target (MNCT), comprising several SMRs and standard ceramic balls (SCBs), was fixed within the measurement workspace. A schematic of the calibration procedure is shown in Figure 2.

Consider each observed target point center on the MNCT, whose 3D coordinates are measured in the SCS and whose corresponding coordinates are measured in the RCS. Based on the principle of coordinate transformation, the following equation is established:

Similarly, consider the pose observation points arranged on the surface of the scanner, whose 3D coordinates are given in the ICS and whose corresponding coordinates are measured in the RCS. The following equation can then be established:

By combining Equations (3)–(5), the transformation matrix between the SCS and the ICS is obtained:

Because the target point coordinates obtained by the measurement equipment contain measurement errors, each stage of the reference frame transformation deviates from its true value. To improve the estimation accuracy of the transformation parameters, these multi-source heterogeneous measurement errors must be reduced. We therefore establish an optimized calibration model that jointly constrains the errors of each independent transformation:

where each matrix is the optimized value of the corresponding transformation matrix above.
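The chain of reference frames above can be illustrated with 4 × 4 homogeneous matrices. The following is a minimal sketch, assuming purely rigid transformations; the names `T_s_to_r` (SCS to RCS) and `T_i_to_r` (ICS to RCS), the helper functions, and the example poses are hypothetical. It shows only how an SCS-to-ICS matrix follows from two transformations that share the RCS, not the paper's Equations (3)–(7).

```python
import numpy as np


def make_transform(R, t):
    """Build a 4x4 homogeneous matrix that maps x to R @ x + t."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def invert_transform(T):
    """Invert a rigid transform using R^T rather than a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    return make_transform(R.T, -R.T @ t)


def scanner_to_intermediate(T_s_to_r, T_i_to_r):
    """SCS -> ICS through the shared RCS: first SCS -> RCS, then RCS -> ICS."""
    return invert_transform(T_i_to_r) @ T_s_to_r


def rot_z(angle):
    """Rotation about the z-axis (used only to build example poses)."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


# Hypothetical poses (mm, rad), not measured values
T_s_to_r = make_transform(rot_z(0.3), np.array([100.0, -50.0, 20.0]))
T_i_to_r = make_transform(rot_z(-0.2), np.array([80.0, 10.0, 5.0]))
T_s_to_i = scanner_to_intermediate(T_s_to_r, T_i_to_r)

p_scs = np.array([1.0, 2.0, 3.0, 1.0])  # homogeneous point in the SCS
p_ics = T_s_to_i @ p_scs
print(np.allclose(p_ics, invert_transform(T_i_to_r) @ (T_s_to_r @ p_scs)))  # True
```

Any error in either factor propagates directly into `T_s_to_i`, which is why each independent transformation is refined before the matrices are combined.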

3.2. Robust Target Sphere Center Estimation via Denoised Point Cloud Fitting

As mentioned earlier, the three-dimensional coordinate values of the center of the scanning target ball (SCB) in the SCS are one of the important input coordinate values for solving the transformation matrix

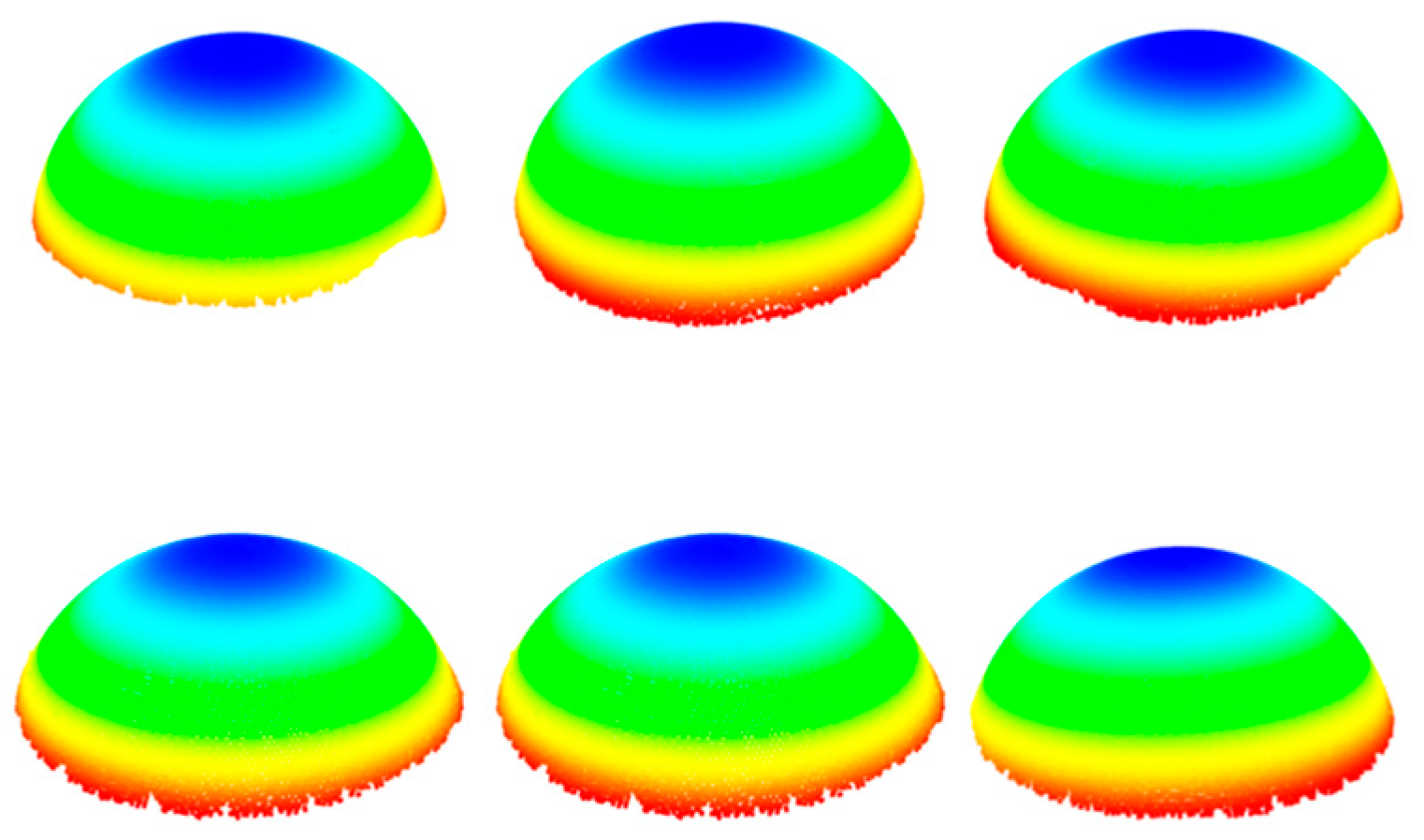

, and the measurement accuracy directly affects the accuracy of solving the transformation parameters. During the measurement process, a 3D scanner was used to scan the SCB, and the obtained original point cloud of the SCB’s spherical top surface is shown in

Figure 3.

Because the point cloud is large and contains complex background noise, the 3D coordinates of the SCB centers cannot be obtained directly. A point cloud preprocessing algorithm is therefore required to process the scanned SCB data before the sphere centers can be fitted accurately. The scanned data contain various types of noise, such as high-density redundant points and sparse, scattered outliers, which degrade the accuracy of sphere center fitting. To address this issue, this study develops an SCB center-fitting method based on point cloud denoising. The method combines radius filtering, the random sample consensus (RANSAC) algorithm, and Euclidean clustering segmentation to remove noise and accurately fit the sphere centers, providing a reliable basis for the subsequent solution of the reference transformation matrix.

First, to improve processing efficiency while preserving the accuracy of sphere center fitting, the initial point cloud is downsampled. Radius filtering is then applied to eliminate the sparse, scattered outliers, yielding a point cloud of the sphere top surfaces that contains only high-density redundant noise points, as shown in Figure 4a. Because the density of these redundant noise points is essentially the same as that of the sphere top surfaces, density-based filtering cannot separate them; instead, RANSAC is used to fit them to a plane and remove them, yielding the point cloud of the sphere top surfaces shown in Figure 4b.
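The first two denoising steps can be sketched with NumPy alone. This is a minimal sketch under stated assumptions: a brute-force neighbor search (adequate only for small clouds; a k-d tree would be used in practice), a hypothetical synthetic scene in which a flat background stands in for the high-density redundant points, and illustrative thresholds. Downsampling is omitted, and the function names are hypothetical.

```python
import numpy as np


def radius_filter(points, radius, min_neighbors):
    """Keep points with at least `min_neighbors` other points within `radius`."""
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    counts = (dist <= radius).sum(axis=1) - 1  # do not count the point itself
    return points[counts >= min_neighbors]


def ransac_plane(points, threshold, iterations, rng):
    """Boolean inlier mask of the plane with the largest RANSAC consensus set."""
    best = np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        p0, p1, p2 = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(normal)
        if norm < 1e-12:  # collinear sample, no unique plane
            continue
        mask = np.abs((points - p0) @ (normal / norm)) < threshold
        if mask.sum() > best.sum():
            best = mask
    return best


# Hypothetical scene (mm): flat background, one spherical cap, sparse outliers
rng = np.random.default_rng(0)
gx, gy = np.meshgrid(np.arange(-15.0, 15.0), np.arange(-15.0, 15.0))
background = np.column_stack([gx.ravel(), gy.ravel(), np.zeros(gx.size)])
k = np.arange(400) + 0.5
polar, azim = np.arccos(1 - 2 * k / 400), np.pi * (1 + 5 ** 0.5) * k
unit = np.column_stack([np.sin(polar) * np.cos(azim),
                        np.sin(polar) * np.sin(azim), np.cos(polar)])
sphere = np.array([0.0, 0.0, 3.0]) + 5.0 * unit  # Fibonacci samples on r = 5
cap = sphere[sphere[:, 2] > 4.0]                 # visible top surface only
outliers = rng.uniform(20.0, 40.0, size=(10, 3))
cloud = np.vstack([background, cap, outliers])

filtered = radius_filter(cloud, radius=2.0, min_neighbors=3)
plane_mask = ransac_plane(filtered, threshold=0.2, iterations=200, rng=rng)
top_surface = filtered[~plane_mask]
print(len(cloud), len(filtered), len(top_surface))
```

The radius filter removes isolated points regardless of where they lie, whereas the plane model removes dense points that follow a planar structure; this is why the two filters are applied in sequence.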

Subsequently, the point cloud is segmented into groups of individual target sphere point clouds using Euclidean clustering segmentation, as shown in Figure 5. Finally, RANSAC is applied to fit a sphere to each group, yielding the coordinates of the SCB centers.
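The last two steps described above, Euclidean clustering and RANSAC sphere fitting, can be sketched as follows. This is an illustrative sketch, not the authors' implementation: it assumes a brute-force neighbor search, an algebraic least-squares sphere fit for each four-point hypothesis followed by a refit on the consensus set, and hypothetical synthetic caps and thresholds. The function names are hypothetical.

```python
import numpy as np
from collections import deque


def euclidean_clusters(points, tolerance, min_size):
    """Region growing: points chained by gaps <= tolerance form one cluster."""
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    visited = np.zeros(len(points), dtype=bool)
    clusters = []
    for seed in range(len(points)):
        if visited[seed]:
            continue
        visited[seed] = True
        queue, members = deque([seed]), [seed]
        while queue:
            i = queue.popleft()
            new = np.flatnonzero((dist[i] <= tolerance) & ~visited)
            visited[new] = True
            queue.extend(new.tolist())
            members.extend(new.tolist())
        if len(members) >= min_size:
            clusters.append(points[members])
    return clusters


def fit_sphere(points):
    """Algebraic fit of |p|^2 = 2 c.p + (r^2 - |c|^2); returns (center, radius)."""
    A = np.column_stack([2.0 * points, np.ones(len(points))])
    b = (points ** 2).sum(axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    center = sol[:3]
    r2 = sol[3] + center @ center
    return center, (np.sqrt(r2) if r2 > 0 else np.nan)


def ransac_sphere(points, threshold, iterations, rng):
    """RANSAC over 4-point hypotheses, then a least-squares refit on the inliers."""
    best = np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        center, radius = fit_sphere(points[rng.choice(len(points), 4, replace=False)])
        if not np.isfinite(radius):
            continue
        mask = np.abs(np.linalg.norm(points - center, axis=1) - radius) < threshold
        if mask.sum() > best.sum():
            best = mask
    return fit_sphere(points[best])


# Hypothetical data (mm): two noisy caps of r = 5 plus a few spurious points
rng = np.random.default_rng(1)
k = np.arange(400) + 0.5
polar, azim = np.arccos(1 - 2 * k / 400), np.pi * (1 + 5 ** 0.5) * k
unit = np.column_stack([np.sin(polar) * np.cos(azim),
                        np.sin(polar) * np.sin(azim), np.cos(polar)])
cap = 5.0 * unit[unit[:, 2] > 0.3]
true_centers = [np.array([0.0, 0.0, 0.0]), np.array([30.0, 0.0, 0.0])]
spurious = np.array([[0.3, 0.0, 5.6], [-0.4, 0.2, 5.8], [0.0, -0.5, 5.5]])
cloud = np.vstack([c + cap + rng.normal(0.0, 0.005, cap.shape) for c in true_centers]
                  + [spurious])

clusters = euclidean_clusters(cloud, tolerance=2.0, min_size=20)
fits = sorted((ransac_sphere(c, 0.05, 100, rng) for c in clusters), key=lambda f: f[0][0])
for center, radius in fits:
    print(np.round(center, 3), round(float(radius), 3))
```

The spurious points join the first cluster because they lie close to its cap, but they fall outside the RANSAC consensus set and therefore do not bias the refitted center.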

3.3. Distance-Constraint-Based Optimization Method for Local Common Point Coordinates

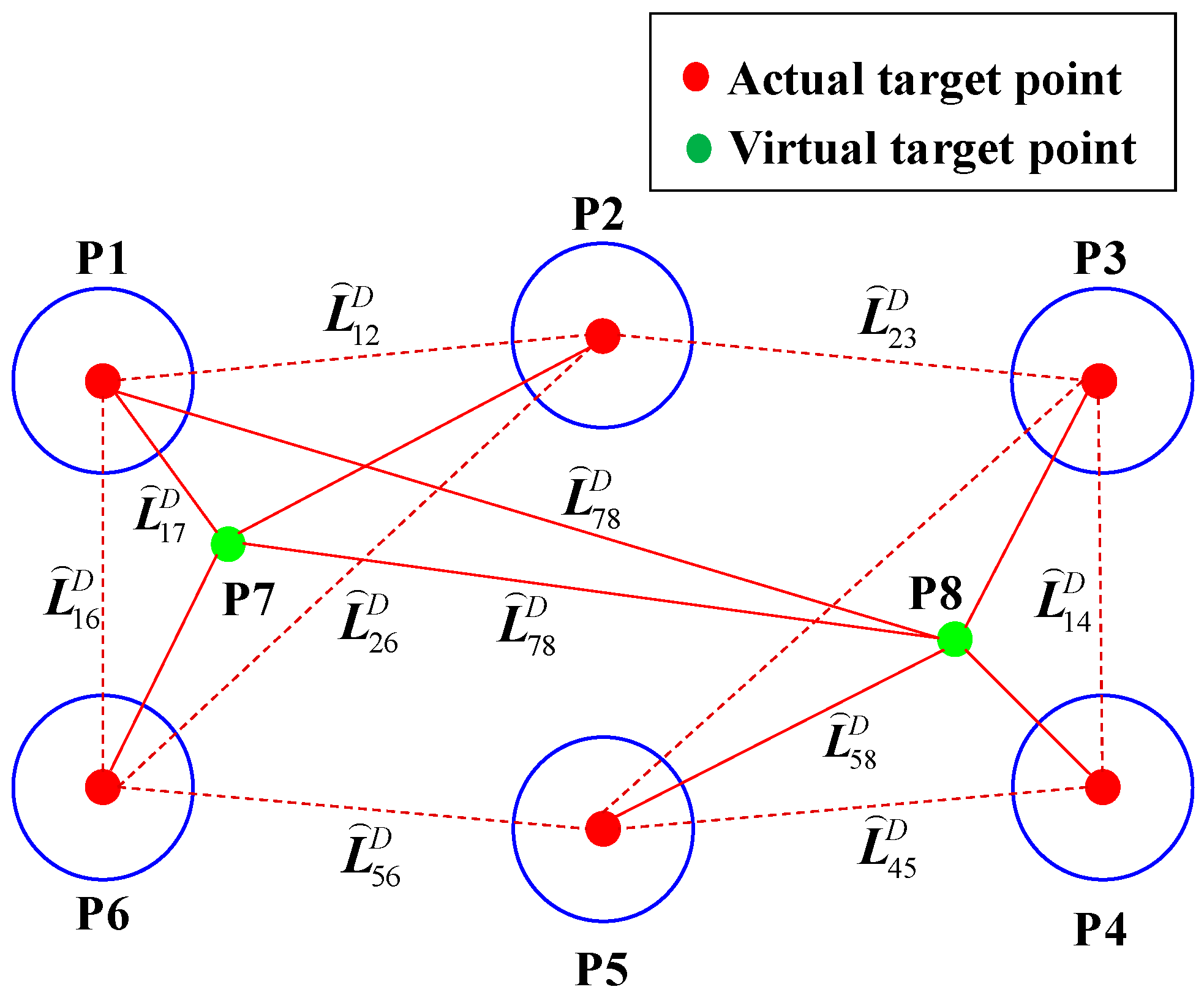

To address the difficulties in tracing, constraining and compensating for the multi-source heterogeneous measurement errors during the extrinsic parameter calibration, a coordinate optimization method based on multi-distance constraints is proposed. This method utilizes the prior distances between target points on the MNCT as reference values. First, a constraint model is formulated using these known distances. Then, the model is linearized, and the spatial relationships between measurement points are represented as a system of equations. Finally, conditional least-squares adjustment is applied to estimate coordinate corrections, thereby compensating for errors from heterogeneous sources.

According to the principles of conditional adjustment, redundant equations exist only when the number of distance constraint equations exceeds the number of coordinates to be solved, which requires no fewer than seven local common points. To reduce the number of physical targets required, this study introduces a hybrid layout strategy that combines real and virtual points: the coordinates of a virtual point are calculated from those of three real points, and additional constraint distances are then constructed, as shown in Figure 6. These constraints are used to optimize the errors of the coordinate measurement values.

This section takes the local common points (LCPs) on the MNCT as an example to describe the proposed coordinate value optimization method. For each LCP on the MNCT, both its measured 3D coordinates, acquired with either the 3D scanner or the laser tracker, and its theoretical coordinates are considered. The theoretical distance between any two LCPs can then be expressed as a function of their theoretical coordinates:

Suppose the following condition holds:

The distance constraint is linearized by a first-order Taylor expansion, neglecting second- and higher-order terms:

where the measured distance between the two LCPs serves as the approximate value, the correction vector accounts for their on-site measurement errors, and the coefficients are the partial derivatives of the distance with respect to the coordinates.

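Further, we obtain:

where the constant term is the closure error, that is, the discrepancy between the measured distance and the theoretical distance.

Accordingly, Equation (11) can be expressed in matrix form as:

where the coefficient matrix collects the partial derivatives of all distance constraints and the closure error vector collects their closure errors.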

Equation (12) contains 3n unknowns and one equation per distance constraint. Introducing Lagrange multipliers to find the constrained extremum, the objective function is derived as follows:

where the vector of Lagrange multipliers is the correlate vector.

Setting the first derivative of the objective function with respect to the correction vector to zero yields:

Transposing both sides then gives:

Combining these results, we obtain:

Finally, the optimized values of the observations are obtained:

The optimization procedure for the local common point coordinates is summarized in Algorithm 1.

| Algorithm 1: Distance-constraint-based optimization for local common point coordinates | | |
|---|---|---|
| Input: | | Initial coordinates of observation points |
| Output: | | Coordinate corrections of observation points |
| | 1 | Compute coordinate corrections and optimized coordinates: |
| | 2 | For i = 2 to n do |
| | 3 | For j = 1 to i − 1 do |
| | 4 | Build the measurement equation of the distance observation (Equation (9)); |
| | 5 | Build the residual equation (Equation (12)); |
| | 6 | End for; |
| | 7 | End for; |
| | 8 | Do begin; |
| | 9 | Build the objective function (Equation (13)); |
| | 10 | Calculate the minimum (Equation (14)); |
| | 11 | Set ; |
| | 12 | Compute the correlate vector (Equation (15)); |
| | 13 | Return |
| | 14 | Set |
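The adjustment summarized in Algorithm 1 can be sketched in NumPy as follows. This is a minimal sketch, not the authors' code: it assumes independent, isotropic coordinate errors (one standard deviation per point), re-linearizes the distance conditions about the current estimate until the corrections converge, and uses hypothetical function names and a hypothetical four-point configuration. Each iteration forms the coefficient matrix of the linearized distances, the closure vector, the correlate vector, and the corrections.

```python
import numpy as np


def pair_distances(X, pairs):
    """Distances d_ij computed from the current coordinates X (n x 3)."""
    return np.array([np.linalg.norm(X[i] - X[j]) for i, j in pairs])


def condition_matrix(X, pairs):
    """Row per constraint: partial derivatives of d_ij w.r.t. all 3n coordinates."""
    A = np.zeros((len(pairs), X.size))
    for row, (i, j) in enumerate(pairs):
        u = (X[i] - X[j]) / np.linalg.norm(X[i] - X[j])  # unit vector from j to i
        A[row, 3 * i:3 * i + 3] = u
        A[row, 3 * j:3 * j + 3] = -u
    return A


def adjust_with_distances(L, pairs, d_prior, sigma, iterations=20, tol=1e-12):
    """Minimize V^T P V subject to d(L + V) = d_prior (conditional adjustment)."""
    Q = np.diag(np.repeat(np.square(sigma), 3))  # cofactor matrix, P = Q^-1
    V = np.zeros(L.size)
    for _ in range(iterations):
        X = L + V.reshape(L.shape)
        A = condition_matrix(X, pairs)
        # Closure vector of the conditions linearized at X, written for the total V
        W = pair_distances(X, pairs) - d_prior - A @ V
        K = -np.linalg.solve(A @ Q @ A.T, W)  # correlate vector
        V_new = Q @ A.T @ K
        converged = np.linalg.norm(V_new - V) < tol
        V = V_new
        if converged:
            break
    return L + V.reshape(L.shape), V.reshape(L.shape)


# Hypothetical tetrahedron (mm): prior distances from nominal geometry
nominal = np.array([[0.0, 0.0, 0.0], [100.0, 0.0, 0.0],
                    [0.0, 100.0, 0.0], [0.0, 0.0, 100.0]])
pairs = [(i, j) for i in range(1, 4) for j in range(i)]  # j < i, as in Algorithm 1
d_prior = pair_distances(nominal, pairs)
rng = np.random.default_rng(2)
measured = nominal + rng.normal(0.0, 0.02, nominal.shape)
X_adj, V = adjust_with_distances(measured, pairs, d_prior, sigma=np.full(4, 0.02))
print(np.abs(pair_distances(X_adj, pairs) - d_prior).max())
```

For n points, at most 3n − 6 distance conditions can be independent, because distances do not change under a common rotation and translation. With more pairs, `A @ Q @ A.T` becomes singular, so an independent subset of conditions or a pseudo-inverse would be needed.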

3.4. Correction Model for Coordinate Transformation Parameters Considering Multi-Source Heterogeneous Measurement Error Compensation

During the estimation of reference frame transformation parameters, challenges arise due to the limited field of view of the 3D scanner and the dense distribution of local common points on the MNCT, which increases the correlation among those points. Moreover, large-angle rotations often lead to instability in the solution of transformation parameters, and the accumulation of multi-source heterogeneous measurement errors further degrades the accuracy and reliability of the computed results. Therefore, based on the above error optimization strategy for coordinate measurement values, this section constructs a transformation parameter correction model that takes into account the compensation for multi-source heterogeneous measurement errors.

Taking the transformation between the SCS and the RCS as an example, its parameters are estimated using a stepwise strategy that accommodates arbitrary rotation angles, as illustrated in Figure 7. The strategy first applies the coordinate optimization method introduced in Section 3.3 to compensate for the measurement errors of the local common points. A middle reference frame is then constructed from three non-collinear points to derive initial estimates of the transformation parameters. Finally, the parameters are refined using the Bursa–Wolf model with a weighted least-squares criterion, which improves the accuracy of the final solution.

Consider three non-collinear points on the MNCT whose compensated coordinates are known both in the SCS and in the RCS. Taking the first point as the origin, a transitional coordinate frame is constructed via the three-point method. The transformation matrix from the SCS to this middle transitional coordinate system (MCS) can then be derived as follows:

where the three unit direction vectors define the axes of the MCS.

Similarly, by establishing the transitional coordinate system at the corresponding point in the RCS, the transformation matrix of the MCS relative to the RCS can be obtained as:

By combining Equations (18) and (19), the initial transformation matrix between the SCS and the RCS is obtained as follows:

where the three translation components are the initial translation parameters and the three angles are the initial rotation angle parameters:

where each term indexed by i and j denotes the element in the i-th row and j-th column of the initial rotation matrix.
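The three-point construction and the angle extraction can be sketched as follows. This sketch assumes one common convention, which the text does not fix: the x-axis runs from the first point to the second, the z-axis is normal to the plane of the three points, and the angles follow R = Rz(γ)·Ry(β)·Rx(α). Function names, points, and poses are hypothetical. With error-free points the construction recovers the rotation exactly for arbitrary angles, which is what makes it suitable as an initial value before small-angle refinement.

```python
import numpy as np


def three_point_frame(p1, p2, p3):
    """Unit axes (as columns) and origin of a frame built from three points."""
    x = (p2 - p1) / np.linalg.norm(p2 - p1)
    z = np.cross(p2 - p1, p3 - p1)
    z /= np.linalg.norm(z)  # fails only if the points are collinear
    y = np.cross(z, x)
    return np.column_stack([x, y, z]), p1


def initial_transform(src, dst):
    """Rotation and translation mapping three src points onto the same dst points."""
    R_src, o_src = three_point_frame(*src)  # frame axes expressed in the source system
    R_dst, o_dst = three_point_frame(*dst)  # same frame expressed in the target system
    R = R_dst @ R_src.T                     # source -> middle frame -> target
    return R, o_dst - R @ o_src


def rotation_zyx(alpha, beta, gamma):
    """R = Rz(gamma) @ Ry(beta) @ Rx(alpha)."""
    ca, sa = np.cos(alpha), np.sin(alpha)
    cb, sb = np.cos(beta), np.sin(beta)
    cg, sg = np.cos(gamma), np.sin(gamma)
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, ca, -sa], [0.0, sa, ca]])
    Ry = np.array([[cb, 0.0, sb], [0.0, 1.0, 0.0], [-sb, 0.0, cb]])
    Rz = np.array([[cg, -sg, 0.0], [sg, cg, 0.0], [0.0, 0.0, 1.0]])
    return Rz @ Ry @ Rx


def angles_zyx(R):
    """Recover (alpha, beta, gamma) from the matrix elements r_ij (|beta| < 90 deg)."""
    alpha = np.arctan2(R[2, 1], R[2, 2])
    beta = np.arctan2(-R[2, 0], np.hypot(R[2, 1], R[2, 2]))
    gamma = np.arctan2(R[1, 0], R[0, 0])
    return alpha, beta, gamma


# Hypothetical large rotation and translation (rad, mm)
rng = np.random.default_rng(3)
R_true = rotation_zyx(2.1, -0.7, 1.3)
t_true = np.array([1500.0, -320.0, 45.0])
src = rng.uniform(-200.0, 200.0, size=(3, 3))  # three points in the SCS
dst = src @ R_true.T + t_true                  # same points in the RCS
R0, t0 = initial_transform(src, dst)
print(np.round(angles_zyx(R0), 6))
```

With noisy coordinates the three-point result is only approximate, because it uses just three points; the remaining small rotation is what the subsequent Bursa–Wolf step estimates.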

Consider the measured coordinates of a common point in the SCS and its coordinates after the initial transformation. The relationship between them is computed using the following equation:

At this stage, the residual rotation between the initially transformed coordinates and the corresponding RCS coordinates satisfies the small-angle condition. According to the Bursa–Wolf model, the transformation relationship between them is established as follows:

where the rotation and translation terms take the following specific forms:

where the seven model parameters are the scaling factor, the three rotational parameter errors relative to the initial transformation, and the three translational parameter errors.
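The role of the small-angle condition can be checked numerically. This is an illustrative sketch, assuming one common sign convention for the linearized rotation, (I + [ε]×), and hypothetical parameter values. It compares the small-angle Bursa–Wolf model with the same parameters applied through an exact rotation (Rodrigues' formula): for small residual angles (here about 10⁻⁴ rad, an assumed value) the two agree closely, whereas for large angles the linearized model is unusable.

```python
import numpy as np


def skew(v):
    """Matrix [v]x such that skew(v) @ x equals np.cross(v, x)."""
    return np.array([[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]])


def bursa_wolf_small(x, t, eps, k):
    """Linearized 7-parameter model: x' = (1 + k) (I + [eps]x) x + t."""
    return (1.0 + k) * (x + np.cross(eps, x)) + t


def similarity_exact(x, t, eps, k):
    """Same parameters with the exact rotation by angle |eps| about eps/|eps|."""
    theta = np.linalg.norm(eps)
    K = skew(eps / theta)
    R = np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)
    return (1.0 + k) * (R @ x) + t


x = np.array([2000.0, 500.0, -300.0])  # hypothetical point (mm)
t = np.array([0.05, -0.02, 0.03])
k = 2e-5
for eps in (np.array([1e-4, -2e-4, 1.5e-4]), np.array([0.2, -0.3, 0.25])):
    gap = np.linalg.norm(bursa_wolf_small(x, t, eps, k) - similarity_exact(x, t, eps, k))
    print(f"|eps| = {np.linalg.norm(eps):.2e} rad -> model gap = {gap:.2e} mm")
```

The gap grows roughly with the square of the angle times the point's distance from the origin, which is why the initial three-point transformation is applied before the linearized model.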

Accounting for multi-source heterogeneous measurement errors, Equation (23) is corrected as follows:

where the first error term is the measurement error of the point acquired by the laser tracker, and the second is the measurement error of the point acquired by the 3D scanner after the initial transformation.

Omitting higher-order terms, the equation simplifies to:

Extending Equation (26) to all local common points gives:

where the terms denote, respectively, the difference vector between the local common point coordinates in the RCS and the transformed measured coordinates, the coefficient matrix, the vector of transformation parameter errors to be solved, the measurement error vector of the laser tracker, and the measurement error vector of the scanner after the initial transformation.

Collecting the error terms, the error equation can be expressed as follows:

This is a classic indirect adjustment model. Because the measurement errors of the laser tracker and the scanner are mutually independent, the solution follows the minimum weighted sum of squares criterion:

where the first matrix is the variance–covariance matrix of the measurement errors of the local common point coordinates in the RCS, which can be calculated using the method described in reference [25], and the second is the variance–covariance matrix of the measurement errors of the local common point coordinates in the SCS after the initial coordinate transformation:

which depends on the variance matrix of the scanner measurement error:

where the entries are the variances of the scanner coordinate measurement errors in the X, Y, and Z directions, respectively.

The objective function is formulated using the Lagrange extremum method as follows:

where the vector of Lagrange multipliers is the correlate vector. Setting the partial derivatives of the objective function with respect to each unknown vector to zero yields:

Thus, the least-squares solution of the parameter error vector can be expressed as:

Finally, combining these corrections with the initial transformation parameters gives the optimized SCS-to-RCS transformation matrix. The other optimized transformation matrix in the calibration model is derived in the same way. Based on Equation (7), the optimized calibration matrix is then calculated.
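A compact way to realize the weighted criterion for the linearized model is generalized least squares with summed covariances. For a linear model with two independent error vectors, minimizing the weighted sum of squares of both leads to the same parameter estimate as weighting each point by the inverse of the summed covariance. The sketch below assumes that form, the small-angle convention x′ ≈ x + ε × x + kx + t, and hypothetical covariances and points. It is not the authors' Lagrange-multiplier implementation, and all names are hypothetical.

```python
import numpy as np


def skew(v):
    """Matrix [v]x such that skew(v) @ x equals np.cross(v, x)."""
    return np.array([[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]])


def design_block(x0):
    """Jacobian of x0 + eps x x0 + k x0 + dt w.r.t. [dt (3), eps (3), k]."""
    return np.hstack([np.eye(3), -skew(x0), x0.reshape(3, 1)])


def weighted_parameter_errors(x0, y, cov_tracker, cov_scanner, R0):
    """GLS estimate of the 7 parameter errors from n common points.

    x0: (n, 3) scanner points after the initial transformation (rotation R0);
    y: (n, 3) laser tracker coordinates of the same points in the RCS;
    cov_tracker: (n, 3, 3) tracker covariances; cov_scanner: (3, 3) in the SCS."""
    cov_scanner_rcs = R0 @ cov_scanner @ R0.T  # propagate through the initial rotation
    N, u = np.zeros((7, 7)), np.zeros(7)
    for xi, yi, Ci in zip(x0, y, cov_tracker):
        B = design_block(xi)
        P = np.linalg.inv(Ci + cov_scanner_rcs)  # independent errors: covariances add
        N += B.T @ P @ B
        u += B.T @ P @ (yi - xi)
    return np.linalg.solve(N, u)


# Hypothetical, noise-free check (mm, rad): the estimator recovers the true errors
rng = np.random.default_rng(4)
x0 = rng.uniform(-500.0, 500.0, size=(8, 3))
delta_true = np.array([0.05, -0.03, 0.02, 2e-5, -1e-5, 3e-5, 1e-5])
y = x0 + np.array([design_block(p) @ delta_true for p in x0])
cov_tracker = np.array([np.diag([4e-4, 9e-4, 9e-4])] * len(x0))
cov_scanner = np.diag([1e-4, 1e-4, 4e-4])
R0 = np.eye(3)
delta_hat = weighted_parameter_errors(x0, y, cov_tracker, cov_scanner, R0)
print(np.allclose(delta_hat, delta_true, rtol=0.0, atol=1e-9))
```

Points measured with larger combined uncertainty receive smaller weights, so the anisotropic tracker and scanner errors are reflected directly in the estimated corrections.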

4. Construction of Large-Scale Spatial Measurement Field

To effectively control the measurement errors of GCPs when constructing large-scale spatial measurement fields for global calibration under constrained layouts, this section proposes an optimization method for coordinate measurement values that incorporates hierarchical measurement error control, further improving the position measurement accuracy of GCPs across the entire measurement range. The principle of the proposed method is illustrated in Figure 8.

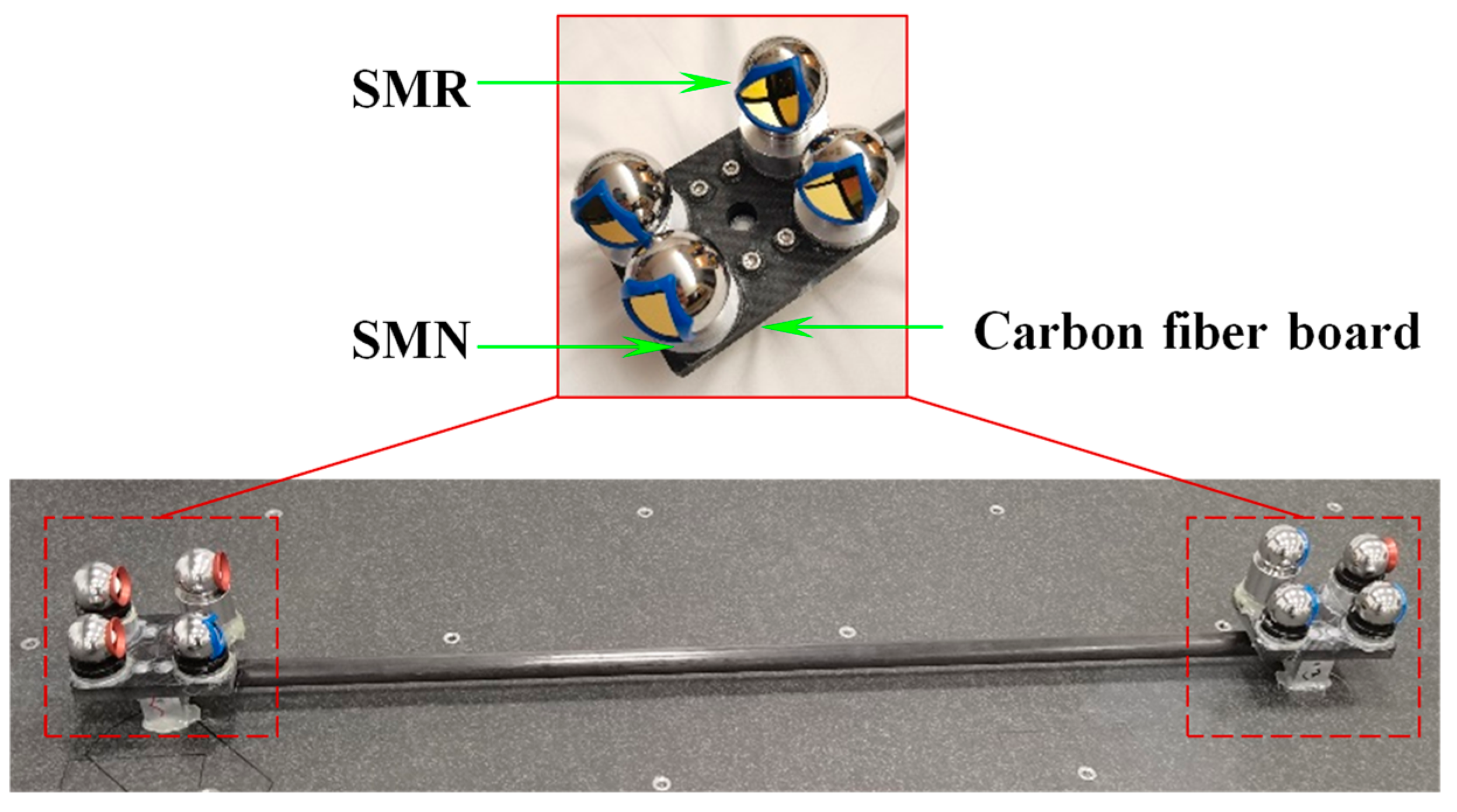

First, to eliminate gross errors in GCP measurements and to monitor the on-site performance of the laser tracker, thereby ensuring measurement consistency, a distance-based model is developed to reject out-of-tolerance measurement points. A multi-dimensional cooperative calibrator (MDCC) based on the four-point non-coplanar principle is introduced; it consists of carbon fiber plates, carbon fiber rods, SMNs, and SMRs, as shown in Figure 9. Because the distance between any two points is independent of the coordinate system, the geometric relationships between the target points on the MDCC are calibrated with a high-precision CMM to construct multi-pose distance constraints. The laser tracker then measures the target points on the MDCC, which serve as GCPs. Comparing the difference between the measured and calibrated distances of two GCPs with the allowable error limit determines whether the GCP measurements are out of tolerance.

Consider two points that define a spatial constraint distance, whose coordinates are measured by the laser tracker at a given station. The following equation can then be established:

where the terms denote the measured spatial constraint distance, the nominal distance between the two points, and the difference between the measured and nominal distances, respectively.

The allowable tolerance for each constraint distance is defined from the measured distance and the required measurement accuracy:

In the on-site data, if the error of a distance on the MDCC exceeds the allowable limit, at least one of the two associated points has a significant error and is unqualified. Conversely, if the distances from a target point to all other target points fall within the specified tolerance, the point is considered a qualified GCP. After the qualified GCPs are obtained, their measurement errors must be further controlled. In this study, the large-scale spatial measurement field, established through multi-station laser tracker measurements combined with multiple MDCCs, is essentially an edge-type (trilateration) measurement network. In the adjustment stage, the accuracy of the initial GCP coordinates plays a decisive role in the accuracy of the final coordinate transformation parameters.
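The tolerance-based screening can be sketched as follows. The exact form of the paper's tolerance equation is not reproduced here; this sketch assumes a tolerance that grows linearly with the measured distance, a + b·d, with hypothetical a and b. Because a single bad point makes every distance involving it fail, the sketch also assumes an iterative rule that removes the point involved in the most violations until all remaining distances pass, so the survivors satisfy the all-distances-within-tolerance criterion. Names, coordinates, and values are hypothetical.

```python
import numpy as np
from itertools import combinations


def violations(X, nominal, a, b):
    """Pairs whose |measured - nominal| distance exceeds the tolerance a + b * d."""
    bad = []
    for (i, j), d0 in nominal.items():
        d = np.linalg.norm(X[i] - X[j])
        if abs(d - d0) > a + b * d:
            bad.append((i, j))
    return bad


def qualified_points(X, nominal, a, b):
    """Drop the point in the most violations until every remaining distance passes."""
    keep = set(range(len(X)))
    while True:
        active = {p: d0 for p, d0 in nominal.items() if p[0] in keep and p[1] in keep}
        bad = violations(X, active, a, b)
        if not bad:
            return sorted(keep)
        counts = {}
        for i, j in bad:
            counts[i] = counts.get(i, 0) + 1
            counts[j] = counts.get(j, 0) + 1
        keep.remove(max(counts, key=counts.get))


# Hypothetical MDCC target coordinates calibrated on a CMM (mm)
cmm = np.array([[0.0, 0.0, 0.0], [800.0, 0.0, 0.0], [0.0, 600.0, 0.0],
                [0.0, 0.0, 500.0], [700.0, 500.0, 400.0]])
nominal = {(i, j): np.linalg.norm(cmm[i] - cmm[j]) for i, j in combinations(range(5), 2)}

perturbed = cmm.copy()
perturbed[3] += np.array([0.1, 0.1, -0.2])  # simulated gross error on point 3
# Tracker frame differs from the CMM frame by a rigid motion; distances are unchanged
c, s = np.cos(0.4), np.sin(0.4)
R = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
measured = perturbed @ R.T + np.array([3000.0, -1200.0, 150.0])

a, b = 0.015, 6e-6  # hypothetical tolerance: 15 um + 6 um per metre
print(violations(measured, nominal, a, b))
print(qualified_points(measured, nominal, a, b))
```

Only the distances are compared, so the check works directly in the tracker frame without first registering it to the CMM frame.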

To enhance the assignment accuracy of the initial coordinates of both the laser tracker stations and the GCPs, this section first employs the 7-parameter Procrustes method to establish the preliminary orientation of multiple stations. Subsequently, a measurement model is developed for each station to obtain the corresponding covariance and Jacobian matrices. Following the matrix-weighted linear minimum variance fusion criterion, the weight matrix is derived to effectively fuse the multi-station measurement data and estimate the 3D coordinates of the GCPs. Finally, based on the common point transformation method, the initial 3D coordinates of the laser tracker stations are determined. Building on this foundation, the high-precision interferometric distance measurements of the laser tracker, combined with multi-domain and multi-pose length constraints, are utilized to further optimize the 3D coordinates of the GCPs. Through this procedure, the impact of angular measurement errors on spatial point localization is mitigated, thereby enabling refined correction of GCP measurement deviations.
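The matrix-weighted fusion step can be sketched as follows. The sketch assumes that each station reports range, azimuth, and elevation with independent errors, that first-order (Jacobian) propagation gives each station's Cartesian covariance, and that the station orientations (rotation and origin in the common frame) are already known from the preliminary Procrustes step. The fused estimate weights each station by the inverse of its covariance. Station poses, the point, the standard deviations, and the function names are hypothetical.

```python
import numpy as np


def rot_z(angle):
    """Rotation about the z-axis (used only to build example station poses)."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def spherical_to_cartesian(rho, az, el):
    """Station-frame coordinates from range, azimuth, and elevation."""
    return np.array([rho * np.cos(el) * np.cos(az),
                     rho * np.cos(el) * np.sin(az),
                     rho * np.sin(el)])


def cartesian_covariance(rho, az, el, sigmas):
    """First-order propagation of (range, azimuth, elevation) variances to x, y, z."""
    ce, se, ca, sa = np.cos(el), np.sin(el), np.cos(az), np.sin(az)
    J = np.array([[ce * ca, -rho * ce * sa, -rho * se * ca],
                  [ce * sa, rho * ce * ca, -rho * se * sa],
                  [se, 0.0, rho * ce]])
    return J @ np.diag(np.square(sigmas)) @ J.T


def fuse(points, covariances):
    """Matrix-weighted linear minimum-variance fusion of several estimates of a point."""
    weights = [np.linalg.inv(C) for C in covariances]
    cov = np.linalg.inv(sum(weights))
    return cov @ sum(W @ p for W, p in zip(weights, points)), cov


# Hypothetical stations (rotation into the common frame, origin) and one GCP, in mm
stations = [(rot_z(0.0), np.array([0.0, 0.0, 0.0])),
            (rot_z(2.5), np.array([5000.0, 0.0, 0.0])),
            (rot_z(-1.8), np.array([2500.0, 4000.0, 500.0]))]
gcp = np.array([2500.0, 1500.0, 800.0])
sigmas = (0.01, 1e-5, 1e-5)  # hypothetical: range (mm), angles (rad)

estimates, covariances = [], []
for R, t in stations:
    local = R.T @ (gcp - t)  # what the station observes in its own frame
    rho = np.linalg.norm(local)
    az, el = np.arctan2(local[1], local[0]), np.arcsin(local[2] / rho)
    estimates.append(R @ spherical_to_cartesian(rho, az, el) + t)
    covariances.append(R @ cartesian_covariance(rho, az, el, sigmas) @ R.T)

fused, fused_cov = fuse(estimates, covariances)
print(np.round(fused, 6), [round(float(np.trace(C)), 6) for C in covariances + [fused_cov]])
```

Because angular errors scale with range, each station is most uncertain perpendicular to its line of sight; the matrix weights exploit the differing sight lines, so the fused covariance is smaller than that of any single station.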

Consider the station coordinates of the k-th laser tracker and the coordinates of the i-th GCP. Based on the distance formula, the corresponding error equation is established as follows:

where the observation is the laser interferometric length measured by the laser tracker at the k-th station to the i-th common point, and the residual is the corresponding error.

By linearizing Equation (38), the following expression is obtained:

where

and

represent the correction values for the measurement station center coordinates and the GCP coordinates, respectively;

is the oblique distance computed from the initial coordinate values, and

,

, and

are the corresponding coefficients.

Based on the above analysis,

n constraint equations can be formulated under a single laser tracker measurement station. Therefore, for

m independent measurement stations, a total of

m ×

n equations can be established. Equation (39) can thus be rewritten in matrix form as follows:

where

,

; Vector

consists of the correction terms for both the measurement station coordinates and the measured coordinates of the common points, while

denotes the corresponding coefficient matrix.

In Equation (40), the unknown parameters to be estimated are the 3D correction values of both the GCPs and the laser tracker measurement stations. The total number of unknown parameters is 3m + 3n, and the number of equations in the error equation system is

m ×

n. Due to the rank deficiency of the system matrix

, a unique solution can be obtained by introducing centroid-based reference constraints in accordance with the principles of rank-deficient network adjustment. However, this approach may lead to uneven distribution of point accuracy across the measurement field. To address this issue, based on the previously established error model, in this section, by combining the multi-region and multi-attitude length constraint conditions in space, an optimization model for coordinate measurement values based on spatial multi-attitude length constraints is established:

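$$a_{pq}(\delta x_q - \delta x_p) + b_{pq}(\delta y_q - \delta y_p) + c_{pq}(\delta z_q - \delta z_p) + w_{pq} = 0 \tag{41}$$

In Equation (41):

- the vectors $\delta\mathbf{x}_p$ and $\delta\mathbf{x}_q$ represent the coordinate corrections of the constrained points $p$ and $q$;
- $a_{pq}$, $b_{pq}$, and $c_{pq}$ denote the associated coefficients, computed from the initial coordinates in the same way as in Equation (39);
- $w_{pq} = S_{pq}^0 - D_{pq}$ is the constant term, where $D_{pq}$ is the calibrated length and $S_{pq}^0$ is the length computed from the initial coordinates.

Stacking all length constraints gives $\mathbf{C}\hat{\mathbf{x}} + \mathbf{W}_x = \mathbf{0}$. By combining Equations (40) and (41), the following system can be obtained:

$$\begin{cases} \mathbf{V} = \mathbf{A}\hat{\mathbf{x}} - \mathbf{l} \\ \mathbf{C}\hat{\mathbf{x}} + \mathbf{W}_x = \mathbf{0} \end{cases} \tag{42}$$

where $\mathbf{V}$, $\mathbf{A}$, $\hat{\mathbf{x}}$, and $\mathbf{l}$ are as defined for the error equations, and $\mathbf{C}$ is a computationally large sparse matrix composed of first-order derivative terms.

Under the constrained optimization method, the objective function is given by:

$$\Phi = \mathbf{V}^{\mathrm{T}}\mathbf{P}\mathbf{V} + 2\mathbf{K}^{\mathrm{T}}(\mathbf{C}\hat{\mathbf{x}} + \mathbf{W}_x) \rightarrow \min \tag{43}$$

where $\mathbf{P}$ is the weight matrix of the distance observations and $\mathbf{K}$ is the vector of association coefficients (Lagrange multipliers).

Setting $\partial\Phi/\partial\hat{\mathbf{x}} = \mathbf{0}$ and enforcing the constraints, the correction values for coordinate optimization are finally obtained as:

$$\begin{bmatrix} \hat{\mathbf{x}} \\ \mathbf{K} \end{bmatrix} = \mathbf{M}^{-1}\begin{bmatrix} \mathbf{A}^{\mathrm{T}}\mathbf{P}\mathbf{l} \\ -\mathbf{W}_x \end{bmatrix} \tag{44}$$

where $\mathbf{M}$ is the invertible matrix $\mathbf{M} = \begin{bmatrix} \mathbf{A}^{\mathrm{T}}\mathbf{P}\mathbf{A} & \mathbf{C}^{\mathrm{T}} \\ \mathbf{C} & \mathbf{0} \end{bmatrix}$.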
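The adjustment model above can be exercised with the following sketch. At each Gauss-Newton iteration it linearizes the distance equations, appends the length constraints, and solves the bordered normal equations with Lagrange multipliers. It is an illustration under assumptions that the text does not state:

- unit weights ($\mathbf{P} = \mathbf{I}$);
- a datum fixed by six inner (free-network) constraints appended to the constraint matrix, so that the bordered matrix is invertible;
- the hypothetical function name `constrained_distance_adjustment`.

```python
import numpy as np


def constrained_distance_adjustment(stations0, points0, L, lengths, n_iter=5):
    """Gauss-Newton distance adjustment with hard length constraints (sketch).

    stations0 : (m, 3) initial station coordinates X_k^0
    points0   : (n, 3) initial GCP coordinates x_i^0
    L         : (m, n) interferometric distances L_ki
    lengths   : iterable of (p, q, D_pq), calibrated lengths between GCPs p and q
    Returns refined (stations, points).
    """
    m, n = len(stations0), len(points0)
    X = np.vstack([stations0, points0]).astype(float)  # rows: m stations, then n GCPs
    u = 3 * (m + n)

    def blk(j):  # columns holding the three corrections of position j
        return slice(3 * j, 3 * j + 3)

    for _ in range(n_iter):
        # Error equations V = A x_hat - l, one row per (station k, GCP i)
        A = np.zeros((m * n, u))
        l = np.zeros(m * n)
        for k in range(m):
            for i in range(n):
                d = X[m + i] - X[k]
                S0 = np.linalg.norm(d)
                A[k * n + i, blk(m + i)] = d / S0  # (a_ki, b_ki, c_ki)
                A[k * n + i, blk(k)] = -d / S0
                l[k * n + i] = L[k, i] - S0
        # Assumed datum: inner constraints G^T x_hat = 0 (3 translations, 3 rotations)
        G = np.zeros((u, 6))
        for j, (x, y, z) in enumerate(X - X.mean(axis=0)):
            G[blk(j), :3] = np.eye(3)
            G[blk(j), 3:] = [[0.0, z, -y], [-z, 0.0, x], [y, -x, 0.0]]
        rows, w = [G.T], [np.zeros(6)]
        # Linearized length constraints c_pq (dx_q - dx_p) + (S0_pq - D_pq) = 0
        for p, q, D in lengths:
            d = X[m + q] - X[m + p]
            S0 = np.linalg.norm(d)
            row = np.zeros((1, u))
            row[0, blk(m + q)] = d / S0
            row[0, blk(m + p)] = -d / S0
            rows.append(row)
            w.append([S0 - D])
        C, Wx = np.vstack(rows), np.concatenate(w)
        # Bordered system [[N, C^T], [C, 0]] [x_hat; K] = [A^T l; -Wx] with P = I
        s = C.shape[0]
        M = np.block([[A.T @ A, C.T], [C, np.zeros((s, s))]])
        sol = np.linalg.solve(M, np.concatenate([A.T @ l, -Wx]))
        X += sol[:u].reshape(-1, 3)
    return X[:m], X[m:]
```

The distance network is defined only up to a rigid motion, so the returned coordinates sit in the datum implied by the initial values. A final rigid or similarity transformation onto the GCS definition would still be required.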

5. Experiments and Discussion

5.1. System Construction

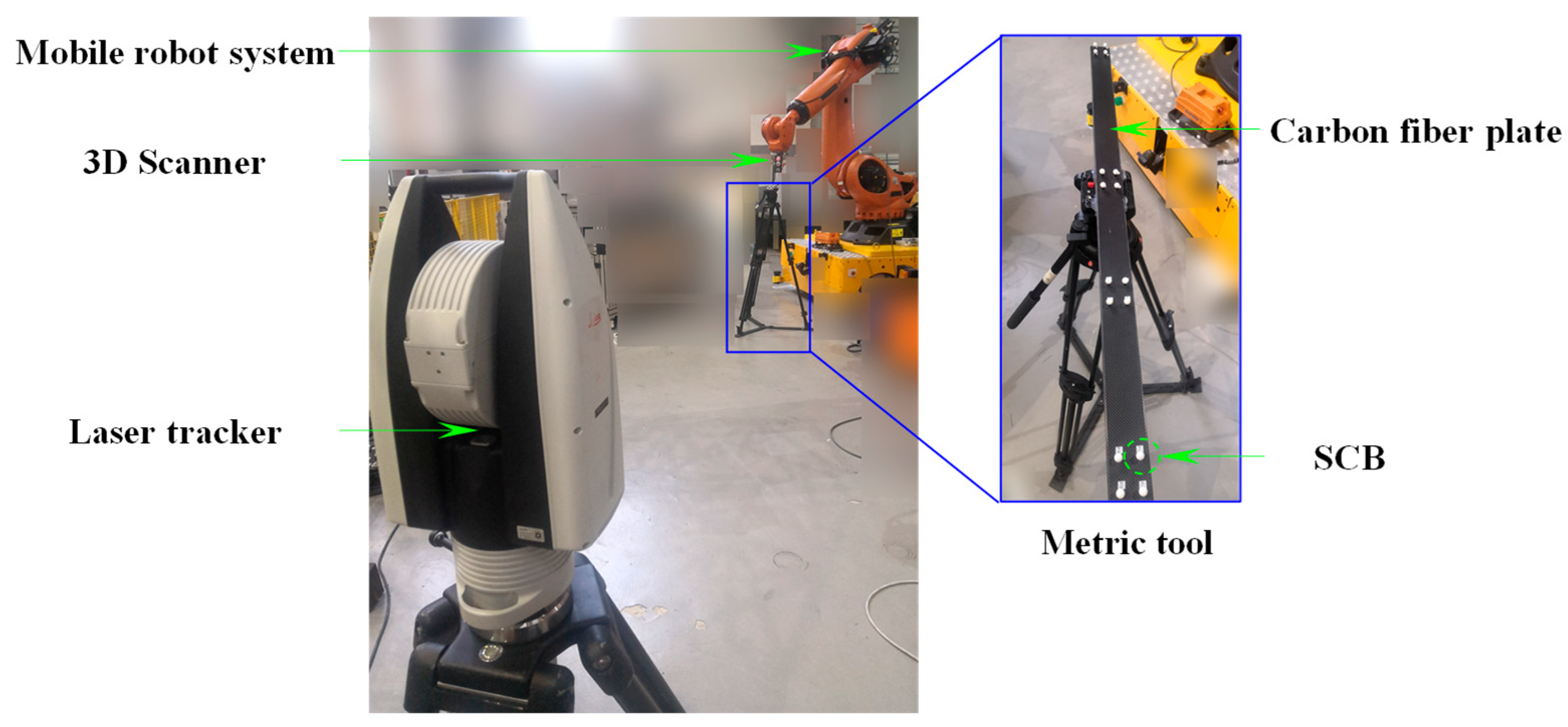

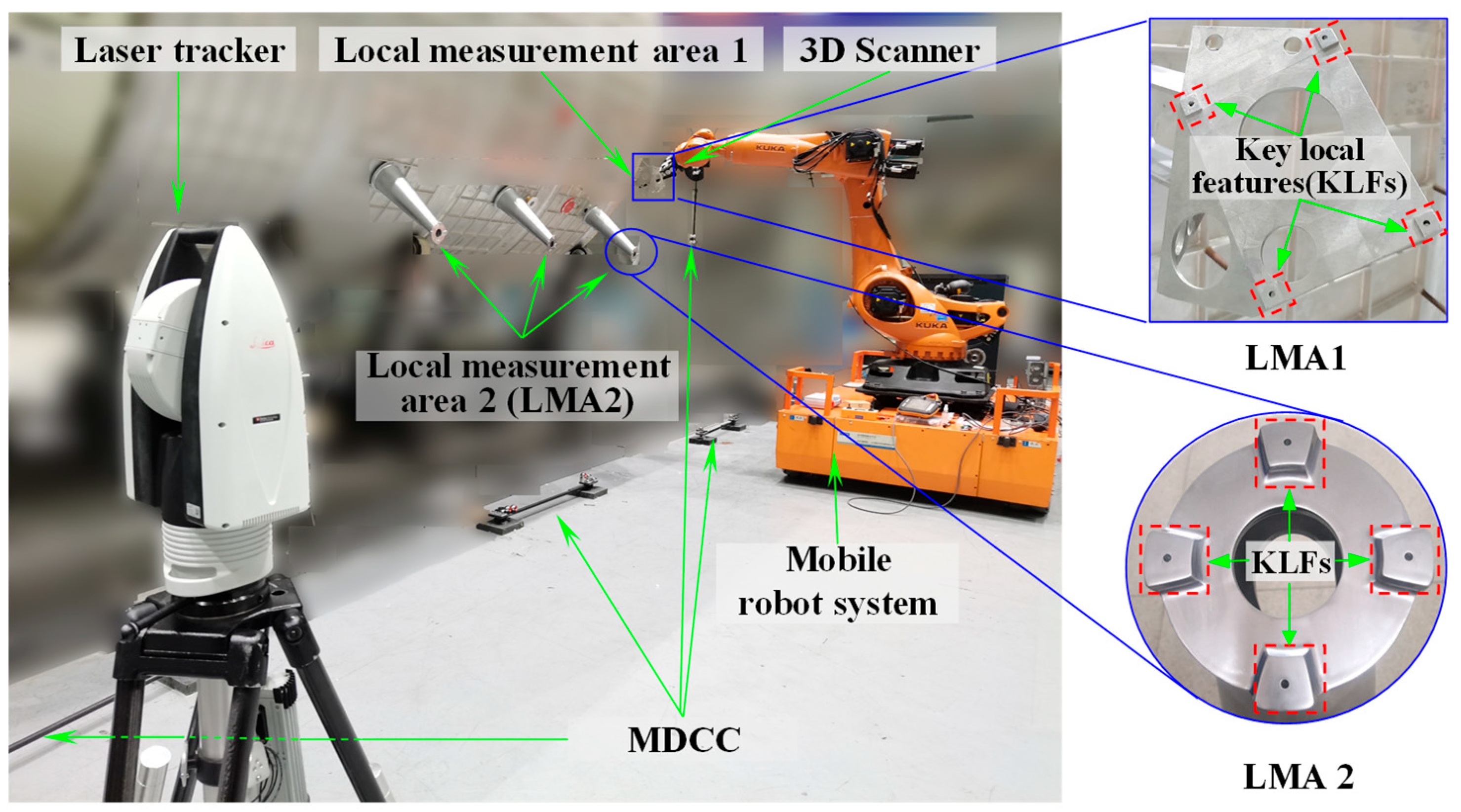

To validate the effectiveness of the proposed calibration method, a hybrid measurement system integrating global laser tracking and high-precision local scanning was constructed, and corresponding calibration experiments were conducted. As illustrated in Figure 10, the setup consists of a laser tracker, a structured-light 3D scanner, and a mobile robotic platform that integrates an industrial robot with an AGV.

The main structure of the MNCT is made of dimensionally stable carbon fiber. Eight SMNs for seating 0.5-inch target spheres (SCBs and SMRs) are mounted on its surface, with a repeatability of up to 0.001 mm. The laser tracker observes 0.5-inch SMRs, whose spherical deviation is approximately 0.002 mm. The 3D scanner observes 0.5-inch SCBs, whose spherical deviation is approximately 0.0015 mm. Because both target types seat in the same nests and deviate so little from ideal spheres, their centers are co-located with high spatial accuracy. In addition, several 0.5-inch SMNs were arranged on the surface of the 3D scanner to hold 0.5-inch SMRs, enabling the laser tracker to accurately locate and track the position and orientation of the 3D scanner.

The binocular structured-light 3D scanner used in this study was an LMI Gocator3 series sensor (LMI Technologies Inc., Burnaby, BC, Canada). It features:

- a resolution of 5 megapixels;
- an X-Y spatial resolution of 0.025 mm;
- a measurement depth range of 87 mm;
- a field of view (FOV) of 27 mm × 45 mm;
- a VDI/VDE-verified accuracy of 0.025 mm.

The laser tracker was a Leica AT960-MR (Leica Geosystems AG, Heerbrugg, Switzerland). It offers:

- a full 360° horizontal rotation and a ±145° vertical tilt range;
- an angular resolution of 0.07 arcseconds and an angular accuracy of 1.7 arcseconds;
- a maximum measurement range of 80 m.

Its full-range measurement accuracy is specified as ±(15 µm + 6 µm/m × L), i.e., ±(0.015 + 0.006 × L) mm, where L is the measured distance in meters.

Prior to the experiments, the spatial relationships among the target sphere centers on the MDCCs and the MNCT were calibrated with a Zeiss PRISMO coordinate measuring machine (Carl Zeiss Industrielle Messtechnik GmbH, Oberkochen, Germany) to ensure high measurement accuracy and experimental reliability. For the typical inter-sphere distances in our experiments, the resulting measurement error is less than 0.0015 mm. A conservative expanded uncertainty of ±0.002 mm (k = 2) was determined by combining a Type A evaluation from repeated measurements with Type B estimates derived from instrument specifications and environmental stability. This uncertainty provides a reliable reference for evaluating the calibration accuracy of the developed method. All experiments were performed under controlled laboratory conditions, with the ambient temperature maintained within 22–23 °C and the relative humidity kept at 55–60%.
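As a quick check of the tracker specification above, note that the length MPE grows linearly with range. At 14 m, the span of the measurement field used later, it is 15 µm + 6 µm/m × 14 m = 99 µm. The sketch below evaluates this expression. It also combines standard uncertainties by root-sum-square with a coverage factor, in the GUM style. The function names and the component values in the example are hypothetical and are not the paper's uncertainty budget.

```python
import math


def tracker_mpe_mm(distance_m, a_um=15.0, b_um_per_m=6.0):
    """Length MPE +/-(a + b*L) of the tracker, returned in mm for L given in metres."""
    return (a_um + b_um_per_m * distance_m) / 1000.0


def expanded_uncertainty(type_a, type_b, k=2.0):
    """Root-sum-square of Type A and Type B standard uncertainties, times coverage factor k."""
    u_c = math.sqrt(sum(u ** 2 for u in type_a) + sum(u ** 2 for u in type_b))
    return k * u_c


print(f"MPE at 14 m: {tracker_mpe_mm(14.0):.3f} mm")  # 0.099 mm
# Hypothetical standard uncertainties in mm, for illustration only
print(f"U (k=2): {expanded_uncertainty([0.0003], [0.0004]):.4f} mm")  # 0.0010 mm
```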

5.2. Extrinsic Parameter Calibration Experiments

First, the relative poses of the laser tracker, the 3D scanner, and the MNCT were varied to obtain several distinct measurement positions for the two instruments, while the MNCT itself was kept fixed. At each position, both instruments repeatedly measured their respective observation targets on the MNCT, thereby generating redundant datasets. The scanned spheres were first denoised and their centers estimated (Section 3.2). The optimization procedures in Sections 3.3 and 3.4 were then applied to compute refined and corrected coordinates of the LCPs acquired by the 3D scanner and the laser tracker. The results are reported in Tables 1 and 2.
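The center estimation of Section 3.2 is not restated in this part of the paper. As a generic stand-in, the sketch below shows one common approach: an algebraic least-squares sphere fit, based on $\lVert\mathbf{p}\rVert^2 = 2\mathbf{c}^{\mathrm{T}}\mathbf{p} + (r^2 - \lVert\mathbf{c}\rVert^2)$, wrapped in iterative outlier rejection with a median-based scale estimate. The function names, the threshold `k`, and the rejection scheme are assumptions, not the paper's method.

```python
import numpy as np


def fit_sphere(points):
    """Algebraic least-squares sphere fit; returns (center, radius)."""
    P = np.asarray(points, float)
    A = np.column_stack([2 * P, np.ones(len(P))])  # unknowns: c (3), r^2 - |c|^2
    b = (P ** 2).sum(axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    c = sol[:3]
    return c, float(np.sqrt(sol[3] + c @ c))


def robust_sphere_center(points, k=3.0, n_iter=10):
    """Refit after discarding points whose radial residual exceeds k * sigma."""
    P = np.asarray(points, float)
    keep = np.ones(len(P), dtype=bool)
    for _ in range(n_iter):
        c, r = fit_sphere(P[keep])
        res = np.abs(np.linalg.norm(P - c, axis=1) - r)
        sigma = 1.4826 * np.median(res[keep])  # scale from median absolute residual
        new_keep = res <= k * sigma
        if sigma == 0 or new_keep.sum() < 4 or np.array_equal(new_keep, keep):
            break
        keep = new_keep
    return fit_sphere(P[keep])
```

The algebraic fit is linear and needs no starting values. The design tradeoff is a slight bias on partial caps, which a geometric (orthogonal-distance) refinement could remove if required.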

In addition, the angle-constrained coordinate optimization method reported in reference [25] was applied to derive optimal corrected coordinates for the scanning pose reference points, with the results summarized in Table 3.

Based on the above data and the correction model for the reference transformation parameters, the optimized extrinsic calibration matrix is obtained as follows:

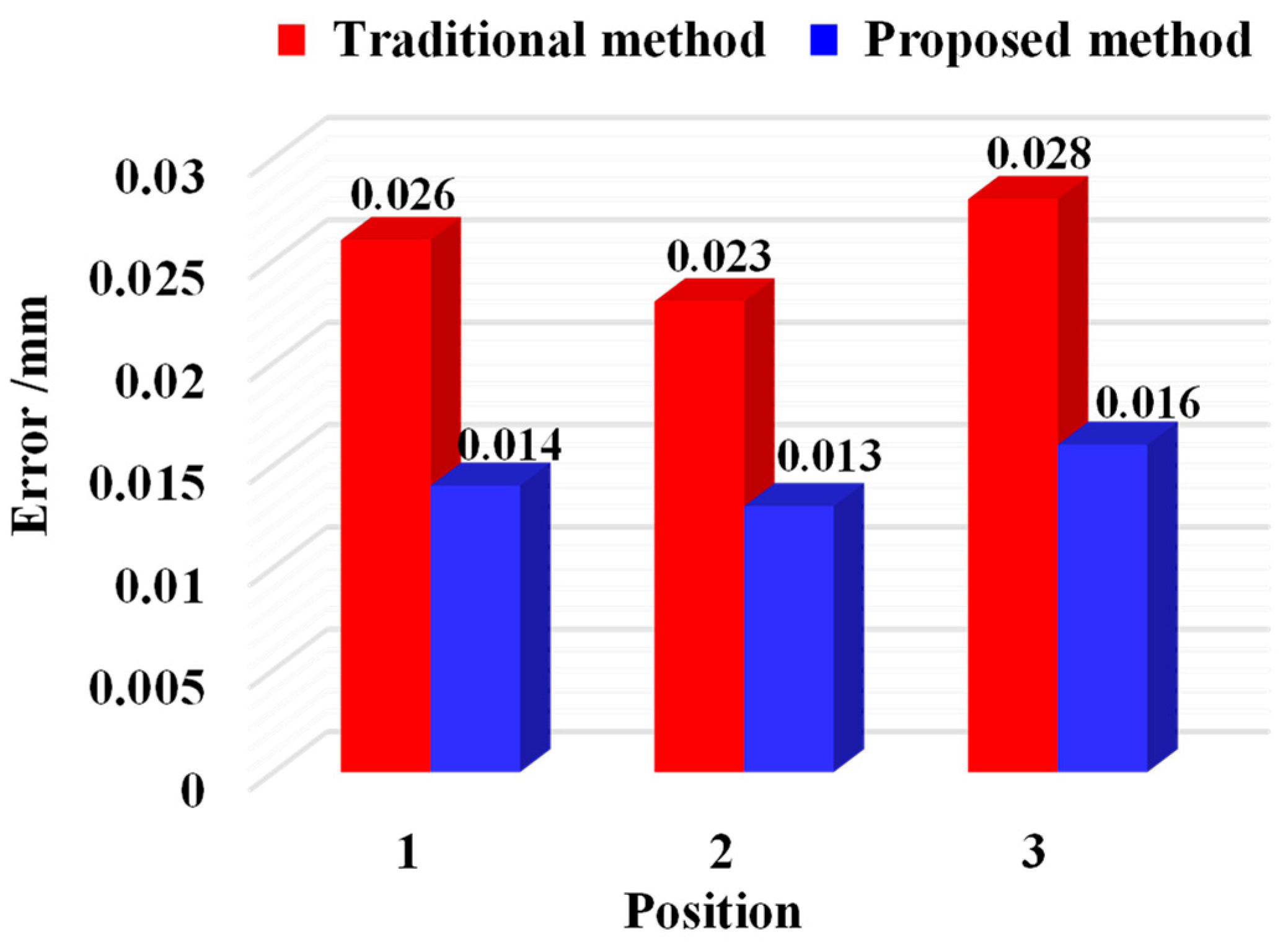

To validate the calibration accuracy, the dispersion of the 3D coordinates of the local common points was calculated using the calibration matrix obtained before and after optimization. In other words, the extrinsic calibration accuracy is quantified by the root-mean-square error (RMSE) of the distance between the two corresponding determinations of each point. Both the traditional indirect calibration method and the proposed method were used to calculate the extrinsic calibration errors, and the mean calibration errors at multiple positions are shown in Figure 11. The conventional approach yielded mean extrinsic calibration errors of 0.026, 0.023, and 0.028 mm at Positions 1–3. The distance-constrained method reduced these errors to 0.014, 0.013, and 0.016 mm, respectively.

Across all positions, the proposed distance-constrained method kept the mean calibration error at or below 0.016 mm, roughly 43–46% lower than the conventional indirect calibration at the same positions, whose mean errors reached up to 0.028 mm. This confirms the effectiveness of the proposed method.
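The RMSE criterion described above can be sketched as follows. The sketch assumes that the LCPs are available as corresponding point sets in the scanner and tracker frames. The rigid transform is estimated here with the standard SVD (Kabsch) solution, which is not necessarily the calibration model used in the paper. `rigid_fit` and `calibration_rmse` are hypothetical names.

```python
import numpy as np


def rigid_fit(src, dst):
    """Least-squares rigid transform dst ~= R src + t (SVD/Kabsch), as a 4x4 matrix."""
    src, dst = np.asarray(src, float), np.asarray(dst, float)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    U, _, Vt = np.linalg.svd((dst - mu_d).T @ (src - mu_s))
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])  # keep a proper rotation
    T = np.eye(4)
    T[:3, :3] = U @ D @ Vt
    T[:3, 3] = mu_d - T[:3, :3] @ mu_s
    return T


def calibration_rmse(T, pts_scanner, pts_tracker):
    """RMS of the distances between scanner points mapped by T and tracker points."""
    mapped = np.asarray(pts_scanner, float) @ T[:3, :3].T + T[:3, 3]
    d = np.linalg.norm(mapped - np.asarray(pts_tracker, float), axis=1)
    return float(np.sqrt(np.mean(d ** 2)))
```

Evaluating `calibration_rmse` once with the initial matrix and once with the optimized matrix, on the same point pairs, gives the before/after comparison described in the text.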

5.3. Experiments on the Construction of Large-Scale Spatial Measurement Field

Following the method outlined in

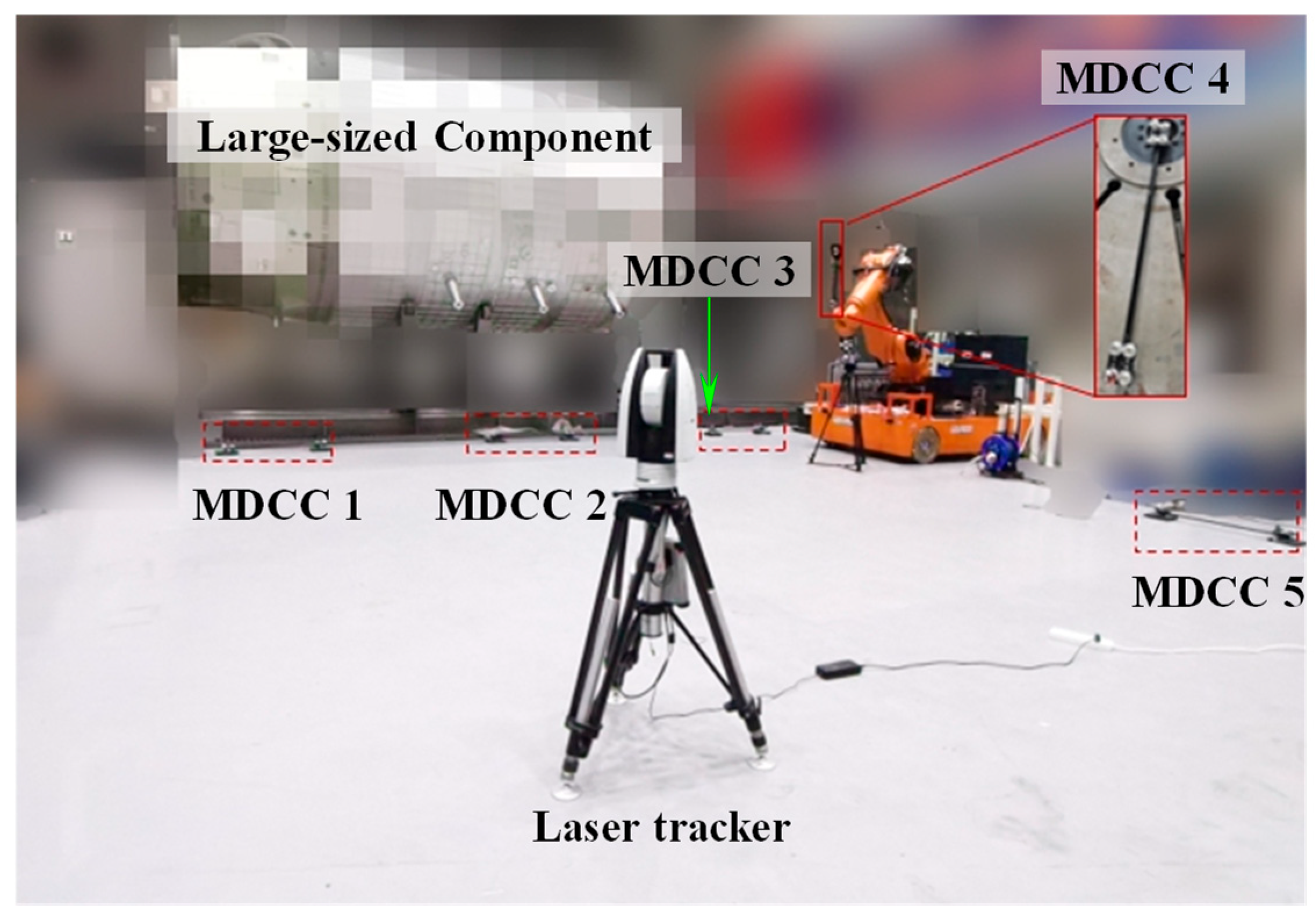

Section 4, a high-precision spatial measurement field was established over a 14 × 6 × 3 m volume, serving as a global reference framework for precise measurements. The experimental site configuration is illustrated in

Figure 12. For accurate global calibration, several MDCCs were developed specifically. As shown in

Figure 13, the MDCC is primarily constructed from carbon fiber and has an approximate length of 1000 mm. Eight 1.5-inch SMNs for 1.5-inch SMRs are rigidly attached along its surface. Before conducting the experiments, the spatial arrangements of all SMR centers were accurately calibrated with a high-precision CMM to guarantee reliable and precise experimental data.

First, five MDCCs were deployed across the measurement site, with a maximum elevation difference of approximately 3 m between common points. Four measurement stations were set up at different locations, and at each station the laser tracker measured the GCPs on the MDCCs. The coordinate system of the first station served as the GCS. The coordinate systems of the remaining three stations were treated as local coordinate systems (LCS1–LCS3) for subsequent measurements.

Subsequently, permissible error thresholds were defined based on the measured distance between the laser tracker and the common points, and outliers were identified and excluded on site. After the gross errors had been removed and the qualified data retained, the measured coordinates of the common points in the three LCSs were transformed into the GCS. The redundantly measured GCP coordinates were then optimized using the method proposed in this study. Two representative GCPs were selected on each MDCC. Applying the method presented in Section 4, their optimized coordinates and associated correction values in the GCS and LCS1 were determined, as summarized in Tables 4 and 5.

Currently, the accuracy of large-scale spatial measurement networks is often evaluated by computing the station transfer errors of GCPs [4]. Accordingly, three methods were applied: the best-fit algorithm in Spatial Analyzer 2023.1 [29,30], the single-distance constraint method, and the proposed method. The maximum registration errors (MPE) and mean registration errors (ME) of the selected GCPs obtained with these methods are listed in Table 6.

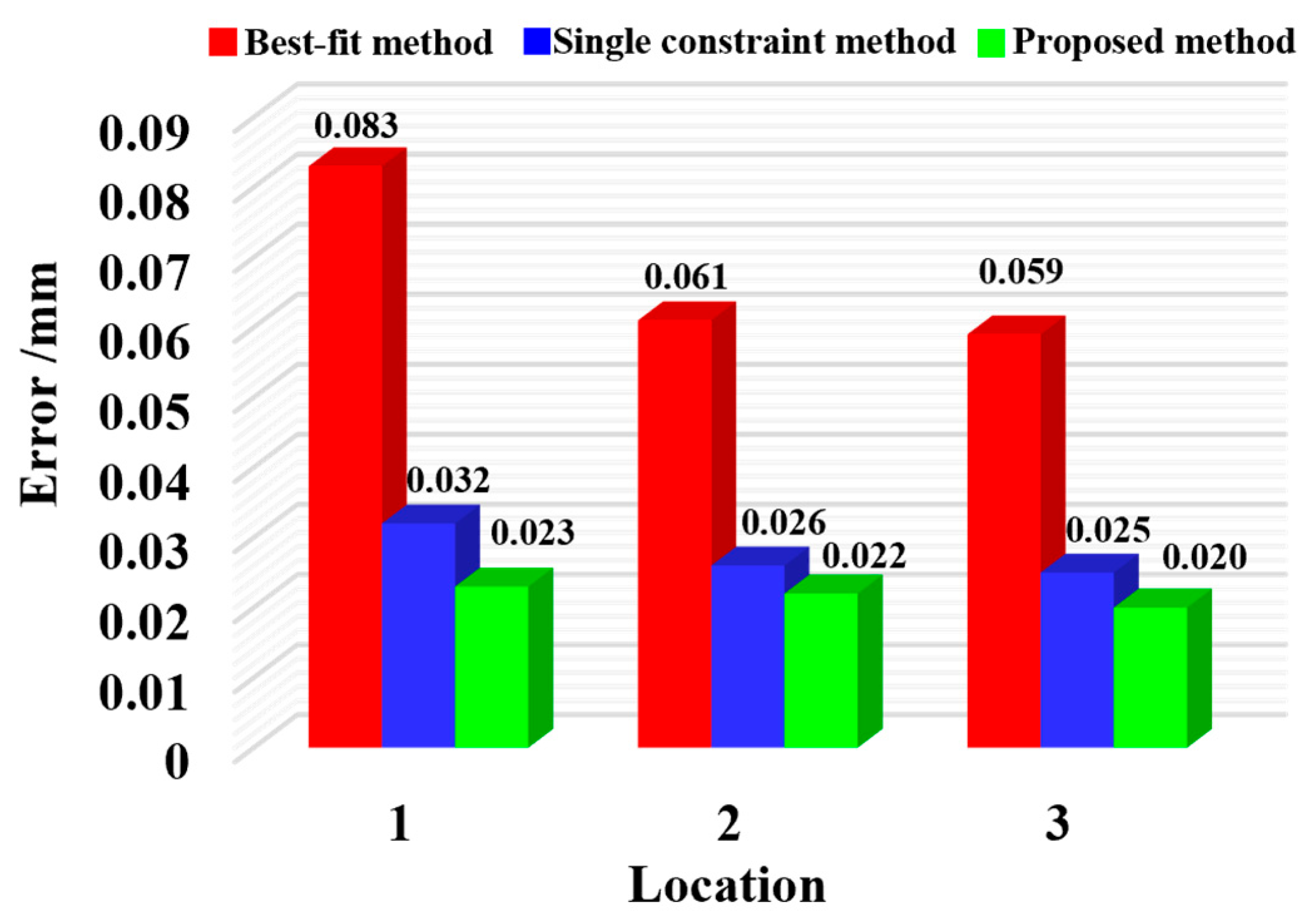

In the GCS, the average station transfer error of the GCPs was 0.078 mm with the best-fit method. The single-distance constraint method reduced this error to 0.036 mm, and the proposed method achieved an even lower average error of 0.033 mm, demonstrating improved accuracy in coordinate transformation. In addition, the average station transfer errors of all GCPs at each local measurement station were evaluated, as illustrated in Figure 14. With the single-distance constraint method, the errors at local stations 1 through 3 decreased from 0.083, 0.061, and 0.059 mm to 0.032, 0.026, and 0.025 mm, respectively. The method proposed in this study reduced them further to 0.023, 0.022, and 0.020 mm. Collectively, these results demonstrate the effectiveness of the proposed calibration method.
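A station transfer error can be computed as the distance between a GCP's coordinates transformed from a local station into the GCS and its reference coordinates in the GCS. The sketch below assumes that the station's rotation `R` and translation `t` have already been estimated. The function names are hypothetical. A helper for relative reduction is included. For instance, the GCS average falling from 0.078 mm to 0.033 mm corresponds to a reduction of about 58%.

```python
import numpy as np


def station_transfer_errors(local_pts, global_pts, R, t):
    """Per-GCP station transfer error ||R x_L + t - x_G|| with its maximum and mean."""
    mapped = np.asarray(local_pts, float) @ np.asarray(R, float).T + np.asarray(t, float)
    e = np.linalg.norm(mapped - np.asarray(global_pts, float), axis=1)
    return e, float(e.max()), float(e.mean())


def relative_reduction(before, after):
    """Fractional error reduction, e.g. 0.5 for a halving."""
    return (before - after) / before
```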

5.4. Accuracy Verification and Analysis of the RLSHS

To ensure reliable measurement performance, a standardized and widely accepted accuracy verification method is essential for evaluating the measurement accuracy of RLSHS. There are generally two approaches for verifying measurement system accuracy. One involves using a higher-precision metrology device to evaluate the test results. However, this method has notable drawbacks: such devices are often costly, require experienced personnel for operation and maintenance, and demand significant time and resources for setup and calibration. Moreover, discrepancies in accuracy, measurement range, and system characteristics between different instruments can introduce uncertainties and compromise the consistency of the evaluation results. An alternative and more cost-effective method for accuracy evaluation involves using standard artifacts. In this method, the measurement system follows a predefined procedure to measure the calibrated reference object, and the results are then compared with the known pre-calibration values to assess measurement accuracy. Due to its practicality and lower cost, this method is more widely used for evaluating the accuracy of measurement systems.

This study adopts the sphere spacing error (SSE) evaluation approach, measuring a calibrated metric tool in accordance with the VDI/VDE 2634 Part 3 accuracy verification guideline [31]. The SSE is the deviation between the measured inter-sphere distance and the reference distance obtained from calibration. It is commonly used as a primary indicator of measurement system accuracy and provides a reliable reference for ensuring overall measurement quality. Considering manufacturing complexity and cost, a customized metric tool was designed in this study. As illustrated in Figure 15, it consists of multiple spheres, corresponding holders, and a carbon fiber plate. The inter-sphere distances were calibrated with a high-accuracy coordinate measuring machine.

The accuracy validation procedure for the laser scanning hybrid measurement method is as follows. First, the calibrated reference artifact, mounted on an adjustable tripod, is positioned sequentially at multiple heights and orientations throughout the measurement volume while the laser tracker remains fixed. The artifact placements are configured to cover the full measurement space. At each placement, the mobile robot system transports the 3D scanner to various spatial locations, where the scanner captures the metric tool. Because the sphere spacing exceeds the scanner's field of view, a multi-view strategy is used to reconstruct the sphere centers. Meanwhile, the laser tracker measures the reference points on the scanner to determine its pose. All reconstructed sphere centers are transformed from the SCS to the RCS and then into the global coordinate frame using the established large-scale spatial measurement field. Finally, the inter-sphere distance errors are computed for each placement to evaluate the system's measurement accuracy. The accuracy verification setup is illustrated in Figure 16.
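The per-placement error computation can be sketched as follows. The sketch assumes that the sphere centers have already been transformed into the global frame. It also assumes that the reported MPE and ME are the maximum and the mean of the absolute sphere spacing errors over all placements. The function name is hypothetical.

```python
import numpy as np


def sphere_spacing_stats(centers_a, centers_b, reference_length):
    """SSE for each artifact placement and summary statistics.

    centers_a, centers_b : (k, 3) global coordinates of the two sphere centres,
                           one row per placement
    reference_length     : CMM-calibrated distance between the two spheres
    Returns (signed errors, max |error|, mean |error|).
    """
    a = np.asarray(centers_a, float)
    b = np.asarray(centers_b, float)
    d = np.linalg.norm(a - b, axis=1)
    err = d - reference_length
    return err, float(np.abs(err).max()), float(np.abs(err).mean())
```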

Four spheres were selected from the metric tool as representatives, with calibrated inter-sphere distances of D1 = 851.476 mm and D2 = 1007.418 mm.

Table 7 presents the measured inter-sphere distances along with their corresponding errors. For distance D1, the maximum error (MPE) and mean error (ME) were 0.113 mm and 0.108 mm, respectively, while for distance D2, these values were 0.117 mm and 0.112 mm. All measured errors are below the 0.2 mm threshold, indicating that the RLSHS developed using the proposed method meets the required accuracy standards.

5.5. Experimental Study and Analysis of On-Site Measurement for Key Geometrical Features of Large-Sized Components

To validate the effectiveness and applicability of the proposed method, the RLSHS was deployed on site to measure key geometric features of large-sized components. Measurements were conducted on four key geometric features located in two typical local measurement areas (LMAs), as illustrated in Figure 17.

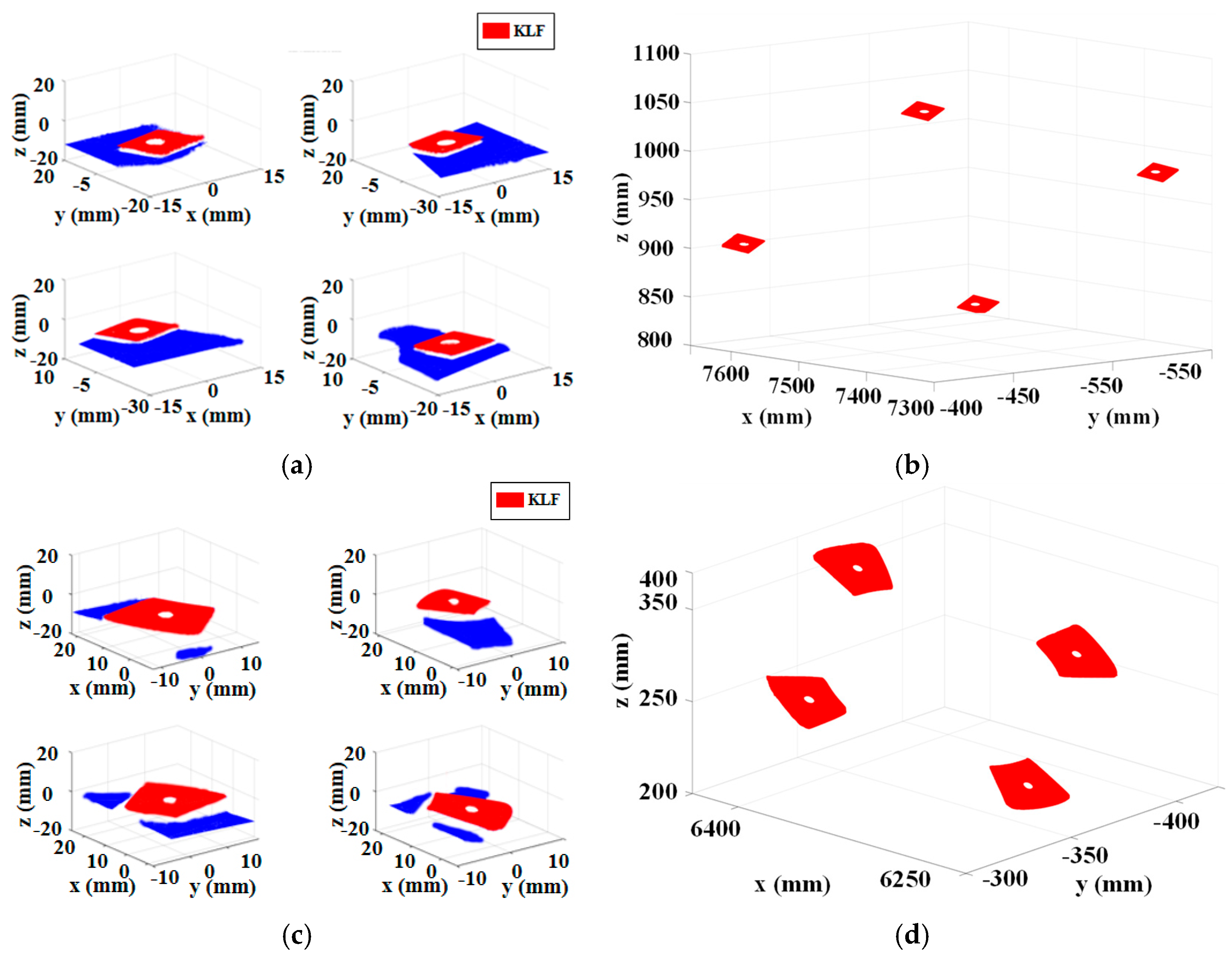

At the start of measurement, the mobile platform transports the industrial robot to a position near the target features. The scanner pose is then established through laser tracker measurements of the SMRs fixed on the scanner. Next, the robot positions the 3D scanner at a series of predefined locations. At each location, the scanner captures the target area to generate the initial point cloud of the key geometric features, as illustrated in Figure 18a,c.

Point cloud preprocessing was then performed to eliminate noise and extract the target region, as illustrated in Figure 18b,d. The center coordinates of the holes in the SCS were subsequently derived using the reconstruction method outlined in Section 3.2. Meanwhile, the laser tracker measured the reference observation points mounted on the 3D scanner to determine its current pose. Based on this pose, the computed hole centers were transformed from the SCS to the RCS. After the KLFs within the current workspace had been measured, the mobile robotic system advanced to the next station. The same procedure was repeated until all target features had been inspected.
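The reconstruction method of Section 3.2 is not restated here. The following sketch therefore illustrates one common way to obtain a hole center from edge points:

1. fit the hole plane by SVD;
2. project the points into that plane;
3. apply an algebraic (Kåsa) circle fit;
4. map the center back to 3D.

The edge-point extraction step, the function name, and the choice of fitting method are assumptions.

```python
import numpy as np


def hole_center(edge_pts):
    """Plane fit by SVD, algebraic circle fit in-plane; returns (center, radius, normal)."""
    P = np.asarray(edge_pts, float)
    mu = P.mean(axis=0)
    _, _, Vt = np.linalg.svd(P - mu)
    e1, e2, normal = Vt[0], Vt[1], Vt[2]  # in-plane axes and plane normal
    uv = np.column_stack([(P - mu) @ e1, (P - mu) @ e2])
    # Kasa fit: u^2 + v^2 = 2*cu*u + 2*cv*v + d, with radius^2 = d + cu^2 + cv^2
    A = np.column_stack([2 * uv, np.ones(len(uv))])
    b = (uv ** 2).sum(axis=1)
    (cu, cv, d), *_ = np.linalg.lstsq(A, b, rcond=None)
    radius = float(np.sqrt(d + cu ** 2 + cv ** 2))
    return mu + cu * e1 + cv * e2, radius, normal
```

The resulting SCS center would then be mapped to the RCS with the scanner pose measured by the laser tracker, as described in the text.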

Finally, the 3D global coordinates of the hole centers of all KLFs were obtained, as summarized in Table 8. The proposed RLSHS completed the inspection of the KLFs in approximately 8 min, whereas manual measurement required about 30 min for the same task, a reduction of roughly 73% in inspection time. These comparative results indicate that the proposed measurement system and methodology clearly outperform traditional manual measurement in time efficiency and measurement stability. Moreover, our experimental observations indicate that the system remains robust under the moderate thermal fluctuations (approximately ±5–10 °C) typically encountered in industrial workshops. Overall, the developed method demonstrates strong applicability and provides reliable and effective measurement data to support the high-quality manufacturing of large-sized components.

6. Conclusions

In this study, a robot-assisted laser scanning hybrid measurement method was developed to achieve accurate and automated 3D shape measurement of large-sized components with numerous small key local features. The proposed method integrates a laser tracker, a 3D scanner, and a mobile robotic system, thereby enabling flexible large-scale measurements. An accuracy-enhanced calibration strategy was introduced, including an accurate extrinsic parameter calibration method based on robust target sphere center estimation and distance-constrained optimization of local common points, as well as an improved global calibration method incorporating coordinate measurement value optimization and hierarchical error control. The accuracy validation experiments demonstrated that, over a measurement span of 14 m, the maximum error (MPE) reached 0.117 mm, while the mean error (ME) was 0.112 mm, thereby verifying the calibration method’s high precision. Moreover, large-scale scanning experiments conducted on representative local measurement areas of a complex large-sized component further verified the method’s applicability and its ability to provide reliable measurement data for high-quality manufacturing of large-sized components.

Future work will focus on developing advanced error modeling and compensation strategies to address environmental influences and robot-induced disturbances, such as thermal drift, vibration, and long-term stability issues, while also conducting a detailed analysis of the spatial distribution of measurement errors, including potential accuracy attenuation in edge regions. In addition, intelligent measurement planning methods, such as adaptive scanning and path optimization, will be further investigated to improve measurement efficiency and ensure comprehensive coverage of complex geometries.