4.2. Comparative Analysis with Common Models

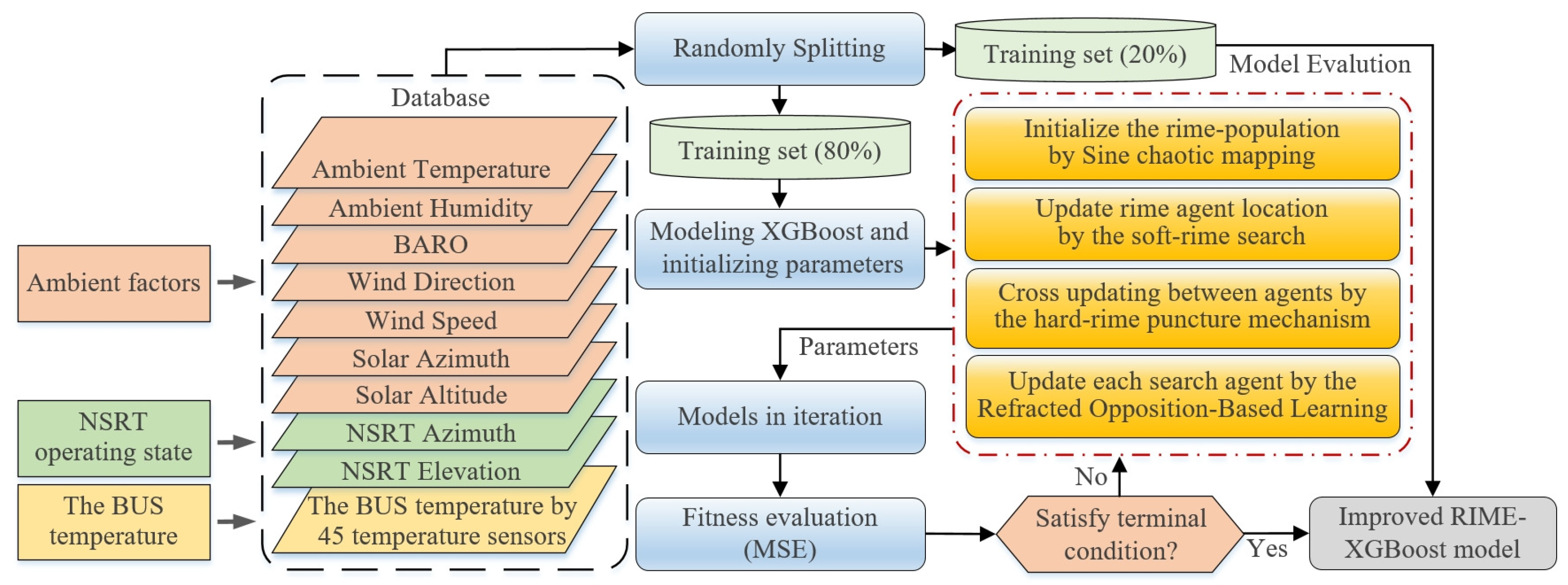

In model prediction, the frameworks adopted for similar application scenarios are generally similar. Some researchers have used machine learning or neural network models trained on long-term historical temperature data to predict structure temperatures in real time without temperature sensors [7, 8, 9, 19]. Although these models all address structural thermal prediction, their prediction targets differ significantly, and so do their training datasets. Consequently, their adaptability when transferred to a new scenario varies. Therefore, to comprehensively analyze the performance of the proposed model, it is compared with GRU (Gated Recurrent Unit), CNN (Convolutional Neural Network), LSTM (Long Short-Term Memory), and Transformer-BiLSTM.

Table 3 shows the model parameters required for each model. The training and testing results are presented in Table 4.

Based on the performance results in Table 4, the prediction accuracy and fitting ability of each model are analyzed. The improved RIME-XGBoost model demonstrates the highest prediction accuracy and fitting capability across all evaluated metrics. It achieves an MAE of 0.331 K (train) and 0.621 K (test), an RMSE of 0.369 K (train) and 0.897 K (test), and an R² of 0.999 (train) and 0.996 (test). This indicates that the improved ensemble learning framework (XGBoost) excels in capturing complex patterns within the data. The Transformer-BiLSTM model follows, with MAE values of 1.08 K (train) and 1.166 K (test), RMSE values of 1.458 K (train) and 1.543 K (test), and R² values of 0.988 (train) and 0.984 (test). The integration of Transformer and BiLSTM enables effective capture of long-range dependencies and sequential features, leading to higher accuracy than the traditional deep learning models. In contrast, GRU, CNN, and LSTM exhibit moderate performance. Among them, CNN has a comparatively high R² (0.962 for training and 0.959 for testing), while LSTM is the least competitive in terms of both error metrics and fitting ability.
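For reference, the three metrics compared above can be computed as in the following minimal sketch. It assumes the standard definitions of MAE, RMSE, and the coefficient of determination R²; the function names and the sample values are illustrative and are not taken from the paper.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error, in the same unit as the inputs (K here)."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean square error, in the same unit as the inputs (K here)."""
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))

def r2(y_true, y_pred):
    """Coefficient of determination; dimensionless."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Illustrative values only (not measurements from the paper).
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 6.0]
scores = (mae(y_true, y_pred), rmse(y_true, y_pred), r2(y_true, y_pred))
```

Note that MAE and RMSE carry the unit of the predicted quantity, whereas R² is unitless.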

In terms of generalization ability, the Transformer-BiLSTM model performs very well, as evidenced by the minimal differences between its training and testing metrics. The consistency in MAE, RMSE, and R² across stages suggests that it adapts well to unseen data. CNN and GRU also maintain reasonable generalization, with small gaps between their training and testing performance, which implies relatively stable behavior on new data. For the improved RIME-XGBoost model, there is a noticeable difference between the training and testing metrics, but its overall performance remains at a very high level, indicating strong yet slightly less consistent generalization than Transformer-BiLSTM. LSTM exhibits the weakest generalization ability among the models, with the largest increase in error metrics from the training to the testing stage.
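The train-to-test gap discussed above can be quantified directly from the values quoted in the text. The sketch below uses only the MAE and RMSE figures reported for the improved RIME-XGBoost and Transformer-BiLSTM models; the relative-gap measure itself is an assumption chosen for illustration, not a metric defined in the paper.

```python
# (train, test) values in K, as quoted in the text for Table 4.
reported = {
    "Improved RIME-XGBoost": {"MAE": (0.331, 0.621), "RMSE": (0.369, 0.897)},
    "Transformer-BiLSTM": {"MAE": (1.08, 1.166), "RMSE": (1.458, 1.543)},
}

def relative_gap(train, test):
    """Relative increase of an error metric from training to testing."""
    return (test - train) / train

gaps = {
    model: {name: relative_gap(*pair) for name, pair in metrics.items()}
    for model, metrics in reported.items()
}

for model, metrics in gaps.items():
    summary = ", ".join(f"{name} +{gap:.0%}" for name, gap in metrics.items())
    print(f"{model}: {summary}")
```

Under this measure, the MAE of the improved RIME-XGBoost model rises by roughly 88% from training to testing, against roughly 8% for Transformer-BiLSTM, even though its absolute test error remains the lower of the two.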

Through the analysis of each model architecture, ensemble learning, as exemplified by the improved RIME-XGBoost model, proves to be highly effective in achieving ultra-high prediction accuracy, making it a preferred choice when the highest precision is the primary goal. The fusion architecture of Transformer-BiLSTM combines the strengths of Transformer’s self-attention mechanism and BiLSTM’s sequential processing capability, resulting in both high accuracy and strong generalization, which is particularly suitable for tasks involving sequential data with long-range dependencies. Traditional deep learning models (GRU, CNN, and LSTM) offer decent performance but lack the breakthroughs in accuracy and generalization seen in the more advanced architectures. Among them, CNN demonstrates better performance in capturing spatial features, while GRU and LSTM handle sequential data with varying degrees of effectiveness, with GRU being relatively more efficient and stable.

In conclusion, the improved RIME-XGBoost stands out for its unparalleled prediction accuracy, Transformer-BiLSTM excels in balancing accuracy and generalization, and traditional models like CNN and GRU provide viable options for scenarios with moderate performance requirements. The choice of model should be tailored to specific task priorities, such as precision, generalization, or computational efficiency.

4.3. Performance Comparisons of XGBoost Based on Other Heuristic Optimization

As discussed in Section 4.2, the comprehensive predictive performance of the proposed model surpasses that of several comparable models. Section 4.3 evaluates the proposed model against other XGBoost models whose hyperparameters are tuned by different heuristic algorithms. The goal is to investigate whether the hyperparameters optimized by improved RIME significantly outperform those obtained with other heuristic algorithms, and to determine whether improved RIME effectively addresses the limitations of XGBoost in hyperparameter selection. The heuristic algorithms used in these comparative models are either proposed in recent journal papers or widely used optimization algorithms. They include Particle Swarm Optimization (PSO) [23], Sparrow Search Algorithm (SSA) [24], Pelican Optimization Algorithm (POA) [25], Ivy Algorithm (IVY) [26], Hiking Optimization Algorithm (HOA) [27], RIME, and improved RIME. Detailed information about them is shown in Table 5. Regarding the parameter settings of these algorithms, parameter debugging showed that the number of hyperparameters is relatively small in this scenario, and that the model's prediction accuracy does not change significantly once the population size exceeds 10. Therefore, for all the heuristic algorithms in the comparison, the population size is set to 20 and the maximum number of iterations is set to 50.
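To make the shared search budget concrete, the following is a minimal sketch of a population-based hyperparameter search using a basic PSO with a population size of 20 and 50 iterations. It is not the paper's implementation: the objective `toy_validation_error` is a hypothetical stand-in for training XGBoost and returning a validation error, and the two hyperparameters, their bounds, and the PSO coefficients are assumptions chosen for illustration.

```python
import random

def toy_validation_error(params):
    """Hypothetical stand-in for the validation error of a trained model."""
    learning_rate, max_depth = params
    return (learning_rate - 0.1) ** 2 + ((max_depth - 6.0) / 10.0) ** 2

def pso_minimize(objective, bounds, pop_size=20, max_iter=50, seed=0,
                 inertia=0.7, c1=1.5, c2=1.5):
    """Basic particle swarm optimization over box-bounded parameters."""
    rng = random.Random(seed)
    dim = len(bounds)
    positions = [[rng.uniform(lo, hi) for lo, hi in bounds]
                 for _ in range(pop_size)]
    velocities = [[0.0] * dim for _ in range(pop_size)]
    personal_best = [p[:] for p in positions]
    personal_score = [objective(p) for p in positions]
    best_idx = min(range(pop_size), key=personal_score.__getitem__)
    global_best = personal_best[best_idx][:]
    global_score = personal_score[best_idx]

    for _ in range(max_iter):
        for i in range(pop_size):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                velocities[i][d] = (
                    inertia * velocities[i][d]
                    + c1 * r1 * (personal_best[i][d] - positions[i][d])
                    + c2 * r2 * (global_best[d] - positions[i][d])
                )
                # Clamp each coordinate to its search bounds.
                positions[i][d] = min(hi, max(lo, positions[i][d] + velocities[i][d]))
            score = objective(positions[i])
            if score < personal_score[i]:
                personal_best[i], personal_score[i] = positions[i][:], score
                if score < global_score:
                    global_best, global_score = positions[i][:], score
    return global_best, global_score

# Assumed search ranges: learning rate in [0.01, 0.3], tree depth in [2, 12].
search_bounds = [(0.01, 0.3), (2.0, 12.0)]
best_params, best_score = pso_minimize(toy_validation_error, search_bounds)
```

In a real comparison, the objective would train the model with the candidate hyperparameters and return a held-out error, and integer-valued hyperparameters such as tree depth would be rounded before use.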

As shown in Table 5, PSO is known for its fast convergence, which enables rapid solution exploration. It is also robust, maintaining stable performance across various problem landscapes, and is easy to implement. SSA has strong global search ability and can explore a wide range of the solution space to avoid falling into local optima. POA has a simple structure, which simplifies its understanding and deployment, and it optimizes efficiently, so that the XGBoost model can be tuned with minimal computational overhead. IVY is flexible and adapts well to different problem forms. It is especially good at multimodal problems, that is, problems with multiple optimal solutions, and can efficiently explore a complex solution space. HOA converges quickly, similar to PSO, and can effectively balance exploration (searching new areas) and exploitation (refining known good areas). These heuristic algorithms each have their own advantages, but their performance in the specific context of XGBoost-based temperature prediction of a telescope's back-up structure still needs to be analyzed experimentally.

Table 6 shows the performance results of the model optimized by these heuristic algorithms.

Comparing the performance of the models in Table 6 and Table 4, it can be seen that the XGBoost model has good prediction accuracy for the thermal field of the reflector antenna's BUS. For all the heuristic algorithms combined with XGBoost in the experiment, the MAE is within 1 K, the RMSE is within 1.5 K, and R² is more than 98%, which is significantly better than the other mainstream models (such as GRU, CNN, and LSTM). It can be concluded that, in a specific data prediction scenario, the selection of the baseline model largely determines the final model architecture.

In terms of overall prediction accuracy, the XGBoost models optimized directly by heuristic algorithms perform similarly to one another. The optimization algorithms proposed in recent years give better results than those proposed earlier. Most importantly, the improved RIME-XGBoost model proposed in this paper performs significantly better than the other XGBoost models optimized directly by heuristic algorithms. It has the lowest MAE (0.331 K for the training set and 0.621 K for the test set) and RMSE (0.369 K for the training set and 0.897 K for the test set) among all models. In contrast, the errors of POA-XGBoost are relatively high (on the test set, MAE is 1.07 K and RMSE is 1.498 K). In summary, the integration of heuristic algorithms with XGBoost yields promising predictive performance, and improved RIME-XGBoost emerges as the most robust model in terms of accuracy, generalization, and error control. The variability in performance across models underscores the importance of selecting or adapting heuristic algorithms based on the specific requirements of the prediction task.

4.4. Performance Verification of Improved Model Under Extreme Conditions

Through the comparative analysis of various models and optimization algorithms, it can be concluded that the improved RIME-XGBoost model proposed in this paper has excellent performance in the scenario of obtaining the antenna’s BUS temperature.

Figure 9 shows the RMSE distribution between the BUS temperature values predicted by the improved RIME-XGBoost model and the actual temperature data in the field. As shown in Figure 9, the temperature prediction accuracy in the area with denser temperature sensors is almost within 0.7 K, and the overall prediction accuracy of the BUS temperature is within 1 K.

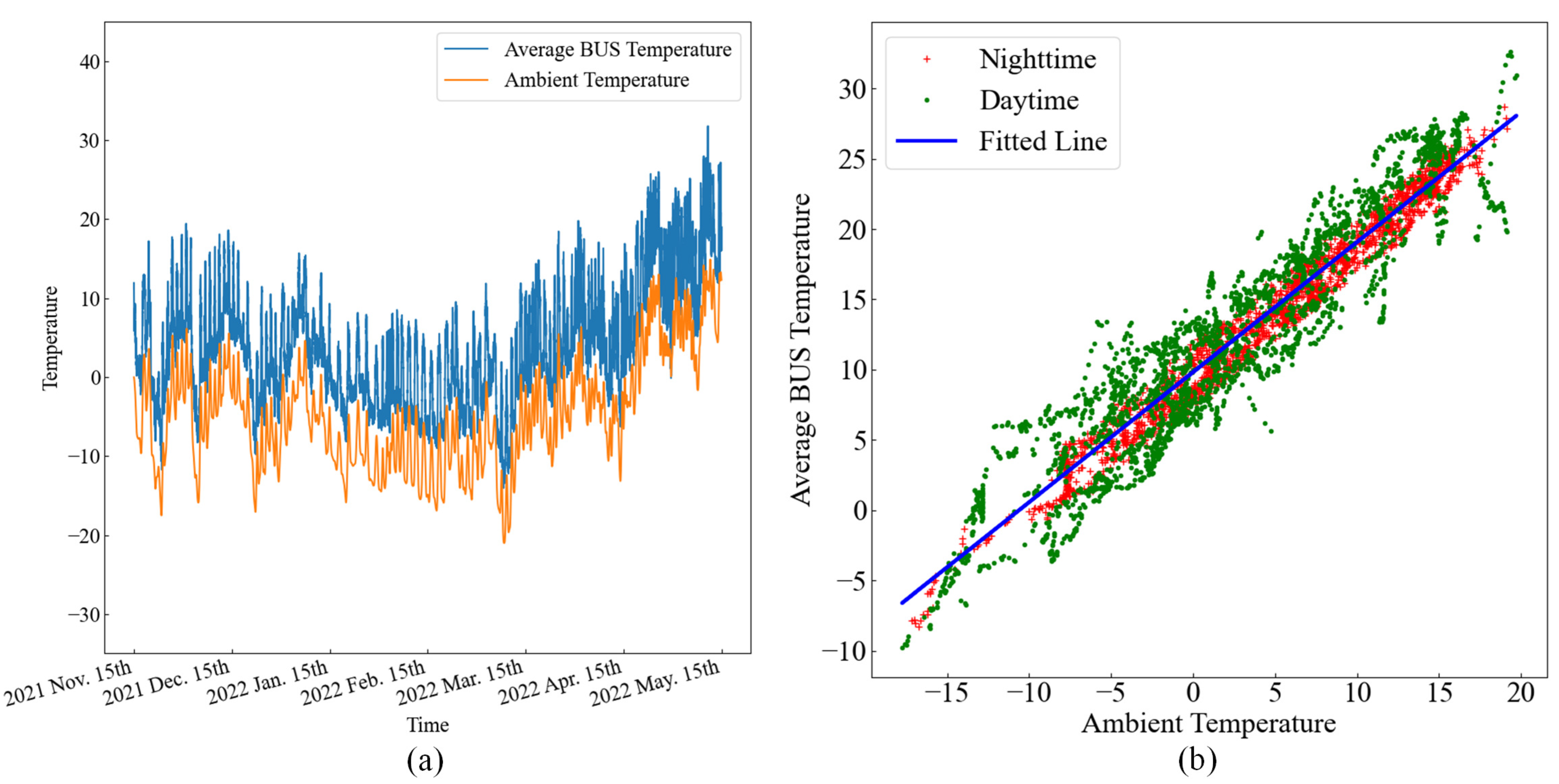

To verify the performance of this model under extreme environmental conditions, we selected from Figure 5 the three features with the strongest correlation as the key variables describing the environmental conditions: ambient temperature, relative humidity, and solar altitude. We divided each of these three feature variables into intervals to test the performance of the model under different environmental conditions. Table 7 lists the parameter ranges that define the extreme working conditions, and Table 8 presents the specific performance results of the model under each environmental condition. Figure 10 visualizes the prediction accuracy.
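The interval-based evaluation can be sketched as follows: each sample is labeled by the interval its feature value falls into, and the error is then aggregated per label. The thresholds, the ambient temperature values, and the temperature pairs below are hypothetical placeholders; the actual interval boundaries are those given in Table 7.

```python
def label_condition(value, low, high):
    """Assign a sample to the 'low', 'medium', or 'high' interval."""
    if value < low:
        return "low"
    if value > high:
        return "high"
    return "medium"

def mae_by_condition(values, y_true, y_pred, low, high):
    """Mean absolute error grouped by the interval of one feature."""
    errors = {}
    for value, truth, pred in zip(values, y_true, y_pred):
        label = label_condition(value, low, high)
        errors.setdefault(label, []).append(abs(truth - pred))
    return {label: sum(errs) / len(errs) for label, errs in errors.items()}

# Hypothetical ambient temperatures (deg C) and BUS temperatures (K).
ambient = [-20.0, 5.0, 15.0, 38.0]
measured = [260.0, 280.0, 290.0, 310.0]
predicted = [261.0, 280.5, 289.5, 312.0]

# Hypothetical thresholds: below -10 is "low", above 30 is "high".
per_condition = mae_by_condition(ambient, measured, predicted, low=-10.0, high=30.0)
```

The same grouping can be repeated for relative humidity and solar altitude, and for RMSE or R² in place of MAE.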

In terms of overall performance and stability, the model demonstrates high predictive accuracy across most environmental conditions, as indicated by the consistently high R² values (all above 0.973). However, the error metrics (MAE and RMSE) vary across the extreme scenarios, suggesting that extreme environmental factors challenge the model's predictive capability.

Regarding ambient temperature, both extremely cold and extremely hot operating conditions lead to increased prediction errors (higher MAE and RMSE) and a slight decrease in R². Notably, extremely hot conditions affect the model more than extremely cold conditions, implying that the model is more sensitive to high-temperature extremes.

For humidity conditions, compared with medium humidity, extremely dry and extremely wet environments will reduce the performance of the model. Among these, extremely dry conditions cause the most significant increase in MAE and RMSE, indicating that extreme dryness is a more critical factor for the model’s temperature prediction accuracy than extreme wetness.

Regarding solar altitude, the errors for both low and high solar altitudes are higher than for medium solar altitude (MAE = 0.462 K, RMSE = 0.493 K, R² = 0.999). High solar altitude has a slightly more pronounced effect on MAE and RMSE than low solar altitude, while R² remains relatively high (above 0.989) under both extreme solar altitude scenarios, suggesting that the model still maintains reasonable explanatory power under such conditions.

In summary, the model exhibits strong performance under moderate environmental conditions but experiences measurable degradation in prediction accuracy under various extreme conditions (extremely cold/hot, extremely dry/wet, and extreme solar altitudes). Among these extreme conditions, extremely dry, extremely hot, and high solar altitude conditions are the most impactful on the model’s MAE and RMSE. These findings highlight the need for further model optimization or adaptation strategies to enhance robustness in extreme environments.

4.5. In-Depth Discussion of Model Performance

A comprehensive comparison of the performance data in Section 4.2, Section 4.3, and Section 4.4 shows that the selection of the baseline model is crucial in the initial stage of designing the model architecture for a specific prediction scenario. An inappropriate choice is likely to result in a model that fails to achieve the expected prediction accuracy. Additionally, if a model exhibits poor accuracy on the training set, it can be expected to perform poorly on the test set as well. Conversely, a model with high prediction accuracy on the training set does not necessarily perform equally well on the test set. This is exemplified by the model proposed in this paper: its training-set performance is MAE = 0.331 K, RMSE = 0.369 K, and R² = 0.999, whereas its test-set performance is MAE = 0.621 K, RMSE = 0.897 K, and R² = 0.996 (nevertheless, its test-set performance remains the best among the compared models).

The performance results show that the prediction error of the proposed model increases markedly from the training set to the test set, indicating that its generalization ability needs to be improved. An analysis of the model's generalization ability under extreme operating conditions reveals unsatisfactory prediction accuracy in extremely dry, extremely cold, and extremely hot environments. After analyzing the dataset, we found that this may be related to changes in the physical heat transfer parameters of the structural thermal scenario. Under extremely dry or extremely cold conditions, the convective heat transfer coefficient of the ambient air changes significantly, which alters the response time of the structure temperature. Consequently, the temperature predicted by the model at such times may be misaligned in time with the measured temperature. Although such cases are not common, they should be a focus of future research. Regarding the model's poor performance under extremely hot conditions, an analysis of the original collected data shows that the training dataset covers November to June of the following year and therefore lacks summer data. This gap in the training data results in the model's subpar prediction performance in extremely hot environments.
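One way to check for the temporal misalignment hypothesized above is to shift the predicted series against the measured series and find the lag that minimizes the RMSE. The sketch below is an illustrative diagnostic under that assumption, not a procedure from the paper; the synthetic sinusoidal series, the 24-sample period, and the 3-sample delay are hypothetical.

```python
import math

def rmse_at_lag(measured, predicted, lag):
    """RMSE between measured[i + lag] and predicted[i] over the overlap.

    A positive lag means the measured response trails the prediction.
    """
    pairs = [(measured[i + lag], predicted[i])
             for i in range(len(predicted))
             if 0 <= i + lag < len(measured)]
    return math.sqrt(sum((m - p) ** 2 for m, p in pairs) / len(pairs))

def best_lag(measured, predicted, max_lag):
    """Lag in [-max_lag, max_lag] that minimizes the RMSE."""
    return min(range(-max_lag, max_lag + 1),
               key=lambda lag: rmse_at_lag(measured, predicted, lag))

# Synthetic example: the measured series is the prediction delayed by 3 samples.
n_samples, period, delay = 96, 24, 3
predicted = [math.sin(2 * math.pi * i / period) for i in range(n_samples)]
measured = [math.sin(2 * math.pi * (i - delay) / period) for i in range(n_samples)]

estimated_lag = best_lag(measured, predicted, max_lag=6)
```

If the estimated lag under extremely dry or cold conditions differs consistently from the lag under moderate conditions, that would support the interpretation that the error is dominated by a change in thermal response time rather than in amplitude.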