1. Introduction

Pichia pastoris has become a key platform for the production of exogenous proteins and is widely used in fields such as biomedicine, cosmetic skincare, and industrial enzyme preparations [1]. However, the highly nonlinear, time-varying, and strongly coupled nature of the Pichia pastoris fermentation process makes it difficult to measure some key biochemical parameters directly online. Accurate measurement of these parameters is essential for optimizing fermentation processes and improving product quality. Therefore, soft sensor technology, an indirect measurement method, has been extensively researched and applied to predicting the Pichia pastoris fermentation process [2].

Traditional soft sensor methods primarily include linear models such as Partial Least Squares (PLS) and nonlinear models such as Artificial Neural Networks (ANNs) [3,4,5]. Wang (2025) highlighted the effectiveness of PLS in addressing multi-variable correlation in batch processes but noted its inadequacy in capturing nonlinear relationships [6]. With the advancement of machine learning, ANNs and deep learning variants (e.g., Deep Neural Networks, DNNs) have been widely adopted in fermentation soft sensors owing to their strong nonlinear fitting capabilities [7,8]. However, most of these methods rely on global modeling frameworks that assume a uniform data distribution throughout the fermentation cycle, an assumption that contradicts the actual characteristics of Pichia pastoris fermentation. Specifically, Pichia pastoris fermentation is multi-phase, comprising the lag, exponential, stationary, and decline phases, and data distributions vary across these phases [9]. Global models trained on full-cycle data tend to average out local phase information, which reduces fitting accuracy for individual phases.

To address the multi-phase issue, several local modeling strategies have been proposed. Zhou, Y. et al. (2023) developed a phase-based soft sensor for fed-batch fermentation using fuzzy C-means (FCM) clustering for phase division and Support Vector Regression (SVR) for sub-modeling, and achieved higher accuracy than global models [10]. However, these local methods focus only on phase partitioning within a single batch and do not address batch-to-batch data distribution heterogeneity, a critical challenge in Pichia pastoris fermentation. Variations in medium composition, inoculum concentration, and operating conditions often result in significant distribution differences between batches. This heterogeneity causes trained models to generalize poorly when applied to new target batches [11].

Transfer Learning (TL) has emerged as a promising approach to address cross-domain distribution shifts by leveraging knowledge from data-rich source domains to improve learning in target domains [12,13,14,15]. In recent years, Deep Transfer Learning (DTL), which integrates deep learning's feature extraction ability with TL's knowledge transfer capability, has been introduced into fermentation soft sensors. For example, Li et al. (2024) proposed a DTL-based soft sensor for dissolved oxygen (DO) concentration in industrial fermentation and achieved better performance than traditional models by transferring pre-trained deep models between batches [16,17]. However, most existing DTL-based methods adopt global transfer frameworks, transferring knowledge from the entire source domain to the target domain without considering the multi-phase local characteristics of fermentation. This inconsistency between global transfer and local phase features limits the extraction of phase-specific information and restricts further performance improvement [17].

Additionally, the optimization of local sub-models and the selection of relevant source domains require further refinement [18]. Heuristic optimization algorithms, such as the Firefly Algorithm (FA), have demonstrated considerable efficacy in soft sensor parameter optimization owing to their robust global search capabilities [19,20], but the application of an improved FA to optimizing local sub-models remains underexplored. For source domain selection, Euclidean distance is widely used for batch data similarity analysis [21], yet its integration into a local transfer framework to select the most relevant sub-source domain has not been systematically studied.

To address these research gaps, this study aims to develop a high-performance soft sensor method for Pichia pastoris fermentation that simultaneously handles multi-phase characteristics and batch-to-batch heterogeneity. A local transfer modeling framework is proposed by integrating local modeling theory with DTL strategies: (1) K-means clustering divides the fermentation data into multiple phases and partitions the training set into sub-source domains; (2) DNN-based sub-source domain models are established, with an improved FA used to optimize the DNN hyperparameters and enhance local fitting accuracy; (3) the Euclidean distance between target samples and sub-source domain centroids is computed to select the most relevant sub-source domain; and (4) DTL fine-tuning adapts the selected sub-source domain model to the target domain, yielding the final prediction model.

Experimental results demonstrate that the proposed method effectively extracts local phase features and alleviates batch-to-batch heterogeneity. Compared with traditional global models and existing local and transfer learning methods, it exhibits significantly higher prediction accuracy and generalization performance. This work provides a new solution for Pichia pastoris fermentation soft sensing under multiple operating conditions and serves as a framework for addressing similar challenges in other microbial fermentation processes.

Theoretically, this work integrates local modeling and deep transfer learning for the first time to address the dual challenges of multi-phase characteristics and batch heterogeneity in Pichia pastoris fermentation, thereby addressing limitations in existing soft sensor approaches. Practically, the proposed method enables the effective extraction of local phase features and the transfer of domain-adaptive knowledge, leading to improved prediction accuracy and generalization performance. It provides a new technical solution for real-time monitoring of key biochemical parameters in industrial Pichia pastoris fermentation processes under multiple operating conditions, which can support the dynamic optimization of fermentation processes, reduce production costs, and help ensure product quality stability, thus promoting the intelligent advancement of microbial fermentation industries.

2. Theoretical Analysis

2.1. Multi-Model Local Modeling Framework Based on K-Means Clustering

Conventional global modeling approaches treat the entire fermentation dataset as a homogeneous unit, which fails to capture the distinct metabolic patterns of Pichia pastoris fermentation, specifically the cell proliferation phase, fed-batch transition phase, and protein induction phase. To address this limitation, a K-means clustering-based phase division strategy is employed to partition the historical source batch data into phase-specific sub-source domains, laying the foundation for subsequent local model training. The core of K-means clustering lies in minimizing the Within-Cluster Sum of Squares (WCSS), which quantifies the cohesion of data points within each cluster. The WCSS is defined as:

$$\mathrm{WCSS} = \sum_{i=1}^{K} \sum_{x \in C_i} \lVert x - \mu_i \rVert^2 \tag{1}$$

where $K$ denotes the number of clusters; $C_i$ represents the set of data points comprising the $i$-th cluster; $x$ signifies a data point in $C_i$; $\mu_i$ corresponds to the centroid of the $i$-th cluster, calculated as $\mu_i = \frac{1}{|C_i|} \sum_{x \in C_i} x$; and $\lVert x - \mu_i \rVert^2$ is the squared Euclidean distance between data point $x$ and cluster centroid $\mu_i$, with the Euclidean distance itself defined as:

$$d(x, \mu_i) = \sqrt{\sum_{j=1}^{p} (x_j - \mu_{i,j})^2} \tag{2}$$

where $p$ represents the number of features (or dimensions) in the dataset, $x_j$ is the $j$-th feature of $x$, and $\mu_{i,j}$ is the $j$-th component of $\mu_i$.

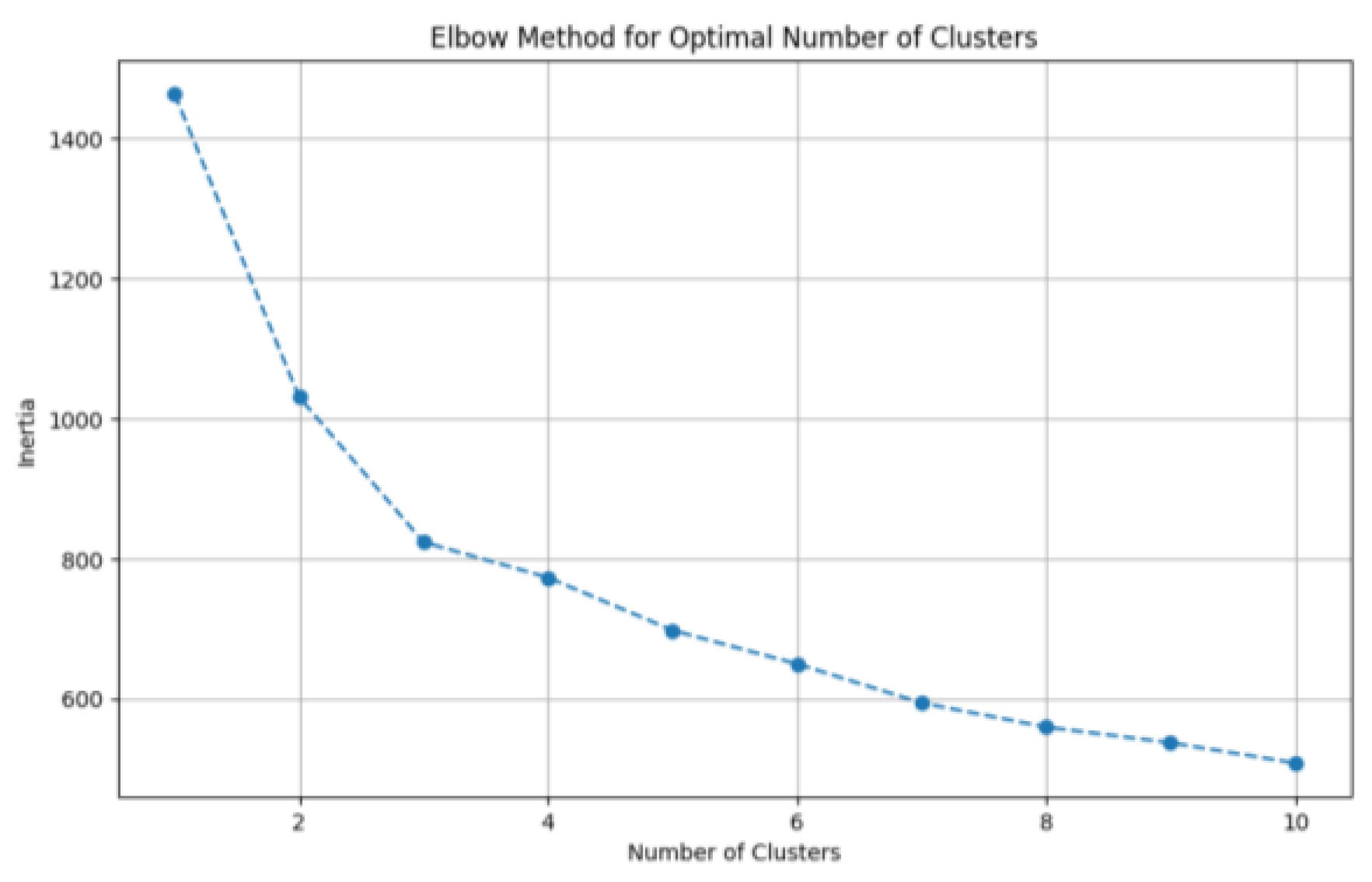

During the clustering process, as the number of clusters increases, the partition of data samples becomes more refined, which leads to higher intra-cluster cohesion. Consequently, the sum of squared errors (SSE, the pivotal metric in the elbow method) gradually decreases. The SSE is defined as:

$$\mathrm{SSE} = \sum_{i=1}^{K} \sum_{x \in C_i} \lVert x - \mu_i \rVert^2 \tag{3}$$

where $C_i$ denotes the $i$-th cluster, $x$ ranges over all data points belonging to $C_i$, and $\mu_i$ is the centroid of $C_i$. When the number of clusters $K$ is below the true number of underlying groups, increasing $K$ significantly enhances the intra-cluster cohesion of each partition, so the SSE declines steeply. Once $K$ reaches the true number of underlying groups, further increments yield rapidly diminishing returns in intra-cluster cohesion; the marginal decrease in SSE attenuates sharply and gradually plateaus as $K$ continues to increase. The plot of SSE against the number of clusters therefore exhibits an “elbow” shape, and the inflection point on the curve is taken as an estimate of the true number of clusters in the data.

The elbow method was applied to a dataset of Pichia pastoris samples after dimensionality reduction via Principal Component Analysis (PCA). The resulting elbow plot, in which the vertical axis (inertia) represents the within-cluster SSE, is shown in Figure 1. As depicted in the plot, the maximum curvature occurs at $K$ = 3 clusters, corresponding to the “elbow”. Consequently, $K$ = 3 was selected as the number of clusters for K-means clustering.

Figure 2 presents the scatter plot of the PCA-reduced data partitioned into three clusters, each corresponding to one fermentation phase. These three clusters were designated as sub-source domains D1, D2, and D3 for subsequent local model training.

For a given target batch sample, its Euclidean distance to the centroid of each sub-source domain was computed using Equation (2). The sub-source domain with the smallest distance was identified as the most relevant to the target sample, and the corresponding local model was selected as the pre-trained model for transfer learning.
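The phase division and sub-source domain selection described in this section can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in the study: the data are synthetic two-dimensional points standing in for PCA-reduced fermentation samples, the farthest-point initialisation is chosen only to keep the example deterministic, and all function names (`kmeans`, `nearest_subdomain`, and so on) are hypothetical.

```python
import math
import random


def euclidean(a, b):
    """Euclidean distance between two feature vectors, as in Equation (2)."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def centroid(points):
    """Component-wise mean of a non-empty list of points."""
    return [sum(col) / len(points) for col in zip(*points)]


def assign(points, centroids):
    """Label each point with the index of its nearest centroid."""
    return [min(range(len(centroids)), key=lambda c: euclidean(p, centroids[c]))
            for p in points]


def kmeans(points, k, iters=100):
    """Lloyd's algorithm with farthest-point initialisation (an illustrative choice)."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(euclidean(p, c) for c in centroids)))
    for _ in range(iters):
        labels = assign(points, centroids)
        updated = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            # Keep the old centroid if a cluster happens to become empty.
            updated.append(centroid(members) if members else centroids[c])
        if updated == centroids:
            break
        centroids = updated
    labels = assign(points, centroids)
    sse = sum(euclidean(p, centroids[lab]) ** 2 for p, lab in zip(points, labels))
    return centroids, labels, sse


def nearest_subdomain(sample, centroids):
    """Index of the sub-source domain whose centroid is closest to the sample."""
    return min(range(len(centroids)), key=lambda c: euclidean(sample, centroids[c]))


# Synthetic stand-in for PCA-reduced data: three well-separated groups of 40 points.
rng = random.Random(42)
centers = [(0.0, 0.0), (5.0, 5.0), (10.0, 0.0)]
data = [[cx + rng.gauss(0, 0.5), cy + rng.gauss(0, 0.5)]
        for cx, cy in centers for _ in range(40)]

# Elbow curve: SSE for K = 1..6; the drop should flatten after K = 3.
sse_curve = {k: kmeans(data, k)[2] for k in range(1, 7)}

# Partition into three sub-source domains and match a target sample to one of them.
centroids, labels, _ = kmeans(data, 3)
chosen = nearest_subdomain([4.8, 5.3], centroids)
```

Plotting `sse_curve` against K gives the elbow plot; `chosen` is the index of the sub-source domain whose local model would serve as the pre-trained model for the target sample.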

2.2. Sub-Source Domain Prediction Modeling Using Deep Neural Networks

In soft sensor applications for Pichia pastoris fermentation, deep neural network (DNN) modeling offers strong learning and nonlinear approximation capabilities that help uncover intricate relationships within fermentation data. This enables real-time, high-precision prediction of key biochemical parameters, thereby providing data support for the optimization and control of fermentation processes.

The DNN, a cornerstone technology of machine learning, builds artificial neural network models by emulating the structure and function of biological neuronal networks in the human brain. These models consist of multiple interconnected layers of artificial neurons.

By position within the network, the layers of a DNN fall into three types: the input layer, the hidden layer(s), and the output layer.

Given an input $X$, the weight connecting node $i$ in layer $l$ to node $j$ in layer $l-1$ is denoted as $w_{ij}^{(l)}$. The bias term for node $i$ in layer $l$ is $b_i^{(l)}$. The network’s output is $\hat{y}$, and both the hidden layers and the output layer employ the sigmoid activation function.

The computational process of deep neural networks consists of two fundamental components: forward propagation and backward propagation. In forward propagation, the output of the $i$-th neuron in the $l$-th layer is expressed as:

$$a_i^{(l)} = \sigma\Big(\sum_{j} w_{ij}^{(l)} a_j^{(l-1)} + b_i^{(l)}\Big) \tag{4}$$

where $\sigma$ is the sigmoid function and $a_j^{(l-1)}$ is the output of node $j$ in the previous layer (with $a^{(0)} = X$). The forward propagation equations for a layer can be compactly expressed in matrix form. Given the weight matrix $W^{(l)}$, the bias vector $b^{(l)}$, and the previous-layer output $a^{(l-1)}$:

$$a^{(l)} = \sigma\big(W^{(l)} a^{(l-1)} + b^{(l)}\big) \tag{5}$$

The forward propagation process iteratively computes layer-wise outputs using Equation (5), culminating in the model’s final prediction. However, forward propagation alone only yields the predicted outputs; it does not update the model parameters. Model parameters are updated by backpropagating the error between the predictions (from the forward pass) and the ground truth labels (from experimental data).

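Let $Y = \{y_1, y_2, \ldots, y_n\}$ denote the ground truth. The loss function is defined as the mean squared error (MSE) between the model’s predictions and the ground truth labels:

$$L = \frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 \tag{6}$$

where $\hat{y}_i$ is the model’s prediction for the $i$-th sample.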

Parameters are updated using a gradient descent-based optimization method, according to the general update rule:

$$\theta \leftarrow \theta - \eta \nabla J(\theta)$$

where $J(\theta)$ is a function of parameter $\theta$, $\nabla$ denotes the gradient operator, and $\eta$ represents the learning rate.

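Based on the above derivations, the gradient descent algorithm for updating parameters in deep neural networks is defined as

$$W^{(l)} \leftarrow W^{(l)} - \eta \frac{\partial L}{\partial W^{(l)}}, \qquad b^{(l)} \leftarrow b^{(l)} - \eta \frac{\partial L}{\partial b^{(l)}}$$

where $\frac{\partial L}{\partial W^{(l)}} = \delta^{(l)} \big(a^{(l-1)}\big)^{\top}$, $\frac{\partial L}{\partial b^{(l)}} = \delta^{(l)}$. Here $\eta$ is the learning rate, $a^{(l-1)}$ is the output of layer $l-1$, $z^{(l)} = W^{(l)} a^{(l-1)} + b^{(l)}$ is the pre-activation of layer $l$, and $\delta^{(l)} = \partial L / \partial z^{(l)}$ is its error term.

The resulting weight and bias updates are:

$$W^{(l)} \leftarrow W^{(l)} - \eta\, \delta^{(l)} \big(a^{(l-1)}\big)^{\top}, \qquad b^{(l)} \leftarrow b^{(l)} - \eta\, \delta^{(l)}$$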

At this stage, the backpropagation phase is completed, and a deep neural network model with a predicted output closer to the ground truth is trained.
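To make the forward and backward passes concrete, the following Python sketch trains a small sigmoid network on the MSE loss with full-batch gradient descent. It is a minimal illustration only: the layer sizes, the learning rate, the toy target function, and all function names are assumptions, and the standard backpropagation error terms are used in place of whatever exact derivation the study followed.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_network(sizes, seed=0):
    """One (W, b) pair per layer; W[i][j] links node j of the previous layer to node i."""
    rng = random.Random(seed)
    return [([[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)],
             [0.0] * n_out)
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(net, x):
    """Return the activations of every layer, input included (Equations (4)-(5))."""
    acts = [x]
    for W, b in net:
        acts.append([sigmoid(sum(w * a for w, a in zip(row, acts[-1])) + bi)
                     for row, bi in zip(W, b)])
    return acts


def train_step(net, X, Y, lr):
    """One full-batch gradient-descent step on the MSE; returns the loss before the step."""
    grads = [([[0.0] * len(W[0]) for _ in W], [0.0] * len(b)) for W, b in net]
    loss = 0.0
    for x, y in zip(X, Y):
        acts = forward(net, x)
        out = acts[-1]
        loss += sum((o - t) ** 2 for o, t in zip(out, y)) / len(X)
        # Output-layer error term: dL/dz = 2 (a - y) / n * a (1 - a) for a sigmoid unit.
        delta = [2.0 * (o - t) / len(X) * o * (1.0 - o) for o, t in zip(out, y)]
        for l in range(len(net) - 1, -1, -1):
            W, _ = net[l]
            gW, gb = grads[l]
            prev = acts[l]  # input to layer l
            for i, d in enumerate(delta):
                gb[i] += d
                for j, a in enumerate(prev):
                    gW[i][j] += d * a
            if l > 0:
                # Propagate the error term back through W and the sigmoid derivative.
                delta = [sum(W[i][j] * delta[i] for i in range(len(delta)))
                         * prev[j] * (1.0 - prev[j]) for j in range(len(prev))]
    # Apply the accumulated gradients only after the whole batch has been processed.
    for (W, b), (gW, gb) in zip(net, grads):
        for i in range(len(W)):
            b[i] -= lr * gb[i]
            for j in range(len(W[i])):
                W[i][j] -= lr * gW[i][j]
    return loss


# Toy regression problem with targets inside the sigmoid's (0, 1) output range.
rng = random.Random(1)
X = [[rng.random(), rng.random()] for _ in range(20)]
Y = [[0.2 + 0.6 * x1 * x2] for x1, x2 in X]
net = init_network([2, 4, 1])
losses = [train_step(net, X, Y, lr=0.5) for _ in range(300)]
```

Because the output layer uses a sigmoid, targets must be scaled into (0, 1) before training, which is why the toy targets are constructed that way here.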

2.3. Model Optimization via Improved Firefly Algorithm

The performance of DNN models is highly dependent on hyperparameters (e.g., initial weights, learning rate, number of hidden neurons). The traditional Firefly Algorithm (FA), a nature-inspired metaheuristic, tends to suffer from premature convergence to local optima when optimizing DNN hyperparameters [19]. To address this, an Improved Firefly Algorithm (IFA) was developed by incorporating a random perturbation strategy and a Differential Evolution (DE)-based crossover and selection mechanism.

2.3.1. Traditional FA Fundamentals

In FA, each firefly is encoded as a candidate solution vector (representing DNN hyperparameters) [22]. The brightness of a firefly is proportional to its fitness value, defined as the reciprocal of the DNN’s MSE (Equation (6)):

$$I_i = \frac{1}{\mathrm{MSE}(\theta_i)}$$

where $I_i$ is the brightness of the $i$-th firefly, and $\theta_i$ are the DNN parameters corresponding to the $i$-th firefly.

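The attractiveness between firefly $i$ and firefly $j$ (where $I_j > I_i$) is:

$$\beta_{ij} = \beta_0 e^{-\gamma r_{ij}^2}$$

where $\beta_0$ is the maximum attractiveness at $r = 0$, $\gamma$ is the light absorption coefficient, and $r_{ij}$ is the Cartesian distance between fireflies $i$ and $j$ in the solution space:

$$r_{ij} = \lVert x_i - x_j \rVert = \sqrt{\sum_{d=1}^{DS} (x_{i,d} - x_{j,d})^2}$$

where $DS$ is the dimension of the solution space (number of DNN hyperparameters), and $x_{i,d}$, $x_{j,d}$ are the $d$-th components of the $i$-th and $j$-th firefly vectors, respectively.

Firefly $i$ moves toward the brighter firefly $j$ as follows:

$$x_i^{t+1} = x_i^{t} + \beta_{ij}\,(x_j^{t} - x_i^{t}) + \alpha\,(\varepsilon - 0.5)$$

where $\alpha$ is the step size ($\alpha \in [0, 1]$), and $\varepsilon$ is a random number following a uniform distribution $U(0,1)$.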

2.3.2. Improved Firefly Algorithm

(1) Stochastic Perturbation Strategy.

To avoid local optima, a random perturbation was added to the updated position of each firefly [23]:

$$x_i^{t+1} \leftarrow x_i^{t+1} + \rho\,(x_{r_1}^{t} - x_{r_2}^{t})$$

where $\rho$ is the perturbation factor, and $r_1$, $r_2$ are randomly selected firefly indices (distinct from $i$).

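(2) DE-Based Crossover.

Fireflies were crossed with the current global best firefly ($x_{best}$) to refine optimal solutions. The crossover probability was proportional to the firefly’s fitness:

$$CR_i = \frac{I_i}{\sum_{j=1}^{N} I_j}$$

where $N$ is the number of fireflies. The crossover operation generated a new solution:

$$u_{i,d} = \begin{cases} x_{best,d}, & \text{if } \mathrm{rand}_d \le CR_i \\ x_{i,d}, & \text{otherwise} \end{cases}$$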

(3) Lévy Flight.

To enhance global search capability, Lévy flight was integrated into the position update [24,25,26]. The step size of the Lévy flight follows a Lévy distribution with probability density function:

$$L(s) \sim \frac{\lambda\, \Gamma(\lambda) \sin(\pi \lambda / 2)}{\pi} \cdot \frac{1}{s^{1+\lambda}}, \qquad s \gg 0$$

where $\Gamma(\cdot)$ is the gamma function and $\lambda$ is the Lévy index. The step size $s$ was generated using the Mantegna algorithm, and the position update was adjusted to:

$$x_i^{t+1} = x_i^{t} + \beta_{ij}\,(x_j^{t} - x_i^{t}) + \alpha\, \mathrm{sign}(\varepsilon - 0.5) \odot s$$

The IFA optimization process terminates when the maximum number of iterations is reached or the fitness value converges. The optimal firefly vector is decoded to derive the DNN hyperparameters for each sub-source domain model. A flowchart of the IFA is provided in Figure 3, and the procedure is listed in Algorithm 1.

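Algorithm 1: Parameter Settings of the Improved Firefly Algorithm.

Input: Population size $N$, Max iterations $T$, Objective function $f$

Output: Global best solution $x_{best}$

1. Initialize firefly population randomly
2. Compute brightness $I_i$ for each firefly
3. $x_{best} \leftarrow \arg\max_i I_i$ // Initialize global best
4. for $t = 1$ to $T$ do
5. for $i = 1$ to $N$ do
6. for $j = 1$ to $N$ do
7. if $I_j > I_i$ then
8. Compute distance $r_{ij}$
9. Compute attractiveness $\beta_{ij}$
10. Move firefly $i$ toward firefly $j$ (position update with Lévy flight)
11. Apply the random perturbation to $x_i$
12. Update brightness $I_i$
13. if $I_i > I_{best}$ then $x_{best} \leftarrow x_i$
14. end if
15. end for
16. end for
17. for $i = 1$ to $N$ do
18. if $\mathrm{rand} < CR_i$ then
19. Generate trial solution $u_i$ by crossover with $x_{best}$
20. if $I(u_i) > I_i$ then $x_i \leftarrow u_i$ // Greedy selection
21. end if
22. end for
23. end for
24. return $x_{best}$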
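The listing above can be turned into a short runnable sketch. The Python code below is an illustrative reading of the IFA, not the authors' implementation: it minimises a simple sphere function in place of the DNN's MSE, assumes a non-negative objective so that brightness can be taken as the reciprocal of the cost, applies the crossover per dimension with one forced gene (a common DE convention), and uses hypothetical parameter names and default values (`beta0`, `gamma`, `alpha`, `pert`) throughout.

```python
import math
import random


def levy_step(rng, lam=1.5):
    """One Levy-distributed step drawn with Mantegna's algorithm."""
    sigma = (math.gamma(1 + lam) * math.sin(math.pi * lam / 2)
             / (math.gamma((1 + lam) / 2) * lam * 2 ** ((lam - 1) / 2))) ** (1 / lam)
    u, v = rng.gauss(0, sigma), rng.gauss(0, 1)
    return u / max(abs(v), 1e-12) ** (1 / lam)


def improved_firefly(objective, dim, bounds, n=12, iters=40, beta0=1.0,
                     gamma=0.1, alpha=0.05, pert=0.05, seed=0):
    """Minimise a non-negative `objective`; brightness is taken as 1 / cost."""
    rng = random.Random(seed)
    lo, hi = bounds

    def clip(x):
        return [min(hi, max(lo, v)) for v in x]

    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n)]
    cost = [objective(x) for x in pop]
    b = min(range(n), key=lambda k: cost[k])
    best_x, best_cost = pop[b][:], cost[b]
    history = [best_cost]
    for _ in range(iters):
        for i in range(n):
            for j in range(n):
                if cost[j] >= cost[i]:
                    continue  # only move toward brighter (lower-cost) fireflies
                dist2 = sum((a - c) ** 2 for a, c in zip(pop[i], pop[j]))
                beta = beta0 * math.exp(-gamma * dist2)
                r1, r2 = rng.sample([k for k in range(n) if k != i], 2)
                pop[i] = clip([
                    pop[i][d] + beta * (pop[j][d] - pop[i][d])  # attraction
                    + alpha * levy_step(rng)                    # Levy-flight step
                    + pert * (pop[r1][d] - pop[r2][d])          # random perturbation
                    for d in range(dim)])
                cost[i] = objective(pop[i])
                if cost[i] < best_cost:
                    best_x, best_cost = pop[i][:], cost[i]
        brightness = [1.0 / (c + 1e-12) for c in cost]
        total = sum(brightness)
        for i in range(n):
            cr = brightness[i] / total   # crossover probability proportional to fitness
            forced = rng.randrange(dim)  # DE convention: at least one gene is crossed
            trial = [best_x[d] if (rng.random() < cr or d == forced) else pop[i][d]
                     for d in range(dim)]
            trial_cost = objective(trial)
            if trial_cost < cost[i]:     # greedy selection
                pop[i], cost[i] = trial, trial_cost
                if trial_cost < best_cost:
                    best_x, best_cost = trial[:], trial_cost
        history.append(best_cost)
    return best_x, best_cost, history


def sphere(x):
    """Stand-in objective; in the paper's setting this would be the DNN's validation MSE."""
    return sum(v * v for v in x)


best_x, best_cost, history = improved_firefly(sphere, dim=3, bounds=(-5.0, 5.0))
```

Keeping `best_x` in a separate memory makes the recorded best cost non-increasing even though individual fireflies accept their moves unconditionally.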

2.4. Deep Transfer Learning for Batch Heterogeneity Mitigation

During Pichia pastoris fermentation, variations in operating conditions across batches lead to differences in data distribution. However, since these batches essentially represent the same reaction process, models trained on data from different batches are expected to share certain similarities. Therefore, useful information learned from other fermentation batches can be used to assist the target fermentation batch in completing its task [14].

Transfer learning applies the model architecture knowledge learned from an old task (source task) to a new task (target task). Even when the target task has a limited dataset, structure-based transfer learning can achieve more accurate predictions by transferring structural knowledge from the source task. In transfer learning, it is essential to first address the difference in data probability distribution between the datasets, and then to employ strategies such as freezing and fine-tuning [26] (see Figure 4).

Because Pichia pastoris fermentation data are highly nonlinear, the distribution discrepancies between the source and target domains are complex, and it is necessary to minimize the Maximum Mean Discrepancy (MMD) to reduce the divergence between the two data probability distributions.

Given the source domain data $X_s = \{x_i^s\}_{i=1}^{n_s}$ and the target domain data $X_t = \{x_j^t\}_{j=1}^{n_t}$, a deep neural network $\phi(\cdot)$ is used as the feature extractor to obtain the source domain features $\phi(x_i^s)$ and the target domain features $\phi(x_j^t)$. The MMD is defined as:

$$\mathrm{MMD}(X_s, X_t) = \Big\lVert \frac{1}{n_s} \sum_{i=1}^{n_s} \phi(x_i^s) - \frac{1}{n_t} \sum_{j=1}^{n_t} \phi(x_j^t) \Big\rVert$$

Through the optimization of the MMD, the parameters of the DNN are updated to enhance the model’s predictive accuracy for target domain data.
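As a small worked illustration of the quantity being minimized, the sketch below computes the squared MMD between two sets of feature vectors as the squared distance between their means. It assumes the features have already been extracted (here they are just hand-written vectors), and the function names are hypothetical; in the framework itself the features would come from the DNN's hidden layers.

```python
def mean_embedding(features):
    """Component-wise mean of a list of equal-length feature vectors."""
    return [sum(col) / len(features) for col in zip(*features)]


def linear_mmd2(source_feats, target_feats):
    """Squared MMD between two feature sets under a linear (identity) kernel."""
    mu_s = mean_embedding(source_feats)
    mu_t = mean_embedding(target_feats)
    return sum((a - b) ** 2 for a, b in zip(mu_s, mu_t))


# Hand-written feature vectors standing in for DNN hidden-layer outputs.
src = [[0.0, 0.0], [2.0, 0.0]]   # mean (1, 0)
tgt = [[1.0, 1.0], [1.0, 3.0]]   # mean (1, 2)
mmd2 = linear_mmd2(src, tgt)     # (1 - 1)^2 + (0 - 2)^2 = 4
```

A value of zero means the two feature sets have identical means; adding this term to the training loss pushes the extractor toward features whose source and target means coincide.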

After this distribution alignment, freezing and fine-tuning operations are performed as illustrated in Figure 4. The freezing strategy preserves most or all pre-trained model parameters during transfer learning. The depth of the neural network was optimized prior to the main experiments. A grid search over architectures with 5 to 11 hidden layers found that a 9-hidden-layer network yielded the lowest validation loss. This empirically determined architecture is employed herein, with the leading layers initially frozen to facilitate knowledge transfer from the source domains. Training begins with all layers locked, followed by sequential layer-by-layer unfreezing. The process stops when unfreezing additional layers fails to improve validation set performance beyond a preset threshold. The layer-wise parameter update is:

$$\theta_l^{(t+1)} = \theta_l^{(t)} - \eta_l \nabla_{\theta_l} L, \qquad \eta_l = \begin{cases} \eta_{min}, & \text{if layer } l \text{ is frozen} \\ \eta_{max}, & \text{if layer } l \text{ is fine-tuned} \end{cases}$$

where $\theta_l^{(t)}$ denotes the parameters of the $l$-th layer at the $t$-th iteration, $\theta_l^{(t+1)}$ represents the parameters of the $l$-th layer at the ($t+1$)-th iteration, $\eta_{min}$ stands for the extremely small learning rate applied to frozen layers, $\eta_{max}$ indicates the maximum learning rate for fine-tuning layers, and $\nabla_{\theta_l} L$ signifies the gradient of the loss function with respect to the parameters. The parameter set $\theta_l$ comprises both weights and biases, and the loss function is defined as follows:

$$L = \frac{1}{n} \sum_{i=1}^{n} (\hat{y}_i - y_i)^2$$

where $\hat{y}_i$ is the predicted value of the model, and $y_i$ is the true value.

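The fine-tuning strategy refers to the selective updating of a subset of parameters (typically the topmost or final layers) in a pre-trained model while keeping the other layers frozen during task-specific training.

The parameter update for the frozen layers proceeds as follows:

$$W_{fz} \leftarrow W_{fz} - \eta_{min} \frac{\partial L}{\partial W_{fz}}, \qquad b_{fz} \leftarrow b_{fz} - \eta_{min} \frac{\partial L}{\partial b_{fz}}$$

where $W_{fz}$ and $b_{fz}$ represent the weights and biases of a frozen layer, $\eta_{min}$ denotes the extremely small learning rate applied to the frozen layers, and $\partial L / \partial W_{fz}$ and $\partial L / \partial b_{fz}$ are the gradients of the loss function $L$ with respect to the weights and biases, respectively.

The update for the fine-tuning layers is:

$$W_{ft} \leftarrow W_{ft} - \eta_{max} \frac{\partial L}{\partial W_{ft}}, \qquad b_{ft} \leftarrow b_{ft} - \eta_{max} \frac{\partial L}{\partial b_{ft}}$$

where $W_{ft}$ and $b_{ft}$ denote the weights and biases of a fine-tuning layer; $\eta_{max}$ represents the comparatively large learning rate applied to the fine-tuning layers; and $\partial L / \partial W_{ft}$ and $\partial L / \partial b_{ft}$ correspond to the gradients of the loss function $L$ with respect to the weights and biases, respectively.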
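The freezing and fine-tuning rules can be sketched as a layer-wise learning-rate switch plus a stopping rule for progressive unfreezing. The Python code below is a minimal sketch under stated assumptions: parameters are plain lists of floats, the order of unfreezing (last layer first) is an assumption not fixed by the text, and `evaluate` is a hypothetical callable that would fine-tune with a given set of unfrozen layers and return the validation loss.

```python
def layerwise_update(params, grads, unfrozen, eta_min=1e-6, eta_max=1e-2):
    """One gradient step: eta_max for unfrozen layers, eta_min for frozen ones."""
    updated = []
    for layer, (p, g) in enumerate(zip(params, grads)):
        eta = eta_max if layer in unfrozen else eta_min
        updated.append([pi - eta * gi for pi, gi in zip(p, g)])
    return updated


def progressive_unfreeze(n_layers, evaluate, threshold):
    """Unfreeze layers one at a time, last layer first (an assumed order).

    Stops as soon as unfreezing one more layer improves the validation loss
    by less than `threshold`.
    """
    unfrozen = set()
    best = evaluate(unfrozen)          # all layers locked
    for layer in range(n_layers - 1, -1, -1):
        candidate = unfrozen | {layer}
        loss = evaluate(candidate)
        if best - loss < threshold:
            break
        unfrozen, best = candidate, loss
    return unfrozen, best


# Two single-parameter "layers": layer 0 frozen, layer 1 fine-tuned.
new_params = layerwise_update([[1.0], [1.0]], [[10.0], [10.0]], unfrozen={1})

# Hypothetical validation losses, indexed by how many layers are unfrozen.
fake_losses = {0: 1.0, 1: 0.6, 2: 0.5, 3: 0.495, 4: 0.49}
selected, final_loss = progressive_unfreeze(
    4, lambda layers: fake_losses[len(layers)], threshold=0.01)
```

With these made-up losses the third unfreezing improves the loss by only 0.005, so the procedure stops with the last two layers unfrozen.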

2.5. Transfer Learning Modeling Based on K-IFA-DNN

The IFA-DNN sub-models trained on phase-specific sub-source domains exhibit strong fitting capabilities for historical source batches. However, batch-to-batch heterogeneity in Pichia pastoris fermentation (e.g., variations in raw material purity or seed culture activity) results in distribution shifts between source and target batch data. This mismatch reduces the generalization capability of pre-trained sub-models when they are applied directly to target batches. To address this, a local transfer learning framework is proposed, which leverages the phase-specific knowledge of the IFA-DNN sub-models and adapts it to the target domain via layer-wise freezing and fine-tuning. This integration ensures that the model retains phase-specific feature extraction capabilities while adapting to batch-wise distribution differences. The complete workflow is illustrated in Figure 5, with detailed steps as follows:

Step 1: Sub-Source Domain Partitioning: Historical source batch data were clustered into 3 sub-source domains (D1, D2, D3) using K-means (Section 2.1). For each sub-domain, the cluster centroid was calculated using $\mu_k = \frac{1}{|D_k|} \sum_{x \in D_k} x$ and stored as μ1, μ2, μ3. These centroids serve as phase-feature benchmarks for subsequent target sample matching.

Step 2: IFA-DNN Sub-Model Training: For each Dk (k = 1, 2, 3), an IFA-DNN sub-model Mk was constructed via the DNN architecture and IFA hyperparameter optimization (Section 2.2 and Section 2.3), yielding three pre-trained sub-models M1, M2, M3.

Step 3: Target Sub-Source Domain Selection: For a given target sample $x_t$ (with auxiliary variables measured but key biochemical parameters unknown), the Euclidean distance between $x_t$ and each stored centroid (μ1, μ2, μ3) is computed using Equation (2). The sub-source domain with the smallest distance is identified (e.g., D2), and the corresponding IFA-DNN sub-model (M2 in this case) is selected as the base transfer model, since its trained phase features (e.g., the fed-batch transition phase) are most aligned with $x_t$. This ensures that the transferred knowledge is relevant to the target sample’s metabolic state.

Step 4: Deep Transfer Fine-Tuning: The base model M2 was fine-tuned using the limited labeled target domain data $D_t$, minimizing the distribution mismatch between D2 (source) and $D_t$ (target) as measured by the MMD, and applying layer-wise freezing and fine-tuning (Section 2.4) to obtain the target-adapted model $M_t$.

Note: The MMD is computed on the features extracted by the DNN’s hidden layers, denoted $\phi(D_2)$ for D2 and $\phi(D_t)$ for $D_t$.

Step 5: Target Sample Prediction: The optimized model $M_t$ was applied to $x_t$ to predict the key biochemical parameter value $\hat{y}_t$ via forward propagation (Equation (4)). To validate the framework’s effectiveness, the prediction performance was evaluated using metrics including the Root Mean Squared Error (RMSE) and the Coefficient of Determination ($R^2$), benchmarked against traditional methods (e.g., global DNN, non-optimized FA-DNN) to confirm improvements in accuracy and generalization.
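For reference, the two evaluation metrics named in Step 5 can be computed as follows. This is a minimal sketch using their standard definitions; the function names and the four sample values are illustrative only and are not taken from the experiments.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error between measured and predicted values."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Illustrative values only.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.1, 1.9, 3.2, 3.8]
error = rmse(y_true, y_pred)        # sqrt(0.1 / 4), about 0.158
fit = r2_score(y_true, y_pred)      # 1 - 0.1 / 5 = 0.98
```

A lower RMSE and an $R^2$ closer to 1 indicate better agreement between the soft sensor's predictions and the off-line assay values.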

3. Simulation

To validate the proposed soft sensor modeling method based on the local transfer framework for addressing the multi-stage characteristics and batch-to-batch data heterogeneity in Pichia pastoris fermentation, a systematic simulation was designed, including data preprocessing (to provide high-quality input for modeling) and model construction (to implement the local transfer logic). The detailed methodology is outlined below:

Data Preprocessing

(1) Data acquisition.

Sample data are collected at 4-h intervals during the fermentation process. Environmental parameters (e.g., temperature, pH, dissolved oxygen) and input variables (e.g., feed flow rates) are automatically measured using built-in instrumentation or sensors integrated with the bioreactor. These measurements are recorded ten times within the four-hour interval and subsequently converted into a 4-h average using the difference quotient method. In contrast, key biochemical parameters—including product concentration (inulinase activity), Pichia pastoris biomass concentration, and methanol concentration—are determined through off-line laboratory assays conducted every 4 h (i.e., coinciding with the 4-h sampling timepoints).

(2) Data augmentation.

Given the scarcity of experimental data, with only three batches available and 61 data points in each, the dataset must be augmented to increase the number of samples available for training the soft sensor model and thereby improve prediction accuracy. This study employs linear interpolation as the data augmentation technique. Linear interpolation constructs the interpolant as a first-degree polynomial, ensuring zero interpolation error at the given data nodes. The augmentation strategy inserts two new data points between each pair of adjacent original data points, expanding each batch from 61 to 181 points and generating 120 additional interpolated samples per batch. These interpolated samples are partitioned into two distinct subsets of 60 points each for modeling. Compared with higher-order methods (e.g., quadratic interpolation), linear interpolation offers simplicity and computational efficiency, making it a pragmatic choice for dataset expansion in resource-constrained scenarios while maintaining data consistency between auxiliary and target variables.

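The interpolated value is computed as:

$$y = y_0 + \frac{y_1 - y_0}{x_1 - x_0}\,(x - x_0)$$

where $x$ and $y$ denote the horizontal and vertical coordinates of the interpolated data point, while $x_0$, $y_0$, $x_1$, $y_1$ represent the coordinates of the two adjacent original data points used for interpolation.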
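The augmentation step can be checked with a short sketch. The Python code below inserts two linearly interpolated points between every pair of adjacent samples, which turns 61 points into 181. The function name `augment_linear`, the 4-h time grid, and the linear test signal are illustrative assumptions rather than the study's data.

```python
def augment_linear(t, y, n_insert=2):
    """Insert `n_insert` linearly interpolated points between adjacent samples."""
    t_out, y_out = [], []
    for t0, y0, t1, y1 in zip(t, y, t[1:], y[1:]):
        for k in range(n_insert + 1):          # k = 0 reproduces the original node
            tk = t0 + (t1 - t0) * k / (n_insert + 1)
            t_out.append(tk)
            y_out.append(y0 + (y1 - y0) / (t1 - t0) * (tk - t0))
    t_out.append(t[-1])                        # close with the final original node
    y_out.append(y[-1])
    return t_out, y_out


# 61 samples at 4-h intervals (0 to 240 h) with a made-up linear signal.
t = [4.0 * i for i in range(61)]
y = [2.0 * ti + 1.0 for ti in t]
t_aug, y_aug = augment_linear(t, y)
n_new = len(t_aug) - len(t)   # 120 interpolated samples
```

Every third element of the augmented series is an original measurement, so the interpolation error at the original nodes is zero, as stated in the text.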

(3) Dimensionality reduction (selection of auxiliary variables).

Given the high dimensionality of the measured dataset due to the multitude of features, the direct use of raw data for model construction often results in inaccurate or unstable models owing to the curse of dimensionality. To address this challenge, this study employs Principal Component Analysis (PCA) as a dimensionality reduction technique. PCA is a linear transformation method that projects high-dimensional data onto a lower-dimensional subspace while preserving the dominant variance structures and eliminating redundant or correlated features. By doing so, PCA facilitates the extraction of latent principal components that encapsulate the most informative patterns in the data, thereby enhancing model interpretability and generalization performance.

Let the dataset be arranged as

$$X = [x_1, x_2, \ldots, x_p]$$

where $x_i$ (where $i = 1, 2, \ldots, p$) denotes a column vector representing the values of the $i$-th feature across all $m$ data points in the dataset.

The mean of each feature is calculated as:

$$\bar{x}_i = \frac{1}{m} \sum_{j=1}^{m} x_{ji}$$

The dataset is centered by subtracting the mean value from each feature (column vector), such that for every feature, the centered feature $\tilde{x}_i$ is computed as:

$$\tilde{x}_i = x_i - \bar{x}_i \mathbf{1}$$

The covariance matrix of the centered dataset $\tilde{X} = [\tilde{x}_1, \ldots, \tilde{x}_p]$ is computed as follows:

$$C = \frac{1}{m-1}\, \tilde{X}^{\top} \tilde{X}$$

The covariance matrix is subjected to eigenvalue decomposition, yielding eigenvalues $\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_p$ and their corresponding eigenvectors $v_1, v_2, \ldots, v_p$.

The top $k$ eigenvectors corresponding to the largest eigenvalues are selected as principal components, forming a projection matrix $W = [v_1, \ldots, v_k]$ where each column represents an eigenvector. The dataset is projected onto the subspace spanned by the top $k$ eigenvectors, yielding a low-dimensional representation of the data. Mathematically, the projection is computed as:

$$Z = \tilde{X} W$$

where $Z$ denotes the dimension-reduced dataset.

To determine the number of principal components to retain, the cumulative explained variance method can be employed. This approach quantifies the proportion of total variance explained by the first n principal components, reflecting their collective ability to represent the data. Its purpose is to guide the selection of n such that most of the information in the original dataset is preserved. The calculation sums the individual explained variance ratios of the principal components. An example is illustrated in Figure 6, which depicts the cumulative explained variance plot for PCA.
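The cumulative explained variance rule can be written out in a few lines. In the sketch below, the eigenvalues are made-up numbers for a nine-feature dataset and the 95% threshold is an assumed setting; neither is taken from the study, and the function names are hypothetical.

```python
def cumulative_explained_variance(eigenvalues):
    """Cumulative share of total variance for eigenvalues sorted in descending order."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    cumulative, running = [], 0.0
    for value in ordered:
        running += value / total
        cumulative.append(running)
    return cumulative


def select_components(eigenvalues, threshold=0.95):
    """Smallest number of components whose cumulative ratio reaches `threshold`."""
    for n, share in enumerate(cumulative_explained_variance(eigenvalues), start=1):
        if share >= threshold:
            return n
    return len(eigenvalues)


# Hypothetical eigenvalues of a 9-feature covariance matrix (they sum to 8).
eigs = [4.0, 2.0, 1.0, 0.5, 0.3, 0.1, 0.05, 0.04, 0.01]
curve = cumulative_explained_variance(eigs)   # 0.5, 0.75, 0.875, 0.9375, 0.975, ...
n_keep = select_components(eigs, threshold=0.95)
```

For these illustrative eigenvalues the first five components already explain 97.5% of the variance; the number retained for real data depends on its own eigenvalue spectrum and on the chosen threshold.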

As depicted in Figure 6, retaining 8 principal components from the original set of 9 features achieved the best balance between dimensionality and cumulative explained variance. This reduction from 9 to 8 components retained the most critical information in the original dataset. Consequently, it streamlined the model by reducing computational overhead and resource consumption while enhancing predictive accuracy and generalization performance.

5. Conclusions

This study aimed to address the core challenges of multi-stage characteristics and batch-to-batch data distribution heterogeneity in Pichia pastoris fermentation soft sensor modeling—issues that often result in low fitting accuracy and poor generalization of traditional methods. To this end, a novel local transfer modeling framework (K-IFA-DNN-TL) was proposed, integrating K-means-based source domain partitioning, an improved firefly algorithm (IFA)-optimized deep neural network (DNN), and Euclidean distance-guided deep transfer fine-tuning.

The simulation results confirmed three advantages of the proposed local transfer framework: ① Local partitioning resolved multi-stage characteristics: By dividing the source domain into stage-specific sub-domains via K-means, the model avoided “average fitting” of global data and accurately captured the nonlinear dynamics of each fermentation stage (e.g., exponential biomass growth, stationary phase inulinase synthesis). ② The improved firefly algorithm optimized DNN performance: The IFA’s dynamic inertia weight and modified attractiveness function addressed the conventional FA’s local optima trapping and slow convergence, enhancing the DNN’s ability to fit local stage features. ③ Targeted transfer mitigated batch heterogeneity: Selecting the most similar sub-source domain via Euclidean distance ensured “focused transfer” of relevant features (avoiding negative transfer from irrelevant stages), while deep fine-tuning further adapted the model to batch-specific differences (e.g., earlier phase transitions, higher enzyme yield).

These findings collectively demonstrated that the proposed method outperformed traditional global models and conventional transfer learning approaches, providing a reliable solution for soft sensor modeling in multi-condition Pichia pastoris fermentation processes.

Despite its advantages, this study has several limitations that need to be addressed: ① Dependence on manual cluster number selection for K-means: The number of sub-source domains (set to 3 via the elbow method) relies on subjective judgment of the WCSS curve. In cases where fermentation stages overlap (e.g., a prolonged transition between the exponential and stationary phases), the elbow method may fail to identify the optimal cluster number, leading to suboptimal local modeling. ② Lack of integration with real-time data streams: The current framework uses offline preprocessed data for modeling, rather than directly processing real-time sensor data (which may contain noise or missing values). This limits its application in fully automated fermentation control systems.

To address the above limitations and expand the framework’s applicability, future work will focus on the following directions: ① Develop adaptive clustering algorithms: Replace K-means with a fuzzy C-means (FCM) algorithm with adaptive cluster number, which uses fuzzy membership to handle overlapping stages and automatically optimizes the number of sub-domains via the Davies–Bouldin index (DBI). ② Realize online learning for continuous fermentation: Develop a lightweight online learning module that updates the model parameters in real time using streaming data from industrial sensors, enabling adaptive prediction for long-term continuous fermentation processes (e.g., 1000-h fed-batch fermentation).

In summary, the proposed local transfer modeling framework effectively resolved the multi-stage and batch-heterogeneity challenges in Pichia pastoris fermentation soft sensor modeling. Its theoretical innovations and practical value provide a new paradigm for soft sensor development in complex biological processes, while the identified limitations and future directions lay the groundwork for further optimization and industrial application.